Abstract

This paper presents a dual-arm suturing robot. We extend the Smart Tissue Autonomous Robot (STAR) with a second robot manipulator whose purpose is to manage loose suture thread, a task that was previously executed by a human assistant. We also introduce novel near-infrared fluorescent (NIRF) sutures that are automatically segmented and delimit the boundaries of the suturing task. In ex-vivo experiments on porcine models, the new system outperformed human surgeons in all but one metric for the task of vaginal cuff closure and was more consistent in every aspect of the task. We also present results demonstrating that the system can perform a vaginal cuff closure during an in-vivo experiment (porcine model).

Keywords: Medical robotics, robot-assisted surgery, vision-guided laparoscopic suturing

INTRODUCTION

The most common example of systems for robot-assisted surgeries (RAS) involves teleoperation between a surgeon and a remote robot with laparoscopic tools. The design and control of the remote system abstract away kinematic constraints and provide the surgeon with superior dexterity and perception. In contrast to the teleoperation paradigm, this manuscript introduces a dual-arm robot system for laparoscopic suturing. Efforts in automating soft tissue surgeries have until recently been limited to elemental tasks such as knot tying, adaptive suture thread and needle tracking methods, needle insertion, and executing predefined motions [1]–[5]. Machine learning techniques were introduced in robotic suturing to facilitate system calibration [6] and to imitate surgical suturing from video demonstrations [7]. Preda et al. introduced a cognitive control architecture, based on formal modeling and verification methods as best practices to ensure safe and reliable behavior, and tested a robot specifically designed for surgical applications, such as wound suturing [8].

According to 2009 data, over 479,814 hysterectomies were performed in the United States for benign indication [9]; 58% of these procedures were performed open abdominally, 19% were laparoscopic, 17% vaginal and 6% robotic. The same study reports that minimally invasive hysterectomies are known to produce better outcomes such as fewer deaths, shorter hospital stays and faster recovery. For minimally invasive procedures, the closure of the vaginal cuff can be executed with various techniques and materials [10]. Among them, barbed suture is believed to improve surgical outcomes and operative time [11]. Even when using robotic surgical assistance, vaginal cuff closure takes 25 minutes on average (range 16–41 minutes), which constitutes approximately 25% of the operative time [12], [13].

The Smart Tissue Autonomous Robot (STAR) is able to perform an anastomosis procedure and to outperform human surgeons [14], [15]. Despite its success, several hurdles remain to raise the level of autonomy of the STAR. First, during a suturing procedure, since one end of the suture is tied to the patient and the other to the needle, loose thread can accumulate in the suture path each time the robot brings the needle close to the wound. When excess suture is present and the needle is driven through tissue, the resulting suture line contains knots and creates a locking stitch [16]. For applications that require running sutures, locking suture may not be ideal for tissue closure. For instance, continuous suturing with barbed suture has demonstrated several advantages in gynecologic surgeries including increased efficiency, more uniform wound closure, and laparoscopic closure without the need for intracorporeal knots [17], [18]. In previous versions of the STAR, a human assistant used forceps to move any loose thread away from the wound to avoid locking stitches while performing running suture (Figure 1). The sole purpose of the assistant was to keep the wound free of loose thread; the assistant refrained from tensioning the stitches or closing the wound. Instead, the STAR uses a force/torque sensor to measure the tension in the suture and a control algorithm instructs the robot to keep pulling on the suture until a predetermined force is measured. This algorithm creates another problem by requiring the robot to pull an amount of suture up to 25 cm in length. This length of suture is necessary to completely close a typical wound but results in the suture tool exiting the abdominal cavity during a laparoscopic procedure. To execute laparoscopic suturing without human assistance, it is preferable to keep the tensioning distance to less than 10 cm and keep the tools within the abdominal cavity. Finally, the STAR introduced near-infrared fluorescent (NIRF) markers to delimit the boundaries of the suturing site, but these drops of NIRF glue must be applied manually to the tissues with a syringe. These markers are difficult to apply manually and can polymerize before tissue contact due to humidity within the peritoneal space.

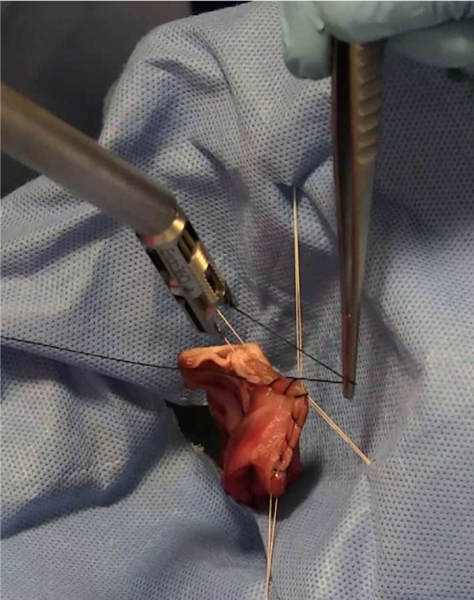

Figure 1.

Human assistant using tweezers to remove excessive thread from the anastomosis site.

The main contributions of this manuscript address each of the aforementioned limitations by introducing a two-arm suturing system that operates under laparoscopic motion constraints and uses NIRF stay sutures. Our contributions are as follows:

The first contribution replaces the human assistant with a robotic assistant. The assistant consists of a second robot arm mounted with a novel actuated laparoscopic grasper. The robot assistant uses its grasper as a retracting pulley to simultaneously tension the suture while moving the thread away from the suturing site. After the suturing tool drives the needle through the tissue, the suturing robot pulls a small amount of thread, just enough for the assistant to insert its grasper between the needle and the tissue and grab the suture with the tool’s wrist. Then, the assistant moves the wrist to pull on the suture and tension the stitch.

The second contribution addresses the problem of manually delimiting the wound with NIRF markers. We propose novel near-infrared fluorescent stay sutures that are automatically segmented and used to delimit the suturing task by determining the boundaries of the tissue. By using braided suture coated with NIRF dye, and processing the NIR images, the STAR can segment these sutures and detect the endpoints of the tissue. These endpoints fulfill the same purpose as the NIRF markers that were manually placed on the tissues [14], [15], [19].

The third contribution compares vaginal cuff closures executed by surgeons and by the STAR on an ex-vivo porcine model. We also demonstrate that our system can execute an in-vivo laparoscopic vaginal cuff closure using a porcine model.

Research and development of robotic suturing systems, whether tele-operated or autonomous, are generally broader in scope and focus on closing gashes instead of a specific part of the anatomy [4], [20], [21]. A large body of work proposes novel algorithms to assist the surgeon during the execution of a suture [22] but, as pointed out in [11], several suturing techniques exist for a single procedure, and developing a technology that can assist all of them, let alone for any procedure, is a challenging objective. In our previous research, we developed the STAR with the intention of implementing the best suturing practices for a given procedure. This led the STAR to execute several in vivo anastomoses on pig models [15]. Yet several elements remain unaddressed to increase the degree of autonomy (DoA) of the STAR. In this paper, we specialize the STAR to automate the execution of a vaginal cuff closure and demonstrate that our technical contributions increase its DoA.

Several methods exist to assess the autonomy of surgical robots. IEC/TR 60601–4-1 [23] is an IEC technical report that defines the Degree of Autonomy based on the capability of a robot to 1) generate plans, 2) select plans, 3) execute and 4) monitor a clinical procedure. Likewise, Levels of Automation (LoA) were presented in [24] and further refined in [25] to emphasize the contribution to a procedure, or part thereof, that a robot can perform. Another recent example of classification is presented in [26], where the spectrum of autonomy contains direct control, shared control, supervised autonomy and full autonomy.

The STAR described herein was able to execute vaginal cuff closure without human assistance and is categorized under LoA-3 (supervised autonomy) and, according to IEC TR 60601–4-1, reached a DoA of level 5 (decision support). The DoA-5 level of the STAR is determined by the following:

Monitoring (Human/Computer):

Whereas the human operator initiates and can interrupt the procedure, the computer monitors 2D and 3D images to determine the parameters of the tissue and monitors measured forces and the position of both robots to ensure synchronization.

Generating (Human/Computer):

The human specifies parameters of the suturing task such as the spacing between stitches and the waypoints for tensioning. The computer uses 2D/3D cameras to determine the position of the tissue and its bounds to generate a single plan based on the (human-specified) parameters. Although the previous version of the STAR also used a combination of human and computer to generate a plan, our proposed NIRF sutures decrease the human contribution by removing manual initialization of the tissue’s bounds with NIRF markers.

Selection (Human):

The computer generates a single suturing plan. Hence, there is no selection.

Execution (Computer):

The human operator does not participate in the execution of the suture. In the previous version of the STAR, the execution was shared between a human assistant and a computer. The addition of a robot assistant is responsible for increasing the execution autonomy from Human/Computer to Computer with the implication that the DoA of the STAR increases from 4 (Shared Decision) to 5 (Decision Support).

MATERIALS AND METHODS

This section presents the architecture of the STAR used in this manuscript. The STAR was initially developed for laparoscopic suturing. The following sections present the hardware, software architecture and protocols used in our experiments.

1. STAR Hardware

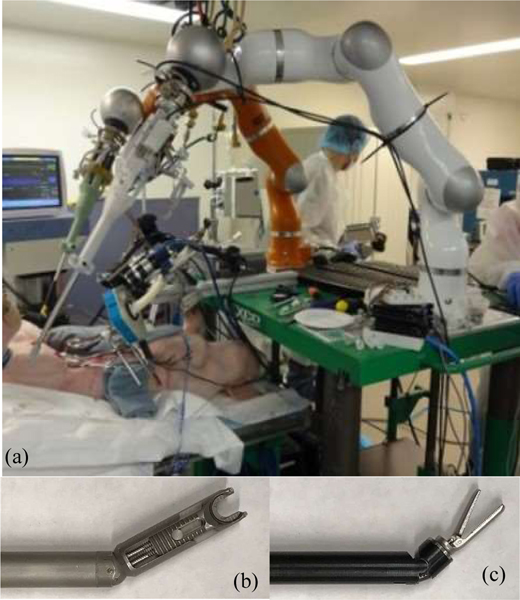

The initial hardware of the STAR included a single KUKA LWR arm equipped with an actuated Endo360™ (Figure 2a). The Endo360 is a laparoscopic suturing tool with a circular needle at its tip that drives along a circular track. The tool is connected to a unit where one motor actuates a revolute joint near the tip and one motor drives the needle along a track inside the tool, as shown in Figure 2b. The STAR also uses an ATI force/torque (FT) sensor, mounted between the LWR flange and the motor pack, to measure contact and tensioning forces during suturing. The vision system of the STAR combines a plenoptic camera for 3D acquisition and a NIR camera for detecting NIRF dye [16]. The plenoptic camera provides a 3D point cloud within a volume of 70×65×30 mm. The NIR camera uses an 845 nm ± 55 nm bandpass filter to pass the light from the NIRF dye. A 760 nm LED light source is used to excite a NIRF dye composed of 2 mg of indocyanine green (ICG), 0.5 mL of acetone and 0.25 mL of Permabond glue.

Figure 2.

Hardware for the existing STAR used for suturing procedure. Dual armed STAR system (a), Endo360 suture tool (b), Radius T assistant tool (c).

Both cameras are calibrated, and their coordinate frames registered. Furthermore, the plenoptic camera is registered with the LWR such that the 3D point cloud can be related to the robot’s coordinate system.
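As an illustration of how such a registration is typically applied, the following minimal sketch maps camera-frame points into the robot base frame with a 4×4 homogeneous transform. The function name `to_robot_frame` and the numeric registration matrix are hypothetical and are not taken from the STAR software.

```python
import numpy as np

def to_robot_frame(T_robot_camera, points_camera):
    """Map an (N, 3) array of camera-frame points into the robot base frame."""
    points_camera = np.asarray(points_camera, dtype=float)
    R = T_robot_camera[:3, :3]   # rotation part of the registration
    t = T_robot_camera[:3, 3]    # translation part of the registration
    return points_camera @ R.T + t

# Hypothetical registration (assumption): camera looks down on the scene,
# rotated 180 degrees about x and placed 0.25 m above the robot origin.
T_robot_camera = np.array([
    [1.0,  0.0,  0.0, 0.0],
    [0.0, -1.0,  0.0, 0.0],
    [0.0,  0.0, -1.0, 0.25],
    [0.0,  0.0,  0.0, 1.0],
])

cloud_camera = np.array([[0.01, 0.02, 0.25], [0.0, 0.0, 0.20]])
cloud_robot = to_robot_frame(T_robot_camera, cloud_camera)
```

Any error in the registration matrix shifts every point of the cloud in the robot frame, which is why the calibration and registration steps precede all planning.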

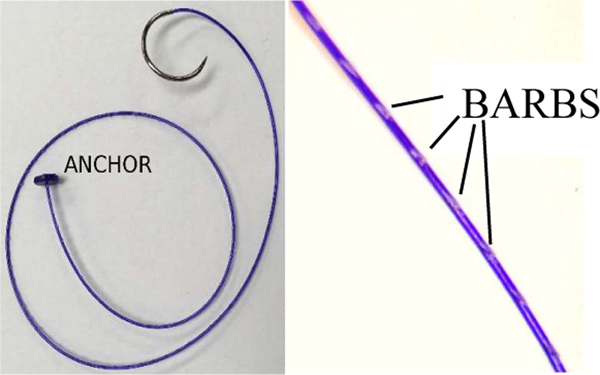

In previous research [14], [15], the STAR used silk sutures which required the execution of a double over-hand knot. In this research, the STAR uses a USP 2–0 synthetic monofilament barbed suture (Assut Europe, Rome, Italy). The sutures also provide an anchor at the end that removes the need for an initial knot (Figure 3).

Figure 3.

Barbed suture with anchor used in ex vivo and in vivo experiments.

2. STAR Assistant

A second robot arm is added to the STAR to manage the loose suture during the procedure. The second arm is also a KUKA LWR, but it is mounted with a laparoscopic grasper (Figure 2c). This tool provides two revolute joints at the wrist and one open/close mechanism for the grasper. These joints are actuated by motors located between the tool and the LWR mounting flange. During the vaginal cuff closure, only the roll and pitch joints are used, and the gripper is maintained in a closed position. The rigid transformation between both robots is computed by mating the mounting flange of one robot to the flange of the other robot and chaining the forward kinematics of both robots.
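The flange-mating registration can be sketched as a chain of homogeneous transforms. In this minimal example, the flange poses stand in for the output of each robot's forward kinematics, and the fixed flange-to-flange transform `T_MATED` (a 180° rotation about x, so that the flange z axes face each other) is an assumption made for illustration; the actual mating convention is not specified in the text.

```python
import numpy as np

def invert_rigid(T):
    """Invert a 4x4 rigid transform using the transpose of its rotation."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv

def base_to_base(T_b1_f1, T_b2_f2, T_f1_f2):
    """Pose of robot 2's base in robot 1's base frame while the flanges are mated."""
    return T_b1_f1 @ T_f1_f2 @ invert_rigid(T_b2_f2)

# Assumed mating convention: flange z axes point at each other.
T_MATED = np.diag([1.0, -1.0, -1.0, 1.0])

# Hypothetical forward-kinematics results at the moment the flanges touch.
T_b1_f1 = np.eye(4)
T_b1_f1[:3, 3] = [0.4, 0.0, 0.5]
T_b2_f2 = np.diag([1.0, -1.0, -1.0, 1.0])
T_b2_f2[:3, 3] = [-0.4, 0.0, 0.5]

T_b1_b2 = base_to_base(T_b1_f1, T_b2_f2, T_MATED)
```

With these example poses, the second base ends up 0.8 m along the x axis of the first base with no relative rotation.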

3. Software

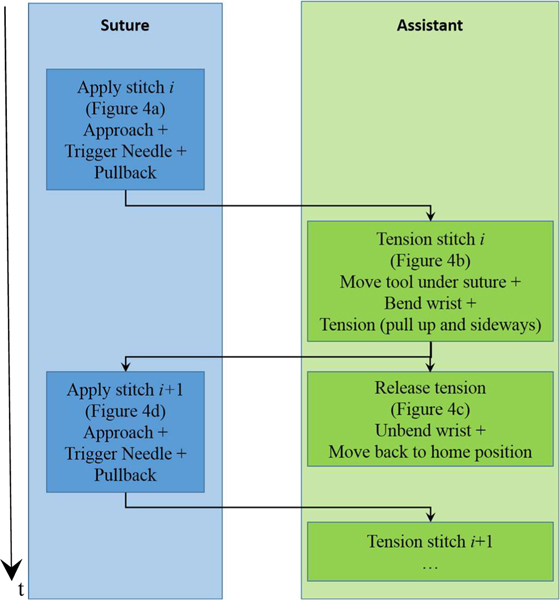

The software architecture to control and synchronize the hardware is illustrated in Figure 4. The control loop of each robot is implemented with the Orocos Real-Time Toolkit (RTT) and the motion of each arm is controlled by an individual component. Each component maintains a state and communicates its state to the other component for the purpose of synchronization. The main task of the assistant is to catch the suture with its wrist (Figure 5b) and use it as a pulley to tension the suture by moving to the side (Figure 5c). The jaws of the grasper remain closed during the procedure and the grasper is only used to form a “V” shape with the shaft of the tool so that the wrist acts as a retracting pulley (Figure 12).

Figure 4.

Flow chart describing the steps and synchronization of both arms. The time axis is illustrated on the left.

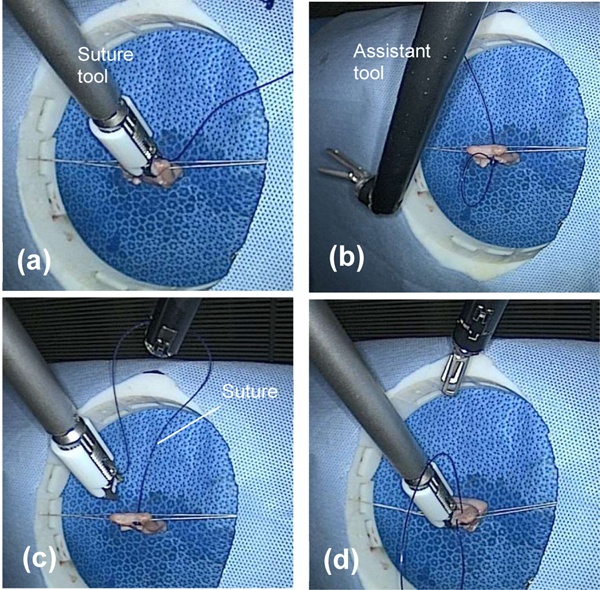

Figure 5.

Dual arm strategy for suturing vaginal cuff. Placing a stitch with suture tool (a), positioning assistant tool (b), tensioning stitch with assistant tool (c), releasing suture tension and placing next stitch (d).

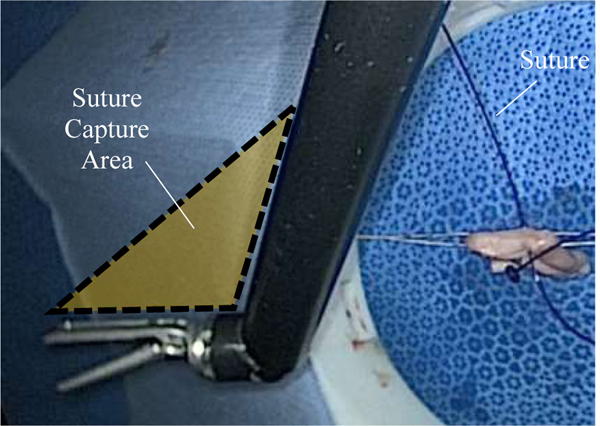

Figure 12.

Shaded region illustrating where suture can be captured by the assistant tool for tensioning.

The barbed sutures used in all experiments are terminated by an anchor that removes the need for tying a knot. Since the length of each suture is roughly 20 cm, tensioning the first stitch using only the suturing robot would require the needle to pull 20 cm of suture inside the body, or perhaps even to exit through the trocar. From a laparoscopy perspective, this distance is impractical to achieve, and one constraint of our experiments is to confine the motion of both robots to a reasonable laparoscopic workspace of 10 × 10 × 10 cm.

The STAR’s workflow uses the suturing robot as the primary timing source and is illustrated in Figure 4. The workflow contains the following blocks.

Apply stitch (Suture):

This step is illustrated in Figure 5a. The suture component initiates the process by approaching the tissue and then moving the tool a small distance perpendicularly to the tissue’s surface to establish a contact. After the approach motion ends, the component activates the needle. Then, the suturing tool moves backward to create a gap between the needle and the tissue that is large enough for the assistant tool to interpose itself between the tissue and the needle.

Prepare tension (Assistant):

Following the needle’s pullback, the suture describes a 3D line segment between the tissue and the needle and both endpoints of this segment are known: one end has the 3D coordinates of the previous stitch and the other end has the coordinates of the needle. The assistant robot moves the gripper’s wrist beneath the midpoint of the line segment and bends the wrist 90 degrees to create a “V” shape that will catch the suture (Figure 5b).

Tension stitch (Assistant):

The gripper pulls the suture out of the way by moving the wrist up and to the side (Figure 5c). This pulls on the suture, which tightens around the tissue. The amount of tensioning motion is determined by monitoring a force sensor that is mounted between the flange and the tool on the assistant robot. The tensioning ends when the measured magnitude of the force exceeds 5 N. This threshold was obtained from our previous experiments [15]. Moreover, this tensioning algorithm prevents accidental locking stitches by moving the suture away from the suturing site. During the tensioning, the suturing tool can move to the following stitch.

Release (Assistant):

After the tensioning is completed, the suture must be released. This release is achieved by unbending the wrist and moving the grasper to let the suture fall (Figure 5d).
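The "prepare tension" and "tension stitch" blocks can be sketched as follows. This is a minimal illustration rather than the Orocos components themselves: the clearance below the midpoint, the step size, the choice of −z as "beneath" and the `FakeAssistant` spring model are all assumptions, while the 5 N threshold and the 10 cm travel limit come from the text.

```python
import numpy as np

FORCE_THRESHOLD_N = 5.0   # tensioning stops once the force magnitude exceeds this
MAX_TRAVEL_M = 0.10       # keep the motion inside the 10 cm laparoscopic workspace

def catch_point(prev_stitch, needle, clearance=0.005):
    """Wrist target beneath the midpoint of the suture segment.
    `clearance` and the use of -z as "beneath" are illustrative assumptions."""
    mid = 0.5 * (np.asarray(prev_stitch, float) + np.asarray(needle, float))
    return mid - np.array([0.0, 0.0, clearance])

def tension_stitch(read_force, move_by, direction, step=0.001):
    """Step the wrist along `direction` until the measured force exceeds the threshold.
    Returns (distance travelled, whether the threshold was reached)."""
    direction = np.asarray(direction, float)
    direction = direction / np.linalg.norm(direction)
    travel = 0.0
    while travel < MAX_TRAVEL_M:
        if np.linalg.norm(read_force()) > FORCE_THRESHOLD_N:
            return travel, True
        move_by(step * direction)
        travel += step
    return travel, False

class FakeAssistant:
    """Toy stand-in for the robot and force sensor: slack suture, then a linear spring."""
    def __init__(self, slack=0.02, stiffness=500.0):
        self.position = np.zeros(3)
        self.slack, self.stiffness = slack, stiffness

    def move_by(self, delta):
        self.position = self.position + delta

    def read_force(self):
        stretch = max(0.0, np.linalg.norm(self.position) - self.slack)
        return np.array([0.0, 0.0, self.stiffness * stretch])

target = catch_point(prev_stitch=[0.0, 0.0, 0.0], needle=[0.02, 0.0, 0.04])
robot = FakeAssistant()
travel, reached = tension_stitch(robot.read_force, robot.move_by, direction=[1.0, 0.0, 1.0])
```

Bounding the travel in addition to the force check means a slipped or broken suture ends the motion at the workspace limit instead of pulling indefinitely.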

4. NIRF Stay Sutures

The algorithm for detecting NIRF stay sutures aims to replace the usage of NIRF markers that are manually placed on the surface of the tissues. To create the NIRF suture, a combination of 2 mg of indocyanine green (ICG), 0.5 mL of acetone and 0.25 mL of Permabond glue is produced by mixing them with a vortexer [27]. The mixture was applied on sutures manually by coating fingers with the NIRF glue and sliding the suture between them several times.

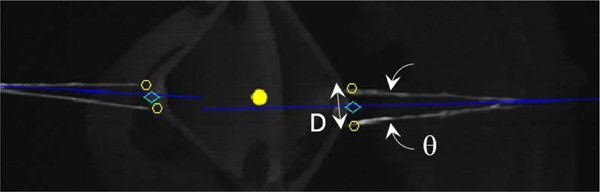

The stay suture segmentation algorithm is based on first segmenting lines, possibly parallel, and grouping them into pairs whenever possible. The main goal is to find the image coordinates where a stay suture enters and exits the tissue; the algorithm then estimates a suturing endpoint as the midpoint between these two points, as illustrated in Figure 6.

Figure 6.

Detection of stay sutures and suturing endpoints. The yellow dot is the average coordinates of all the segmented pixels. The two closest points (yellow circles) of each suture to that point are selected (one on each side of the dividing blue line) and the midpoint between each pair is used to delimit the suturing task.

The segmentation and detection algorithm is presented in Algorithm 1. Line 2 segments edges of sutures and tissues from a rectified NIR image. This includes a standard convolution with a Sobel filter, thresholding of the gradient and filling small holes in the resulting binary image. Line 3 assigns a label to each connected component and prunes components with small areas. At this point, the image L contains the line segments, each labelled individually. Line 4 performs grouping of individual lines into pairs. The first step is to compute the 2D orientation of each line segment. This is implemented by computing the first and second moments (2D mean and covariance) of each line segment and extracting the angle from the covariance elements. Then, line segments that are nearly parallel (θ ≤ θmax) and within a maximum distance (D ≤ Dmax) are considered to be from the same stay suture. The angle θmax defines the maximum angle between two segments of the same stay suture. The distance Dmax defines the maximum allowed distance (in pixels) between two segments of the same stay suture. For example, a reasonable value for Dmax is the expected distance between two endpoints belonging to the same suture. Examples of θ ≤ θmax and D ≤ Dmax are illustrated in Figure 6.

Algorithm 1.

NIRF Stay Suture Detection

| Line | Statement |
|---|---|
| 1. | procedure NIRFSUTUREDETECTION( I ) |
| 2. | E ← edges segmentation( I ) |
| 3. | L ← labelling( E ) |
| 4. | G ← grouping( L ) |
| 5. | return G |
| 6. | end procedure |
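The grouping step (Line 4) can be sketched as follows, assuming each labelled segment is already available as a list of pixel coordinates. The angle is extracted from the covariance elements with the standard principal-axis formula; the greedy pairing strategy and the use of the minimum pixel-to-pixel distance for D are assumptions, since the text does not specify them.

```python
import numpy as np

def segment_orientation(pixels):
    """Principal-axis angle (radians) of an (N, 2) pixel set from its covariance."""
    pixels = np.asarray(pixels, dtype=float)
    cov = np.cov(pixels, rowvar=False)
    return 0.5 * np.arctan2(2.0 * cov[0, 1], cov[0, 0] - cov[1, 1])

def angle_between(a, b):
    """Smallest angle between two undirected line orientations, in radians."""
    d = abs(a - b) % np.pi
    return min(d, np.pi - d)

def min_distance(a, b):
    """Smallest pixel-to-pixel distance between two segments (assumed measure for D)."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    return np.min(np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2))

def group_segments(segments, theta_max_deg, d_max):
    """Greedily pair segments that are nearly parallel and close to each other."""
    angles = [segment_orientation(s) for s in segments]
    used, pairs = set(), []
    for i in range(len(segments)):
        if i in used:
            continue
        for j in range(i + 1, len(segments)):
            if j in used:
                continue
            parallel = np.degrees(angle_between(angles[i], angles[j])) <= theta_max_deg
            close = min_distance(segments[i], segments[j]) <= d_max
            if parallel and close:
                pairs.append((i, j))
                used.update((i, j))
                break
    return pairs

# Synthetic example: two nearly collinear horizontal segments and one vertical segment.
seg0 = [(x, 0) for x in range(0, 51)]
seg1 = [(x, 2) for x in range(100, 151)]
seg2 = [(300, y) for y in range(0, 51)]
pairs = group_segments([seg0, seg1, seg2], theta_max_deg=10.0, d_max=60.0)
```

In this example only the two horizontal segments are paired; the vertical segment fails the parallelism test and remains ungrouped.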

Once the sutures are segmented and grouped, the boundaries of the suturing procedure are estimated according to Algorithm 2. First, the center of the scene is computed as the average of all segmented points, as illustrated by the yellow dot in Figure 6. Then, the two points of each stay suture that are closest to the center are selected (yellow circles in Figure 6). These two points must lie on opposite sides of the angle bisector that evenly divides a stay suture in two (blue lines in Figure 6). Finally, the midpoints between these endpoints are returned as the boundaries for the suturing task, as illustrated by the cyan diamonds in Figure 6.

Algorithm 2.

Estimation of suturing boundaries

| Line | Statement |
|---|---|
| 1. | procedure BOUNDARIESESTIMATION( G ) |
| 2. | C ← centroid( G ) |
| 3. | for i ← 1 : size( G ) do |
| 4. | b ← bisector( G( i ) ) |
| 5. | p1 ← nearest point( C, G( i ), b, true ) |
| 6. | p2 ← nearest point( C, G( i ), b, false ) |
| 7. | B( i ) ← midpoint( p1, p2 ) |
| 8. | end for |
| 9. | return B |
| 10. | end procedure |
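A minimal sketch of the boundary estimation is given below. It assumes that each stay suture is supplied as a pair of pixel arrays, one for each side of the bisector, so the bisector itself is not computed; this representation and the function names are illustrative assumptions.

```python
import numpy as np

def nearest_point(center, points):
    """Point of `points` that is closest to `center`."""
    points = np.asarray(points, dtype=float)
    return points[np.argmin(np.linalg.norm(points - center, axis=1))]

def estimate_boundaries(groups):
    """groups: list of (side_a, side_b) pixel arrays, one pair per stay suture.
    Returns the scene center and one boundary point per stay suture."""
    all_pixels = np.vstack([np.vstack(pair) for pair in groups]).astype(float)
    center = all_pixels.mean(axis=0)          # average of all segmented pixels
    boundaries = []
    for side_a, side_b in groups:
        p1 = nearest_point(center, side_a)    # closest point on one side of the bisector
        p2 = nearest_point(center, side_b)    # closest point on the other side
        boundaries.append(0.5 * (p1 + p2))    # midpoint delimits the suturing task
    return center, np.array(boundaries)

# Synthetic scene: one stay suture on the left and one on the right of the tissue.
xs_left, xs_right = np.arange(0, 41), np.arange(160, 201)
left = (np.column_stack([xs_left, np.full(41, 45)]), np.column_stack([xs_left, np.full(41, 55)]))
right = (np.column_stack([xs_right, np.full(41, 45)]), np.column_stack([xs_right, np.full(41, 55)]))
center, bounds = estimate_boundaries([left, right])
```

For this synthetic scene the center falls at (100, 50) and the two returned bounds lie at the inner ends of the sutures, (40, 50) and (160, 50).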

5. Ex-Vivo Vaginal Cuff Closure

Tissue Preparation:

For all experiments, a vaginal porcine tissue (Animal technologies) was purchased, rinsed, and cervix dissected. The vaginal stump was linearized with two stay sutures placed at the corners of the tissue. Stay sutures were then secured to a plastic ring simulating the top of the pelvic opening and iliac crest. The plastic ring was mounted to an adjustable positioning arm (NOGA), so it could be positioned within any of the simulated surgical fields. All modalities were instructed to close the vaginal cuff using 2–0 barbed suture and perform the anastomosis with a starting knot, running stitch, and locking stitch at the end of the vaginal cuff. Operating surgeons were instructed to perform a running anastomosis with similar quality to what they would use in a human. Timing for all experiments begins when the operator throws the first stitch.

Robotic Assisted Surgery Setup:

For RAS tests, all tissue samples were prepared and mounted to a mobile cart within the surgical field of a Da Vinci Standard surgical robot. Surgeons were given two needle drivers to use for the anastomosis, and stereoscopic vision from the camera. Prior to testing, surgeons could adjust the location of the tissue within the surgical field, position of the robotic arms, and viewing angle of the camera. Readjustment of surgical arms or camera with robotic clutch was not considered a mistake, but duration was recorded as additional time for average time per stitch calculation. All experiments were recorded from the master console using a video grabber and laptop for analysis of procedure timing.

STAR setup:

For STAR surgical tests, tissue samples were prepared, mounted to the surgical cart, and centered within the field of view of the camera system. Tissue samples were then adjusted to 25 cm from the plenoptic camera. STAR was provided with one suturing tool and one atraumatic grasper to perform suturing and suture management. All procedures were recorded using a 0°, 10 mm telescope and HD camera connected to an imaging surgical tower (STORZ). All robotic motions were performed under laparoscopic constraint for fair comparison to the alternative suturing modalities.

6. In-Vivo Vaginal Cuff Closure

Pig Preparation:

The STAR was used to perform anastomosis of the vaginal stump after laparoscopic hysterectomy in a porcine animal model. To create the model, a Yorkshire piglet (weight 23kg, N=1) was sedated with Xylazine, intubated, and anesthetized with vaporized Isoflurane. A 15° wedge pillow was placed under the back legs of the piglet to raise the pelvis, and four legs were secured to the table. The operating surgeon placed one midline, and two lateral ports for surgical access and insufflation of the abdominal space with CO2. A 0°, 5 mm telescope (STORZ) was used to visualize the surgical field, and a transabdominal syringe with 18-gauge needle was used to drain the bladder of excess urine. Using atraumatic grasper and shears, the bladder was dissected down, and the vaginal tract exposed. The uterus and cervix were then dissected with electrocautery and removed through one of the access ports. The vaginal cuff was linearized with transabdominal NIRF stay sutures and secured with hemostats outside of the piglet’s body. All laparoscopic ports and tools were removed from the animal. This animal study was conducted with Institutional Animal Care and Use Approval under protocol 30403. All efforts were made to reduce the number of animals in this study by incorporating porcine cadaver tissues for ex vivo statistical tests and limiting the in-vivo study to N=1. In-vivo testing was necessary to demonstrate feasibility of the technique in living tissue which is susceptible to motion artifacts due to breathing, movement caused by muscle tone, and obstructions from bleeding, all of which are not observed in the cadaveric tissues used in ex vivo testing.

STAR setup:

To perform anastomosis of the vaginal cuff, a midline incision from pubis to umbilicus was created and spread open with retractors. The incision is necessary for STAR’s camera system to visualize the suspended vaginal cuff. STAR was positioned at the animal’s head, with surgical arms placed on either side of the animal. The surgical cart was then adjusted such that the vaginal cuff was centered 25 cm from the camera system, and robotic arms were positioned within the surgical field. STAR’s vision system was used to detect the NIR suture and identify the corners of the vaginal cuff.

RESULTS

1. NIRF Stay Sutures

The algorithm for the segmentation of NIRF stay sutures and the estimation of the boundaries of suturing was evaluated using sutures with United States Pharmacopeia (USP) designation 2–0 and coating them with a NIRF glue. The glue solution was initially designed to create fiducial markers [27] but due to its short cure time a large suture can be coated by gliding the thread through the glue and removing any excess manually with the fingers.

We note that the glue stiffens the suture and is brittle when the thread is bent. Although the coated sutures must be manipulated with care, we found that for the purpose of stay sutures, the thread mainly remains straight and tissues typically occlude the few areas with high curvature, where the glue can peel off.

In the context of hysterectomy, these sutures were attached to the ex-vivo vaginal cuff of porcine models. Since the closure involves a single running suture, only one stay suture is required on each side of the vaginal cuff (size(G) = 2 on Line 3 of Algorithm 2). Each stay suture was mounted inside a circular device with dock cleats that suspends the tissue in the middle of the device. Then the tissues and sutures were placed under the NIR camera and the 2048 × 2048 images were processed to estimate the boundaries of the suturing task. The processing returns two image coordinates, each representing one bound of the suturing task in the image space. The Matlab implementation of the algorithm takes 11.2 seconds on average to execute on a dual-core 2 GHz Intel processor.

After computing the results, a human operator is presented with the same NIR image and is asked to use a mouse and click on the coordinates where he/she thinks the bounds of the suturing procedure are. These results are then compared to the ones from the algorithm.

We used five images similar to the one in Figure 6 and found that the mean difference between the coordinates provided by humans and by the algorithm was 7.21 pixels with a standard deviation of 4.52 pixels. Given that the 2048 × 2048 resolution of the NIR images is used to frame a relatively small scene of approximately 20×20 cm, these results suggest that our algorithm can pinpoint the bounds of a suturing task that uses stay sutures. Although these results do not provide an assessment of the 3D accuracy of the algorithm, we note that the correspondence of the image points to 3D coordinates depends on the calibration and registration between the NIR and the 3D cameras and that the same error propagation applies whether the bounds are selected by a human or by the algorithm.
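To put the pixel error in physical units, the following back-of-the-envelope conversion assumes a uniform pixel scale across the image and that the 2048-pixel width spans exactly the approximate 20 cm scene; both are simplifying assumptions, and lens distortion and perspective are ignored.

```python
def pixels_to_mm(pixels, field_of_view_mm=200.0, image_width_px=2048):
    """Convert an image-space distance to millimetres under a uniform pixel scale."""
    return pixels * field_of_view_mm / image_width_px

mean_error_mm = pixels_to_mm(7.21)   # mean human/algorithm difference
std_error_mm = pixels_to_mm(4.52)    # standard deviation of that difference
```

Under these assumptions one pixel covers just under 0.1 mm, so the mean difference is on the order of 0.7 mm with a standard deviation of roughly 0.44 mm.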

2. NIRF Sutures for 3D Consistency Assessment

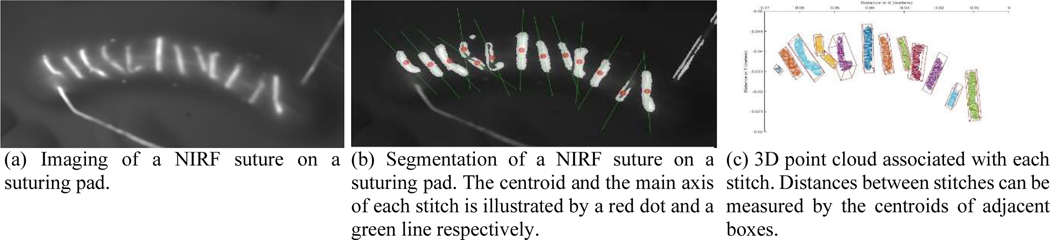

While the main application of NIRF sutures is to bound a suturing task in the image space, a different application for NIRF suture is to assess the 3D accuracy and consistency of a suture.

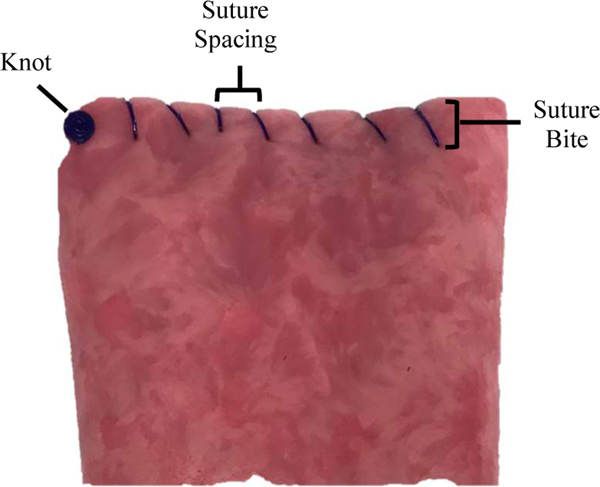

In our previous research [14], [15], [28], we proposed to evaluate the performance of the STAR by measuring the consistency of the spacing between stitches. These measurements were obtained by measuring each spacing with a caliper and then computing various statistics. As illustrated in Figure 7a, a regular suture can also be coated with NIRF glue and segmented in NIR images to measure the spacing between stitches. Algorithm 1 can be adapted to segment and group each stitch, as illustrated in Figure 7.

Figure 7.

NIRF suture used to measure spacings between stitches

These segmented points are then unprojected onto the registered 3D point cloud to obtain the 3D coordinates of each stitch (Figure 7c). Each cluster of 3D points is then fitted with an oriented bounding box (OBB) by computing its centroid and covariance matrix. Finally, the faces corresponding to all endpoints are identified and the distance between the centers of adjacent endpoints is computed and compared to the distances that were manually measured. The average error was 0.817 mm with a standard deviation of 0.21 mm.
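The OBB fit and spacing measurement can be sketched as follows. The box axes are taken as the eigenvectors of the cluster covariance; treating the faces that cap the longest axis as the stitch endpoints, and taking the smallest distance between end faces of neighbouring stitches as the spacing, is one plausible reading of the text and should be regarded as an assumption.

```python
import numpy as np

def fit_obb(points):
    """Oriented bounding box of an (N, 3) cluster from its centroid and covariance."""
    points = np.asarray(points, dtype=float)
    centroid = points.mean(axis=0)
    cov = np.cov(points - centroid, rowvar=False)
    _, eigvecs = np.linalg.eigh(cov)         # eigenvalues in ascending order
    axes = eigvecs[:, ::-1]                  # columns: longest axis first
    local = (points - centroid) @ axes       # coordinates in the box frame
    return centroid, axes, local.min(axis=0), local.max(axis=0)

def end_face_centers(points):
    """Centers of the two box faces that cap the longest axis."""
    centroid, axes, lo, hi = fit_obb(points)
    mid = 0.5 * (lo + hi)
    face_lo = centroid + axes @ np.array([lo[0], mid[1], mid[2]])
    face_hi = centroid + axes @ np.array([hi[0], mid[1], mid[2]])
    return face_lo, face_hi

def stitch_spacing(stitch_a, stitch_b):
    """Smallest distance between end-face centers of two neighbouring stitches."""
    return min(np.linalg.norm(fa - fb)
               for fa in end_face_centers(stitch_a)
               for fb in end_face_centers(stitch_b))

# Synthetic stitches: two 10 mm long clusters, parallel and offset by 3 mm.
xs = np.linspace(0.0, 10.0, 21)
stitch_a = np.array([[x, y, z] for x in xs for y in (-0.2, 0.2) for z in (-0.1, 0.1)])
stitch_b = stitch_a + np.array([0.0, 3.0, 0.0])
spacing = stitch_spacing(stitch_a, stitch_b)
```

For the synthetic clusters the recovered spacing equals the 3 mm offset, which is the quantity that would be compared against the caliper measurement.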

3. Tool Repeatability

The pose repeatability of the tool was tested following the guidelines of the ISO 9283:1998 standard [29]. ISO 9283:1998 defines methods for specifying and testing various characteristics of industrial robots and is widely accepted by manufacturers when representing their products. Furthermore, ISO 8373 [30] requires an “industrial robot” to have three or more axes. Since the proposed tool only provides two axes (the third one being for the gripper), it is unclear whether ISO 9283:1998 can be applied to assess its characteristics. Nevertheless, using an accepted industry standard to characterize an actuated surgical tool enables a fair comparison with other similar devices.

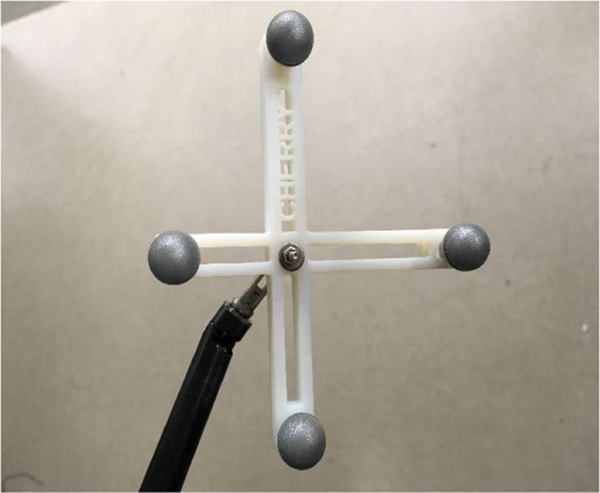

Configuration and Protocol: We use a Northern Digital Inc. (NDI) Polaris Vega to measure the position and orientation of the tool. The 3D repeatability of the sensor was measured by NDI to be ±0.06 mm. We used the marker “Cherry1” from an open source library [31]. The marker frame was 3D printed and fastened to the gripper by a screw inserted through the jaws, as illustrated in Figure 8. The tool was mounted on a KUKA IIWA and the marker was positioned in the non-extended pyramid of the sensor. We let the actuators warm up for several hours and then started the execution of trajectories. ISO 9283:1998 requires moving to five different configurations at least 30 times. Because the kinematics of the tool are limited to two joints, these five points cannot define the largest test cube required by ISO 9283:1998. Therefore, we selected five joint configurations near the boundaries of the configuration space while preserving the visibility of the marker. After reaching a configuration and waiting for a 15-second settling time, the position and orientation of the marker were recorded. That process was repeated 30 times.

Figure 8.

Marker fastened to the end of the assistant grasper.

The repeatability was measured to be 5.3989 mm, which is significant given the short length of the tool tip. The main cause of this poor repeatability is the backlash of the gripper and the wrist of the tool. In comparison, industrial manipulators, which are orders of magnitude larger, typically report submillimeter repeatability.
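The text does not spell out the formula behind this figure. As a point of reference, ISO 9283 defines the position repeatability at a commanded pose as the mean distance of the attained positions to their barycenter plus three standard deviations of that distance. The sketch below implements that definition for one pose; whether the reported value was computed exactly this way, and how the five configurations were combined, is an assumption.

```python
import numpy as np

def position_repeatability(positions):
    """RP = mean(l) + 3 * std(l), where l is each sample's distance to the barycenter."""
    positions = np.asarray(positions, dtype=float)
    barycenter = positions.mean(axis=0)
    distances = np.linalg.norm(positions - barycenter, axis=1)
    return distances.mean() + 3.0 * distances.std(ddof=1)

# Synthetic check: 30 visits to one pose, alternating 1 mm either side of the target.
samples = np.array([[1.0, 0.0, 0.0], [-1.0, 0.0, 0.0]] * 15)
rp = position_repeatability(samples)
```

In this synthetic case every sample lies exactly 1 mm from the barycenter, so the spread term vanishes and the repeatability equals 1 mm. Backlash tends to produce this kind of bimodal pattern, which inflates the mean distance even when each cluster is tight.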

4. Ex-Vivo Vaginal Cuff Closure

To demonstrate the accuracy and efficacy of the suturing system, STAR (N=6) was compared to surgeons performing simulated closure of the vaginal cuff using either a laparoscopic (LAP) approach (N=6) or robotic assisted surgery (RAS) (Da Vinci surgical system) (N=6). The average time per knot, time per suture, number of mistakes, suture spacing, and suture bite were measured for each tissue depth. For these experiments, the average time per knot was calculated as the number of seconds it took each modality to throw and tie the beginning stitch. The average time per suture was measured as the number of seconds between consecutive suture throws. The average number of mistakes was defined as the total number of misplaced sutures, locked sutures, or repositioned sutures per tissue sample (STAR, LAP, RAS), as well as the number of human interventions (STAR). Average suture spacing was measured as the distance in millimeters between two consecutive sutures, collected for all sutures on the front and back of each tissue sample (see Figure 9). Average bite depth was measured as the distance from the top edge of the vaginal cuff to each suture’s entry and exit locations in the vaginal cuff, as illustrated in Figure 9. Surgeons were instructed to use the same spacing and consistency they use on humans and told that the emphasis would be on assessing the consistency of the spacing. The STAR was instructed to use 3 mm spacing between stitches and a 3 mm bite depth. The STAR and surgeons used knotless barbed sutures for the ex-vivo (and in-vivo) experiments. An image of a barbed suture used by the STAR is shown in Figure 3.

Figure 9.

Illustration of suture spacing and bite metrics on vaginal cuff phantom tissue

The results of the ex-vivo comparison tests are displayed in Table 1. From the study, we observe that STAR's average time per knot (54 seconds) was shorter than that of LAP and RAS, whose average knot times were 215 seconds and 202 seconds respectively. Additionally, STAR made only one mistake across all tissue samples, compared to 30 and 13 mistakes for LAP and RAS respectively, although this difference was not found to be statistically significant (p > 0.05). When measuring suture spacing to quantify anastomosis quality, STAR's average spacing of 2.63 mm was less than that for LAP (4.22 mm) and RAS (5.05 mm). The average suture spacing was significantly more consistent for STAR than for LAP and RAS (p < 0.05). Likewise, when considering bite depth as a metric for suture quality, STAR had an average bite depth of 3.29 mm, compared to 3.80 mm and 2.30 mm for LAP and RAS respectively. The average bite depth for STAR was not statistically more consistent than that for LAP and RAS (p > 0.05).

Table 1.

Ex-vivo vaginal cuff closure.

| Modality | Time per knot (seconds) | Time per stitch (seconds) | Number of mistakes | Suture spacing (mm) | Bite depth (mm) |
|---|---|---|---|---|---|
| STAR (with robotic assistant) | 54.00 ± 2.61 | 23.38 ± 2.52 | 1 | 2.63 ± 1.66 | 3.29 ± 1.32 |
| STAR (with human assistant) | 83.74 ± 43.60 | 45.63 ± 9.46 | 5 | 2.60 ± 1.04 | n/a |
| LAP | 215.67 ± 49.42 | 92.15 ± 41.93 | 30 | 4.22 ± 2.64 | 3.80 ± 1.70 |
| RAS | 202.17 ± 27.93 | 103.38 ± 44.57 | 13 | 5.05 ± 2.42 | 2.30 ± 1.49 |

Additionally, as illustrated in Table 1, the results from these experiments correlate well with our previously published work using the STAR system with a human assistant to perform linear suturing in porcine cadaver tissues [15]. Mean suture spacing was nearly identical between STAR with a human assistant and STAR with a robotic assistant (2.60±1.04 mm and 2.63±1.66 mm respectively). Additionally, the average time per stitch and average time per knot were markedly improved with the robotic assistant, which can be attributed to the use of barbed suture and the anchor mechanism in this study.
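To make the timing differences in Table 1 easier to read, the sketch below restates the reported mean times as ratios relative to STAR with the robotic assistant. The dictionary layout and the function name are hypothetical. Only the means are used, so the ratios say nothing about variability or statistical significance, and the human-assistant row comes from the earlier linear suturing study [15] rather than from the same experiment.

```python
# Mean times in seconds, transcribed from Table 1
TABLE_1 = {
    "STAR (robotic assistant)": {"knot": 54.00, "stitch": 23.38},
    "STAR (human assistant)": {"knot": 83.74, "stitch": 45.63},
    "LAP": {"knot": 215.67, "stitch": 92.15},
    "RAS": {"knot": 202.17, "stitch": 103.38},
}


def ratio_to_star(modality, metric, baseline="STAR (robotic assistant)"):
    """How many times longer `modality` takes than the baseline on `metric`."""
    return TABLE_1[modality][metric] / TABLE_1[baseline][metric]


for name in TABLE_1:
    knot = ratio_to_star(name, "knot")
    stitch = ratio_to_star(name, "stitch")
    print(f"{name}: knot x{knot:.2f}, stitch x{stitch:.2f}")
```

On these means, LAP and RAS take roughly 3.7 to 4.4 times as long as STAR with the robotic assistant for both knots and stitches.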

5. In-Vivo Vaginal Cuff Closure

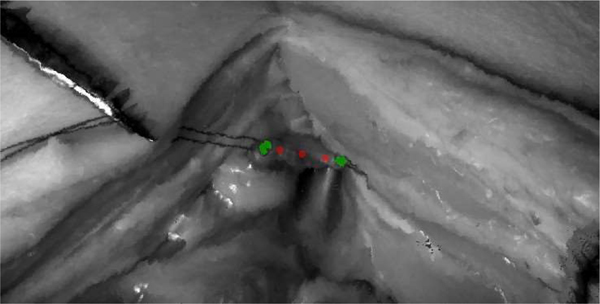

To demonstrate clinical workflow for vaginal cuff closure under laparoscopic conditions, the STAR first generated a surgical plan to suture the vaginal cuff informed by idealized number, location, and spacing for suture placement as found previously [14], [15]. An illustration of the plan is overlaid on top of the 3D point cloud in Figure 10.

Figure 10.

Suturing plan on top of the 3D point cloud. The green dots are the bounds of the suturing procedure and the red dots are the planned stitches.
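The idea of placing planned stitches between two boundary points at a programmed spacing can be sketched as follows. This is a simplified illustration, not the planner described in [14], [15]: it assumes the two bounds are 3D points in millimeters, places stitches on the straight segment between them, and ignores the tissue surface in the point cloud. The function name and coordinates are hypothetical.

```python
import math


def plan_stitches(start, end, spacing_mm=3.0):
    """Return evenly spaced stitch targets strictly between start and end.

    The segment is divided into the whole number of intervals that brings
    the actual spacing closest to spacing_mm.
    """
    length = math.dist(start, end)
    if length == 0:
        raise ValueError("start and end must be distinct points")

    # Nearest whole number of intervals, with at least one interval
    intervals = max(1, round(length / spacing_mm))

    stitches = []
    for i in range(1, intervals):
        t = i / intervals  # fraction of the way from start to end
        stitches.append(tuple(s + t * (e - s) for s, e in zip(start, end)))
    return stitches


# Hypothetical bounds 12 mm apart give three interior stitches 3 mm apart
for point in plan_stitches((0.0, 0.0, 0.0), (12.0, 0.0, 0.0)):
    print(point)
```

Dividing the segment evenly, rather than stepping by a fixed 3 mm and leaving a remainder, keeps the spacing uniform along the whole closure.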

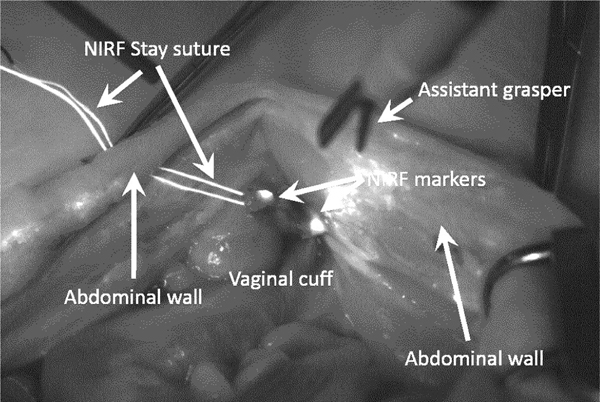

Finally, STAR executed the surgical plan using a mechanized suturing tool with 2–0 barbed suture, while performing suture management in the surgical field with an automated surgical grasper. After the anastomosis, the vaginal cuff was dissected from the animal and analyzed for average suture spacing, rupture strength, and procedure time. During the experiment, one of the two NIRF stay sutures broke while being installed inside the abdominal cavity and could only be replaced with a non-NIRF one. Therefore, we resorted to using fiducial markers, depositing a small drop of NIRF glue near the corners of the vaginal cuff as seen in Figure 11.

Figure 11.

Enhanced NIR image during in-vivo experiment. One NIRF stay suture was damaged during the experiment and was replaced by a non-NIRF one. Therefore, we used NIRF markers that were manually installed on the corners of the vaginal cuff. The picture shows the remaining NIRF stay suture entering and exiting the abdominal cavity.

After manual dissection and linearization of the vaginal cuff, the NIRF markers were manually initialized, and the STAR generated a surgical plan for a knot and three stitches with programmed 3 mm suture spacing. STAR then performed anastomosis by executing collaborative suturing and suture management within the 10 × 10 × 10 cm workspace. STAR successfully placed one knot and three running sutures to close the vaginal cuff. Average measured suture spacing was 2.75±0.43 mm with a total anastomosis time of 5.43 minutes. After dissection of the vaginal cuff, the tissue was placed on a linear stage with a force sensor. Tissue edges were secured to either side of the linear stage, and the anastomosis was stretched to a force of 10 N without rupture.

DISCUSSION AND CONCLUSION

Despite recent advances in laparoscopic and robotically assisted hysterectomy, surgical times and complication rates remain unchanged. Dehiscence of the vaginal cuff still occurs in nearly 5% of the cases including those where the da Vinci surgical system is used.

In the ex-vivo porcine tests, we found that STAR was significantly more consistent than LAP and RAS in suture spacing; it also showed lower variability in bite depth and fewer mistakes, although these two differences did not reach statistical significance. We attribute the improved consistency of STAR in this suturing task to the superior accuracy and repeatability of the robotic system. After demonstrating superior suture consistency on the vaginal cuff as compared to LAP and RAS modalities, STAR was used to perform complete anastomosis of the vaginal cuff in a laparoscopic hysterectomy model. In previous work, STAR required the presence of a human assistant to perform management of the suture in the surgical field, relied on NIRF fiducials that the operating surgeon manually placed on target tissue, and performed suturing motions with no surgical constraints. To eliminate these limitations for a laparoscopic procedure, three new technologies have been incorporated into the surgical platform. First, a second robotic arm with an articulated grasper was integrated into the system. This second arm replaced the assistant surgeon by enabling STAR to grasp, manipulate, and remove excess suture from the surgical field. The collaborative motions between the two robots allow STAR to place a running stitch while pulling excess suture from the surgical field to prevent tangling and locking stitches. Second, we developed NIRF suture that is placed by the operating surgeon to linearize the vaginal cuff for suturing. Linearization of the vaginal cuff is already performed clinically to stage the tissue for anastomosis, so the surgeon workflow remains unchanged. By using NIRF suture, STAR can detect, segment, and identify the corners of the vaginal cuff without the extra step of manually applying NIRF fiducials. Finally, the robotic motions of both surgical arms are limited with a Remote Center of Motion (RCM) to simulate laparoscopic constraints. By incorporating the RCM into robotic control, we ensure all tool motions would be possible in a clinical laparoscopic procedure. With the addition of these technologies, STAR performed anastomosis of the vaginal cuff in an in-vivo porcine model. To the best of our knowledge, this is the first time a surgical robot has performed a suturing task in soft tissue in a laparoscopic procedure.
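A Remote Center of Motion constraint requires the tool shaft to keep passing through a fixed point, such as the trocar entry. The sketch below checks that condition geometrically by measuring the distance from the RCM point to the line through the tool shaft. It is an illustrative check only, not the controller used on STAR; the function names, the coordinates, and the 1 mm tolerance are hypothetical.

```python
import math


def rcm_deviation(tool_base, tool_tip, rcm_point):
    """Shortest distance (mm) from the RCM point to the tool shaft axis.

    The shaft is treated as the infinite line through tool_base and tool_tip.
    """
    axis = [t - b for b, t in zip(tool_base, tool_tip)]
    rel = [r - b for b, r in zip(tool_base, rcm_point)]
    axis_len = math.sqrt(sum(a * a for a in axis))
    if axis_len == 0:
        raise ValueError("tool_base and tool_tip must be distinct points")

    # |axis x rel| / |axis| is the point-to-line distance
    cx = axis[1] * rel[2] - axis[2] * rel[1]
    cy = axis[2] * rel[0] - axis[0] * rel[2]
    cz = axis[0] * rel[1] - axis[1] * rel[0]
    return math.sqrt(cx * cx + cy * cy + cz * cz) / axis_len


def satisfies_rcm(tool_base, tool_tip, rcm_point, tol_mm=1.0):
    """True if the shaft passes within tol_mm of the RCM point."""
    return rcm_deviation(tool_base, tool_tip, rcm_point) <= tol_mm


# Hypothetical pose: shaft along z, RCM point offset 5 mm from the axis
print(rcm_deviation((0, 0, 0), (0, 0, 100), (3, 4, 50)))
print(satisfies_rcm((0, 0, 0), (0, 0, 100), (0, 0, 50)))
```

A planner could reject or correct any candidate tool pose for which this deviation exceeds the chosen tolerance.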

One limitation of this study is that the in-vivo testing could not be performed under complete laparoscopic conditions with insufflation. Due to the large footprint of STAR's vision system, the animal model had to be cut from pubis to umbilicus so that the NIR and plenoptic cameras could visualize the vaginal stump in the pelvis. This was the only deviation from a completely laparoscopic procedure in the in-vivo model, as all staging, planning, and suturing were performed under laparoscopic conditions. To perform a completely laparoscopic procedure from dissection to anastomosis, we will need to reduce the footprint of the camera to that of a laparoscopic borescope. Work is ongoing to make the vision system compatible with the laparoscopic approach by using a borescope with a relay lens network to capture surgical images through a 10 mm incision. Initial results have demonstrated that this borescope is capable of reconstructing surgical images with depth accuracy of 0.008 mm and is even sensitive enough to detect 4–0 suture in the surgical field [32]. The borescope is also compatible with NIR cameras, indicating that it could simultaneously provide the accurate tissue tracking and metric coordinates necessary for precise robotic control. After incorporating this camera into the STAR system, we will perform complete laparoscopic anastomosis of the vaginal cuff in an in-vivo porcine model.

Using the ISO 9283:1998 standard [29], the repeatability error of the assistant tool motion was calculated to be significantly greater than that of industrial robots. This result is not surprising, since the performance requirements for an assistant surgical tool can vary greatly depending on the surgical application, whereas industrial robots always require high repeatability and precision for assembly tasks. For instance, despite the large repeatability error of the assistant tool, we did not observe a degradation in the performance of the suture tensioning technique we report in this paper. This phenomenon is illustrated in Figure 12, where the assistant tool has been fully articulated in preparation for suture tensioning. When fully articulated, the assistant tool creates an area of roughly 300 mm² (shaded region) that can be used to catch the target suture. Numerically, this capture area is more than 50 times the repeatability of the assistant tool, ensuring that the suture is tensioned despite inaccurate placement. For surgical tasks that require sub-millimeter accuracy, such as needle grasping or tissue debridement, the assistant tool repeatability would need to be improved. Future work to eliminate tool backlash will be performed so that the assistant tool can be used for grasping tasks.
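The comparison between the capture area and the repeatability can be checked with simple arithmetic. The sketch below uses the two values reported in this paper. Because it compares an area in mm² with a length in mm, the ratio is only a rough figure of merit; the second calculation, which treats the capture region as a circle of equal area, is purely an assumption made here for illustration and does not describe the actual shape shown in Figure 12.

```python
import math

REPEATABILITY_MM = 5.3989  # measured repeatability reported in the paper
CAPTURE_AREA_MM2 = 300.0   # approximate capture area reported in the paper

# Ratio of the two reported numbers (mixes mm^2 and mm)
numeric_ratio = CAPTURE_AREA_MM2 / REPEATABILITY_MM

# Assumption for illustration only: a circle with the same area
equivalent_radius_mm = math.sqrt(CAPTURE_AREA_MM2 / math.pi)

print(f"area / repeatability = {numeric_ratio:.1f}")
print(f"equivalent circle radius = {equivalent_radius_mm:.2f} mm")
print(f"radius / repeatability = {equivalent_radius_mm / REPEATABILITY_MM:.2f}")
```

Under the circular assumption the linear margin is much smaller than the area ratio suggests, although the equivalent radius still exceeds the measured repeatability.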

This paper reported the design and execution of a new robotic surgical system capable of performing anastomosis of the vaginal cuff under laparoscopic conditions. The incorporation of a second robotic arm with a collaborative control scheme, NIRF suture, and RCM allows STAR to detect, generate, and execute surgical plans with more consistency than expert surgeons using LAP or RAS modalities. The system was also tested in an in-vivo animal model where a surgeon performed complete laparoscopic hysterectomy and linearization of the vaginal cuff, after which STAR closed the vaginal cuff under the same conditions. To our knowledge, this is the first time a surgical robot has executed part of a surgical task in soft tissue under laparoscopic conditions without human assistance. After incorporation of a new 3D and NIR fusion borescope, STAR will be able to perform complete anastomosis of the vaginal cuff in a fully laparoscopic procedure.

ACKNOWLEDGMENT

The authors would like to thank STORZ for the donation of the laparoscopic trainer, laparoscopic tools, and surgical tower used in the comparison tests. Additionally, the authors would like to thank the research animal facility at Children’s National Hospital for their support for in vivo testing.

Contributor Information

Simon Leonard, Laboratory for Computational Sensing and Robotics, Johns Hopkins University, Baltimore, MD, USA.

Justin Opfermann, Department of Mechanical Engineering, Johns Hopkins University, Baltimore, MD, USA.

Nicholas Uebele, Epic Systems Corporation, Verona, WI, USA.

Lydia Carroll, Rotary Mission Systems, Lockheed Martin, Mount Laurel, NJ, USA.

Ryan Walter, SURVICE Engineering, Belcamp, MD, USA.

Christopher Bayne, Department of Urology, University of Florida, Gainesville, FL, USA.

Jiawei Ge, Department of Mechanical Engineering, Johns Hopkins University, Baltimore, MD, USA.

Axel Krieger, Department of Mechanical Engineering, Johns Hopkins University, Baltimore, MD, USA.

REFERENCES

- [1] Jackson RC, Yuan R, Chow D-L, Newman W, and Çavuşoğlu MC, “Real-Time Visual Tracking of Dynamic Surgical Suture Threads,” IEEE Trans. Autom. Sci. Eng. Publ. IEEE Robot. Autom. Soc, vol. 15, no. 3, pp. 1078–1090, Jul. 2018, doi: 10.1109/TASE.2017.2726689.

- [2] Knoll A, Mayer H, Staub C, and Bauernschmitt R, “Selective automation and skill transfer in medical robotics: a demonstration on surgical knot-tying,” Int. J. Med. Robot, vol. 8, no. 4, pp. 384–397, 2012, doi: 10.1002/rcs.1419.

- [3] Mayer H et al., “Automation of Manual Tasks for Minimally Invasive Surgery,” in Fourth International Conference on Autonomic and Autonomous Systems (ICAS’08), Mar. 2008, pp. 260–265, doi: 10.1109/ICAS.2008.16.

- [4] Iyer S, Looi T, and Drake J, “A single arm, single camera system for automated suturing,” in 2013 IEEE International Conference on Robotics and Automation, May 2013, pp. 239–244, doi: 10.1109/ICRA.2013.6630582.

- [5] Krupa A et al., “Development of Semi-autonomous Control Modes in Laparoscopic Surgery Using Automatic Visual Servoing,” in Medical Image Computing and Computer-Assisted Intervention – MICCAI 2001, Berlin, Heidelberg, 2001, pp. 1306–1307, doi: 10.1007/3-540-45468-3_206.

- [6] Hwang M et al., “Efficiently Calibrating Cable-Driven Surgical Robots With RGBD Fiducial Sensing and Recurrent Neural Networks,” IEEE Robot. Autom. Lett, vol. 5, no. 4, pp. 5937–5944, Oct. 2020, doi: 10.1109/LRA.2020.3010746.

- [7] Tanwani AK, Sermanet P, Yan A, Anand R, Phielipp M, and Goldberg K, “Motion2Vec: Semi-Supervised Representation Learning from Surgical Videos,” in 2020 IEEE International Conference on Robotics and Automation (ICRA), May 2020, pp. 2174–2181, doi: 10.1109/ICRA40945.2020.9197324.

- [8] Preda N et al., “A Cognitive Robot Control Architecture for Autonomous Execution of Surgical Tasks,” J. Med. Robot. Res, vol. 01, no. 04, p. 1650008, Aug. 2016, doi: 10.1142/S2424905X16500082.

- [9] Cohen SL, Vitonis AF, and Einarsson JI, “Updated Hysterectomy Surveillance and Factors Associated With Minimally Invasive Hysterectomy,” JSLS, vol. 18, no. 3, 2014, doi: 10.4293/JSLS.2014.00096.

- [10] Smith K and Caceres A, “Vaginal Cuff Closure in Minimally Invasive Hysterectomy: A Review of Training, Techniques, and Materials,” Cureus, vol. 9, no. 10, doi: 10.7759/cureus.1766.

- [11] Nawfal AK, Eisenstein D, Theoharis E, Dahlman M, and Wegienka G, “Vaginal Cuff Closure during Robotic-Assisted Total Laparoscopic Hysterectomy: Comparing Vicryl to Barbed Sutures,” JSLS, vol. 16, no. 4, pp. 525–529, 2012, doi: 10.4293/108680812X13462882736772.

- [12] Akdemir A, Zeybek B, Ozgurel B, Oztekin MK, and Sendag F, “Learning curve analysis of intracorporeal cuff suturing during robotic single-site total hysterectomy,” J. Minim. Invasive Gynecol, vol. 22, no. 3, pp. 384–389, Apr. 2015, doi: 10.1016/j.jmig.2014.06.006.

- [13] Sendag F, Akdemir A, Zeybek B, Ozdemir A, Gunusen I, and Oztekin MK, “Single-site robotic total hysterectomy: standardization of technique and surgical outcomes,” J. Minim. Invasive Gynecol, vol. 21, no. 4, pp. 689–694, Aug. 2014, doi: 10.1016/j.jmig.2014.02.006.

- [14] Leonard S, Wu KL, Kim Y, Krieger A, and Kim PCW, “Smart Tissue Anastomosis Robot (STAR): A Vision-Guided Robotics System for Laparoscopic Suturing,” IEEE Trans. Biomed. Eng, vol. 61, no. 4, pp. 1305–1317, Apr. 2014, doi: 10.1109/TBME.2014.2302385.

- [15] Shademan A, Decker RS, Opfermann JD, Leonard S, Krieger A, and Kim PCW, “Supervised autonomous robotic soft tissue surgery,” Sci. Transl. Med, vol. 8, no. 337, pp. 337ra64–337ra64, May 2016, doi: 10.1126/scitranslmed.aad9398.

- [16] Rao P, “Dr. Nutan Jain: State of the Art Atlas and Textbook of Laparoscopic Suturing in Gynecology,” J. Obstet. Gynecol. India, vol. 66, no. 2, pp. 137–138, Apr. 2016, doi: 10.1007/s13224-015-0811-9.

- [17] Kleier DJ, “The Continuous Locking Suture Technique,” J. Endod, vol. 27, no. 10, pp. 624–626, Oct. 2001, doi: 10.1097/00004770-200110000-00006.

- [18] Hart S and Sobolewski CJ, “The benefits of using barbed sutures with automated suturing devices in gynecologic endoscopic surgeries,” Surg. Technol. Int, vol. 23, pp. 161–165, Sep. 2013.

- [19] Decker RS, Shademan A, Opfermann JD, Leonard S, Kim PCW, and Krieger A, “Biocompatible Near-Infrared Three-Dimensional Tracking System,” IEEE Trans. Biomed. Eng, vol. 64, no. 3, pp. 549–556, Mar. 2017, doi: 10.1109/TBME.2017.2656803.

- [20] Kapoor A, Li M, and Taylor RH, “Spatial motion constraints for robot assisted suturing using virtual fixtures,” Med. Image Comput. Comput.-Assist. Interv. MICCAI Int. Conf. Med. Image Comput. Comput.-Assist. Interv., vol. 8, no. Pt 2, pp. 89–96, 2005, doi: 10.1007/11566489_12.

- [21] Göpel T, Härtl F, Schneider A, Buss M, and Feussner H, “Automation of a suturing device for minimally invasive surgery,” Surg. Endosc, vol. 25, no. 7, pp. 2100–2104, Jul. 2011, doi: 10.1007/s00464-010-1532-x.

- [22] Reiley CE, Plaku E, and Hager GD, “Motion generation of robotic surgical tasks: Learning from expert demonstrations,” in 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, Aug. 2010, pp. 967–970, doi: 10.1109/IEMBS.2010.5627594.

- [23] International Organization for Standardization, “Medical electrical equipment — Part 4–1: Guidance and interpretation — Medical electrical equipment and medical electrical systems employing a degree of autonomy (ISO Standard IEC/TR 60601–4-1:2017),” 2017. https://www.iso.org/cms/render/live/en/sites/isoorg/contents/data/standard/07/07/70755.html (accessed Apr. 11, 2021).

- [24] Yang G-Z et al., “Medical robotics—Regulatory, ethical, and legal considerations for increasing levels of autonomy,” Sci. Robot, vol. 2, no. 4, Mar. 2017, doi: 10.1126/scirobotics.aam8638.

- [25] Haidegger T, “Autonomy for Surgical Robots: Concepts and Paradigms,” IEEE Trans. Med. Robot. Bionics, vol. 1, no. 2, pp. 65–76, May 2019, doi: 10.1109/TMRB.2019.2913282.

- [26] Yip M and Das N, “Robot autonomy for surgery,” in The Encyclopedia of Medical Robotics, 4 vols., World Scientific, 2018, pp. 281–313.

- [27] Shademan A, Dumont MF, Leonard S, Krieger A, and Kim PCW, “Feasibility of near-infrared markers for guiding surgical robots,” in Optical Modeling and Performance Predictions VI, Sep. 2013, vol. 8840, p. 88400J, doi: 10.1117/12.2023829.

- [28] Leonard S, Shademan A, Kim Y, Krieger A, and Kim PCW, “Smart Tissue Anastomosis Robot (STAR): Accuracy evaluation for supervisory suturing using near-infrared fluorescent markers,” in 2014 IEEE International Conference on Robotics and Automation (ICRA), May 2014, pp. 1889–1894, doi: 10.1109/ICRA.2014.6907108.

- [29] International Organization for Standardization, “Manipulating industrial robots — Performance criteria and related test methods (ISO standard 9283:1998),” 1998. https://www.iso.org/standard/22244.html (accessed Apr. 11, 2021).

- [30] International Organization for Standardization, “Robots and robotic devices — Vocabulary (ISO Standard 8373:2012),” 2012. https://www.iso.org/obp/ui/#iso:std:iso:8373:ed-2:v1:en (accessed Apr. 11, 2021).

- [31] Brown AJV, Uneri A, Silva TSD, Manbachi A, and Siewerdsen JH, “Design and validation of an open-source library of dynamic reference frames for research and education in optical tracking,” J. Med. Imaging, vol. 5, no. 2, p. 021215, Feb. 2018, doi: 10.1117/1.JMI.5.2.021215.

- [32] Saeidi H et al., “Autonomous Laparoscopic Robotic Suturing with a Novel Actuated Suturing Tool and 3D Endoscope,” in 2019 International Conference on Robotics and Automation (ICRA), May 2019, pp. 1541–1547, doi: 10.1109/ICRA.2019.8794306.