Delphi Title and Abstract

A CBPR-Enhanced Delphi Method: The Measurement Approaches to Partnership Success (MAPS) Case Study

As part of a 5-year study to develop and validate an instrument for measuring success in long-standing community-based participatory research (CBPR) partnerships, we used the Delphi method with a panel of 16 community and academic CBPR experts to assess the face and content validity of the instrument’s broad concepts of success and its measurement items. In addition to incorporating quantitative and qualitative feedback from two online surveys, we held a two-day face-to-face meeting with the Expert Panel to invite open discussion and diversity of opinion, in line with the CBPR principles framing and guiding the study. The meeting allowed experts to review the survey data (with anonymity maintained), convey their perspectives, and offer interpretations that the online surveys had not captured. Using a CBPR approach facilitated a synergistic process that moved beyond the consensus achieved in the initial Delphi rounds, enhancing both the Delphi technique and the development of the instrument’s items.

Introduction

The Delphi method uses a structured communication process to solicit and collate opinions from selected experts on topics where evidence is lacking or unclear (Hsu & Sandford, 2007; Okoli & Pawlowski, 2004). Developed by the RAND Corporation in the 1950s as a technique for forecasting the impact of technology on warfare (Suto et al., 2019), the method involves a series of questionnaires that allow each expert to reassess and modify their judgments based on the results from other experts in previous rounds (Delbecq et al., 1975; Hsu & Sandford, 2007). Expert anonymity is maintained to prevent the authority and reputation of any one participant from dominating the process (Dalkey & Helmer, 1963). Through this iterative feedback process, the range of opinions is expected to narrow and stabilize as the panel converges toward consensus (Hohmann et al., 2018; Linstone & Turoff, 1975).
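The narrowing and stabilizing of opinion described above can be illustrated with a short sketch. Many Delphi studies feed back a central tendency and a measure of spread for each item, then check whether the spread narrows and stops changing across rounds. The Python below is a minimal illustration of that general idea under stated assumptions, not the procedure used in MAPS: the function names, the toy ratings, the inclusive quartile method, and the stopping thresholds (an interquartile range of at most 1 on a 5-point scale, with no further narrowing between rounds) are all hypothetical choices made for illustration.

```python
import statistics
from typing import List, Sequence, Tuple


def feedback_summary(ratings: Sequence[int]) -> Tuple[float, float]:
    """Return the median and interquartile range (IQR) of one round's ratings.

    Statistics like these are what a facilitator might share with panelists
    before the next round. The inclusive quartile method is an assumption.
    """
    q1, _, q3 = statistics.quantiles(ratings, n=4, method="inclusive")
    return statistics.median(ratings), q3 - q1


def has_converged(iqr_by_round: Sequence[float], max_iqr: float = 1.0,
                  tolerance: float = 0.0) -> bool:
    """Hypothetical stopping rule: the spread is small and has stopped narrowing."""
    if len(iqr_by_round) < 2:
        return False  # stability can only be judged across at least two rounds
    small_spread = iqr_by_round[-1] <= max_iqr
    stable = iqr_by_round[-2] - iqr_by_round[-1] <= tolerance
    return small_spread and stable


# Toy ratings for a single item from eight hypothetical panelists (1-5 scale).
rounds: List[List[int]] = [
    [1, 2, 3, 4, 5, 5, 2, 4],  # Round 1: wide spread of opinion
    [3, 3, 4, 4, 4, 4, 5, 3],  # Round 2: after seeing group feedback
    [3, 3, 3, 4, 4, 4, 4, 4],  # Round 3: spread no longer narrowing
]

iqrs: List[float] = []
for number, ratings in enumerate(rounds, start=1):
    median, iqr = feedback_summary(ratings)
    iqrs.append(iqr)
    print(f"Round {number}: median={median}, IQR={iqr}, converged={has_converged(iqrs)}")
```

With these toy ratings the IQR falls from 2.25 to 1.0 and then holds at 1.0, so the hypothetical rule reports convergence only after Round 3. Requiring both a small spread and no further narrowing mirrors the idea that opinions should "decrease and stabilize," rather than treating any single low-spread round as final.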

Critics argue that the value of the resulting consensus may be undermined if experts converge their opinions too quickly, too easily, or toward a majority opinion (Bolger et al., 2011; Murray, 1979), producing outcomes that represent the lowest common denominator rather than the “best opinion” (Powell, 2003, p. 377). Others have criticized investigators for failing to define or report what they mean by consensus (Diamond et al., 2014) or to execute the Delphi method properly, which can yield inaccurate or skewed results (Boulkedid et al., 2011). To address these concerns, some researchers have modified the Delphi process (Bleijlevens et al., 2016; Farrell et al., 2015), including by using a community-based participatory research (CBPR) approach to promote greater interaction among experts (Dari et al., 2019; Escaron et al., 2016; Rideout et al., 2013; Suto et al., 2019).

CBPR, which emphasizes and celebrates differences among community and academic partners, embraces principles of mutual respect, power sharing, co-learning, balancing research and action, and a long-term commitment to achieving racial and health equity (Israel et al., 1998; Israel et al., 2013; Wallerstein & Duran, 2018). In this inclusive research approach, all partners contribute expertise and share decision-making through open dialogue to foster mutual learning, create knowledge, and integrate that knowledge with interventions and policies to promote health equity (Israel et al., 1998; Israel et al., 2019).

As shown in the following case study, combining CBPR principles with the Delphi method has the potential to strengthen the latter by bringing diverse perspectives together from community and academic experts to create collective and novel solutions. Providing an opportunity for face-to-face discussion and encouraging the expression of diverse opinions allows for richer and more inclusive outcomes than those based solely on the more reductionist approach that the Delphi process typically necessitates.

The Measurement Approaches to Partnership Success (MAPS) Study

We used the Delphi method as part of the MAPS study, a five-year NIH-funded project whose overall aim is to define and assess success in long-standing CBPR partnerships. In the MAPS study, long-standing partnerships were defined as those in existence for at least six years, that is, partnerships that have continued beyond a typical 5-year federal funding cycle (Israel et al., 2020). MAPS is being carried out by the Detroit Community-Academic Urban Research Center (Detroit URC), which was established in 1995 to foster CBPR partnerships aimed at understanding and addressing social determinants of health toward eliminating health inequities in Detroit (Israel et al., 2001). The Detroit URC is guided by a Board composed of members representing eight community-based organizations, two health and human service agencies, and three schools within an academic institution (see Acknowledgments). Building on the Detroit URC’s long-standing CBPR approach, the three aims of MAPS are to: (1) develop a clear definition of success in long-standing CBPR partnerships; (2) identify factors that contribute to success; and (3) design, test, and validate a practical measurement tool that partnerships can use to assess and strengthen their efforts to achieve health equity.

Expert Panelists.

The research team and the Detroit URC Board selected a 16-person national Expert Panel of eight community and eight academic experts with extensive CBPR experience and involvement in long-standing CBPR partnerships, based on their leadership in the field, contributions to the peer-reviewed literature, and diversity along multiple dimensions. Expert Panel members included academics in schools of public health and social work at seven universities across the United States and community experts from eight community-based organizations. Together, they represented all regions of the United States, including urban, rural, and tribal communities, and were racially and ethnically diverse.

To address study aims 1 and 2, members of the Expert Panel first participated in key informant interviews. Data from these interviews, combined with results from a comprehensive review of the literature (Brush et al., 2020; Israel et al., 2020), helped the research team draft the first iteration of the MAPS instrument, composed of 96 items across seven key dimensions of success: equitable relationships (22 items), partnership synergy (7 items), reciprocity (9 items), competence enhancement (12 items), sustainability (18 items), realization of benefits over time (17 items), and achievement of intermediate and long-term goals/objectives (11 items). Involving the Expert Panel in these in-depth interviews was another way to incorporate diverse community and academic perspectives equitably into the process, and it informed the research team beyond what we could have produced had we drafted the instrument based solely on our own experience and the literature.
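As a quick consistency check on the counts above, the draft item pool can be recorded as a simple mapping from dimension to number of items. This Python snippet is only a bookkeeping illustration; the variable names are our own, and it makes no claim about how the MAPS team stored or analyzed the instrument.

```python
# Draft 1 of the MAPS item pool, using the per-dimension counts reported above.
DRAFT_ITEMS_PER_DIMENSION = {
    "equitable relationships": 22,
    "partnership synergy": 7,
    "reciprocity": 9,
    "competence enhancement": 12,
    "sustainability": 18,
    "realization of benefits over time": 17,
    "achievement of intermediate and long-term goals/objectives": 11,
}

total_items = sum(DRAFT_ITEMS_PER_DIMENSION.values())
assert len(DRAFT_ITEMS_PER_DIMENSION) == 7  # seven key dimensions of success
assert total_items == 96  # matches the size of the first draft

# Share of the draft pool devoted to each dimension, largest first.
for dimension, count in sorted(DRAFT_ITEMS_PER_DIMENSION.items(),
                               key=lambda pair: pair[1], reverse=True):
    print(f"{dimension}: {count} items ({count / total_items:.1%})")
```

The per-dimension counts sum to 96, and equitable relationships accounts for the largest share of the first draft (22 of 96 items, about 23%).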

Delphi Stages 1 and 2.

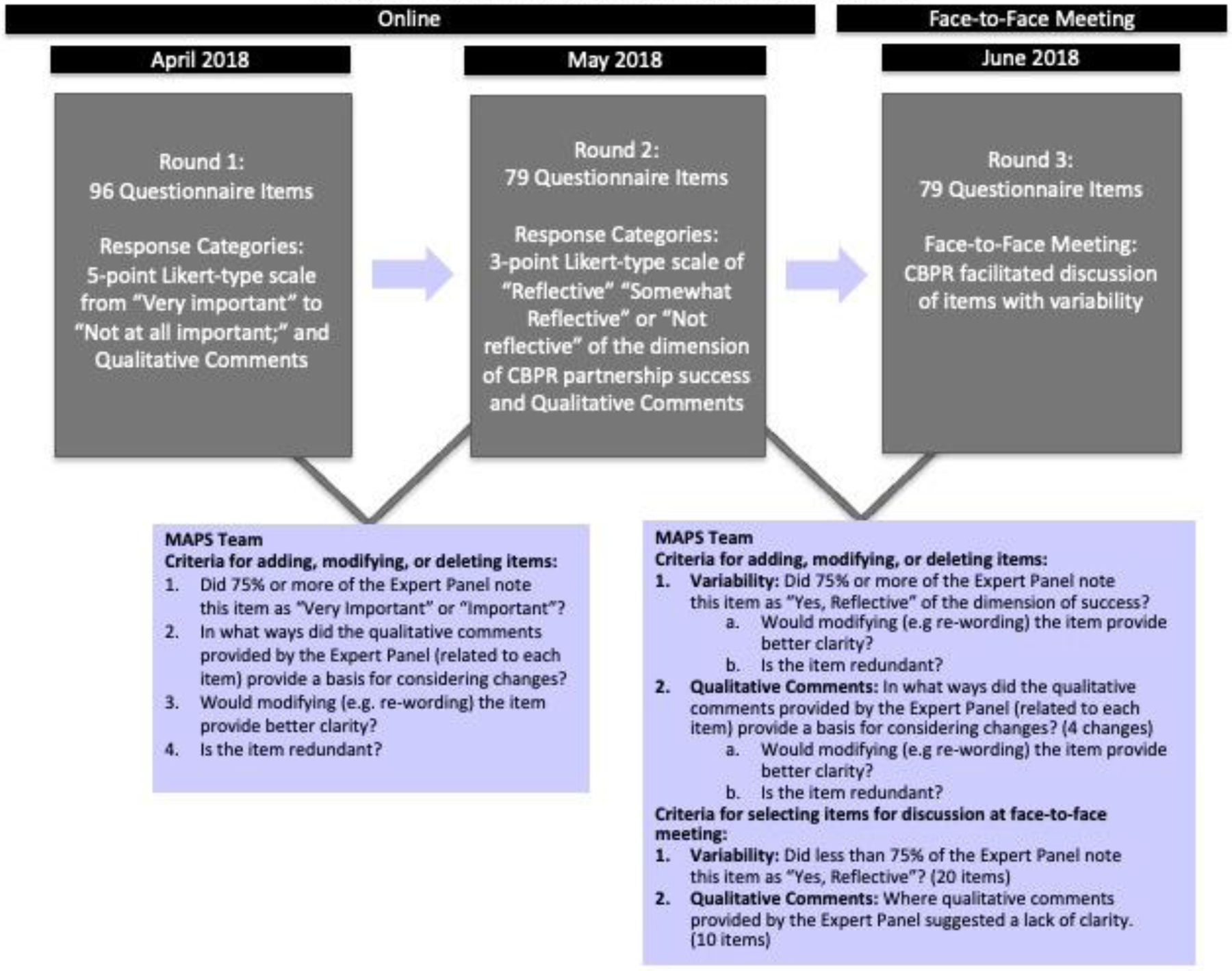

As depicted in Figure 1, we then used a mixed-methods approach to conduct a three-round Delphi process with the Expert Panel to assess the face and content validity of the instrument items. The first two rounds followed the traditional Delphi process, using online surveys that asked panelists to apply specific criteria in evaluating the instrument items. In Round 1, panelists individually and anonymously rated the importance, appropriateness, and clarity of each of the 96 items as it related to CBPR partnership success on a 5-point Likert-type scale (from very important to not very important) and indicated whether the item should be retained, modified, or removed. Items were retained if 75% or more of the Panel rated them as “Very Important” or “Important” for assessing the relevant dimension of partnership success. Panelists’ qualitative comments provided a basis for considering changes, such as re-wording an item to improve clarity or removing items deemed redundant (Coombe et al., 2020). After we reviewed the Round 1 quantitative and qualitative responses, 79 of the original 96 items were retained, and 33 of those 79 items were reworded. In Round 2, one month later, panelists rated the 79 items on a 3-point Likert-type scale as “reflective” or “not reflective” of dimensions of CBPR partnership success. After analyzing the Round 2 responses, we did not delete any items. Instead, we flagged items that showed variability in responses among Expert Panelists (20 items), as well as items with qualitative comments warranting collective discussion (10 items). Four items were also reworded.

Figure 1: The CBPR-Enhanced Delphi Process with Expert Panel
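The Round 1 retention rule is a percentage-agreement cutoff and can be sketched in a few lines of Python. The sketch assumes that the 5-point scale is coded 1 to 5, with 5 = “Very Important” and 4 = “Important”; that coding, the function names, and the example ratings are hypothetical. It captures only the quantitative cutoff: in MAPS, decisions to retain, modify, or remove items also drew on panelists’ qualitative comments, which no threshold can reproduce.

```python
from typing import Sequence

# Hypothetical numeric coding of the 5-point importance scale:
# 5 = "Very Important", 4 = "Important", 1-3 = the lower categories.
TOP_TWO_CATEGORIES = {4, 5}
RETENTION_THRESHOLD = 0.75  # "75% or more of the Panel"


def top_two_share(ratings: Sequence[int]) -> float:
    """Proportion of panelists rating an item 'Very Important' or 'Important'."""
    if not ratings:
        raise ValueError("an item needs at least one rating")
    return sum(rating in TOP_TWO_CATEGORIES for rating in ratings) / len(ratings)


def meets_retention_cutoff(ratings: Sequence[int],
                           threshold: float = RETENTION_THRESHOLD) -> bool:
    """True when at least `threshold` of the panel chose one of the top two categories."""
    return top_two_share(ratings) >= threshold


# Hypothetical ratings from a 16-member panel for two items.
item_a = [5] * 7 + [4] * 5 + [3] * 4  # 12 of 16 in the top two categories
item_b = [5] * 6 + [4] * 5 + [2] * 5  # 11 of 16 in the top two categories

print(top_two_share(item_a), meets_retention_cutoff(item_a))  # 0.75 True
print(top_two_share(item_b), meets_retention_cutoff(item_b))  # 0.6875 False
```

With 16 panelists, an item needs at least 12 ratings in the top two categories (12/16 = 75%) to meet the cutoff; an item with 11 (about 69%) falls short. Using `>=` rather than `>` keeps an item that lands exactly on 75%, matching the wording “75% or more.”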

Delphi Stage 3.

The third Delphi round was a two-day face-to-face meeting that gave Expert Panel members the time and opportunity to discuss the 20 items with response variability and the 10 items flagged by the research team for additional review. We followed CBPR principles and processes to facilitate open, in-depth discussion among the panelists, for example, by valuing and encouraging everyone’s opinion, agreeing to disagree, embracing diversity, and providing a respectful environment for exchanging ideas (Israel et al., 2018). By highlighting differences in panelists’ ratings of items and drawing on their familiarity with CBPR principles, we expected to generate new insights that had not emerged previously. Thus, at the beginning of the meeting, we explained to the panelists that achieving consensus was not the primary objective. Rather, the intent was to reach a shared understanding, or construct a “collective truth,” of the rationale for identifying and prioritizing items to be included in the instrument for further validation.

An Example from the MAPS Delphi Face-to-Face Meeting

In addition to helping finalize the MAPS items by examining those with variable responses in the first two rounds, the face-to-face meeting gave the MAPS research team the opportunity to engage with the Expert Panelists collaboratively, through back-and-forth dialogue facilitated by a research team member with expertise in group process. At the request of the Expert Panel, we held a broad conversation about equity and CBPR and the extent to which issues around equity were captured in the MAPS instrument. This unplanned discussion, described below, revealed new issues and insights that would not have surfaced in a traditional Delphi format.

Based on our key informant interviews and literature review, our research team defined Equity as “an environment has been created that: (a) enhances open, equitable, collaborative, and authentic relationships (i.e., relational equity); and (b) sharing of power and resources within the partnership (i.e., structural equity).” Panelists felt the original definition was too strongly focused on relationships and that equity should also be about power and resource sharing. One academic panelist noted:

Equity is both relational and structural. We created items that are both relational and structural. Many items are relational and soft items and [there are] fewer structural items. Perhaps, maybe separate into two constructs? Naming them these things makes sure that the structural is embedded. Structural items include percentage of dollars shared.

Another panelist, also an academic expert, responded to the comment by pointing out that the structural aspect was partly reflected in another dimension of partnership success, Reciprocity.

Those comments prompted a community panelist to challenge the possibility of equity in CBPR partnerships at all:

Equitable relationships are not possible between communities and academics – I would start there and say that I don’t think it makes sense to ask about equitable relationships…You’ve got to change structure. You can’t ask about [equitable relationships] because they don’t exist. There can’t be equity between me and [a university], between the power and money that [a university] has and how the media sees that, and how external forces see that.

Other panelists questioned whether equity was really about a 50–50 balance, whether equitable relationships were a function of who was involved (individuals vis-a-vis institutions), and whether equity could be achieved by allocating resources to where they were needed, rather than distributing them evenly among partners. This led to the question, “who has the power to make resources equitable and to change institutional practices and policies?” A community panelist shared an example to demonstrate that changing institutional policies was possible. By acting collectively, her partnership was able to change a university’s Institutional Review Board (IRB) process and alter the way the university’s cancer center examined and assessed disparities in cancer. She stressed: “We did it collectively. We strived for it as you can’t do it by yourself.”

This example led several panelists to comment on the importance of balancing the structural and relational components (or the “analytical” and “people” sides) of equity. A panelist suggested:

Maybe it would help if Section A [of the instrument] became Equitable Partnerships and under that are the process dimensions, the structural (sharing resources), but in a way that conveys the connection to power. As for the relationship issues, it would help if they were a little more and take into account some partners. In our work, the health department, who is an important partner, noted that they would come back after the touchy-feely part is over. (Academic Expert)

Another academic panelist followed:

When I think of equitable relationships, I think of my responsibility to be at the table and bring structural elements to the table. It is almost tied to cultural humility. It comes to: are we answering as a whole or as an individual? I think we are in agreement with these items. Am I answering this as [name of panelist] with my partners? We are all in agreement in the definitions but getting to the more concrete ideas to get to later.

Both comments helped the MAPS research team realize that some of the Equity items needed to be clarified and revised. Subsequent discussions also led to questions about the potential use of the instrument – specifically, whether it is a scale for assessing partnership success or a tool that helps CBPR partnerships evaluate their state of development and identify changes that are needed to ensure long-term success.

Lessons Learned

The traditional Delphi method limits the extent to which diverse opinions can be expressed, discussed in depth, and considered by the group as a collective. Instead, expert opinions are largely individualized, with little or no opportunity for give-and-take dialogue around complex topics. The MAPS approach demonstrates how the Delphi process can be enriched by incorporating CBPR principles to achieve a more in-depth, nuanced, and collective understanding of phenomena. Below, we highlight the lessons learned and practice implications for a CBPR-enhanced Delphi method.

The MAPS approach used a set of established criteria to guide the Delphi process and analyzed both quantitative and qualitative responses to eliminate redundancies and ensure items adequately captured dimensions of partnership success from the perspective of CBPR experts, thus assessing the face and content validity of the item pool. The integration of qualitative and quantitative data, without prioritizing one form of data over the other (i.e., not relying solely on a quantitative Delphi cutoff), reflected our use of CBPR principles – specifically, co-learning – in practice.

Our efforts to reach consensus through the initial anonymous rounds (Rounds 1 and 2) were designed strategically to lay the groundwork for deeper in-person discussions with the MAPS Expert Panel in Round 3. The face-to-face meeting created opportunities for diverse opinions to be expressed and fostered an atmosphere of listening and curiosity that uncovered multiple perspectives and deeper levels of understanding. Additionally, because all MAPS Expert Panelists were experienced academic and community partners with background and skills in applying CBPR principles, they shared an understanding of the process as inclusive. Our process also benefited from a group that was heterogeneous by race, ethnicity, geography, and research interest, rather than the more uniform group of experts typically represented in a Delphi approach. The intentional selection of equal numbers of community and academic experts was also essential and methodologically beneficial. This suggests that the selection of panelists and a broader interpretation of “expert” are essential elements of this approach, and that integrating a community-academic panel into Delphi methods facilitates greater understanding of complex problems, improves bidirectional learning in informing research and practice outcomes, and may lead to new research questions and the identification of gaps in knowledge.

Another critical aspect of the CBPR-enhanced Delphi process was careful facilitation of the face-to-face meeting. It was essential to have an individual experienced with Delphi methods and familiar with CBPR principles and group dynamics facilitate the discussions. The facilitator invited differing opinions and handled them in ways that led to respectful and fruitful discussions in keeping with CBPR principles. Moreover, working with both the quantitative and qualitative results of the Delphi rounds allowed discussions to go beyond simple agreement and disagreement, which led to a deeper understanding of the constructs and clearer wording of the items included in the final MAPS instrument.

Conclusion

The Delphi method has been shown to be useful in generating opinions and reactions to information that will inform group decision making. A strength of the method is the opportunity to view experts’ reflections on a given subject area and rank opinions without any one expert dominating the process. Adding a face-to-face component to the Delphi process creates an opportunity to reflect at a deeper level and to facilitate discussion that allows for debate and work toward a shared understanding or “collective truth.” Using the Delphi method with groups that have knowledge and skills related to CBPR principles, as demonstrated in the MAPS approach, adds two critical components to the process. First, the CBPR process values qualitative information, as well as the combined use of qualitative and quantitative information. When qualitative information is integrated into the Delphi rounds, it deepens understanding of the rankings and supports a more nuanced interpretation of the content. Second, integrating CBPR principles and processes into face-to-face meetings creates an atmosphere of shared respect for diverse opinions and sets the stage for all voices to be heard. This in turn allows for the creation of a “third view” (Coombe et al., 2020) of new insights or revelations that might not have emerged at earlier stages of the process. Using a CBPR-enhanced Delphi method facilitates a synergistic process that moves beyond consensus to enhance the Delphi technique and the development of the MAPS instrument’s items.

Acknowledgments

The research team gratefully acknowledges the MAPS Expert Panel for their contributions to the conceptualization and implementation of this project: Alex Allen, Elizabeth Baker, Linda Burhansstipanov, Cleopatra Caldwell, Bonnie Duran, Eugenia Eng, Ella Greene-Moton, Marita Jones, Meredith Minkler, Angela G. Reyes, Al Richmond, Zachary Rowe, Amy J. Schulz, Peggy Shepard, Melissa Valerio, and Nina Wallerstein. This publication was made possible by the National Institutes of Health (NIH), National Institute of Nursing Research (NINR) award R01NR016123.

Footnotes

The authors have no conflicts of interest to disclose.

References

- Bleijlevens MH, Wagner LM, Capezuti EA, & Hamers JP (2016). Physical restraints: Consensus of a research definition using a modified Delphi technique. Journal of the American Geriatrics Society, 64(11), 2307–2310. 10.1111/jgs.14435

- Bolger F, Stranieri A, Wright G, & Yearwood J (2011). Does the Delphi process lead to increased accuracy in group-based judgmental forecasts or does it simply induce consensus amongst judgmental forecasters? Technological Forecasting and Social Change, 78(9), 1671–1680. 10.1016/j.techfore.2011.06.002

- Boulkedid R, Abdoul H, Loustau M, Sibony O, & Alberti C (2011). Using and reporting the Delphi method for selecting healthcare quality indicators: A systematic review. PLoS ONE, 6(6), e20476. 10.1371/journal.pone.0020476

- Brush BL, Mentz G, Jensen M, Jacobs B, Saylor K, Israel BA, Rowe Z, & Lachance L (2020). Success in long-standing community-based participatory research (CBPR) partnerships: A scoping literature review. Health Education & Behavior, 47(3), 556–568. 10.1177/1090198119882989

- Coombe CM, Chandanabhumma P, Bhardwaj P, Brush BL, Greene-Moton E, Jensen M, Lachance L, Lee DSY, Meisenheimer M, Minkler M, Muhammad M, Reyes AG, Rowe Z, Wilson-Powers E, & Israel BA (2020). A participatory mixed methods approach to define and measure partnership synergy in longstanding equity focused CBPR partnerships. American Journal of Community Psychology, 66(3–4), 427–438. 10.1002/ajcp.12447

- Dalkey N, & Helmer O (1963). An experimental application of the Delphi method to the use of experts. Management Science, 9(3), 458–467. 10.1287/mnsc.9.3.458

- Dari T, Laux JM, Liu Y, & Reynolds J (2019). Development of community-based participatory research competencies: A Delphi study identifying best practices in the collaborative process. The Professional Counselor, 9(1), 1–19. 10.15241/td.9.1.1

- Delbecq AL, Van de Ven AH, & Gustafson DH (1975). Group techniques for program planning. Scott, Foresman and Co.

- Diamond IR, Grant RC, Feldman BM, Pencharz PB, Ling SC, Moore AM, & Wales PW (2014). Defining consensus: A systematic review recommends methodologic criteria for reporting of Delphi studies. Journal of Clinical Epidemiology, 67(4), 401–409. 10.1016/j.jclinepi.2013.12.002

- Escaron AL, Weir RC, Stanton P, Vangala S, Grogan TR, & Clarke RM (2016). Testing an adapted modified Delphi method: Synthesizing multiple stakeholder ratings of health care service effectiveness. Health Promotion Practice, 17(2), 217–225. 10.1177/1524839915614308

- Farrell B, Tsang C, Raman-Wilms L, Irving H, Conklin J, & Pottie K (2015). What are priorities for deprescribing for elderly patients? Capturing the voice of practitioners: A modified Delphi process. PLoS ONE, 10(4), e0122246. 10.1371/journal.pone.0122246

- Hohmann E, Cote MP, & Brand JC (2018). Research pearls: Expert consensus-based evidence using the Delphi method. Arthroscopy: The Journal of Arthroscopic and Related Surgery, 34(12), 3278–3282. 10.1016/j.arthro.2018.10.004

- Hsu C, & Sandford BA (2007). The Delphi technique: Making sense of consensus. Practical Assessment, Research, & Evaluation, 12(10), 1–8. 10.7275/pdz9-th90

- Israel BA, Eng E, Schulz AJ, & Parker EA (2013). Introduction to methods for community-based participatory research for health. In Israel BA, Eng E, Schulz AJ, & Parker EA (Eds.), Methods in community-based participatory research for health (2nd ed., pp. 3–37). Jossey-Bass.

- Israel BA, Lachance L, Coombe CM, Lee SYD, Jensen M, Wilson-Powers E, Mentz G, Muhammad M, Rowe Z, Reyes AG, & Brush BL (2020). Measurement approaches to partnership success: Theory and methods for measuring success in long-standing CBPR partnerships. Progress in Community Health Partnerships, 14(1), 129–140. 10.1353/cpr.2020.0015

- Israel BA, Lichtenstein R, Lantz P, McGranaghan R, Allen A, Guzman JR, Softley D, & Maciak B (2001). The Detroit Community-Academic Urban Research Center: Development, implementation, and evaluation. Journal of Public Health Management and Practice, 7(5), 1–19. 10.1097/00124784-200107050-00003

- Israel BA, Schulz A, Coombe C, Parker EA, Reyes AG, Rowe Z, & Lichtenstein R (2019). Community based participatory research: An approach to research in the urban context. In Galea S, Ettman C, & Vlahov D (Eds.), Urban health (pp. 272–282). Oxford University Press.

- Israel BA, Schulz AJ, Parker EA, & Becker AB (1998). Review of community-based research: Assessing partnership approaches to improve public health. Annual Review of Public Health, 19, 173–202. 10.1146/annurev.publhealth.19.1.173

- Israel BA, Schulz AJ, Parker EA, Becker AB, Allen AJ III, Guzman R, & Lichtenstein R (2018). Critical issues in developing and following CBPR principles. In Wallerstein N, Duran B, Oetzel J, & Minkler M (Eds.), Community-based participatory research for health: Advancing social and health equity (pp. 31–34). John Wiley & Sons, Inc.

- Linstone HA, & Turoff M (1975). The Delphi method: Techniques and applications. Addison-Wesley.

- Murray TJ (1979). Delphi methodologies: A review and critique. Urban Systems, 4(2), 153–158. 10.1016/0147-8001(79)90013-5

- Okoli C, & Pawlowski SD (2004). The Delphi method as a research tool: An example, design considerations and applications. Information & Management, 42(1), 15–29. 10.1016/j.im.2003.11.002

- Powell C (2003). The Delphi technique: Myths and realities. Journal of Advanced Nursing, 41(4), 376–382. 10.1046/j.1365-2648.2003.02537.x

- Rideout C, Gil R, Browne R, Calhoon C, Rey M, Gourevitch M, & Trinh-Shevrin C (2013). Using the Delphi and Snow Card techniques to build consensus among diverse community and academic stakeholders. Progress in Community Health Partnerships: Research, Education, and Action, 7(3), 331–339. 10.1353/cpr.2013.0033

- Suto MJ, Lapsley S, Balram A, Barnes SJ, Hou S, Ragazan DC, Austin J, Scott M, Berk L, & Michalak EE (2019). Integrating Delphi consensus consultation and community-based participatory research. Engaged Scholar Journal: Community-Engaged Research, Teaching, and Learning, 5(1), 21–35. 10.15402/esj.v5i1.67847

- Wallerstein N, & Duran B (2018). Theoretical, historical, and practice roots of community based participatory research. In Wallerstein N, Duran B, Oetzel J, & Minkler M (Eds.), Community-based participatory research for health: Advancing social and health equity (3rd ed., pp. 17–29). Jossey-Bass.