Abstract

Artificial intelligence (AI) and a popular branch of AI known as machine learning (ML) are increasingly being utilized in medicine and to inform medical research. This review provides an overview of AI and ML (AI/ML), including definitions of common terms. We discuss the history of AI and provide instances of how AI/ML can be applied to pediatric neurology. Examples include imaging in neuro-oncology, autism diagnosis, diagnosis from charts, epilepsy, cerebral palsy, and neonatal neurology. Topics such as supervised learning, unsupervised learning, and reinforcement learning are discussed.

Keywords: machine learning, pediatric neurology, artificial intelligence

Section I: Introduction

The search to understand how humans and animals think is old and diverse: from Aristotle1 to Broca and Wernicke’s studies2,3 to localize specific brain functions. The field of Artificial Intelligence (AI) attempts to understand human intelligence and to create intelligent machines. AI and a branch of AI known as Machine Learning (ML) are gaining increased interest and utilization in precision medicine, clinical diagnosis, management, and research. Rather than an exhaustive treatise, we introduce AI and ML (AI/ML) with a brief history (Figure 1A and 1B) and review examples of how ML can be used in pediatric neurology.

Figure 1:

A Timeline of Artificial Intelligence/Machine Learning. A) Depicts events from the 1940s to the present and B) from the 1990s to the present day, focusing on the events that led up to one of the biggest achievements of AI: outperforming human champions in the game of Go. In Figure 1B, the state space is a measure of the possible configurations of a system (e.g., a game). As shown by the dashed line, AI/ML has shown an exponential increase over recent years in its ability to “solve” increasingly complex games (as measured against human expert performance). The bar graph for the years 2010-2017 shows a corresponding reduction in the error of deep learning systems in identifying objects in images within the ImageNet competition, with AlexNet, developed in 2012, marking the dawn of Deep Learning and 2015 coinciding with both reaching human-level accuracy (solid, horizontal line, “Human Level”) in the ImageNet competition and AlphaGo defeating Lee Sedol in Go.

Definitions

Definitions of AI/ML are established first, although some may interpret these terms differently. AI is the field that seeks to create intelligent machines, i.e., the “computational part of the ability to achieve goals in the world”.4 ML is a subset of AI that develops “computer algorithms that improve automatically through experience.”5 AI can be applied to any of the key facets of human cognition: perception, decision-making, and action.

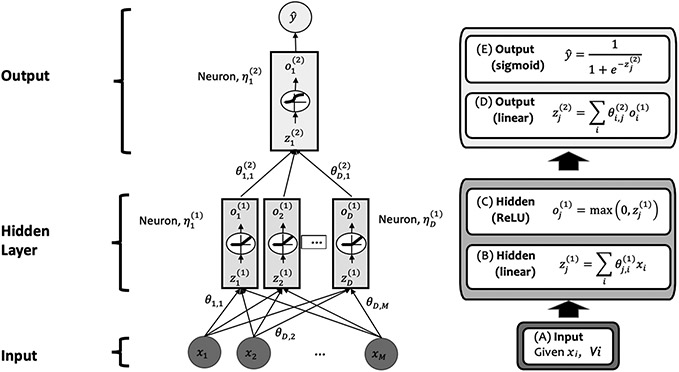

ML uses data to infer a mapping from a set of inputs to a desired output. For example, an Artificial Neural Network (ANN, Figure 2) can learn to predict whether a tumor is present on magnetic resonance imaging (MRI) given example MRI images that experts have annotated as showing a tumor or not, a process known as supervised learning (Figure 3A).6 The ANN is a crude computational model of a biological neural network with simulated neurons, connections between those neurons, and groupings of neurons into “layers.” Information is passed from the input of the ANN (e.g., the intensity of each pixel of an MRI) to the first layer, from the first layer to the second, and so forth until the network outputs a desired signal (e.g., the probability of a tumor being present on MRI). These layers perform a series of multiplications, additions, and activations (e.g., the neuron can be turned “on” with an output of 1 if the input value exceeds a threshold), and the parameters governing these mathematical operations are trained on data to perform the desired operation (Figure 2). Different training mechanisms are discussed later in Section III. There is ongoing interest in developing ANNs that are more biologically plausible, called Spiking Neural Networks.7

Figure 2:

This figure depicts an artificial neural network with one input layer, one hidden layer, and one output layer. The input layer consists of M input features (x1, x2, … , xM). The single hidden layer consists of D neurons. The superscript (1) denotes the layer, where the input layer is layer 0 and the output layer, in this case, is layer 2. The hidden layer has a nonlinear activation function known as a Rectified Linear Unit (ReLU), which sets the output of the neuron to be the same as its input if the input is positive and 0 otherwise. The output layer has a sigmoid activation function in its single neuron to convert the output into a single number between 0 and 1, e.g., for a supervised learning classification task predicting whether the inputs correspond to a positive (y = 1) or negative (y = 0) instance, where the output is the estimated likelihood of the input belonging to the positive class. The input to each layer is a weighted, linear combination of the outputs of the previous layer. A mathematical description of the neural network functioning is provided to the right for completeness. Note that neural networks may consist of any number of hidden layers and neurons per hidden layer, may vary in the types of activation functions, inputs, and outputs, may have various connection configurations, and more.
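The forward pass of a network of this shape (one ReLU hidden layer feeding a single sigmoid output neuron) can be sketched in a few lines of plain Python. The parameter names `W1`, `b1`, `w2`, and `b2` and the numeric values are illustrative assumptions, not the notation or weights of Figure 2, and the parameters are untrained.

```python
import math

def relu(z):
    """Rectified Linear Unit: pass positive inputs through, clamp the rest to 0."""
    return max(0.0, z)

def sigmoid(z):
    """Squash any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W1, b1, w2, b2):
    """One hidden ReLU layer followed by a single sigmoid output neuron.

    x  : list of M input features
    W1 : D rows of M weights (hidden layer); b1 : D biases
    w2 : D weights (output neuron);          b2 : scalar bias
    """
    # Each hidden neuron takes a weighted sum of the inputs, then applies ReLU.
    hidden = [relu(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    # The output neuron takes a weighted sum of the hidden outputs, then sigmoid.
    return sigmoid(sum(w * h for w, h in zip(w2, hidden)) + b2)

# Hypothetical, untrained parameters for M = 2 inputs and D = 2 hidden neurons.
W1 = [[1.0, -1.0], [0.5, 0.5]]
b1 = [0.0, -1.0]
w2 = [1.0, -2.0]
b2 = 0.0
y_hat = forward([2.0, 1.0], W1, b1, w2, b2)  # estimated probability of the positive class
```

Training would adjust `W1`, `b1`, `w2`, and `b2` so that `y_hat` matches the expert labels; only inference is shown here.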

Figure 3:

Graphical representations of Supervised Learning (A), Unsupervised Learning (B), and Reinforcement Learning (C).

AI can be used in either a screening capacity (i.e., the machine makes a diagnosis or treatment recommendation before the physician sees the data) or as a second opinion. There are trade-offs. Humans are susceptible to the anchoring-and-adjustment heuristic.8 The consequence is that the physician might be inclined to overly rely on an AI if the AI is screening the patient, and the physician might under-rely on the AI if the AI is providing a second opinion. Moreover, AI can reason both about the likelihood of the test results given a potential disease and the prior probability of that disease occurring within the population. However, if the system is built using data only from individuals with rare diseases, then the system would not have the capacity to calibrate to the prior probability of the disease’s occurrence. As such, either the system should be trained on data from everyone (sick and healthy) or the system needs to be handed a prior probability for the occurrence of each disease in order to calibrate its predictions.
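The effect of the prior probability can be made concrete with Bayes' rule. The sketch below is a minimal illustration; the sensitivity, specificity, and prevalence values are hypothetical and do not come from any study cited here.

```python
def posterior_probability(prior, sensitivity, specificity):
    """P(disease | positive result) via Bayes' rule."""
    true_pos = sensitivity * prior                   # P(positive and diseased)
    false_pos = (1.0 - specificity) * (1.0 - prior)  # P(positive and healthy)
    return true_pos / (true_pos + false_pos)

# The same hypothetical test applied to a rare and to a common condition.
rare = posterior_probability(prior=0.001, sensitivity=0.95, specificity=0.95)
common = posterior_probability(prior=0.5, sensitivity=0.95, specificity=0.95)
```

With these assumed numbers, a positive result for the rare condition corresponds to a posterior probability of under 2%, whereas the same result for the common condition corresponds to 95%. This is why a system that never sees the true prevalence cannot calibrate its predictions.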

AI techniques that are not ML-based may tackle these same problems (i.e., perception, decision-making, and action) but do not use systems that learn from data as ML does. One example of such a human-designed mapping is an expert system, which is a computational representation of a set of IF-THEN rules elicited from domain experts.9
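A rule-based system of this kind can be sketched as a small forward-chaining loop. The rule contents below are neutral placeholders invented for illustration; they are not clinical rules and are not taken from any system discussed in this review.

```python
# Each rule pairs a set of facts that must all hold (IF) with a conclusion (THEN).
# The fact names are hypothetical placeholders, not clinical guidance.
RULES = [
    ({"finding_a", "finding_b"}, "hypothesis_x"),
    ({"hypothesis_x", "finding_c"}, "recommend_test_y"),
]

def infer(facts, rules=RULES):
    """Forward chaining: keep firing rules until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

derived = infer({"finding_a", "finding_b", "finding_c"})
```

Nothing here is learned from data: every behavior of the system is determined by the rules a human wrote down, which is what distinguishes it from ML.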

AI also includes subfields, such as Natural Language Processing (NLP), Computer Vision, and Robotics. NLP develops computational models for understanding human language for tasks such as translating English to Chinese (i.e., machine translation). Computer vision enables computers to recognize visual objects and can be used to identify abnormalities on images (discussed below, Section IV: Neuro-oncology). Computer vision can be used to identify a single type of abnormality, or an algorithm can be trained to identify multiple different abnormalities.10 Robotics develops intelligent machines that physically act within the world; thus, robotics includes the design of computational techniques and physical systems to interact with physical objects. For this review, however, we consider those “subfields” instead to be research communities focused on an application or extension of AI for a specific problem that may focus on a different set of algorithmic and modeling techniques (and hardware design, in the case of robotics). For a reference list of abbreviations and definitions of terms, please refer to Table 1.

Table 1:

A list of abbreviations and definitions used in this review.

| Term | Abbreviation | Definition |
|---|---|---|
| Artificial Intelligence | AI | The field of creating machines that are intelligent. |
| Artificial Neural Network | ANN | A crude computational model of a biological neural network with simulated neurons, connections between those neurons, and groupings of neurons into “layers.” See Figure 2. |
| Computer Vision | -- | The field that enables computers to recognize visual objects in the world. |
| Convolutional Neural Network | CNN | A type of ANN that is most commonly used to analyze images and that uses layers of pattern-detecting filters. |
| Decision tree | -- | A method to predict the output variable based upon different input variables. |
| Deep Learning | -- | ML that uses neural networks with three or more layers. |
| Expert System | -- | A computational representation of a set of IF-THEN rules elicited from domain experts. |
| Explainable Artificial Intelligence | XAI | A subfield of AI that seeks to develop algorithmic mechanisms to afford users insight into the inner workings of these algorithms. |
| Generative adversarial network | GAN | A class of machine learning frameworks in which two neural networks, a generator and a discriminator, duel each other, with the generator attempting to create synthetic data (e.g., a fake MRI) that can fool the discriminator and the discriminator attempting to tease out fake from real data. |
| Logic Theorist | -- | A program that proves a theorem by finding a sequence of logical steps. |
| Machine Learning | ML | A subset of AI that develops and studies computer algorithms that improve automatically through experience. |
| Natural Language Processing | NLP | Computational models for understanding human language for tasks such as machine translation. |
| Random Forest Model | -- | An ensemble learning method that uses multiple decision trees. See Figure 5. |
| Reinforcement Learning | RL | A method of learning in which an agent needs to interact with or experience the world to learn the best possible sequence of actions to maximize its future rewards. See Figure 3C. |
| Robotics | -- | The field that develops intelligent machines that physically act within the world. |
| Supervised Learning | -- | An ML paradigm in which the algorithm is trained upon labeled training data to predict outcomes. See Figure 3A. |
| Support Vector Machine | SVM | A supervised learning method in ML used to analyze data for classification and regression. See Figure 4. |
| Transformer | -- | A deep learning model that uses self-attention to differentially weigh each part of the input data as well as the outputs of each hidden layer of the neural network. |
| Unsupervised Learning | -- | An ML paradigm in which the algorithm discovers patterns in unlabeled training data; also referred to as knowledge discovery. See Figure 3B. |

Section II: History of ML/AI

For brevity and simplicity in presentation, this history focuses on ML through the lens of supervised learning (Figure 1A and 1B). Other ML methods (i.e., unsupervised learning and reinforcement learning, Figures 3B and 3C) are discussed briefly in Section III. An early work in AI is attributed to McCulloch and Pitts in 1943,11 who encoded propositions in nets using binary neurons, which were later used for learning.12 Donald Hebb13 later demonstrated learning by changing the connection strengths between neurons, and Minsky and Edmonds in 1950 developed the first neural network (NN) computer to simulate a network of 40 neurons replicating functions of smaller systems of neurons.14

Meanwhile, Alan Turing, in his “Computing Machinery and Intelligence,” described ideas that would later become machine learning, reinforcement learning (Figure 3C), and genetic algorithms.15 He also proposed the “Turing test” as a test for intelligent behavior. In this test, a human judge must distinguish the human participant from the computer participant in an unseen pair, using natural language questions and typed answers. The test remains a high bar for computer algorithms today.15 If not for the work of Turing in developing AI to decrypt Nazi messages, the Allies might have lost World War II.

The term “AI” was coined by John McCarthy in the 1955 proposal for the 1956 Dartmouth conference.16 A popular work at this conference was Logic Theorist, which proved theorems by searching for a sequence of logical steps leading from the initial premise to the theorem’s conclusion.17 Logic Theorist was the first non-numeric AI system, and subsequent work resulted in a branch of AI studying cognitive architectures.17-19 In 1958, Rosenblatt developed the “Perceptron” as the first ANN capable of learning a binary (yes/no) decision with a linear decision boundary,19 but it was overhyped.14 Subsequent reports documented the failure of these systems,14 which led to the first “AI Winter” from 1974-1980. This winter came with decreased funding for and interest in AI.

The 1980s saw a renewal in AI research owing to the development of expert systems.9 While impressive, these hand-designed expert systems were brittle, required extensive domain-expertise, and lacked common sense. The initial hype followed by a lack of capability led to the second AI winter in the late 1980s.20

A few standout methodologies were developed during this winter, including Hopfield networks21 (enabling ANNs to contain memories) and the backpropagation algorithm,22 which allows ANNs to be trained more efficiently. Other progress in ML was made in defining the hardness of a learning problem using statistical learning theory. Vapnik formulated the empirical risk minimization framework along with an ML model called the Support Vector Machine (SVM, Figure 4), which provided guarantees on model performance.23 In addition, probabilistic reasoning and Bayesian Networks were developed to encode uncertainty.24

Figure 4:

Graphical representation of support vector machines, which can divide data into linear (A) or non-linear groups (B). This figure depicts two instances in which a support vector machine (SVM) is applied to classify positive (white circles, y = +1) and negative (black circles, y = −1) examples in data. To the left is the simpler case when the data are linearly separable (i.e., an SVM with a linear kernel can draw a line such that all examples from one class are on one side of the line and all examples from the other class are on the other side). To the right is the more complicated case where the data are not linearly separable, and a nonlinear kernel is required to separate the positive and negative examples. In the linearly separable case, we want to maximize the “margin,” defined as the inverse of the magnitude of the “support vector,” such that the positive examples are above the separating line and the negative examples are below the line. This separation constraint corresponds to a linear kernel. For the non-linearly separable case on the right, the data could be separated by a radial basis function kernel, a description of which is beyond the scope of this review.101
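For readers who want the linearly separable case written out, one standard textbook formulation of the hard-margin SVM is given below. The symbols are assumptions introduced here (weight vector \mathbf{w}, bias b, and training examples \mathbf{x}_i with labels y_i \in \{+1, -1\}) and are not necessarily the notation used in Figure 4.

```latex
\min_{\mathbf{w},\, b} \; \frac{1}{2}\lVert \mathbf{w} \rVert^{2}
\quad \text{subject to} \quad
y_i \left( \mathbf{w}^{\top} \mathbf{x}_i + b \right) \ge 1
\quad \text{for all } i .
```

Under this formulation, the distance from the separating line to the closest example is 1 / \lVert \mathbf{w} \rVert, so minimizing \lVert \mathbf{w} \rVert maximizes the margin while the constraint keeps positive and negative examples on opposite sides of the line.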

The internet and the massive accumulation of electronic data led to an era in the 2000s known as “Big Data” (Figure 1B).25 Big Data enabled innovations such as IBM Watson,26 an expert system operating on Big Data. The real success of Big Data was enabling the next era: Deep Learning.27 The dawn of Deep Learning was marked by AlexNet,28 a type of ANN architecture, which nearly cut in half the error rate in labeling an object within an image (e.g., labeling an image of a cat as “cat”). Deep Learning on Deep Neural Networks (DNNs), as opposed to “just” ANNs, is the confluence of five factors:

1) Improved ANN architectures (e.g., the convolutional neural network27 (CNN) in AlexNet, which can be seen as a computational analogue for biological vision),29

2) Deeper ANN architectures (i.e., with more layers of artificial neurons) than the shallower networks of previous decades,

3) The same backpropagation algorithms developed in the 1980s,

4) Access to Big Data, and

5) Access to more computational power with Moore’s Law30 and graphics processing units, which enable fast operations over ANNs.27

By 2015-2016, DNNs with Reinforcement Learning (RL) were used to achieve human-level performance in playing Atari games31 and the game of Go, with Google DeepMind’s AlphaGo.32 More modern techniques have led to state-of-the-art performance in natural language understanding and generation,33 protein folding predictions with AlphaFold,34 and super-human performance in multi- and single-player games.35 Another variation on neural networks is the generative adversarial network (GAN), in which two networks, a generator and a discriminator, duel each other, with the generator attempting to create synthetic data (e.g., a fake MRI) that can fool the discriminator and the discriminator attempting to tease out fake from real data.36 GANs are entering the public arena with ANNs that can create art from user text prompts.37 Transformers are another type of neural network model that use a mechanism called “attention” and have become the dominant approach for applications of supervised learning in NLP and computer vision.38

One aspect of AI is the “black box” problem where the input(s) and output(s) are known but the inner workings are not. Explainable AI (XAI)39,40 is a burgeoning subfield of AI that seeks to develop algorithmic mechanisms to afford users insight into the inner workings of these algorithms. A basic XAI mechanism is feature importance41 (i.e., conveying to the user which feature inputs were most consequential in the AI’s decision-making). Within XAI, researchers classify various approaches based upon transparency. For example, a decision tree would be highly transparent as it literally allows a human to simulate the operations of the machine. However, the computation of feature importance for a neural network model on MRI or EEG data might provide information about what subset of the data is important but not why. A helpful review on the importance of XAI is by Rudin.42

Having discussed XAI generally, the question becomes: is XAI impactful and how? The answers are “yes” and “it depends.” In Dr. Matthew Gombolay’s prior work,43 XAI was shown to increase a person’s trust in an AI system and to increase the degree to which a person thinks the AI is human-like. However, that is potentially dangerous, as increased trust and heightened anthropomorphization are risk factors for humans to incorrectly accept bad advice from a machine.44 As such, one must be careful to balance the potential value of giving physicians insights into a machine’s decision-making against the risk that the perceived intelligence of the explanation gives the physician a false sense of security that the machine is competent. How these different techniques can apply in pediatric neurology is discussed later in this review.

Section III: Types of ML/AI and Applications

Types of ML

ML can be divided into three main subtypes: supervised (predictive), unsupervised (descriptive), and reinforcement learning (RL, see Figure 3).

In supervised learning, the parameters of the ML model, such as an ANN, are trained (i.e., automatically tuned through optimization algorithms to maximize the model’s performance) to accurately predict the label (e.g., tumor or not) given the example (e.g., an MRI image) in cases where the input has not been seen before (e.g., an MRI for a new patient, Figure 3A). This focus on maximizing performance on test (i.e., novel) examples partly distinguishes ML from traditional statistics.

In contrast, unsupervised learning is a paradigm in which the training data are unlabeled and the algorithm discovers patterns in the data, which can also be referred to as knowledge discovery.6 Unsupervised learning generally seeks to understand and extract the underlying processes within the data (Figure 3B). While the origins of unsupervised learning trace back to Grossberg in 1982,45 unsupervised learning became prominent in the 2000s.

Arguably, the most challenging class of learning is RL,46 in which an agent needs to interact with or experience the world to learn the best possible sequence of actions to maximize its future “rewards” (Figure 3C).47 In general, RL approximates the reward/punishment mechanisms used to train behaviors in animals. RL is critical in scenarios where the best action must be learned through trial and error and by deducing which actions were responsible for a good or bad outcome. The analogy to animal training breaks down, though, as most RL systems start out knowing nothing, whereas animals generally have mastered fundamental motor and social skills to facilitate the trainer-trainee interaction. Nonetheless, the training mechanisms of some RL algorithms, such as temporal difference (TD) methods,48 have been identified in the activity of animal dopamine neurons.49
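The core of a temporal difference method is a small update driven by a prediction error. The sketch below applies the TD(0) update to a toy problem in which an agent walks along a short chain of states and is rewarded only at the end. The chain environment, the function name `td0_chain`, and the learning-rate and discount values are all assumptions made for illustration. It covers only value prediction for a fixed behavior, not the selection of actions.

```python
def td0_chain(n_states=5, episodes=200, alpha=0.1, gamma=1.0):
    """Estimate state values on a deterministic left-to-right chain with TD(0).

    The agent starts in state 0, always moves one step to the right, and
    receives a reward of 1 only on entering the terminal state (index n_states).
    """
    values = [0.0] * (n_states + 1)  # the terminal state keeps a value of 0
    for _ in range(episodes):
        state = 0
        while state < n_states:
            next_state = state + 1
            reward = 1.0 if next_state == n_states else 0.0
            # TD error: (reward + discounted next value) minus the current estimate.
            td_error = reward + gamma * values[next_state] - values[state]
            values[state] += alpha * td_error
            state = next_state
    return values

values = td0_chain()
```

Early in training only the state next to the reward has a non-zero value; over repeated episodes the estimate propagates backward along the chain, which is how credit for a delayed reward reaches earlier states.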

General ML/AI Approach

In approaching an ML application, different methods can be used to develop a model that learns from the data. Traditional statistical methods, such as linear or logistic regression, take the data (inputs) to generate a model (output) that explains the data. This process is similar to ML but with a different emphasis and associated set of techniques. In ML, the input is likewise data; however, the model is not the output. The model is the mapping from the input to the output, and the output (at least in supervised learning) is a prediction about the data.

The ML practitioner must make four decisions: 1) Selecting the type of ML (e.g., supervised, unsupervised, reinforcement learning, see Figure 3); 2) Designing the ML model (e.g., neural networks – Figure 2, support vector machines – Figure 4, random forest – Figure 5, and if a neural network is selected, how many layers will be used; examples of how these models are used will be discussed in Section IV); 3) Picking an objective function that scores how well the model is performing (e.g., the root mean squared error); and 4) Choosing a training procedure (e.g., whether to use a feature selection algorithm to search for and eliminate unhelpful components of the input data). While researchers in ML are more focused on novel deep learning architectures and while the proliferation of deep learning applications is profound, random forest models still often outperform neural networks in tabular data settings (e.g., Excel spreadsheets with typical healthcare data).50,51 As such, data scientists and ML practitioners alike commonly employ random forest models. Nonetheless, there is growing interest in enabling neural networks to handle such data more effectively.52,53
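As an example of the third decision, the root mean squared error can be written as a short function that scores a set of predictions against the observed values. This is a minimal sketch; the function name `rmse` and the input checks are choices made here for illustration.

```python
import math

def rmse(predictions, targets):
    """Root mean squared error between predicted and observed values."""
    if len(predictions) != len(targets) or not targets:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))
```

A lower score indicates predictions closer to the observed values, and a training procedure searches for model parameters that reduce this score.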

Figure 5:

Graphical depiction of random forest, an ensemble of multiple decision trees. Each decision tree is created at random and combines different data features to classify the data. The random forest takes different random decision trees and uses the output from the multiple trees to make a decision.
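The ensemble idea can be sketched with deliberately tiny trees. In the simplified version below, each "tree" is a one-split decision stump fitted to a random resample of the data using one randomly chosen feature, and the forest predicts by majority vote. Practical random forests grow deeper trees and sample features at every split, so this is an illustration of the voting principle under those stated simplifications, not a production implementation. The data are invented.

```python
import random
from collections import Counter

def fit_stump(rows, labels, feature):
    """Pick the threshold on one feature that best separates the 0/1 labels."""
    best = None
    for threshold in sorted({r[feature] for r in rows}):
        for above in (0, 1):  # label predicted when the value exceeds the threshold
            preds = [above if r[feature] > threshold else 1 - above for r in rows]
            correct = sum(p == y for p, y in zip(preds, labels))
            if best is None or correct > best[0]:
                best = (correct, threshold, above)
    _, threshold, above = best
    return feature, threshold, above

def predict_stump(stump, row):
    feature, threshold, above = stump
    return above if row[feature] > threshold else 1 - above

def fit_forest(rows, labels, n_trees=25, seed=0):
    """Each stump sees a bootstrap resample and one randomly chosen feature."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]  # sample with replacement
        feature = rng.randrange(len(rows[0]))
        forest.append(fit_stump([rows[i] for i in idx],
                                [labels[i] for i in idx], feature))
    return forest

def predict_forest(forest, row):
    """Majority vote across all stumps."""
    votes = Counter(predict_stump(stump, row) for stump in forest)
    return votes.most_common(1)[0][0]

# Invented toy data: two features, both lower for class 0 than for class 1.
rows = [(0, 1), (1, 3), (2, 2), (3, 0), (7, 8), (8, 9), (9, 7), (10, 10)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
forest = fit_forest(rows, labels)
```

Because each stump is fitted to a different resample, individual stumps may disagree near the class boundary, and the vote averages out those individual errors.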

While this review will not discuss many validation methods, K-fold cross validation is one way to assess model accuracy. In this method, the same dataset supplies both the training and test data, which is useful when insufficient data are available for separate sets. The dataset is divided into k groups. Every group but one is used to train the model, and the remaining group is used as the test set; this is repeated k times so that each group serves as the test set once, and the results are combined to assess and select the optimal model.6 Leave-one-out cross validation (LOOCV) is the extreme version in which a single datapoint is left out, the model is trained on the remaining data, and the model is evaluated on the held-out datapoint.
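The bookkeeping behind K-fold cross validation can be sketched as a generator that yields, for each fold, the indices used for training and the indices held out for testing. The function name `k_fold_indices` is hypothetical, and the sketch assumes the data have already been shuffled.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) once for each of the k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Spread any remainder so that fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size

folds = list(k_fold_indices(10, 5))
```

Every datapoint appears in exactly one test fold, and setting k equal to the number of datapoints gives leave-one-out cross validation.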

Different combinations of ML techniques can be mixed, matched, and combined in a patchwork to create complex ML systems, which can become complicated and difficult to describe in a parsimonious fashion. A further complication is that no one ML approach is better than all others on all data54 – one must tailor the ML approach to the problem at hand. Some applications of ML to healthcare will utilize a single method, whereas others will use different methods to find the optimal model for the data.

“Optimal” can be defined in different ways. For example, an “optimal model” for identifying tumors on imaging would be 100% sensitive and 100% specific, identifying every tumor and never labeling a tumor where none exists. The proportion of images labeled incorrectly is the misclassification rate. However, one must be careful not to overly focus on achieving 100% model performance on the training data, as doing so often comes with poor test performance, a problem called “overfitting” in which the model learns spurious correlations in the data. For example, people know what a "tree" is by determining the right features (e.g., a tall, woody plant with branches and leaves). Overfitting would be to look at the trees in a single dataset and count their leaves, such as three trees with 11,567, 12,508, and 13,701 leaves. An overfit model would say that a tree has exactly one of those numbers of leaves; otherwise, the object is not a tree. This would achieve 100% accuracy on the training data but would fail to generalize. Underfitting would be to say that a tree is anything with a leaf, which would erroneously include other plants such as bushes, flowers, and vines. ML methods include mechanisms (e.g., regularization) that try to balance this over- vs. underfitting problem. ML researchers often employ the principle known as Occam's Razor, which prefers simpler models/explanations over more complicated ones, as simpler models (with fewer parameters) tend to have a testing accuracy similar to their training accuracy, whereas more complicated models tend to have very good training accuracy but poorer testing accuracy. There are further mechanisms to address overfitting and several other issues in ML, but these are outside the scope of this review.
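The tree example can be turned into two toy "models" to show both failure modes side by side. The leaf counts are the ones given in the text; the function names are invented for illustration.

```python
# Leaf counts of the three training trees from the example in the text.
TRAINING_LEAF_COUNTS = {11567, 12508, 13701}

def overfit_is_tree(leaf_count):
    """Memorizes the training set: only leaf counts seen before qualify."""
    return leaf_count in TRAINING_LEAF_COUNTS

def underfit_is_tree(leaf_count):
    """Too permissive: anything with at least one leaf qualifies."""
    return leaf_count >= 1

# Perfect on the training data, but a new tree with 12,000 leaves is rejected.
training_accuracy = sum(overfit_is_tree(n) for n in TRAINING_LEAF_COUNTS) / 3
rejects_new_tree = not overfit_is_tree(12000)
# The permissive rule accepts a bush with 40 leaves as a tree.
accepts_bush = underfit_is_tree(40)
```

The memorizing rule scores perfectly on the data it was built from and fails on anything new, while the permissive rule never misses a tree but cannot tell a tree from a bush. A useful model sits between the two.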

Section IV: Examples of ML in Pediatric Neurology

Here we highlight studies in pediatric neurology where possible. Although ML/AI can be used in any aspect or field of pediatric neurology, this review highlights specific ways that ML/AI has been used.

Cerebral Palsy

The Gross Motor Function Measure-66 (GMFM-66) is an assessment tool used to measure gross motor function in patients with cerebral palsy (CP) that takes 45-60 minutes to administer. One study aimed to use ML to develop a shorter, more efficient version of the GMFM-66, called the reduced GMFM-66 (rGMFM-66). Existing data were used from children with CP participating in a single center’s rehabilitation program. Random forest (Figure 5), SVM (Figure 4), and ANN (Figure 2) were the self-learning models used to estimate a GMFM-66 score using the fewest possible test items. Intraclass correlation coefficients (ICCs) were used to evaluate agreement between tests. A total of 1217 GMFM-66 one-time assessments and 187 GMFM-66 assessments/reassessments after 1 year were evaluated. GMFM-66 scores were accurately predicted using SVM. The rGMFM-66 and GMFM-66 had high ICCs for one-time assessments and for evaluating change over 1 year. Thus, ML algorithms could make administration of the GMFM-66 more efficient.55

Neuro-oncology

Objective, standardized assessment of tumor burden and treatment response is essential in pediatric neuro-oncology. The Response Assessment in Pediatric Neuro-Oncology (RAPNO) working groups have developed objective criteria for tumor measurement and treatment response.56 Manual tumor segmentation for volumetric measurements is a labor-intensive process, and methods to automate tumor segmentation using artificial intelligence have emerged. For example, a deep learning-based technique was used for two-dimensional and volumetric measurement in a mixed cohort of patients with high grade gliomas, medulloblastoma, or other leptomeningeal seeding tumors.57

In the study, 704 preoperative and 1003 postoperative MRIs were split into training and testing sets. A convolutional neural network (CNN) was used for tumor segmentation. A CNN is a type of ANN (Figure 2) that is most commonly used to analyze images and that uses layers of pattern-detecting filters. Two-dimensional and volumetric measurements were made from the segmented T1 post-contrast and T2/FLAIR imaging sequences, and these measurements were used to calculate RAPNO scores. Deep learning algorithm performance was compared to manual measurements and RAPNO scoring by ICC. Excellent agreement occurred between the automated and manually derived tumor volumes for both pre- and postoperative MRIs (ICCs 0.896 to 0.960), and agreement between automated and manual RAPNO scores was also high (ICCs 0.851 to 0.909).57 The study highlights the use of deep learning methods for pediatric brain tumors.

Epilepsy

ML can be applied to different data sources in epilepsy, including the analysis of ictal patterns on electroencephalography (EEG) to automate seizure detection and localization. This can be done using supervised learning that employs spatiotemporal feature extraction from seizure-labeled EEG segments or using unsupervised ML methods that do not rely on manually labeled data.

One study used neural networks to classify video recordings of neonatal limb movements as different seizure types versus nonseizures. After training on 120 recordings, the authors achieved seizure detection with a sensitivity of 86-94% and a specificity of 93-98%.58 Additionally, an SVM (Figure 4) was applied to interictal intracranial EEG recordings and functional connectivity to predict Engel I seizure freedom outcomes in children with 100% sensitivity and 89% specificity.59

A random forest classifier (Figure 5) was used to investigate clinical variables, ranging from demographics, insurance types, and seizure rates to medication responses, in relation to emergency department utilization in children with epilepsy.60 Additionally, ML has helped analyze genetic and clinical data to expand genotype-phenotype correlations, automate neuroimaging analysis, and aggregate neuropsychological profiles to predict outcomes of epilepsy surgery and responses to anti-seizure medications, highlighting its utility in clinical practice to advance the management of epilepsy.61-63

Neuroimaging in Epilepsy

Over the last decades, several surface-based algorithms have been developed for fully automated detection of focal cortical dysplasia (FCD), the most prevalent epileptogenic cortical malformation.64-72 While careful manual corrections of tissue segmentation and surface extraction are essential for accurate modeling of the main pathological characteristics of FCD,73 suboptimal processing of surface-based data leads to modest performance, even in cases with MRI-visible lesions.74 Consequently, current automated detection algorithms fail in up to 40% of patients,67,68,71,75 particularly those with subtle FCD, and suffer from high false positive rates.72 Also, the limited set of features designed by human observers may not capture the full complexity of this brain malformation. Taking advantage of deep learning’s ability to independently learn abstract concepts from high-dimensional data, a recent study reached a sensitivity of 93% in detecting FCD while maintaining high specificity in both healthy and disease controls.76 Importantly, the algorithm, based on convolutional neural networks, detected MRI-negative FCD with 85% sensitivity, thus offering a considerable gain over standard radiological assessment. This algorithm relied on T1-weighted and FLAIR images of patients with histologically confirmed FCD lesions collected across multiple epilepsy centres. Results were generalizable across age, hardware, and sequence parameters. A unique feature of the algorithm was Bayesian uncertainty estimation for risk stratification, which stratified predictions according to the confidence that they were lesional. In other words, all lesional clusters were ranked based on confidence, thereby assisting the examiner in objectively establishing the significance of findings.

Autism

Canvas Dx is an FDA market-authorized77 AI-based digital diagnosis aid designed to support healthcare providers (HCPs) evaluating children for autism spectrum disorder (ASD) in primary care settings. Its use could shorten the time to treatment initiation during the critical early years, when interventions can produce greater benefits.78 ML approaches were used in early product development to derive maximally predictive ASD behavioral features from the historical data of thousands of children with diverse conditions, presentations, and comorbidities.79-84 The two top-performing classifiers were independently validated against novel medical record score data,81,84 with subsequent prospective validation studies of the evolving device and algorithms.82,85-88 Canvas Dx uses digital technologies to collect inputs from a caregiver, a healthcare provider, and trained video analysts, consistent with best practice recommendations for ASD evaluations.89 Caregivers use a smartphone application to answer a brief questionnaire and upload home videos. Trained analysts review the videos, and the clinician completes a separate questionnaire. The three inputs are combined into a mathematical vector for ML analysis and classification, after which the device renders a decision of ASD positive or ASD negative, or abstains from a determination because of insufficient information. The device developers highlight the importance of abstention as a safety mechanism90,91 when developing AI for complex clinical tasks such as ASD diagnosis, where degrees of uncertainty are anticipated and additional assessment may be required.92 Providers use the Canvas Dx output as an adjunct to their clinical judgment and to the criteria of the Diagnostic and Statistical Manual of Mental Disorders, fifth edition, when making a diagnosis.
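
The three-way output described above (positive, negative, or abstain) can be illustrated with a minimal sketch. It assumes that a classifier emits a single probability-like score and that two cutoffs define an indeterminate zone; the function name, the cutoff values, and the scores are hypothetical and are not taken from the Canvas Dx device, whose internal decision logic is not described here.

```python
def classify_with_abstention(score, lower=0.2, upper=0.8):
    """Map a probability-like score to one of three outputs.

    The cutoffs are hypothetical; a real device would choose them
    during validation to balance coverage against error rates.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie in [0, 1]")
    if lower >= upper:
        raise ValueError("lower cutoff must be below upper cutoff")
    if score >= upper:
        return "ASD positive"
    if score <= lower:
        return "ASD negative"
    # Scores between the cutoffs are treated as too uncertain to call.
    return "abstain"


# Illustrative scores only (not patient data).
for s in (0.95, 0.50, 0.05):
    print(s, classify_with_abstention(s))
```

Widening the gap between the two cutoffs makes the classifier abstain more often but should reduce errors among the cases it does call, which is the trade-off the abstention mechanism is meant to manage.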

The Canvas Dx prospective pivotal validation study92 was a double-blinded comparator clinical trial conducted at 14 sites in 2019-2020, which included 425 study completers aged 18-72 months presenting with concern for developmental delay. The diagnostic output of the device was compared with the blinded diagnostic determinations of two or more experienced specialists. The device produced a determinate result (ASD positive or negative) for roughly one third of participants in the study and abstained from diagnosing the remaining participants. For the participants who received a determinate result, the device output aligned strongly with clinician diagnoses (positive predictive value 80.8% [95% CI, 70.3%-88.8%]; negative predictive value 98.3% [90.6%-100%]; sensitivity 98.4% [91.6%-100%]; specificity 78.9% [67.6%-87.7%]). Promisingly, the study did not find evidence of performance inconsistencies across gender, race/ethnicity, or socio-economic status. Follow-up studies would be needed, however, to confirm this finding.
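
For readers less familiar with the four reported measures, the sketch below shows how each is computed from a 2x2 table of device output against the reference diagnosis. The counts are hypothetical and chosen only to make the arithmetic easy to follow; they are not the study's data, and the function name is an assumption for illustration.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Compute standard diagnostic accuracy measures from 2x2 counts.

    tp/fn: reference-positive cases called positive/negative by the test.
    fp/tn: reference-negative cases called positive/negative by the test.
    """
    return {
        "sensitivity": tp / (tp + fn),  # positives correctly detected
        "specificity": tn / (tn + fp),  # negatives correctly excluded
        "ppv": tp / (tp + fp),          # positive calls that are correct
        "npv": tn / (tn + fn),          # negative calls that are correct
    }


# Hypothetical counts for illustration only (not from the cited trial).
metrics = diagnostic_metrics(tp=40, fp=10, fn=2, tn=48)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

Note that positive and negative predictive values depend on how common the condition is among those tested, whereas sensitivity and specificity do not, so the former may differ when a test is used in a population with a different prevalence.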

Diagnosis using Natural Language Processing

Natural language processing (NLP) analyzes text and speech to infer meaning and can be used to study diagnosis, natural history, and outcomes.93 Unstructured data collected in the electronic health record (EHR) can be leveraged for structured data collection. The challenge is to define, within each domain, the possible meanings of a given word, its synonyms, and its meaning in different situations. Despite these difficulties, we detail two examples: the first addresses the challenge of seizure count and seizure cessation,94 and the second addresses the diagnosis of a rare developmental and epileptic encephalopathy, Dravet syndrome.95

In the first example, a novel NLP pipeline automatically extracted seizure freedom and seizure frequency from clinical reports with validation against human annotations.94 For individuals with epilepsy, the most important clinical markers used for clinical decisions are the presence or cessation of seizures and the frequency of seizures at the given time. Almost 79,000 reports of patients with epilepsy were used to identify the paragraphs of interest and to apply the algorithm through a number of questions. In parallel, a group of experts worked on manual annotations. The pipeline was created by using a combination of pretrained models with a relatively small number of additional steps to provide the requested information. This pipeline could be utilized to address other research questions in epilepsy, including frequency of seizures in a particular epilepsy syndrome or treatment response.

The second example concerns the early diagnosis of a rare developmental and epileptic encephalopathy, Dravet syndrome (DS).95 The diagnosis of DS is often delayed because seizures at onset are febrile and patients can be misdiagnosed as having febrile seizures (FS).96 However, an expert pediatric neurologist can often recognize the characteristics of the first seizures in DS: they are long lasting, mainly hemiclonic (focal, involving one hemisphere), and occur after low-grade fever or vaccination. This study aimed to explore blindly, through NLP, whether these characteristics (concepts) appear significantly more often in the EHR of individuals with DS before the age of 2 years than in the EHR of individuals with FS. These concepts, often reported by emergency room physicians for the first episodes, would allow an earlier diagnosis of DS and referral to expert centers that can confirm the diagnosis and provide targeted care. In addition, these terms can be detected automatically by NLP-based software, helping non-expert physicians. This model can be applied to other rare epilepsies.
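
As a rough illustration of the idea of detecting clinical concepts in free text, the sketch below uses simple keyword patterns to flag the seizure characteristics named above and to compute how often each appears in a set of notes. This is a deliberately simplified, rule-based stand-in and not the NLP method of the cited study; the pattern dictionary, function names, and example notes are all invented for illustration.

```python
import re

# Hypothetical keyword patterns for the concepts named in the text.
CONCEPT_PATTERNS = {
    "long_lasting": r"\b(prolonged|long[- ]lasting)\b",
    "hemiclonic": r"\bhemiclonic\b",
    "low_grade_fever": r"\blow[- ]grade fever\b",
    "vaccine": r"\bvaccin\w*",
}


def find_concepts(note):
    """Return the set of concepts whose pattern occurs in one note."""
    return {
        name
        for name, pattern in CONCEPT_PATTERNS.items()
        if re.search(pattern, note, flags=re.IGNORECASE)
    }


def concept_frequency(notes):
    """Return, for each concept, the fraction of notes mentioning it."""
    counts = {name: 0 for name in CONCEPT_PATTERNS}
    for note in notes:
        for name in find_concepts(note):
            counts[name] += 1
    return {name: counts[name] / len(notes) for name in counts}


# Invented toy notes, not real clinical text.
toy_notes = [
    "Prolonged hemiclonic seizure two days after vaccination.",
    "Brief generalized seizure with high fever.",
]
print(concept_frequency(toy_notes))
```

Comparing such frequencies between two groups of records (for example, DS versus FS) would then require an appropriate statistical test, and real clinical text would also need handling of negation, abbreviations, and misspellings, which this sketch omits.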

Neonatal Neurology

Leave-one-out cross-validation (LOOCV), as discussed earlier, was used to evaluate a model that used MRI features to predict language impairments in very-low-birth-weight neonates (≤ 1500 g and ≤ 32 weeks of gestational age).97 MRI features included structural features (white matter abnormalities and cerebellar asymmetry) and diffusion tensor imaging (DTI) measures, which were correlated with language outcomes on the Bayley Scales at 18-22 months adjusted age. With N denoting the number of participants, supervised multivariate logistic regression models were developed with an exhaustive search of the feature space. After the best models were selected, they were evaluated using LOOCV: each model was trained on N-1 datapoints and tested on the single participant that was left out, so that N models were created, with sensitivity and specificity being optimized. Cerebellar asymmetry was associated with lower receptive language subscores. Moreover, white matter microstructure on DTI could predict composite, expressive, and receptive language outcomes.97
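
The LOOCV procedure can be sketched in a few lines. In the example below, each participant is held out once, a logistic regression is fit on the remaining N-1 participants, and the held-out participant is then classified. For brevity it uses a single made-up feature and a small gradient-descent fit written from scratch; the data, function names, learning rate, and number of epochs are assumptions for illustration and do not reproduce the cited study's multivariate models or feature search.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.5, epochs=500):
    """Fit a one-feature logistic regression by batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b


def loocv_predictions(xs, ys):
    """Hold out each datapoint once; train on the rest; predict it."""
    preds = []
    for i in range(len(xs)):
        train_x = xs[:i] + xs[i + 1:]
        train_y = ys[:i] + ys[i + 1:]
        w, b = fit_logistic(train_x, train_y)
        preds.append(1 if sigmoid(w * xs[i] + b) >= 0.5 else 0)
    return preds


# Hypothetical standardized imaging feature and outcome (1 = impaired).
features = [-1.5, -1.2, -1.0, -0.8, 0.8, 1.0, 1.2, 1.5]
outcomes = [0, 0, 0, 0, 1, 1, 1, 1]

preds = loocv_predictions(features, outcomes)
tp = sum(p == 1 and y == 1 for p, y in zip(preds, outcomes))
tn = sum(p == 0 and y == 0 for p, y in zip(preds, outcomes))
sensitivity = tp / sum(outcomes)
specificity = tn / (len(outcomes) - sum(outcomes))
print(f"sensitivity={sensitivity:.2f}, specificity={specificity:.2f}")
```

Because every prediction is made on a participant the model never saw during fitting, the resulting sensitivity and specificity estimate out-of-sample performance, which is especially useful when N is small.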

Section V: Ethics of ML/AI

The ethics of ML/AI are important to address, and a full discourse would require multiple manuscripts. For example, an algorithm could identify an incidental finding that it was not originally intended to detect. An AI/ML algorithm could be programmed to provide predictions about multiple potential diagnoses, rather than focusing on a single diagnosis, to reduce harm or misdiagnosis. Multiple ethical issues arise from using ML/AI in medical applications and should be considered: AI/ML can improve diagnostic accuracy and efficiency, but it should also do no harm. Moreover, ethical issues can arise at different levels, including individual, interpersonal, group, institutional, and societal. For further discussion of ethical issues, please see this review.98

Section VI: Conclusion

As the field of ML/AI advances, with increasing adoption of electronic medical record systems and growing volumes of big data, additional applications of ML/AI will be used in medicine and research. Yet many challenges remain for AI (and ML). For example, ANN-based systems currently make decisions using neurons whose states and decision making are opaque and biased rather than human-interpretable.42 Moreover, generating meaningful language, images, music, and behavior is still very hard. Further, most AI systems lack causal reasoning99 (as opposed to reasoning based upon correlation) or common sense.100 Today, AI researchers generally think of “AI” as “specific”: a set of computational techniques applied to a specific problem. This connotation is in contrast to “general AI” or “Artificial General Intelligence” (AGI), which is the attempt to truly replicate a computational form of animal intelligence. As AI/ML advances, it will be increasingly utilized in research and in child neurology.

Funding:

This work was supported in part by the National Center for Advancing Translational Sciences of the National Institutes of Health under Award Numbers UL1TR002378 and KL2TR002381.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Declaration of Interest:

G.Y.G. receives part time salary support from the Centers for Disease Control and Prevention for acute flaccid myelitis surveillance.

J.T.M. was PI and consultant for Cognoa, Inc, Chief Medical Officer for Yamo Pharmaceuticals, Consultant for Kuzani Pharmaceuticals and Cogntio Therapeutics.

M.C.G. has received industry funding from Google, Ford, Konica Minolta, and Lockheed Martin.

N.G., A.B., R.N., B.S., J.H.-C. and S.B. have nothing to declare.

REFERENCES

- 1.Smith RG. Aristotle’s Logic. In: Stanford Encyclopedia of Philosophy. 2000. [Google Scholar]

- 2.Nishitani N, Schurmann M, Amunts K, Hari R. Broca's region: from action to language. Physiology (Bethesda). 2005;20:60–69. [DOI] [PubMed] [Google Scholar]

- 3.Jacobs B, Schall M, Scheibel AB. A quantitative dendritic analysis of Wernicke's area in humans. II. Gender, hemispheric, and environmental factors. J Comp Neurol. 1993;327(1):97–111. [DOI] [PubMed] [Google Scholar]

- 4.McCarthy J. What is Artificial Intelligence? 2004. [Google Scholar]

- 5.Mitchell TM. Machine Learning. New York: McGraw-Hill; 1997. [Google Scholar]

- 6.Murphy KP. Machine Learning: A Probabilistic Perspective. Cambridge, MA: MIT Press; 2012. [Google Scholar]

- 7.Taherkhani A, Belatreche A, Li Y, Cosma G, Maguire LP, McGinnity TM. A review of learning in biologically plausible spiking neural networks. Neural Netw. 2020;122:253–272. [DOI] [PubMed] [Google Scholar]

- 8.Epley N, Gilovich T. The anchoring-and-adjustment heuristic: why the adjustments are insufficient. Psychol Sci. 2006;17(4):311–318. [DOI] [PubMed] [Google Scholar]

- 9.Liao S-H. Expert system methodologies and applications—a decade review from 1995 to 2004. Expert Systems with Applications. 2005;28(1):93–103. [Google Scholar]

- 10.Deng J, Berg AC, Li K, Fei-Fei L. What Does Classifying More Than 10,000 Image Categories Tell Us? 2010; Berlin, Heidelberg. [Google Scholar]

- 11.McCulloch WS, Pitts W. A logical calculus of the ideas immanent in nervous activity. 1943. Bull Math Biol. 1990;52(1-2):99–115; discussion 173–197. [PubMed] [Google Scholar]

- 12.Pitts W, McCulloch WS. How we know universals; the perception of auditory and visual forms. Bull Math Biophys. 1947;9(3):127–147. [DOI] [PubMed] [Google Scholar]

- 13.Hebb DO. The organization of behavior; a neuropsychological theory. Wiley; 1949. [Google Scholar]

- 14.Crevier D. The Tumultuous History of the Search for Artificial Intelligence. New York, NY: Basic Books; 1993. [Google Scholar]

- 15.Turing AM. Computing Machinery and Intelligence. In: Epstein R, Roberts G, Beber G, ed. Parsing the Turing Test. Dordrecht: Springer; 2007. [Google Scholar]

- 16.McCarthy J, Minsky ML, Rochester N, Shannon CE. A proposal for the Dartmouth summer research project on artificial intelligence. Paper presented at: AI Magazine; 1955. [Google Scholar]

- 17.Newell A, Simon HA. Report on a General Problem-Solving Program. 1959. [Google Scholar]

- 18.Ritter FE, Tehranchi F, Oury JD. ACT-R: A cognitive architecture for modeling cognition. Wiley Interdiscip Rev Cogn Sci. 2019;10(3):e1488. [DOI] [PubMed] [Google Scholar]

- 19.Rosenblatt F. The perceptron: a probabilistic model for information storage and organization in the brain. Psychol Rev. 1958;65(6):386–408. [DOI] [PubMed] [Google Scholar]

- 20.Coats PK. Why Expert Systems Fail. Financial Management. 1988;17(3):77–86. [Google Scholar]

- 21.Hopfield JJ. Neural networks and physical systems with emergent collective computational abilities. Proc Natl Acad Sci U S A. 1982;79(8):2554–2558. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Rumelhart DE, Hinton GE, Williams RJ. Learning representations by back-propagating errors. Nature. 1986;323(6088):533–536. [Google Scholar]

- 23.Cortes C, Vapnik V. Support-vector networks. Machine Learning. 1995;20(3):273–297. [Google Scholar]

- 24.Pearl J. Fusion, propagation, and structuring in belief networks. Artificial Intelligence. 1986;29(3):241–288. [Google Scholar]

- 25.Marx V. Biology: The big challenges of big data. Nature. 2013;498(7453):255–260. [DOI] [PubMed] [Google Scholar]

- 26.Chen Y, Elenee Argentinis JD, Weber G. IBM Watson: How Cognitive Computing Can Be Applied to Big Data Challenges in Life Sciences Research. Clin Ther. 2016;38(4):688–701. [DOI] [PubMed] [Google Scholar]

- 27.LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521(7553):436–444. [DOI] [PubMed] [Google Scholar]

- 28.Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems; 2012. [Google Scholar]

- 29.Cadieu CF, Hong H, Yamins DL, et al. Deep neural networks rival the representation of primate IT cortex for core visual object recognition. PLoS Comput Biol. 2014;10(12):e1003963. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Schaller RR. Moore's law: past, present and future. IEEE Spectrum. 1997;34(6):52–59. [Google Scholar]

- 31.Mnih V, Kavukcuoglu K, Silver D, et al. Human-level control through deep reinforcement learning. Nature. 2015;518(7540):529–533. [DOI] [PubMed] [Google Scholar]

- 32.Silver D, Huang A, Maddison CJ, et al. Mastering the game of Go with deep neural networks and tree search. Nature. 2016;529(7587):484–489. [DOI] [PubMed] [Google Scholar]

- 33.Brown T, Mann B, Ryder N, et al. Language models are few-shot learners. Advances in Neural Information Processing Systems. 2020;33:1877–1901. [Google Scholar]

- 34.Jumper J, Evans R, Pritzel A, et al. Highly accurate protein structure prediction with AlphaFold. Nature. 2021;596(7873):583–589. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Vinyals O, Babuschkin I, Czarnecki WM, et al. Grandmaster level in StarCraft II using multi-agent reinforcement learning. Nature. 2019;575(7782):350–354. [DOI] [PubMed] [Google Scholar]

- 36.Goodfellow I, Pouget-Abadie J, Mirza M, et al. Generative Adversarial Nets. Advances in Neural Information Processing Systems; 27 (NIPS 2014); 2014. [Google Scholar]

- 37.OpenAI. DALL-E 2. https://openai.com/dall-e-2/. Published 2022. Accessed November 22, 2022.

- 38.Vaswani A, Shazeer N, Parmar N, et al. Attention Is All You Need. arXiv. 2017. [Google Scholar]

- 39.Gunning D, Vorm E, Wang JY, Turek M. DARPA's explainable AI (XAI) program: A retrospective. Applied AI Letters. 2021;2(4):e61. [Google Scholar]

- 40.Barredo Arrieta A, Díaz-Rodríguez N, Del Ser J, et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Information Fusion. 2020;58:82–115. [Google Scholar]

- 41.Sturm I, Lapuschkin S, Samek W, Muller KR. Interpretable deep neural networks for single-trial EEG classification. J Neurosci Methods. 2016;274:141–145. [DOI] [PubMed] [Google Scholar]

- 42.Rudin C. Stop Explaining Black Box Machine Learning Models for High Stakes Decisions and Use Interpretable Models Instead. Nat Mach Intell. 2019;1(5):206–215. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Silva A, Schrum M, Hedlund-Botti E, Gopalan N, Gombolay M. Explainable Artificial Intelligence: Evaluating the Objective and Subjective Impacts of xAI on Human–Agent Interaction. International Journal of Human-Computer Interaction. 2022:1–15. [Google Scholar]

- 44.Khavas ZR, Ahmadzadeh SR, Robinette P. Modeling Trust in Human-Robot Interaction: A Survey. Paper presented at: Social Robotics; 2020; Cham. [Google Scholar]

- 45.Grossberg S. How Does a Brain Build a Cognitive Code? In: Studies of Mind and Brain: Neural Principles of Learning, Perception, Development, Cognition, and Motor Control. Dordrecht: Springer Netherlands; 1982:1–52. [Google Scholar]

- 46.Kaelbling LP, Littman ML, Moore AW. Reinforcement learning: A survey. Journal of artificial intelligence research. 1996;1(4):237–285. [Google Scholar]

- 47.Bellman R, Kalaba R. On the role of dynamic programming in statistical communication theory. IRE Transactions on Information Theory. 1957;3(3):197–203. [Google Scholar]

- 48.Sutton RS. Learning to predict by the methods of temporal differences. Machine Learning. 1988;3(1):9–44. [Google Scholar]

- 49.Montague PR, Dayan P, Sejnowski TJ. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J Neurosci. 1996;16(5):1936–1947. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Ye SCM. Spatio-temporal estimation of the daily cases of COVID-19 in worldwide using random forest machine learning algorithm. Chaos Solitons Fractals. 2020;140:110210. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Shwartz-Ziv R, Armon A. Tabular data: Deep learning is not all you need. Information Fusion. 2022;81:84–90. [Google Scholar]

- 52.Xu L, Skoularidou M, Cuesta-Infante A, Veeramachaneni K. Modeling Tabular data using Conditional GAN. Advances in Neural Information Processing Systems 32 (NeurIPS 2019); 2019. [Google Scholar]

- 53.Arik SÖ, Pfister T. Tabnet: Attentive interpretable tabular learning. Paper presented at: Proceedings of the AAAI Conference on Artificial Intelligence 2021. [Google Scholar]

- 54.Ho YC, Pepyne DL. Simple Explanation of the No-Free-Lunch Theorem and Its Implications. Journal of Optimization Theory and Applications. 2002;115(3):549–570. [Google Scholar]

- 55.Duran I, Stark C, Saglam A, et al. Artificial intelligence to improve efficiency of administration of gross motor function assessment in children with cerebral palsy. Dev Med Child Neurol. 2022;64(2):228–234. [DOI] [PubMed] [Google Scholar]

- 56.Erker C, Tamrazi B, Poussaint TY, et al. Response assessment in paediatric high-grade glioma: recommendations from the Response Assessment in Pediatric Neuro-Oncology (RAPNO) working group. Lancet Oncol. 2020;21(6):e317–e329. [DOI] [PubMed] [Google Scholar]

- 57.Peng J, Kim DD, Patel JB, et al. Deep learning-based automatic tumor burden assessment of pediatric high-grade gliomas, medulloblastomas, and other leptomeningeal seeding tumors. Neuro Oncol. 2022;24(2):289–299. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Yildiz I, Garner R, Lai M, Duncan D. Unsupervised seizure identification on EEG. Comput Methods Programs Biomed. 2022;215:106604. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Karayiannis NB, Tao G, Xiong Y, et al. Computerized motion analysis of videotaped neonatal seizures of epileptic origin. Epilepsia. 2005;46(6):901–917. [DOI] [PubMed] [Google Scholar]

- 60.Tomlinson SB, Porter BE, Marsh ED. Interictal network synchrony and local heterogeneity predict epilepsy surgery outcome among pediatric patients. Epilepsia. 2017;58(3):402–411. [DOI] [PubMed] [Google Scholar]

- 61.Grinspan ZM, Patel AD, Hafeez B, Abramson EL, Kern LM. Predicting frequent emergency department use among children with epilepsy: A retrospective cohort study using electronic health data from 2 centers. Epilepsia. 2018;59(1):155–169. [DOI] [PubMed] [Google Scholar]

- 62.Abbasi B, Goldenholz DM. Machine learning applications in epilepsy. Epilepsia. 2019;60(10):2037–2047. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Devinsky O, Dilley C, Ozery-Flato M, et al. Changing the approach to treatment choice in epilepsy using big data. Epilepsy Behav. 2016;56:32–37. [DOI] [PubMed] [Google Scholar]

- 64.Adler S, Wagstyl K, Gunny R, et al. Novel surface features for automated detection of focal cortical dysplasias in paediatric epilepsy. NeuroImage Clinical. 2016;14:18–27. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Ahmed B, Brodley CE, Blackmon KE, et al. Cortical feature analysis and machine learning improves detection of “MRI-negative” focal cortical dysplasia. Epilepsy & Behavior. 2015;48:21–28. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Ahmed B, Thesen T, Blackmon KE, Kuzniecky R, Devinsky O, Brodley CE. Decrypting "cryptogenic" epilepsy: Semi-supervised hierarchical conditional random fields for detecting cortical lesions in MRI-negative patients. The Journal of Machine Learning Research. 2016;17(1):3885–3914. [Google Scholar]

- 67.Gill RS, Hong S-J, Fadaie F, et al. Automated Detection of Epileptogenic Cortical Malformations Using Multimodal MRI. Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: Third International Workshop, DLMIA 2017, ML-CDS 2017 Lecture Notes in Computer Science. 2017;10553:349–356. [Google Scholar]

- 68.Hong S-J, Kim H, Schrader D, Bernasconi N, Bernhardt B, Bernasconi A. Automated detection of cortical dysplasia type II in MRI-negative epilepsy. Neurology. 2014;83(1):48–55. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Jin B, Krishnan B, Adler S, et al. Automated detection of focal cortical dysplasia type II with surface-based magnetic resonance imaging postprocessing and machine learning. Epilepsia. 2018;59(5):982–992. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Tan Y-L, Kim H, Lee S, et al. Quantitative surface analysis of combined MRI and PET enhances detection of focal cortical dysplasias. Neuroimage. 2018;166:10–18. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Snyder K, Whitehead EP, Theodore WH, Zaghloul KA, Inati SJ, Inati SK. Distinguishing type II focal cortical dysplasias from normal cortex: A novel normative modeling approach. NeuroImage: Clinical. 2021;30:102565. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.Kini LG, Gee JC, Litt B. Computational analysis in epilepsy neuroimaging: a survey of features and methods. NeuroImage: Clinical. 2016;11:515–529. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Gill RS, Caldairou B, Bernasconi N, Bernasconi A. Uncertainty-informed detection of epileptogenic brain malformations using bayesian neural networks. International Conference on Medical Image Computing and Computer-Assisted Intervention - MICCAI 2019 Lecture Notes in Computer Science. 2019;11767:225–233. [Google Scholar]

- 74.Spitzer H, Ripart M, Whitaker K, et al. Interpretable surface-based detection of focal cortical dysplasias: a MELD study. medRxiv. 2021:2021.2012.2013.21267721. [Google Scholar]

- 75.Zhao Y, Ahmed B, Thesen T, et al. A non-parametric approach to detect epileptogenic lesions using restricted boltzmann machines. Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. 2016:373–382. [Google Scholar]

- 76.Gill RS, Lee HM, Caldairou B, et al. Multicenter Validation of a Deep Learning Detection Algorithm for Focal Cortical Dysplasia. Neurology. 2021;97(16):e1571–e1582. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77.U.S. Food and Drug Administration. FDA Authorizes Marketing of Diagnostic Aid for Autism Spectrum Disorder. https://www.fda.gov/news-events/press-announcements/fda-authorizes-marketing-diagnostic-aid-autism-spectrum-disorder. Accessed July 08, 2022.

- 78.Koegel LK, Koegel RL, Ashbaugh K, Bradshaw J. The importance of early identification and intervention for children with or at risk for autism spectrum disorders. Int J Speech Lang Pathol. 2014;16(1):50–56. [DOI] [PubMed] [Google Scholar]

- 79.Kosmicki JA, Sochat V, Duda M, Wall DP. Searching for a minimal set of behaviors for autism detection through feature selection-based machine learning. Transl Psychiatry. 2015;5:e514. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80.Wall DP, Kosmicki J, Deluca TF, Harstad E, Fusaro VA. Use of machine learning to shorten observation-based screening and diagnosis of autism. Transl Psychiatry. 2012;2:e100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 81.Duda M, Kosmicki JA, Wall DP. Testing the accuracy of an observation-based classifier for rapid detection of autism risk. Transl Psychiatry. 2014;4:e424. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 82.Duda M, Daniels J, Wall DP. Clinical Evaluation of a Novel and Mobile Autism Risk Assessment. J Autism Dev Disord. 2016;46(6):1953–1961. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Levy S, Duda M, Haber N, Wall DP. Sparsifying machine learning models identify stable subsets of predictive features for behavioral detection of autism. Mol Autism. 2017;8:65. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Wall DP, Dally R, Luyster R, Jung JY, Deluca TF. Use of artificial intelligence to shorten the behavioral diagnosis of autism. PLoS One. 2012;7(8):e43855. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 85.Tariq Q, Daniels J, Schwartz JN, Washington P, Kalantarian H, Wall DP. Mobile detection of autism through machine learning on home video: A development and prospective validation study. PLoS Med. 2018;15(11):e1002705. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 86.Kanne SM, Carpenter LA, Warren Z. Screening in toddlers and preschoolers at risk for autism spectrum disorder: Evaluating a novel mobile-health screening tool. Autism Res. 2018;11(7):1038–1049. [DOI] [PubMed] [Google Scholar]

- 87.Abbas H, Garberson F, Glover E, Wall DP. Machine learning approach for early detection of autism by combining questionnaire and home video screening. J Am Med Inform Assoc. 2018;25(8):1000–1007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 88.Abbas H, Garberson F, Liu-Mayo S, Glover E, Wall DP. Multi-modular AI Approach to Streamline Autism Diagnosis in Young Children. Sci Rep. 2020;10(1):5014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 89.Brian JA, Zwaigenbaum L, Ip A. Standards of diagnostic assessment for autism spectrum disorder. Paediatr Child Health. 2019;24(7):444–460. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 90.Kompa B, Snoek J, Beam AL. Second opinion needed: communicating uncertainty in medical machine learning. NPJ Digit Med. 2021;4(1):4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 91.Cortes C, DeSalvo G, Gentile C, Mohri M, Yang S. Online Learning with Abstention. Paper presented at: Proceedings of the 35th International Conference on Machine Learning 2018. [Google Scholar]

- 92.Megerian JT, Dey S, Melmed RD, et al. Evaluation of an artificial intelligence-based medical device for diagnosis of autism spectrum disorder. NPJ Digit Med. 2022;5(1):57. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 93.Esteva A, Robicquet A, Ramsundar B, et al. A guide to deep learning in healthcare. Nat Med. 2019;25(1):24–29. [DOI] [PubMed] [Google Scholar]

- 94.Xie K, Gallagher RS, Conrad EC, et al. Extracting seizure frequency from epilepsy clinic notes: a machine reading approach to natural language processing. J Am Med Inform Assoc. 2022;29(5):873–881. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 95.Lo Barco T, Kuchenbuch M, Garcelon N, Neuraz A, Nabbout R. Improving early diagnosis of rare diseases using Natural Language Processing in unstructured medical records: an illustration from Dravet syndrome. Orphanet J Rare Dis. 2021;16(1):309. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 96.Wirrell EC, Hood V, Knupp KG, et al. International consensus on diagnosis and management of Dravet syndrome. Epilepsia. 2022;63(7):1761–1777. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 97.Vassar R, Schadl K, Cahill-Rowley K, Yeom K, Stevenson D, Rose J. Neonatal Brain Microstructure and Machine-Learning-Based Prediction of Early Language Development in Children Born Very Preterm. Pediatr Neurol. 2020;108:86–92. [DOI] [PubMed] [Google Scholar]

- 98.Morley J, Machado CCV, Burr C, et al. The ethics of AI in health care: A mapping review. Soc Sci Med. 2020;260:113172. [DOI] [PubMed] [Google Scholar]

- 99.Pearl J. Embracing causality in default reasoning. Artificial Intelligence. 1988;35(2):259–271. [Google Scholar]

- 100.Davis E, Marcus G. Commonsense Reasoning and Commonsense Knowledge in Artificial Intelligence. Communications of the ACM. 2015;58:92–103. [Google Scholar]

- 101.Christmann A, Steinwart I. Support Vector Machines. 1 ed. New York, NY: Springer; 2008. [Google Scholar]