Abstract

We exhibit examples of high-dimensional unimodal posterior distributions arising in nonlinear regression models with Gaussian process priors for which Markov chain Monte Carlo (MCMC) methods can take an exponential run-time to enter the regions where the bulk of the posterior measure concentrates. Our results apply to worst-case initialized (‘cold start’) algorithms that are local in the sense that their step sizes cannot be too large on average. The counter-examples hold for general MCMC schemes based on gradient or random walk steps, and the theory is illustrated for Metropolis–Hastings adjusted methods such as preconditioned Crank–Nicolson and the Metropolis-adjusted Langevin algorithm.

This article is part of the theme issue ‘Bayesian inference: challenges, perspectives, and prospects’.

Keywords: MCMC, Bayesian inference, Gaussian processes, computational hardness

1. Introduction

Markov chain Monte Carlo (MCMC) methods are the workhorse of Bayesian computation when closed formulae for estimators or probability distributions are not available. For this reason they have been central to the development and success of high-dimensional Bayesian statistics over the last decades, where one attempts to generate samples from some posterior distribution arising from a prior on a high-dimensional Euclidean space and the observed data vector. MCMC methods tend to perform well in a large variety of problems, are very flexible and user-friendly, and enjoy many theoretical guarantees. Under mild assumptions, they are known to converge to their stationary ‘target’ distributions as a consequence of the ergodic theorem, albeit perhaps slowly, requiring a large number of iterations to provide numerically accurate approximations. When the target distribution is log-concave, MCMC algorithms are known to mix rapidly, even in high dimensions. But for general high-dimensional densities, we have only a restricted understanding of how the mixing time of Markov chains scales with the dimension or with the ‘informativeness’ (sample size or noise level) of the data vector.

Multi-modal distributions are a classical source of difficulty for MCMC algorithms. When there is a deep well in the posterior density between the starting point of an MCMC algorithm and the location where the posterior is concentrated, many MCMC algorithms are known to take a time exponential in the depth of the well to reach the target region, even in low-dimensional settings; see figure 1a and also the discussion surrounding proposition 4.2 below. However, for distributions with a single mode and when the dimension is fixed, MCMC methods can usually be expected to perform well.

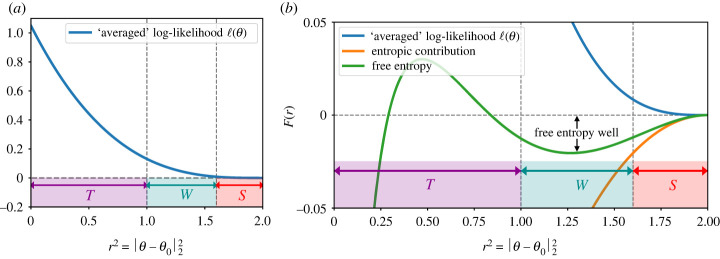

Figure 1.

Two possible sources of MCMC hardness in high dimensions: multi-modal likelihoods and entropic barriers. (a) In low dimensions (here ), MCMC hardness usually arises because of a non-unimodal likelihood, creating an ‘energy barrier’, even though the maximum likelihood is attained at . The MCMC algorithm is assumed to be initialized in the set containing a local maximum of the likelihood. (b) Illustration of the emergence of entropic (or volumetric) difficulties, here in dimension : the set of points close to has much less volume than the set of points far away. As the dimension increases, this phenomenon is amplified: all ratios of volumes of the three sets scale exponentially with the dimension. (Online version in colour.)

In essence, this article is an attempt to explain how, in high dimensions, wells can be formed without multi-modality of a given posterior distribution. The difficulty in this case is volumetric, also referred to as entropic: while the target region contains most of the posterior mass, its (prior) volume is so small compared to the rest of the space that an MCMC algorithm may take an exponential time to find it, see figure 1b. This competition between ‘energy’ (here represented by the log-likelihood term of the posterior distribution) and ‘entropy’ (related to the prior term) has also been exploited in recent work on statistical aspects of MCMC in various high-dimensional inference and statistical physics models [1–5]. These ideas date back, in some form, to the nineteenth-century foundations of statistical mechanics [6] and the notion of free energy, consisting of a sum of energetic and entropic contributions, which the system spontaneously attempts to minimize. The ‘MCMC-hardness’ phenomenon described above is then akin to the metastable behaviour of thermodynamical systems, such as glasses or supercooled liquids. As the temperature decreases, such systems can undergo a ‘first-order’ phase transition, in which a global free energy minimum (analogous to the target region above) abruptly appears, while the system remains trapped in a suboptimal local minimum of the free energy (the starting region of the MCMC algorithm). For the system to reach thermodynamic equilibrium, it must cross an extensive free energy barrier: such a crossing requires an exponentially long time, so that the system appears equilibrated on all relevant timescales, similarly to the MCMC stuck in the starting region. Classical examples include glasses and the popular experiment of rapid freezing of supercooled water (i.e. water that remained liquid below 0 °C) after introducing a perturbation.
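The volumetric effect behind figure 1b can be made concrete for the uniform (Lebesgue) measure on the unit ball. The sketch below assumes three hypothetical nested sets, the ball of radius 1/3 and the annuli 1/3 ≤ |x| < 2/3 and 2/3 ≤ |x| ≤ 1 (the radii are our choice, not the paper's), and computes their log-volume ratios in closed form. Both log-ratios decrease linearly in the dimension D (our notation for this sketch), so the volume ratios themselves decay exponentially.

```python
import math


def log_annulus_volume(a: float, b: float, dim: int) -> float:
    """Log-volume of the annulus {a <= |x| < b} in R^dim, up to an additive constant.

    The ball of radius r has volume c_dim * r**dim, so the annulus has volume
    c_dim * (b**dim - a**dim). The constant log(c_dim) is dropped because it
    cancels in every ratio computed below.
    """
    # log(b**dim - a**dim) = dim * log(b) + log(1 - (a / b)**dim), evaluated stably
    return dim * math.log(b) + math.log1p(-((a / b) ** dim))


def log_volume_ratios(dim: int) -> tuple[float, float]:
    """Log-ratios (inner / outer, middle / outer) for three hypothetical nested sets."""
    inner = log_annulus_volume(0.0, 1 / 3, dim)   # points close to the centre
    middle = log_annulus_volume(1 / 3, 2 / 3, dim)
    outer = log_annulus_volume(2 / 3, 1.0, dim)   # points far from the centre
    return inner - outer, middle - outer


for dim in (2, 10, 100, 1000):
    r_in, r_mid = log_volume_ratios(dim)
    print(f"D={dim:5d}  log(inner/outer)={r_in:10.2f}  log(middle/outer)={r_mid:10.2f}")
```

For large D the first log-ratio is essentially D·log(1/3), so under a uniform prior the region near the centre carries a vanishing fraction of the volume unless the likelihood compensates for it.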

Inspired by recent work [4,5,7], let us illustrate some of the volumetric phenomena which are key to our results below. We separate the parameter space into three regions (see figures 1 and 2), which we name using common MCMC terminology: firstly, a starting (or initialization) region, where an algorithm starts; secondly, a target region, where both the bulk of the posterior mass and the ground truth are situated; and thirdly, an intermediate free-entropy well¹ that separates the starting region from the target region.² In our theorems, these regions will be characterized by their Euclidean distance to the ground truth parameter generating the data. The prior volumes of the annuli closer to the ground truth are smaller than those further out, as illustrated in figure 1b, and in high dimensions this effect becomes quantitative in an essential way. Specifically, the trade-off between the entropic and energetic terms can be such that the following three statements are simultaneously true.

- (i) The target region contains ‘almost all’ of the posterior mass.

- (ii) As one gets closer to the target region (and thus to the ground truth), the log-likelihood is strictly monotonically increasing.

- (iii) Yet the starting region still possesses exponentially more posterior mass than the free-entropy well.

Using ‘bottleneck’ arguments from Markov chain theory (ch. 7 in [8]), this means that an MCMC algorithm that starts in the starting region is expected to take an exponential time to visit the free-entropy well. If the step size is such that the chain cannot ‘jump over’ the well, this also implies an exponential lower bound on the hitting time of the target region. This is illustrated in figure 2 for an averaged version of the model described in §2.
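The bottleneck step can be written out in a few lines. A minimal version, assuming the chain $(\vartheta_s)_{s \ge 0}$ has transition kernel $P$ and invariant distribution $\pi$, is started from $\pi$ conditioned on the starting region $A$, and that the well $B$ is disjoint from $A$ (the symbols $A$, $B$, $P$, $\pi$, $\vartheta_s$ and $\tau_B = \inf\{s \ge 0 : \vartheta_s \in B\}$ are our notation), reads:

```latex
\begin{aligned}
\Pr(\tau_B \le t)
  &\le \sum_{s=1}^{t} \Pr(\vartheta_s \in B)
   = \sum_{s=1}^{t} \int_A P^{s}(x, B)\, \frac{\pi(\mathrm{d}x)}{\pi(A)} \\
  &\le \frac{1}{\pi(A)} \sum_{s=1}^{t} \int P^{s}(x, B)\, \pi(\mathrm{d}x)
   = t\, \frac{\pi(B)}{\pi(A)} .
\end{aligned}
```

The last equality uses the invariance of $\pi$ under $P^{s}$. Hence, if $\pi(B)/\pi(A) \le e^{-cD}$ for some $c > 0$, reaching $B$ with probability at least $1/2$ requires $t \ge e^{cD}/2$ steps; if in addition the steps are too short to jump over $B$, the chain cannot reach the target region before time $\tau_B$.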

Figure 2.

Illustration of a free-energy barrier (or free-entropy well) arising with a unimodal posterior. The model is an ‘averaged’ version of the spiked tensor model, with log-likelihood and uniform prior on the -dimensional unit sphere . is chosen arbitrarily on . The posterior is , for . Up to a constant, the free entropy can be decomposed as the sum of (that only depends on ) and the ‘entropic’ contribution . In the figure we show . (Online version in colour.)
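The shape in figure 2 (a local maximum of the free entropy at the starting point, a well, and a dominant maximum near the ground truth, while the log-likelihood increases monotonically) can be reproduced with a few lines. For x uniform on the unit sphere of R^n, the correlation m = ⟨x, x0⟩ has density proportional to (1 − m²)^((n−3)/2), which gives the entropic term. The caption's exact log-likelihood did not survive extraction, so this sketch assumes a hypothetical averaged log-likelihood λ·n·m^p with λ = 2 and p = 3, restricted to m ∈ [0, 1).

```python
import math


def free_entropy(m: float, n: int, lam: float = 2.0, p: int = 3) -> float:
    """Free entropy of the correlation m = <x, x0>, up to an additive constant.

    Energy: hypothetical averaged log-likelihood lam * n * m**p, increasing in m.
    Entropy: ((n - 3) / 2) * log(1 - m**2), the log-density of m when x is
    uniform on the unit sphere in R^n.
    """
    return lam * n * m**p + 0.5 * (n - 3) * math.log1p(-m * m)


def barrier_profile(n: int, grid_size: int = 10_000) -> tuple[float, float]:
    """Return (global maximiser of the free entropy, depth of the well before it)."""
    grid = [0.999 * i / grid_size for i in range(grid_size + 1)]
    values = [free_entropy(m, n) for m in grid]
    best = max(range(len(values)), key=values.__getitem__)
    # m = 0 is a local maximum; the well is the dip between 0 and the global maximiser
    depth = values[0] - min(values[: best + 1])
    return grid[best], depth


for n in (100, 1000):
    m_star, depth = barrier_profile(n)
    print(f"n={n:5d}  global maximiser m={m_star:.3f}  well depth={depth:.2f}")
```

The well depth grows roughly linearly in n, which is what turns the bottleneck into an exponential escape time, even though the log-likelihood itself has no local optimum.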

In the situation described above, the MCMC iterates never visit the region where the posterior is statistically informative, and hence yield no better inference than a random number generator. One could regard this as a ‘hardness’ result about the computation of posterior distributions in high dimensions by MCMC. In this work, we show that such situations can occur generically, and we establish hitting time lower bounds for common gradient or random walk based MCMC schemes in model problems with nonlinear regression and Gaussian process priors. Before doing this, we briefly review some important results of Ben Arous et al. [4] for the problem of principal component analysis (PCA) in tensor models, which inspired our work. This technique to establish lower bounds for MCMC algorithms has also recently been leveraged in [5] in the context of sparse PCA, and in [7] to establish connections between MCMC lower bounds and the Low Degree Method for algorithmic hardness predictions (see [9] for an expository note on this technique).

When the target distribution is globally log-concave, pictures such as in figure 2 are ruled out (see also remark 4.7) and polynomial-time mixing bounds have been shown for a variety of commonly used MCMC methods. While an exhaustive discussion would be beyond the scope of this paper, we mention here the seminal works [10,11] which were among the first to demonstrate high-dimensional mixing of discretized Langevin methods (even upon ‘cold-start’ initializations like the ones assumed in the present paper). In concrete nonlinear regression models, polynomial-time computation guarantees were given in [12] under a general ‘gradient stability’ condition on the regression map which guarantees that the posterior is (with high probability) locally log-concave on a large enough region including . While this condition can be expected to hold under natural injectivity hypotheses and was verified for an inverse problem with the Schrödinger equation in [12], for non-Abelian X-ray transforms in [13], the ‘Darcy flow’ model involving elliptic partial differential equations (PDE) in [14] and for generalized linear models in [15], all these results hinge on the existence of a suitable initializer of the gradient MCMC scheme used. These results form part of a larger research programme [14,16–19] on algorithmic and statistical guarantees for Bayesian inversion methods [20] applied to problems with partial differential equations. The present article shows that the hypothesis of existence of a suitable initializer is—at least in principle—essential in these results if , and that at most ‘moderately’ high-dimensional () MCMC implementations of Gaussian process priors may be preferable to bypass computational bottlenecks.

Our negative results apply to (worst-case initialized) Markov chains whose step sizes cannot be too large with high probability. As we show, this includes many commonly used algorithms, such as preconditioned Crank–Nicolson (pCN) and the Metropolis-adjusted Langevin algorithm (MALA), whose dynamics are of a ‘local’ nature. A variety of recently developed MCMC methods, such as piecewise deterministic Markov processes, boomerang or zig-zag samplers [21–24], may not fall into our framework. While we are not aware of any rigorous results that would establish polynomial hitting or mixing times of these algorithms for high-dimensional posterior distributions such as those exhibited here, it is of great interest to study whether our computational hardness barriers can be overcome by ‘non-local’ methods. There is some empirical evidence that this may be possible. For instance, in the numerical simulation of models of supercooled liquids [25], methods such as swap Monte Carlo [26] have been observed to equilibrate to low-temperature distributions which were not reachable by local approaches. Another example is the planted clique problem [27]: this model is conjectured to possess a large algorithmically hard phase, and local Monte Carlo methods are known to fail far from the conjectured algorithmic threshold [28–30]. On the other hand, non-local exchange Monte Carlo methods (such as parallel tempering [31]) have been numerically observed to perform significantly better [32].

2. The spiked tensor model: an illustrative example

In this section, we present (a simplified version of) results obtained mostly in [4]. First some notation. For any , we denote by the Euclidean unit sphere in dimensions. For we denote their tensor product.

Spiked tensor estimation is a synthetic model to study tensor PCA, and corresponds to a Gaussian Additive Model with a low-rank prior. More formally, it can be defined as follows [33].

Definition 2.1 (Spiked tensor model). —

Let denote the order of the tensor. The observations and the parameter are generated according to the following joint probability distribution:

(2.1)

Here, denotes the Lebesgue measure on the space of -tensors of size . is the uniform probability measure on , and is the signal-to-noise ratio (SNR) parameter. In particular, the posterior distribution is

(2.2)

in which is a normalization constant, and we define the log-likelihood (up to additive constants) as

(2.3)

In the following, we study the model from definition 2.1 via the prism of statistical inference. In particular, we will study the posterior for a fixed³ ‘data tensor’ . Since such a tensor was generated according to the marginal of (2.1), we parameterize it as , with a -tensor with i.i.d. coordinates, and a ‘ground truth’ vector uniformly sampled in . The goal of our inference task is to recover information on the low-rank perturbation (or equivalently on the vector , possibly up to a global sign depending on the parity of ) from the posterior distribution .
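To make the data tensor concrete, here is a minimal sketch for order p = 3. It assumes a hypothetical normalisation Y = λ·x0⊗x0⊗x0 + W with W having i.i.d. N(0, 1) entries (the exact scaling in (2.1) did not survive extraction). Expanding the square, −‖Y − λ x⊗x⊗x‖²/2 = λ⟨Y, x⊗x⊗x⟩ + const whenever ‖x‖ = 1, since ‖x⊗x⊗x‖ = ‖x‖³ = 1. For odd p, −x0 has a different likelihood from x0, while for even p the likelihood is invariant under x ↦ −x, which is why the sign can only be recovered for odd p.

```python
import itertools
import math
import random


def random_unit_vector(n: int, rng: random.Random) -> list[float]:
    """Uniform draw from the unit sphere in R^n (normalised Gaussian vector)."""
    g = [rng.gauss(0.0, 1.0) for _ in range(n)]
    norm = math.sqrt(sum(v * v for v in g))
    return [v / norm for v in g]


def spiked_tensor(x0: list[float], lam: float, rng: random.Random) -> dict:
    """Order-3 data tensor Y = lam * x0⊗x0⊗x0 + W, W with i.i.d. N(0, 1) entries.

    The scaling lam is a hypothetical stand-in for the SNR normalisation in (2.1).
    """
    n = len(x0)
    return {
        (i, j, k): lam * x0[i] * x0[j] * x0[k] + rng.gauss(0.0, 1.0)
        for i, j, k in itertools.product(range(n), repeat=3)
    }


def tensor_log_likelihood(Y: dict, x: list[float], lam: float) -> float:
    """lam * <Y, x⊗x⊗x>; equals -|Y - lam x⊗x⊗x|^2 / 2 up to a constant when |x| = 1."""
    return lam * sum(y * x[i] * x[j] * x[k] for (i, j, k), y in Y.items())


rng = random.Random(0)
n, lam = 30, 10.0
x0 = random_unit_vector(n, rng)
Y = spiked_tensor(x0, lam, rng)
print("ground truth:", round(tensor_log_likelihood(Y, x0, lam), 1))
print("random point:", round(tensor_log_likelihood(Y, random_unit_vector(n, rng), lam), 1))
```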

Crucially, we are interested in the limit of the model of definition 2.1 as . In particular, all our statements, although sometimes non-asymptotic, are to be interpreted as grows. We say that an event occurs ‘with high probability’ (w.h.p.) when its probability is .⁴ Moreover, by rotation invariance, all statements are uniform over , so that said probabilities only refer to the noise tensor . Finally, throughout our discussion we will work with latitude intervals (or bands) on the sphere, with the North Pole taken to be . We characterize them using inner products (correlations) for odd , and for even (since in this case and are indistinguishable from the point of view of the observer).

Definition 2.2 (Latitude intervals). —

Assume that is even. For we define:

- —

,

- —

,

- —

.

If is odd, we define these sets similarly, replacing by .

Note that these sets can also be characterized using the distance to the ground truth, e.g. when is even.
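The link between latitude bands and distances follows from expanding the square. For unit vectors $x$ and $x_0$ (in $\mathbb{R}^n$, with $n$ denoting the dimension in this sketch):

```latex
\|x - x_0\|^2 = \|x\|^2 - 2\langle x, x_0\rangle + \|x_0\|^2 = 2\bigl(1 - \langle x, x_0\rangle\bigr),
\qquad
\min_{s \in \{-1, +1\}} \|x - s\,x_0\|^2 = 2\bigl(1 - |\langle x, x_0\rangle|\bigr).
```

Consequently, a band of the illustrative form $\{a \le |\langle x, x_0\rangle| < b\}$ with $0 \le a < b \le 1$ coincides with $\{2(1-b) < \min_{s} \|x - s\,x_0\|^2 \le 2(1-a)\}$, and for odd order the same holds with the signed correlation and without the minimum over $s$.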

(a) Posterior contraction

We can use uniform concentration of the likelihood to show that as (after taking the limit ) the posterior contracts in a region infinitesimally close to the ground truth . We first show that a region arbitrarily close to the ground truth exponentially dominates a very large starting region:

Proposition 2.3. —

For any there exists and functions such that , as and for all :

(2.4)

Posterior contraction is the content of the following result:

Corollary 2.4 (Posterior contraction). —

There exists and a function satisfying as , such that for all :

(2.5)

The proofs of proposition 2.3 and corollary 2.4 are given in appendix A.

Remark 2.5 (Suboptimality of uniform bounds). —

Stronger than corollary 2.4, it is known that there exists a sharp threshold such that for any the posterior mean, as well as the maximum likelihood estimator, sit w.h.p. in , with , while such a statement is false for [34–36]. The given by corollary 2.4 is, on the other hand, clearly not sharp, because of the crude uniform bound used in the proof. This can easily be understood in the case, corresponding to rank-one matrix estimation: uniform bounds such as the ones used here would show posterior contraction for , while it is known through the celebrated BBP transition that the maximum likelihood estimator is already correlated with the signal for any [37]. With more refined techniques from the study of random matrices and spin glass theory of statistical physics it is often possible to obtain precise constants for such relevant thresholds.

(b) Algorithmic bottleneck for MCMC

Simple volume arguments, combined with an ingenious use of Markov’s inequality due to Ben Arous et al. [4] and with the rotation invariance of the noise tensor , allow us to obtain a computational hardness result for MCMC algorithms, even though the posterior contracts infinitesimally close to the ground truth, as we saw in corollary 2.4. In the context of the spiked tensor model, these computational hardness results can be found in [4] (see in particular §7). We will state similar results for general nonlinear regression models in §3: in that context we will not need the technique of Ben Arous et al. [4] based on Markov’s inequality, and will rely solely on concentration arguments.

Recall that by §2(a), we can find such that as and for all large enough . Here, we show that escaping the ‘initialization’ region of the MCMC algorithm is hard in a large range of (possibly diverging with ). In what follows, the step size of the algorithm denotes the maximal change allowed in any iteration.⁵ We first state this bottleneck result informally.

Proposition 2.6 (MCMC bottleneck, informal). —

Assume that for all . Then any MCMC algorithm whose invariant distribution is , and with a step size bounded by , will take an exponential time to get out of the ‘initialization’ region.

Note that the step size condition of proposition 2.6 is always meaningful, since our hypothesis on implies , and many MCMC algorithms (e.g. any procedure in which a number of coordinates of the current iterate are changed in a single iteration) will have a step size .

Remark 2.7. —

The results of Ben Arous et al. [4] are stated for a more general ‘Gibbs-type’ distribution as the invariant distribution of the MCMC, , with . The case we consider here is the ‘Bayes-optimal’ , for which . For the general distribution the conditions of proposition 2.6 become and . Ben Arous et al. [4] usually consider , so that they show the bottleneck under the condition .

More generally, is conjectured to be a regime in which all polynomial-time algorithms fail to recover [33,38–41]. On the other hand, ‘local’ methods (such as gradient-based algorithms [42–46], message-passing iterations [35] or natural MCMC algorithms such as the ones of the previous remark) are conjectured or known to fail in the larger range . Proposition 2.6 shows that ‘Bayes-optimal’ MCMC algorithms fail for . To the best of our knowledge, the analysis of this class of algorithms in the regime is still open.

Let us now state formally the key ingredient behind proposition 2.6. It is a restatement of the ‘free energy wells’ result of Ben Arous et al. [4].

Lemma 2.8 (Bottleneck, formal). —

Assume that for all , and let . Let . Then for any small enough, there exists such that for large enough , with probability at least we have:

(2.6)

Note that by simple volume arguments, , so that contains ‘almost all’ the mass of the uniform distribution.
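The ‘simple volume argument’ is the concentration of the uniform distribution on the sphere around any equator: for x uniform on the unit sphere in R^n, the cap {⟨x, x0⟩ ≥ ε} has measure at most exp(−nε²/2), a classical bound. The sketch below checks this numerically, taking x0 = e1 by rotation invariance and sampling the correlation exactly from one Gaussian and one chi-square draw. The values of n, ε and the sample sizes are our choices.

```python
import math
import random


def sample_correlation(n: int, rng: random.Random) -> float:
    """Draw m = <x, e1> for x uniform on the unit sphere in R^n, without forming x.

    With g ~ N(0, I_n), x = g / |g| is uniform and |g|^2 = g1^2 + chi^2_{n-1};
    the chi-square variable is drawn as a Gamma((n - 1) / 2, scale 2) variable.
    """
    g1 = rng.gauss(0.0, 1.0)
    rest = rng.gammavariate((n - 1) / 2, 2.0)
    return g1 / math.sqrt(g1 * g1 + rest)


def cap_frequency(n: int, eps: float, draws: int = 200_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(|<x, e1>| >= eps) under the uniform distribution."""
    rng = random.Random(seed)
    return sum(abs(sample_correlation(n, rng)) >= eps for _ in range(draws)) / draws


for n, eps in ((50, 0.3), (1000, 0.1)):
    bound = 2 * math.exp(-n * eps**2 / 2)  # two-sided spherical-cap bound
    print(f"n={n:5d} eps={eps}: frequency={cap_frequency(n, eps):.4f}  bound={bound:.4f}")
```

Already for n = 1000 and ε = 0.1, the band |⟨x, x0⟩| < ε carries more than 99% of the uniform mass.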

One can then deduce from lemma 2.8 hitting time lower bounds for MCMC algorithms using a folklore bottleneck argument (see Jerrum [8]), which we recall here in a simplified form (see also [5], as well as proposition 4.4, where we detail it further along with a short proof).

Proposition 2.9. —

We fix any and , and let any . Let be a Markov chain on with stationary distribution , and initialized from , the posterior distribution conditioned on . Let be the hitting time of the Markov chain onto . Then, for any ,

(2.7)
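A bound of this type can be checked exactly on a small example. The sketch below builds a nearest-neighbour Metropolis chain on {0, 1, ..., 20} for a hypothetical toy landscape, starts it from the target distribution conditioned on a starting set A, and computes the exact law of the first hitting time of a low-mass set B by removing paths at their first visit. We assume the simplified form P(τ ≤ t) ≤ t·π(B)/π(A); the exact display (2.7) did not survive extraction. The low mass of B is created here by an energy barrier purely for simplicity: the bound does not depend on whether the small mass of B is energetic or entropic in origin.

```python
import math


def metropolis_kernel(weights: list[float]) -> list[list[float]]:
    """Nearest-neighbour Metropolis kernel on {0, ..., L-1}, reversible w.r.t. `weights`."""
    size = len(weights)
    P = [[0.0] * size for _ in range(size)]
    for i in range(size):
        for j in (i - 1, i + 1):
            if 0 <= j < size:
                # propose each neighbour with probability 1/2, accept with min(1, pi_j / pi_i)
                P[i][j] = 0.5 * min(1.0, weights[j] / weights[i])
        P[i][i] = 1.0 - sum(P[i])  # rejected or out-of-range proposals stay put
    return P


def hitting_cdf(P, start, target, t_max):
    """Exact P(tau <= t) for t = 1, ..., t_max, where tau is the first visit to `target`."""
    mu, hit, cdf = list(start), 0.0, []
    for _ in range(t_max):
        mu = [sum(mu[i] * P[i][j] for i in range(len(mu))) for j in range(len(mu))]
        for b in target:
            hit += mu[b]
            mu[b] = 0.0  # remove paths at their first visit, so `hit` counts first hits only
        cdf.append(hit)
    return cdf


# Toy landscape: start region (energy 0), thin barrier (energy 6), deeper target (energy -1).
energy = [0.0] * 9 + [6.0] * 3 + [-1.0] * 9
weights = [math.exp(-e) for e in energy]
pi = [w / sum(weights) for w in weights]
A, B = range(0, 9), [10]  # starting region and the low-mass set the chain must cross
pi_A = sum(pi[i] for i in A)
start = [pi[i] / pi_A if i in A else 0.0 for i in range(len(pi))]  # pi conditioned on A

t_max = 200
cdf = hitting_cdf(metropolis_kernel(weights), start, B, t_max)
bound = [t * sum(pi[b] for b in B) / pi_A for t in range(1, t_max + 1)]
print(f"P(tau <= {t_max}) = {cdf[-1]:.2e}  <=  bound {bound[-1]:.2e}")
```

Although the target region here carries more mass than A, the chain started in A reaches B within 200 steps with probability only of order 10⁻².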

Remark 2.10 (MCMC initialization). —

Note that lemma 2.8, combined with proposition 2.9, shows hardness of MCMC initialized at points drawn from . In particular, it is easy to see that this implies (via the probabilistic method) the existence of such ‘hard’ initializing points. While one might hope to show such negative results for more general initializations, this remains an open problem. On the other hand, Ben Arous et al. [4] show that there exist initializers in for which vanilla Langevin dynamics achieve non-trivial recovery of the signal even for (a phenomenon they call ‘equatorial passes’).

3. Main results for nonlinear regression with Gaussian priors

We now turn to the main contribution of this article, which is to exhibit some of the phenomena described in §2 in the context of nonlinear regression models. All the theorems of this section are proven in detail in §4.

Consider data from the random design regression model

(3.1)

where is a regression map taking values in the space on some bounded subset of , and where the are drawn uniformly on . For convenience, we assume that has Lebesgue measure . The law of the data is a product measure on , with associated expectation operator . Here varies in some parameter space

and is a ‘ground truth’ (we could use ‘mis-specified’ and project it onto ). We will primarily consider the case where and , and consider high-dimensional asymptotics where (and then also ) diverge to infinity, even though some aspects of our proofs do not rely on these assumptions. We will say that events hold with high probability if as , and we will use the same terminology later when it involves the law of some Markov chain.
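To make the random design model concrete, the sketch below simulates data with design domain [0, 1] (so that it has Lebesgue measure one) and evaluates the log-likelihood entering the posterior, assuming standard Gaussian noise, so that it equals −½ Σ_i (Y_i − G_θ(X_i))² up to a constant. The forward map G_θ(x) = tanh(Σ_k θ_k √2 cos(πkx)) and the function names are purely hypothetical; this is not the map constructed in the proofs.

```python
import math
import random


def forward_map(theta: list[float], x: float) -> float:
    """Hypothetical nonlinear regression map G_theta(x) on the design domain [0, 1]."""
    return math.tanh(sum(t * math.sqrt(2) * math.cos(math.pi * (k + 1) * x)
                         for k, t in enumerate(theta)))


def simulate_data(theta0: list[float], n_obs: int, rng: random.Random):
    """Random design: X_i ~ Uniform[0, 1], Y_i = G_theta0(X_i) + eps_i, eps_i ~ N(0, 1)."""
    xs = [rng.random() for _ in range(n_obs)]
    ys = [forward_map(theta0, x) + rng.gauss(0.0, 1.0) for x in xs]
    return xs, ys


def regression_log_likelihood(theta, xs, ys) -> float:
    """Gaussian log-likelihood -1/2 * sum_i (Y_i - G_theta(X_i))^2, up to a constant."""
    return -0.5 * sum((y - forward_map(theta, x)) ** 2 for x, y in zip(xs, ys))


rng = random.Random(1)
theta0 = [0.0] * 5  # ground truth at the origin
xs, ys = simulate_data(theta0, n_obs=2000, rng=rng)
for theta in (theta0, [0.1] * 5, [0.5] * 5):
    print(theta[0], round(regression_log_likelihood(theta, xs, ys), 1))
```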

Let be a prior (Borel probability measure) on so that given the data the posterior measure is the ‘Gibbs’-type distribution

(3.2)

where

(a) Hardness examples for posterior computation with Gaussian priors

We are concerned here with the question of whether one can sample from the Gibbs measure (3.2) by MCMC algorithms. The priors will be Gaussian, so the ‘source’ of the difficulty will arise from the log-likelihood function . On the one hand, recent work [10–14] has demonstrated that if is ‘on average’ (under ) log-concave, possibly only locally near the ground truth , then MCMC methods that are initialized into the area of log-concavity can mix towards in polynomial time even in high-dimensional () and ‘informative’ () settings. In the absence of such structural assumptions, however, posterior computation may be intractable, and the purpose of this section is to give some concrete examples for this with choices of that are representative of nonlinear regression models.

We will provide lower bounds on the run-time of ‘worst case’ initialized MCMC in settings where the average posterior surface is not globally log-concave but still unimodal. Both the log-likelihood function and posterior density exhibit linear growth towards their modes, and the average log-likelihood is locally log-concave at . In particular, the Fisher information is well defined and non-singular at the ground truth.

The computational hardness does not arise from a local optimum (‘multi-modality’), but from the difficulty MCMC encounters in ‘choosing’ among many high-dimensional directions when started away from the bulk of the support of the posterior measure. That such problems occur in high dimensions is related to the probabilistic structure of the prior , and the manifestation of ‘free energy barriers’ in the posterior distribution.

In many applications of Bayesian statistics, such as in machine learning or in nonlinear inverse problems with PDEs, Gaussian process priors are commonly used for inference. To connect to such situations we illustrate the key ideas that follow with two canonical examples where the prior on is the law

(3.3)

where is the covariance matrix arising from the law of a -dimensional Whittle–Matérn-type Gaussian random field (see §4(d)(i) for a detailed definition). These priors represent widely popular choices in Bayesian statistical inference [47,48] and can be expected to yield consistent statistical solutions of regression problems even when , see [48,49]. In (b), we can also accommodate a further ‘rescaling’ (-dependent shrinkage) of the prior similar to what has been used in recent theory for nonlinear inverse problems [12,13,18], see remark 4.6 for details.

We will present our main results for the case where the ground truth is the origin. This streamlines notation while also being the ‘hardest’ case for negative results, since the priors from (a) and (b) are then already centred at the correct parameter.

To formalize our results, let us define balls

(3.4)

centred at the origin. We will also require the annuli

(3.5)

for to be chosen. To connect this to the notation in the preceding sections, the sets will play the role of the initialization (or starting) region , while (for suitable ) corresponds to the target region where the posterior mass concentrates. The ‘intermediate’ region representing the ‘free-energy barrier’ is constructed in the proofs of the theorems to follow.

Our results hold for general Markov chains whose invariant measure equals the posterior measure (3.2), and which admit a bound on their ‘typical’ step sizes. As step sizes can be random, this assumption needs to be accommodated in the probabilistic framework describing the transition probabilities of the chain. Let (for and Borel sets ), denote a sequence of Markov kernels describing the Markov chain dynamics employed for the computation of the posterior distribution . Recall that a probability measure on is called invariant for if for all Borel sets .

Assumption 3.1. —

Let be a sequence of Markov kernels satisfying the following:

- (i)

has invariant distribution from (3.2).

- (ii)

For some fixed and for sequences , , with -probability approaching as ,

This assumption states that typical steps of the Markov chain are, with high probability (both under the law of the Markov chain and the randomness of the invariant ‘target’ measure), concentrated in an area of size around the current state , uniformly in a ball of radius around . For standard MCMC algorithms (such as pCN, MALA) whose proposal steps are based on the discretization of some continuous-time diffusion process, such conditions can be checked, as we will show in the next section.
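For a concrete chain, the typical step size in assumption 3.1(ii) can be read off by simulation. The sketch assumes the commonly used pCN proposal √(1−2δ)θ + √(2δ)ξ with ξ ~ N(0, C), and a hypothetical isotropic covariance C = I_D/D (D the dimension in this sketch). The step length ‖p − θ‖ then concentrates sharply around √((1 − √(1−2δ))²‖θ‖² + 2δ) ≈ √(2δ), uniformly over points θ of a given norm by rotation invariance.

```python
import math
import random


def pcn_proposal(theta: list[float], delta: float, rng: random.Random) -> list[float]:
    """pCN proposal sqrt(1 - 2*delta) * theta + sqrt(2*delta) * xi, xi ~ N(0, I_D / D)."""
    dim = len(theta)
    a, b = math.sqrt(1 - 2 * delta), math.sqrt(2 * delta)
    return [a * t + b * rng.gauss(0.0, 1.0 / math.sqrt(dim)) for t in theta]


def step_sizes(dim: int, delta: float, radius: float, draws: int, seed: int = 0) -> list[float]:
    """Euclidean lengths |p - theta| of repeated proposals from a point with |theta| = radius."""
    rng = random.Random(seed)
    theta = [radius / math.sqrt(dim)] * dim
    return [math.dist(pcn_proposal(theta, delta, rng), theta) for _ in range(draws)]


delta = 0.01
steps = step_sizes(dim=1000, delta=delta, radius=1.0, draws=1000)
# |p - theta|^2 ≈ (1 - sqrt(1 - 2 delta))^2 |theta|^2 + 2 delta |xi|^2, with |xi|^2 ≈ 1
predicted = math.sqrt((1 - math.sqrt(1 - 2 * delta)) ** 2 + 2 * delta)
print(f"min={min(steps):.4f}  max={max(steps):.4f}  predicted={predicted:.4f}")
```

All 1000 simulated steps fall in a narrow window around the predicted value, i.e. the step size is essentially deterministic in high dimensions, which is the situation assumption 3.1 formalizes.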

Theorem 3.2. —

Let , consider the posterior (3.2) arising from the model (3.1) and a prior of density , and let . Then there exists and a fixed constant for which the following statements hold true.

- (i)

The expected likelihood is unimodal with mode , locally log-concave near , radially symmetric, Lipschitz continuous and monotonically decreasing in on .

- (ii)

For any fixed , with high probability the log-likelihood and the posterior density are monotonically decreasing in on the set .

- (iii)

We have that in probability.

- (iv)

There exists such that for any (sequence of) Markov kernels on and associated chains that satisfy assumption 3.1 for some , , sequence and all large enough, we can find an initialization point such that with high probability (under the law of and the Markov chain), the hitting time for to reach (with as in (iii)) is lower bounded as

The interpretation is that despite the posterior being strictly increasing in the radial variable (at least for , any —note that maximizers of the posterior density may deviate from the ‘ground truth’ by some asymptotically vanishing error, cf. also proposition 4.1), MCMC algorithms started in will still take an exponential time before visiting the region where the posterior mass concentrates. This is true for small enough step size independently of . The result holds also for drawn from an absolutely continuous distribution on as inspection of the proof shows. Finally, we note that at the expense of more cumbersome notation, the above high probability results (and similarly in theorem 3.3) could be made non-asymptotic, in the sense that for all all statements hold with probability at least for all large enough, where the dependency of on can be made explicit.

For ‘ellipsoidally supported’ -regular priors (b), the idea is similar but the geometry of the problem changes as the prior now ‘prefers’ low-dimensional subspaces of , forcing the posterior closer towards the ground truth . We show that if the step size is small compared to a scaling for determined by , then the same hardness phenomenon persists. Note that ‘small’ is only ‘polynomially small’ in and hence algorithmic hardness does not come from exponentially small step sizes.
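The preference of an α-regular prior for low-dimensional subspaces can be seen without any sampling: under a centred Gaussian prior with diagonal covariance, the expected squared norm splits across coordinates in proportion to the eigenvalues. The sketch assumes eigenvalues k^(−2α/d), a typical Whittle–Matérn-type decay, with hypothetical values α = 2 and d = 1; the paper's covariance is defined in §4(d)(i) and may differ in constants and normalisation.

```python
def energy_fraction(eigenvalues: list[float], m: int) -> float:
    """Share of E|theta|^2 in the first m coordinates when theta ~ N(0, diag(eigenvalues))."""
    return sum(eigenvalues[:m]) / sum(eigenvalues)


D = 1000
alpha, d = 2.0, 1                                             # hypothetical smoothness, domain dimension
isotropic = [1.0 / D] * D                                     # every direction equally weighted
decaying = [k ** (-2 * alpha / d) for k in range(1, D + 1)]   # assumed Matérn-type spectral decay

for name, eigenvalues in (("isotropic", isotropic), ("decaying", decaying)):
    share = energy_fraction(eigenvalues, D // 10)
    print(f"{name:9s}: share of first {D // 10} coordinates = {share:.6f}")
```

Under the isotropic prior the first tenth of the coordinates carries a tenth of the expected squared norm, whereas under the decaying spectrum it carries essentially all of it.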

Theorem 3.3. —

Let , consider the posterior (3.2) arising from the model (3.1) and a prior of density for some , and let . Define . Then there exists and some fixed constant for which the following statements hold true.

- (i)

The expected likelihood is unimodal with mode , locally log-concave near , radially symmetric, Lipschitz continuous and monotonically decreasing in on .

- (ii)

For any fixed , with high probability is radially symmetric and decreasing in on the set .

- (iii)

Defining , we have in probability.

- (iv)

There exist positive constants and such that for any (sequence of) Markov kernels on and associated chains that satisfy assumption 3.1 for some , , sequence and all large enough, we can find an initialization point such that with high probability (under the law of and the Markov chain), the hitting time for to reach is lower bounded as

Again, (iv) holds as well for drawn from an absolutely continuous distribution on . We also note that depends only on and the choice of but not on any other parameters.

Remark 3.4. —

As opposed to theorem 3.2, due to the anisotropy of the prior density , the posterior distribution is no longer radially symmetric in the preceding theorem, whence part (ii) differs from theorem 3.2. But a slightly weaker form of monotonicity of the posterior density still holds: the same arguments employed to prove part (ii) of theorem 3.2 show that is decreasing on (any ) along the half-lines through , i.e.

(3.6)

We note that this property precludes the existence of local extrema outside of the region of dominant posterior mass, and implies that moving toward the origin always increases the posterior density. As a result, many typical Metropolis–Hastings algorithms would be inclined to accept such ‘radially inward’ moves if they arise as proposals. Thus, crucially, our exponential hitting time lower bound in part (iv) arises not through multi-modality, but merely through volumetric properties of high-dimensional Gaussian measures.

Remark 3.5 (On the step size condition). —

One may wonder whether larger step sizes can help to overcome the negative result presented in the last theorem. If the step sizes are ‘time-homogeneous’ and on average, then we may hit the region where the posterior is supported at some time. This would happen ‘by chance’ and not because the data (via ) would suggest moving there, and future proposals will likely be outside of that bulk region, so that the chain will either exit the relevant region again or get stuck because the accept/reject step rejects moves in such directions. In this sense, a negative result for (polynomially) small step sizes exhibits fundamental limitations on the ability of the chain to explore the precise characteristics of the posterior distribution. We also remark that the Lipschitz constants of are of order or in the preceding theorems, respectively. A Markov chain obtained from discretizing a continuous diffusion process (such as MALA, discussed in the next section) will generally require step sizes that are inversely proportional to that Lipschitz constant in order to inherit the dynamics of the continuous process. For such examples, assumption 3.1 is natural. But as discussed at the end of the introduction, there exists a variety of ‘non-local’ MCMC algorithms for which this step size assumption may not be satisfied.

(b) Implications for common MCMC methods with ‘cold-start’

The preceding general hitting time bounds apply to commonly used MCMC methods in high-dimensional statistics. We focus in particular on algorithms that are popular with PDE models and inverse problems, see, e.g. [50,51] and also [14] for many more references. We illustrate this for two natural examples with Metropolis–Hastings adjusted random walk and gradient algorithms. Other examples can be generated without difficulty.

(i) Preconditioned Crank–Nicolson

We first give some hardness results for the popular pCN algorithm. A dimension-free convergence analysis for pCN was given in the important paper by Hairer et al. [52] based on ideas from Hairer et al. [53]. The results in the present section show that while the mixing bounds from Hairer et al. [52] are in principle uniform in , the implicit dependence of the constants on the conditions imposed on the log-likelihood function in [52] can reintroduce exponential scaling when one wants to apply the results from Hairer et al. [52] to concrete (-dependent) posterior distributions. This confirms a conjecture about pCN made in Section 1.2.1 of Nickl & Wang [12].

Let denote the covariance of some Gaussian prior on with density . Then the pCN algorithm for sampling from some posterior density is given as follows. Let be an i.i.d. sequence of random vectors. For initializer , step size and , the MCMC chain is then given by

1. Proposal: ,

2. Accept–reject: Set

(3.7)

By standard Markov chain arguments one verifies (see [52] or Ch.1 in [14]) that the (unique) invariant density of equals .
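The two steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses the common parameterization p = sqrt(1 − 2β) θ + sqrt(2β) ξ with ξ ~ N(0, C) and step size β ∈ (0, 1/2] (some references write sqrt(1 − β²) and β instead), and the names `pcn_chain`, `loglik` and `C_sqrt` are hypothetical. Because this proposal is reversible with respect to the Gaussian prior, only the log-likelihood enters the accept–reject step.

```python
import numpy as np


def pcn_chain(loglik, C_sqrt, theta0, beta, n_steps, rng):
    """Sketch of preconditioned Crank-Nicolson for a posterior prop. to exp(loglik) * N(0, C).

    Assumptions (hypothetical parameterization): C_sqrt @ C_sqrt.T == C, step size
    beta in (0, 1/2], proposal p = sqrt(1 - 2 beta) * theta + sqrt(2 beta) * xi, xi ~ N(0, C).
    """
    theta = np.asarray(theta0, dtype=float)
    ll = loglik(theta)
    chain = [theta.copy()]
    n_accept = 0
    for _ in range(n_steps):
        xi = C_sqrt @ rng.standard_normal(theta.shape[0])
        prop = np.sqrt(1.0 - 2.0 * beta) * theta + np.sqrt(2.0 * beta) * xi
        ll_prop = loglik(prop)
        # The proposal is reversible w.r.t. N(0, C), so only the likelihood ratio enters.
        if np.log(rng.uniform()) < ll_prop - ll:
            theta, ll = prop, ll_prop
            n_accept += 1
        chain.append(theta.copy())
    return np.array(chain), n_accept / n_steps


# Toy usage: N(0, 1) prior, one observation Y = 1 with unit noise, so the posterior is N(0.5, 0.5).
rng = np.random.default_rng(1)
samples, acc_rate = pcn_chain(lambda t: -0.5 * (t[0] - 1.0) ** 2, np.eye(1), np.zeros(1), 0.2, 40000, rng)
```

With `loglik` identically zero every proposal is accepted and the chain reduces to an autoregressive process with stationary law N(0, C), which gives a simple sanity check.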

We now give a hitting time lower bound for the pCN algorithm which holds true in the regression setting for which the main theorems 3.2 and 3.3 (for generic Markov chains) were derived. In particular, we emphasize that the lower bounds to follow hold for the choice of regression ‘forward’ map constructed in the proofs of theorems 3.2 and 3.3. As for the general results, we treat the two cases of or separately.

Theorem 3.6. —

Let denote the pCN Markov chain from (3.7).

- (i)

Assume the setting of theorem 3.2 with , and let be as in theorem 3.2. Then there exist constants such that for any , there is an initialization point such that the hitting time (for as in (3.4)) satisfies with high probability (under the law of the data and of the Markov chain) as that .

- (ii)

Assume the setting of theorem 3.3 with for , and let be as in theorem 3.3. Then there exist constants such that if , there is an initialization point such that the hitting time satisfies with high probability that .

(ii) . Gradient-based Langevin algorithms

We now turn to gradient-based Langevin algorithms, which are based on the discretization of continuous-time diffusion processes [10,50]. A polynomial-time convergence analysis for the unadjusted Langevin algorithm in the strongly log-concave case has been given in [10,11], and in [54] for the Metropolis-adjusted case (MALA). We show here that for unimodal but not globally log-concave distributions, the MCMC scheme can take exponential time to reach the bulk of the posterior distribution. For simplicity we focus on the Metropolis-adjusted Langevin algorithm, which is defined as follows. Let be a sequence of i.i.d. variables, and let be a step size.

1. Proposal: .

2. Accept–reject: Set

(3.8)

Again, standard Markov chain arguments show that is indeed the (unique) invariant distribution of . We note here that for the forward map featuring in the results to follow, may only be well-defined (Lebesgue-) almost everywhere on due to our piecewise smooth choice of , see (4.6) below. However, since all proposal distributions involved possess a Lebesgue density, this specification almost everywhere suffices to propagate the Markov chain with probability one. Alternatively, one could avoid this technicality by smoothing the function in (4.6), which we refrain from doing for notational ease.
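The MALA steps can be sketched in the same way. The code assumes the textbook, unpreconditioned form with proposal p = θ + γ ∇log π(θ) + sqrt(2γ) ξ, ξ ~ N(0, I), and the corresponding Gaussian proposal density q in the Metropolis–Hastings ratio. The callables `logpi` and `grad_logpi` (log posterior density up to a constant, and its gradient) and the name `mala_chain` are hypothetical, and the exact parameterization of (3.8) in the paper may differ. Unlike pCN, the proposal itself depends on the data through the gradient, so the acceptance ratio has to include the forward and backward proposal densities.

```python
import numpy as np


def mala_chain(logpi, grad_logpi, theta0, gamma, n_steps, rng):
    """Sketch of the Metropolis-adjusted Langevin algorithm targeting a density prop. to exp(logpi).

    Assumed proposal: p = theta + gamma * grad_logpi(theta) + sqrt(2 gamma) * xi, xi ~ N(0, I).
    """

    def log_q(y, x):
        # Log density, up to an additive constant, of proposing y from the current state x.
        diff = y - x - gamma * grad_logpi(x)
        return -np.dot(diff, diff) / (4.0 * gamma)

    theta = np.asarray(theta0, dtype=float)
    chain = [theta.copy()]
    n_accept = 0
    for _ in range(n_steps):
        noise = rng.standard_normal(theta.shape[0])
        prop = theta + gamma * grad_logpi(theta) + np.sqrt(2.0 * gamma) * noise
        # Metropolis-Hastings log ratio for the non-symmetric Langevin proposal.
        log_ratio = logpi(prop) + log_q(theta, prop) - logpi(theta) - log_q(prop, theta)
        if np.log(rng.uniform()) < log_ratio:
            theta = prop
            n_accept += 1
        chain.append(theta.copy())
    return np.array(chain), n_accept / n_steps
```

For a standard Gaussian target, `mala_chain(lambda t: -0.5 * np.dot(t, t), lambda t: -t, np.array([3.0]), 0.5, 40000, rng)` should return samples whose empirical mean and variance are close to 0 and 1.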

Theorem 3.7. —

Let denote the MALA Markov chain from (3.8).

- (i)

Assume the setting of theorem 3.2, with prior, and let also be as in theorem 3.2. There exists some such that if the step size of satisfies , then there is an initialization point such that the hitting time (for as in (3.4)) satisfies with high probability (under the law of the data and of the Markov chain) as that .

- (ii)

Assume the setting of theorem 3.3, with a prior, and let also be as in theorem 3.3. Then there exists some constant such that whenever , there is an initialization point such that the hitting time satisfies with high probability (under the law of the data and of the Markov chain) that .

As mentioned in remark 3.5, a bound on the step size that is inversely proportional to the Lipschitz constant of is natural for algorithms like MALA that arise from discretization of a continuous-time Markov process, see e.g. [11,54]. We emphasize again that these Lipschitz constants are - and -dependent, so that the required bounds on are not unnatural. ‘Optimal’ step size prescriptions for MALA [54–57] derived for Gaussian and log-concave targets or, more generally, mean-field limits (in which the posterior distribution possesses a product or mean-field structure, unlike in the models considered here) would need to be adjusted to our model classes to be comparable.

4. Proofs of the main theorems

We begin in §4a by constructing the family of regression maps underlying our results from §3. Sections 4b,c reduce the hitting time bounds from theorems 3.2 and 3.3 (for general Markov chains) to hitting time bounds for intermediate ‘free energy barriers’ that the Markov chain needs to travel through. Subsequently, theorems 3.3 and 3.2 are proved in §4d,e, respectively. Finally, the proofs for pCN (theorem 3.6) and MALA (theorem 3.7) are contained in §4f.

(a) . Radially symmetric choices of G

We start with our parameterization of the map . In our regression model and since ,

(4.1)

We have and by subtracting a fixed function from if necessary we can also assume that . In this case, since ,

(4.2)

Take a bounded continuous function with a unique minimizer and take of the ‘radial’ form

where

The assumption implies under , so that we have

(4.3)

and the average log-likelihood is

(4.4)

Define -annuli of Euclidean space

(4.5)

We then also set, for any ,

For our main theorems the map will be monotone increasing and the preceding notation is then not necessary, but proposition 4.2 is potentially also useful in non-monotone settings (as remarked after its proof), hence the slightly more general notation here.

The choice that is radial is convenient in the proofs, but means that the model is only identifiable up to a rotation for . One could easily make it identifiable by more intricate choices of , but the main point for our negative results is that the function has a unique mode at the ground truth parameter and is identifiable there.

(i) . A locally log-concave, globally monotone choice of w

Define for and any the function as

(4.6)

where are fixed constants to be chosen. Note that is monotone increasing and

(4.7)

The function is quadratic near its minimum at the origin up until , from which point onwards it is piecewise linear. In the linear regime it initially has a ‘steep’ ascent of gradient until , then grows more slowly with small gradient from until , and from then on is constant. The function is not smooth at the points , , , but we can easily make it smooth by convolving it with a smooth function supported in small neighbourhoods of its breakpoints, without changing the findings that follow. We abstain from this to simplify notation.
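To make the shape just described concrete, the following sketch implements a hypothetical radial profile with the same qualitative features: quadratic near its unique minimizer at the origin, a steep linear ascent, a slow linear ascent, and a constant tail. The breakpoints `r1, r2, r3`, the slopes `steep, flat` and the name `w_hypothetical` are illustrative choices made here, not the constants chosen in the proofs. The drop in slope after the steep ascent is what makes such a profile non-convex, in line with the discussion in remark 4.7.

```python
import numpy as np


def w_hypothetical(r, r1=0.1, r2=0.2, r3=1.0, steep=10.0, flat=0.1):
    """Hypothetical radial profile with the qualitative shape described for (4.6).

    Quadratic on [0, r1], slope `steep` on [r1, r2], slope `flat` on [r2, r3],
    constant on [r3, inf). In this sketch the quadratic is matched to the first
    slope, so the profile is continuous and nondecreasing but has kinks at r2, r3.
    """
    r = np.asarray(r, dtype=float)
    w1 = steep * r1 / 2.0          # value of the quadratic steep * r^2 / (2 r1) at r1
    w2 = w1 + steep * (r2 - r1)    # value at the end of the steep segment
    w3 = w2 + flat * (r3 - r2)     # value of the constant tail
    return np.select(
        [r <= r1, r <= r2, r <= r3],
        [steep * r**2 / (2.0 * r1), w1 + steep * (r - r1), w2 + flat * (r - r2)],
        default=w3,
    )
```

Evaluating it on a grid, e.g. `w_hypothetical(np.linspace(0.0, 2.0, 201))`, shows the spike near the origin followed by the flat plateau.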

The following proposition summarizes some monotonicity properties of the empirical log-likelihood function arising from the above choice of .

Proposition 4.1. —

Let be as in (4.6). Then there exists such that for any and , we have

In particular, if is such that as then the r.h.s. is .

Proof. —

Recalling (4.4), (4.3) and since is monotonically increasing, we bound

using Chebyshev’s inequality in the last step. Since the events in the penultimate step do not depend on , the result follows.

(b) . Bounds for posterior ratios of annuli

A key step in the proofs to follow will be to obtain asymptotic bounds for the following functional (recalling the definition of the Euclidean annuli from (4.5)),

(4.8)

in terms of the map . As a side note, we remark that this functional has a long history in the statistical physics of glasses, in which it is often referred to as the Franz–Parisi potential [7,58].

Proposition 4.2. —

Consider the regression model (3.1) with radially symmetric choice of from §4a such that for some fixed (independent of ), and let denote a sequence of prior probability measures on .

- (i)

- (ii)

If in addition is monotone increasing on and if for some , then the posterior distribution also satisfies (with high probability as ) that

(4.11)

(4.12)

Remark 4.3 (The prior condition for w from (4.6)). —

If , for from (4.6), the ‘likelihood’ term in proposition 4.2 is

(4.13)

so that if we also assume

(4.14)

to control in the proof that follows, then to verify (4.9) it suffices to check

(4.15)

for all large enough .

Proof. —

Proof of part (i). From the definition of in (4.3) we first note that for all ,

and

We can now further bound, for our ,

and

We estimate , and noting that

we can use Chebyshev’s (or Bernstein’s) inequality to construct an event of high probability such that the functional from (4.8) is bounded as

(4.16)

and

(4.17)

where

(4.18)

and this is uniform in all since is bounded. Using the above with chosen as and respectively, we then obtain

(4.19)

with high -probability. The result now follows from the hypothesis (4.9) and since the terms are .

Proof of part (ii). This follows from an obvious modification of the previous arguments.

In the case where and are comparable (so that the l.h.s. in (4.9) converges to zero), a local optimum at in the function away from zero can verify the last inequality for ‘intermediate’ such that . This can be used to give computational hardness results for MCMC of multi-modal distributions. But we are interested in the more challenging case of ‘unimodal’ examples from (4.6). Before we turn to this, let us point out what can be said about the hitting times of Markov chains if the conclusion (4.10) of proposition 4.2 holds.

(c) . Bounds for Markov chain hitting times

(i) . Hitting time bounds for intermediate sets

In (4.10), we can think of as the ‘initialization region’ (further away from ) and for intermediate is the ‘barrier’ before we get close to . The last bound permits the following classic hitting time argument, taken from Ben Arous et al. [5], see also [8].

Proposition 4.4. —

Consider any Markov chain with invariant measure for which (4.10) holds. For constants , suppose is started in , , drawn from the conditional distribution , and denote by the hitting time of the Markov chain onto , that is, the number of iterates required until visits the set . Then

Similarly, on the event where (4.12) holds we have that

Proof of proposition 4.4. —

We have

The second claim is proved analogously.

The last proposition holds ‘on average’ for initializers , and since where is the law of the Markov chain started at , the hitting time inequality holds for at least one point in since .
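The mechanism behind proposition 4.4 can be checked on a toy example. In its standard form the argument reads: if a chain with invariant law π is started from π conditioned on a set A disjoint from B, then a union bound over the first K steps and stationarity give P(τ_B ≤ K) ≤ π(A)^{-1} Σ_{k=1}^{K} P_π(X_k ∈ B) = K π(B)/π(A). The sketch below uses a hypothetical nearest-neighbour Metropolis chain on {0, ..., 59} with an energetic barrier (a one-dimensional toy, not one of the regression models of §4). It computes the left-hand side exactly by making B absorbing and compares it with the bound.

```python
import numpy as np


def metropolis_kernel(pi):
    """Transition matrix of nearest-neighbour Metropolis on {0, ..., n-1} with invariant law pi."""
    n = len(pi)
    P = np.zeros((n, n))
    for x in range(n):
        for y in (x - 1, x + 1):
            if 0 <= y < n:
                # Propose each neighbour with prob. 1/2, accept with prob. min(1, pi[y] / pi[x]).
                P[x, y] = 0.5 * min(1.0, pi[y] / pi[x])
        P[x, x] = 1.0 - P[x].sum()  # rejected or out-of-range proposals stay put
    return P


def hitting_prob(P, start, B, K):
    """P(tau_B <= K) for the chain with kernel P and initial law `start` (no mass on B)."""
    Q = P.copy()
    Q[B, :] = 0.0
    Q[B, B] = 1.0  # make every state of B absorbing
    dist = start @ np.linalg.matrix_power(Q, K)
    return dist[B].sum()


# Toy target: flat on A, a low-probability barrier B, most of the mass beyond B.
n = 60
x = np.arange(n)
pi = np.exp(np.where(x < 20, 0.0, np.where(x < 30, -8.0, 3.0)))
pi /= pi.sum()
A, B = np.arange(0, 10), np.arange(20, 30)
P = metropolis_kernel(pi)
start = np.zeros(n)
start[A] = pi[A] / pi[A].sum()  # pi conditioned on A
for K in (1, 10, 100, 1000):
    print(K, hitting_prob(P, start, B, K), K * pi[B].sum() / pi[A].sum())
```

The bound only uses invariance of π, which is why the argument applies to any Markov chain and not just to reversible ones such as this toy.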

(ii) . Reducing hitting times for to ones for

We now reduce part (iv) of theorems 3.2 and 3.3, i.e. bounds on the hitting time of the region in which the posterior contracts, to a bound for the hitting time for the annulus , which is controlled in proposition 4.4. To this end, in the case of theorem 3.2, we suppose that propositions 4.2 and 4.4 are verified with some and as in the theorem, and in the case of theorem 3.3, we assume the same with choice and given after (4.27) below. For from assumption 3.1, define the events

We can then estimate, using assumption 3.1, that on the frequentist event on which proposition 4.4 holds (which we apply with ), under the probability law of the Markov chain we have

where in the second inequality we have used that on the events , the Markov chain , when started in , needs to pass through in order to reach .

(d) . Proof of theorem 3.3

In this section, we use the results derived in the previous parts of §4 to finish the proof of theorem 3.3. Parts (i) and (ii) of the theorem follow from proposition 4.1 and our choice of in (4.6). We therefore concentrate on the proofs of parts (iii) and (iv). We start by proving a key lemma on small ball estimates for truncated -regular Gaussian priors.

(i) . Small ball estimates for -regular priors

Let us first define precisely the notion of -regular Gaussian priors. For some fixed , the prior arises as the truncated law of an -regular Gaussian process with RKHS , a Sobolev space over some bounded domain/manifold , see e.g. section 6.2.1 in [14] for details. Equivalently (under the Parseval isometry) we take a Gaussian Borel measure on the usual sequence space with RKHS equal to

The prior is the truncated law of .
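The sequence-space description makes the truncated prior easy to sample coordinate by coordinate. The sketch below assumes, as is standard for Sobolev-type RKHSs over a bounded d-dimensional domain, that the RKHS norm is equivalent to Σ_k k^{2α/d} θ_k², so that the k-th prior coordinate is N(0, k^{-2α/d}). The exact weights and any rescaling (cf. remark 4.6) used in the paper may differ, and the function names are hypothetical.

```python
import numpy as np


def sample_truncated_prior(D, alpha, d, n_samples, rng):
    """Draw n_samples from the first D coordinates of an (assumed) alpha-regular Gaussian sequence prior.

    Coordinates are independent N(0, k^(-2 alpha / d)), k = 1, ..., D.
    """
    k = np.arange(1, D + 1, dtype=float)
    sd = k ** (-alpha / d)
    return rng.standard_normal((n_samples, D)) * sd


def rkhs_norm_sq(theta, alpha, d):
    """Assumed squared RKHS norm sum_k k^(2 alpha / d) theta_k^2, computed row-wise."""
    k = np.arange(1, theta.shape[-1] + 1, dtype=float)
    return np.sum(k ** (2.0 * alpha / d) * theta**2, axis=-1)
```

Under this assumption each prior draw has squared RKHS norm close to D on average, reflecting the familiar fact that Gaussian process draws are rougher than the elements of their RKHS.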

Lemma 4.5. —

Fix , and , and set

Then if , there exist constants (depending on ) such that for all () large enough:

(4.20)

Proof of lemma 4.5. —

Note first that the -covering numbers of the ball of radius in satisfy the well-known two-sided estimate

(4.21)

for equivalence constants in depending only on . The upper bound is given in proposition 6.1.1 in [14], and a lower bound can also be found in the literature [59] (by injecting into for some strict sub-domain , and using metric entropy lower bounds for the injection ).

Using the results about small deviation asymptotics for Gaussian measures in Banach spaces [60] (specifically theorem 6.2.1 in [14] with ), and assuming , we see that the concentration function of the ‘untruncated prior’ satisfies the two-sided estimate

(4.22)

Here, restricting to , the two-sided equivalence constants depend only on . Setting

(4.23)

and noting that , we obtain that for some constants ,

(4.24)

We now show that as long as , one may use the above asymptotics to derive the desired small ball probabilities for the projected prior on .

We obviously have, by set inclusion and projection,

and hence it only remains to show the first inequality in equation (4.20). The Gaussian isoperimetric theorem (theorem 2.6.12 in [61]) and (4.24) imply that for and some , we have that (with denoting the c.d.f. for )

(see also the proof of lemma 5.17 in [19] for a similar calculation). Then if the event in the last probability is denoted by we have

On , if and by the usual tail estimate for vectors in , we have for some the bound

so that for any ,

and hence the lemma follows by appropriately choosing .

Remark 4.6. —

For statistical consistency proofs in nonlinear inverse problems, often rescaled Gaussian priors are used to provide additional regularization [12,13,19]. For these priors a computation analogous to the previous lemma is valid: specifically if we rescale by , where so that , then we just take in the above small ball computation, that is or , and the same bounds (as well as the proof to follow) apply.

(ii) . Proof of theorem 3.3, part (iv)

Lemma 4.5 and the hypotheses on immediately imply

To lower bound , we choose large enough such that

which implies for all large enough that

(4.25)

Now, for from (4.6), we set

(4.26)

for to be chosen and , , fixed constants, so that is bounded (uniformly in ) by a constant which depends only on , , , whence (4.14) holds. Now the key inequality (4.15) with and with our choice of , , will be satisfied if

(4.27)

We define to be equal to of the l.h.s., so that (4.27) will follow for the given by choosing large enough and whenever is large enough.

Finally, let us note that with for some , where is the infinite Gaussian vector with RKHS , we can deduce from theorem 2.1.20 and exercise 2.1.5 in [61] that

Thus, using also (4.25), choosing large enough verifies (4.11). Since (4.25) and the a.s. boundedness of for from (4.3) imply that a.s., proposition 4.2 and then also proposition 4.4 apply for this prior, and the arguments from §4c(ii) yield the desired result.

(iii) . Proof of theorem 3.3, part (iii)

We finish the proof of the theorem by showing part (iii). We use the setting and choices from the previous section. Let us write for any measurable set . Recall the notation . Repeating the argument leading to (4.17) with in place of , and using lemma 4.5, we have with high probability

where . Likewise, we also have

where . We can assume that . Hence, since in view of lemma 4.5,

(4.28)

Now, for fixed we can choose large enough such that the last quantity exceeds with high probability (in particular this retrospectively justifies the last as then for our choice of ). Therefore, again with high probability

(4.29)

For this further implies that with high probability

and then,

again with high probability, which is what we wanted to show.

Remark 4.7. —

If the map is globally convex, say for all , then a ‘large enough’ choice of after (4.27) is not possible. It is here that global log-concavity of the likelihood function helps, as it enforces a certain ‘uniform’ spread of the posterior across its support via a global coercivity constant . By contrast, the above example of is not convex; rather, it is very spiked on and then ‘flattens out’.

(e) . Proof of theorem 3.2

The proof of theorem 3.2 proceeds along the same lines as that of theorem 3.3, with scaling constant in , corresponding to in , and replacing the volumetric lemma 4.5 by the following basic result.

Lemma 4.8. —

Let . Let . Then for all large enough,

(4.30)

A proof of (4.30) is sketched in appendix B. As a consequence of the previous lemma

Moreover, to lower bound , we choose . Then, using theorem 2.5.7 in [61] as well as , and then also (4.30) with , we obtain that

for some fixed constant given by (4.30), whence and also . Therefore, the key inequality (4.15) with , holds whenever we choose small enough such that

The rest of the detailed derivations follow the same pattern as in the proof of theorem 3.3 and are left to the reader, including verification of (4.11) via an application of theorem 2.5.7 in [61]. In particular, the proof of part (iii) follows the same arguments (suppressing the scaling everywhere) as in theorem 3.3.

(f) . Proofs for §3b

In this section, we prove the results of §3b which detail the consequences of the general theorems 3.2 and 3.3 for practical MCMC algorithms.

(i) . Proofs for pCN

Theorem 3.6 is proved by verifying assumption 3.1 for suitable choices of and , and for .

Lemma 4.9. —

Let denote the transition kernel of pCN from (3.7) with parameter .

- (i)

Suppose as in theorem 3.2, and let . Then for all and all , we have (with -probability 1)

- (ii)

Suppose as in theorem 3.3, and let . There exists some such that for all and all , we have (with -probability 1)

Proof of lemma 4.9. —

We begin with the proof of part (ii). Let . Then using the definition of pCN and that for any (Taylor expanding around ), we obtain that for any ,

The variables are equal in law to a vector with components for iid and hence for . Then, for with some sufficiently small (noting that then also ), it holds that

(4.31)

using, e.g., theorem 2.5.8 in [61] (and representing the -norm by duality as a supremum). This completes the proof of part (ii).

The proof of part (i) is similar, albeit simpler, whence we leave some details to the reader. Arguing similarly as before, we obtain that for any ,

where is a random variable. The latter probability is bounded by a standard deviation inequality for Gaussians, see, e.g. theorem 2.5.7 in [61]. Indeed, noting that , and that the one-dimensional variances satisfy for any , we obtain

Proof of theorem 3.6. —

We begin with part (ii). Let be as in theorem 3.3 and set as well as , where is as in theorem 3.3. With those choices, lemma 4.9 (ii) implies that assumption 3.1 is fulfilled with , so long as satisfies

Hence, the desired result immediately follows from an application of theorem 3.3 (iv).

Part (i) of theorem 3.6 similarly follows from verifying assumption 3.1 with , from theorem 3.2, and for small enough (with determined by lemma 4.9 (i)), and subsequently applying theorem 3.2 (iv).

(ii) . Proofs for MALA

Theorem 3.7 is proved by verifying the hypotheses of theorems 3.2 and 3.3, respectively. A key difference between pCN and MALA is that the proposal kernels for MALA, not just its acceptance probabilities, depend on the data itself. Again, we begin by examining part (ii) which regards priors.

Proof of theorem 3.7, part (ii). —

We begin by deriving a bound for the gradient . For Lebesgue-a.e. , recalling that , we have that

and

For any , recalling the choices for in (4.26) we see that

(4.32)

where, to bound the second and third terms, we used that is bounded away from zero uniformly in on .

Combining the above and using Chebyshev’s inequality, it follows that

Thus, the event

for some large enough , has probability as . We also verify that

(4.33)

so that with (for as in theorem 3.3) and recalling that , we obtain

Now, let also be as in theorem 3.3 and set (note that this is a permissible choice in theorem 3.3). Furthermore, for a small enough constant , let . Then since , we also have that

(4.34)

Hence, on the event and whenever ,

Using this, (4.34) and choosing small enough, conditional on the event the probability under the Markov chain satisfies

where the last inequality is proved as in (4.31) above, using theorem 2.5.8 in [61]. Thus, assumption 3.1 is satisfied with and the proof is complete.

Proof of theorem 3.7, part (i). —

The proof of part (i) proceeds along the same lines, except that (4.32) and (4.33) are replaced with the bound

for some constant independent of , as well as the bound

Then letting and be as in theorem 3.2, and fixing an arbitrary , the above implies that for sufficiently small constant and for any , it holds that

Thus, choosing small enough and arguing exactly as in the last step of the proof of theorem 3.6, part (i), assumption 3.1 is satisfied with and the proof is complete.

Acknowledgements

R.N. would like to thank the Forschungsinstitut für Mathematik (FIM) at ETH Zürich for their hospitality during a sabbatical visit in spring 2022 where this research was initiated.

Appendix A. Proofs of §2

Proof of corollary 2.4. —

We fix and place ourselves under the event of proposition 2.3, and we denote and . We can decompose, since :

Moreover, . Using proposition 2.3, for we have . Therefore, , which ends the proof.

Proof. —

The rest of this section is devoted to proving proposition 2.3. We use a uniform bound on the injective norm of Gaussian tensors:

Lemma A.1 —

For all there exists a constant , such that:

(A 1)

This lemma is a very crude version of much finer results: in particular, the exact value of the constant such that (w.h.p.) was first computed non-rigorously in [62] and proven in full generality in [63] (see also the discussions in [33,34]). In the rest of this proof, we condition on the event in equation (A 1). For any , we have for :

(A 2)

We upper bound . To lower bound , we use the elementary fact (which is easy to prove using spherical coordinates):

(A 3)

in which is the incomplete beta function, and for odd and for even . It then follows from elementary analysis (cf. e.g. [34]) that

(A 4)

uniformly in . Coming back to equation (A 2), this implies that we have, for any :

(A 5)

Let . It is then elementary to see that it is possible to construct with , and such that the right-hand side of equation (A 5) becomes smaller than as .

Appendix B. Small ball estimates for isotropic Gaussians

Let . In this section, we prove equation (4.30); more precisely, we show:

Lemma B.1. —

Let . Then for all large enough, one has for all :

(B 1)

Proof of lemma B.1. —

Let , so that reaches its maximum in , with . By decomposition into spherical coordinates and isotropy of the Gaussian measure, one has directly:

(B 2)

Recall that , so one easily obtains:

(B 3)

In particular, one has for all large enough (not depending on ):

(B 4)

Since is increasing on , we have for large enough :

(B 5)

(B 6)

Since , let be large enough that . Then for all , one has . Plugging this into the inequality above, we obtain that for all :

(B 7)
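The exponential-in-dimension size of such small-ball probabilities can be checked numerically for an isotropic Gaussian. Purely for illustration, the sketch assumes Z ~ N(0, I_D/D) (this normalization and the function names are assumptions, not the exact setting of lemma B.1). Then D‖Z‖² has a χ²_D law, and Cramér's theorem gives D^{-1} log P(‖Z‖ ≤ r) → −(r² − 1 − 2 log r)/2 for fixed r ∈ (0, 1). The χ² distribution function is evaluated through the standard power series of the regularized lower incomplete gamma function, so only the standard library is needed.

```python
import math


def log_chi2_cdf(x, df):
    """log P(chi^2_df <= x) for x > 0, via the series of the regularized lower incomplete gamma.

    P(a, y) = y^a e^{-y} / Gamma(a + 1) * sum_n y^n / ((a + 1)...(a + n)), with a = df/2, y = x/2.
    Fast and accurate when x is not much larger than df (the small-ball regime).
    """
    a, y = 0.5 * df, 0.5 * x
    term, total, n = 1.0, 1.0, 0
    while term > 1e-17 * total:
        n += 1
        term *= y / (a + n)
        total += term
    return a * math.log(y) - y - math.lgamma(a + 1.0) + math.log(total)


def small_ball_rate(r, D):
    """(1/D) log P(||Z|| <= r) for Z ~ N(0, I_D / D), using D ||Z||^2 ~ chi^2_D."""
    return log_chi2_cdf(D * r * r, D) / D


def cramer_rate(r):
    """Large-deviation rate (r^2 - 1 - 2 log r) / 2 of ||Z||^2 at level r^2 < 1."""
    return 0.5 * (r * r - 1.0 - 2.0 * math.log(r))


for D in (10, 100, 1000):
    print(D, small_ball_rate(0.5, D), -cramer_rate(0.5))
```

The printed normalized log-probabilities approach the limiting rate as D grows, with a correction of order log(D)/D coming from the polynomial prefactor.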

Footnotes

As is classical in statistical physics, we call free entropy the negative of the free energy.

In a physical system, these regions would correspond respectively to a region including a meta-stable state, a region including the globally stable state and a free energy barrier.

Note that we assume here that the statistician has access to the distribution (and in particular to ), a setting sometimes called Bayes-optimal in the literature.

Often the term will be exponentially small, but we will not require such a strong control.

As we will detail in the following sections, see assumption 3.1, the statements remain true if the change is allowed to be higher than the required maximum with exponentially small probability.

Data accessibility

This article has no additional data.

Authors' contributions

R.N.: conceptualization, writing—original draft; A.S.B.: conceptualization, writing—original draft; A.M.: conceptualization, writing—original draft; S.W.: conceptualization, writing—original draft.

All authors gave final approval for publication and agreed to be held accountable for the work performed therein.

Conflict of interest declaration

We declare we have no competing interests.

Funding

RN was supported by the EPSRC programme grant on Mathematics of Deep Learning, project: EP/V026259.

References

- 1. Anderson PW. 1989. Spin glass VI: spin glass as cornucopia. Phys. Today 42, 9.

- 2. Mézard M, Montanari A. 2009. Information, physics, and computation. Oxford, UK: Oxford University Press.

- 3. Zdeborová L, Krzakala F. 2016. Statistical physics of inference: thresholds and algorithms. Adv. Phys. 65, 453-552.

- 4. Ben Arous G, Gheissari R, Jagannath A. 2020. Algorithmic thresholds for tensor PCA. Ann. Probab. 48, 2052-2087. (doi:10.1214/19-AOP1415)

- 5. Ben Arous G, Wein AS, Zadik I. 2020. Free energy wells and overlap gap property in sparse PCA. In Conf. on Learning Theory, Graz, Austria, 9–12 July 2020, pp. 479–482. PMLR.

- 6. Gibbs JW. 1873. A method of geometrical representation of the thermodynamic properties of substances by means of surfaces. Trans. Conn. Acad. Arts Sci. 2, 382–404.

- 7. Bandeira AS, Alaoui AE, Hopkins SB, Schramm T, Wein AS, Zadik I. 2022. The Franz-Parisi criterion and computational trade-offs in high dimensional statistics. In Advances in Neural Information Processing Systems (eds AH Oh, A Agarwal, D Belgrave, K Cho). See https://openreview.net/forum?id=mzze3bubjk.

- 8. Jerrum M. 2003. Counting, sampling and integrating: algorithms and complexity. Lectures in Mathematics ETH Zürich. Basel, Switzerland: Birkhäuser Verlag.

- 9. Kunisky D, Wein AS, Bandeira AS. 2022. Notes on computational hardness of hypothesis testing: predictions using the low-degree likelihood ratio. In ISAAC Congress (International Society for Analysis, its Applications and Computation), Aveiro, Portugal, 29 July–2 Aug 2019, pp. 1–50. Springer.

- 10. Dalalyan AS. 2017. Theoretical guarantees for approximate sampling from smooth and log-concave densities. J. R. Stat. Soc. Ser. B Stat. Methodol. 79, 651-676. (doi:10.1111/rssb.12183)

- 11. Durmus A, Moulines E. 2019. High-dimensional Bayesian inference via the unadjusted Langevin algorithm. Bernoulli 25, 2854-2882. (doi:10.3150/18-BEJ1073)

- 12. Nickl R, Wang S. 2020. On polynomial-time computation of high-dimensional posterior measures by Langevin-type algorithms. J. Eur. Math. Soc.

- 13. Bohr J, Nickl R. 2021. On log-concave approximations of high-dimensional posterior measures and stability properties in non-linear inverse problems. (http://arxiv.org/abs/2105.07835)

- 14. Nickl R. 2022. Bayesian non-linear statistical inverse problems. ETH Zurich Lecture Notes.

- 15. Altmeyer R. 2022. Polynomial time guarantees for sampling based posterior inference in high-dimensional generalised linear models. (http://arxiv.org/abs/2208.13296)

- 16. Nickl R. 2020. Bernstein–von Mises theorems for statistical inverse problems I: Schrödinger equation. J. Eur. Math. Soc. 22, 2697-2750. (doi:10.4171/JEMS/975)

- 17. Monard F, Nickl R, Paternain GP. 2019. Efficient nonparametric Bayesian inference for X-ray transforms. Ann. Stat. 47, 1113-1147. (doi:10.1214/18-AOS1708)

- 18. Monard F, Nickl R, Paternain GP. 2021. Statistical guarantees for Bayesian uncertainty quantification in nonlinear inverse problems with Gaussian process priors. Ann. Stat. 49, 3255-3298. (doi:10.1214/21-AOS2082)

- 19. Monard F, Nickl R, Paternain GP. 2021. Consistent inversion of noisy non-Abelian X-ray transforms. Commun. Pure Appl. Math. 74, 1045-1099. (doi:10.1002/cpa.21942)

- 20. Stuart AM. 2010. Inverse problems: a Bayesian perspective. Acta Numer. 19, 451-559. (doi:10.1017/S0962492910000061)

- 21. Fearnhead P, Bierkens J, Pollock M, Roberts GO. 2018. Piecewise deterministic Markov processes for continuous-time Monte Carlo. Stat. Sci. 33, 386-412. (doi:10.1214/18-STS648)

- 22. Bouchard-Côté A, Vollmer SJ, Doucet A. 2018. The bouncy particle sampler: a nonreversible rejection-free Markov chain Monte Carlo method. J. Am. Stat. Assoc. 113, 855-867.

- 23. Bierkens J, Grazzi S, Kamatani K, Roberts G. 2020. The boomerang sampler. In Int. Conf. on Machine Learning, Online, 12–18 July 2020, pp. 908–918. PMLR.

- 24. Wu C, Robert CP. 2020. Coordinate sampler: a non-reversible Gibbs-like MCMC sampler. Stat. Comput. 30, 721-730. (doi:10.1007/s11222-019-09913-w)

- 25. Scalliet C, Guiselin B, Berthier L. 2022. Thirty milliseconds in the life of a supercooled liquid. Phys. Rev. X 12, 041028. (doi:10.1103/PhysRevX.12.041028)

- 26. Grigera TS, Parisi G. 2001. Fast Monte Carlo algorithm for supercooled soft spheres. Phys. Rev. E 63, 045102. (doi:10.1103/PhysRevE.63.045102)

- 27. Jerrum M. 1992. Large cliques elude the Metropolis process. Random Struct. Algorithms 3, 347-359. (doi:10.1002/rsa.3240030402)

- 28. Gamarnik D, Zadik I. 2019. The landscape of the planted clique problem: dense subgraphs and the overlap gap property. (http://arxiv.org/abs/1904.07174).

- 29. Angelini MC, Fachin P, de Feo S. 2021. Mismatching as a tool to enhance algorithmic performances of Monte Carlo methods for the planted clique model. J. Stat. Mech: Theory Exp. 2021, 113406. (doi:10.1088/1742-5468/ac3657)

- 30. Chen Z, Mossel E, Zadik I. 2023. Almost-linear planted cliques elude the metropolis process. In Proceedings of the 2023 Annual ACM-SIAM Symposium on Discrete Algorithms (SODA), Florence, Italy, 22–25 January 2023, pp. 4504–4539. Philadelphia, PA: SIAM. (doi:10.1137/1.9781611977554.ch171)

- 31. Hukushima K, Nemoto K. 1996. Exchange Monte Carlo method and application to spin glass simulations. J. Phys. Soc. Jpn. 65, 1604-1608. (doi:10.1143/JPSJ.65.1604)

- 32. Angelini MC. 2018. Parallel tempering for the planted clique problem. J. Stat. Mech: Theory Exp. 2018, 073404.

- 33. Richard E, Montanari A. 2014. A statistical model for tensor PCA. In Advances in neural information processing systems 27, Montreal, Canada, 8–13 Dec 2014. Red Hook, NY: Curran Associates.

- 34. Perry A, Wein AS, Bandeira AS. 2020. Statistical limits of spiked tensor models. Annales de l’Institut Henri Poincaré, Probabilités et Statistiques 56, 230–264.

- 35. Lesieur T, Miolane L, Lelarge M, Krzakala F, Zdeborová L. 2017. Statistical and computational phase transitions in spiked tensor estimation. In 2017 IEEE Int. Symposium on Information Theory (ISIT), Aachen, Germany, 25–30 June 2017, pp. 511–515. New York, NY: IEEE.

- 36. Jagannath A, Lopatto P, Miolane L. 2020. Statistical thresholds for tensor PCA. Ann. Appl. Probab. 30, 1910-1933. (doi:10.1214/19-AAP1547)

- 37.Baik J, Ben Arous G, Péché S. 2005. Phase transition of the largest eigenvalue for nonnull complex sample covariance matrices. Ann. Appl. Probab. 33, 1643-1697. [Google Scholar]

- 38.Wein AS, El Alaoui A, Moore C. 2019. The Kikuchi hierarchy and tensor PCA. In 2019 IEEE 60th Annual Symposium on Foundations of Computer Science (FOCS), Baltimore, MA, 9–12 November 2019, pp. 1446–1468. New York, NY: IEEE.

- 39.Hopkins SB, Shi J, Steurer D. 2015. Tensor principal component analysis via sum-of-square proofs. In Conf. on Learning Theory, Paris, France, 3–6 July 2015, pp. 956–1006. PMLR.

- 40.Hopkins SB, Schramm T, Shi J, Steurer D. 2016. Fast spectral algorithms from sum-of-squares proofs: tensor decomposition and planted sparse vectors. In Proc. of the Forty-Eighth Annual ACM Symposium on Theory of Computing, Cambridge, MA, 19–21 June 2016, pp. 178–191. New York, NY: ACM.

- 41.Kim C, Bandeira AS, Goemans MX. 2017. Community detection in hypergraphs, spiked tensor models, and sum-of-squares. In 2017 International Conference on Sampling Theory and Applications (SampTA), Bordeaux, France, 8–12 July 2017, pp. 124–128. New York, NY: IEEE.

- 42.Sarao Mannelli S, Biroli G, Cammarota C, Krzakala F, Zdeborová L. 2019. Who is afraid of big bad minima? Analysis of gradient-flow in spiked matrix-tensor models. In Advances in neural information processing systems 32, Vancouver, Canada, 8–14 Dec 2019. Red Hook, NY: Curran Associates.

- 43.Sarao Mannelli S, Krzakala F, Urbani P, Zdeborová L. 2019. Passed & spurious: descent algorithms and local minima in spiked matrix-tensor models. In International Conference on Machine Learning, pp. 4333–4342. PMLR.

- 44.Biroli G, Cammarota C, Ricci-Tersenghi F. 2020. How to iron out rough landscapes and get optimal performances: averaged gradient descent and its application to tensor PCA. J. Phys. A: Math. Theor. 53, 174003. (doi:10.1088/1751-8121/ab7b1f)

- 45.Ben Arous G, Gheissari R, Jagannath A. 2020. Bounding flows for spherical spin glass dynamics. Commun. Math. Phys. 373, 1011–1048. (doi:10.1007/s00220-019-03649-4)

- 46.Ben Arous G, Gheissari R, Jagannath A. 2021. Online stochastic gradient descent on non-convex losses from high-dimensional inference. J. Mach. Learn. Res. 22, paper no. 106.

- 47.Rasmussen CE, Williams CKI. 2006. Gaussian processes for machine learning. Adaptive Computation and Machine Learning. Cambridge, MA: MIT Press.

- 48.Ghosal S, van der Vaart AW. 2017. Fundamentals of nonparametric Bayesian inference. New York, NY: Cambridge University Press.

- 49.van der Vaart A, van Zanten JH. 2008. Rates of contraction of posterior distributions based on Gaussian process priors. Ann. Stat. 36, 1435–1463. (doi:10.1214/009053607000000613)

- 50.Cotter SL, Roberts GO, Stuart AM, White D. 2013. MCMC methods for functions: modifying old algorithms to make them faster. Stat. Sci. 28, 424–446. (doi:10.1214/13-STS421)

- 51.Beskos A, Girolami M, Lan S, Farrell PE, Stuart AM. 2017. Geometric MCMC for infinite-dimensional inverse problems. J. Comput. Phys. 335, 327–351. (doi:10.1016/j.jcp.2016.12.041)

- 52.Hairer M, Stuart AM, Vollmer SJ. 2014. Spectral gaps for a Metropolis–Hastings algorithm in infinite dimensions. Ann. Appl. Probab. 24, 2455–2490. (doi:10.1214/13-AAP982)

- 53.Hairer M, Mattingly J, Scheutzow M. 2011. Asymptotic coupling and a general form of Harris’ theorem with applications to stochastic delay equations. Probab. Theory Relat. Fields 149, 223–259. (doi:10.1007/s00440-009-0250-6)

- 54.Chewi S, Lu C, Ahn K, Cheng X, Le Gouic T, Rigollet P. 2021. Optimal dimension dependence of the Metropolis-Adjusted Langevin Algorithm. In Conference on Learning Theory, Boulder, CO, 15–19 August 2021. PMLR.

- 55.Roberts GO, Rosenthal JS. 2001. Optimal scaling for various Metropolis–Hastings algorithms. Stat. Sci. 16, 351–367. (doi:10.1214/ss/1015346320)

- 56.Breyer LA, Piccioni M, Scarlatti S. 2004. Optimal scaling of MaLa for nonlinear regression. Ann. Appl. Probab. 14, 1479–1505. (doi:10.1214/105051604000000369)

- 57.Mattingly JC, Pillai NS, Stuart AM. 2012. Diffusion limits of the random walk Metropolis algorithm in high dimensions. Ann. Appl. Probab. 22, 881–930. (doi:10.1214/10-AAP754)

- 58.Franz S, Parisi G. 1995. Recipes for metastable states in spin glasses. J. Phys. I 5, 1401–1415.

- 59.Edmunds DE, Triebel H. 1996. Function spaces, entropy numbers, differential operators. Cambridge Tracts in Mathematics, vol. 120. Cambridge, UK: Cambridge University Press.

- 60.Li WV, Linde W. 1999. Approximation, metric entropy and small ball estimates for Gaussian measures. Ann. Probab. 27, 1556–1578. (doi:10.1214/aop/1022677459)

- 61.Giné E, Nickl R. 2016. Mathematical foundations of infinite-dimensional statistical models. Cambridge Series in Statistical and Probabilistic Mathematics. New York, NY: Cambridge University Press.

- 62.Crisanti A, Sommers HJ. 1992. The spherical p-spin interaction spin glass model: the statics. Zeitschrift für Physik B Condensed Matter 87, 341–354. (doi:10.1007/BF01309287)

- 63.Subag E. 2017. The complexity of spherical p-spin models – a second moment approach. Ann. Probab. 45, 3385–3450. (doi:10.1214/16-AOP1139)

Data Availability Statement

This article has no additional data.