Abstract

Purpose

Systematic reviews and meta-analyses (SRMAs) are used to generate evidence-based guidelines. Although the number of published SRMAs has increased dramatically in the last decade, the training and experience of the researchers performing them are usually not described in the SRMAs’ methodology, and this may be a source of bias. Although some studies have pointed out the need for quality control of SRMAs and training in proper statistical methods, to the best of our knowledge, no study has reported the importance of training the researchers who conduct SRMAs. The aim of this study is to describe a training program designed to impart the essential knowledge and skills required for the conduct of an SRMA and to assess the need for, and outcome of, such training.

Materials and Methods

Researchers were trained in the use of Scopus, study eligibility, assessment of the quality of evidence (QoE) through the Cambridge Quality Checklist for observational studies, as well as the Cochrane Risk of Bias tool, the Consolidated Standards of Reporting Trials (CONSORT) guidelines, and the Jadad score for randomized controlled trials (RCTs), Population, Intervention, Comparison, Outcome (PICO) questions, and data extraction. A total of 35 of them were approved to join a planned SRMA. At the end of the SRMA, they were administered 43 multiple-choice questions (MCQs) on demographics, motivation for participation in the SRMA, self-perceived change in knowledge before and after conducting the SRMA, and self-assessment of performance. The senior researchers then revised the spreadsheet of the SRMA and, based on the mistakes found, organized a training session focused on the correct assessment of study design, which was attended by 43 researchers (8 of whom had joined midway) and 11 trainees with no experience in conducting SRMAs. All of them were tested with a 5-MCQ assessment administered before and after the training. Those scoring poorly were re-trained and re-tested, and only those scoring satisfactorily were admitted to further SRMAs.

Results

Of the participants, 54.3% were medical doctors (MD), 31.4% were urologists, and 48.6% had previous experience with SRMAs. Joining an international collaborative study was the most frequently chosen motivation (19.7% of responses). The results of the self-perceived change in knowledge showed a significant improvement in the use of Scopus, checklists for QoE, PICO questions, data required to perform a meta-analysis, and critical reading of scientific articles. Also, the majority of the researchers ranked the quality of their work as high. The pre-test results of the 5-MCQ assessment showed a low score among the researchers, which was not different from that achieved by a group of fresh trainees (median, 2; IQR, 1–3 vs. median, 1; IQR, 1–2; p=0.3). Post-training, there was significant improvement in both groups (researchers: median, 4; IQR, 3–5 vs. median, 2; IQR, 1–3; p<0.001; trainees: median, 4; IQR, 3–4 vs. median, 1; IQR, 1–2; p=0.02). Out of the 44 researchers, 12 (27.3%) scored poorly (≤3). After re-training, all of them scored satisfactorily (>3) and were admitted to subsequent SRMAs.

Conclusions

At the end of our model, 100% of researchers participating in this study were validated to be included in a meta-analysis. This validation required the involvement of the management team (MT), two meetings, a self-evaluation survey, and one or two sets of objective tests with explanations and corrections. Our results indicate that even well-trained clinicians are naïve when it comes to the methodology of SRMAs. All researchers performing an SRMA need comprehensive training that covers each aspect of the SRMA methodology. This paper provides a replicable training program that could be used by other investigators to train researchers to perform high-quality SRMAs.

Keywords: Meta-analysis, Research, Systematic review, Teaching, Training

INTRODUCTION

Evidence-based medical practice requires reliable information on the etiology, pathogenesis, risk factors, diagnosis, and management of various medical conditions. Systematic reviews and meta-analyses (SRMAs) are considered the best form of evidence, as they provide evidence-based and generalizable answers [1,2,3]. Clinicians and researchers regularly read SRMAs to keep themselves up to date in their respective fields of interest [4]. SRMAs are published every day in medicine. According to a quick Scopus analysis, 273,000 meta-analyses have been published in the field of medicine from 1980 to the time of writing. The years 2020 and 2021 set records, with 25,000 and 32,000 SRMAs published, respectively. This phenomenon is undoubtedly due, in part, to the pandemic and the time it made available to researchers for bibliographic research [5]. Given this abundance of SRMAs, the question of how to train researchers to conduct an SRMA begs to be answered. Indeed, despite the existence, and recent updates, of clear guidelines for effectively conducting an SRMA [4,6], the steps remain poorly detailed in the literature and in journal author guidelines, and there are no specifications on how researchers should be trained to carry out these steps [7].

Conducting an SRMA involves many steps that need to be performed correctly and accurately. First, the SRMA research question must be feasible, interesting, novel, and relevant. Therefore, a clear, logical, and well-defined research question (or questions) needs to be formulated. Two common tools are generally used in qualitative syntheses: PICO (Population, Intervention, Comparison, Outcome) or SPIDER (Sample, Phenomenon of Interest, Design, Evaluation, Research Type). PICO is mostly used for quantitative data [8]. Those involved in the meta-analysis must also understand the PICO questions, because these shape the subsequent steps, including the literature search and the screening of titles, abstracts, and full texts for inclusion and exclusion.

Second, there has to be a literature search of a sufficient number of databases, at least two according to the AMSTAR-2 checklist [9]. Researchers, therefore, need to be trained to use the main databases (Scopus, PubMed, Cochrane, Embase) using varying search strategies and keywords. For example, Scopus uses classic keywords and allows one to search for these keywords, either in the title, abstract, keywords, text, authors, or references. The researcher needs to know how to download the search results into an Excel spreadsheet, complete and without duplicates.
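The deduplication step can be sketched in code. The following Python example is a minimal illustration, not the procedure used by the authors: it assumes each exported record is a dictionary with hypothetical `doi` and `title` keys (a real Scopus export has its own column names), and it treats two records as duplicates when they share a DOI or a normalized title.

```python
import re


def normalize_title(title):
    """Lowercase a title and collapse every run of non-alphanumerics to one space."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records):
    """Keep the first occurrence of each record, matching on DOI or on title.

    `records` is a list of dicts with the hypothetical keys "doi" and "title".
    """
    seen = set()
    unique = []
    for record in records:
        keys = set()
        doi = (record.get("doi") or "").strip().lower()
        if doi:
            keys.add(("doi", doi))
        title = normalize_title(record.get("title") or "")
        if title:
            keys.add(("title", title))
        if keys & seen:
            continue  # same DOI or same normalized title already kept
        seen |= keys
        unique.append(record)
    return unique
```

Exact matching on a normalized title is a deliberately conservative choice; near-duplicates with different wording would still need to be checked by hand.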

Then, the abstracts need to be screened to select papers for further screening of the full text. The full texts will then have to be downloaded and screened for eligibility, keeping in mind the PICO question. Next comes the stage of evaluation of the quality of evidence (QoE) and data extraction. Various QoE scales are available and should be well understood by the researchers. The quality of the statistical analysis and the utility of the SRMA will depend on the robustness of all these steps. Finally, the researchers will need to be trained in article writing and non-plagiarism [10]. Thus, researchers need to be trained in a number of steps, and their knowledge and skill need to be assessed before they embark on a meta-analysis. Although some studies have pointed out the need for quality control of SRMAs and a proper statistical method [11,12], to the best of our knowledge no study in the medical literature so far has reported the importance of training the researchers that conduct SRMAs.

The Global Andrology Forum (GAF) [13] is an international online scientific and medical group established in 2021, committed to conducting the highest quality research related to male reproductive and sexual health including SRMAs. While conducting SRMAs, the GAF realized that it is necessary to train the researchers rigorously, to avoid mistakes during the course of the study. Hence, GAF developed an online training program that focused on all the steps described above.

The aim of this study is to describe this novel, online training program to train clinicians and researchers engaged in the conduct of SRMAs, to document their level of knowledge and skill prior to this training, and to assess the change in these parameters after the training program.

MATERIALS AND METHODS

1. Participants

In June 2021, the GAF planned to carry out an SRMA on varicocele repair. From May 2021 to August 2021, the GAF sent a call to its members for participation in the SRMA. All candidates expressing an interest were invited to email their curriculum vitae and were then interviewed. Those selected had to be clinicians (urologists, andrologists, endocrinologists) or researchers (e.g., medical doctors, biologists, or PhDs performing basic, translational, or clinical research) involved in the field of male infertility. Having an academic position, previous publications, and knowledge about how to perform a search strategy or a meta-analysis were not necessary for inclusion. Candidates with poor knowledge of the English language were excluded. Authorship was offered to the participants performing well and respecting the deadlines.

2. Training on conducting SRMA

All included candidates underwent focused training before being involved in the SRMA. The training included four exercises: the first two were based on the use of the Scopus database, while the other exercises focused on the evaluation of the QoE of the studies and data extraction.

The goals of the first exercise were to train the candidate to (1) use the database (Scopus) features to modify the search, (2) learn how to correctly name and save all the collected files, and (3) organize the work and the folders so that they are easily understandable to other collaborators. The second exercise was assigned only after the correct completion of the first one. Its goals were to (1) refine the candidates’ search strategy, (2) teach how duplicates can be identified and excluded, and (3) provide training on study eligibility.

Following completion of the Scopus exercises, the candidates were emailed a tutorial that explained how to assess the QoE of the articles using four different tools: the Cambridge Quality Checklist [14], to be applied to observational studies, and the Cochrane Risk of Bias tool [15], the Consolidated Standards of Reporting Trials (CONSORT) guidelines [16], and the Jadad score [17], to be applied to randomized controlled trials (RCTs). The third and fourth exercises aimed to assess the candidate’s capability to correctly score the QoE of four articles (two articles for each exercise). The candidates were also provided with an Excel spreadsheet for data extraction. The candidates failing to correctly complete the exercises were offered additional training and were re-tested. Only candidates correctly completing all the exercises were admitted to the SRMA (Supplement File 1).
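To make the scoring logic of one of these tools concrete, the Jadad score [17] can be written as a short function. This is a sketch of the scale as it is commonly summarized: one point each for a study described as randomized, described as double-blind, and reporting withdrawals and dropouts, with one point added or deducted depending on whether the randomization and blinding methods are appropriate or inappropriate. The function and parameter names are hypothetical and are not taken from the GAF tutorial.

```python
# Points added for how a method is described: None means "not described".
METHOD_ADJUSTMENT = {"appropriate": 1, "inappropriate": -1, None: 0}


def jadad_score(randomized, randomization_method,
                double_blind, blinding_method, withdrawals_described):
    """Return the Jadad score (0 to 5) of a trial report.

    randomized, double_blind, withdrawals_described: booleans.
    randomization_method, blinding_method: "appropriate", "inappropriate", or None.
    """
    score = 0
    if randomized:
        score += 1 + METHOD_ADJUSTMENT[randomization_method]
    if double_blind:
        score += 1 + METHOD_ADJUSTMENT[blinding_method]
    if withdrawals_described:
        score += 1
    return score
```

For example, a report described as randomized with no method given, not double-blind, and with withdrawals reported would score 2 under this sketch.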

3. Performing the planned SRMA

A total of 35 candidates were approved to join the planned SRMA, which was conducted from July 2021 to December 2021. Eight additional researchers completed the training after the beginning of the SRMA and joined the research midway (making it a total of 43 researchers involved in the SRMA). They were divided into four teams, and the work of each team was coordinated by a leader. For each step of the SRMA, the participants worked in pairs, verifying the work of their partner; disagreements were resolved by the team leader. Thus, constant feedback and supervision were available throughout the study.
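The pairwise verification described above can be illustrated with a small sketch. It assumes, hypothetically, that each member of a pair records a decision per study in a dictionary keyed by a study identifier; the function lists the studies that would need to be referred to the team leader. It is not the tooling used in the study.

```python
def find_disagreements(reviewer_a, reviewer_b):
    """Return the study IDs on which two reviewers differ.

    Each argument maps a (hypothetical) study ID to that reviewer's decision.
    A study assessed by only one reviewer is also reported.
    """
    study_ids = sorted(set(reviewer_a) | set(reviewer_b))
    return [sid for sid in study_ids
            if reviewer_a.get(sid) != reviewer_b.get(sid)]
```

Reporting studies that only one reviewer assessed is a design choice of this sketch, so that gaps in coverage surface together with genuine disagreements.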

4. Test number 1: self-perceived change in knowledge

In January 2022, a questionnaire with 43 multiple-choice questions (MCQs) was administered to the 35 participants who had joined the SRMA from its beginning. The participants had to rate their knowledge and skills before and after the training. The MCQs, which were created using Google Forms and made available online, covered demographic information of the candidates and their level of knowledge, pre- and post-training, in using Scopus advanced search, assessing the QoE of published articles, understanding PICO models, and extracting data for a meta-analysis (Supplement File 2).

5. Revision of the SRMA spreadsheet

After completion of the SRMA, the senior researchers carefully reviewed the spreadsheet. The mistakes found were corrected and the spreadsheet was sent to the statisticians for statistical analysis. The most commonly made mistakes were identified and additional focused training sessions (two virtual meetings) were organized and extended to all the researchers who had, in the meantime, joined the GAF.

6. Virtual meeting and online test

A total of 43 researchers with recent experience in SRMA and 11 trainees with no experience in conducting SRMA joined a virtual meeting led by the GAF leaders and experts. The topics discussed during the meeting were the classification of the study designs [18], the statistical principles of SRMAs, PICO questions, common mistakes made during the classification of the studies, and data entry [19].

Both the experienced researchers and the fresh trainees were asked to complete a 5-question test on the same topics, which was administered online using Google Forms, before (Supplement File 3) and after (Supplement File 4) the virtual meeting.

The researchers who scored poorly (≤3 correct answers out of 5) underwent a second online recorded training session led by one of the management team (MT) members [20]. They were also contacted by another member of the MT, to ensure that they had correctly understood their mistakes. They were subsequently re-tested, and only the researchers scoring more than 3 points out of 5 were admitted to future SRMAs.
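The admission rule can be expressed as a simple triage. The sketch below assumes the cut-off stated for a poor score (3 or fewer correct answers out of 5); the participant labels and the function name are hypothetical.

```python
PASS_THRESHOLD = 3  # scores above this value (4 or 5 out of 5) are satisfactory


def triage(scores):
    """Split participants into admitted and to-be-retrained groups.

    `scores` maps a participant label to the number of correct answers (0 to 5).
    """
    admitted = sorted(name for name, s in scores.items() if s > PASS_THRESHOLD)
    retrain = sorted(name for name, s in scores.items() if s <= PASS_THRESHOLD)
    return admitted, retrain
```

The same function can be applied again to the re-test scores of the retrained group.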

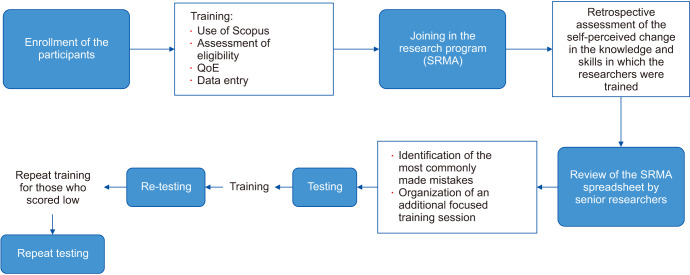

The entire sequence of training and testing is illustrated in Fig. 1.

Fig. 1. Description of the training methodology provided. QoE: quality of evidence, SRMA: systematic review and meta-analysis.

7. Statistical analysis

Survey questions were visualized using grouped bar plots (Likert scale). The mean±standard deviation (SD) or the median (interquartile range, IQR) were used for parametric and non-parametric data, respectively.

Means were compared using Student's t-test, while medians were compared using the Mann–Whitney U test. Paired comparisons were done using the Wilcoxon signed-rank test. The repeated-measures ANOVA test could not be performed because the data were not normally distributed. All statistics were done using the R programming language v. 4.1.2.
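The descriptive statistics and the paired test named above can be illustrated with a short example. The authors performed their analysis in R; the sketch below uses Python's standard library instead, is meant to be run on invented scores, and computes only the signed-rank sums of the paired test (no p-value). Quartiles use the `inclusive` method of `statistics.quantiles`, which is an assumption about the quartile definition and is not stated in the study.

```python
import statistics


def median_iqr(scores):
    """Return (median, first quartile, third quartile) of a list of scores."""
    q1, _, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    return statistics.median(scores), q1, q3


def signed_rank_sums(before, after):
    """Return (W+, W-), the rank sums of positive and negative paired changes.

    Pairs with no change are dropped; tied absolute changes share the
    average of the ranks they occupy.
    """
    diffs = [a - b for b, a in zip(before, after) if a != b]
    ordered = sorted(abs(d) for d in diffs)
    rank_of = {}
    i = 0
    while i < len(ordered):
        j = i
        while j < len(ordered) and ordered[j] == ordered[i]:
            j += 1
        rank_of[ordered[i]] = (i + 1 + j) / 2  # average of ranks i+1 .. j
        i = j
    w_plus = sum(rank_of[abs(d)] for d in diffs if d > 0)
    w_minus = sum(rank_of[abs(d)] for d in diffs if d < 0)
    return w_plus, w_minus
```

A large imbalance between the two rank sums is what the signed-rank test evaluates; turning it into a p-value requires the test's reference distribution, which a statistics package provides.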

8. Ethics statement

The study protocol was approved by the internal Review Board of the GAF management, and informed written consent was obtained from each participant after full explanation of the purpose and nature of all procedures used. The study has been carried out in accordance with the principles expressed in the Declaration of Helsinki.

RESULTS

1. Demographics

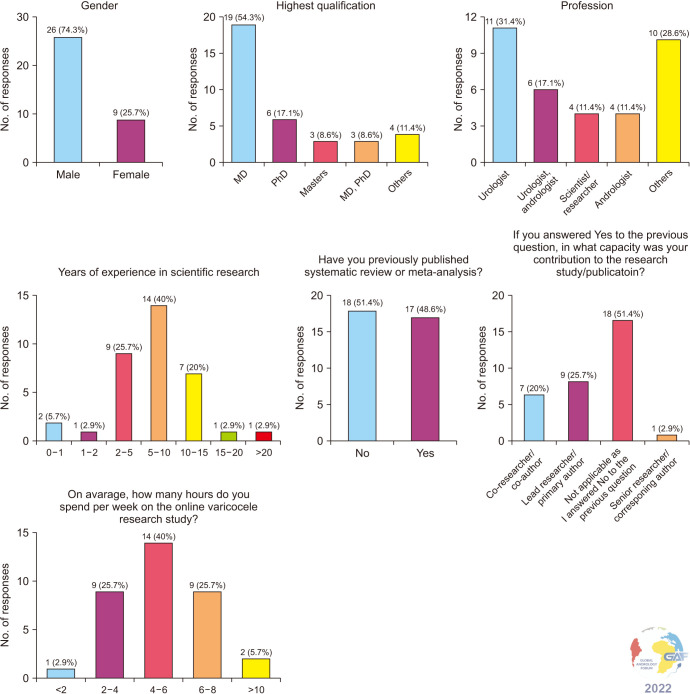

The MD degree was the highest qualification for 19 (54.3%) participants. Approximately one-third were urologists (31.4%), and 40.0% had 5–10 years of experience. Seventeen (48.6%) of the respondents had previous experience in performing a meta-analysis. Fig. 2 and Supplement Table 1 illustrate the responses to the demographic portion of the survey.

Fig. 2. Demographics of researchers participating in the systematic reviews and meta-analyses (SRMA).

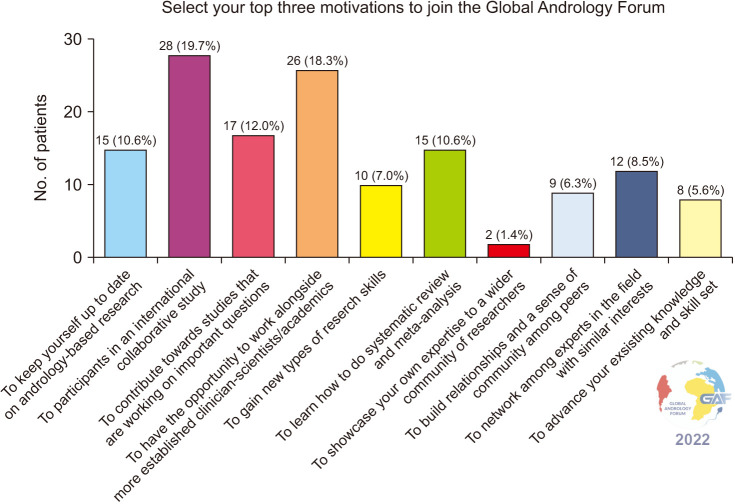

The top 3 motivations to participate in SRMAs were to join an international collaborative study, to work alongside established clinicians, scientists, and academics, and to contribute to a study that answers important clinical questions, which were selected 28 (19.7%), 26 (18.3%), and 17 (12.0%) times, respectively. Fig. 3 shows the list of reasons for participation in an SRMA.

Fig. 3. Motivation for participation in the meta-analysis study.

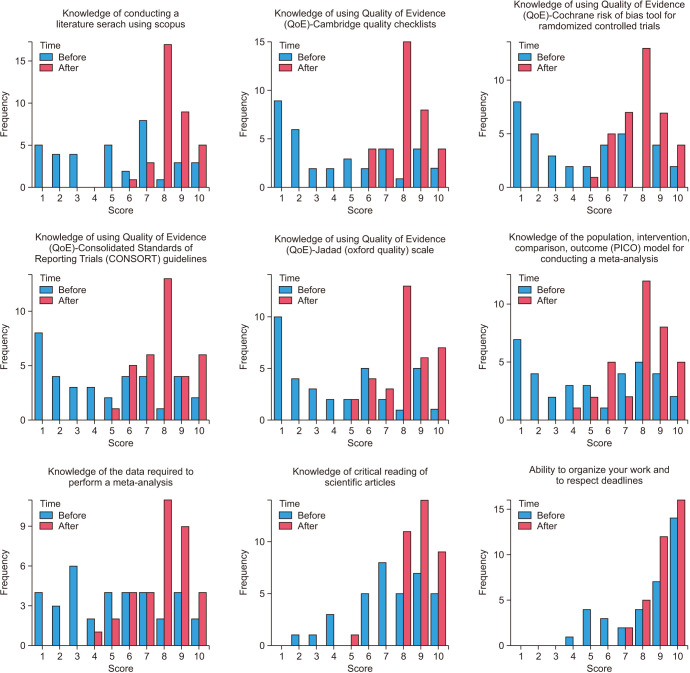

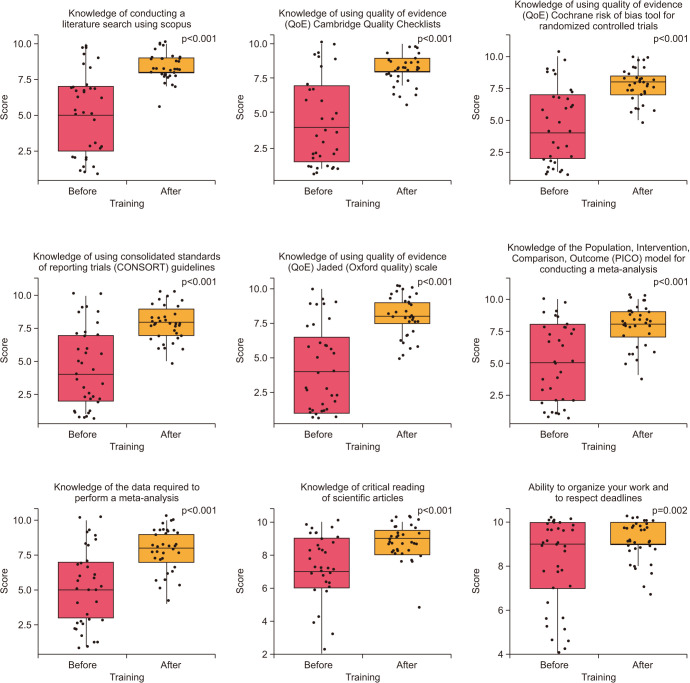

2. Comparison of the knowledge scores of the research methodology domains pre- and post-training

1) Knowledge of conducting a literature search using Scopus

The knowledge level was assessed on a scale from 1 to 10 with 10 being the highest level of knowledge. Before receiving training, half of the participants scored 5 or less in this knowledge domain. In the post-training survey, none of the candidates scored 5 points or less, and 48.6% scored 8 (Fig. 4).

Fig. 4. Results of the multiple-choice question (MCQ) subdomains.

2) Knowledge of Cambridge quality checklists

Before training, 25.7% of participants gave a score of 1, while 42.9% scored 8 after training (Fig. 4).

3) Knowledge of using Quality of Evidence (QoE) - Cochrane Risk of Bias Tool for Randomized Controlled Trials

While approximately half of the participants scored 5 or less before training, all scored 5 or more after training with an increase in the percentage of the highest scores (Fig. 4).

4) Knowledge of using Quality of Evidence (QoE) - Consolidated Standards of Reporting Trials (CONSORT) guidelines

The most common level of knowledge before training was a score of 1 (22.9%) while the most prevalent score after training was 8 (37.1%). None scored less than 5 after training (Fig. 4).

5) Knowledge of using Quality of Evidence (QoE) - Jadad (Oxford Quality) scale

The most common score before training was 1 (28.6%), followed by 6 and 9 (14.3% each), compared to scores of 8 (37.1%) and 10 (20.0%) after training (Fig. 4).

6) Knowledge of the Population, Intervention, Comparison, Outcome (PICO) model for conducting a meta-analysis

Before training, 7 (20.0%) participants had the lowest level of knowledge and 3 (8.6%) participants still scored 5 or less after training. On the other hand, 5 (14.3%) scored 8 before training which increased to 12 (34.3%) after training (Fig. 4).

7) Knowledge of the data required to perform a meta-analysis

Approximately half of the participants reported a lower level of knowledge (5 or less) before training, while more than 90% had a score of more than 6 after training, with 8 being the most commonly described score (11 respondents, 31.4%) (Fig. 4).

8) Knowledge of critical reading of scientific articles

Most of the participants reported considerable knowledge of the critical reading of scientific articles before training. Five (14.3%) participants scored 2 to 4 before training, whereas after training only one participant scored 5 and the rest scored above 5 (Fig. 4).

9) Ability to organize your work and respect deadlines

Although only 5 (14.3%) respondents reported a low score (5 or less) before training, this improved after training, with none reporting a score of 6 or less. In addition, all higher-level scores improved (Fig. 4).

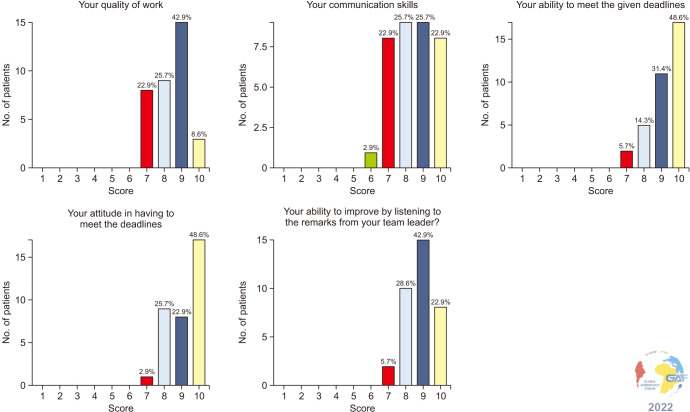

10) Self-assessment of performance skills by researchers

The majority of the researchers ranked the quality of their work as high (42.9% rated themselves 9 out of 10), as well as their communication skills (25.7% for a score of 8 and 25.7% for a score of 9), their ability to meet the given deadlines (48.6% for a score of 10), and their attitude toward having to meet the given deadlines (48.6% for a score of 10). The self-assessed score on the ability to improve by listening to the remarks from the team leader was 9 for 42.9% of the researchers (Fig. 5).

Fig. 5. Self-assessment of performance skills by researchers.

The data of this study are tabulated in Supplement Tables 2, 3, and 4.

3. Comparison of the distribution of pre- and post-training scores of the self-assessment test

When comparing the median scores before and after training, there was a significant improvement in scores after training. Results are summarized in Fig. 6.

Fig. 6. Paired scores comparison pre- and post-training.

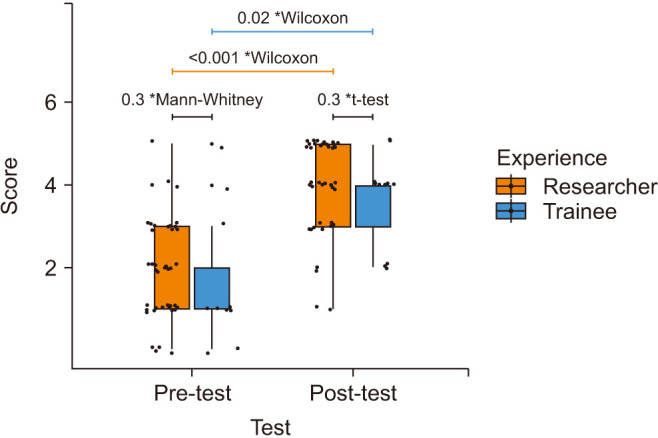

4. Results of the test

The pre-test median (IQR) scores were very low and not significantly different between the experienced researchers (median, 2; IQR, 1–3) and the fresh trainees (median, 1; IQR, 1–2) (p=0.3). Post-training there was a marked improvement in both groups with mean±SD scores of 4.0±1.1 for researchers and 3.6±1.1 for trainees (p=0.3). For researchers, it improved from 2 (IQR, 1–3) to 4 (IQR, 3–5) (p<0.001). For the trainees, the post-test median score was 4 (IQR, 3–4) compared to 1 (IQR, 1–2) (p=0.02) (Fig. 7). This suggests the effectiveness of the training session.

Fig. 7. Pre- and post-training comparison between researchers and trainees. *Statistical test used to calculate the p-value.

Out of the 44 researchers, 12 (27.3%) scored ≤3. After re-training, all of them scored satisfactorily (>3).

DISCUSSION

In the era of evidence-based medical practice, SRMAs have great value in molding opinions and guiding practice recommendations. Hence, a large number of researchers dedicate their time and effort to performing SRMAs, and the past two years of COVID-imposed isolation and limitation on clinical practice have given a further boost to academic research.

It is generally assumed that the authors of an SRMA are fully trained and qualified to carry out this type of research. However, no study has specifically evaluated this issue. Indeed, whether focused training of the researchers involved in SRMAs can impact the quality of the SRMA has not been addressed so far. With the recent explosion of SRMAs, there is an increasing risk of poorly conducted studies being published and used for establishing clinical guidelines. Though the methodology of data acquisition and analysis for performing an SRMA has been clearly defined [4,6], there are no guidelines for training in the implementation of these processes, and therefore no safeguard against a poorly done study. Previous studies have emphasized the need for quality control and for the use of an accurate methodology in conducting SRMAs. In 2010, Bown and Sutton [11] published a study suggesting methods to be applied for literature search and data extraction, although they did not attempt to validate their suggestions. By reviewing the QoE data provided in 100 published SRMAs, Hameed et al. [12] indicated that the involvement of librarians could affect the articles’ scores, thus raising some concerns about the quality of published SRMAs. This could lead to spurious associations reported in the literature and dilute evidence-based medicine [21].

The GAF is engaged in several SRMAs. A unique feature of this research group is that it is entirely online, which enables it to break free of geographical boundaries and engage a large number of talented researchers from all over the world. Thanks to our large team, we have been able to perform SRMAs with no limitations of time or language, and also to verify each step in duplicate. To further ensure that the research done by the GAF was of the highest quality, we decided to provide online training in the various steps involved in conducting SRMAs and also to test the research knowledge and skills of our team.

Our study showed that even experienced clinicians (71.4% were MD or PhD; 59.9% were urologists or andrologists) have little knowledge or experience in study design, PICO models, and data extraction. In addition, more than half of the members of the research team had little knowledge (score 5 or less on a scale of 1 to 10) of how to conduct a search using Scopus, or assess the QoE of a paper using the Cambridge quality of evidence checklist, Cochrane Risk of Bias tool, CONSORT guidelines or the Jadad scale. Further, 54.3% expressed limited knowledge of the PICO model for conducting a meta-analysis and 54.2% were unclear about the data that needed to be extracted for a meta-analysis.

These findings validate our concern that possibly a large number of SRMAs published in the worldwide literature may be flawed due to inadequate skills of those carrying out these advanced studies. Indeed, errors can be seen in published papers, and even the paper title can be misleading. For example, the title of a paper published in 2017 described it as a prospective controlled study assessing changes in DNA fragmentation and oxidative stress after varicocelectomy [22]. However, the control group in this study comprised fertile, normozoospermic men whose parameters were measured only at the onset of the study. This is not an appropriate control group to study the changes after varicocelectomy in infertile men, and this is actually an uncontrolled study. It is likely that an untrained researcher would be misled by the title (as were the reviewers and editors) and would incorrectly enroll this study in a meta-analysis (MA) as a controlled study. In another widely referenced older study [23], classified by the last Cochrane SRMA on varicocele repair as an RCT [24], the authors did not mention how the control group was selected and provided only one set of semen parameters for the control group (presumably taken at the onset of the study). In the absence of a second set of semen analyses for the control group, done at the termination of the study period, there are no valid data to interpret the results as those of a controlled study. These examples highlight the need for a well-trained researcher to review the papers being considered for an MA.

The mistakes made during the conduct of an SRMA can be prevented if sufficient training is provided before a research team proceeds to implement the different steps of an MA. The results of the current study demonstrate the effectiveness of an online training program to enhance the knowledge and skills relevant to conducting a proper SRMA.

Interestingly, all scores of targeted training domains improved significantly (scores of 8–10 on a scale of 1 to 10). After the training, the majority of researchers (88.6%) expressed confidence in their ability to do an advanced search using Scopus, and 71.5% understood well how to implement the PICO model of SRMA. Scores on the use of QoE checklists and scales also improved remarkably with high confidence (scores of 8–10) in skills for using the Cambridge quality of evidence checklist for the assessment of observational studies (77.2%), and the Cochrane Risk of Bias tool (62.8%), CONSORT guidelines (65.6%), and Jadad scale (74.2%) for assessment of RCTs. Although researchers had expressed confidence in their ability to critically read scientific papers, organize their work, and respect deadlines prior to training, these scores improved further after the training.

Interestingly, in the 5-question test on the evaluation of the study design, which was administered after the completion of the first MA, the researchers still scored low, at the same level as new trainees. This indicated that additional focused training is needed to ensure that researchers categorize the study design correctly. After training and re-training in some cases, all researchers were able to identify the study design accurately. Thus, with extensive and appropriate training, monitored through testing, all researchers could be involved in further SRMAs.

Based on the results of our study we suggest the following:

(1) All researchers engaged in systematic reviews and data collection for meta-analysis should undergo training in various aspects of the process including database searches, proper identification of study design and eligibility, assessment of QoE and risk of bias, and accuracy of data extraction and data entry.

(2) These skills need to be periodically tested and additional training provided as needed.

(3) Training and testing can be effectively conducted online.

Limitations of this study

Our conclusions are limited by the relatively small number of researchers trained and evaluated. In addition, the analysis of the self-assessment score may be biased due to the retrospective nature of the survey where the respondents gave subjective impressions of their knowledge and performance before and after the training. Nevertheless, the changes in all training domains are large and significant enough to validate the results of our study.

CONCLUSIONS

To the best of our knowledge, this is the first study demonstrating the need for training of the researchers involved in the conduct of SRMAs. Indeed, even well-trained clinicians are often naïve in the methodology of SRMAs. Therefore, we advocate that all researchers performing an SRMA should undergo a comprehensive training that must cover each aspect of the SRMA methodology. Furthermore, our article offers an example of an online training program that could be used to impart knowledge and skills to researchers performing high-quality SRMAs. Optimizing the performance of the research team involved in conducting SRMAs will ensure the accuracy of all steps of the SRMA, and that the best available medical evidence is presented.

Footnotes

Conflict of Interest: The authors have nothing to disclose.

Funding: None.

- Conceptualization: RC, Rupin Shah, AA.

- Formal analysis: AH.

- Methodology: RC.

- Project administration: RC.

- Supervision: AA.

- Writing – original draft: All authors.

- Writing – review & editing & final approval: All authors.

Supplementary Materials

Supplementary materials can be found via https://doi.org/10.5534/wjmh.220128.

Instructions to exercises.

Survey on training and management of quality in scientific research.

Global Andrology Forum: pre-meeting practice test.

Global Andrology Forum: post-meeting practice test.

Demographics of researchers participating in the systematic reviews and meta-analyses (SRMA)

Self-assessed scores before and after training

The impact of training on performance

Paired scores comparison before and after training

References

- 1. Bello A, Vandermeer B, Wiebe N, Garg AX, Tonelli M. Evidence-based decision-making 2: systematic reviews and meta-analysis. Methods Mol Biol. 2021;2249:405–428. doi: 10.1007/978-1-0716-1138-8_22.

- 2. Glasziou P, Vandenbroucke JP, Chalmers I. Assessing the quality of research. BMJ. 2004;328:39–41. doi: 10.1136/bmj.328.7430.39. Erratum in: BMJ 2004;329:621.

- 3. Murad MH, Asi N, Alsawas M, Alahdab F. New evidence pyramid. Evid Based Med. 2016;21:125–127. doi: 10.1136/ebmed-2016-110401.

- 4. Moher D, Liberati A, Tetzlaff J, Altman DG; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6:e1000097. doi: 10.1371/journal.pmed.1000097.

- 5. Else H. How a torrent of COVID science changed research publishing - in seven charts. Nature. 2020;588:553. doi: 10.1038/d41586-020-03564-y.

- 6. Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71. doi: 10.1136/bmj.n71.

- 7. Goldberg J, Boyce LM, Soudant C, Godwin K. Assessing journal author guidelines for systematic reviews and meta-analyses: findings from an institutional sample. J Med Libr Assoc. 2022;110:63–71. doi: 10.5195/jmla.2022.1273.

- 8. Methley AM, Campbell S, Chew-Graham C, McNally R, Cheraghi-Sohi S. PICO, PICOS and SPIDER: a comparison study of specificity and sensitivity in three search tools for qualitative systematic reviews. BMC Health Serv Res. 2014;14:579. doi: 10.1186/s12913-014-0579-0.

- 9. Shea BJ, Reeves BC, Wells G, Thuku M, Hamel C, Moran J, et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ. 2017;358:j4008. doi: 10.1136/bmj.j4008.

- 10. Marusic A, Wager E, Utrobicic A, Rothstein HR, Sambunjak D. Interventions to prevent misconduct and promote integrity in research and publication. Cochrane Database Syst Rev. 2016;4:MR000038. doi: 10.1002/14651858.MR000038.pub2.

- 11. Bown MJ, Sutton AJ. Quality control in systematic reviews and meta-analyses. Eur J Vasc Endovasc Surg. 2010;40:669–677. doi: 10.1016/j.ejvs.2010.07.011.

- 12. Hameed I, Demetres M, Tam DY, Rahouma M, Khan FM, Wright DN, et al. An assessment of the quality of current clinical meta-analyses. BMC Med Res Methodol. 2020;20:105. doi: 10.1186/s12874-020-00999-9.

- 13. Agarwal A, Saleh R, Boitrelle F, Cannarella R, Hamoda TAA, Durairajanayagam D, et al. The Global Andrology Forum (GAF): a world-wide, innovative, online initiative to bridge the gaps in research and clinical practice of male infertility and sexual health. World J Mens Health. 2022;40:537–542. doi: 10.5534/wjmh.220127.

- 14. Murray J, Farrington DP, Eisner MP. Drawing conclusions about causes from systematic reviews of risk factors: the Cambridge quality checklists. J Exp Criminol. 2009;5:1–23.

- 15. Sterne JAC, Savović J, Page MJ, Elbers RG, Blencowe NS, Boutron I, et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ. 2019;366:l4898. doi: 10.1136/bmj.l4898.

- 16. Schulz KF, Altman DG, Moher D; CONSORT Group. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. BMC Med. 2010;8:18. doi: 10.1186/1741-7015-8-18.

- 17. Jadad AR, Moore RA, Carroll D, Jenkinson C, Reynolds DJ, Gavaghan DJ, et al. Assessing the quality of reports of randomized clinical trials: is blinding necessary? Control Clin Trials. 1996;17:1–12. doi: 10.1016/0197-2456(95)00134-4.

- 18. Song JW, Chung KC. Observational studies: cohort and case-control studies. Plast Reconstr Surg. 2010;126:2234–2242. doi: 10.1097/PRS.0b013e3181f44abc.

- 19. American Center for Reproductive Medicine. Training session for researchers in SRMA teams 1 [Internet]. Washington, D.C.: American Center for Reproductive Medicine; c2022. [cited 2022 May 17]. Available from: https://youtu.be/2oSiKoba4_s.

- 20.Cannarella R. Virtual training. Moreland Hils, OH: Global Andrology Forum; [cited 2022 May 17]. Available from: https://drive.google.com/file/d/1renYOKGRcSqZrpyiqlYuGllL5Yga5zU4/view?usp=sharing. [Google Scholar]

- 21.Simón C, Bellver J. Scratching beneath 'the scratching case': systematic reviews and meta-analyses, the back door for evidence-based medicine. Hum Reprod. 2014;29:1618–1621. doi: 10.1093/humrep/deu126. [DOI] [PubMed] [Google Scholar]

- 22.Abdelbaki SA, Sabry JH, Al-Adl AM, Sabry HH. The impact of coexisting sperm DNA fragmentation and seminal oxidative stress on the outcome of varicocelectomy in infertile patients: a prospective controlled study. Arab J Urol. 2017;15:131–139. doi: 10.1016/j.aju.2017.03.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Nilsson S, Edvinsson A, Nilsson B. Improvement of semen and pregnancy rate after ligation and division of the internal spermatic vein: fact or fiction? Br J Urol. 1979;51:591–596. doi: 10.1111/j.1464-410x.1979.tb03609.x. [DOI] [PubMed] [Google Scholar]

- 24.Persad E, O'Loughlin CA, Kaur S, Wagner G, Matyas N, Hassler-Di Fratta MR, et al. Surgical or radiological treatment for varicoceles in subfertile men. Cochrane Database Syst Rev. 2021;4:CD000479. doi: 10.1002/14651858.CD000479.pub6. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

Supplementary Materials

Instructions to exercises.

Survey on training and management of quality in scientific research.

Global Andrology Forum: pre-meeting practice test.

Global Andrology Forum: post-meeting practice test.

Demographics of researchers participating in the systematic reviews and meta-analyses (SRMA).

Self-assessed scores before and after training.

The impact of training on performance.

Paired scores comparison before and after training.