Abstract

Processing of positive and negative facial expressions in infancy follows a distinct course with a bias toward fearful facial expressions starting at 7 months of age; however, little is known about the developmental trajectory of fear processing and other facial expressions, and whether this bias is driven by specific regions of the face. This study used eye tracking to examine the processing of positive and negative emotional faces in independent groups of 5- (n = 43), 7- (n = 60), and 12-month-old infants (n = 70). Infants were shown static images of female faces exhibiting happy, anger, and fear expressions, for one second each. Total looking time and looking time for areas of interest (AOIs) including forehead and eyes (top), mouth and chin (bottom) and contour of each image were computed. Infants across all ages looked longer at fear faces than at angry or happy faces. Negative emotions generally elicited greater looking times for the top of the face than did happy faces. In addition, 12-month-old infants looked longer at the bottom of the faces than did 5-month-olds. Our study suggests that the visual bias to attend longer to fearful faces may be in place by 5 months of age, and that between 5 and 12 months of age there seems to be a developmental shift towards looking more at the bottom of the faces.

Keywords: infancy, face processing, emotional expressions

1. Introduction

Prior to the onset of verbal language, infants communicate with others primarily through nonverbal channels such as facial expressions. Indeed, there is a long history of research on infants’ perception and discrimination of facial emotion (for recent reviews, see Johnson et al. 2005; Leppänen & Nelson, 2009, 2012, 2013). These studies have typically examined infants’ reactions to a variety of emotional stimuli (e.g., human faces depicting emotional content, or face-like arrays, where elements are located within a head-shaped contour to represent a face configuration), and the findings together suggest that the ability to discriminate facial emotions is present at very early stages of development.

The interest in faces starts in the first hours of life (Farroni, Menon, Rigato, & Johnson, 2007; Field, Woodson, Greenberg, & Cohen, 1982). Newborns present distinct visual fixations depending on the emotion (happiness, sadness, and surprise), and the pattern of fixation changes when they are exposed to a stimulus of a different emotional category (Field et al. 1982). At this early age, it seems that infants have a preference for happy faces over fearful and neutral ones (Farroni et al. 2007), a preference that seems to hold for the first months of life (LaBarbera, Izard, Vietze, & Parisi, 1976). At around four months of age, infants show a bias for expressions depicting positive emotions compared with negative and neutral ones (LaBarbera et al., 1976). Later, at approximately five to seven months of age, infants start to show a looking preference for fearful faces (Safar, Kusec & Moulson, 2017). Nelson and colleagues have demonstrated that seven-month-old infants are able to categorize happy and fearful faces and have a preference (i.e., look longer) for fearful over happy faces (Nelson & Dolgin, 1985; Nelson, Morse & Leavitt, 1979).

Since then, multiple studies have replicated this fearful bias in infants using behavioral and electrophysiological measures (Grossman et al., 2011; Kotsoni, de Haan, & Johnson, 2001; Leppänen, Moulson, Vogel-Farley, & Nelson, 2007; Nelson & de Haan, 1996). The findings are consistent: at seven months of age, fearful faces evoke greater event-related potentials (ERPs) associated with face processing (infant N290 and P400), similar to the N170 found in adults (Kobiella, Grossman, Reid, & Striano, 2008; Leppänen, Moulson, Vogel-Farley, & Nelson, 2007).

There is a substantial body of literature concerning the seven-month-old and older infant bias for viewing fearful faces, but less is known about the mechanisms underlying the bias and the developmental trajectory of looking time preference. Emerging longitudinal studies have provided some information about the attentional bias to faces in the first year of life and later stages of development (Leppänen, Cataldo, Enlow, & Nelson, 2018; Peltola, Yrttiaho & Leppänen, 2017). Some have found the bias for fearful faces holds at 36 months of age (Leppänen et al., 2018) and others showed a decline in looking times for fearful faces between seven and 24 months of age (Peltola et al., 2017). Other investigators who examined infants from four to 24 months of age have found that infants spend a greater amount of time attending to emotional faces with age, particularly threatening faces (Perez-Edgar et al., 2017).

However, it is still uncertain what captures infants’ and children’s attention to one emotional stimulus versus another. Particularly, it is not known if the preference to look at different emotional expressions is driven by distinct regions of the face. For example, in adults, looking at the eye region is important for discriminating facial expressions (Bentin, Golland, Flevaris, Robertson, & Moscovitch, 2006; Schyns, Jentzsch, Johnson, Schweinberger, & Gosselin, 2003), and fearful eyes alone enhance the detection of threatening faces (Yang, Zald, & Blake, 2007). In seven-month-old infants, there seems to be an association between the time spent looking at the eye region for negative emotions and the amplitude of N290 and P400 infant ERP components (Vanderwert et al., 2015). Still, fearful eyes by themselves do not seem to be the sole feature that infants use to extract information about facial expressions (Peltola, Leppänen, Vogel-Farley, Hietanen & Nelson, 2009). Together, the body of research suggests that infants may need to use information from different parts of the face to detect a fearful expression.

The emergence of tools that accurately measure eye-gaze, such as eye tracking, has provided a valuable understanding of social-emotional development in pre-verbal populations, including face processing (Gredeback, Johnson & von Hofsten, 2010). By providing data on where on the face infants look we are able to obtain a more accurate estimate of the developmental trajectory of face processing. One of the few studies to examine face processing in infants at different ages used a scanning task to study looking time at threat-related emotional faces (angry and fearful) and non-threat-related emotional faces (happy, sad and neutral) with a sample of four- and seven-month-olds and adults (Hunnius, de Wit, Vrins, & von Hofsten, 2011). The results showed that independent of the age group, all participants looked less at expressions of anger and fear, showed reduced dwell times, and displayed a lower percentage of fixations towards these emotions. The scanning pattern of the eye region, however, was distinct across the groups. Whereas adults avoided scanning the eye region for negative, as compared to positive expressions, infants displayed the same pattern of eye-looking for all expressions. These findings suggest that avoidance of negative emotion may be acquired later than the first year of life as a result of visual experience and interacting with a social world; no attentional bias was found at seven months of age.

Peltola, Hietanen, Forssman, and Leppänen (2013) present one of the few exceptions in examining the processing of facial expressions across a broader range of ages. These authors employed a cross-sectional design using an Overlap paradigm with five-, seven-, nine- and eleven-month-old infants. They found that while five-month-olds did not differentially disengage from happy or fearful faces (i.e., took just as long to shift attention from facial expressions to a distracting object presented on the side), seven- and nine-month-olds did differentially disengage, and took longer to disengage from fear faces. Further, this bias toward fearful faces was not observed in eleven-month-olds. This attentional bias toward fearful faces was confirmed by a second, longitudinal experiment with only five- and seven-month-old infants. Results from these experiments provided strong evidence that this developmental change in attention occurs between the ages of five and seven months. It remains unclear whether this attentional bias is maintained over time. Findings from recent longitudinal studies have shown that the preference to look at threatening stimuli is maintained over time (Leppänen et al., 2018; Nakagawa & Sukigara, 2012; Perez-Edgar et al., 2017).

Few studies have examined whether the preference to look at the different emotional expressions in infancy is driven by distinct regions of the face (e.g., might the preference for fear be driven, in part, by the amount of sclera shown in the eye region, which in turn increases the contrast of this region). Our study sought to fill this gap by examining looking preferences for different regions of the face among infants at important developmental stages (i.e., ages five, seven, and twelve months). We chose this age range because it captures a period during which important social-emotional milestones are emerging, namely joint attention and social referencing (Happé & Frith, 2014). Moreover, this time is also when infants become more mobile, a feature that could potentially increase their attention to threatening facial expressions (Leppänen & Nelson, 2009). Our study was particularly designed to 1) include a broader range of ages and, therefore, offer a better representation of development, 2) provide a standardized examination of emotional processing across three age groups, which has only been accomplished in one study to date, and 3) examine the looking patterns of the face in each age group.

We examined differences across age groups in eye gaze to happy, fearful, and angry facial expressions using measures of total looking time for specific regions of the face (e.g., forehead and eyes, mouth and chin). We hypothesized that seven- and twelve-month-olds would present longer total looking times for images depicting negative expressions (particularly fear) compared to five-month-olds. Moreover, we hypothesized that looking time for the facial areas of interest (forehead and eyes, mouth and chin) would be the same for five-month-olds for expressions of happy, anger, and fear; seven-month-olds would present longer looking times for the forehead and eyes for negative emotions; and twelve-month-olds would present longer looking times for the forehead and eyes for negative emotions and longer looking times for the mouth and chin for happy faces.

2. Methods

2.1. Participants

The study was conducted at Boston Children’s Hospital/Harvard Medical School. Typically developing 5-, 7- and 12-month-olds were recruited from a registry established in the laboratory to participate in a study of emotion processing. Infants (n=308) enrolled in the project participated in a passive-viewing emotion discrimination task during which continuous EEG and eye gaze location were simultaneously recorded. EEG/ERP data will be reported separately (see Xie, McCormick, Westerlund, Bowman, & Nelson, 2018). Infants were shown either human or animal faces; here we report only on the eye tracking data from the human face condition (n=222). The final cross-sectional sample of participants consisted of 173 infants: forty-three 5-month-olds (145 – 161 days; 18 females), sixty 7-month-olds (205 – 223 days; 22 females), and seventy 12-month-olds (358 – 375 days; 34 females). One infant was excluded due to prenatal factors (anticonvulsant medication taken by the mother during pregnancy); the additional 48 infants tested were excluded from the analyses due to failed calibration (n=6; five 5-month-olds and one 7-month-old) or inattentiveness to the stimuli and/or excessive fussiness that resulted in fewer than 5 trials per emotional face condition (n=42; eighteen 5-month-olds, eight 7-month-olds, and sixteen 12-month-olds). All infants were born full term (37–42 weeks) and had no known pre- or perinatal complications. Included infants did not differ in age [t(220) = 1.49, p = .137, d = .24] or sex [t(220) = −1.02, p = .307, d = .16] from excluded infants. The average total family income was $100,000 or greater and 78.4% of parents of participants had a Bachelor’s degree or higher. Race and ethnicity of participants were generally representative of the greater Boston area. Among all infants, 78.6% were White, 2.9% were Black or African American, .6% were Asian Indian, 2.9% were Asian, 13.3% were Mixed Race, and 1.7% did not respond. 
Further, 86.7% were of Non-Hispanic origin, 2.3% were Mexican, Mexican-American, or Chicano/a, 2.3% were Puerto Rican, 6.4% were of other Hispanic, Latino/a, or Spanish origin, and 2.3% did not respond. Approval for the project was obtained from the Institutional Review Board of Boston Children’s Hospital.

2.2. Stimuli

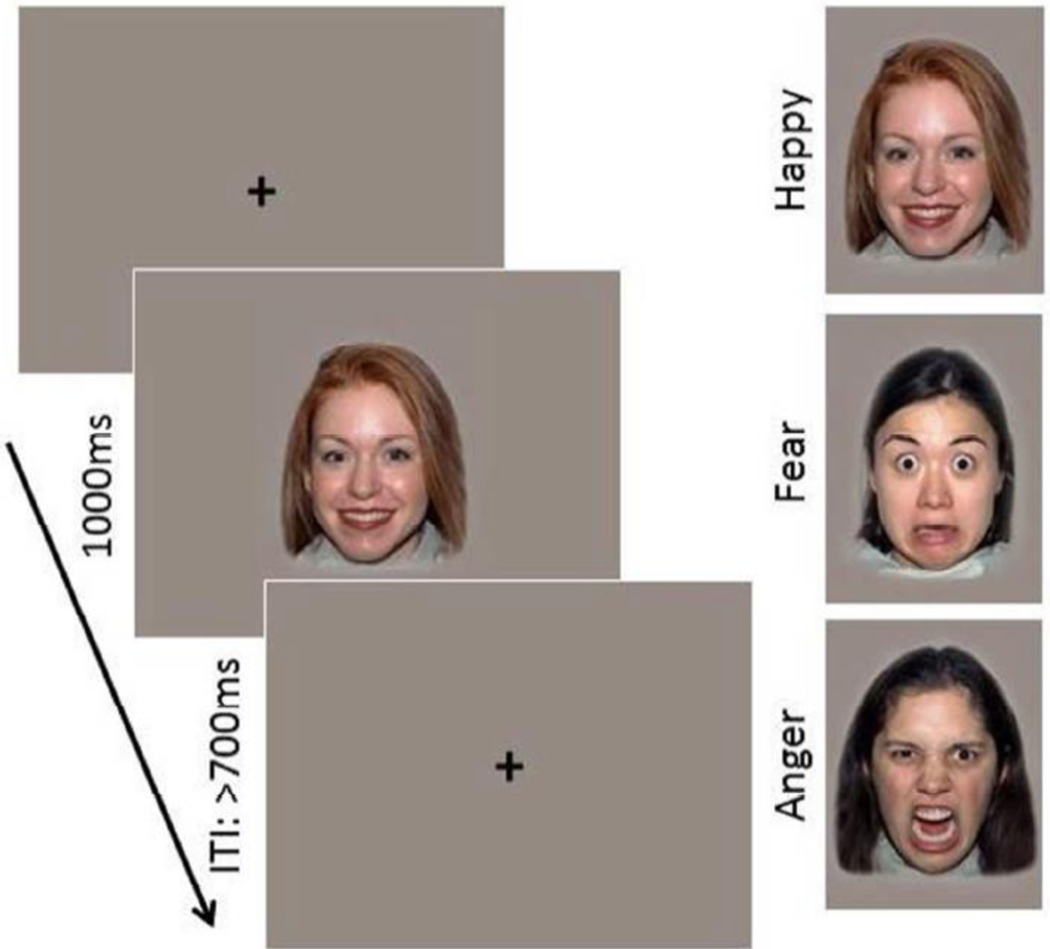

The face stimuli were color images of female faces (NimStim, Tottenham et al., 2009) exhibiting happy, anger, and fear expressions (see Figure 1). Previous research has shown that in the first year of life infants are sensitive to race and gender facial information (Vogel et al., 2012), so the race of the faces presented was matched as closely as possible to the race of the infant’s mother. Infants viewed five different models expressing the three emotions for a maximum of 150 trials, 50 of each emotional condition (happy, anger, fear). Each category was presented by 5 different models; therefore, each model appeared on the screen 10 times per emotion. The mean total number of trials completed was 121.19 (SD = 26.22) for 5-month-olds (M = 27.28, SD = 8.39 valid trials for happy; M = 25.86, SD = 8.58 for anger; M = 26.74, SD = 8.43 for fear), 128.32 (SD = 21.42) for 7-month-olds (M = 29.53, SD = 8.51 valid trials for happy; M = 29.32, SD = 8.48 for anger; M = 28.88, SD = 8.63 for fear), and 125.34 (SD = 30.37) for 12-month-olds (M = 29.36, SD = 8.75 valid trials for happy; M = 29.01, SD = 9.06 for anger; and M = 29.00, SD = 8.85 for fear). On average, infants saw 125.34 total trials, and this did not vary by age [F(2,170) = .905, p = .406]. The number of emotional face trials used in analysis also did not vary by age: happy [F(2,170) = 1.03, p = .359], angry [F(2,170) = 2.31, p = .102], and fearful [F(2,170) = 1.05, p = .354]. Stimuli were presented on a gray background. The faces were 16.5 cm x 14 cm and, with a 65-cm viewing distance, subtended a visual angle of 14.3° x 12.2°.

Fig 1.

Task design and examples of face stimuli expressing happy, fearful, and angry expressions.

2.3. Experimental Procedure

The experimental procedure took place in a sound-attenuated room with low lighting. A 5-point calibration procedure, using the standard calibration procedure within the Tobii Studio software, was performed twice to ensure the eye tracker was adequately tracking the infant’s gaze. The calibration stimulus consisted of a looming red dot appearing in five locations on the screen (four corners and center). Calibration was assessed from a plot showing the error vector for each of the calibration points. The calibration procedure was repeated if a good calibration (a small error vector in a minimum of 3 out of 5 locations) was not obtained for both eyes. Sounds from a keyboard piano were played on speakers placed behind the screen and used to attract infants’ attention toward the screen for calibration. The surroundings of the monitor were concealed with black curtains. Before beginning stimuli presentation, infants were fitted with a 128-channel HydroCel Geodesic Sensor EEG Net (HCGSN; Electrical Geodesic Inc.) that was connected to a NetAmps 300 amplifier (Electrical Geodesic Inc.) and channel impedances were checked. Total testing time, including calibration and net placement, was approximately thirty minutes.

Stimulus presentation was controlled by an experimenter in an adjacent room using E-Prime 2.0 (Psychological Software Products, Harrisburg, PA). This experimenter manually presented stimuli only when the infant was looking at the screen. Sessions were videotaped and monitored live by the experimenter. Each image was presented for 1000 ms, followed by a fixation cross. The minimum inter-stimulus interval (ISI) was 700 ms, and the actual ISI could vary beyond this depending on the infant’s attention to the screen. Another experimenter was seated at the same level of the infant, directing his/her attention by tapping on and pointing to the screen when necessary, only during attention-getters and not during presentation of face stimuli. The testing progressed until the maximum number of trials was reached (150) or the infant’s attention could no longer be maintained.

2.3.2. Eye tracking recording and analysis

Eye tracking data were obtained while infants were seated on their parent’s lap in front of either a Tobii T60 or T120 corneal reflection eye tracking monitor (Tobii Technology AB, Sweden). Cameras embedded within the monitor record the reflection of an infrared light source on the infant’s cornea in relation to the pupil from both eyes at a frequency of 60 Hz (the T120 recorded at 60 Hz, though it has the capability to record at 120 Hz as well). Data from one eye were used to estimate gaze coordinates when both eyes could not be measured accurately (e.g., due to infant movement or angle of head position). The Tobii T60 and T120 systems have an average accuracy of .5°, which approximates to a .5 cm area on the screen when the viewing distance is about 70 cm. The T60 eye tracker tolerates head movements within a range of 44 × 22 × 30 cm and the T120 within a range of 30 × 22 × 30 cm. Both systems further compensate for robust head movements, which typically result in a temporary accuracy error of less than approximately 1°, and have a 100-ms recovery time (on average) to full tracking ability after movement disruption. These are the average values over the screen measured at a distance of 63 cm in a controlled office environment for adults without a chin rest (Tobii Technology AB, 2010). Of the final sample included in analysis, 147 infants were tested on the T60 system and the remaining 26 infants on the T120 system. There were no significant differences in ages tested [t(171) = 0.595, p = .553, d = 0.13], number of trials presented [t(171) = 0.849, p = .397, d = 0.16], or total looking time for happy [t(171) = 0.986, p = .325, d = 0.23], angry [t(171) = 0.002, p = .999, d = 0.001], or fearful [t(171) = 0.655, p = .513, d = 0.15] emotion faces between the two eye-tracking systems.

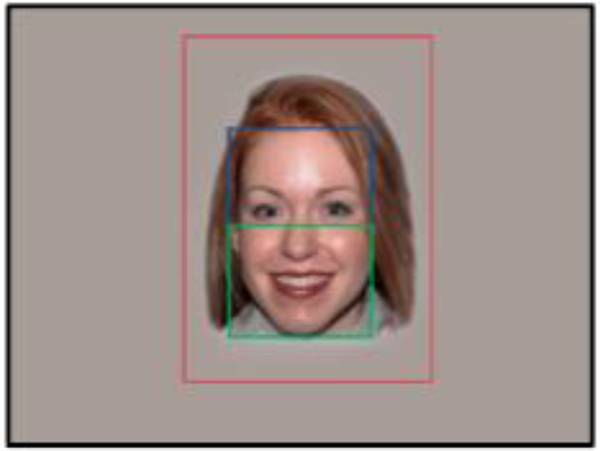

For each stimulus, areas of interest (AOIs) that delineated the forehead and eyes (top), the mouth and chin (bottom) and outer face contour (image contour excluding inner face regions) were traced onto the stimuli using transparent objects in E-Prime (see Figure 2 for an example). AOIs differ slightly between models and emotions due to subtle differences in stimuli face sizes. The ranges of AOI dimensions are as follows: Top: 332.80 (width) by 204.80 (height) to 448 (width) by 307.20 (height) in pixels; Bottom: 332.80 (width) by 204.80 (height) to 448 (width) by 307.20 (height) in pixels; Image: 652.80 (width) by 890.88 (height) in pixels, minus the inner AOI areas. The outer face contour was added to obtain a measure of how much time the infant spent attending to the screen, but not to specific regions of the face. The following analyses were done using these AOIs.

Fig 2.

Example of stimulus image with AOIs drawn. The blue rectangle indicates the top portion of the face, the green rectangle indicates the bottom portion of the face, and the red rectangle indicates the image contour.

Gaze data files were run through a custom-made Python script (Python Software Foundation, http://www.python.org/), which extracted gaze information on each trial. Gaze coordinates and AOI location were recorded for each sample during each trial, and sample durations were then added together to get a measure of total looking time per trial in each AOI, which was then averaged across trials for each emotion. Data were then processed using SAS software version 9.3 for Windows (Copyright 2002–2010 by SAS Institute Inc., Cary, NC, USA). Trials were included if gaze information was recorded for at least 500 ms of the 1000 ms total image time. The number of trials excluded from analyses for having less than 500 ms of gaze information did not differ by age: F(2,170) = .143, p = .867 (M = 41.3, SD = 21.57 for 5-month-olds; M = 40.58, SD = 17.39 for 7-month-olds; M = 39.4, SD = 19.04 for 12-month-olds). Subjects were included in further analysis if they retained at least 5 included trials per emotional category. Variables were examined for skewness in order to ensure a normal distribution.
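The per-trial aggregation described above can be sketched as follows. This is a minimal illustration and not the authors' script: the function names, the rectangle format `(left, top, width, height)`, and the AOI dictionary keys are all hypothetical, and the sketch assumes that "gaze information was recorded" means a sample with valid coordinates, whether or not it falls on the image. Only the 60 Hz sampling rate and the 500 ms inclusion threshold come from the text.

```python
from collections import defaultdict
from statistics import mean

SAMPLE_HZ = 60        # sampling rate stated in the text
MIN_GAZE_MS = 500     # trial inclusion threshold stated in the text
AOI_NAMES = ("top", "bottom", "contour")


def in_rect(point, rect):
    """True if (x, y) lies inside rect = (left, top, width, height)."""
    x, y = point
    left, top, width, height = rect
    return left <= x < left + width and top <= y < top + height


def classify(sample, aois):
    """Map one gaze sample to an AOI name; None if missing or off the image."""
    if sample is None:
        return None
    for name in ("top", "bottom"):          # inner AOIs take precedence
        if in_rect(sample, aois[name]):
            return name
    # Contour = on the image but outside both inner AOIs.
    return "contour" if in_rect(sample, aois["image"]) else None


def trial_looking_times(samples, aois):
    """Looking time (ms) per AOI for one trial, or None if the trial is excluded."""
    recorded = sum(s is not None for s in samples)
    if recorded * 1000 / SAMPLE_HZ < MIN_GAZE_MS:
        return None                          # under 500 ms of gaze data
    counts = dict.fromkeys(AOI_NAMES, 0)
    for s in samples:
        hit = classify(s, aois)
        if hit is not None:
            counts[hit] += 1
    return {name: n * 1000 / SAMPLE_HZ for name, n in counts.items()}


def mean_by_emotion(trials, aois):
    """Average per-trial AOI looking times across included trials, per emotion."""
    per_emotion = defaultdict(list)
    for emotion, samples in trials:
        times = trial_looking_times(samples, aois)
        if times is not None:
            per_emotion[emotion].append(times)
    return {
        emotion: {name: mean(t[name] for t in rows) for name in AOI_NAMES}
        for emotion, rows in per_emotion.items()
    }
```

Samples are counted as integers and converted to milliseconds only at the end, which avoids accumulating floating-point error when summing many 16.67 ms increments.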

3. Results

Correlations and descriptive statistics for variables of interest are presented in Tables 1–4. The primary analysis examined the distribution of looking time using a between- (3 age group) and within- (3 emotion and 3 area of interest) analysis of variance design. Age group was defined as 5, 7 and 12 months; emotion was expressed as happy, anger and fear; and AOI was defined as looking time top (LTT), looking time bottom (LTB) and image contour. The model included main effects for each factor along with all possible interactions. Post hoc comparisons were conducted using Bonferroni adjustments to account for type I error. The following results are described separately for each AOI (section 3.1), emotion (section 3.2), along with respective interactions with age group, and the interaction between AOI and emotion (section 3.3). There was no main effect of age [F(2,170) = 0.73, p = 0.49, ηp2 = .01] and therefore we chose not to report this effect separately. There was no significant three-way interaction between age, AOI, and emotion [F(8,680) = .49, p = .86, ηp2 = .01].

Table 1:

Correlations and Descriptive Statistics: All Ages

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. Happy, Top | 1 | | | | | | | | | |
| 2. Happy, Bottom | −.86** | 1 | | | | | | | | |
| 3. Happy, Contour | −.18* | −.31** | 1 | | | | | | | |
| 4. Angry, Top | .93** | −.77** | −.23** | 1 | | | | | | |
| 5. Angry, Bottom | −.79** | .94** | −.32** | −.82** | 1 | | | | | |
| 6. Angry, Contour | −.18* | −.30** | .96** | −.26** | −.30** | 1 | | | | |
| 7. Fear, Top | .94* | −.79** | −.21** | .92** | −.77** | −.21** | 1 | | | |
| 8. Fear, Bottom | −.80** | .94** | −.32** | −.76** | .93** | −.31** | −.84** | 1 | | |
| 9. Fear, Contour | −.18* | −.30** | .97** | −.24** | −.30** | .97** | −.22** | −.32** | 1 | |
| 10. Age Group | −.08 | .21** | −.25** | −.10 | .24** | −.25** | −.06 | .21** | −.27** | 1 |
| M | 323.82 | 531.02 | 45.38 | 370.76 | 484.52 | 46.40 | 361.90 | 502.54 | 44.95 | |
| SD | 236.55 | 244.70 | 112.34 | 224.68 | 230.58 | 118.66 | 237.41 | 243.60 | 123.80 | |

Note: ** indicates significance at the .001 level and * at the .05 level.

Table 4:

Correlations and Descriptive Statistics: 12 months

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| 1. Happy, Top | 1 | | | | | | | | |
| 2. Happy, Bottom | −.97** | 1 | | | | | | | |
| 3. Happy, Contour | −.13 | −.01 | 1 | | | | | | |
| 4. Angry, Top | .91** | −.88** | −.11 | 1 | | | | | |
| 5. Angry, Bottom | −.89** | .91** | .00 | −.96** | 1 | | | | |
| 6. Angry, Contour | .03 | −.13 | .53** | −.09 | −.06 | 1 | | | |
| 7. Fear, Top | .94** | −.91** | −.18 | .92** | −.89** | .01 | 1 | | |
| 8. Fear, Bottom | −.92** | .93** | .09 | −.91** | .92** | −.08 | −.98** | 1 | |
| 9. Fear, Contour | −.07 | −.03 | .64** | −.09 | .03 | .52** | −.09 | .01 | 1 |
| M | 302.29 | 582.48 | 19.71 | 345.71 | 540.50 | 19.92 | 346.71 | 550.47 | 14.92 |
| SD | 239.94 | 235.91 | 21.02 | 212.74 | 218.09 | 17.51 | 238.05 | 237.03 | 16.33 |

Note: ** indicates significance at the .01 level.

3.1. Looking time by area of interest (AOI)

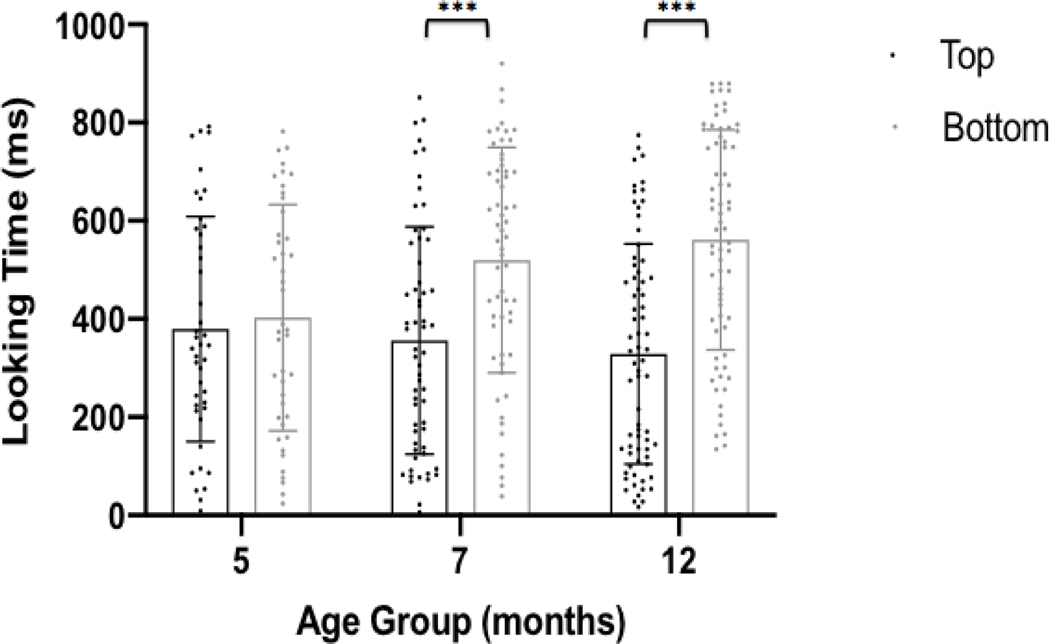

There was a main effect for AOI [F(2, 340) = 145.77, p < 0.001, ηp2 = .46], which was qualified by an AOI x age interaction [F(2, 340) = 4.33, p = 0.007, ηp2 = .05]. Paired t tests revealed that for 5-month-olds, there were no significant differences in looking time between the top (M = 379.73 ms, SD = 229.20 ms) and bottom of faces (M = 402.53 ms, SD = 230.56 ms), t(42) = 0.38, p = .71, d = 0.09. Both the top (t(42) = 4.47, p < .001, d = 1.18) and bottom (t(42) = 4.90, p < .001, d = 1.28) recruited longer looking time in the 5-month-olds than the image contour (M = 114.66 ms, SD = 217.35 ms). For 7-month-olds, there was significantly more bottom (M = 519.78 ms, SD = 229.52 ms) than top looking (M = 356.42 ms, SD = 231.55 ms), t(59) = 2.77, p = .008, d = 0.71. Both the top (t(59) = 10.83, p < .001, d = 1.98) and bottom (t(59) = 16.02, p < .001, d = 2.99) recruited longer looking time in the 7-month-olds than the image contour (M = 28.02 ms, SD = 36.80 ms). Finally, for 12-month-olds, there was significantly more bottom (M = 557.82 ms, SD = 223.85 ms) than top looking (M = 331.57 ms, SD = 224.21 ms), t(69) = 4.25, p < .001, d = 1.01. Both the top (t(69) = 11.59, p < .001, d = 1.97) and bottom (t(69) = 20.09, p < .001, d = 3.40) recruited longer looking time in the 12-month-olds than the image contour (M = 18.18 ms, SD = 15.42 ms) (see Figure 3).

Fig 3.

Looking time by AOI and age. Only looking time to the main AOIs of interest, the top and bottom, are presented here for clarity. Each dot represents an individual data point, error bars represent the SD, and height of bars represents the mean value of each category.

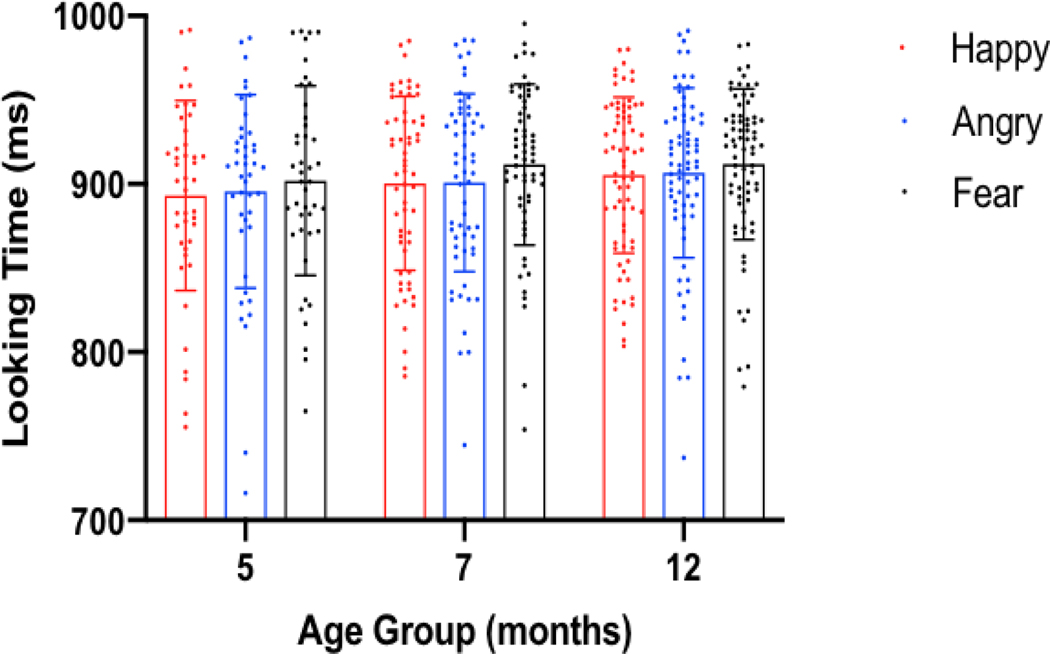

3.2. Looking time by emotion

There was a main effect of emotion [F(2, 340) = 5.33, p < 0.01, ηp2 = .03]. Paired t tests revealed that fear (M = 909.39 ms, SD = 48.96 ms) resulted in longer looking times when compared to angry (M = 901.68 ms, SD = 53.05 ms), t(172) = 2.64, p = .009, d = 0.15, and happy faces (M = 900.23 ms, SD = 50.71 ms), t(172) = 3.14, p = .002, d = 0.18. Looking time to angry and happy faces did not differ, t(172) = 0.48, p = .63, d = 0.03. Contrary to the AOI effects, the emotion x age interaction was not statistically significant [F(4, 340) = 0.16, p = 0.96, ηp2 = .002] (see Figure 4).

Fig 4.

Looking time by emotion (happy, angry and fear) and age. Each dot represents an individual data point, error bars represent the SD, and height of bars represents the mean value of each category.
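The effect sizes reported in Section 3.2 can be reproduced from the reported means and standard deviations. The sketch below assumes that Cohen's d was computed as the absolute mean difference divided by the root mean square of the two standard deviations. The text does not state the formula, so this is an inference from the reported values, and the function name is hypothetical.

```python
from math import sqrt


def cohens_d_from_summary(m1, sd1, m2, sd2):
    """Cohen's d from two means and SDs (assumed formula, not stated in the text).

    The standardizer is the root mean square of the two standard deviations.
    """
    pooled_sd = sqrt((sd1 ** 2 + sd2 ** 2) / 2)
    return abs(m1 - m2) / pooled_sd


# Means and SDs (ms) as reported in Section 3.2.
fear = (909.39, 48.96)
angry = (901.68, 53.05)
happy = (900.23, 50.71)

print(round(cohens_d_from_summary(*fear, *angry), 2))   # 0.15
print(round(cohens_d_from_summary(*fear, *happy), 2))   # 0.18
print(round(cohens_d_from_summary(*angry, *happy), 2))  # 0.03
```

Under this assumption, the computed values agree with the reported d = 0.15, 0.18, and 0.03 for the three pairwise comparisons.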

3.3. Looking time by area of interest (AOI) and emotion

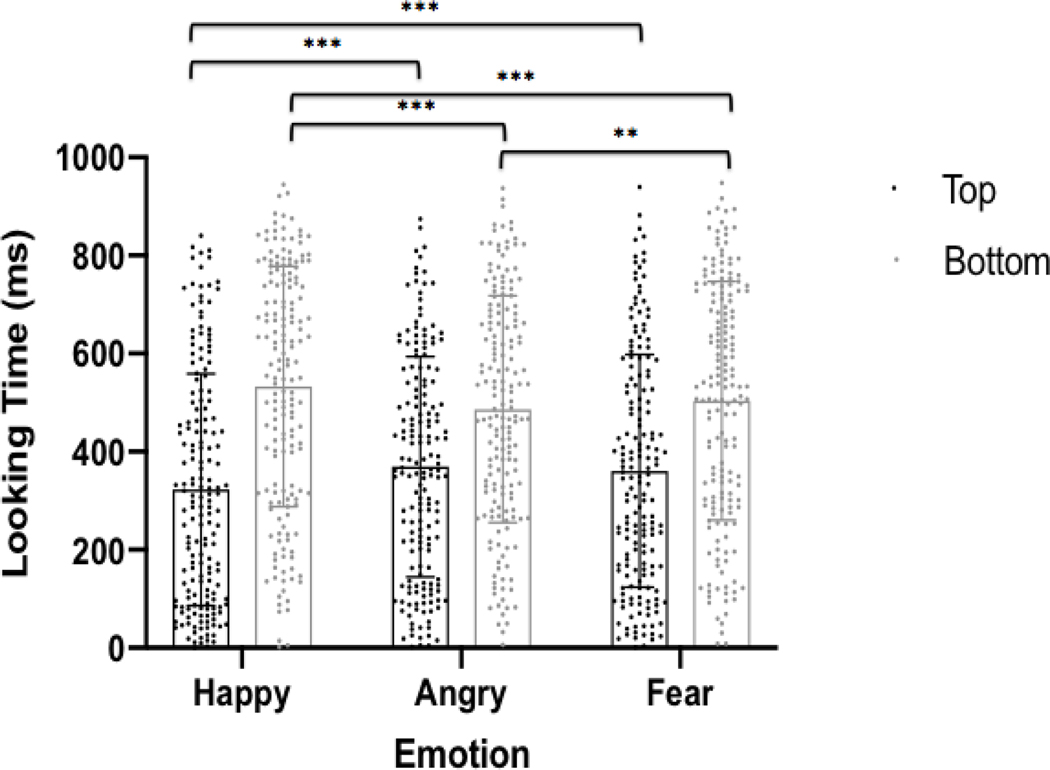

Analyses revealed an emotion x AOI interaction [F(4, 680) = 25.21, p < 0.001, ηp2 = .13]. Paired t tests revealed greater looking times for the top of the face for fear (M = 361.90 ms, SD = 237.41 ms) compared to happy (M = 323.82 ms, SD = 236.55 ms), t(172) = 6.33, p < .001, d = 0.16. Angry faces also recruited more top looking (M = 370.76 ms, SD = 224.68 ms) compared to happy, t(172) = 6.99, p < .001, d = 0.20. There were no significant differences in looking to the top of the face between angry and fear, t(172) = 1.26, p = .21, d = 0.04. Further, infants looked longer at the bottom of happy faces (M = 531.02 ms, SD = 244.70 ms) compared to angry faces (M = 484.52 ms, SD = 230.58 ms), t(172) = 7.13, p < .001, d = 0.20, and fear faces (M = 502.54 ms, SD = 243.61 ms), t(172) = 4.57, p < .001, d = 0.12. Infants also looked longer at the bottom of fear faces compared to angry faces, t(172) = 2.62, p = .01, d = 0.08. Finally, there were no significant differences in how infants looked at the image contour for happy faces (M = 45.38 ms, SD = 112.34 ms) compared to angry (M = 46.40 ms, SD = 118.66 ms), t(172) = 0.42, p = .68, d = 0.01, or fear faces (M = 44.95 ms, SD = 123.80 ms), t(172) = 0.19, p = .85, d = 0.00. There were also no differences in image contour looking between angry and fear faces, t(172) = 0.66, p = .51, d = 0.01 (see Figure 5).

Fig 5.

AOI x emotion interaction. Only looking time to the main AOIs of interest, the top and bottom, are presented here for clarity. Each dot represents an individual data point, error bars represent the SD, and height of bars represents the mean value of each category.

4. Discussion

In the current study, we examined how infants discriminate between positive and negative facial expressions. This research is unique in its assessment of eye tracking across participants in their first year of life and looking times for specific regions of the face and contributes to our understanding of the development of perceptual biases towards particular facial emotions (e.g., fear). In contrast to previous findings, here we report that at five months, infants look longer at fearful faces than either angry or happy faces. In addition, we also found that for negative, as opposed to positive emotions, the top part of the face elicits longer looking times.

Infants’ ability to discriminate between facial expressions seems to be present in the first months of life. There are reports on face discrimination as early as 36 hours after infants are born (Field et al. 1982; Farroni et al. 2007), and the literature suggests that in the first months, infants have a bias toward positive emotions (LaBarbera et al. 1976). At approximately seven months of age, the infants seem to shift their attention and begin to exhibit a looking preference for fearful faces (Nelson & Dolgin, 1985; Nelson et al., 1979). This finding has been replicated in many studies, but there is a lack of research on the developmental trajectory for face processing in infants. Our study was designed with this in mind and was conceptualized to provide developmental information through utilizing a serial cross-sectional design.

We observed that across all ages, infants are likely to direct more attention to negative emotions, specifically fearful expressions. This bias is in line with previous research on seven-month-olds, which has consistently found that these infants are less likely to disengage their attention from fearful faces, compared with happy and neutral ones (Nelson & Dolgin, 1985; Nelson et al., 1979). This attentional bias is well documented in both behavioral and electrophysiological studies (Grossman et al., 2011; Kotsoni et al., 2001; Leppänen et al., 2007; Nelson & de Haan, 1996). Although fewer studies have examined older infants, the findings suggest that the bias towards fearful faces is maintained at eight, nine, and twelve months of age (Hoehl & Striano, 2010; Leppänen et al., 2018; LoBue & DeLoache, 2010; Nakagawa & Sukigara, 2012; Perez-Edgar et al., 2017), which is also consistent with our findings. The twelve-month-olds in our study also looked longer at fearful, compared with angry and happy, faces. Peltola and colleagues (2013) failed to observe this effect in eleven-month-old infants. However, they used a different paradigm with fewer models and emotional categories, which may have contributed to the lack of effect in their study.

Another possible explanation for our results is that infants processed happy and angry emotions more rapidly than fear because happy and angry emotions were accompanied by the ‘most appropriate’ gaze direction (i.e., a direct gaze); it took longer for these infants to process the fearful expression, as it was also displayed with a direct gaze, which might not enhance the perception of that emotion (Adams & Kleck 2003, 2005; Rigato, Menon, Farroni, & Johnson, 2013). According to the shared signal hypothesis, the perception of approach-oriented emotional expressions (i.e., happy and angry) is enhanced when accompanied by a direct gaze, while the perception of avoidance-oriented emotional expressions (i.e., fear and sadness) is enhanced when accompanied by an averted gaze (Adams & Kleck 2003, 2005; Rigato, Menon, Farroni, & Johnson, 2013).

Our findings pertaining to five-month-olds showed that infants at this age do not differ from seven- and twelve-month-olds regarding the bias toward fearful faces. Previous research suggests that the bias toward fearful faces emerges between five and seven months of age, but most studies did not uncover differences in looking time at five months (Bornstein & Arterberry, 2003; Peltola, Leppänen, Maki & Hietanen, 2009). There is, however, a recent study that found that at five months of age, infants take longer to disengage from fearful faces compared with happy and neutral faces (Heck, Hock, White, Jubran & Bhatt, 2016) when the stimuli presented are dynamic rather than static. Electrophysiological data also show that at five months of age, infants already show a pronounced deceleration in heart rate when looking at fearful, compared to happy and neutral, faces. This suggests that at just five months, infants already present an automatic defense-related reflex that allows them to extract information regarding harmful stimuli in the environment (Peltola, Hietanen, Forssman, & Leppänen, 2013). The difference in results between our study and Peltola et al. (2013) might be attributable to methodological differences. Whereas we used a visual preference paradigm, Peltola and colleagues used a gap overlap paradigm, which has been reported as more challenging for infants younger than six months (Frick, Colombo, & Saxon, 1999). Although there is evidence that four-month-olds perform similarly to seven-month-olds in an overlap paradigm when the stimulus is non-emotional (Blaga & Colombo, 2006), it could be that for emotional stimuli, the task is more challenging. Another important difference between the two studies is that infants included in the work from Peltola et al. were presented with facial expressions posed by two female models. This design feature improved the internal validity of the study; however, it does limit the ability to generalize the findings across different facial shapes. In our study, each emotion was represented by five different female facial expressions to confirm that the associations were independent of the face model. More studies, including those focusing on infants younger than six months of age, are needed to better understand the developmental trajectory for face processing. Further, future research should consider how emotional face processing in infancy may differ depending on the race, age, and gender of the stimuli used, as research has demonstrated that infants show prioritized processing of facial categories with which they have the most experience (Safar, Kusec & Moulson, 2017; Vogel et al., 2012; see Scherf & Scott, 2012 and Sugden & Marquis, 2017 for reviews).

Our study also examined the distribution of looking time across areas of interest to which infants attended. We found that overall, seven- and twelve-month-olds spent more time looking at the bottom of faces compared with five-month-olds, who looked more at the top. This could be because, at around six months of age, infants seem to shift their attention from the eye region to the mouth region, looking for cues related to speech (Lewkowicz & Hansen-Tift, 2012; Tenenbaum, Shah, Sobel, Malle & Morgan, 2012). However, there were some interesting patterns when considering the interplay between area of interest and emotion. We found that negative emotions elicited greater looking times for the top of the face than did positive emotions, a finding that has been observed in adults (Hunnius et al., 2011), though some studies in infants have failed to replicate this finding (Hunnius et al., 2011; Peltola et al., 2009), and one does seem to find an effect (Vanderwert et al., 2015). In Vanderwert et al.’s study (2015), infants who spent more time looking to the eye region of human faces showing fearful or angry expressions exhibited greater amplitudes in specific ERP components, demonstrating that the eye region is important in processing negative emotions. In other studies, infants showed similar looking times for the top of the face, independent of emotional expressions (Hunnius et al., 2011; Peltola et al., 2009). The difference between our results and others might have to do with the delineation of areas of interest (AOIs); whereas we had only two main AOIs (the forehead and eyes, and the mouth and chin), Hunnius et al. and Peltola et al. included more AOIs, each of smaller size, potentially explaining the differences in the results. We also did not find differences in age group responses, suggesting that the preference to look at the eye region of the face is already present by five months of age, similar to older children and adults (Schyns, Petro, & Smith, 2007). Our results are consistent with Hunnius et al. (2011), who found no differences between four- and seven-month-olds and adults in dwell times on the eye and nose area. Future research should carefully consider the selection of AOIs, in terms of both size and number.

This study is not without limitations. We used a serial cross-sectional design to infer age trends, making it difficult to derive a “true” developmental story. In addition, it is possible that the associations between emotion and age were attenuated by confounding factors; for example, we could not control for individual differences in the exposure to emotional expressions (e.g., by caregivers), which is a variable known to influence how infants code emotions (Taylor-Colls & Fearon, 2015). Further, given the short stimulus durations used in this study (1000 ms), it is difficult to compare our findings with those of others (e.g., Hunnius et al., 2011). Eye-tracking data in the current study were collected as part of a larger EEG/ERP paradigm where shorter stimulus presentation was more appropriate. We believe that data collected during this shorter stimulus presentation time still reflect underlying emotional face processing, but differences in stimulus presentation time and paradigm design should be taken into account when interpreting results. Additionally, we did not ask caregivers to report on infant mobility. Given the motivating theory that threat-related attention may emerge as infants become more mobile (Leppänen & Nelson, 2009), this could be an important variable to include in future research. Finally, two negative emotion conditions and one positive emotion condition were used in the current study. The imbalance of emotional valence in stimuli presented should also be considered when interpreting findings.

The strengths of our study include the number of infants assessed along with the rigorous and precise quantification of looking time. Thus, our results provide suggestive evidence of emotion processing across different developmental stages and add clarity to the expected trends documented in the existing literature.

Here we provide evidence, using eye-tracking methods, that the attentional bias for fearful expressions is present at five months of age, and is maintained in seven- and twelve-month-old infants. This adds to models put forward by Leppänen, Nelson, and Peltola (2009, 2012) suggesting that the bias to threat stimuli might be present before the second half of the first year. Future studies addressing the developmental course of face processing that use rigorous measurements and similar paradigms are needed to further understand how face processing evolves during the first year of life. Moreover, it would be interesting to examine how individual differences can help understand the developmental course of emotion processing in the first year of life.

Table 2:

Correlations and Descriptive Statistics: 5 months

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| 1. Happy, Top | 1 | | | | | | | | |
| 2. Happy, Bottom | −.55** | 1 | | | | | | | |
| 3. Happy, Contour | −.45* | −.47** | 1 | | | | | | |
| 4. Angry, Top | .95** | −.46** | −.52** | 1 | | | | | |
| 5. Angry, Bottom | −.51** | .96** | −.47** | −.47** | 1 | | | | |
| 6. Angry, Contour | −.49** | −.42** | .98** | −.58** | −.42** | 1 | | | |
| 7. Fear, Top | .95** | −.46** | −.49** | .94** | −.45** | −.53** | 1 | | |
| 8. Fear, Bottom | −.46** | .97** | −.53** | −.39** | .95** | −.47** | −.44** | 1 | |
| 9. Fear, Contour | −.45** | −.46** | .99** | −.53** | −.45** | .98** | −.51** | −.52** | 1 |
| M | 354.51 | 430.13 | 108.51 | 401.71 | 377.76 | 116.21 | 382.98 | 399.71 | 119.27 |
| SD | 231.28 | 239.84 | 204.42 | 243.64 | 224.74 | 221.22 | 225.17 | 236.39 | 230.71 |

Note: ** indicates significance at the .01 level.

Table 3:

Correlations and Descriptive Statistics: 7 months

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| 1. Happy, Top | 1 | | | | | | | | |
| 2. Happy, Bottom | −.96** | 1 | | | | | | | |
| 3. Happy, Contour | −.02 | −.17 | 1 | | | | | | |
| 4. Angry, Top | .94** | −.91** | −.04 | 1 | | | | | |
| 5. Angry, Bottom | −.91** | .95** | −.16 | −.96** | 1 | | | | |
| 6. Angry, Contour | .07 | −.24 | .85** | .05 | −.21 | 1 | | | |
| 7. Fear, Top | .95** | −.92** | −.02 | .92** | −.89** | .02 | 1 | | |
| 8. Fear, Bottom | −.93** | .94** | −.08 | −.89** | .91** | −.14 | −.97** | 1 | |
| 9. Fear, Contour | −.06 | −.11 | .84** | −.06 | −.07 | .85** | −.11 | −.04 | 1 |
| M | 326.95 | 543.29 | 30.09 | 377.79 | 495.72 | 27.26 | 364.52 | 520.34 | 26.71 |
| SD | 237.63 | 240.44 | 50.99 | 224.68 | 226.42 | 33.01 | 247.68 | 238.25 | 32.35 |

Note: ** indicates significance at the .01 level.

Acknowledgements:

We thank the infants and their families for their participation in the Emotion Project. This work was supported by the National Institutes of Health grant #MH078829 [awarded to CAN]. We would also like to acknowledge the Emotion Project team past and present - Hannah Behrendt, Michelle Bosquet, Lindsay Bowman, Dana Bullister, Julia Cataldo, Perry Dinardo, Anna Fasman, Kristina Joas, Mir Lim, Jukka Leppänen, Ruqian (Daisy) Ma, Lina Montoya, Halie Olsen, Katherine Perdue, Elena Piccardi, Zarin Rahman, Miranda Ravicz, Emma Satterthwaite-Muresianu, Anisha Shenai, Ross Vanderwert, and Anna Zhou - for their assistance in data acquisition, data processing, and relevant discussion.

4. References

- Adams R, & Kleck R. (2003). Perceived Gaze Direction and the Processing of Facial Displays of Emotion. Psychological Science, 14(6), 644–647. doi: 10.1046/j.0956-7976.2003.psci_1479.x

- Adams R, & Kleck R. (2005). Effects of Direct and Averted Gaze on the Perception of Facially Communicated Emotion. Emotion, 5(1), 3–11. doi: 10.1037/1528-3542.5.1.3

- Blaga OM, & Colombo J. (2006). Visual processing and infant ocular latencies in the overlap paradigm. Developmental Psychology, 42, 1069–76. doi: 10.1037/0012-1649.42.6.1069

- Bentin S, Golland Y, Flevaris A, Robertson L, & Moscovitch M. (2006). Processing the Trees and the Forest during Initial Stages of Face Perception: Electrophysiological Evidence. Journal of Cognitive Neuroscience, 18(8), 1406–1421. doi: 10.1162/jocn.2006.18.8.1406

- Bornstein MH, & Arterberry ME (2003). Recognition, discrimination and categorization of smiling by 5-month-old infants. Developmental Science, 6, 585–599. doi: 10.1111/1467-7687.00314

- Farroni T, Menon E, Rigato S, & Johnson MH (2007). The perception of facial expressions in newborns. The European Journal of Developmental Psychology, 4, 2–13. doi: 10.1080/17405620601046832

- Field TM, Woodson R, Greenberg R, & Cohen D. (1982). Discrimination and imitation of facial expressions by neonates. Science, 218, 179–181. doi: 10.1126/science.7123230

- Frick JE, Colombo J, & Saxon TF (1999). Individual and developmental differences in disengagement of fixation in early infancy. Child Development, 70(3), 537–548. doi: 10.1111/1467-8624.00039

- Gredeback G, Johnson S, & von Hofsten C (2010). Eye tracking in infancy research. Developmental Neuropsychology, 35, 1–19. doi: 10.1080/87565640903325758

- Grossmann T, Johnson MH, Vaish A, Hughes DA, Quinque D, Stoneking M, & Friederici AD (2011). Genetic and neural dissociation of individual responses to emotional expressions in human infants. Developmental Cognitive Neuroscience, 1, 57–66. doi: 10.1016/j.dcn.2010.07.001

- Happé F, & Frith U. (2014). Annual Research Review: Towards a developmental neuroscience of atypical social cognition. Journal of Child Psychology and Psychiatry, 55(6), 553–577. doi: 10.1111/jcpp.12162

- Heck A, Hock A, White H, Jubran R, & Bhatt RS (2016). The development of attention to dynamic facial emotions. Journal of Experimental Child Psychology, 147, 100–10. doi: 10.1016/j.jecp.2016.03.005

- Hoehl S, & Striano T. (2010). The development of emotional face and eye gaze processing. Developmental Science, 13, 813–825. doi: 10.1111/j.1467-7687.2009.00944.x

- Hunnius S, de Wit TCJ, Vrins S, & von Hofsten C. (2011). Facing threat: Infants’ and adults’ visual scanning of faces with neutral, happy, sad, angry, and fearful emotional expressions. Cognition and Emotion, 25, 193–205. doi: 10.1080/15298861003771189

- Johnson MH, Griffin R, Csibra G, Halit H, Farroni T, De Haan M, ... & Richards J. (2005). The emergence of the social brain network: Evidence from typical and atypical development. Development and Psychopathology, 17(3), 599–619. doi: 10.1017/S0954579405050297

- Kobiella A, Grossmann T, Reid V, & Striano T. (2007). The discrimination of angry and fearful facial expressions in 7-month-old infants: An event-related potential study. Cognition and Emotion, 22(1), 134–146. doi: 10.1080/02699930701394256

- Kotsoni E, de Haan M, & Johnson MH (2001). Categorical perception of facial expressions by 7-month-old infants. Perception, 30(9), 1115–1125. doi: 10.1068/p3155

- LaBarbera JD, Izard CE, Vietze P, & Parisi SA (1976). Four- and six-month-old infants’ visual responses to joy, anger, and neutral expressions. Child Development, 47, 535–538. doi: 10.2307/1128816

- Leppänen JM, Cataldo JK, Enlow MB, & Nelson CA (2018). Early development of attention to threat-related facial expressions. PLoS ONE, 13(5). doi: 10.1371/journal.pone.0197424

- Leppänen JM, Moulson MC, Vogel-Farley VK, & Nelson CA (2007). An ERP study of emotional face processing in the adult and infant brain. Child Development, 78(1), 232–245. doi: 10.1111/j.1467-8624.2007.00994.x

- Leppänen JM, & Nelson CA (2009). Tuning the developing brain to social signals of emotion. Nature Reviews Neuroscience, 10(1), 37–47. doi: 10.1038/nrn2554

- Leppänen JM, & Nelson CA (2012). Early development of fear processing. Current Directions in Psychological Science, 21(3), 200–204. doi: 10.1177/0963721411435841

- Leppänen JM, & Nelson CA (2013). The emergence of perceptual preferences for social signals of emotion. In Banaji M. & Gelman S. (Eds.), The Development of Social Cognition (pp. 161–164). Oxford, UK: Oxford University Press.

- Lewkowicz DJ, & Hansen-Tift AM (2012). Infants deploy selective attention to the mouth of a talking face when learning speech. Proceedings of the National Academy of Sciences of the United States of America, 109, 1431–6. doi: 10.1073/pnas.1114783109

- LoBue V, & DeLoache JS (2010). Superior detection of threat-relevant stimuli in infancy. Developmental Science, 13, 221–228. doi: 10.1111/j.1467-7687.2009.00872.x

- Nakagawa A, & Sukigara M. (2012). Difficulty in disengaging from threat and temperamental negative affectivity in early life: A longitudinal study of infants aged 12–36 months. Behavioral and Brain Functions, 40, 1–8. doi: 10.1186/1744-9081-8-40

- Nelson CA, Morse PA, & Leavitt LA (1979). Recognition of facial expressions by seven-month-old infants. Child Development, 50, 1239–1242. doi: 10.2307/1129358

- Nelson CA, & Dolgin KG (1985). The generalized discrimination of facial expressions by seven-month-old infants. Child Development, 56, 58–61. doi: 10.2307/1130173

- Nelson CA, & De Haan M. (1996). Neural correlates of infants’ visual responsiveness to facial expressions of emotion. Developmental Psychobiology, 29, 577–595.

- Peltola MJ, Leppänen JM, Vogel-Farley VK, Hietanen JK, & Nelson CA (2009). Fearful faces but not fearful eyes alone delay attention disengagement in 7-month-old infants. Emotion, 9, 560–565. doi: 10.1037/a0015806

- Peltola MJ, Hietanen JK, Forssman L, & Leppänen JM (2013). The emergence and stability of the attentional bias to fearful faces in infancy. Infancy, 18, 905–926. doi: 10.1111/infa.12013

- Peltola M, Yrttiaho S, & Leppänen J. (2018). Infants’ attention bias to faces as an early marker of social development. Developmental Science, 21(6), e12687. doi: 10.1111/desc.12687

- Pérez-Edgar K, Morales S, LoBue V, Taber-Thomas B, Allen E, Brown K, & Buss K. (2017). The impact of negative affect on attention patterns to threat across the first 2 years of life. Developmental Psychology, 53(12), 2219–2232. doi: 10.1037/dev0000408

- Rigato S, Menon E, Farroni T, & Johnson M. (2011). The shared signal hypothesis: Effects of emotion-gaze congruency in infant and adult visual preferences. British Journal of Developmental Psychology, 31(1), 15–29. doi: 10.1111/j.2044-835x.2011.02069.x

- Safar K, Kusec A, & Moulson MC (2017). Face Experience and the Attentional Bias for Fearful Expressions in 6- and 9-Month-Old Infants. Frontiers in Psychology, 8. doi: 10.3389/fpsyg.2017.01575

- Scherf K, & Scott L. (2012). Connecting developmental trajectories: Biases in face processing from infancy to adulthood. Developmental Psychobiology, 54(6), 643–663. doi: 10.1002/dev.21013

- Schyns PG, Petro LS, & Smith ML (2007). Dynamics of Visual Information Integration in the Brain for Categorizing Facial Expressions. Current Biology, 17, 1580–1585. doi: 10.1016/j.cub.2007.08.048

- Sugden NA, & Marquis AR (2017). Meta-analytic review of the development of face discrimination in infancy: Face race, face gender, infant age, and methodology moderate face discrimination. Psychological Bulletin, 143(11), 1201–1244. doi: 10.1037/bul0000116

- Taylor-Colls S, & Fearon P. (2015). The effects of parental behavior on infants’ neural processing of emotion expressions. Child Development, 86, 877–888. doi: 10.1111/cdev.12348

- Tenenbaum EJ, Shah RJ, Sobel DM, Malle BF, & Morgan JL (2013). Increased Focus on the Mouth Among Infants in the First Year of Life: A Longitudinal Eye Tracking Study. Infancy, 18(4), 534–553.

- Tottenham N, Tanaka J, Leon A, McCarry T, Nurse M, Hare T, et al. (2009). The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research, 168(3), 242–249. doi: 10.1016/j.psychres.2008.05.006

- Vanderwert R, Westerlund A, Montoya L, McCormick S, Miguel H, & Nelson C. (2014). Looking to the eyes influences the processing of emotion on face-sensitive event-related potentials in 7-month-old infants. Developmental Neurobiology, 75(10), 1154–1163. doi: 10.1002/dneu.22204

- Vogel M, Monesson A, & Scott L. (2012). Building biases in infancy: the influence of race on face and voice emotion matching. Developmental Science, 15(3), 359–372. doi: 10.1111/j.1467-7687.

- Xie W, McCormick SA, Westerlund A, Bowman LC, & Nelson CA (2018). Neural correlates of facial emotion processing in infancy. Developmental Science, e12758. doi: 10.1111/desc.12758

- Yang E, Zald D, & Blake R. (2007). Fearful expressions gain preferential access to awareness during continuous flash suppression. Emotion, 7(4), 882–886. doi: 10.1037/1528-3542.7.4.882