Abstract

We investigate the box-counting dimension of the image of a set under a random multiplicative cascade function f. The corresponding result for Hausdorff dimension was established by Benjamini and Schramm in the context of random geometry, and for sufficiently regular sets the same formula holds for the box-counting dimension. However, we show that this is far from true in general, and we derive an explicit formula of a very different nature for the almost sure box-counting dimension of the random image f(E) when the set E comprises a convergent sequence. In particular, the box-counting dimension of f(E) depends more subtly on E than just on its dimensions. We also obtain lower and upper bounds for the box-counting dimension of the random images for general sets E.

Introduction

The random multiplicative cascade is a well-studied random measure on the unit cube in d-dimensional Euclidean space. It originally arose in Mandelbrot’s study of turbulence [22] but has since been investigated in its own right, see e.g. [3–6, 14, 17, 19, 23]. In one dimension the measure may be constructed iteratively by repeatedly subdividing the unit interval into dyadic intervals and multiplying the length of each subinterval by an independent copy of a common positive random variable W with mean 1. The resulting measure can alternatively be described by its cumulative distribution function f, which may also be interpreted as a random metric by setting . The latter viewpoint was adopted as a model for quantum gravity by Benjamini and Schramm [8], who analysed how the Hausdorff dimension of a deterministic subset changes under the random metric or, equivalently, under the image map f with the Euclidean metric. They obtained an elegant formula for the almost sure Hausdorff dimension s of a set F with respect to the random metric in terms of the Hausdorff dimension d of F in the Euclidean metric and the moments of W:

$$2^{d} = \frac{2^{s}}{\mathbb{E}(W^{s})} \tag{1.1}$$

Further, when W has a log-normal distribution, they showed that the formula reduces to the famous KPZ equation, first established by Knizhnik, Polyakov, and Zamolodchikov [20], that links the dimensions of an object in deterministic and quantum gravity metrics. Barral et al. [5] removed some of the assumptions of Benjamini and Schramm, and Duplantier and Sheffield [11] studied the same phenomenon in another popular model of quantum gravity, Liouville quantum gravity. Duplantier and Sheffield show that a KPZ formula holds for the Euclidean expectation dimension, an “averaged” box-counting type dimension.

Dimension theory has a fruitful history as a tool in random geometry, see e.g. [1, 8, 10, 15, 21, 25]. Whilst much of the literature in random geometry considers Hausdorff dimension or other ‘regular’ scaling dimensions, box-counting dimensions have not been explored as thoroughly. This may be due in part to the more complicated geometric properties of box-counting dimensions, manifested, for instance, in their behaviour under projections, see [13].

One might hope that a formula analogous to (1.1) would also hold for the box-counting dimension of images of sets under the cascade function f. We investigate this question and find that this need not be the case for sets that are not sufficiently homogeneous. We give bounds on the box-counting dimensions of f(E) that are valid for general sets E, and then, in Theorems 1.11 and 1.12, give an exact formula for the box dimension of f(E), of a very different form from (1.1), for a large family of sets.

We remark that the study of dimensions of the images of sets under various random functions goes back a considerable time. For example, if $B_\alpha:\mathbb{R}\to\mathbb{R}$ is index-$\alpha$ fractional Brownian motion, then $\dim_H B_\alpha(E) = \min\{1, \dim_H E/\alpha\}$ almost surely, see Kahane [18]. On the other hand, the corresponding results for packing and box-counting dimensions are more subtle, depending on ‘dimension profiles’, as demonstrated by Xiao [26].

Notation and definitions

This section introduces random multiplicative cascade functions and dimensions along with the notation that we shall use. We will use finite and infinite words from the alphabet {0, 1} throughout. We write finite words as for with as the empty word, with , and for the infinite words. We combine words by juxtaposition, and write for the length of a finite word.

For let denote the dyadic interval

taking the rightmost intervals to be closed. We denote the set of such dyadic intervals of lengths by . Note that every interval of is the union of exactly two disjoint intervals in .

Underlying the random cascade construction is a random variable W, together with a tree of independent random variables, each having the distribution of W. We will assume throughout that W is positive and not almost surely constant, and that

| 1.2 |

Note implies for .

We differentiate between the subcritical regime when and the critical regime when . Unless otherwise noted, we assume the subcritical regime. Here, the length of the random image f([0, 1]) is given by

where |A| denotes the diameter of a set A, and with the (subcritical) random cascade measure. Comprehensive accounts of the properties of L can be found in [8] and [19], in particular the assumption that implies that L exists and almost surely and . Similarly, the length of the random image of the interval is given by

has the distribution of L, independently for for each fixed k. The random multiplicative cascade measure on [0, 1] is obtained by extension from the . Almost surely, has no atoms and for every interval I, so the associated random multiplicative cascade function given by is almost surely strictly increasing and continuous. We do not need to refer to further and will work entirely with f.
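The iterative construction can be simulated to a finite depth, which may help to visualise f. The sketch below is illustrative only and rests on assumptions not made in the text: W is taken to be log-normal, $W = \exp(\sigma Z - \sigma^2/2)$ with $Z$ standard normal (so that $\mathbb{E}(W) = 1$), the construction stops at a finite depth $n$, and the independent copies of $L$ attached to the level-$n$ intervals are replaced by 1. The cumulative sums then approximate f at the dyadic points $j2^{-n}$. The function names are hypothetical.

```python
import math
import random


def cascade_lengths(depth, sigma, rng):
    """Depth-`depth` approximations of |f(I)| for the 2**depth dyadic intervals I.

    Illustrative assumptions: W = exp(sigma*Z - sigma**2/2) with Z ~ N(0, 1), so
    E(W) = 1, and the random factor L attached to each interval is replaced by 1.
    """
    lengths = [1.0]
    for _level in range(depth):
        children = []
        for length in lengths:
            for _half in range(2):  # each interval splits into two dyadic halves
                w = math.exp(sigma * rng.gauss(0.0, 1.0) - sigma ** 2 / 2)
                children.append(0.5 * length * w)
        lengths = children
    return lengths


def cascade_function(lengths):
    """Approximate values f(j / 2**depth), j = 0, ..., 2**depth, by cumulative sums."""
    values = [0.0]
    for length in lengths:
        values.append(values[-1] + length)
    return values


rng = random.Random(1)
f_values = cascade_function(cascade_lengths(10, 0.6, rng))
print(f_values[-1])  # total image length at depth 10, a finite-depth stand-in for L
```

The simulated values are strictly increasing, mirroring the fact that f is almost surely strictly increasing and continuous.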

In the critical regime a similar measure exists. In particular, normalising with gives

where the convergence is in probability. The random limit L exists and almost surely under the additional assumption that , see [9]. Here , unlike the subcritical case. The associated measure is therefore finite almost surely, and it was shown in [5] that this measure almost surely has no atoms. We refer the reader to [5] for a detailed account of critical Mandelbrot cascades. Note further that the length of the random image of the interval is given by

where is a random variable that is equal to L in distribution (and hence has infinite mean).

Note that while we will consider image sets f(E) as subsets of with the Euclidean metric, equivalently one could define a random metric by setting and investigate instead. For more details on such alternative interpretations, see [8].

The Hausdorff dimension is the most commonly considered form of fractal dimension. The Hausdorff dimension of a subset E of a metric space (X, d) may be defined as

Perhaps more intuitive are the box-counting dimensions. Let (X, d) be a metric space and be non-empty and bounded. Write for the minimal number of sets of diameter at most needed to cover E. The upper and lower box-counting dimensions (or box dimensions) are given by

If the upper and lower box-counting dimensions coincide, we call the common value the box-counting dimension of E. Note that whilst many ‘regular’ sets (such as Ahlfors regular sets) have equal Hausdorff and box-counting dimensions, this is not true in general.

Statement of results

Our aim is to find or estimate the dimensions of f(E), where f is the random cascade function and . These dimensions are determined by tail events, since changing for a fixed k results in just a bi-Lipschitz distortion of the set f(E), which leaves its Hausdorff and box-counting dimensions unchanged. By Kolmogorov’s zero-one law, the Hausdorff and upper and lower box-counting dimensions of f(E) therefore each take an almost sure value.

Benjamini and Schramm established the formula for the Hausdorff dimension.

Theorem 1.1

(Benjamini, Schramm [8]). Let f be the distribution of a subcritical random cascade. Suppose that for all in addition to the standard assumptions (1.2). Let and write . Then the almost sure Hausdorff dimension of the random image of E is the unique value s that satisfies

$$2^{\dim_{H} E} = \frac{2^{s}}{\mathbb{E}(W^{s})} \tag{1.3}$$

Note that the expression on the right in (1.3) is continuous in s and strictly increasing, mapping [0, 1] onto [1, 2], see [8, Lemma 3.2].
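Since the right-hand side of (1.3) is continuous and strictly increasing in s, the solution can be found by bisection. The sketch below assumes, for illustration, a log-normal $W = \exp(\sigma Z - \sigma^2/2)$, for which $\mathbb{E}(W^s) = \exp(\sigma^2 s(s-1)/2)$, with $\sigma^2 < 2\ln 2$ so that the cascade is subcritical; any other moment function can be passed in. The helper names are hypothetical.

```python
import math


def lognormal_moment(s, sigma):
    """E(W**s) for W = exp(sigma*Z - sigma**2/2), Z ~ N(0, 1), so that E(W) = 1."""
    return math.exp(sigma ** 2 * s * (s - 1) / 2)


def solve_dimension(d, moment, tol=1e-12):
    """Solve 2**d = 2**s / E(W**s) for s in [0, 1] by bisection.

    `moment(s)` must return E(W**s).  With E(W) = 1 the right-hand side runs from
    1 at s = 0 to 2 at s = 1, so a root exists for 0 <= d <= 1.
    """
    def excess(s):  # log2 of (right-hand side / left-hand side)
        return s - math.log2(moment(s)) - d

    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if excess(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


s = solve_dimension(0.5, lambda t: lognormal_moment(t, 1.0))
print(s)  # approx. 0.338
```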

Barral et al. improved upon this result by weakening its assumptions, and also proved it for the critical cascade measure.

Theorem 1.2

(Barral, Kupiainen, Nikula, Saksman, Webb [5]). Let f be the distribution of a subcritical or critical random cascade. Assume that for all and for some . Let be some Borel set with Hausdorff dimension . Then the almost sure Hausdorff dimension of the random image of E is the unique value s that satisfies (1.3).

General bounds for box-counting dimensions of images

Our first result is that the upper box-counting dimension of f(E) is bounded above by a value analogous to that in (1.3); the assumption that for is not required here for subcritical cascades.

Theorem 1.3

(General upper bound). Let f be the distribution of a subcritical random cascade or the distribution of a critical random cascade with the additional assumption that for some and . Let be non-empty and compact and let . Then almost surely where s is the unique non-negative number satisfying

$$2^{\overline{\dim}_{B} E} = \frac{2^{s}}{\mathbb{E}(W^{s})} \tag{1.4}$$

Combining this result with Theorem 1.2 we get the immediate corollary for sets with equal Hausdorff and (upper) box-counting dimension, such as Ahlfors regular sets.

Corollary 1.4

Let f be the distribution of a subcritical or critical random cascade. Suppose additionally that for all and in the critical case assume also that . If is non-empty and compact, and , then almost surely where s is given by (1.4).

We can also apply Theorem 1.3 to the packing dimension.

Corollary 1.5

Let f be the distribution of a subcritical cascade. If is non-empty and compact and , then almost surely where s satisfies

Proof

Recall that the packing dimension of a set E equals its modified upper box-counting dimension, that is , where the may be taken to be compact. The conclusion follows by applying Theorem 1.3 to countable coverings of E.

We also derive general lower bounds.

Theorem 1.6

(General lower bound). Let f be the distribution of a subcritical random cascade. Let be non-empty and compact. Then almost surely

$$\overline{\dim}_{B} f(E) \geq \frac{\overline{\dim}_{B} E}{1-\mathbb{E}(\log_{2} W)} \tag{1.5}$$

and, provided that additionally for some , then

$$\underline{\dim}_{B} f(E) \geq \frac{\underline{\dim}_{B} E}{1-\mathbb{E}(\log_{2} W)} \tag{1.6}$$

Further, the same inequalities hold for critical random cascades under the additional assumptions that for some and .

It should be noted that these upper and lower bounds are asymptotically equivalent for small dimensions.

Proposition 1.7

Let and let be the unique solution to

| 1.7 |

Further, let

| 1.8 |

Then as .
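Proposition 1.7 can be illustrated numerically under an extra assumption. For log-normal $W = \exp(\sigma Z - \sigma^2/2)$ (an illustrative choice) one has $\log_2 \mathbb{E}(W^s) = -cs(1-s)$ and $\mathbb{E}(\log_2 W) = -c$ with $c = \sigma^2/(2\ln 2)$, so the upper bound of Theorem 1.3, the root of $2^d = 2^s/\mathbb{E}(W^s)$, solves $cs^2 - (1+c)s + d = 0$, while the lower bound of Theorem 1.6 is $d/(1+c)$. The hypothetical helper below returns their ratio, which should tend to 1 as $d \to 0$.

```python
import math


def bound_ratio(d, sigma):
    """Ratio of the upper bound s(d) to the lower bound d / (1 - E log2 W).

    Assumes W = exp(sigma*Z - sigma**2/2), Z ~ N(0, 1), with c = sigma**2 / (2 ln 2) < 1
    (subcritical), so that 2**d = 2**s / E(W**s) reads c*s**2 - (1 + c)*s + d = 0.
    """
    c = sigma ** 2 / (2 * math.log(2))
    # smaller root of the quadratic, written to avoid cancellation for small d
    s = 2 * d / ((1 + c) + math.sqrt((1 + c) ** 2 - 4 * c * d))
    lower = d / (1 + c)
    return s / lower


for d in (0.5, 0.1, 0.01, 0.001):
    print(d, bound_ratio(d, 1.0))
```

The printed ratios decrease towards 1 as d decreases, in line with the asymptotic equivalence of the two bounds.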

Theorems 1.3 and 1.6, as well as Proposition 1.7, will be proved in Sect. 2.1.

Decreasing sequences with decreasing gaps

To show that neither (1.4) nor (1.5)–(1.6) gives the actual box dimension of f(E) for many sets E, and that the box dimension of the random image f(E) depends more subtly on E than just on its dimension, we consider sets formed by decreasing sequences that accumulate at 0, and obtain the almost sure box dimensions of their images in our main Theorems 1.11 and 1.12. Let be a sequence of positive reals that converges to 0. We write .

Given two sequences and of positive reals that are eventually decreasing and convergent to 0 we say that eventually separates if there is some such that for all there exists such that . We will need this property, which is preserved under strictly increasing functions, when comparing dimensions of the images of sequences under the random function f. However, we first use it to compare the box-counting dimensions of deterministic sets. The simple proofs of the following two lemmas are given in Sect. 2.3.

Lemma 1.8

Let and be strictly decreasing sequences convergent to 0 such that eventually separates . Then

We write for the set of sequences convergent to 0 such that . We say that the sequence is decreasing with decreasing gaps if and is (not necessarily strictly) decreasing.
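The defining property is easy to test on a finite initial segment, which can serve as a sanity check when experimenting with examples. The hypothetical helper below checks the strictly decreasing form used in Corollary 1.10; a finite check can of course only refute, never prove, the property for an infinite sequence.

```python
def has_decreasing_gaps(terms):
    """Check a finite prefix: strictly decreasing terms with non-increasing gaps."""
    gaps = [a - b for a, b in zip(terms, terms[1:])]
    strictly_decreasing = all(g > 0 for g in gaps)
    gaps_non_increasing = all(g1 >= g2 for g1, g2 in zip(gaps, gaps[1:]))
    return strictly_decreasing and gaps_non_increasing


powers = [n ** -1.5 for n in range(1, 2000)]        # n^{-p} with p = 1.5
print(has_decreasing_gaps(powers))                   # True
print(has_decreasing_gaps([1.0, 0.5, 0.45, 0.1]))    # False: gaps 0.5, 0.05, 0.35
```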

Lemma 1.9

Let and be decreasing sequences with decreasing gaps with . Then eventually separates .

Of course, the most basic examples of such sequences are the powers of reciprocals. For let and let

We may compare with other sequences in .

Corollary 1.10

Let be a strictly decreasing sequence with decreasing gaps such that , where . Then

Proof of Corollary 1.10

Clearly for and it is well-known that , see [12, Example 2.7]. If then eventually separates and eventually separates , by Lemma 1.9, so by Lemma 1.8,

with similar inequalities for upper box dimension. Since we may take and arbitrarily close to p, the conclusion follows.
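For the sequences $n^{-p}$ the counting can be done exactly on a dyadic grid, and the decreasing-gaps property makes this cheap: once a gap falls below $\delta$, every grid interval between 0 and that term meets the set. The sketch below (hypothetical helper names) counts the grid intervals $[j\delta, (j+1)\delta)$, $\delta = 2^{-k}$, that meet $\{0\} \cup \{n^{-p}\}$. Counting grid intervals instead of arbitrary sets of diameter $\delta$ changes $N_\delta$ by at most a factor of 2, which does not affect the dimension. For $p = 1$ the slope between two scales recovers the well-known value $1/(1+p) = 1/2$.

```python
import math


def grid_box_count(p, k):
    """Number of grid intervals [j*delta, (j+1)*delta), delta = 2**-k, meeting E_p.

    E_p = {0} together with the points n**-p, n = 1, 2, ...  Because the gaps
    n**-p - (n+1)**-p decrease, once a gap is below delta every grid interval
    between 0 and that term contains a point of E_p.
    """
    delta = 2.0 ** -k
    n = 1
    while n ** -p - (n + 1) ** -p >= delta:   # first n whose gap is below delta
        n += 1
    boxes = set(range(math.floor(n ** -p / delta) + 1))   # grid over [0, n**-p] is fully hit
    for m in range(1, n):                                  # earlier, well-separated terms
        boxes.add(math.floor(m ** -p / delta))
    return len(boxes)


def box_dim_estimate(p, k1, k2):
    """Slope of log2 N between the dyadic scales 2**-k1 and 2**-k2."""
    return (math.log2(grid_box_count(p, k2)) - math.log2(grid_box_count(p, k1))) / (k2 - k1)


print(grid_box_count(1.0, 10), grid_box_count(1.0, 20))   # 64 2048
print(box_dim_estimate(1.0, 10, 20))                      # 0.5
```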

Random images of decreasing sequences with decreasing gaps

We aim to find the almost sure dimension of for sequences . To achieve this we work with special sequences for which is more tractable, and then extend these conclusions across the using the eventual separation property.

Let be a real parameter and let be the set given in terms of binary expansions by

where denotes m consecutive 0s and represents all digit sets of length m of 0s and 1s. Equivalently, letting be the set of infinite strings

then is the image of under the natural bijection where , and we will identify such strings with binary numbers in the obvious way throughout. Clearly, consists of a decreasing sequence of numbers with decreasing gaps, together with 0.

If the nth term in this sequence is with , then . Moreover,

Hence

| 1.9 |

Letting and thus , it follows that , so by Corollary 1.10.

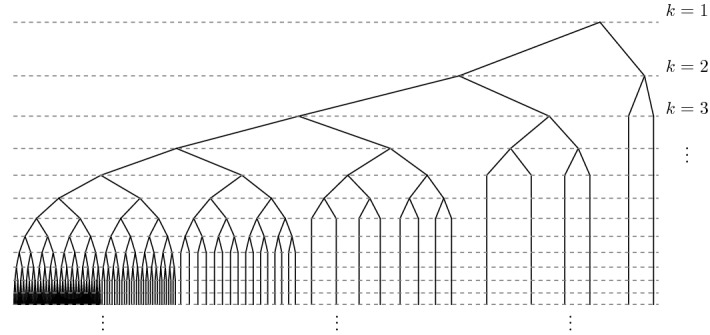

We may think of the structure of a set E as a tree formed by the hierarchy of binary intervals that intersect E. The structure of , with a ‘stem’ at 0 and a sequence of full trees branching off this stem (see Fig. 1), makes it convenient for analysing the box dimension of the random image . To obtain the lower bound, we will require a result on large deviations in binary trees, which needs the additional assumptions that

| 1.10 |

The first condition implies that for all , and in particular that is smooth for all . Applying the dominated convergence theorem, we can compute the derivatives of the t-moments of W:

We also note that

| 1.11 |

so in particular is strictly increasing in , since, by the Cauchy-Schwarz inequality,

Fig. 1.

The coding tree of for . At every left-most level k node a full binary tree of height k branches off
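The derivative formulas for the t-moments and the Cauchy-Schwarz argument can be checked numerically. The sketch below assumes, for illustration, a discrete W and the logarithmic moment $\Lambda(t) = \log \mathbb{E}(W^t)$ with the natural logarithm (the normalisation of the logarithm in the text may differ). It compares the moment formula for $\Lambda'$ with a central finite difference and confirms that $\Lambda'' > 0$ for non-constant W and $\Lambda'' = 0$ for constant W. The helper names are hypothetical.

```python
import math


def log_moment(t, values, probs):
    """Lambda(t) = log E(W**t) for a discrete W (natural logarithm)."""
    return math.log(sum(q * w ** t for w, q in zip(values, probs)))


def log_moment_derivatives(t, values, probs):
    """(Lambda'(t), Lambda''(t)) computed from the moments of W.

    Lambda'  = E(W^t log W) / E(W^t)
    Lambda'' = [E(W^t log^2 W) E(W^t) - E(W^t log W)^2] / E(W^t)^2 >= 0
    by Cauchy-Schwarz, with equality only if W is almost surely constant.
    """
    m0 = sum(q * w ** t for w, q in zip(values, probs))
    m1 = sum(q * w ** t * math.log(w) for w, q in zip(values, probs))
    m2 = sum(q * w ** t * math.log(w) ** 2 for w, q in zip(values, probs))
    return m1 / m0, (m2 * m0 - m1 ** 2) / m0 ** 2


values, probs = [0.5, 1.5], [0.5, 0.5]
h = 1e-5
for t in (-1.0, 0.0, 0.7, 2.0):
    d1, d2 = log_moment_derivatives(t, values, probs)
    fd = (log_moment(t + h, values, probs) - log_moment(t - h, values, probs)) / (2 * h)
    print(t, d1, fd, d2)  # d1 agrees with the finite difference fd, and d2 > 0
```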

We can now state our main results.

Theorem 1.11

Let W be a positive random variable that is not almost surely constant and satisfies (1.2) and (1.10). Let f be the random homeomorphism given by the (subcritical) multiplicative cascade with random variable W. Then, almost surely, the random image has box-counting dimension

| 1.12 |

for all . Condition (1.10) is needed only for the lower bound in (1.12).

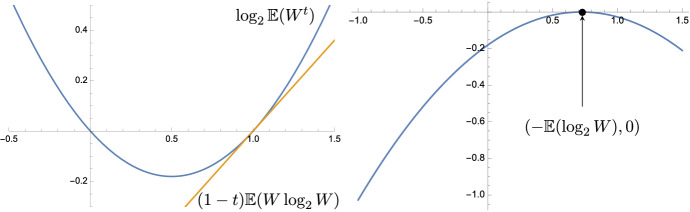

The dimension formula is expressed in terms of the Legendre transform of the logarithmic moment . Figure 2 shows the logarithmic moment and its Legendre transform for a log-normally distributed W that satisfies our assumptions.

Fig. 2.

A plot of the moments (left) along with its Legendre transform (right) for W having log-normal distribution with variation
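For log-normal W the Legendre transform has a closed form, which gives a convenient check on a numerical implementation. Assuming $W = \exp(\sigma Z - \sigma^2/2)$ and $\Lambda(t) = \log \mathbb{E}(W^t) = \sigma^2 t(t-1)/2$ (natural logarithm; the convention in the text may differ), one has $\Lambda^*(x) = \sup_t (tx - \Lambda(t)) = (x + \sigma^2/2)^2/(2\sigma^2)$, which vanishes at $x = \Lambda'(0) = \mathbb{E}(\log W)$. The sketch below (hypothetical names) maximises the concave function $t \mapsto tx - \Lambda(t)$ over a bounded interval by ternary search and compares the result with this formula.

```python
def legendre_transform(Lambda, x, t_lo=-50.0, t_hi=50.0, iterations=200):
    """sup over t in [t_lo, t_hi] of t*x - Lambda(t), by ternary search.

    Valid when Lambda is convex, so that t -> t*x - Lambda(t) is concave, and the
    maximiser lies inside the search interval.
    """
    objective = lambda t: t * x - Lambda(t)
    lo, hi = t_lo, t_hi
    for _ in range(iterations):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if objective(m1) < objective(m2):
            lo = m1
        else:
            hi = m2
    return objective((lo + hi) / 2)


sigma = 0.8
Lambda = lambda t: sigma ** 2 * t * (t - 1) / 2   # log E(W**t) for log-normal W
for x in (-1.0, -sigma ** 2 / 2, 0.0, 0.5):
    exact = (x + sigma ** 2 / 2) ** 2 / (2 * sigma ** 2)
    print(x, legendre_transform(Lambda, x), exact)
```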

The right hand side of (1.12) is strictly increasing and continuous in , as we verify in Lemma 2.3. Using this, and noting that the eventual separation property is preserved under strictly increasing functions such as f, we may compare with , where , to transfer this conclusion to more general sequences.

Theorem 1.12

Let W be a positive random variable that is not almost surely constant and satisfies (1.2) and (1.10). Let f be the random homeomorphism given by the (subcritical) multiplicative cascade with random variable W. Then, almost surely, the random images have box-counting dimension

| 1.13 |

for all decreasing sequences with decreasing gaps and simultaneously.

The formula in (1.13) clearly does not coincide with (1.3), which gives the Hausdorff dimension in [8] or the average box-counting dimension in [11]. In particular, unlike the Hausdorff dimension, the almost sure box-counting dimension of f(E) cannot be expressed simply in terms of the box-counting dimension of E and the random variable W underlying f. One can easily construct a Cantor-like set E with box and Hausdorff dimensions for which the almost sure box dimension of f(E) is the solution of (1.3), see Corollary 1.4. But the set with also has box dimension , whereas the box dimension of is given by (1.13); so E and have the same box dimension, yet their random images have different box dimensions. Thus the structure of the set, and not just its box-counting dimension, determines the dimension of its image.

Sets accumulating at 0 lead to different dimension results because of a balance between two behaviours: that of products of the along the ‘stem’ , which grow like (a ‘geometric’ mean), and that of the trees that branch off this stem, which grow like (an ‘arithmetic’ mean). These different large deviation behaviours are exploited in the proofs. The stark difference between the two behaviours was analysed in detail, in a different context, in [24].

On the other hand, homogeneous or regular sets have a structure resembling a tree that grows geometrically, with no ‘stem’ to distort this uniform behaviour.

Finally, we remark that Theorems 1.11 and 1.12 can be extended to critical cascades in the same way as our general bounds. We omit the details to avoid unnecessary technicalities.

Specific W distributions

The expressions for the box-counting dimension in (1.13) and the lower and upper bounds above can be simplified or numerically estimated for particular distributions of W. Most often considered is a log-normal distribution, and we also examine a two-point discrete distribution, as was done for the Hausdorff dimension of images in [8].

Log-normal W

Let be the set formed by the sequence , and let W be log-normally distributed with parameters , that is where . The condition that requires and we can compute . The standing condition that can be shown to be equivalent to . Further, the conditions in (1.2) and (1.10) can easily be checked. Let and be the general lower and upper bound given by Theorems 1.6 and 1.3, respectively, for these W. Then,

Noting that

we can calculate the upper bound since (1.4) becomes the quadratic

To compute the almost sure dimension of , first note that for the infimum in the numerator of the dimension formula (1.13) is zero. For the infimum occurs at where

giving

for and 0 otherwise. Notice in particular that the infimum is clearly continuous at . We obtain

Differentiating the right hand side with respect to x gives

Setting this equal to 0 and solving for x gives two solutions, since the numerator is quadratic and the denominator is non-zero for . Only one solution of the quadratic is positive, so

where

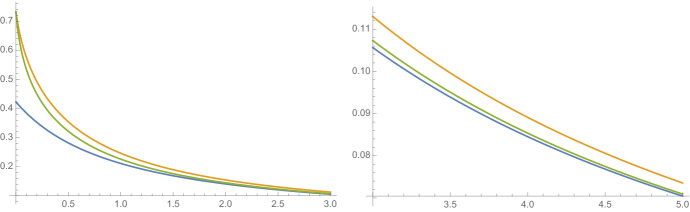

Figure 3 contains a plot of the almost sure dimension of with W being log-normally distributed for parameter , chosen to give clearly visible separation between the dimension and the general bounds.

Fig. 3.

A plot of for and , where W is a log-normal random variable with parameters
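For log-normal W the two general bounds for the sequences $n^{-p}$ are explicit. The sketch below assumes the normalisation $W = \exp(\sigma Z - \sigma^2/2)$ with $Z$ standard normal (which may differ in notation from the parametrisation above) and uses the box dimension $d = 1/(1+p)$ of $\{n^{-p}\}$: with $c = \sigma^2/(2\ln 2) < 1$, the lower bound of Theorem 1.6 is $d/(1+c)$ and the upper bound of Theorem 1.3 is the root in $[0,1]$ of $cs^2 - (1+c)s + d = 0$. It tabulates values one could use to draw the two bound curves; the almost sure dimension from (1.13) is not computed here, and the helper name is hypothetical.

```python
import math


def lognormal_bounds(p, sigma):
    """Lower bound (Theorem 1.6) and upper bound (Theorem 1.3) for {n**-p}, log-normal W.

    Illustrative normalisation: W = exp(sigma*Z - sigma**2/2), Z ~ N(0, 1); then
    E(log2 W) = -c and log2 E(W**s) = -c*s*(1 - s) with c = sigma**2 / (2 ln 2) < 1.
    """
    c = sigma ** 2 / (2 * math.log(2))
    d = 1 / (1 + p)                    # box dimension of {n**-p}
    lower = d / (1 + c)                # d / (1 - E log2 W)
    # 2**d = 2**s / E(W**s)  <=>  c*s**2 - (1 + c)*s + d = 0; take the root in [0, 1]
    upper = ((1 + c) - math.sqrt((1 + c) ** 2 - 4 * c * d)) / (2 * c)
    return lower, upper


for p in (0.25, 0.5, 1.0, 2.0, 4.0):
    print(p, lognormal_bounds(p, 1.0))
```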

Discrete W

Again, let be the set formed by the sequence . Fix a parameter and let W be the random variable satisfying . Clearly, , and our assumptions follow since W takes only two positive values, so that W is bounded and bounded away from 0. The geometric mean is , and Theorem 1.6 gives the lower bound

The upper bound from Theorem 1.3 is implicitly given by

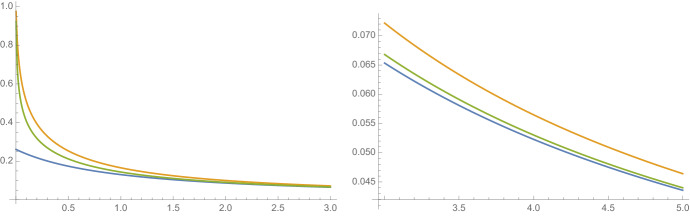

The functions for are plotted in Fig. 4. We were unable to find a closed form for from (1.13) and the figure was produced computationally.

Fig. 4.

A plot of for and , where W is a discrete random variable with and occurring with equal probability
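The two general bounds for the discrete case are straightforward to evaluate numerically. The sketch below writes the two equally likely values of W as $1 - a$ and $1 + a$ with $0 < a < 1$; any equally weighted two-point law with mean 1 has this form, though the parametrisation above may be written differently. With $d = 1/(1+p)$, it returns the lower bound $d/(1 - \mathbb{E}(\log_2 W))$ of Theorem 1.6 and the upper bound of Theorem 1.3, found by bisection on $2^d = 2^s/\mathbb{E}(W^s)$. It does not evaluate (1.13), and the helper name is hypothetical.

```python
import math


def two_point_bounds(p, a, iterations=100):
    """Lower bound (Theorem 1.6) and upper bound (Theorem 1.3) for {n**-p}, two-point W.

    Illustrative parametrisation: W = 1 - a or 1 + a with probability 1/2 each,
    0 < a < 1, which is the general equally weighted two-point law with E(W) = 1.
    """
    d = 1 / (1 + p)                                        # box dimension of {n**-p}
    mean_log2 = (math.log2(1 - a) + math.log2(1 + a)) / 2  # E(log2 W) < 0
    lower = d / (1 - mean_log2)

    def excess(s):  # s - log2 E(W**s) - d, increasing in s with a root in [0, 1]
        return s - math.log2(((1 - a) ** s + (1 + a) ** s) / 2) - d

    lo, hi = 0.0, 1.0
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if excess(mid) < 0:
            lo = mid
        else:
            hi = mid
    return lower, (lo + hi) / 2


for p in (0.5, 1.0, 2.0):
    print(p, two_point_bounds(p, 0.5))
```

As $a \to 0$ the variable W degenerates to the constant 1 and both bounds approach the box dimension $1/(1+p)$ of the original set.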

Proofs

General bounds

In this section we prove Theorems 1.3 and 1.6 giving almost sure bounds for and for a general set .

General upper bound

We establish Theorem 1.3 by estimating the expected number of intervals such that intersects f(E) and . This yields an almost sure bound on this number, which we then relate to the upper box-counting dimension of f(E).

Proof of Theorem 1.3

First consider to be a subcritical cascade measure. Let and let satisfy

| 2.14 |

Let and . For each , Markov’s inequality gives

| 2.15 |

We estimate the expected number of dyadic intervals with image of length at least r. For each , let be the set of intervals in that intersect E and let be the number of such intervals, so for all sufficiently large k. Let

From (2.15), the fact that , and that ,

Let be the least integer such that

| 2.16 |

Then

| 2.17 |

where we have used (2.14) and (2.16), and where does not depend on or .

Note that, for , the image set f(E) is covered by the disjoint intervals where , with if . We denote by the minimal number of intervals of lengths at most r that intersect the set F. Then

| 2.18 |

since each interval with has a parent interval with with at most two such having a common parent interval.

We now sum over a geometric sequence of . Let . From (2.18) and (2.17)

so

Hence, almost surely, is bounded in n, so from the definition of box-counting dimension, noting that it is enough to take the limit through a geometric sequence , we conclude that for all . Since is arbitrary . We may let and correspondingly let with t satisfying (2.14), where s is given by (1.4), recalling that is increasing and continuous. Thus almost surely where s satisfies (1.4).

If is the critical cascade measure, the proof follows similarly. We can first estimate

Noting that for , see [16, Theorem 1.5] or [5, Equation (26)], gives

for some constant and one obtains an additional subexponential contribution to the expected covering number. The rest of the proof follows in much the same way and details are left to the reader.

General lower bound

For the lower bound, Theorem 1.6, we note that, by the strong law of large numbers,

almost surely, where the are independent with the distribution of W. This enables us to deduce that a significant proportion of the intervals that intersect f(E) must be reasonably large. Further, since we are taking logarithms we can ignore any subexponential growth which in particular means that also

almost surely.
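The role of the geometric mean can be seen in a simulation. Along a single path of the tree, a level-k interval has image length of order $2^{-k}W_1\cdots W_k$, and by the strong law of large numbers $\frac1k \log_2(W_1\cdots W_k) \to \mathbb{E}(\log_2 W) < 0$, so a typical level-k interval is mapped to an interval of length roughly $2^{-k(1-\mathbb{E}(\log_2 W))}$; this is the heuristic behind the lower bound of Theorem 1.6. The sketch below assumes a log-normal $W = \exp(\sigma Z - \sigma^2/2)$, for which $\mathbb{E}(\log_2 W) = -\sigma^2/(2\ln 2)$, and uses a hypothetical helper name.

```python
import math
import random


def path_log2_rate(k, sigma, seed=0):
    """(1/k) * log2(W_1 * ... * W_k) for i.i.d. copies of W along one path.

    Illustrative assumption: W = exp(sigma*Z - sigma**2/2), Z ~ N(0, 1), so that
    E(log2 W) = -sigma**2 / (2 ln 2).
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(k):
        total += sigma * rng.gauss(0.0, 1.0) - sigma ** 2 / 2  # natural log of W_i
    return total / (k * math.log(2))


sigma = 1.0
print(path_log2_rate(100_000, sigma), -sigma ** 2 / (2 * math.log(2)))
```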

We will use the following two lemmas.

Lemma 2.1

Let and let be events such that for all . Let . Then

| 2.19 |

Note that there is no independence requirement on the .

Proof

Let Y be the event and let . By the law of total expectation

Hence giving (2.19).
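The argument above is a reverse Markov inequality. Since the displayed statement (2.19) is not reproduced here, the sketch below assumes the standard form of such a bound: if each of N events $A_i$ has probability at least $p$ and $X$ counts how many occur, then $\mathbb{P}(X \geq qN) \geq (p-q)/(1-q)$ for $0 \leq q < p$. It checks this exactly, in rational arithmetic, on a small finite probability space in which the events are deliberately dependent. The helper name and the example events are hypothetical.

```python
from fractions import Fraction


def reverse_markov_check(outcomes, events, q):
    """Exact P(X >= q*N) and the bound (p - q) / (1 - q) on a uniform finite space.

    outcomes: equally likely sample points; events: list of sets of sample points
    (no independence assumed); p is the smallest event probability.
    """
    n_outcomes, n_events = len(outcomes), len(events)
    p = min(Fraction(len(a), n_outcomes) for a in events)
    hits = sum(1 for w in outcomes if sum(w in a for a in events) >= q * n_events)
    return Fraction(hits, n_outcomes), (p - q) / (1 - q)


events = [set(range(0, 8)), set(range(4, 12)), set(range(0, 6)) | {10, 11}]
prob, bound = reverse_markov_check(list(range(12)), events, Fraction(1, 2))
print(prob, bound)  # 5/6 1/3
```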

The following lemma can be derived from Hoeffding’s inequality.

Lemma 2.2

Let be a sequence of i.i.d. Bernoulli random variables with and . Then,

and

Proof

Hoeffding’s inequality states that for any sequence of independent random variables with and for ,

Thus,

where we have applied Hoeffding’s inequality with ,, and .

For the second inequality we similarly obtain
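To gauge how crude the estimate is, the exact binomial tail can be compared with Hoeffding's bound. For i.i.d. Bernoulli(p) variables with sum $S_n$, Hoeffding's inequality gives $\mathbb{P}(S_n \leq (p-t)n) \leq \exp(-2nt^2)$. The sketch below (hypothetical helper names) evaluates both sides for a few values of n; it is a numerical illustration only, not part of the proof.

```python
import math


def binomial_lower_tail(n, p, k):
    """Exact P(S_n <= k) for S_n ~ Binomial(n, p)."""
    return sum(math.comb(n, j) * p ** j * (1 - p) ** (n - j) for j in range(k + 1))


def hoeffding_bound(n, t):
    """Hoeffding: P(S_n <= (p - t) * n) <= exp(-2 * n * t**2) for Bernoulli(p) summands."""
    return math.exp(-2 * n * t ** 2)


for n in (10, 50, 200):
    # with p = 0.6 and t = 0.1 the threshold (p - t) * n equals n / 2
    print(n, binomial_lower_tail(n, 0.6, n // 2), hoeffding_bound(n, 0.1))
```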

Proof of Theorem 1.6

Write and let . Then, for each , by the strong law of large numbers, almost surely, so there is some such that

for all . As has the distribution of L, there exists such that . Since , and is independent of ,

| 2.20 |

for each if .

The same argument can be repeated for the critical case. Here, the strong law of large numbers gives almost surely and so for k large enough,

Again is equal to L in distribution and there exists such that . We can now conclude that (2.20) also holds in the critical case.

For each , let be the set of intervals in that intersect E, and let be the number of such intervals. By the definition of upper box-counting dimension for infinitely many k; write K for this infinite set of . Applying Lemma 2.1 to the intervals , taking and ,

| 2.21 |

for all .

Let be the maximum number of disjoint intervals of lengths at least r that intersect a set F. Write for each . From (2.21), with probability at least for each , so with probability at least it holds for infinitely many . It is easy to see that an equivalent definition of upper box-counting dimension is given by . It is enough to evaluate this limit along the geometric sequence , so

with probability at least , and therefore with probability 1, since is a tail event for all s. Since is arbitrary, (1.5) follows.

For the lower box dimensions for subcritical cascades, we let , which we may assume to be positive, and . We need an estimate on the rate of convergence in the laws of large numbers: if for some then

| 2.22 |

this follows, for example, from estimates of Baum and Katz (taking and in [7, Theorem 3(b)]). For write

noting that is independent of . By (2.22) . For each let be the event

so .

For each , let be the set of intervals in that intersect E, so there is a number such that if then . Fixing , let , which depends only on . By Lemma 2.1,

The random variables are independent of and of each other. Let . Conditional on , a standard binomial distribution estimate, which follows from Hoeffding’s inequality (see Lemma 2.2), gives that

Hence, unconditionally, for each k,

Since and , the Borel-Cantelli lemma implies that, with probability one,

for all sufficiently large k. As in the upper dimension part, but taking lower limits, it follows that for all , giving (1.6).

For the lower box dimensions and critical cascades we note that

Following the same argument as above with the additional term we conclude that

for sufficiently large k. Again, taking lower limits and noting that we get the required lower bound for critical cascades.

Asymptotic behaviour

Proof of Proposition 1.7

Solving (1.8) for d and substituting in (1.7) gives

Rearranging gives

Note that as . Recall that our assumptions imply and for all . It is well-known that the power means converge to the geometric mean, i.e. . Combining this with the above means that as required.
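The proof rests on the fact that the power means $\mathbb{E}(W^t)^{1/t}$ decrease to the geometric mean $\exp \mathbb{E}(\log W)$ as $t \downarrow 0$. The sketch below illustrates this for a two-point W taking the values $1 - a$ and $1 + a$ with equal probability, an illustrative choice with $\mathbb{E}(W) = 1$; the helper names are hypothetical.

```python
import math


def power_mean(values, t):
    """E(W**t) ** (1/t) for W uniformly distributed on `values`, t != 0."""
    return (sum(v ** t for v in values) / len(values)) ** (1 / t)


def geometric_mean(values):
    """exp(E(log W)) for W uniformly distributed on `values`."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


a = 0.5
values = [1 - a, 1 + a]
for t in (1.0, 0.5, 0.1, 0.01, 1e-4):
    print(t, power_mean(values, t))
print("geometric mean:", geometric_mean(values))  # sqrt(1 - a**2), about 0.866
```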

Box dimension of images of decreasing sequences

We now turn to the substantial proof of Theorem 1.11, from which we easily deduce Theorem 1.12. First, the following lemma records some properties of the expressions that occur in (1.12) and (1.13); in particular, it follows that they are continuous in and p, respectively (for example, the right hand side of (1.12) is with as in (2.23)).

Lemma 2.3

(a) For let

If this infimum is attained at . If the infimum is attained at . Furthermore is continuous for .

(b) For let

| 2.23 |

Then is strictly decreasing and continuous in .

Proof

(a) Let for and . Then by (1.11), so is a strictly convex function. Also , so in particular and , by Jensen’s inequality and the fact that W is not almost surely constant; the conclusions in (a) about the infimum follow. The function is continuous for since it is the Legendre transform of the twice continuously differentiable strictly convex function .

(b) Now consider the function

which is continuous for , and note that . Since the supremum in is over a bounded interval, it is an exercise in basic analysis to see that is continuous in and that, since is strictly decreasing in for each x, is strictly decreasing.

Upper bound for

Throughout this section, the distribution of W, and so , are fixed, as is .

First we bound the expected number of intervals of length at most r needed to cover the part of by bounding the expected number of dyadic intervals in that intersect E such that .

Lemma 2.4

Let . Let and suppose that for some . Then for all , there exists such that

| 2.24 |

for all . The numbers may be taken to vary continuously in and do not depend on or r.

Proof

We bound from above the expected number of dyadic intervals which intersect such that . We split these intervals into three types.

There are k intervals which cover to give the right-hand term of (2.24).

For , let be the collection of all intervals of the form considered in (a),(b),(c) above that intersect and such that and , where if then , so the intervals with have length at most r and cover . Each has a ‘parent’ interval with at most two intervals in having a common parent interval. These parent intervals have and are included in those counted in (a),(b),(c) so is bounded above by twice this number of intervals.

Hence, combining (a), (2.28) and (2.31) we obtain (2.24), where is continuous on (0, 1) and we can replace by .

By writing r in an appropriate form relative to , we can bound the expectation in the previous lemma by r raised to a suitable exponent. Note that in the following lemma we have to work with the infimum over where in order to get a uniform constant . At the end of the proof of Proposition 2.6 we show that the infimum can be taken over .

Lemma 2.5

Let . Let and suppose that for some . Then for all , there exists , independent of k, r and , such that, provided that ,

| 2.32 |

for all , where

Proof

In Lemma 2.4 is continuous and positive on (0, 1), so let . For and define by

| 2.33 |

We bound the right hand side of (2.24) using (2.33). For ,

Changing the base of logarithms to 1/r and taking the infimum over ,

Inequality (2.32) now follows from (2.24) by taking the supremum over . If ,

since, by calculus, the middle term is increasing in x for , provided that , so it is enough to take the supremum over .

It remains to sum the estimates in Lemma 2.5 over for an appropriate K and make a basic estimate to cover . The Borel-Cantelli lemma leads to a suitable bound for for all sufficiently small r, and finally we note that the infimum can be taken over .

Proposition 2.6

Let . Under the assumptions in Theorem 1.11, but without the need for (1.10), almost surely,

| 2.34 |

Proof

Let and let with , where is as in Lemma 2.5. By the strong law of large numbers, as , so almost surely there exists a random number such that for all . We condition on and let A be this number.

Given , set . Then, covering by intervals of lengths 1/r,

Thus, using Lemma 2.5, taking a as this random A and the same ,

for small r. Hence, conditional on , almost surely,

for r sufficiently small, using Markov’s inequality, so the Borel-Cantelli lemma taking gives that for all sufficiently small r, almost surely.

We conclude that, almost surely, for all with ,

| 2.35 |

for all . For ,

where M is the maximum of the derivative of over [0, 1]. Substituting this in the numerator of (2.35) with and , and noting that is bounded for , we may let , so that we may take the infima over in (2.35) and thus over using Lemma 2.3(a). We may then let in (2.35) and finally let , using the continuity in from Lemma 2.3(b), to get (2.34).

Lower bound for

To obtain the lower bound of Theorem 1.11, we establish a bound on the distribution of products of independent random variables on a binary tree. We will use a well-known result on the free energy of the Mandelbrot measure, which goes back to Mandelbrot [22] and has been proved in a very general setting by Attia and Barral [2].

Proposition 2.7

(Attia and Barral [2]) Let X be a random variable with finite logarithmic moment function for all . Write for the rate function and assume that is twice differentiable for . If are independent and identically distributed with the distribution of X, then,

We refer the reader to the well-written account of the history of this statement in [2], where Proposition 2.7 is a special case of their Theorem 1.3(1), see in particular (1.1) and situation (1) discussed in [2, page 142]. Note that the application of this theorem requires the strongest assumptions thus far on the random variable W.

We derive a version of this Proposition suited to our setting.

Lemma 2.8

Let and , and choose such that . Then there exists such that

| 2.36 |

Proof

Using Proposition 2.7 with , , , and replacing x by , we see that almost surely,

Since we are, for the moment, restricting to , we can assume that the infimum occurs when by Lemma 2.3

Since the event decreases as , for all , almost surely,

By Egorov’s theorem, there exists such that with probability at least ,

for all , from which (2.36) follows.

We now extend Lemma 2.8 by considering independent subtrees rooted a little way down the main binary tree, so that the probabilities converge to 1 at a geometric rate. When we apply the following lemma, we will take to be small and close to 1.

Lemma 2.9

Assume that for some . Let be such that , and let be sufficiently small so that . Let and . Then there exists , and , such that for all ,

| 2.37 |

where .

Proof

Fix some and let where , with given by Lemma 2.8. At level of the binary tree there are nodes, each the root of a subtree of depth . By Lemma 2.8, for each node , there is a probability of at least that its subtree of depth has ‘sufficiently many paths with a large W product’, that is with

| 2.38 |

| 2.39 |

Since these subtrees are independent, the probability that none of them satisfies (2.39) is at most for some . Otherwise, at least one subtree satisfies (2.39), say one with node for some , choosing the one with minimal binary string if there is more than one. We condition on this existing, which depends only on .
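The independence step can be made concrete. If N independent subtrees each succeed with probability at least q, then the probability that all of them fail is at most (1 - q)^N ≤ exp(-qN), because 1 - q ≤ e^{-q}. With N = 2^m subtrees rooted at level m, this decays very quickly in m. The sketch below compares the two bounds numerically. The names and the value of q are illustrative assumptions, and the exact expression used in the proof is not reproduced in this section.

```python
import math

def all_fail_probability(q, n_subtrees):
    """P(no subtree succeeds) when n independent subtrees each succeed
    with probability exactly q."""
    return (1.0 - q) ** n_subtrees

def exponential_bound(q, n_subtrees):
    """The bound (1 - q)^n <= exp(-q n), which follows from 1 - q <= exp(-q)."""
    return math.exp(-q * n_subtrees)

if __name__ == "__main__":
    q = 0.1  # illustrative per-subtree success probability
    for level in range(1, 9):
        n = 2 ** level  # number of subtrees rooted at this level
        print(level, all_fail_probability(q, n), exponential_bound(q, n))
```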

Choose such that . Using Markov’s inequality,

Let be the (random) number in (2.38). Recalling that for all , and using a standard binomial distribution estimate coming from Hoeffding’s inequality (see Lemma 2.2),

Hence, conditional on ,

| 2.40 |

with probability at least

for some and , for all .

The conclusion (2.37) now follows, since the unconditional probability of (2.40) is at least , on choosing , and increasing if necessary to ensure that for all .
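The proof of Lemma 2.9 uses a binomial tail estimate derived from Hoeffding's inequality. Lemma 2.2 is not reproduced in this section, so as an assumption we use the textbook form P(S ≤ (p - t)n) ≤ exp(-2nt²) for S ~ Bin(n, p) and t > 0. The sketch below compares this bound with the exact lower tail. The function names and parameter values are illustrative.

```python
import math

def binomial_lower_tail(n, p, k):
    """Exact P(S <= k) for S ~ Binomial(n, p)."""
    return sum(math.comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(0, k + 1))

def hoeffding_lower_bound(n, t):
    """Hoeffding: P(S <= (p - t) n) <= exp(-2 n t^2) for t > 0."""
    return math.exp(-2 * n * t * t)

if __name__ == "__main__":
    p, t = 0.6, 0.1  # illustrative success probability and deviation
    for n in (10, 50, 100, 200):
        k = math.floor((p - t) * n)  # largest integer at most (p - t) n
        print(n, binomial_lower_tail(n, p, k), hoeffding_lower_bound(n, t))
```

The bound is crude for small n but decays geometrically in n, and geometric decay is exactly what the Borel-Cantelli argument in Proposition 2.10 requires.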

Using Lemma 2.9 we can obtain the lower bound for Theorem 1.11.

Proposition 2.10

Let . Under the assumptions in Theorem 1.11, almost surely,

Proof

Fix and let be as in Lemma 2.9. For let . Replacing k by in (2.37) and noting that , it follows from the Borel-Cantelli lemma that almost surely there exists a random such that for all ,

| 2.41 |

Here the numbers , which are introduced for notational convenience so we can replace by and by in (2.37), depend on but not on k.

By the strong law of large numbers, almost surely, so almost surely there exists such that for all .

For let

Then

provided that , using (2.41).

Since no faster than geometrically, it suffices to compute the (lower) box-counting dimension along the sequence . Hence

almost surely, on letting and dividing through by . This is valid for all and , so we obtain

| 2.42 |

for all . However, for the infimum in (2.42) is 0 by Lemma 2.3, whereas the denominator is increasing in x. Thus the supremum is achieved by taking , as required.
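The proof reduces the lower box-counting dimension to a computation along a sequence of scales that tends to 0 no faster than geometrically. The standard argument behind this reduction can be written out as follows. The notation N_r(E), r_k and c is ours, since the corresponding symbols are not reproduced in this section. We take N_r(E) to be the maximal number of points in an r-separated subset of a nonempty bounded set E, as in the proof of Lemma 1.8; this quantity is nonincreasing in r.

```latex
\begin{align*}
&\text{Assume } 0 < r_{k+1} < r_k < 1,\ r_k \to 0,\ \text{and } r_{k+1} \ge c\, r_k \text{ for a fixed } 0 < c < 1.\\
&\text{For } r_{k+1} \le r < r_k:\quad N_r(E) \ge N_{r_k}(E) \ge 1
  \quad\text{and}\quad -\log r \le -\log r_{k+1} \le -\log c - \log r_k,\\
&\text{so}\quad \frac{\log N_r(E)}{-\log r} \ge \frac{\log N_{r_k}(E)}{-\log c - \log r_k}.\\
&\text{Since } -\log r_k \to \infty, \text{ the constant } -\log c \text{ is negligible, hence}\\
&\liminf_{r \to 0} \frac{\log N_r(E)}{-\log r} \ge \liminf_{k \to \infty} \frac{\log N_{r_k}(E)}{-\log r_k}.
\end{align*}
```

The reverse inequality is immediate, because (r_k) is one particular sequence of scales tending to 0. The two quantities therefore coincide.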

Proof of Theorem 1.11

For fixed , Theorem 1.11 follows immediately from Propositions 2.6 and 2.10. Further, with probability 1, (1.13) holds simultaneously for all countable subsets and so in particular for . Since (1.13) is continuous in p, it must hold for all simultaneously and so Theorem 1.11 holds.

Box dimension of for

It remains to extend Theorem 1.11 to Theorem 1.12 which we do using the ‘eventually separating’ notion.

Proof of Theorem 1.12

For let

Let for and let . Then and , see (1.9). By Lemma 1.9, eventually separates , and eventually separates . Since f is almost surely monotonic, it preserves ‘eventual separation’ for all pairs of sequences, so eventually separates and eventually separates . By Lemma 1.8,

By Lemma 2.3 is continuous in , so taking arbitrarily close to p, we conclude that .

Further, since ‘eventual separation’ is preserved almost surely for all pairs of sequences and , the box-counting dimension of is constant for all . Applying Theorem 1.11 we get that for all and simultaneously with probability 1.

Decreasing sequences

We now prove the statements in Sect. 1.2.2.

Proof of Lemma 1.8

We may assume that in the definition of eventually separating , since removing a finite number of points from a sequence does not affect its box-counting dimensions. For and E a bounded subset of let be the maximal number of points in an r-separated subset of E, and let be a maximal r-separated subset of (with increasing). Then for each there exists such that . Then is an r-separated set, where N is the largest odd number less than . It follows that . The inequalities now follow from the definition of the lower box-counting dimension , and similarly for the upper box-counting dimension.
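The proof above works with the maximal number of points in an r-separated subset. On the real line this number can be computed greedily. Scan the points in increasing order and keep each point that lies at distance at least r from the last kept point; an exchange argument shows that this gives an r-separated subset of maximal size. The sketch below is our own illustration. It applies the greedy count to the decreasing sequence n^{-p}, whose box-counting dimension is the classical value 1/(1+p). The function names, the convention "distance at least r", and the truncation parameter n_max are hypothetical choices.

```python
import math

def max_separated_count(points, r):
    """Size of a largest r-separated subset of a finite set of reals.

    On the line, scanning in increasing order and keeping every point at
    distance >= r from the previously kept point is optimal (exchange argument).
    """
    count = 0
    last = -math.inf
    for x in sorted(points):
        if x - last >= r:
            count += 1
            last = x
    return count

def box_dimension_estimate(p, r, n_max):
    """Single-scale estimate log N_r / -log r for {n^(-p) : 1 <= n <= n_max}.

    The omitted tail lies in an interval of length < n_max^(-p); if that is
    less than r, the tail changes N_r by at most one point.
    """
    pts = [n ** (-p) for n in range(1, n_max + 1)]
    return math.log(max_separated_count(pts, r)) / -math.log(r)

if __name__ == "__main__":
    p = 1.0
    for r in (1e-3, 1e-4, 1e-5):
        n_max = math.ceil(r ** (-1 / p)) + 1  # ensures n_max^(-p) < r
        est = box_dimension_estimate(p, r, n_max)
        print(f"r={r:g}: estimate {est:.3f} vs 1/(1+p) = {1 / (1 + p):.3f}")
```

The single-scale estimate converges slowly, since log N_r carries a constant offset. For p = 1 and r = 1e-5 it lies somewhat above 1/2, and it approaches 1/2 only as r decreases further.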

Proof of Lemma 1.9

Given there is such that if then

Since the gaps of are decreasing, by comparing with the gaps between and , we see that

for all , for all sufficiently large n, equivalently all sufficiently small . Hence by redefining , given the right-hand inequality of

| 2.43 |

holds for all sufficiently large n; the left-hand inequality follows from a similar estimate. For the sequence

Choose , and take x small enough, that is n, m large enough, for (2.43) and (2.43) to hold. For such an n, choose . Taking m such that ,

Thus the interval intersects the shorter interval , so either or , so eventually separates .

Acknowledgements

The authors thank the anonymous referee for their many helpful suggestions that improved this manuscript. The authors further thank Xiong Jin for his comments on an earlier draft.

Funding

Open access funding provided by Austrian Science Fund (FWF).

Declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Footnotes

ST was funded by Austrian Science Fund (FWF) Grant M-2813.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Kenneth J. Falconer, Email: kjf@st-andrews.ac.uk

Sascha Troscheit, Email: sascha@troscheit.eu

References

- 1. Aldous D. The continuum random tree I. Ann. Probab. 1991;19(1):1–28. doi: 10.1214/aop/1176990534.

- 2. Attia N, Barral J. Hausdorff and packing spectra, large deviations, and free energy for branching random walks in . Commun. Math. Phys. 2014;331(1):139–187. doi: 10.1007/s00220-014-2087-9.

- 3. Barral J, Jin X. Multifractal analysis of complex random cascades. Commun. Math. Phys. 2010;297(1):129–168. doi: 10.1007/s00220-010-1030-y.

- 4. Barral J, Jin X, Mandelbrot B. Convergence of complex multiplicative cascades. Ann. Appl. Probab. 2010;20(4):1219–1252. doi: 10.1214/09-AAP665.

- 5. Barral J, Kupiainen A, Nikula M, Saksman E, Webb C. Critical Mandelbrot cascades. Commun. Math. Phys. 2014;325(2):685–711. doi: 10.1007/s00220-013-1829-4.

- 6. Barral J, Peyrière J. Mandelbrot cascades on random weighted trees and nonlinear smoothing transforms. Asian J. Math. 2018;22(5):883–917. doi: 10.4310/AJM.2018.v22.n5.a5.

- 7. Baum L, Katz M. Convergence rates in the law of large numbers. Trans. Am. Math. Soc. 1965;120:108–123. doi: 10.1090/S0002-9947-1965-0198524-1.

- 8. Benjamini I, Schramm O. KPZ in one dimensional random geometry of multiplicative cascades. Commun. Math. Phys. 2009;289:653–662. doi: 10.1007/s00220-009-0752-1.

- 9. Boutaud P, Maillard P. A revisited proof of the Seneta–Heyde norming from branching random walks under optimal assumptions. Electron. J. Probab. 2019;24:1–22. doi: 10.1214/19-EJP350.

- 10. Ding J, Gwynne E. The fractal dimension of Liouville quantum gravity: universality, monotonicity, and bounds. Commun. Math. Phys. 2020;374:1877–1934. doi: 10.1007/s00220-019-03487-4.

- 11. Duplantier B, Sheffield S. Liouville quantum gravity and KPZ. Invent. Math. 2011;185:333–393. doi: 10.1007/s00222-010-0308-1.

- 12. Falconer KJ. Fractal Geometry: Mathematical Foundations and Applications. 3rd ed. New York: Wiley; 2014.

- 13. Falconer KJ. A capacity approach to box and packing dimensions of projections of sets and exceptional directions. J. Fractal Geom. 2021;8:1–26. doi: 10.4171/JFG/96.

- 14. Falconer KJ, Jin X. Exact dimensionality and projection properties of Gaussian multiplicative chaos measures. Trans. Am. Math. Soc. 2019;372(4):2921–2957. doi: 10.1090/tran/7776.

- 15. Gwynne E, Holden N, Pfeffer J, Remy G. Liouville quantum gravity with matter central charge in : a probabilistic approach. Commun. Math. Phys. 2020;376:1573–1625. doi: 10.1007/s00220-019-03663-6.

- 16. Hu Y, Shi Z. Minimal position and critical martingale convergence in branching random walks, and directed polymers on disordered trees. Ann. Probab. 2009;37:742–789. doi: 10.1214/08-AOP419.

- 17. Jin X. A uniform dimension result for two-dimensional fractional multiplicative processes. Ann. Inst. Henri Poincaré Probab. Stat. 2014;50(2):512–523. doi: 10.1214/12-AIHP509.

- 18. Kahane JP. Some Random Series of Functions. Cambridge: Cambridge University Press; 1985.

- 19. Kahane JP, Peyrière J. Sur certaines martingales de Benoit Mandelbrot. Adv. Math. 1976;22(2):131–145. doi: 10.1016/0001-8708(76)90151-1.

- 20. Knizhnik VG, Polyakov AM, Zamolodchikov AB. Fractal structure of 2d-quantum gravity. Mod. Phys. Lett. A. 1988;3(8):819–826. doi: 10.1142/S0217732388000982.

- 21. Le Gall J-F. Uniqueness and universality of the Brownian map. Ann. Probab. 2013;41(4):2880–2960.

- 22. Mandelbrot B. Intermittent turbulence in self similar cascades: divergence of high moments and dimension of carrier. J. Fluid Mech. 1974;62:331–333. doi: 10.1017/S0022112074000711.

- 23. Molchan G. On the uniqueness of the branching parameter for a random cascade measure. J. Stat. Phys. 2004;115:855–868. doi: 10.1023/B:JOSS.0000022382.88228.fd.

- 24. Troscheit S. On the dimensions of attractors of random self-similar graph directed iterated function systems. J. Fractal Geom. 2017;4(3):257–303. doi: 10.4171/JFG/51.

- 25. Troscheit S. On quasisymmetric embeddings of the Brownian map and continuum trees. Probab. Theory Relat. Fields. 2021;179(3):1023–1046. doi: 10.1007/s00440-020-01024-2.

- 26. Xiao Y. Packing dimension of the image of fractional Brownian motion. Stat. Probab. Lett. 1997;33:379–387. doi: 10.1016/S0167-7152(96)00151-4.