Abstract

A brain–computer interface (BCI) can obtain text information by decoding electroencephalogram (EEG) signals induced by language tasks, which can help restore communication for patients with language impairment. Current BCI systems based on speech imagery of Chinese characters suffer from low feature-classification accuracy. To address this problem, this paper adopts the light gradient boosting machine (LightGBM) to recognize Chinese characters. First, the Db4 wavelet basis function is used to perform a six-level wavelet packet decomposition of the EEG signals over the full frequency band, and features of Chinese-character speech imagery with high time and frequency resolution are extracted. Second, the extracted features are classified with LightGBM, whose two core algorithms are gradient-based one-side sampling and exclusive feature bundling. Finally, statistical analysis verifies that LightGBM classifies more accurately and is more applicable than traditional classifiers, and a contrast experiment is used to evaluate the proposed method. The experimental results show that the average classification accuracies for the subjects’ silent reading of “左(left)” (vs rest), of “壹(one)” (vs rest), and for distinguishing “左(left)” from “壹(one)” are improved by 5.24%, 4.90% and 12.44%, respectively.

Keywords: Brain–computer interface, Chinese characters speech imagery, LightGBM, Feature classification

Introduction

A brain–computer interface (BCI) is a technology that connects the brain with external devices by collecting brain activity signals from humans or animals, enabling the brain to control those devices (Ramakrishnan et al. 2017; Xu et al. 2021). BCI technology is widely used in motor rehabilitation (Pan et al. 2021, 2020), human–computer interaction (Pan et al. 2021, 2020), neural decoding (Lee and Choi 2019; Pan et al. 2022) and other fields. Speech imagery is a relatively new research area of BCI whose experimental paradigm is similar to that of motor imagery. A speech imagery BCI collects the EEG signals produced while subjects imagine speaking (without moving the face or vocal cords), then preprocesses the data and extracts and classifies features to produce a limited set of output commands (Fei and Changjie 2020).

Speech imagery BCI systems fall into three kinds: phoneme, syllable and word speech imagery. Because vowels are the most basic phonemes in English, most studies have focused on phoneme speech imagery. DaSalla et al. collected the EEG signals of subjects imagining two vowels and obtained classification accuracies of 68–78% (DaSalla et al. 2009). Riaz et al. asked subjects to imagine speaking five vowels, and the matching classification accuracy reached 91% (Riaz et al. 2014). At present, syllable speech imagery accounts for a smaller share of BCI research. Zhao et al. recorded 7 imagined syllables and 4 words, with classification accuracy of up to 71% (Zhao and Rudzicz 2015). Hashim et al. collected 2 words imagined by subjects, with an average classification accuracy of 58% (Hashim et al. 2018). Mohanchandra et al. asked subjects to silently read 5 words and performed 5-class classification of the EEG signals, with accuracy of up to 92% (Mohanchandra and Saha 2016).

Because of the phonological differences between English and Chinese, findings from English speech imagery are not directly applicable to most Chinese-speaking patients. Speech imagery based on Chinese characters therefore has important research value. Wang et al. recorded the EEG signals of 8 subjects while they silently read the Chinese characters “左(left)” and “壹(one)”. The average classification accuracy was 83.97% for “左(left)” vs “Rest”, 82.19% for “壹(one)” vs “Rest”, and 66.87% for “左(left)” vs “壹(one)” (Wang et al. 2013). Guo et al. recorded the EEG signals of 9 subjects continuously and silently reading the four Chinese characters “喝(drink)”, “右(right)”, “吃(eat)” and “冷(cold)”, and the average four-class accuracy of the BCI system reached 70% (Miaomiao and Zhiguang 2018). Although research on Chinese-character speech imagery BCIs has laid a certain foundation, these studies generally suffer from low feature-classification accuracy.

Common feature classification algorithms for speech imagery BCIs include support vector machine (SVM) (Martin et al. 2016; Zhao and Rudzicz 2015; Mohanchandra and Saha 2016; Kristensen et al. 2020), linear discriminant analysis (LDA) (Sereshkeh et al. 2019; Lee et al. 2020, 2019; Sereshkeh et al. 2018; Jahangiri and Sepulveda 2017), extreme learning machine (ELM) (Qureshi et al. 2018; Min et al. 2016), etc. SVM is conceptually simple and maximizes the margin between the samples and the decision surface, but it is sensitive to the choice of parameters and kernel function. LDA uses prior knowledge of the categories during dimensionality reduction; its classification relies on the class means, and it reduces dimensionality effectively. However, the limitations of LDA in dimensionality reduction may lead to overfitting. ELM, a learning algorithm for single-hidden-layer feedforward neural networks, learns quickly and generalizes well. However, because ELM considers only the empirical risk and not the structural risk, it is prone to overfitting. This paper introduces a classification algorithm not previously used for this task, the light gradient boosting machine (LightGBM). Compared with the algorithms above, LightGBM adapts well to EEG features and predicts faster than the traditional classification methods. In addition, LightGBM is less prone to overfitting, consumes less memory, achieves higher accuracy, and handles multi-class problems effectively (Abenna et al. 2021; Zeng et al. 2019).

In this paper, we propose a speech imagery BCI classification method based on LightGBM. First, wavelet packet decomposition (WPD) is used to extract features of Chinese-character speech imagery from EEG signals. Second, LightGBM is introduced to classify the features. Gradient-based One-Side Sampling (GOSS) keeps the training instances with large gradients and randomly samples those with small gradients when searching for split points, and Exclusive Feature Bundling (EFB) merges mutually exclusive features, which reduces the number of features and speeds up training while maintaining classification accuracy. Finally, we compare LightGBM with the traditional machine learning classifiers used in speech imagery BCI systems to evaluate its applicability and accuracy, and design a contrast experiment to evaluate the performance of the proposed method. The experimental results show that the average classification accuracy is close to 90% for “左(left)” vs “Rest”, 87.28% for “壹(one)” vs “Rest”, and 75.19% for “左(left)” vs “壹(one)”. These results indicate that the LightGBM-based classification method outperforms the existing algorithms.

Dataset description

The dataset used in this paper comes from Wang et al. (2013). For completeness and to help the reader, this section briefly introduces the experimental paradigm and data acquisition, and then describes how the collected data are preprocessed.

Experimental paradigm

In the experimental paradigm, two Chinese characters with different pronunciations and meanings, “左(left)” and “壹(one)”, are prompted repeatedly. During 0–1 s (idle period), the LCD screen is black with a fixation symbol “+” in the center. Subjects are asked not to perform any imagination task during this period, so that the EEG signals return to baseline and do not interfere with the subsequently collected signals. During 1–2 s (prompt period), “左(left)” or “壹(one)” is displayed at random on the screen. During 2–6 s (imagination period), the character disappears and the screen turns black. Throughout the imagination period, subjects continuously imagine speaking the character without making any sound or moving any facial organs. During the following 6–8 s (relaxation period), the screen displays the fixed symbol “*” on a black background and subjects can rest; this period prevents them from adapting to the experiment. In each run, the two characters were presented 30 times in total, 15 times each, in random order. Each subject completed 5 runs, giving 75 trials per character and 150 trials in total, with a 5 min break between runs. The timing of the experimental paradigm is shown in Fig. 1.
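The trial structure above can be summarized in a short Python sketch. It encodes the phase boundaries of one trial and generates a randomized presentation order with 15 prompts per character in each of 5 runs. The names (`make_session`, `TRIAL_PHASES`) and the use of Python's `random` module are illustrative assumptions, not the original stimulus software.

```python
import random

# Hypothetical names; the counts and timings come from the paradigm description.
CHARACTERS = ("左", "壹")          # "left" and "one"
RUNS_PER_SUBJECT = 5
PRESENTATIONS_PER_RUN = 15         # per character, per run

# Phase boundaries of one trial, in seconds from trial onset.
TRIAL_PHASES = {
    "idle": (0.0, 1.0),
    "prompt": (1.0, 2.0),
    "imagination": (2.0, 6.0),
    "relax": (6.0, 8.0),
}

def make_session(seed=None):
    """Return one list of prompts per run, each character 15 times, shuffled."""
    rng = random.Random(seed)
    session = []
    for _ in range(RUNS_PER_SUBJECT):
        run = [c for c in CHARACTERS for _ in range(PRESENTATIONS_PER_RUN)]
        rng.shuffle(run)
        session.append(run)
    return session

session = make_session(seed=1)
all_trials = [c for run in session for c in run]
print(len(all_trials), all_trials.count("左"), all_trials.count("壹"))  # 150 75 75
```

Shuffling within each run, rather than across the whole session, keeps the 15/15 balance that the text describes for every run.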

Fig. 1.

The diagram of the experimental paradigm

Data acquisition

Studies have shown that the left inferior frontal gyrus is a neuroanatomical basis of speech imagery. Using functional magnetic resonance imaging, Shergill et al. found clear activation in the left inferior frontal gyrus when subjects imagined speaking sentences (Shergill et al. 2001). Bocquelet et al. showed that speech imagery involves the left hemisphere and confirmed that Wernicke’s area (in the superior temporal region of the left hemisphere) and Broca’s area (in the inferior frontal region of the left hemisphere) are strongly involved in speech imagery (Bocquelet et al. 2017).

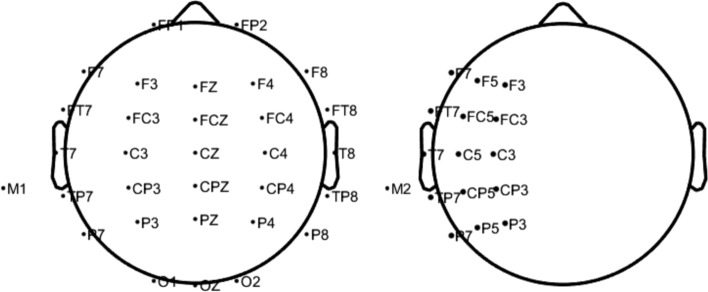

EEG signals were recorded with a Neuroscan Synamps 2 system using the international 10–20 electrode system, as shown in Fig. 2. The data come from two different BCI electrode arrays. The first covers the whole brain with 30 acquisition channels. The second covers the left hemisphere, including Wernicke’s and Broca’s areas, with 15 acquisition channels. The dataset contains the EEG signals of eight physically and mentally healthy Chinese subjects. All subjects are right-handed, aged 22 to 27 years, and had not previously participated in similar experiments. The two subjects recorded with the whole-brain array are denoted A1 and A2, and the six subjects recorded with the left-hemisphere array covering Wernicke’s and Broca’s areas are denoted B1–B6.

Fig. 2.

Electrode locations of two EEG settings

Data analysis and classification

Pre-processing

Horizontal and vertical electrooculogram electrodes (HEOG and VEOG) record ocular artifacts during EEG acquisition, and the reference electrode is placed on the left mastoid (M1). To balance data quality and processing speed, the sampling frequency is set to 250 Hz. First, the collected signals are baseline-corrected and filtered with a 6–30 Hz zero-phase band-pass filter. Second, to remove interference from electrooculogram, electromyogram and heartbeat activity, Independent Component Analysis (ICA) decomposes the filtered signals into independent components, and the artifactual components are removed (Xu et al. 2021). Finally, according to the experimental paradigm and the timing of the speech imagery, the processed EEG signals are segmented into epochs to obtain the preprocessed data.
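A minimal sketch of the baseline correction and zero-phase band-pass steps, assuming NumPy and SciPy. The text specifies only the 6–30 Hz band and the zero-phase property; the 4th-order Butterworth design is an assumption, and ICA artifact removal is not shown.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 250.0  # sampling frequency in Hz, from the text

def baseline_correct(eeg, n_baseline):
    """Subtract each channel's mean over the first n_baseline samples."""
    return eeg - eeg[..., :n_baseline].mean(axis=-1, keepdims=True)

def bandpass_zero_phase(eeg, low=6.0, high=30.0, fs=FS, order=4):
    """Band-pass filter applied forward and backward along the time axis.

    Running the filter in both directions cancels its phase shift (zero phase).
    The Butterworth design and order=4 are assumptions; the text gives only the band.
    """
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)

# Example: 8 s of a 2 Hz drift plus a 15 Hz component on one channel
t = np.arange(0, 8, 1 / FS)
raw = np.sin(2 * np.pi * 2 * t) + np.sin(2 * np.pi * 15 * t)
filtered = bandpass_zero_phase(baseline_correct(raw[None, :], 250))
```

Because `sosfiltfilt` applies the filter twice, the effective magnitude response is the square of the single-pass response, which is worth keeping in mind when choosing the order.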

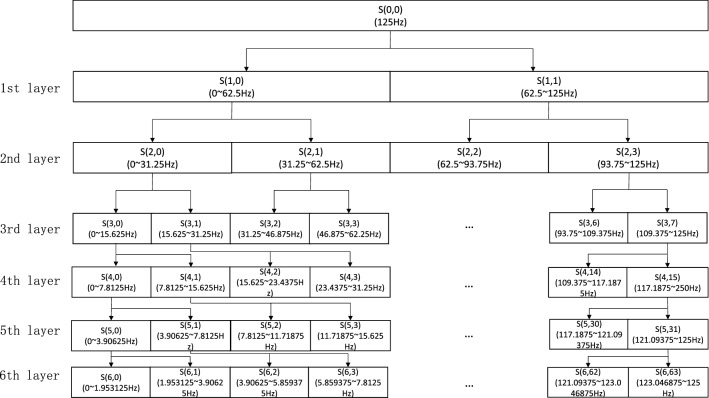

Feature extraction using WPD

The wavelet transform is a time-frequency analysis method widely used to extract features from EEG signals. It successively decomposes the low-frequency part of the signal but does not further decompose the high-frequency part (Nguyen et al. 2017). It therefore cannot resolve the detail information contained in EEG signals well, and cannot provide high time resolution and high frequency resolution at the same time. Wavelet packet decomposition (WPD) is a more refined method that overcomes the poor frequency resolution of wavelet decomposition in the high-frequency part. Because it decomposes both the low-frequency and the high-frequency parts, it better analyzes the time-frequency localization of signals that contain a large amount of medium- and high-frequency information and improves the frequency resolution in the high-frequency bands. The result of WPD depends on the choice of wavelet basis function. Because the EEG signals recorded by the acquisition equipment are discrete, a wavelet basis function of the discrete wavelet transform is selected for WPD. In this paper, the Db4 wavelet basis function is used to decompose each channel to six levels, and features of the EEG signals of Chinese characters are extracted from the sixth-level WPD coefficients.

Wavelet packet decomposition of the EEG signal s(t) down to the $i$th level yields $2^{i}$ subbands, and s(t) can be written as

$$
s(t) = \sum_{j=0}^{2^{i}-1} s_{i,j}(t_j) \tag{1}
$$

where $s_{i,j}(t_j)$, $j = 0, 1, \ldots, 2^{i}-1$, is the signal reconstructed from node $(i, j)$ and $t_j$ is the time of the $j$th node. If the lowest frequency of s(t) is 0 and the highest frequency is $f_{\max}$, each subband at the $i$th level has a frequency width of $f_{\max}/2^{i}$. For the Chinese-character speech imagery dataset sampled at 250 Hz, we find that the Db4 wavelet basis function decomposes the signals effectively; a six-level Db4 wavelet packet decomposition is therefore applied, as shown in Fig. 3. With $f_{\max} = 125$ Hz (half the sampling rate), each of the $2^{6} = 64$ sixth-level subbands spans about 1.95 Hz.
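The six-level Db4 decomposition and the nominal subband widths can be sketched with the PyWavelets package (`pywt`). The choice of PyWavelets, the `"symmetric"` boundary mode and frequency-ordered nodes are assumptions of this sketch; the text does not name a toolbox.

```python
import numpy as np
import pywt

FS = 250.0   # sampling frequency (Hz)
LEVEL = 6    # decomposition depth used in the text

def wpd_subbands(x, wavelet="db4", level=LEVEL):
    """Wavelet packet decomposition of one channel.

    Returns the 2**level coefficient arrays of the deepest level, ordered from
    the lowest to the highest frequency band.
    """
    wp = pywt.WaveletPacket(data=x, wavelet=wavelet, mode="symmetric", maxlevel=level)
    return [node.data for node in wp.get_level(level, order="freq")]

def subband_ranges(fs=FS, level=LEVEL):
    """Nominal frequency range of each node at `level`, splitting 0..fs/2 evenly."""
    width = (fs / 2) / 2 ** level
    return [(j * width, (j + 1) * width) for j in range(2 ** level)]

x = np.random.default_rng(0).standard_normal(1000)   # 4 s of synthetic data at 250 Hz
bands = wpd_subbands(x)
ranges = subband_ranges()
print(len(bands), ranges[0], ranges[-1])   # 64 (0.0, 1.953125) (123.046875, 125.0)
```

The equal-width ranges are nominal: real Db4 filters overlap, so each node's energy leaks slightly into neighbouring bands.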

Fig. 3.

Six-layer wavelet packet decomposition

According to Parseval’s theorem, the total energy of a signal in the time domain equals that in the frequency domain, so the energy of each subband can be computed from the WPD of the EEG signal at the $i$th level:

$$
E_{i,j} = \sum_{k=1}^{m} \left| x_{j,k} \right|^{2} \tag{2}
$$

where $x_{j,k}$, $k = 1, 2, \ldots, m$, is the amplitude of the $k$th discrete sample of the reconstructed signal $s_{i,j}$, $m$ is the number of samples, and $E_{i,j}$ is the energy of the $j$th node at the $i$th level. The total wavelet packet energy of s(t) is

$$
E = \sum_{j=0}^{2^{i}-1} E_{i,j} \tag{3}
$$

The percentage of the total energy carried by each subband is taken as the feature vector of the signal:

$$
p_{i,j} = \frac{E_{i,j}}{E} \times 100\%, \qquad T = \left[ p_{i,0}, p_{i,1}, \ldots, p_{i,2^{i}-1} \right] \tag{4}
$$
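Equations (2)–(4) translate directly into a few lines of NumPy. This sketch computes the energy percentages from each node's samples; how per-channel vectors are combined into one trial feature vector is not stated in the text, so the concatenation in the hypothetical `channel_feature_vector` is an assumption.

```python
import numpy as np

def wpd_energy_features(subbands):
    """Relative energy (in %) of each subband, following Eqs. (2)-(4).

    subbands: sequence of 1-D arrays, one per node (i, j) at the chosen level.
    Returns p with p[j] = 100 * E_{i,j} / E.
    """
    energies = np.array([np.sum(np.abs(np.asarray(s)) ** 2) for s in subbands])  # Eq. (2)
    total = energies.sum()                                                    # Eq. (3)
    if total == 0:
        return np.zeros_like(energies)
    return 100.0 * energies / total                                            # Eq. (4)

def channel_feature_vector(channel_subbands):
    """Concatenate the per-channel energy percentages into one feature vector."""
    return np.concatenate([wpd_energy_features(sb) for sb in channel_subbands])
```

Passing the node coefficients of a Db4 decomposition instead of the reconstructed node signals gives approximately the same energies, because Db4 is orthogonal, up to boundary effects.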

Feature classification using LightGBM

LightGBM is a fast, distributed, high-performance gradient boosting framework based on decision trees (Ke et al. 2017; Abenna et al. 2022). Its two core algorithms are GOSS and EFB. In this paper, GOSS is used when searching for the split points of the features, sampling the training instances according to their gradients and reweighting the sampled ones, and EFB bundles mutually exclusive features to reduce the number of features, speed up computation and perform feature selection.

Segmentation of EEG features by GOSS

Training instances with large gradients have more influence on the information gain, so when downsampling, the instances with larger gradients are retained and those with smaller gradients are randomly subsampled, which improves training efficiency. Suppose the training set contains $n$ instances $\{x_1, \ldots, x_n\}$, each an $s$-dimensional vector of EEG features, and let $\{g_1, \ldots, g_n\}$ be the negative gradients of the loss function with respect to the model output. The extracted speech imagery feature values are assigned to tree nodes by finding the split point with the maximum information gain. For feature $j$, the variance gain of splitting the instances of a node at point $d$ is

$$
V_{j|O}(d) = \frac{1}{n_O}\left( \frac{\left(\sum_{\{x_i \in O:\, x_{ij} \le d\}} g_i\right)^{2}}{n^{j}_{l|O}(d)} + \frac{\left(\sum_{\{x_i \in O:\, x_{ij} > d\}} g_i\right)^{2}}{n^{j}_{r|O}(d)} \right) \tag{5}
$$

$$
n_O = \sum_{i} I\left[x_i \in O\right] \tag{6}
$$

$$
n^{j}_{l|O}(d) = \sum_{i} I\left[x_i \in O:\, x_{ij} \le d\right] \tag{7}
$$

$$
n^{j}_{r|O}(d) = \sum_{i} I\left[x_i \in O:\, x_{ij} > d\right] \tag{8}
$$

where $O$ is the set of training instances in a leaf node of the decision tree, $n_O$ is the number of those instances, $n^{j}_{l|O}(d)$ is the number of instances whose value on the $j$th feature is less than or equal to $d$, and $n^{j}_{r|O}(d)$ is the number of instances whose value on the $j$th feature is greater than $d$.

After the candidate split points are found, the split points of every feature are traversed to compute $d_j^{*} = \arg\max_{d} V_{j|O}(d)$ and the maximum information gain $V_{j|O}(d_j^{*})$. Finally, the data are divided into the left and right child nodes at the selected feature and split point.
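Equations (5)–(8) and the search for the best split point can be sketched in NumPy as follows. This is an illustrative exhaustive search over the distinct values of one feature; LightGBM itself evaluates splits on feature histograms, so this is not its implementation.

```python
import numpy as np

def variance_gain(x_j, g, d):
    """V_{j|O}(d) of Eq. (5) for instances with feature values x_j and gradients g."""
    left = x_j <= d
    right = ~left
    n_o = x_j.size                         # Eq. (6)
    n_l, n_r = left.sum(), right.sum()     # Eqs. (7) and (8)
    if n_l == 0 or n_r == 0:
        return 0.0                         # degenerate split: all instances on one side
    return (g[left].sum() ** 2 / n_l + g[right].sum() ** 2 / n_r) / n_o

def best_split(x_j, g):
    """Try every distinct value of x_j as threshold; return (d*, V(d*))."""
    candidates = np.unique(x_j)[:-1]       # the largest value would empty the right side
    if candidates.size == 0:
        return None, 0.0
    gains = [variance_gain(x_j, g, d) for d in candidates]
    k = int(np.argmax(gains))
    return float(candidates[k]), float(gains[k])

x = np.array([1.0, 2.0, 3.0, 4.0])
g = np.array([1.0, 1.0, -1.0, -1.0])
print(best_split(x, g))   # (2.0, 1.0)
```

The split at d = 2 separates the positive from the negative gradients, which is why it maximizes the gain in this toy example.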

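GOSS proceeds as follows. First, the training instances are sorted in descending order of the absolute values of their gradients, and the top $a \times 100\%$ instances are kept as subset $A$. Second, $b \times 100\%$ instances are randomly sampled from the remaining instances to form subset $B$. Finally, the information gain is estimated on $A \cup B$ (written here for a node containing all $n$ instances), with the gradients of the instances in $B$ amplified by $(1-a)/b$ to compensate for the sampling:

$$
\tilde{V}_{j}(d) = \frac{1}{n}\left( \frac{\left(\sum_{x_i \in A_l} g_i + \frac{1-a}{b}\sum_{x_i \in B_l} g_i\right)^{2}}{n^{j}_{l}(d)} + \frac{\left(\sum_{x_i \in A_r} g_i + \frac{1-a}{b}\sum_{x_i \in B_r} g_i\right)^{2}}{n^{j}_{r}(d)} \right) \tag{9}
$$

where $A_l = \{x_i \in A: x_{ij} \le d\}$, $A_r = \{x_i \in A: x_{ij} > d\}$, $B_l = \{x_i \in B: x_{ij} \le d\}$ and $B_r = \{x_i \in B: x_{ij} > d\}$.

Because GOSS estimates the information gain on a much smaller set of instances, it greatly reduces computation without traversing all samples while preserving classification accuracy.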
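The GOSS selection and the reweighted gain of Eq. (9) can be sketched as below, assuming NumPy. Following Ke et al. (2017), a and b are fractions of the training set and the sampled small-gradient instances are scaled by (1 − a)/b. The default values a = 0.2 and b = 0.1, and the use of summed weights as estimates of the left/right instance counts, are assumptions of this sketch.

```python
import numpy as np

def goss_sample(g, a=0.2, b=0.1, rng=None):
    """Select instances as in GOSS and return (indices, weights).

    The top a*100% instances by |g| form set A (weight 1); b*100% of the
    instances are drawn at random from the rest to form set B (weight (1-a)/b).
    """
    rng = np.random.default_rng() if rng is None else rng
    n = g.size
    order = np.argsort(-np.abs(g))                    # descending |gradient|
    n_top, n_rand = int(round(a * n)), int(round(b * n))
    idx_a = order[:n_top]
    idx_b = rng.choice(order[n_top:], size=n_rand, replace=False)
    weights = np.concatenate([np.ones(n_top), np.full(n_rand, (1 - a) / b)])
    return np.concatenate([idx_a, idx_b]), weights

def goss_gain(x_j, g, d, idx, weights, n):
    """Estimated variance gain of Eq. (9) on A ∪ B for threshold d.

    Gradients in B are scaled by (1-a)/b through `weights`; the left/right
    instance counts are estimated by the summed weights (an assumption).
    """
    xs, gw = x_j[idx], g[idx] * weights
    left = xs <= d
    n_l, n_r = weights[left].sum(), weights[~left].sum()
    if n_l == 0 or n_r == 0:
        return 0.0
    return float((gw[left].sum() ** 2 / n_l + gw[~left].sum() ** 2 / n_r) / n)

rng = np.random.default_rng(0)
x_j = rng.standard_normal(100)     # one feature for 100 instances
g = rng.standard_normal(100)       # their negative gradients
idx, w = goss_sample(g, rng=rng)
gain = goss_gain(x_j, g, 0.0, idx, w, n=100)
```

With a = 0.2 and b = 0.1, only 30 of the 100 instances are used, yet the weights sum back to 100, so the sampled set stands in for the full node.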

Selection of extracted features by EFB

In practice, high-dimensional feature spaces are often sparse, and simply discarding features loses information. EFB therefore bundles mutually exclusive features (features that rarely take nonzero values at the same time). This reduces the bundling problem to a graph coloring problem, and a greedy algorithm yields an approximate solution that effectively reduces the number of features without significantly affecting the accuracy of the split points. First, EFB converts the problem of bundling mutually exclusive features into graph coloring: each feature is a vertex, and every two features that are not mutually exclusive are connected by an edge whose weight is their total conflict, that is, the degree to which they are not mutually exclusive. Features that can be bundled correspond to vertices painted with the same color. Second, the features in a bundle are merged by adding offsets to their original values. For example, suppose feature A takes values in [0, 10] and feature B takes values in [0, 20]. Adding an offset of 10 to B moves it to [10, 30], so A and B can be merged exactly into a single feature with range [0, 30] that replaces both.
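The two EFB steps, greedy bundling on the conflict graph and offset-based merging, can be sketched in NumPy. This is a simplified illustration that works on raw non-negative feature values and treats zero as "absent"; LightGBM applies the offsets to histogram bins rather than raw values, and `max_conflict = 0` is an assumption.

```python
import numpy as np

def conflict_matrix(X):
    """Number of rows in which each pair of features is nonzero together."""
    nz = (X != 0).astype(int)
    conflicts = nz.T @ nz
    np.fill_diagonal(conflicts, 0)
    return conflicts

def greedy_bundles(X, max_conflict=0):
    """Visit features by decreasing degree in the conflict graph and add each to
    the first bundle whose total conflict stays within max_conflict;
    otherwise open a new bundle."""
    conflicts = conflict_matrix(X)
    degree = (conflicts > 0).sum(axis=1)
    bundles, bundle_conflict = [], []
    for f in np.argsort(-degree, kind="stable"):
        for k, bundle in enumerate(bundles):
            extra = conflicts[f, bundle].sum()
            if bundle_conflict[k] + extra <= max_conflict:
                bundle.append(int(f))
                bundle_conflict[k] += extra
                break
        else:                      # no existing bundle accepted the feature
            bundles.append([int(f)])
            bundle_conflict.append(0)
    return bundles

def merge_bundle(X, bundle):
    """Merge the non-negative features of one bundle into one column by shifting
    each feature's nonzero values by the summed maxima of the previous ones."""
    merged = np.zeros(X.shape[0])
    offset = 0.0
    for f in bundle:
        col = X[:, f]
        nz = col != 0
        merged[nz] = col[nz] + offset   # on a conflict, the later feature overwrites
        offset += col.max()
    return merged

X = np.array([[1.0, 0.0, 2.0],
              [0.0, 5.0, 0.0],
              [3.0, 0.0, 1.0],
              [0.0, 4.0, 0.0]])
bundles = greedy_bundles(X)          # features 0 and 1 never overlap
merged = merge_bundle(X, bundles[0])
```

Feature 2 shares nonzero rows with feature 0, so it ends up in its own bundle, while features 0 and 1 are merged into one column whose values 1 and 3 come from feature 0 and 7 and 8 from feature 1.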

Results and discussions

Process implementation

For each subject, the EEG signals are band-pass filtered at 6–30 Hz, and ICA is used to decompose the filtered signals and remove electrooculogram, electromyogram and heartbeat artifacts. The EEG data of each subject are divided into three parts: the data recorded while the subject imagines speaking “左(left)” during 2–6 s, the data recorded while the subject imagines speaking “壹(one)” during 2–6 s, and the data recorded during the relaxation period of 6–8 s.
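Cutting the three parts from a continuous recording amounts to indexing fixed windows after each trial onset; at 250 Hz, 2–6 s corresponds to samples 500 to 1500 after the onset and 6–8 s to samples 1500 to 2000. The sketch assumes the onsets are available as sample indices, which is a hypothetical input format, and `extract_epochs` is an illustrative name.

```python
import numpy as np

FS = 250  # Hz

# Windows (seconds from trial onset) named in the text
WINDOWS = {"imagination": (2.0, 6.0), "rest": (6.0, 8.0)}

def extract_epochs(eeg, onsets, window, fs=FS):
    """Cut one window from every trial.

    eeg: continuous recording, shape (n_channels, n_samples)
    onsets: sample index of t = 0 for each trial (hypothetical input format)
    Returns an array of shape (n_trials, n_channels, window_length_in_samples).
    """
    start, stop = (int(round(t * fs)) for t in window)
    return np.stack([eeg[:, o + start:o + stop] for o in onsets])

eeg = np.arange(2 * 5000, dtype=float).reshape(2, 5000)   # toy 2-channel recording
onsets = [0, 2000]                                         # two 8 s trials
imag = extract_epochs(eeg, onsets, WINDOWS["imagination"])
rest = extract_epochs(eeg, onsets, WINDOWS["rest"])
```

Splitting the imagination epochs by the character shown in the prompt period then gives the “左(left)” and “壹(one)” parts, and the rest epochs form the third part.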

After WPD feature extraction, the EEG data are divided into training and test sets by 10-fold cross-validation, which gives a reliable estimate of the accuracy of classifying any two of the three parts. Specifically, the data are randomly divided into ten parts; nine parts form the training set used to build the LightGBM model, and the remaining part is the test set used to measure classification accuracy. Each of the ten parts serves once as the test set, so training and testing are repeated ten times. For the Chinese-character speech imagery BCI, the main parameters of the LightGBM model are fixed as shown in Table 1.
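The 10-fold procedure can be sketched as below, assuming NumPy. `fit_predict` is a hypothetical callback, and `nearest_class_mean` is only a stand-in classifier so that the sketch runs without LightGBM; the folds are random rather than stratified.

```python
import numpy as np

def kfold_indices(n, k=10, seed=0):
    """Shuffle 0..n-1 and split into k folds of nearly equal size."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n), k)

def cross_validate(X, y, fit_predict, k=10, seed=0):
    """k-fold CV: each fold is the test set once, the other k-1 folds train.

    fit_predict(X_train, y_train, X_test) must return predicted labels.
    Returns the accuracy of every fold.
    """
    folds = kfold_indices(len(y), k, seed)
    accuracies = []
    for i, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        y_pred = fit_predict(X[train_idx], y[train_idx], X[test_idx])
        accuracies.append(float(np.mean(y_pred == y[test_idx])))
    return np.array(accuracies)

def nearest_class_mean(X_train, y_train, X_test):
    """Hypothetical stand-in classifier used only to exercise cross_validate."""
    classes = np.unique(y_train)
    means = np.stack([X_train[y_train == c].mean(axis=0) for c in classes])
    dists = ((X_test[:, None, :] - means[None, :, :]) ** 2).sum(axis=-1)
    return classes[np.argmin(dists, axis=1)]

rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 1, (75, 8)), rng.normal(3, 1, (75, 8))])
y = np.repeat([0, 1], 75)
fold_acc = cross_validate(X, y, nearest_class_mean)
```

To evaluate LightGBM instead, `fit_predict` could wrap the scikit-learn interface of the `lightgbm` package, for example `lambda Xtr, ytr, Xte: LGBMClassifier().fit(Xtr, ytr).predict(Xte)`.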

Table 1.

LightGBM parameter selection

| Parameter | Value | Parameter meaning |
|---|---|---|
| Boosting_type | GBDT | Boosting algorithm used in LightGBM |
| Learning_rate | 0.01 | Learning rate |
| Num_leaves | 500 | Number of leaves per decision tree |
| Min_data_in_leaf | 20 | Minimum number of samples in a leaf (default 20) |
| Lambda_l1 | 1 | L1 regularization coefficient |
| Lambda_l2 | 0.001 | L2 regularization coefficient |
| Feature_fraction | 0.8 | Fraction of features randomly selected in each iteration (80%) |
| Device | GPU | Computing device (CPU or GPU) |

The parameters of LightGBM must be tuned when classifying Chinese-character speech imagery features. num_leaves is the main parameter controlling the complexity of the tree model. In theory, it can be derived from the maximum depth of the tree, max_depth, as num_leaves = 2^(max_depth). However, this simple conversion does not work well in practice: LightGBM grows trees leaf-wise, and the resulting model may overfit. We therefore keep num_leaves smaller than 2^(max_depth) to avoid overfitting while maintaining high classification accuracy.

max_depth is left at its default so that num_leaves can be chosen more flexibly, and we then empirically set num_leaves = 500. min_data_in_leaf is an important parameter for preventing overfitting and defaults to 20. To obtain higher classification accuracy, a small learning_rate should be chosen; in this paper, learning_rate = 0.01 (Table 1). feature_fraction = 0.8 is set to use feature subsampling, and lambda_l1 = 1 and lambda_l2 = 0.001 are chosen empirically for regularization. The model is implemented in Python and trained and tested on a computer with an Intel i5 CPU, an NVIDIA GeForce MX350 GPU and 8 GB of RAM.
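For reference, Table 1 can be written as a parameter dictionary using LightGBM's native parameter names. The objective and the explicit max_depth = -1 are assumptions, since the text does not list them, and `device_type = "gpu"` requires a GPU-enabled LightGBM build.

```python
# Table 1 expressed as a LightGBM parameter dictionary (native parameter names).
# "objective" is not listed in the text; "binary" is an assumption that fits the
# pairwise comparisons (e.g. 左 vs Rest).
lgbm_params = {
    "objective": "binary",
    "boosting_type": "gbdt",
    "learning_rate": 0.01,
    "num_leaves": 500,
    "max_depth": -1,            # LightGBM default: no depth limit (assumed)
    "min_data_in_leaf": 20,
    "lambda_l1": 1.0,
    "lambda_l2": 0.001,
    "feature_fraction": 0.8,    # use 80% of the features in each iteration
    "device_type": "gpu",       # needs a GPU-enabled build; use "cpu" otherwise
}
```

Such a dictionary could be passed to `lightgbm.train` together with a `lightgbm.Dataset` built from the training features and labels; the number of boosting rounds is not reported in the text.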

Comparison with traditional classifiers

Language accounts for a large proportion of human communication. If BCI technology can decode the inner speech of patients with aphasia, it can greatly improve their quality of life. The main purpose of this paper is to use a new machine learning algorithm to classify the EEG signals of Chinese-character speech imagery more accurately. To illustrate the advantage of LightGBM as a speech imagery classifier, we use statistical analysis to compare its classification performance with that of traditional classification algorithms (LDA, ELM and SVM). The analysis evaluates the applicability and accuracy of LightGBM relative to these traditional machine learning classification algorithms.

The results of the four classification models (LDA, ELM, SVM and LightGBM) under four metrics (Ketu and Mishra 2022), namely accuracy, precision, recall and F1-score, are shown in Figs. 4, 5 and 6, and the detailed data are given in appendix Tables 3, 4 and 5. Compared with the traditional classification algorithms, the proposed LightGBM-based method achieves higher accuracy, reaching up to 99%.
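The four metrics can be computed as in the sketch below, assuming NumPy. The text does not specify how precision, recall and F1-score are averaged over the two classes, so macro averaging is an assumption here.

```python
import numpy as np

def classification_metrics(y_true, y_pred):
    """Accuracy and macro-averaged precision, recall and F1-score, in percent."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    precisions, recalls, f1s = [], [], []
    for c in np.unique(np.concatenate([y_true, y_pred])):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        p = tp / (tp + fp) if tp + fp > 0 else 0.0
        r = tp / (tp + fn) if tp + fn > 0 else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r > 0 else 0.0)
    return {
        "accuracy": 100 * float(np.mean(y_true == y_pred)),
        "precision": 100 * float(np.mean(precisions)),
        "recall": 100 * float(np.mean(recalls)),
        "f1_score": 100 * float(np.mean(f1s)),
    }

scores = classification_metrics([0, 0, 1, 1], [0, 1, 1, 1])
```

In this toy example, accuracy is 75%, while macro precision (about 83.3%) and macro F1 (about 73.3%) differ because the single error affects the two classes unequally.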

Fig. 4.

Performance of different classifiers under four metrics for “左(left) vs Rest”

Fig. 5.

Performance of different classifiers under four metrics for “壹(one) vs Rest”

Fig. 6.

Performance of different classifiers under four metrics for “左(left) vs 壹(one)”

Table 3.

Detailed performance of different classifiers under four metrics for “左(left) vs Rest”

| Classifiers | A1 (%) | A2 (%) | B1 (%) | B2 (%) | B3 (%) | B4 (%) | B5 (%) | B6 (%) |
|---|---|---|---|---|---|---|---|---|
| LDA | | | | | | | | |
| Accuracy | 66.62 | 66.36 | 80.68 | 65.93 | 72.84 | 78.83 | 71.02 | 73.13 |
| Precision | 69.71 | 67.73 | 83.21 | 69.31 | 75.95 | 80.67 | 73.63 | 75.89 |
| Recall | 66.62 | 66.36 | 80.68 | 65.93 | 72.84 | 78.83 | 71.00 | 72.08 |
| f1_score | 66.96 | 65.84 | 80.99 | 66.41 | 73.30 | 78.84 | 71.28 | 73.45 |
| ELM | | | | | | | | |
| Accuracy | 75.47 | 75.84 | 80.01 | 68.61 | 72.33 | 73.44 | 74.63 | 69.93 |
| Precision | 78.40 | 78.38 | 82.23 | 72.82 | 76.08 | 75.73 | 76.84 | 73.78 |
| Recall | 75.47 | 75.95 | 80.01 | 68.61 | 72.33 | 73.43 | 74.34 | 69.93 |
| f1_score | 75.74 | 76.16 | 80.34 | 69.33 | 72.95 | 73.62 | 74.86 | 70.37 |
| SVM | | | | | | | | |
| Accuracy | 78.46 | 79.47 | 83.95 | 90.61 | 78.28 | 93.68 | 70.74 | 74.08 |
| Precision | 81.10 | 82.95 | 85.07 | 91.34 | 80.31 | 93.51 | 73.04 | 75.53 |
| Recall | 78.36 | 79.48 | 83.37 | 90.42 | 78.62 | 93.68 | 71.27 | 74.08 |
| f1_score | 76.84 | 76.12 | 83.22 | 90.62 | 77.22 | 93.87 | 71.01 | 72.43 |
| LightGBM | | | | | | | | |
| Accuracy | 88.95 | 86.38 | 91.36 | 91.75 | 92.43 | 99.16 | 83.57 | 83.37 |
| Precision | 90.20 | 92.63 | 95.97 | 95.17 | 92.50 | 99.25 | 88.04 | 87.91 |
| Recall | 88.39 | 86.11 | 91.46 | 92.51 | 91.11 | 99.34 | 82.31 | 82.56 |
| f1_score | 89.29 | 87.04 | 93.41 | 93.61 | 91.08 | 99.27 | 85.03 | 84.73 |

Table 4.

Detailed performance of different classifiers under four metrics for “壹(one) vs Rest”

| Classifiers | A1 (%) | A2 (%) | B1 (%) | B2 (%) | B3 (%) | B4 (%) | B5 (%) | B6 (%) |
|---|---|---|---|---|---|---|---|---|
| LDA | | | | | | | | |
| Accuracy | 70.68 | 65.61 | 83.21 | 70.39 | 69.62 | 76.17 | 71.72 | 69.66 |
| Precision | 72.59 | 66.47 | 85.12 | 73.79 | 72.85 | 78.58 | 74.33 | 72.95 |
| Recall | 70.49 | 65.61 | 83.22 | 70.42 | 69.53 | 75.84 | 70.95 | 69.66 |
| f1_score | 70.27 | 64.92 | 83.47 | 70.96 | 70.13 | 76.36 | 72.13 | 70.01 |
| ELM | | | | | | | | |
| Accuracy | 75.82 | 71.14 | 77.04 | 73.77 | 70.01 | 72.09 | 69.65 | 67.74 |
| Precision | 79.20 | 73.87 | 79.45 | 77.21 | 73.94 | 74.86 | 72.57 | 71.27 |
| Recall | 75.80 | 71.73 | 77.09 | 73.57 | 71.29 | 73.96 | 69.33 | 67.65 |
| f1_score | 76.15 | 71.33 | 77.35 | 74.31 | 70.50 | 72.51 | 69.99 | 68.28 |
| SVM | | | | | | | | |
| Accuracy | 77.73 | 77.25 | 85.79 | 88.98 | 74.74 | 95.89 | 70.88 | 69.43 |
| Precision | 80.51 | 82.52 | 86.79 | 90.04 | 77.13 | 96.23 | 72.76 | 70.11 |
| Recall | 77.65 | 77.25 | 85.78 | 88.64 | 74.36 | 94.53 | 71.72 | 69.43 |
| f1_score | 74.68 | 73.00 | 85.54 | 89.01 | 71.56 | 95.78 | 70.51 | 64.58 |
| LightGBM | | | | | | | | |
| Accuracy | 88.04 | 86.33 | 90.57 | 92.55 | 86.29 | 97.05 | 78.57 | 81.18 |
| Precision | 88.61 | 85.71 | 90.22 | 90.22 | 86.02 | 94.59 | 78.32 | 80.68 |
| Recall | 86.16 | 83.60 | 90.38 | 90.41 | 84.12 | 97.18 | 72.50 | 78.29 |
| f1_score | 87.49 | 84.95 | 90.76 | 85.21 | 85.20 | 95.52 | 78.54 | 80.25 |

Table 5.

Detailed performance of different classifiers under four metrics for “左(left) vs 壹(one)”

| Classifiers | A1 (%) | A2 (%) | B1 (%) | B2 (%) | B3 (%) | B4 (%) | B5 (%) | B6 (%) |
|---|---|---|---|---|---|---|---|---|
| LDA | | | | | | | | |
| Accuracy | 57.71 | 59.20 | 62.31 | 61.13 | 58.43 | 61.00 | 68.00 | 61.33 |
| Precision | 61.21 | 63.24 | 65.04 | 63.52 | 61.88 | 63.10 | 70.17 | 65.11 |
| Recall | 60.17 | 60.00 | 62.26 | 61.23 | 58.73 | 61.67 | 68.33 | 61.67 |
| f1_score | 60.59 | 60.46 | 62.17 | 60.82 | 58.11 | 61.10 | 68.09 | 60.86 |
| ELM | | | | | | | | |
| Accuracy | 72.63 | 64.13 | 73.19 | 65.13 | 67.60 | 67.00 | 70.00 | 76.00 |
| Precision | 75.71 | 67.96 | 76.16 | 68.01 | 71.06 | 69.80 | 72.85 | 80.64 |
| Recall | 72.33 | 64.13 | 73.19 | 65.33 | 67.45 | 67.33 | 70.37 | 76.00 |
| f1_score | 72.52 | 64.02 | 73.17 | 64.98 | 67.44 | 66.92 | 69.83 | 76.08 |
| SVM | | | | | | | | |
| Accuracy | 70.13 | 60.20 | 70.75 | 60.47 | 65.67 | 65.67 | 63.67 | 69.33 |
| Precision | 75.62 | 66.94 | 74.37 | 65.04 | 69.94 | 70.20 | 67.52 | 75.11 |
| Recall | 70.11 | 61.33 | 70.25 | 60.45 | 65.68 | 65.33 | 63.29 | 69.37 |
| f1_score | 69.96 | 64.06 | 70.71 | 60.04 | 65.44 | 65.75 | 63.63 | 69.00 |
| LightGBM | | | | | | | | |
| Accuracy | 78.67 | 75.73 | 78.26 | 77.70 | 72.97 | 72.00 | 70.33 | 80.33 |
| Precision | 78.81 | 77.62 | 78.66 | 76.48 | 73.61 | 77.36 | 72.29 | 81.28 |
| Recall | 79.32 | 75.86 | 77.06 | 79.48 | 74.02 | 68.38 | 73.60 | 80.15 |
| f1_score | 77.21 | 74.93 | 77.43 | 75.82 | 71.81 | 70.93 | 70.67 | 77.41 |

Performance of the proposed method

To verify the effectiveness of the proposed method, we compare it with the method of Wang et al. (2013). The results of both methods are listed in Table 2, where every value is the average over 20 repeated tests. To further compare the two methods, we also compute the average classification accuracy over all subjects. For silently reading “左(left)” vs “Rest”, the classification accuracies of our method range from 81.15% to 99.36%, and those of subjects B1, B2, B3 and B4 exceed 90%. In Wang et al. (2013), the accuracies of subjects B1 and B3 are below 80%, whereas our method reaches 99.36% for subject B4. For silently reading “壹(one)” vs “Rest”, the accuracies of our method range from 78.47% to 96.98%, and all subjects except B5 exceed 80%, whereas half of the accuracies in Wang et al. (2013) are below 80%. For silently reading “左(left)” vs “壹(one)”, the accuracies of our method are lower than those for the two character-vs-rest tasks, but subjects A1, A2, B1, B2, B3, B5 and B6 all exceed 70%, whereas five of the eight accuracies in Wang et al. (2013) are below 70%.

Table 2.

Accuracy of speech imagery classification based on LightGBM

| Subject | 左(left) vs Rest, Literature (Wang et al. 2013) | 左(left) vs Rest, Our study | 壹(one) vs Rest, Literature (Wang et al. 2013) | 壹(one) vs Rest, Our study | 左(left) vs 壹(one), Literature (Wang et al. 2013) | 左(left) vs 壹(one), Our study |
|---|---|---|---|---|---|---|
| A1 | 82.13 ± 1.56 | 88.95 ± 1.82 | 76.34 ± 3.24 | 88.61 ± 2.54 | 71.06 ± 2.79 | 77.79 ± 1.51 |
| A2 | 80.45 ± 3.34 | 86.12 ± 2.51 | 79.26 ± 2.45 | 85.72 ± 0.98 | 70.23 ± 2.38 | 75.84 ± 1.43 |
| B1 | 73.65 ± 1.98 | 91.40 ± 0.64 | 78.45 ± 1.89 | 90.01 ± 0.71 | 63.76 ± 3.36 | 76.25 ± 2.28 |
| B2 | 88.73 ± 1.67 | 92.66 ± 1.05 | 89.78 ± 1.72 | 92.40 ± 0.84 | 69.27 ± 1.34 | 78.84 ± 1.70 |
| B3 | 79.64 ± 2.35 | 91.02 ± 0.93 | 78.95 ± 1.89 | 86.11 ± 1.63 | 66.15 ± 2.84 | 73.03 ± 1.91 |
| B4 | 93.74 ± 0.97 | 99.36 ± 0.56 | 95.76 ± 0.79 | 96.98 ± 1.16 | 59.96 ± 1.76 | 69.33 ± 2.18 |
| B5 | 85.75 ± 2.78 | 82.98 ± 1.37 | 83.02 ± 1.83 | 78.47 ± 1.59 | 70.13 ± 3.57 | 70.07 ± 1.96 |
| B6 | 87.65 ± 1.38 | 81.15 ± 0.93 | 84.18 ± 1.65 | 80.69 ± 1.02 | 64.37 ± 2.66 | 80.33 ± 1.56 |
| Mean | 83.97 ± 2.00 | 89.21 ± 1.23 | 83.22 ± 1.93 | 87.37 ± 1.31 | 66.87 ± 2.17 | 75.19 ± 1.82 |

Compared with the results of Wang et al. (2013), our method improves the average classification accuracy by 5.24% for “左(left)” vs “Rest”, by 4.90% for “壹(one)” vs “Rest”, and by 12.44% for “左(left)” vs “壹(one)”. In addition, the average standard deviation over the 8 subjects is lower than in Wang et al. (2013): it decreases by 0.77% for “左(left)” vs “Rest”, by 0.62% for “壹(one)” vs “Rest”, and by 0.35% for “左(left)” vs “壹(one)”. Furthermore, compared with subjects A1 and A2, the average classification accuracy of subjects B1–B6 is 2.23% higher for “左(left)” vs “Rest”, 0.24% higher for “壹(one)” vs “Rest”, and 2.17% lower for “左(left)” vs “壹(one)”. These results suggest that a BCI system using only left-hemisphere channels performs comparably to one using whole-brain channels, and that the left-hemisphere channels carry sufficient information to extract EEG features of Chinese-character speech imagery.

In summary, our method substantially improves the overall classification performance and the stability of the classification results. Moreover, because LightGBM is a general-purpose algorithm, the method has potential for wider use in BCIs based on Chinese character speech imagery.

Further discussions

To study how the EEG signals change with the experimental events during silent reading of Chinese characters, we perform a time-frequency analysis of the EEG signals using event-related spectral perturbation (ERSP). If the EEG power spectrum increases or decreases significantly after the Chinese character prompt, the recorded EEG signals are time-locked to the experimental events (Makeig 1993; Miaomiao and Zhiguang 2018). We chose subject B4 to verify this relationship: the signals of subject B4 are band-pass filtered (6–30 Hz) and analyzed with ERSP to obtain the change in spectral power over time (Fig. 7).
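The ERSP analysis described above can be illustrated with a short sketch. ERSP is commonly computed as the trial-averaged power |F_k(f, t)|² of short-time spectra, expressed in dB relative to the mean power of a pre-stimulus baseline. The NumPy sketch below assumes single-channel epochs that are already band-pass filtered and aligned to the prompt; the function name `ersp_db`, the Hann window length, hop size, baseline interval and the synthetic 12 Hz burst are illustrative assumptions, not the settings used for Fig. 7.

```python
import numpy as np


def ersp_db(trials, fs, win_len, step, baseline_end_s):
    """ERSP in dB relative to a baseline, for one channel.

    trials: (n_trials, n_samples) array, already band-pass filtered and
            time-aligned so that sample 0 is the start of every epoch.
    fs: sampling rate in Hz; win_len, step: window length and hop in samples.
    baseline_end_s: end of the baseline interval in seconds (assumed).
    Returns (freqs, times, ersp) with ersp of shape (n_freqs, n_windows).
    """
    n_samples = trials.shape[1]
    window = np.hanning(win_len)
    starts = np.arange(0, n_samples - win_len + 1, step)
    times = (starts + win_len / 2) / fs          # window centres in seconds
    freqs = np.fft.rfftfreq(win_len, d=1 / fs)

    # Short-time spectra F_k(f, t) for every trial k and window t.
    segments = np.stack([trials[:, s:s + win_len] for s in starts], axis=1)
    power = np.abs(np.fft.rfft(segments * window, axis=-1)) ** 2

    # Average |F_k(f, t)|^2 over trials, then arrange as (freqs, windows).
    mean_power = power.mean(axis=0).T

    # Normalise each frequency by its mean baseline power and convert to dB.
    baseline = mean_power[:, times < baseline_end_s].mean(axis=1, keepdims=True)
    return freqs, times, 10 * np.log10(mean_power / baseline)


# Hypothetical demo: 30 trials of 6 s at 250 Hz with a 12 Hz burst from 2 s
# to 5 s, loosely mimicking the 2000-5000 ms imagery window.
fs = 250
rng = np.random.default_rng(0)
t = np.arange(6 * fs) / fs
burst = 2.0 * np.sin(2 * np.pi * 12 * t) * ((t >= 2) & (t < 5))
trials = rng.standard_normal((30, t.size)) + burst
freqs, times, ersp = ersp_db(trials, fs, win_len=125, step=25, baseline_end_s=1.0)
```

In this synthetic example the 10–14 Hz rows of `ersp` rise well above 0 dB during the burst and stay near 0 dB in the baseline, which is the kind of band- and time-limited enhancement read off an ERSP diagram.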

Fig. 7.

ERSP diagrams of Chinese character speech imagery: a ERSP diagram of subject B4 silently reading the Chinese character “左(left)”; b ERSP diagram of subject B4 silently reading the Chinese character “壹(one)”

Fig. 7a, b shows that the EEG signals of subject B4 change over time while the subject silently reads Chinese characters. Within 2000–5000 ms of the imagery period, the power of the EEG signals at electrodes FC5 and CP5 in the 9–16 Hz range is significantly enhanced compared with the baseline, whereas no significant change occurs in other time periods. The spectral perturbation at CP5 is more pronounced than at FC5. Therefore, the EEG signals are time-locked to the experimental events, and silently reading Chinese characters can evoke activity in Broca’s area (over which electrode FC5 is placed) and Wernicke’s area (over which electrode CP5 is placed).

Conclusions

This paper adopts a new feature classification algorithm, LightGBM, which significantly improves the classification accuracy of Chinese character speech imagery and may contribute to rehabilitation training for patients with language disorders. Specifically, we filter the EEG signals and use ICA to remove electrooculogram, electromyogram and heartbeat artifacts. Then, we use the Db4 wavelet basis function to decompose each channel into six levels and extract relevant features from the sixth-level WPD coefficients. Finally, we use LightGBM to classify the silent-reading features. The experimental results show that when determining whether the subjects are silently reading the Chinese character “左(left)”, the average classification accuracy is close to 90%; for the Chinese character “壹(one)”, it is 87.28%; and for distinguishing the two Chinese characters, it is 75.19%. The average classification accuracies of all three experiments are significantly higher than existing results.
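The decomposition and feature step summarized above can be sketched as follows. This is a minimal NumPy sketch, not the authors' code: it builds a full six-level wavelet packet tree with periodic boundary extension and, as a hypothetical feature, takes the log-energy of each of the 64 sixth-level nodes; the paper's exact feature set is not restated here. For brevity the demo uses the Haar filter instead of Db4; the Db4 low-pass decomposition filter is available from PyWavelets as `pywt.Wavelet("db4").dec_lo` and can be passed in the same way, and `pywt.WaveletPacket(data, "db4", maxlevel=6)` builds the same kind of tree with its own boundary handling. All function names below are hypothetical.

```python
import numpy as np

# Haar low-pass filter, used only so the demo needs nothing beyond NumPy.
# The paper uses Db4 (e.g. pywt.Wavelet("db4").dec_lo from PyWavelets).
HAAR_LO = np.array([1.0, 1.0]) / np.sqrt(2.0)


def qmf_highpass(lo):
    """Quadrature-mirror high-pass filter g[n] = (-1)**n * h[L-1-n]."""
    n = np.arange(lo.size)
    return ((-1.0) ** n) * lo[::-1]


def analysis_step(x, lo):
    """One periodised filter-and-downsample step; returns (low band, high band)."""
    hi = qmf_highpass(lo)
    n = x.size
    # Row k holds x[2k], x[2k+1], ..., wrapping around the end of the signal.
    idx = (2 * np.arange(n // 2)[:, None] + np.arange(lo.size)[None, :]) % n
    segments = x[idx]
    return segments @ lo, segments @ hi


def wavelet_packet_leaves(x, lo, level):
    """Split every node (not only the low band) at each level; return 2**level leaves."""
    x = np.asarray(x, dtype=float)
    if x.size % (2 ** level):
        raise ValueError("signal length must be divisible by 2**level")
    nodes = [x]
    for _ in range(level):
        nodes = [band for node in nodes for band in analysis_step(node, lo)]
    return nodes  # natural (filter-bank) order, not frequency order


def log_energy_features(x, lo=HAAR_LO, level=6):
    """Hypothetical per-channel feature vector: log-energy of each leaf node."""
    leaves = wavelet_packet_leaves(x, lo, level)
    return np.array([np.log(np.sum(c ** 2) + 1e-12) for c in leaves])


# Hypothetical trial: 4 channels x 1024 samples of random data.
rng = np.random.default_rng(1)
trial = rng.standard_normal((4, 1024))
features = np.concatenate([log_energy_features(ch) for ch in trial])
print(features.shape)  # (256,)
```

Stacking one such vector per trial gives a feature matrix that could then be passed to a gradient-boosting classifier, for example `lightgbm.LGBMClassifier` through its `fit` and `predict` methods.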

Appendix

Data availability

The data that support the findings of this study are available on request from the corresponding author Hongguang Pan (hongguangpan@163.com). The data are not publicly available because they contain information that could compromise research participant privacy.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Zhuoyi Li, Chen Tian, Li Wang, Yunpeng Fu, Xuebin Qin and Fei Liu have contributed equally to this work.

Contributor Information

Hongguang Pan, Email: hongguangpan@163.com.

Zhuoyi Li, Email: zhuoyilee@163.com.

Chen Tian, Email: sweetmorning_0052@163.com.

Li Wang, Email: wangli@gzhu.edu.cn.

Yunpeng Fu, Email: yunpengfu@163.com.

Xuebin Qin, Email: qinxb@xust.edu.cn.

Fei Liu, Email: liufei@xust.edu.cn.

References

- Abenna S, Nahid M, Bajit A. Brain-computer interface: a novel EEG classification for baseline eye states using LGBM algorithm. In: International conference on digital technologies and applications. Springer; 2021. pp 189–19.

- Abenna S, Nahid M, Bajit A. Motor imagery based brain-computer interface: improving the EEG classification using delta rhythm and LightGBM algorithm. Biomed Signal Process Control. 2022;71:103102. doi: 10.1016/j.bspc.2021.103102.

- Bocquelet F, Hueber T, Girin L, Chabardès S, Yvert B. Key considerations in designing a speech brain-computer interface. J Physiol Paris. 2017;110(4):392–401. doi: 10.1016/j.jphysparis.2017.07.002.

- Chen F, Pan C. A review on brain-computer interface technologies for speech imagery. J Signal Process. 2020;36(6):816–830. doi: 10.16798/j.issn.1003-0530.2020.06.003.

- DaSalla CS, Kambara H, Sato M, Koike Y. Single-trial classification of vowel speech imagery using common spatial patterns. Neural Netw. 2009;22(9):1334–1339. doi: 10.1016/j.neunet.2009.05.008.

- Hashim N, Ali A, Mohd-Isa W-N. Word-based classification of imagined speech using EEG. In: Computational science and technology; 2018. pp 195–204.

- Jahangiri A, Sepulveda F. The contribution of different frequency bands in class separability of covert speech tasks for BCIs. In: Annual international conference of the IEEE engineering in medicine and biology society; 2017. pp 2093–2096.

- Ke G, Meng Q, Finley T, Wang T, Chen W, Ma W, Ye Q, Liu T-Y. LightGBM: a highly efficient gradient boosting decision tree. In: Proceedings of the 31st international conference on neural information processing systems (NIPS’17); 2017. pp 3149–3157.

- Ketu S, Mishra PK. Hybrid classification model for eye state detection using electroencephalogram signals. Cogn Neurodyn. 2022;16(1):73–90. doi: 10.1007/s11571-021-09678-x.

- Kristensen AB, Subhi Y, Puthusserypady S. Vocal imagery vs intention: viability of vocal-based EEG-BCI paradigms. IEEE Trans Neural Syst Rehabil Eng. 2020;28(8):1750–1759. doi: 10.1109/TNSRE.2020.3004924.

- Lee HK, Choi Y-S. Application of continuous wavelet transform and convolutional neural network in decoding motor imagery brain-computer interface. Entropy. 2019;21(12):1199. doi: 10.3390/e21121199.

- Lee S-H, Lee M, Jeong J-H, Lee S-W. Towards an EEG-based intuitive BCI communication system using imagined speech and visual imagery. In: 2019 IEEE international conference on systems, man and cybernetics (SMC); 2019. pp 4409–4414.

- Lee S-H, Lee M, Lee S-W. EEG representations of spatial and temporal features in imagined speech and overt speech. In: Pattern recognition; 2020. pp 387–400.

- Makeig S. Auditory event-related dynamics of the EEG spectrum and effects of exposure to tones. Electroencephalogr Clin Neurophysiol. 1993;86(4):283–293. doi: 10.1016/0013-4694(93)90110-H.

- Martin S, Brunner P, Iturrate I, Millán JdR, Schalk G, Knight RT, Pasley BN. Word pair classification during imagined speech using direct brain recordings. Sci Rep. 2016;6(1):25803. doi: 10.1038/srep25803.

- Miaomiao G, Zhiguang Q. Research on parameter optimization in speech rehabilitation system based on brain computer interface. J Signal Process. 2018;34(8):973–983.

- Min B, Kim J, Park H-J, Lee B. Vowel imagery decoding toward silent speech BCI using extreme learning machine with electroencephalogram. Biomed Res Int. 2016;2016:2618265. doi: 10.1155/2016/2618265.

- Mohanchandra K, Saha S. A communication paradigm using subvocalized speech: translating brain signals into speech. Augment Human Res. 2016;1(1):3. doi: 10.1007/s41133-016-0001-z.

- Nguyen CH, Karavas G, Artemiadis P. Inferring imagined speech using EEG signals: a new approach using Riemannian manifold features. J Neural Eng. 2017;15(1):016002. doi: 10.1088/1741-2552/aa8235.

- Pan H, Mi W, Lei X, Deng J. A closed-loop brain-machine interface framework design for motor rehabilitation. Biomed Signal Process Control. 2020;58:101877. doi: 10.1016/j.bspc.2020.101877.

- Pan H, Mi W, Lei X, Zhong W. A closed-loop BMI system design based on the improved SJIT model and the network of Izhikevich neurons. Neurocomputing. 2020;401:271–280. doi: 10.1016/j.neucom.2020.03.047.

- Pan H, Mi W, Zhong W, Sun J. A motor rehabilitation BMI system design through improving the SJIT model and introducing an MPC-based auxiliary controller. Cogn Comput. 2021;13(4):936–945. doi: 10.1007/s12559-021-09878-x.

- Pan H, Mi W, Song H, Liu F. A universal closed-loop brain-machine interface framework design and its application to a joint prosthesis. Neural Comput Appl. 2021;33(11):5471–5481. doi: 10.1007/s00521-020-05323-6.

- Pan H, Song H, Zhang Q, Mi W. Review of closed-loop brain-machine interface systems from a control perspective. IEEE Trans Human-Mach Syst. 2022. doi: 10.1109/THMS.2021.3138677.

- Qureshi MNI, Min B, Park H-J, Cho D, Choi W, Lee B. Multiclass classification of word imagination speech with hybrid connectivity features. IEEE Trans Biomed Eng. 2018;65(10):2168–2177. doi: 10.1109/TBME.2017.2786251.

- Ramakrishnan A, Byun YW, Rand K, Pedersen CE, Lebedev MA, Nicolelis MAL. Cortical neurons multiplex reward-related signals along with sensory and motor information. Proc Natl Acad Sci. 2017;114(24):4841–4850. doi: 10.1073/pnas.1703668114.

- Riaz A, Akhtar S, Iftikhar S, Khan AA, Salman A. Inter comparison of classification techniques for vowel speech imagery using EEG sensors. In: The 2014 2nd international conference on systems and informatics (ICSAI 2014); 2014. pp 712–717.

- Sereshkeh AR, Yousefi R, Wong AT, Chau T. Online classification of imagined speech using functional near-infrared spectroscopy signals. J Neural Eng. 2018;16(1):016005. doi: 10.1088/1741-2552/aae4b9.

- Sereshkeh AR, Yousefi R, Wong AT, Rudzicz F, Chau T. Development of a ternary hybrid fNIRS-EEG brain-computer interface based on imagined speech. Brain-Computer Interfaces. 2019;6(4):128–140. doi: 10.1080/2326263X.2019.1698928.

- Shergill SS, Bullmore ET, Brammer MJ, Williams S, Mcguire PK. A functional study of auditory verbal imagery. Psychol Med. 2001;31(2):241. doi: 10.1017/S003329170100335X.

- Wang L, Zhang X, Zhong X, Zhang Y. Analysis and classification of speech imagery EEG for BCI. Biomed Signal Process Control. 2013;8(6):901–908. doi: 10.1016/j.bspc.2013.07.011.

- Xu L, Xu M, Jung T-P, Ming D. Correction to: Review of brain encoding and decoding mechanisms for EEG-based brain-computer interface. Cogn Neurodyn. 2021;15(5):921. doi: 10.1007/s11571-021-09686-x.

- Zeng H, Yang C, Zhang H, Wu Z, Zhang J, Dai G, Babiloni F, Kong W. A LightGBM-based EEG analysis method for driver mental states classification. Comput Intell Neurosci. 2019:3761203.

- Zhao S, Rudzicz F. Classifying phonological categories in imagined and articulated speech. In: 2015 IEEE international conference on acoustics, speech and signal processing (ICASSP); 2015. pp 992–996.
