Abstract

Automated ultrasound imaging assessment of the effect of coronavirus disease 2019 (COVID-19) on the lungs has been investigated in various studies using artificial intelligence (AI)-based methods. However, an extensive analysis of state-of-the-art Convolutional Neural Network (CNN)-based models for frame-level scoring, a comparative analysis of aggregation techniques for video-level scoring, and a thorough evaluation of the capability of these methodologies to provide a clinically valuable prognostic-level score are still missing from the literature. In addition, the impact on the analysis of the posterior probability assigned by the network to the predicted frames, as well as the impact of temporal downsampling of lung ultrasound (LUS) data, have not yet been extensively investigated. This paper takes on these challenges by providing a benchmark analysis of methods from frame to prognostic level. For frame-level scoring, state-of-the-art deep learning models are evaluated, with an additional analysis of the best performing model in transfer-learning settings. A novel cross-correlation based aggregation technique is proposed for video and exam-level scoring. Results showed that ResNet-18, when trained from scratch, outperformed the existing methods with an F1-Score of 0.659. The proposed aggregation method resulted in 59.51%, 63.29%, and 84.90% agreement with clinicians at the video, exam, and prognostic levels, respectively, thus demonstrating improved performance over the state of the art. It was also found that filtering frames based on the posterior probability has a higher impact on the LUS analysis than temporal downsampling. All of these analyses were conducted over the largest standardized and clinically validated LUS dataset from COVID-19 patients.

Keywords: COVID-19, Cross-correlation, Deep learning, Decision trees, Lung ultrasound

1. Introduction

Since the outbreak of the 2019 global pandemic, COVID-19 pneumonia has been among the major ongoing health issues across the world [1]. Its high infection rate and increasing mortality resulted in a shortage of testing capacity and medical equipment [2]. Furthermore, the low sensitivity of the Reverse Transcription Polymerase Chain Reaction (RT-PCR) test, as well as a high rate of false negatives in COVID-19 diagnosis, added uncertainty to PCR-based clinical evaluations and interpretations [3]. These circumstances created a strong need to identify alternative methods for COVID-19 diagnosis [4].

Lung ultrasound (LUS) has lately been widely used by clinicians and is considered a promising tool for assessing the impact of COVID-19 pneumonia on the lungs [5]. However, LUS-based assessment mainly relies on the interpretation of visual artifacts, making the analysis subjective and prone to error [6]. These visual artifacts are mainly of two types, horizontal and vertical [7]. Horizontal artifacts represent the fully aerated condition of the lung and are formed by repeated reflections of ultrasound waves between the pleural line and the probe surface. Vertical artifacts, instead, are due to the formation of acoustic traps following local partial de-aeration of the lung surface. These artifacts can correlate with several pulmonary diseases [7], [8], [9], [10]. To evaluate the condition of the lung based on these patterns, it is important to highlight their dependency on the imaging parameters used during the acquisition process [11]. For this purpose, a standardized acquisition protocol was proposed to minimize the effect of confounding factors. Alongside it, a semi-quantitative approach was proposed, using a 4-level scoring system to capture different severity levels of lung damage [12]. The protocol, along with the scoring system, serves as a gold standard by providing a globally unified approach for LUS analysis of COVID-19 patients. To assist clinicians in performing this analysis, efforts have been made towards automating the assessment process using deep learning (DL) techniques [13], [14], [15].

Extending the analysis from COVID-19 to post-COVID-19 patients, DL models were employed to observe the classification performance on the data and compare it with the evaluation from clinicians [16]. To address the challenges of data transmission and automated processing of LUS data in a resource-constrained environment, work was done to evaluate the impact of the data size on the performance of DL-based models [17]. In the latter study, it was found that the performance of the DL-based models declined when the number of pixels in an image was reduced. On the other hand, the number of pixel intensity levels had no significant impact on the performance. All these studies performed DL-based analysis for the evaluation of LUS data from COVID-19 patients and produced promising results at the frame, video, and prognostic level. Specifically, at the frame level, these studies achieved high performance using a complex architecture [14] and additional domain information as input [15]. It is important, however, to evaluate the impact of the added complexity and the significance of the additional input data with respect to the resulting performance. A systematic analysis of state-of-the-art DL models and their performances, together with a comparison of video-level scoring techniques and their impact on exam-level and prognostic-level results, was still missing. Furthermore, the impact of the posterior probability assigned to the network predictions on the video-level scoring is still a topic under research. Moreover, the effect of temporal downsampling of LUS frames on the underlying evaluation is still an open question. Addressing the aforementioned shortcomings and research gaps, this paper contributes to the existing literature by:

- Performing a thorough DL-based model evaluation for assessment of LUS video frames.
- Introducing explainability for video-level scoring using a decision tree (DT) model, presenting a new video-level scoring technique based on cross-correlation coefficients, and comparing its performance with existing techniques.
- Evaluating the significance, for the decision process (at the video, exam, and prognostic-level), of the posterior probability of frame-level predictions and frame downsampling.

Following the new international guidelines and consensus on LUS regarding the application of AI [18], this paper aims at laying the foundation for a robust methodological approach for the development and analysis of AI-driven solutions dedicated to LUS. In the general context of ultrasound image analysis, it is important to point out that the applicability of this methodological approach may extend beyond LUS. Indeed, explainability mechanisms and proper performance analysis at the frame, video, patient, exam, and prognostic level need to be considered in the development of AI-based solutions aimed at automating ultrasound image analysis, independently of the specific application.

The rest of the paper is organized as follows. The proposed techniques and strategies are discussed in the Methods section, whereas the Experiments and Results section explains the experimental results, followed by the Discussion and Conclusion.

2. Methods

2.1. Data acquisition

LUS data used in this multi-center study were acquired from Italian medical centers in Brescia, Rome, Lodi, Pavia, Lucca, and Tione. A total of 425,476 frames collected from 121 patients (117 COVID-19 positive and 4 COVID-19 suspected) and 14 healthy individuals are distributed within two datasets i.e., Dataset-1 and Dataset-2. The dataset description along with acquisition parameters, for each dataset, is discussed in detail below.

2.1.1. Dataset-1

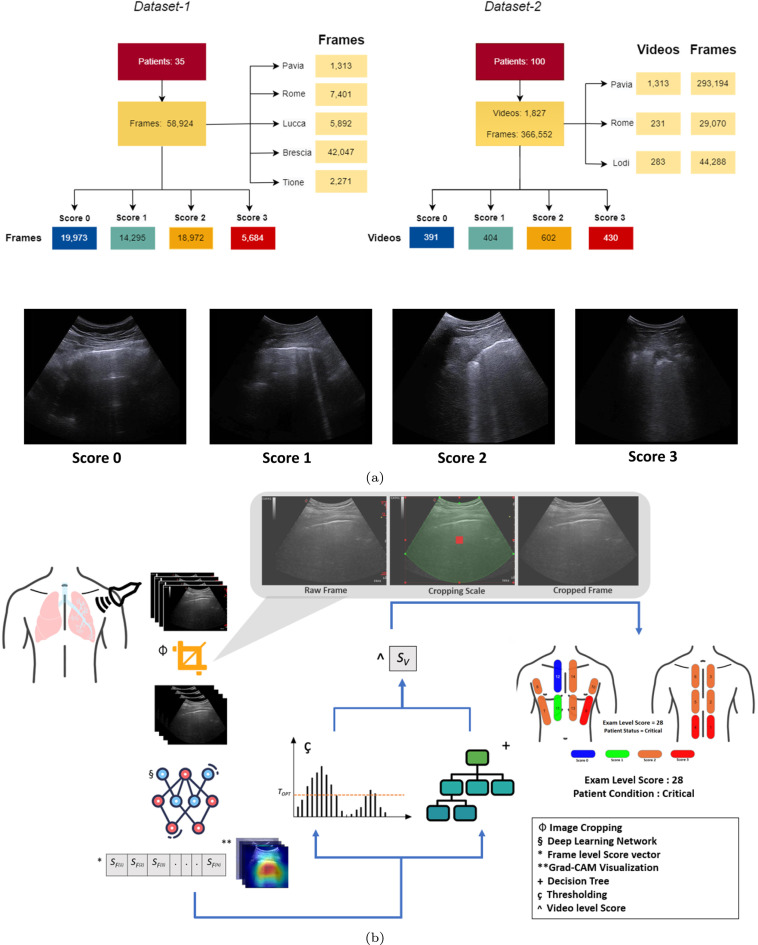

Dataset-1 represents the Italian COVID-19 Lung Ultrasound DataBase (ICLUS-DB), presented in [14]. It includes 277 LUS videos from 35 patients, corresponding to 58,924 frames (45,560 acquired with convex probe and 13,364 frames with linear probe). Among the 35 patients, 17 of them (49%) were confirmed COVID-19 positive using RT-PCR test, 4 patients (11%) were COVID-19 suspected and 14 (40%) were healthy and symptomless individuals. The data were acquired from Fondazione Policlinico Universitario San Matteo IRCCS, Pavia, Fondazione Policlinico Universitario A. Gemelli IRCCS, Rome, Valle del Serchio General Hospital, Lucca, Brescia Med, Brescia, and Tione General Hospital, Tione, Italy. Based on the scoring system proposed in [12], a total of 58,924 video frames were divided into four different categories. Score-wise and center-wise distribution is shown in Fig. 1.

Fig. 1.

(a) Represents the score and center-wise distributions of LUS data in Dataset-1 and Dataset-2. Frame-based score examples are also given for reference. (b) Represents the flow of the scoring framework starting from the LUS acquisition and cropping, to video, exam, and prognostic-level scoring. Each major step throughout the methodology is indicated by unique symbols with their description in the box on the bottom right.

To ensure accurate interpretation, the labeling process was divided into four stages. In the first stage, frame-level scores were assigned by master's students. In the second stage, these scores were validated by experts in LUS image analysis. In the third stage, the scored frames were further verified by a biomedical engineer (with more than 10 years of experience in LUS). In the fourth and final stage, the labeled frames were validated by the clinicians. All data were acquired using a variety of ultrasound scanners (Mindray DC-70 Exp®, Esaote MyLab Alpha®, Toshiba Aplio XV®, WiFi Ultrasound Probes-ATL) with both convex and linear probes.

2.1.2. Dataset-2

Dataset-2 includes LUS data acquired from 100 RT-PCR confirmed COVID-19 positive patients [16]. The data were acquired from three Italian hospitals, with the following population and age distribution: 63 patients from Fondazione Policlinico San Matteo (Pavia, Italy) (male:female 35:28, mean age 63.72 years, min:max 26:92 years), 19 from Lodi General Hospital (Lodi, Italy) (male:female 16:3, mean age 63.95 years, min:max 34:84 years), and 18 from the Fondazione Policlinico Universitario Agostino Gemelli (Rome, Italy) (male:female 8:10, mean age 52.11 years, min:max 23:95 years). As a few of the patients underwent multiple exams over time, a total of 133 LUS exams were performed, resulting in 1,827 videos and a total of 366,552 frames. Score-wise and center-wise distribution is shown in Fig. 1. All LUS data were acquired by LUS experts with more than 10 years of experience. A variety of ultrasound scanners and probes were used. Data from Pavia were acquired with a convex probe, using an Esaote MyLab Twice scanner and an Esaote MyLab 50, with an imaging depth from 5 to 13 cm (depending on the patient) and an imaging frequency from 2.5 to 6.6 MHz (depending on the scanner). The data from Lodi were acquired with a convex probe, using an Esaote MyLab Sigma scanner and a MindRay TE7, with an imaging depth from 8 to 12 cm (depending on the patient) and an imaging frequency from 3.5 to 5.5 MHz. The data from Rome were acquired using both convex and linear probes with an Esaote MyLab 50, an Esaote MyLab Alpha, a Philips IU22, and an ATL Cerbero, with an imaging depth from 5 to 30 cm (depending on the patient) and an imaging frequency from 3.5 to 10 MHz (depending on the scanner). A more detailed description of the distribution of the imaging settings can be found in [16].

This study was part of a protocol that has been registered (NCT04322487) and received approval from the Ethical Committee of the Fondazione Policlinico Universitario San Matteo (protocol 20200063198), of the Fondazione Policlinico Universitario Agostino Gemelli, Istituto di Ricovero e Cura a Carattere Scientifico (protocol 0015884/20 ID 3117), of Milano area 1, the Azienda Socio-Sanitaria Territoriale Fatebenefratelli-Sacco (protocol N0031981). All the patients signed consent forms.

Fig. 1 illustrates the frame, video, and score-wise distribution of the two datasets. Note that the distinction between the two datasets is made based on the level of scoring and its evaluation, along with the provided ground truth information. For dataset-1 ground truth labels are provided at frame-level, while for dataset-2, ground truth information is provided by the clinicians at video-level.

2.2. Data preparation/pre-processing

During LUS data acquisition, scanner information and imaging parameters are recorded as part of the image. This redundant information needs to be filtered out before feeding the data to an AI-based model, to avoid ambiguous output states of the system. It includes, e.g., the textual information on the imaging settings, measurement lines, and arrows identifying the focus position in the field of view. The presence of this kind of information in the LUS scans may lead the networks to learn ambiguous interpretations, hence causing anomalous predictions, as pointed out in [19]. To avoid such behavior, pre-processing techniques are applied to remove redundant information from LUS data, preparing the latter for the subsequent steps. Pre-processing is carried out in the form of image cropping using the ULTRa laboratory's LUS data processing platform, deployed over a local network within the University of Trento, Italy. LUS data were centered and cropped on the platform with the help of a guided mask. This resulted in the extraction of the field of view (FOV), preserving the spatial resolution of each pixel and removing noise. Once the LUS data are pre-processed, data transformation is performed for dataset-1, as suggested in [13]. This transformation includes elastic warping, cropping, scaling, blurring, random rotation up to a maximum angle, and contrast distortion.

Next, methods have been developed to automatically score the data at frame, video, exam, and prognostic-level. At the frame and video level the score ranges from 0 to 3 [20]. At the exam level the cumulative score, ranging from 0 to 42, is utilized. At the prognostic level a binary stratification is performed following a cumulative score threshold of 24 [21]. These scoring modules collectively form the scoring framework of our automated assessment system. LUS frame samples representing the patterns corresponding to the 4-scores, along with the overall scoring framework are represented in Fig. 1.

2.3. Frame-level scoring

To develop a scoring system capable of identifying the above-mentioned patterns in LUS frames and classifying them w.r.t. their corresponding scores, we employed state-of-the-art convolutional neural networks (CNNs). Since our focus is not to localize but rather to classify LUS patterns, we can describe our approach as a weakly supervised method. Based on the two datasets, frame-level scoring is distributed in two prediction tasks: validation and testing. For the validation task, DL models are trained and validated over dataset-1. Whereas for the testing, the best performing model obtained from the validation task is used to evaluate LUS frames from dataset-2. Furthermore, the best performing model is also evaluated using pre-trained weights from the ImageNet dataset.

2.3.1. Model development and training

In this part of the study, Convolutional Neural Networks (CNNs) are used to analyze and classify LUS frames, resulting in frame-level scores. Variants of the state-of-the-art ResNet, DenseNet, and Inception architectures are used. In particular, ResNet-18, ResNet-50, ResNet-101, RegNetX, DenseNet-121, DenseNet-201, EfficientNetB7, and InceptionV3 are employed. Dropout layers followed by fully-connected layers are added at the end of each network to avoid overfitting. During the validation task, these state-of-the-art models are trained and validated over dataset-1 under the train-test split proposed in [14], and their performances are assessed. The top performing model from the validation task is then used in the testing task to evaluate LUS frames from dataset-2. All of the models are trained by back-propagation of errors in batches of size 4, with images resized to 224 × 224 pixels, for 70 epochs with a learning rate of $10^{-4}$. These models are trained using Stochastic Gradient Descent (SGD) with a weight decay of $10^{-5}$. Cross-entropy is used as the loss function. Transformations (rotation, warping, scaling of images, and Gaussian blur) are applied to each batch during training as data augmentation, in order to improve the generalization of the networks.

To extend our analysis for the use of transfer-learning, the best performing model is trained by loading convolutional layers with pre-trained weights from the ImageNet dataset, with newly initialized added layers towards the output layer. The model is trained using the same hyper-parameters used to train the models from scratch. However, to further improve the performance, the pre-trained model is then fine-tuned with a relatively lower learning rate of 10−5 [22].

The variables of interest at frame-level, i.e., the ground truth and the frame-level score predicted by the model, are two discrete random variables taking values in {0, 1, 2, 3}. Each model computes the probability that a specific frame belongs to each of the possible classes. Each frame is then assigned to the class with the highest probability, which becomes its predicted score. In our study, the state-of-the-art CNN models predict the underlying patterns of the testing samples of LUS frames and assign them to the class, among the four score-based classes, with the highest probability. The posterior probability of each class is hereinafter referred to as the confidence level provided by the model for a particular frame. The DL model-based scoring of LUS video frames, along with the confidence level information, is then further processed to evaluate video and exam-level scoring.
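The assignment rule described above can be illustrated with a minimal sketch. It assumes that the posterior probabilities are obtained with a softmax over the four raw network outputs, which is a common choice with a cross-entropy loss but is not stated explicitly here; the function names and the example logits are hypothetical.

```python
import math

def softmax(logits):
    """Turn raw network outputs into posterior probabilities (assumed softmax)."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def score_frame(logits):
    """Return the confidence levels for scores 0..3 and the predicted score."""
    confidences = softmax(logits)
    # The frame is assigned to the class with the highest posterior probability.
    predicted_score = max(range(len(confidences)), key=confidences.__getitem__)
    return confidences, predicted_score

# Hypothetical raw outputs of the network for one frame.
confidences, predicted_score = score_frame([0.2, 1.5, 0.9, -0.3])
```

Here `confidences` holds the four confidence levels of the frame and `predicted_score` is the index of the largest one.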

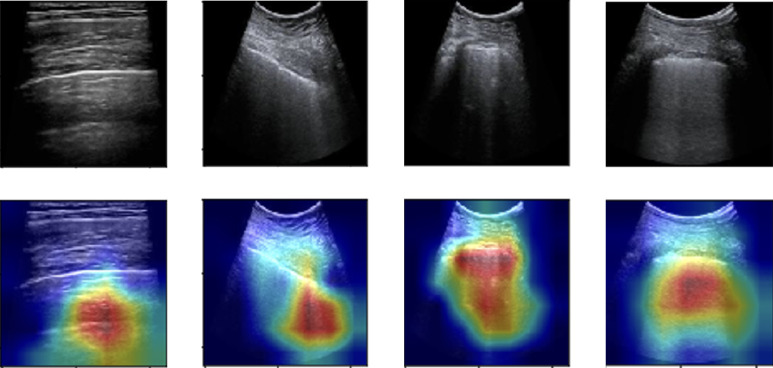

To add explainability to these network predictions, it is important to understand what the networks predict and why they predict it. To this end, we employed Gradient-Weighted Class Activation Maps (Grad-CAM) [23] to add visual explanations, as done for the first time on LUS data in the study [13].

2.4. Video, exam, and prognostic-level scoring

After obtaining the best performing model and its pre-trained version, these two models are tested on LUS data from dataset-2 (completely independent from dataset-1). As a result, the frame-level predicted score and the corresponding confidence level for each score are given as output. The output for each frame is a vector of size 1 × 5, consisting of the confidence levels of the four scores and the corresponding predicted frame score. Stacking these vectors over the $N$ frames of a video, a 2-D matrix of dimension $N \times 5$ is obtained for each video. As clinicians evaluate LUS data at the video-level, there is a need to propose and evaluate techniques to move from a frame-level score to a video-level score. To do so, we propose the use of cross-correlation-based features that are extracted and aggregated over the frames within a video. To assess the capability of these features to score LUS videos, a DT-based classification process has been developed.

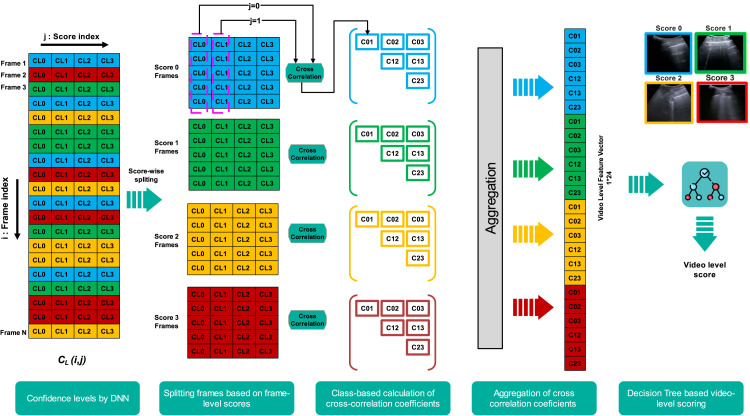

DT is a non-parametric supervised learning technique for classification and regression. By learning straightforward decision rules derived from the data attributes, the objective is to build a model that predicts the value of a target variable, in our case the video-level score. At each node, a DT assesses one of the data attributes from the feature set, in order to split the observations during the training phase or to make a given data point follow a specified path when making a prediction. In this study, we propose a novel aggregation technique to obtain a feature set at the video-level by combining the feature set at the frame-level. The technique is based on the similarity of two data groups, which is measured by a statistical metric known as "cross-correlation". The metric shows how much two or more data groups vary together. This variation, $\rho_{xy}$, between two data groups $x$ and $y$ of dimension $n$, can be represented as:

$$\rho_{xy} = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}} \tag{1}$$

where $\bar{x}$ and $\bar{y}$ denote the mean values of $x$ and $y$. The absolute value of $\rho_{xy}$ ranges from 0 to 1. The higher the value, the higher the similarity between the changes of the two groups $x$ and $y$. Here, we exploit this concept to extract information from the frame-level results that is informative for video-level scoring. To this end, we considered the block diagram in Fig. 2 for our implementation of the proposed approach. As shown in the figure, considering a video consisting of $N$ frames distributed over the four scores, the suggested method works by splitting the confidence-level part of the output matrix into four sub-matrices, based on the value of the predicted score. Then, we calculate the correlation coefficient between each pair of columns in each sub-matrix, and aggregate the score-wise cross-correlation values into a video-level feature vector. Finally, in the training phase, we feed this aggregated feature vector to a DT to extract the scoring decision rules. These rules analyze the relationship among the cross-correlation coefficients within a video-level feature vector to produce the video-level score. Based on those rules, the video-level score is calculated during the testing phase.

The rationale behind using cross-correlation is linked to the intrinsic subjectivity of the semi-quantitative analysis. In practice, neighboring scores have blurred boundaries, i.e., there exist frames which cannot be unambiguously assigned to a specific score. As an example, it may not always be certain whether a frame corresponds to score 1 or 2. Indeed, very small consolidations could be interpreted simply as alterations along the pleural line (thus score 1) and not as actual consolidations (thus score 2). This subjectivity can be mapped using the correlation between the confidence levels (as ambiguous frames will likely show similarity w.r.t. their confidence levels), and exploited to aid the video-level classification as described above. In the following, this proposed video-level scoring method, based on DT-based evaluation of cross-correlation coefficients, is referred to as CC-DT.
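The feature extraction step of CC-DT can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes the Pearson correlation coefficient as the cross-correlation measure, and it assumes that a coefficient is set to 0 when a sub-matrix has fewer than two frames or a column has (numerically) zero variance, a case the text does not specify. The names `pearson`, `cc_features`, and the example values are hypothetical.

```python
import math
from itertools import combinations

def pearson(x, y, eps=1e-12):
    """Correlation coefficient of two equally long sequences.

    Assumption: returns 0.0 when the coefficient is undefined
    (fewer than two samples or a near-constant sequence).
    """
    n = len(x)
    if n < 2:
        return 0.0
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx < eps or syy < eps:
        return 0.0
    return sxy / math.sqrt(sxx * syy)

def cc_features(video_matrix, n_scores=4):
    """Build the video-level feature vector from rows [c0, c1, c2, c3, score]."""
    features = []
    for score in range(n_scores):
        # Sub-matrix: confidence levels of the frames predicted with this score.
        sub = [row[:n_scores] for row in video_matrix if row[n_scores] == score]
        columns = list(zip(*sub)) if sub else [()] * n_scores
        # Six coefficients per sub-matrix, one per pair of confidence columns.
        for j, k in combinations(range(n_scores), 2):
            features.append(pearson(columns[j], columns[k]))
    return features  # 4 sub-matrices x 6 pairs = 24 values

# Hypothetical frame-level output for a five-frame video.
video = [
    [0.70, 0.20, 0.05, 0.05, 0],
    [0.60, 0.30, 0.05, 0.05, 0],
    [0.55, 0.35, 0.05, 0.05, 0],
    [0.10, 0.60, 0.25, 0.05, 1],
    [0.05, 0.50, 0.40, 0.05, 1],
]
features = cc_features(video)
```

The resulting 24-element vector is what would be passed to a decision tree classifier, together with the clinicians' video-level score as the target during training.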

Fig. 2.

Block diagram and graphical representation of the proposed approach for video-level scoring based on cross-correlation (CC-DT). For each frame instance, the diagram indicates the confidence levels associated with the scores 0, 1, 2, and 3. For each pair of distinct scores, it also indicates the cross-correlation coefficient between the two columns that hold the confidence levels associated with those scores for all frames in a sub-matrix. The steps are as follows. First, we split the data in a score-wise manner. As a result, we get four sub-matrices, each one including the confidence level information of the frames with the same predicted score. The cross-correlation coefficients are then calculated separately for each sub-matrix, resulting in six coefficients per sub-matrix. Next, the video-level feature vector is constructed by aggregating the coefficients of all the sub-matrices. Finally, we feed the video-level feature set to the DT to evaluate the video-level scoring.

To draw a comparison with the existing methods, we also evaluate the performance of the threshold-based technique [24] and the Grammatically Evolved DT-based method (GE-DT) [25]. For a fair evaluation, the proposed CC-DT and the two baseline methods are applied to the output obtained for dataset-2 by the best-performing model. The threshold-based technique assigns to a video the highest score found in at least a given percentage (threshold) of the frames composing the video. The optimal threshold was found to be equal to 1% [24]. In [25], the Grammatical Evolution algorithm [26] was used instead to generate DTs. The technique is more flexible, easy to extend (e.g., it allows for multi-objective optimization), and is known to produce easy-to-interpret decision trees. The GE-DTs are trained by means of a method that takes as input the relative frequency of each of the score levels assigned to the frames by the neural network, along with the minimum and the maximum labels predicted.
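The two baselines operate on the list of frame-level scores of a video. The sketch below illustrates the threshold-based rule and the inputs described for GE-DT. It is an illustration under assumptions: the function names are hypothetical, and the fallback to the most frequent score when no score reaches the threshold is an assumption that is not taken from [24].

```python
from collections import Counter

def threshold_video_score(frame_scores, threshold=0.01):
    """Highest score present in at least `threshold` (fraction) of the frames."""
    if not frame_scores:
        raise ValueError("a video needs at least one scored frame")
    counts = Counter(frame_scores)
    n = len(frame_scores)
    eligible = [s for s, c in counts.items() if c / n >= threshold]
    if not eligible:
        # Assumed fallback: use the most frequent score.
        return counts.most_common(1)[0][0]
    return max(eligible)

def ge_dt_inputs(frame_scores, n_scores=4):
    """Relative frequency of each score, plus minimum and maximum predicted label."""
    counts = Counter(frame_scores)
    n = len(frame_scores)
    frequencies = [counts.get(s, 0) / n for s in range(n_scores)]
    return frequencies + [min(frame_scores), max(frame_scores)]

# Hypothetical video of 200 frames: 150 with score 0, 47 with score 1, 3 with score 3.
scores = [0] * 150 + [1] * 47 + [3] * 3
video_score_1pct = threshold_video_score(scores)        # 1% threshold
video_score_5pct = threshold_video_score(scores, 0.05)  # 5% threshold
inputs = ge_dt_inputs(scores)
```

With the 1% threshold the three score-3 frames (1.5% of the video) are enough to assign score 3, whereas a 5% threshold ignores them and assigns score 1.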

To evaluate our proposed CC-DT based aggregation technique and compare it with the existing methods, score-based agreement is calculated. Specifically, the video, exam, and prognostic-level agreements are considered in the evaluation [24]. For the video-level agreement, the number of videos correctly classified by the algorithm is calculated (after the application of the aggregation techniques). A video is considered perfectly classified when the algorithm assigns it exactly the same score as assigned by the clinicians [17], [24]. Moreover, since we are dealing with a semi-quantitative analysis, we also investigate the 1-error tolerance, as has been reported in previous studies [17], [21]. The same analysis has also been reported in scenarios where the inter-rater agreement is assessed among clinicians [27]. Therefore, in this study we also report the 1-error tolerance video-level agreement for the video-level scoring techniques, which measures how many videos are correctly classified by the algorithm when allowing a disagreement of 1 point in the evaluation. As an example, if a clinician assigned score 1 to a video and the algorithm assigned score 0 or 2 to the same video, the evaluations are in agreement with a tolerance of 1 point [24]. This is a way to assess the level of subjectivity of the analysis, which is linked to the semi-quantitative nature of the scoring system. A high 1-error tolerance agreement implies that the subjectivity affects only neighboring scores.
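The two video-level agreement measures differ only in the tolerated score difference, as the following minimal sketch shows. The function name and the example scores are hypothetical.

```python
def video_agreement(predicted, reference, tolerance=0):
    """Percentage of videos whose predicted score is within `tolerance`
    points of the clinicians' score (0 = exact, 1 = 1-error tolerance)."""
    if len(predicted) != len(reference) or not reference:
        raise ValueError("score lists must be non-empty and of equal length")
    hits = sum(abs(p - r) <= tolerance for p, r in zip(predicted, reference))
    return 100.0 * hits / len(reference)

# Hypothetical scores for six videos.
algorithm = [0, 1, 2, 3, 1, 2]
clinicians = [0, 2, 2, 1, 1, 3]

exact = video_agreement(algorithm, clinicians)                    # exact agreement
one_error = video_agreement(algorithm, clinicians, tolerance=1)   # 1-error tolerance
```

In this example three of the six videos match exactly, and five of the six fall within one point of the clinicians' score.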

Moving to the evaluations at the exam and prognostic-level, the utilized 14-area protocol [20] has a proven prognostic value when looking at the cumulative score, calculated by adding the scores over all the 14 acquisition areas (final value ranging from 0 to 42) [28]. For this reason, we evaluated the exam-level agreement, which is the percentage of exams for which the cumulative score computed by the algorithm differs by up to 2, 5, or 10 points from the clinicians' evaluation [24]. Moreover, we evaluated the prognostic agreement. In this case, if the cumulative score is greater than 24, the patient is considered at high risk, whereas, when the cumulative score is less than or equal to 24, the patient is considered at low risk [28]. As demonstrated in [28], the cumulative score can be applied to separate the patients at higher risk of worsening from the patients at low risk. The prognostic agreement is hence used to assess the performance of the algorithm in automatically stratifying COVID-19 patients [24].
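A minimal sketch of the exam-level and prognostic-level agreements, using the 14-area cumulative score and the threshold of 24 stated above, is given below. The function names and the example cumulative scores are hypothetical.

```python
def cumulative_score(area_scores):
    """Sum of the video-level scores (0..3) over the 14 acquisition areas."""
    if len(area_scores) != 14:
        raise ValueError("the protocol defines 14 acquisition areas")
    return sum(area_scores)

def exam_agreement(predicted_totals, reference_totals, max_difference):
    """Percentage of exams whose cumulative scores differ by at most `max_difference`."""
    hits = sum(abs(p - r) <= max_difference
               for p, r in zip(predicted_totals, reference_totals))
    return 100.0 * hits / len(reference_totals)

def is_high_risk(total, threshold=24):
    """High risk if the cumulative score is strictly greater than the threshold."""
    return total > threshold

def prognostic_agreement(predicted_totals, reference_totals):
    """Percentage of exams assigned to the same risk group by both evaluations."""
    hits = sum(is_high_risk(p) == is_high_risk(r)
               for p, r in zip(predicted_totals, reference_totals))
    return 100.0 * hits / len(reference_totals)

# Hypothetical cumulative scores for four exams.
algorithm_totals = [10, 25, 30, 24]
clinician_totals = [12, 24, 41, 20]

within_2 = exam_agreement(algorithm_totals, clinician_totals, 2)
within_5 = exam_agreement(algorithm_totals, clinician_totals, 5)
within_10 = exam_agreement(algorithm_totals, clinician_totals, 10)
prognostic = prognostic_agreement(algorithm_totals, clinician_totals)
```

Note that the second exam differs by a single point (25 versus 24) yet falls on opposite sides of the threshold, so it counts as an exam-level agreement but as a prognostic disagreement.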

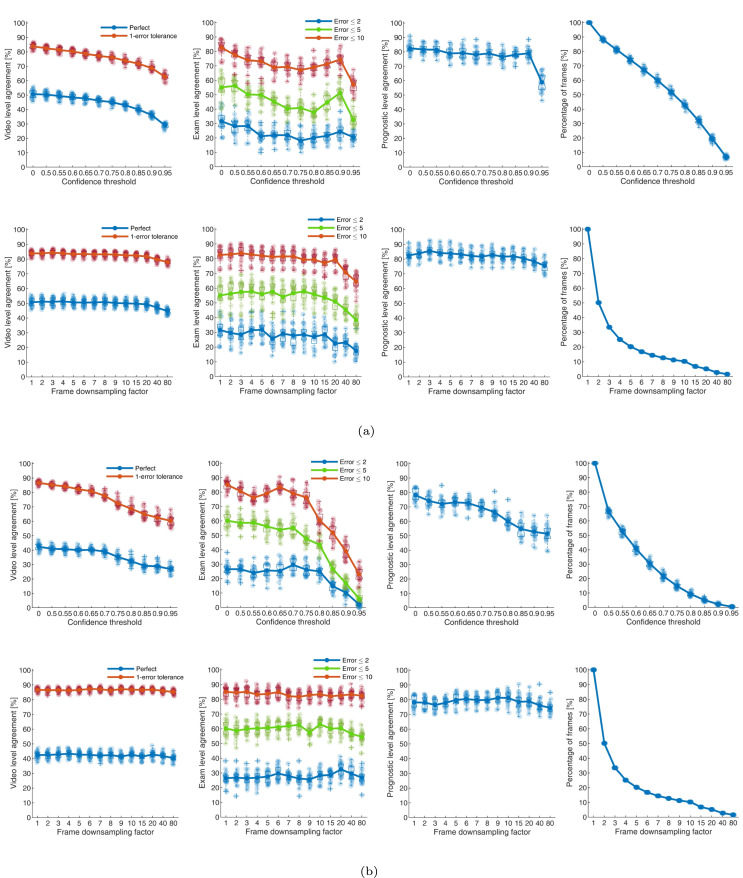

Fig. 5.

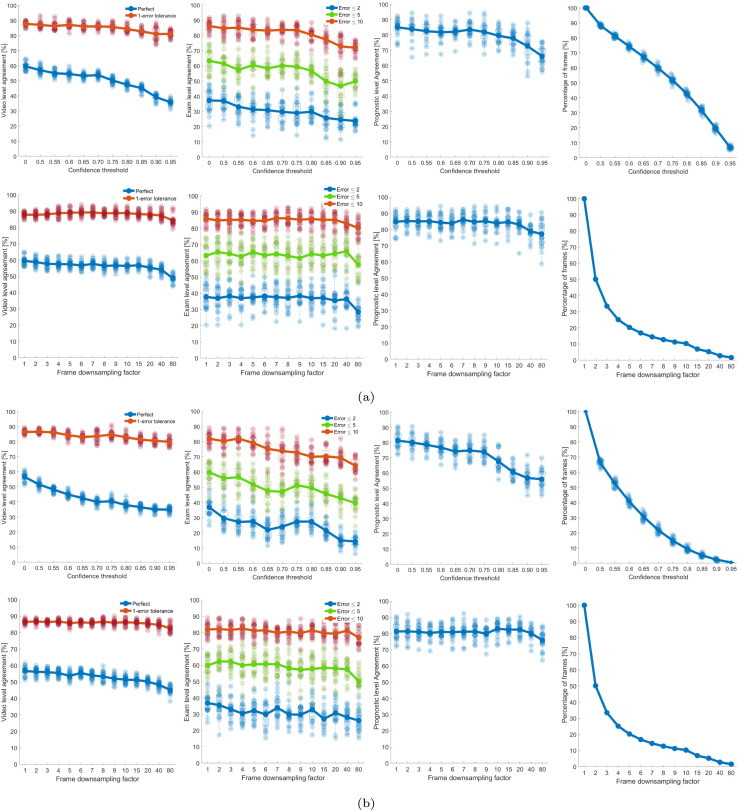

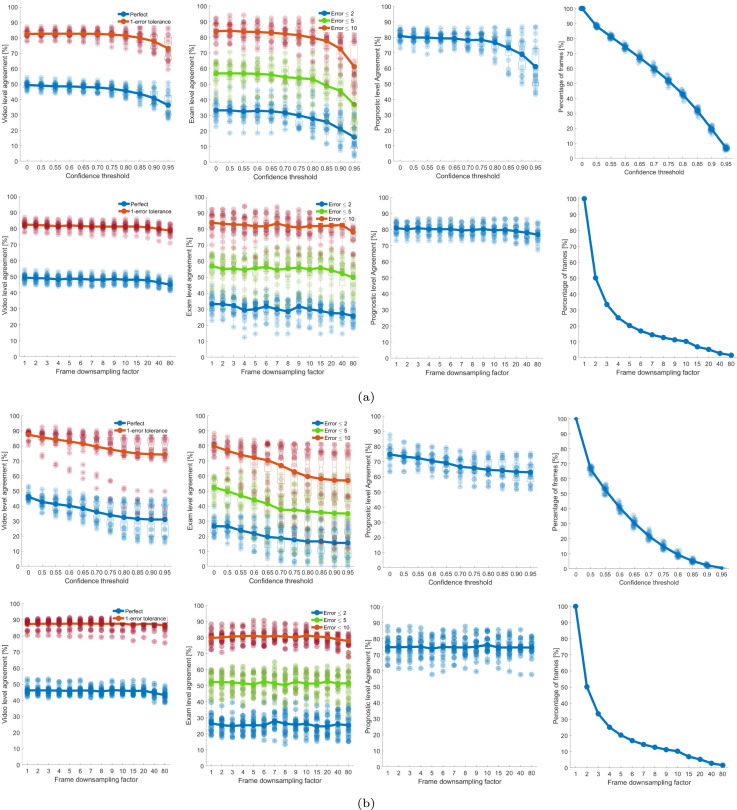

The figure indicates the results for the 60%–40% splits for the threshold-based technique. (a) Results for one of the two ResNet-18 variants (trained from scratch or pre-trained). The first row represents the results obtained by applying a threshold to the confidence (x-axis). A confidence threshold equal to 0 represents the output without applying a threshold to the confidence (all frames considered). The second row represents the results obtained by periodically skipping frames. A frame downsampling factor equal to 1 represents the output without skipping frames (all frames considered). The first, second, and third columns indicate the video, exam, and prognostic-level agreements. The fourth column indicates how the data are reduced by increasing the confidence threshold (top) and the frame downsampling factor (bottom). For each point on the x-axis a box is drawn, representing the 20 values obtained with the 20 different splits made with 40% of the data. The continuous lines and circles indicate the mean values. The upper and lower limits of each box indicate the 75th and 25th percentiles, respectively. The upper and lower horizontal lines of each box represent the maximum and the minimum, respectively. The outliers are represented as crosses. For each point on the x-axis, the 20 values of agreement (1st, 2nd, and 3rd columns) and percentage of utilized frames (4th column) are indicated as semi-transparent points. (b) As for (a), but for the other ResNet-18 variant.

To analyze the impact on the video-level scoring of the confidence levels of the scores, as well as the impact of downsampling the frames within a video, two approaches are followed. In the first approach, before applying the aggregation technique, we filter the frames based on the confidence level of the scores given by the model. In the second approach, we downsample the frames within the video by a frame downsampling factor. In the first approach, we apply the aggregation techniques by considering only the frames whose confidence level is equal to or greater than a specific confidence threshold. Therefore, if the confidence threshold is 0.5, we consider in the evaluation only the frames having a confidence level of at least 0.5. For instance, if a frame has a maximum confidence level of 0.52 for any of the four scores, the frame, labeled with the respective score, is considered for the aggregation techniques. In contrast, the second approach is based on periodically skipping frames within each video. With a downsampling factor of 2 and a video composed of 10 frames, we would thus consider only the first, third, fifth, seventh, and ninth frame of the video for the aggregation technique. The first approach requires a higher computational cost for the frame-level classification, as all the frames must be fed to the network to obtain the confidence level. In contrast, the second approach requires a lower computational cost for the frame-level classification, as a given number of frames is skipped before classification. In both cases, the computational cost of the aggregation techniques decreases as the confidence threshold or the downsampling factor increases, as fewer frames are considered. A preliminary study evaluating the effect of frame downsampling on video and prognostic-level scoring was reported in [29].
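The two frame-selection approaches can be sketched as follows, assuming each frame is represented by a row holding the four confidence levels followed by the predicted score. The function names and example values are hypothetical.

```python
def filter_by_confidence(rows, confidence_threshold):
    """Keep the frames whose highest confidence level reaches the threshold.

    Each row is [c0, c1, c2, c3, predicted_score].
    """
    return [row for row in rows if max(row[:4]) >= confidence_threshold]

def downsample_frames(frames, factor):
    """Keep one frame every `factor` frames, starting from the first one."""
    if factor < 1:
        raise ValueError("the downsampling factor must be at least 1")
    return frames[::factor]

# Hypothetical frame-level outputs.
rows = [
    [0.52, 0.30, 0.10, 0.08, 0],  # kept with a threshold of 0.5
    [0.45, 0.40, 0.10, 0.05, 0],  # discarded with a threshold of 0.5
    [0.05, 0.15, 0.70, 0.10, 2],  # kept with a threshold of 0.5
]
confident_rows = filter_by_confidence(rows, 0.5)

# A 10-frame video with factor 2 keeps frames 1, 3, 5, 7, and 9.
kept_frames = downsample_frames(list(range(1, 11)), 2)
```

Confidence filtering can only run after the network has scored every frame, while downsampling can be applied before the network is run at all, which is the source of the difference in frame-level computational cost discussed above.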

2.4.1. Training strategy

For each medical center, we use three different (train-test) split ratios. We consider 20 different folds for each of these ratios. As part of the protocol proposed in [12], each patient's data was recorded from 14 different areas. If the fractions were selected randomly from the whole data, data from the same patient could end up in both the training and the testing set. This would cause information leakage, which adds bias to the evaluation. To avoid this leakage between train and test data, the splits are made at the patient level, ensuring that no overlap (i.e., data from the same patient) is found between the training and testing data [30].
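A patient-level split can be sketched as below. This is an illustration under assumptions: the 60% training fraction, the use of the fold index as random seed, and the identifiers are hypothetical, and the sketch handles a single medical center rather than repeating the procedure for each center.

```python
import random

def patient_level_split(patient_ids, train_fraction, seed):
    """Split sample indices so that no patient appears in both sets.

    `patient_ids[i]` is the patient the i-th sample (e.g., video) belongs to.
    """
    patients = sorted(set(patient_ids))
    rng = random.Random(seed)  # a different seed gives a different fold
    rng.shuffle(patients)
    n_train = round(train_fraction * len(patients))
    train_patients = set(patients[:n_train])
    train_idx = [i for i, p in enumerate(patient_ids) if p in train_patients]
    test_idx = [i for i, p in enumerate(patient_ids) if p not in train_patients]
    return train_idx, test_idx

# Hypothetical center with 10 patients and one video per acquisition area (14 each).
video_patients = [f"P{k:02d}" for k in range(10) for _ in range(14)]

# 20 folds for one split ratio.
folds = [patient_level_split(video_patients, 0.6, seed) for seed in range(20)]
```

Because the shuffle acts on patient identifiers and not on individual videos, all 14 areas of a patient always land on the same side of the split.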

3. Experiments and results

3.1. Frame-level agreement

To gauge the performance of the state-of-the-art CNN models on our dataset, the agreement between the frame-level score predicted by the models and the ground-truth frame-level score needs to be measured. To do so, we evaluated our models' performance with the metric already used in previous studies [14], [15], i.e., the F1-Score, which assesses classification performance as the harmonic mean of precision and recall.
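For reference, the standard per-class definition of the F1-Score is recalled below, with $TP$, $FP$, and $FN$ denoting the true positives, false positives, and false negatives of a given class. How the per-class values are averaged over the four scores is not specified in this section, so no averaging scheme is assumed here.

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad F1 = \frac{2\,P\,R}{P + R}$$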

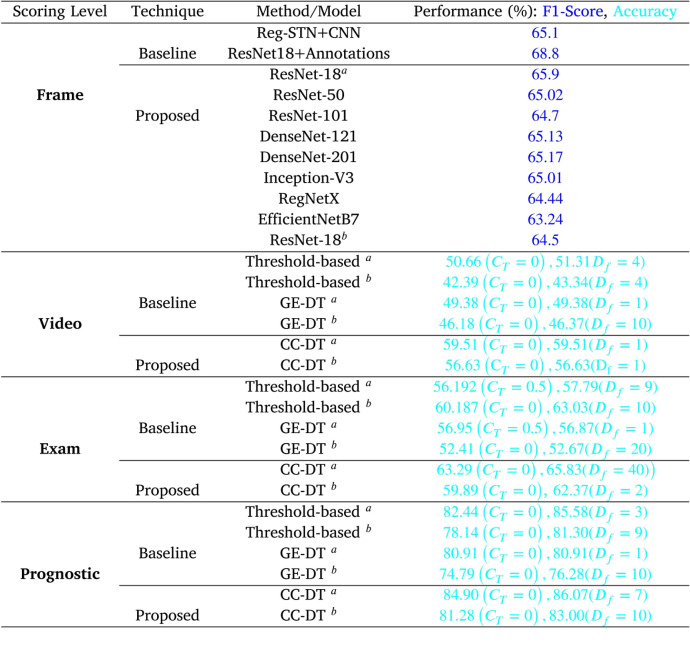

In the initial step of frame-level scoring, the validation task consisted of employing DL models, variants of ResNet, DenseNet, Inception, that were trained and validated over dataset-1. These models were evaluated using the train/test split used in [14], [15] for bench-marking and comparison purposes. Classification results of these models are represented in Table 1. As per the evaluation, ResNet-18 performed better than the rest of the models with an F1-Score of 0.659. To visualize the features and patterns the model considers while making the prediction, Grad-CAM results of input frames to ResNet-18, are also obtained and visualized in Fig. 3.

Fig. 3.

Gradient-weighted Class Activation Maps (Grad-CAM) for each class (left to right: Score 0 to Score 3).

With ResNet-18 being the best performing model, we extended our evaluation to the same model with pre-trained weights from the ImageNet dataset loaded into the convolutional layers of the network. Training and fine-tuning this model on dataset-1 with the same train/test split gave a comparable performance, with an F1-Score of 0.645. For readability, the two versions are referred to in the remainder of the manuscript as ResNet-18 trained from scratch and pre-trained ResNet-18.

Regarding the performance and architectures of the existing baseline techniques, Roy et al. [14] proposed a cascaded architecture with a Spatial Transformer Network followed by a CNN to categorize the LUS video frames. Their method classified the input frames with an F1-Score of 0.651. Another baseline study on the same dataset is that of Frank et al. [15], who used not only the raw LUS frame as input data but also additional domain knowledge of vertical artifacts and the pleural line as input channels. This multi-channel information is then fed to a set of pre-trained networks, which are trained and fine-tuned accordingly. This approach achieved an F1-Score of 0.688 when all of the information channels were used as input to a pre-trained ResNet-18 model. In comparison to these baseline techniques, the best performing model in our study, ResNet-18, gave a comparable F1-Score of 0.659 when trained from scratch and an F1-Score of 0.645 when used in a transfer learning setting.

In the latter part of our frame-level scoring, i.e., the testing task, the best performing model, ResNet-18 trained from scratch, along with its pre-trained version, is tested on the LUS video frames from dataset-2. Given the frame-level scores and the confidence levels for each score on the testing dataset-2, the video, exam, and prognostic-level agreements are computed and evaluated.

3.2. Video, exam, & prognostic-level agreement

The proposed method, which computes cross-correlation coefficients and classifies them using a DT (CC-DT), is evaluated and compared with the two existing aggregation techniques, i.e., the Threshold-based [24] and the Grammatically Evolved DT-based (GE-DT) [26] techniques. Video, exam, and prognostic-level scoring are analyzed accordingly. As part of the study, the impact of the confidence levels and of downsampling on the scoring is also evaluated. These evaluations were performed for three train-test split ratios. However, only the results for the 60%–40% (train-test) split for the three aggregation techniques are included in the main manuscript. The results for the other split ratios were also obtained and are provided separately (footnote 1).

The results of the CC-DT-based approach are shown in Fig. 4. The technique was applied to the frames evaluated by the two versions of the ResNet-18 model. The results obtained from ResNet-18 trained from scratch are shown in Fig. 4(a). As previously mentioned, two criteria are considered for reducing the number of frames of a whole video. The first criterion is based on the confidence level, for which the results are provided in the first row of Fig. 4(a). The first column of the figure shows the video-level agreements (i.e., perfect and 1-error tolerance). As can be seen, video-level agreement decreases as the confidence threshold increases. The perfect and 1-error tolerance video-level agreements drop from 59.51% and 87.75% at a confidence threshold of 0 to 35.77% and 81.11% at a confidence threshold of 0.95, respectively. A similar trend is observed for the exam-level evaluations (second column of Fig. 4(a)). The average prognostic-level agreement decreases from 84.90% at a confidence threshold of 0 to 79.42% at a confidence threshold of 0.95. The fourth column of Fig. 4(a) shows the percentage of the whole video frames used in the aggregation stage, which indicates how far the number of frames can be reduced based on the confidence level. For example, reducing the number of frames by 50% requires a confidence threshold of 0.75, which results in an average prognostic-level agreement of 81.9%. Using less than 10% of the frames requires a confidence threshold of 0.95, which leads to a prognostic-level agreement of 79.42%. For the second criterion, the second row of Fig. 4(a) shows the results for frame downsampling. Here, as the downsampling factor increases, the mean values decrease for all three forms of agreement (i.e., video, exam, and prognostic-level). Interestingly, however, the decrease is not substantial.
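The two video-level agreement measures mentioned above can be sketched as follows. The sketch assumes that perfect agreement means identical predicted and clinician scores, and that 1-error tolerance means the two scores differ by at most one point; the function name `agreement` and the example scores are hypothetical.

```python
from typing import Sequence


def agreement(predicted: Sequence[int], reference: Sequence[int], tolerance: int = 0) -> float:
    """Percentage of videos whose predicted score is within `tolerance` of the reference."""
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("score lists must be non-empty and of equal length")
    hits = sum(abs(p - r) <= tolerance for p, r in zip(predicted, reference))
    return 100.0 * hits / len(predicted)


# Hypothetical video-level scores (0 to 3) for five videos.
clinician = [0, 1, 2, 3, 2]
model = [0, 2, 2, 1, 3]

perfect = agreement(model, clinician)                # exact matches only
one_error = agreement(model, clinician, tolerance=1)  # off-by-one also counts
print(perfect, one_error)
```

By construction, the 1-error tolerance agreement can never be lower than the perfect agreement, which matches the ordering of the values reported in the text.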

Fig. 4.

Results for the 60%–40% splits for the proposed CC-DT method. (a) Results for ResNet-18 trained from scratch. The first row represents the results obtained by applying a threshold to the confidence (x-axis). A confidence threshold equal to 0 represents the output without applying a threshold to the confidence (all frames considered). The second row represents the results obtained by periodically skipping frames. A downsampling factor equal to 1 represents the output without skipping frames (all frames considered). The first, second, and third columns indicate the video, exam, and prognostic-level agreements. The fourth column indicates how the data are reduced by increasing the confidence threshold (top) and the downsampling factor (bottom). For each point on the x-axis a box is drawn, representing the 20 values obtained with the 20 different splits made with 40% of the data. The continuous lines and circles indicate the mean values. The upper and lower limits of each box indicate the 75th and 25th percentiles, respectively. The upper and lower horizontal lines (whiskers) of each box represent the maximum and the minimum, respectively. The outliers are represented as crosses. For each point on the x-axis, the 20 values of agreement (1st, 2nd, and 3rd columns) and percentage of utilized frames (4th column) are shown as semi-transparent points. (b) As for (a) but for pre-trained ResNet-18.

The performance trends described above are also visualized for the existing threshold-based [24] and GE-DT-based [25] methods in Fig. 5 and Fig. 6, respectively, when using the frame-level scoring from both versions of ResNet-18 (trained from scratch and pre-trained). Furthermore, the maxima of the mean values of the trends for all levels of agreement were also compared, and are reported quantitatively in Table 1. The results reveal that the proposed approach led to better performance than the other two aggregation techniques. Specifically, when the confidence threshold is used to reduce the number of video frames, the proposed CC-DT led to maximum video, exam, and prognostic-level agreements of 59.51%, 63.29%, and 84.90% for ResNet-18 trained from scratch and of 56.63%, 59.89%, and 81.28% for pre-trained ResNet-18. When the number of frames is reduced based on the downsampling factor, the proposed CC-DT resulted in maximum agreements of 59.51%, 65.83%, and 86.07% for ResNet-18 trained from scratch and of 56.63%, 62.37%, and 83.00% for pre-trained ResNet-18. In conclusion, the proposed CC-DT approach produced better results at the video and prognostic levels.
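The "maximum of mean values" summary used for Table 1 can be sketched as below: for each setting (a confidence threshold or a downsampling factor), the agreement is averaged over the splits, and the largest mean is reported. The function name `max_of_means` and the agreement values are hypothetical and only two splits per setting are shown for brevity, whereas the study uses 20.

```python
from statistics import mean
from typing import Dict, List, Tuple


def max_of_means(agreements_by_setting: Dict[float, List[float]]) -> Tuple[float, float]:
    """Return (best_setting, best_mean) over the per-setting mean agreements."""
    means = {setting: mean(values) for setting, values in agreements_by_setting.items()}
    best_setting = max(means, key=means.get)
    return best_setting, means[best_setting]


# Hypothetical video-level agreements (%) per confidence threshold, one value per split.
agreements_by_threshold = {
    0.0: [60.0, 58.0],
    0.5: [57.0, 55.0],
    0.95: [36.0, 34.0],
}
best_threshold, best_mean = max_of_means(agreements_by_threshold)
print(best_threshold, best_mean)
```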

Fig. 6.

Results for the 60%–40% splits for the GE-DT-based approach. (a) Results for ResNet-18 trained from scratch. The first row represents the results obtained by applying a threshold to the confidence (x-axis). A confidence threshold equal to 0 represents the output without applying a threshold to the confidence (all frames considered). The second row represents the results obtained by periodically skipping frames. A downsampling factor equal to 1 represents the output without skipping frames (all frames considered). The first, second, and third columns indicate the video, exam, and prognostic-level agreements. The fourth column indicates how the data are reduced by increasing the confidence threshold (top) and the downsampling factor (bottom). For each point on the x-axis a box is drawn, representing the 20 values obtained with the 20 different splits made with 40% of the data. The continuous lines and circles indicate the mean values. The upper and lower limits of each box indicate the 75th and 25th percentiles, respectively. The upper and lower horizontal lines (whiskers) of each box represent the maximum and the minimum, respectively. The outliers are represented as crosses. For each point on the x-axis, the 20 values of agreement (1st, 2nd, and 3rd columns) and percentage of utilized frames (4th column) are shown as semi-transparent points. (b) As for (a) but for pre-trained ResNet-18.

Table 1.

Performance analysis of the proposed approaches in comparison to the baseline methods in terms of Frame, Video, Exam, and Prognostic-level Agreement (frame-level performance represents the F1-Score, whereas video, exam, and prognostic-level performance represents the maximum value for Accuracy over varying values of the confidence threshold and the downsampling factor).

4. Discussion and conclusion

In this study, we presented an extensive analysis of state-of-the-art CNN models for frame-level scoring, of AI strategies for video-level scoring, and of the impact of reducing frames based on confidence levels and temporal downsampling. Summarizing our results, we showed that by using only LUS image frames as input, instead of additional domain knowledge [15], and classifying them with state-of-the-art DL models, instead of complex architectures [14], performance similar to that of the existing technique [14] can be achieved. Regarding the performance gain obtained with the additional channel information [15], it is worth mentioning that this gain (an increase of 0.029 in F1-Score, from 0.659 to 0.688) may not be significant enough to justify the added computational complexity. Extending our analysis to the use of pre-trained weights from the ImageNet dataset, we also observed that the model trained from scratch performed better than the pre-trained one. This suggests that, when a significant amount of representative data from multiple medical centers is available, a CNN model trained from scratch is able to generalize better to unseen data.

Regarding the video-level scoring of LUS scans, the proposed CC-DT method outperformed the existing threshold-based and GE-DT-based aggregation techniques. Both DT-based methods add explainability to the scoring process. However, they also make the aggregation more complex than the threshold-based technique. When analyzing the impact of video-frame reduction on the exam and prognostic-level agreement, the results indicated that periodic frame downsampling performed better than the application of a confidence threshold. This can be explained by the fact that imposing a high confidence threshold is substantially at odds with the subjectivity of the scoring system, which results in frames with nearly equivalent confidence levels for two neighboring scores. Removing this type of frame can ultimately exclude the most important frames of the video from the analysis, thus resulting in an erroneous classification.

A few key points emerge from our analysis. Although the F1-Score of our frame-level method is relatively low, it is in line with the level of inter-observer agreement among clinicians [27]. Since the DL models are trained on data whose ground truth is provided by clinicians, it is not reasonable to expect higher performance. Furthermore, it is important to point out that the relatively low F1-Score at the frame level does not translate into low performance at the prognostic level, as demonstrated by the reported results. This can be explained by the fact that the subjectivity of the semi-quantitative scoring system is essentially limited to neighboring scores [27], combined with the cumulative nature of the score used to obtain the prognostic stratification. Indeed, differences at the video level do not add coherently across the 14 areas scanned. As future work, we aim to extend our analysis from CNN models to the latest DL methods, such as transformers and MLP mixers. With these methods, we plan to extend this analysis from COVID-19 to post-COVID-19 data.
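The observation that video-level differences do not add coherently can be illustrated with a small numerical sketch. It assumes the cumulative score is the sum of the 14 video-level scores, and the scores below are invented for illustration: several off-by-one disagreements of opposite sign largely cancel in the sum.

```python
# Hypothetical video-level scores (0 to 3) for the 14 scanned areas of one exam.
clinician = [1, 2, 0, 3, 2, 1, 1, 2, 3, 0, 1, 2, 2, 1]
model = [2, 1, 0, 3, 3, 1, 1, 2, 3, 1, 1, 1, 2, 1]

# Number of areas where the two video-level scores disagree.
disagreements = sum(m != c for m, c in zip(model, clinician))

# Cumulative (exam-level) scores, assumed here to be the sum over the 14 areas.
cumulative_clinician = sum(clinician)
cumulative_model = sum(model)
cumulative_error = cumulative_model - cumulative_clinician

print(disagreements, cumulative_clinician, cumulative_model, cumulative_error)
```

In this contrived case the two scorings disagree on 5 of the 14 areas, yet the cumulative scores differ by a single point, because the positive and negative errors partially cancel.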

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Footnotes

Data availability

Data will be made available on request.

References

- 1. Pollard C.A., Morran M.P., Nestor-Kalinoski A.L. The COVID-19 pandemic: a global health crisis. Physiol. Genomics. 2020 doi: 10.1152/physiolgenomics.00089.2020.

- 2. Joshi P., Tyagi R., Agarwal K.M. Technological resources for fighting COVID-19 pandemic health issues. J. Ind. Integr. Manag. 2021;6(02):271–285.

- 3. Woloshin S., Patel N., Kesselheim A.S. False negative tests for SARS-CoV-2 infection—challenges and implications. N. Engl. J. Med. 2020;383(6) doi: 10.1056/NEJMp2015897.

- 4. Yang Y., Yang M., Yuan J., Wang F., Wang Z., Li J., Zhang M., Xing L., Wei J., Peng L., et al. Laboratory diagnosis and monitoring the viral shedding of SARS-CoV-2 infection. Innov. 2020;1(3) doi: 10.1016/j.xinn.2020.100061.

- 5. Soldati G., Smargiassi A., Inchingolo R., Buonsenso D., Perrone T., Briganti D.F., Perlini S., Torri E., Mariani A., Mossolani E.E., et al. Is there a role for lung ultrasound during the COVID-19 pandemic? J. Ultrasound Med. 2020 doi: 10.1002/jum.15284.

- 6. Demi L. Lung ultrasound: The future ahead and the lessons learned from COVID-19. J. Acoust. Soc. Am. 2020;148(4):2146–2150. doi: 10.1121/10.0002183.

- 7. Soldati G., Demi M., Smargiassi A., Inchingolo R., Demi L. The role of ultrasound lung artifacts in the diagnosis of respiratory diseases. Expert Rev. Respir. Med. 2019;13(2):163–172. doi: 10.1080/17476348.2019.1565997.

- 8. Picano E., Pellikka P.A. Ultrasound of extravascular lung water: a new standard for pulmonary congestion. Eur. Heart J. 2016;37(27):2097–2104. doi: 10.1093/eurheartj/ehw164.

- 9. Soldati G., Demi M., Inchingolo R., Smargiassi A., Demi L. On the physical basis of pulmonary sonographic interstitial syndrome. J. Ultrasound Med. Off. J. Am. Inst. Ultrasound Med. 2016;35(10):2075–2086. doi: 10.7863/ultra.15.08023.

- 10. Copetti R., Soldati G., Copetti P. Chest sonography: a useful tool to differentiate acute cardiogenic pulmonary edema from acute respiratory distress syndrome. Cardiovasc. Ultrasound. 2008;6(1):1–10. doi: 10.1186/1476-7120-6-16.

- 11. Mento F., Demi L. On the influence of imaging parameters on lung ultrasound B-line artifacts, in vitro study. J. Acoust. Soc. Am. 2020;148(2):975–983. doi: 10.1121/10.0001797.

- 12. Soldati G., Smargiassi A., Inchingolo R., Buonsenso D., Perrone T., Briganti D.F., Perlini S., Torri E., Mariani A., Mossolani E.E., et al. Proposal for international standardization of the use of lung ultrasound for patients with COVID-19: a simple, quantitative, reproducible method. J. Ultrasound Med. 2020;39(7):1413–1419. doi: 10.1002/jum.15285.

- 13. Van Sloun R.J., Demi L. Localizing B-lines in lung ultrasonography by weakly supervised deep learning, in-vivo results. IEEE J. Biomed. Health Inf. 2019;24(4):957–964. doi: 10.1109/JBHI.2019.2936151.

- 14. Roy S., Menapace W., Oei S., Luijten B., Fini E., Saltori C., Huijben I., Chennakeshava N., Mento F., Sentelli A., Peschiera E., Trevisan R., Maschietto G., Torri E., Inchingolo R., Smargiassi A., Soldati G., Rota P., Passerini A., van Sloun R.J.G., Ricci E., Demi L. Deep learning for classification and localization of COVID-19 markers in point-of-care lung ultrasound. IEEE Trans. Med. Imaging. 2020;39(8):2676–2687. doi: 10.1109/TMI.2020.2994459.

- 15. Frank O., Schipper N., Vaturi M., Soldati G., Smargiassi A., Inchingolo R., Torri E., Perrone T., Mento F., Demi L., et al. Integrating domain knowledge into deep networks for lung ultrasound with applications to COVID-19. IEEE Trans. Med. Imaging. 2021;41(3):571–581. doi: 10.1109/TMI.2021.3117246.

- 16. Demi L., Mento F., Di Sabatino A., Fiengo A., Sabatini U., Macioce V.N., Robol M., Tursi F., Sofia C., Di Cienzo C., et al. Lung ultrasound in COVID-19 and post-COVID-19 patients, an evidence-based approach. J. Ultrasound Med. 2021 doi: 10.1002/jum.15902.

- 17. Khan U., Mento F., Giacomaz L.N., Trevisan R., Smargiassi A., Inchingolo R., Perrone T., Demi L. Deep learning-based classification of reduced lung ultrasound data from COVID-19 patients. IEEE Trans. Ultrason. Ferroelectr. Freq. Control. 2022;69(5):1661–1669. doi: 10.1109/TUFFC.2022.3161716.

- 18. Demi L., Wolfram F., Klersy C., De Silvestri A., Ferretti V.V., Muller M., Miller D., Feletti F., Wełnicki M., Buda N., et al. New international guidelines and consensus on the use of lung ultrasound. J. Ultrasound Med. 2022 doi: 10.1002/jum.16088.

- 19. Savage N. Breaking into the black box of artificial intelligence. Nature. 2022 doi: 10.1038/d41586-022-00858-1.

- 20. Soldati G., Smargiassi A., Inchingolo R., Buonsenso D., Perrone T., Briganti D.F., Perlini S., Torri E., Mariani A., Mossolani E.E., Tursi F., Mento F., Demi L. Proposal for International Standardization of the Use of Lung Ultrasound for Patients With COVID-19. J. Ultrasound Med. 39(7):1413–1419.

- 21. Mento F., Perrone T., Fiengo A., Smargiassi A., Inchingolo R., Soldati G., Demi L. Deep learning applied to lung ultrasound videos for scoring COVID-19 patients: A multicenter study. J. Acoust. Soc. Am. 2021;149(5):3626–3634. doi: 10.1121/10.0004855.

- 22. Zhang A., Lipton Z.C., Li M., Smola A.J. 2021. Dive into deep learning. arXiv preprint arXiv:2106.11342.

- 23. Selvaraju R.R., Cogswell M., Das A., Vedantam R., Parikh D., Batra D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. Proceedings of the IEEE International Conference on Computer Vision; 2017. pp. 618–626.

- 24. Mento F., Perrone T., Fiengo A., Smargiassi A., Inchingolo R., Soldati G., Demi L. Deep learning applied to lung ultrasound videos for scoring COVID-19 patients: A multicenter study. J. Acoust. Soc. Am. 2021;149(5):3626–3634. doi: 10.1121/10.0004855.

- 25. Custode L.L., Mento F., Afrakhteh S., Tursi F., Smargiassi A., Inchingolo R., Perrone T., Iacca G., Demi L. Proceedings of Meetings on Acoustics 182ASA, Vol. 46, No. 1. Acoustical Society of America; 2022. Neuro-symbolic interpretable AI for automatic COVID-19 patient-stratification based on standardised lung ultrasound data.

- 26. O’Neil M., Ryan C. Grammatical Evolution. Springer; 2003. pp. 33–47.

- 27. Fatima N., Mento F., Zanforlin A., Smargiassi A., Torri E., Perrone T., Demi L. Human-to-AI interrater agreement for lung ultrasound scoring in COVID-19 patients. J. Ultrasound Med. 2022 doi: 10.1002/jum.16052.

- 28. Perrone T., Soldati G., Padovini L., Fiengo A., Lettieri G., Sabatini U., Gori G., Lepore F., Garolfi M., Palumbo I., Inchingolo R., Smargiassi A., Demi L., Mossolani E.E., Tursi F., Klersy C., Di Sabatino A. A New Lung Ultrasound Protocol Able to Predict Worsening in Patients Affected by Severe Acute Respiratory Syndrome Coronavirus 2 Pneumonia. J. Ultrasound Med. 40(8):1627–1635.

- 29. Khan U., Mento F., Giacomaz L.N., Trevisan R., Demi L., Smargiassi A., Inchingolo R., Perrone T. Proceedings of Meetings on Acoustics 182ASA, Vol. 46, No. 1. Acoustical Society of America; 2022. Impact of pixel, intensity, & temporal resolution on automatic scoring of LUS from coronavirus disease 2019 patients.

- 30. Roshankhah R., Karbalaeisadegh Y., Greer H., Mento F., Soldati G., Smargiassi A., Inchingolo R., Torri E., Perrone T., Aylward S., et al. Investigating training-test data splitting strategies for automated segmentation and scoring of COVID-19 lung ultrasound images. J. Acoust. Soc. Am. 2021;150(6):4118–4127. doi: 10.1121/10.0007272.
