Abstract

Motivation

Deep learning approaches have empowered single-cell omics data analysis in many ways and generated new insights from complex cellular systems. As there is an increasing need for single-cell omics data to be integrated across sources, types and features of data, the challenges of integrating single-cell omics data are rising. Here, we present an unsupervised deep learning algorithm that learns discriminative representations for single-cell data via maximizing mutual information, SMILE (Single-cell Mutual Information Learning).

Results

Using a unique cell-pairing design, SMILE successfully integrates multisource single-cell transcriptome data, removing batch effects and projecting similar cell types, even from different tissues, into the shared space. SMILE can also integrate data from two or more modalities, such as joint-profiling technologies using single-cell ATAC-seq, RNA-seq, DNA methylation, Hi-C and ChIP data. When paired cells are known, SMILE can integrate data with unmatched features, such as genes for RNA-seq and genome-wide peaks for ATAC-seq. Integrated representations learned from joint-profiling technologies can then be used as a framework for comparing independent single source data.

Availability and implementation

The source code of SMILE including analyses of key results in the study can be found at: https://github.com/rpmccordlab/SMILE, implemented in Python.

Supplementary information

Supplementary data are available at Bioinformatics online.

1 Introduction

Deep-learning-based single-cell analysis has gained great attention in recent years and has been used in a range of tasks, including accurate cell-type annotation (Ma and Pellegrini, 2020), expression imputation (Arisdakessian et al., 2019) and doublet identification (Bernstein et al., 2020). In these tasks, deep learning showed some striking advantages. For example, in cell-type annotation, the automatic and accurate annotation using a deep classification model saves researchers from manual cell-type annotation (Kimmel and Kelley, 2021; Lopez et al., 2018; Ma and Pellegrini, 2020). Another application of deep learning is data imputation and denoising. Though there has been a dramatic improvement in scRNA-seq technology, the problem of measurement sparsity remains as a grand challenge in single-cell transcriptome data (Lähnemann et al., 2020). Due to the difficulty of modeling technical zero values and biological zeros, deep learning has become a more appealing alternative for this task. An autoencoder (AE) is a common artificial neural network that is used to learn representations for data in an unsupervised manner. Both DeepImpute (Arisdakessian et al., 2019) and DCA (Eraslan et al., 2019) adopt a variant of AE model to impute gene expression and denoise scRNA-seq data. These approaches and many others are revealing the power of deep learning applied to single-cell omics datasets.

Data integration is a rising challenge in single-cell analysis, as increasing numbers of single-cell omics datasets become available, and the types of omics data become more diverse. Consequently, data integration becomes a key research domain for understanding a complex cellular system from different angles (Argelaguet et al., 2021; Forcato et al., 2021; Longo et al., 2021; Stuart and Satija, 2019). In single-cell transcriptomes, batch effects are usually a prominent source of variation when comparing data from multiple sources, and removing batch effects is a critical step for revealing biologically relevant variation. Besides integrating single-cell transcriptome data, integration of multimodal single-cell data is becoming even more important as technological breakthroughs make it possible to capture multiple data types from the same single cell. For example, sci-CAR and SHARE-seq can simultaneously profile chromatin accessibility and gene expression for thousands of single cells (Cao et al., 2018; Ma et al., 2020). scMethyl-HiC and sn-m3C-seq can profile DNA methylation and 3D chromatin structure at the same time at single-cell resolution (Lee et al., 2019; Li et al., 2019). However, these data types do not naturally share the same feature space: transcriptomes are described using genes as features, while chromatin accessibility is reported across all intergenic spaces. Therefore, integration of multimodal data is more challenging because of this feature discrepancy. Furthermore, a new technology named Paired-Tag now achieves joint profiling of gene expression and five different histone marks for thousands of single nuclei (Zhu et al., 2021). This new technology brings the further challenge of integrating more than two modalities.

Currently, there are three major approaches to integrating single-cell data: horizontal, vertical and diagonal, and a previous review has outlined published methods in each category (Argelaguet et al., 2021). Diagonal approaches would theoretically allow the integration of datasets without paired cells or shared features, but computational studies are showing that this is a much greater challenge, and no method has yet demonstrated a diagonal approach that is successful for general use in single-cell data integration. Thus, horizontal and vertical integration approaches are highly useful in different circumstances. Horizontal approaches rely on feature anchors (such as shared gene sets) for integration. Methods in this category can address batch effects within multisource single-cell transcriptome data or multimodal single-cell data integration, if shared features exist. Since independent single-cell assays over the years have generated most single-cell omics data, integrative analysis by horizontal approaches is critical for making full use of these independent studies. On the other hand, vertical approaches need cell anchors to learn the integration and have a unique niche when shared features are difficult to engineer across different data types. For example, in joint Methyl/Hi-C data, Hi-C data quantitates 2D interaction features across the genome while the methylation level of genomic regions is measured in 1D along the genome (Lee et al., 2019; Li et al., 2019). While 2D Hi-C data can be used to calculate 1D properties such as A/B compartment status or TAD insulation profiles, it is unclear how best to convert these data into a common feature space while preserving important biological information. But, if cell pairs are known, as in joint-profiling data, vertical methods can use this information to learn which features allow these divergent data types to be encoded into integrated representations.
Joint representations developed by vertical integration can also then be used to project other single source data from any of the initially used feature spaces.

Here, we designed a deep learning model, SMILE (Single-cell Mutual Information Learning), that learns a discriminative representation for data integration in an unsupervised manner. In our approach, we restructured cells into pairs, and we aimed to maximize the similarity between the paired cells in the latent space. SMILE can perform well in a horizontal mode, using anchored features such as shared gene sets to remove batch effects in data from different sources. However, SMILE also has key advantages when applied to vertical integration. Because of its cell-pairing design, SMILE extends naturally into integration of multimodal single-cell data, where data from two sources (RNA-seq/ATAC-seq or Methyl/Hi-C) exist for each cell and thus form a natural pair. We demonstrated that SMILE can effectively project RNA-seq/ATAC-seq data, as well as Methyl/Hi-C data, into the shared space and achieve data integration. In this way, SMILE supplies the key advantages of a good vertical method, integrating datatypes that cannot be fit into shared feature spaces. We demonstrate how our representation allows us to identify genes and regions of accessibility that are critical for the mutual definition of distinctive cell types by these different data types. We also show how an integrated representation created using jointly profiled data can then be used to project and interpret single source data. Finally, we present a combinatorial use of SMILE models to integrate single-cell RNA-seq, H3K4me1, H3K9me3, H3K27me3 and H3K27ac data generated by Paired-Tag. In summary, SMILE performs as well or better than other methods designed for data integration while also having increased flexibility in terms of data input types.

2 Materials and methods

2.1 Architecture of SMILE, p (paired) SMILE and mp (modified paired) SMILE

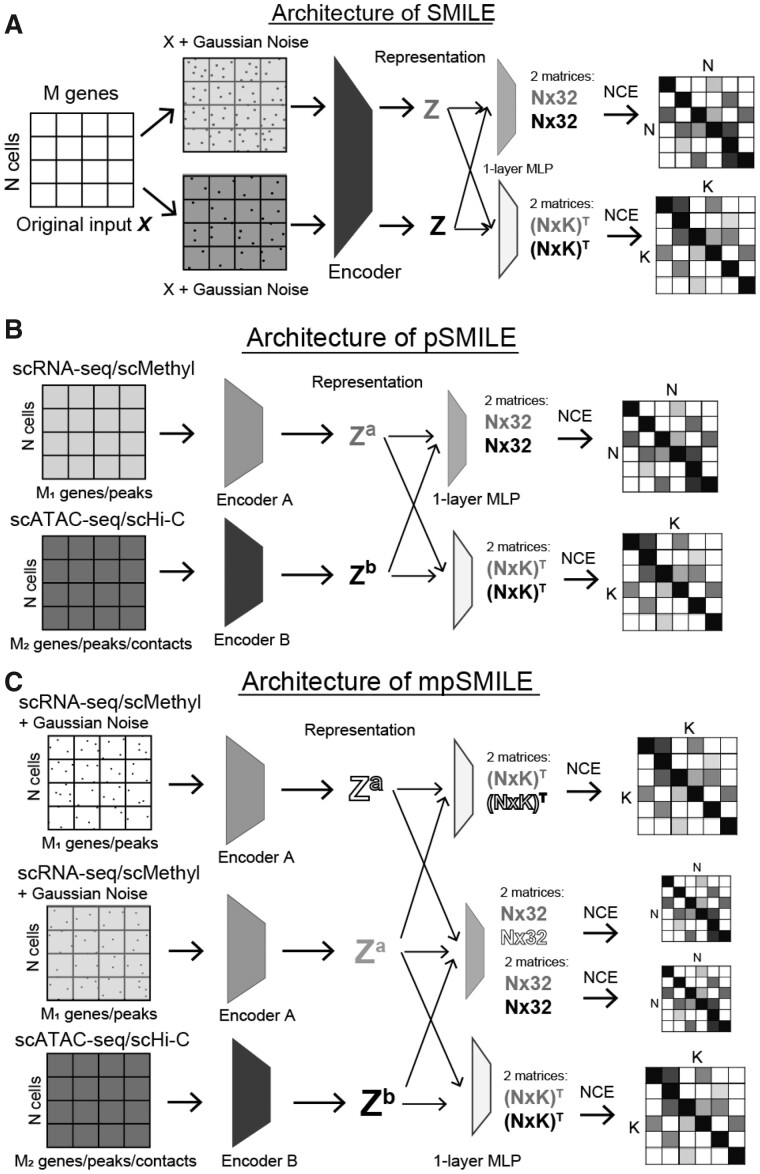

We propose three different variants of SMILE models to serve different purposes in single-cell data integration (Fig. 1). All three variants have encoders as the main components for feature extraction. In the first variant, which we term simply SMILE, there is only one encoder. This encoder consists of three fully connected layers that have 1000, 512 and 128 nodes, respectively. Each fully connected layer is coupled with a BatchNorm layer to normalize the output, which is then activated by a ReLU function. Different from SMILE, pSMILE and mpSMILE have another encoder that has the same structure as the one in SMILE but takes an input with different dimensions. The use of two independent encoders (Encoder A and B) in pSMILE and mpSMILE allows handling inputs from two modalities with different features, e.g. RNA-seq and ATAC-seq. Therefore, pSMILE and mpSMILE do not require extra feature engineering to match features for inputs from two different data sources. Modified from pSMILE, mpSMILE has a duplicated Encoder A, which shares the same weights. Using duplicated encoders takes advantage of the discriminative power of RNA-seq or Methyl data to learn a more discriminative representation. Our own results below and several other integration methods show that giving more weight to RNA-seq data can assist in learning discriminative representations (Jain et al., 2021; Lin et al., 2021; Peng et al., 2021; Stuart et al., 2019). We stack two independent fully connected layers onto the encoder(s), which further reduce the output from the encoder(s) to a 32-dimension vector with ReLU activation and K pseudo cell-type probabilities with SoftMax activation. In this study, we set K to 25 for all datasets, based on the observation that most single-cell data would contain no more than 25 major cell types unless it is an atlas-scale dataset.
Meanwhile, though SMILE is fully unsupervised, it can be easily turned into a semisupervised method with no extra modification of the model architecture, because of the incorporation of K pseudo cell-type probabilities. When cell-type labels are available for a proportion of data, K can be set as the number of known cell types and the user can add a classification loss to reframe SMILE into semisupervised learning.
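To make the layer sizes described above concrete, the following is a minimal NumPy sketch of a forward pass through a single-encoder model with the two output heads. It is an illustration only, not the authors' implementation: the function names, the weight initialisation, the simplified BatchNorm (no learned scale or shift) and the 2000-feature input are all assumptions made for this example, and no training is performed.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_linear(n_in, n_out):
    # Small random weights and zero bias; the initialisation scheme is an assumption.
    return rng.normal(0.0, 0.01, size=(n_in, n_out)), np.zeros(n_out)

def batchnorm(h, eps=1e-5):
    # Per-feature standardisation over the batch (simplified: no learned scale/shift).
    return (h - h.mean(axis=0)) / np.sqrt(h.var(axis=0) + eps)

def relu(h):
    return np.maximum(h, 0.0)

def softmax(h):
    e = np.exp(h - h.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

def build_smile(n_features, k_pseudo=25):
    # Encoder: three fully connected layers with 1000, 512 and 128 nodes.
    sizes = [n_features, 1000, 512, 128]
    return {
        "encoder": [init_linear(a, b) for a, b in zip(sizes[:-1], sizes[1:])],
        "head_n": init_linear(128, 32),        # 32-dimension output (ReLU)
        "head_k": init_linear(128, k_pseudo),  # K pseudo cell-type probabilities (SoftMax)
    }

def forward(params, x):
    h = x
    for w, b in params["encoder"]:
        h = relu(batchnorm(h @ w + b))  # linear -> BatchNorm -> ReLU
    z = h                               # 128-dimension representation
    w_n, b_n = params["head_n"]
    w_k, b_k = params["head_k"]
    return z, relu(z @ w_n + b_n), softmax(z @ w_k + b_k)

# Hypothetical input: 64 cells x 2000 features.
params = build_smile(n_features=2000)
z, out_n, out_k = forward(params, rng.normal(size=(64, 2000)))
```

In a two-encoder variant, a second encoder built with a different `n_features` would feed the same two heads; that wiring is omitted here for brevity.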

Fig. 1.

Architecture of three SMILE variants. (A) Architecture of SMILE. X is a gene expression matrix (cells = rows, genes = columns). Random Gaussian noise is added to differentiate the input X into two Xs. The Encoder consists of two fully connected layers that project X into 128-dimension representation z. Two independent fully connected layers (grey and white) are stacked onto the encoder to further reduce z into 32-dimension output and K pseudo cell-type probabilities, respectively. Computing NCE on these outputs generates two matrices: N × N (N = cells) and K × K. Gradient descent guides the model to increase values on the diagonal of the two matrices while decreasing values off the diagonal. (B) Architecture of pSMILE. scRNA-seq/scMethyl is forwarded through Encoder A to produce representation za, and scATAC-seq/scHi-C is forwarded through Encoder B to produce representation zb. 1-layer MLPs are the same as those in SMILE. (C) Architecture of mpSMILE. mpSMILE uses Encoder A and Encoder B for different data types like pSMILE, but also has duplicated Encoder As, and cells in scRNA-seq/scMethyl are duplicated by adding Gaussian noise to create self-pairs, as in SMILE. Two 1-layer MLPs in mpSMILE are the same as those in SMILE.

Next, we provide a detailed explanation of the architecture of SMILE (Fig. 1A). The main component is a multilayer perceptron (MLP) used as an encoder that projects cells from the original feature space X to representation z. To achieve this goal, SMILE relies on maximizing mutual information between X and z. Mutual information measures the dependency of z on X. If we maximize the dependency, we can end up using low-dimension z to represent the high-dimension X. Contrastive learning is one approach to maximizing mutual information, and it usually requires pairing one sample with a positive or negative sample for representation learning (Amid and Warmuth, 2019). Then, the goal is to maximize similarity between the positive pair and dissimilarity between the negative pair in the representation z. Due to the lack of labels, pairing samples is a challenging task. However, treating a sample itself as its positive sample and any other cells as negative samples can be a shortcut for reframing the data into pairs, and Chen et al. (2020) demonstrated that such a simple framework can effectively learn visual representations for images. In single-cell transcriptome data, we adopt the same framework to pair each cell to itself. To prevent each pair from being completely the same, we add Gaussian noise to expression values of each cell. Then, we maximize mutual information by forcing each cell to be like its noise-added pair and to be distinct from all other cells. To implement this in the neural network, we used noise-contrastive estimation (NCE) as the core loss function to guide the neural network to learn (see Section 2.3; Wu et al., 2018). We did not directly apply NCE on representation z, but further reduced z to a 32-dimension output and K pseudo cell-type probabilities, by stacking two independent one-layer MLPs onto the encoder.
A one-layer MLP generating a 32-dimension vector will produce rectified linear unit (ReLU) activated output, and the other will produce probabilities of pseudo cell types with SoftMax activation. Finally, NCE was applied on the 32-dimension output and pseudo probabilities, independently. These two one-layer MLPs produce two independent outputs which both contribute to the representation learning of the encoder (Li et al., 2020). Once trained, the encoder serves as a feature extractor that projects data from the original space X to a low-dimension representation z.

To apply SMILE to joint-profiling data, we modified it into new architectures, pSMILE (Fig. 1B) and mpSMILE (Fig. 1C). These new architectures contain two separate encoders (Encoder A and Encoder B). Encoder A projects RNA-seq or methylation data into representation za, while Encoder B handles projection of ATAC-seq or Hi-C into representation zb. We aim to learn za and zb that will be confined in the shared latent space, so we also apply the same one-layer MLPs to each, which further reduce them into 32-dimension output and probabilities of K pseudo cell types. This is the same as we did in the basic SMILE model. Using the RNA/ATAC- or Methyl/Hi-C-joint data to train p/mpSMILE is the same as using the self-paired data, except that we introduced two separate encoders to handle inputs from two modalities. Different from pSMILE, mpSMILE has two duplicated Encoder As that share the same weights. In mpSMILE, besides pairing cells from RNA-seq/Methyl with their corresponding cells from ATAC-seq/Hi-C, we also self-pair cells in RNA-seq/Methyl data as we did in SMILE, by introducing Gaussian noise. As for the difference between pSMILE and mpSMILE, we provide experimental outcomes in Section 3.3.

2.2 Cell pairing

SMILE takes paired cells as inputs. When using SMILE for integration of multisource single-cell transcriptome data, we create self-pairs for each cell. To prevent the two cells in each pair from being completely the same, we add Gaussian noise to the raw observation X to differentiate them. Other noise-addition approaches should be applicable here. For example, randomly masking some expression values has been shown to be an alternative way to learn discriminative representations for single-cell RNA-seq data (Ciortan and Defrance, 2021). Here, we choose Gaussian noise, and the learning process will maximize the true similarities between the pair while minimizing the effect of Gaussian noise. In all cases, the Gaussian noise mean is set to zero to avoid systematically shifting the mean of the original data. The choice of Gaussian noise variance is made after systematic parameter evaluation, described below (Section 2.4). When using pSMILE and mpSMILE for integration of multimodal single-cell data, we pair a cell from RNA-seq/Methyl with its counterpart from ATAC-seq/Hi-C. Since joint profiling quantifies two aspects of one single cell, we know that one cell in RNA-seq/Methyl has a corresponding cell in ATAC-seq/Hi-C. When RNA-seq and ATAC-seq come from two separate studies, the user may need to pair cells of the same cell type manually. Here, we suggest using the ‘FindTransferAnchors’ function in Seurat to generate cell pairs. Then, the user can use these paired cells to train p/mpSMILE.
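The self-pairing step described above can be sketched in a few lines of NumPy. This is a hedged illustration rather than the authors' code: the function name `make_self_pairs` is hypothetical, and adding independent noise to both copies (rather than to one copy only) is an assumption, since the text does not specify which is done.

```python
import numpy as np

def make_self_pairs(x, noise_var=1.0, seed=0):
    """Return two noisy copies of x (cells x features) that form positive pairs.

    Noise is zero-mean Gaussian, so the mean of the data is not shifted.
    Adding noise to both copies is an assumption of this sketch.
    """
    rng = np.random.default_rng(seed)
    std = np.sqrt(noise_var)  # the text specifies a variance, so convert to std
    view_a = x + rng.normal(0.0, std, size=x.shape)
    view_b = x + rng.normal(0.0, std, size=x.shape)
    return view_a, view_b

# Hypothetical scaled expression matrix: 200 cells x 50 genes.
x = np.zeros((200, 50))
view_a, view_b = make_self_pairs(x, noise_var=1.0)
```

Row i of `view_a` and row i of `view_b` form the positive pair for cell i; every other row in the batch acts as a negative sample.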

2.3 Loss function

2.3.1 Noise-contrastive estimation

The core concept of making cells resemble themselves resides in the use of NCE as the main loss function. In training, we divide a whole dataset into multiple batches, and each batch has N cells. For multisource single-cell transcriptome data, we differentiate each cell into two by adding random Gaussian noise. Therefore, there are 2N cells in one batch. In each batch, each cell has itself as a positive sample and the remaining 2(N − 1) cells as its negative samples. For joint-profiling data and in an N-pair batch, one of N cells in RNA-seq/Methyl has its corresponding cell among N in ATAC-seq/Hi-C as the positive sample and the remaining 2(N − 1) cells summed from both RNA-seq/Methyl and ATAC-seq/Hi-C as negative samples. Let $\mathrm{sim}(u, v) = u^{\top} v / (\lVert u \rVert \lVert v \rVert)$ denote the dot product between normalized $u$ and $v$. Then, NCE for a positive pair of examples $(i, j)$ can be defined as

$$\ell_{i,j} = -\log \frac{\exp\left(\mathrm{sim}(h_i, h_j)/\tau\right)}{\sum_{k=1}^{2N} \mathbb{1}_{[k \neq i]} \exp\left(\mathrm{sim}(h_i, h_k)/\tau\right)} \tag{1}$$

where $h_i$ is the output for cell $i$ on which NCE is computed, $\mathbb{1}_{[k \neq i]}$ equals 1 when $k \neq i$ and 0 otherwise, and $\tau$ denotes a temperature parameter.
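As a worked illustration of the loss described above, here is a minimal NumPy sketch of NCE computed over a batch of N positive pairs (2N outputs in total). It assumes the standard form in which each output is contrasted against all other 2N − 1 outputs in the batch; the function name `nce_loss` and the averaging over both pairing directions are choices made for this sketch, not details confirmed by the text.

```python
import numpy as np

def nce_loss(out_a, out_b, tau=0.1):
    """NCE over N positive pairs; row i of out_a pairs with row i of out_b."""
    n = out_a.shape[0]
    h = np.concatenate([out_a, out_b], axis=0)                    # 2N x d
    h = h / np.maximum(np.linalg.norm(h, axis=1, keepdims=True), 1e-12)
    sim = (h @ h.T) / tau                                          # scaled cosine similarity
    np.fill_diagonal(sim, -np.inf)                                 # drop the k == i term
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])   # index of each positive
    # Numerically stable log of the denominator (log-sum-exp over k != i).
    row_max = sim.max(axis=1, keepdims=True)
    log_denom = row_max[:, 0] + np.log(np.exp(sim - row_max).sum(axis=1))
    log_num = sim[np.arange(2 * n), pos]
    return float(np.mean(log_denom - log_num))

# Toy check: correctly aligned pairs should give a lower loss than misaligned ones.
rng = np.random.default_rng(0)
a = rng.normal(size=(32, 16))
loss_aligned = nce_loss(a, a + 0.01 * rng.normal(size=a.shape))
loss_misaligned = nce_loss(a, np.roll(a, 1, axis=0))
```

Minimising this quantity raises the similarity of each positive pair relative to all other cells in the batch, which corresponds to increasing the diagonal and decreasing the off-diagonal entries of the pairwise matrix.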

2.3.2 Mean squared error

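We use mean squared error (MSE) as an additional loss function in p/mpSMILE to push the representation of ATAC-seq/Hi-C to be closer to the representation of RNA-seq/Methyl in the latent space:

$$\mathrm{MSE} = \frac{1}{N} \sum_{i=1}^{N} \left\lVert z_a^{(i)} - z_b^{(i)} \right\rVert^{2} \tag{2}$$

where $z_a^{(i)}$ and $z_b^{(i)}$ are the representations of cell $i$ through Encoders A and B.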

Of note, MSE alone is unable to drive the model to learn a discriminative representation (Supplementary Figs S9 and S13 and Section 3).

2.4 Data integration through SMILE/pSMILE/mpSMILE

We used ‘StandardScaler’ in sklearn to scale all input data before training SMILE, with the exception of the dimension-reduced Hi-C data, which was already scaled. We trained SMILE with a batch size of 512 for all multisource single-cell transcriptome data in this study, and the SMILE model converged within five epochs in all cases, as indicated by the total loss. In the benchmark integration of four joint-profiling RNA-seq and ATAC-seq datasets, we used all cell pairs for training, and we trained p/mpSMILE for 20 epochs with a batch size of 512. For sn-m3C-seq data, we trained p/mpSMILE on the whole dataset for 10 epochs with a batch size of 512. For all experiments in this study, we used a learning rate of 0.01 with a weight decay of 0.0005. There are also three key parameters in all three SMILE variants: temperature τN for the 32-dimension output, temperature τK for the K pseudo cell-type probabilities and the variance of the Gaussian noise. For multisource single-cell transcriptome data, we performed a systematic parameter comparison (Supplementary Fig. S1). Based on the comparison results, we fixed τN at 0.1, τK at 1 and the Gaussian variance at 1 for all integration by SMILE. For multimodal single-cell data integration by p/mpSMILE, we also performed a systematic parameter comparison (Supplementary Fig. S2). As in the basic SMILE model, a Gaussian variance of 1 is an optimal setting across different datasets. In contrast, we found that the best choices of τN and τK are small, ranging from 0.05 to 0.25. Therefore, to integrate multimodal single-cell data, we fixed τN at 0.05 and τK at 0.15. A study published after our initial preprint provides detailed support that a fundamentally similar approach can learn discriminative representations for single-cell data (Ciortan and Defrance, 2021). However, that study did not extend the method to data integration, which is our focus here.

2.5 Evaluation of data integration

To evaluate batch-effect correction, we use the adjusted Rand index (ARI) and silhouette score as the evaluation metrics. For ARI, we perform Leiden clustering to recluster cells using the batch-removed representation. Then, we compare the new clustering labels with the cell-type labels reported by the authors. For fair comparison with LIGER, Harmony and Seurat, we use multiple resolutions to get the clustering labels that have the highest ARI against the author-reported cell-type labels, and report that ARI value as the performance of LIGER, Harmony and Seurat on that dataset. For silhouette score, we defined batch silhouette and cell-type silhouette. Batch silhouette measures how well different batches align. We use batch information as labels to calculate a typical silhouette score S and then report its absolute value abs(S) as batch silhouette. Cell-type silhouette indicates how distinguishable the representations of cells from one cell type are from those of other cell types. Here, we use author-reported cell-type labels to calculate the typical silhouette score S and then transform it through (1 + S)/2. Therefore, both batch and cell-type silhouette scores will range from 0 to 1. Batch silhouette scores closer to 0 indicate good batch correction, while cell-type silhouette scores closer to 1 show good cell-type separation.
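The two silhouette transforms described above can be illustrated with a small self-contained NumPy sketch. The helper names are hypothetical, and the mean silhouette is computed here from scratch with Euclidean distances as an assumption of this example; in practice an existing library implementation of the silhouette score would serve the same purpose.

```python
import numpy as np

def mean_silhouette(x, labels):
    """Mean silhouette score S over all points, using Euclidean distance."""
    labels = np.asarray(labels)
    dist = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=2)
    classes = np.unique(labels)
    scores = []
    for i in range(len(x)):
        same = labels == labels[i]
        n_same = same.sum()
        if n_same == 1:
            scores.append(0.0)  # convention for singleton clusters
            continue
        a = dist[i, same].sum() / (n_same - 1)  # mean distance within own label
        b = min(dist[i, labels == c].mean() for c in classes if c != labels[i])
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return float(np.mean(scores))

def batch_silhouette(x, batch_labels):
    # abs(S): values near 0 indicate well-mixed batches.
    return abs(mean_silhouette(x, batch_labels))

def celltype_silhouette(x, celltype_labels):
    # (1 + S) / 2: values near 1 indicate well-separated cell types.
    return (1.0 + mean_silhouette(x, celltype_labels)) / 2.0

# Toy data: two tight clusters (cell types), each containing both batches.
rng = np.random.default_rng(0)
x = np.concatenate([rng.normal(0, 0.1, (20, 2)), rng.normal(5, 0.1, (20, 2))])
cell_type = np.array([0] * 20 + [1] * 20)
batch = np.array([0, 1] * 20)
bs = batch_silhouette(x, batch)
cs = celltype_silhouette(x, cell_type)
```

On this toy data the cell types are cleanly separated and the batches are interleaved, so the cell-type score is close to 1 and the batch score is close to 0.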

To evaluate integration of multimodal single-cell data, we also use the same silhouette scores as above, but we renamed batch silhouette as modal silhouette because we use modality information for calculation. Again, modal silhouette closer to 0 represents better mixing of RNA-seq and ATAC-seq data, while cell-type silhouette closer to 1 suggests cells are separated by their cell type no matter which modality they belong to. Meanwhile, we know each cell pair because of joint profiling. So, we also measure Euclidean distance of paired cells in the 2D UMAP space, before and after training, to show if paired cells become closer in the integrated representation.

2.6 Evaluation of label transferring

Once we have an integrated representation for multiple datasets, we can transfer labels from a labeled dataset to unlabeled datasets. To evaluate label transferring, we use the macro-F1 score. In integration of multisource single-cell transcriptome data, we select one source as the training set to train a Support Vector Machine (SVM) classifier, and measure its accuracy on the other sources through the macro-F1 score. In joint-profiling data, we reason that a good integration should allow mutual label transferring, either from RNA-seq/Methyl to ATAC-seq/Hi-C or back from ATAC-seq/Hi-C to RNA-seq/Methyl. So, we report a macro-F1 score for both transferring directions. We use the author-reported cell-type labels as ground truth to train an SVM classifier on RNA-seq or Methyl and test it on ATAC-seq or Hi-C, or vice versa.
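For readers unfamiliar with the metric, the macro-F1 score used above is the unweighted mean of the per-class F1 scores, so rare cell types count as much as abundant ones. The following pure-Python sketch shows the calculation only; the function name and the toy labels are made up for illustration, and the SVM training step is not shown.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over all classes seen in either list."""
    classes = sorted(set(y_true) | set(y_pred))
    f1_scores = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)

# Hypothetical transferred labels for four cells.
truth = ["B cell", "B cell", "T cell", "T cell"]
predicted = ["B cell", "B cell", "T cell", "B cell"]
score = macro_f1(truth, predicted)  # B cell F1 = 0.8, T cell F1 = 2/3
```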

2.7 RNA-seq, ATAC-seq, Methyl, Hi-C and histone mark processing

For RNA-seq data, raw gene expression count data were normalized through a ‘LogNormalize’ method, which normalizes the raw count for each cell by its total count, then multiplies this by a scale factor (10 000 in our analysis), and log-transforms the result. Then, we use ‘highly_variable_genes’ function in Scanpy to find most variable genes as input for SMILE (Wolf et al., 2018). For ATAC-seq data at peak level, we perform TF-IDF transformation and select top 90-percentile peaks as the input for SMILE. For ATAC-seq data at gene level, we first use ‘CreateGeneActivityMatrix’ function in Seurat to sum up all peaks that fall within a gene body and its 2000 bp upstream, and we use this new quantification to represent gene activity (Stuart et al., 2019). Then, we apply LogNormalize to gene activity matrix and find most variable genes as input for SMILE. For CG methylation data, we first calculate CG methylation level for all nonoverlapping autosomal 100 kb bins across entire human genome. Then, we apply LogNormalize to the binned CG methylation data. For Hi-C data, we use scHiCluster with default setting to generate an imputed Hi-C matrix at 1-MB resolution for each cell (Zhou et al., 2019). Due to the size of Hi-C matrix, we are unable to concatenate all chromosomes to get a genome-wide Hi-C matrix. Therefore, we use a dimension-reduced Hi-C data of whole genome, which is implemented in scHiCluster. For histone mark data, we perform TF-IDF to transform the raw peak data and select top 95-percentile peaks as the input for SMILE. All data used in this study are publicly available with GEO accession or hosted by institution data portals (Supplementary Table S1).
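The ‘LogNormalize’ step described above can be written out as a short NumPy sketch. The function name `log_normalize` is hypothetical, and the use of log(1 + x) for the log transform is an assumption made here so that zero counts remain zero.

```python
import numpy as np

def log_normalize(counts, scale_factor=10_000):
    """Per-cell total-count normalisation followed by a log transform.

    counts: cells x genes matrix of raw counts.
    """
    counts = np.asarray(counts, dtype=float)
    totals = counts.sum(axis=1, keepdims=True)
    totals = np.where(totals == 0, 1.0, totals)  # avoid dividing by zero for empty cells
    # log1p is an assumption of this sketch; it keeps zero counts at zero.
    return np.log1p(counts / totals * scale_factor)

# Hypothetical raw counts for two cells and three genes.
raw = np.array([[1, 1, 2],
                [0, 0, 5]])
normalized = log_normalize(raw)
```

After this step, each cell's values are comparable regardless of sequencing depth, which is why it precedes the selection of highly variable genes.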

2.8 Downstream analysis

We performed Wilcoxon tests to identify key differential genes/peaks and their ranking in mouse skin RNA-seq, ATAC-seq gene activity and ATAC-seq peak data, using author-reported cell-type labels. We only selected the top 15 genes in RNA-seq, top 150 genes in ATAC-seq gene activity and top 3000 peaks in ATAC-seq peak data of each cluster as key differential features. To test which features contribute to the representation learned by SMILE, key genes/peaks for each cluster were sequentially assigned values of zero and then the dataset was fed back through the encoder to determine the effect on the representation. We also suppressed activity values of each gene to zero and forwarded the data with one gene suppressed through the encoder. Then, we measured how much disruption each gene causes to the integrated representation, and we selected the top 5% of genes that cause the most disruption in one particular cell type. Finally, we forwarded data in which these top 5% screened genes were suppressed to 0 through the encoder again to measure the collective disruption on the integrated representation. To screen candidate peaks, we first assigned all peaks into 50 topics via non-negative matrix factorization, and used the same approach to identify which topic contributes most to a particular cell type. Our screening approach is also conceptually similar to the motif screening used to probe a deep learning representation in Fudenberg et al. (2020).
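The feature-suppression screen described above can be sketched as follows. This is a simplified illustration under stated assumptions: `encode` stands for any trained encoder mapping cells to representations, the disruption measure (mean Euclidean shift of the representation) is a choice made for this example because the text does not specify the exact metric, and all names are hypothetical.

```python
import numpy as np

def disruption_per_feature(encode, x, feature_idx):
    """Zero out one feature at a time and measure the shift in the representation."""
    z_ref = encode(x)
    scores = {}
    for j in feature_idx:
        x_sup = x.copy()
        x_sup[:, j] = 0.0  # suppress feature j in every cell
        # Mean Euclidean displacement per cell (assumed disruption metric).
        scores[j] = float(np.linalg.norm(encode(x_sup) - z_ref, axis=1).mean())
    return scores

def top_fraction(scores, frac=0.05):
    """Return the features with the largest disruption (at least one)."""
    n_top = max(1, int(round(len(scores) * frac)))
    return sorted(scores, key=scores.get, reverse=True)[:n_top]

# Toy linear "encoder" in which feature 3 dominates the representation.
w = np.zeros((5, 2))
w[3, 0] = 10.0
w[1, 1] = 1.0
encode = lambda x: x @ w
x = np.ones((10, 5))
scores = disruption_per_feature(encode, x, range(5))
top = top_fraction(scores, frac=0.2)
```

To restrict the screen to one cell type, `x` would be subset to the cells carrying that label before calling `disruption_per_feature`.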

3 Results

3.1 SMILE accommodates many single-cell data types

Before we demonstrate applications of SMILE in data integration, we first show that SMILE can handle most types of single-cell omics data separately. We tested SMILE on RNA-seq data from human pancreas, ATAC-seq data from Mouse ATAC Atlas and Hi-C data from mouse embryo cells (Baron et al., 2016; Collombet et al., 2020; Cusanovich et al., 2018). SMILE can effectively learn a discriminative representation for single-cell human pancreas RNA-seq (Supplementary Fig. S3A). Meanwhile, SMILE distinguishes tissue types within the mouse ATAC Atlas, and it also recovers major cell types in the brain tissue (Supplementary Fig. S3A). In the task of clustering single-cell Hi-C data, SMILE has a slight advantage of distinguishing different cell stages compared to PCA (the baseline) (Supplementary Fig. S3B). However, for single source data, SMILE does not show a substantial difference from a standard PCA approach. Since PCA finds most variations and it is unlikely that unwanted variations are confounded within single source data, there would be no obvious advantage of using SMILE to find biological variations. Instead, we turn to the primary application of SMILE: single-cell data integration.

3.2 SMILE eliminates batch effects in single-cell transcriptome data from multiple sources

It is now common to find multiple single-cell transcriptomics datasets for the same tissue or biological system generated by different techniques or research groups. A standard clustering analysis often fails to identify cell types, but instead only detects differences between experimental batches. In contrast, SMILE directly learns a representation that is not confounded by batch effect and can be combined with common clustering methods for cell-type identification. We tested batch-effect correction in human pancreas data, human peripheral blood mononuclear cell (PBMC) data and human heart data (Baron et al., 2016; Grün et al., 2016; Lawlor et al., 2017; Litviňuková et al., 2020; Muraro et al., 2016; Segerstolpe et al., 2016; Tucker et al., 2020; Zheng et al., 2017). To benchmark the performance of SMILE in removing batch effects, we compared SMILE with LIGER, Harmony and Seurat. These three methods have been reported as the three top methods in a benchmarking study of batch-effect correction (Butler et al., 2018; Korsunsky et al., 2019; Liu et al., 2020; Tran et al., 2020). We found that SMILE has comparable performance to Harmony across these three systems in terms of batch and cell-type silhouette scores (Fig. 2A). Meanwhile, the integrated representations learned by SMILE and Harmony are friendly for de novo clustering and label transferring via classification, as measured through ARI and macro-F1 scores (Fig. 2A). Both methods removed batch effects and recovered cell types identified in original reports (Fig. 2B–D). In our benchmark study, LIGER is ranked as the 4th place in all four metrics. We also noticed LIGER returns the worst representation in human PBMC data (Fig. 2C). On the other hand, Seurat ranks as the best method overall. However, a substantial disadvantage of Seurat is its inefficient computation design for large datasets. 
We needed 128 GB of memory to successfully run Seurat on the human heart data, while the other three tools ran with 64 GB of memory and significantly less computation time (Supplementary Fig. S4).

Fig. 2.

Integration of multisource single-cell transcriptome data using SMILE. (A) Evaluation of batch-effects correction. Batch and cell-type silhouette scores measure how well different batches are mixed while distinct cell types are separated apart. Batch silhouette of 0 indicates good mixing of different batches, while cell-type silhouette closer to 1 indicates better separation of distinct cell types. ARI (1 = optimal) measures the match between assigned clustering labels and original study cell-type labels. To measure label transferring, SVM classifiers are trained with single source data, and then macro-F1s are calculated by assigning cell types to the rest of the data sources using that classifier. (B–D) UMAP visualization of integrated representations of (B) human pancreas data, (C) human PBMC data and (D) human heart data, using raw data, or representations learned by LIGER, Harmony, Seurat and SMILE. Cells in upper rows colored by putative cell types reported in original studies; and cells in lower rows colored according to their sources or batch ID

One advantage of SMILE in contrast to LIGER, Harmony and Seurat is that SMILE can perform batch-effect correction without needing explicit batch information to be provided. This is useful when batch information is complicated by other differences, as is the case for data collected from different tissues: these experiments are different both biologically and technically. However, in most cases, the mixed information can also serve as a batch label to guide tools like LIGER, Harmony and Seurat for batch correction. We next tested SMILE on such a dataset: single-cell transcriptome data from six different tissues in Human Cell Landscape (Han et al., 2020). Without dealing with batch effects and sample differences, a standard analysis pipeline recovers tissue differences as the major source of variation, while endothelial cells, which we focus on as an example cell type, are spread out rather than clustered within each tissue (Supplementary Fig. S5). However, by applying SMILE to these tissues, we show that SMILE can find a common representation for cell types (e.g. endothelial cell, B cell and T cell) that are conserved across these tissues. For comparison, we also used LIGER, Harmony and Seurat for this task. Like SMILE, Harmony preserves endothelial cells while mixing cells from different tissues. On the other hand, LIGER, again, shows poor representation learning, while Seurat maintains some tissue differences in the integrated representation.

3.3 Joint clustering through mpSMILE improves upon previous methods and reveals key biological variables

Moving from integration of multisource single-cell transcriptome data, we next tested the performance of pSMILE on a simulated joint single-cell transcriptome dataset and two joint-profiling datasets generated by SNARE-seq and sci-CAR to demonstrate the applicability of SMILE in multimodal single-cell data integration (Cao et al., 2018; Chen et al., 2019). The results with simulated joint data, produced by splitting a single scRNA-seq dataset (Zeisel et al., 2015) into two subsets with separate genes, indicate that pSMILE can integrate data from two entirely different feature spaces (Supplementary Fig. S6). We also found that the discriminative power of the representation is compromised when pSMILE is used to integrate RNA-seq and ATAC-seq data (Supplementary Fig. S7). It is often observed that RNA-seq data have greater cell-type discriminative power than ATAC-seq data, and other integration methods give more weight to RNA-seq in representation learning (Jain et al., 2021; Lin et al., 2021; Peng et al., 2021; Stuart et al., 2019). Therefore, we introduced mpSMILE to take advantage of the discriminative power of RNA-seq. Indeed, mpSMILE revealed more cell types in the mixed cell line and mouse kidney data (Supplementary Fig. S8). Meanwhile, we benchmarked three loss functions in mouse skin data, and we found that NCE is the key to integration while MSE can further decrease the modality difference (Supplementary Fig. S9).
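To make the respective roles of the NCE and MSE terms concrete, the sketch below computes a generic InfoNCE-style contrastive loss and an MSE loss on paired embeddings. This is an assumption-laden illustration: the temperature, the cosine similarity and the function names are our choices, and the exact loss used by SMILE is the one defined in the Methods, which may differ in form.

```python
import numpy as np

def l2_normalize(z):
    """Scale each row to unit length so dot products are cosine similarities."""
    return z / np.linalg.norm(z, axis=1, keepdims=True)

def info_nce(za, zb, temperature=0.1):
    """Contrastive loss: row i of za should match row i of zb and no other row."""
    logits = l2_normalize(za) @ l2_normalize(zb).T / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Positives sit on the diagonal; all other cells act as negatives.
    return float(-np.mean(np.diag(log_prob)))

def mse(za, zb):
    """Mean squared difference between the paired representations."""
    return float(np.mean((za - zb) ** 2))

# Toy paired embeddings (assumption): modality B is a noisy copy of modality A.
rng = np.random.default_rng(0)
za = rng.normal(size=(64, 8))
zb = za + 0.05 * rng.normal(size=(64, 8))
zb_mismatched = np.roll(zb, 1, axis=0)  # break every pair

paired_loss = info_nce(za, zb) + mse(za, zb)
mismatched_loss = info_nce(za, zb_mismatched) + mse(za, zb_mismatched)
```

The contrastive term rewards each cell for being closer to its own partner than to other cells, which preserves cell-type structure, whereas the MSE term only pulls the two modalities together.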

In this part, we benchmarked methods from all three integration categories (horizontal, vertical and diagonal). We selected UnionCom to represent the diagonal approach (Cao et al., 2020), LIGER and Harmony for the horizontal approach (Korsunsky et al., 2019; Liu et al., 2020) and MCIA and Seurat for the vertical approach (Meng et al., 2014; Stuart et al., 2019). Other vertical approaches are emerging, such as a cross-modal AE model (Yang et al., 2021) and BABEL (Wu et al., 2021). However, because Seurat is a very commonly used integration tool and these other methods have different primary goals from SMILE (diagonal integration of imaging and sequencing data and prediction of one datatype from another), we focus here on the comparison of mpSMILE with MCIA and Seurat in the vertical category. We note a key difference between Seurat and both MCIA and SMILE. Seurat implements canonical correlation analysis (CCA) to project both RNA-seq and ATAC-seq data into the same low-dimensional space (Stuart et al., 2019). The use of CCA requires the two datasets to share the same features, so Seurat cannot work with datasets where the two data types involve entirely different features (e.g. genes versus genomic bins). To make the data work for all methods, we requantified the ATAC-seq data into gene activities, and we further included mouse brain and mouse skin datasets generated by SHARE-seq (Ma et al., 2020). Since cell pairs are known in these datasets, we used all pairs to train all three vertical methods. We ran all methods with default settings, and we visualized their integration results through UMAP with the same settings. We found that all vertical methods outperform the two horizontal approaches, LIGER and Harmony. This suggests that a prominent, unexplained discrepancy remains between the two modalities, even after the two modalities have been processed to have shared features. In our hands, UnionCom, the diagonal approach, failed the integration tasks for all four datasets. 
Currently, there does not appear to exist a truly successful diagonal method to integrate complex multimodal single-cell data without knowing either feature or cell anchors. All three vertical approaches, MCIA, Seurat and mpSMILE were able to project ATAC-seq and RNA-seq data into the shared space while discriminating cell types for the mixed cell-lines data, but MCIA shows less power of cell-type discrimination than the other two (Fig. 3A). Of note, Seurat showed poor performance on the mouse kidney data by sci-CAR and the mouse brain data by SHARE-seq, failing to project the two data sources into the shared space and distinguish cell types (Fig. 3A and C). For the mouse skin data by SHARE-seq, MCIA, Seurat and mpSMILE have comparable performance (Fig. 3D). In summary, we conclude that horizontal methods may not necessarily address modality discrepancy, even though the feature discrepancy is solved via feature engineering. This emphasizes the benefit of joint-profiling data to create an integrated space through vertical methods onto which other single source data can be projected for comparison and cell-type annotation. Compared to Seurat, MCIA and mpSMILE show a higher performance with mutual label transferring. Surprisingly, Seurat has good label transferring from RNA-seq to ATAC-seq, but this quality of label transfer is not reversible, as shown with lower macro-F1 scores from ATAC-seq to RNA-seq. Comparing MCIA and mpSMILE, we observed that SMILE has better multimodal integration in terms of modal and cell-type silhouette scores (Fig. 3A). In terms of using pSMILE or mpSMILE, we would always recommend mpSMILE for discriminative representation learning. However, pSMILE can be chosen to ensure an equal contribution from both modalities.
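The macro-F1 score used for mutual label transferring is the unweighted mean of the per-class F1 scores, so rare cell types count as much as abundant ones. A minimal sketch follows; the label arrays are hypothetical stand-ins for the output of a classifier trained on one modality and applied to the other.

```python
import numpy as np

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over all labels seen in either array."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    scores = []
    for c in np.union1d(y_true, y_pred):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        scores.append(2 * tp / denom if denom else 0.0)
    return float(np.mean(scores))

# Hypothetical labels: author annotations of ATAC-seq cells, and labels
# transferred to the same cells from a classifier trained on RNA-seq (RtoA).
atac_true = ["B", "B", "T", "T", "NK", "NK"]
atac_pred = ["B", "B", "T", "T", "NK", "T"]

rtoa_score = macro_f1(atac_true, atac_pred)
```

Running the same function with the roles of the two modalities swapped gives the AtoR score, which is how an asymmetric label transfer such as the one observed for Seurat becomes visible.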

Fig. 3.

Integration of scRNA-seq and scATAC-seq through mpSMILE. (A) Evaluation of integration by modal silhouette, cell-type silhouette, macro-F1 (RtoA) and macro-F1 (AtoR). For macro-F1 and cell-type silhouette score, 1 indicates the best performance, and higher is better. RtoA represents label transferring from RNA-seq to ATAC-seq, and AtoR represents from ATAC-seq to RNA-seq. For modal silhouette score, 0 is the best, indicating that modalities align. (B–D) UMAP visualization of integrated representation by different approaches of (B) mixed cell lines, (C) mouse kidney, (D) mouse brain and (E) mouse skin data. Cells are colored by cell type in the upper panels and colored by data types in the lower panels. (E) Boxplot of Euclidean distances between paired cells. Salmon box: original data were forwarded through trained mpSMILE and Euclidean distances between cells in RNA-seq and their corresponding cells in ATAC-seq were measured in the integrated 2D PCA. Green box or blue box: either key differential genes or nonkey genes were suppressed to zeros, then the suppressed data were forwarded through trained mpSMILE, and Euclidean distances between cells in RNA-seq and their corresponding cells in ATAC-seq were measured in the integrated 2D PCA. Upper panel is suppression of key gene expression, and lower panel is suppression of key gene activity

To evaluate which biological factors drive the coembedding we observe, we set candidate genes from the mouse skin data to zero and passed the altered data through the mpSMILE encoder to test whether the coembedding would be disrupted. Indeed, when we removed key differential genes, clusters were greatly disrupted in the coembedding (Fig. 3E). Next, since this type of evaluation is rapid and does not require retraining, we asked whether a screening approach could identify gene or peak sets that contribute to the coembedding for each cell type. We focused on eight previously identified cell types (Ma et al., 2020). We suppressed the gene activity value of each gene to zero, one gene at a time, to test how this suppression affects the coembedding, and then selected the top 5% of genes that most disrupt the coembedding. The disruption caused by the top 5% of screened genes is larger than that caused by random genes but smaller than that caused by separately identified key differential genes (Supplementary Fig. S10). As with the screening of key gene activities, we also trained mpSMILE using ATAC-seq peak data and aimed to identify key peak sets. Because of the large number of peaks to be screened, we used nonnegative matrix factorization to assign all peaks to 50 topics. We then screened for the topics that contribute most to the coembedding, as we did for gene activity. Again, the screened peaks cause less disruption than the top differential peaks but more than random peaks (Supplementary Fig. S11). Users can apply this approach to evaluate the importance of any candidate set of genes or peaks in defining the integrated cell-type landscape.
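The one-feature-at-a-time suppression screen described above can be sketched as follows. The callables `encode_a` and `encode_b` are hypothetical stand-ins for trained encoders, and disruption is measured here directly as the increase in mean Euclidean distance between paired cells in the latent space, a simplification of the 2D PCA measurement reported in Fig. 3E.

```python
import numpy as np

def paired_distance(za, zb):
    """Mean Euclidean distance between each cell's two embeddings."""
    return float(np.mean(np.linalg.norm(za - zb, axis=1)))

def screen_features(encode_a, encode_b, xa, xb, top_frac=0.05):
    """Zero one feature of modality B at a time and rank features by disruption."""
    za = encode_a(xa)
    baseline = paired_distance(za, encode_b(xb))
    disruption = np.empty(xb.shape[1])
    for j in range(xb.shape[1]):
        x_sup = xb.copy()
        x_sup[:, j] = 0.0  # suppress feature j; the encoders are not retrained
        disruption[j] = paired_distance(za, encode_b(x_sup)) - baseline
    n_top = max(1, int(np.ceil(top_frac * xb.shape[1])))
    top = np.argsort(disruption)[::-1][:n_top]  # most disruptive first
    return top, disruption

# Toy setup (assumption): linear "encoders" in which feature 3 dominates.
rng = np.random.default_rng(0)
x = rng.uniform(1.0, 2.0, size=(50, 20))
W = np.full((20, 2), 0.01)
W[3] = [5.0, 5.0]

def encode(data):
    return data @ W

top, disruption = screen_features(encode, encode, x, x)
```

Because every candidate only requires a forward pass, the cost of the screen grows linearly with the number of features, which is why grouping peaks into topics helps when the feature space is very large.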

Though p/mpSMILE was designed to do joint clustering for joint-profiling data, it can be combined with pair-identification tools to achieve integration for nonjoint-profiling data. Seurat implements the ‘FindTransferAnchors’ function, which can identify quality pairs in bimodal datasets. Here, we combined Seurat and SMILE to achieve integration for nonjoint-profiling human hematopoiesis and mouse kidney data (Granja et al., 2019; Miao et al., 2021). Using the anchors identified by Seurat, SMILE achieved reasonable integration for both nonjoint-profiling datasets (Supplementary Fig. S12A). Since RNA-seq and ATAC-seq were annotated separately by the authors, we can fairly compare the performance of Seurat and SMILE, even though SMILE relies on Seurat for anchor identification. Consistent with our benchmarking in joint-profiling data, SMILE demonstrated better modality mixing in terms of modality silhouette. We found that SMILE compares favorably to Seurat in terms of cell-type silhouette and macro-F1 from RNA-seq to ATAC-seq. Surprisingly, SMILE significantly outperforms Seurat in label transferring from ATAC-seq to RNA-seq, indicating that SMILE learns an integration that better preserves mutual information between the two modalities (Supplementary Fig. S12B). Of note, once pairing is accomplished, SMILE allows the user to input mismatched features for the two modalities.

3.4 Application of p/mpSMILE in joint-profiling DNA methylation and chromosome structure data

We next evaluated the applicability of SMILE to the integration of joint-profiling DNA methylation and chromosome structure data of mESC and NMuMG cells, and the human prefrontal cortex (PFC) (Lee et al., 2019; Li et al., 2019). Unlike integration of RNA-seq and ATAC-seq, it is difficult to match DNA methylation features to chromosome structure features since chromosome contacts are represented in a two-dimensional space. Therefore, using CCA for integration of Methyl and Hi-C as in Seurat, or using any horizontal method requiring matched features, would be a challenging task. SMILE has the unique advantage of not requiring feature matching. We applied pSMILE in both mESC and NMuMG data and human PFC data. pSMILE can distinguish mESC and NMuMG cells in a joint Hi-C and methylation representation (Fig. 4A). As observed when integrating scRNA-seq and scATAC-seq data, we find that NCE loss is key to this integration, while MSE loss alone pushes two modalities closer without discriminating cell type differences (Supplementary Fig. S13). pSMILE only revealed five major cell types in human PFC (Fig. 4B), so we next applied mpSMILE, using methylation data in place of RNA-seq and Hi-C in place of ATAC-seq, since methylation data recovers more distinct cell types in Lee et al. (2019). However, mpSMILE did not reveal more cell types than pSMILE (Fig. 4B). Because we used all 100 kb bins of CG methylation as input for SMILE, it is possible that SMILE cannot fully unlock the discriminative power of methylation data. Thus, we further projected Hi-C cells onto the tSNE space of CG methylation from the original study, but in a SMILE manner. The tSNE space of CG methylation preserves the distinct structure for each cell type identified by the authors. However, neuron subtypes in the Hi-C data did not overlap with their methylation counterparts in this projection task, though other cell types did (Fig. 4C). 
These results suggest that the Hi-C data have less power to distinguish certain neuron subtypes than the DNA methylation data, as the original report showed. This is also consistent with a recent study in mouse forebrain, in which chromatin conformation data were less able to reveal neuron subtypes than expression data (Tan et al., 2021).

Fig. 4.

Integration of scMethyl and scHi-C through p/mpSMILE. (A and B) UMAP visualization of integrated representation of (A) mESC and NMuMG cells and (B) human PFC data, by p/mpSMILE. Cells colored by author-reported cell types (left panel), or data types (right panel). (C) Projection of Hi-C onto tSNE space of CG methylation using SMILE. We used the tSNE space of CG methylation as input for Encoder A. Training SMILE in this case becomes training Encoder B to project Hi-C data into the tSNE space of CG methylation, though we visualized the integrated representation through UMAP. Top row: Hi-C and methylation data on the same graph. Second row: Same representation, CG methylation (left) and Hi-C (right) shown separately. Red circle highlights region of indistinct neuronal cell types

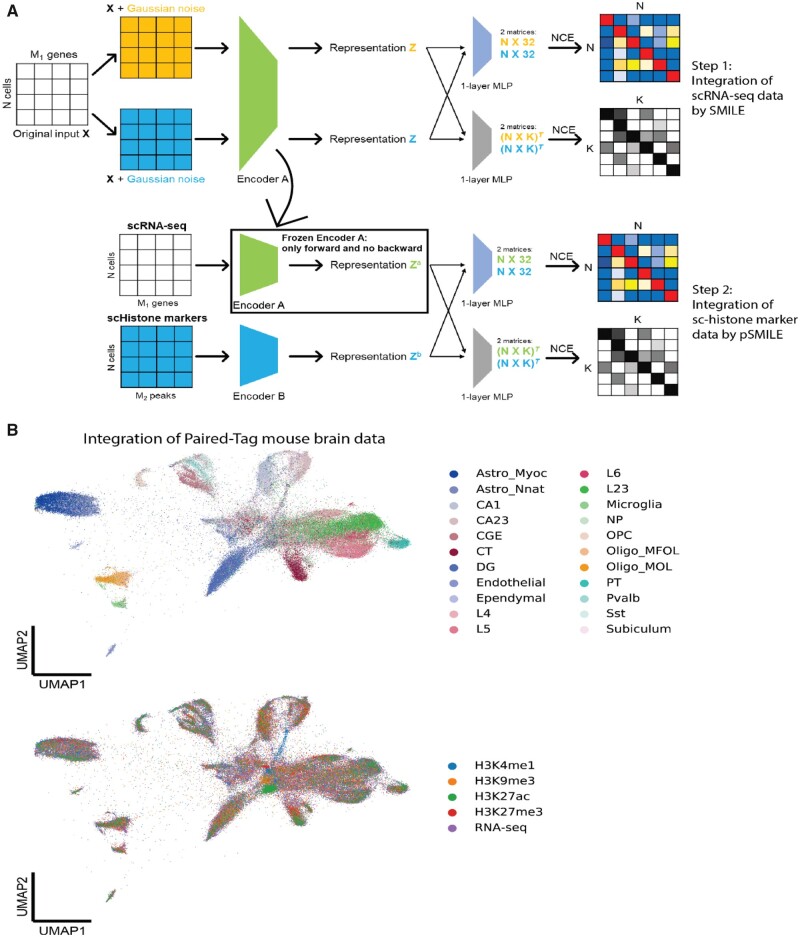

3.5 Combining SMILE and pSMILE for integration of more than two data modalities

The recently published Paired-Tag technology can jointly profile one histone mark and gene expression from the same nucleus (Zhu et al., 2021). The unique design of this study paired RNA-seq data with five different histone marks, which gives us demonstration data to show how SMILE and pSMILE can be combined to integrate more than two modalities. With these modifications, SMILE can integrate RNA-seq, H3K4me1, H3K9me3, H3K27me3 and H3K27ac (Fig. 5A). In the first step, we used SMILE to integrate RNA-seq data from six batches, as we did previously for multisource transcriptome data. Then, we replaced Encoder A in pSMILE with the trained encoder from SMILE and froze its weights. Therefore, RNA-seq data are only forwarded through Encoder A to generate the representation za, and no gradients are sent back to it during training. Because SMILE had already learned a discriminative representation for the RNA-seq data, training pSMILE in the second step aimed to project the histone mark data into the RNA-seq representation. Because the histone mark datasets are not paired with one another, we trained four pSMILE models, one for each paired RNA-seq/histone mark dataset. Finally, we can project all nuclei from the five modalities into the same UMAP space for visualization. Indeed, this approach preserved distinct properties of cell types while mixing data types (Fig. 5B). As we did above with the ATAC-seq and RNA-seq joint profile, this learned encoder could be used to screen for the modification peaks that are most important for cell-type discrimination.
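The two-step procedure, in which a frozen Encoder A provides fixed targets and only Encoder B is updated, can be sketched with linear encoders in NumPy. This is a deliberately simplified illustration under stated assumptions: the data are simulated, both encoders are single linear maps, and only an MSE term is optimized, whereas pSMILE uses neural network encoders and also relies on the NCE loss.

```python
import numpy as np

rng = np.random.default_rng(0)
n_cells, n_rna, n_mark, n_latent = 200, 30, 40, 4

# Step 1 stand-in: an already trained Encoder A whose weights stay frozen.
W_a = rng.normal(size=(n_rna, n_latent)) / np.sqrt(n_rna)
W_a_before = W_a.copy()

# Toy paired nuclei (assumption): histone-mark signal reflects the same
# underlying cell state that Encoder A extracts from RNA-seq.
x_rna = rng.normal(size=(n_cells, n_rna))
za = x_rna @ W_a  # fixed targets; nothing below ever updates W_a
mixing = rng.normal(size=(n_latent, n_mark))
x_mark = za @ mixing + 0.1 * rng.normal(size=(n_cells, n_mark))

# Step 2: train Encoder B alone so that its output approaches za.
W_b = np.zeros((n_mark, n_latent))
learning_rate, n_steps = 0.005, 2000

def mse_loss(weights):
    return float(np.mean((x_mark @ weights - za) ** 2))

initial_loss = mse_loss(W_b)
for _ in range(n_steps):
    residual = x_mark @ W_b - za
    # Gradient of the MSE with respect to Encoder B only.
    grad_b = 2.0 * x_mark.T @ residual / residual.size
    W_b -= learning_rate * grad_b
final_loss = mse_loss(W_b)
```

Repeating the second step once per histone mark, each time against the same frozen Encoder A, places every modality in one shared representation without retraining the RNA-seq encoder.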

Fig. 5.

Integration of Paired-Tag mouse brain data through SMILE. Please see version online for color figure. (A) Procedure of combining SMILE and pSMILE for integration of Paired-Tag data. (B) UMAP visualization of integrated representation of mouse brain data. Cells are colored by cell types (upper panel) and data types (lower panel)

4 Discussion

Contrastive learning has been extensively shown to learn good representations for different data types (Amid and Warmuth, 2019; Chen et al., 2020). A concurrent study further extended contrastive learning to single-cell RNA-seq analysis (Ciortan and Defrance, 2021). However, all these previous studies focused on learning good data representations and did not show a potential use of contrastive learning in data integration. Here, we designed SMILE, a contrastive-learning-based integration method, and introduced this new use of contrastive learning. We presented three variants of SMILE models that perform single-cell omics data integration in different cases. Through our benchmarking, we demonstrated that our SMILE approach effectively accomplished both batch correction for multisource transcriptome data and multimodal single-cell data integration with comparable or even better outcomes than existing tools. Encoders learned by SMILE can be used to determine what biological factors underlie the derived joint clustering and to transfer cell-type labels to future related experiments. We further applied SMILE to the joint Methyl and Hi-C data, and we showed that SMILE can save users from engineering shared features if cell anchors exist for training. Finally, we demonstrated how to combine or modify our SMILE models to address the integration of more than two modalities. For the joint-profiling data and the Paired-Tag data, the learned encoders could be used to project other single source datasets (e.g. ATAC-seq, ChIP-seq or Hi-C without paired RNA-seq or methylation data) into the shared space for cell-type classification or cellular composition analysis across different conditions.

Integrating single-cell data is still a grand challenge in the community. Among the three categories of integration approaches, our method falls into the horizontal category for integration of multisource single-cell transcriptome data and into the vertical category for multimodal single-cell data integration. The key difference between horizontal and vertical approaches is whether features or cells are used as anchors. When data are generated through separate single-cell assays, cell anchors are not available, and horizontal approaches can rely on shared features to bring data from different sources into the shared space. However, anchoring cells may be necessary for data integration when engineering shared features is not straightforward. As we demonstrated with the joint Methyl/Hi-C data, Hi-C data quantify 2D interaction features across the genome while methylation is measured at each genomic region in 1D, so matching these features is difficult. Increasing numbers of joint-profiling technologies are emerging and will provide more cell-anchored references, and we could combine SMILE with these joint-profiling technologies to achieve multimodal integration that brings gene expression, epigenetic modification, chromatin structure and even imaging-based phenotypes into the shared space. With the ability to integrate data without shared features, SMILE has its niche in such scenarios.

In these joint-profiling datasets, where the pairing between datasets is already known, it may be less obvious why an integrated representation is needed. Indeed, some studies use just one datatype to classify cell types and then examine the properties of the other data within those established categories (e.g. Lee et al., 2019). We show here that by learning a joint representation where each datatype is separately projected into the space, we can evaluate what biological features are most important for allowing the two datatypes to be embedded in the same space. Further, with this type of joint representation, we can then project new single source data (e.g. ATAC-seq or Hi-C) from a different experiment onto this joint space. Thus, we can use the power of both paired datatypes to create the representation space and call cell types, and we can then take a new dataset that has only one type of data, compare it with the reference and annotate its cell types based on the joint space. Instead of directly learning an integrated representation like SMILE, a recent study adopted a data translation strategy to integrate different modalities (Wu et al., 2021). BABEL (Wu et al., 2021) uses an AE on jointly profiled datasets to explicitly predict the values for a new type of data (e.g. RNA-seq) given a single source dataset (e.g. ATAC-seq). Like p/mpSMILE, training BABEL is a vertical approach, explicitly relying on cell pairing information. While the goal of BABEL differs from the focus of SMILE, its success again emphasizes the value of vertical approaches with jointly profiled data to increase the insights gleaned from other single source datasets.

Ideally, we would like to perform integration without knowing either feature or cell anchors, and useful diagonal methods are needed by the single-cell community. However, diagonal integration faces extreme computational and theoretical challenges. So far, none of the integration methods in this category has been extensively tested across multiple datasets. In our hands, the diagonal approach, UnionCom, did not achieve satisfactory integration of multimodal single-cell data. Another method, cross-modal AE (Yang et al., 2021), has the flexibility to act as either a vertical or diagonal approach, and extended the application of integration to sequencing and imaging data, where matching features across modalities would be extremely challenging. This approach shows promise for diagonal integration of very distinct chromatin imaging and RNA sequencing data, but it was tested only in a case where two clear subtypes of cells were first shown to exist in both datatypes. Meanwhile, the authors also carefully investigated the two modalities to make sure there is a shared underlying data distribution. Therefore, it is not clear whether such a diagonal method would work in a complex system with many cell types. Similarly, another diagonal method, SCOT, also relies on learning shared data distributions to align modalities (Demetci et al., 2020). While further work is done to test and improve the performance of such approaches on more complex systems, we argue that horizontal or vertical integration approaches play a critical role in revealing underlying mechanisms for multimodal data.

Overall, our SMILE approach shows the ability to integrate single-cell omics data as a comprehensive tool. One limitation of SMILE for multimodal integration is that cell pairs must be known. Therefore, training SMILE involves creating self-pairs across a single modality or using the natural pairs in joint-profiling data. We combined Seurat and SMILE to show how SMILE can also be used for nonjoint-profiling data. Future development of SMILE will focus on testing and incorporating different cell-anchor identification methods, e.g. scJoint (Lin et al., 2021) and GLUER (Peng et al., 2021). A benchmark study on computational cell-anchor identification methods would provide insight about anchoring cells with higher accuracy, rather than relying on joint-profiling assays.

Supplementary Material

Acknowledgements

We thank Joseph Ecker’s lab for sharing information about processing their sn-m3C-seq data. We also thank Amir Sadovnik, Tongye Shen and Tian Hong for helpful discussions.

Author contributions

Y.X. conceived and developed the method with guidance from R.P.M. and produced all the figures. P.D. computationally processed raw sequencing data for input to SMILE. Y.X. and R.P.M. wrote the manuscript with input from P.D. All authors read and approved the final manuscript.

Data Availability

All data used in this study are publicly available with GEO accession or hosted by institution data portals (Supplementary Table S1).

Funding

This work was supported by the National Institute of General Medical Sciences of the National Institutes of Health [R35GM133557] to R.P.M.

Conflict of Interest: none declared.

Contributor Information

Yang Xu, UT-ORNL Graduate School of Genome Science and Technology, University of Tennessee, Knoxville, TN 37996, USA.

Priyojit Das, UT-ORNL Graduate School of Genome Science and Technology, University of Tennessee, Knoxville, TN 37996, USA.

Rachel Patton McCord, Department of Biochemistry and Cellular and Molecular Biology, University of Tennessee, Knoxville, TN 37996, USA.

References

- Amid E., Warmuth M.K. (2019) TriMap: large-scale dimensionality reduction using triplets. arXiv:1910.00204.

- Argelaguet R. et al. (2021) Computational principles and challenges in single-cell data integration. Nat. Biotechnol., 39, 1202–1215.

- Arisdakessian C. et al. (2019) DeepImpute: an accurate, fast, and scalable deep neural network method to impute single-cell RNA-seq data. Genome Biol., 20, 211.

- Baron M. et al. (2016) A single-cell transcriptomic map of the human and mouse pancreas reveals inter- and intra-cell population structure. Cell Syst., 3, 346–360.e344.

- Bernstein N.J. et al. (2020) Solo: doublet Identification in single-cell RNA-Seq via semi-supervised deep learning. Cell Syst., 11, 95–101.e105.

- Butler A. et al. (2018) Integrating single-cell transcriptomic data across different conditions, technologies, and species. Nat. Biotechnol., 36, 411–420.

- Cao J. et al. (2018) Joint profiling of chromatin accessibility and gene expression in thousands of single cells. Science, 361, 1380–1385.

- Cao K. et al. (2020) Unsupervised topological alignment for single-cell multi-omics integration. Bioinformatics, 36, i48–i56.

- Chen S. et al. (2019) High-throughput sequencing of the transcriptome and chromatin accessibility in the same cell. Nat. Biotechnol., 37, 1452–1457.

- Chen T. et al. (2020) A simple framework for contrastive learning of visual representations. arXiv:2002.05709.

- Ciortan M., Defrance M. (2021) Contrastive self-supervised clustering of scRNA-seq data. BMC Bioinform., 22, 280.

- Collombet S. et al. (2020) Parental-to-embryo switch of chromosome organization in early embryogenesis. Nature, 580, 142–146.

- Cusanovich D.A. et al. (2018) A single-cell atlas of in vivo mammalian chromatin accessibility. Cell, 174, 1309–1324.e1318.

- Demetci P. et al. (2020) Gromov-Wasserstein optimal transport to align single-cell multi-omics data. bioRxiv.

- Eraslan G. et al. (2019) Single-cell RNA-seq denoising using a deep count autoencoder. Nat. Commun., 10, 390–390.

- Forcato M. et al. (2021) Computational methods for the integrative analysis of single-cell data. Brief. Bioinform., 22, 20–29.

- Fudenberg G. et al. (2020) Predicting 3D genome folding from DNA sequence with Akita. Nat. Methods, 17, 1111–1117.

- Granja J.M. et al. (2019) Single-cell multiomic analysis identifies regulatory programs in mixed-phenotype acute leukemia. Nat. Biotechnol., 37, 1458–1465.

- Grün D. et al. (2016) De novo prediction of stem cell identity using single-cell transcriptome data. Cell Stem Cell, 19, 266–277.

- Han X. et al. (2020) Construction of a human cell landscape at single-cell level. Nature, 581, 303–309.

- Jain M.S. et al. (2021) MultiMAP: dimensionality reduction and integration of multimodal data. bioRxiv.

- Kimmel J.C., Kelley D.R. (2021) Semi-supervised adversarial neural networks for single cell classification. Genome Res., 31, 1781–1793.

- Korsunsky I. et al. (2019) Fast, sensitive and accurate integration of single-cell data with Harmony. Nat. Methods, 16, 1289–1296.

- Lähnemann D. et al. (2020) Eleven grand challenges in single-cell data science. Genome Biol., 21, 31–35.

- Lawlor N. et al. (2017) Single-cell transcriptomes identify human islet cell signatures and reveal cell-type-specific expression changes in type 2 diabetes. Genome Res., 27, 208–222.

- Lee D.-S. et al. (2019) Simultaneous profiling of 3D genome structure and DNA methylation in single human cells. Nat. Methods, 16, 999–1006.

- Li G. et al. (2019) Joint profiling of DNA methylation and chromatin architecture in single cells. Nat. Methods, 16, 991–993.

- Li Y. et al. (2020) Contrastive clustering. arXiv:2009.09687.

- Lin Y. et al. (2021) scJoint: transfer learning for data integration of single-cell RNA-seq and ATAC-seq. bioRxiv.

- Litviňuková M. et al. (2020) Cells of the adult human heart. Nature, 588, 466–472.

- Liu J. et al. (2020) Jointly defining cell types from multiple single-cell datasets using LIGER. Nat. Protoc., 15, 3632–3662.

- Longo S.K. et al. (2021) Integrating single-cell and spatial transcriptomics to elucidate intercellular tissue dynamics. Nat. Rev. Genet., 22, 627–644.

- Lopez R. et al. (2018) Deep generative modeling for single-cell transcriptomics. Nat. Methods, 15, 1053–1058.

- Ma F., Pellegrini M. (2020) ACTINN: automated identification of cell types in single cell RNA sequencing. Bioinformatics, 36, 533–538.

- Ma S. et al. (2020) Chromatin potential identified by shared single-cell profiling of RNA and chromatin. Cell, 183, 1103–1116.e1120.

- Meng C. et al. (2014) A multivariate approach to the integration of multi-omics datasets. BMC Bioinform., 15, 162.

- Miao Z. et al. (2021) Single cell regulatory landscape of the mouse kidney highlights cellular differentiation programs and disease targets. Nat. Commun., 12, 2277.

- Muraro M.J. et al. (2016) A single-cell transcriptome atlas of the human pancreas. Cell Syst., 3, 385–394.e383.

- Peng T. et al. (2021) GLUER: integrative analysis of single-cell omics and imaging data by deep neural network. bioRxiv.

- Segerstolpe Å. et al. (2016) Single-cell transcriptome profiling of human pancreatic islets in health and type 2 diabetes. Cell Metab., 24, 593–607.

- Stuart T., Satija R. (2019) Integrative single-cell analysis. Nat. Rev. Genet., 20, 257–272.

- Stuart T. et al. (2019) Comprehensive integration of single-cell data. Cell, 177, 1888–1902.e1821.

- Tan L. et al. (2021) Changes in genome architecture and transcriptional dynamics progress independently of sensory experience during post-natal brain development. Cell, 184, 741–758.e717.

- Tran H.T.N. et al. (2020) A benchmark of batch-effect correction methods for single-cell RNA sequencing data. Genome Biol., 21, 12–12.

- Tucker N.R. et al. (2020) Transcriptional and cellular diversity of the human heart. Circulation, 142, 466–482.

- Wolf F.A. et al. (2018) SCANPY: large-scale single-cell gene expression data analysis. Genome Biol., 19, 15–15.

- Wu K.E. et al. (2021) BABEL enables cross-modality translation between multiomic profiles at single-cell resolution. Proc. Natl. Acad. Sci. USA, 118, e2023070118.

- Wu Z. et al. (2018) Unsupervised feature learning via non-parametric instance-level discrimination. arXiv:1805.01978.

- Yang K.D. et al. (2021) Multi-domain translation between single-cell imaging and sequencing data using autoencoders. Nat. Commun., 12, 31.

- Zeisel A. et al. (2015) Brain structure. Cell types in the mouse cortex and hippocampus revealed by single-cell RNA-seq. Science, 347, 1138–1142.

- Zheng G.X.Y. et al. (2017) Massively parallel digital transcriptional profiling of single cells. Nat. Commun., 8, 14049–14049.

- Zhou J. et al. (2019) Robust single-cell Hi-C clustering by convolution- and random-walk-based imputation. Proc. Natl. Acad. Sci. USA, 116, 14011–14018.

- Zhu C. et al. (2021) Joint profiling of histone modifications and transcriptome in single cells from mouse brain. Nat. Methods, 18, 283–292.
