Contributor Roles Taxonomy (CRediT) has recently changed how author contributions are acknowledged. To extend and complement CRediT, we propose MeRIT, a new way of writing the Methods section that uses authors’ initials to further clarify contributor roles for reproducibility and replicability.

Subject terms: Research management, Ethics

Lack of information on authors’ contribution to specific aspects of a study hampers reproducibility and replicability. Here, the authors propose a new, easily implemented reporting system to clarify contributor roles in the Methods section of an article.

Background

As scientific endeavours become increasingly complex, research projects require larger teams, which leads to an ever-increasing division of labour1. To acknowledge the different roles that authors play within a project, or ‘contributorship’, the Contributor Roles Taxonomy (CRediT) has been proposed2–4, in which authors’ contributions are specified across 14 possible tasks (‘Conceptualization’, ‘Data curation’, ‘Formal analysis’, ‘Funding acquisition’, ‘Investigation’, ‘Methodology’, ‘Project administration’, ‘Resources’, ‘Software’, ‘Supervision’, ‘Validation’, ‘Visualization’, ‘Writing—original draft’, and ‘Writing—review & editing’). CRediT has been adopted by many scientists and publishers5 (at least 40 publishers at the time of writing; https://credit.niso.org/adopters/). However, CRediT lacks the granularity to reveal precisely who did what, which is especially important when authors’ degrees of contribution differ. For example, a survey of 30,770 papers using CRediT found that 55% of authors contributed to the broad category of ‘Methodology’, in papers with an average of seven authors6. CRediT’s broad categories therefore limit its ability to promote transparency in contributorship.

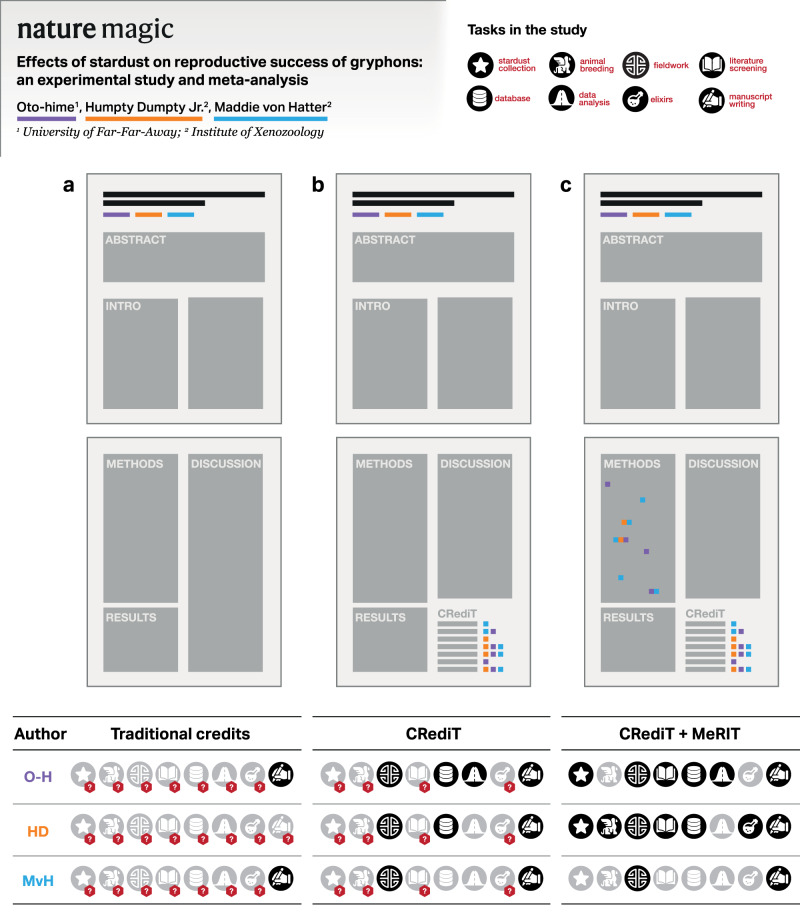

To address this limitation, we propose supplementing CRediT’s methodology-related contributor roles (‘Data curation’, ‘Formal analysis’, ‘Investigation’, ‘Methodology’, ‘Resources’, ‘Software’, ‘Validation’, and ‘Visualization’) by using author initials in the Methods section to attribute specific research steps to specific authors (e.g., “SN obtained field data between 2003 and 2007” or “ML conducted all the statistical analyses, which were checked by SN”). We term this reporting system ‘Method Reporting with Initials for Transparency’ (MeRIT); it extends to all research outputs a method-reporting practice conventionally used in systematic reviews. We emphasize that MeRIT complements CRediT, as shown in Fig. 1. Of note, CRediT is intended to be a machine-readable add-on, whereas MeRIT is part of the main text. As we outline below, MeRIT offers many benefits despite some expected obstacles.

Fig. 1. MeRIT visualized.

A diagram illustrating how combining CRediT (Contributor Roles Taxonomy) and MeRIT (Method Reporting with Initials for Transparency) makes author contributions clearer and more granular than CRediT alone: (a) without CRediT or MeRIT, readers can only assume that the first and/or last author wrote the text, and cannot be certain; (b) CRediT clarifies some of the tasks authors did, but not all, especially methodological ones; and (c) CRediT and MeRIT used together can clarify all author contributions.

Benefits of MeRIT

MeRIT transforms the Methods section, providing a level of transparency and granularity not possible with CRediT alone. It explicitly attributes each specific portion of work to a contributor (e.g., sample collection, DNA extraction, sequencing, and bioinformatic analyses). Further, MeRIT assigns responsibility for a particular research stage to a specific author or authors, leading to more accountability and, perhaps, more integrity. This matters because surveys have found that questionable research practices (e.g., p-hacking, selective reporting, and HARKing) are surprisingly common7–10. By increasing accountability, MeRIT may help build an academic environment in which questionable research practices that inflate false-positive rates are less likely to occur.

Such accountability also discourages contributors from being unfairly omitted from an author list (i.e., orphan authorship5,11), because under MeRIT each task requires assigned initials. Combined with CRediT, increased accountability may also prevent gift and ghost authorship. This is precisely what the Vancouver protocol (recommendations) for co-authorship was intended to achieve (refs. 5,12), and many universities recommend this protocol to their academics and students. However, the Vancouver protocol obscures contributorship by requiring all co-authors (or contributors) to be responsible for the accuracy and integrity of every aspect of the study, effectively diluting responsibility: “everybody’s responsibility is nobody’s responsibility”. Fair sharing of responsibility, as well as fair allocation of credit, could lead to better and stronger collaborative projects with open and healthy communication and discussion among contributors.

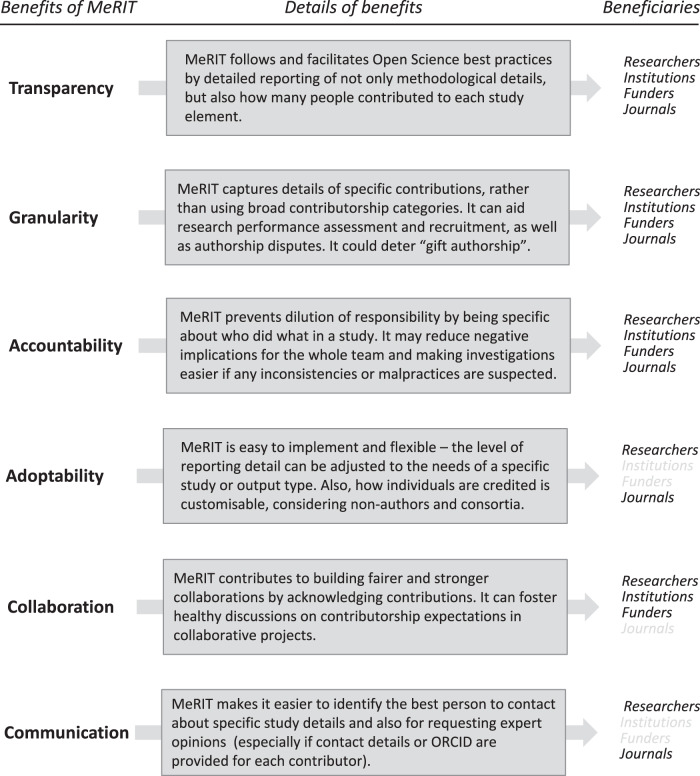

In addition to these broader benefits, MeRIT is practical and easy to implement because it can be used regardless of journal policy: we can start adopting MeRIT in any research article intended for any journal. MeRIT is also flexible in format and scale. For example, you could state who did what at the beginning of each subsection, or weave MeRIT into the Methods section by using initials as the subjects of sentences where appropriate. If full adoption of MeRIT is impractical, using it in just one subsection is fine. Further, MeRIT should not add much writing time. Indeed, using initials can animate the Methods section with the active voice (e.g., “EIC and MJG created an accompanying webpage” rather than the passive “an accompanying webpage was created”), reducing excessive use of passive sentences. MeRIT’s granularity also seems timely, given a recent shift towards allowing more space for the Methods section (e.g., many Nature journals allow almost ‘unrestricted’ space). The benefits of MeRIT are summarised in Fig. 2 (see ref. 13).

Fig. 2. MeRIT’s benefits.

Six benefits of MeRIT (transparency, granularity, accountability, adoptability, collaboration, and communication) and their beneficiaries (stakeholders: researchers, institutions, funders, and journals).

To help with implementation, we have set up a website for MeRIT with many examples and styles (www.merit.help). We intend to build up these examples as MeRIT spreads to more disciplines; the website also includes FAQs (e.g., “What if two authors share the same initials?”). Furthermore, we plan to use this website to collate publications that adopt MeRIT. This collation will form a new project to gauge how MeRIT is adopted in different fields (e.g., complete or partial adoption) and how quickly it spreads (the project may also include a survey of MeRIT users to identify new benefits and obstacles). Therefore, so that we can discover and assemble ‘MeRIT papers’, we kindly ask future users and supporters to cite this commentary (see the website for examples of how to do so).

Potential obstacles and resolutions

Despite all these benefits, MeRIT is likely to face some difficulties. These difficulties are often intertwined, but they can be divided into two kinds: practical and sociological. Practically, it is sometimes hard to assign a particular contribution to a single person, for example, when one person did the work while another supervised or advised it. In such a case, MeRIT can still clarify the division of labour (e.g., “REO conducted the statistical analysis with the support of JLP”). Also, it may not be easy to draw dividing lines between relative contributions within a research task, for example, when software development and analysis are intertwined. It might be possible to express an author’s contribution as a percentage based on the number of contributed code lines, which is quantifiable via platforms like GitHub. We must be careful with such quantification, however, as quantity does not equate to quality14. Such cases are probably best handled in the text; for example, “AW led the software development with the assistance of EG & DPW, and additional support from SMW to improve scalability”. Notably, explicitly acknowledging error-checking and supporting roles in data collection and analysis, as in the examples above, may encourage error-prevention activities, reducing mistakes that lead to corrections and retractions.
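To make the line-count idea concrete, the arithmetic can be sketched in a few lines of Python. This is an illustration only, not part of MeRIT: it assumes that per-author counts of contributed code lines have already been obtained from a repository’s history, and the initials and counts below are hypothetical. As noted above, such percentages say nothing about the quality or difficulty of each contribution.

```python
from typing import Dict


def line_share(lines_by_author: Dict[str, int]) -> Dict[str, float]:
    """Convert per-author contributed code lines into percentage shares.

    A crude proxy only: line counts do not capture the quality or
    difficulty of a contribution.
    """
    total = sum(lines_by_author.values())
    if total == 0:
        raise ValueError("no contributed lines to apportion")
    # Round each share to one decimal place for reporting.
    return {author: round(100 * n / total, 1) for author, n in lines_by_author.items()}


# Hypothetical initials and line counts, for illustration only.
counts = {"AB": 1200, "CD": 500, "EF": 200, "GH": 100}
for author, pct in sorted(line_share(counts).items(), key=lambda kv: -kv[1]):
    print(f"{author}: {pct}%")
```

Even where such numbers are available, a MeRIT-style sentence in the Methods section (who led, who assisted, who checked) is likely to convey the contributions more faithfully than the percentages alone.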

Sociological issues are potentially more challenging to address, although they are not unique to MeRIT (i.e., CRediT and any other system of author contributions would also suffer from them). Power dynamics can undoubtedly influence the implementation of MeRIT. Supervisors can pressure student lead authors to attribute more credit to supervisors and/or other senior academics, or to underplay the contributions of students or research staff. Confronting and resolving such power dynamics requires top-down, institutional-level interventions (e.g., empowering junior authors by creating a set of expectations and criteria). Relatedly, some researchers may feel uneasy about, and oppose, MeRIT because it exposes what each person did not do. For example, some principal investigators may have contributed primarily to non-methodological components of a project and therefore would not appear in the Methods section. Also, an early career researcher (ECR) may be concerned that MeRIT could harm their career, for example, by revealing that they did not lead their own data analysis. At the same time, however, ECRs could benefit from being acknowledged for conducting the bulk of an analysis despite being a middle author.

These concerns resemble those raised when data archiving was mandated in 2010 by five major journals in ecology and evolution (American Naturalist, Evolution, Journal of Evolutionary Biology, Molecular Ecology, and Heredity; ref. 15, see also ref. 16). At that time, researchers were concerned about others ‘stealing’ their data and about not getting enough credit. More than a decade after the data archiving mandate, many researchers would agree that the benefits of transparency and accountability have outweighed the costs they feared17,18. We believe MeRIT gives us a great opportunity to discuss contributorship and reproducibility, simply because the Methods section is the most important part of an article for study replication.

Editorial implications of MeRIT

As mentioned above, MeRIT does not require any editorial intervention, yet editorial support and recommendations would go a long way towards its adoption. Its benefits can only improve the quality and credibility of journal publications. We suggest that MeRIT is an innovation that increases an article’s accountability and replicability. Such innovation is timely and necessary in the face of the current ‘replication/reproducibility crisis’19. Of relevance, the Reproducibility Project in Cancer Biology managed to conduct only 50 (~25%) of the 193 initially planned experiments, and among these 50 experiments, fewer than 50% reproduced the original results20. One of the main challenges was that the project team could not obtain responses from the authors of the original studies they planned to replicate. Given the ever-growing number of collaborators and tasks involved in a project, it is not surprising that a corresponding author may not know specific aspects of the data, analysis, and interpretation. Because MeRIT makes it easier to identify and contact the person in charge of a specific task, it is possible that more people would have responded to emails, changing the fate of the Reproducibility Project in Cancer Biology.

Given the above, we question the tradition of a single corresponding author. Instead of contacting the ‘general’ corresponding author, we should be able to contact the person who did the task in question. To improve research accountability and replicability, editors should seriously consider mandating that contact details for all authors be reported, along with MeRIT. Alternatively, editors could mandate providing ORCID (Open Researcher and Contributor ID) identifiers for all authors, whose ORCID records should hold up-to-date contact information, although obtaining an ORCID account should not become a barrier to contributorship. Notably, being able to contact more people is beneficial and sometimes necessary; for example, it increases the chance of somebody responding. Increased communication can benefit researchers interested in replicating or building upon previous work, as well as editors seeking expert opinions. Furthermore, MeRIT can assist in implementing fair research assessments. For example, MeRIT supports and aligns well with the San Francisco Declaration on Research Assessment (DORA) and the Hong Kong Principles21 by (1) assessing responsible research practices, (2) valuing complete reporting, (3) rewarding the practice of open science, and (4) acknowledging a broad range of research activities (i.e., four of the five Hong Kong Principles), consequently supporting equity, diversity, and inclusiveness in science.

Lastly, it is plausible that MeRIT will in future help not only research-misconduct investigations but also scientific award committees22. More broadly, MeRIT recognises the collaborative nature of research and allows each person involved to be credited for their methodological contributions. It also enables others to trace people’s roles and responsibilities in the research process and its implementation, details that are essential for study replication and reproducibility, in a more personal and accurate manner than ever before, especially when used with CRediT.

Acknowledgements

We thank the organisers of the SORTEE (Society for Open, Reliable, and Transparent Ecology and Evolutionary biology) 2022 conference (12th July 2022), where SN and ML held an initial workshop to formalise the idea of MeRIT. SN thanks Alex Holcombe, who commented on an earlier version of this manuscript.

Author contributions

Conceptualisation: S.N., M.L., E.R.I.-C., M.J.G., and R.E.O.; investigation: S.N., E.R.I.-C., M.J.G., R.E.O., S.B., S.M.D., E.G., E.L.M., A.R.M., K.M., M.P., J.L.P., P.P., L.R., D.P.W., A.W., C.W., L.A.B.W., S.M.W., Y.Y., and M.L. (i.e., all); software: E.R.I.-C., M.J.G., and J.L.P.; visualisation: S.M.D. and M.L.; writing—original draft: S.N.; writing—review and editing: all.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Shinichi Nakagawa, Email: s.nakagawa@unsw.edu.au.

Malgorzata Lagisz, Email: m.lagisz@unsw.edu.au.

References

- 1. Coles NA, Hamlin JK, Sullivan LL, Parker TH, Altschul D. Build up big-team science. Nature. 2022;601:505–507. doi: 10.1038/d41586-022-00150-2.

- 2. Allen L, O’Connell A, Kiermer V. How can we ensure visibility and diversity in research contributions? How the Contributor Role Taxonomy (CRediT) is helping the shift from authorship to contributorship. Learn. Publ. 2019;32:71–74. doi: 10.1002/leap.1210.

- 3. Holcombe A. Farewell authors, hello contributors. Nature. 2019;571:147. doi: 10.1038/d41586-019-02084-8.

- 4. Holcombe AO, Kovacs M, Aust F, Aczel B. Documenting contributions to scholarly articles using CRediT and tenzing. PLoS One. 2020;15:e0244611. doi: 10.1371/journal.pone.0244611.

- 5. McNutt MK, et al. Transparency in authors’ contributions and responsibilities to promote integrity in scientific publication. Proc. Natl Acad. Sci. USA. 2018;115:2557–2560. doi: 10.1073/pnas.1715374115.

- 6. Larivière V, Pontille D, Sugimoto CR. Investigating the division of scientific labor using the Contributor Roles Taxonomy (CRediT). Quant. Sci. Stud. 2021;2:111–128. doi: 10.1162/qss_a_00097.

- 7. Gerrits RG, et al. Occurrence and nature of questionable research practices in the reporting of messages and conclusions in international scientific Health Services Research publications: a structured assessment of publications authored by researchers in the Netherlands. BMJ Open. 2019;9:e027903. doi: 10.1136/bmjopen-2018-027903.

- 8. Fraser H, Parker T, Nakagawa S, Barnett A, Fidler F. Questionable research practices in ecology and evolution. PLoS One. 2018;13:e0200303. doi: 10.1371/journal.pone.0200303.

- 9. John LK, Loewenstein G, Prelec D. Measuring the prevalence of questionable research practices with incentives for truth telling. Psychol. Sci. 2012;23:524–532. doi: 10.1177/0956797611430953.

- 10. Agnoli F, Wicherts JM, Veldkamp CLS, Albiero P, Cubelli R. Questionable research practices among italian research psychologists. PLoS One. 2017;12:e0172792. doi: 10.1371/journal.pone.0172792.

- 11. Wislar JS, Flanagin A, Fontanarosa PB, DeAngelis CD. Honorary and ghost authorship in high impact biomedical journals: a cross sectional survey. BMJ. 2011;343:d6128. doi: 10.1136/bmj.d6128.

- 12. Macfarlane B. The ethics of multiple authorship: power, performativity and the gift economy. Stud. High. Educ. 2017;42:1194–1210. doi: 10.1080/03075079.2015.1085009.

- 13. Rechavi O, Tomancak P. Who did what: changing how science papers are written to detail author contributions. Nat. Rev. Mol. Cell Biol. 2023. doi: 10.1038/s41580-023-00587-x.

- 14. Saltelli A. Ethics of quantification or quantification of ethics? Futures. 2020;116:102509. doi: 10.1016/j.futures.2019.102509.

- 15. Whitlock MC, McPeek MA, Rausher MD, Rieseberg L, Moore AJ. Data archiving. Am. Nat. 2010;175:145–146. doi: 10.1086/650340.

- 16. Boyer DM, Jahnke LM, Mulligan CJ, Turner T. Response to letters to the editor concerning AJPA commentary on “data sharing in biological anthropology: Guiding principles and best practices”. Am. J. Phys. Anthropol. 2020;172:344–346. doi: 10.1002/ajpa.24065.

- 17. Sholler D, Ram K, Boettiger C, Katz DS. Enforcing public data archiving policies in academic publishing: a study of ecology journals. Big Data Soc. 2019;6:2053951719836258. doi: 10.1177/2053951719836258.

- 18. Gomes DG, et al. Why don’t we share data and code? Perceived barriers and benefits to public archiving practices. Proc. R. Soc. B. 2022;289:20221113. doi: 10.1098/rspb.2022.1113.

- 19. Baker M. 1,500 scientists lift the lid on reproducibility. Nature. 2016;533:452–454. doi: 10.1038/533452a.

- 20. Errington TM, Denis A, Perfito N, Iorns E, Nosek BA. Reproducibility in cancer biology: challenges for assessing replicability in preclinical cancer biology. eLife. 2021;10:e67995. doi: 10.7554/eLife.67995.

- 21. Moher D, et al. The Hong Kong Principles for assessing researchers: fostering research integrity. PLoS Biol. 2020;18:e3000737. doi: 10.1371/journal.pbio.3000737.

- 22. Lagisz M, et al. Little transparency and equity in scientific awards for early and mid-career researchers in ecology and evolution. Nat. Ecol. Evol. 2023. doi: 10.1038/s41559-023-02028-6.