Abstract

ChatGPT, an artificial intelligence chatbot, has rapidly gained prominence in various domains, including medical education and healthcare literature. This hybrid narrative review, conducted collaboratively by human authors and ChatGPT, aims to summarize and synthesize the current knowledge of ChatGPT in the indexed medical literature during its initial four months. We searched the PubMed and Europe PMC databases, which yielded 65 and 110 papers, respectively. These papers focused on ChatGPT's impact on medical education, scientific research, medical writing, ethical considerations, diagnostic decision-making, automation potential, and criticisms. The findings indicate a growing body of literature on ChatGPT's applications and implications in healthcare, highlighting the need for further research to assess its effectiveness and ethical concerns.

Keywords: machine learning (ml), hybrid method, medical practice, artificial intelligence in medicine, ethical considerations, diagnostic decision-making, chatgpt, medical writing, generative language model, medical education

Introduction and background

ChatGPT, a new artificial intelligence platform created by OpenAI, has rapidly attracted worldwide attention. Released in November 2022, this artificial intelligence chatbot uses a neural network machine learning model, the generative pre-trained transformer (GPT), and draws on a large amount of data to formulate conversation-style responses. It can produce written content for a multitude of domains, from history and philosophy to science, technology, banking, marketing, and entertainment, in the form of articles, social media posts, essays, computer code, and emails [1, 2]. ChatGPT has taken on a role in academia, particularly in medical education. A recent study shows that ChatGPT performed at the passing standard for a third-year medical student exam [3]. ChatGPT is a state-of-the-art AI model that can generate human-like text in response to user queries [4]. Thus, in the near future, ChatGPT-supported applications could serve as interactive tools to support learning in medical education [3, 5]. Exploring what the new ChatGPT publications address has the potential to enlighten healthcare providers and medical education specialists on ChatGPT's contributions to healthcare and medical education. This review aims to summarize and synthesize the current knowledge of ChatGPT in healthcare and medical education during the first four months since its launch.

Review

Methodology

This paper presents a hybrid narrative review on the topic of ChatGPT in medical education and medical literature, aiming to identify gaps in the existing literature and provide a comprehensive overview of the current state of research [6]. Our hybrid approach combines the conventional narrative review methodology with the assistance of ChatGPT in analyzing and synthesizing the retrieved abstracts. PubMed and Europe PMC were searched using the keyword "ChatGPT".
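The keyword search described above could be reproduced programmatically. The following is a minimal sketch, not the authors' actual procedure: it only builds the request URLs for the NCBI E-utilities ESearch endpoint and the Europe PMC REST search endpoint, using the date window stated in the inclusion criteria. The function names, the `retmax` and `page_size` values, and the exact query string are assumptions for illustration and should be checked against each service's documentation before use.

```python
from urllib.parse import urlencode

# Date window taken from the review's inclusion criteria.
START, END = "2022-11-01", "2023-02-27"
KEYWORD = "ChatGPT"


def pubmed_search_url(term: str, start: str, end: str, retmax: int = 200) -> str:
    """Build an NCBI E-utilities ESearch URL restricted by publication date."""
    params = {
        "db": "pubmed",
        "term": term,
        "datetype": "pdat",                  # filter on publication date
        "mindate": start.replace("-", "/"),  # ESearch expects YYYY/MM/DD
        "maxdate": end.replace("-", "/"),
        "retmode": "json",
        "retmax": retmax,                    # hypothetical page size
    }
    base = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi?"
    return base + urlencode(params)


def europepmc_search_url(term: str, start: str, end: str, page_size: int = 200) -> str:
    """Build a Europe PMC REST search URL restricted by first publication date."""
    query = f'"{term}" AND FIRST_PDATE:[{start} TO {end}]'
    params = {"query": query, "format": "json", "pageSize": page_size}
    base = "https://www.ebi.ac.uk/europepmc/webservices/rest/search?"
    return base + urlencode(params)


if __name__ == "__main__":
    print(pubmed_search_url(KEYWORD, START, END))
    print(europepmc_search_url(KEYWORD, START, END))
```

The sketch deliberately stops at URL construction so that it runs without network access; fetching and parsing the responses would be a separate step.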

Inclusion Criteria

We included articles discussing ChatGPT in the context of medical education, medical literature, or medical practice, including its applications, potential benefits, and limitations; articles published in peer-reviewed journals or conference proceedings, ensuring a certain level of quality and credibility; studies employing quantitative, qualitative, or mixed-methods approaches to assess the impact or implications of ChatGPT in the medical domain; commentaries, editorials, or other non-research articles that provided early insights into ChatGPT in medical education or medical literature; and articles published in English between November 01, 2022, and February 27, 2023.

Exclusion Criteria

We excluded articles focusing on ChatGPT applications in non-medical domains or unrelated contexts; articles published in languages other than English, as our review team was not equipped to assess non-English sources accurately; articles published outside the specified date range; and articles indexed only in databases other than PubMed and Europe PMC.
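The inclusion and exclusion criteria above amount to a screening rule that can be written down explicitly. The sketch below is an illustration under stated assumptions, not the authors' tooling: the `Record` fields, the `medical_context` flag (which stands in for a human reviewer's judgment), and the function name `is_eligible` are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date

# Window and databases as stated in the criteria above.
WINDOW_START = date(2022, 11, 1)
WINDOW_END = date(2023, 2, 27)
SEARCHED_DATABASES = {"PubMed", "Europe PMC"}


@dataclass
class Record:
    """Hypothetical screening record for one retrieved article."""
    title: str
    language: str
    published: date
    database: str
    medical_context: bool  # reviewer's judgment: is the article about ChatGPT in medicine?


def is_eligible(rec: Record) -> bool:
    """Return True only if the record meets every stated criterion."""
    return (
        rec.medical_context
        and rec.language.lower() == "english"
        and WINDOW_START <= rec.published <= WINDOW_END
        and rec.database in SEARCHED_DATABASES
    )


if __name__ == "__main__":
    example = Record("ChatGPT in anatomy teaching", "English",
                     date(2023, 1, 15), "PubMed", True)
    print(is_eligible(example))  # True
```

Encoding the rule this way makes the screening decision reproducible, although the relevance judgment itself still has to come from a human reader.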

Upon retrieval of relevant titles and abstracts, we input them into ChatGPT, prompting it to discern and emphasize the principal themes among the selected papers. The human authors then meticulously analyzed ChatGPT's output, cross-referencing it with the original articles to ensure accuracy and comprehensiveness. This hybrid approach allowed us to leverage the capabilities of ChatGPT in the review process while maintaining human oversight for quality and interpretation. The following summary of the review's findings was then compiled, providing insights into the current state of ChatGPT's application in medical education and literature.
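The step of feeding titles and abstracts to ChatGPT can be sketched as follows. This is only an illustration: the review does not report the prompt that was used, so the instruction text in `HEADER`, the character limit, and the function name `build_theme_prompts` are assumptions. The code prepares prompt strings and makes no call to any ChatGPT service.

```python
from typing import Iterable, List, Tuple

# Hypothetical instruction; the authors' actual prompt is not reported.
HEADER = (
    "Identify and highlight the principal themes across the following "
    "titles and abstracts.\n\n"
)


def build_theme_prompts(
    records: Iterable[Tuple[str, str]], max_chars: int = 6000
) -> List[str]:
    """Group (title, abstract) pairs into prompts of at most roughly max_chars."""
    prompts: List[str] = []
    batch: List[str] = []
    size = len(HEADER)
    for i, (title, abstract) in enumerate(records, start=1):
        entry = f"[{i}] {title}\n{abstract}\n\n"
        # Start a new prompt when adding this entry would exceed the limit.
        if batch and size + len(entry) > max_chars:
            prompts.append(HEADER + "".join(batch))
            batch, size = [], len(HEADER)
        batch.append(entry)
        size += len(entry)
    if batch:
        prompts.append(HEADER + "".join(batch))
    return prompts
```

Numbering each entry lets the human reviewers trace a theme proposed by the model back to the abstract it came from, which supports the cross-referencing step described above.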

Results

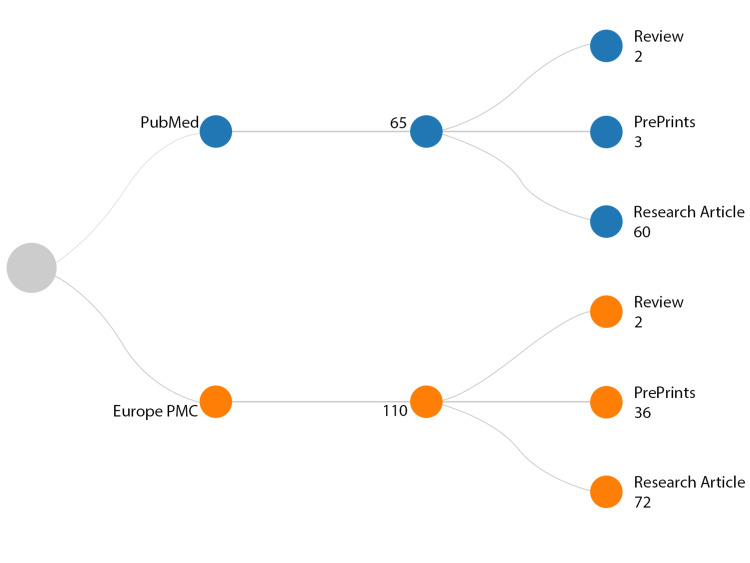

The PubMed search uncovered 65 papers, comprising 60 research articles, three preprints, and two reviews. Interestingly, one of the papers listed ChatGPT as a co-author [7]. Of the total papers, five were listed in PubMed in 2022, with 60 published since January 2023. On Europe PMC, 110 papers were found, consisting of 72 research articles, 36 preprints, and two reviews; five of these papers listed ChatGPT as a co-author. Out of these 110 papers, 11 were published in 2022 and 99 since January 2023 (Figure 1).

Figure 1. Overview of retrieved studies.
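As a quick consistency check on the counts reported above, the breakdowns by article type and by year can be tallied against the stated totals. This is a small sketch using only the numbers given in the text; the variable and function names are illustrative.

```python
# Counts as reported in the Results text.
pubmed_by_type = {"research articles": 60, "preprints": 3, "reviews": 2}
europe_pmc_by_type = {"research articles": 72, "preprints": 36, "reviews": 2}
pubmed_by_year = {"2022": 5, "2023": 60}
europe_pmc_by_year = {"2022": 11, "2023": 99}


def total(counts: dict) -> int:
    """Sum all categories in a breakdown."""
    return sum(counts.values())


def preprint_share(counts: dict) -> float:
    """Percentage of papers that are preprints, rounded to one decimal."""
    return round(100 * counts["preprints"] / total(counts), 1)


if __name__ == "__main__":
    print(total(pubmed_by_type), total(pubmed_by_year))          # 65 65
    print(total(europe_pmc_by_type), total(europe_pmc_by_year))  # 110 110
    print(preprint_share(pubmed_by_type))                        # 4.6
    print(preprint_share(europe_pmc_by_type))                    # 32.7
```

Both breakdowns sum to the reported totals, and the tally shows that preprints make up a much larger share of the Europe PMC results than of the PubMed results.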

Among the 65 papers selected on PubMed since December 2022, we found a focus on the utilization of ChatGPT in various medical fields, including medical education, scientific writing, research, and diagnostic decision-making. These papers can be categorized into eight themes based on their abstracts: (1) medical writing, (2) medical education, (3) diagnostic decision-making, (4) public health, (5) scientific research, (6) ethical considerations of ChatGPT use, (7) ChatGPT's potential to automate medical tasks, and (8) criticism of ChatGPT's usage (summarized in Table 1). These themes are supported by the papers' topics, including ChatGPT's ability to provide medical information, its impact on medical education and research, its potential to replace human-written papers, its ethical implications, and its limitations in providing accurate diagnoses.

Table 1. Summary of key findings from the review.

| Theme | Key findings |
| --- | --- |
| ChatGPT's impact on medical education | Enhances learning, interpretation, and recall of medical information. Provides reliable information in specific medical fields. Concerns about undermining clinical reasoning. |
| Use in scientific research | Generates coherent and readable content. Raises ethical concerns regarding authorship, transparency, and accountability. Concerns about accuracy and promoting conspiracy theories. |
| Use in medical writing | Popular tool for generating medical content. Challenges traditional roles of authorship. Concerns about accuracy and ethical implications. |
| Ethical considerations | Raises concerns about authorship, accountability, and transparency. Potential for generating misleading or inaccurate information and promoting harmful beliefs. Importance of human oversight and transparency in its use. |
| Role in diagnostic decision-making | Improves efficiency and reduces errors in decision-making. Concerns about accuracy, perpetuating biases, and need for human oversight. |
| Role in public health | Provides reliable estimates in public health research. Concerns about potential misleading information and amplification of harmful beliefs. |
| Potential for automating medical tasks | Concerns about undermining the value of human expertise. Need for human oversight. |
| Criticisms of use | Concerns about accuracy and potential to promote conspiracy theories. Challenges traditional roles of authorship and transparency. Ethical concerns and potential to promote bias in decision-making. |

Implications of ChatGPT in Medical Writing

ChatGPT is becoming a popular tool for generating medical writing, leading to discussions on its reliability and ethical considerations. It is argued that ChatGPT may shape the future of medical writing, as it can generate papers with high coherence and readability [8]. However, there are concerns about its accuracy as it may produce inaccurate information or promote conspiracy theories. Additionally, the use of ChatGPT raises ethical concerns as it challenges the traditional role of authorship, transparency, and accountability in scientific research [9, 10].

ChatGPT and Medical Education

ChatGPT has been explored as a tool for medical education, particularly in enhancing learning, interpretation, and recall of medical information [11, 12]. Some studies have shown that ChatGPT can provide reliable information comparable to that of medical students in specific medical fields such as parasitology. However, it is crucial to ensure the standardization, validity, and reliability of the information fed to ChatGPT to maintain its effectiveness, particularly considering that its training data extend only to 2021.

Despite these potential benefits, medical students' dependence on ChatGPT may undermine their clinical reasoning [13]. Moreover, ChatGPT's responses may lack context or may not be tailored to the specific needs of individual learners, which is a limitation considering the complexity of the medical education process and the diverse learning needs and styles of students.

ChatGPT and Diagnostic Decision-making

ChatGPT has been used as an adjunct in diagnostic decision-making in radiology, cardiology, and urology, where it improved efficiency and reduced errors in decision-making. However, there are concerns about the accuracy of the generated output, the potential for ChatGPT to perpetuate biases in diagnoses, and the need for human oversight in its use [14-17]. As an AI language model, ChatGPT can only provide information based on the data it has been trained on, so its responses should be regarded as a source of information rather than a substitute for professional medical advice.

ChatGPT and Public Health

ChatGPT has been used in public health research to analyze population-level data on vaccine effectiveness, COVID-19 conspiracy theories, and compulsory vaccination. Some studies have shown that ChatGPT can provide reliable estimates in public health research, provided that the input data is valid and accurate. However, concerns persist about the potential for misleading information and the amplification of harmful beliefs if the input data is flawed or biased [18].

Implications of ChatGPT in Science and Research

ChatGPT has been explored as a tool for generating scholarly content, including scientific research and academic publishing, such as drafting articles, abstracts, and research proposals [19]. Some argue that ChatGPT can improve the efficiency and speed of scientific writing by streamlining the writing process and assisting with literature reviews, data interpretation, and hypothesis generation. However, there are concerns about its accuracy, the potential to undermine the value of human expertise, and challenges to traditional authorship and transparency in scientific research [20]. Discussions on the implications of ChatGPT in science and research include the need for ethical considerations, the importance of human oversight, and the potential to promote transparency and efficiency in scientific writing [21].

Ethical Considerations of ChatGPT

ChatGPT has raised ethical concerns regarding authorship, accountability, and transparency in scientific research and medical writing [9]. In the context of medical education, there are additional concerns about the potential for bias or misinformation, as AI models can amplify existing biases present in the training data, and there is a risk that ChatGPT's responses may perpetuate these biases. Therefore, it is crucial to ensure that ChatGPT is trained on unbiased and diverse data and that its responses are carefully monitored for accuracy and fairness. Furthermore, discussions on the ethical considerations of ChatGPT include the need for transparency in its use, the importance of human oversight, and the potential to promote bias in decision-making [8, 10].

ChatGPT's Potential to Automate Medical Tasks

ChatGPT, alongside other large language models, has raised discussions on the future of programming and its potential to replace programmers. ChatGPT has been used in generating code, and some studies have shown that it can improve the efficiency and speed of programming. However, there are concerns about the potential to undermine the value of human expertise in programming, the need for human oversight in its use, and the risk of the tool being hacked, compromising genuine data [20].

Criticism of ChatGPT Usage

Incorporating AI and large language models (LLMs) such as ChatGPT into healthcare necessitates meticulous attention to emerging challenges and concerns. While ChatGPT's adeptness in mimicking human dialogue presents opportunities for improving patient-provider interactions and potentially enhancing patient adherence to prescribed treatments, the model's limited grasp of context and nuance, along with its failure to consistently recognize its own limitations, highlights the perils of unsupervised deployment in clinical settings [22, 23].

Research examining ChatGPT's application in medical scholarship shows that the language model exhibits a disconcerting tendency to produce plausible yet erroneous content (i.e., hallucinations), including spurious references, thereby jeopardizing scientific integrity and the dissemination of accurate information [24]. Furthermore, despite the potential advantages of utilizing ChatGPT for peer-to-peer mental health support, its vulnerability to bias and to perpetuating stereotypes demands rigorous ethical and legal scrutiny, particularly when AI-assisted clinicians commit errors or patients eschew professional consultation in favor of AI-generated medical counsel [25]. Comprehensive training based on expert annotations, formal evaluations, and stringent regulatory safeguards are urgently needed to ensure alignment with clinical performance benchmarks and avert detrimental outcomes. These considerations emphasize the necessity for interdisciplinary collaboration and judicious incorporation of AI and LLMs into healthcare systems, prioritizing the augmentation of human expertise to pursue optimal patient outcomes [26].

Discussion

The positive aspects of the medical journal publications on ChatGPT include their in-depth analysis of ChatGPT and its capabilities and of the potential benefits of using chatbots in various industries [27]. The publications also provide comprehensive lists of references, which can be useful for further research. ChatGPT represents a significant leap forward in natural language processing (NLP), employing a large-scale, pre-trained neural network to generate human-like responses to user queries [28].

Furthermore, its ability to personalize responses to a broad spectrum of medical queries makes it an essential tool in the medical field. This is possible due to the accuracy and flexibility of ChatGPT achieved through transfer learning, a deep learning technique that fine-tunes responses for specific medical domains [29]. This approach allows ChatGPT to analyze the context in which a query is used and generate tailored responses for the specific user [30]. However, it is important to note that ChatGPT's responses are limited to the data it has been trained on, and its knowledge is based on patterns it has learned from large amounts of text data. Therefore, its responses may not always be up-to-date or accurate for every scenario.

Additionally, since ChatGPT is not a live system and its responses are generated based on the data it has learned from, it may not always have access to the latest information or developments in a given field, especially in rapidly evolving areas such as medicine or healthcare. Despite these limitations, one of the notable advantages of ChatGPT over previous NLP tools and other chatbots is the generation of human-like responses. Traditional chatbots rely on a linear, decision-tree approach, which provides only predefined answers to a limited set of questions.

In contrast, ChatGPT can respond to more extensive queries with personalized and natural-sounding responses, allowing for enhanced human-like interaction [31-33]. This is particularly critical in the medical field, where there is a need for clear and accurate communication of medical information to patients and healthcare professionals [34].

Furthermore, ChatGPT's ability to provide a wider range of interactions is another advantage over previous NLP tools and chatbots. Traditional chatbots are limited to predefined responses, whereas ChatGPT can provide more diverse responses to user queries. This is possible due to the neural network's size and complexity, which allow ChatGPT to process and analyze vast amounts of data [35]. As a result, ChatGPT can provide more informative and detailed responses, which can be especially useful in the medical field [36]. However, some negative aspects of the publications include the limited scope of their analysis and the lack of empirical evidence to support their claims [37]. Despite its numerous advantages, ChatGPT has some limitations and disadvantages compared to other available AI tools and chatbots in the medical field. One significant drawback is its high computational cost [33, 38]. ChatGPT employs a large-scale neural network, which requires substantial computational power and considerable memory, making it more challenging to deploy and integrate into smaller medical applications.

Moreover, the model's size can make fine-tuning for a specific domain difficult, leading to inconsistencies in the generated responses [39]. Another limitation of ChatGPT is its inability to incorporate external knowledge sources, which limits its accuracy in the medical field. In contrast to some other AI tools and chatbots, ChatGPT is not designed to extract information from external sources such as medical journals or textbooks, which can provide additional context and background knowledge [22]. Furthermore, because its original training data extend only to 2021 and it cannot integrate updated and reliable data, ChatGPT may not generate responses that incorporate the latest medical research or best practices [40]. Lastly, ChatGPT's responses can be inconsistent, leading to confusion and mistrust in the medical field [41]. As it is trained on a vast corpus of text, it can generate responses that are contradictory or inconsistent with medical guidelines. This can be especially problematic in the medical field, where accuracy and consistency are critical. Although ChatGPT can be fine-tuned to improve its accuracy, this process can be time-consuming and costly, making it less practical for smaller medical applications [23, 42]. Concerns have also been raised that ChatGPT's training datasets may introduce biases. Such biases could not only limit its capabilities but also produce counterfactual results [43, 44].

Limitations

This review has limitations related to its database search. Since ChatGPT is a relatively new topic, some preprints were included. Other papers that PubMed does not yet index could have been missed. Furthermore, we included articles published before November 2022 to reflect on AI chatbots, given that the available data on ChatGPT was still limited during our literature review.

Conclusions

In conclusion, this review offers a comprehensive summary of the early presence of ChatGPT in the medical literature during the first four months following its launch. It highlights the growing body of literature on the adaptations and healthcare responses to this transformative AI model. The publications on ChatGPT in medical indexing platforms provide a valuable overview of its potential applications in medical education, scientific research, medical writing, and diagnostic decision-making. However, they also raise ethical concerns, such as authorship, accountability, transparency, and the potential for bias or misinformation. To maintain the integrity of health science publications, specific criteria for including ChatGPT as a co-author should be established by publishers. Nevertheless, more research is needed to evaluate the effectiveness and ethical implications of using AI chatbots like ChatGPT across different disciplines.

Acknowledgments

We acknowledge the assistance of ChatGPT, an AI language model, in the writing process of this manuscript. ChatGPT was used to generate some of the text and provided helpful suggestions for improving the language and flow of the manuscript.

The authors have declared that no competing interests exist.

References

- 1. An overview of chatbot technology. Adamopoulou E, Moussiades L. Artificial Intelligence Applications and Innovations. 2020;584:373–383.

- 2. Jin B, Kruppa M. The backstory of ChatGPT creator. OpenAI. 2022. https://www.wsj.com/articles/chatgpt-creator-openai-pushes-new-strategy-to-gain-artificial-intelligence-edge-11671378475

- 3. How does ChatGPT perform on the United States Medical Licensing Examination? The implications of large language models for medical education and knowledge assessment [PREPRINT] Gilson A, Safranek CW, Huang T, Socrates V, Chi L, Taylor RA, Chartash D. JMIR Med Educ. 2023;9:0. doi: 10.2196/45312.

- 4. Reflection with ChatGPT about the excess death after the COVID-19 pandemic. Temsah MH, Jamal A, Al-Tawfiq JA. New Microbes New Infect. 2023;52:101103. doi: 10.1016/j.nmni.2023.101103.

- 5. ChatGPT - Reshaping medical education and clinical management. Khan RA, Jawaid M, Khan AR, Sajjad M. Pak J Med Sci. 2023;39:605–607. doi: 10.12669/pjms.39.2.7653.

- 6. Demiris G, Oliver D, Washington K. Behavioral Intervention Research in Hospice and Palliative Care. Elsevier; 2018.

- 7. Can artificial intelligence help for scientific writing? Salvagno M, Taccone FS, Gerli AG. Crit Care. 2023;27:75. doi: 10.1186/s13054-023-04380-2.

- 8. Can ChatGPT draft a research article? An example of population-level vaccine effectiveness analysis. Macdonald C, Adeloye D, Sheikh A, Rudan I. J Glob Health. 2023;13:1003. doi: 10.7189/jogh.13.01003.

- 9. Journals take up arms against AI-written text. Brainard J. Science. 2023;379:740–741. doi: 10.1126/science.adh2762. https://www.science.org/doi/pdf/10.1126/science.adh2762

- 10. AI for life: Trends in artificial intelligence for biotechnology. Holzinger A, Keiblinger K, Holub P, Zatloukal K, Müller H. N Biotechnol. 2023;74:16–24. doi: 10.1016/j.nbt.2023.02.001.

- 11. ChatGPT passing USMLE shines a spotlight on the flaws of medical education. Mbakwe AB, Lourentzou I, Celi LA, Mechanic OJ, Dagan A. PLOS Digit Health. 2023;2:0. doi: 10.1371/journal.pdig.0000205.

- 12. Initial impressions of ChatGPT for anatomy education [PREPRINT] Mogali SR. Anat Sci Educ. 2023. doi: 10.1002/ase.2261.

- 13. ChatGPT, an artificial intelligence chatbot, is impacting medical literature. Lubowitz JH. Arthroscopy. 2023;39:1121–1122. doi: 10.1016/j.arthro.2023.01.015.

- 14. Evaluating ChatGPT as an adjunct for radiologic decision-making. Rao A, Kim J, Kamineni M, Pang M, Lie W, Succi MD. medRxiv. 2023. doi: 10.1016/j.jacr.2023.05.003.

- 15. ChatGPT is shaping the future of medical writing but still requires human judgment [PREPRINT] Kitamura FC. Radiology. 2023:230171. doi: 10.1148/radiol.230171.

- 16. Artificial intelligence for clinical interpretation of bedside chest radiographs. Khader F, Han T, Müller-Franzes G, et al. Radiology. 2023;307:0. doi: 10.1148/radiol.220510.

- 17. ChatGPT and other large language models are double-edged swords. Shen Y, Heacock L, Elias J, Hentel KD, Reig B, Shih G, Moy L. Radiology. 2023:230163. doi: 10.1148/radiol.230163.

- 18. Are ChatGPT's knowledge and interpretation ability comparable to those of medical students in Korea for taking a parasitology examination?: a descriptive study. Huh S. J Educ Eval Health Prof. 2023;20:1. doi: 10.3352/jeehp.2023.20.1.

- 19. Correction to: can artificial intelligence help for scientific writing? Salvagno M, Taccone FS, Gerli AG. Crit Care. 2023;27:99. doi: 10.1186/s13054-023-04390-0.

- 20. ChatGPT listed as author on research papers: many scientists disapprove. Stokel-Walker C. Nature. 2023;613:620–621. doi: 10.1038/d41586-023-00107-z.

- 21. Open artificial intelligence platforms in nursing education: tools for academic progress or abuse? O'Connor S, ChatGPT. Nurse Educ Pract. 2023;66:103537. doi: 10.1016/j.nepr.2022.103537.

- 22. Evaluating the feasibility of ChatGPT in healthcare: an analysis of multiple clinical and research scenarios. Cascella M, Montomoli J, Bellini V, Bignami E. J Med Syst. 2023;47:33. doi: 10.1007/s10916-023-01925-4.

- 23. The potential impact of ChatGPT in clinical and translational medicine. Xue VW, Lei P, Cho WC. Clin Transl Med. 2023;13:0. doi: 10.1002/ctm2.1216.

- 24. Opportunities and risks of ChatGPT in medicine, science, and academic publishing: a modern Promethean dilemma. Homolak J. Croat Med J. 2023;64:1–3. doi: 10.3325/cmj.2023.64.1.

- 25. The rise of ChatGPT: Exploring its potential in medical education [PREPRINT] Lee H. Anat Sci Educ. 2023. doi: 10.1002/ase.2270.

- 26. Will ChatGPT transform healthcare? Nat Med. 2023;29:505–506. doi: 10.1038/s41591-023-02289-5.

- 27. Chatting about ChatGPT: how may AI and GPT impact academia and libraries? Lund BD, Wang T. Library Hi Tech News. 2023;40.

- 28. Is ChatGPT leading generative AI? What is beyond expectations? Aydın Ö, Karaarslan E. 2023:1–23.

- 29. Using ChatGPT in the medical field: a narrative. Pekşen A, ChatGPT. Clin Microbiol Infect. 2023;5.

- 30. A review of ChatGPT AI's impact on several business sectors. George S, George H, Martin G. PUIIJ. 2023;1:9–23.

- 31. A review of AI based medical assistant chatbot. Bulla C, Parushetti C, Teli A, Aski S, Koppad S. Research and Applications of Web Development and Design. 2020;2:1–14.

- 32. Healthcare ex Machina: are conversational agents ready for prime time in oncology? Bibault JE, Chaix B, Nectoux P, Pienkowsky A, Guillemasse A, Brouard B. Clin Transl Radiat Oncol. 2019;16:55–59. doi: 10.1016/j.ctro.2019.04.002.

- 33. An efficient search for context-based chatbots. Atiyah A, Jusoh S, Almajali S. 8th International Conference on Computer Science and Information Technology. 2018:125–130.

- 34. Interoperability of heterogeneous health information systems: a systematic literature review. Torab-Miandoab A, Samad-Soltani T, Jodati A, Rezaei-Hachesu P. BMC Med Inform Decis Mak. 2023;23:18. doi: 10.1186/s12911-023-02115-5.

- 35. Let's have a chat! A conversation with ChatGPT: technology, applications, and limitations. Shahriar S, Hayawi K. 2023.

- 36. Mitigating the burden of severe pediatric respiratory viruses in the post-COVID-19 era: ChatGPT insights and recommendations. Alhasan, Al-Tawfiq, Aljamaan, et al. Cureus. 2023;15:0. doi: 10.7759/cureus.36263.

- 37. "So what if ChatGPT wrote it?" Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Dwivedi, Kshetri, Hughes, et al. Int J Inf Manage. 2023;71:102642. https://pure.kfupm.edu.sa/en/publications/so-what-if-chatgpt-wrote-it-multidisciplinary-perspectives-on-opp

- 38. Thomas L. The pros and cons of healthcare chatbots. 2023. https://www.news-medical.net/health/The-Pros-and-Cons-of-Healthcare-Chatbots.aspx

- 39. A brief review of ChatGPT: its value and the underlying GPT technology [PREPRINT] Lund BD. 2023.

- 40. What is ChatGPT? OpenAI. ChatGPT General FAQ. 23 March 2023. https://help.openai.com/en/articles/6783457-what-is-chatgpt

- 41. Artificial hallucinations in ChatGPT: implications in scientific writing. Alkaissi H, McFarlane SI. Cureus. 2023;15:0. doi: 10.7759/cureus.35179.

- 42. The potential impact of ChatGPT in clinical and translational medicine. Baumgartner C. Clin Transl Med. 2023;13:0. doi: 10.1002/ctm2.1206.

- 43. The benefits and challenges of ChatGPT: an overview. Deng J, Lin Y. Front Artif Intell. 2022;2:81–83.

- 44. Performance of ChatGPT on USMLE: potential for AI-assisted medical education using large language models. Kung TH, Cheatham M, Medenilla A, et al. PLOS Digit Health. 2023;2:0. doi: 10.1371/journal.pdig.0000198.