Abstract

Background

Interest in internet‐based patient reported outcome measure (PROM) collection is increasing. The NHS myHealthE (MHE) web‐based monitoring system was developed to address the limitations of paper‐based PROM completion. MHE provides a simple and secure way for families accessing Child and Adolescent Mental Health Services to report clinical information and track their child's progress. This study aimed to assess whether MHE improves the completion of the Strengths and Difficulties Questionnaire (SDQ) compared with paper collection. Secondary objectives were to explore caregiver satisfaction and application acceptability.

Methods

A 12‐week single‐blinded randomised controlled feasibility pilot trial of MHE was conducted with 196 families accessing neurodevelopmental services in south London to examine whether electronic questionnaires are completed more readily than paper‐based questionnaires over a 3‐month period. Follow‐up process evaluation phone calls with a subset (n = 8) of caregivers explored system satisfaction and usability.

Results

MHE group assignment was significantly associated with an increased probability of completing an SDQ‐P in the study period (adjusted hazard ratio (HR) 12.1, 95% CI 4.7–31.0; p < .001). Of those caregivers who received the MHE invitation (n = 68), 69.1% completed an SDQ using the platform, compared to 8.8% in the control group (n = 68). The system was well received by caregivers, who cited numerous benefits of using MHE, for example, real‐time feedback and ease of completion.

Conclusions

MHE holds promise for improving PROM completion rates. Research is needed to refine MHE, evaluate large‐scale MHE implementation and cost‐effectiveness, and explore factors associated with differences in electronic questionnaire uptake.

Keywords: Child and adolescent mental health, patient‐reported outcome measures, remote monitoring, acceptability

Key Practitioner Message.

• Patient‐reported outcome measures (PROMs) are considered an important tool for measuring treatment success and outcomes in healthcare systems.

• Adherence to routine PROM guidance in Child and Adolescent Mental Health Services (CAMHS) remains low, largely driven by limitations associated with paper‐based data collection.

• Use of paperless (i.e. digital) monitoring systems as an alternative to traditional outcome measure delivery and collection is growing in healthcare settings.

• Remote questionnaire completion using the myHealthE (MHE) system is feasible and acceptable to caregivers of children accessing CAMHS in South London. Results suggest a 12‐fold increase in Strengths and Difficulties questionnaire reporting compared to standard practice.

• More research is required to understand whether MHE implementation affords similar improvements in remote PROM completion at scale and whether electronic questionnaire uptake is equal for different socio‐demographic and clinical populations.

Introduction

Patient‐reported outcome measures (PROMs) enable standardised and direct collection of a patient's perceived health status (Devlin & Appleby, 2010). Used routinely, PROMs are recognised as a clinically valuable method to measure patient‐ or caregiver‐rated symptoms, assess intervention success, and encourage shared patient and practitioner communication and decision making (Carlier, Meuldijk, Van Vliet et al., 2012; Lambert, Whipple, Hawkins et al., 2003; Soreide & Soreide, 2013). Child and Adolescent Mental Health Services (CAMHS) in England are encouraged to collect information about young people's presenting problems at entry to CAMHS and again within 6 months of receiving treatment (Department of Health (DoH), 2004, 2015; Morris et al., 2020) using PROMs. However, audit and survey studies demonstrate low guideline adherence, suggesting that CAMHS struggle to implement PROMs (Batty et al., 2013; Hall et al., 2013; Johnston & Gowers, 2005). Recent research investigating the electronic health records of 28,000 patients accessing CAMH services across South London identified paired use of the Strengths and Difficulties Questionnaire PROM (SDQ; Goodman, 1997), in only 8% of patients (Morris et al., 2020) and as few as 1% within specific clinical groups (Cruz et al., 2015).

Data collection using traditional paper questionnaires is associated with several time‐ and resource‐intensive steps, including printing, postage and processing returned outcome measures. Although paper questionnaires are practical and easy to complete, already‐burdened clinicians struggle with the administrative effort required to capture paper‐based questionnaires (Boswell, Kraus, Miller, & Lambert, 2015; Hall et al., 2014; Johnston & Gowers, 2005). Response data are also easily compromised: for example, users can omit questions, select multiple responses per item, and mark outside the question's tick‐box margins, leading to missing or unusable data (Ebert, Huibers, Christensen, & Christensen, 2018).

A rapid rise in internet use has paved the way for electronic questionnaires (Lyon, Lewis, Boyd, Hendrix, & Liu, 2016). Electronic PROMs (ePROMs) are reported to be less time consuming (Cella et al., 2015), require fewer administrative duties (Black, 2013; Coons et al., 2015; Eremenco, Coons, & Paty, 2014), cost less (Zuidgeest, Hendriks, Koopman, Spreeuwenberg, & Rademakers, 2011) and evoke more honest (Black & Ponirakis, 2000) and less erroneous responses; prompting patients to respond to all items within a questionnaire and only provide one response per question (Coons et al., 2015; Dillon et al., 2014; Eremenco et al., 2014; Jamison et al., 2001).

Feasibility trials of web‐based monitoring systems report positive outcomes relating to patient engagement, satisfaction and clinical value (Ashley et al., 2013; Barthel et al., 2016; Nordan et al., 2018; Schepers et al., 2017). However, less research is available on the development and application of ePROM systems in CAMHS. Interviews with mental health service users demonstrate positive attitudes toward the use of technology to assist traditional care (Borzekowski et al., 2009). However, patients have highlighted barriers to web‐based portal acceptability, including computer literacy, perceived usefulness, suitability, confidentiality, feedback and the effect application use has on their capacity to manage their condition and therapeutic relationships (Niazkhani, Toni, Cheshmekaboodi, Georgiou, & Pirnejad, 2020).

The myHealthE (MHE) system was built to enable remote PROM monitoring in CAMHS. This system aims to automate the communication, delivery and collection of ePROMs at predefined post‐treatment periods, providing caregivers with a safe and engaging way to share clinically relevant information about their child with their allocated care team with minimal human input. MHE architecture, development and implementation methodology, including key aspects of data safety and governance, have been described previously (Morris et al., 2021). MHE external web‐development was provided by Digital Marmalade (see Acknowledgements). Novel healthcare applications require feasibility and acceptability testing to ensure that the technology is understandable and can be used successfully by the target end‐user in real‐world clinical surroundings before conducting a large‐scale system evaluation (Steele Gray et al., 2016). As described in our protocol [(ISRCTN) 22581393], the primary purpose of this trial was to understand whether MHE use should be assessed in CAMHS on a wider scale. Therefore, we conducted a feasibility pilot study to evaluate whether introducing MHE increased completion of PROMs over the course of CAMHS treatment compared to standard data collection procedures, as measured by the proportion of ePROMs relative to paper questionnaires completed over a 3‐month period. Secondly, we aimed to assess caregiver satisfaction with the MHE system via individual caregiver phone consultations. Given resource constraints we were unable to assess the economic benefit of MHE compared to standard data acquisition as per our protocol. We hypothesised that MHE implementation would afford a substantial increase in completed standardised caregiver‐reported follow‐up data and caregiver satisfaction with CAMHS services compared to routine data collection.

Methods

Design

The current study comprised a single‐blinded parallel group feasibility pilot randomised controlled trial (RCT) of MHE. Outcome, sociodemographic and service level data were obtained from the Clinical Record Interactive Search (CRIS) system. CRIS contains de‐identified medical record history from the South London and Maudsley (SLaM) National Health Service Foundation Trust, one of Europe's largest mental health care organisations, which provided services to over 34,400 children and adolescents between 1 January 2008 and 1 December 2019 (Downs et al., 2019; Perera et al., 2016; Stewart et al., 2009). This research tool was established by SLaM's National Institute of Health Research Biomedical Research Centre (NIHR BRC) in 2008, to enable information retrieval for the purpose of approved research (Fernandes et al., 2013). Comprehensive electronic health record (EHR) information is available for SLaM services from 2006.

Setting and participants

The trial was conducted at Kaleidoscope, a community paediatric mental health centre, based in Lewisham, South London, between the 11 February 2019 and the 14 May 2019. Eligible participants were caregivers of CAMHS patients aged between 4 and 18 years old with a diagnosis of autism spectrum disorder (ASD). Patients were under the care of Lewisham Neuro‐developmental Team and had at least one SDQ present in their EHR. Caregivers were recruited if they had contact details (mobile phone number and/or email address) recorded in their child's EHR. The MHE data collection process was directly comparable to current paper‐based practice, except for its electronic basis and only collected data which was ordinarily requested from families by their treating clinical team. Caregivers did not have to provide informed consent to participate in this trial, but could choose to opt‐out via email or phone call to the trial research assistant (ACM). Recruitment was achieved through SLaM EHR screening. A Microsoft SQL script was developed and implemented by a senior member of the SLaM Clinical Systems Team and automatically provided an extract of eligible patients to the research team. Subsequently, computerised condition allocation and simple randomisation assigned eligible caregivers to either receive PROM outcome monitoring as usual (MAU; control group) or enrolment to the MHE platform (intervention group) on a 1:1 basis. Clinicians were blinded to condition allocation, and not informed which patients on their case load had been allocated to receive MHE or MAU.
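The computerised 1:1 allocation described above can be illustrated with a minimal sketch. The trial's allocation software is not described here, so the function name `allocate_1_to_1`, the seed value and the integer participant identifiers are all hypothetical. The sketch uses a seeded shuffle‐and‐split, which is one way of producing equal arm sizes; allocation by independent random draws would not guarantee equal arms.

```python
import random


def allocate_1_to_1(participant_ids, seed):
    """Split participants into two equal-sized arms (hypothetical helper).

    A seeded shuffle makes the allocation reproducible for audit purposes.
    """
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # First half goes to the intervention arm, the remainder to control.
    return {"MHE": sorted(shuffled[:half]), "MAU": sorted(shuffled[half:])}


# Illustrative identifiers only; these are not trial data.
arms = allocate_1_to_1(range(196), seed=2019)
print(len(arms["MHE"]), len(arms["MAU"]))
```

Because the allocation is produced without any clinician input, it can be generated and stored before clinicians see their case load, which is consistent with the blinding described above.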

Measures, sociodemographic and clinical characteristics

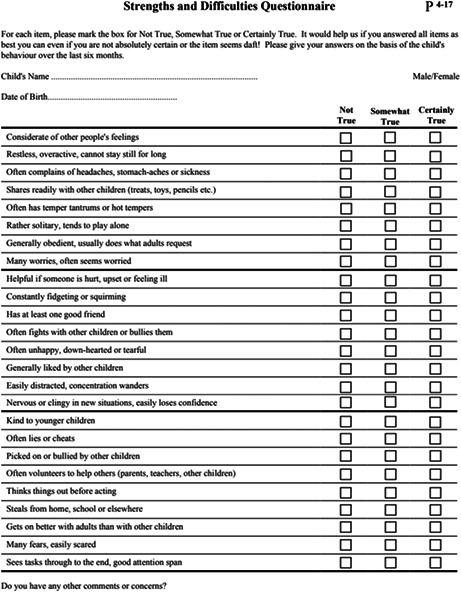

The primary outcome variable was time to completed follow‐up caregiver SDQ (SDQ‐P; electronic vs. paper SDQ‐P) within the 3‐month observation period. The SDQ‐P (Appendix 1) is a structured 25‐item questionnaire screening for symptoms of childhood emotional and behavioural psychopathology (Goodman, 1997). SLaM holds a sub‐licence to use the SDQ to support clinical service via NHS Digital Copyright Licensing Service. It is current clinical practice to collect SDQ‐P for young people, either by post before their first face‐to‐face meeting, or on site during a clinical appointment to inform their baseline assessment and again 6 months after starting treatment or upon discharge from CAMHS. Other variables extracted from CRIS are presented in Table S1.

Process evaluation: usability testing

To evaluate MHE usability, we contacted by telephone a subset of caregivers randomly assigned to MHE. This subset included a convenience sample of six caregivers who had engaged with MHE and two caregivers who had not. Caregivers were asked to access the MHE portal and complete the System Usability Scale (SUS; Brooke, 1996) to examine subjective usability. SUS comprises 10 statements reported on a 5‐point Likert scale ranging from strongly disagree to strongly agree. The total score is presented as a figure from 0 to 100, with a greater score reflecting higher usability. Mean SUS score was computed and ranked using Bangor, Kortum, and Miller's (2008) acceptability scale defined as ‘not acceptable’, ‘marginal’ and ‘acceptable’. Following administration of the SUS, caregivers were invited to ask questions about the platform or provide any further comments about their experience of using MHE.
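For readers unfamiliar with SUS scoring, the sketch below shows the standard scoring rule (odd‐numbered items contribute the response minus 1, even‐numbered items contribute 5 minus the response, and the sum is multiplied by 2.5). The function names are hypothetical, and the band cut‐offs (below 50, 50 to 70, above 70) are our reading of the Bangor et al. acceptability ranges and should be treated as an assumption rather than as the exact procedure used in this trial.

```python
def sus_score(responses):
    """Convert ten 1-5 Likert responses into a 0-100 SUS score."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs exactly ten responses, each between 1 and 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1   # odd items are positively worded
        else:
            total += 5 - response   # even items are negatively worded
    return total * 2.5


def acceptability_band(score):
    """Map a SUS score to an acceptability band (cut-offs are an assumption)."""
    if score > 70:
        return "acceptable"
    if score >= 50:
        return "marginal"
    return "not acceptable"


# Hypothetical respondent who answers "neutral" to every statement.
print(sus_score([3] * 10), acceptability_band(sus_score([3] * 10)))
```

Scores are computed per respondent and then averaged, so a mean score is compared against the bands rather than any single caregiver's score.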

Sample size

The current trial aimed to inform the development of a larger, adequately powered RCT by providing precise estimates of acceptability and feasibility, in addition to outcome variability. A threshold of clinical significance was decided a priori to be a 15% difference between MAU and MHE groups for SDQ‐P completion within 3 months, based on consensus from Kaleidoscope staff and previous research indicating an expected baseline SDQ‐P completion rate of 8% in the control group (Morris et al., 2020). For a fixed sample size design, the sample size required to achieve a power of 1 − β = .80 for the two‐tailed chi‐square test at level α = .05, under the prior assumptions, was 2 × 91 = 182 on a 1:1 allocation ratio. The power calculation was carried out using G*Power 3.1.7. To increase power and reduce the risk of chance imbalance between MHE and non‐MHE groups, we followed recent guidance on covariate adjustment within RCTs of moderate sample size (Kahan, Jairath, Doré, & Morris, 2014) and included in our analyses several factors which could potentially influence PROM completion (Morris et al., 2020).
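The sample size reasoning above can be approximated with a short sketch. It uses a common normal‐approximation formula for comparing two independent proportions, not the G*Power routine itself, and it assumes that the 15% threshold is an absolute difference (8% versus 23% completion). The function name `n_per_group` is hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def n_per_group(p_control, p_intervention, alpha=0.05, power=0.80):
    """Per-arm sample size for a two-sided test of two proportions (1:1)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-tailed critical value
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_intervention) / 2       # pooled proportion under H0
    variance_alt = (p_control * (1 - p_control)
                    + p_intervention * (1 - p_intervention))
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(variance_alt)) ** 2
    return ceil(numerator / (p_control - p_intervention) ** 2)


# Assumed inputs: 8% baseline completion and an absolute 15% improvement.
n = n_per_group(0.08, 0.23)
print(n, 2 * n)  # 91 182
```

Under these assumptions the approximation gives 91 per arm, matching the 2 × 91 = 182 reported above; other formulas (for example, with a continuity correction) would give somewhat larger figures.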

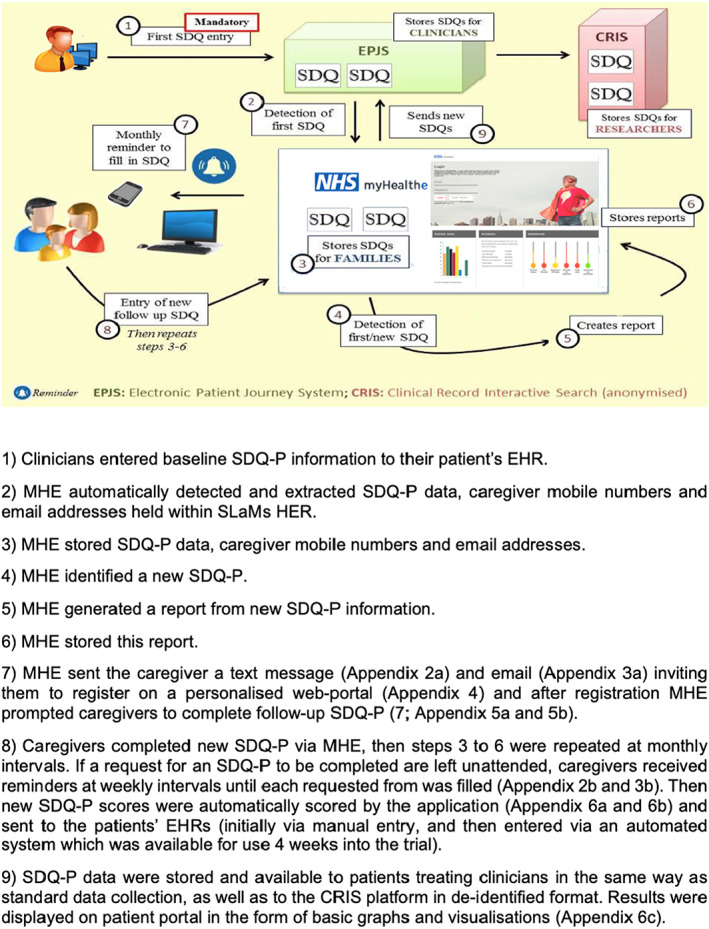

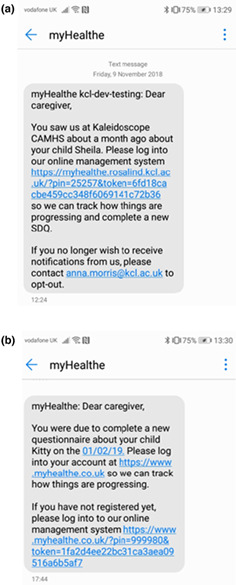

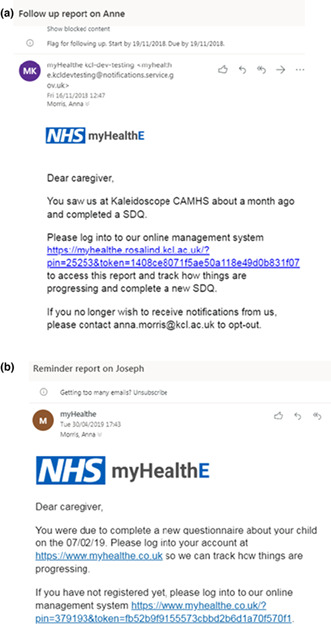

Intervention and procedure

Figure 1 provides an overview and description of the MHE data flow. All caregivers of patients receiving care from Lewisham Neurodevelopmental Team were contacted by letter. This letter informed them of potential changes to clinical information collection (i.e. electronic rather than paper questionnaires) and provided them with an information sheet and MHE information leaflet (Appendix 7a,b). After group assignment, caregivers allocated to receive MHE were contacted with a text (Appendix 2a) or email message (Appendix 3a) inviting them to set up a personalised web‐portal (Appendix 4) and complete an SDQ‐P (Appendix 5a,b). Caregivers were enrolled in the trial irrespective of whether they registered their MHE account. Caregivers who did not register were sent an automated weekly prompt to enrol and complete an SDQ‐P (see Appendix 2b and 3b). Once an online questionnaire was completed, caregivers were presented with infographics based on their responses (Appendix 6a–c), and they were then contacted monthly to provide follow‐up SDQ data. In the control group, caregivers were requested to complete a paper SDQ‐P face‐to‐face or by post according to clinician discretion. Apart from electronic SDQ‐P completion for the intervention group, treatment remained the same for all participants. Information collected through MHE was stored in the child's EHR and managed in the same way as all other confidential information. SDQ‐P data were checked daily by ACM and promptly entered into the patient's EHR. Post intervention, all participants received a letter thanking them for their participation.

Figure 1.

myHealthE data flow‐diagram

Strategy for analysis

All analyses were conducted using STATA version 14 (StataCorp., 2015). Analyses were conducted to determine differences in SDQ‐P completion between paper‐based (MAU, monitoring as usual) approaches and MHE. Analysis was performed subject to intention‐to‐treat‐like principles (intention‐to‐contact), whereby all participants were analysed according to their initially assigned intervention arm, irrespective of protocol adherence or deviations. Cox regression was used to examine the relationship between MAU versus MHE group assignment and SDQ‐P completion rates. Using a Kaplan–Meier curve, we checked whether group assignment (as predictor) satisfied the proportional hazards assumption. Our first analysis examined the association between treatment group only and SDQ‐P completion. The second model adjusted for demographic and clinical covariates captured in this trial. An inverse Kaplan–Meier curve was plotted to visualise the probability of SDQ‐P completion, comparing caregivers assigned to electronic and paper SDQ‐P. For the intervention group, the MHE website–SDQ‐P completion conversion rate was reported as a percentage, calculated as the number of caregivers who registered on MHE and subsequently completed a follow‐up SDQ‐P.
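To make the inverse Kaplan–Meier curve concrete, the following sketch computes 1 − S(t), the estimated cumulative probability of having completed an SDQ‐P by day t. It is an illustration only: the trial analyses were run in STATA, the function name is hypothetical, and the example times and event flags are invented rather than trial data.

```python
from collections import Counter


def inverse_kaplan_meier(times, events):
    """Return [(t, 1 - S(t))] at each distinct event time.

    times  -- days from trial start to completion or censoring
    events -- 1 if an SDQ-P was completed at that time, 0 if censored
    """
    at_risk = len(times)
    completions = Counter(t for t, e in zip(times, events) if e)
    exits = Counter(times)  # completions plus censorings at each time
    survival = 1.0
    curve = []
    for t in sorted(exits):
        d = completions.get(t, 0)
        if d:
            # Product-limit step: scale by the share still "not completed".
            survival *= 1 - d / at_risk
            curve.append((t, 1 - survival))
        at_risk -= exits[t]
    return curve


# Invented example: two completions, three caregivers censored.
for day, prob in inverse_kaplan_meier([7, 14, 14, 84, 84], [1, 1, 0, 0, 0]):
    print(day, round(prob, 2))
```

Computing the curve separately for each arm and plotting the two step functions gives the kind of comparison described above; caregivers who never complete a questionnaire are censored at the end of the observation period.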

Results

Enrolment and baseline characteristics

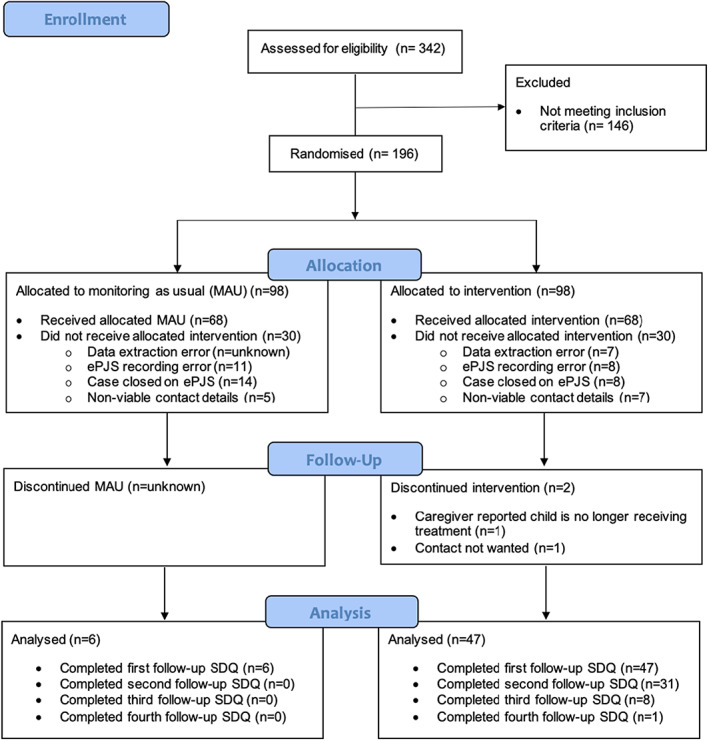

Within‐study participant flow and data collection rates are provided in Figure 2. A total of 342 caregivers were screened for eligibility, of whom 196 met the inclusion criteria. Of the 146 excluded, the majority were excluded due to lack of a baseline SDQ (n = 132). During eligibility screening, caregiver contact information was often missing or located in an area of the patients' EHRs different from expected; therefore, manual contact detail collection was carried out to enable digital communication via MHE. In some cases, neither a current parental mobile phone number nor an email address was found within the EHR (n = 14). Caregivers were enrolled and randomly assigned to the intervention group (MHE n = 98) and the control group (MAU n = 98). Of caregivers assigned to MHE and MAU, 30 (36.3%) did not receive notifications from MHE, with the text monitoring system logging that these mobile numbers were incorrect or not in use. The conversion rate from account registration to SDQ completion was 98% (47/48). Table S2 outlines account registration issues and opt‐out preferences reported by caregivers.

Figure 2.

Consort diagram presenting recruitment and rate of data collection for MHE and MAU

Table 1 presents sociodemographic and service characteristics for the whole sample. Participants were ethnically diverse, predominantly male and at the older end of the age range accepted by CAMHS.

Table 1.

Baseline patient and clinical characteristics of MHE versus MAU following randomisation (n = 196)

| Total sample (n = 196) | MAU (n = 98) | MHE (n = 98) |
|---|---|---|
| Gender, n (%) | | |
| Male | 74 (75.5) | 74 (75.5) |
| Mean age at trial start (SD) | 14.3 (2.7) | 14.3 (2.8) |
| Ethnicity, n (%) | | |
| White | 39 (39.8) | 46 (46.9) |
| Black | 35 (35.7) | 23 (23.5) |
| Asian | 2 (2.0) | 1 (1.0) |
| Mixed | 13 (13.3) | 15 (15.3) |
| Other or not stated | 9 (9.2) | 13 (13.3) |
| Level of deprivation, n (%) | | |
| 1st (least deprived) | 22 (23.2) | 25 (25.8) |
| 2nd | 21 (22.1) | 27 (27.8) |
| 3rd | 24 (25.2) | 24 (24.7) |
| 4th (most deprived) | 28 (29.5) | 21 (21.7) |
| Co‐morbid diagnosis, n (%) | | |
| ADHD | 48 (49.0) | 39 (39.8) |
| LD | 14 (14.3) | 11 (11.2) |
| Emotional disorder | 14 (14.3) | 17 (17.4) |
| Mean CGAS score (SD) | 53.1 (10.7) | 54.6 (8.8) |
| Mean days of active care (SD) | 592.1 (196.4) | 563.0 (210.4) |
| Mean attended F2F events (SD) | 6.0 (10.2) | 9.1 (21.0) |
| Mean baseline SDQ scores (SD) | | |
| Emotional | 5.3 (2.5) | 5.5 (2.8) |
| Conduct | 4.3 (2.3) | 4.5 (2.4) |
| Hyperactivity | 7.5 (2.3) | 7.7 (2.1) |
| Peer difficulties | 4.8 (2.3) | 5.2 (2.3) |
| Prosocial | 5.2 (2.6) | 5.4 (2.3) |
| Impact score | 5.9 (3.4) | 5.6 (2.9) |
| Total difficulties | 22.1 (5.5) | 23.0 (5.3) |

SD, standard deviation.

Electronic versus paper SDQ‐P collection

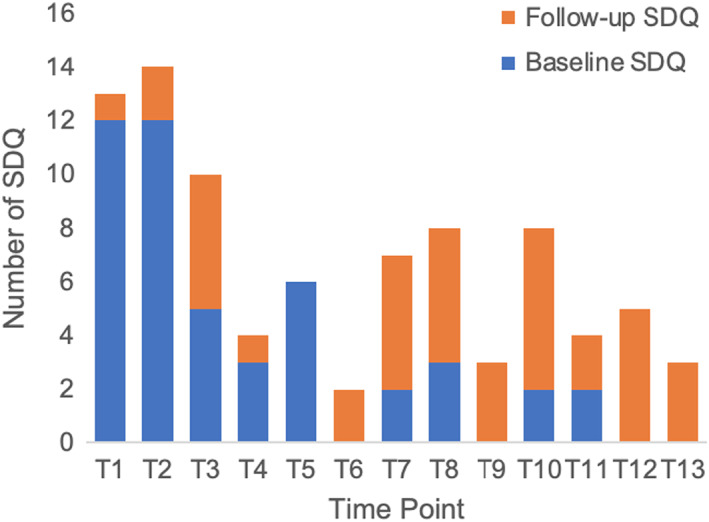

During the trial, 47 caregivers [47.9% of intention‐to‐contact (total n = 98), 69.1% of actually contacted (total n = 68)] registered an account on the MHE platform and completed at least one follow‐up SDQ‐P. In the corresponding timeframe, 6 caregivers assigned to receive MAU [6% of intention‐to‐contact (n = 98), 8.8% of actually contacted (n = 68)] completed at least one follow‐up SDQ‐P. A second follow‐up was due for 43 of the MHE cohort by the end of the study period (at least 1 month had elapsed since completing their first online SDQ‐P), and 31 of these caregivers (72%) completed it. Overall, 87 follow‐up SDQ‐Ps were completed via the MHE platform; Figure 3 provides a breakdown of SDQ‐P completion within each 7‐day notification reminder period.

Figure 3.

Baseline and follow‐up SDQ completion within each 7‐day notification period

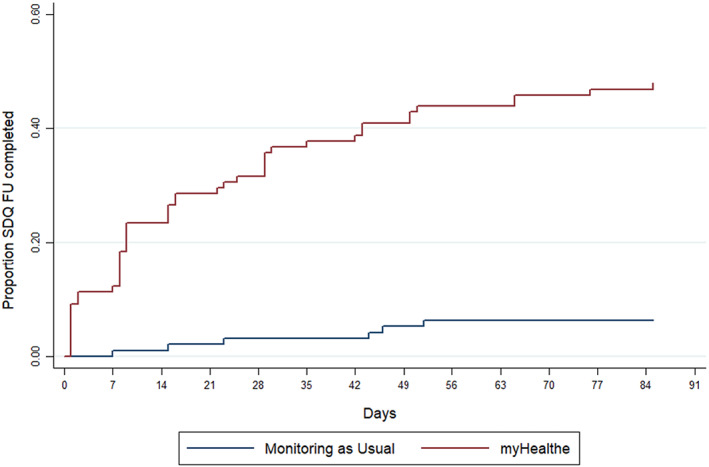

The intention‐to‐contact (ITC) Cox regression models are presented in Table 2 and graphically depicted in Figure 4. MHE group assignment was significantly associated with an increased probability of completing an SDQ‐P in the study period (adjusted hazard ratio (HR) 12.1, 95% CI 4.7–31.0; p < .001). This was observed after controlling for potentially confounding socio‐demographic characteristics and clinical factors including gender, age at the start of the trial, baseline CGAS (Schaffer et al., 1983) and SDQ profiles, co‐morbid ADHD, learning disability, and emotional disorders, as well as number of days of active care and attended face‐to‐face events. No significant interaction was found between ethnic status (white and non‐white ethnic groups) and SDQ‐P completion by group.

Table 2.

Intention‐to‐contact Cox regression analysis of the relationship between electronic versus paper‐based SDQ‐P assignment and SDQ‐P completion rates (n = 195); the adjusted model takes participant characteristics into account

| | Crude HR (95% CI) | p‐Value | Adjusted model HR (95% CI) | p‐Value |
|---|---|---|---|---|
| Group (MHE vs. MAU) | 10.1 (4.3–23.6) | <.01 | 12.1 (4.7–30.9) | <.01 |
| Gender | | | | |
| Male | 0.4 (0.2–0.8) | .02 | | |
| Ethnicity | | | | |
| White | Reference | – | | |
| Black | 0.5 (0.2–1.2) | .13 | | |
| Asian a | na | na | | |
| Mixed | 1.1 (0.4–2.5) | .88 | | |
| Other or not stated | 0.5 (0.2–1.4) | .16 | | |
| Age at trial start | 1.0 (0.9–1.1) | .90 | | |
| Co‐morbid diagnosis | | | | |
| ADHD | 0.8 (0.4–1.6) | .47 | | |
| LD | 1.5 (0.6–4.0) | .44 | | |
| Emotional disorder | 2.5 (1.0–5.8) | .04 | | |
| Days of active care | 1.0 (1.0–1.0) | .77 | | |
| Attended F2F events | 1.0 (1.0–1.0) | .61 | | |
| Baseline SDQ scores | | | | |
| Emotional | 1.0 (0.9–1.2) | .61 | | |
| Conduct | 1.0 (0.9–1.1) | .85 | | |
| Hyperactivity | 1.1 (1.0–1.3) | .17 | | |
| Peer difficulties | 1.0 (0.9–1.2) | .94 | | |
| Prosocial | 1.1 (0.9–1.2) | .94 | | |

a Covariate dropped due to <5 cell size value.

Figure 4.

Kaplan‐Meier curve illustrating the probability of SDQ‐P completion within the study period for caregivers assigned to complete electronic compared to paper SDQ‐P

Caregiver perspective of MHE implementation

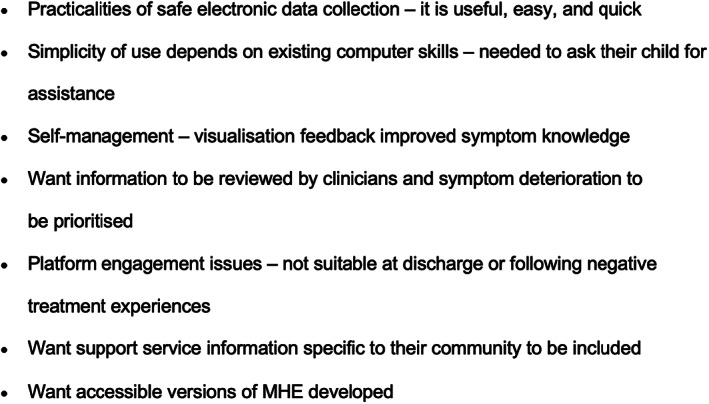

A total of eight SUS questionnaires and usability interviews were completed. The mean SUS score for users of the website was 78/100, indicating that the application was ‘acceptable’ to users. Figure 5 provides a summary of caregivers' comments regarding MHE.

Figure 5.

Summary of patient feedback following MHE use

Discussion

This feasibility pilot showed that the collection of electronic PROMs using web‐based technology is feasible in CAMHS practice. Implementation of MHE, a novel remote monitoring platform, afforded considerable rates of SDQ‐P completion (69%) for caregivers who received an invitation to register for MHE compared to 12% paper‐based SDQ‐P completion. By way of contrast, a comprehensive audit of over 28,000 young people accessing CAMHS found paired SDQ‐P completion rates of 8%. By automating unassisted delivery of PROMs at specified time points, MHE may address several fundamental challenges inherent to paper‐based information gathering in busy clinical settings, such as processing burden, lack of supportive infrastructure and poor administration guideline knowledge (Boswell et al., 2015; Duncan & Murray, 2012; Waldron, Loades, & Rogers, 2018; Wolpert, 2014).

In post‐trial interviews caregivers rated MHE as ‘acceptable’, suggesting good levels of usability. Many caregivers favoured the ease and speed of using MHE to complete outcome measures compared to paper‐based methods, while barriers included how readily information provided through the platform was used by clinicians to identify children with worsening symptoms and data privacy concerns. However, only a small number of caregivers were contacted to provide their views on the system; therefore, it is possible that other undetected usability issues influenced the results of this trial, for example: language, literacy level, disability, and cultural sensitivity difficulties (Bodie & Dutta, 2008; Kontos, Bennett, & Viswanath, 2007; Lindsay, Bellaby, Smith, & Baker, 2008; Morey, 2007).

Historically, low engagement with eHealth has been attributed to unequal internet access (Latulippe, Hamel, & Giroux, 2017), but this did not appear to account for non‐engagement in the current trial. This finding is likely to reflect the substantial increase in the availability of mobile phones and other internet‐enabled mobile technology (Pew Research Center, 2019), reduced cost of internet subscriptions and widening availability of free public Wi‐Fi (Kontos et al., 2007; McAuley, 2014). However, despite physical internet access, end‐users may not have the skills necessary to fully engage with digital technologies (Hargittai, 2002). This was the case for several caregivers, who reported that their limited information technology capabilities and knowledge made it hard to navigate MHE without assistance from family members. This disparity may deepen as digital platforms are increasingly integrated into routine clinical practice (Van Dijk, 2005) and should be iteratively considered during the design and implementation of emerging digital health platforms, paying particular attention to the role of co‐design (Andersen, 2019).

Strengths and limitations

This trial was conducted in a naturalistic manner independent of clinical practice to ensure that clinicians' behaviour (for example, promoting MHE use) did not inflate observed rates of engagement. Moreover, the research was conducted in a socio‐demographically diverse geographical area, resulting in a broad range of caregivers testing the system. Finally, condition allocation was computerised, meaning that all participants were instantly allocated to receive either MAU or MHE. Therefore, it is unlikely that allocation bias influenced the trial findings.

Limitations include the fact that families only had the opportunity to enrol in the trial if they had a baseline SDQ present in their child's EHR, which relies on this being initiated by a clinician in the first instance. In the future, using MHE to capture baseline and follow‐up SDQ‐P data may afford a more realistic assessment of ePROM feasibility. It is also possible that neurodevelopmental team service users perceived the SDQ‐P as less useful than a disorder‐specific questionnaire, which may have resulted in lower rates of completion. As we were primarily focused on developing an interface for parents, co‐design sessions with clinicians were limited. Further work is needed to examine what is potentially lost using ePROMs compared with pencil‐and‐paper approaches, and how this could be mitigated by improved design within later versions of myHealthE. Lastly, owing to resource constraints, phone interviews were conducted after the trial ended, meaning that responses could be influenced by recall bias.

Future research and MHE refinement

The next phase of this research is to extend this feasibility study across multiple healthcare sites, other child mental health specialties and additional pertinent PROMs. Plans are already in place to extend MHE introduction to national and specialist teams and further SLaM CAMHS teams across Southwark, Lambeth and Croydon. Recent funding secured from the National Institute for Health Research (NIHR; https://fundingawards.nihr.ac.uk/award/RP‐PG‐0618‐20003) and the Medical Research Council (MRC) Mental Health Pathfinder award to King's College London has enabled MHE to be converted into a scalable NHS software as a service (SaaS) product, with a roadmap to implement MHE across four other Trusts in England. Collecting data from a larger number of caregivers will enable us to explore the effects of various patient factors on ePROM engagement. Research investigating differential uptake in PROM collection suggests that several patient characteristics, including ethnicity and social deprivation, are associated with inequitable PROM use (Latulippe et al., 2017; Morris et al., 2020). While this was not the case in the current small‐scale trial, it is essential that further research is conducted to determine whether these systems sustain possible health inequalities with larger sample sizes. System refinements are also required to enable alternative methods for acquiring and inputting caregiver contact information to circumvent the difficulties encountered with automatic data extraction in this study.

In‐depth interviews are needed to explore how ePROM platforms can be adapted to meet different service user and clinician needs. Qualitative work is needed to provide more general insights into: (a) caregivers' reasons for deciding to complete or not complete electronic questionnaires; (b) clinicians' perspectives on how digital collection systems and analysis of outcomes could enhance decision making at individual level; (c) clinicians' and caregivers' views on the concept, design and delivery of MHE, the barriers to and facilitators of MHE implementation, potential harms, and study protocol refinements (e.g., platform design and frequency of questionnaire completion); and (d) young people's perspectives on whether MHE could be adapted as a self‐reported outcome collection system and, if trialled, how it should be evaluated.

Conclusion

Routine PROM collection is essential for delivering personalised health services that reflect clinical need from the perspective of young people and their families. This study supports the feasibility of a remote PROM monitoring platform within a real‐world outpatient setting providing treatment to a demographically diverse population, suggesting that web platforms may provide an acceptable and convenient method to maintain and scale up improved patient monitoring, service‐user communication, and service evaluation. A future multisite trial of MHE is required to evaluate this e‐system at scale.

Ethical information

Approval for the study was given by the South London and Maudsley NHS Foundation Trust CAMHS Clinical Audit, Service Evaluation and Quality Improvement Committee (approval date: 07/04/2017). Extraction and analysis of deidentified outcome data were carried out using the CRIS platform and security model approved by Oxford Research Ethics Committee C (reference 18/SC/0372).

Trial Registration: International Standard Randomised Controlled Trial Number (ISRCTN) 22581393; https://doi.org/10.1186/ISRCTN22581393.

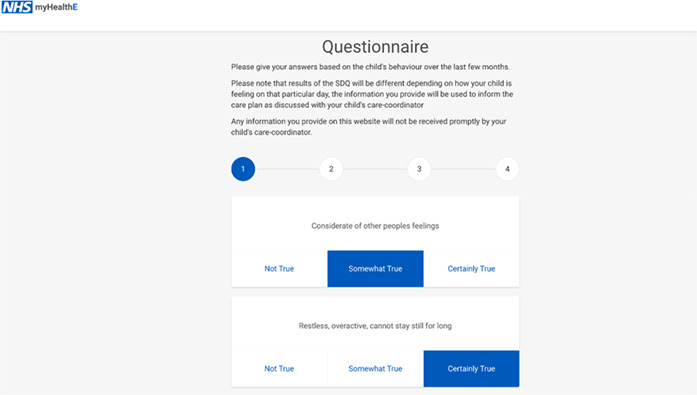

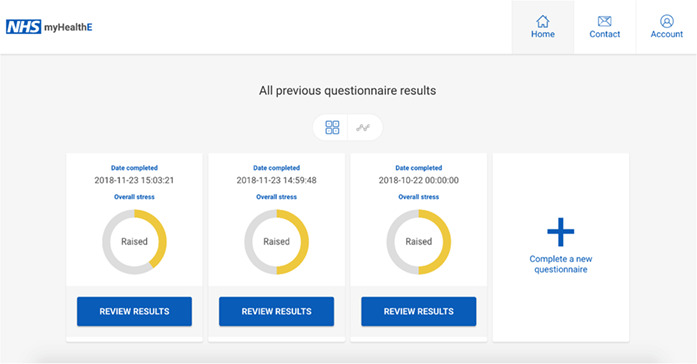

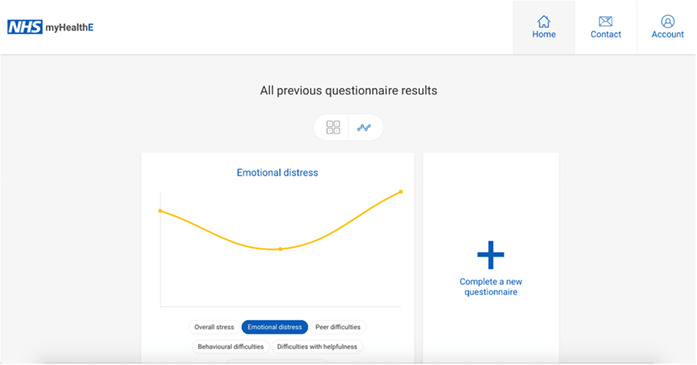

(b) Electronic Strengths and Difficulties Questionnaire

(b) Strengths and Difficulties Questionnaire previous results summary

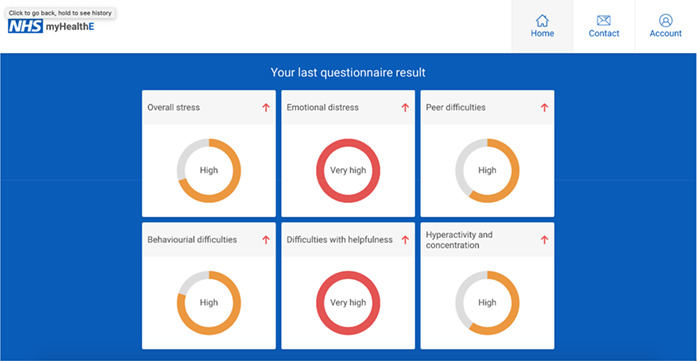

(c) Strengths and Difficulties Questionnaire results visualisation

Supporting information

Table S1. List of socio‐demographic and clinical variables extracted from CRIS.

Table S2. Description of caregiver opt‐out preferences and technical difficulties encountered at MHE registration.

Acknowledgements

Z.I., M.P., R.S., M.H., A.P., R.J.B.D., E.S. and J.D. are affiliated with the National Institute of Health Research (NIHR) Biomedical Research Centre for Mental Health (BRC) Nucleus at the South London and Maudsley (SLaM) NHS Foundation Trust and Institute of Psychiatry, Psychology and Neuroscience (IoPPN), King's College London (KCL). Additionally, the Clinical Record Interactive Search (CRIS) is supported by the NIHR BRC at the SLaM NHS Foundation Trust and KCL. A.C.M. is supported by the Guy's and St Thomas' (GSST) Charity. Z.I. and R.J.B.D. are additionally supported by the NIHR University College London Hospitals BRC. R.J.B.D. is further supported by (a) Health Data Research (HDR) UK and (b) The BigData@Heart Consortium under grant agreement No. 116074. M.H. reports funding from the NIHR. A.S. is supported by the Intramural Research Program of the National Institute of Mental Health National Institutes of Health (NIH) (Grant No. ZIA‐MH002957‐01). R.S. is additionally part‐funded by (a) a Medical Research Council (MRC) Mental Health Data Pathfinder Award to King's College London; (b) an NIHR Senior Investigator Award; (c) the NIHR Applied Research Collaboration South London (NIHR ARC South London) at King's College Hospital NHS Foundation Trust. The views expressed are those of the authors and not necessarily those of the NIHR or the Department of Health and Social Care. M.H. declares funding from the Innovative Medicines Initiative for the RADAR‐CNS consortium which includes contributions from Janssen, MSD, UCB, Biogen and Lundbeck. A.P. is partially supported by the NIHR (NF‐SI‐0617‐10120). E.S. is supported from the NIHR BRC at SLaM NHS Foundation Trust (IS‐BRC‐1215‐20018), the NIHR through a programme grant (RP‐PG‐1211‐20016) and Senior Investigator Award (NF‐SI‐0514‐10073 and NF‐SI‐0617‐10120), the European Union Innovative Medicines Initiative (EU‐IMI 115300), Autistica (7237), MRC (MR/R000832/1, MR/P019293/1), the Economic and Social Research Council (ESRC 003041/1) and GSST Charity (GSTT EF1150502) and the Maudsley Charity. J.D. is supported by NIHR Clinician Science Fellowship award (CS‐2018‐18‐ST2‐014) and has received support from a Medical Research Council (MRC) Clinical Research Training Fellowship (MR/L017105/1) and Psychiatry Research Trust Peggy Pollak Research Fellowship in Developmental Psychiatry. The authors give thanks to the families and Kaleidoscope staff who participated in this trial and the MHE digital development team – Digital Marmalade (see https://www.digitalmarmalade.co.uk/) – with particular thanks to Andy McEniry and Jeremy Jones. J.D. conceived the trial aims, supervised data analysis and writing. A.C.M. led on data analysis and manuscript writing. M.P. assisted with study design and data acquisition. All authors reviewed and provided critical revisions to the manuscript and approved the final version of the manuscript. The remaining authors declare that they have no competing or potential conflicts of interest.

Appendix 1.

Strengths and Difficulties Questionnaire

Appendix 2.

(a) MHE invitation text message; (b) MHE reminder text message

Appendix 3.

(a) MHE invitation email; (b) MHE reminder email

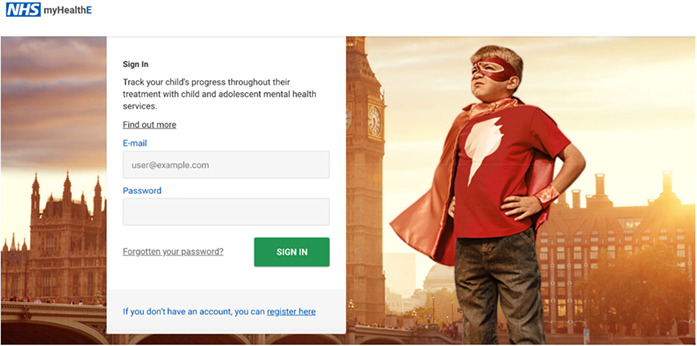

Appendix 4.

MHE login page

Appendix 5.

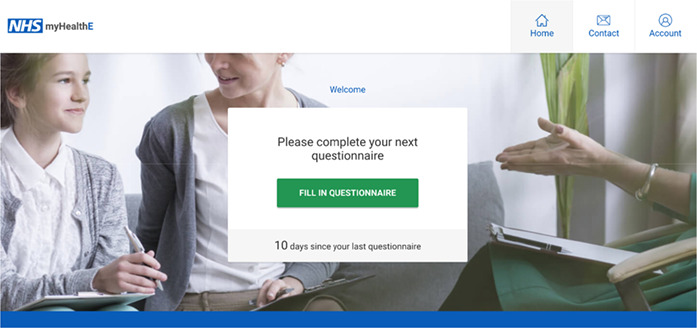

(a) MHE home page (when questionnaire is due to be completed)

Appendix 6.

(a) Strengths and Difficulties Questionnaire results summary

Appendix 7.

(a) MHE feasibility trial caregiver information sheet

(b) MHE feasibility trial caregiver leaflet

References

- Ashley, L., Jones, H., Thomas, J., Newsham, A., Downing, A., Morris, E., … & Wright, P. (2013). Integrating patient reported outcomes with clinical cancer registry data: A feasibility study of the electronic Patient‐Reported Outcomes from Cancer Survivors (ePOCS) system. Journal of Medical Internet Research, 15, e230.

- Andersen, T.O. (2019). Large‐scale and long‐term co‐design of digital health. Interactions, 26, 74–77.

- Bangor, A., Kortum, P.T., & Miller, J.T. (2008). An empirical evaluation of the system usability scale. International Journal of Human‐Computer Interaction, 24, 574–594.

- Barthel, D., Fischer, K., Nolte, S., Otto, C., Meyrose, A.‐K., Reisinger, S., … & Ravens‐Sieberer, U. (2016). Implementation of the Kids‐CAT in clinical settings: A newly developed computer‐adaptive test to facilitate the assessment of patient‐reported outcomes of children and adolescents in clinical practice in Germany. Quality of Life Research, 25, 585–594.

- Batty, M.J., Moldavsky, M., Foroushani, P.S., Pass, S., Marriott, M., Sayal, K., & Hollis, C. (2013). Implementing routine outcome measures in child and adolescent mental health services: From present to future practice. Child and Adolescent Mental Health, 18, 82–87.

- Black, M.M., & Ponirakis, A. (2000). Computer‐administered interviews with children about maltreatment: Methodological, developmental, and ethical issues. Journal of Interpersonal Violence, 15, 682–695.

- Black, N. (2013). Patient reported outcome measures could help transform healthcare. British Medical Journal, 346, f167.

- Bodie, G.D., & Dutta, M.J. (2008). Understanding health literacy for strategic health marketing: eHealth literacy, health disparities, and the digital divide. Health Marketing Quarterly, 25, 175–203.

- Borzekowski, D.L.G., Leith, J., Medoff, D.R., Potts, W., Dixon, L.B., Balis, T., … & Himelhoch, S. (2009). Use of the Internet and other media for health information among clinic outpatients with serious mental illness. Psychiatric Services, 60, 1265–1268.

- Boswell, J.F., Kraus, D.R., Miller, S.D., & Lambert, M.J. (2015). Implementing routine outcome monitoring in clinical practice: Benefits, challenges, and solutions. Psychotherapy Research, 25, 6–19.

- Brooke, J. (1996). SUS: A “quick and dirty” usability scale. In Jordan P.W., Thomas B., Weerdmeester B.A., & McClelland I.L. (Eds.), Usability evaluation in industry (pp. 189–194). London: Taylor & Francis.

- Cella, D.F., Hahn, E.A., Jensen, S.E., Butt, Z., Nowinski, C.J., Rothrock, N., & Lohr, K.N. (2015). Patient‐reported outcomes in performance measurement. Research Triangle Park. Available from: https://www.rti.org/rti‐press‐publication/patient‐reported‐outcomes

- Coons, S.J., Eremenco, S., Lundy, J.J., O'Donohoe, P., O'Gorman, H., & Malizia, W. (2015). Capturing patient‐reported outcome (PRO) data electronically: The past, present, and promise of ePRO measurement in clinical trials. Patient, 8, 301–319.

- Carlier, I.V., Meuldijk, D., Van Vliet, I.M., Van Fenema, E., Van der Wee, N.J., & Zitman, F.G. (2012). Routine outcome monitoring and feedback on physical or mental health status: Evidence and theory. Journal of Evaluation in Clinical Practice, 18(1), 104–110.

- Cruz, L.F.D.L., Simonoff, E., McGough, J.J., Halperin, J.M., Arnold, L.E., & Stringaris, A. (2015). Treatment of children with attention‐deficit/hyperactivity disorder (ADHD) and irritability: Results from the multimodal treatment study of children with ADHD (MTA). Journal of the American Academy of Child & Adolescent Psychiatry, 54, 62–70.e3.

- Department of Health. (2004). National service framework for children, young people and maternity services: Core standards. Available from: https://www.gov.uk/government/publications/national‐service‐framework‐children‐young‐people‐and‐maternity‐services

- Department of Health. (2015). Future in mind: Promoting, protecting and improving our children and young people's mental health and wellbeing. Available from: https://www.gov.uk/government/publications/improving‐mental‐health‐servicesfor‐young‐people

- Devlin, N.J., & Appleby, J. (2010). Getting the most out of PROMS. Putting health outcomes at the heart of NHS decision‐making. London, UK: The King's Fund.

- Dillon, D.G., Pirie, F., Rice, S., Pomilla, C., Sandhu, M.S., Motala, A.A., … African Partnership for Chronic Disease Research (APCDR). (2014). Open‐source electronic data capture system offered increased accuracy and cost‐effectiveness compared with paper methods in Africa. Journal of Clinical Epidemiology, 67, 1358–1363.

- Downs, J., Ford, T., Stewart, R., Epstein, S., Shetty, H., Little, R., … & Hayes, R. (2019). An approach to linking education, social care and electronic health records for children and young people in South London: A linkage study of child and adolescent mental health service data. BMJ Open, 9, e024355.

- Duncan, E.A., & Murray, J. (2012). The barriers and facilitators to routine outcome measurement by allied health professionals in practice: A systematic review. BMC Health Services Research, 12, 96.

- Ebert, J.F., Huibers, L., Christensen, B., & Christensen, M.B. (2018). Paper‐ or web‐based questionnaire invitations as a method for data collection: Cross‐sectional comparative study of differences in response rate, completeness of data, and financial cost. Journal of Medical Internet Research, 23, e24.

- Eremenco, S., Coons, S.J., & Paty, J. (2014). PRO data collection in clinical trials using mixed modes: Report of the ISPOR PRO mixed modes good research practices task force. Value in Health, 17, 501–516.

- Fernandes, A.C., Cloete, D., Broadbent, M.T., Hayes, R.D., Chang, C.K., Jackson, R.G., … & Callard, F. (2013). Development and evaluation of a de‐identification procedure for a case register sourced from mental health electronic records. BMC Medical Informatics and Decision Making, 13, 71.

- Goodman, R. (1997). The Strengths and Difficulties Questionnaire: A research note. Journal of Child Psychology and Psychiatry, 38, 581–586.

- Hall, C.L., Moldavsky, M., Baldwin, L., Marriott, M., Newell, K., Taylor, J., … & Hollis, C. (2013). The use of routine outcome measures in two child and adolescent mental health services: A completed audit cycle. BMC Psychiatry, 13, 270.

- Hall, C.L., Taylor, J., Moldavsky, M., Marriott, M., Pass, S., Newell, K., … & Hollis, C. (2014). A qualitative process evaluation of electronic session‐by‐session outcome measurement in child and adolescent mental health services. BMC Psychiatry, 14, 113.

- Hargittai, E. (2002). Second‐level digital divide: Differences in people's online skills. First Monday, 7(4). Available from: http://firstmonday.org/article/view/942/864

- Jamison, R.N., Raymond, S.A., Levine, J.G., Slawsby, E.A., Nedeljkovic, S.S., & Katz, N.P. (2001). Electronic diaries for monitoring chronic pain: 1‐Year validation study. Pain, 91, 277–285.

- Johnston, C., & Gowers, S. (2005). Routine outcome measurement: A survey of UK child and adolescent mental health services. Child and Adolescent Mental Health, 10, 133–139.

- Kahan, B.C., Jairath, V., Doré, C.J., & Morris, T. (2014). The risks and rewards of covariate adjustment in randomized trials: An assessment of 12 outcomes from 8 studies. Trials, 15, 139.

- Kontos, E.Z., Bennett, G.G., & Viswanath, K. (2007). Barriers and facilitators to home computer and internet use among urban novice computer users of low socioeconomic position. Journal of Medical Internet Research, 9, e31.

- Lambert, M.J., Whipple, J.L., Hawkins, E.J., Vermeersch, D.A., Nielsen, S.L., & Smart, D.W. (2003). Is it time for clinicians to routinely track patient outcome? A meta‐analysis. Clinical Psychology: Science and Practice, 10(3), 288–301.

- Latulippe, K., Hamel, C., & Giroux, D. (2017). Social health inequalities and eHealth: A literature review with qualitative synthesis of theoretical and empirical studies. Journal of Medical Internet Research, 27, e136.

- Lindsay, S., Bellaby, P., Smith, S., & Baker, R. (2008). Enabling healthy choices: Is ICT the highway to health improvement? Health (London, England), 12, 313–331.

- Lyon, A.R., Lewis, C.C., Boyd, M.R., Hendrix, E., & Liu, F. (2016). Capabilities and characteristics of digital measurement feedback systems: Results from a comprehensive review. Administration and Policy in Mental Health and Mental Health Services Research, 43, 441–466.

- McAuley, A. (2014). Digital health interventions: Widening access or widening inequalities? Public Health, 128, 1118–1120.

- Morey, O.T. (2007). Digital disparities: The persistent digital divide as related to health information access on the internet. Journal of Consumer Health on the Internet, 11, 23–41.

- Morris, A.C., Ibrahim, Z., Moghraby, O.S., Stringaris, A., Grant, I.M., Zalwwski, L., … & Downs, J. (2021). Moving from development to implementation of digital innovations within the NHS: myHealthE, a remote monitoring system for tracking patient outcomes in child and adolescent mental health services. medRxiv. 10.1101/2021.06.09.21257998

- Morris, A.C., Macdonald, A., Moghraby, O., Stringaris, A., Hayes, R.D., Simonoff, E., … & Downs, J.M. (2020). Sociodemographic factors associated with routine outcome monitoring: A historical cohort study of 28,382 young people accessing child and adolescent mental health services. Child and Adolescent Mental Health, 16, 56–64.

- Niazkhani, Z., Toni, E., Cheshmekaboodi, M., Georgiou, A., & Pirnejad, H. (2020). Barriers to patient, provider, and caregiver adoption and use of electronic personal health records in chronic care: A systematic review. BMC Medical Informatics and Decision Making, 20, 153.

- Nordan, L., Blanchfield, L., Niazi, S., Sattar, J., Coakes, C.E., Uitti, R., … & Spaulding, A. (2018). Implementing electronic patient‐reported outcomes measurements: Challenges and success factors. BMJ Quality and Safety, 27, 852–856.

- Perera, G., Broadbent, M., Callard, F., Chin‐Kuo, C., Downs, J., Dutta, R., … & Stewart, R. (2016). Cohort profile of the South London and Maudsley NHS Foundation Trust Biomedical Research Centre (SLaM BRC) Case Register: Current status and recent enhancement of an electronic mental health record‐derived data resource. BMJ Open, 6, e008721.

- Pew Research Center. (2019). Smartphone ownership is growing rapidly around the world, but not always equally. Available from: https://www.pewresearch.org/global/2019/02/05/smartphone‐ownership‐is‐growing‐rapidly‐around‐the‐world‐but‐not‐always‐equally/

- Schepers, S., Sint Nicolaas, S., Maurice‐Stam, H., Van Dijk‐Lokkart, E., Van den Bergh, E., De Boer, N., … & Grootenhuis, M.A. (2017). First experience with electronic feedback of the Psychosocial Assessment Tool in pediatric cancer care. Supportive Care in Cancer, 25, 3113–3121.

- Shaffer, D., Gould, M., Brasic, J., Ambrosini, P., Fisher, P., Bird, H., & Aluwahlia, S. (1983). A children's global assessment scale. Archives of General Psychiatry, 40, 1228–1231.

- Søreide, K., & Søreide, A.H. (2013). Using patient‐reported outcome measures for improved decision‐making in patients with gastrointestinal cancer – the last clinical frontier in surgical oncology? Frontiers in Oncology, 3. 10.3389/fonc.2013.00157

- StataCorp. (2015). Stata Statistical Software: Release 14. College Station, TX: StataCorp LP.

- Steele Gray, C., Gill, A., Khan, A.I., Hans, P.K., Kuluski, K., & Cott, C. (2016). The electronic patient reported outcome tool: Testing usability and feasibility of a mobile app and portal to support care for patients with complex chronic disease and disability in primary care settings. JMIR mHealth and uHealth, 4, e58.

- Stewart, R., Soremekun, M., Perera, G., Broadbent, M., Callard Denis, M., … & Lovestone, S. (2009). The South London and Maudsley NHS Foundation Trust Biomedical Research Centre (SLaM BRC) case register: Development and descriptive data. BMC Psychiatry, 9, 51.

- Van Dijk, J.A.G.M. (2005). The deepening divide: Inequality in the information society. London, UK: Sage.

- Waldron, S.M., Loades, M.E., & Rogers, L. (2018). Routine outcome monitoring in CAMHS: How can we enable implementation in practice? Child and Adolescent Mental Health, 23, 328–333.

- Wolpert, M. (2014). Uses and abuses of patient reported outcome measures (PROMs): Potential iatrogenic impact of PROMs implementation and how it can be mitigated. Administration and Policy in Mental Health and Mental Health Services Research, 41, 141–145.

- Zuidgeest, M., Hendriks, M., Koopman, L., Spreeuwenberg, P., & Rademakers, J. (2011). A comparison of a postal survey and mixed‐mode survey using a questionnaire on patients' experiences with breast care. Journal of Medical Internet Research, 13, e68.
