Abstract

Resilience is learnable and is broadly described as an individual's adaptive coping ability; its potential value for stress reduction must be explored. Amid the global coronavirus pandemic and heightened mental health concerns, innovative ways to deliver resilience training must be urgently examined. This systematic review aimed to (1) evaluate the effectiveness of digital training for building resilience and reducing anxiety, depressive and stress symptoms and (2) identify essential features for designing future digital training. A three‐step search was conducted in eight electronic databases, trial registries and grey literature to locate eligible studies. Randomised controlled trials examining the effects of digital training aimed at enhancing resilience were included. Data analysis was conducted using Stata version 17. Twenty‐two randomised controlled trials involving 2876 participants were included. Meta‐analysis revealed that digital training significantly enhanced participants' resilience, with moderate to large effects (g = 0.54–1.09) at post‐intervention and follow‐up. Subgroup analyses suggested that training delivered via the Internet with a flexible programme schedule was more effective than its counterparts. This review supports the use of digital training in improving resilience. Further high‐quality randomised controlled trials with large sample sizes are needed.

Keywords: anxiety, depressive symptoms, digital training, meta‐analysis, resilience, stress

1. INTRODUCTION

Stress is an inherent part of everyday life (Schwarzer & Luszczynska, 2013), and chronic stress can have deleterious impacts on one's health and well‐being (Bliese et al., 2017; García‐León et al., 2019). Theoretically, stress may be understood from two approaches: systemic or psychological. The systemic stress approach, based on the general adaptation syndrome (Selye, 1965), defines stress as a state brought about by changes in the physiological systems. In contrast, the transactional model of stress (Lazarus & Folkman, 1984) adopts a psychological stance and describes how stress arises when individuals perceive that they are unable to adequately cope with a situation or that their well‐being is threatened. While different in their approaches, both models depict an individual's response to stress as a process, and without adequate coping mechanisms, humans will falter under excessive stress (Krohne, 2002).

In late 2019, a global coronavirus pandemic (COVID‐19) emerged. Pandemic measures such as social isolation have inevitably led to more individuals experiencing mental health‐related issues, stress and burnout (Droit‐Volet et al., 2020; Park et al., 2019). Reviews have reported prevalence rates during the COVID‐19 pandemic of 25.8%–31.9% for anxiety, 24.3%–33.7% for depression and 29.6%–45% for stress (Salari, Hosseinian‐Far, et al., 2020; Salari, Khazaie, et al., 2020). Further, a meta‐analysis concluded that up to 26% of healthcare professionals had low resilience, a proportion that increased to 31% during the COVID‐19 pandemic (Cheng et al., 2022). Under these circumstances, reducing the prevalence of low resilience and building one's resistance to stress and adversity are necessary.

1.1. Significance of resilience

Resilience can be understood from a trait, process or outcome perspective and has been broadly defined as the ability to overcome or ‘bounce back’ from adversity (Rutter, 2012; Southwick et al., 2005; Van Breda, 2018). Overcoming adversity in turn leads to either the maintenance or the improvement of one's physiological and psychological status (Van Breda, 2018). Being resilient has numerous benefits: resilient individuals have better mental well‐being and health (Gheshlagh et al., 2017; Joyce et al., 2018; Leppin et al., 2014), enjoy greater productivity (Zehir & Narcıkara, 2016) and obtain better academic outcomes than non‐resilient people (Van Hoek et al., 2019). Among individuals with mental health conditions such as post‐traumatic stress disorder, resilience is negatively correlated with levels of distress (Hébert et al., 2014).

Given the significance of resilience, understanding how it may be measured is important. Owing to different conceptualizations of resilience (Van Breda, 2018), numerous indicators have been used to measure resilience subjectively from a trait, process or outcome perspective (Windle et al., 2011). For instance, the Connor‐Davidson resilience scale (Connor & Davidson, 2003) and the dispositional resilience scale (Bartone, 2007) measure resilience as a trait, the current experience scale (Tedeschi & Calhoun, 1996) evaluates resilience as a process, and the brief resilience scale (Smith et al., 2008) considers resilience as the ability to bounce back (outcome perspective). Although self‐reported scales are easy to administer, they may be limited by social desirability and recall biases (Althubaiti, 2016). Therefore, objective measures of resilience are worth investigating. These include biomarkers such as salivary cortisol, which has been used to measure resilience (Petros et al., 2013). However, the costs associated with collecting, processing and storing specimens may be a barrier to wide adoption (Grizzle et al., 2011).

Recent reviews have identified that an individual's resilience is malleable and improves following resilience training (Ang et al., 2022; Linz et al., 2019). While a resilience score provides insight into one's ability to cope with adversity, it does not explain how one recovers or bounces back from adversity. Instead, it may be useful to draw connections with other indicators of health, such as anxiety, depressive and stress symptoms, to examine how individuals bounce back and recover from adversity (Southwick et al., 2005; Taylor & Carr, 2021). As mentioned above, the prevalence of anxiety, depressive and stress symptoms has risen during the COVID‐19 pandemic. These symptoms therefore offer a good starting point: looking beyond resilience itself to indicators of health such as reduced anxiety, depressive and stress symptoms can demonstrate how one copes with and bounces back from adversity.

1.2. Mechanism of digital resilience training

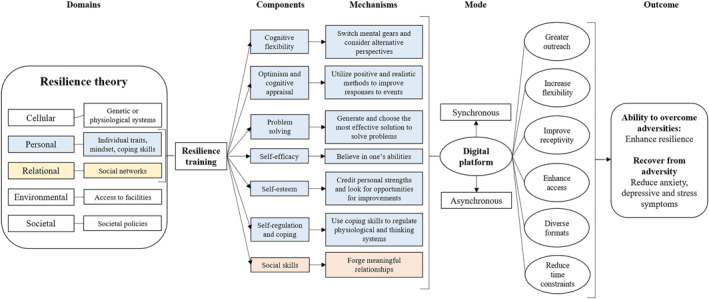

Resilience training has primarily been developed either as a therapeutic intervention for individuals with clinical symptoms or as a preventive intervention for healthy or at‐risk populations (Alvord et al., 2016). Based on resilience theory (Szanton & Gill, 2010), a conceptual framework was developed; Figure 1 depicts the domains, components and mechanisms through which resilience training is proposed to enhance resilience (Alvord et al., 2016).

FIGURE 1.

Mechanism of resilience training for building resilience

According to resilience theory, resilience is influenced by numerous domains, ranging from the cellular to the environmental level (Alvord et al., 2016; Lee & Stewart, 2013; Szanton & Gill, 2010). Most existing resilience interventions have focused their training on psychosocial factors, targeting the personal and relational levels (Ang et al., 2022; Joyce et al., 2018). At the individual level, several techniques are used to improve one's ability to overcome challenges and adversities (Alvord et al., 2016; Carr et al., 2013). Cognitive flexibility refers to one's ability to consider alternative solutions, which helps build resilience in stressful circumstances (Ionescu, 2012). Fostering an optimistic yet realistic mindset allows individuals to hold favourable expectancies while maximising their adaptive responses to adversity (Alvord et al., 2016; Carver et al., 2010). Problem‐solving skills enable one to generate and evaluate a list of possible solutions and take action to address a challenge (Tenhula et al., 2014). Self‐regulation and coping strategies are also central to resilience, as they equip one to modulate one's responses to, and cope with, challenges (Alvord et al., 2016). From a relational perspective, strong social support and meaningful relationships can foster resilience (Hill et al., 2020; Li et al., 2021). Cumulatively, these individual and relational protective factors are postulated to make one more resilient, with recovery from adversity reflected in reductions in anxiety, depressive and stress symptoms. Evidence from existing resilience training focussing on these components has shown positive effects on resilience and on mental health symptoms (Ang et al., 2022; Brewer et al., 2019; Chmitorz et al., 2018; Joyce et al., 2018). Given that more conclusive evidence links psychosocial factors to resilience, this review focuses on how these psychosocial factors can enhance resilience.

1.3. Digital training for building resilience

The benefits of resilience training reported to date largely refer to training delivered face‐to‐face (Ang et al., 2022; Brewer et al., 2019; Chmitorz et al., 2018; Joyce et al., 2018). With funding‐driven advancements in telecommunications infrastructure (Harb, 2017), access to and availability of digital technology have grown (Organization for Economic Cooperation and Development, 2022). Supported by rising digital literacy, an emphasis on curating digital content and the COVID‐19 pandemic (Schwarz et al., 2020; Torous et al., 2020), digital platforms have gained traction as a means of disseminating psychological interventions (Lattie et al., 2019; Torous et al., 2020).

This review defines digital platforms as computers, the Internet and mobile devices such as smartphones and mobile software applications (Fairburn & Patel, 2017; Fu et al., 2020). The enormous reach of the Internet, remote access, anonymity and the diversity of formats improve the scalability of digital training (Fraser et al., 2011). Digital training can be delivered autonomously through asynchronous learning, or with support through blended modes or synchronous learning (Fairburn & Patel, 2017). Depending on the format, training can be delivered through various media, such as audio (e.g., mindfulness audio clips), visual (e.g., animations, interactive videos) or kinaesthetic (e.g., practical tasks) (Fairburn & Patel, 2017; Fraser et al., 2011).

1.4. Gaps in existing reviews

Numerous reviews have reported the effectiveness of digital training among various populations, including college students (Ang et al., 2022; Lattie et al., 2019), employees (Armaou et al., 2020; Carolan et al., 2017) and individuals with mental health problems (Fu et al., 2020) or at risk of suicide (Torok et al., 2020). However, most of these reviews were not aimed at building resilience, and most were limited by high heterogeneity (Armaou et al., 2020; Carolan et al., 2017; Fu et al., 2020) or by the use of mixed study designs and narrative synthesis (Lattie et al., 2019). Two reviews (Armaou et al., 2020; Carolan et al., 2017) did not use Hedges' g as an effect measure. Hedges' g applies a correction for small‐sample bias and is therefore preferred over other standardised effect measures when trials have small sample sizes (Borenstein et al., 2010). In addition, most reviews did not use meta‐regression to examine the effects of trial or training characteristics on outcomes. Given that trial characteristics may differ by context, meta‐regression is particularly useful for detecting the potential impact of between‐study variation on outcomes (Higgins et al., 2020). Collectively, the use of Hedges' g and meta‐regression may improve confidence in a review's findings.

The effectiveness of digital training has been largely evaluated from a psychotherapeutic stance (Armaou et al., 2020; Carolan et al., 2017; Fu et al., 2020). Given the stigma surrounding mental health and its treatment, a preventive approach might be advantageous and necessary, because individuals may be more receptive to training that focuses on building strengths (Angermeyer et al., 2017; Griffith & West, 2013). Studies on interventions to promote mental well‐being were directed either at students (Ang et al., 2022; Lattie et al., 2019) or at employees through workplace‐specific training (Carolan et al., 2017). Hence, additional work is needed to evaluate the effects of resilience training in improving mental well‐being (e.g., anxiety and depressive symptoms) in the general population. Although face‐to‐face resilience training has shown favourable effects (Ang et al., 2022; Angelopoulou & Panagopoulou, 2021; Dray et al., 2017; Joyce et al., 2018), whether these benefits persist on a digital platform remains unclear. While both face‐to‐face and digital platforms offer the possibility of synchronous communication, the core difference between them is physical presence. The lack of physical presence on an online platform has been found to reduce a learner's interest and motivation to participate actively (Car et al., 2021; Chen & Jang, 2010). Further, to receive training via a digital platform, one needs access to a device and a certain degree of digital literacy (Martínez‐Alcalá et al., 2018). These factors highlight the distinctions between face‐to‐face and digitally delivered training.

In light of improvements in digital infrastructure and digital literacy, the COVID‐19 pandemic and the increasing prevalence of mental health‐related issues, it is timely to evaluate the use of digital platforms to host resilience training. Therefore, this review seeks to (1) evaluate the effectiveness of digital resilience training and (2) identify the essential features for designing future training.

2. METHODS

This review was prepared in accordance with the Preferred Reporting Items for Systematic Reviews and Meta‐Analyses (PRISMA) statement (Moher et al., 2009) (Table S1) and prospectively registered in the PROSPERO database at the Centre for Reviews and Dissemination in the United Kingdom (CRD42021258993).

2.1. Eligibility criteria

Randomised controlled trials that evaluated the effects of digital training to build resilience compared with usual care, active control or waitlist control groups were included. To be eligible, the resilience training had to be delivered over a digital platform, via a computer, the Internet or a mobile application. The primary outcome was resilience, assessed using either objective or subjective measures. The components of digital training that build resilience were derived with reference to the American Psychological Association (Alvord et al., 2016) and include the following: (1) cognitive flexibility, (2) optimism and cognitive appraisal, (3) problem solving, (4) relationships, (5) self‐efficacy, (6) self‐esteem and (7) self‐regulation and coping. This review was limited to studies reported in the English language. No restrictions were imposed on population or publication date, and both published and unpublished trials were included to minimise publication bias (McMaster University, 2015; Rothstein et al., 2005). The details of the eligibility criteria can be found in Table S2.

2.2. Search strategy

A scoping search for similar reviews was conducted in the Cochrane Database of Systematic Reviews, Joanna Briggs Institute and PROSPERO international register of systematic reviews to prevent duplication. Preliminary screening was performed by initially searching the terms ‘training’, ‘digital’, ‘Internet’, ‘mobile’ and ‘resilience’. The keywords from articles retrieved during the preliminary screen contributed to the development of the search terms. The final search terms were developed iteratively in consultation with a university librarian. The final search terms can be found in Table S3.

A three‐step search (Higgins et al., 2020) was conducted from inception to 12 February 2022 to identify articles meeting the eligibility criteria. First, one reviewer (DA) conducted a systematic search of eight electronic databases: (1) Cochrane Library, (2) Cumulative Index to Nursing and Allied Health Literature, (3) Embase, (4) PsycINFO, (5) PubMed, (6) Scopus, (7) Web of Science and (8) ProQuest Dissertations and Theses Global. Second, clinical trial registries such as ClinicalTrials.gov, the Cochrane Central Register and the EU Clinical Trials Register were searched for ongoing trials, and the authors of these trials were emailed to obtain information such as completion status and the availability of preliminary data. Finally, a snowball search of the reference lists of existing reviews and included studies, and of grey literature sources such as Google Scholar and CogPrints, was performed to maximise the comprehensiveness of this work. When publications did not provide sufficient details, authors were sent a follow‐up email requesting the necessary data (e.g., means and standard deviations).

2.3. Study selection

Studies retrieved from the search were imported and managed using EndNote X20. After duplicates were removed, two reviewers (DA and LY) screened all records by title and abstract according to the eligibility criteria. Disagreements were resolved by consulting the eligibility criteria and a third reviewer (JC). Inter‐rater reliability was measured using Cohen's kappa (κ), which ranges from −1 to 1, where values of 0 or below indicate no agreement beyond chance and 1 indicates perfect agreement (Cohen, 1960). Values greater than 0.75 were classified as excellent agreement, and values between 0.40 and 0.75 as good agreement (Marston, 2010).
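To make the agreement statistic concrete, the following Python sketch computes Cohen's kappa from two reviewers' include/exclude decisions and maps the result onto the Marston (2010) bands described above. It is a minimal illustration, not the tool used in the review: the function names and sample decisions are hypothetical, and the label returned for values below 0.40 ("poor") is an assumption, since the review does not name that band.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items on which the two raters agree
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected chance agreement from each rater's marginal label frequencies
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / n ** 2
    if p_e == 1:
        return 1.0  # both raters used one identical label for every item
    return (p_o - p_e) / (1 - p_e)


def interpret_kappa(kappa: float) -> str:
    """Bands from Section 2.3; the 'poor' label below 0.40 is an assumption."""
    if kappa > 0.75:
        return "excellent"
    if kappa >= 0.40:
        return "good"
    return "poor"


# Hypothetical screening decisions for five records (not data from the review)
reviewer_1 = ["include", "exclude", "exclude", "include", "exclude"]
reviewer_2 = ["include", "exclude", "include", "include", "exclude"]
kappa = cohens_kappa(reviewer_1, reviewer_2)
print(round(kappa, 2), interpret_kappa(kappa))  # 0.62 good
```

Here observed agreement is 0.80 and chance agreement is 0.48, so κ = 0.32 / 0.52; kappa discounts the agreement two reviewers would reach by chance given how often each one chose "include".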

2.4. Data management and extraction

Microsoft Word and Excel were used to manage the extracted data. The data extraction form was designed based on the Cochrane Handbook (Higgins et al., 2020). One reviewer (DA) extracted all the data, and another reviewer (LY) checked the data for accuracy. Three main components were retrieved from the studies: (1) trial characteristics, (2) intervention description and (3) outcomes. Trial characteristics included author, year of publication, country, design, participant characteristics, age, sample size, intervention, control, attrition rate, intention‐to‐treat (ITT) analysis, missing data management, protocol, trial registration and grant support. Extracted characteristics of the digital training included theoretical basis, platform (Internet or mobile application), communication mode (asynchronous or synchronous), pedagogical approach (didactic or dialectic), delivery approach (individual or group), frequency, session duration, and the durations of the intervention and follow‐up. All outcomes reported by the trials (means and standard deviations) were extracted.

2.5. Quality assessment

Quality assessment was conducted independently by two reviewers (DA, JC). The Cochrane Collaboration's risk of bias tool (Higgins et al., 2011), implemented in Review Manager version 5.3, was used to appraise the methodological quality of included studies. Any disagreements were resolved through discussion with a third reviewer (LY). Risk of bias was examined in the following domains: (1) selection bias, (2) performance bias, (3) detection bias, (4) attrition bias, (5) reporting bias and (6) other sources of bias such as baseline imbalances or contamination of the intervention (Higgins et al., 2011). Based on the information reported in the studies, each domain was rated as high risk, low risk or unclear risk (when information was insufficient) (Higgins et al., 2011).

The Grading of Recommendations, Assessment, Development and Evaluation (GRADE) criteria were used by two reviewers (DA, JC) to independently assess the overall certainty of evidence and strength of the recommendations (Guyatt et al., 2011). Risk of bias, inconsistency, indirectness, imprecision and effect size were used to determine the certainty of evidence. Certainty was rated as very low, low, moderate or high, with each rating supported by explicit justification (Guyatt et al., 2011). Publication bias was assessed using Egger's regression test (Egger et al., 1997) and a funnel plot of precision against the standardized mean difference (Zwetsloot et al., 2017). Publication bias was considered present when Egger's test gave a p‐value of less than 0.05 (Egger et al., 1997) and the funnel plot was asymmetric (Sterne et al., 2011).
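Egger's test regresses each trial's standardised effect (g divided by its standard error) on its precision (the reciprocal of the standard error); an intercept far from zero suggests small‐study effects such as publication bias. The sketch below is a minimal, dependency‐free version of that standard formulation. It stops at the t statistic, which would then be compared with a t distribution on k − 2 degrees of freedom (for example with a statistics library) to obtain the p‐value. It is not the Stata implementation used in the review, and the function name and inputs are hypothetical.

```python
import math


def egger_test(effects: list[float], std_errors: list[float]) -> dict:
    """Egger's regression test for funnel-plot asymmetry (sketch).

    Regresses z_i = g_i / se_i on precision x_i = 1 / se_i by ordinary
    least squares and returns the intercept, its standard error and
    t statistic, plus the residual degrees of freedom (k - 2).
    """
    k = len(effects)
    if k < 3 or k != len(std_errors):
        raise ValueError("need at least three trials with matching standard errors")
    x = [1 / se for se in std_errors]                   # precision
    y = [g / se for g, se in zip(effects, std_errors)]  # standard normal deviate
    x_bar, y_bar = sum(x) / k, sum(y) / k
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    if s_xx == 0:
        raise ValueError("standard errors must vary across trials")
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    # Residual variance on k - 2 degrees of freedom
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    sigma2 = rss / (k - 2)
    # Standard error of the OLS intercept
    se_intercept = math.sqrt(sigma2 * (1 / k + x_bar ** 2 / s_xx))
    t_stat = intercept / se_intercept if se_intercept > 0 else math.nan
    return {"intercept": intercept, "se": se_intercept, "t": t_stat, "df": k - 2}


# Hypothetical Hedges' g values and standard errors (not data from the review)
print(egger_test([1.0, 1.5, 2 / 3, 1.25], [1.0, 0.5, 1 / 3, 0.25]))
```

With only a handful of trials this test has little power, which is one reason the review pairs it with visual inspection of the funnel plot.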

2.6. Data synthesis

Stata version 17 (StataCorp, 2021) was used to conduct the meta‐analyses, subgroup analyses and meta‐regression. Continuous outcomes were reported as means and standard deviations with 95% confidence intervals. Hedges' g was employed as the effect size measure because it corrects the small‐sample bias of the standardized mean difference (Hedges & Olkin, 2014; Lakens, 2013). The inverse‐variance method and a random‐effects model were used to analyse continuous outcomes (Higgins et al., 2020). Dichotomous outcomes were expressed as relative measures, and the Mantel–Haenszel method (Mantel & Haenszel, 1959) was used to obtain the pooled intervention effect.
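For readers who want to reproduce the effect‐size step, the sketch below computes Hedges' g and its sampling variance from the group means, standard deviations and sample sizes extracted from each trial, using the commonly used correction factor J = 1 − 3/(4(n1 + n2 − 2) − 1). The function name is hypothetical, and the sign convention is an assumption: for outcomes where lower scores are better (anxiety, depressive and stress symptoms), the sign would need to be flipped so that a positive g consistently favours the intervention.

```python
import math


def hedges_g(mean_i: float, sd_i: float, n_i: int,
             mean_c: float, sd_c: float, n_c: int) -> tuple[float, float]:
    """Return (g, variance of g) for an intervention (i) versus control (c) arm."""
    df = n_i + n_c - 2
    # Pooled standard deviation across the two arms
    sd_pooled = math.sqrt(((n_i - 1) * sd_i ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (mean_i - mean_c) / sd_pooled            # Cohen's d (uncorrected)
    j = 1 - 3 / (4 * df - 1)                     # small-sample correction factor
    var_d = (n_i + n_c) / (n_i * n_c) + d ** 2 / (2 * (n_i + n_c))
    return j * d, j ** 2 * var_d


# Hypothetical post-intervention resilience scores (not data from the review)
g, var_g = hedges_g(mean_i=10.0, sd_i=2.0, n_i=10, mean_c=8.0, sd_c=2.0, n_c=10)
print(round(g, 3), round(var_g, 3))
```

With 10 participants per arm, J is 68/71 (about 0.958), so g shrinks d = 1.0 by roughly 4%; the correction fades as sample sizes grow, which is why it matters most for the small trials in this review.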

Heterogeneity was examined using Cochran's Q test, with a p‐value of less than 0.01 indicating significant heterogeneity, and its extent was quantified using the I² statistic and the between‐study variance τ² (Higgins et al., 2020). Heterogeneity was classified based on I² values using the overlapping ranges of Higgins et al. (2020): unimportant (0%–40%), moderate (30%–60%), substantial (50%–90%) and considerable (75%–100%). Sensitivity analyses were conducted by removing outliers or heterogeneous trials until homogeneity, indicated by an I² value of less than 40%, was achieved (Higgins et al., 2020).
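The heterogeneity quantities named above can be made concrete with a short sketch that pools per‐trial Hedges' g values with inverse‐variance weights under a random‐effects model and reports Cochran's Q, I² and τ². The review does not state which τ² estimator was used; this sketch assumes the DerSimonian–Laird method‐of‐moments estimator for simplicity (Stata's meta suite offers several estimators, so results may differ slightly). Function and field names are hypothetical.

```python
import math
from dataclasses import dataclass
from statistics import NormalDist


@dataclass
class PooledResult:
    estimate: float  # random-effects pooled effect
    ci_low: float
    ci_high: float
    q: float         # Cochran's Q
    df: int
    i2: float        # I^2 as a percentage
    tau2: float      # between-study variance


def random_effects_dl(effects: list[float], variances: list[float],
                      level: float = 0.95) -> PooledResult:
    """Inverse-variance random-effects pooling with a DerSimonian-Laird tau^2."""
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two trials with matching variances")
    # Fixed-effect (inverse-variance) weights and pooled estimate
    w = [1 / v for v in variances]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    # Cochran's Q: weighted squared deviations from the fixed-effect estimate
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1
    # DerSimonian-Laird tau^2, truncated at zero
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)
    # I^2: share of total variability attributed to between-study differences
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    # Random-effects weights add tau^2 to each within-study variance
    w_star = [1 / (v + tau2) for v in variances]
    sws = sum(w_star)
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sws
    se = math.sqrt(1 / sws)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return PooledResult(pooled, pooled - z * se, pooled + z * se, q, df, i2, tau2)


# Hypothetical Hedges' g values and variances (not data from the review)
print(random_effects_dl([0.3, 0.9, 0.6, 1.2], [0.04, 0.06, 0.05, 0.10]))
```

Adding τ² to every trial's variance makes the weights more equal than in a fixed‐effect model, so small trials gain relative influence and the confidence interval widens when heterogeneity is present.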

Subgroup analyses were conducted for pre‐determined groups defined by trial and training characteristics. Subgroup differences were tested using the Q statistic and considered statistically significant at p < 0.1 (Richardson et al., 2019). Finally, meta‐regression analysis was conducted to examine the effect of a covariate on the resilience outcome. Relationships were expressed using the coefficient β, which represents the change in the dependent variable per unit change in the covariate (Bring, 1994). The model's Q statistic, degrees of freedom (df) and p‐value were reported (Higgins et al., 2020). A p‐value of less than 0.05 was used to conclude an association between the covariate and the effect size (Higgins et al., 2020). A narrative synthesis was performed when studies did not provide sufficient information or when a meta‐analysis could not be conducted.
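To illustrate the two moderator analyses described above, the sketch below (i) tests for subgroup differences by comparing subgroup pooled estimates with a between‐subgroup Q statistic on J − 1 degrees of freedom, and (ii) estimates a meta‐regression slope β by weighted least squares with weights 1/(vᵢ + τ²), testing it with a Wald statistic. It assumes the subgroup estimates, their standard errors and τ² have already been obtained from a random‐effects fit. The chi‐square tail probability uses a closed‐form recurrence valid for integer degrees of freedom. This is a simplified illustration rather than Stata's meta‐regression routine, and all names are hypothetical.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail chi-square probability for integer df >= 1."""
    if x <= 0:
        return 1.0
    # Start from df = 1 (odd) or df = 2 (even), then step up by 2 using
    # Q(k + 2, x) = Q(k, x) + (x/2)^(k/2) * exp(-x/2) / Gamma(k/2 + 1)
    k = 2 if df % 2 == 0 else 1
    p = math.exp(-x / 2) if k == 2 else math.erfc(math.sqrt(x / 2))
    while k < df:
        p += (x / 2) ** (k / 2) * math.exp(-x / 2) / math.gamma(k / 2 + 1)
        k += 2
    return p


def subgroup_difference(estimates: list[float],
                        std_errors: list[float]) -> tuple[float, int, float]:
    """Between-subgroup Q test on subgroup pooled estimates: (Q, df, p)."""
    w = [1 / se ** 2 for se in std_errors]
    overall = sum(wi * e for wi, e in zip(w, estimates)) / sum(w)
    q_between = sum(wi * (e - overall) ** 2 for wi, e in zip(w, estimates))
    df = len(estimates) - 1
    return q_between, df, chi2_sf(q_between, df)


def meta_regression_slope(effects: list[float], variances: list[float],
                          covariate: list[float], tau2: float) -> tuple[float, float, float]:
    """Weighted least-squares slope beta, its standard error and Wald p-value."""
    w = [1 / (v + tau2) for v in variances]
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, covariate)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    s_xx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, covariate))
    s_xy = sum(wi * (xi - x_bar) * (yi - y_bar)
               for wi, xi, yi in zip(w, covariate, effects))
    beta = s_xy / s_xx
    se = math.sqrt(1 / s_xx)
    # (beta / se)^2 follows a chi-square distribution with 1 df under H0
    return beta, se, chi2_sf((beta / se) ** 2, 1)


# Hypothetical subgroups, e.g. Internet vs app delivery (not data from the review)
print(subgroup_difference([0.8, 0.4], [0.1, 0.1]))
print(meta_regression_slope([0.2, 0.4, 0.6], [0.1, 0.1, 0.1], [1, 2, 3], tau2=0.0))
```

The subgroup test and meta‐regression answer the same question in two forms: the former compares categories (for example, delivery platform), while the latter estimates how the effect size changes per unit of a continuous covariate.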

3. RESULTS

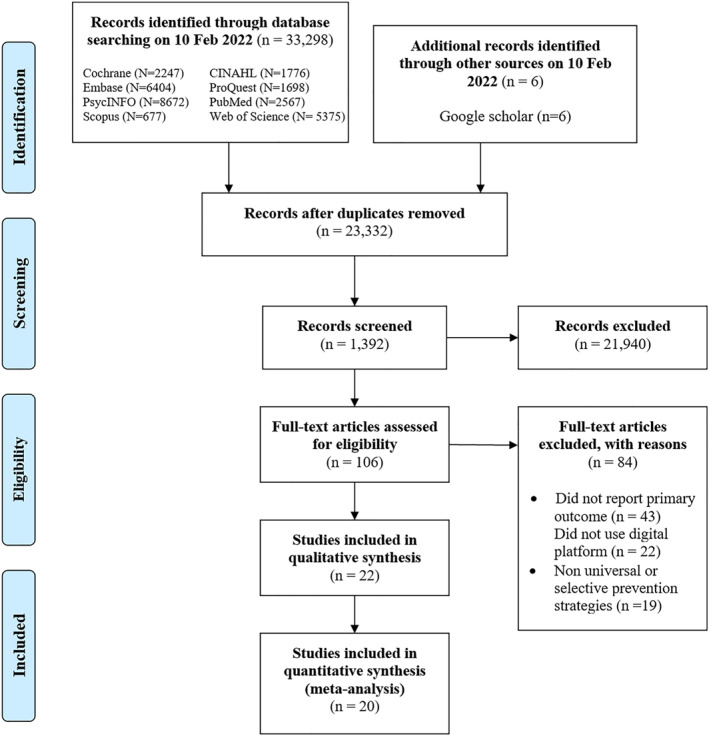

The search results are shown in Figure 2. A total of 28,816 records were identified from eight electronic databases, and 6 records were retrieved from Google Scholar. After 5490 duplicates were removed, 1392 records were screened by title and abstract against the eligibility criteria. One hundred and six articles were screened in full text, of which 84 were excluded. Finally, 22 randomised controlled trials were included in this review: 21 published papers (Aiken et al., 2014; Anderson et al., 2017; Barry et al., 2019; Bekki et al., 2013; Brog et al., 2022; Ebert et al., 2021; Flett et al., 2019, 2020; Harrer et al., 2018; Heckendorf et al., 2019; Hoorelbeke et al., 2015; Joyce et al., 2019; Litvin et al., 2020; Morrison et al., 2017; Park et al., 2020; Raevuori et al., 2021; Roig et al., 2020; Shaygan et al., 2021; Smyth et al., 2018; Suranata et al., 2020; Zhou et al., 2019) and 1 unpublished doctoral dissertation (Baqai, 2020). Reviewer agreement on the selection of eligible studies was good (κ = 0.74). Two studies (Joyce et al., 2019; Smyth et al., 2018) did not provide sufficient information in their publications and were excluded from the meta‐analysis.

FIGURE 2.

Preferred Reporting Items for Systematic Reviews and Meta‐Analyses (PRISMA) flow diagram

3.1. Study characteristics

Table 1 presents the characteristics of the 22 trials, involving 2876 participants, published from 2013 (Bekki et al., 2013) to 2022 (Brog et al., 2022). Countries included Australia (18.2%), Belgium (4.5%), China (4.5%), Finland (4.5%), Germany (13.6%), Indonesia (4.5%), Iran (4.5%), Ireland (4.5%), New Zealand (9.1%), Switzerland (4.5%), the United Kingdom (9.1%) and the United States of America (18.2%). Eighteen trials adopted a two‐arm randomised controlled trial design, and four (Flett et al., 2019; Litvin et al., 2020; Roig et al., 2020; Suranata et al., 2020) used a three‐arm design. The sample size ranged from 5 (Baqai, 2020) to 709 (Litvin et al., 2020). All studies used subjective measures to evaluate resilience, with the majority (77.3%) using scales with a trait orientation (Table S4).

TABLE 1.

Characteristics of included randomised controlled trials

| Author/Year | Country/Setting | Design | Participants' characteristics | Sample size | Age (M ± SD) | Digital training | Comparator | Outcomes (measures) | Attrition rate/ITT/MDD | Register/Protocol/Grant |
|---|---|---|---|---|---|---|---|---|---|---|
| Aiken et al. (2014) | USA/Workplace | Two‐arm RCT | Healthy employees | T: 90 (I: 45; C: 45) | >18 ± NR | Mindful resilience programme | Waitlist control | Resilience (CD‐RISC), stress (PSS) | 26.67%/Y/Y | N/N/Y |
| Anderson et al. (2017) | USA/University | Two‐arm RCT | Healthy paramedic students | T: 138 (I: 81; C: 57) | 25.5 ± NR | Resiliency training programme | Usual care | Resilience (RS) | 0%/NA/NA | N/N/Y |
| Barry et al. (2019) | Australia/University | Two‐arm RCT | Healthy university students | T: 82 (I: 43; C: 39) | 38 ± NR | Mindfulness intervention | Usual care | Anxiety, depression (DASS), resilience (PCQ), stress (PSS) | 10.98%/N/N | N/N/Y |
| Baqai (2020)# | UK/University | Two‐arm RCT | Healthy paramedic students | T: 17 (I: 9; C: 8) | >18 ± NR | Digital resilience training | Usual care | Resilience (CD‐RISC) | 70.59%/N/N | N/N/N |
| Bekki et al. (2013) | Australia/University | Two‐arm RCT | Healthy university students | T: 129 (I: 61; C: 68) | 27.3 ± NR | Personal resilience training programme | Waitlist control | Resilience (RS) | 26.7%/N/N | N/N/Y |
| Brog et al. (2022) | Switzerland/Community | Two‐arm RCT | Individuals with mild depressive symptoms | T: 107 (I: 53; C: 54) | 40.36 ± 14.59 | ROCO programme | Waitlist control | Depression (PHQ‐9), resilience (CD‐RISC) | 17.76%/NA/NA | Y/N/N |
| Ebert et al. (2021) | Germany/Workplace | Two‐arm RCT | Healthy employees | T: 396 (I: 198; C: 198) | 41.76 ± 10.09 | GET.ON stress | Waitlist control | Depression (CES‐D), resilience (CD‐RISC), stress (PSS) | 20.96%/Y/Y | Y/N/Y |
| Flett et al. (2019) | New Zealand/University | Three‐arm RCT | Healthy university students | T: 210 (I1: 72; I2: 63; C: 75) | 20.08 ± 2.8 | Headspace and smiling mind | Active control | Anxiety (HADS‐A), depression (CES‐D), resilience (RS), stress (PSS) | 7.62%/N/N | Y/N/Y |
| Flett et al. (2020) | New Zealand/University | Two‐arm RCT | Healthy university students | T: 195 (I: 91; C: 104) | 17.87 ± 0.47 | Headspace | Waitlist control | Resilience (BRS) | 22%/Y/Y | Y/N/Y |
| Harrer et al. (2018) | Germany/University | Two‐arm RCT | Healthy university students | T: 150 (I: 75; C: 75) | 24.1 ± 4.1 | StudiCare stress | Waitlist control | Depression (CES‐D), resilience (CD‐RISC), stress (PSS) | 30%/Y/Y | Y/Y/Y |
| Heckendorf et al. (2019) | Germany/Community | Two‐arm RCT | Adults with elevated RNT | T: 262 (I: 132; C: 130) | 42.4 ± 10.9 | GET.ON gratitude | Waitlist control | Anxiety (GAD‐7), depression (CES‐D), resilience (CD‐RISC) | 28.2%/Y/N | Y/Y/N |
| Hoorelbeke et al. (2015) | Belgium/University | Two‐arm RCT | University students at risk for high trait rumination | T: 47 (I: 25; C: 22) | 20.65 ± 2.27 | Cognitive control training | Active control | Depression (BDI‐II), resilience (D‐RS) | 11.32%/N/N | N/N/Y |
| Joyce et al. (2019) | Australia/Workplace | Two‐arm RCT | Healthy firemen | T: 143 (I: 60; C: 83) | 43.9 ± 7.8 | Resilience@Work mindfulness programme | Active control | Resilience (CD‐RISC) | 53.15%/Y/N | Y/N/Y |
| Litvin et al. (2020) | UK/Workplace | Three‐arm RCT | Healthy employees | T: 709 (I: 222; C1: 269; C2: 218) | 35–44 | eQuoo mobile app | Usual care | Anxiety (STAI), resilience (RRC‐ARM) | 50.07%/N/N | Y/N/N |
| Morrison et al. (2017) | Australia/University | Two‐arm RCT | Healthy university students | T: 46 (I: 23; C: 23) | 26.24 ± 7.65 | Willpower strengthening | Usual care | Depression (DASS), resilience (RS) | 0%/N/N | N/N/N |
| Park et al. (2020) | USA/Hospital | Two‐arm RCT | Parents of children with LAD | T: 53 (I: 31; C: 22) | 47 ± 5.7 | SMART‐3RP | Waitlist control | Anxiety and depression (PHQ‐4), resilience (CES), stress (TPSS) | 24.52%/N/N | Y/Y/Y |
| Raevuori et al. (2021) | Finland/University | Two‐arm RCT | University students | T: 124 (I: 63; C: 61) | 25.1 ± 4.5 | Meru health programme | Usual care | Anxiety (GAD‐7), depression (PHQ‐9), resilience (RS), stress (PSS) | 32.3%/Y/N | Y/N/Y |
| Roig et al. (2020) | Ireland/University | Three‐arm RCT | Healthy university students | T: 83 (I1: 26; I2: 26; C: 28) | 26 (median) | Space for resilience | Waitlist control | Resilience (BRS, CD‐RISC), stress (PSS) | 24.1%/Y/Y | Y/Y/N |
| Shaygan et al. (2021) | Iran/Hospital | Two‐arm RCT | Patients with COVID‐19 | T: 50 (I: 27; C: 23) | 36.77 ± 11.81 | Online multimedia psycho‐educational intervention | Usual care | Resilience (CD‐RISC), stress (PSS) | 6%/N/N | Y/N/Y |
| Smyth et al. (2018) | USA/Community and Hospital | Two‐arm RCT | Patients with medical conditions | T: 70 (I: 35; C: 35) | 46.9 ± 12.8 | Web‐PAJ | Usual care | Anxiety (HADS), depression (HADS), resilience (BRS) | 4.28%/N/N | Y/N/Y |
| Suranata et al. (2020) | Indonesia/School | Three‐arm RCT | Healthy high school students | T: 60 (I: 30; C: 30) | 12 ± NR | Internet cognitive behavioural counselling | Waitlist control | Resilience (RYDM) | 0%/N/N | N/N/Y |
| Zhou et al. (2019) | China/Hospital | Two‐arm RCT | Patients with breast cancer | T: 132 (I: 66; C: 66) | 44.56 ± 7.11 | Cyclic adjustment training | Usual care | Anxiety (SAS), depression (SDS), resilience (CD‐RISC) | 10.6%/N/Y | Y/N/Y |

Note: BDI‐II: Beck Depression Inventory‐II; BRS: Brief Resilience Scale; C: Control group; CD‐RISC: Connor‐Davidson Resilience Scale; CDI: Children's Depression Inventory; CES: Current Experience Scale; CES‐D: Center for Epidemiologic Studies Depression Scale; D‐RS: Dutch Resilience Scale; DASS: Depression Anxiety Stress Scales; GAD‐7: Generalized Anxiety Disorder 7‐item scale; HADS: Hospital Anxiety and Depression Scale; HADS‐A: Hospital Anxiety and Depression Scale‐Anxiety Subscale; I: Intervention group; ITT: Intention‐to‐treat; LAD: Learning and Attentional Disabilities; M: Mean; MDD: Missing Data Management; N: No; NA: Not Applicable; NR: Not Reported; PAJ: Positive Affect Journaling; PCQ: Psychological Capital Questionnaire; PHQ: Patient Health Questionnaire; PSS: Perceived Stress Scale; RCT: Randomised controlled trial; RNT: Repeated Negative Thinking; ROCO: Resilience and Optimism during COVID‐19; RRC‐ARM: Resilience Research Centre Adult Resilience Measure; RS: Resilience Scale; RYDM: Resilience Youth Development Module; SAS: Self‐rating Anxiety Scale; SCARED: Screen for Child Anxiety Related Emotional Disorders; SD: Standard Deviation; SDS: Self‐rating Depression Scale; SMART‐3RP: Stress Management and Resiliency Training‐Relaxation Response Resilience Programme; STAI: State‐Trait Anxiety Inventory; T: Total; TPSS: The Parental Stress Scale; UK: United Kingdom; USA: United States of America; Y: Yes; #: Unpublished doctoral dissertation; +: Estimated based on age of first year university students in the United Kingdom.

3.2. Description of digital training

The characteristics of the digital resilience training programmes are detailed in Table S4. Training was delivered via audio disc (4.5%), the Internet (54.5%), a mobile/tablet application (31.8%), or a combination of Internet and mobile applications (9.1%). Most studies (68.2%) used an asynchronous communication style and a didactic pedagogy. All programmes were delivered individually except in two studies (Aiken et al., 2014; Park et al., 2020). The media used included audio clips, discussions, lectures, videos and practical exercises. Session frequency ranged from daily (Barry et al., 2019) to once every 2 weeks (Hoorelbeke et al., 2015), and session length ranged from 10 min (Flett et al., 2020) to 100 min (Bekki et al., 2013). The number of sessions ranged from 1 (Harrer et al., 2018) to 84 (Flett et al., 2020), and programmes lasted between 7 (Morrison & Pidgeon, 2017) and 168 days (Baqai, 2020). Training was provider‐delivered (18.2%), self‐help (59.1%) or a combination of provider support and self‐help (22.7%). The universal and selective prevention strategies used in the digital training are listed in Table S5; studies adopted different combinations of these strategies. The skills imparted included cognitive flexibility (27.3%), optimism and cognitive appraisal (54.5%), problem‐solving (9.1%) and relationship building (9.1%). All but one study (Smyth et al., 2018) included self‐regulation and coping skills in their programme.

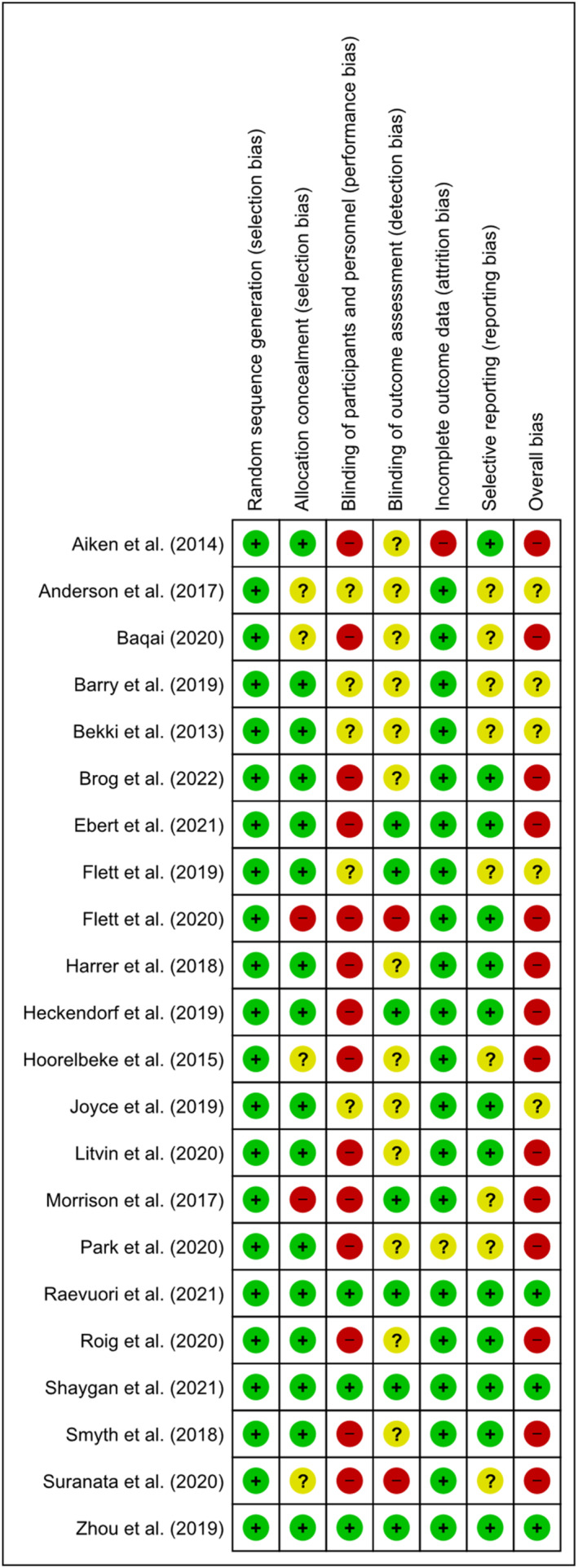

3.3. Risk of bias assessment

Figure 3 presents the risk of bias assessment for the 22 studies. All studies (100%) had adequate random sequence generation, and six trials (27.3%) adequately concealed allocation. Owing to the nature of the interventions, researchers were generally unable to blind participants and personnel, leading to serious concerns about performance bias; only three studies (13.6%) were rated low risk for performance bias. Most studies adequately addressed incomplete outcome data and were rated low risk for attrition bias. Thirteen trials (59.1%) had a protocol available for comparison and were rated low risk for selective reporting.

FIGURE 3.

Risk of bias assessment

3.4. Resilience outcome

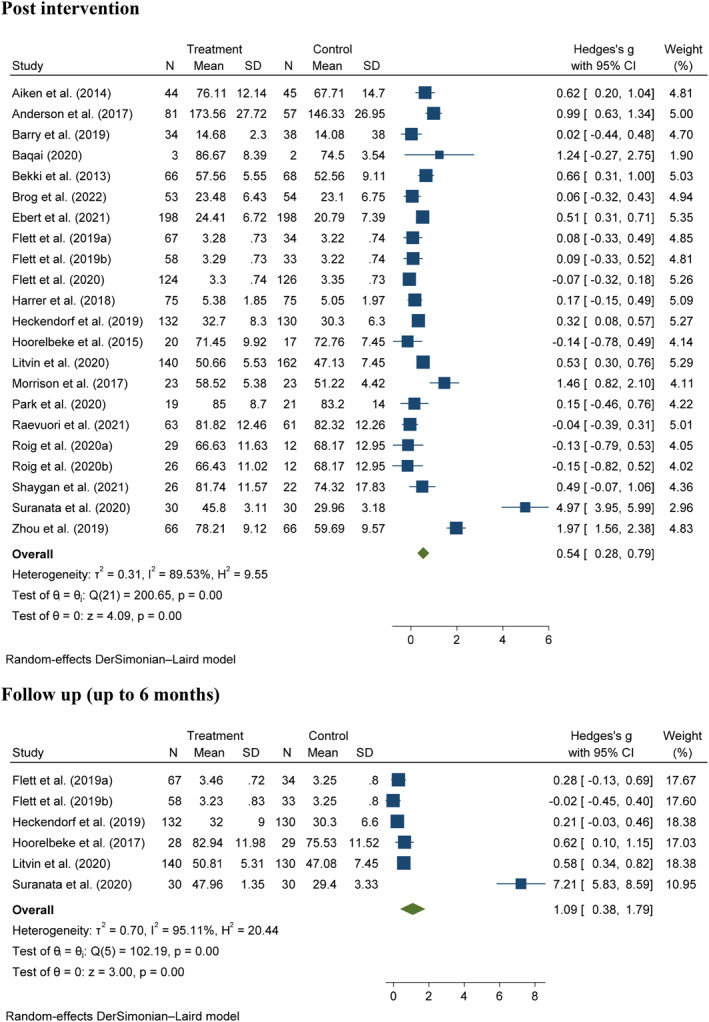

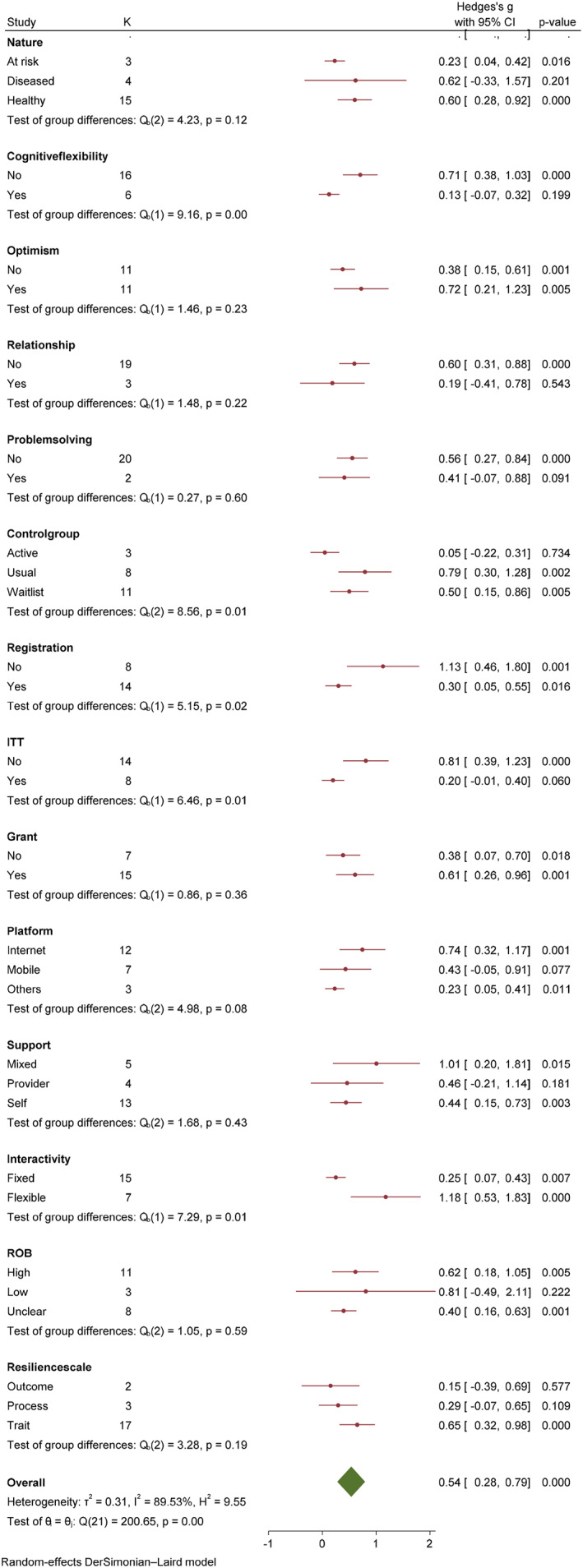

A meta‐analysis of 20 trials (2663 participants) assessed resilience at post‐intervention (Figure 4). Digital training enhanced resilience (Z = 4.09, p < 0.05) with a moderate effect size (g = 0.54; 95% CI: 0.28–0.79). At follow‐up (up to 6 months), pooled data from five studies (Figure 4) showed that the positive effect on resilience was sustained (Z = 3.00, p < 0.05), with a large effect size (g = 1.09; 95% CI: 0.38–1.79). Given the substantial heterogeneity in the post‐intervention resilience outcome (I² = 89.53%), subgroup analyses and meta‐regression were conducted.

FIGURE 4.

Forest plot of effect size (Hedges' g) on resilience outcomes for digital training and comparator
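The pooled estimates above combine per‐study Hedges' g values under a random‐effects model. As a minimal sketch of how a pooled g, its 95% CI, the Z statistic and I² relate to one another, the Python function below uses the DerSimonian–Laird estimator of between‐study variance (τ²). This estimator is an assumption chosen for its closed form: the review reports running its analyses in Stata 17 but this section does not state which estimator was used. The function name and the example inputs are hypothetical, not data from the included trials.

```python
import math

def random_effects_pool(effects, variances, z_crit=1.96):
    """Pool per-study effect sizes with a DerSimonian-Laird random-effects model.

    effects: per-study Hedges' g; variances: their sampling variances.
    Returns the pooled g, its 95% CI, Z, two-sided p, tau^2 and I^2 (percent).
    """
    k = len(effects)
    w = [1.0 / v for v in variances]                     # inverse-variance weights
    sw = sum(w)
    g_fixed = sum(wi * gi for wi, gi in zip(w, effects)) / sw
    q = sum(wi * (gi - g_fixed) ** 2 for wi, gi in zip(w, effects))  # Cochran's Q
    df = k - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0      # between-study variance
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0  # % variability beyond chance
    w_re = [1.0 / (v + tau2) for v in variances]         # random-effects weights
    g_re = sum(wi * gi for wi, gi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    z = g_re / se
    p = math.erfc(abs(z) / math.sqrt(2))                 # two-sided normal p value
    return {"g": g_re, "ci": (g_re - z_crit * se, g_re + z_crit * se),
            "z": z, "p": p, "tau2": tau2, "i2": i2}

# Hypothetical inputs for illustration only (not data from the included trials).
res = random_effects_pool([0.2, 0.5, 1.1, 0.1], [0.04, 0.02, 0.05, 0.03])
print(f"g = {res['g']:.2f}, 95% CI {res['ci'][0]:.2f} to {res['ci'][1]:.2f}, "
      f"Z = {res['z']:.2f}, I2 = {res['i2']:.1f}%")
```

Because τ² is added to every study's sampling variance, the random‐effects interval widens as between‐study heterogeneity grows.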

3.4.1. Subgroup analyses

A series of subgroup analyses is summarised in Figure 5, with further details in Figures S2 to S14. Effect sizes were larger when optimism and cognitive appraisal skills were imparted (g = 0.72, 95% CI: 0.21–1.23) and when training combined provider support with self‐help (g = 1.01, 95% CI: 0.20–1.81). By theoretical orientation of the resilience scale, effect sizes were g = 0.65 (95% CI: 0.28–0.79) for trait‐oriented scales, g = 0.29 (95% CI: −0.07 to 0.65) for process‐oriented scales and g = 0.15 (95% CI: −0.39 to 0.69) for outcome‐oriented scales.

FIGURE 5.

Subgroup analyses based on resilience outcome at the post‐intervention time point for digital training and comparator

Significant subgroup differences were found for the use of cognitive flexibility skills (Q = 9.16, p < 0.05), type of comparator (Q = 8.56, p = 0.01), registration status (Q = 5.15, p = 0.02), ITT analysis (Q = 6.46, p = 0.01) and interactivity (Q = 7.29, p = 0.01); the difference by type of platform (Q = 4.98, p = 0.08) did not reach statistical significance. Resilience improved more when training did not include cognitive flexibility skills (g = 0.71, 95% CI: 0.38–1.03) than when it did (g = 0.13, 95% CI: −0.07 to 0.32). Trials with a usual care comparator showed a greater effect on resilience (g = 0.79, 95% CI: 0.30–1.28) than those with an active control (g = 0.05, 95% CI: −0.22 to 0.31) or a waitlist control (g = 0.50, 95% CI: 0.28–0.79). Trials that were not registered on a trial registry had a greater effect (g = 1.13, 95% CI: 0.46–1.80) than registered trials (g = 0.54, 95% CI: 0.28–0.79). Studies that adopted an ITT analysis (g = 0.81, 95% CI: 0.39–1.23) showed a larger effect on resilience than studies that did not (g = 0.54, 95% CI: 0.28–0.79). By platform, training delivered over the Internet (g = 0.74, 95% CI: 0.32–1.17) had a larger effect than mobile applications (g = 0.43, 95% CI: −0.05 to 0.91) or other platforms (g = 0.23, 95% CI: 0.05–0.79). Finally, programmes with a flexible schedule (g = 0.54, 95% CI: 0.28–0.79) showed better resilience outcomes than those with a fixed schedule (g = 0.25, 95% CI: 0.07–0.43) (see Table 2).
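The Q statistics above test whether pooled effects differ between subgroups. A minimal sketch, assuming the common fixed‐effect test of between‐subgroup heterogeneity applied to each subgroup's pooled g, back‐calculates each standard error from its rounded 95% CI and refers Q to a chi‐square distribution with (number of subgroups − 1) degrees of freedom. The helper names are hypothetical, and the chi‐square tail is computed from the series for the lower incomplete gamma function so the block needs only the standard library. With the cognitive flexibility subgroups it gives Q of roughly 9.0, close to the reported 9.16 given that the CIs are rounded, and a Q of 4.98 across three platform subgroups corresponds to p ≈ 0.08.

```python
import math

def se_from_ci(lower, upper, z_crit=1.96):
    """Back-calculate a standard error from a symmetric 95% confidence interval."""
    return (upper - lower) / (2 * z_crit)

def q_between(subgroups):
    """Between-subgroup Q from a list of (pooled g, SE) pairs, one per subgroup."""
    w = [1.0 / se ** 2 for _, se in subgroups]
    g_bar = sum(wi * g for wi, (g, _) in zip(w, subgroups)) / sum(w)
    return sum(wi * (g - g_bar) ** 2 for wi, (g, _) in zip(w, subgroups))

def chi2_sf(q, df):
    """Upper-tail chi-square probability via the lower incomplete gamma series."""
    if q <= 0:
        return 1.0
    s, x = df / 2.0, q / 2.0
    term = total = 1.0 / s
    n = 0
    while term > 1e-15 * total:
        n += 1
        term *= x / (s + n)
        total += term
    lower_tail = total * math.exp(s * math.log(x) - x - math.lgamma(s))
    return 1.0 - lower_tail

# Cognitive flexibility subgroups as reported in the text (pooled g, 95% CI).
cf = [(0.71, se_from_ci(0.38, 1.03)), (0.13, se_from_ci(-0.07, 0.32))]
q_cf = q_between(cf)
print(f"Cognitive flexibility: Q = {q_cf:.2f}, p = {chi2_sf(q_cf, len(cf) - 1):.4f}")

# Platform: reported Q = 4.98 across three subgroups, hence df = 2.
print(f"Platform: p = {chi2_sf(4.98, 2):.3f}")
```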

TABLE 2.

Effects of digital training for building resilience on resilience, anxiety, depressive and stress symptoms

| Outcomes | Time points | Number of studies (reference) | Z statistic (p value) | Hedges' g (95% confidence interval) | Heterogeneity (I²) | GRADE confidence |
|---|---|---|---|---|---|---|
| Resilience | Post‐intervention | 20 (1–20) | 4.09 (<0.01*) | 0.54 (0.28 to 0.79) | 89.53% | Very low |
| Resilience | Follow‐up (up to 6 months) | 5 (8, 11–13, 19) | 3.00 (<0.01*) | 1.09 (0.38 to 1.79) | 95.11% | Very low |
| Anxiety symptoms | Post‐intervention | 3 (3, 11, 13) | 1.57 (0.12) | 0.36 (−0.09 to 0.81) | 85.84% | Very low |
| Anxiety symptoms | Follow‐up | 3 (8, 11, 13) | 3.67 (<0.01*) | 0.28 (0.13 to 0.43) | 0% | Low |
| Depressive symptoms | Post‐intervention | 7 (3, 6–8, 10–12) | 4.32 (<0.01*) | 0.36 (0.20 to 0.53) | 43.75% | Very low |
| Depressive symptoms | Follow‐up | 3 (8, 10, 11) | 3.83 (<0.01*) | 0.35 (0.17 to 0.53) | 15.6% | Low |
| Stress symptoms | Post‐intervention | 5 (3, 7, 8, 10, 17) | 0.80 (0.42) | 0.14 (−0.20 to 0.48) | 79.31% | Very low |
| Stress symptoms | Follow‐up | 2 (8, 10) | 1.34 (0.18) | 0.25 (−0.12 to 0.62) | 63.29% | Very low |

Note: GRADE: Grading of Recommendations, Assessment, Development and Evaluations; *p < 0.05. Study numbers: 1: Aiken et al. (2014); 2: Anderson et al. (2017); 3: Barry et al. (2019); 4: Baqai (2020); 5: Bekki et al. (2013); 6: Brog et al. (2022); 7: Ebert et al. (2021); 8: Flett et al. (2019); 9: Flett et al. (2020); 10: Harrer et al. (2018); 11: Heckendorf et al. (2019); 12: Hoorelbeke et al. (2015); 13: Litvin et al. (2020); 14: Morrison and Pidgeon (2017); 15: Park et al. (2020); 16: Raevuori et al. (2021); 17: Roig et al. (2020); 18: Shaygan et al. (2021); 19: Suranata et al. (2020); 20: Zhou et al. (2019).

3.4.2. Meta‐regression analyses

A univariate random‐effects meta‐regression was conducted to examine the effect of covariates on the effect size (Table 3). Year of publication (β = −0.03, p = 0.66), participants' age (β = −0.01, p = 0.44), sample size (β < −0.001, p = 0.27), attrition rate (β = −0.02, p = 0.05) and duration of intervention in days (β < 0.001, p = 0.93) had no significant effect on the resilience outcome.

TABLE 3.

Univariate random‐effects meta‐regression analysis of digital training by various covariates

| Covariate | Beta (β) | Standard error | 95% CI lower | 95% CI upper | Z | p value |
|---|---|---|---|---|---|---|
| Year of publication | −0.03 | 0.06 | −0.14 | 0.09 | −0.44 | 0.66 |
| Age | −0.01 | 0.01 | −0.04 | 0.02 | −0.78 | 0.44 |
| Sample size | −0.00 | 0.00 | −0.00 | 0.00 | −1.10 | 0.27 |
| Attrition rate | −0.02 | 0.01 | −0.04 | 0.00 | −1.93 | 0.05 |
| Duration of intervention (days) | 0.00 | 0.00 | −0.01 | 0.01 | 0.09 | 0.93 |
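Each row of Table 3 comes from a model with a single covariate. A minimal sketch of such a single‐covariate random‐effects meta‐regression is weighted least squares with weights 1/(vᵢ + τ²), where vᵢ is each study's sampling variance. It assumes τ² has already been estimated and is held fixed; in practice software estimates τ² together with the slope (for example by REML), so this is an illustration of the structure rather than the review's exact procedure. The function name and all example inputs are hypothetical.

```python
import math

def meta_regression(x, g, variances, tau2):
    """Single-covariate random-effects meta-regression by weighted least squares.

    tau2 (between-study variance) is treated as known here; real software
    estimates it together with the slope. Returns (slope, SE, Z, two-sided p).
    """
    w = [1.0 / (v + tau2) for v in variances]     # random-effects weights
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    g_bar = sum(wi * gi for wi, gi in zip(w, g)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - x_bar) * (gi - g_bar) for wi, xi, gi in zip(w, x, g))
    beta = sxy / sxx
    se = math.sqrt(1.0 / sxx)                     # model-based SE of the slope
    z = beta / se
    p = math.erfc(abs(z) / math.sqrt(2))          # two-sided normal p value
    return beta, se, z, p

# Hypothetical attrition rates (%) and effect sizes, for illustration only.
attrition = [0.0, 5.0, 12.0, 20.0, 35.0]
g = [0.9, 0.7, 0.6, 0.4, 0.3]
var = [0.05, 0.04, 0.03, 0.02, 0.04]
beta, se, z, p = meta_regression(attrition, g, var, tau2=0.05)
print(f"beta = {beta:.3f}, SE = {se:.3f}, Z = {z:.2f}, p = {p:.3f}")
```

The Z column in Table 3 corresponds to β divided by its standard error, as computed on the last lines of the function.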

3.4.3. Narrative synthesis

Two trials (Joyce et al., 2019; Smyth et al., 2018) did not report means and standard deviations for the resilience outcome, so their findings were synthesised narratively. Joyce et al. (2019) used a group‐by‐time interaction test and found that participants receiving digital training had increased resilience at post‐intervention and 6‐month follow‐up (p = 0.01). Similarly, Smyth et al. (2018) reported greater resilience in the intervention group than in the usual care group.

3.5. Anxiety, depressive and stress symptoms

The included studies measured anxiety, depressive and stress symptoms using subjective measures; the effects of digital training on these outcomes are shown in Table 2. Three studies were pooled to examine the effect of digital training on anxiety. Anxiety was not significantly reduced at post‐intervention (Z = 1.57, p = 0.12), and substantial heterogeneity was found (I² = 85.84%). At follow‐up, however, a statistically significant reduction in anxiety was observed (Z = 3.67, p < 0.05), with a small effect size (g = 0.28, 95% CI: 0.13–0.43).

Seven studies were pooled to examine the effect of digital training on depressive symptoms. Digital training reduced depressive symptoms at post‐intervention (Z = 4.32, p < 0.05) with a small effect size (g = 0.36, 95% CI: 0.20–0.53), and the effect persisted at follow‐up (Z = 3.83, p < 0.05), again with a small effect size (g = 0.35, 95% CI: 0.17–0.53). The effect of digital training on stress was evaluated in five studies at post‐intervention and two studies at follow‐up. Digital training did not significantly reduce stress at post‐intervention (Z = 0.80, p = 0.42) or follow‐up (Z = 1.34, p = 0.18).
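Each Z statistic reported in Sections 3.4 and 3.5 can be cross‐checked against its pooled estimate and 95% CI: under a normal approximation, SE ≈ (upper − lower)/(2 × 1.96) and Z = g/SE. The sketch below, with a hypothetical helper name, applies this check to the estimates reported in the text for resilience, anxiety at follow‐up and depressive symptoms. Small discrepancies are expected because the published values are rounded.

```python
import math

Z_CRIT = 1.959964  # two-sided 95% critical value of the standard normal

def implied_stats(g, lower, upper):
    """SE, Z and two-sided p implied by an estimate and its symmetric 95% CI."""
    se = (upper - lower) / (2 * Z_CRIT)
    z = g / se
    p = math.erfc(abs(z) / math.sqrt(2))
    return se, z, p

# (label, g, CI lower, CI upper, reported Z) as given in the text.
reported = [
    ("Resilience, post-intervention", 0.54, 0.28, 0.79, 4.09),
    ("Resilience, follow-up", 1.09, 0.38, 1.79, 3.00),
    ("Anxiety, follow-up", 0.28, 0.13, 0.43, 3.67),
    ("Depressive symptoms, post-intervention", 0.36, 0.20, 0.53, 4.32),
    ("Depressive symptoms, follow-up", 0.35, 0.17, 0.53, 3.83),
]

for label, g, lo, hi, z_rep in reported:
    se, z, p = implied_stats(g, lo, hi)
    print(f"{label}: implied Z = {z:.2f} (reported {z_rep:.2f}), p = {p:.4f}")
```

An interval whose lower bound is above zero should always pair with p < 0.05, and an interval that spans zero with p > 0.05; a mismatch usually signals a transcription error in the CI or the test statistic.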

3.6. Overall evidence

The Grading of Recommendations, Assessment, Development and Evaluations (GRADE) assessment for the 20 pooled studies is documented in Table S6. Serious concerns about risk of bias arose because the studies had a high or unclear risk of bias (Figure 3). Inconsistency was also noted, owing to moderate to substantial heterogeneity for resilience (I² = 89.53%–95.11%), anxiety at post‐intervention (I² = 85.84%) and stress (I² = 63.29%–79.31%). Given the variation in population and intervention characteristics such as approach, components, duration and frequency, indirectness was rated as serious. Half of the studies (50%) had fewer than 50 participants per arm, leading to serious concerns about imprecision. The certainty of the evidence was therefore downgraded for risk of bias, inconsistency, indirectness and imprecision. It was rated very low for resilience (all time points), anxiety (post‐intervention), depressive symptoms (post‐intervention) and stress (all time points), and low for anxiety (follow‐up) and depressive symptoms (follow‐up). The trials reporting resilience showed an asymmetrical funnel plot (Figure S1), and Egger's regression test for small‐study effects was significant (p < 0.05), suggesting publication bias.
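Egger's test regresses each study's standardised effect (g/SE) on its precision (1/SE); an intercept that differs from zero indicates funnel‐plot asymmetry, that is, smaller studies reporting systematically different effects. The following is a minimal ordinary‐least‐squares sketch with a hypothetical function name and hypothetical example data. It returns the intercept and its t statistic, which would then be compared against a t distribution with k − 2 degrees of freedom (for example with SciPy's `scipy.stats.t.sf`, not used here so that the block stays standard‐library only).

```python
import math

def egger_test(g, se):
    """Egger's regression test for small-study effects.

    Regresses the standardised effect g/SE on precision 1/SE by ordinary least
    squares. Returns (intercept, SE of intercept, t statistic); the t statistic
    has k - 2 degrees of freedom.
    """
    k = len(g)
    y = [gi / si for gi, si in zip(g, se)]         # standardised effects
    x = [1.0 / si for si in se]                    # precision
    x_bar, y_bar = sum(x) / k, sum(y) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sigma2 = sum(r ** 2 for r in resid) / (k - 2)  # residual variance
    se_int = math.sqrt(sigma2 * (1.0 / k + x_bar ** 2 / sxx))
    # Degenerate case: a perfect fit leaves no residual variance.
    t = intercept / se_int if se_int > 0 else float("inf")
    return intercept, se_int, t

# Hypothetical studies in which smaller trials (larger SE) report larger effects.
se_values = [0.10, 0.15, 0.20, 0.30, 0.40, 0.50]
g_values = [0.30, 0.35, 0.50, 0.70, 0.95, 1.20]
b0, se0, t = egger_test(g_values, se_values)
print(f"intercept = {b0:.2f}, t = {t:.2f} on {len(g_values) - 2} df")
```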

4. DISCUSSION

4.1. Summary of key findings

This meta‐analysis found statistically significant improvements in resilience, anxiety (at follow‐up) and depressive symptoms. Subgroup analyses yielded several findings about the content and features of digital training. Regarding content, studies that did not impart cognitive flexibility skills were more effective in building resilience. Regarding features, training delivered over the Internet and training that allowed participants to proceed at their own pace had better outcomes, although the difference by platform was not statistically significant. Regarding trial design, registration status, type of comparator and use of ITT analysis were associated with statistically significant subgroup differences in the resilience outcome: larger effects were observed in trials that adopted usual care as the comparator, trials not registered on trial registries and trials that did not use intention‐to‐treat analyses. Meta‐regression found that none of the covariates influenced the resilience outcome. However, moderate to substantial heterogeneity and publication bias were detected.

4.2. Quality of evidence

The included trials had issues with allocation concealment, blinding of participants and personnel, blinding of outcome assessment and selective reporting. Issues with allocation concealment arose from the lack of an active control or waitlist control design. In addition, the included digital training combined behavioural and cognitive components, which makes concealment and blinding difficult (Page & Persch, 2013). For outcome assessment, 14 studies did not employ a blinded assessor, resulting in a high or unclear risk of detection bias. Because most studies relied on subjective measures, a blinded assessor could reduce the risk of exaggerated reporting (Hróbjartsson et al., 2012). Nine trials were rated as unclear risk for selective reporting because no registered protocol was available for comparison. Trial registration is important for detecting publication bias and ensuring that trial outcomes are not selectively published (Wager & Williams, 2013). This review found no major issues with incomplete outcome data, because most included studies (n = 20) either had low attrition rates (Anderson et al., 2017) or used strategies such as intention‐to‐treat analysis to manage attrition bias appropriately (Flett et al., 2020).

The certainty of the evidence in this review was downgraded for several reasons: the high or unclear risk of bias in most trials, high heterogeneity, and variation in the content, design and features of the digital training. Certainty ranged from low to very low, indicating uncertainty in the effect estimates (Schünemann et al., 2017). Collectively, these findings indicate that the quality of future trials needs to improve so that well‐grounded inferences can be drawn from their conclusions.

4.3. Resilience

This meta‐analysis found that resilience in the intervention group improved significantly following digital resilience training. This finding is consistent with previous reviews of resilience training and supports the value of delivering resilience training remotely and conveniently in digital form (Ang et al., 2022; Angelopoulou & Panagopoulou, 2021; Dray et al., 2017; Joyce et al., 2018). Several reasons are proposed for this finding. Firstly, in line with resilience theory (Szanton & Gill, 2010), the included trials contained content targeting the individual and relational protective factors that enhance resilience. At the individual level, the included trials adopted techniques such as optimism and cognitive appraisal skills that can enhance resilience (Alvord et al., 2016; Carr et al., 2013). Digital training also included skills that served to enhance participants' relationships. Developing social skills such as communication and empathy can strengthen connections with others (Alvord et al., 2016). This ability can potentially increase one's access to strong and meaningful relationships, which may enhance resilience through mechanisms such as a sense of safety, a sense of belonging and social support (Hill et al., 2020; Li et al., 2021).

Secondly, half of the included trials (n = 11) had small sample sizes, which could have inflated the effect size (Kühberger et al., 2014). This was observed in our meta‐analysis, where a study with a sample size of 5 yielded a large effect (g = 1.24), whereas a larger study (n = 396) yielded a modest effect (g = 0.51). Inflated effect sizes in small samples were also reported in another meta‐analysis (Ang et al., 2022). This suggests a need to look beyond conventional effect size categories and identify other indicators that can inform practice and policy (Kraft, 2020; Schäfer & Schwarz, 2019). More work is therefore needed to establish effect size benchmarks specific to resilience interventions.

It is also important to consider the different theoretical orientations of the included resilience scales when drawing conclusions on the effectiveness of digital resilience training. Although theoretical orientation did not significantly affect the resilience outcome (p = 0.19), scales with a trait orientation showed a moderate effect (g = 0.65), whereas scales with a process or outcome orientation showed small effects (g = 0.29 and 0.15, respectively). The positive effect of resilience training was significant in studies using trait‐oriented scales (p < 0.05) but not in those using outcome‐oriented (p = 0.07) or process‐oriented scales (p = 0.10). This result could reflect the large number of studies (n = 17) that used a trait‐oriented scale. Furthermore, because trait‐oriented scales focus on one's abilities and skills, these may be the attributes most directly enhanced by resilience training. For instance, existing resilience training focuses on equipping participants with skills that enable better coping and regulation. In line with the trait orientation (Ong et al., 2006), such training helps participants develop their ability to overcome adversity.

4.4. Essential features

The subgroup analyses contribute to the broader resilience literature by identifying content and design considerations for future trials of digital resilience training. First, this review found that digital training that included cognitive flexibility was less effective. This finding may be skewed because only five studies (22.7%) included cognitive flexibility training in their programme. In addition, cognitive flexibility is commonly taught by training individuals to generate alternative solutions and to apply problem‐solving skills (Alvord et al., 2016; Ionescu, 2012). Such training is relatively complex and may require therapist or provider support. Given that most digital training was designed as a self‐help programme (54.5%), the lack of guidance to help participants reframe their thinking may explain why it did not improve their resilience.

Second, this review found that digital training delivered through the Internet significantly improved participants' resilience. Because the Internet can be accessed from almost any electronic device (computer, smartphone or tablet), its wide accessibility could explain why this mode appeared more effective than the others (Houlden & Veletsianos, 2019), although the subgroup difference by platform was not statistically significant. In addition, the grand mean age of participants was 30 years, and 15 trials (84.62%) recruited students or employees; these individuals are likely to have higher digital literacy (Abdulai et al., 2021; Buckingham, 2016) and may also have Internet access at school or at work. These factors may explain why the Internet mode performed better. However, they also imply that individuals with poorer digital literacy or without Internet access will have limited access to digital resilience training.

Third, providing participants with a flexible schedule was associated with greater improvement in resilience. Flexible learning formats are increasingly popular in massive open online courses (MOOCs) and institutes of higher learning. Flexible programme schedules give users more control over how they organise their learning, which may increase their interest and sustain their participation in the programme (Li et al., 2020; Wanner & Palmer, 2015). However, online self‐paced programmes depend heavily on individual motivation (Hartnett, 2016) and present their own unique challenges (Shorey & Chua, 2021). For instance, students have been reported to prefer a mix of asynchronous and synchronous learning (Shorey & Chua, 2021). More work is therefore needed to determine the right degree of flexibility.

Fourth, trials that adopted a usual care control group showed larger effects. This may be because participants in waitlist control groups can present with an expectancy effect, whereas those in active control groups can often identify whether they are in the active or inactive group, giving rise to inaccurate reports (Hart et al., 2008). Given that waitlist control designs can also hamper recruitment and affect the overall effect (Hart et al., 2008), future trials may consider a usual care control group design. Alternatively, trials with a waitlist design may mitigate the expectancy effect by using objective outcome measures.

Finally, trials that were not registered on trial registries (n = 8) or did not use intention‐to‐treat analyses (n = 13) had a greater effect on resilience outcomes. A review found that studies that did not register their trials tended to report favourable, statistically significant outcomes (Wayant et al., 2017), which may explain the larger effects among unregistered trials in this review. With regard to analytical methods, the ITT approach (which analyses all randomised participants regardless of non‐compliance or deviation from the protocol) yields a conservative estimate of the treatment effect (Gupta, 2011). Studies without intention‐to‐treat analyses may therefore inflate the effect size (Gupta, 2011). Future studies should conform to the Consolidated Standards of Reporting Trials (CONSORT) guidelines (Bennett, 2005) by registering their trials and, when faced with substantial attrition, should use ITT approaches or an equivalent so that the reported treatment effects are not inflated.
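The direction of the ITT versus completers‐only difference described above can be illustrated with a toy example. The sketch assumes hypothetical resilience change scores in which participants lost to follow‐up are imputed as showing no change (baseline observation carried forward). This is only one simple, conservative imputation choice; trials may instead use approaches such as multiple imputation. In this toy data more participants are missing from the intervention arm, so keeping them in the analysis pulls the intervention mean, and hence the estimated difference, down.

```python
from statistics import mean

def mean_difference(treatment, control, itt=True):
    """Difference in mean change scores; None marks a participant lost to follow-up.

    itt=True keeps everyone randomised and imputes no change (0.0) for missing
    follow-up; itt=False analyses completers only.
    """
    def arm_mean(scores):
        if itt:
            return mean(0.0 if s is None else s for s in scores)
        return mean(s for s in scores if s is not None)
    return arm_mean(treatment) - arm_mean(control)

# Hypothetical change scores, for illustration only.
treatment = [4, 5, None, 6, None, 3]
control = [1, 2, 1, None, 2, 0]
print("ITT:", round(mean_difference(treatment, control, itt=True), 2))              # ITT: 2.0
print("Completers only:", round(mean_difference(treatment, control, itt=False), 2))  # Completers only: 3.3
```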

4.5. Anxiety

This meta‐analysis found that digital resilience training did not significantly improve anxiety at post‐intervention, as also reported in other reviews (Dray et al., 2017; Leppin et al., 2014). The lack of statistical significance could reflect limited statistical power, as only three studies that measured anxiety were pooled. At follow‐up, however, a statistically significant improvement in anxiety was observed in the intervention group, as similarly reported in one review (Dray et al., 2017). This could be due to the inclusion of a study with a larger sample size (Flett et al., 2019). Further, digital training teaches self‐regulation skills, which have been described as helping individuals adopt strategies and behavioural changes to regulate their anxiety (Sandars & Cleary, 2011). Digital training also has a habitual component: participants are required to adopt new behaviours and then translate their newly acquired skills into habits. Because this habit‐forming process can take from 21 to 66 days (Gardner et al., 2012; Lally & Gardner, 2013), it could explain why the improvement was detected only at follow‐up.

4.6. Depressive symptoms

This review found that depressive symptoms were significantly reduced following digital resilience training, as also reported in two reviews (Ang et al., 2022; Dray et al., 2017). This could be due to the use of optimism and cognitive appraisal techniques, which are known to relieve depressive symptoms (Kelberer et al., 2018; Wong & Lim, 2009). Cognitive appraisal, in particular, has been shown to reduce negative emotions and promote positive emotions (Troy et al., 2018), which may explain how it reduces the low mood associated with depressive symptoms. In line with cognitive behavioural theories of depression (Blatt & Maroudas, 1992), cognitive reappraisal techniques allow one to reinterpret a situation and change its emotional trajectory, thus alleviating depressive symptoms (Gross & John, 2003). Further, the presence of a therapist in guided digital interventions resembles cognitive behavioural therapy and may have given participants access to formal social support. With the introduction of social competency skills for building relationships, individuals may gain more access to social support, which may provide an additional protective buffer (Alsubaie et al., 2019). Collectively, as depression continues to be the most common mental health disorder (Lim et al., 2018), future digital resilience training should build its content around cognitive behavioural techniques that could ameliorate depressive symptoms.

4.7. Stress symptoms

This meta‐analysis found that stress did not improve following digital resilience training. This finding was echoed by Leppin et al. (2014) but contradicted by another review (Ang et al., 2022). It was unexpected, given the reported linear relationship between resilience and stress (Smith et al., 2018). A plausible reason is that the small number of studies (n = 5) provided insufficient statistical power to detect a change. In addition, most included trials that evaluated stress (n = 6) were conducted among healthy students who may not have been experiencing significant stress. Only two studies (Ebert et al., 2021; Harrer et al., 2018) specifically focused their training on stress. As most of the training focused on psychological stress, it may not benefit individuals experiencing other forms of stress (e.g., physiological stress). It would therefore be premature to draw conclusions on the effect of digital training on stress; instead, more trials are needed to confirm its effectiveness.

4.8. Strengths and limitations

This work has several strengths and limitations. To the authors' knowledge, this systematic review and meta‐analysis is the first to examine the effectiveness of digital resilience training. The findings were drawn from randomised controlled trials, the gold standard for establishing causal relationships. A comprehensive three‐step search of eight electronic databases, trial registries and grey literature was conducted to retrieve all potentially eligible published and unpublished studies. Because the included studies had small sample sizes, Hedges' g was used as the effect size estimate, as it corrects the small‐sample bias of the standardised mean difference (Hedges & Olkin, 2014; Lakens, 2013).
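Hedges' g is Cohen's d multiplied by a small‐sample correction factor J = 1 − 3/(4(n₁ + n₂) − 9), which shrinks the estimate slightly toward zero, most noticeably in very small trials. The sketch below is a minimal implementation using the pooled‐SD formula and a common large‐sample approximation for the variance of d; the function name and inputs are hypothetical. The correction addresses only the small‐sample bias of d; it does not remove the inflation from selective publication of small studies discussed in Section 4.3.

```python
import math

def hedges_g(m1, sd1, n1, m2, sd2, n2):
    """Return Hedges' g and its sampling variance for two independent groups."""
    # Pooled within-group standard deviation
    sd_pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))
    d = (m1 - m2) / sd_pooled                          # Cohen's d
    j = 1 - 3 / (4 * (n1 + n2) - 9)                    # small-sample correction factor
    var_d = (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))
    return j * d, j ** 2 * var_d

# Same raw difference (2 points, SD 4) in a very small and a larger trial.
for n in (5, 200):
    g, var_g = hedges_g(12.0, 4.0, n, 10.0, 4.0, n)
    print(f"n per arm = {n}: g = {g:.3f}, SE = {math.sqrt(var_g):.3f}")
```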

However, this review has several limitations. Eligible articles not published in English were excluded, which may have introduced language bias. High heterogeneity was also detected; this was not unexpected, because the eligible trials varied in their populations (e.g., healthy or diseased individuals) and intervention content. Additionally, the included trials had relatively small sample sizes, which could lead to overestimated effect sizes. The use of subjective outcome measures may be limited by social desirability bias and expectancy effects. Finally, the GRADE assessment indicated low to very low certainty in the findings.

4.9. Implications

The findings of this review suggest that digital training is a promising way to improve resilience, helping individuals recover and bounce back, with preliminary evidence of reductions in anxiety and depressive symptoms. The COVID‐19 pandemic has worsened mental health, so using digital platforms to make resilience training accessible to the broader population is timely and necessary. Notwithstanding the limitations above, the following implications are outlined.

This review found that studies using a trait‐oriented scale had positive effects. Although this is beyond the scope of this review, it suggests that attention is needed to ensure that the theoretical basis of resilience training matches the outcome it measures. As contemporary resilience literature (Alvord et al., 2016; Van Breda, 2018) shifts towards viewing resilience as a set of competencies such as assets, resources and protective factors (i.e., process), rather than as one with deficits (i.e., trait), it is critical to re‐examine how resilience should be evaluated. This is supported by a recent review which found that the theoretical orientation of resilience scales influenced their outcomes (Cheng et al., 2022). More research examining the effect of resilience training on trait, process and outcome orientations is needed to guide further expansion of the resilience literature, including work that draws links between the trait, process and outcome indicators of resilience. This, in turn, would inform how training programmes can effectively measure their influence on one's resilience.

From a content perspective, not imparting cognitive flexibility skills was associated with greater improvement in resilience. However, it is premature to draw this conclusion given the relatively small number of included trials and inflated effect sizes. Furthermore, this finding contradicts existing evidence that cognitive flexibility is a protective factor for building resilience (Alvord et al., 2016). The use of cognitive flexibility skills should therefore be examined further before conclusions are drawn.

Regarding the most suitable platform, the Internet appeared to outperform other digital platforms (e.g., mobile applications). Although access to the Internet has improved substantially, this finding should be interpreted in context: certain disadvantaged groups (e.g., people who are homeless or financially challenged) continue to face a digital divide (Majeed et al., 2020; Vázquez et al., 2015), and alternative platforms should be considered for these individuals.

To the authors' knowledge, few studies have specifically examined the interactivity of digital training. This question is meaningful as educators, trainers and administrators seek suitable ways to conduct online training. Although this review found that giving participants flexibility improved their resilience, other factors such as motivation come into play, and attention to the target population is necessary when designing future resilience training. For instance, it will be important to conduct a needs assessment of participants' learning patterns within the chosen community or institution to ensure that resilience training remains effective (Ang et al., 2021).

From an application perspective, the findings support the use of digital resilience training from both a preventive and a psychotherapeutic stance. As stigma against mental health problems and psychotherapy remains prevalent (Henderson & Gronholm, 2018; Kendra et al., 2014; Lannin et al., 2013), delivering interventions over a digital platform should be considered as a strategy to reduce stigma (Bayar et al., 2009). Furthermore, a preventive, strength‐building approach can potentially improve the population's resilience and further protect people against mental health issues (Alvord et al., 2016; Gheshlagh et al., 2017).

Finally, the proposed features (i.e., Internet delivery and a flexible schedule) provide a starting point for future training programmes. Key stakeholders should not rely on resilience training as the only means of ensuring mental wellness. Instead, needs assessments that identify target participants' wants for resilience training and their acceptance of, and preferences for, a digital platform will be useful (Perski & Short, 2021). This approach may help curate a digital programme tailored to the target population, making it accessible and sustainable. Given that the certainty of the overall evidence for digital resilience training ranged from low to very low, further trials are needed for more conclusive findings.

4.10. Recommendations for future trials

Based on the overall evidence, this review proposes several recommendations for future resilience training programmes. First, as resilience may be promoted by various protective factors (Szanton & Gill, 2010), future trials should endeavour to identify the factor, or combination of factors, that best improves resilience. Second, resilience training may be designed around a flexible learning model and delivered via the Internet. Third, additional large‐scale randomised controlled trials are needed to confirm the effectiveness of digital resilience training; based on our findings, these trials should be prospectively registered on trial registries and adopt intention‐to‐treat analyses so that outcomes are not selectively reported or inflated. Lastly, this review was unable to determine the most suitable duration or the cost‐effectiveness of digital resilience training, and these should be considered as outcomes in future trials. More convincing results from such studies may persuade policy makers and insurance companies to incorporate digital health services further, thereby broadening universal health coverage.

5. CONCLUSION

This review applied a rigorous and systematic approach to examine the effectiveness of digital resilience training by conducting a meta‐analysis and meta‐regression of randomised controlled trials. Despite its limitations of high heterogeneity and publication bias, the findings lay a foundation for future work, and this review supports the use of digital resilience training from a strength‐based and preventive angle. Digital resilience training delivered over the Internet with a flexible training programme was found to exert a greater positive influence on psychological well‐being, improving resilience while reducing anxiety and depressive symptoms. Future trials are needed to examine the effects of digital training platforms on resilience. Large‐scale randomised controlled trials that are prospectively registered and adopt intention‐to‐treat analysis must be performed to ascertain the effectiveness of digital resilience training.

CONFLICT OF INTEREST

All authors declare that they have no conflicts of interest.

ETHICAL STATEMENT

Not required.

AUTHOR CONTRIBUTION

Wei How Darryl Ang: Data curation, formal analysis, investigation, writing – original draft, writing – review and editing and visualization. Han Shi Jocelyn Chew: Data curation, formal analysis, writing – original draft and writing – review and editing. Jie Dong: Writing – original draft and writing – review and editing. Huso Yi: Writing – original draft and writing – review and editing. Rathi Mahendren: Writing – original draft and writing – review and editing. Ying Lau: Conceptualization, methodology, design, validation, formal analysis, investigation, resources, data curation, writing – original draft, writing – review and editing, visualization and supervision.

Supporting information

Supplementary Information S1

ACKNOWLEDGEMENTS

We would like to thank the authors who sent further information on their studies for the purpose of this systematic review. This review was supported by the Workforce Development Applied Research Fund grant WF19‐14 and the NUS Teaching Enhancement Grant TEG 2021. The funding sources, Workforce Development Singapore and the National University of Singapore, had no role in the study design, collection, analysis or interpretation of the data, writing of the manuscript, or the decision to submit this paper for publication.

Ang, W. H. D., Chew, H. S. J., Dong, J., Yi, H., Mahendren, R., & Lau, Y. (2022). Digital training for building resilience: Systematic review, meta‐analysis, and meta‐regression. Stress and Health, 38(5), 848–869. 10.1002/smi.3154

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available from the corresponding author upon reasonable request.

REFERENCES

*References marked with an asterisk were included in the meta‐analyses

- Abdulai, A.‐F., Tiffere, A.‐H., Adam, F., & Kabanunye, M. M. (2021). COVID‐19 information‐related digital literacy among online health consumers in a low‐income country. International Journal of Medical Informatics, 145, 104322. 10.1016/j.ijmedinf.2020.104322

- *Aiken, K. A., Astin, J., Pelletier, K. R., Levanovich, K., Baase, C. M., Park, Y. Y., & Bodnar, C. M. (2014). Mindfulness goes to work: Impact of an online workplace intervention. Journal of Occupational and Environmental Medicine, 56(7), 721–731. 10.1097/JOM.0000000000000209

- Alsubaie, M. M., Stain, H. J., Webster, L. A. D., & Wadman, R. (2019). The role of sources of social support on depression and quality of life for University students. International Journal of Adolescence and Youth, 24(4), 484–496. 10.1080/02673843.2019.1568887

- Althubaiti, A. (2016). Information bias in health research: Definition, pitfalls, and adjustment methods. Journal of Multidisciplinary Healthcare, 9, 211–217. 10.2147/JMDH.S104807

- Alvord, M. K., Rich, B. A., & Berghorst, L. H. (2016). Resilience interventions. In APA handbooks in psychology®. APA handbook of clinical psychology: Psychopathology and health (pp. 505–519). 10.1037/14862-023

- *Anderson, G. S., Vaughan, A. D., & Mills, S. (2017). Building personal resilience in paramedic students. Journal of Community Safety and Well‐Being, 2(2), 51–54. 10.35502/jcswb.44

- Ang, W. H. D., Lau, S. T., Cheng, L. J., Chew, H. S. J., Tan, J. H., Shorey, S., & Lau, Y. (2022). Effectiveness of resilience interventions for higher education students: A meta‐analysis and metaregression. Journal of Educational Psychology. Advance online publication. 10.1037/edu0000719

- Ang, W. H. D., Shorey, S., Lopez, V., Chew, H. S. J., & Lau, Y. (2021). Generation Z undergraduate students’ resilience during the COVID‐19 pandemic: A qualitative study. Current Psychology, 1–15. 10.1007/s12144-021-01830-4

- Angelopoulou, P., & Panagopoulou, E. (2021). Resilience interventions in physicians: A systematic review and meta‐analysis. Applied Psychology: Health and Well‐Being, 14(1), 3–25. 10.1111/aphw.12287

- Angermeyer, M. C., Van Der Auwera, S., Carta, M. G., & Schomerus, G. (2017). Public attitudes towards psychiatry and psychiatric treatment at the beginning of the 21st century: A systematic review and meta‐analysis of population surveys. World Psychiatry, 16(1), 50–61. 10.1002/wps.20383

- Armaou, M., Konstantinidis, S., & Blake, H. (2020). The effectiveness of digital interventions for psychological well‐being in the workplace: A systematic review protocol. International Journal of Environmental Research and Public Health, 17(1), 255. 10.3390/ijerph17010255

- Baqai, K. (2020). Resilience over recovery: A feasibility study on a self‐taught resilience programme for paramedics [Doctoral dissertation]. University of Central Lancashire.

- *Barry, K. M., Woods, M., Martin, A., Stirling, C., & Warnecke, E. (2019). A randomized controlled trial of the effects of mindfulness practice on doctoral candidate psychological status. Journal of American College Health, 67(4), 299–307. 10.1080/07448481.2018.1515760

- Bartone, P. T. (2007). Test‐retest reliability of the dispositional resilience scale‐15, a brief hardiness scale. Psychological Reports, 101(3), 943–944. 10.2466/pr0.101.7.943-944

- Bayar, M. R., Poyraz, B. Ç., Aksoy‐Poyraz, C., & Arikan, M. K. (2009). Reducing mental illness stigma in mental health professionals using a web‐based approach. Israel Journal of Psychiatry, 46(3), 226.

- *Bekki, J. M., Smith, M. L., Bernstein, B. L., & Harrison, C. (2013). Effects of an online personal resilience training program for women in STEM doctoral programs. Journal of Women and Minorities in Science and Engineering, 19(1), 17–15. 10.1615/JWomenMinorScienEng.2013005351

- Bennett, J. A. (2005). The consolidated standards of reporting trials (CONSORT): Guidelines for reporting randomized trials. Nursing Research, 54(2), 128–132. 10.1097/00006199-200503000-00007

- Blatt, S. J., & Maroudas, C. (1992). Convergences among psychoanalytic and cognitive‐behavioral theories of depression. Psychoanalytic Psychology, 9(2), 157–190. 10.1037/h0079351

- Bliese, P. D., Edwards, J. R., & Sonnentag, S. (2017). Stress and well‐being at work: A century of empirical trends reflecting theoretical and societal influences. Journal of Applied Psychology, 102(3), 389–402. 10.1037/apl0000109

- Borenstein, M., Hedges, L. V., Higgins, J. P., & Rothstein, H. R. (2010). A basic introduction to fixed‐effect and random‐effects models for meta‐analysis. Research Synthesis Methods, 1(2), 97–111. 10.1002/jrsm.12

- Brewer, M. L., Van Kessel, G., Sanderson, B., Naumann, F., Lane, M., Reubenson, A., & Carter, A. (2019). Resilience in higher education students: A scoping review. Higher Education Research and Development, 38(6), 1105–1120. 10.1080/07294360.2019.1626810

- Bring, J. (1994). How to standardize regression coefficients. The American Statistician, 48(3), 209–213. 10.2307/2684719

- *Brog, N. A., Hegy, J. K., Berger, T., & Znoj, H. (2022). Effects of an internet‐based self‐help intervention for psychological distress due to COVID‐19: Results of a randomized controlled trial. Internet Interventions, 27, 100492. 10.1016/j.invent.2021.100492

- Buckingham, D. (2016). Defining digital literacy. Nordic Journal of Digital Literacy, 10(Jubileumsnummer), 21–34. 10.18261/ISSN1891-943X-2015-Jubileumsnummer-03

- Car, L. T., Kyaw, B. M., Panday, R. S. N., van der Kleij, R., Chavannes, N., Majeed, A., & Car, J. (2021). Digital health training programs for medical students: Scoping review. JMIR Medical Education, 7(3), e28275. 10.2196/28275

- Carolan, S., Harris, P. R., & Cavanagh, K. (2017). Improving employee well‐being and effectiveness: Systematic review and meta‐analysis of web‐based psychological interventions delivered in the workplace. Journal of Medical Internet Research, 19(7), e271. 10.2196/jmir.7583

- Carr, W., Bradley, D., Ogle, A. D., Eonta, S. E., Pyle, B. L., & Santiago, P. (2013). Resilience training in a population of deployed personnel. Military Psychology, 25(2), 148–155. 10.1037/h0094956

- Carver, C. S., Scheier, M. F., & Segerstrom, S. C. (2010). Optimism. Clinical Psychology Review, 30(7), 879–889. 10.1016/j.cpr.2010.01.006

- Chen, K.‐C., & Jang, S.‐J. (2010). Motivation in online learning: Testing a model of self‐determination theory. Computers in Human Behavior, 26(4), 741–752. 10.1016/j.chb.2010.01.011

- Cheng, C. K. T., Chua, J. H., Cheng, L. J., Ang, W. H. D., & Lau, Y. (2022). Global prevalence of resilience in healthcare professionals: A systematic review, meta‐analysis, and meta‐regression. Journal of Nursing Management, 30(3), 795–816. 10.1111/jonm.13558

- Chmitorz, A., Kunzler, A., Helmreich, I., Tüscher, O., Kalisch, R., Kubiak, T., Wessa, M., & Lieb, K. (2018). Intervention studies to foster resilience–A systematic review and proposal for a resilience framework in future intervention studies. Clinical Psychology Review, 59, 78–100. 10.1016/j.cpr.2017.11.002

- Cohen, J. (1960). A coefficient of agreement for nominal scales. Educational and Psychological Measurement, 20(1), 37–46. 10.1177/001316446002000104

- Connor, K. M., & Davidson, J. R. (2003). Development of a new resilience scale: The Connor‐Davidson resilience scale (CD‐RISC). Depression and Anxiety, 18(2), 76–82. 10.1002/da.10113

- Dray, J., Bowman, J., Campbell, E., Freund, M., Wolfenden, L., Hodder, R. K., McElwaine, K., Tremain, D., Bartlem, K., Small, T., Palazzi, K., Oldmeadow, C., Wiggers, J., & Bailey, J. (2017). Systematic review of universal resilience‐focused interventions targeting child and adolescent mental health in the school setting. Journal of the American Academy of Child & Adolescent Psychiatry, 56(10), 813–824. 10.1186/s13643-015-0172-6

- Droit‐Volet, S., Gil, S., Martinelli, N., Andant, N., Clinchamps, M., Parreira, L., Rouffiac, K., Dambrun, M., Huguet, P., Pereira, B., Bouillon, J. B., Dutheil, F., COVISTRESS network, & Dubuis, B. (2020). Time and Covid‐19 stress in the lockdown situation: Time free, «Dying» of boredom and sadness. PLoS One, 15(8), e0236465. 10.1371/journal.pone.0236465

- *Ebert, D. D., Franke, M., Zarski, A., Berking, M., Riper, H., Cuijpers, P., Funk, B., & Lehr, D. (2021). Effectiveness and moderators of an internet‐based mobile‐supported stress management intervention as a universal prevention approach: Randomized controlled trial. Journal of Medical Internet Research, 23(12), e22107. 10.2196/22107