Abstract

Objectives

Difficulties with understanding research literature can lead to nurses having low engagement with evidence‐based practice (EBP). This study aimed to test the feasibility of an education intervention using an academic literacies approach to improve nurses' research literacy.

Methods

An interactive workshop was devised utilizing genre analysis and tested in a pre/post pilot study. EBP self‐efficacy was measured at baseline and posttest using the Self‐Efficacy in Evidence‐Based Practice instrument (26 items on an 11‐point scale for total scores from 0 to 260). Research comprehension was measured with a 10‐question quiz (range 0−10).

Results

When analyzed with a paired t‐test, EBP self‐efficacy increased significantly (MD: 56.9, SD: 39.9, t = 4.5, df = 9, p = .001). Research comprehension also improved (MD: 1.1, SD: 1.1, t = 2.9, df = 9, p = .01). The workshop evaluations (n = 9) were overwhelmingly positive.

Conclusion

This novel approach to research pedagogy aligns well with adult learning theory and social learning theory and is suitable for small group learning in continuing education. There is considerable potential for further work in this area. Genre analysis shows promise as a strategy for teaching nurses to understand research literature.

Keywords: education, evidence based, innovations, quantitative, research, teaching/learning

1. INTRODUCTION

Research literacy, or the ability to understand and apply research to practice, is a vital skill for nursing practice. 1 Evidence‐based practice (EBP) is required of nurses in countries worldwide. Using research evidence means understanding the language of research, and this can often be quite difficult. 2 Reading and understanding research requires a specific type of academic literacy that not all nurses have had the educational opportunities to develop.

Scientific research literature can be considered a specific genre of written communication, and fully grasping its meaning requires an understanding of its particular linguistic features. 3 The language used in research literature is of a specific kind; it can be conceptualized as an individual language register with its own conventions, grammar, word usage, and jargon. 4 Scientific research language has been described as a blend of natural and specific language, visual data, standard discourse characteristics, and unique symbolism. 5

Different genres of written communication, such as research, can be analyzed and understood by following the process of genre analysis. 6 While a number of models and theories for genre analysis have been proposed, this study used the model proposed by Flowerdew, based on the work of Swales. 7 Flowerdew's model asserts that understanding genre begins by examining structure, style, content, and purpose. 7 The purpose of each text element of a research article (the introduction, methods, results, and discussion) is analyzed for the actions it performs, known as its “moves.” 6 The words and phrases typically used to indicate actions within the individual sections of the text are examined so they can be understood. 7 By participating in this process, learners develop an understanding of how to navigate the text and understand the language that indicates what is happening in each part of it. 8 This technique is beneficial for learning to read documents with a high degree of similarity, such as research papers. 3

A 2006 paper explored using genre analysis to improve the research literacy of undergraduate English majors in Taiwan. 9 While reading and understanding research was a component of the study, the main focus was on developing students' ability to write a research paper. 9 Participants were guided to look for the linguistic features of each move in the texts they were studying, including those terms explicitly associated with research. 9 Students who engaged with the research literacy program achieved significantly better results across most fields of their final assessment than those who did not engage. 9 While these participants were not health professionals, it seemed likely that similar results could be attained with a population of nurses.

Nurses' research literacy has been identified as a vital contributing factor to nurses' engagement with EBP, or the lack of it. 2 Evidence implementation literature frequently highlights the gap between research and practice 10 and, despite the passage of over 50 years since it was first discussed in the literature, this gap is as apparent as ever. 11 Numerous theories and strategies have been proposed to bridge this gap in nursing, but the gap remains. 12 , 13 A “missing piece” of the research utilization puzzle may be the way nursing education has dealt with teaching nurses to read and apply research. With this idea in mind, a workshop using genre analysis and adult learning techniques specifically for use with nurses working in clinical practice was designed.

2. METHODS

2.1. Aims

The study aimed to explore whether research education using genre analysis and other language‐based teaching approaches that focused on reading and understanding research literature would be feasible for improving nurses' research comprehension and EBP self‐efficacy. Secondary aims were to assess the educational materials used by eliciting participant feedback. This study was planned as the first stage of a larger work implementing this intervention.

2.2. Research design

A pretest/posttest pilot study was conducted with a single facilitator delivering research education in a 6‐hour interactive workshop. The facilitator was a Nurse Researcher experienced in teaching EBP and research methods.

2.3. Setting

The study's setting was a tertiary acute health service providing public and privately funded healthcare to inpatient and outpatient adults, children, and neonates in Brisbane, Australia. Approximately 500,000 patients are seen by the health service per year, and approximately 2000 registered nurses are employed. A small range of on‐site EBP and research education activities were regularly provided by research staff; however, none were aimed specifically at addressing research literacy.

2.4. Population and sample

The sample was recruited from registered nurses working in the above setting. Analysis of unpublished internal data from the health service found that the nursing staff were predominantly female (93%) and registered nurses, that the majority spoke English as their first language, and that most worked in patient care. Their highest educational achievement was most commonly a bachelor's degree, although almost a third had completed a graduate certificate or diploma.

A convenience sample of 10 participants was recruited, as the aim of the study was to test the intervention rather than to seek statistical evidence of an effect. The sample comprised registered nurses who self‐identified as experiencing difficulties with reading and understanding research literature. The sample was recruited using the health service's internal electronic notices system and staff email.

2.5. Intervention

A 6‐hour face‐to‐face educational workshop was designed to be delivered in 1 day. The workshop teaching approaches used concepts from Adult Learning Theory, 14 social learning theory, 15 genre analysis techniques, 16 and academic literacies techniques in a context appropriate to nursing to facilitate clinicians' understanding of the language used in research and EBP publications. 17 The intervention was designed to improve research literacy through focusing on understanding the language of research. The workshop used solely quantitative research examples and activities, as quantitative research is the most common research methodology encountered by nurses and the one they find most challenging to understand. 18 , 19 The overall learning outcome was participants' understanding of how each part of the research paper contributes to telling the story of the research.

The workshop structure followed the process used in genre analysis, where key “moves” or parts of research papers are highlighted and understood. 3 , 6 The focus of learning in this technique was what each part of the paper is doing, as well as what it is saying. Each “standard” section of a research paper (introduction, methods, results, and discussion/conclusions) formed a separate workshop session. Throughout the workshop, participants were encouraged to identify words and phrases they did not understand, and strategies for locating their meanings were discussed. Each participant was given a workbook with relevant examples from research papers and activities to work through during the workshop sessions.

The workshop began with a discussion of participants' reasons for wanting to know more about reading research. The group discussed their previous experience with research either inside or outside of nursing, in terms of participating, using, and reading research. The workshop continued with outlining and introducing genre analysis, then segued into the specifics of moves occurring in introduction sections, such as presenting background information, which was illustrated with examples of how this information is presented in different quantitative papers.

The second session dealt with the methods sections of research papers. The session began with illustrations of several methods sections from different quantitative research articles, highlighting the moves. The group discussed the different ways papers may perform these moves, before focusing on the example paper's methods section. Activity‐based learning was used in this session because the purpose of a methods section is to describe what the researchers did. An activity based on Thiel's “cookie experiment” 20 was used to physically demonstrate randomization, blinding, and data collection. Physically acting out key concepts in this way can help to fix their meanings through embodied learning. 21

Participants were randomized into two groups using identical sealed envelopes containing a randomly allocated assignment to a group and an individually wrapped identical‐looking chocolate cookie (name brand or store brand). Participants were then asked to record some data about themselves and the cookie they received, including recording on a numerical rating scale their rating of the cookie's deliciousness. The deliciousness scale data was a starting point to discuss frequently used elements of data collection and the words used in different parts of a methods section. This prompted discussion of different data collection strategies, such as scales, measurement, observation, self‐report, and other options used for different kinds of data. These methods were discussed in terms of their relationship to the data collection the group had undertaken during the cookie activity. The data analysis section of the methods was covered in the following session on results to contextualize the group's learning properly.
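The paper does not describe how the envelope allocations were generated. A minimal sketch of one common approach is shown below, assuming balanced allocation (equal numbers per group, shuffled into random order) rather than simple coin‐flip randomization; the function name and the group labels "A" and "B" are hypothetical illustrations.

```python
import random


def make_envelope_allocations(n_participants, groups=("A", "B"), seed=None):
    """Hypothetical helper: one group label per sealed envelope.

    Assumes balanced allocation, so each group receives the same number
    of envelopes, placed in a random order.
    """
    if n_participants % len(groups) != 0:
        raise ValueError("n_participants must be a multiple of the number of groups")
    # Equal numbers of each label, then shuffle the order of the envelopes.
    allocations = list(groups) * (n_participants // len(groups))
    rng = random.Random(seed)  # a fixed seed makes the sequence reproducible
    rng.shuffle(allocations)
    return allocations


envelopes = make_envelope_allocations(10, seed=2017)
print(envelopes)
```

Under simple randomization, group sizes can differ by chance. Shuffling a balanced list guarantees equal groups while still keeping each envelope's contents unpredictable.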

The third session began with a discussion of the function of the results section in a research paper. Outcomes were discussed as a concept, with examples from various studies. Different ways of expressing results, and how to read them, were discussed, focusing initially on graphs and tables. A number of illustrations of descriptive and inferential statistics from different studies were shown, and questions were put to the group. Consistent and nonconsistent observations were illustrated using sections from different papers with the relevant moves highlighted (literally “here is what this part does”). Parametric and nonparametric data were explained as an introduction to the different words used to discuss choices in data analysis. In the cookie activity, participants had recorded dichotomous data from a question asking whether they would eat the cookie again, which helped to illustrate concepts related to this data type. In this session, that activity was extended: the participants moved back into their randomized groups, added together the dichotomous responses for each group, and calculated a percentage of “yes” responses. Questions prompted them to consider the limitations of simply reporting a percentage, and whether these results could have been reached by chance. Using the continuous data collected in the cookie activity, participants calculated a mean “taste” score from their earlier ratings of the cookie's deliciousness. This activity prompted a discussion of measures of variability within continuous data, such as standard deviations. The session concluded by introducing a basic “rule of thumb” for understanding p‐values and confidence intervals.
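The group calculations described above (the percentage of “yes” responses and the mean and standard deviation of the deliciousness ratings) can be sketched as follows. The data are invented purely for illustration and are not the study's data. The sketch uses the sample standard deviation (n − 1 denominator), which is an assumption, since the paper does not say which form was taught.

```python
from statistics import mean, stdev


def summarize_group(would_eat_again, ratings):
    """Summarize one randomized group from the cookie activity.

    would_eat_again: list of True/False answers (dichotomous data)
    ratings: list of numerical deliciousness ratings (continuous data)
    """
    pct_yes = 100 * sum(would_eat_again) / len(would_eat_again)
    return {
        "pct_yes": pct_yes,             # percentage answering "yes"
        "mean_rating": mean(ratings),   # central tendency of the ratings
        "sd_rating": stdev(ratings),    # sample SD (n - 1 denominator)
    }


# Hypothetical data for a single group of five participants (not study data).
group_a = summarize_group([True, True, False, True, True], [8, 7, 5, 9, 6])
print(group_a)
```

For these invented values, 4 of the 5 participants answered “yes” (80%), and the ratings have a mean of 7 and a sample SD of about 1.58.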

The final session addressed the functions of, and expectations for, the discussion and conclusion sections of a research paper. A section of a research study with the relevant genre analysis moves highlighted was shown, to discuss how the authors had accomplished the specific moves. Using an activity in their workbook, the group and the facilitator worked through an example discussion section and identified what the study found in the context of what it was trying to find, why the results were the way they were, and how the results connect to other research and literature. Participants were asked to find the moves in the discussion section of the example paper and to identify the paper's overall “bottom line” conclusion. The conclusions section required only a brief discussion to round out the session and complete the learning activity.

2.6. Outcomes

The primary outcome of EBP self‐efficacy was measured by the Self‐Efficacy in Evidence‐Based Practice (SE‐EBP) tool developed by Chang and Crowe. 22 This tool has been tested and found to be valid (r = .95) and reliable (α = .97) for measuring nurses' confidence in using EBP and their outcome expectancies when using EBP. 22 Factor analysis of the SE‐EBP tool shows that it measures three distinct factors (identifying the clinical problem, searching for evidence, and implementing evidence into practice), which are in line with the steps followed in EBP. 22 The SE‐EBP instrument has 26 items, for each of which participants indicate their level of confidence on an 11‐point scale (0 = no confidence, 10 = completely confident). Scores are whole numbers, with a minimum possible total of 0 and a maximum of 260. SE‐EBP was measured at both the preworkshop and postworkshop timepoints. A systematic review of the psychometric properties of EBP self‐efficacy scales included 11 scales and found that the SE‐EBP scale had the highest rated content validity according to the Consensus‐based Standards for the selection of health Measurement Instruments (COSMIN) checklist. 23 , 24
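The 0 to 260 range follows from 26 items scored 0 to 10. A minimal scoring sketch is shown below. It assumes, as that range implies, that the total is the unweighted sum of the item responses. The function is a hypothetical illustration, not the instrument developers' scoring procedure.

```python
from typing import Sequence

N_ITEMS = 26                # number of SE-EBP items, as described above
ITEM_MIN, ITEM_MAX = 0, 10  # 11-point confidence scale


def se_ebp_total(responses: Sequence[int]) -> int:
    """Hypothetical scorer: sum 26 whole-number item responses into a 0-260 total."""
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item responses, got {len(responses)}")
    for item, value in enumerate(responses, start=1):
        # Reject non-integers and values outside the 0-10 scale.
        if not isinstance(value, int) or not ITEM_MIN <= value <= ITEM_MAX:
            raise ValueError(f"item {item}: {value!r} is not a whole number from 0 to 10")
    return sum(responses)


print(se_ebp_total([5] * N_ITEMS))  # a respondent answering 5 on every item
```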

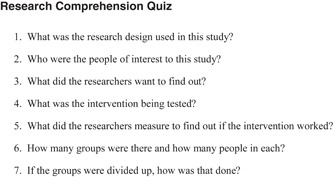

The secondary outcome was research literacy at pretest and posttest as measured by a quiz based on the content of a nursing research paper reporting a randomized controlled trial not otherwise used in the workshop. The researcher developed the quiz and piloted it with junior colleagues (nursing research interns) before the workshop. Research literacy is defined as the ability to read and understand research, and so the ideal measure is one that tests the application of the skill being taught. The research comprehension quiz used 10 questions covering participants' understanding of the research methods, the results (including understanding basic graphical representations of data) and the conclusions of a quantitative research study. Different research studies of similar complexity were used in the preworkshop and postworkshop assessments. Results were scored as the number of correct answers out of 10 (Figure 1).

Figure 1.

Research comprehension quiz items

Data were also collected on participants' age, sex, nursing role, type of clinical area worked, years of nursing experience, and prior participation in research education and EBP education. In addition, participants were asked to complete an evaluation of the workshop at the end of the day to give their opinions on the intervention and to make suggestions for changes.

2.7. Data analysis

Descriptive statistics were used to analyze the demographic data. Two‐tailed paired t‐tests were used to compare within‐subject changes in EBP self‐efficacy and research comprehension. Mean differences with standard deviations were calculated for pretest to posttest SE‐EBP and research comprehension scores. Statistical significance was set at p < .05. SPSS version 25 was used to conduct the analyses. Participant evaluations were summarized.
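The study's analyses were run in SPSS. As a cross‐check only, the paired t‐test quantities can be recomputed from the reported summary statistics of the within‐subject differences: SE = SD/√n, t = mean difference/SE, df = n − 1, and a 95% CI of mean difference ± t₀.₉₇₅,₉ × SE. The sketch below uses only the Python standard library. It hard‐codes the tabulated critical value t₀.₉₇₅,₉ ≈ 2.262 and obtains the two‐tailed p‐value by numerically integrating the t density with Simpson's rule. The function names are hypothetical.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t: float, df: int, steps: int = 10_000) -> float:
    """p = 1 - 2 * (area under the t density from 0 to |t|), via Simpson's rule."""
    b = abs(t)
    h = b / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 1 - 2 * (total * h / 3)


def paired_t_from_summary(mean_diff, sd_diff, n, t_crit):
    """Paired t-test from the mean and SD of the n within-subject differences."""
    se = sd_diff / math.sqrt(n)
    t = mean_diff / se
    df = n - 1
    ci = (mean_diff - t_crit * se, mean_diff + t_crit * se)
    return se, t, df, two_tailed_p(t, df), ci


# Reported SE-EBP summary: mean difference 56.9, SD 39.9, n = 10.
se, t, df, p, ci = paired_t_from_summary(56.9, 39.9, 10, t_crit=2.262)
print(f"SE = {se:.1f}, t = {t:.2f}, df = {df}, p = {p:.4f}, "
      f"95% CI = {ci[0]:.1f} to {ci[1]:.1f}")
```

This reproduces an SE of 12.6, a t of about 4.51, a two‐tailed p between .001 and .002, and a 95% CI of about 28.4 to 85.4. Because the inputs are already rounded, small discrepancies from the SPSS output in the last reported digit are expected.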

3. RESULTS

Ten registered nurses were recruited to participate in this pilot study. Two workshops were held to accommodate the required sample. The majority were female (n = 9), and the mean age was 45.7 (SD: 8.06) years. Participants came from various clinical backgrounds and nursing positions, but most were in supervisory roles. Most participants had lengthy nursing experience (mean 23.66 years, SD: 13; n = 9). The majority of participants had received some training in literature searching (n = 8) and using computers (n = 7). All but one had participated in previous EBP education; however, only three had received any specific research education. EBP education, if received, had taken place between 2 and 18 years previously (Table 1).

Table 1.

Participant descriptions (n = 10)

| Variable | N | Percent (%) |
|---|---|---|
| Female | 9 | 90 |
| Male | 1 | 10 |
| Previous research education | 3 | 30 |
| Previous EBP education | 9 | 90 |
| Previous education in literature searches | 8 | 80 |
| Previous computer use training | 7 | 70 |

| Variable | N | Mean ± SD | Minimum/maximum |
|---|---|---|---|
| Age (years) | 10 | 45.7 ± 8.0 | 37/59 |
| Years as RN | 9 | 23.6 ± 13.0 | 5/42 |

Abbreviations: EBP, evidence‐based practice; SD, standard deviation.

EBP self‐efficacy, as measured by the SE‐EBP instrument, increased in every participant at posttest, although the within‐subject changes varied widely (from 2 to 127 points). The mean pretest SE‐EBP score was 135.7 (SD: 51.2), which increased to a mean posttest score of 192.6 (SD: 48.6), a mean difference of 56.9 (SD: 39.9, t = 4.5, df = 9, p = .001 [two‐tailed]).

Research comprehension also improved, but to a lesser extent. The mean research comprehension score was 7.9 (SD: 2.4) at pretest and had risen to 9.0 (SD: 1.4) at posttest. The mean difference of 1.1 was statistically, but not practically, significant (t = 2.9, df = 9, p = .01) (Tables 2 and 3).

Table 2.

Mean SE‐EBP and research comprehension scores

| Instrument | Pretest (N = 10), mean ± SD | Posttest (N = 10), mean ± SD |
|---|---|---|
| SE‐EBP | 135.7 ± 51.2 | 192.6 ± 48.6 |
| Research comprehension | 7.9 ± 2.4 | 9.0 ± 1.4 |

Abbreviations: SD, standard deviation; SE‐EBP, Self‐Efficacy in Evidence‐Based Practice.

Table 3.

Within‐subject changes (n = 10)

| Instrument | Mean difference | SD | Std error mean | 95% CI of difference, lower | 95% CI of difference, upper | t | df | Sig (two‐tailed) |
|---|---|---|---|---|---|---|---|---|
| SE‐EBP | 56.9 | 39.9 | 12.6 | 28.3 | 85.4 | 4.5 | 9 | 0.001 |
| Research comprehension | 1.1 | 1.1 | 0.3 | 0.2 | 1.9 | 2.9 | 9 | 0.01 |

Abbreviations: CI, confidence interval; SD, standard deviation; SE‐EBP, Self‐Efficacy in Evidence‐Based Practice.

Nine of the ten participants completed a workshop evaluation form; the tenth participant left the workshop room without completing one. Evaluations were consistently positive, with all participants agreeing the workshop helped them to read a research paper. When asked about the most useful part of the day, responses focused on learning how to understand research language, deciphering results sections, working through tables and graphs, and understanding statistics. Suggestions for changes or improvements primarily focused on requests for more information, another follow‐up workshop, or more resources such as a glossary of terms or guides to reading research that they could take away with them and use (Table 4).

Table 4.

Participant evaluations

| Participant | Do you feel like the workshop helped you learn to read a research article? | What were the most useful parts of the day? | If you could change any part of the workshop, what would that be? | Do you have any other feedback? |
|---|---|---|---|---|
| P1 | Yes, overall. | Data analysis, research language, specific research analysis. | Perhaps appendix at back with key research terms/language and plain English translation. Used as a quick cheat sheet when analyzing research article. | Great day, just heavy‐going topic. |
| P2 | Yes, overall. | 1. Deciphering the language, what does the article actually mean? 2. Confidence to read through an article and try to understand it. 3. Highlights my need to attend EBP workshop. | A list of ask these questions, a go‐to list when I pick up an article, what do I look for. | Just thank you! |
| P3 | Yes, definitely. | The discussion following the different research papers. | I would be open to further information days and workshops. | Nil. |
| P4 | Yes. | All of it! | Larger text in slides with tables. | Still so much more to learn. Email quiz. Activity sheet to help me. When reading research articles. |
| P5 | Yes, a great introduction. | Discussion and self‐expression. | Small group was great. | Appreciated affirming manner, gave permission to own up to deficiencies. |
| P6 | Yes. | How to interpret jargon, what to focus on, how to read graphs, what's important. | May be prereading or prefilling in of preworkshop survey. | Thank you. Would love to follow up with you around research. |
| P7 | Yes, well structured. Core literacy discussed variety of different articles and research designs, methods, data analysis etc. it was really helpful to explore with you. | Application of theory by doing the activities with feedback by facilitator, variety of offerings. | Prereading with glossary of terms to refer to, although this was well‐captured in the session. | Well done, Probably one of the most useful workshops for research I have ever had. The Tim Tam randomization was a great practical exercise. |
| P8 | Yes, but think a second would help again. | The whole day was interesting. Looking at graphs and discussing the findings was very useful. | That there be a second one in a week or month to reassess and to build on what was learnt today. | Thank you! |
| P9 | Yes, a good reminder of how to dissect. A lot more practice required for confidence! | Earlier part of the day learning about statistical analysis. | Some written descriptor/definitions commonly used in the day for reference. An outline of what will covered before enrolling and on the day. | What else is on offer from research dept? |

4. DISCUSSION

While this study was not designed to provide evidence of effectiveness, it produced some thought‐provoking results. The study achieved its intended aim of testing the materials and workshop format and found them successful in terms of the outcomes measured, but questions remain. The relatively high baseline research comprehension scores and the large mean difference between pretest and posttest self‐efficacy scores might indicate that these participants lacked self‐efficacy or self‐confidence more than knowledge, and that the intervention helped to increase it. Several participants mentioned confidence or concerns in their evaluation feedback or in conversations with the workshop facilitator.

Participants in this study clearly did, on average, lack self‐efficacy in terms of EBP, and the posttest SE‐EBP score increased with both practical and statistical significance immediately after the intervention. Possibly, the individuals who self‐selected to participate in this study were those with some research knowledge but a high degree of uncertainty about it, hence their low self‐rated self‐efficacy. Some study participants displayed nervousness about sharing the results of their comprehension quizzes, even though they scored relatively highly, and their evaluation feedback was consistent with this, discussing fears about looking incompetent in front of their peers. Avoidance behavior and a tendency to catastrophize are closely tied to low self‐efficacy. 25

A number of interventions have been used to try to improve nurses' research utilization and EBP self‐efficacy. A systematic review examining interventions to improve nurses' research literacy included 10 studies that aimed to improve research knowledge, critical appraisal skills and EBP confidence and reported data on a range of different interventions, some effective, some not. 26 Overall, Hines et al. recommended that future educational interventions be based on appropriate theories and be highly interactive. 26 However, none of the included studies in Hines et al.'s review directly addressed nurses' research literacy and none used language‐based techniques such as those used in this study.

This study is not the first in the field of nursing to use genre analysis and other socio‐linguistic techniques to examine language and enable learning about it. Genre analysis has been used to examine the language of mental health nursing reports, 27 clinical nursing procedure texts, 28 and intra‐ and interprofessional communication, 29 among others. Genre analysis has also been used to improve the research literacy of English majors. 9 It does not appear, however, that genre analysis has yet been used to help nurses learn about the language of research. There is considerable potential for further work in this area.

Using genre analysis to focus on reading and understanding research, rather than other pedagogical strategies, has several benefits. Most nurses will never conduct their own research study but will need to become educated consumers of research. Nurses have identified the language of research as a barrier to EBP participation. 2 , 30 Using a language‐based approach to overcome a language problem seems both practical and logical.

Learning the language, however, may not be the whole solution. Nurses learn and use complex language constantly in their workplaces. The root of the problem may lie in differences in nurses' perceptions of the place of research in nursing, the worth of learning about research to the individual, or something specific to quantitative research literature, such as statistical reporting.

Future work on this intervention involves testing the workshop with larger numbers of participants and with different nurse populations. Currently, a course using genre analysis is being devised for use with remote area nurses and uses for allied health professionals are also being examined. Educators seeking to implement genre analysis in research education should focus the content of interventions on the meanings of commonly used research words and phrases. As language is often identified as a barrier to reading research, it is important to make improved research literacy the primary consideration when devising interventions. This approach is most suitable for beginning levels of research literacy where having a basic understanding of common methodological language improves confidence and willingness to read a full research paper.

5. CONCLUSIONS

Research literacy education using genre analysis and a language‐focused approach has considerable potential to be developed as a new strategy for teaching nurses to read and understand research. Genre analysis shows promise as a tool for nurse education. Further research into nurses' experiences of learning about research may lend insight into this complex issue.

ETHICS STATEMENT

The conduct of the study was approved by the study organization's Human Research Ethics Committee (approval number HREC/17/MHS/36). Each participant gave informed written consent after reading the study's participant information sheet.

ACKNOWLEDGMENTS

The authors would like to acknowledge the valuable contribution of Flinders Rural and Remote Health, Alice Springs, in enabling the completion of this project. We would also like to thank Mater Health for their support and assistance. The authors would also like to acknowledge the valuable contribution of the Australian College of Nursing for awarding Sonia Hines a Florence Nightingale PhD Scholarship. The funding body had no input into the study design, findings, or reporting. Open access publishing facilitated by Queensland University of Technology, as part of the Wiley ‐ Queensland University of Technology agreement via the Council of Australian University Librarians.

Hines S, Ramsbotham J, Coyer F. A theory‐based research literacy intervention for nurses: a pilot study. Nurs Forum. 2022;57:1052‐1058. 10.1111/nuf.12780

DATA AVAILABILITY STATEMENT

The data sets generated by this study (interview transcripts and recordings) are not publicly available due to the conditions of the ethical approval received to conduct the study.

REFERENCES

1. Jakubec S, Astle B. Research Literacy for Health and Community Practice. Canadian Scholars' Press; 2017.
2. Ubbink DT, Guyatt GH, Vermeulen H. Framework of policy recommendations for implementation of evidence‐based practice: a systematic scoping review. BMJ Open. 2013;3(1). 10.1136/bmjopen-2012-001881
3. Li L‐J, Ge G‐C. Genre analysis: structural and linguistic evolution of the English‐medium medical research article (1985–2004). English Specif Purp. 2009;28(2):93‐104. 10.1016/j.esp.2008.12.004
4. Gottlieb M. Assessing English language learners: bridges to educational equity: connecting academic language proficiency to student achievement. Corwin Press; 2016.
5. Silliman ER, Wilkinson LC, Brea‐Spahn M. Writing the science register and multiple levels of language. In: Bailey A, Maher CA, Wilkinson LC, eds. Language, literacy, and learning in the STEM disciplines: how language counts for English learners. Routledge; 2018:115‐139.
6. Nwogu KN. The medical research paper: structure and functions. English Specif Purp. 1997;16(2):119‐138.
7. Flowerdew J. Action, content and identity in applied genre analysis for ESP. Lang Teach. 2011;44(4):516‐528.
8. Tardy CM, Swales JM. Genre analysis. In: Schneider KP, Barron A, eds. Pragmatics of discourse. De Gruyter Mouton; 2014;3:165‐188.
9. Hsu W. Easing into research literacy through a genre and courseware approach. Electron J Foreign Lang Teach. 2006;3(1):70‐89.
10. Leach MJ, Hofmeyer A, Bobridge A. The impact of research education on student nurse attitude, skill and uptake of evidence‐based practice: a descriptive longitudinal survey. J Clin Nurs. 2016;25(1‐2):194‐203.
11. Westerlund A, Sundberg L, Nilsen P. Implementation of implementation science knowledge: the research‐practice gap paradox. Worldviews Evid Based Nurs. 2019;16(5):332‐334. 10.1111/wvn.12403
12. Davies P, Walker AE, Grimshaw JM. A systematic review of the use of theory in the design of guideline dissemination and implementation strategies and interpretation of the results of rigorous evaluations. Implement Sci. 2010;5:14.
13. Nesbitt J, Barton G. Nursing journal clubs: a strategy for improving knowledge translation and evidenced‐informed clinical practice. J Radiol Nurs. 2014;33(1):3‐8.
14. Knowles MS. The modern practice of adult education. Association Press; 1970.
15. Bandura A. Social learning theory. Prentice‐Hall; 1976:247.
16. Swales J. Genre analysis: English in academic and research settings. Cambridge University Press; 1990.
17. McWilliams R, Allan Q. Embedding academic literacy skills: towards a best practice model. J Univ Teach Learn Pract. 2014;11(3):8‐114.
18. Nagy S, Lumby J, McKinley S, Macfarlane C. Nurses' beliefs about the conditions that hinder or support evidence‐based nursing. Int J Nurs Pract. 2001;7(5):314‐321.
19. McCaughan D, Thompson C, Cullum N, Sheldon TA, Thompson DR. Acute care nurses' perceptions of barriers to using research information in clinical decision‐making. J Adv Nurs. 2002;39(1):46‐60.
20. Thiel CA. Views on research: the cookie experiment: a creative teaching strategy. Nurse Educ. 1987;12(3):8‐10. 10.1097/00006223-198705000-00004
21. Merriam SB, Bierema LL. Adult learning: linking theory and practice. John Wiley & Sons; 2013.
22. Chang AM, Crowe L. Validation of scales measuring self‐efficacy and outcome expectancy in evidence‐based practice. Worldviews Evid Based Nurs. 2011;8(2):106‐115.
23. Hoegen P, de Bot C, Echteld M, Vermeulen H. Measuring self‐efficacy and outcome expectancy in evidence‐based practice: a systematic review on psychometric properties. IJNS Advances. 2021;3:100024.
24. Mokkink LB, de Vet HCW, Prinsen CAC, et al. COSMIN risk of bias checklist for systematic reviews of patient‐reported outcome measures. Qual Life Res. 2018;27(5):1171‐1179. 10.1007/s11136-017-1765-4
25. Bandura A. Reflections on self‐efficacy. Adv Behav Res Ther. 1978;1(4):237‐269. 10.1016/0146-6402(78)90012-7
26. Hines S, Ramsbotham J, Coyer F. The effectiveness of interventions for improving the research literacy of nurses: a systematic review. Worldviews Evid Based Nurs. 2015;12(5):265‐272.
27. Crawford P, Johnson AJ, Brown BJ, Nolan P. The language of mental health nursing reports: firing paper bullets. J Adv Nurs. 1999;29(2):331‐340. 10.1046/j.1365-2648.1999.00893.x
28. Ford‐Sumner S. Genre analysis: a means of learning more about the language of health care. Nurse Res. 2006;14(1):7‐17.
29. Philip S, Woodward‐Kron R, Manias E. Overseas qualified nurses' communication with other nurses and health professionals: an observational study. J Clin Nurs. 2019;28(19‐20):3505‐3521.
30. Stavor DC, Zedreck‐Gonzalez J, Hoffmann RL. Improving the use of evidence‐based practice and research utilization through the identification of barriers to implementation in a critical access hospital. J Nurs Adm. 2017;47(1):56‐61.
