Abstract

Background

The aim of this study is to provide a comprehensive understanding of the current landscape of artificial intelligence (AI) for cancer clinical trial enrollment and its predictive accuracy in identifying eligible patients for inclusion in such trials.

Methods

Databases of PubMed, Embase, and Cochrane CENTRAL were searched until June 2022. Articles were included if they reported on AI actively being used in the clinical trial enrollment process. Narrative synthesis was conducted among all extracted data: accuracy, sensitivity, specificity, positive predictive value, and negative predictive value. For studies where the 2x2 contingency table could be calculated or supplied by authors, a meta-analysis to calculate summary statistics was conducted using the hierarchical summary receiver operating characteristics curve model.

Results

Ten articles reporting on more than 50 000 patients in 19 datasets were included. Accuracy, sensitivity, and specificity exceeded 80% in all but 1 dataset. Positive predictive value exceeded 80% in 5 of 17 datasets. Negative predictive value exceeded 80% in all datasets. Summary sensitivity was 90.5% (95% confidence interval [CI] = 70.9% to 97.4%); summary specificity was 99.3% (95% CI = 81.8% to 99.9%).

Conclusions

AI demonstrated comparable, if not superior, performance to manual screening for patient enrollment into cancer clinical trials. As well, AI is highly efficient, requiring less time and human resources to screen patients. AI should be further investigated and implemented for patient recruitment into cancer clinical trials. Future research should validate the use of AI for clinical trials enrollment in less resource-rich regions and ensure broad inclusion for generalizability to all sexes, ages, and ethnicities.

Clinical trials are essential to the advancement of research and medical practice in all domains, including oncology. Guideline and practice recommendations are ideally supported by clinical trials (1,2) to minimize bias and confounding effects. Furthermore, centers that are able to accrue well have been associated with better outcomes, possibly through greater experience, faster implementation of improved treatments, and superior quality of care (3). Granting agencies, governments, and cooperative trial organizations have recognized the importance of inclusion of underrepresented groups, including women, ethnic minorities, and racialized populations, especially during the COVID-19 pandemic (4). Despite the potential benefits of clinical trials, less than 5% of adults with cancer are enrolled in a clinical trial, and nearly one-fifth of trials are terminated early because of insufficient enrollment (5). Ultimately, this enrollment difficulty leads to insufficiently powered trials, potentially delayed scientific progress, and wasted resources.

In recent years, there has been growing interest in using technology to assist with the screening and enrollment process for patient-trial matching (6). One of the most promising prospects is the use of artificial intelligence (AI) to triage and identify patients eligible for cancer clinical trials. Several studies have demonstrated the ability of AI to assist in multiple steps of the enrollment process, including clinical trial site selection, patient recruitment, and patient screening. Akin to oncologists and scientists, AI, a broad family of computer algorithms, can digest and synthesize large sets of data and provide a recommendation as to whether a patient is eligible to be enrolled in a clinical trial. What distinguishes AI from other automated screening tools is its ability to parse unstructured and textual data to extract meaning from sources such as patient charts, potentially at levels of effectiveness comparable with a human chart reviewer. An added benefit of AI, compared with clinicians and scientists, is its ability to process these data rapidly and repeatedly without fatigue and to make these decisions on a large scale to facilitate clinical trial enrollment. Given the practical challenges of clinical trial enrollment described above, of particular interest is the ability of AI to identify potential patients who may have been missed by clinicians or manual screening. Supplementary Figure 1 (available online) provides a sample workflow of how an AI algorithm for clinical trial enrollment might work: input data are parsed via natural language processing (NLP) if necessary and then combined and sorted to produce a recommendation for trial enrollment. Although machine learning could be used at the NLP and sorting steps, the structure and applications of the algorithms vary from study to study. Nevertheless, all variations could in theory yield important benefits by assisting and expediting the clinical trial enrollment process.
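The parse, combine, and sort workflow described above can be illustrated with a deliberately simplified sketch. It is not the method of any included study: the `Patient` and `Trial` types, the trial names, and the keyword tokenizer standing in for NLP are all hypothetical and chosen only to make the three steps concrete.

```python
import re
from dataclasses import dataclass


@dataclass
class Patient:
    age: int
    diagnosis: str  # structured field
    note: str       # unstructured free text


@dataclass
class Trial:
    name: str
    diagnosis: str
    min_age: int
    excluded_terms: tuple  # hypothetical: terms in the note that exclude a patient


def parse_note(note: str) -> set:
    """Stand-in for NLP: reduce free text to a set of lowercase word tokens."""
    return set(re.findall(r"[a-z]+", note.lower()))


def recommend(patient: Patient, trials: list) -> list:
    """Combine structured and parsed data, then return matching trial names, sorted."""
    tokens = parse_note(patient.note)
    matches = []
    for trial in trials:
        if patient.diagnosis != trial.diagnosis:
            continue  # structured criterion: diagnosis must match
        if patient.age < trial.min_age:
            continue  # structured criterion: minimum age
        if tokens & set(trial.excluded_terms):
            continue  # text-derived criterion: exclusion term found in the note
        matches.append(trial.name)
    return sorted(matches)


# Hypothetical trials and patient, for illustration only.
trials = [
    Trial("TRIAL-A", "breast", 18, ("pregnant",)),
    Trial("TRIAL-B", "lung", 18, ()),
    Trial("TRIAL-C", "breast", 18, ("hypertension",)),
]
patient = Patient(age=62, diagnosis="breast", note="Hypertension, well controlled. ECOG 0.")
print(recommend(patient, trials))
```

Plain keyword matching of this kind ignores negation and context (for example, "no history of hypertension" would still trigger the exclusion), which is one reason the reviewed systems rely on NLP rather than simple filters.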

Several studies have investigated the use of AI for clinical trial eligibility (7-10), but to date there has been no systematic review or meta-analysis to provide an assessment of the value of AI provided by these relatively small and varied reports. The aim of this article is to provide a comprehensive understanding of the current landscape of AI for the use of cancer clinical trial enrollment and its predictive accuracy in identifying patients for inclusion in trials. We review and summarize several metrics of AI performance in comparison to manual screening including sensitivity, specificity, positive predictive value, and negative predictive value.

Methods

This review was registered a priori on PROSPERO (CRD42022336075) (11). Databases of PubMed, Embase, and Cochrane CENTRAL were searched from database inception up until June 2022 (Supplementary Box 1, available online). After duplicate removal and a calibration exercise, 3 review authors (RC, JM, JK) independently screened each article according to our eligibility criteria. Articles were eligible after level 1 (title and abstract) screening if they reported on the use of AI in oncology clinical trials. These articles were subsequently eligible after level 2 (full text) screening if they reported on AI being actively used in the clinical trial enrollment process. Any disagreements between review authors were resolved by discussion and consensus. If consensus was not achieved, a fourth and senior author (SR) was involved to adjudicate.

All articles were subsequently assessed for data extraction of quantitative test characteristics assessing the predictive accuracy of AI for clinical trial enrollment using accepted formal clinical trial methodology. The reference standard, as reported by studies, was manual screening alone for determination of clinical trial eligibility. Test characteristics reported by studies required evaluation by humans, comparing manual screening with AI-based screening. Studies were included for data extraction in this review if they reported on at least 1 of the following outcomes: accuracy (correct enrollment decision), sensitivity, specificity, positive predictive value, or negative predictive value. For studies that reported on the use of AI in multiple settings, each setting was extracted as a separate dataset (Supplementary Table 1, available online). Where review authors judged that a study might have additional unreported metrics available, the first and/or corresponding authors were contacted via email with data requests. If no response was received, a follow-up email was sent 1 week later. Sample size, institution, patients’ cancer diagnosis, methodology, and final conclusion and recommendation were also recorded for each included study. Study quality was assessed using QUADAS-2 (12).

Narrative synthesis was conducted among all extracted data. For studies where the 2x2 table of true positive, true negative, false positive, and false negative could be calculated or supplied by authors, a meta-analysis was conducted using the hierarchical summary receiver operating characteristics curve model (13). Summary diagnostic test statistics and 95% confidence intervals (CIs) were computed. All analyses were conducted using StataBE 17.0.
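The five extracted test characteristics follow directly from the 2x2 table. The sketch below shows the standard definitions, with manual screening as the reference standard; the `ConfusionTable` type, the function name, and the example counts are illustrative assumptions and are not taken from any included study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionTable:
    """2x2 table comparing AI screening with the manual-screening reference."""
    tp: int  # AI eligible, manual eligible
    fp: int  # AI eligible, manual ineligible
    fn: int  # AI ineligible, manual eligible
    tn: int  # AI ineligible, manual ineligible


def diagnostic_metrics(t: ConfusionTable) -> dict:
    """Return the five test characteristics as proportions (None if undefined)."""
    def ratio(num, den):
        return num / den if den else None

    total = t.tp + t.fp + t.fn + t.tn
    return {
        "accuracy": ratio(t.tp + t.tn, total),
        "sensitivity": ratio(t.tp, t.tp + t.fn),
        "specificity": ratio(t.tn, t.tn + t.fp),
        "ppv": ratio(t.tp, t.tp + t.fp),
        "npv": ratio(t.tn, t.tn + t.fn),
    }


# Hypothetical counts, for illustration only.
example = diagnostic_metrics(ConfusionTable(tp=45, fp=5, fn=5, tn=945))
for name, value in example.items():
    print(f"{name}: {value:.3f}")
```

A study that reports only some of these metrics can still enter the meta-analysis if the four cell counts can be reconstructed, which is why the 2x2 table is the unit of analysis here.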

Results

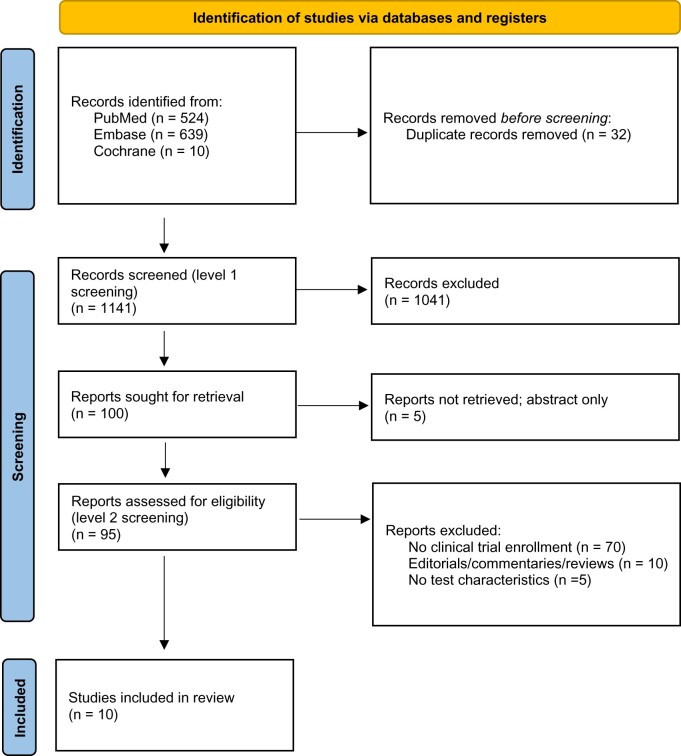

A total of 1173 records were identified from the search strategies. After duplicate removal, 1141 articles were eligible for level 1 screening, and 100 were eligible for level 2 screening. Ultimately, 10 articles (14-23) were included in this review (Figure 1), resulting in 19 datasets reported across 10 studies, with more than 50 000 patients included.

Figure 1.

PRISMA flow diagram.

Study characteristics are presented in Table 1. Sample size ranged from 96 to 48 124. Six studies were conducted in the United States, 3 in Europe, and 1 in Australia. Three studies included patients with any cancer diagnosis, 3 with only breast cancer diagnosis, 1 with lung cancer diagnosis, 1 with prostate cancer diagnosis, and 2 with either breast or lung cancer diagnoses. Four studies used licensed, published AI algorithms purchased from industry, 5 studies developed their own algorithms, and 1 study evaluated a predeveloped algorithm created as part of a previous research project. Three studies using licensed algorithms in fact used the same algorithm. All studies used clinical data, both structured and unstructured, to feed into an AI classifier to determine if patients were eligible for prespecified clinical trials conducted at their local cancer center. Common structured data across studies were age, sex, laboratory data, and cancer diagnosis staging, and these were obtained from electronic medical records, as reported by the included studies. Unstructured data in clinical notes were processed using text recognition systems such as NLP to identify past medical history, medications, comorbidities, and prior treatments. The algorithms varied widely in the machine learning approaches used (eg, random forest vs support vector machines) (Supplementary Box 2, available online). Nearly all studies were retrospective simulation studies, where studies retrospectively reviewed patient records to assess clinical trial eligibility, and this was used as the gold standard for comparing against AI-based tools. Nearly all studies had low concern for bias; given the limited reporting on reference standard by Cesario et al. (17), there was some concern for bias in their study (Supplementary Figure 2, available online).

Table 1.

Study characteristics

| Study | No. | Institution | Cancer diagnosis | Enrollment methodology | AI source | AI methodology | Algorithm runtime | Conclusion |
|---|---|---|---|---|---|---|---|---|
| Alexander et al. (14) | 102 | Peter MacCallum Cancer Center, Melbourne, Australia | Lung | Clinical data extracted from study database and medical records to match patients to 10 phase I-III cancer clinical trials on clinicaltrials.gov at local cancer center | Watson for Clinical Trial Matching (WCTM), developed by IBM | Trial data intake was optimized with 3 rounds of trial ingestion by NLP before matching patients to clinical trials based on primary cancer staging, metastatic disease, performance status, mutations, prior cancer therapy, lung surgery type, cancer histology, demographics, echocardiography, pathology, past medical history, medications, comorbidities | 15.5 s | The AI-based clinical trial matching system allows efficient and reliable screening of cancer patients for clinical trials |
| Beck et al. (15) | 239 | Highlands Oncology Group, Arkansas, USA | Breast | Structured and unstructured patient data were included to assess clinical trials eligibility to 4 breast cancer trials listed on clinicaltrials.gov at local cancer center | Watson for Clinical Trial Matching (WCTM), developed by IBM | Trial data intake was optimized with 3 rounds of trial intake before used to match patients to clinical trials based on structured patient data (laboratory tests, sex, cancer diagnosis, age) and unstructured data sources (most recent medical progress note) | 24 min, which is 78% reduction compared with manual screening | Clinical trial matching system displayed a promising performance in screening patients with breast cancer for trial eligibility |
| Calaprice-Whitty et al. (16) | 48 124 | Comprehensive Blood and Cancer Center, Bakersfield, CA, USA | Breast, lung | Structured and unstructured medical records evaluated to identify eligible patients retrospectively in 3 completed trials at local cancer center | Mendel.ai, developed by Mendel | Text recognition system to extract text from scanned medical documents, clinical language understanding, and entailment system to read output of text recognition system and its meanings, knowledge-based ontology and wisdom system to synthesize data to data dictionary | | Augmentation of human resources with artificial intelligence could yield sizable improvements over standard practices in several aspects of the patient prescreening process, as well as in approaches to feasibility, site selection, and trial selection |
| Cesario et al. (17) | 96 | Comprehensive Cancer Center, Roma, Italy | Breast, lung | Digital research assistant via progressive web app identifies patients eligible for a clinical trial of all those conducted at the cancer center | Digital Research Assistant, developed in-house | AI-based models using age, immunophenotype, genetics, histology, BMI, stage of therapy | NR | Might represent a valid research tool supporting clinicians and scientists to optimize the enrollment of patients in clinical trials |
| Cuggia et al. (18) | 285 | Centre Eugene Marquis, Rennes, France | Prostate | Automatic selection of clinical trials eligibility criteria (national research project) to retrospectively identify patients discussed in multidisciplinary meetings for clinical trial eligibility, for eligibility of 4 clinical trials conducted at cancer center | Computerized recruitment support system, developed as a French national research project | Computerized recruitment support system based on semantic web approach | NR | System was scalable to other clinical domains |
| Delorme et al. (19) | 264 | Gustave Roussy Cancer Campus, Villejuif, France | All | Free text consultation reports evaluated to identify eligible patients retrospectively included in phase I or II oncology trials | Model developed in-house | Natural language preprocessing pipeline to turn free text into numerical features for random forest model | NR | Machine learning with semantic conservation is a promising tool to assist physicians in selecting patients prone to achieve successful screening and dose-limiting toxicity period completion in early phase oncology clinical trials |
| Haddad et al. (20) | 318 | Mayo Clinic, Rochester, MN, USA | Breast | Structured and unstructured medical records evaluated to identify eligible patients for 4 breast cancer trials listed on clinicaltrials.gov at local cancer center | Watson for Clinical Trial Matching (WCTM), developed by IBM | NLP to identify cancer stage, cancer subtype, genetic markers, prior cancer therapy, surgical status, pathology, therapy-related characteristics | NR | Accurately exclude ineligible patients and offer potential to increase screening efficiency and accuracy |
| Meystre et al. (21) | 229 | Hollings Cancer Center, Charleston, SC, USA | Breast | Clinical notes evaluated to assess eligibility for 3 breast cancer clinical trials at local cancer center | Model developed in-house | Named entity recognition task based on sequential token-based labeling using a support vector machine retrieved clinical notes, extracted eligibility criteria | NR | Can be used to extract eligibility criteria from EHR clinical notes and automatically discover patients possibly eligible for a clinical trial with good accuracy, which could be leveraged to reduce the workload of humans screening patients for trials |
| Ni et al. (22) | NR | Cincinnati Children’s Hospital Medical Center, Cincinnati, OH, USA | All | Demographics and notes processed to evaluate eligibility to all 70 clinical trials at local cancer center | Model developed in-house | NLP and information extraction of demographics, diagnoses, clinical notes | 1 min, which saves 346 min of manpower | Could dramatically increase trial screening efficiency of oncologists and enable participation of small practices, which are often left out from trial enrollment |
| Zeng et al. (23) | NR | MD Anderson Cancer Center, Houston, TX, USA | All | Genetic textual document repositories and matching documents assessed to evaluate eligibility for 153 preprocessed potential targeted therapy clinical trials from clinicaltrials.gov and MD Anderson clinical trial database | Model developed in-house | Genetic textual document repository to identify 1 of 543 genes whose molecular abnormality can be detected on sequencing panels | NR | NLP tool was generalizable; tool may partially automate process of information gathering |

AI = artificial intelligence; BMI = body mass index; NLP = natural language processing; NR = not reported.

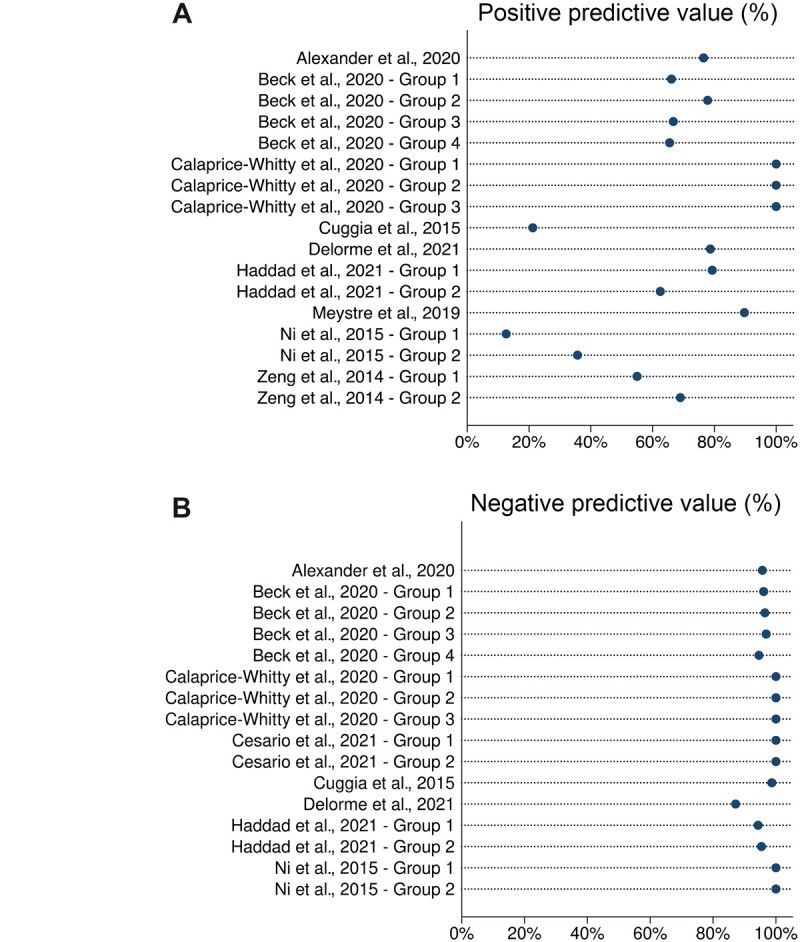

Accuracy, sensitivity, specificity, positive predictive value, and negative predictive value for each article are presented in Figure 2 and Supplementary Figure 3 (available online). A total of 19 datasets were reported across the 10 publications. Across the 13 datasets reporting accuracy, values ranged from 62.8% to 100%; all but 1 reported accuracy greater than 80%. Across 17 datasets, sensitivity ranged from 46.7% to 100%; all but 1 dataset reported sensitivity greater than 80%. Across 16 datasets, specificity ranged from 59.2% to 100%; all but 1 reported specificity greater than 80%. Positive predictive value was reported in 17 datasets and ranged from 12.6% to 100%; 5 datasets reported positive predictive value in excess of 80%. Negative predictive value was reported in all but 3 datasets and ranged from 87.2% to 100%; all of these datasets reported negative predictive value in excess of 80%.

Figure 2.

Predictive ability of artificial intelligence. A) Positive predictive value. B) Negative predictive value.

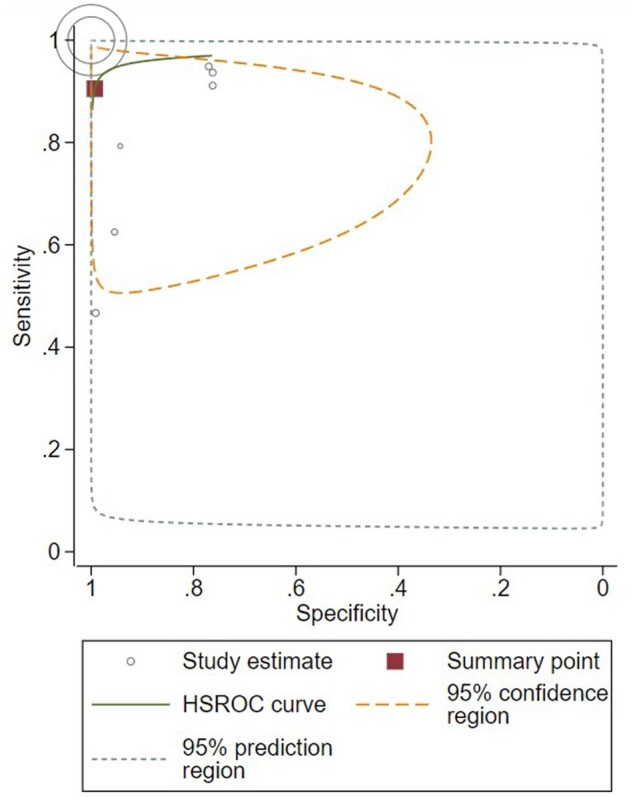

Eight datasets, with 40 447 patient inputs reported by 3 studies, had sufficient data for meta-analysis. Accuracy, sensitivity, specificity, positive predictive value, and negative predictive value of these studies are reported in Supplementary Figure 4 (available online). From meta-analysis, the summary sensitivity of AI was 90.5% (95% CI = 70.9% to 97.4%); the summary specificity was 99.3% (95% CI = 81.8% to 99.9%) (Figure 3). The prediction region includes essentially everything, except very low sensitivities (<10%).

Figure 3.

Receiver operator curve. Study estimates are reported by individual circles, with the size of circle denoting weighting. Summary point indicates summary sensitivity and specificity. HSROC = hierarchical summary receiver-operating characteristic.

All 4 studies that reported on time savings from AI noted substantial time savings (Table 1). All studies seemed to conclude that AI-based systems are efficient and reliable, with generally concordant results to gold standard manual screening. Some studies also retrospectively analyzed and compared the AI algorithm to what was achieved with historical manual screening and found cases where patients were incorrectly classified by manual screening (16,20).

Discussion

To our knowledge, this is the first systematic review and meta-analysis reporting on the use of AI for cancer clinical trial enrollment. We evaluated 10 studies, which reported on 19 datasets investigating more than 50 000 patients. In nearly all evaluable metrics, AI was highly accurate and efficient for the purposes of cancer clinical trial enrollment, with the test characteristic exceeding 80% in all but 1 dataset; positive predictive value was the exception, exceeding 80% in only 5 of 17 datasets. From meta-analysis, AI had a summary sensitivity of 90.5% and summary specificity of 99.3%. On further evaluation, there is an observed trend toward higher predictive accuracy for ruling out ineligible patients: specificity and negative predictive values are generally higher than sensitivity and positive predictive values. Were AI adopted in clinical trial screening, it could be more useful to have a tool that has greater confidence in ruling out patients who are ineligible rather than a tool that misses eligible patients. Some false positives (patients incorrectly deemed eligible) are to be expected given the relatively low positive predictive values; these could be rectified when patients are approached by research staff for consent and, at that point, excluded. Based on this analysis, the optimal application of AI in cancer clinical trial enrollment seems to be in augmenting and expediting, rather than replacing, manual human review. With AI as the initial screener, followed by human review, this workflow should be more time efficient and reduce the likelihood that eligible patients are excluded.
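One possible contributor to the gap between the high summary specificity and the lower positive predictive values is the proportion of screened patients who are truly eligible. The sketch below applies Bayes' theorem to the summary sensitivity and specificity reported in this review; the prevalence values are hypothetical, and the calculation is an illustration rather than an analysis performed in the review.

```python
def predictive_values(sensitivity: float, specificity: float, prevalence: float):
    """Return (PPV, NPV) for a given proportion of truly eligible patients."""
    tp = sensitivity * prevalence
    fn = (1 - sensitivity) * prevalence
    fp = (1 - specificity) * (1 - prevalence)
    tn = specificity * (1 - prevalence)
    return tp / (tp + fp), tn / (tn + fn)


# Summary estimates from the meta-analysis.
SUMMARY_SENSITIVITY = 0.905
SUMMARY_SPECIFICITY = 0.993

# Hypothetical eligibility prevalences, for illustration only.
for prevalence in (0.01, 0.05, 0.20):
    ppv, npv = predictive_values(SUMMARY_SENSITIVITY, SUMMARY_SPECIFICITY, prevalence)
    print(f"prevalence={prevalence:.2f}  PPV={ppv:.3f}  NPV={npv:.3f}")
```

With sensitivity and specificity held fixed, the positive predictive value falls as eligible patients become rarer, while the negative predictive value stays high. This supports using AI as an initial screener followed by human review.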

AI algorithms used structured and unstructured data to assess patient eligibility for cancer clinical trials. The details of the industry-developed algorithms are not publicly available, but the de novo algorithms used a variety of machine learning methods to accomplish the given classification task. Furthermore, the de novo algorithms still employed many predeveloped processes and packages, both open-source and paid (17,19,22,23). This highlights an important feature of AI research in this application: the AI-based software is rarely built completely from scratch but rather is often a combination of original code and preexisting structures, methods, and models, adapted to particular purposes. More transparency and reproducibility of these AI models may speed up their adoption (24).

Despite differences between individual algorithms, the use of NLP was common to all studies: every algorithm had a step in its pipeline in which AI was used to extract information from unstructured text. Compared with automated methods that do not employ NLP to read chart data, Ni et al. (22) found that the addition of NLP to screening filters reduced the postscreening pool size by up to 85% and trial recommendation quantity by more than 90%, representing a substantial reduction in workload and up to an approximately tenfold increase in precision. This indicates that including AI-based NLP in the algorithm may provide a substantial benefit compared with nonlearning clinical trial filter algorithms when followed by human review of the algorithm's output.
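The link between a 90% reduction in trial recommendations and a roughly tenfold precision gain follows from the definition of precision (correct recommendations divided by all recommendations). The sketch below makes that arithmetic explicit under the simplifying assumption, ours rather than the cited study's, that the filter discards only incorrect recommendations; the function and parameter names are hypothetical.

```python
def precision_gain(reduction: float, correct_retained: float = 1.0) -> float:
    """Fold-change in precision after the recommendation count shrinks.

    reduction: fraction of recommendations removed by the filter (0 to <1).
    correct_retained: fraction of correct recommendations kept (assumed 1.0).
    """
    if not 0 <= reduction < 1:
        raise ValueError("reduction must be in [0, 1)")
    return correct_retained / (1 - reduction)


# A 90% reduction with every correct recommendation retained.
print(f"{precision_gain(0.90):.1f}-fold")

# If the filter also lost 20% of correct recommendations (hypothetical).
print(f"{precision_gain(0.90, correct_retained=0.8):.1f}-fold")
```

The gain shrinks in proportion to any correct recommendations lost by the filter, so the tenfold figure is best read as an upper bound for a 90% reduction.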

It is important to compare the in-house developed algorithms with those that were purchased from industry; this may provide insight into the relative cost-effectiveness of continuing to develop new algorithms vs focusing on deployment and streamlining of existing software and pipelines. The positive predictive values for industry-developed algorithms were on average higher and with substantially smaller variance than in-house algorithms (Supplementary Table 2, available online). There were no other discernible trends between the 2 groups.

Perhaps of greatest interest is the potential savings to resources for clinical trial screening. All 4 studies that reported on efficiency observed time savings associated with clinical trial enrollment when compared with a counterfactual scenario of manual screening for cancer clinical trials. This could ultimately allow for reallocation of resources to other activities associated with clinical trials and permit health-care centers with limited research personnel to quickly screen and participate in clinical trials. It is important to mention, however, that it is unclear whether the algorithm time calculation took into account time spent on potential preprocessing and manual abstraction steps; there may be some overestimation of time savings. It is also worth noting that in some cases, AI was able to identify eligible patients who were initially excluded during manual screening (16).

AI involvement in clinical trial enrollment can also support the growing emphasis on diversity, equity, and inclusion in clinical trials, as well as the adoption of personalized medicine. AI can apply inclusion criteria to patients without regard to background and/or cultural demographics and may thereby provide a more representative patient population for clinical trials (25-28). The use of biomarkers and molecular testing has revolutionized the field of medical oncology while adding complexity to therapeutic decision making and clinical trial design. AI can ingest large amounts of data in pursuit of personalized medicine and help assess patients against increasingly complex eligibility criteria in future trials (29). For example, in our review, Zeng et al. (23) used AI to assess 543 biomarkers per patient for the purpose of oncology clinical trial enrollment and reported accuracy and sensitivity in excess of 80%.

Finally, it is important to caution about the current availability of AI for clinical trial enrollment. AI algorithms are often resource intensive to develop, and only centers with sufficient means and expertise can afford to develop their own AI algorithms as part of their clinical trial screening programs. Deployment of AI tools in health care will therefore require additional work to ensure fair access across large and community centers. AI algorithms for patient screening also require access to sensitive information present in the electronic medical record. A high level of security is required to ensure that the AI tools do not disclose sensitive information in any way, so as to maintain patient confidentiality. This is true for both local and cloud-based deployments, where the use of secure computational technologies and security audits is essential for the safe adoption of AI tools in clinical settings. It is not surprising that all of the included studies in this review that developed de novo algorithms were conducted in high-income countries. Pricing information for industry-developed algorithms could not be obtained. The research and use of AI appear to be currently limited to resource-rich countries and may even increase the disparity between resource-rich and less resource-rich regions. However, future deployment of AI has the potential to reduce clinical trial costs, thereby allowing AI and clinical trials to be conducted in less resource-rich regions, ultimately increasing participation in clinical trials across the globe (30). This highlights the importance of parallel development of such algorithms by individual research groups so that these algorithms could eventually be made available either as open-source or at a sufficiently low cost to become a standardized tool.

As well, although no studies identified patterns of failure for settings in which the AI algorithm did not behave as expected, this would be important information to report in future studies to ensure the generalizability of these AI-based screening tools. Finally, we observed that the few cohorts with a much larger sample size than other included studies had excellent test characteristics (16), and a potential relationship between performance and sample size warrants further study.

This review was not without limitations. It is important to note that there was heterogeneity in the AI algorithms used for clinical trial enrollment assessment. Future studies should aim to determine the critical input parameters for classification using AI algorithms to achieve the best predictive ability and reduce computational time and resources, especially if the goal is to allow AI to assist in clinical trial enrollment in less resource-rich regions. Given that the AI algorithms used a large quantity and variety of health information to make enrollment decisions, it is uncertain if these results would be applicable to less resource-rich environments; future research could focus on implementing AI for clinical trial enrollment when data could be limited. By harnessing the powerful predictive and inference abilities of AI, these algorithms could assist in and enhance clinical trial enrollment in regions with sparse, limited, or poorly documented health-care data. Moreover, in the context of this review, AI is used only as a screening tool. Many other patient and physician factors impact enrollment into clinical trials, and as such, it is unclear if improved screening processes would translate into increased clinical trials enrollment in all settings.

It is also important to note that all of these studies were retrospective analyses rather than prospective implementation; there may be unique challenges encountered in real-time operation, which are yet to be discovered. The implementation of a clinical tool and its prospective testing will inevitably reveal complexities inherent to the clinical settings (which are likely to be institution specific) and potential biases present in future datasets (eg, different patient populations, different definitions used in unstructured clinical notes). Additionally, several studies reported on drastically different samples, ranging from small cohorts of a few hundred patients to a large cohort of almost 48 000 patients, and assessed eligibility for only a portion of all clinical trials conducted at the hospital. As well, the authors of several studies could not be contacted to obtain data for the meta-analysis, leading to meta-analysis conclusions drawn from a few rather than all studies. There may exist publication bias, given this limited data. Despite this, more than 40 000 patients were included in the meta-analysis, across the 8 datasets reported in the 3 studies. Finally, there is overrepresentation of the most common cancers: breast, lung, and prostate. It is uncertain whether AI would perform as well in rarer tumors, where there may be more nuanced considerations required for clinical trial enrollment.

In conclusion, based on the currently available literature, AI appears to have comparable, if not superior, performance to manual screening for oncology clinical trial enrollment. AI is also efficient, requiring less time and fewer resources to screen patients; therefore, AI should be further investigated and implemented for this application. Future research should investigate the critical parameters needed for AI algorithms, validate the use of AI for clinical trial enrollment in less resource-rich regions, and improve inclusivity of underrepresented patient groups.

Supplementary Material

Contributor Information

Ronald Chow, Princess Margaret Cancer Centre, University Health Network, Temerty Faculty of Medicine, University of Toronto, Toronto, ON, Canada; London Regional Cancer Program, London Health Sciences Centre, Schulich School of Medicine and Dentistry, University of Western Ontario, London, ON, Canada; Institute of Biomedical Engineering, Faculty of Applied Science and Engineering, University of Toronto, Toronto, ON, Canada.

Julie Midroni, Princess Margaret Cancer Centre, University Health Network, Temerty Faculty of Medicine, University of Toronto, Toronto, ON, Canada.

Jagdeep Kaur, London Regional Cancer Program, London Health Sciences Centre, Schulich School of Medicine and Dentistry, University of Western Ontario, London, ON, Canada.

Gabriel Boldt, London Regional Cancer Program, London Health Sciences Centre, Schulich School of Medicine and Dentistry, University of Western Ontario, London, ON, Canada.

Geoffrey Liu, Princess Margaret Cancer Centre, University Health Network, Temerty Faculty of Medicine, University of Toronto, Toronto, ON, Canada.

Lawson Eng, Princess Margaret Cancer Centre, University Health Network, Temerty Faculty of Medicine, University of Toronto, Toronto, ON, Canada.

Fei-Fei Liu, Princess Margaret Cancer Centre, University Health Network, Temerty Faculty of Medicine, University of Toronto, Toronto, ON, Canada.

Benjamin Haibe-Kains, Princess Margaret Cancer Centre, University Health Network, Temerty Faculty of Medicine, University of Toronto, Toronto, ON, Canada.

Michael Lock, London Regional Cancer Program, London Health Sciences Centre, Schulich School of Medicine and Dentistry, University of Western Ontario, London, ON, Canada.

Srinivas Raman, Princess Margaret Cancer Centre, University Health Network, Temerty Faculty of Medicine, University of Toronto, Toronto, ON, Canada.

Funding

This work was partially funded by the CARO-CROF Pamela Catton Summer Studentship Award and the Robert L. Tundermann and Christine E. Couturier philanthropic funds.

Notes

Role of the funder: The funders had no influence on the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; and decision to submit the manuscript for publication.

Disclosures: None.

Author contributions: Conceptualization: RC, SR. Data Curation: RC, JM, JK, GB. Formal Analysis: RC. Funding Acquisition: RC, SR. Investigation: RC, SR. Methodology: RC, SR. Project Administration: RC, ML, SR. Resources: RC, ML, SR. Software: RC, ML, SR. Supervision: ML, SR. Validation: RC, ML, SR. Visualization: RC, SR. Writing—original draft: All authors. Writing—review & editing: All authors.

Data availability

No new data were generated or analyzed in support of this research. The data underlying this article will be shared on reasonable request to the corresponding author.

References

- 1. American Society of Clinical Oncology. ASCO guidelines, tools, & resources. 2022. https://www.asco.org/practice-patients/guidelines. Accessed May 27, 2022.

- 2. National Comprehensive Cancer Network. NCCN guidelines. 2022. https://www.nccn.org/guidelines/category_1. Accessed May 27, 2022.

- 3. Chiang AC, Lake J, Sinanis N, et al. Measuring the impact of academic cancer network development on clinical integration, quality of care, and patient satisfaction. J Oncol Pract. 2018;14(12):e823-e833.

- 4. Ottevanger PB, De Mulder PH, Grol RP, Van Lier H, Beex LV. Effects of quality of treatment on prognosis in primary breast cancer patients treated in daily practice. Anticancer Res. 2002;22(1a):459-465.

- 5. Stensland KD, McBride RB, Latif A, et al. Adult cancer clinical trials that fail to complete: an epidemic? J Natl Cancer Inst. 2014;106(9):dju229.

- 6. von Itzstein MS, Hullings M, Mayo H, Beg MS, Williams EL, Gerber DE. Application of information technology to clinical trial evaluation and enrollment: a review. JAMA Oncol. 2021;7(10):1559-1566.

- 7. Liu R, Rizzo S, Whipple S, et al. Evaluating eligibility criteria of oncology trials using real-world data and AI. Nature. 2021;592(7855):629-633.

- 8. Hassanzadeh H, Karimi S, Nguyen A. Matching patients to clinical trials using semantically enriched document representation. J Biomed Inform. 2020;105:103406.

- 9. Jung E, Jain H, Sinha AP, Gaudioso C. Building a specialized lexicon for breast cancer clinical trial subject eligibility analysis. Health Informatics J. 2021;27(1):1460458221989392.

- 10. London JW, Balestrucci L, Chatterjee D, Zhan T. Design-phase prediction of potential cancer clinical trial accrual success using a research data mart. J Am Med Inform Assoc. 2013;20(e2):e260-e266.

- 11. Chow R, Lock M, Raman S. The use of artificial intelligence for clinical trial enrolment in oncology trials. 2022. https://www.crd.york.ac.uk/prospero/display_record.php?ID=CRD42022336075. Accessed November 1, 2022.

- 12. Whiting PF, Rutjes AWS, Westwood ME, et al.; for the QUADAS-2 Group. QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann Intern Med. 2011;155(8):529-536.

- 13. Rutter CM, Gatsonis CA. A hierarchical regression approach to meta-analysis of diagnostic test accuracy evaluations. Stat Med. 2001;20(19):2865-2884.

- 14. Alexander M, Solomon B, Ball DL, et al. Evaluation of an artificial intelligence clinical trial matching system in Australian lung cancer patients. JAMIA Open. 2020;3(2):209-215.

- 15. Beck JT, Rammage M, Jackson GP, et al. Artificial intelligence tool for optimizing eligibility screening for clinical trials in a large community cancer center. JCO Clin Cancer Inform. 2020;4:50-59.

- 16. Calaprice-Whitty D, Galil K, Salloum W, Zariv A, Jimenez B. Improving clinical trial participant prescreening with artificial intelligence (AI): a comparison of the results of AI-assisted vs standard methods in 3 oncology trials. Ther Innov Regul Sci. 2020;54(1):69-74.

- 17. Cesario A, Simone I, Paris I, et al. Development of a digital research assistant for the management of patients’ enrollment in oncology clinical trials within a research hospital. J Pers Med. 2021;11(4):244.

- 18. Cuggia M, Campillo-Gimenez B, Bouzille G, et al. Automatic selection of clinical trials based on a semantic web approach. Stud Health Technol Inform. 2015;216:564-568.

- 19. Delorme J, Charvet V, Wartelle M, et al. Natural language processing for patient selection in phase I or II oncology clinical trials. JCO Clin Cancer Inform. 2021;5:709-718.

- 20. Haddad T, Helgeson JM, Pomerleau KE, et al. Accuracy of an artificial intelligence system for cancer clinical trial eligibility screening: retrospective pilot study. JMIR Med Inform. 2021;9(3):e27767.

- 21. Meystre SM, Heider PM, Kim Y, Aruch DB, Britten CD. Automatic trial eligibility surveillance based on unstructured clinical data. Int J Med Inform. 2019;129:13-19.

- 22. Ni Y, Wright J, Perentesis J, et al. Increasing the efficiency of trial-patient matching: automated clinical trial eligibility pre-screening for pediatric oncology patients. BMC Med Inform Decis Mak. 2015;15(1):28.

- 23. Zeng J, Wu Y, Bailey A, et al. Adapting a natural language processing tool to facilitate clinical trial curation for personalized cancer therapy. AMIA Jt Summits Transl Sci Proc. 2014;2014:126-131.

- 24. Haibe-Kains B, Adam GA, Hosny A, et al.; for the Massive Analysis Quality Control (MAQC) Society Board of Directors. Transparency and reproducibility in artificial intelligence. Nature. 2020;586(7829):E14-E16.

- 25. Dankwa-Mullan I, Weeraratne D. Artificial intelligence and machine learning technologies in cancer care: addressing disparities, bias, and data diversity. Cancer Discov. 2022;12(6):1423-1427.

- 26. El-Deiry WS, Giaccone G. Challenges in diversity, equity, and inclusion in research and clinical oncology. Front Oncol. 2021;11:642112.

- 27. Kahn JM, Gray DM, Oliveri JM, Washington CM, DeGraffinreid CR, Paskett ED. Strategies to improve diversity, equity, and inclusion in clinical trials. Cancer. 2022;128(2):216-221.

- 28. Bodicoat DH, Routen AC, Willis A, et al. Promoting inclusion in clinical trials—a rapid review of the literature and recommendations for action. Trials. 2021;22(1):880.

- 29. Schork NJ. Artificial intelligence and personalized medicine. In: Von Hoff DD, Han H, eds. Precision Medicine in Cancer Therapy. Cham: Springer International Publishing; 2019:265-283.

- 30. Hosny A, Aerts HJWL. Artificial intelligence for global health. Science. 2019;366(6468):955-956.
