Abstract

Artificial intelligence (AI) is a powerful tool that can assist researchers and clinicians in various settings. However, like any technology, it must be used with caution and awareness as there are numerous potential pitfalls. To provide a creative analogy, we have likened research to the PAC-MAN classic arcade video game. Just as the protagonist of the game is constantly seeking data, researchers are constantly seeking information that must be acquired and managed within the constraints of the research rules. In our analogy, the obstacles that researchers face are represented by “ghosts”, which symbolize major ethical concerns, low-quality data, legal issues, and educational challenges. In short, clinical researchers need to meticulously collect and analyze data from various sources, often navigating through intricate and nuanced challenges to ensure that the data they obtain are both precise and pertinent to their research inquiry. Reflecting on this analogy can foster a deeper comprehension of the significance of employing AI and other powerful technologies with heightened awareness and attentiveness.

Keywords: artificial intelligence, research, anesthesia, intensive care, pain, hospital, big data, legal medicine, data analysis, education

1. Introduction

Clinical researchers are professionals who plan, design, and conduct clinical studies to advance scientific discoveries while ensuring that ethical principles and standards are upheld [1]. In recent years, the increasing use of new technologies, particularly artificial intelligence (AI) [2], has greatly expanded what is possible in clinical research. AI has demonstrated impressive results in every phase and type of study, including drug discovery, protocol optimization, clinical trials, and data management [3,4].

In particular, the capability to handle extensive volumes of data rapidly and precisely gives researchers unprecedented prospects to achieve substantial breakthroughs that were previously deemed unattainable [5]. Consequently, the rapid evolution of new technologies has revolutionized the field of clinical research, offering exciting opportunities for researchers to improve the accuracy and efficiency of their work. However, these advancements also come with significant challenges that must be addressed to ensure their successful implementation [6].

One of the most critical challenges facing clinical researchers is the need for a high level of awareness and understanding of these new technologies. While they offer a wide range of benefits, they can also lead to potentially serious errors if not used correctly. This requires researchers to be well-versed in all aspects of the technology, including its strengths, weaknesses, and limitations.

To fully capitalize on these tools, clinical researchers must be motivated to continuously acquire knowledge and develop practical solutions that can help them address complex research questions. This means staying up to date on the latest advancements, collaborating with experts in other fields, and constantly seeking new approaches to improve their work [7].

Despite the challenges, the benefits of using new technologies in clinical research are undeniable. For instance, through their capability to process large amounts of data quickly and accurately, they can offer researchers the opportunity to make significant breakthroughs that were once thought impossible. By utilizing these advancements efficiently, clinical researchers can expand the frontiers of what can be achieved and unveil novel insights that have the potential to revolutionize healthcare practices [8].

Drawing an imaginative analogy, we compared the role of a clinical researcher to that of the PAC-MAN video game protagonist, whose insatiable appetite for dots and energy pills is akin to the clinical researcher’s unrelenting pursuit of knowledge and considerable scientific objectives. Following this parallel, we have developed a simple guide for helping those working with AI technologies in the anesthesiology field. It should be useful to optimize the creation, development, and evaluation of research projects.

2. The PAC-MAN Metaphor

PAC-MAN is a classic arcade video game that gained immense popularity in the 1980s. Initially, “Puck Man” was created by the Japanese video game developer and publisher Namco. Midway Games later obtained the license to distribute the game in the United States and changed its name to “PAC-MAN”.

The game features a comical round yellow character who must collect as many yellow balls or pellets as possible while navigating through a maze [9]. Throughout the game, various elements (fruits) appear at random intervals, providing PAC-MAN with extra bonuses that help him achieve his objective more quickly [10].

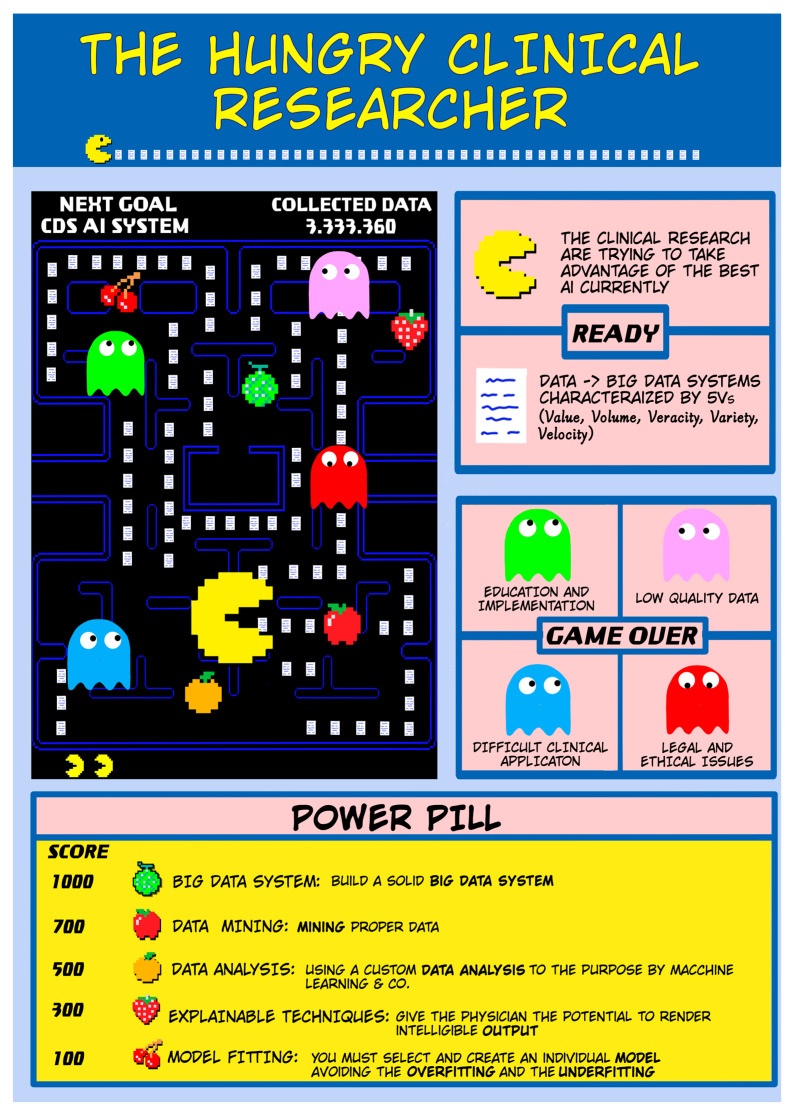

The metaphor of the hungry clinical researcher reflects the complexity and challenges of conducting research in AI. It highlights the need for multiple tools and resources, the importance of collaboration across disciplines, and the ongoing efforts to address open issues and maximize the benefits of AI in healthcare (Figure 1).

Figure 1.

The Hungry Clinical Researcher. It is a metaphor for a clinical researcher’s job and shows the tools, objectives, and open issues of clinical research in AI.

In the context of clinical research, the “yellow balls” can be likened to data, which are the essential building blocks for creating an effective and robust study.

The “fruits” are intermediate steps to accomplish the objective; once present, they can give stability to the methodology and thus allow for reaching the goal. These are represented by:

Building a big data system. A big data system pertains to a technological framework engineered to store, process, and analyze vast amounts of data that may be unstructured, heterogeneous, and produced at great speed. Building a big data system entails a sequence of phases that mandate careful planning, proficiency in data science technologies, and a profound comprehension of the objectives and data prerequisites [1].

Mining proper data, extracting, and recognizing useful information. An essential task in data analysis is to identify pertinent data from a vast collection of available data, and apply diverse tools and techniques to extract valuable insights and knowledge.

Using a custom data analysis to achieve the purpose. This entails tailoring data analysis techniques and tools to the specific needs and objectives of a research project. This process enables researchers and analysts to extract more relevant and valuable insights [11].

Rendering intelligible output. This is the process of transforming complex or abstract data into a form that is easily understood and interpreted by other researchers [2].

Selecting and creating an optimized model. This refers to the process of choosing the best algorithm or technique for a specific data analysis task and then fine-tuning its parameters to achieve the best possible performance [12].
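As a minimal sketch of the last step, selecting and tuning a model can be illustrated with k-fold cross-validation. Everything in the example is an assumption made for illustration and is not a method described in this paper: the toy data, the one-feature k-nearest-neighbour classifier, and the names `knn_predict`, `cross_validated_accuracy`, and `select_k` are hypothetical.

```python
def knn_predict(train, x, k):
    """Majority vote among the k training points closest to x."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if votes * 2 > k else 0


def cross_validated_accuracy(data, k, n_folds=5):
    """Hold out each fold once and score predictions made from the rest."""
    correct = 0
    for fold in range(n_folds):
        train = [p for i, p in enumerate(data) if i % n_folds != fold]
        held_out = [p for i, p in enumerate(data) if i % n_folds == fold]
        correct += sum(knn_predict(train, x, k) == y for x, y in held_out)
    return correct / len(data)


def select_k(data, candidates):
    """Return the candidate k with the highest cross-validated accuracy."""
    scores = {k: cross_validated_accuracy(data, k) for k in candidates}
    best = max(scores, key=scores.get)
    return best, scores


# Hypothetical (risk score, outcome) pairs; not real patient data.
toy_data = [
    (1.0, 0), (1.2, 0), (1.5, 0), (1.8, 0), (2.0, 1),
    (2.2, 0), (2.5, 1), (2.8, 1), (3.0, 1), (3.5, 1),
]

best_k, cv_scores = select_k(toy_data, [1, 3, 5])
print("cross-validated accuracy per k:", cv_scores)
print("selected k:", best_k)
```

In a real project the candidate models, the tuning grid, and the performance metric would be fixed in advance in the study protocol, and the final model would still need evaluation on data not used for tuning.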

On the other hand, enemies are represented by all the steps that must be faced. The “ghosts” are [3]:

Major ethical and legal issues. While ethical and legal considerations are always present in clinical research, the use of AI raises specific issues that must be addressed. The ethical implications are so significant that, in 2018, the theologian Paolo Benanti introduced the concept of algorethics, defined as the study of ethics applied to technology [13,14]. Since then, many important institutions have drafted recommendations for the ethical and conscious use of AI; for example, the United Nations Educational, Scientific and Cultural Organization (UNESCO) adopted the first global agreement on AI ethics in 2021 [15]. The concrete risk, as depicted in dystopian films and books, is that AI may replace human beings rather than serve as a valuable tool. This would have immediate implications for the labor market, as seen during the industrial revolution, and longer-term implications in terms of security. Legal regulation of the use of AI in medicine and clinical research, however, is still in its infancy. One of the most discussed issues is the attribution of responsibility, for which several approaches have been described in the literature, including the formal, technological, and compromise approaches [16]. The formal and technological approaches differ in terms of fault attribution, with responsibility falling, respectively, on the developer or the insurance company. In the case of the compromise approach, the regulation focuses solely on the ethical question. The best approach is not yet known, and likely no single answer exists. European law has introduced the rule that the “developer’s responsibility is directly proportional to the autonomy of the AI robot.” However, most authors agree that AI is not yet mature enough to have legal responsibilities, and, therefore, AI should be seen as a clinical tool with humans bearing full responsibility [17].
In summary, the main ethical and legal issues related to AI can be categorized as algorithmic transparency and accountability; data bias, fairness, and equity; data privacy and security; malicious use of AI; and informed consent to use data [15,18]. Liability for harm and cybersecurity are also important considerations.

Low-quality data. Large datasets are crucial for Machine Learning and Deep Learning, but the use of low-quality or irrelevant data can seriously compromise the efficiency and usability of these systems. This is commonly known as the GIGO rule in computer science, which emphasizes that “garbage in, garbage out”. This means that the quality of the output is only as good as that of the input. In healthcare, where the output can directly affect crucial clinical decisions, this rule takes on an even greater significance.

Difficult clinical application. The main limitations in daily practice include performance drift, lack of external validation, lack of uncertainty quantification, and lack of proven clinical value. These limitations prevent the achievement of the fifth V of big data systems, which is the clinical value derived from the application of AI in clinical research and practice. Without overcoming these limitations, AI models remain only theoretical tools that are not applicable in the real world [10].

Education and implementation. Training physicians to understand the potential and limits of the clinical use of AI [19,20] and studying strategies for implementing these tools in daily clinical practice are essential steps to realize their full potential [13,21]. The risk is either not using them or using them incorrectly, which implies underuse or error, respectively.
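The “garbage in, garbage out” rule described under low-quality data can be made concrete with a simple audit run before any model is trained. The sketch below is only an illustration under stated assumptions: the field names, the plausibility limits in `PLAUSIBLE_RANGES`, and the function `audit_records` are hypothetical, and real limits would have to come from the study protocol and clinical experts.

```python
# Hypothetical plausibility limits, for illustration only.
PLAUSIBLE_RANGES = {
    "age_years": (0, 120),
    "heart_rate_bpm": (20, 250),
    "spo2_percent": (50, 100),
}


def audit_records(records):
    """Count missing and implausible values per field and list duplicate IDs."""
    report = {name: {"missing": 0, "out_of_range": 0} for name in PLAUSIBLE_RANGES}
    seen_ids = set()
    duplicate_ids = set()
    for rec in records:
        pid = rec.get("patient_id")
        if pid in seen_ids:
            duplicate_ids.add(pid)
        seen_ids.add(pid)
        for name, (low, high) in PLAUSIBLE_RANGES.items():
            value = rec.get(name)
            if value is None:
                report[name]["missing"] += 1
            elif not (low <= value <= high):
                report[name]["out_of_range"] += 1
    return report, sorted(duplicate_ids)


# Invented records containing typical defects: an impossible age,
# a missing heart rate, an implausible saturation, and a repeated ID.
records = [
    {"patient_id": "A1", "age_years": 54, "heart_rate_bpm": 72, "spo2_percent": 98},
    {"patient_id": "A2", "age_years": 430, "heart_rate_bpm": None, "spo2_percent": 97},
    {"patient_id": "A2", "age_years": 61, "heart_rate_bpm": 80, "spo2_percent": 12},
]

report, duplicates = audit_records(records)
print(report)
print("duplicate IDs:", duplicates)
```

An audit of this kind does not repair the data; it only makes defects visible so that researchers can decide whether to correct, exclude, or investigate the affected records.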

Notably, clinical researchers and healthcare professionals employing AI techniques need to know these issues and try to address them (Table 1). However, not all roadblocks currently have a clear and practical solution, and several studies are underway to understand the best ways to overcome current limitations.

Table 1.

Relevant issues and main specific items.

| RELEVANT ISSUES | MAIN SPECIFIC ITEMS |
|---|---|
| ETHICAL ISSUES | |
| LEGAL ISSUES | |
| EDUCATION AND IMPLEMENTATION | |
| QUALITY OF DATA | |
| CLINICAL APPLICATION | |

Legend. Relevant challenges to the use of AI techniques in medicine are reported. Next to them, some of the main specific items that are essential to developing tests or using an AI-based clinical decision support system are listed. These issues should be addressed or researched whenever an AI tool for healthcare is created or implemented in clinical practice, respectively (AI = Artificial Intelligence).

Obviously, the PAC-MAN metaphor should be interpreted as a simplistic reading of a complex and multifaceted problem. In fact, it is not possible to approach the theme of AI like a video game. However, the PAC-MAN metaphor, like all metaphors, can also be useful in arousing interest in a new field of clinical and research application.

3. Game Rules for a Clinical Researcher

As in any game, a player (i.e., a clinical researcher) must follow rules to reach the targets. One of the main rules for our protagonist, PAC-MAN, is to eat all the Pac-dots in the labyrinth while avoiding being touched by ghosts. Failure to do so results in the loss of one of the available lives. Similarly, to bring a study from theory into practice, a researcher must follow guidelines and parameters to minimize bias, ensure replicability, and enhance reliability.

However, as AI in anesthesia is a relatively new field, there were initially no specific guidelines to follow. Existing guidelines did not fully adapt to the methodology and presentation of results generated using AI techniques, resulting in inadequate reporting and insufficiently robust studies. This could lead reviewers to draw incorrect conclusions [22,23]. Nowadays, there are various types of guidelines available, some of which are an extension of existing ones, to aid researchers in using AI in healthcare (Table 2) [23,24,25,26,27,28,29,30]. Adhering to these guidelines is critical for researchers, much like PAC-MAN following the game rules. Failure to do so could result in a progressive loss of the study’s robustness until it becomes clinically irrelevant. Following guidelines is a secure pathway to deliver high-quality output since they address specific AI issues and were developed to guide clinical research in this context [31]. Transparency in research is a novel feature that must be present and is one of the main aspects addressed by the guidelines. The clinical implications of using AI tools can be significant, and, therefore, applying the correct methodology is imperative [32].

Table 2.

The main guidelines regarding the use of AI in clinical research.

| Guideline | Description | Setting |
|---|---|---|
| SPIRIT-AI [23] | Standard Protocol Items: Recommendations for Interventional Trials—Artificial Intelligence | This promotes transparency and completeness for clinical trial protocols for AI interventions. |
| CONSORT-AI [25] | Consolidated Standards of Reporting Trials—Artificial Intelligence | This was developed to supplement SPIRIT-AI in order to improve the quality of trials for AI interventions. |
| STARD-AI [26] | Standards For Reporting Diagnostic Accuracy Studies—Artificial Intelligence | These guidelines help to improve transparency and completeness of reporting of diagnostic accuracy studies. |
| TRIPOD-AI [27] | Development of a reporting guideline for diagnostic and prognostic prediction studies based on artificial intelligence. Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis–Artificial Intelligence | TRIPOD-AI and PROBAST-AI will both be published to improve the reporting and critical appraisal of prediction model studies that applied ML techniques for diagnosis and prognosis. |
| PROBAST-AI [27] | Prediction model Risk Of Bias ASsessment Tool–Artificial Intelligence | TRIPOD-AI and PROBAST-AI will both be published to improve the reporting and critical appraisal of prediction model studies that applied ML techniques for diagnosis and prognosis. |
| MI-CLAIM [28] | Minimum Information about CLinical Artificial Intelligence Modeling | This improves the reporting of information regarding clinical AI algorithms. |
| MINIMAR [29] | MINimum Information for Medical AI Reporting | This establishes the minimum information necessary to understand intended predictions, target populations, hidden biases, and the ability to generalize these emerging technologies. |
| DECIDE-AI [30] | Developmental and Exploratory Clinical Investigation of DEcision-support systems driven by Artificial Intelligence | This improves the evaluation and reporting of human factors in clinical AI studies. This guideline will address the essential role that human factors will have in how a clinical AI algorithm performs. |

The main guidelines regarding the use of AI in clinical research [24] (AI = artificial intelligence; ML = Machine Learning).

The introduction of AI and new technologies is changing the traditional rules of the game in medicine. In recent decades, clinical practice has been founded on evidence-based medicine (EBM), which relies on the current scientific literature, individual practice, and the specific characteristics of each clinical case [33]. Although EBM is not perfect, it has become an essential part of modern medicine [33]. The disruptive force of new technologies is gradually changing this paradigm, as scientific evidence of the potential of these technologies in medicine continues to grow. These intelligent tools are increasingly entering our clinical practice, as researchers and physicians. However, their different methodology challenges the traditional pyramid of evidence, which is a cornerstone of EBM. To address this, some authors have proposed modifying the pyramid to include evidence from AI in clinical practice and medical research. This proposal would guide the evaluation of AI technologies in everyday life and help clinicians to navigate this new area of medicine. However, there is no unique game strategy for clinical researchers to follow. Just as PAC-MAN can choose different paths to tackle the maze, clinicians can reach their goals through different approaches. For example, in recent years, many predictive models have emerged that were validated internally but lacked external validation. Clinicians must consider whether it is appropriate to externally validate each proposed model before moving on to the next one, or whether there is a better approach. Ultimately, the goal should be to make the most of these technologies for our patients, and clinicians must continue to evaluate and improve their use of these tools to achieve this goal.
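The gap between internal and external validation mentioned above can be illustrated with a small, hedged sketch. It computes the area under the ROC curve (AUC) as the fraction of positive-negative pairs that the model ranks correctly, once on a development cohort and once on a cohort from another centre. The function name `auc` and both cohorts are invented for illustration and do not come from any study cited here.

```python
def auc(labels, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical predicted risks; 1 = complication occurred, 0 = it did not.
internal_labels = [1, 1, 1, 0, 0, 0]
internal_scores = [0.9, 0.8, 0.7, 0.3, 0.2, 0.1]

external_labels = [1, 1, 1, 0, 0, 0]
external_scores = [0.9, 0.4, 0.35, 0.6, 0.5, 0.1]

internal_auc = auc(internal_labels, internal_scores)
external_auc = auc(external_labels, external_scores)
print(f"internal AUC: {internal_auc:.2f}")
print(f"external AUC: {external_auc:.2f}")
```

In this toy example the model separates the development cohort perfectly but ranks barely better than chance elsewhere, which is the pattern that internal validation alone cannot reveal.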

4. AI in Anesthesia

Although comparing a researcher to a video game player may be a simplistic analogy, applying AI to clinical anesthesia requires addressing several scenarios before consolidated experience can be gained. Although the term “artificial intelligence” was coined in 1956 by the American mathematician John McCarthy, many attempts to carry out certain tasks in the field of anesthesia have been unsuccessful. However, recent advancements are slowly closing the deep chasm between AI-based research articles and their application to clinical anesthesia.

For instance, the implementation of closed-loop systems capable of accurate risk assessment, intraoperative management, automated drug delivery, and prediction of perioperative outcomes could reduce the intensity of physician work, improve efficiency, lower the rate of misdiagnosis, and lead to a fully automated anesthesia maintenance system. However, AI has its limitations and cannot replace the human skills, communication, and empathy of hospital staff. Additionally, AI systems are at a preliminary and immature stage, and their auto-updating still requires human involvement [34].

Clinicians must serve as administrators in governing the use of clinical AI. One issue they must watch for is “dataset shift”, a malfunction that occurs when an ML system underperforms due to a mismatch between the dataset with which it was developed and the data on which it is deployed. When using an AI system, clinicians should note any misalignment between the model’s predictions and their clinical judgment, and act accordingly [35]. However, the use of AI in healthcare may lead to new types of errors, which will require targeted strategies to study and adapt clinical practice.
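One simple way to look for dataset shift in a single input variable is to compare its distribution in the development data with its distribution at the deployment site. The sketch below uses the two-sample Kolmogorov-Smirnov statistic, the largest gap between the two empirical cumulative distributions. It is an illustration under assumptions, not a method taken from the cited work: the function `ks_statistic`, the ages, and the alert level `SHIFT_ALERT_THRESHOLD` are hypothetical.

```python
from bisect import bisect_right

# Hypothetical alert level; a real threshold would need local justification.
SHIFT_ALERT_THRESHOLD = 0.3


def ks_statistic(sample_a, sample_b):
    """Largest gap between the empirical cumulative distributions."""
    a = sorted(sample_a)
    b = sorted(sample_b)
    gap = 0.0
    # The gap can only change at observed values, so checking those suffices.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


# Invented patient ages: the deployment site treats an older population.
development_ages = [40, 45, 50, 55, 60]
deployment_ages = [60, 65, 70, 75, 80]

shift = ks_statistic(development_ages, deployment_ages)
print(f"KS statistic: {shift:.2f}")
if shift > SHIFT_ALERT_THRESHOLD:
    print("Input distribution differs from development data; review before use.")
```

A check like this covers only one variable at a time and says nothing about changes in the relationship between inputs and outcomes, so it complements rather than replaces the clinician’s comparison of predictions with clinical judgment.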

The manufacturers of AI tools must declare their safety margin, and users must be aware of it. For example, Esteva et al. [36] showed that in the discrimination of benign and malignant melanocytic lesions, while human doctors overdiagnosed, the model underestimated, leading to potentially unfavorable outcomes. AI models do not consider the real-world implications of their outputs, and users must be aware of these behaviors to limit the misuse of these tools. Training is needed for this new hybrid work team, so that the use of AI tools becomes a real collaboration whose common goal is the optimization of patient outcomes rather than only the best predictive performance.

5. Clinical Practice and Research Perspectives

The ultimate goal of AI is to develop intelligent tools that can enhance our clinical practice, increase patient safety, and improve diagnostic and treatment accuracy. However, AI is currently unable to provide the same level of results in all areas of medicine due to varying interests and specializations.

Radiology is an excellent example of how AI can improve clinical practice through the creation of intelligent tools. Conversely, other areas, such as predicting postoperative complications in perioperative medicine, have not yet produced the desired results. Although many predictive models have been published, most are still in the research stage, and a valid and universally applicable intelligent tool for clinical practice has yet to be developed [37,38,39,40,41,42,43,44,45].

Nevertheless, even if a model with strong performance is developed, it is not a guarantee of success. According to the game metaphor, “true victory” is only achieved if the model created is valid and applicable to daily clinical practice (Figure 2).

Figure 2.

An example of AI in clinical practice. On the left side (A), the researcher manages to win the challenge about AI in radiology scenario (“NEXT LEVEL”). On the right side (B), the researcher fails to achieve the goal in perioperative medicine (“GAME OVER”), as many issues about AI are still to be solved in this context, represented by ghosts. “INSERT COIN” identifies the many challenges that AI faces in clinical practice before its widespread use.

By following this approach, it is possible to enhance the applications of AI and explore new avenues for future research. For example, Explainable AI is an emerging research field that aims to create AI systems capable of justifying their decisions and offering insight into the reasoning behind their conclusions. This could be especially valuable in medical contexts, where comprehending the rationale behind a decision is critical [46]. Additionally, predictive analytics is an AI domain that can leverage past data to make predictions about future events. In the medical domain, predictive analytics has the potential to anticipate patient outcomes and aid healthcare practitioners in their treatment decision-making [47]. Furthermore, Natural Language Processing (NLP) is an AI field focused on enabling interaction between humans and computers using natural language. Remarkably, there is a great potential for future research to investigate how NLP can be utilized in healthcare settings to enhance communication between healthcare professionals and patients [48]. Finally, multi-modal data analysis involves combining data from different sources, such as medical images, clinical notes, and genetic data, to provide a more comprehensive picture of a patient’s health [49].

6. Conclusions

The PAC-MAN analogy can serve as a useful reminder of the essential role that data play in clinical research, and highlights the importance of gathering, analyzing, and utilizing data in a thoughtful and strategic manner. Just as PAC-MAN must navigate through a complex maze to collect all the dots, clinical researchers must carefully gather and analyze data from a variety of sources, often working through complex and nuanced issues to ensure that the data they collect are accurate and relevant to their research question.

In the future of healthcare, and particularly in fields such as anesthesia and critical care medicine, AI is set to continue playing a significant role, and it is crucial to adopt suitable technologies that uphold medical ethics principles. Nevertheless, while AI presents numerous benefits, it is a complex tool that requires strict adherence to precautions to prevent any potential negative outcomes.

AI, being a completely new field of clinical and research application with potentially enormous future developments, is open to speculation. Such speculation is dangerous, not only when it comes from those who want to automatically replace human intelligence with artificial intelligence, but even more so in the field of research. The human factor is fundamental in a field such as Anesthesia and Critical Care, where not everything can be reduced to science. If the human factor is eliminated from research, this will also inevitably produce disasters in the clinical field. Like any change, the advent of AI needs to be governed and addressed.

Author Contributions

Conceptualization, E.G.B., M.C., A.V. and V.B.; methodology, E.G.B., A.V., M.C. and V.B.; software, E.G.B., R.L., C.C. and V.B.; validation, E.G.B., R.L., C.C. and V.B.; writing—original draft preparation, E.G.B., A.V., M.C. and V.B.; writing—review and editing, E.G.B., A.V., R.L., C.C., M.C. and V.B.; visualization, E.G.B. and V.B.; supervision, E.G.B. and V.B.; project administration, E.G.B., A.V. and V.B. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research received no external funding.

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1.Harnett J.D. Research Ethics for Clinical Researchers. Methods Mol. Biol. 2021;2249:53–64. doi: 10.1007/978-1-0716-1138-8_4. [DOI] [PubMed] [Google Scholar]

- 2.Cobianchi L., Piccolo D., Dal Mas F., Agnoletti V., Ansaloni L., Balch J., Biffl W., Butturini G., Catena F., Coccolini F., et al. Surgeons’ Perspectives on Artificial Intelligence to Support Clinical Decision-Making in Trauma and Emergency Contexts: Results from an International Survey. World J. Emerg. Surg. 2023;18:1. doi: 10.1186/s13017-022-00467-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Weissler E.H., Naumann T., Andersson T., Ranganath R., Elemento O., Luo Y., Freitag D.F., Benoit J., Hughes M.C., Khan F., et al. The Role of Machine Learning in Clinical Research: Transforming the Future of Evidence Generation. Trials. 2021;22:537. doi: 10.1186/s13063-021-05489-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Klumpp M., Hintze M., Immonen M., Ródenas-Rigla F., Pilati F., Aparicio-Martínez F., Çelebi D., Liebig T., Jirstrand M., Urbann O., et al. Artificial Intelligence for Hospital Health Care: Application Cases and Answers to Challenges in European Hospitals. Healthcare. 2021;9:961. doi: 10.3390/healthcare9080961. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Dolezel D., McLeod A. Big Data Analytics in Healthcare: Investigating the Diffusion of Innovation. Perspect. Health Inf. Manag. 2019;16:1a. [PMC free article] [PubMed] [Google Scholar]

- 6.Garcia-Vidal C., Sanjuan G., Puerta-Alcalde P., Moreno-García E., Soriano A. Artificial Intelligence to Support Clinical Decision-Making Processes. EBioMedicine. 2019;46:27–29. doi: 10.1016/j.ebiom.2019.07.019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Yu K.-H., Beam A.L., Kohane I.S. Artificial Intelligence in Healthcare. Nat. Biomed. Eng. 2018;2:719–731. doi: 10.1038/s41551-018-0305-z. [DOI] [PubMed] [Google Scholar]

- 8.Hogg H.D.J., Al-Zubaidy M., Technology Enhanced Macular Services Study Reference Group. Talks J., Denniston A.K., Kelly C.J., Malawana J., Papoutsi C., Teare M.D., Keane P.A., et al. Stakeholder Perspectives of Clinical Artificial Intelligence Implementation: Systematic Review of Qualitative Evidence. J. Med. Internet Res. 2023;25:e39742. doi: 10.2196/39742. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Gallagher M., Ryan A. Learning to Play Pac-Man: An Evolutionary, Rule-Based Approach; Proceedings of the IEEE Conference Publication The 2003 Congress on Evolutionary Computation; Canberra, ACT, Australia. 8–12 December 2003; [(accessed on 10 January 2023)]. Available online: https://ieeexplore.ieee.org/document/1299397. [Google Scholar]

- 10.“DeNero J., Klein D. Teaching Introductory Artificial Intelligence with Pac-Man; Proceedings of the AAAI Conference on Artificial Intelligence; Atlanta, GA, USA. 11–15 July 2010; [(accessed on 10 January 2023)]. Available online: https://ojs.aaai.org/index.php/AAAI/article/view/18829. [Google Scholar]

- 11.Ahn E. Introducing Big Data Analysis Using Data from National Health Insurance Service. Korean J. Anesthesiol. 2020;73:205–211. doi: 10.4097/kja.20129. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Bellini V., Cascella M., Cutugno F., Russo M., Lanza R., Compagnone C., Bignami E.G. Understanding Basic Principles of Artificial Intelligence: A Practical Guide for Intensivists. Acta Biomed. 2022;93:e2022297. doi: 10.23750/abm.v93i5.13626. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Algoretica-Parole Nuove-Accademia Della Crusca. [(accessed on 11 February 2023)]. Available online: https://accademiadellacrusca.it/it/parole-nuove/algoretica/18479.

- 14.Mantini A. Technological Sustainability and Artificial Intelligence Algor-Ethics. Sustainability. 2022;14:3215. doi: 10.3390/su14063215. [DOI] [Google Scholar]

- 15.Recommendation on the Ethics of Artificial Intelligence-UNESCO Digital Library. [(accessed on 11 February 2023)]. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000381137.

- 16.Laptev V.A., Ershova I.V., Feyzrakhmanova D.R. Medical Applications of Artificial Intelligence (Legal Aspects and Future Prospects) Laws. 2022;11:3. doi: 10.3390/laws11010003. [DOI] [Google Scholar]

- 17.Zhang J., Zhang Z. Ethics and Governance of Trustworthy Medical Artificial Intelligence. BMC Med. Inform. Decis. Mak. 2023;23:7. doi: 10.1186/s12911-023-02103-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Petrucci E., Vittori A., Cascella M., Vergallo A., Fiore G., Luciani A., Pizzi B., Degan G., Fineschi V., Marinangeli F. Litigation in Anesthesia and Intensive Care Units: An Italian Retrospective Study. Healthcare. 2021;9:1012. doi: 10.3390/healthcare9081012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Bellini V., Valente M., Pelosi P., Del Rio P., Bignami E. Big Data and Artificial Intelligence in Intensive Care Unit: From “Bla, Bla, Bla” to the Incredible Five V’s. Neurocrit. Care. 2022;37:170–172. doi: 10.1007/s12028-022-01472-9. [DOI] [PubMed] [Google Scholar]

- 20.Hatherley J., Sparrow R., Howard M. The Virtues of Interpretable Medical Artificial Intelligence. Camb. Q. Healthc. Ethics. 2022:1–10. doi: 10.1017/S0963180122000305. [DOI] [PubMed] [Google Scholar]

- 21.Bellini V., Montomoli J., Bignami E. Poor Quality Data, Privacy, Lack of Certifications: The Lethal Triad of New Technologies in Intensive Care. Intensive Care Med. 2021;47:1052–1053. doi: 10.1007/s00134-021-06473-4. [DOI] [PubMed] [Google Scholar]

- 22.Luo W., Phung D., Tran T., Gupta S., Rana S., Karmakar C., Shilton A., Yearwood J., Dimitrova N., Ho T.B., et al. Guidelines for Developing and Reporting Machine Learning Predictive Models in Biomedical Research: A Multidisciplinary View. J. Med. Internet Res. 2016;18:e323. doi: 10.2196/jmir.5870. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Cruz Rivera S., Liu X., Chan A.-W., Denniston A.K., Calvert M.J., SPIRIT-AI and CONSORT-AI Working Group. SPIRIT-AI and CONSORT-AI Steering Group SPIRIT-AI and CONSORT-AI Consensus Group Guidelines for Clinical Trial Protocols for Interventions Involving Artificial Intelligence: The SPIRIT-AI Extension. Nat. Med. 2020;26:1351–1363. doi: 10.1038/s41591-020-1037-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Crossnohere N.L., Elsaid M., Paskett J., Bose-Brill S., Bridges J.F.P. Guidelines for Artificial Intelligence in Medicine: Literature Review and Content Analysis of Frameworks. J. Med. Internet Res. 2022;24:e36823. doi: 10.2196/36823. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Liu X., Cruz Rivera S., Moher D., Calvert M.J., Denniston A.K., SPIRIT-AI and CONSORT-AI Working Group. Reporting Guidelines for Clinical Trial Reports for Interventions Involving Artificial Intelligence: The CONSORT-AI Extension. Lancet Digit. Health. 2020;2:e537–e548. doi: 10.1016/S2589-7500(20)30218-1.

- 26. Sounderajah V., Ashrafian H., Aggarwal R., De Fauw J., Denniston A.K., Greaves F., Karthikesalingam A., King D., Liu X., Markar S.R., et al. Developing Specific Reporting Guidelines for Diagnostic Accuracy Studies Assessing AI Interventions: The STARD-AI Steering Group. Nat. Med. 2020;26:807–808. doi: 10.1038/s41591-020-0941-1.

- 27. Collins G.S., Dhiman P., Andaur Navarro C.L., Ma J., Hooft L., Reitsma J.B., Logullo P., Beam A.L., Peng L., Van Calster B., et al. Protocol for Development of a Reporting Guideline (TRIPOD-AI) and Risk of Bias Tool (PROBAST-AI) for Diagnostic and Prognostic Prediction Model Studies Based on Artificial Intelligence. BMJ Open. 2021;11:e048008. doi: 10.1136/bmjopen-2020-048008.

- 28. Norgeot B., Quer G., Beaulieu-Jones B.K., Torkamani A., Dias R., Gianfrancesco M., Arnaout R., Kohane I.S., Saria S., Topol E., et al. Minimum Information about Clinical Artificial Intelligence Modeling: The MI-CLAIM Checklist. Nat. Med. 2020;26:1320–1324. doi: 10.1038/s41591-020-1041-y.

- 29. Hernandez-Boussard T., Bozkurt S., Ioannidis J.P.A., Shah N.H. MINIMAR (MINimum Information for Medical AI Reporting): Developing Reporting Standards for Artificial Intelligence in Health Care. J. Am. Med. Inform. Assoc. 2020;27:2011–2015. doi: 10.1093/jamia/ocaa088.

- 30. Vasey B., Nagendran M., Campbell B., Clifton D.A., Collins G.S., Denaxas S., Denniston A.K., Faes L., Geerts B., Ibrahim M., et al. Reporting Guideline for the Early-Stage Clinical Evaluation of Decision Support Systems Driven by Artificial Intelligence: DECIDE-AI. Nat. Med. 2022;28:924–933. doi: 10.1038/s41591-022-01772-9.

- 31. Rogers W.A., Draper H., Carter S.M. Evaluation of Artificial Intelligence Clinical Applications: Detailed Case Analyses Show Value of Healthcare Ethics Approach in Identifying Patient Care Issues. Bioethics. 2021;35:623–633. doi: 10.1111/bioe.12885.

- 32. Reddy S., Rogers W., Makinen V.-P., Coiera E., Brown P., Wenzel M., Weicken E., Ansari S., Mathur P., Casey A., et al. Evaluation Framework to Guide Implementation of AI Systems into Healthcare Settings. BMJ Health Care Inform. 2021;28:e100444. doi: 10.1136/bmjhci-2021-100444.

- 33. Szajewska H. Evidence-Based Medicine and Clinical Research: Both Are Needed, Neither Is Perfect. Ann. Nutr. Metab. 2018;72(Suppl. 3):13–23. doi: 10.1159/000487375.

- 34. Garg R., Patel A., Hoda W. Emerging Role of Artificial Intelligence in Medical Sciences-Are We Ready! J. Anaesthesiol. Clin. Pharmacol. 2021;37:35–36. doi: 10.4103/joacp.JOACP_634_20.

- 35. Finlayson S.G., Subbaswamy A., Singh K., Bowers J., Kupke A., Zittrain J., Kohane I.S., Saria S. The Clinician and Dataset Shift in Artificial Intelligence. N. Engl. J. Med. 2021;385:283–286. doi: 10.1056/NEJMc2104626.

- 36. Esteva A., Kuprel B., Novoa R.A., Ko J., Swetter S.M., Blau H.M., Thrun S. Dermatologist-Level Classification of Skin Cancer with Deep Neural Networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056.

- 37. Lee S., Summers R.M. Clinical Artificial Intelligence Applications in Radiology: Chest and Abdomen. Radiol. Clin. N. Am. 2021;59:987–1002. doi: 10.1016/j.rcl.2021.07.001.

- 38. Groot Lipman K.B.W., de Gooijer C.J., Boellaard T.N., van der Heijden F., Beets-Tan R.G.H., Bodalal Z., Trebeschi S., Burgers J.A. Artificial Intelligence-Based Diagnosis of Asbestosis: Analysis of a Database with Applicants for Asbestosis State Aid. Eur. Radiol. 2022. doi: 10.1007/s00330-022-09304-2.

- 39. Cascella M., Montomoli J., Bellini V., Bignami E. Evaluating the Feasibility of ChatGPT in Healthcare: An Analysis of Multiple Clinical and Research Scenarios. J. Med. Syst. 2023;47:33. doi: 10.1007/s10916-023-01925-4.

- 40. Cascella M., Monaco F., Nocerino D., Chinè E., Carpenedo R., Picerno P., Migliaccio L., Armignacco A., Franceschini G., Coluccia S., et al. Bibliometric Network Analysis on Rapid-Onset Opioids for Breakthrough Cancer Pain Treatment. J. Pain Symptom Manag. 2022;63:1041–1050. doi: 10.1016/j.jpainsymman.2022.01.023.

- 41. Saputra D.C.E., Sunat K., Ratnaningsih T. A New Artificial Intelligence Approach Using Extreme Learning Machine as the Potentially Effective Model to Predict and Analyze the Diagnosis of Anemia. Healthcare. 2023;11:697. doi: 10.3390/healthcare11050697.

- 42. Cascella M., Coluccia S., Monaco F., Schiavo D., Nocerino D., Grizzuti M., Romano M.C., Cuomo A. Different Machine Learning Approaches for Implementing Telehealth-Based Cancer Pain Management Strategies. J. Clin. Med. 2022;11:5484. doi: 10.3390/jcm11185484.

- 43. Lee T.-S., Lu C.-J. Health Informatics: The Foundations of Public Health. Healthcare. 2023;11:798. doi: 10.3390/healthcare11060798.

- 44. Cascella M., Racca E., Nappi A., Coluccia S., Maione S., Luongo L., Guida F., Avallone A., Cuomo A. Bayesian Network Analysis for Prediction of Unplanned Hospital Readmissions of Cancer Patients with Breakthrough Cancer Pain and Complex Care Needs. Healthcare. 2022;10:1853. doi: 10.3390/healthcare10101853.

- 45. Moussaid A., Zrira N., Benmiloud I., Farahat Z., Karmoun Y., Benzidia Y., Mouline S., El Abdi B., Bourkadi J.E., Ngote N. On the Implementation of a Post-Pandemic Deep Learning Algorithm Based on a Hybrid CT-Scan/X-Ray Images Classification Applied to Pneumonia Categories. Healthcare. 2023;11:662. doi: 10.3390/healthcare11050662.

- 46. Linardatos P., Papastefanopoulos V., Kotsiantis S. Explainable AI: A Review of Machine Learning Interpretability Methods. Entropy. 2020;23:18. doi: 10.3390/e23010018.

- 47. Sghir N., Adadi A., Lahmer M. Recent Advances in Predictive Learning Analytics: A Decade Systematic Review (2012–2022). Educ. Inf. Technol. 2022:1–35. doi: 10.1007/s10639-022-11536-0.

- 48. Wu S., Roberts K., Datta S., Du J., Ji Z., Si Y., Soni S., Wang Q., Wei Q., Xiang Y., et al. Deep Learning in Clinical Natural Language Processing: A Methodical Review. J. Am. Med. Inform. Assoc. 2020;27:457–470. doi: 10.1093/jamia/ocz200.

- 49. Fu J., Wang H., Na R., Jisaihan A., Wang Z., Ohno Y. Recent Advancements in Digital Health Management Using Multi-Modal Signal Monitoring. Math. Biosci. Eng. 2023;20:5194–5222. doi: 10.3934/mbe.2023241.

Data Availability Statement

Not applicable.