Abstract

The Sammen Om Demens (together for dementia), a citizen science project developing and implementing an AI-based smartphone app targeting citizens with dementia, is presented as an illustrative case of ethical, applied AI entailing interdisciplinary collaborations and inclusive and participative scientific practices engaging citizens, end users, and potential recipients of technological-digital innovation. Accordingly, the participatory Value-Sensitive Design of the smartphone app (a tracking device) is explored and explained across all of its phases (conceptual, empirical, and technical). Namely, from value construction and value elicitation to the delivery, after various iterations engaging both expert and non-expert stakeholders, of an embodied prototype built on and tailored to their values. The emphasis is on how moral dilemmas and value conflicts, often resulting from diverse people’s needs or vested interests, have been resolved in practice to deliver a unique digital artifact with moral imagination that fulfills vital ethical–social desiderata without undermining technical efficiency. The result is an AI-based tool for the management and care of dementia that can be considered more ethical and democratic, since it meaningfully reflects diverse citizens' values and expectations on the app. In the conclusion, we suggest that the co-design methodology outlined in this study is suitable to generate more explainable and trustworthy AI, and also, it helps to advance towards technical-digital innovation holding a human face.

Keywords: Value-sensitive design, Applied AI, Citizen science, Stakeholder engagement, eHealth and mHealth technologies, Explainable and trustworthy AI

Introduction

Value-Sensitive Design (VSD) is a widely used tripartite and iterative methodology for the design of ethical technologies. It comprises three main phases, conceptual, empirical, and technological (see [36, 52, 53, 131–133] inter alia), and has been applied to develop manifold ethical technological-digital tools and infrastructures (more details in Sect. 3). As Friedman et al. sum up, VSD is “a theoretically grounded approach to the design of technology that accounts for human values in a principled and comprehensive manner throughout the design process” (Friedman et al. [56], p. 1; see also Friedman et al. [55]). More recently, VSD has been connected to the so-called “AI for social good” [129].

In this paper, we aim to demonstrate how VSD can profitably be applied to the design of AI, digital health, and mHealth/eHealth1 technologies targeting vulnerable individuals such as people living with dementia, who are directly involved in all three of its phases. The World Health Organization (WHO) defines mHealth/eHealth as “the use of mobile and wireless devices to improve health outcomes, healthcare services and, related research” ([141], p. 6). Consistently, an AI-based smartphone app belonging to such mHealth/eHealth technologies has been developed in the Sammen Om Demens (SOD) project (together for dementia). This project is promoted by the Danish municipality of Nyborg (Southern Denmark region) within the frame of “smart cities”2 initiatives and an overall strategy for the management and care of dementia3 that actively involves the local community. Briefly, the SOD app can transform a smartphone into an AI-based tracking device (a form of so-called surveillance technology) that automatically detects when a person with dementia gets lost and then triggers an alert to family members and the nearest local volunteers to jointly involve them in a rescue operation (all of them must have previously downloaded the app on their smartphones). A citizen science stance has been adopted to validate an original co-design approach; namely, an inclusive and participative VSD that proactively engages relevant stakeholders (both experts and non-experts), end users, and potential recipients of the technology in question in its technical development and in the outline of a protocol for its functioning. The rationale behind this strategy is to find a legitimate balance and trade-off between the personal privacy of the users and the functionality of the app (i.e., the surveillance ability).

Although a univocal definition is lacking, citizen science is often used as an umbrella term to describe a variety of ways in which citizens and the public can participate in research activities producing knowledge (as data collectors and co-analysts, as policy co-developers and so on) inspired by the principles of co-creation, cooperation, sustainability, and social impact. Frequently, the term is employed to bring together scientific ideals, such as participatory science, post-normal science, civic science, and crowd science (see [68, 138]). A new-fangled movement has been led by the European Citizen Science Association (ECSA) wherein a multidisciplinary community of scholars and practitioners has established the ten leading principles of citizen science [65, 66, 102]4. While providing new principles or definitions of citizen science is not an aim of this study, the general ideal behind this stance has been adopted to frame the participatory VSD of the SOD mHealth app and justify the direct involvement of a wide range of stakeholders; in primis citizens with dementia (CwD), but also relatives, social workers, healthcare professionals, and citizen representatives from the (local) public in the making of a prototype and the establishment of an agreed protocol for the app implementation and usage.

The pragmatic approach adopted in this study is rather original for two main reasons: (a) engaging stakeholders with cognitive or mental problems is not a common praxis either in dementia studies [12, 16, 109]5 or in the design of eHealth/mHealth technologies aimed at improving mental healthcare targeting individuals with cognitive impairments (see Maathuis et al. [84], p. 872). Additionally, in the existing VSD literature, a few studies have reported iterations directly coming from promised enhanced design practices incorporating values (see [140], p. 3) and this trend comprises eHealth/mHealth technologies. A remarkable exception concerning the involvement of patients with mental diseases in VSD is represented by the design of a “web-based QoL-instrument” for measuring people’s mental health problems (see Maathuis et al. [84]).6 Here, values, such as autonomy, efficiency, empowerment, universal usability, privacy, redefinition of roles, (redistribution) of responsibilities, reliability, solidarity, surveillance, and trust, are seen to be central for the technology to develop. Although stakeholders’ values are elicited by means of participative practices and empirical methods, a persistent limitation of this study relies on reporting only the first stages of VSD (conceptual and empirical phases). The most important phase of VSD, namely, the technical phase in which previously selected values are weighed and prioritized to be meaningfully embodied in the prototype of a brand-new technology having ethical and social importance, is missing.

This is not surprising since how to translate a set of abstract values into concrete design requirements and technical solutions is a big challenge in technological design and a notorious pending task in the current AI studies (see [35] Ch. 6–12, [89]). That is, producing embodied AI and technological-digital tools reflecting not only desirable values and virtuous principles but also capable of producing the expected positive ethical–social effects once implemented in society, seems difficult enough (e.g., AI enhancing human agency, trust or, fairness). Due to an exclusive focus on the conceptual level and in selecting a set of universal values, mainstream accounts in AI ethics [50, 51, 125] or digital humanities [14] seem unable to satisfactorily link theory and practice. As recently evidenced by Gerdes [61] despite substantial theoretical advancements in AI ethics, such as the emerging field of “AI ethics by design” [21, 129], either interdisciplinary teamwork or practical applications of participatory methods, jointly involving AI experts and citizens/the public in the design of AI-based technologies and algorithms, are still rather infrequent at an applied science level. However, these aspects seem crucial to advance the current applied research on explainable AI and trustworthy AI, especially in view of engendering public trust on algorithmic decisions and technical-digital innovation generated [48, 49, 95, 98]. Both interdisciplinarity and participatory design practices, we maintain, can be fruitful to open the “black box” in ways that the AI and algorithms generated can publicly be acceptable; namely, AI and algorithms can be perceived as effective, fair, secure, and trustworthy, since the design process is sufficiently transparent (inclusive, participative and, value-sensitive), and thus, outputs are well understood by recipients.

In this paper, the shortcomings regarding interdisciplinarity and stakeholder participation in the fields of AI ethics and AI ethics by design are addressed by focusing on the participatory VSD of the SOD mHealth app. While we do not discuss why the design of AI should involve ethical considerations (this is assumed from the beginning) or specific values (as usual in AI ethics), the main focus is on the rationale of the design process to extrapolating pragmatic considerations. In contrast to standard approaches to ethical AI, this study is less concerned with discussing ethical objections arising in the design of AI, and instead, it concentrates on how both elements (interdisciplinarity and stakeholder participation) have been accommodated in the actual doing of the SOD app and clarifies the advantages for delivering ethical AI with higher social relevance and utility. Quite originally, the following sections report all phases of VSD: from (empirical) value construction and value elicitation to the delivery after several iterations, all of them actively engaging a wide range of diverse stakeholders, of an embodied prototype meaningfully built on their values. In targeting the stakeholder engagement and participatory design activities within the SOD app/project, an emphasis is on how value dilemmas and value conflicts, regularly resulting from diverse people’s needs or vested interests, have been resolved pragmatically to deliver a unique digital artifact with moral imagination that fulfills vital ethical–social–democratic desiderata without weakening technical efficiency (as often believed by technical people/scholars). To our knowledge, it is the first time that an AI-based technology for the management and care of dementia (a comprehensive review in [67]) has been developed by directly involving a wide range of stakeholders, including CwD, through innovative, participatory VSD methods (on that, see also Andersen and Chiarandini [7]).

In the next sections, the importance of adopting a procedural ethics stance and procedural values (i.e., an original, procedural-deliberative VSD) and thus, of choosing design values underlying the SOD app by means of empirical techniques holding a dialogic-deliberative rationale, is stated and defended against rival views (Sect. 3). Next, we expound on how different people's values have been elicited by means of an inclusive and participative empirical procedure and translated into concrete design requirements and specific embodied technical-digital solutions (Sects. 4, 4.1, 4.2 and, 5). Finally (Sect. 6), we suggest that the co-design methodology resulting from the participatory VSD of the SOD mHealth app could profitably be extended to other AI fields and digital technologies to generate genuine, human-centered and society-oriented AI and technological-digital innovation.

The case: the Sammen Om Demens project (together for dementia)

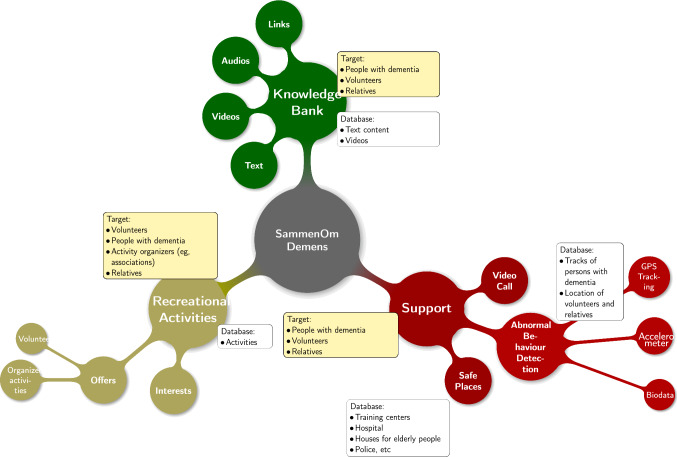

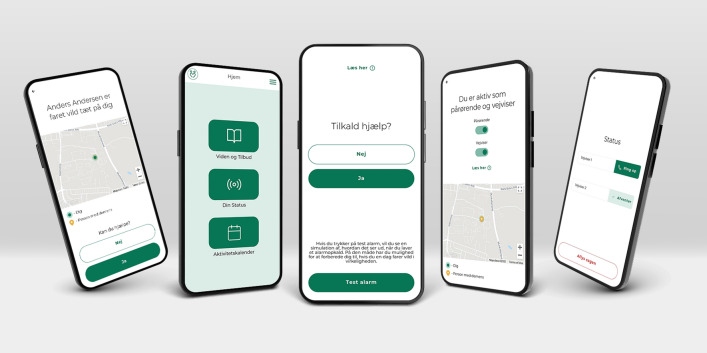

Sammen Om Demens (SOD) is a citizen science project co-funded by the municipality of Nyborg (Southern Denmark region) and TrygFonden (a private foundation)7 that is developing a smartphone app targeting citizens with mild-to-moderate forms of dementia with the aim of enhancing their and their relatives’ safety, well-being, and quality of life. The SOD mHealth app8 is the result of a close collaboration of public and private actors with a university9 to carry out genuine interdisciplinary teamwork and participatory science practices engaging relevant stakeholders. The methodology known as VSD has been adopted to conceptualize and develop both the user interfaces and (partly) the detection algorithms underlying the surveillance technology in question. As anticipated, the SOD mHealth app transforms a smartphone into a tracking device aimed at helping citizens with dementia (CwD) who get lost (so-called “wandering” behavior) to get in contact with their relatives and be rescued with the support of volunteering citizens. If a CwD deviates from his/her usual route, the SOD app automatically triggers an alarm that activates a relative and three volunteers in the proximity (whose contacts and profiles are recorded by the app). They intervene to bring the lost person to a safe place (e.g., the local dementia centre, a police station, and the like) and, finally, to meet the next of kin, who is in charge of managing and co-ordinating the entire “rescue operation” (as we named this task in the SOD project). For final users (CwD, volunteers, and relatives), the SOD app consists of three main components, each supplying a different functionality. First, a knowledge bank10 provides citizens with ready-to-use information on dementia as a disease and the related services offered by the municipality of Nyborg.
This part includes guidelines for recognizing the disease and obtaining advice on how to get aid and assistance from local health authorities specialized in the treatment and management of dementia and affected people. Second, a help component embeds the main functionalities of the app and, in case of need, activates relatives and the three nearest volunteers. This is the element that has been developed by applying participatory VSD methods; how stakeholders’ input has been used to shape its main technical features is described extensively in Sect. 5. Finally, the SOD app includes a recreational activity calendar meant to facilitate social gathering and enable encounters between CwD and local volunteers who share the same interests. An additional task of this last component (currently inactive)11 will be to assist CwD in finding accompanying persons for events or activities of common interest. A conceptual map summarizing the content of the SOD app is shown in Fig. 1.

Fig. 1.

The conceptual map of the SOD app
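
The alert flow of the help component described in this section (a deviation from the usual route triggers an alarm that activates a relative and the three nearest volunteers) can be illustrated with a minimal Python sketch. It is not the SOD project's detection algorithm, which is not specified here. The distance-to-route rule, the 300 m threshold, and all names (`Position`, `deviates_from_route`, `select_responders`, `check_and_alert`) are hypothetical assumptions introduced only for illustration.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


@dataclass(frozen=True)
class Position:
    lat: float  # degrees
    lon: float  # degrees


def haversine_m(a: Position, b: Position) -> float:
    """Great-circle distance between two positions, in metres."""
    dlat = radians(b.lat - a.lat)
    dlon = radians(b.lon - a.lon)
    h = sin(dlat / 2) ** 2 + cos(radians(a.lat)) * cos(radians(b.lat)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_M * asin(sqrt(h))


def deviates_from_route(current: Position, usual_route: list[Position],
                        threshold_m: float = 300.0) -> bool:
    """Assumed rule: the person has deviated when the nearest waypoint of the
    usual route is farther away than threshold_m (a hypothetical value).
    usual_route must contain at least one waypoint."""
    return min(haversine_m(current, p) for p in usual_route) > threshold_m


def select_responders(current: Position, relative: str,
                      volunteers: dict[str, Position],
                      n_volunteers: int = 3) -> list[str]:
    """Return the relative followed by the n nearest volunteers, closest first."""
    nearest = sorted(volunteers, key=lambda name: haversine_m(current, volunteers[name]))
    return [relative] + nearest[:n_volunteers]


def check_and_alert(current: Position, usual_route: list[Position], relative: str,
                    volunteers: dict[str, Position],
                    threshold_m: float = 300.0) -> list[str]:
    """Return the list of people to alert; empty when no deviation is detected."""
    if not deviates_from_route(current, usual_route, threshold_m):
        return []
    return select_responders(current, relative, volunteers)
```

In this sketch the relative is always listed first, mirroring the coordinating role of the next of kin in the rescue operation. The privacy and functionality trade-offs discussed in this paper would determine, for instance, who is allowed to see the position and when; the sketch does not model those choices.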

The participatory VSD of the SOD mHealth app: the theory

In recent times, different ethical methods have been devised to incorporate human values in the design of AI and technological innovation and they have been applied to a number of different fields to develop digital-technological artifacts embracing an ethical stance (comprehensive reviews in [43, 140]).

Particularly, VSD12 has been applied successfully first to the field of information and communication technologies (ICTs) (see [18, 19, 47, 55, 57, 87]) and, over the years, the approach has been extended to the fields of energy technologies [28, 40, 91], artificial intelligence [29, 105, 128, 129], and nanotechnology [126, 127]. Along the same lines, VSD has been employed to develop specific medical technologies. For example, Denning et al. [39] used the VSD framework to inform the design of security mechanisms for wireless implantable medical devices (IMDs). While Schikhof et al. [115], Dahl and Holbø [37], and Burmeister [26] applied the principles of VSD to develop assistive technologies to be used in dementia care, Van Wynsberghe [135] used VSD to create a framework for the design of care robots. Whereas VSD has predominantly been applied in the field of Human–Computer Interaction (HCI),13 medical and care technologies in somatic health care, including dementia care, have benefited from this approach too. However, VSD has barely been used to support the design of eHealth/mHealth technological innovation (an exception is Maathuis et al. [84]), where ethical reflection is urgently needed to figure out which values can be embedded in such tools and how they would shape future technical developments and actual uses (on the need for ethical insights, see [27, 44, 82, 83, 110]). In this kind of research, one foremost critical aspect, from both a philosophical and a practical standpoint, is how to select the values of ethical and social importance (and according to what ethical theory) that VSD should rely on to develop ethical AI and digital technologies capable of addressing moral dilemmas and/or solving social problems associated with the technology under construction, understood in its concrete settings of use [76, 85].

Two issues are central in practical applications:

Whose values: should values be selected (a) by expert judgment, (b) by engaging stakeholders from civil society, or (c) by relying on the values of (powerful) institutional promoters and/or technologist-designers?

By what methods should values be elicited: (a) by abstract ethical reasoning and conceptual analysis, (b) by a document analysis of former studies on similar technologies, or (c) by applying empirical techniques (quantitative/qualitative methods) to engage stakeholders, end users, potential recipients, and the public?

Although recent appraisals of studies in AI ethics have shown that the philosophical underpinnings of this academic field should be rethought, since only a small number of texts mention any major philosophical tradition or concept [9], some core meta-ethical aspects do emerge. Mainstream approaches to ethical AI and principled reflection on digital technologies, for instance, so-called “technological design for wellbeing (WB)” and “AI for social good (AI4SG)”, regularly adopt substantive ethics; namely, front-loading, expert-led, and top–down approaches to the selection of values and ethical principles informing technological-digital development and design practices (see [25, 50, 51, 76, 120, 121, 125, 130] inter alia). This interpretation is also common in the emerging field of “AI ethics by design” (see [21]), also when adopting VSD methods [29, 129], in which relying on predefined ethical frameworks or an “objective list” of (allegedly) universal values is the standard.14 Regularly, AI-specific design principles, such as human autonomy, prevention of harm, fairness, and explicability, are indicated as the best ethical principles to develop prototypes of moral AI and related technologies ([129], p. 287). Even if these values are remarkable, the main limitation (intrinsic to any substantive ethics approach) is that the values and ethical principles informing the design of AI and digital innovation are selected a priori by the theorist according to abstract ethical reflection or, at best, some rather general ethical desiderata are chosen by the most powerful stakeholders (promoters and designers) regardless of the ideals or specific needs of local stakeholders, end users, and other potential recipients. In this way, design values and the moral standards behind them are supposed to be universally valid and applicable irrespective of the technological-digital product at stake and the diversity in purpose or uses in different contexts and for diverse people.

Substantive ethical views and related ethical design procedures might be particularly detrimental for delivering AI and digital innovation targeting vulnerable individuals (e.g., the SOD app is conceived for assisting cognitively challenged people), since their special needs would largely remain without consideration in the design process and, thus, in the embodied outputs. While a focus on substantive ethics and substantive values can be acceptable to formulate general guidelines and/or a posteriori assessment criteria for existing technologies, it seems rather insufficient in the case of technological-digital innovation, especially when highly contentious from an ethical–social standpoint. This is the case of surveillance technologies, to which the AI-based SOD mHealth app legitimately belongs. They have been the object of lively debates in recent years, but the efforts to go beyond the usual trade-off between privacy and security to ensure wider public acceptance have been scarcely effective [38, 58, 94]. The main problems of substantive ethical views arise precisely when a set of abstract values, all of them reasonably important (the “moral overload” problem depicted by van den [134]), need to be selected, weighted, and prioritized to be translated into concrete design requirements and specific technical solutions. These are the two phases of a VSD suitable to deliver AI4SG that van de Poel and Umbrello ([129], pp. 292–3) call (a) formulation of design requirements and (b) prototyping (the most important aspects at an applied science level).

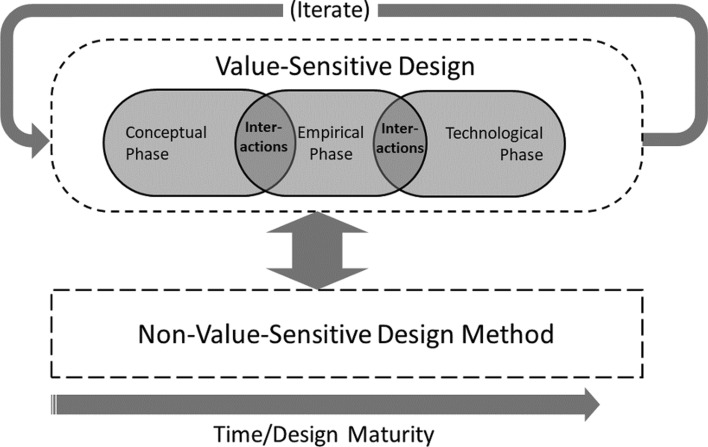

In both undertakings (formulating design requirements and prototyping), we argue that the procedural-deliberative VSD introduced by Cenci and Cawthorne [31], whose tenets underpin the participatory VSD of the SOD mHealth app, can be more adequate and fruitful than traditional substantive approaches to the good and value. As stated (cf. [31], p. 2651), and in contrast to (substantive) objective list theories, “the main ethical task does not rely on identifying a list of universal, ethical values but on avoiding ethical and scientific paternalism by guaranteeing the correctness of the social choice procedure behind the selection of plural and incommensurable values of ethical and social importance by extending participation and engagement to the decision-making”. This version of VSD and the related “open framework most positive contribution” rely on “its procedural-deliberative tenets” that are “crucial to achieve ethical-democratic goals such as enhancing stakeholders’ agency, positive freedom, self-determination as well as to boost transparency, legitimacy and accountability of both the ethical procedure and the chosen values and normative ideals” (cf. [31], p. 2651). Expounding further on the substantive vis-à-vis procedural distinction in ethical theory and justice15 exceeds the objectives of this study, which is concerned instead with practical applications and the operationalization of the assumptions of the procedural view espoused. The major practical advantages of a VSD incorporating a procedural ethics stance are that it enhances both the explicability and the fairness of the AI-based technological innovation generated (i.e., the SOD app) and, as a result, can boost public trust in the capacity of AI to solve pressing social problems (i.e., the ability of the SOD app to assist vulnerable citizens with dementia in their daily life and improve their safety and wellbeing).
In this vein, the peculiarity of a procedural-deliberative VSD (i.e., a participatory VSD) is that the conceptual and empirical phases are carried out simultaneously by engaging moral players (e.g., AI/technology developers, institutional promoters, end users, the public, or other relevant stakeholders) in creative, cooperative, and empowering dialogic processes (cf. [31], pp. 2654–5) aimed at selecting the foundational values informing the subsequent technological phase (see Fig. 2). The values previously selected are then embodied in AI and digital tools having both moral and practical significance, since they are built on and aligned to the inputs of end users and other potential recipients (i.e., the direct and indirect stakeholders’ inputs).

Fig. 2.

Schema of an interactive and iterative VSD

(Source: Cawthorne and Cenci [30])

Fundamentally, we maintain that values and normative ideals underlying the creation of ethical AI and digital innovation should not be the result of abstract ethical reflections delivering a set of universal values, and then, merely juxtaposed to the technical solutions adopted by AI experts external to the whole process. In its place, scientific practices mirroring democratic processes (e.g., deliberative workshops) and empirical investigations into the social preferences for value of a wide range of expert and non-expert stakeholders are believed to address both efficiency and vital ethical–social–democratic concerns more profitably. In the case of the SOD mHealth app, the espousal of the procedural VSD stance (instead of substantive ethics) has allowed pursuing scientific-technical and ethical–societal goals simultaneously; that is to say, pursuing the safety and well-being achievements for the primary end users (CwD) and more generally, key social objectives such as the social inclusion of disadvantaged individuals through technological progress and civic cooperation. The resulting co-design procedure is more ethical and democratic, since the values informing design practices are negotiated by way of interpersonal critical scrutiny and deliberation, while the needs of vulnerable, disadvantaged stakeholders—the worst off16 can be prioritized by design. Demonstrably, procedural values inferred from real deliberative scenarios, and not resulting from abstract moral reasoning and hypothetical deliberation, as traditional in classical procedural ethics views (e.g., John Rawls’ Theory of Justice [97]), are better suited to identify a “hierarchy of values” purposeful to “bridge the cooperative design gap” (see van de Poel [131]).

The procedural-deliberative stance behind the peculiar version of VSD defended here implies selecting design values by way of real deliberations among different stakeholder groups. The resultant ethical procedure can be not only fairer (due to the direct involvement of stakeholders), but it can also point to explicit values correlated to certain technical solutions, since AI experts are actively involved in the entire value elicitation process: to assist participants with limited technical knowledge in taking well-informed decisions. Yet, this value elicitation procedure to be reasonable enough demands to be carried out by means of recognized empirical techniques (and by fulfilling their criteria of validity, reliability, and objectivity-impartiality). In keeping with these pragmatic procedural-deliberative tenets, in the SOD mHealth app, design values have been selected empirically by engaging local stakeholders via qualitative inquiry, and then, such values have been embodied in an AI-based tool better aligned to the actual values, needs and, vested interests of end users and other potential recipients in the concrete context of its use (on “value alignment” see [41]). A similar pragmatic, ethical procedure is not only more transparent and accountable, but the selected values are legitimate, since they are really shared among participants. As a result, the AI and digital tool generated can be more explainable, trustworthy, and acceptable for all stakeholder groups involved in the design process (likely, beyond participants to a qualitative micro-study). Thus, once implemented, the AI generated is expected to have a superior ability to enhance either individuals’ well-being or the social good (and not only for the people immediately involved in the design process).

As acknowledged, extending participation to non-expert citizens (beyond promoters, designers, and ethicists) is a pending task and a major flaw, at an applied science level, of mainstream accounts in AI4SG supporting substantive ethics, which are highly questionable regarding their actual capacity to boost individual well-being or the social good in practice. We maintain that both goals (fostering well-being and the social good) are more attainable once less-standardized technical-digital products are generated by systematically engaging the largest possible number of stakeholder groups via (qualitative) participatory-deliberative methods. In the participatory VSD of the SOD app, focus group-based methods are the empirical strategy adopted to achieve acceptable levels of transparency, accountability, and fairness in the eliciting procedures behind design values and, thus, to increase trust in the AI-based tool generated. Precisely, vital democratic desiderata have been satisfied by granting open debates among different stakeholder groups in which conflicting values, needs, or vested interests (and related design implications) have been discussed and evaluated by concentrating on their underlying reasons and pragmatic consequences, mainly to balance individual and social relevance and utility. In the hierarchy of values ultimately adopted in the technical design of the SOD app (this paper, Sects. 4.1 and 4.2), special prominence has been given to the opinion of participants belonging to the most vulnerable end-user group (the worst off); namely, CwD’s aspiration to safety and wellbeing but also their legitimate wish to safeguard their personal autonomy and individual freedom (as well as an acceptable level of privacy).

Major benefits of selecting design values by means of empirical methods mirroring democratic-deliberative processes rely on that people’s dialogic interaction takes place at any moment of the decision-making while accepted standards of evidence and objectivity of the empirical methods applied are satisfied as well.17 In other words, the VSD of the SOD app can be taken as a paradigmatic example of applied AI incorporating the citizen science ideal, holding the values of liberal democracy and “interactive objectivity” [45, 46], since design values, and related design requirements, are obtained by scientific inquiry and design practices “ensuring processes of transparent critical discussion between (moral) agents” (see [13], p. 635). This social epistemological approach to objective science and social knowledge production is meant to counteract pragmatically the bias typically associated with the losses of value-freedom/neutrality by the traditional approaches to applied science (see [86], pp. 1–13 and Ch.14), and the well-known shortcomings of sociological studies of technology [79], without renouncing an ethical understanding or a moral foundation. Although an in-depth discussion on the epistemic value of deliberative democracy exceeds the scope of this study (on that, see [78]), we agree on the import of real democratic deliberations in selecting values for science and technological design and, similarly, the relevance of liberal democratic values for the ethical design of AI and digital technologies (on that, see also Bozdag & van den Hoven, [20]).

The normative view defended in this study (underlying a procedural-deliberative VSD) is not merely plausible but become also highly practicable precisely, by further supposing methodical stakeholder engagement (i.e., a multi-stakeholder approach18) and close interdisciplinary teamwork. Notoriously, both aspects were (until now) pending tasks in the ethical design of AI and technological-digital innovation, since in most of “ethics-first” methods, stakeholders’ involvement in establishing design values was absent or seldom successful (see [43]). Another clear advancement introduced by the VSD of the SOD mHealth app is indeed, that a multidisciplinary expert team (i.e., philosophers, social scientists, AI/data scientists, data protection, and communication specialists) has been actively involved in its creation from the beginning and through all phases of VSD (more details in the next sections). This strategy has avoided the usual division of labor between ethicists, social scientists, technologists, and AI experts, that is standard also in VSD (e.g., [136]), and thus, many ethical, epistemic and, pragmatic bias have been anticipated. That is to say, either the lack of technical understanding of ethicists and social scientists or the scarce concern of AI/technical experts for ethical problems and social consequences arising from the implementation and use of certain AI and technical-digital innovation in concrete settings have been avoided. In this way, the shortcomings of standard approaches to ethical AI and applied AI deriving by the lack of either ethical or technical understanding, and often resulting in mere a posteriori critical assessments (by ethicists) of existing AI and technologies on the basis of their uses and misuses or in unethical technologies (not based on explicit ethical reflexion and values), are circumvented. 
This is done by applying genuine co-design practices in which ethical–social bias and misuses are prevented from the outset while preserving technical efficiency. The upshot is a functional, AI-based technology (the SOD app) that is highly sensitive to agents’ values and the context of use, since the ethical design procedure meaningfully incorporates the inputs of experts and non-experts, end users, and other potential recipients. Design values are obtained by accurate empirical investigations into the process of social value construction in concrete situations. Hence, the AI and digital innovation generated can be ethically and socially relevant and democratic, while the underlying technological design process remains rigorous but also open-ended, flexible, versatile, and largely re-applicable to other technical-digital products directed to different recipients or other settings (on that, see [31], pp. 2658–59).

To conclude, the participatory VSD of the SOD mHealth app, which is rooted in a procedural VSD entailing practical applications of participatory-deliberative methods, is the conceptual-pragmatic strategy used to cooperatively involve end users, the public, and different expertise in the production of ethical AI and technological-digital innovation. Thanks to this alternative method, the gap between theory and practice, rightly depicted by recent critical appraisals as inherent to mainstream AI ethics (and to the emergent fields of AI ethics by design and VSD [61, 89]), and so harmful for applied AI studies, has been addressed and closed by genuine inter-/cross-disciplinary research and collaboration among different expertise. Moreover, the citizen science perspective implies the application of empirical techniques to scrutinize the values, needs, and vested interests of different stakeholders in relation to the AI-based tool under construction. The next sections report the participatory VSD of the SOD app (all three phases) and further elucidate how the positive effects for explicability, (perceived) fairness, or public trust can realistically emerge from the enhanced democracy of the ethical design procedure implemented.

The VSD of the SOD mHealth app: the practice

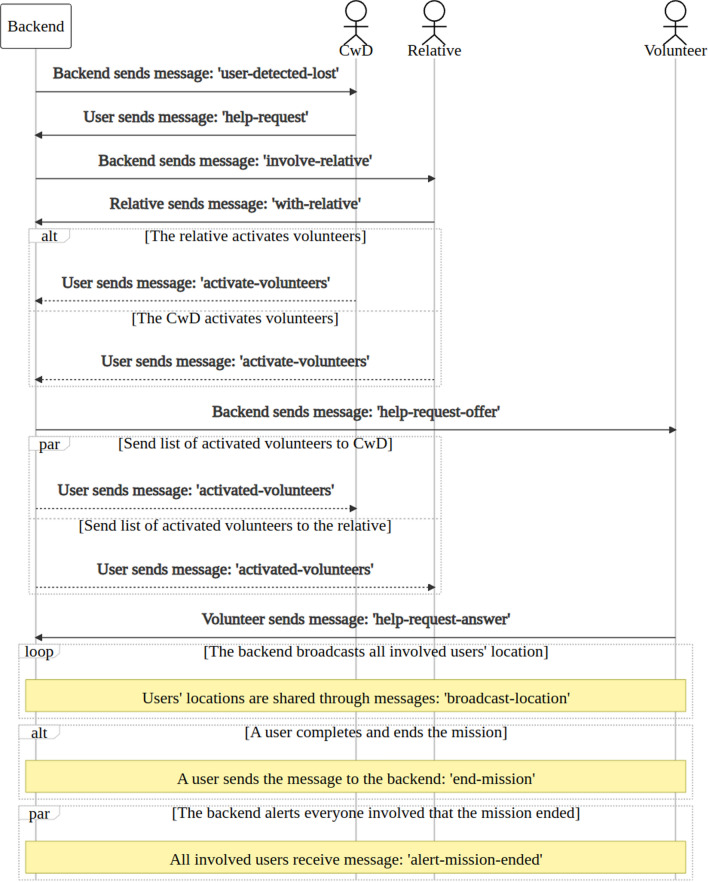

The main phases of the participatory VSD of the SOD mHealth app (see the chart below) substantially rely on the inclusive and participative tenets of the normative view (i.e., a procedural-deliberative VSD) seen in the former section. Except for an initial conceptualization by the SDU/expert team (in collaboration with institutional promoters from the municipality of Nyborg), the VSD has been characterized by a co-design process substantially based on (focus group-based) deliberative workshops. The knowledge on values obtained has informed the development of a prototype of the user interfaces of the app and (to a lesser extent) has provided insights to refine the tracking algorithms. While the rescue protocol has been discussed and agreed with all groups of participants (see Appendix 1), the workshops have also been decisive in establishing which kind of information could be exchanged among the users (what info, how, and when) to legitimately trade off between personal privacy and the functionality of the app.

| Design and test phases | Period | Tasks and strategies |
|---|---|---|
| VSD preliminary phase | Spring 2020 | The focus group design and a stakeholder analysis aimed at establishing direct and indirect stakeholders are carried out (also to elaborate a feasible recruitment strategy) |
| VSD conceptual-empirical phase | August 2020 | Focus groups with stakeholders aim to: (1) disclose stakeholders’ values, needs, and expectations on the SOD tracking app; (2) figure out a suitable implementation strategy (based on stakeholders’ needs and values); (3) get insights regarding future development of contentious surveillance technology (to find out how to handle privacy issues and reduce controversies by increasing social utility) |
| VSD technical phase | Fall 2020/2021 | The collected data have been used to develop an early draft/prototype of the user interfaces and the tracking algorithms and, likewise, to establish an agreed “rescue protocol” by prioritizing the main stakeholders’ values and needs (I iteration). Numerous user tests have been arranged to provide feedback on the prototype of the SOD app, mainly in view of refining the user interfaces and communication tools, including the precise text and wording to display to the end users (II iteration) |
| Communication tests | April 2021 | The feedback obtained from communication experts had the aim of improving the app interfaces concerning the displayed text, the position of buttons, and the choice of colors |
| Implementation tests | June 2021 | To register end users' impressions, an early prototype of the SOD app has been shown to representatives of the three main stakeholder groups: (a) CwD (woman, retired, 64 years old, not familiar with apps); (b) next of kin (woman, 42 years old, familiar with apps), and (c) potential volunteer (woman, 24 years old, student, technology savvy). The same tests have been performed by involving institutional promoters from the municipality of Nyborg |
| Installation tests | January 2022 | Three CwD, two promoters from the municipality of Nyborg, and a social worker (dementia coordinator) from the local dementia centre in Nyborg have been invited to test the usability of the user interfaces of the app. The emphasis was on the “easiness to use” for people with cognitive problems |
| Simulation tests | On-going | Selected informed participants from the main stakeholder groups are involved in “rescue missions” under controlled conditions |
| Detection algorithms tests | Planned | Real-life data on the daily routines of CwD will be collected and used for tailoring, selecting, fine-tuning, and testing alternative “wandering” detection algorithms. A restricted group of informed CwD will download and use the SOD app for a certain period (either with or without the automatic detection function on). The aim is to gather real data on people’s daily routines, so that the original tracking algorithms, currently using synthetic data, will be adjusted accordingly (III iteration) |
| Continuous assessment tests | Planned | Feedback from real users downloading the SOD app, and having no previous contact with the SOD project and the SDU team, is expected. This final step will help to fix bugs, further improve communication tools, and carry out the last functionality adjustments |

The conceptual-empirical phases of VSD

As anticipated, in the SOD project, a multidisciplinary team is involved from the outset in the participatory VSD of the SOD mHealth app: data scientists (7), social scientists/experimental philosophers (2), political scientists (1), data security experts (1), and communication experts (3). In contrast to usual VSD practices applying predetermined ethical frameworks, the first two phases of VSD (conceptual and empirical phases) have been carried out simultaneously so that the values informing technological design practices could be elicited empirically by involving local stakeholders. In the preliminary stage of VSD (Spring 2020), a stakeholder analysis has been carried out and six categories of direct and indirect stakeholders with different roles and degrees of involvement in the technological design have been identified by the SDU/expert team as follows: (a) institutional promoters (4); (b) CwD (6); (c) nurses, caregivers, and social workers, so-called dementia coordinators (DC) (4 + 2); (d) next of kin of CwD (6); (e) potential volunteers from local associations for dementia and elderly care (5 + 7); (f) GP doctors (2). Afterward (June 2020), preliminary consultations took place involving members of the SDU/expert team, institutional promoters of the SOD initiative from the municipality of Nyborg, and social workers/DC from the Tårnparken local dementia centre. In this same period, deliberative workshops engaging all stakeholder groups above have been planned and a recruitment strategy has been arranged by the SDU/expert team. The initial aim was not only to inform the technical development of the app but also to promote its implementation at the societal level (in the Danish municipality of Nyborg).
The consultation with medical experts (2 GP doctors frequently working with dementia patients) took place online at the beginning of July 2020.19 The main direct and indirect stakeholders (by group) and their respective degree of involvement in the VSD of the SOD app can be summarized as follows (see Table 1).

Table 1.

Overview of participants and expected contribution to the conceptual-cum-empirical phase of VSD

| Main actors (direct and indirect stakeholders) | Methods applied and n. of participants | Role and contribution to the SOD app design | Stakeholders’ degree of involvement in VSD |
|---|---|---|---|
| Group A: promoters from the municipality of Nyborg, leaders of local dementia centre, and SDU expert team | 2 focus groups: Group 1 (10 June 2020): 4 promoters of “smart city” initiative (at Nyborg kommune) + SDU expert team; Group 2 (19 June 2020): 2 promoters of “smart cities” initiative + SDU expert team + leaders of Tårnparken dementia centre at Nyborg | Set up goals and initiatives related to the SOD project; scheduling the development phases of the SOD app; drafting a possible implementation strategy (to discuss with the other stakeholders) | Indirect stakeholders (but powerful): moderate influence on the technological design and the establishment of a “rescue” protocol |
| Group B: citizens with dementia (=CwD) | 2 focus groups of 4 people each (3 CwD + 1 dementia coordinator as facilitator) | Most important stakeholders of the SOD tracking app, so they were engaged to uncover/grasp their values, needs, and expectations on the app and its future developments (also regarding surveillance technologies more generally) | Direct, end users of the SOD app: their inputs had rather significant influence on the technological design phase |
| Group C: Staff at local dementia centre (dementia coordinators, nurses, and caregivers) | 1 focus group interview with 4 staff members | Asked on patterns of dementia relevant for the design of the SOD app; asked for help in engaging CwD and their relatives (connected to the dementia centre) | Indirect (but very interested) stakeholders: moderate influence on the technological design phase |
| Group D: next of kin (NOK) | 1 focus group with 6 people (3 men and 3 women) | Asked/reported on CwD’s ability to use and take advantage of the SOD app; helped in designing the main functions of the app and envisioning future developments (also regarding surveillance technologies) | Direct, end users of the SOD app: significant influence on the technological design phase and the establishment of a “rescue” protocol |
| Group E: (potential) Volunteers | 2 focus groups: Group 1: 5 people (3 men + 2 women); Group 2: 7 people (2 men + 5 women) | Asked about the citizenry’s willingness to volunteer with vulnerable social groups like CwD; asked about their availability to attend courses and training and to get certification to be accepted as volunteers in the SOD app/project | Direct, end users of the SOD app: but limited influence on the technological design (presupposed as technically skilled) |
| Group F: GP Doctors | Expert consultation (online) with 2 GP doctors | Asked on patterns of dementia as a disease (loss of memory, wandering); gave advice on the SOD project/app communication strategy and implementation | External consultancy: limited influence on the technological design phase |

Total: 41 stakeholders

26 Direct stakeholders

13 Indirect stakeholders (and 2 external consultants)

Recruitment and (value) eliciting strategy

Due to the COVID-19 pandemic (both the lockdown and the rules restricting the number of people (5–10) allowed at in-person meetings), and to guarantee the safest possible environment, participants have been recruited by relying on the patients–relatives network of the local dementia centre. First, CwD with mild-to-moderate forms of dementia and their relatives have been invited to participate in the planned workshops by their dementia coordinators (DC). When a citizen in Nyborg is diagnosed with dementia, he/she is offered the chance to become associated with a DC, who will offer aid, assistance, and supervision to him/her and his/her next of kin. The involvement of DC has been crucial for recruiting participants from this sensitive target group. DC have in-depth knowledge of local CwD, their stage of illness, and their actual ability to contribute to the technological design of the app, and their participation has increased trust in the SOD project and the utility of the SOD app. Second, representatives of DC, nurses, and caregivers from the staff at the local dementia centre participated on the basis of the work shifts scheduled on the days when the workshops were carried out. Regarding potential volunteers, and again due to COVID-19 restrictions, the prospect of inviting the whole local community via newspapers or social media has been ruled out. Yet, to ensure a comprehensive representation of different social groups (e.g., young people, seniors, the disabled, and people already volunteering with the elderly), an invitation in official forums targeting these categories and related associations has been sent out by the municipality of Nyborg. The participants in the volunteer workshops responded spontaneously to this newsletter.
Although at this point of the research they were not asked to commit to becoming volunteers in the SOD project, they agreed to provide feedback on the SOD app concept and to share their impressions and opinions on both the initial design ideas and controversial surveillance technologies more generally. Specifically, the participants in the volunteers’ workshops were: (first group) two members of the local “youth council” (translated from Danish), two volunteers at the local care centre “Svanedammens Venner”, and three persons often volunteering in activities for elderly people at the local “Venner Club”; and (second group) two members of the local “senior council” (translated from Danish), two members of the local “disability council” (translated from Danish), and two members of the local “Alzheimerforeningen” (Alzheimer Association) in the municipality of Nyborg.

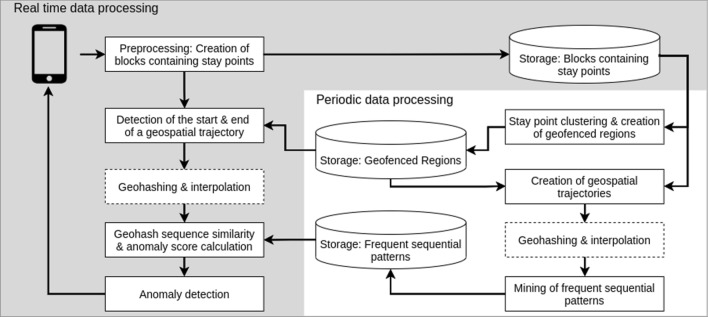

Before every focus group discussion, an explanation (about 15–20 min) of the SOD project’s main goals and the technical working of the SOD app has been provided to all group participants by the SDU expert team: a data scientist managing and co-ordinating the various parts of the SOD project and a “knowledge facilitator” conducting the workshops in Danish. It has been explained that the only prerequisites for downloading and using the app are the ability to use a smartphone and some familiarity with the use of apps (so, a wide public of potential users is estimated). At that time, a prototype of the app to show to participants was not available. This was deliberate, since the main purpose of the focus groups has been to uncover different stakeholders’ attitudes toward surveillance technologies, such as the SOD tracking app, and to elicit participants’ values, needs, and expectations to inform the subsequent technical phase of VSD (see Sect. 5). Even so, a set of initial design proposals mainly concerning the help function, including the user interfaces, has been discussed with participants. With a view to developing not only the SOD mHealth app but also better AI-based surveillance technologies in the future, participants have been invited to share their impressions on the main functions of the app, including the tracking device (based on algorithms). The prototype has been developed by concentrating on participants’ inputs (VSD first iteration), and further feedback has been obtained in the following user tests with selected participants (VSD second iteration). Accordingly, SDU data scientists refined the initial design ideas and related technological solutions to adjust them as much as possible to the features, values, and needs of the main end users (CwD) and the other potential recipients. As shown in the chart above (this paper, Sect. 4), a third iteration of VSD is planned.
During the workshops, participants belonging to the main stakeholder group (CwD) have been invited to become leading actors in the future steps of the SOD project and the app development. Indeed, further upgrades of the algorithms detecting wandering behaviors (see [6]) entail the use of real data collected on people’s daily routines, as a substitute for the synthetic data currently used (more details in Sect. 5). Participants will download the app on their smartphones and will be tracked; the data collected on their daily routes will be stored on a server computer (at SDU) and used to customize the detection algorithms to make them more sensitive to the features of real agents and the specific context of use.

Focus groups (methodological issues)

The data collection informing the VSD of the SOD app has been carried out on 18–20 August 2020 by means of qualitative (semi-structured) focus group interviews (see [10, 11, 81]) lasting between 60 and 90 min, which were held at Tårnparken dementia centre in Nyborg. In keeping with the so-called interactive design popular in human–technology interaction (see [118], Ch. 2 and 7), a qualitative approach has been chosen to frame the ethical design (the VSD of the SOD app) and to extrapolate the information about values needed to make technical choices and develop technical solutions accordingly. A qualitative research design is often considered crucial to inform participatory design practices (see [119], Ch. 1, 5, 6, and 7) involving stakeholders, end users, and potential recipients of AI and technological-digital innovation. In fact, “information rich” knowledge and, likewise, “process-related meanings” (see [34, 93]) are required to properly inform technologists and data scientists on the fundamental patterns of agents and contexts, including their plural values and specific needs. This applied strategy has been crucial to extrapolate a coherent set of values meaningfully obtained through the dialogic interaction among participants in the various focus groups with different stakeholders. Once embodied, such values are expected to deliver a technological-digital product (the SOD app) truly aligned to end users and the concrete context of use.

When compared to individual interviews, focus group-based workshops are certainly more difficult to arrange because of the need to find agreement on essential practicalities (time, date, location, and so on). Nonetheless, this participatory-deliberative methodology has been preferred because of its well-known ability to create significant group dynamics and facilitate the collection of genuine social/group knowledge [10, 11, 73]. This approach was considered better suited to inform the subsequent technological phase of VSD in a genuine citizen science perspective. As seen in Sect. 3, the adoption of a procedural-deliberative VSD as normative view implies the use of participatory-deliberative methods, which allow participants to directly challenge and trade off their values, needs, or vested interests to achieve authentic collective agreements on aims and desiderata regarding the AI and digital technology under construction. By contrast, popular survey-based techniques rest on an alternative working rationale in which individual views, also on values, are merely sum-aggregated to get a collective standpoint20 (namely, the hierarchy of values obtained by surveys is not actually discussed by group participants, who merely fill in a questionnaire in isolation). As acknowledged, respondents are more accurate in their answers when placed in a dialogic situation, since similar scenarios permit an immediate, intersubjective validation of values and aims among respondents or between respondents and the researcher (see [77], pp. 242–243). Along the same lines, individual interviews have also been considered inadequate to carry out the main design tasks underlying the SOD app, precisely because of the absence of interpersonal confrontation and interaction among group participants when selecting design values and identifying the main goals of the SOD app in relation to the different needs and expectations of end users and other potential recipients.

More importantly, technological design practices demand meaning-rich discursive data, which can barely be obtained by means of the rigid structure of a survey. Also, surveys are considered of doubtful applicability in the case of cognitively impaired people who might be unable to fill in a questionnaire: this is the main reason why large population studies targeting people with dementia regularly focus on relatives or nurses and doctors on their behalf [42].21 In the specific case of the deliberative workshops informing the VSD of the SOD app, both the knowledge facilitator and the data science expert involved in all workshops could provide technical explanations and additional background knowledge, both on the functioning of the app and on the implementation process, to all participants, including CwD, for whom participation is more challenging. Thanks to this strategy, value conflicts and value dilemmas have been instantly addressed and solved to envision what specific ethical principles or moral standards (correlated to specific technical solutions) could be acceptable for all stakeholder groups. When focus group-based deliberative workshops are applied, there can be genuine co-creation of value and thus co-design, co-development, and co-production of AI and technological-digital innovation based on truly social and democratic values. Therefore, the adoption of group-based deliberative techniques is pivotal to give concrete and unambiguous indications to experts/data scientists on specific embodied technical-digital solutions adjusted to the (self-chosen) values of all stakeholder groups involved. The result is a digital product like the SOD mHealth app that, once implemented, can really enhance the wellbeing and safety of the most vulnerable final users (CwD), whose values and needs have been prioritized by design.
Explicitly, the technical functions of the SOD app have been conceived with the main purpose of removing the obstacles caused by dementia on vulnerable citizens (CwD) and improving their wellbeing and quality of life (also of their families) through civic solidarity and cooperation among residents (of Nyborg).

In practice, and due to the specific objectives of this study (technological design of a digital product), a traditional qualitative inquiry, either concentrating on the demographics of participants (except for very general features like gender or estimated age) or specifying the forms of dementia suffered by participants, has been considered redundant. Due to the close collaboration with the local dementia centre (and the municipality), we were confident enough about the relevance of participants for our objectives and, also to foster participation (of vulnerable CwD), we decided to keep the recruiting process as unintrusive as possible. Yet, an informed consent form and explicit authorization for data use reporting their essential demographic information have been signed by all participants. The data on values, needs, and expectations of the diverse stakeholders that inform the VSD of the SOD mHealth app have been extrapolated by means of a qualitative content analysis focusing on meanings (see [63, 111]). The group sessions have been video and audio recorded and transcribed by a student assistant, and then coded manually by the two SDU team experts in social science and experimental philosophy. The raw data have been analyzed according to the main coding categories below to identify:

the values and needs of users, and thus the expected utility and social impact of the app (for different stakeholder groups);

the behavioral aspects of dementia (e.g., disorientation and loss of memory) relevant to the design of the app and likewise, all stakeholder groups' attitudes on tracking devices and surveillance technologies targeting people with dementia;

the role of volunteers and degree of acceptance of their contribution by other stakeholders (mainly, CwD and relatives) with an emphasis on (a) their motivations for volunteering with CwD, (b) previous experience (if any) in volunteering with CwD, and (c) potential volunteers’ willingness to receive training to properly do so;

the suggestions of different stakeholder groups on how to deal with the controversial issues inherent to surveillance technologies in the SOD mHealth app and tracking devices in general; namely, how to make these technologies more sensitive to end users’ needs and values and how to solve the trade-off between privacy and safety by enhancing individual and social utility.

These broad coding categories have been chosen to represent, and concentrate on, the information immediately relevant to the development of the main components of the app, such as the help component (see Sect. 2), including the user interfaces and the detection algorithms (more details in Sect. 5), and to get participants’ opinions regarding the main purpose of the SOD app (i.e., enhancing safety and wellbeing of vulnerable individuals by strengthening social solidarity between citizens). Moreover, real-time written notes on participants’ suggestions for further improving the app have been taken during the various workshops by the data scientist responsible for the SOD project, who introduced the original concept behind the SOD app during all the sessions (the suggestions not incorporated in the SOD app, mainly because of budget constraints, are reported in the conclusions). It is important to note that although we started with a deductive, concept-driven coding approach (i.e., a predefined set of coding categories, see [17]), the manual coding has allowed us to be more flexible and to take into account the extra information that emerged during the sessions (e.g., emotions of participants, their desires, and the like), which does not fall into the predefined categories above but is still relevant for the VSD of the SOD app.

Values (by group) underlying the SOD mHealth app

Owing to space limitations, only the main empirical findings from the focus groups with stakeholders are reported here, with a focus on their explicit impact on the main technical solutions adopted in the SOD mHealth app.

First of all, increasing safety and safe mobility, together with the personal wellbeing of CwD, are the values with the highest priority for all groups of participants. Yet, considerable importance has been given to the purpose of relieving the burden caused by dementia on relatives and close friends (who have declared that they feel alone in this duty). These values are fulfilled in the SOD app concept by designing an AI-based tracking device targeting CwD that involves the local community in providing help as soon as they get lost. In the words of promoters from the municipality of Nyborg, in the SOD mHealth app/project “the focus are CwD first (…) co-creating together with other citizens a meaningful life, value and health for people (…) in order to live a worthy aging”. As usual in citizen science projects, the main goal of “smart cities” initiatives is to develop technological-digital solutions that can foster the local community’s active participation in activities and public services tailored to the special needs of vulnerable citizens; here, social inclusion and the empowerment of disadvantaged individuals, in view of enhancements in wellbeing and quality of life for them and their families, are the aims/values strongly pursued.

Mainly, DC and staff at the local dementia centre have been questioned on the patterns of dementia as a disease and encouraged to share their opinion on the expected ability of CwD to use apps and technology in general (on the basis of their experience in working with them). To provide precise information to data scientists about how to make the app more functional and usable, the same inquiry has been directed to CwD and their next of kin (NOK) as well. In brief, although CwD seem not very familiar with technology and apps, all of those participating in the workshops declared that they could count on the help of relatives and friends for downloading or activating the SOD mHealth app (when ready). Even if not very proficient with technology and apps, CwD are the main recipients and end users of the SOD app concept; thus, this category of stakeholders could not be excluded from the VSD. In keeping with the participatory rationale behind the SOD app/project, and to shape the functionalities of the embodied tool in ways that circumvent, as much as possible, the cognitive or practical difficulties of people living with dementia (CwD), their participation has been considered fundamental from both an ethical and a technical-practical standpoint. As seen before, the co-design approach has been considered central to developing a prototype that meaningfully expresses the values and special needs of this challenging category of users and to promoting the app usage based on its practical utility (likely beyond the participants in this micro-study).

Consistently, promoting the actual usage of the SOD app, by explaining its functioning and benefits to all of its main recipients, has been seen as another fundamental research task within the SOD project. The co-design approach adopted seemed pivotal for a good design of AI and technological-digital innovation targeting vulnerable individuals; likewise, the direct involvement of CwD from the outset of the project has been very important in view of achieving technical efficiency (a vital scientific value) and thus in promoting the acceptance of the app at the societal level (by stressing its helpfulness). One of the main concerns of the SDU expert team was, in fact, whether these users would welcome such a technology or whether factors such as difficult usage, losses of privacy, and other reasons for AI aversion would dominate and lead to the rejection of the SOD app. Yet, the positive reception that the SOD app received in the workshops seemed mainly motivated by the wish of CwD to relieve the burden on their relatives and, equally, the hope of preserving their freedom of movement (more details below). Visibly, the interaction between researchers and end users in the participatory design workshops has been decisive for communicating the positive properties of the tool, and it might help to outweigh the risk of rejection often implicit in similar surveillance technologies. The workshops have also strived to contribute to the creation of a positive culture towards the SOD app (and AI in general) in view of fostering its actual usage by participants (and beyond).

The CwD of the local dementia centre in Nyborg (including CwD participating in these interviews) will have the privilege of being the first to use the app (once ready). Thus, the differences between the tracking device currently in use at the centre (a very basic surveillance tool) and the SOD app (an AI-based system able to automatically detect wandering and trigger an alert) have been elucidated to DC and nurses of the centre who assist CwD on a regular basis. In view of further enhancing the usability (for end users) and efficacy of the app, and to indicate some possible future developments, the main recommendation coming from DC, but common to all groups, was to have the tracking device incorporated in a smartwatch instead of a smartphone (due to budget constraints, this option has been ruled out). Other suggestions for improving the SOD app coming from the DC group have been: (1) to give CwD the possibility to share a caption/headline with their relatives before leaving home (e.g., “I am leaving home” or “I am going to the supermarket”); (2) to add a function asking CwD “are you lost?” and giving them the possibility to answer “yes” or “no”. While the second option has been implemented as default behavior under the automatic detection function, the first option is planned but not yet available. It will be implemented in the app as a voluntary automatic feature that is able to learn stay places (i.e., home, office, the dementia centre, and the like) and, once activated, will notify relatives when these places are left. Due to obvious privacy concerns, this functionality must be activated deliberately (by CwD).

Along the same lines, while DC and relatives would have preferred that the activation/deactivation functions of the app not be fully available to CwD (to prevent the tracking functions from being deactivated on purpose), the SOD app gives priority to the wish of CwD to maintain acceptable degrees of autonomy, self-determination and control over their own choices (“being in charge”). That is, CwD are entitled to declare themselves inactive. Their approval is required to activate the tracking functions and, likewise, when the automatic detection alert is triggered, CwD are asked to clarify whether they are actually lost: a text message asks “are you lost?” and they must answer “yes” or “no” within a few moments. This happens before volunteer citizens are activated (in Danish they are called “vejviser”, which means “cicerones”) and a “rescue mission” is started, whereby the actual position/location of CwD becomes visible on a map on the smartphones of both relatives and the three nearest volunteers. This technical decision has been taken because CwD demonstrated scarce concern for their own privacy (note 22) and a high level of self-awareness of their own condition. Hence, there is a high probability that they will keep the tracking mechanism activated when going out, since they are deeply conscious of the possibility of being, sooner or later, in need of help and assistance from a stranger (also in view of a worsening of their disease/dementia over time).

“I have dementia, so I will benefit from this too in the long run (…). Now, I have no problem in going around but I am aware that my illness means that is the way it is headed. And then, I think I would be extremely happy to have it (the app), because I like going out. Otherwise, I will be trapped at home” (CwD, age 65–70, male, no former experience with getting lost).
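The confirmation step described above (consent to tracking, the “are you lost?” prompt, then activation of the three nearest volunteers) can be sketched as follows. This is a minimal illustration under stated assumptions rather than the SOD implementation: the `Volunteer` type, the function names, and the coordinates are hypothetical, and because the text does not say what happens when no answer arrives in time, that policy is left as an explicit `escalate_on_timeout` parameter.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


@dataclass(frozen=True)
class Volunteer:
    volunteer_id: str
    lat: float
    lon: float


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearest_volunteers(volunteers: Sequence[Volunteer],
                       lat: float, lon: float, k: int = 3) -> List[Volunteer]:
    """Return the k volunteers closest to the given position."""
    return sorted(volunteers,
                  key=lambda v: haversine_m(v.lat, v.lon, lat, lon))[:k]


def volunteers_to_alert(tracking_active: bool,
                        answer: Optional[bool],
                        volunteers: Sequence[Volunteer],
                        lat: float, lon: float,
                        escalate_on_timeout: bool) -> List[str]:
    """Ids of volunteers to activate after a wandering alert.

    answer is True for "yes", False for "no", and None when no reply
    arrived in time (handled by the hypothetical escalate_on_timeout flag).
    """
    if not tracking_active:
        # Without the user's consent to tracking, no alert can be raised.
        return []
    confirmed = escalate_on_timeout if answer is None else answer
    if not confirmed:
        return []
    return [v.volunteer_id for v in nearest_volunteers(volunteers, lat, lon)]
```

Keeping the user's answer as the gate in front of `nearest_volunteers` reflects the design priority reported in the text: the position is shared only after the person concerned has had the chance to decline.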

To make CwD more aware of the safety risks when the device is inactive (if inactive, the users’ location is not received by the server computer at SDU), a reminder is sent to their devices in the form of a message at regular intervals. Additionally, to further encourage users to maintain an active status, another function allowing CwD to delete the locations visited on certain days has been included. As a result, most of them agreed on the valuable contribution of tracking devices such as the SOD app in case of wandering behaviors and declared that they would use the app once it is ready to be downloaded. The CwD involved also expressed a strong willingness to participate in future steps of the SOD project aimed at refining the tracking algorithms underlying the app, so as to increase the precision of the app in detecting wandering behaviors and its ability to look after the safety of other CwD (full details in Sect. 5).

“Well, if I can help make life better for people with dementia, I would really like to be part of that” (CwD, age 65–70, female, former experience with getting lost).
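The two mechanisms mentioned above, the periodic reminder while tracking is inactive and the deletion of locations recorded on a given day, can be illustrated with a short Python sketch. The data layout (`LocationFix`), the function names, and the reminder interval are assumptions made for illustration; the text does not specify how the SOD server stores location data or how often reminders are sent.

```python
from datetime import date, datetime, timedelta
from typing import List, Tuple

# Hypothetical record layout: (timestamp, latitude, longitude)
LocationFix = Tuple[datetime, float, float]


def delete_day(history: List[LocationFix], day: date) -> List[LocationFix]:
    """Return the history without any fix recorded on the given calendar day."""
    return [fix for fix in history if fix[0].date() != day]


def reminder_due(tracking_active: bool,
                 last_reminder: datetime,
                 now: datetime,
                 interval: timedelta) -> bool:
    """True when tracking is inactive and the reminder interval has elapsed."""
    return (not tracking_active) and (now - last_reminder) >= interval
```

For example, `delete_day(history, date(2022, 5, 1))` would drop every fix from that day while leaving the rest of the history untouched.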

It is important to remark that the positive attitudes of participants (especially CwD) towards the SOD app clearly evolved during the workshops, as additional information was provided and their initial doubts were clarified and addressed in the group discussions. For example, when CwD were told that they are allowed to enter their personal data themselves and to delete the information registered on their daily routes at any moment (both stored by the server computer at SDU), their trust in the whole project and willingness to contribute to its goals seem to have increased considerably. From the point of view of data scientists (highly concerned with both privacy and functionality), a major challenge has been to deliver an AI-based tracking tool, substantially based on continuous data flows from smartphones to other devices, that can be efficient while preventing unwanted leakage of personal data. In line with the existing regulation on data protection in Denmark (note 23), personal data of CwD are stored with their explicit consent (requested when downloading the app). Yet, on the advice of DC and relatives, the amount of personal information that CwD can provide via the SOD app has been reduced to a minimum, since CwD are known to overestimate their skills (and this can be misleading for volunteers involved in a rescue mission). Neither relatives nor CwD are allowed to know the identity of volunteers in advance (and vice versa) until the latter agree to participate in a rescue mission. In this case, the current location of volunteers is transmitted to the server system (but their location is not stored) and their personal profiles become fully available to relatives (but only once a mission is accepted). Volunteers can also write personal information in their profile as free text (similarly to a social media app) (note 24).
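The mutual visibility rule described above (profiles are disclosed in either direction only after a volunteer accepts a mission) can be expressed as a small access check. The sketch below is illustrative only: the `RescueMission` class, its fields, and the profile dictionaries are hypothetical names, not part of the SOD code base.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class RescueMission:
    """Holds profiles and releases them only to parties of an accepted mission."""
    cwd_profile: Dict[str, str]
    volunteer_profiles: Dict[str, Dict[str, str]]  # keyed by volunteer id
    accepted: Set[str] = field(default_factory=set)

    def accept(self, volunteer_id: str) -> None:
        """Record that a known volunteer has agreed to join the mission."""
        if volunteer_id not in self.volunteer_profiles:
            raise KeyError(f"unknown volunteer: {volunteer_id}")
        self.accepted.add(volunteer_id)

    def cwd_profile_for_volunteer(self, volunteer_id: str) -> Optional[Dict[str, str]]:
        """The lost person's profile, visible only to accepting volunteers."""
        return self.cwd_profile if volunteer_id in self.accepted else None

    def volunteer_profile_for_relatives(self, volunteer_id: str) -> Optional[Dict[str, str]]:
        """A volunteer's profile, visible to relatives only after acceptance."""
        if volunteer_id in self.accepted:
            return self.volunteer_profiles[volunteer_id]
        return None
```

Returning `None` before acceptance, rather than a redacted profile, keeps the default at zero disclosure, which matches the stated aim of not sharing personal data unnecessarily.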

The role of volunteers, although widely appreciated (by DC, next of kin/NOK, and CwD), has been limited in the SOD app by two different measures: (1) when downloading the app, an access code is required to become active as a volunteer. The code will be released by the municipality of Nyborg on the basis of prior training (e.g., an introductory course) or an interview testing qualifications or skills for volunteering with vulnerable individuals like CwD. Also, (2) NOK are given total control over rescue missions (i.e., the privilege to start and close missions once a safe place is reached, or to interrupt missions if they realize that a CwD is not actually lost but is simply visiting an unusual place). This policy has been adopted to respond to the doubts of both families and DC about the capacity of average citizens to deal properly with cognitively challenged people in situations like “wandering”, where they are likely to be confused and disoriented (or even violent). Potential volunteers appear to be strongly motivated by values such as social solidarity (note 25) towards disadvantaged and vulnerable individuals. Even so, they showed concern about how CwD could react when wandering and approached by a stranger and, in fact, they seemed very receptive to the possibility of receiving specialized training. Thus, the overall policy adopted in the SOD app can satisfy different stakeholders’ needs and worries (and likely encourage more people to download the app or to volunteer). To conclude, the danger of screening out too many potential volunteers has been carefully explored, and the possibility of involving volunteers gradually, by allowing them to take on more responsibility over time (e.g., a sort of reward system), has been discussed. Even if these options seemed appreciated by both relatives and DC, these proposals were considered impracticable and so were discarded. One reason is that promoting a subset of volunteers could discourage others from entering the system. Most importantly, volunteers are expected to help in every circumstance of risk: if there is only one volunteer close to the lost person and/or reacting to the request for help, he/she must go (no matter what). Thus, it has been considered detrimental to the safety of CwD (the main goal of the SOD app) to have volunteers with different degrees of responsibility and/or limited power of action.
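The two measures limiting the role of volunteers (an access code issued by the municipality, and NOK control over the mission lifecycle) can be sketched as simple permission checks. All names below (`can_activate_volunteer`, `RescueMission`, `MissionState`, the example code format) are hypothetical, and the sketch deliberately gives every activated volunteer the same status, in line with the decision not to introduce graded responsibilities.

```python
from enum import Enum
from typing import Set


class MissionState(Enum):
    OPEN = "open"
    CLOSED = "closed"            # a safe place has been reached
    INTERRUPTED = "interrupted"  # the CwD turned out not to be lost


def can_activate_volunteer(access_code: str, issued_codes: Set[str]) -> bool:
    """A volunteer becomes active only with a code issued by the municipality."""
    return access_code in issued_codes


class RescueMission:
    """Mission whose state can be changed only by the responsible NOK."""

    def __init__(self, nok_id: str) -> None:
        self.nok_id = nok_id
        self.state = MissionState.OPEN

    def _require_nok(self, actor_id: str) -> None:
        if actor_id != self.nok_id:
            raise PermissionError("only the next of kin may change the mission state")
        if self.state is not MissionState.OPEN:
            raise ValueError("mission is no longer open")

    def close(self, actor_id: str) -> None:
        """Close the mission once the CwD has reached a safe place."""
        self._require_nok(actor_id)
        self.state = MissionState.CLOSED

    def interrupt(self, actor_id: str) -> None:
        """Stop the mission because the CwD is not actually lost."""
        self._require_nok(actor_id)
        self.state = MissionState.INTERRUPTED
```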

Table 2 below outlines the full hierarchy of values (by group) extracted and how they have been embodied in the SOD mHealth app. The table does not include the specific values of the SDU team, which will be made explicit in the next section when addressing the technological phase of VSD.

Table 2.

Hierarchy of stakeholders’ values (by groups) and alignment with main technical solutions adopted in the SOD mHealth app

| Stakeholder group | Values (elicited via focus groups; hierarchy by group inferred by the participatory VSD) | (Aligned) technical solutions adopted |
|---|---|---|
| Group A: promoters from the municipality of Nyborg and SDU expert team | Increase safety (of CwD); Increase wellbeing and quality of life of vulnerable citizens by involving the whole local community; Relieve the burden caused by dementia on affected citizens and their families; Enhance agency, freedom, and self-determination of vulnerable citizens (CwD); Empower vulnerable citizens (CwD) | Overall, develop an AI-based tracking tool (in the form of a smartphone app) to assist CwD who get lost to connect with relatives, also with the help of local volunteers; Becoming active as a volunteer in the app requires an access code released by the municipality (after a training or skill test that certifies people’s ability to volunteer with challenging individuals like CwD) |
| Group B: citizens with dementia (CwD) | Keep control over decisions and daily activities; Keep a certain autonomy and self-determination; Maintain a certain independence from external help; Increase their own safety (and wellbeing); Little concern for their own privacy; Strong desire to relieve burden on families and friends | CwD are given total control over the activating/deactivating functions of the app (accessible to all users); The open consent of CwD must be requested to activate tracking functions; Once the automatic detection is alerted, CwD must confirm their “lost” status and a video call with relatives is established before activating volunteers (and making the position of CwD visible on their smartphones); CwD are allowed to delete data on their position, stored by the app, on certain days; The profiles of CwD contain little personal information and can be deleted by them at any time (this option is available to all end users) |
| Group C: staff of local dementia centre (dementia coordinators, nurses and caregivers) | Increase safety (of CwD); Promote individual wellbeing (of CwD); Protect individual freedom and self-determination (of CwD); Minimize threats to privacy (of CwD); Reduce workload on local dementia centre | Rescue functions are not directly connected to the dementia centre and its staff; In order to address privacy issues (of CwD), the dementia centre is kept as an eventual “safe place” in rescue missions (when the location is the closest to the lost person) |
| Group D: next of kin (NOK) | Improve usability of the SOD app (for CwD); Give primacy to safety over privacy (of CwD); Relieve burden and responsibility (on NOK) for the daily care of CwD; Allow freedom of movement of CwD while increasing perception of security in the relatives (via the tracking function) | NOK are assigned the privilege of co-ordinating and closing rescue missions (when a lost CwD reaches a safe place); NOK can be informed by a text message when a CwD leaves a “stay place” (home, office, dementia centre) |
| Group E: volunteers (V) | Social solidarity; Responsibility (towards CwD); Primacy to safety and wellbeing (of CwD) over privacy concerns; Desire to help in fostering independence, personal freedom, and autonomy (of CwD); Desire to help in relieving the burden on NOK; Collaborative attitude for lowering risks of adverse reactions by CwD when rescued (by taking specific training for volunteering) | The profiles of lost CwD become visible to V only when a rescue mission is accepted (so as not to share the personal data of CwD unnecessarily); V can make phone calls to NOK during rescue missions; Location data of V are not stored by the app and their personal profiles (made by them) can be deleted at any time |

The technical phase of the VSD of the SOD mHealth app

The technical information provided in this section relies substantially on the contributions by [5–7]. This content is reinterpreted here in the light of the values extracted from the conceptual–empirical phases of the participatory VSD previously described. This section aims to further elucidate how particular values have been prioritized, by design, to adopt technical-digital solutions that could satisfy first and foremost the needs of CwD: explicitly, to achieve enhancements in safety but also in personal autonomy and well-being (largely understood as the empowerment of vulnerable and disadvantaged individuals through the use of technological-digital innovation), while respecting the opinions expressed by all stakeholder groups involved in the co-design process (and complying with the regulation on the use and storage of sensitive data and addressing other data privacy concerns).

To begin with, a fair amount of research on digital health, eHealth, and mHealth applications for dementia patients and their caregivers has recently been conducted (see [23, 72, 142]). Some of the most identifiable symptoms of dementia are forgetfulness and memory loss; thus, behaviors such as “wandering” and getting lost have been considered the most probable risks to people’s safety. Accordingly, quite a few of the mHealth apps developed in recent times take the form of tracking devices monitoring the location of people with dementia (see [3, 4, 62, 64, 116, 122, 123, 137]). Among existing mHealth apps and devices with a functionality for tracking CwD’s current location, three also include an alert system for caregivers and relatives (see [62, 123, 137]). In comparison to existing tools, the peculiarity of the SOD mHealth app lies in going beyond the mere monitoring of the location of the lost person/CwD: once a wandering behavior is automatically detected, a rescue mission involving relatives and neighboring volunteers is triggered and thoroughly coordinated via its technical functionalities.