Abstract

It is estimated that 1 in 10 adults worldwide has diabetes. Diabetic foot ulcers are among the most common complications of diabetes, and they are associated with a high risk of lower-limb amputation and, as a result, reduced life expectancy. Timely detection and periodic ulcer monitoring can considerably decrease amputation rates. Recent research has demonstrated that computer vision can be used to identify foot ulcers and perform non-contact telemetry by using ulcer and tissue area segmentation. However, the applications are limited to controlled lighting conditions, and expert knowledge is required for dataset annotation. This paper reviews the latest publications on the use of artificial intelligence for ulcer area detection and segmentation. The PRISMA methodology was used to search for and select articles, and the selected articles were reviewed to collect quantitative and qualitative data. Qualitative data were used to describe the methodologies used in individual studies, while quantitative data were used for generalization in terms of dataset preparation and feature extraction. Publicly available datasets were accounted for, and methods for preprocessing, augmentation, and feature extraction were evaluated. It was concluded that public datasets can be combined to form bigger, more diverse datasets, and that the prospects of wider image preprocessing and the adoption of augmentation require further research.

Keywords: diabetic foot ulcer (DFU), burn, chronic wounds, deep learning, convolutional neural network (CNN), datasets

1. Introduction

Diabetes mellitus is a metabolic disorder resulting from a defect in insulin secretion, resistance, or both [1]. According to a 2021 estimate by the International Diabetes Federation, 537 million adults, or 1 in 10 adults worldwide, had diabetes, and an additional 541 million adults were at high risk of diabetes [2]. Diabetes results in high blood glucose levels, among other complications; these can damage blood vessels and nerves and may result in peripheral neuropathy and vascular disease. These two conditions are associated with foot ulcer development. It is estimated that diabetic foot ulcers (DFUs) will develop in up to 25% of diabetes patients [3]. Patients with DFUs have a high risk of amputation, which was found to be followed by death within 5 years in 50% of patients [4]. Periodic checks help prevent lower-limb amputations and even foot ulceration, but they create a large burden for the healthcare system. This burden can be reduced by employing computer vision technologies based on deep learning (DL) for ulcer diagnosis and monitoring without increasing the need for human resources.

The creation of the ImageNet dataset [5] and the adoption of graphics processing units (GPUs) led to the rapid development of deep learning algorithms and their application, including in the field of computer vision. Computer vision has been used in medical imaging for several decades, but the adoption of deep learning technologies has fostered its application for multi-modal image processing in other, more complex medical domains [6]. Computer vision technologies based on machine learning, including deep learning, have been applied for wound assessment, and multiple reviews have already been conducted. Tulloch et al. [7] found that the most popular research was related to image classification, and the reported results were only limited by the lack of training data and the labeling accuracy. Additionally, it was concluded that the application of thermography is limited by the inability to use asymmetric detection and the high equipment costs. A review dominated by shallow machine learning solutions concluded that 3D (three-dimensional) imagery and deep learning methods should be used to develop accurate pressure ulcer assessment tools [8], but the costs related to 3D imaging were not considered. Chan and Lo [9] performed a review of existing DFU monitoring systems, some of which used multiple image modalities, and concluded that, while they are useful in improving clinical care, the majority have not been reviewed to prove wound measurement accuracy. A review of ulcer measurement and diagnosis methods performed by Anisuzzaman et al. [10] concluded that datasets of multimodal data types are needed in order to apply artificial intelligence (AI) methods for clinical wound analysis. Another review of state-of-the-art (SOTA) deep learning methods for diabetic foot ulcers evaluated performance in terms of three main objectives: classification (wound, infection, and ischemia), DFU detection, and semantic segmentation. Small datasets and a lack of annotated data were named as the main challenges for the successful adoption of deep learning for DFU image analysis [11]. Similar conclusions were drawn in a wider wound-related review [12] in which different wound types were considered.

Multiple past reviews concluded that multimodal data are able to deliver better classification, detection, and semantic segmentation results in the clinical care of wounds. The lack of annotated training data was identified as the main challenge in SOTA deep learning applications. This paper seeks to review the latest applications of deep learning in diabetic foot ulcer image analysis that are applicable in the context of a home environment and can be used with a conventional mobile phone. The findings of this review will be used in the development of a home-based DFU monitoring system. It is inevitable that some of the findings overlap with those of existing reviews. This paper seeks to demonstrate that:

Research in this field can benefit from publicly available datasets.

The current research has not fully facilitated data preprocessing and augmentation.

As a result, the current review has the following contributions:

Publicly available wound datasets are accounted for.

The preprocessing techniques used in the latest research are reviewed.

The usage and techniques of data augmentation are analyzed.

Further research avenues are discussed.

This article is organized as follows. Section 2 presents the methodology used to search for and select scientific articles. The selected articles are reviewed in terms of their performance and methodology in Section 3. Section 4 lists the limitations of this review, and the article is concluded with a discussion of the findings in Section 5.

2. Methods

The search for and selection of articles were conducted by using the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) methodology [13]. The current section will explain the article search and selection process in detail.

2.1. Eligibility Criteria

Papers were selected by using the eligibility criteria defined in Table 1. Articles’ quality, availability, comparability, and methodological clarity were the key attributes considered during the definition of the eligibility criteria. The criteria included the following metrics: publishing language, paper type, full paper availability, medical domain, objective, data modality, usage of deep learning, and performance evaluation. Initially, exclusion criteria were used to filter out articles based on their titles, abstracts, and keywords. The remaining papers were read fully and further evaluated for eligibility.

Table 1.

Article eligibility criteria.

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| Published in English | |
| Journal articles | Conference proceedings, unpublished articles, and reviews |
| Full text available | Abstract or full text not available |
| Research subject of chronic wounds: | Other types of dermatological pathologies |
| Computer vision used for: | Unrelated to computer vision |
| Red, green, and blue (RGB) images were used as the main modality | Thermograms, spectroscopy, depth, and other modalities requiring special equipment were used as the main modality |
| Deep learning technologies were used | Only machine learning or statistical methods were used |
| Performance was reported with any of the following metrics: | Performance was not reported |

2.2. Article Search Process

The articles reviewed in this paper were collected by using two search strategies: a primary and a secondary search. The primary search was performed by using the following electronic databases:

Web of Science

Scopus.

The database search was conducted on 17 December 2022, and articles that had been published since 2018 were considered. This time range limited the number of search results that were returned and helped ensure that the selected articles used the latest available deep learning methods and dataset preparation protocols. The search queries contained the following information categories:

Terms for wound origin (diabetic foot, pressure, varicose, burn);

Terms for wounds (ulcer, wound, lesion);

Terms for objectives (classification, detection, segmentation, monitoring, measuring);

Terms for neural networks (artificial, convolutional, deep).

Boolean logic was used to define queries by joining term categories with a logical AND operator and joining the terms within a category with a logical OR operator; popular abbreviations (e.g., DFU for diabetic foot ulcer, CNN for convolutional neural network, etc.) were included. Some terms were truncated and given wildcard symbols in order to extend the search results (e.g., classif*, monitor*, etc.). During the search, queries were applied only to titles and abstracts.
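The query structure described above can be sketched in a few lines of Python. This is only an illustration of the AND/OR composition: the dictionary name `TERM_GROUPS`, the function `build_query`, and the quoting of multi-word terms are assumptions, and the exact query syntax accepted by Web of Science or Scopus is not reproduced here.

```python
# Term categories taken from the list above; the grouping keys are hypothetical.
TERM_GROUPS = {
    "origin": ["diabetic foot", "pressure", "varicose", "burn"],
    "wound": ["ulcer", "wound", "lesion"],
    "objective": ["classif*", "detection", "segmentation", "monitor*", "measuring"],
    "network": ["artificial", "convolutional", "deep"],
}


def build_query(groups):
    """Join terms inside a category with OR and the categories with AND."""
    clauses = []
    for terms in groups.values():
        # Quote multi-word terms so that they are treated as phrases (assumption).
        quoted = [f'"{term}"' if " " in term else term for term in terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


query = build_query(TERM_GROUPS)
print(query)
```

Keeping the term categories in a single structure makes it easy to add abbreviations such as DFU or CNN to the relevant category without touching the composition logic.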

During the secondary search, the references found in articles from the primary search were reviewed and manually included based on their relevance according to the eligibility criteria. Similar review articles [7,8,9,10,11,12] were additionally consulted for references, and these references were also included in the secondary search pool.

2.3. Selection Process

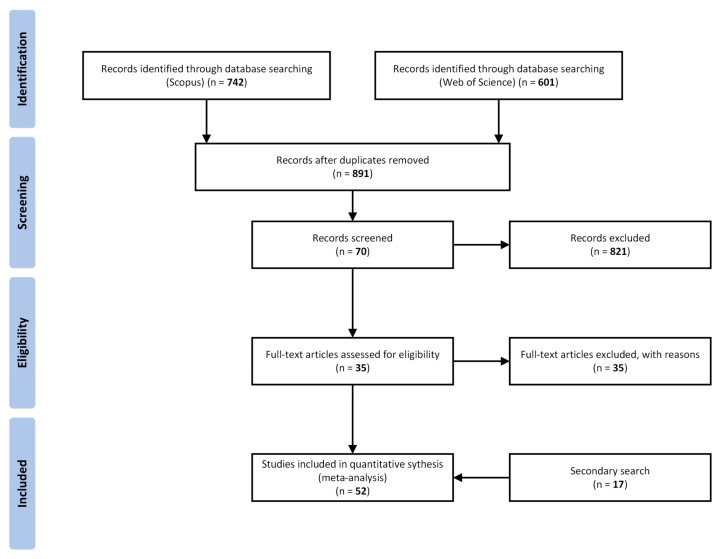

The selection was performed by the main author. The results from the primary search were imported into Microsoft Excel by exporting a CSV file from the search databases. The imported information included the title, authors, publication date, article type, where the article was published, the full abstract, and keywords. Duplicates were removed based on the title by using a standard Excel function. Non-journal and non-English articles were filtered out. The titles, abstracts, and keywords of the remaining articles were screened by using the criteria presented in Table 1. The remaining articles were read fully to exclude those that did not meet the set criteria. Forward snowballing [14] and a review of additional reference lists in other review articles resulted in an additional seventeen articles that passed the eligibility verification and were added to the final article set. The overall article selection procedure is presented in a PRISMA chart in Figure 1.

Figure 1.

PRISMA flow diagram for article selection.
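The title-based duplicate removal described in this section was performed with a standard Excel function. A comparable step can be sketched in Python as follows; the function name `deduplicate_by_title`, the `title` field name, and the normalization of case and whitespace are assumptions made for illustration and were not part of the reported procedure.

```python
def deduplicate_by_title(records):
    """Keep the first record for every title, ignoring case and extra spaces."""
    seen = set()
    unique = []
    for record in records:
        # Normalize the title so that trivial formatting differences do not
        # hide duplicates exported from different databases (assumption).
        key = " ".join(record["title"].lower().split())
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical records standing in for rows of the exported CSV files.
records = [
    {"title": "Wound Segmentation with CNNs", "source": "Scopus"},
    {"title": "wound  segmentation with CNNs", "source": "Web of Science"},
    {"title": "Burn Depth Classification", "source": "Scopus"},
]
unique_records = deduplicate_by_title(records)
```

Matching on titles alone can miss duplicates whose titles differ between databases, so a manual check of near-identical titles may still be needed.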

2.4. Data Extraction

The aims that were set for this review dictated the data type to be extracted. The online databases provided some metadata related to the articles, but in order to quantitatively evaluate them, the following data were extracted during full reading of the articles: the objective, subject, classes, methodology, initial sample count, training sample count, preprocessing and augmentation techniques, and deep learning methods that were applied. To avoid missing important information, the data extraction was independently performed by the first two authors. Unfortunately, not all articles specified the required data, and as a result, the following assumptions about the missing information were made:

Training sample count—the initial sample count was considered for the evaluation of the training dataset size, and the training sample count is not listed here.

Preprocessing technique usage—it was considered that no preprocessing techniques were used.

Augmentation technique usage—it was considered that no augmentation techniques were used.

2.5. Data Synthesis and Analysis

For a performance comparison, the collected data were split based on the objective into three categories: classification, object detection, and semantic segmentation. Then, the data were ordered based on the publication year and author. This segregation was used to provide an overview of all collected articles and to describe the best articles in terms of performance metrics.

Analyses of the datasets, preprocessing and augmentation techniques, and backbone popularity and usage were conducted on the whole set of articles.

3. Results

The search in the Scopus and Web of Science databases returned 1343 articles. The articles’ titles were checked to remove duplicates, which resulted in 891 original articles. The titles, abstracts, and keywords of these articles were evaluated against the eligibility criteria (Table 1). For the remaining 70 articles, the same criteria were used to evaluate their full texts. Finally, 35 articles were selected, and an additional 17 were added as a result of forward snowballing and a review of the references in similar review papers. The resulting set of articles was further reviewed to extract the quantitative and qualitative data that were analyzed. This section provides the results of an analysis of the selected articles.

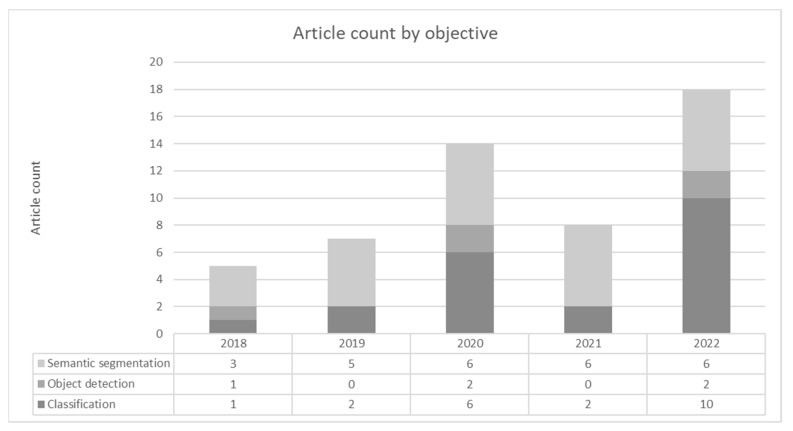

The distribution of articles based on the date and objective is provided in Figure 2. It can be observed that interest in this research field has been increasing for the last 5 years (with the exception of 2021). The distribution of objectives shows that classification and semantic segmentation were researched the most.

Figure 2.

Distribution of search results by year and objective.

3.1. Performance Metrics

Multiple standard performance metrics are used for model evaluation and for benchmarking against the work of other authors. This section provides details about the most commonly used metrics and their derivations. Only metrics that were used in five or more articles are described, and only these measurements are presented in the tables listing the reviewed articles.

Model performance is quantified by applying a threshold to the model prediction. For classification, a threshold is directly applied to the model output. For object detection and semantic segmentation, the model prediction is evaluated by using the intersection over union ($IoU$), and a threshold is applied to the result. In object detection, the result is a bounding box that contains a region of interest (ROI), and for semantic segmentation, it is a group of image pixels that contain the same semantic information. As shown below, the $IoU$ is calculated by dividing the number of image pixels ($N$) that overlap in the predicted area ($A_{p}$) and the ground-truth area ($A_{gt}$) by the number of image pixels that are in either of the areas $A_{p}$ and $A_{gt}$.

$$IoU = \frac{N(A_{p} \cap A_{gt})}{N(A_{p} \cup A_{gt})} \qquad (1)$$
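The intersection over union calculation can be illustrated with a minimal Python sketch for binary segmentation masks. The function name `iou`, the representation of masks as nested lists of 0/1 values, and the convention of returning 0.0 when both masks are empty are assumptions made for this example.

```python
def iou(pred_mask, gt_mask):
    """Intersection over union of two equally sized binary masks."""
    intersection = 0
    union = 0
    for pred_row, gt_row in zip(pred_mask, gt_mask):
        for p, g in zip(pred_row, gt_row):
            if p and g:
                intersection += 1  # pixel present in both areas
            if p or g:
                union += 1  # pixel present in at least one area
    # Returning 0.0 for two empty masks is a convention chosen here (assumption).
    return intersection / union if union else 0.0


predicted = [
    [0, 1, 1],
    [0, 1, 1],
    [0, 0, 0],
]
ground_truth = [
    [0, 0, 1],
    [0, 1, 1],
    [0, 0, 1],
]
score = iou(predicted, ground_truth)  # 3 shared pixels out of 5 covered pixels
is_match = score >= 0.5  # example threshold, not a value taken from the reviewed articles
```

The same ratio applies to bounding boxes in object detection if the boxes are rasterized into masks or their overlapping area is computed analytically.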

Thresholding results are used to create a confusion matrix and are grouped into four self-explanatory categories: true positive ($TP$), true negative ($TN$), false positive ($FP$), and false negative ($FN$). Confusion matrices help evaluate a model’s performance and make better decisions for fine-tuning [15]. A confusion matrix is summarized by using the calculated metrics (see Table 2) to specify the model performance by using normalized units. It should be noted that some articles also used the $mIoU$ performance measure, which is calculated as the mean of the $IoU$ of all images.

Table 2.

| Metric | Formula | Description |
|---|---|---|
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ | Correct prediction ratio |
| Sensitivity | $\frac{TP}{TP + FN}$ | Fraction of actual positives that were correctly predicted |
| Specificity | $\frac{TN}{TN + FP}$ | Fraction of actual negatives that were correctly predicted |
| Precision | $\frac{TP}{TP + FP}$ | Correct positive predictions over all positive predictions |
| Recall | $\frac{TP}{TP + FN}$ | Correct positive predictions over all actual positives (identical to sensitivity) |
| F1 (or DICE or F score) | $\frac{2 \cdot Precision \cdot Recall}{Precision + Recall}$ | Harmonic mean between precision and recall |
| AUC (area under the curve) | $\int_{0}^{1} TPR \, d(FPR)$ | Threshold-invariant prediction quality |
| MCC (Matthews correlation coefficient) | $\frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$ | Correlation between prediction and ground truth |
| mAP (mean average precision) | $\frac{1}{C} \sum_{i=1}^{C} AP_{i}$ | Average precision of all $C$ classes |

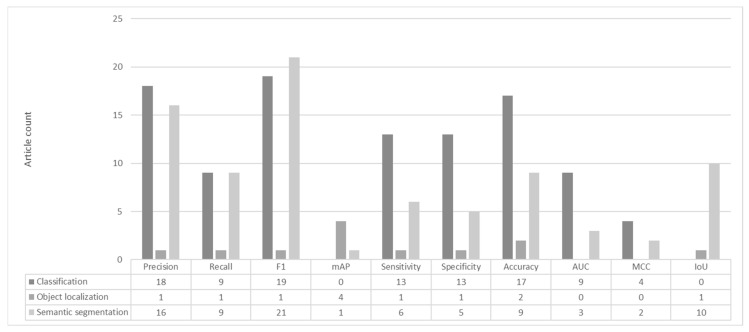

Performance metrics were analyzed in all selected articles, and it was found that 94% of the articles could be compared by using the accuracy, F1, mAP, and MCC metrics. Though their calculation and meanings are not the same, their normalized form provides a means for comparison.
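As an illustration of how the confusion-matrix-based metrics relate to each other, the following Python sketch derives several of them from the four counts by using their standard definitions. The function names and the example counts are hypothetical and do not come from any of the reviewed articles.

```python
import math


def _ratio(numerator, denominator):
    """Return numerator / denominator, or 0.0 for an empty denominator (assumption)."""
    return numerator / denominator if denominator else 0.0


def confusion_metrics(tp, tn, fp, fn):
    """Compute normalized metrics from true/false positive/negative counts."""
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)  # also called sensitivity
    mcc_denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": _ratio(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "specificity": _ratio(tn, tn + fp),
        "f1": _ratio(2 * precision * recall, precision + recall),
        "mcc": _ratio(tp * tn - fp * fn, mcc_denominator),
    }


# Hypothetical counts for a binary wound / no-wound classifier.
metrics = confusion_metrics(tp=40, tn=45, fp=5, fn=10)
```

All of the returned values except the MCC lie between 0 and 1; the MCC ranges from −1 to 1, which should be kept in mind when it is compared side by side with the other metrics.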

3.2. Datasets

Deep learning models eliminate the need for manual feature engineering, but this results in a high model parameter count. To train such models, large datasets are required; though they are general-purpose, public datasets have accumulated enough samples (e.g., ImageNet [5]), and model development is only limited by inference time constraints and the hardware on which the model will run. Publicly available medical data are not abundant, and this creates limitations for deep learning applications. Ethical implications limit the collection of medical images, and the use of existing imagery is limited by a lack of retrospective patients’ consent. In this context, researchers are motivated to collaborate with medical institutions and other researchers to collect new samples or to reuse valid publicly available data.

All reviewed articles used images that represented three color channels: red, green, and blue (RGB). This was one of the conditions applied during article selection, and it makes the findings applicable in a home-based solution in which a conventional mobile phone can be used to take images. This section aims to account for all publicly available datasets by listing the datasets reported in the reviewed research.

Table 3 lists all of the datasets that have been made publicly available. The datasets were grouped based on their originating institution(s), and the “References” column provides the article(s) that presented the dataset. The “Sets Used” column indicates how many different datasets originated from the same institution, the “Use Count” column provides the total number of uses in the selected articles, and the “External Use References” column provides additional articles that referenced the listed dataset. Though the majority of the public datasets used contained diabetic foot ulcers, other dermatological conditions were also included to extend the available data.

Table 3.

Publicly available datasets.

| Nr. | References | Image Count | Sets Used | Wound Types | Annotation Types | Use Count | External Use References |
|---|---|---|---|---|---|---|---|
| 1 | [19] | 188 | 1 | | Mask | 0 | |
| 2 | [20] | 74 | 1 | | | 0 | |
| 3 | [21] | 4000 | 1 | | Mask | 0 | |
| 4 | [22] | N/A | 1 | | | 2 | [23] |
| 5 | [24] | 594 | 8 | | | 9 | [16,22,23,25,26,27,28,29,30] |
| 6 | [31] | 1200 | 1 | | | 1 | |
| 7 | [25,32,33,34] | 3867 | 4 | | ROI, Mask | 4 | |
| 8 | [35] | 40 | 2 | | | 2 | [16,36] |
| 9 | [37] | 210 | 1 | | | 1 | [27] |
| 10 | [38,39,40] | 5659 | 2 | | Class label, ROI | 10 | [17,18,27,41,42,43,44,45,46] |
| 11 | [47] | 1000 | 1 | | | 1 | |
| Total: | | 11,173 | | | | | |

The first three datasets are public, but were not used in any of the selected articles. The Medetec dataset [24] is an online resource of categorized wounds, and different studies have included it in different capacities; the table above provides the largest use case. Datasets 7 and 10 are used the most, but at the same time, they originated in multiple articles from a single medical/academic institution. This creates a risk that duplicate images are included in the total number; therefore, a duplicate check should be applied prior to using these datasets. Some of the research that was performed with dataset 10 included image patch classification, but the patches were not included in the count to avoid misleading estimation.
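A duplicate check of the kind recommended above could start with exact matching of file contents. The sketch below groups images by a SHA-256 digest of their raw bytes; the function name `find_exact_duplicates` and the in-memory mapping of file names to bytes are assumptions made for illustration. It only detects byte-identical copies, so resized, cropped, or re-encoded versions of the same photograph would require a perceptual comparison that is not shown here.

```python
import hashlib


def find_exact_duplicates(images):
    """Group image names whose raw bytes are identical.

    `images` maps a name (e.g., a file path) to the file content as bytes.
    """
    names_by_digest = {}
    for name, data in images.items():
        digest = hashlib.sha256(data).hexdigest()
        names_by_digest.setdefault(digest, []).append(name)
    # Only digests shared by more than one file indicate duplicates.
    return [sorted(names) for names in names_by_digest.values() if len(names) > 1]


# Hypothetical byte strings standing in for image files from two datasets.
images = {
    "dataset_7/img_001.jpg": b"\xff\xd8 example content A",
    "dataset_10/img_417.jpg": b"\xff\xd8 example content A",
    "dataset_10/img_418.jpg": b"\xff\xd8 example content B",
}
duplicate_groups = find_exact_duplicates(images)
```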

Though the combined number of public images was 11,173, the three largest proprietary datasets used contained 482,187 [48], 13,000 [49], and 8412 [50] images prior to applying any augmentation. Most of the public datasets had a simple image collection protocol (constant distance, conventional illumination, and perpendicular angle), and though samples within a single set were collected under constant conditions, using images from different datasets can be beneficial in improving dataset diversity. Different patient ethnicities, illumination conditions, and capturing devices create diversity, which is beneficial when training a model to be used at home by non-professionals with commercially available smartphones.

3.3. Preprocessing

Data quality determines a CNN model’s capability to converge during training and generalize during inference. Dataset quality is affected by the protocol used during image capture, the cameras, lenses, and filters used, the time of day, etc. All of these factors introduce variability that can be addressed before model training with a technique called preprocessing. The context of home-based monitoring increases the importance of preprocessing because different image-capturing devices will be used, and the illumination guidelines will be difficult to fulfill.

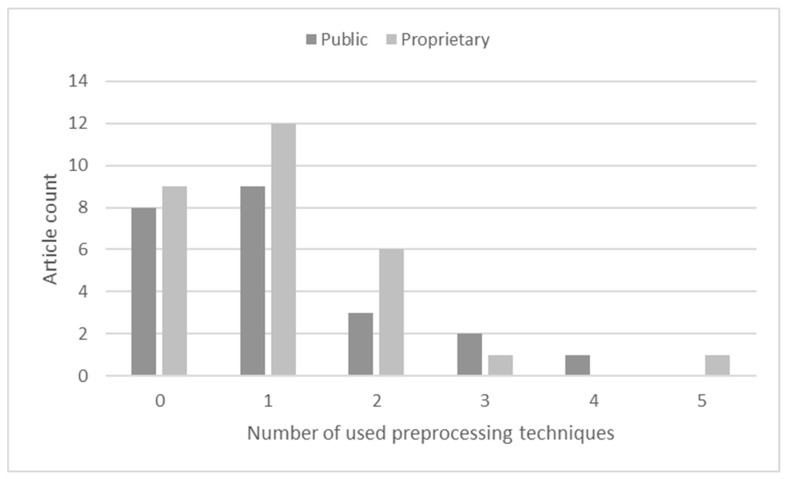

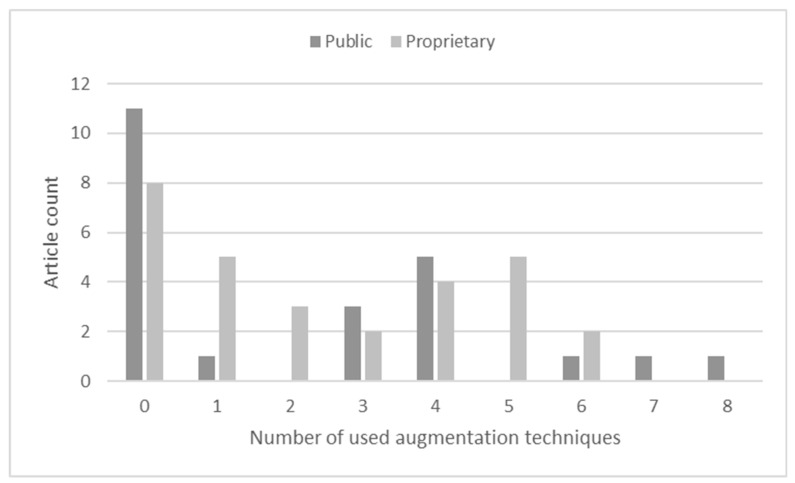

As seen in Figure 3, the majority of the selected articles used one or no (or unreported) preprocessing techniques. Here, all articles that used public or a mix of public and proprietary datasets were assigned to the Public class, and the Proprietary class only included research in which publicly available data were not used.

Figure 3.

Usage counts of performance metrics.

The most commonly used techniques were cropping (mentioned in 17 articles) and resizing (mentioned in 15 articles). Cropping was used to remove background scenery that could affect the image processing results. Resizing was used to unify a dataset when different devices were used to capture images or when cropping resulted in multiple different image sizes. Three articles reported [32,50,51] the use of zero padding instead of resizing to avoid the distortion of image features. Non-uniform illumination was addressed by applying equalization [17], normalization [51,52,53], standardization [22], and other illumination adjustment techniques [16]. Color information improvement was performed by using saturation, gamma correction [17], and other enhancement techniques [54]. Sharpening [17,53], local binary patterns (LBPs) [46], and contrast-limited adaptive histogram equalization (CLAHE) [16,55] techniques were used for image contrast adjustment. Noisy images were improved by using median filtering [56] or other noise removal techniques [16]. Images taken using a flash were processed to eliminate overexposed pixels [36,57]. Other techniques included grayscale image production [58], skin detection by using color channel thresholding [22], and simple linear iterative clustering (SLIC) [59,60].
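The difference between resizing and zero padding can be made concrete with a short Python sketch. Padding keeps the original pixel values and aspect ratio and fills the remaining area with zeros, whereas resizing a non-square image to a square input stretches its features. The function name `pad_to_square`, the single-channel nested-list image, and the centered placement are assumptions; the reviewed articles did not specify where the padding was added.

```python
def pad_to_square(image, fill=0):
    """Zero-pad a 2D image (list of rows) to a square with the content centered."""
    height = len(image)
    width = len(image[0])
    side = max(height, width)
    top = (side - height) // 2
    left = (side - width) // 2
    padded = [[fill] * side for _ in range(side)]
    for r, row in enumerate(image):
        for c, value in enumerate(row):
            padded[top + r][left + c] = value
    return padded


# A 2 x 4 single-channel patch with hypothetical intensity values.
patch = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
]
square = pad_to_square(patch)
```

After padding, every image shares the same aspect ratio, so a subsequent uniform rescaling to the network input size no longer distorts wound shapes.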

Though multiple preprocessing techniques were reported, the motivations for their usage were not specified. Therefore, we can only speculate about the goal of their application. A handful of articles applied multiple techniques, but because the resulting improvements were not reported, we can only assume that the inference time overhead was justified. At the same time, numerous articles did not report any preprocessing techniques, and this raises the question of whether the best result was actually achieved or whether the methodology was only poorly presented. One of the public datasets [40] contained augmented data that were preprocessed before augmentation. As a result, the articles that used this dataset did not use any preprocessing or augmentation techniques [17,42,43,44,45].

3.4. Data Augmentation

As was found during the public dataset analysis, most of the selected research articles used a limited number of image samples. In order to train and test a model, a large number of samples is required, even when the model backbone is trained by using transfer learning. Data augmentation techniques can be employed to increase dataset size by using existing data samples. This is a cost-effective substitute for additional data collection and labeling, is well controlled, and was found in the past to overcome the problem of model overfitting [61].

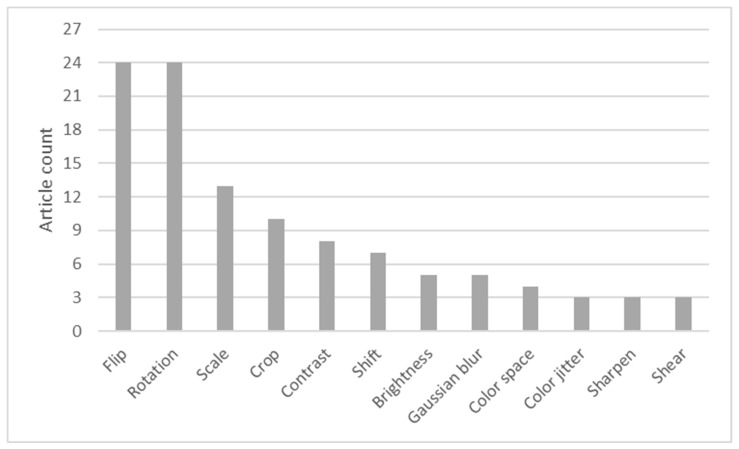

The chart in Figure 4 shows the augmentation techniques that were used the most. Image flipping and rotation were used in most of the articles. While most of the articles performed all possible (vertical, horizontal, and both) flipping operations, some articles only used one type of flipping [52,62,63]. Only some of the articles specified rotation increments. In those, 25°, 45°, and 90° increment values were used, but this selection was not objectively verified, and the only obvious reasons could be balancing a multiclass dataset and avoiding too much data resulting from a single augmentation technique. Cropping and scaling techniques were used to vary wound proportions in images, to isolate wounds within images, and to change the amount of background information. Other techniques can be segmented into two large groups: geometric transformations (shift and shear) and color information adjustments (contrast, brightness, Gaussian blur, color space change, and color jittering and sharpening). The first group affects contextual information by changing a wound’s location or shape, while the second group changes images’ color features.

Figure 4.

Preprocessing technique usage.
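The flipping and rotation operations discussed above can be sketched without any imaging library. The example below only covers the three flipping variants and a 90° rotation on a single-channel image stored as nested lists; the function names are hypothetical, and arbitrary angles such as 25° or 45° would additionally require interpolation, which is not shown.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(column) for column in zip(*image[::-1])]


def flip_variants(image):
    """Return the original and its horizontal, vertical, and combined flips."""
    return [
        [row[:] for row in image],
        flip_horizontal(image),
        flip_vertical(image),
        flip_horizontal(flip_vertical(image)),
    ]


sample = [
    [1, 2],
    [3, 4],
]
variants = flip_variants(sample)
rotated = rotate_90_clockwise(sample)
```

For segmentation or detection datasets, the same transformation must also be applied to the mask or bounding-box annotation so that the labels stay aligned with the image.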

As seen in Figure 5, data augmentation was not related to the dataset source. Though most of the research did not use or report the use of augmentation, there were no general tendencies, and varying numbers of techniques were used. Most of the techniques were used in articles that produced public datasets [40], and this set a positive precedent for making data public. The authors invested time and effort in producing a balanced dataset that could encourage more reproducible research, and the results in different studies can, thus, be objectively compared. At the same time, it should be noted that even a single augmentation technique can produce multiples of an original dataset; e.g., Li [22] reported that they increased the training dataset by 60 times, but did not specify what augmentation techniques were used. Even two techniques would be enough to produce this multiplication, but here, concern should be expressed as to how useful such a dataset is and whether a model trained with it overfits. Notably, Zhao [53] reported that image augmentation by flipping reduced the model’s performance, but this could also be an indication that model overfitting was reduced. One possible solution to this problem is training-time augmentation with randomly selected methods [41,52,64,65,66].

Figure 5.

The image augmentation techniques used.
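Training-time augmentation with randomly selected methods, as mentioned above, can be sketched as a function that is called each time a sample is drawn, so that the stored dataset is not multiplied in advance. The function name `random_augment`, the choice of transforms, and the 50% probabilities are assumptions made for this illustration and do not reproduce the pipeline of any cited article.

```python
import random


def random_augment(image, rng):
    """Apply a randomly selected combination of flips and quarter turns."""
    if rng.random() < 0.5:
        image = [row[::-1] for row in image]  # horizontal flip
    if rng.random() < 0.5:
        image = image[::-1]  # vertical flip
    for _ in range(rng.randrange(4)):  # zero to three quarter turns
        image = [list(column) for column in zip(*image[::-1])]
    return [list(row) for row in image]


# A seeded generator makes the random choices reproducible between runs.
rng = random.Random(42)
sample = [
    [1, 2],
    [3, 4],
]
# Each epoch sees a potentially different variant of the same stored sample.
epoch_views = [random_augment(sample, rng) for _ in range(3)]
```

Because the variants are generated on the fly, the number of stored training images stays the same, and the model is less likely to see an identical augmented copy repeatedly.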

Based on the image augmentation taxonomy presented by Khalifa [61], almost all augmentation techniques that were found fell into the category of classical image augmentation and its geometric, photometric, or other subcategories. Style-GAN [67] was the only deep learning data augmentation technique that was used for synthetic data generation [68]. According to Khalifa [61], this method falls into the deep learning augmentation subcategory of generative adversarial networks (GANs). The other two subcategories are neural style transfer (NST) [69] and meta-metric learning [70].

3.5. Backbone Architectures

In computer vision based on convolutional neural networks, the informal term “backbone” is used to describe the part of the network that is used for feature extraction [71]. Though this term is more commonly used to describe feature extraction in object detection and segmentation architectures, here, it will be used for all considered deep learning objectives, including classification.

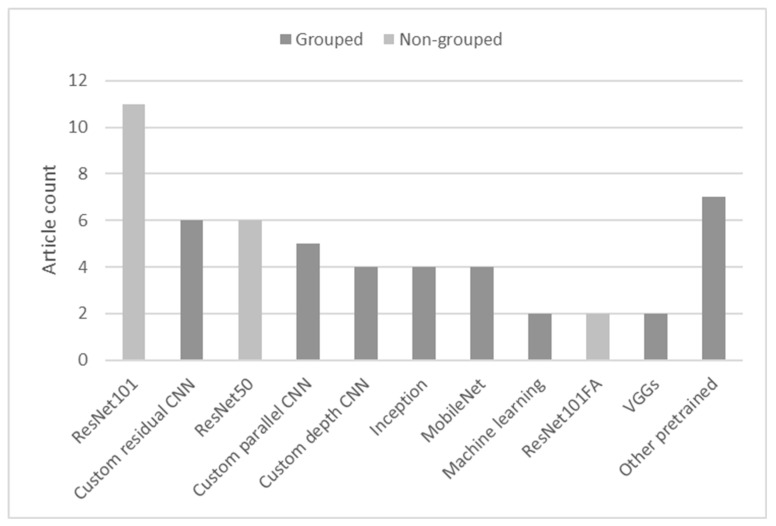

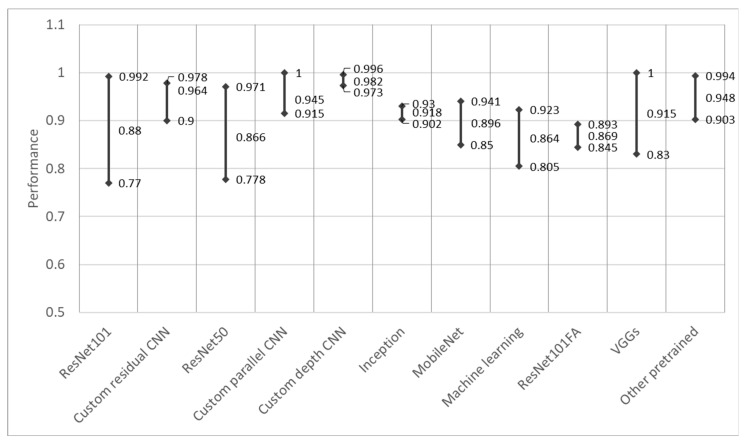

The results in Figure 6 display most of the backbone architectures that were used in the reviewed articles. To achieve a better representation, some different versions of popular networks (e.g., MobileNet [72], Inception [73], VGG [74], and EfficientNet [75]) and custom-made architectures were grouped (see the two different bar colors).

Figure 6.

Number of augmentation techniques used.

The chart indicates that the small size of the datasets used in the reviewed articles motivated researchers to mostly rely on off-the-shelf feature extraction architectures that were pretrained on large datasets, such as ImageNet [5] and MS COCO [76]. ResNet [77] was found to be the most popular architecture; it was used in 42% of the analyzed articles. Feature extractors taken from classifiers of various depths (18, 50, 101, and 152 layers) were used for all three analyzed objectives, but in the majority of the articles (14 out of 22), they were used as a backbone for the network to perform semantic segmentation. Custom convolutional structures were found to be the second-largest subgroup of feature extraction networks (15 out of 52). There were two main motivations for using custom feature extractors: a lack of data and the aim of developing an efficient subject-specific network [51].

Custom CNNs were divided into three groups based on their topologies: depth-wise networks, networks that used parallel convolution layers, and networks that used residual or skip connections. The use of a depth-wise topology is best explained when the input image size is considered. Two of the shallowest networks [36,57] were used to process 5 × 5 pixel image patches while using backbones with two and three convolutional layers. In other cases, the increase in the number of feature extractor layers matched the increase in the processed images’ resolution; four convolutional layers were used to extract features from 151 × 151 [46] and 300 × 300 [42] pixel images. Networks with parallel convolutions were inspired by the Inception [73] architecture and sought to extract features of multiple scales. All of them [17,51,78,79,80] were used to process image patches containing wounds in classification tasks. Networks within the last group were designed by using concepts of 3D [26] and parallel convolutions [30,47], repeatable network modules [44,81], and other concepts [66]. All of them used residual connections to promote gradient backpropagation during the training of deeper, but less complex, networks [77]. The use of two other publicly available backbones was motivated by their applicability for mobile applications (MobileNet) or multiscale feature detection (Inception).

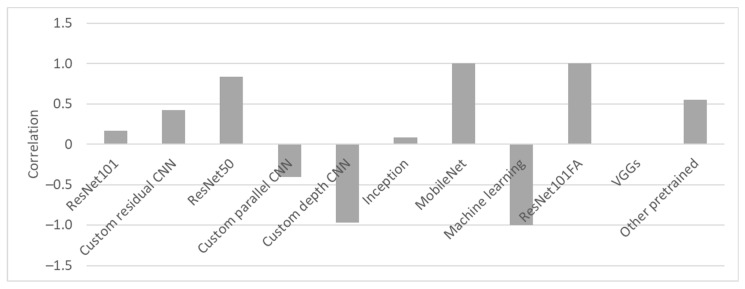

Considering the different objectives, datasets, and annotations that were used, it was difficult to objectively compare the backbones’ performance, but here, we attempt to compare the effectiveness of feature extraction. Figure 7 shows an evaluation of the model performance while using the most common metrics, as discussed in Section 3.1; when multiple values were provided (in the case of multiclass classification performance), the best result was taken. The best, worst, and median performance data are shown.

Figure 7.

Backbone architecture usage.

Though all of the reviewed articles described their methodologies, for a few of the articles, it was difficult to objectively evaluate the backbones’ performance. Some studies presented binary classification results for multiple different classes [40,41,43,44,45,46], while others used unpopular performance metrics [22,23,58] and were excluded from this analysis. It could be observed that a performance metric of 0.9 or higher was achieved by using custom architectures, Inception, ResNet152, and other pretrained networks. ResNet architectures, which were used the most (with depths of 101 and 50), demonstrated high variability in performance, and the median value proved that this was a tendency and not the effect of an outlier. Conversely, the custom architectures showed a small variability, and this demonstrated their fine-tuning for the subject.

To objectively evaluate the backbones’ performance, the correlations between the performance and training datasets were calculated, as shown in Figure 8. The evaluation of these correlations was problematic because the augmentation results were not disclosed in all articles, and it was not clear what the actual training datasets’ sizes were. The same applies to articles that did not provide any details about augmentation. Correlations that did not reach extreme values of −1 or 1 indicated that the results were compared with those of similar dataset sizes. It can be concluded that the performance of all pretrained backbones improved when more training data were used. Network fine-tuning resulted in better generalization when more data were provided. A negative correlation for custom parallel and depth architectures showed that an increase in dataset size resulted in a performance reduction. Though the performance, as seen in Figure 9, was outstanding, the negative correlation indicated that more diverse datasets should be used to objectively verify the design.

Figure 8. Correlation of the backbone performance with training dataset size.

Figure 9. Backbone performance evaluation.

3.6. Performance Comparison

The features extracted in a backbone network are processed to make a decision in a network element called a head. Different heads output different results and, thus, define a network objective. A classifier head will output the probability of an image belonging to two or more classes. A regression head that is used in object detection will output fitted bounding-box coordinates. A segmentation head will output an input image mask in which each pixel is individually assigned to a class. This subsection analyzes the architectures used in full and attempts to highlight the customizations that yielded the best performance. All reviewed articles were grouped by objective and ordered by date of publication and the main author. The best results for each objective are reviewed in detail after the presentation of a related table. Table 4 presents classification studies, Table 5 lists reviewed object detection articles and Table 6 provides details about semantic segmentation papers.
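The difference between the three head types can be illustrated by the shape of their outputs. The sketch below is a simplified, framework-free illustration; the function names, the normalized box encoding, and the toy inputs are assumptions made for this example, and real detection heads typically decode anchor or grid offsets instead of emitting coordinates directly.

```python
import math

def classifier_head(logits):
    """Softmax: turn per-class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def regression_head(raw, img_w, img_h):
    """Scale four normalized outputs (x_min, y_min, x_max, y_max) to pixels."""
    x0, y0, x1, y1 = (min(max(v, 0.0), 1.0) for v in raw)
    return (x0 * img_w, y0 * img_h, x1 * img_w, y1 * img_h)

def segmentation_head(score_maps):
    """Per-pixel argmax over class score maps indexed as [class][row][col]."""
    rows, cols = len(score_maps[0]), len(score_maps[0][0])
    return [[max(range(len(score_maps)), key=lambda c: score_maps[c][r][k])
             for k in range(cols)] for r in range(rows)]

# Toy inputs standing in for backbone-derived scores (hypothetical values).
probs = classifier_head([2.0, 0.5])
box = regression_head([0.25, 0.1, 0.75, 0.9], img_w=640, img_h=480)
mask = segmentation_head([[[0.9, 0.2], [0.4, 0.1]],   # class 0 scores
                          [[0.1, 0.8], [0.6, 0.9]]])  # class 1 scores
```

Here `probs` is one probability per class, `box` is a single set of pixel coordinates, and `mask` has one class index per pixel.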

Table 4. Summary of studies on chronic wound classification.

| Reference | Subject and Classes (Each Bullet Represents a Different Model) | Methodology | Original Images (Training Samples) | Results |
|---|---|---|---|---|
| Goyal et al. (2018) [51] | Diabetic foot ulcer | | 344 (22,605) | |
| Cirillo et al. (2019) [63] | Burn wound | | 23 (676) | |
| Zhao et al. (2019) [53] | Diabetic foot ulcer | | 1639 | Wound Depth: |
| Abubakar et al. (2020) [52] | Burn wound | | 1900 | |
| Alzubaidi et al. (2020) [79] | Diabetic foot ulcer | | 754 (20,917) | |
| Alzubaidi et al. (2020) [47] | Diabetic foot ulcer | | 1200 (2677) | |
| Chauhan et al. (2020) [62] | Burn wound | | 141 (316) | |
| Goyal et al. (2020) [40] | Diabetic foot ulcer | | Ischemia: 1459 (9870) Infection: 1459 (5892) | Ischemia: |
| Wang et al. (2020) [54] | Burn wound | | 484 (5637) | |
| Rostami et al. (2021) [33] | Wound | | 400 (19,040) | |
| Xu et al. (2021) [45] | Diabetic foot ulcer | | Ischemia: 9870 (9870) Infection: 5892 (5892) | Ischemia: |
| Al-Garaawi et al. (2022) | Diabetic foot ulcer | | Wound: 1679 (16,790) Ischemia: 9870 (9870) Infection: 5892 (5892) | Wound: |
| Al-Garaawi et al. (2022) [43] | Diabetic foot ulcer | | 1679 (1679) | |
| Alzubaidi et al. (2022) [30] | Diabetic foot ulcer | | 3288 (59,184) | |
| Anisuzzaman et al. (2022) [29] | Wound | | 1088 (6108) | |
| Das et al. (2022) [80] | Diabetic foot ulcer | | 397 (3222) | |
| Das et al. (2022) [81] | Diabetic foot ulcer | | Wound: 1679 (1679) Ischemia: 9870 (9870) Infection: 5892 (5892) | Wound: |
| Das et al. (2022) [44] | Diabetic foot ulcer | | Ischemia: 9870 (9870) Infection: 5892 (5892) | Ischemia: |
| Liu et al. (2022) [41] | Diabetic foot ulcer | | Ischemia: 2946 (58,200) Infection: 2946 (58,200) | Ischemia: |
| Venkatesan et al. (2022) [78] | Diabetic foot ulcer | | 1679 (18,462) | |
| Yogapriya et al. (2022) [17] | Diabetic foot ulcer | | 5892 (29,450) | |

All classification articles were filtered to obtain those with F1 scores of at least 0.93 and training datasets with 9000 or more samples (at least 1000 non-augmented samples); six articles were found. Al-Garaawi et al. used local binary patterns to enrich visual image information for binary classification [46]. A lightweight depth CNN was proposed for the classification of DFUs, the presence of ischemia, and infections. Although this classifier achieved the poorest results among the selected articles (F1 score: 0.942 (wound), 0.99 (ischemia), and 0.744 (infection)), it was trained on the smallest dataset. Similar results were achieved (F1 score: 0.89 (wound), 0.93 (ischemia), and 0.76 (infection)) [43] by fusing deep features (GoogLeNet [73]) with hand-crafted features (Gabor [82] and HOG [83]) and applying a random forest classifier. It was demonstrated that fused features outperformed deep features alone. Though the same public datasets were used in both cases, augmentation was not used in the latter. The deeper and more complex (29 convolutions in total) architecture designed by Alzubaidi et al. [30] achieved an F1 score of 0.973 and was demonstrated to perform well in two different domains: diabetic foot ulcers and the analysis of breast cancer cytology (accuracy of 0.932), outperforming other SOTA algorithms. Parallel convolution blocks were used to extract multiscale features by using four different kernels (1 × 1, 3 × 3, 5 × 5, and 7 × 7 pixels), a concept that is used in Inception architectures. Residual connections were used to foster backpropagation during training and mitigate the vanishing gradient problem. Das et al. proposed repeatable CNN blocks (stacked convolutions with the following kernel sizes: 1 × 1, 3 × 3, and 1 × 1 pixels) with residual connections to design networks of different depths [44]. Two networks were used; four residual blocks were used for ischemia classification and achieved an F1 score of 0.98, while a score of 0.8 was achieved in infection classification with a network containing seven residual blocks. This difference in classifier complexity and performance was explained by an imbalance in the training datasets, as the ischemia dataset had almost two times more training samples. Liu et al. used EfficientNet [75] architectures and transfer learning to solve the same problem of ischemia and infection classification. Though they used more balanced datasets, two networks of different complexities were needed to arrive at comparable performances. EfficientNetB1 was used for ischemia classification (F1 score: 0.99), while infections were classified by using EfficientNetB5 (F1 score: 0.98). Finally, Venkatesan et al. reported an F1 score of 1.0 in binary diabetic foot wound classification by using a lightweight (11 convolutional layers) NFU-Net [78] based on parallel convolutional layers. Each parallel layer contained two convolutions: feature pooling (using a 3 × 3 kernel) and projection (using a 1 × 1 kernel). Parametrized ReLU [84] was used to promote gradient backpropagation during training, resulting in better fitting of the network parameters. Additionally, images were augmented by using the CLoDSA image augmentation library [85], and the class imbalance problem was solved by using the SMOTE technique [86].
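The local binary pattern idea mentioned above can be sketched in a few lines. This is the basic 3 × 3 operator only, shown under assumptions: the neighbor ordering and the function names `lbp_code` and `lbp_image` are choices made for this illustration, and it is not claimed to reproduce the mapped variant used in [46].

```python
def lbp_code(patch):
    """8-bit local binary pattern of the centre pixel of a 3x3 patch."""
    center = patch[1][1]
    # Neighbours visited clockwise, starting at the top-left pixel.
    offsets = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
    code = 0
    for bit, (r, c) in enumerate(offsets):
        # A neighbour at least as bright as the centre sets its bit.
        if patch[r][c] >= center:
            code |= 1 << bit
    return code

def lbp_image(gray):
    """LBP map for all interior pixels of a 2D grayscale image (list of rows)."""
    out = []
    for r in range(1, len(gray) - 1):
        row = []
        for c in range(1, len(gray[0]) - 1):
            patch = [gray[r + dr][c - 1:c + 2] for dr in (-1, 0, 1)]
            row.append(lbp_code(patch))
        out.append(row)
    return out

# Toy patches (hypothetical intensities): a flat region and a bright top edge.
flat = [[5, 5, 5], [5, 5, 5], [5, 5, 5]]
edge = [[9, 9, 9], [1, 5, 1], [1, 1, 1]]
flat_code = lbp_code(flat)
edge_code = lbp_code(edge)
```

The resulting code map describes local texture independently of absolute brightness, which is why it can be supplied to a CNN alongside the original image channels.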

Table 5. Summary of papers on chronic wound detection.

| Reference | Subject and Classes (Each Bullet Represents a Different Model) | Methodology | Original Images (Training Samples) | Results |
|---|---|---|---|---|
| Goyal et al. (2018) [65] | Diabetic foot ulcer | | Normal: 2028 (28,392) Abnormal: 2080 (29,120) | |
| Amin et al. (2020) [42] | Diabetic foot ulcer | | Ischemia: 9870 (9870) Infection: 5892 (5892) | Ischemia: |
| Han et al. (2020) [87] | Diabetic foot ulcer | | 2688 (2668) | |
| Anisuzzaman et al. (2022) [25] | Wound | | 1800 (9580) | |
| Huang et al. (2022) [88] | Wound | | 727 (3600) | |

The articles in which object detection was the main objective were filtered to obtain those with an mAP of at least 0.9 and a training dataset that contained 9000 or more samples (at least 1000 non-augmented samples); three articles were found. Goyal et al. analyzed multiple SOTA models that could be used in real-time mobile applications [65]. Faster R-CNN [88] with the InceptionV2 [89] backbone was found to be the best compromise among inference time (48 ms), model size (52.2 MB), and ulcer detection precision (mAP: 0.918). Two-step transfer learning was used to cope with the problem of small datasets. The first step partially transferred features from a classifier trained on the ImageNet [5] dataset, and the second step fully transferred features from a classifier trained on the MS COCO object detection dataset [76]. In another study, Amin et al. [42] proposed a two-step method for DFU detection and classification. First, an image was classified into the ischemic or infectious classes by using a custom shallow classifier with four convolutional layers (accuracy: 0.976 for ischemia and 0.943 for infection); then, an exact DFU bounding box was detected by using YOLOv2 [90] with the ShuffleNet [91] backbone (average mAP: 0.94). YOLOv2 with the ShuffleNet backbone outperformed conventional YOLOv2 by 0.22 mAP points when trained from scratch. The best mAP score (0.973) in DFU wound detection was achieved by Anisuzzaman et al. [25] with the next-generation YOLOv3 algorithm [92]. It was reported that the proposed model could process video taken by a mobile phone at 20 frames per second.
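The mAP values quoted above depend on deciding whether a predicted bounding box matches an annotated one, which is usually done with the intersection over union (IoU). The sketch below illustrates that step only; the 0.5 threshold is a common convention assumed here, not a value confirmed for the reviewed studies, and the boxes are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix0, iy0 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix1, iy1 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Width and height of the overlap are clamped to zero for disjoint boxes.
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def is_true_positive(pred, truth, threshold=0.5):
    """A detection counts as correct when its IoU reaches the threshold."""
    return iou(pred, truth) >= threshold

# Hypothetical annotated and predicted ulcer boxes, in pixels.
truth_box = (10, 10, 50, 50)
pred_box = (20, 20, 60, 60)
overlap = iou(pred_box, truth_box)
```

In this toy case, the two boxes share 900 of 2300 pixels, so the prediction would be rejected at a threshold of 0.5 even though it covers most of the ulcer.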

Table 6. Summary of studies on the semantic segmentation of chronic wounds.

| Reference | Subject and Classes (Each Bullet Represents a Different Model) | Methodology | Original Images (Training Samples) | Results |
|---|---|---|---|---|
| García-Zapirain et al. (2018) [26] | Pressure ulcer | | 193 (193) | |
| Li et al. (2018) [22] | Wound | | 950 (57,000) | |
| Zahia et al. (2018) [36] | Pressure ulcer | | Granulation: 22 (270,762) Necrotic: 22 (37,146) Slough: 22 (80,636) | Granulation: |
| Jiao et al. (2019) [93] | Burn wound | | 1150 | |
| Khalil et al. (2019) [16] | Wound | | 377 | |
| Li et al. (2019) [23] | Wound | | 950 | |
| Rajathi et al. (2019) [57] | Varicose ulcer | | 1250 | |
| Şevik et al. (2019) [94] | Burn wound | | 105 | |
| Blanco et al. (2020) [59] | Dermatological ulcer | | 217 (179,572) | |
| Chino et al. (2020) [66] | Wound | | 446 (1784) | |
| Muñoz et al. (2020) [95] | Diabetic foot ulcer | | 520 | |
| Wagh et al. (2020) [55] | Wound | | 1442 | |
| Wang et al. (2020) [32] | Foot ulcer | | 1109 (4050) | |
| Zahia et al. (2020) [28] | Pressure ulcer | | 210 | |
| Chang et al. (2021) [96] | Burn wound | | 2591 | |
| Chauhan et al. (2021) [64] | Burn wound | | 449 | |
| Dai et al. (2021) [68] | Burn wound | | 1150 | |
| Liu et al. (2021) [31] | Burn wound | | 1200 | |
| Pabitha et al. (2021) [56] | Burn wound | | 1800 | Segmentation: |
| Sarp et al. (2021) [49] | Wound | | 13,000 | |
| Cao et al. (2022) [18] | Diabetic foot ulcer | | 1426 | |
| Chang et al. (2022) [60] | Pressure ulcer | | Wound And Reepithelization: 755 (2893) Tissue: 755 (2836) | Wound And Reepithelization: |
| Chang et al. (2022) [50] | Burn wound | | 4991 | |
| Lien et al. (2022) [58] | Diabetic foot ulcer | | 219 | |
| Ramachandram et al. (2022) [48] | Wound | | Wound: 465,187 Tissue: 17,000 | Wound: |
| Scebba et al. (2022) [27] | Wound | | 1330 | |

The articles on semantic segmentation were filtered to obtain those with an F1 score of at least 0.89 and a training dataset size of 2000 or more samples (at least 1000 non-augmented samples); in total, five articles were found. Dai et al. proposed a framework [68] for synthetic burn image generation by using Style-GAN [67] and used those images to improve the performance of Burn-CNN [93]. The proposed method achieved an F1 score of 0.89 when tested on non-synthetic images. Because the authors used 3D whole-body skin reconstruction, the proposed method can be used for the calculation of the %TBSA (percentage of total body surface area). It was stated that this framework could also be used to synthesize other wound types. Chang et al. used three different DeepLabV3+ [97] models with the ResNet101 [77] backbone to estimate burn %TBSA by using the rule of palms [50]. Two models were used for wound and palm segmentation, and the third was used for deep burn segmentation. The SLIC [98] superpixel technique was used for accurate deep burn annotation. Deep burn segmentation achieved an F1 score of 0.902 (total wound area: 0.894; palm area: 0.99). Sarp et al. achieved an F1 score of 0.93 [49] on simultaneous chronic wound border and tissue segmentation by using a conditional generative adversarial network (cGAN). Though the proposed model “is insensitive to colour changes and could identify the wound in a crowded environment” [49], it was demonstrated that the cGAN could fail to converge; therefore, training should be closely monitored by evaluating the loss and validity of the generated image masks. Wang et al. used MobileNetV2 [99] for foot ulcer wound image segmentation [32] and achieved a Dice coefficient of 0.94. The model was pre-trained on the Pascal VOC segmentation dataset [100]. The connected component labeling (CCL) postprocessing technique was used to refine the segmentation results, and this contributed to a slight performance increase.
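The effect of connected component labeling as a postprocessing step can be illustrated on a toy mask. The sketch below removes small isolated regions and measures the Dice coefficient before and after; the 4-connectivity, the `min_size` threshold, and the masks are assumptions for illustration and are not the exact procedure reported in [32].

```python
from collections import deque

def dice(pred, truth):
    """Dice coefficient of two binary masks given as lists of 0/1 rows."""
    inter = sum(p & t for pr, tr in zip(pred, truth) for p, t in zip(pr, tr))
    total = sum(map(sum, pred)) + sum(map(sum, truth))
    return 2 * inter / total if total else 1.0

def remove_small_components(mask, min_size):
    """Drop 4-connected foreground regions smaller than min_size pixels."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] == 0 or seen[r][c]:
                continue
            # Breadth-first search collects one connected component.
            seen[r][c] = True
            queue, component = deque([(r, c)]), []
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] == 1 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(component) >= min_size:
                for y, x in component:
                    out[y][x] = 1
    return out

truth = [[1, 1, 0, 0],
         [1, 1, 0, 0],
         [0, 0, 0, 0]]
pred = [[1, 1, 0, 0],
        [1, 1, 0, 1],   # the isolated pixel on the right is a false positive
        [0, 0, 0, 0]]
cleaned = remove_small_components(pred, min_size=2)
```

For binary masks, the Dice coefficient is numerically identical to the F1 score, so the improvement from 8/9 to 1.0 in this toy case mirrors the kind of small gain described above.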

4. Limitations

The articles included in this review varied in their reporting quality. Therefore, extracting and evaluating data while using one set of rules is problematic. Section 2.4 describes the strategies that were used to cope with unreported information. Article selection was performed by the main author, and though clear eligibility criteria were used, this might have imposed some limitations. Because the main goal of this review was not performance evaluation, there was no requirement for the reporting quality or size (page count) of the articles. To avoid distorting the reported results, the authors of this review imposed some constraints, such as the limitations on performance and training sample counts in Section 3.6 and the outlier removal (based on median values) in Section 3.5.

5. Discussion and Conclusions

The articles that were found and analyzed for this review demonstrated the importance and popularity of DFU diagnosis and monitoring as a research subject. The application of deep learning methods for this computer vision problem was found to be the most universal and to deliver the best results, but a lack of training data makes this challenging. The academic community has failed to compete with enterprises (Swift Medical [48], eKare [49]) simply due to a lack of diabetic foot imagery. Though good results have been achieved, the generalization capabilities of some deep learning models developed to date remain doubtful.

Zhang et al. found in their review that most of the time, only hundreds of samples were used [11]. Other reviews identified the lack of public datasets as the main challenge [7,8,12]. Therefore, this article had the goal of taking an inventory of available public datasets that could be used for the creation of a diverse training dataset. It was found that more than 40% of the reviewed articles used fewer than 1000 original images, though this threshold is arbitrary and does not necessarily ensure adequate training capabilities or good model generalization. Li et al. used excessive augmentation to increase their dataset size by sixty times [22]. On the other hand, Zahia et al. proposed a segmentation system that used decisions from a 5 × 5 pixel patch classifier to create picture masks [36]. They had 22 original pressure ulcer images, which resulted in a training dataset of 270,762 image patches. These two examples are extreme, but they illustrate the importance of data availability well.
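The scale of these two examples can be checked with simple arithmetic. The sketch below uses the figures quoted in this review (950 images expanded to 57,000 [22]; 22 images yielding 270,762 patches [36]); the helper names, the stride, and the 100 × 100 pixel region are hypothetical, since the source does not state how the patches were sampled.

```python
def patch_count(height, width, patch=5, stride=1):
    """Number of patch x patch windows that fit in a height x width image."""
    if height < patch or width < patch:
        return 0
    return ((height - patch) // stride + 1) * ((width - patch) // stride + 1)

def expansion_factor(original, training):
    """How many training samples were produced per original image."""
    return training / original

# Figures quoted in the text above.
aug_factor = expansion_factor(950, 57_000)
patches_per_image = expansion_factor(22, 270_762)

# Hypothetical: dense 5 x 5 sampling of a 100 x 100 pixel region.
dense = patch_count(100, 100)
```

A sixtyfold augmentation and more than twelve thousand patches per image both inflate the nominal training set size without adding new patients or wounds, which is why the original image count is reported separately throughout this review.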

In a review performed by Zahia et al., data preprocessing was considered a conventional step in a typical CNN application pipeline [8]. It is considered to significantly improve performance [12]. Models’ generalization capabilities can also be affected by different image-taking protocols or even just different cameras. This issue is especially relevant in the context of home-based model usage. Though models can be trained on a large and diverse dataset to mitigate this risk, special attention should be paid to preprocessing techniques. In the current review, more than 30% of the articles did not report the use of any image preprocessing techniques, and only one used four [17]. Though this was not usually the case, the use of different public datasets calls for an approach to image adjustment that can later be directly applied during inference under any environmental conditions.

Augmentation is considered a solution to the problem of small datasets [8,11]. It was found that more than one-third of the reviewed articles did not report augmentation. Others reported using augmentation, but it was not possible to account for the increase in the training dataset. This made it difficult to evaluate the relationship between model performance and training dataset size. Though most articles used data augmentation, the effects of augmentation on the results were rarely evaluated. There are two big branches of augmentation techniques: classical and deep-learning-based [61]. Of the reviewed articles, only one used deep-learning-based augmentation [68]. Though augmentation can increase the dataset size by sixty times, the acceptable level of augmentation should be investigated.
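Classical augmentation in its simplest geometric form can be sketched as follows. This is an illustration under assumptions: the function names are hypothetical, only flips and 90-degree rotations are covered, and images are plain lists of rows instead of arrays from an imaging library.

```python
def hflip(img):
    """Mirror a 2D image (list of rows) left to right."""
    return [row[::-1] for row in img]

def rot90(img):
    """Rotate a 2D image by 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def augment(img):
    """Return the image plus its distinct flipped and rotated variants."""
    variants = [img]
    for _ in range(3):
        variants.append(rot90(variants[-1]))
    # Mirroring each of the four rotations gives the remaining orientations.
    variants += [hflip(v) for v in variants[:4]]
    unique = []
    for v in variants:
        if v not in unique:
            unique.append(v)
    return unique

image = [[1, 2],
         [3, 4]]
augmented = augment(image)
```

These transforms yield at most an eightfold increase per image, so much larger reported factors necessarily rely on additional operations such as cropping, scaling, or color changes; reporting the resulting sample count makes the factor verifiable.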

The backbone is the core part of a deep learning model, as it generalizes data and provides feature maps for downstream processing. Though ResNet architectures of various depths were used the most, the resulting model performance had the widest spread, indicating that applying pre-trained backbones is not straightforward. Fewer than one-third of the papers reported using a custom backbone design. While some were simple and extracted features of 5 × 5 pixel image patches [36,57], others provided model scaling capabilities [44] by using a custom number of standardized blocks. Models with custom backbones demonstrated the best performance and the smallest spread in the results, but this is to be expected considering the effort put into their design and fine-tuning. It should be noted that past reviews (referenced in the Introduction section) did not analyze this aspect. Finally, this is all related to data availability, as having more data helps design more complex custom architectures that do not overfit; at the same time, having more data results in better off-the-shelf backbone adoption through transfer learning or even training from scratch.

The best-performing models for each objective were reviewed in an effort to objectively find a common trend and direction for further research. In classification, past reviews found that CNNs based on residual connections were used the most (DenseNet [8], ResNet [12]), but no conclusions were made on their performance. In the current review, the best results were achieved by using backbones based on parallel convolutions, Inception, and custom architectures. There were only a few articles that focused on object detection, but the best performance was achieved by using the YOLO architecture family, and it was found to be the most popular in past reviews [11,12]. Semantic segmentation was most often performed by using the Mask R-CNN and DeepLabV3 architectures with ResNet101 for feature extraction. These two architectures were also used in the two best-performing models. Other reviews found Mask R-CNN [8], UNet [12], and other FCNs [11] to be used the most, but conclusions about their performance were not reported.

The observations in this review have indicated the following problems for future research:

- There are poor preprocessing strategies when images are taken from multiple different sources. Methods that can improve uniformity and eliminate artifacts introduced in an uncontrolled environment should be analyzed.

- There is a lack of or excessive use of augmentation techniques. Augmentation strategies that yield better model generalization capabilities should be tested. Deep-learning-based methods should be applied.

- There is a limited amount of annotated data. An unsupervised or weakly supervised deep learning model for semantic segmentation should be developed.

Author Contributions

Conceptualization, J.C. and V.R.; methodology, V.R.; article search, A.K.; data extraction, A.K. and R.P.; data synthesis, A.K.; writing—original draft preparation, A.K. and R.P.; writing—review and editing, J.C. and V.R.; supervision, V.R. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research received no external funding.

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1. Peer N., Balakrishna Y., Durao S. Screening for Type 2 Diabetes Mellitus. Cochrane Database Syst. Rev. 2020;5:CD005266. doi: 10.1002/14651858.

- 2. International Diabetes Federation. IDF Diabetes Atlas. IDF; Brussels, Belgium: 2021.

- 3. Mavrogenis A.F., Megaloikonomos P.D., Antoniadou T., Igoumenou V.G., Panagopoulos G.N., Dimopoulos L., Moulakakis K.G., Sfyroeras G.S., Lazaris A. Current Concepts for the Evaluation and Management of Diabetic Foot Ulcers. EFORT Open Rev. 2018;3:513–525. doi: 10.1302/2058-5241.3.180010.

- 4. Moulik P.K., Mtonga R., Gill G.V. Amputation and Mortality in New-Onset Diabetic Foot Ulcers Stratified by Etiology. Diabetes Care. 2003;26:491–494. doi: 10.2337/diacare.26.2.491.

- 5. Deng J., Dong W., Socher R., Li L.J., Li K., Li F.F. ImageNet: A Large-Scale Hierarchical Image Database; Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition; Miami, FL, USA. 20–25 June 2009.

- 6. Wang J., Zhu H., Wang S.H., Zhang Y.D. A Review of Deep Learning on Medical Image Analysis. Mob. Netw. Appl. 2021;26:351–380. doi: 10.1007/s11036-020-01672-7.

- 7. Tulloch J., Zamani R., Akrami M. Machine Learning in the Prevention, Diagnosis and Management of Diabetic Foot Ulcers: A Systematic Review. IEEE Access. 2020;8:198977–199000. doi: 10.1109/ACCESS.2020.3035327.

- 8. Zahia S., Zapirain M.B.G., Sevillano X., González A., Kim P.J., Elmaghraby A. Pressure Injury Image Analysis with Machine Learning Techniques: A Systematic Review on Previous and Possible Future Methods. Artif. Intell. Med. 2020;102:101742. doi: 10.1016/j.artmed.2019.101742.

- 9. Chan K.S., Lo Z.J. Wound Assessment, Imaging and Monitoring Systems in Diabetic Foot Ulcers: A Systematic Review. Int. Wound J. 2020;17:1909–1923. doi: 10.1111/iwj.13481.

- 10. Anisuzzaman D.M., Wang C., Rostami B., Gopalakrishnan S., Niezgoda J., Yu Z. Image-Based Artificial Intelligence in Wound Assessment: A Systematic Review. Adv. Wound Care. 2022;11:687–709. doi: 10.1089/wound.2021.0091.

- 11. Zhang J., Qiu Y., Peng L., Zhou Q., Wang Z., Qi M. A Comprehensive Review of Methods Based on Deep Learning for Diabetes-Related Foot Ulcers. Front. Endocrinol. 2022;13:1679. doi: 10.3389/fendo.2022.945020.

- 12. Zhang R., Tian D., Xu D., Qian W., Yao Y. A Survey of Wound Image Analysis Using Deep Learning: Classification, Detection, and Segmentation. IEEE Access. 2022;10:79502–79515. doi: 10.1109/ACCESS.2022.3194529.

- 13. Page M.J., Moher D., Bossuyt P.M., Boutron I., Hoffmann T.C., Mulrow C.D., Shamseer L., Tetzlaff J.M., Akl E.A., Brennan S.E., et al. PRISMA 2020 Explanation and Elaboration: Updated Guidance and Exemplars for Reporting Systematic Reviews. BMJ. 2021;372:n160. doi: 10.1136/bmj.n160.

- 14. Wohlin C. ACM International Conference Proceeding Series, Proceedings of the 18th International Conference on Evaluation and Assessment in Software Engineering, London, UK, 13–14 May 2014. Blekinge Institute of Technology; Karlskrona, Sweden: 2014. Guidelines for Snowballing in Systematic Literature Studies and a Replication in Software Engineering.

- 15. Hossin M., Sulaiman M.N. A Review on Evaluation Metrics for Data Classification Evaluations. Int. J. Data Min. Knowl. Manag. Process. 2015;5:1–11. doi: 10.5121/ijdkp.2015.5201.

- 16. Khalil A., Elmogy M., Ghazal M., Burns C., El-Baz A. Chronic Wound Healing Assessment System Based on Different Features Modalities and Non-Negative Matrix Factorization (NMF) Feature Reduction. IEEE Access. 2019;7:80110–80121. doi: 10.1109/ACCESS.2019.2923962.

- 17. Yogapriya J., Chandran V., Sumithra M.G., Elakkiya B., Shamila Ebenezer A., Suresh Gnana Dhas C. Automated Detection of Infection in Diabetic Foot Ulcer Images Using Convolutional Neural Network. J. Healthc. Eng. 2022;2022:2349849. doi: 10.1155/2022/2349849.

- 18. Cao C., Qiu Y., Wang Z., Ou J., Wang J., Hounye A.H., Hou M., Zhou Q., Zhang J. Nested Segmentation and Multi-Level Classification of Diabetic Foot Ulcer Based on Mask R-CNN. Multimed Tools Appl. 2022:1–20. doi: 10.1007/s11042-022-14101-6.

- 19. Kręcichwost M., Czajkowska J., Wijata A., Juszczyk J., Pyciński B., Biesok M., Rudzki M., Majewski J., Kostecki J., Pietka E. Chronic Wounds Multimodal Image Database. Comput. Med. Imaging Graph. 2021;88:101844. doi: 10.1016/j.compmedimag.2020.101844.

- 20. Yadav D.P., Sharma A., Singh M., Goyal A. Feature Extraction Based Machine Learning for Human Burn Diagnosis from Burn Images. IEEE J. Transl. Eng. Health Med. 2019;7:1800507. doi: 10.1109/JTEHM.2019.2923628.

- 21. Kendrick C., Cassidy B., Pappachan J.M., O’Shea C., Fernandez C.J., Chacko E., Jacob K., Reeves N.D., Yap M.H. Translating Clinical Delineation of Diabetic Foot Ulcers into Machine Interpretable Segmentation. arXiv. 2022. arXiv:2204.11618.

- 22. Li F., Wang C., Liu X., Peng Y., Jin S. A Composite Model of Wound Segmentation Based on Traditional Methods and Deep Neural Networks. Comput. Intell. Neurosci. 2018;2018:4149103. doi: 10.1155/2018/4149103.

- 23. Li F., Wang C., Peng Y., Yuan Y., Jin S. Wound Segmentation Network Based on Location Information Enhancement. IEEE Access. 2019;7:87223–87232. doi: 10.1109/ACCESS.2019.2925689.

- 24. Medetec Pictures of Wounds and Wound Dressings. [(accessed on 16 January 2023)]. Available online: http://www.medetec.co.uk/files/medetec-image-databases.html.

- 25. Anisuzzaman D.M., Patel Y., Niezgoda J.A., Gopalakrishnan S., Yu Z. A Mobile App for Wound Localization Using Deep Learning. IEEE Access. 2022;10:61398–61409. doi: 10.1109/ACCESS.2022.3179137.

- 26.García-Zapirain B., Elmogy M., El-Baz A., Elmaghraby A.S. Classification of Pressure Ulcer Tissues with 3D Convolutional Neural Network. Med. Biol. Eng. Comput. 2018;56:2245–2258. doi: 10.1007/s11517-018-1835-y. [DOI] [PubMed] [Google Scholar]

- 27.Scebba G., Zhang J., Catanzaro S., Mihai C., Distler O., Berli M., Karlen W. Detect-and-Segment: A Deep Learning Approach to Automate Wound Image Segmentation. Inf. Med. Unlocked. 2022;29:100884. doi: 10.1016/j.imu.2022.100884. [DOI] [Google Scholar]

- 28.Zahia S., Garcia-Zapirain B., Elmaghraby A. Integrating 3D Model Representation for an Accurate Non-Invasive Assessment of Pressure Injuries with Deep Learning. Sensors. 2020;20:2933. doi: 10.3390/s20102933. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Anisuzzaman D.M., Patel Y., Rostami B., Niezgoda J., Gopalakrishnan S., Yu Z. Multi-Modal Wound Classification Using Wound Image and Location by Deep Neural Network. Sci. Rep. 2022;12:20057. doi: 10.1038/s41598-022-21813-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Alzubaidi L., Fadhel M.A., Al-Shamma O., Zhang J., Santamaría J., Duan Y. Robust Application of New Deep Learning Tools: An Experimental Study in Medical Imaging. Multimed Tools Appl. 2022;81:13289–13317. doi: 10.1007/s11042-021-10942-9. [DOI] [Google Scholar]

- 31.Liu H., Yue K., Cheng S., Li W., Fu Z. A Framework for Automatic Burn Image Segmentation and Burn Depth Diagnosis Using Deep Learning. Comput. Math. Methods Med. 2021;2021:5514224. doi: 10.1155/2021/5514224. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Wang C., Anisuzzaman D.M., Williamson V., Dhar M.K., Rostami B., Niezgoda J., Gopalakrishnan S., Yu Z. Fully Automatic Wound Segmentation with Deep Convolutional Neural Networks. Sci. Rep. 2020;10:21897. doi: 10.1038/s41598-020-78799-w. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Rostami B., Anisuzzaman D.M., Wang C., Gopalakrishnan S., Niezgoda J., Yu Z. Multiclass Wound Image Classification Using an Ensemble Deep CNN-Based Classifier. Comput. Biol. Med. 2021;134:104536. doi: 10.1016/j.compbiomed.2021.104536. [DOI] [PubMed] [Google Scholar]

- 34.Wang C., Mahbod A., Ellinger I., Galdran A., Gopalakrishnan S., Niezgoda J., Yu Z. FUSeg: The Foot Ulcer Segmentation Challenge. arXiv. 2022 doi: 10.48550/arxiv.2201.00414. [DOI] [Google Scholar]

- 35.National Pressure Ulcer Advisory Panel Pressure Injury Photos. [(accessed on 16 January 2023)]. Available online: https://npiap.com/page/Photos.

- 36.Zahia S., Sierra-Sosa D., Garcia-Zapirain B., Elmaghraby A. Tissue Classification and Segmentation of Pressure Injuries Using Convolutional Neural Networks. Comput. Methods Programs Biomed. 2018;159:51–58. doi: 10.1016/j.cmpb.2018.02.018. [DOI] [PubMed] [Google Scholar]

- 37.Yang S., Park J., Lee H., Kim S., Lee B.U., Chung K.Y., Oh B. Sequential Change of Wound Calculated by Image Analysis Using a Color Patch Method during a Secondary Intention Healing. PLoS ONE. 2016;11:e0163092. doi: 10.1371/journal.pone.0163092. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Yap M.H., Cassidy B., Pappachan J.M., O’Shea C., Gillespie D., Reeves N.D. Analysis towards Classification of Infection and Ischaemia of Diabetic Foot Ulcers; Proceedings of the BHI 2021—2021 IEEE EMBS International Conference on Biomedical and Health Informatics, Proceedings; Athens, Greece. 27–30 July 2021; [DOI] [Google Scholar]

- 39.Cassidy B., Reeves N.D., Pappachan J.M., Gillespie D., O’Shea C., Rajbhandari S., Maiya A.G., Frank E., Boulton A.J., Armstrong D.G., et al. The DFUC 2020 Dataset: Analysis Towards Diabetic Foot Ulcer Detection. Eur. Endocrinol. 2021;1:5. doi: 10.17925/EE.2021.17.1.5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Goyal M., Reeves N.D., Rajbhandari S., Ahmad N., Wang C., Yap M.H. Recognition of Ischaemia and Infection in Diabetic Foot Ulcers: Dataset and Techniques. Comput. Biol. Med. 2020;117:103616. doi: 10.1016/j.compbiomed.2020.103616. [DOI] [PubMed] [Google Scholar]

- 41.Liu Z., John J., Agu E. Diabetic Foot Ulcer Ischemia and Infection Classification Using EfficientNet Deep Learning Models. IEEE Open J. Eng. Med. Biol. 2022;3:189–201. doi: 10.1109/OJEMB.2022.3219725. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Amin J., Sharif M., Anjum M.A., Khan H.U., Malik M.S.A., Kadry S. An Integrated Design for Classification and Localization of Diabetic Foot Ulcer Based on CNN and YOLOv2-DFU Models. IEEE Access. 2020;8:228586–228597. doi: 10.1109/ACCESS.2020.3045732. [DOI] [Google Scholar]

- 43.Al-Garaawi N., Harbi Z., Morris T. Fusion of Hand-Crafted and Deep Features for Automatic Diabetic Foot Ulcer Classification. TEM J. 2022;11:1055–1064. doi: 10.18421/TEM113-10. [DOI] [Google Scholar]

- 44.Das S.K., Roy P., Mishra A.K. Recognition of Ischaemia and Infection in Diabetic Foot Ulcer: A Deep Convolutional Neural Network Based Approach. Int. J. Imaging Syst. Technol. 2022;32:192–208. doi: 10.1002/ima.22598. [DOI] [Google Scholar]

- 45. Xu Y., Han K., Zhou Y., Wu J., Xie X., Xiang W. Classification of Diabetic Foot Ulcers Using Class Knowledge Banks. Front. Bioeng. Biotechnol. 2022;9:1531. doi: 10.3389/fbioe.2021.811028.

- 46. Al-Garaawi N., Ebsim R., Alharan A.F.H., Yap M.H. Diabetic Foot Ulcer Classification Using Mapped Binary Patterns and Convolutional Neural Networks. Comput. Biol. Med. 2022;140:105055. doi: 10.1016/j.compbiomed.2021.105055.

- 47. Alzubaidi L., Fadhel M.A., Al-Shamma O., Zhang J., Santamaría J., Duan Y., Oleiwi S.R. Towards a Better Understanding of Transfer Learning for Medical Imaging: A Case Study. Appl. Sci. 2020;10:4523. doi: 10.3390/app10134523.

- 48. Ramachandram D., Ramirez-GarciaLuna J.L., Fraser R.D.J., Martínez-Jiménez M.A., Arriaga-Caballero J.E., Allport J. Fully Automated Wound Tissue Segmentation Using Deep Learning on Mobile Devices: Cohort Study. JMIR Mhealth Uhealth. 2022;10:e36977. doi: 10.2196/36977.

- 49. Sarp S., Kuzlu M., Pipattanasomporn M., Guler O. Simultaneous Wound Border Segmentation and Tissue Classification Using a Conditional Generative Adversarial Network. J. Eng. 2021;2021:125–134. doi: 10.1049/tje2.12016.

- 50. Chang C.W., Ho C.Y., Lai F., Christian M., Huang S.C., Chang D.H., Chen Y.S. Application of Multiple Deep Learning Models for Automatic Burn Wound Assessment. Burns. 2022, in press. doi: 10.1016/j.burns.2022.07.006.

- 51. Goyal M., Reeves N.D., Davison A.K., Rajbhandari S., Spragg J., Yap M.H. DFUNet: Convolutional Neural Networks for Diabetic Foot Ulcer Classification. IEEE Trans. Emerg. Top. Comput. Intell. 2018;4:728–739. doi: 10.1109/TETCI.2018.2866254.

- 52. Abubakar A., Ugail H., Bukar A.M. Assessment of Human Skin Burns: A Deep Transfer Learning Approach. J. Med. Biol. Eng. 2020;40:321–333. doi: 10.1007/s40846-020-00520-z.

- 53. Zhao X., Liu Z., Agu E., Wagh A., Jain S., Lindsay C., Tulu B., Strong D., Kan J. Fine-Grained Diabetic Wound Depth and Granulation Tissue Amount Assessment Using Bilinear Convolutional Neural Network. IEEE Access. 2019;7:179151–179162. doi: 10.1109/ACCESS.2019.2959027.

- 54. Wang Y., Ke Z., He Z., Chen X., Zhang Y., Xie P., Li T., Zhou J., Li F., Yang C., et al. Real-Time Burn Depth Assessment Using Artificial Networks: A Large-Scale, Multicentre Study. Burns. 2020;46:1829–1838. doi: 10.1016/j.burns.2020.07.010.

- 55. Wagh A., Jain S., Mukherjee A., Agu E., Pedersen P.C., Strong D., Tulu B., Lindsay C., Liu Z. Semantic Segmentation of Smartphone Wound Images: Comparative Analysis of AHRF and CNN-Based Approaches. IEEE Access. 2020;8:181590–181604. doi: 10.1109/ACCESS.2020.3014175.

- 56. Pabitha C., Vanathi B. Densemask RCNN: A Hybrid Model for Skin Burn Image Classification and Severity Grading. Neural Process. Lett. 2021;53:319–337. doi: 10.1007/s11063-020-10387-5.

- 57. Rajathi V., Bhavani R.R., Wiselin Jiji G. Varicose Ulcer (C6) Wound Image Tissue Classification Using Multidimensional Convolutional Neural Networks. Imaging Sci. J. 2019;67:374–384. doi: 10.1080/13682199.2019.1663083.

- 58. Lien A.S.Y., Lai C.Y., Wei J.D., Yang H.M., Yeh J.T., Tai H.C. A Granulation Tissue Detection Model to Track Chronic Wound Healing in DM Foot Ulcers. Electronics. 2022;11:2617. doi: 10.3390/electronics11162617.

- 59. Blanco G., Traina A.J.M., Traina C., Azevedo-Marques P.M., Jorge A.E.S., de Oliveira D., Bedo M.V.N. A Superpixel-Driven Deep Learning Approach for the Analysis of Dermatological Wounds. Comput. Methods Programs Biomed. 2020;183:105079. doi: 10.1016/j.cmpb.2019.105079.

- 60. Chang C.W., Christian M., Chang D.H., Lai F., Liu T.J., Chen Y.S., Chen W.J. Deep Learning Approach Based on Superpixel Segmentation Assisted Labeling for Automatic Pressure Ulcer Diagnosis. PLoS ONE. 2022;17:e0264139. doi: 10.1371/journal.pone.0264139.

- 61. Khalifa N.E., Loey M., Mirjalili S. A Comprehensive Survey of Recent Trends in Deep Learning for Digital Images Augmentation. Artif. Intell. Rev. 2022;55:2351–2377. doi: 10.1007/s10462-021-10066-4.

- 62. Chauhan J., Goyal P. BPBSAM: Body Part-Specific Burn Severity Assessment Model. Burns. 2020;46:1407–1423. doi: 10.1016/j.burns.2020.03.007.

- 63. Cirillo M.D., Mirdell R., Sjöberg F., Pham T.D. Time-Independent Prediction of Burn Depth Using Deep Convolutional Neural Networks. J. Burn. Care Res. 2019;40:857–863. doi: 10.1093/jbcr/irz103.

- 64. Chauhan J., Goyal P. Convolution Neural Network for Effective Burn Region Segmentation of Color Images. Burns. 2021;47:854–862. doi: 10.1016/j.burns.2020.08.016.

- 65. Goyal M., Reeves N.D., Rajbhandari S., Yap M.H. Robust Methods for Real-Time Diabetic Foot Ulcer Detection and Localization on Mobile Devices. IEEE J. Biomed. Health Inf. 2019;23:1730–1741. doi: 10.1109/JBHI.2018.2868656.

- 66. Chino D.Y.T., Scabora L.C., Cazzolato M.T., Jorge A.E.S., Traina C., Traina A.J.M. Segmenting Skin Ulcers and Measuring the Wound Area Using Deep Convolutional Networks. Comput. Methods Programs Biomed. 2020;191:105376. doi: 10.1016/j.cmpb.2020.105376.

- 67. Karras T., Laine S., Aila T. A Style-Based Generator Architecture for Generative Adversarial Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2021;43:4217–4228. doi: 10.1109/TPAMI.2020.2970919.

- 68. Dai F., Zhang D., Su K., Xin N. Burn Images Segmentation Based on Burn-GAN. J. Burn. Care Res. 2021;42:755–762. doi: 10.1093/jbcr/iraa208.

- 69. Gatys L., Ecker A., Bethge M. A Neural Algorithm of Artistic Style. J. Vis. 2016;16:326. doi: 10.1167/16.12.326.

- 70. Zoph B., Le Q.V. Neural Architecture Search with Reinforcement Learning; Proceedings of the 5th International Conference on Learning Representations, ICLR 2017, Conference Track Proceedings; Toulon, France. 24–26 April 2017.

- 71. Amjoud A.B., Amrouch M. Convolutional Neural Networks Backbones for Object Detection; Proceedings of the 9th International Conference on Image and Signal Processing, ICISP 2020; Marrakesh, Morocco. 4–6 June 2020; Berlin/Heidelberg, Germany: Springer International Publishing; 2020. Lecture Notes in Computer Science, Volume 12119.

- 72. Howard A.G., Zhu M., Chen B., Kalenichenko D., Wang W., Weyand T., Andreetto M., Adam H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv. 2017. arXiv:1704.04861.

- 73. Szegedy C., Liu W., Jia Y., Sermanet P., Reed S., Anguelov D., Erhan D., Vanhoucke V., Rabinovich A. Going Deeper with Convolutions; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition; Boston, MA, USA. 7–12 June 2015.

- 74. Simonyan K., Zisserman A. Very Deep Convolutional Networks for Large-Scale Image Recognition; Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, Conference Track Proceedings; San Diego, CA, USA. 7–9 May 2015.

- 75. Tan M., Le Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks; Proceedings of the 36th International Conference on Machine Learning, ICML; Long Beach, CA, USA. 9–15 June 2019.

- 76. Lin T.Y., Maire M., Belongie S., Hays J., Perona P., Ramanan D., Dollár P., Zitnick C.L. Microsoft COCO: Common Objects in Context; Proceedings of the 13th European Conference on Computer Vision, ECCV 2014; Zurich, Switzerland. 6–12 September 2014; Berlin/Heidelberg, Germany: Springer International Publishing; 2014. Lecture Notes in Computer Science, Volume 8693.

- 77. He K., Zhang X., Ren S., Sun J. Deep Residual Learning for Image Recognition; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016.

- 78. Venkatesan C., Sumithra M.G., Murugappan M. NFU-Net: An Automated Framework for the Detection of Neurotrophic Foot Ulcer Using Deep Convolutional Neural Network. Neural Process. Lett. 2022;54:3705–3726. doi: 10.1007/s11063-022-10782-0.

- 79. Alzubaidi L., Fadhel M.A., Oleiwi S.R., Al-Shamma O., Zhang J. DFU_QUTNet: Diabetic Foot Ulcer Classification Using Novel Deep Convolutional Neural Network. Multimed. Tools Appl. 2020;79:15655–15677. doi: 10.1007/s11042-019-07820-w.

- 80. Das S.K., Roy P., Mishra A.K. DFU_SPNet: A Stacked Parallel Convolution Layers Based CNN to Improve Diabetic Foot Ulcer Classification. ICT Express. 2022;8:271–275. doi: 10.1016/j.icte.2021.08.022.

- 81. Das S.K., Roy P., Mishra A.K. Fusion of Handcrafted and Deep Convolutional Neural Network Features for Effective Identification of Diabetic Foot Ulcer. Concurr. Comput. 2022;34:e6690. doi: 10.1002/cpe.6690.

- 82. Petkov N. Biologically Motivated Computationally Intensive Approaches to Image Pattern Recognition. Future Gener. Comput. Syst. 1995;11:451–465. doi: 10.1016/0167-739X(95)00015-K.

- 83. Dalal N., Triggs B. Histograms of Oriented Gradients for Human Detection; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition; San Diego, CA, USA. 20–25 June 2005.

- 84. He K., Zhang X., Ren S., Sun J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification; Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV); Santiago, Chile. 7–13 December 2015; pp. 1026–1034.

- 85. Casado-García Á., Domínguez C., García-Domínguez M., Heras J., Inés A., Mata E., Pascual V. CLoDSA: A Tool for Augmentation in Classification, Localization, Detection, Semantic Segmentation and Instance Segmentation Tasks. BMC Bioinform. 2019;20:323. doi: 10.1186/s12859-019-2931-1.

- 86. Blagus R., Lusa L. SMOTE for High-Dimensional Class-Imbalanced Data. BMC Bioinform. 2013;14:106. doi: 10.1186/1471-2105-14-106.

- 87. Han A., Zhang Y., Liu Q., Dong Q., Zhao F., Shen X., Liu Y., Yan S., Zhou S. Application of Refinements on Faster-RCNN in Automatic Screening of Diabetic Foot Wagner Grades. Acta Med. Mediterr. 2020;36:661–665. doi: 10.19193/0393-6384_2020_1_104.

- 88. Ren S., He K., Girshick R., Sun J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017;39:1137–1149. doi: 10.1109/TPAMI.2016.2577031.

- 89. Ioffe S., Szegedy C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift; Proceedings of the 32nd International Conference on Machine Learning, ICML; Lille, France. 6–11 July 2015.

- 90. Redmon J., Farhadi A. YOLO9000: Better, Faster, Stronger; Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR; Honolulu, HI, USA. 21–26 July 2017.