Abstract

Theories of consciousness are often based on the assumption that a single, unified neurobiological account will explain different types of conscious awareness. However, recent findings show that even within a single modality, such as conscious visual perception, the anatomical location, timing, and information flow of neural activity related to conscious awareness vary depending on both external and internal factors. This suggests that the search for generic neural correlates of consciousness may not be fruitful. I argue that consciousness science requires a more pluralistic approach and propose a new framework: Joint Determinant Theory. This theory may be capable of accommodating different brain-circuit mechanisms for conscious contents as varied as percepts, wills, memories, emotions, and thoughts, as well as their integrated experience.

Keywords: Consciousness, Conscious Content, Visual Awareness, Conscious Perception, Prior Knowledge, Stimulus Ambiguity, States of Consciousness, State Space

A Pluralistic Approach to Consciousness

In consciousness science, it is commonly assumed that a single set of mechanisms can explain diverse phenomena of consciousness, from states of consciousness to contents of consciousness, from perception to introspection. This assumption partly stems from Crick and Koch’s seminal paper in 1990 [1]. This paper legitimized the topic of consciousness as a serious scientific discipline and launched the next thirty years of fruitful experimental research on consciousness. At the same time, it declared visual awareness to be the “favorable form of consciousness to study neurobiologically”, reasoning that “all forms of consciousness (e.g., seeing, thinking, and pain) employ, at bottom, rather similar mechanisms”. This reasoning has instilled optimism in many consciousness scientists that theories inspired by the investigation of visual awareness will automatically extend to other forms of awareness (for a historical perspective, see Box 1). However, could it be that different types of conscious awareness (e.g., perception vs. emotion) require different neurobiological accounts?

Box 1. Additional Historical Context.

While the founding fathers of modern psychology recognized that conscious awareness, or the lack thereof, is an important dimension organizing most mental faculties [105], the dominance of the behaviorist school in the mid-20th century stigmatized and smothered the study of subjectivity, including consciousness, until the revival of the discipline by Crick and Koch, as mentioned in the main text. The re-legitimization of consciousness science happened slowly over the next couple of decades (~1990–2010), driven by the efforts of a small group of psychologists and neuroscientists who were unafraid of taboos and funding/job challenges [106].

This group has grown significantly in both legitimacy and size, as reflected in the ever-increasing number of mainstream publications on consciousness research and the growing membership of the Association for the Scientific Study of Consciousness (currently with over 700 active members). However, because the consciousness field is small, the bulk of the research has centered on relatively narrow slices of consciousness-related questions, notably conscious perception and states of consciousness. Bridges with other related cognitive/systems neuroscience disciplines, such as memory, emotion, pain, and decision making, remain scant. Two factors may have contributed significantly to the lack of integration with other neuroscientific disciplines: 1) reluctance among scientists working in these other disciplines to be associated with the “C” word; 2) the difficulty of assessing subjective awareness in animal models, which have become an important component of scientific investigation on these other topics.

However, at least within human neuroscience, where subjective reports provide rich access to the content of conscious awareness, there is no reason that consciousness science should not have stronger bridges with other cognitive neuroscience disciplines. For instance, in memory research, the classification of memory systems into two types according to whether the memory content is accessible to conscious recall (explicit/declarative memory) or not (implicit/non-declarative memory) lies at the foundation of modern memory research [107–110]. However, over the last 30 years, consciousness research and memory research have largely proceeded in parallel with little crosstalk. Similarly, there is a large and vibrant field of pain research, involving both human neuroimaging and animal models. Although pain is by definition a form of conscious awareness, findings from pain research have not strongly informed theories of consciousness. Conversely, animal models of pain routinely use tail-flick responses to noxious stimuli as a pain measure without questioning whether there is any conscious pain perception at all.

Here, I advocate for an alternative approach to empirical research on consciousness, which is to fully examine the neurobiological underpinnings of each type of conscious awareness (e.g., percepts, introspection, wills, memories, emotions, and thoughts) without favoritism. This pluralistic approach will allow the field to build a stronger empirical foundation and be more integrated with other cognitive neuroscience disciplines. If, in the end, a common set of neurobiological principles emerges across diverse types of conscious awareness, then that would be wonderfully simplifying; if not, we will have gained much fundamental knowledge along the way that is important for both basic science and clinical applications.

Another key motivation for this pluralistic approach to consciousness research is that although prominent theories of consciousness focus on unifying principles, experimental work on consciousness has focused on testing specific neural correlates of consciousness (NCCs) (for definition, see [2]) favored by each theory, often assuming that NCCs of a similar nature will be found across modalities and experimental paradigms. I first argue that the search for generic (i.e., context-independent) NCCs is unlikely to be fruitful, as even within the same modality (e.g., conscious visual perception), the anatomical location, timing, and information flow pattern of neural activity related to awareness vary depending on external and internal factors. From there, zooming out, I suggest that the assumption that the mechanistic understanding obtained by studying visual awareness will automatically extrapolate to other forms of awareness may not hold, and, therefore, neurobiological investigations of consciousness will benefit from a more pluralistic approach. Last, I propose a new framework, the Joint Determinant Theory of Consciousness, that may be able to accommodate a variety of brain-circuit-level mechanisms that co-contribute to conscious awareness and its integrated phenomenology.

Current Theories and Major Debates

A recent in-depth review of prominent consciousness theories can be found in [3]. This section only provides a brief introduction for those not already familiar with this field.

Integrated Information Theory (IIT) is a formal mathematical theory postulating that the irreducible maximum of integrated information (phi) is equivalent to consciousness and its geometry equivalent to phenomenal experience (i.e., qualia) [4]. Originally inspired by observations about different states of consciousness [5], IIT’s principles have been generalized to explain the contents of consciousness [6]. A widely known weakness of IIT is that phi is computationally intractable to calculate exactly for any large system, including the brain. Thus, experiments currently testing IIT focus on its postulate that the grid-like structure in the posterior cortex is sufficient for conscious perception [7].

Similar to IIT, recurrent processing theory (RPT) predicts that posterior brain regions (especially occipitotemporal cortices) are sufficient for supporting visual awareness [8]. RPT was originally inspired by neurophysiological observations of conscious visual perception and has stayed close to its roots in delineating the theory’s explanatory scope as visual awareness.

Like RPT, the global neuronal workspace (GNW) theory [9] has focused on visual awareness in its empirical investigation. However, because it is motivated by the functionalist account suggesting that consciousness is critical for multiple cognitive functions [10], GNW makes the opposite neuroanatomical prediction from RPT, suggesting that an “ignition process” in the prefrontal cortex (PFC), in coordination with the parietal cortex, is integral to all forms of conscious awareness. A recent challenge for GNW is that a key neural signature of conscious access proposed by GNW, the P3b event-related potential (ERP), is not a reliable neural correlate of conscious perception [11–13].

Similar to GNW, higher-order theories (HOT) of consciousness predict that the PFC is essential to all forms of conscious awareness [14, 15]. However, unlike GNW or RPT, HOT does not stem from neurobiological observations but rather has deep roots in philosophy, with its original ideas postulating that a mental re-representation of an initial representation is needed for conscious awareness, without being specific about its neurobiological implementation.

Finally, the predictive processing framework was recently proposed as a general theory of brain function that may guide consciousness research, rather than as a theory of consciousness per se [16–18]. As it currently stands, how much predictive processing can be carried out unconsciously, and which attributes allow a predictive process to gain access to conscious awareness, remain unclear; more work is needed to make the theory precise.

As can be gleaned from the above short summaries, a major current point of contention is the role of the PFC in conscious awareness, with some theories postulating that it is critical (GNW and some versions of HOT) and others postulating that it is nonessential (RPT and IIT). Another point of contention is whether the neural correlate of conscious awareness happens early (<250 ms) or late (>250 ms) following stimulus onset [9, 11–13, 19]. Importantly, in these current debates, both sides embrace the unified-account assumption, assuming that the anatomical regions and temporal latency of neural activity related to conscious perception are relatively fixed. In what follows, I first review recent evidence suggesting that this is not the case and then broaden the discussion to examine other types of conscious awareness.

Conscious Visual Perception

Increasing evidence suggests that the neural mechanisms supporting visual awareness may vary depending on external and internal factors.

Stimulus Properties (External Factors)

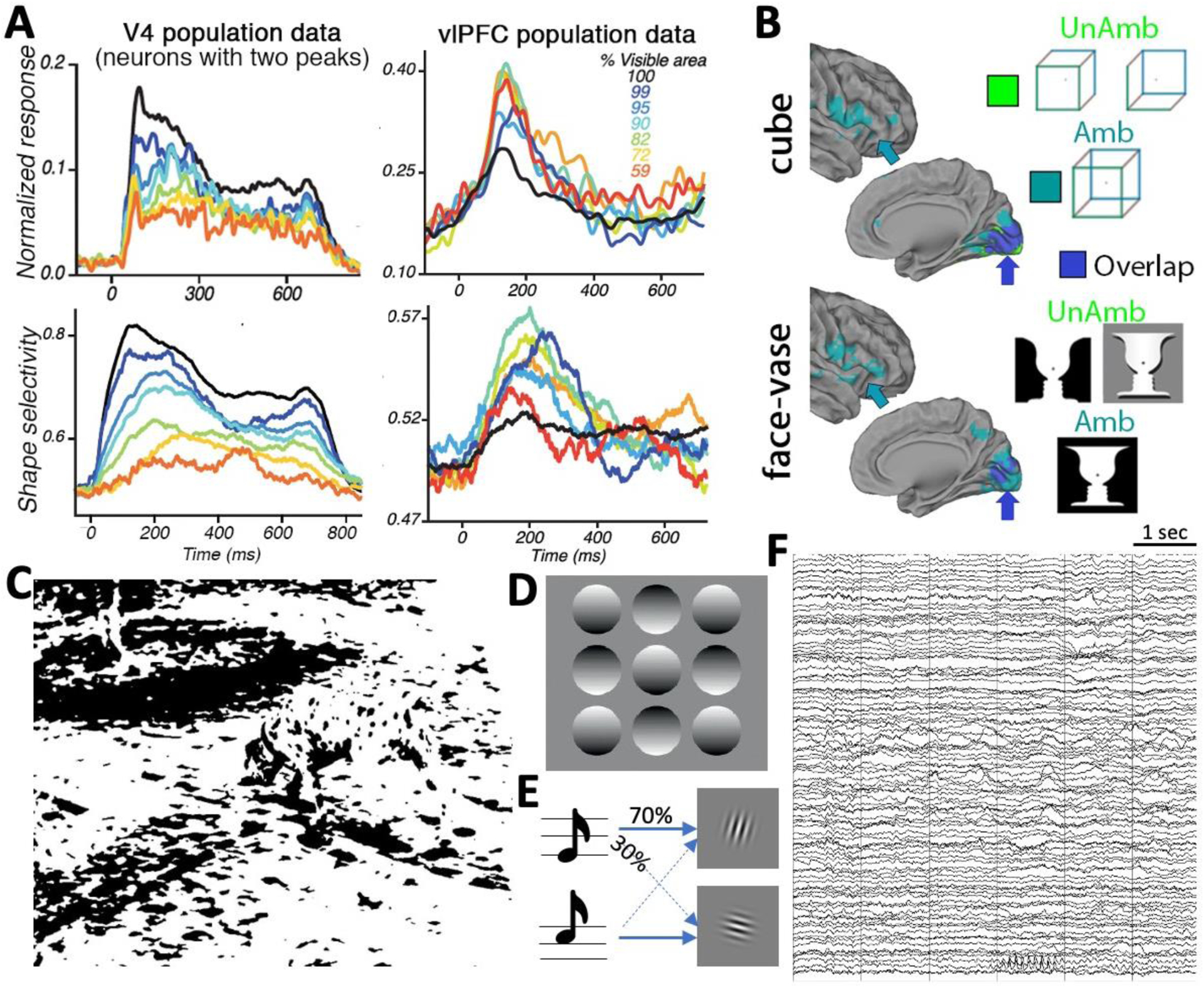

Accumulating evidence suggests that the more challenging and ambiguous a sensory input is, the more involved the PFC becomes in order to resolve conscious perception. In an fMRI study [20], the authors presented participants with ambiguous figures triggering bistable perception and their modified versions in which the ambiguity was removed to elicit stable perception. They found that perceptual content could be decoded from early visual cortex in both bistable perception and unambiguous perception, whereas it could be decoded from ventrolateral PFC (vlPFC) and orbitofrontal cortex (OFC) only during bistable perception (Figure 1A). Using visual masking and combined fMRI and magnetoencephalography (MEG), an earlier study proposed that a fast feedforward sweep via the magnocellular pathway activates the OFC at an early latency (100–150 ms), which sends a top-down template with a crude “guess” of stimulus content to the inferotemporal (IT) cortex to facilitate object recognition [21].
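What it means for perceptual content to be “decodable” from a region can be illustrated with a toy example. The sketch below is not the searchlight analysis used in [20]; it assumes synthetic multi-voxel patterns and swaps in a simple leave-one-out nearest-centroid classifier. The function names, the `signal` parameter, and all numbers are hypothetical. One simulated region carries percept-specific patterns; the other carries only noise.

```python
import random
import statistics

# Toy illustration only: synthetic data, and a nearest-centroid classifier
# standing in for whatever decoder a real study might use.

def make_trials(n_per_class, n_voxels, signal, rng):
    """Simulate multi-voxel patterns for two percepts (labels 0 and 1).

    Each percept gets a fixed mean pattern scaled by `signal`; every trial adds
    unit-variance Gaussian noise. With signal=0.0 the simulated region carries
    no information about which percept is present.
    """
    means = [[signal * rng.gauss(0.0, 1.0) for _ in range(n_voxels)] for _ in range(2)]
    trials = []
    for label in (0, 1):
        for _ in range(n_per_class):
            pattern = [m + rng.gauss(0.0, 1.0) for m in means[label]]
            trials.append((pattern, label))
    return trials


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def centroid(patterns):
    # Voxel-wise mean across trials.
    return [statistics.fmean(values) for values in zip(*patterns)]


def loo_decoding_accuracy(trials):
    """Leave-one-out cross-validated nearest-centroid decoding accuracy."""
    correct = 0
    for i, (test_pattern, test_label) in enumerate(trials):
        training = [trial for j, trial in enumerate(trials) if j != i]
        centroids = {
            label: centroid([p for p, lab in training if lab == label])
            for label in (0, 1)
        }
        predicted = min(centroids, key=lambda lab: squared_distance(test_pattern, centroids[lab]))
        correct += int(predicted == test_label)
    return correct / len(trials)


rng = random.Random(0)
informative_region = make_trials(n_per_class=20, n_voxels=30, signal=1.0, rng=rng)
uninformative_region = make_trials(n_per_class=20, n_voxels=30, signal=0.0, rng=rng)

print("informative region:", loo_decoding_accuracy(informative_region))
print("uninformative region:", loo_decoding_accuracy(uninformative_region))
```

With these settings the informative region should decode far above the 50% chance level, whereas the noise-only region should hover around chance (leave-one-out estimates on pure noise can even fall slightly below it). Holding out each trial before computing centroids is what keeps the accuracy estimate from being inflated by the test trial itself.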

Figure 1. External and internal factors influencing conscious perception and its neural correlates.

(A) Searchlight decoding of perceptual content when the image is ambiguous (teal) or unambiguous (green), with the overlap shown in dark blue. Results are similar for the Necker cube (top) and Rubin face-vase image (bottom). Dark blue arrows point to V1, teal arrows point to vlPFC. Reproduced with permission from [20]. (B) V4 and vlPFC neuronal firing rates (top) and shape selectivity (bottom) at a range of stimulus occlusion levels (line colors). Dark blue colors show low occlusion levels, and yellow-red colors show high occlusion levels. Reproduced with permission from [22]. (C) The famous Dalmatian dog picture created by Richard Gregory [38]. (D) Our “light-from-above” prior, learned from lifelong experience, confers strong depth cues to 2-D images. (E) A schematic of common paradigms used to manipulate top-down knowledge. Here, a low (high) tone predicts a higher probability of a right-leaning (left-leaning) Gabor patch occurring. (F) A six-second stretch of raw intracranial EEG data recorded from >100 channels in one patient during resting wakefulness, showing the constant waxing and waning of spontaneous brain activity at many different frequencies (e.g., alpha waves at the bottom of the graph lasting ~1 s, delta waves in the middle section of channels, and aperiodic fluctuations [102] in all channels). Data collected by B.J.H.

These findings are reinforced by recent monkey neurophysiology evidence. In one study, monkeys were presented with occluded shapes [22]. As the occlusion level increased, V4 neurons’ firing rates and shape selectivity decreased, consistent with the reduced bottom-up stimulus strength (Figure 1B, left). By contrast, firing rates and shape selectivity of vlPFC neurons exhibited an inverted-U function of occlusion level, being highest for an intermediate level of occlusion (Figure 1B, right). Importantly, vlPFC activity (peaking at 150–180 ms) preceded a second activity peak in V4, in which V4 neurons displayed stronger shape selectivity than during their first peak, raising the intriguing possibility of top-down transmission of shape-related information from vlPFC to V4. Another study showed that inactivation of vlPFC specifically disrupted object recognition for more challenging images that were typically associated with longer reaction times (RTs) [23], demonstrating the necessity of vlPFC for recognizing objects from more complex sensory input. A recent no-report study using binocular rivalry showed that vlPFC neurons track the content of conscious perception even when no task report is required [24].

Thus, converging evidence suggests that whether the PFC is involved in conscious perception might depend on the characteristics of the sensory input: if it is simple and unambiguous, the PFC might not be needed [25]; if it is complex or ambiguous, at least ventral PFC (vlPFC or OFC) appears to be recruited. Why ventral PFC? A straightforward answer is that vlPFC and OFC are both one synapse away from IT cortex [26] and contain many neurons tuned to specific stimulus properties, including spatial location, shape, color, and object category [27, 28] (e.g., there exists a frontal face patch in vlPFC [29, 30]). Hence, these areas might be ideally suited to providing a top-down perceptual template based on a fast initial analysis of the global scene to facilitate more detailed processing in ventral visual regions. Consistent with this idea, OFC lesions [31] and electrical stimulation of vlPFC [32] can cause complex visual hallucinations (although stimulation-induced after-discharges in [32] might complicate the interpretation). This general idea is also well aligned with the coarse-to-fine processing framework [33–35], which has received increasing empirical support recently [36, 37]. Incidentally, the idea that higher-order brain regions become increasingly involved in resolving conscious perception of more challenging stimuli also helps to explain why classic vision science and research on conscious visual perception have had somewhat different anatomical focuses (Box 2).

Box 2. Historical differences between conscious perception research and classic vision research.

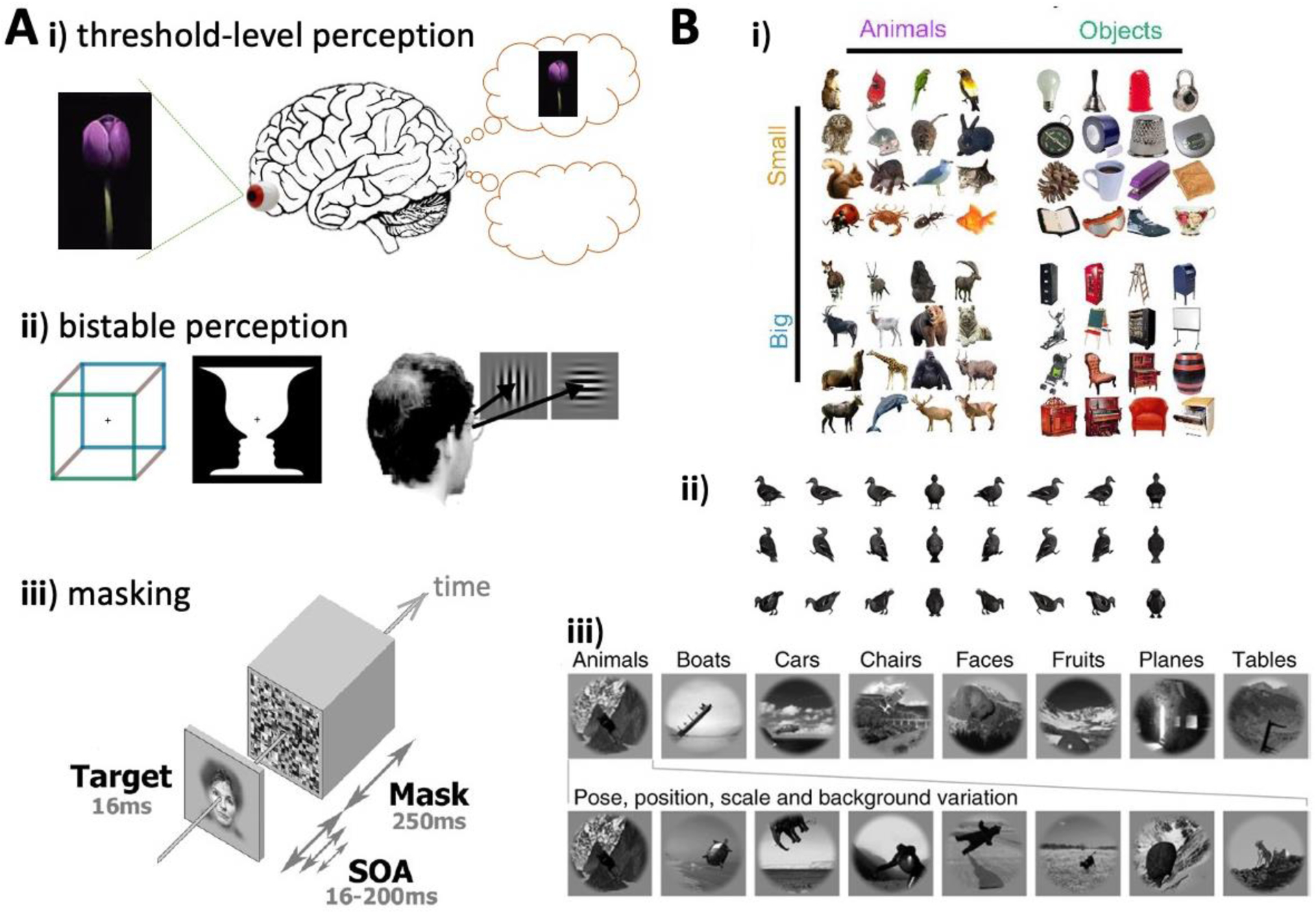

Historically, consciousness science and mainstream vision research have targeted different regions of the stimulus space. The majority of conscious visual perception work to date has employed threshold-level (low-contrast or masked) or otherwise ambiguous stimuli such that the same stimulus can trigger different perceptual outcomes at different times, in order to distinguish neural activity associated with conscious perception from that associated with unconscious processing (Figure IA) (e.g., [90, 111–115]). By contrast, mainstream vision research tends to use high-contrast, unambiguous stimuli, and focuses on identifying neural activity underlying different contents of (presumably conscious) visual perception or neural activity supporting perceptual invariance (Figure IB) (e.g., [25, 116–118]). Another major line of mainstream vision research involves using stimuli across a range of ambiguity levels (e.g., the random dot-motion task) to investigate visually-guided behavior, in a field called perceptual decision-making, without being much concerned about whether the perception guiding behavior is conscious or not [119].

Paralleling this difference in stimulus choice, mainstream vision research largely focuses on visual cortices (i.e., dorsal and ventral stream regions in occipitoparietal and occipitotemporal cortices), and PFC is discussed mainly in the context of top-down attention or task set [120, 121]. By contrast, PFC is a focal point of contention in conscious perception research and is postulated to be essential for visual awareness according to at least two major theories (GNW and HOT). The idea described in the main text (see ‘Stimulus Properties (External Factors)’) suggests that this difference in the neuroanatomical focus between vision science and consciousness science might, at least to some extent, be attributed to their different choices of stimuli and paradigms.

Figure I. Paradigms commonly used in conscious visual perception and classic vision studies.

Both fields tackle both low-level (e.g., orientation and color) and high-level (e.g., faces, objects, and scenes) perception; primarily high-level examples are given here. (A) Depicts paradigms used in conscious visual perception studies: i) Threshold-level perception, where the same stimulus, when repeatedly presented at the perceptual threshold, triggers different perceptual outcomes on different trials. ii) Bistable perception, triggered by ambiguous figures (left) or binocular rivalry (right). iii) Visual backward masking: at a fixed threshold-level stimulus-onset asynchrony (SOA) (e.g., 80 ms), the target is consciously perceived on some trials and not perceived on other trials. (B) Depicts paradigms used in classic vision studies: i) Object stimuli organized by two cardinal dimensions: animacy (top) and size (left). ii) Pose manipulation of a given object. iii) Stimulus set manipulating category (top row) and low-level properties such as pose, position, size, and background (bottom row). A-ii,iii were adapted with permission from [20, 122] and [112], respectively. B-i,ii,iii were adapted with permission from [123], [116], and [124], respectively.

Pre-existing Brain States (Internal Factors)

Pre-existing brain states, including both synaptic connectivity patterns sculpted by past experiences (latent memories) and moment-to-moment changes in brain activity (active dynamics), can powerfully influence conscious perception. Latent memories influencing conscious perception can be acquired through a one-time experience (one-shot perceptual learning, such as the first time recognizing the famous “Dalmatian Dog” picture [38], Figure 1C) or life-long experiences (such as the “light-from-above” prior conferring depth cues to 2-D images [39], Figure 1D). Active neural dynamics influencing conscious perception can be induced through top-down knowledge (e.g., about the probability of the upcoming stimulus content [40, 41], Figure 1E) or result from spontaneous brain activity fluctuations [42–46] (Figure 1F). That all these pre-existing brain states influence conscious perception is well established; here I review evidence showing that the neural signatures (in the post-stimulus period) directly related to conscious perception vary as a function of pre-existing brain states.

Inspired by the “Dalmatian Dog” picture, recent studies presented participants with degraded black-and-white images that are difficult to recognize at first, their matching original grayscale pictures, and the degraded images again [47]. This paradigm elicits a highly robust behavioral effect: recognition of the degraded images is drastically improved by seeing their matching original pictures, with the effect lasting >5 months [48, 49]. Strikingly, this effect does not depend on the hippocampus, suggesting a neocortical plasticity mechanism [48]. Following disambiguation, recognition-related RTs to degraded images are ~800 ms [50], which is much longer than the typical RTs to clear images (~300 ms). fMRI and MEG findings show that this slow RT is explained by long-range recurrent neural dynamics across multiple large-scale brain networks unfolding over 300–500 ms after stimulus onset [51–53]. Thus, when prior knowledge is required to resolve difficult sensory input, the timing of involved neural activity can be very slow due to the need for long-range recurrent activity.

A recent study using invasive EEG revealed that lifelong experiences influencing perception also recruit long-range feedback in the brain [54]. In bistable perception triggered by ambiguous images such as the Necker cube, congruency with lifelong experiences (e.g., we tend to see a cube situated on the floor rather than hanging in the air) can promote one of the alternative percepts (e.g., seeing the cube from the top). When perceiving such a view, there is increased top-down feedback activity, whereas perceiving the alternative view is accompanied by strengthened bottom-up feedforward activity. Thus, not only the latency of neural activity but also the pattern of information flow underlying conscious perception may depend on whether prior knowledge needs to be brought to bear to resolve perception (or, alternatively, whether the perceptual outcome is consistent with prior knowledge).

The above studies reveal how latent memories in the brain influence conscious perception. Regarding active neural dynamics, an early EEG study using a perceptual hysteresis paradigm showed that the availability of stimulus-relevant prior knowledge (having seen a clear letter before seeing a noisy version of it) hastened the temporal latency of the EEG response related to conscious perception from ~300 ms to ~200 ms [55]. Because this paradigm does not induce long-lasting memories, the stimulus-relevant prior knowledge was likely encoded in active neural dynamics, manifesting as specific prestimulus brain states [56].

Finally, in supra-threshold visual stimulus detection/discrimination tasks, where a physically identical stimulus is repeatedly presented across trials and always consciously perceived, prestimulus spontaneous brain activity (as measured by fMRI [57], intracranial EEG [58], and EEG oscillatory power [59]) influences the magnitude (and sometimes even the sign) of stimulus-evoked responses across widespread brain regions. Therefore, although it remains possible that a constant, invariant neural signature of conscious perception exists, current standard analysis methods have not revealed it. (For a state-space trajectory-based view that does not depend on a standard analysis pipeline involving baseline correction, see [60].)
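Why baseline correction can hide (or fail to recover) an invariant signature is easiest to see in a toy model. The sketch below is not drawn from the cited studies; it assumes a hypothetical linear interaction in which the evoked change shrinks as prestimulus activity rises, and the parameter names `amplitude` and `interaction` and their values are illustrative only.

```python
# Toy model with hypothetical parameters: does a prestimulus-dependent evoked
# response survive standard baseline correction?

def raw_poststimulus(prestim, amplitude=1.0, interaction=0.0):
    """Post-stimulus signal = ongoing (prestimulus) level + evoked change.

    interaction=0.0 is the purely additive case implicitly assumed by baseline
    correction; interaction>0.0 is a hypothetical negative interaction in which
    higher prestimulus activity yields a smaller evoked change.
    """
    evoked = amplitude - interaction * prestim
    return prestim + evoked


def baseline_corrected(prestim, **model_params):
    # Standard pipeline step: subtract the prestimulus baseline.
    return raw_poststimulus(prestim, **model_params) - prestim


prestim_levels = [-1.0, 0.0, 1.0, 2.0, 3.0]
additive = [baseline_corrected(p) for p in prestim_levels]
interacting = [baseline_corrected(p, interaction=0.5) for p in prestim_levels]

print(additive)     # [1.0, 1.0, 1.0, 1.0, 1.0]
print(interacting)  # [1.5, 1.0, 0.5, 0.0, -0.5]
```

Under the additive assumption, every trial yields the same baseline-corrected response. Under the interacting model, a physically identical stimulus yields responses that shrink and eventually change sign as prestimulus activity rises, which is the kind of magnitude and sign variability described above.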

In sum, the neural signatures underlying conscious visual perception, in terms of anatomical location, activity magnitude, timing, and information flow pattern, vary depending on both external (stimulus properties) and internal (pre-existing brain states) factors.

Beyond Visual Perception

Beyond conscious visual perception, it is even more plausible that diverse forms of conscious awareness, even within the same species or the same individual, are supported by distinct kinds of neurobiological mechanisms. In this section, I discuss these considerations and highlight several fruitful topics of investigation that have received relatively little attention within consciousness research.

First, the neurobiological mechanisms underlying visual awareness may not automatically translate to other sensory modalities. For example, olfactory awareness is supported by anatomical pathways that have a different structure as compared to the other senses: The primary olfactory cortex is the piriform cortex (part of the three-layered paleocortex) and related structures, which send inputs directly to OFC, bypassing the thalamus [61]. Electrical stimulation of the OFC elicits a conscious sense of smell [62] and lesions to the OFC can cause a complete loss of olfactory awareness [63, 64]. Thus, the debate about whether the NCC resides in the “front” or “back” of the brain [65, 66] seems reflective of a vision-centric view, and conclusions that only posterior brain regions specify the content of consciousness (e.g., [65]) cannot be correct.

Second, the limbic circuit plays a strong role in emotional awareness, and electrical stimulation of medial PFC, anterior cingulate cortex, and insular cortex can elicit emotions such as fear, joy, and sadness [62, 67]. Electrical stimulation of the posterior cingulate cortex causes distorted bodily awareness and self-dissociation [68]. The role of bodily physiological changes [69] in emotional awareness is an intriguing topic: Are our conscious emotions the result of the brain perceiving bodily changes [70] or are conscious emotions and bodily changes two parallel consequences of the same unconscious processing in the subcortical circuitry [71]?

Third, conscious volition (conscious awareness related to voluntary actions) has been an intense topic of investigation in philosophy, psychology, and neuroscience [72]. Volition has two components: conscious intention (the feeling that we act ‘as we choose’ [73]) and the sense of agency (the feeling that one is in control of one’s actions [74]). Conscious intention is generated by an interconnected brain circuit involving the dorsolateral PFC (dlPFC; ‘distal intention’), inferior parietal lobule (IPL; ‘proximal intention’), and supplementary motor area (SMA)/pre-SMA (‘release of inhibition’). Electrical stimulation of IPL or SMA/pre-SMA can alter or trigger conscious movement intention [75–78]. The sense of agency, on the other hand, appears to depend on a comparator process within the parietal lobe that compares the sensory consequences predicted by a forward model (computed from an efference copy of the motor command) with actual sensory feedback, yielding an error signal used for online movement correction [74, 79]; the larger the error, the weaker the sense of agency [80]. Therefore, the computational architecture underlying volition seems to be quite different from that underlying perception: it is hard to imagine a top-down prediction as precise as that triggered by self-initiated movements contributing to conscious perception in most daily environments.
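The comparator logic can be sketched in a few lines. This is a hedged illustration, not a model from [74, 79, 80]: the linear forward model, the exponential mapping from error to agency, the correction rule, and all function and parameter names are hypothetical assumptions chosen only to show the flow from efference copy to prediction, error, correction, and agency.

```python
import math

# Hypothetical comparator sketch: the linear forward model, the exponential
# agency mapping, and the correction rule are illustrative assumptions.

def forward_model(efference_copy, gain=1.0):
    """Predict the sensory consequence of a movement from a copy of the motor command."""
    return gain * efference_copy


def comparator(motor_command, sensory_feedback, gain=1.0):
    """Signed error between actual and predicted sensory feedback."""
    return sensory_feedback - forward_model(motor_command, gain)


def corrected_command(motor_command, sensory_feedback, rate=0.5, gain=1.0):
    # Online correction: nudge the command against the error (hypothetical update rule).
    return motor_command - rate * comparator(motor_command, sensory_feedback, gain)


def sense_of_agency(error, sensitivity=1.0):
    # Monotonic mapping: zero error gives 1.0; larger |error| gives weaker agency.
    return math.exp(-sensitivity * abs(error))


command = 1.0
for feedback in (1.0, 1.5, 3.0):
    err = comparator(command, feedback)
    print(feedback, err, round(sense_of_agency(err), 3), corrected_command(command, feedback))
```

The same error signal serves both roles named in the text: its sign drives the correction of the ongoing movement, and its magnitude scales the sense of agency down as feedback departs from the prediction.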

Finally, two important aspects of consciousness have received relatively little attention in the consciousness field: conscious memory recall and conscious thinking. A large and growing neuroscientific literature describes the coordination between hippocampus and neocortex (specifically, regions of the default-mode network) during conscious memory recall [81–83], which can be as vivid a conscious experience as perceptual awareness. Conscious thinking likely relies heavily on working memory and executive control, and, therefore, on the frontoparietal circuits supporting these functions.

Importantly, all these forms of conscious awareness also have an unconscious counterpart, allowing for the contrastive approach that consciousness researchers have fruitfully employed in studying visual awareness. For instance, emotional processing has an unconscious component [70, 84]; an unconscious accumulator process might precede conscious movement intention [85]; implicit memory influences behavior but cannot be consciously recalled [86, 87]; finally, the inner workings of our intuitive “gut feelings”, which support expert judgments [88, 89], are typically outside conscious awareness.

The Joint Determinant Theory (JDT) of Consciousness

Here, I sketch out a neurobiological framework consistent with the above view, which could serve as a starting point, an initial scaffold, for neurobiological investigations of different types of conscious awareness through a pluralistic approach. The explanatory target of JDT is human consciousness, and its ideas rest primarily on empirical findings in human subjects, where subjective reports are available. As such, although JDT could be extrapolated to other animals with similar brains, JDT remains agnostic about consciousness in animals with very different nervous systems and about machine consciousness.

JDT has the following postulates:

In neurotypical humans, consciousness is primarily generated by the cerebral cortex, with subcortical structures and the cerebellum playing a supportive role. For instance, subcortical structures could provide a generic activation to help maintain content-specific signals in the cortex [90, 91] or modulate network interactions within the cortex [92]. However, this does not prevent the subcortical system from generating rudimentary awareness when the cortex is unavailable due to agenesis or atrophy [93].

Due to the high level of recurrent connectivity within the cerebral cortex, its small-world topology [94], and the overall similar microcircuitry across the cortex [95] (notwithstanding macroscopic gradients in spine densities and neurotransmitter profiles [96]), all cortical regions are capable of contributing to conscious awareness.

Different cortical regions/networks contribute preferentially to different aspects of conscious awareness. For instance, sensory regions (in coordination with associative regions, see below) contribute to perceptual awareness; the limbic circuit contributes to bodily awareness, pain, and emotional awareness; dlPFC and posterior parietal cortex contribute to conscious working memory and conscious thinking; and the default-mode network contributes to conscious memory recall.

The specific computational mechanisms within each brain region/circuit contributing to conscious awareness might differ from each other. For instance, conscious perception is likely fastest in audition and slowest in olfaction; perception and volition likely have different computational architecture (see above); conscious thinking likely depends on a more recurrent, less hierarchical, brain network as compared to conscious perception; conscious memory recall involves a tight cortico-hippocampal coordination likely not needed for perception or volition.

The dominant activity pattern in each brain region/circuit automatically contributes to the integrated conscious experience; as a result, conscious phenomenology is multimodal by default [95]. For instance, my visual awareness of the computer screen is experienced in the context of my awareness that I’m currently full, not hungry. Similarly, perception rarely operates in isolation but is always informed by our cognition and memories (the extent to which cognition and memories influence perceptual awareness might dictate the extent of the brain networks involved, as discussed above). Thus, although an isolated visual system might in theory be capable of supporting visual awareness, that is not how it works in the normal human brain.

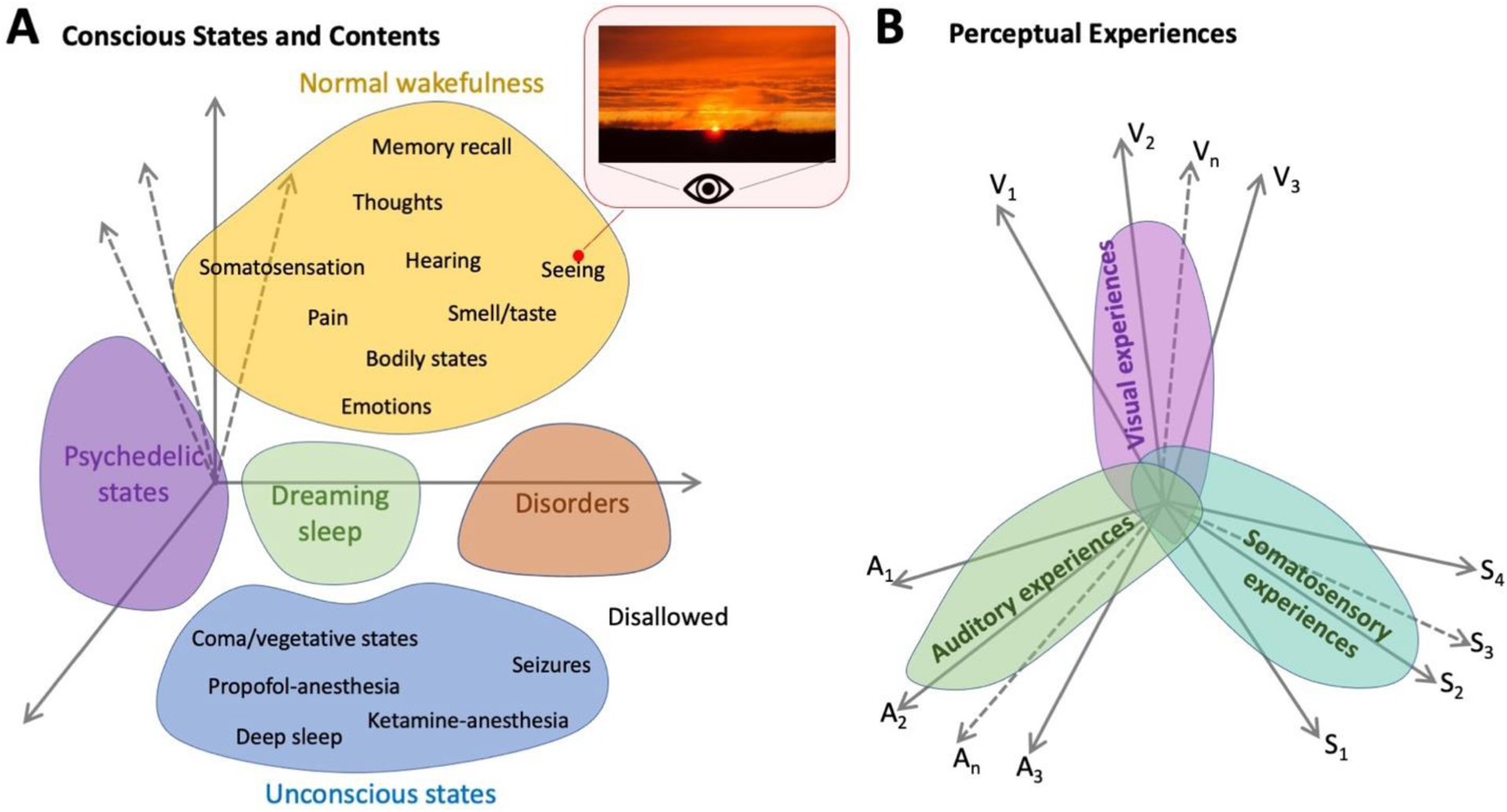

A state-space view (Figure 2, Key Figure) can account for the relationship between states of consciousness and contents of consciousness, with each content of consciousness being a point or a sub-region within a larger region of the state-space corresponding to the relevant state of consciousness. Note that this view accommodates multi-level nesting: Within the region of state-space corresponding to “normal wakefulness”, a sub-region corresponds to “seeing”, and a point within this smaller sub-region is “seeing this particular sunset”. States or contents of consciousness that are more similar in phenomenology are closer together in this state space (Figure 2A), and any point within the state space has defined values along all axes relevant to awareness, explaining the multimodal nature of conscious phenomenology (Figure 2B).
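The nesting described above can be made concrete with a toy state space. This sketch is a simplification under stated assumptions: it uses three hypothetical axes instead of a very high-dimensional neural state space, represents each region as a ball (center plus radius), and the coordinates, radii, and names such as `Region` and `labels_for` are illustrative inventions rather than part of JDT.

```python
import math
from dataclasses import dataclass

# Toy state space with three hypothetical axes; real neural state spaces are
# very high-dimensional, and regions need not be spherical.

@dataclass(frozen=True)
class Region:
    name: str
    center: tuple
    radius: float

    def contains(self, point):
        return math.dist(self.center, point) <= self.radius

    def nested_in(self, other):
        # A ball lies entirely inside another if its farthest point is within the other.
        return math.dist(self.center, other.center) + self.radius <= other.radius


wakefulness = Region("normal wakefulness", (0.0, 0.0, 0.0), 10.0)  # state of consciousness
seeing = Region("seeing", (2.0, 0.0, 0.0), 3.0)                     # content sub-region
hearing = Region("hearing", (-3.0, 2.0, 0.0), 3.0)                  # content sub-region
deep_sleep = Region("deep sleep", (20.0, 0.0, 0.0), 5.0)            # another state
regions = [wakefulness, seeing, hearing, deep_sleep]


def labels_for(point):
    """Names of all regions (state and content levels) that contain a point."""
    return [r.name for r in regions if r.contains(point)]


sunset = (2.5, 0.5, 0.0)        # "seeing this particular sunset"
other_sunset = (2.7, 0.4, 0.1)  # a phenomenologically similar visual experience
melody = (-3.0, 2.0, 1.0)       # an auditory experience

print(labels_for(sunset))   # ['normal wakefulness', 'seeing']
print(labels_for(melody))   # ['normal wakefulness', 'hearing']
print(math.dist(sunset, other_sunset) < math.dist(sunset, melody))  # True
```

A single point thus carries labels at several levels at once (a state and a content within it), and similarity in phenomenology is expressed as proximity, here measured with plain Euclidean distance as a simplifying choice.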

Figure 2 (Key Figure). A state-space view of neurobiological underpinnings of consciousness.

(A) In a state-space view of the brain (e.g., [60, 103]), each axis of the state space describes the activity of a group of neurons, and the brain at each time point occupies one position within the (very high-dimensional) state space. JDT proposes that each state of consciousness occupies a region of the state space, with different contents of consciousness occupying different subregions within the region of the relevant state of consciousness. A specific content of consciousness (e.g., seeing this particular sunset) occupies a point in the state space. States or contents of consciousness that are more similar to each other are closer together in the state space. Certain parts of the state space are disallowed by the brain’s network organization [104]. A key objective of empirical research is therefore to identify the axes that matter for each state or content of consciousness. (B) The relationship between perceptual experiences in different sensory modalities. For each modality, specific axes are most crucial in determining its phenomenology. Thus, experience in one modality can vary relatively independently of that in other modalities (e.g., we can have a great many visual experiences with the same olfactory experience). However, the axes are not completely orthogonal between modalities, so experience in one modality can influence that in another (e.g., seeing burnt toast might evoke a burnt smell even when no such smell is present); this correlation between modalities is strongest in synesthetes.
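The nesting described in panel A can be made concrete with a toy model. The sketch below is purely illustrative and is not part of JDT itself: it assumes a 3-dimensional space in place of the very high-dimensional neural state space, and it models each region as a ball (a center plus a radius), a geometric choice made only for simplicity. The class `Region`, the helper `regions_containing`, and all coordinates are hypothetical.

```python
import numpy as np


class Region:
    """Hypothetical ball-shaped region of a toy 3-D state space."""

    def __init__(self, name, center, radius):
        self.name = name
        self.center = np.asarray(center, dtype=float)
        self.radius = float(radius)

    def contains(self, point):
        # A point belongs to the region if it lies within `radius` of the center.
        return bool(np.linalg.norm(np.asarray(point, dtype=float) - self.center) <= self.radius)

    def is_nested_in(self, other):
        # One ball lies entirely inside another if the distance between centers
        # plus its own radius does not exceed the other ball's radius.
        return bool(np.linalg.norm(self.center - other.center) + self.radius <= other.radius)


def regions_containing(point, regions):
    """Names of all regions (listed coarse to fine) that contain the point."""
    return [r.name for r in regions if r.contains(point)]


# A state of consciousness, a content sub-region within it, and specific contents (points).
wakefulness = Region("normal wakefulness", center=[0.0, 0.0, 0.0], radius=5.0)
seeing = Region("seeing", center=[2.0, 0.0, 0.0], radius=1.5)

this_sunset = np.array([2.5, 0.5, 0.0])     # "seeing this particular sunset"
similar_scene = np.array([2.4, 0.7, 0.1])   # a phenomenally similar content
elsewhere = np.array([9.0, 0.0, 0.0])       # a point outside "normal wakefulness"

print(seeing.is_nested_in(wakefulness))                        # True
print(regions_containing(this_sunset, [wakefulness, seeing]))  # ['normal wakefulness', 'seeing']
print(regions_containing(elsewhere, [wakefulness, seeing]))    # []
# Similar contents lie closer together than dissimilar ones.
print(np.linalg.norm(this_sunset - similar_scene) < np.linalg.norm(this_sunset - elsewhere))  # True
```

Real neural state spaces are far higher-dimensional, and their regions need not be convex; the ball geometry is used here only because it makes membership and nesting easy to check.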

An important question is what gives conscious phenomenology its integrated, unitary impression [97]. That is, why are we not a collection of micro-consciousnesses? Under JDT, because of the small-world connectivity of the cerebral cortex, the dominant activity pattern in each brain region/circuit automatically influences other regions/circuits and thereby becomes integrated within the conscious experience. In this regard, JDT resonates with a previously expressed view that the philosophical zombie (a brain just like ours, with all its normal activity and functions, but devoid of consciousness) is impossible [98].

The above description of JDT leaves some key questions unanswered (see Outstanding Questions). Most pressing is what properties govern the “dominant activity pattern” within each brain region/circuit that contributes to awareness. This was deliberately left vague, to accommodate potentially different mechanisms in different brain circuits. (One possibility is that the dominant activity pattern contributing to awareness is the attractor accounting for aperiodic population activity, which is modulated by brain oscillations [42, 54, 99]. However, the validity of JDT does not depend on this specific prediction.)

Outstanding Questions.

How is perceptual awareness generated in different internal and external contexts?

What are the similarities and differences between neural mechanisms underlying conscious perception in different sensory modalities (e.g., visual, auditory, somatosensory, olfactory, gustatory, interoceptive), in terms of brain regions involved (beyond sensory cortex) and information processing architecture?

What are the neural underpinnings of pain awareness, and how can they be dissociated from related signals (e.g., unconscious processing of noxious stimuli, heightened arousal)? What gives pain its defining subjective quality?

What neural mechanisms are responsible for voluntary actions and the sense of agency? How is agency constructed during deliberate actions vs. non-deliberate actions?

What neural mechanisms underlie different emotions, and how much overlap is there between neural mechanisms underlying emotional awareness and perceptual awareness?

What are the differences in neural mechanisms between unconscious and conscious emotions? What roles do bodily physiological signals play in emotional processing and emotional awareness?

What are the neural mechanisms supporting conscious thinking? And how does “gut feeling” work, in which information is processed unconsciously in the brain and only the end result is presented to conscious awareness?

How is information from long-term memory recalled into awareness? What are the neural architectural differences, in terms of encoding, storage and retrieval, between conscious/declarative memory (e.g., episodic and semantic memory) and unconscious/non-declarative memory (e.g., priming and implicit statistical learning)?

What is the relationship between the hippocampus and the aware-unaware axis of memory organization? Does information within conscious awareness have privileged access to long-term memory encoding (as postulated by the global workspace theory), and, if so, what are the underlying neural mechanisms?

Relations to Previous Theories and Frameworks

IIT, RPT, GNW, and HOT are all theories of consciousness in the sense that each specifies a key property that renders a set of neural activities conscious. For IIT, this is integrated information; for RPT, recurrent processing; for GNW, ignition in the global workspace; for HOT, re-representation of a first-order representation. The first major difference between JDT and these previous theories is that JDT allows the specific form of neural activities underlying awareness to vary depending on the brain circuitry involved and the context. The second major difference is that, while all these previous theories emphasize certain cortical regions in contributing to awareness (e.g., posterior regions in IIT; frontoparietal regions in GNW; the PFC in HOT), JDT gives equal weight to different cortical regions, postulating that they contribute preferentially to different types of awareness (hence, ‘joint determinant’). As such, JDT brings conscious perception, volition, emotion, thoughts, and memory recall into the same framework.

As it currently stands, JDT lies between a theory and a framework, as it is not yet precise about what kinds of properties allow neural activity to directly underlie conscious awareness in each brain circuit. Unlike the dominant NCC framework, JDT does not emphasize “minimal sufficiency”, because any neural activity contributing to awareness does so in the context of a host of “enabling factors” [100, 101]. In addition, JDT emphasizes that contextual factors might alter the specific neural activity underlying awareness, and it de-emphasizes the distinction between states and contents of consciousness, as they are simply nested sub-regions of the state space (Figure 2A). In these regards, JDT is more closely aligned with a recently proposed philosophical framework that advocates approaching consciousness from the perspective of a highly interactive system [101].

Concluding Remarks

In this article, I argue that a mature science of consciousness requires more patience, more brick-and-mortar work that builds a stronger empirical foundation, and a pluralistic approach that prioritizes different forms of conscious awareness equally. In addition, stronger bridges between consciousness science and adjacent fields of cognitive neuroscience will pay dividends. An implication of this pluralistic view is that there may not be a “Eureka” moment that explains all of consciousness, and the work ahead may take longer than some expect [98]. However, elucidating the neurobiological underpinnings of every aspect of consciousness is a success in and of itself, with real-world implications such as helping those with hallucinations, pain, negative affect, disorders of volition, or disorganized thoughts.

Highlights.

Recent neuroscientific findings challenge the widely held assumption that similar neural mechanisms underlie different types of conscious awareness, such as seeing, feeling, knowing, and willing.

These data show that even within visual awareness, the brain regions involved, the latency of neural activity, and the information flow patterns underlying conscious perception vary depending on both external and internal factors.

Different types of conscious awareness (e.g., perception and volition) likely employ different neural processing architectures. Thus, a pluralistic approach to investigating the neurobiological underpinnings of consciousness may be more fruitful.

A new framework, the Joint Determinant Theory, is proposed; it has the potential to accommodate different brain-circuit-level mechanisms underlying different aspects of conscious awareness.

Acknowledgments

This work was supported by the U.S. National Science Foundation (CAREER Award BCS1753218 and Grant BCS1926780), National Institutes of Health (R01EY032085), Templeton World Charity Foundation (TWCF0567 and TWCF-2022-30264), and an Irma T. Hirschl Career Scientist Award (to B.J.H.).

Glossary

- Active Dynamics

Neuronal activity that can be measured by a variety of neuroimaging (e.g., fMRI) and electrophysiology (e.g., EEG, MEG, LFP, single/multi-unit activity) methodologies. In the context of this paper, pre-existing brain states manifesting as active dynamics can be triggered by cognitive influences (e.g., prior knowledge) or spontaneous neural activity fluctuations unrelated to cognition.

- Baseline Correction

The common practice of subtracting prestimulus “baseline” brain activity from post-stimulus brain activity to extract “stimulus-evoked activity” in neuroimaging and electrophysiology analyses.
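For readers less familiar with the procedure, a minimal NumPy sketch follows. It assumes epoched data stored as an array of shape (trials, channels, time samples), with stimulus onset at 0 s and a prestimulus window from -0.2 s to 0 s; both the layout and the window are illustrative assumptions, and analysis packages differ in details such as whether the window endpoints are inclusive.

```python
import numpy as np


def baseline_correct(epochs, times, window=(-0.2, 0.0)):
    """Subtract each trial's mean prestimulus activity, channel by channel.

    epochs: array of shape (n_trials, n_channels, n_times)
    times:  1-D array of sample times in seconds (stimulus onset at 0)
    window: (start, end) of the baseline period; treating it as the
            half-open interval [start, end) is an illustrative choice
    """
    times = np.asarray(times, dtype=float)
    in_window = (times >= window[0]) & (times < window[1])
    if not in_window.any():
        raise ValueError("baseline window contains no samples")
    # Mean over baseline samples, kept as a trailing axis so it broadcasts over time.
    baseline = epochs[..., in_window].mean(axis=-1, keepdims=True)
    return epochs - baseline


times = np.array([-0.2, -0.1, 0.0, 0.1, 0.2])
epochs = np.array([[[1.0, 3.0, 5.0, 7.0, 9.0]]])  # 1 trial, 1 channel
corrected = baseline_correct(epochs, times)       # corrected values: -1, 1, 3, 5, 7
```

Because the subtraction removes each trial's mean prestimulus level, any trial-to-trial variability in that level is no longer visible in the corrected data.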

- Latent Memories

Changes in synaptic connectivity between neurons due to synaptic plasticity induced by experiences. These synaptic connectivity changes are encoded in structural neural networks and are not always accompanied by measurable neuronal activity until a sensory input arrives that ‘reinstates’ (i.e., reactivates) the memory; hence the term “latent”.

- Neural correlates of consciousness (NCC)

The minimum neural mechanisms jointly sufficient for any one specific conscious experience.

- One-shot Perceptual Learning

A phenomenon in which the perceptual outcome for a given sensory input is qualitatively and robustly altered by a single, related experience. In the example given in the main text, viewing a clear picture allows instant recognition of a related degraded picture that was previously unrecognizable, and this effect lasts for many months.

- Perceptual Invariance

The ability to recognize/identify the same object or the same class of objects under different viewing conditions.

- Selectivity

How informative a neuron’s firing rate/pattern is about an aspect of stimulus input (such as shape in the main text example).
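One common way to quantify how informative a response is about a stimulus attribute is the mutual information between the two. The glossary entry does not commit to a particular measure, so the sketch below is an assumption: a plug-in (histogram) estimate, in bits, from paired discrete observations such as shape labels and spike counts. Plug-in estimates are biased upward when trials are few, so real analyses typically add a bias correction or a shuffle control. The function name and the example data are hypothetical.

```python
import math
from collections import Counter


def mutual_information_bits(stimuli, responses):
    """Plug-in estimate of I(stimulus; response) in bits from paired samples."""
    if len(stimuli) != len(responses) or not stimuli:
        raise ValueError("need equal-length, non-empty sequences")
    n = len(stimuli)
    joint = Counter(zip(stimuli, responses))
    stim_counts = Counter(stimuli)
    resp_counts = Counter(responses)
    mi = 0.0
    for (s, r), count in joint.items():
        p_sr = count / n
        # Accumulate p(s, r) * log2[p(s, r) / (p(s) * p(r))] over observed pairs.
        mi += p_sr * math.log2(p_sr / ((stim_counts[s] / n) * (resp_counts[r] / n)))
    return mi


shapes = ["circle", "circle", "square", "square"]
selective = [0, 0, 6, 6]      # hypothetical spike counts that separate the two shapes
unselective = [3, 4, 3, 4]    # hypothetical spike counts unrelated to shape
print(mutual_information_bits(shapes, selective))    # 1.0
print(mutual_information_bits(shapes, unselective))  # 0.0
```

With two equally frequent shapes, 1 bit is the maximum possible, reached when the response fully identifies the shape; 0 bits means the response carries no information about shape in this sample.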

- Trajectory

At any moment in time, brain activity from a given region or the whole brain can be conceived as a point in a high-dimensional state space, where each dimension describes the activity level of a particular neuron (or group of neurons, or a brain region). Over time, the evolution of brain activity traces a trajectory in this state space.
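Under this definition, a trajectory is simply a time-ordered sequence of points. The sketch below assumes activity stored as an array of shape (time points, units) and computes two simple descriptive quantities: the total path length of one trajectory, and the point-by-point Euclidean distance between two trajectories (for example, from two experimental conditions). These particular measures and the function names are illustrative choices, not analyses prescribed by the text.

```python
import numpy as np


def path_length(trajectory):
    """Total Euclidean length of a trajectory of shape (n_times, n_units)."""
    steps = np.diff(trajectory, axis=0)  # displacement between consecutive time points
    return float(np.linalg.norm(steps, axis=1).sum())


def pointwise_distance(traj_a, traj_b):
    """Euclidean distance between two equally sampled trajectories at each time point."""
    if traj_a.shape != traj_b.shape:
        raise ValueError("trajectories must have the same shape")
    return np.linalg.norm(traj_a - traj_b, axis=1)


# Two units, three time points: each row is one point in a 2-D state space.
condition_a = np.array([[0.0, 0.0], [3.0, 4.0], [3.0, 4.0]])
condition_b = np.zeros((3, 2))

print(path_length(condition_a))                      # 5.0
print(pointwise_distance(condition_a, condition_b))  # distances 0, 5, 5
```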

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Crick F and Koch C (1990) Some Reflections on Visual Awareness. Cold Spring Harb Symp Quant Biol 55, 953–962.

- 2. Koch C et al. (2016) Neural correlates of consciousness: progress and problems. Nat Rev Neurosci 17 (5), 307–21.

- 3. Seth AK and Bayne T (2022) Theories of consciousness. Nat Rev Neurosci 23 (7), 439–452.

- 4. Tononi G et al. (2016) Integrated information theory: from consciousness to its physical substrate. Nat Rev Neurosci 17 (7), 450–61.

- 5. Tononi G (2005) Consciousness, information integration, and the brain. Prog Brain Res 150, 109–26.

- 6. Balduzzi D and Tononi G (2009) Qualia: the geometry of integrated information. PLoS Comput Biol 5 (8), e1000462.

- 7. Melloni L et al. (2021) Making the hard problem of consciousness easier. Science 372 (6545), 911–912.

- 8. Lamme VA (2006) Towards a true neural stance on consciousness. Trends Cogn Sci 10 (11), 494–501.

- 9. Mashour GA et al. (2020) Conscious Processing and the Global Neuronal Workspace Hypothesis. Neuron 105 (5), 776–798.

- 10. Baars BJ (1988) A cognitive theory of consciousness, Cambridge University Press.

- 11. Sergent C et al. (2021) Bifurcation in brain dynamics reveals a signature of conscious processing independent of report. Nat Commun 12 (1), 1149.

- 12. Cohen MA et al. (2020) Distinguishing the Neural Correlates of Perceptual Awareness and Postperceptual Processing. J Neurosci 40 (25), 4925–4935.

- 13. Shafto JP and Pitts MA (2015) Neural Signatures of Conscious Face Perception in an Inattentional Blindness Paradigm. J Neurosci 35 (31), 10940–8.

- 14. Brown R et al. (2019) Understanding the Higher-Order Approach to Consciousness. Trends Cogn Sci 23 (9), 754–768.

- 15. Lau H et al. (2022) The mnemonic basis of subjective experience. Nat Rev Psychol 1, 479–488.

- 16. Hohwy J et al. (2008) Predictive coding explains binocular rivalry: an epistemological review. Cognition 108 (3), 687–701.

- 17. Parr T et al. (2019) Perceptual awareness and active inference. Neurosci Conscious 2019 (1), niz012.

- 18. Hohwy J and Seth A (2020) Predictive processing as a systematic basis for identifying the neural correlates of consciousness. Philosophy and the Mind Sciences 1 (II), 3.

- 19. Dembski C et al. (2021) Perceptual awareness negativity: a physiological correlate of sensory consciousness. Trends Cogn Sci 25 (8), 660–670.

- 20. Wang M et al. (2013) Brain mechanisms for simple perception and bistable perception. Proc Natl Acad Sci U S A 110 (35), E3340–E3349.

- 21. Bar M et al. (2006) Top-down facilitation of visual recognition. Proc Natl Acad Sci U S A 103 (2), 449–54.

- 22. Fyall AM et al. (2017) Dynamic representation of partially occluded objects in primate prefrontal and visual cortex. Elife 6.

- 23. Kar K and DiCarlo JJ (2021) Fast Recurrent Processing via Ventrolateral Prefrontal Cortex Is Needed by the Primate Ventral Stream for Robust Core Visual Object Recognition. Neuron 109 (1), 164–176 e5.

- 24. Kapoor V et al. (2022) Decoding internally generated transitions of conscious contents in the prefrontal cortex without subjective reports. Nat Commun 13 (1), 1535.

- 25. DiCarlo JJ et al. (2012) How does the brain solve visual object recognition? Neuron 73 (3), 415–34.

- 26. Barbas H and Mesulam MM (1985) Cortical afferent input to the principalis region of the rhesus monkey. Neuroscience 15 (3), 619–37.

- 27. Riley MR et al. (2017) Functional specialization of areas along the anterior-posterior axis of the primate prefrontal cortex. Cereb Cortex 27 (7), 3683–3697.

- 28. Haile TM et al. (2019) Visual stimulus-driven functional organization of macaque prefrontal cortex. Neuroimage 188, 427–444.

- 29. Tsao DY et al. (2008) Patches of face-selective cortex in the macaque frontal lobe. Nat Neurosci 11 (8), 877–9.

- 30. Guntupalli JS et al. (2017) Disentangling the Representation of Identity from Head View Along the Human Face Processing Pathway. Cereb Cortex 27 (1), 46–53.

- 31. Kosman KA and Silbersweig DA (2018) Pseudo-Charles Bonnet Syndrome With a Frontal Tumor: Visual Hallucinations, the Brain, and the Two-Hit Hypothesis. J Neuropsychiatry Clin Neurosci 30 (1), 84–86.

- 32. Vignal JP et al. (2000) Localised face processing by the human prefrontal cortex: stimulation-evoked hallucinations of faces. Cogn Neuropsychol 17 (1), 281–91.

- 33. Bullier J (2001) Integrated model of visual processing. Brain Res Brain Res Rev 36 (2–3), 96–107.

- 34. Campana F and Tallon-Baudry C (2013) Anchoring visual subjective experience in a neural model: the coarse vividness hypothesis. Neuropsychologia 51 (6), 1050–60.

- 35. Hochstein S and Ahissar M (2002) View from the top: hierarchies and reverse hierarchies in the visual system. Neuron 36 (5), 791–804.

- 36. Campana F et al. (2016) Conscious Vision Proceeds from Global to Local Content in Goal-Directed Tasks and Spontaneous Vision. J Neurosci 36 (19), 5200–13.

- 37. Dobs K et al. (2019) How face perception unfolds over time. Nat Commun 10 (1), 1258.

- 38. Gregory RL (1970) The Intelligent Eye, 1 edn.

- 39. Ramachandran VS (1988) Perception of shape from shading. Nature 331 (6152), 163–6.

- 40. Chalk M et al. (2010) Rapidly learned stimulus expectations alter perception of motion. J Vis 10 (8), 2.

- 41. Pinto Y et al. (2015) Expectations accelerate entry of visual stimuli into awareness. J Vis 15 (8), 13.

- 42. Baria AT et al. (2017) Initial-state-dependent, robust, transient neural dynamics encode conscious visual perception. PLoS Comput Biol 13 (11), e1005806.

- 43. Podvalny E et al. (2019) A dual role of prestimulus spontaneous neural activity in visual object recognition. Nat Commun 10 (1), 3910.

- 44. Samaha J et al. (2020) Spontaneous Brain Oscillations and Perceptual Decision-Making. Trends Cogn Sci 24 (8), 639–653.

- 45. Hesselmann G et al. (2008) Ongoing activity fluctuations in hMT+ bias the perception of coherent visual motion. J Neurosci 28 (53), 14481–5.

- 46. McCormick DA et al. (2020) Neuromodulation of Brain State and Behavior. Annu Rev Neurosci 43, 391–415.

- 47. Gorlin S et al. (2012) Imaging prior information in the brain. Proc Natl Acad Sci U S A 109 (20), 7935–40.

- 48. Squire LR et al. (2021) One-trial perceptual learning in the absence of conscious remembering and independent of the medial temporal lobe. Proc Natl Acad Sci U S A 118 (19).

- 49. Ludmer R et al. (2011) Uncovering camouflage: amygdala activation predicts long-term memory of induced perceptual insight. Neuron 69 (5), 1002–14.

- 50. Hegde J and Kersten D (2010) A link between visual disambiguation and visual memory. J Neurosci 30 (45), 15124–33.

- 51. Flounders MW et al. (2019) Neural dynamics of visual ambiguity resolution by perceptual prior. Elife 8.

- 52. Gonzalez-Garcia C et al. (2018) Content-specific activity in frontoparietal and default-mode networks during prior-guided visual perception. Elife 7.

- 53. Gonzalez-Garcia C and He BJ (2021) A Gradient of Sharpening Effects by Perceptual Prior across the Human Cortical Hierarchy. J Neurosci 41 (1), 167–178.

- 54. Hardstone R et al. (2021) Long-term priors influence visual perception through recruitment of long-range feedback. Nat Commun 12 (1), 6288.

- 55. Melloni L et al. (2011) Expectations change the signatures and timing of electrophysiological correlates of perceptual awareness. J Neurosci 31 (4), 1386–96.

- 56. Summerfield C and de Lange FP (2014) Expectation in perceptual decision making: neural and computational mechanisms. Nat Rev Neurosci 15 (11), 745–56.

- 57. He BJ (2013) Spontaneous and task-evoked brain activity negatively interact. J Neurosci 33 (11), 4672–82.

- 58. He BJ and Zempel JM (2013) Average is optimal: an inverted-U relationship between trial-to-trial brain activity and behavioral performance. PLoS Comput Biol 9 (11), e1003348.

- 59. Iemi L et al. (2019) Multiple mechanisms link prestimulus neural oscillations to sensory responses. Elife 8.

- 60. He BJ (2018) Robust, Transient Neural Dynamics during Conscious Perception. Trends Cogn Sci.

- 61. Merrick C et al. (2014) The olfactory system as the gateway to the neural correlates of consciousness. Front Psychol 4, 1011.

- 62. Fox KCR et al. (2020) Intrinsic network architecture predicts the effects elicited by intracranial electrical stimulation of the human brain. Nat Hum Behav 4 (10), 1039–1052.

- 63. Li W et al. (2010) Right orbitofrontal cortex mediates conscious olfactory perception. Psychol Sci 21 (10), 1454–63.

- 64. Cicerone KD and Tanenbaum LN (1997) Disturbance of social cognition after traumatic orbitofrontal brain injury. Arch Clin Neuropsychol 12 (2), 173–88.

- 65. Boly M et al. (2017) Are the Neural Correlates of Consciousness in the Front or in the Back of the Cerebral Cortex? Clinical and Neuroimaging Evidence. J Neurosci 37 (40), 9603–9613.

- 66. Odegaard B et al. (2017) Should a Few Null Findings Falsify Prefrontal Theories of Conscious Perception? J Neurosci 37 (40), 9593–9602.

- 67. Yih J et al. (2019) Intensity of affective experience is modulated by magnitude of intracranial electrical stimulation in human orbitofrontal, cingulate and insular cortices. Soc Cogn Affect Neurosci 14 (4), 339–351.

- 68. Parvizi J et al. (2021) Altered sense of self during seizures in the posteromedial cortex. Proc Natl Acad Sci U S A 118 (29).

- 69. Nummenmaa L et al. (2018) Maps of subjective feelings. Proc Natl Acad Sci U S A 115 (37), 9198–9203.

- 70. Damasio A (2000) The Feeling of What Happens, Mariner Books.

- 71. LeDoux J (2019) The Deep History of Ourselves, Penguin Random House.

- 72. Fried I et al. (2017) Volition and Action in the Human Brain: Processes, Pathologies, and Reasons. J Neurosci 37 (45), 10842–10847.

- 73. Desmurget M and Sirigu A (2012) Conscious motor intention emerges in the inferior parietal lobule. Curr Opin Neurobiol 22 (6), 1004–11.

- 74. Haggard P (2017) Sense of agency in the human brain. Nat Rev Neurosci 18 (4), 196–207.

- 75. Sirigu A et al. (1996) The mental representation of hand movements after parietal cortex damage. Science 273 (5281), 1564–8.

- 76. Fried I et al. (1991) Functional organization of human supplementary motor cortex studied by electrical stimulation. J Neurosci 11 (11), 3656–66.

- 77. Lau HC et al. (2007) Manipulating the experienced onset of intention after action execution. J Cogn Neurosci 19 (1), 81–90.

- 78. Douglas ZH et al. (2015) Modulating conscious movement intention by noninvasive brain stimulation and the underlying neural mechanisms. J Neurosci 35 (18), 7239–55.

- 79. Wolpert DM et al. (1998) Maintaining internal representations: the role of the human superior parietal lobe. Nat Neurosci 1 (6), 529–33.

- 80. Nahab FB et al. (2011) The neural processes underlying self-agency. Cereb Cortex 21 (1), 48–55.

- 81. Buzsaki G et al. (2022) Neurophysiology of Remembering. Annu Rev Psychol 73, 187–215.

- 82. Richter FR et al. (2016) Distinct neural mechanisms underlie the success, precision, and vividness of episodic memory. Elife 5.

- 83. Simons JS et al. (2022) Brain Mechanisms Underlying the Subjective Experience of Remembering. Annu Rev Psychol 73, 159–186.

- 84. Smith R and Lane RD (2016) Unconscious emotion: A cognitive neuroscientific perspective. Neurosci Biobehav Rev 69, 216–38.

- 85. Schurger A et al. (2012) An accumulator model for spontaneous neural activity prior to self-initiated movement. Proc Natl Acad Sci U S A 109 (42), E2904–13.

- 86. Bayley PJ et al. (2005) Robust habit learning in the absence of awareness and independent of the medial temporal lobe. Nature 436 (7050), 550–3.

- 87. Turk-Browne NB (2012) Statistical learning and its consequences. Nebr Symp Motiv 59, 117–46.

- 88. Wan X et al. (2011) The neural basis of intuitive best next-move generation in board game experts. Science 331 (6015), 341–6.

- 89. Kahneman D (2013) Thinking, Fast and Slow, Farrar, Straus and Giroux.

- 90. Levinson M et al. (2021) Cortical and subcortical signatures of conscious object recognition. Nat Commun 12 (1), 2930.

- 91. Schmitt LI et al. (2017) Thalamic amplification of cortical connectivity sustains attentional control. Nature 545 (7653), 219–223.

- 92. Shine JM (2019) Neuromodulatory Influences on Integration and Segregation in the Brain. Trends Cogn Sci 23 (7), 572–583.

- 93. Merker B (2007) Consciousness without a cerebral cortex: a challenge for neuroscience and medicine. Behav Brain Sci 30 (1), 63–81; discussion 81–134.

- 94. Bullmore E and Sporns O (2012) The economy of brain network organization. Nat Rev Neurosci 13 (5), 336–49.

- 95. Douglas RJ and Martin KA (2004) Neuronal circuits of the neocortex. Annu Rev Neurosci 27, 419–51.

- 96. Wang XJ (2020) Macroscopic gradients of synaptic excitation and inhibition in the neocortex. Nat Rev Neurosci 21 (3), 169–178.

- 97. Bayne T (2010) The Unity of Consciousness, Oxford University Press.

- 98. Seth A (2021) Being You, Penguin Random House.

- 99. Hardstone R et al. (2022) Frequency-specific neural signatures of perceptual content and perceptual stability. Elife 11.

- 100. Block N (2005) Two neural correlates of consciousness. Trends Cogn Sci 9 (2), 46–52.

- 101. Klein C et al. (2020) Explanation in the science of consciousness: From the neural correlates of consciousness (NCCs) to the difference makers of consciousness (DMCs). Philosophy and the Mind Sciences 1 (II).

- 102. He BJ (2014) Scale-free brain activity: past, present, and future. Trends Cogn Sci 18 (9), 480–7.

- 103. Stokes MG et al. (2013) Dynamic coding for cognitive control in prefrontal cortex. Neuron 78 (2), 364–75.

- 104. Luczak A et al. (2009) Spontaneous events outline the realm of possible sensory responses in neocortical populations. Neuron 62 (3), 413–25.

- 105. James W (1890) Principles of Psychology, Henry Holt & Company.

- 106. Michel M et al. (2019) Opportunities and challenges for a maturing science of consciousness. Nat Hum Behav 3 (2), 104–107.

- 107. Squire LR and Dede AJ (2015) Conscious and unconscious memory systems. Cold Spring Harb Perspect Biol 7 (3), a021667.

- 108. Squire LR et al. (2004) The medial temporal lobe. Annu Rev Neurosci 27, 279–306.

- 109. Tulving E (2002) Episodic memory: from mind to brain. Annu Rev Psychol 53, 1–25.

- 110. Tulving E and Schacter DL (1990) Priming and human memory systems. Science 247 (4940), 301–6.

- 111. Dehaene S et al. (2001) Cerebral mechanisms of word masking and unconscious repetition priming. Nat Neurosci 4 (7), 752–8.

- 112. Fisch L et al. (2009) Neural “ignition”: enhanced activation linked to perceptual awareness in human ventral stream visual cortex. Neuron 64 (4), 562–74.

- 113. Brascamp J et al. (2018) Multistable Perception and the Role of the Frontoparietal Cortex in Perceptual Inference. Annu Rev Psychol 69, 77–103.

- 114. Fahrenfort JJ et al. (2012) Neuronal integration in visual cortex elevates face category tuning to conscious face perception. Proc Natl Acad Sci U S A 109 (52), 21504–9.

- 115. Kronemer SI et al. (2022) Human visual consciousness involves large scale cortical and subcortical networks independent of task report and eye movement activity. Nat Commun 13 (1), 7342.

- 116. Bao P et al. (2020) A map of object space in primate inferotemporal cortex. Nature 583 (7814), 103–108.

- 117. Grill-Spector K and Weiner KS (2014) The functional architecture of the ventral temporal cortex and its role in categorization. Nat Rev Neurosci 15 (8), 536–48.

- 118. Kanwisher N (2010) Functional specificity in the human brain: a window into the functional architecture of the mind. Proc Natl Acad Sci U S A 107 (25), 11163–70.

- 119. Gold JI and Shadlen MN (2007) The neural basis of decision making. Annu Rev Neurosci 30, 535–74.

- 120. Desimone R and Duncan J (1995) Neural mechanisms of selective visual attention. Annu Rev Neurosci 18, 193–222.

- 121. Gilbert CD and Li W (2013) Top-down influences on visual processing. Nat Rev Neurosci 14 (5), 350–63.

- 122. Sterzer P et al. (2009) The neural bases of multistable perception. Trends Cogn Sci 13 (7), 310–8.

- 123. Konkle T and Caramazza A (2013) Tripartite organization of the ventral stream by animacy and object size. J Neurosci 33 (25), 10235–42.

- 124. Hong H et al. (2016) Explicit information for category-orthogonal object properties increases along the ventral stream. Nat Neurosci 19 (4), 613–22.