Abstract

As the world moves towards industrialization, optimization problems become more challenging to solve in a reasonable time. More than 500 new metaheuristic algorithms (MAs) have been developed to date, with over 350 of them appearing in the last decade. The literature has grown significantly in recent years and warrants a thorough review. In this study, approximately 540 MAs are tracked, and statistical information about them is provided. Due to the proliferation of MAs in recent years, substantial similarities between algorithms with different names have become widespread. This raises an essential question: can an optimization technique be called ‘novel’ if its search properties are only slightly modified from, or almost identical to, those of existing methods? Many recent MAs claim to be based on ‘novel ideas’, and these claims are discussed. Furthermore, this study categorizes MAs based on the number of control parameters, which is a new taxonomy in the field. MAs have been extensively employed in various fields as powerful optimization tools, and some of their real-world applications are presented. Several limitations and open challenges are identified, which may point to new directions for MAs in the future. Although researchers have reported many excellent results in research papers, review articles, and monographs during the last decade, many areas remain unexplored. This study will help newcomers understand some of the major domains of metaheuristics and their real-world applications. We anticipate that this resource will also be useful to our research community.

Keywords: Optimization, Metaheuristic algorithm, Nature inspired algorithm, Parameter

Introduction

The term ‘meta’ is becoming more prevalent nowadays; it generally translates to ‘beyond’ or ‘higher level’. Although there is no agreed mathematical definition, further developments of heuristic algorithms are usually referred to as MAs (Yang 2020). A heuristic algorithm is a method that produces acceptable solutions to optimization problems through trial and error. Intelligence is found not only in humans but also in animals, microorganisms, and other small creatures such as ants and bees. Nature serves as a source of inspiration for many MAs, which are referred to as nature-inspired algorithms (NIAs) (Yang 2010a). Nature performs many tasks optimally, whether it is light traveling along the path of least time, a living organ carrying out its function with the least expenditure of energy, or a bubble forming a sphere, the shape with the least surface area for a given volume. Natural selection favors optimization, that is, the most efficient way of completing a task successfully and without unnecessary effort. This simple concept can be applied to many kinds of work that we perform in our everyday lives. However, large-scale operations, such as those in businesses, national security, distribution over large areas, and the design of structures, require a concrete method or tool to ensure that resources are used properly and that outcomes are maximized, which leads to operations research (OR). During the last decade, metaheuristics have emerged as a powerful optimization tool in OR. MAs are also becoming more important in computational intelligence because they are flexible, adaptive, and have an extensive search capacity. MAs are used for NP-hard problems, fixture and manufacturing cell design, soft computing, foreign exchange trading, robotics, medical science, behavioral science, photovoltaic models, and so on, which is evidence of their importance.
As MAs are stochastic by nature, they cannot guarantee that the optimal solution will be found. The question therefore naturally arises: are they a worthwhile choice? The answer is roughly akin to ‘something is better than nothing’: when other methods fail, MAs provide a satisfactory ‘something’. In practice, they yield a satisfactory or workable solution in a reasonable amount of time. Most of the algorithms have been tested only on low-dimensional problems. They need to be tested on higher-dimensional problems and, if necessary, improved to tackle the ‘curse of dimensionality’. There is also a significant gap between theory and implementation that needs to be addressed. As exploration and exploitation are the fundamental strategies of most MAs, balancing them is another challenge. The main contributions of this study can be summarized as follows:

The article presents a recent metaheuristics survey. The data set for this study contains about 540 MAs.

This study provides a critical yet constructive analysis, addressing improper methodological practices in order to promote useful research.

A new classification of MAs is proposed based on the number of parameters.

The limitations of metaheuristics, as well as open challenges, are highlighted.

Several potential future research directions for metaheuristics have been identified.

The rest of this paper is organized as follows. A brief history is given in Sect. 2. A compilation of existing MAs and related literature is provided in Sect. 3. Some statistical data are provided in Sect. 4, and a constructive critique is offered in Sect. 5. MAs are classified into subgroups from four different points of view in Sect. 6. Sect. 7 presents some real-world applications of metaheuristics. Several limitations, including some open challenges, are addressed in Sect. 8. A brief overview of potential future directions of metaheuristics is provided in Sect. 9. Finally, conclusions are drawn in Sect. 10.

Brief history

What was the first use of (meta)heuristics? Because heuristic reasoning comes naturally to the human mind, humans may have employed it from the beginning, whether they realized it or not: the use of fire, the development of number systems, and the use of the wheel are all examples of heuristic processes at work. Modeling a practical problem mathematically for optimization is a challenging task, and optimizing the resulting model is even more challenging. To address this situation, scientists proposed several approaches that are now referred to as ‘conventional methods’. The main ones are as follows:

Direct search: random search method, univariate method, pattern search method, convex optimization, linear programming, interior-point method, quadratic programming, trust-region method, etc.

Gradient-based method: steepest descent method, conjugate gradient method, Newton–Raphson method, quasi-Newton method, etc.
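The difference between the two families of conventional methods can be illustrated with a minimal Python sketch: a pure random search (a direct search method that uses only function values) and steepest descent (a gradient-based method that requires derivatives), both applied to the smooth, convex sphere function. The test function, bounds, step size, and iteration counts are illustrative assumptions, and the function names are hypothetical.

```python
import random


def sphere(x):
    """Smooth convex test function with global minimum 0 at the origin."""
    return sum(xi * xi for xi in x)


def sphere_grad(x):
    """Analytical gradient of the sphere function."""
    return [2.0 * xi for xi in x]


def random_search(f, dim, bounds, iters, seed=0):
    """Direct search: sample points uniformly in the box and keep the best."""
    rng = random.Random(seed)
    lo, hi = bounds
    best_x = [rng.uniform(lo, hi) for _ in range(dim)]
    best_f = f(best_x)
    for _ in range(iters):
        x = [rng.uniform(lo, hi) for _ in range(dim)]
        fx = f(x)
        if fx < best_f:
            best_x, best_f = x, fx
    return best_x, best_f


def steepest_descent(f, grad, x0, step, iters):
    """Gradient-based search: repeatedly take a fixed step against the gradient."""
    x = list(x0)
    for _ in range(iters):
        g = grad(x)
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x, f(x)


if __name__ == "__main__":
    _, f_rs = random_search(sphere, dim=5, bounds=(-5.0, 5.0), iters=1000)
    _, f_sd = steepest_descent(sphere, sphere_grad, [3.0] * 5, step=0.1, iters=100)
    print(f"random search best f: {f_rs:.3e}")
    print(f"steepest descent f:   {f_sd:.3e}")
```

On a smooth convex function such as this one, gradient information lets steepest descent converge far faster than random sampling. The picture changes when the objective is discontinuous or multimodal and gradients are unavailable or misleading, which is the setting that motivates metaheuristics.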

Since most realistic optimization problems are discontinuous and highly non-linear, conventional methods often fail to deliver efficiency, robustness, and accuracy on them. Researchers therefore devised alternative approaches to tackle such problems. It is worth noting that nature has inspired us since the beginning, whether in making fire from a jungle blaze or building ships from floating wood. In general, all of these ideas are gifts of nature, directly or indirectly.

Hungarian mathematician George Pólya wrote the book ‘How to Solve It’ on this subject in 1945, in which he introduced the idea of heuristic search and described four steps for solving a problem: (a) understanding the problem, (b) devising a plan, (c) carrying out the plan, and (d) looking back (Polya 2004). The book attracted immense attention, was translated into several languages, and sold over a million copies. It is still used in mathematics education, and Pólya’s work inspired Douglas Lenat’s Automated Mathematician and Eurisko artificial intelligence programs.

Meanwhile, scientists all over the world tried to solve many practical problems. One early success was the breaking of the Enigma ciphers at Bletchley Park during World War II using heuristic algorithms; the British scientist Turing called his method ‘heuristic search’ (Hodges 2012). He was one of the designers of the bombe used in World War II. Later, in 1950, he proposed a ‘learning machine’ that would parallel the principle of evolution. Barricelli began working on computer simulations of evolution as early as 1954 at the Institute for Advanced Study, New Jersey. Although his work was not widely noticed, it is considered pioneering in artificial life research. Artificial evolution became a well-recognized optimization approach in the 1960s and early 1970s due to the work of Rechenberg and Schwefel (Schwefel 1977). Rechenberg solved many complex engineering problems through evolution strategies. Next, Fogel proposed generating artificial intelligence through simulated evolution. Decision Science Inc. was probably the first company to use evolutionary computation to solve real-world problems, in 1966. Owens and Burgin further expanded the methodology, and the Adaptive Maneuvering Logic flight simulator was initially deployed at Langley Research Center for air-to-air combat training (Burgin and Fogel 1972). Fogel and Burgin also experimented with simulations of co-evolutionary games at Decision Science. They also applied evolutionary computation to real-world problems in many ways, including modeling human operators and studying biological communication (Fogel et al. 1970). In the early 1970s, Holland formalized a breakthrough programming technique, the genetic algorithm (GA), which he summarised in his book ‘Adaptation in Natural and Artificial Systems’ (Holland 1991). During the next decade, he worked to extend the algorithm’s scope by creating a genetic code that could represent the structure of any computer program.
He also developed a framework for predicting the quality of the next generation, known as Holland’s schema theorem. Kirkpatrick et al. (1983) proposed simulated annealing (SA), a single-point-based algorithm inspired by the annealing process in metallurgy. Glover (1989) formalized the tabu search methodology for computer-based optimization. It builds on local search, which has a high probability of getting stuck in local optima, and uses a memory of recently visited solutions (the tabu list) to help escape them. Another interesting artificial life program, called boids, was developed by Reynolds (1987) to simulate the flocking behavior of birds; it has been used for information visualization and optimization tasks. Moscato et al. (1989) introduced the memetic algorithm in a technical report, inspired by Darwinian principles of natural evolution and Dawkins’ notion of a meme. The memetic algorithm extends the traditional genetic algorithm with a local search technique to reduce the likelihood of premature convergence. Another nature-inspired algorithm from the early years was developed in 1989 and later referred to as stochastic diffusion search (SDS) (Bishop and Torr 1992). Kennedy and Eberhart (1995) developed particle swarm optimization (PSO), which was originally intended for simulating social behaviour. It is one of the simplest and most widely used algorithms. Two years later, an influential and controversial result, the no free lunch (NFL) theorem for optimization, was introduced and proved by Wolpert and Macready (1997). While some researchers argue that the NFL theorem offers significant insight, others argue that it has little relevance to machine learning research. Importantly, however, the NFL theorem opens the door to further research on new domain-specific algorithms. The validity of the NFL theorem for higher dimensions is still under investigation.
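The single-point, trajectory-based nature of SA can be illustrated with a minimal Python sketch. It assumes a one-dimensional continuous problem, Gaussian neighbourhood moves, the Metropolis acceptance rule (a worse move is accepted with probability exp(-Δ/T)), and a geometric cooling schedule. The Rastrigin-type test function, the cooling rate `alpha`, the step size, and the other settings are illustrative choices, not the settings used by Kirkpatrick et al. (1983).

```python
import math
import random


def simulated_annealing(f, x0, t0=10.0, alpha=0.95, step=0.5,
                        iters_per_temp=50, t_min=1e-3, seed=1):
    """Minimize f by moving a single candidate point (trajectory-based search)."""
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best_x, best_f = x, fx
    t = t0
    while t > t_min:
        for _ in range(iters_per_temp):
            # Propose a neighbour by a small Gaussian perturbation.
            y = x + rng.gauss(0.0, step)
            fy = f(y)
            delta = fy - fx
            # Metropolis rule: always accept improvements; accept worse moves
            # with probability exp(-delta / t), which shrinks as t cools.
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                x, fx = y, fy
                if fx < best_f:
                    best_x, best_f = x, fx
        t *= alpha  # geometric cooling schedule
    return best_x, best_f


def rastrigin_1d(x):
    """Multimodal test function with global minimum 0 at x = 0."""
    return 10.0 + x * x - 10.0 * math.cos(2.0 * math.pi * x)


if __name__ == "__main__":
    x, fx = simulated_annealing(rastrigin_1d, x0=4.5)
    print(f"best x = {x:.4f}, f(x) = {fx:.4f}")
```

Accepting occasional uphill moves while the temperature is high lets the search leave the basin of its starting point; as the temperature decreases, the behaviour gradually approaches a pure local search.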
Later, several other efficient algorithms were developed, such as differential evolution (DE) by Storn and Price (1997), ant colony optimization (ACO) by Dorigo et al. (2006), artificial bee colony (ABC) by Karaboga and Basturk (2007), and others, as shown in the following section.
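To show how compact these population-based methods can be, here is a minimal Python sketch of the classic DE/rand/1/bin scheme associated with Storn and Price (1997): mutation by a scaled difference of two randomly chosen individuals, binomial crossover, and greedy one-to-one selection. The control parameter values (F = 0.5, CR = 0.9, a population of 20), the clipping of mutant values to the bounds, and the sphere test function are assumptions made for illustration.

```python
import random


def sphere(x):
    """Test function with global minimum 0 at the origin."""
    return sum(xi * xi for xi in x)


def differential_evolution(f, dim, bounds, pop_size=20, F=0.5, CR=0.9,
                           generations=200, seed=0):
    """Minimize f over a box using the DE/rand/1/bin scheme."""
    rng = random.Random(seed)
    lo, hi = bounds
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop_size)]
    fit = [f(ind) for ind in pop]
    for _ in range(generations):
        for i in range(pop_size):
            # Mutation: three distinct individuals, all different from i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            mutant = [pop[a][k] + F * (pop[b][k] - pop[c][k]) for k in range(dim)]
            # Binomial crossover; index j_rand always takes the mutant value.
            j_rand = rng.randrange(dim)
            trial = []
            for k in range(dim):
                if rng.random() < CR or k == j_rand:
                    # Assumption: clip mutant values back into the bounds.
                    trial.append(min(max(mutant[k], lo), hi))
                else:
                    trial.append(pop[i][k])
            # Greedy selection: the trial replaces its parent if not worse.
            f_trial = f(trial)
            if f_trial <= fit[i]:
                pop[i], fit[i] = trial, f_trial
    best = min(range(pop_size), key=lambda j: fit[j])
    return pop[best], fit[best]


if __name__ == "__main__":
    best_x, best_f = differential_evolution(sphere, dim=5, bounds=(-5.0, 5.0))
    print(f"best f = {best_f:.3e}")
```

Beyond the population size, this DE variant has only two control parameters, F and CR, which illustrates how much the number of control parameters can differ between MAs.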

Metaheuristics

It is difficult to summarize all existing MAs and other valuable data in a single article. In this section, we collect as many existing MAs as possible; about 540 of them are compiled here. This compilation provides the broader context needed for constructive criticism in this area and can also serve as a toolbox (Table 1).

Table 1. Metaheuristic algorithms (up to 2022)

| SN | Algorithm | References |

|---|---|---|

| 1 | Across Neighbourhood Search (ANS) | Wu (2016) |

| 2 | Adaptive Social Behavior Optimization (ASBO) | Singh (2013) |

| 3 | African Buffalo Optimization (ABO) | Odili et al. (2015) |

| 4 | African Vultures Optimization Algorithm (AVOA) | Abdollahzadeh et al. (2021a) |

| 5 | African Wild Dog Algorithm (AWDA) | Subramanian et al. (2013) |

| 6 | Algorithm of the Innovative Gunner (AIG) | Pijarski and Kacejko (2019) |

| 7 | Ali Baba and the Forty Thieves Optimization (AFT) | Braik et al. (2022b) |

| 8 | Anarchic Society Optimization (ASO) | Ahmadi-Javid (2011) |

| 9 | Andean Condor Algorithm (ACA) | Almonacid and Soto (2019) |

| 10 | Animal Behavior Hunting (ABH) | Naderi et al. (2014) |

| 11 | Animal Migration Optimization Algorithm (AMO) | Li et al. (2014) |

| 12 | Ant Colony Optimization (ACO) | Dorigo et al. (2006) |

| 13 | Ant Lion Optimizer (ALO) | Mirjalili (2015a) |

| 14 | Aphid-Ant Mutualism (AAM) | Eslami et al. (2022) |

| 15 | Aphids Optimization Algorithm (AOA) | Liu et al. (2022) |

| 16 | Archerfish Hunting Optimizer (AHO) | Zitouni et al. (2021) |

| 17 | Archery Algorithm (AA) | Zeidabadi et al. (2022) |

| 18 | Archimedes Optimization Algorithm (AOA) | Hashim et al. (2021) |

| 19 | Arithmetic Optimization Algorithm (AOA) | Abualigah et al. (2021b) |

| 20 | Artificial Algae Algorithm (AAA) | Uymaz et al. (2015) |

| 21 | Artificial Atom Algorithm (A3) | Karci (2018) |

| 22 | Artificial Bee Colony (ABC) | Karaboga and Basturk (2007) |

| 23 | Artificial Beehive Algorithm (ABA) | Munoz et al. (2009) |

| 24 | Artificial Butterfly Optimization (ABO) | Qi et al. (2017) |

| 25 | Artificial Chemical Process (ACP) | Irizarry (2004) |

| 26 | Artificial Chemical Reaction Optimization Algorithm (ACROA) | Alatas (2011) |

| 27 | Artificial Cooperative Search (ACS) | Civicioglu (2013a) |

| 28 | Artificial Coronary Circulation System (ACCS) | Kaveh and Kooshkebaghi (2019) |

| 29 | Artificial Ecosystem Algorithm (AEA) | Adham and Bentley (2014) |

| 30 | Artificial Ecosystem-based Optimization (AEO) | Zhao et al. (2020b) |

| 31 | Artificial Electric Field Algorithm (AEFA) | Yadav et al. (2019) |

| 32 | Artificial Feeding Birds Algorithm (AFB) | Lamy (2019) |

| 33 | Artificial Fish Swarm Algorithm (AFSA) | Li (2003) |

| 34 | Artificial Flora Optimization Algorithm (AF) | Cheng et al. (2018) |

| 35 | Artificial Gorilla Troops Optimizer (GTO) | Abdollahzadeh et al. (2021b) |

| 36 | Artificial Hummingbird Algorithm (AHA) | Zhao et al. (2022b) |

| 37 | Artificial Infection Disease Optimization (AIO) | Huang (2016) |

| 38 | Artificial Jellyfish Search Optimizer (AJSO) | Chou and Truong (2021) |

| 39 | Artificial Lizard Search Optimization (ALSO) | Kumar et al. (2021) |

| 40 | Artificial Photosynthesis and Phototropism Mechanism (APPM) | Cui and Cai (2011) |

| 41 | Artificial Physics Optimization (APO) | Xie et al. (2009) |

| 42 | Artificial Plants Optimization Algorithm (APO) | Zhao et al. (2011) |

| 43 | Artificial Raindrop Algorithm (ARA) | Jiang et al. (2014) |

| 44 | Artificial Reaction Algorithm (ARA) | Melin et al. (2013) |

| 45 | Artificial Searching Swarm Algorithm (ASSA) | Chen et al. (2009) |

| 46 | Artificial Showering Algorithm (ASA) | Ali et al. (2015) |

| 47 | Artificial Swarm Intelligence (ASI) | Rosenberg and Willcox (2018) |

| 48 | Artificial Tribe Algorithm (ATA) | Chen et al. (2012) |

| 49 | Asexual Reproduction Optimization (ARO) | Farasat et al. (2010) |

| 50 | Atmosphere Clouds Model Optimization (ACMO) | Gao-Wei and Zhanju (2012) |

| 51 | Atomic Orbital Search (AOS) | Azizi (2021) |

| 52 | Backtracking Search Optimization (BSO) | Civicioglu (2013b) |

| 53 | Bacterial Chemotaxis Optimization (BCO) | Muller et al. (2002) |

| 54 | Bacterial Colony Optimization (BCO) | Niu and Wang (2012) |

| 55 | Bacterial Evolutionary Algorithm (BEA) | Numaoka (1996) |

| 56 | Bacterial Foraging Optimization Algorithm (BFOA) | Das et al. (2009) |

| 57 | Bacterial Swarming Algorithm (BSA) | Tang et al. (2007) |

| 58 | Bacterial-GA Foraging (BF) | Chen et al. (2007) |

| 59 | Bald Eagle Search (BES) | Alsattar et al. (2020) |

| 60 | Bar Systems (BS) | Del Acebo and de-la Rosa (2008) |

| 61 | Bat Algorithm (BA) | Yang and He (2013) |

| 62 | Bat Inspired Algorithm (BIA) | Yang (2010b) |

| 63 | Bat Intelligence (BI) | Malakooti et al. (2012) |

| 64 | Battle Royale Optimization (BRO) | Rahkar Farshi (2021) |

| 65 | Bean Optimization Algorithm (BOA) | Zhang et al. (2010) |

| 66 | Bear Smell Search Algorithm (BSSA) | Ghasemi-Marzbali (2020) |

| 67 | Bee Colony Optimization (BCO) | Teodorovic and Dell’Orco (2005) |

| 68 | Bee Colony-Inspired Algorithm (BCIA) | Häckel and Dippold (2009) |

| 69 | Bee Swarm Optimization (BSO) | Akbari et al. (2010) |

| 70 | Bee System (BS) | Sato and Hagiwara (1998) |

| 71 | Bee System.1 (BS.1) | Lucic and Teodorovic (2002) |

| 72 | BeeHive (BH) | Wedde et al. (2004) |

| 73 | Bees Algorithm (BA) | Pham et al. (2006) |

| 74 | Bees Life Algorithm (BLA) | Bitam et al. (2018) |

| 75 | Beetle Swarm Optimization Algorithm (BSOA) | Wang and Yang (2018) |

| 76 | Beluga Whale Optimization (BWO) | Zhong et al. (2022) |

| 77 | Big Bang-Big Crunch (BBBC) | Erol and Eksin (2006) |

| 78 | Billiards-Inspired Optimization Algorithm (BOA) | Kaveh et al. (2020b) |

| 79 | Binary Slime Mould Algorithm (BSMA) | Abdel-Basset et al. (2021) |

| 80 | Binary Whale Optimization Algorithm (bWOA) | Reddy K et al. (2019) |

| 81 | Biogeography-Based Optimization (BBO) | Simon (2008) |

| 82 | Biology Migration Algorithm (BMA) | Zhang et al. (2019) |

| 83 | Bioluminescent Swarm Optimization (BSO) | de Oliveira et al. (2011) |

| 84 | Biomimicry of Social Foraging Bacteria for Distributed (BSFBD) | Passino (2002) |

| 85 | Bird Mating Optimization (BMO) | Askarzadeh (2014) |

| 86 | Bird Swarm Algorithm (BSA) | Meng et al. (2016) |

| 87 | Bison Behavior Algorithm (BBA) | Kazikova et al. (2017) |

| 88 | Black Hole Algorithm (BH.1) | Hatamlou (2013) |

| 89 | Black Hole Mechanics Optimization (BHMO) | Kaveh et al. (2020c) |

| 90 | Blind, Naked Mole-Rats Algorithm (BNMR) | Taherdangkoo et al. (2013) |

| 91 | Blue Monkey Algorithm (BM) | Mahmood and Al-Khateeb (2019) |

| 92 | Boids | Reynolds (1987) |

| 93 | Bonobo Optimizer (BO) | Das and Pratihar (2019) |

| 94 | Brain Storm Optimization (BSO) | Shi (2011) |

| 95 | Bull Optimization Algorithm (BOA) | Findik (2015) |

| 96 | Bumble Bees Mating Optimization (BBMO) | Marinakis et al. (2010) |

| 97 | Bus Transport Algorithm (BTA) | Bodaghi and Samieefar (2019) |

| 98 | Butterfly Optimization Algorithm (BOA) | Arora and Singh (2019) |

| 99 | Butterfly Optimizer (BO) | Kumar et al. (2015) |

| 100 | Buzzards Optimization Algorithm (BOA) | Arshaghi et al. (2019) |

| 101 | Camel Algorithm (CA) | Ibrahim and Ali (2016) |

| 102 | Camel Herd Algorithm (CHA) | Al-Obaidi et al. (2017) |

| 103 | Capuchin Search Algorithm (CapSA) | Braik et al. (2021) |

| 104 | Car Tracking Optimization Algorithm (CTOA) | Chen et al. (2018) |

| 105 | Cat Swarm Optimization (CSO) | Chu et al. (2006) |

| 106 | Catfish Particle Swarm Optimization (CatfishPSO) | Chuang et al. (2008) |

| 107 | Central Force Optimization (CFO) | Formato (2008) |

| 108 | Chaos Game Optimization (CGO) | Talatahari and Azizi (2021) |

| 109 | Chaos Optimization Algorithm (COA) | Jiang (1998) |

| 110 | Chaotic Dragonfly Algorithm (CDA) | Sayed et al. (2019b) |

| 111 | Charged System Search (CSS) | Kaveh and Talatahari (2010) |

| 112 | Cheetah Based Algorithm (CBA) | Klein et al. (2018) |

| 113 | Cheetah Chase Algorithm (CCA) | Goudhaman (2018) |

| 114 | Cheetah Optimizer (CO) | Akbari et al. (2022) |

| 115 | Chef-Based Optimization Algorithm (CBOA) | Trojovská and Dehghani (2022) |

| 116 | Chemical Reaction Optimization (CRO) | Alatas (2011) |

| 117 | Chicken Swarm Optimization (CSO) | Meng et al. (2014) |

| 118 | Child Drawing Development Optimization (CDDO) | Abdulhameed and Rashid (2022) |

| 119 | Chimp Optimization Algorithm (ChOA) | Khishe and Mosavi (2020) |

| 120 | Circle Search Algorithm (CSA) | Qais et al. (2022) |

| 121 | Circular Structures of Puffer Fish Algorithm (CSPF) | Catalbas and Gulten (2018) |

| 122 | Circulatory System-based Optimization (CSBO) | Ghasemi et al. (2022) |

| 123 | City Councils Evolution (CCE) | Pira (2022) |

| 124 | Clonal Selection Algorithm (CSA) | De Castro and Von Zuben (2000) |

| 125 | Cloud Model-Based Differential Evolution Algorithm (CMDE) | Zhu and Ni (2012) |

| 126 | Cockroach Swarm Optimization (CSO) | ZhaoHui and HaiYan (2010) |

| 127 | Cognitive Behavior Optimization Algorithm (COA) | Li et al. (2016b) |

| 128 | Collective Animal Behavior (CAB) | Cuevas et al. (2012a) |

| 129 | Collective Decision Optimization Algorithm (CDOA) | Zhang et al. (2017b) |

| 130 | Colliding Bodies Optimization (CBO) | Kaveh and Mahdavi (2014) |

| 131 | Color Harmony Algorithm (CHA) | Zaeimi and Ghoddosian (2020) |

| 132 | Community of Scientist Optimization (CoSO) | Milani and Santucci (2012) |

| 133 | Competitive Learning Algorithm (CLA) | Afroughinia and Kardehi M (2018) |

| 134 | Competitive Optimization Algorithm (COOA) | Sharafi et al. (2016) |

| 135 | Consultant Guide Search (CGS) | Wu and Banzhaf (2010) |

| 136 | Co-Operation of Biology Related Algorithm (COBRA) | Akhmedova and Semenkin (2013) |

| 137 | Coral Reefs Optimization (CRO) | Salcedo-Sanz et al. (2014) |

| 138 | Corona Virus Optimization (CVO) | Salehan and Deldari (2022) |

| 139 | Coronavirus Herd Immunity Optimizer (CHIO) | Al-Betar et al. (2021) |

| 140 | Coronavirus Optimization Algorithm (COVIDOA) | Khalid et al. (2022) |

| 141 | Covariance Matrix Adaptation-Evolution Strategy (CMAES) | Hansen et al. (2003) |

| 142 | Coyote Optimization Algorithm (COA) | Pierezan and Coelho (2018) |

| 143 | Cricket Algorithm (CA) | Canayaz and Karcı (2015) |

| 144 | Cricket Behaviour-Based Algorithm (CBA) | Canayaz and Karci (2016) |

| 145 | Cricket Chirping Algorithm (CCA) | Deuri and Sathya (2018) |

| 146 | Crow Search Algorithm (CSA) | Askarzadeh (2016) |

| 147 | Crystal Energy Optimization Algorithm (CEO) | Feng et al. (2016) |

| 148 | Crystal Structure Algorithm (CryStAl) | Talatahari et al. (2021b) |

| 149 | Cuckoo Optimization Algorithm (COA) | Rajabioun (2011) |

| 150 | Cuckoo Search (CS) | Yang and Deb (2009) |

| 151 | Cultural Algorithm (CA) | Jin and Reynolds (1999) |

| 152 | Cultural Coyote Optimization Algorithm (CCOA) | Pierezan et al. (2019) |

| 153 | Cuttlefish Algorithm (CA) | Eesa et al. (2013) |

| 154 | Cyclical Parthenogenesis Algorithm (CPA) | Kaveh and Zolghadr (2017) |

| 155 | Dandelion Optimizer (DO) | Zhao et al. (2022a) |

| 156 | Deer Hunting Optimization Algorithm (DHOA) | Brammya et al. (2019) |

| 157 | Dendritic Cells Algorithm (DCA) | Greensmith et al. (2005) |

| 158 | Deterministic Oscillatory Search (DOS) | Archana et al. (2017) |

| 159 | Dialectic Search (DS) | Kadioglu and Sellmann (2009) |

| 160 | Differential Evolution (DE) | Storn and Price (1997) |

| 161 | Differential Search Algorithm (DSA) | Civicioglu (2012) |

| 162 | Dolphin Echolocation (DE) | Kaveh and Farhoudi (2013) |

| 163 | Dolphin Partner Optimization (DPO) | Shiqin et al. (2009) |

| 164 | Dragonfly Algorithm (DA) | Mirjalili (2016a) |

| 165 | Driving Training-Based Optimization (DTBO) | Dehghani et al. (2022b) |

| 166 | Duelist Algorithm (DA) | Biyanto et al. (2016) |

| 167 | Dynamic Differential Annealed Optimization (DDAO) | Ghafil and Jármai (2020) |

| 168 | Dynastic Optimization Algorithm (DOA) | Wagan et al. (2020) |

| 169 | Eagle Strategy (ES) | Yang and Deb (2010) |

| 170 | Earthworm Optimization Algorithm (EOA) | Wang et al. (2018a) |

| 171 | Ebola Optimization Search Algorithm (EOSA) | Oyelade and Ezugwu (2021) |

| 172 | Ecogeography-Based Optimization (EBO) | Zheng et al. (2014) |

| 173 | Eco-inspired Evolutionary Algorithm (EEA) | Parpinelli and Lopes (2011) |

| 174 | Egyptian Vulture Optimization (EV) | Sur et al. (2013) |

| 175 | Election-Based Optimization Algorithm (EBOA) | Trojovský and Dehghani (2022a) |

| 176 | Electromagnetic Field Optimization (EFO) | Abedinpourshotorban et al. (2016) |

| 177 | Electro-Magnetism Optimization (EMO) | Cuevas et al. (2012b) |

| 178 | Electromagnetism-Like Mechanism Optimization (EMO) | Birbil and Fang (2003) |

| 179 | Electron Radar Search Algorithm (ERSA) | Rahmanzadeh and Pishvaee (2020) |

| 180 | Elephant Clan Optimization (ECO) | Jafari et al. (2021) |

| 181 | Elephant Herding Optimization (EHO) | Wang et al. (2015) |

| 182 | Elephant Search Algorithm (ESA) | Deb et al. (2015) |

| 183 | Elephant Swarm Water Search Algorithm (ESWSA) | Mandal (2018) |

| 184 | Emperor Penguin Optimizer (EPO) | Dhiman and Kumar (2018) |

| 185 | Emperor Penguins Colony (EPC) | Harifi et al. (2019) |

| 186 | Escaping Bird Search (EBS) | Shahrouzi and Kaveh (2022) |

| 187 | Eurasian Oystercatcher Optimiser (EOO) | Salim et al. (2022) |

| 188 | Evolution Strategies (ES) | Beyer and Schwefel (2002) |

| 189 | Exchange Market Algorithm (EMA) | Ghorbani et al. (2017) |

| 190 | Extremal Optimization (EO) | Boettcher and Percus (1999) |

| 191 | Farmland Fertility Algorithm (FFA) | Shayanfar and Gharehchopogh (2018) |

| 192 | Fast Bacterial Swarming Algorithm (FBSA) | Chu et al. (2008) |

| 193 | Fertilization Optimization Algorithm (FOA) | Ghafil et al. (2022) |

| 194 | Fibonacci Indicator Algorithm (FIA) | Etminaniesfahani et al. (2018) |

| 195 | FIFA World Cup Competitions (FIFA) | Razmjooy et al. (2016) |

| 196 | Find-Fix-Finish-Exploit-Analyze Algorithm (F3EA) | Kashan et al. (2019) |

| 197 | Fire Hawk Optimizer (FHO) | Azizi et al. (2022) |

| 198 | Firefly Algorithm (FA) | Yang (2009) |

| 199 | Fireworks Algorithm (FA) | Tan and Zhu (2010) |

| 200 | Fireworks Optimization Algorithm (FOA) | Ehsaeyan and Zolghadrasli (2022) |

| 201 | Fish School Search (FSS) | Bastos Filho et al. (2008) |

| 202 | Fish Swarm Algorithm (FSA) | Tsai and Lin (2011) |

| 203 | Fitness Dependent Optimizer (FDO) | Abdullah and Ahmed (2019) |

| 204 | Flock by Leader (FL) | Bellaachia and Bari (2012) |

| 205 | Flocking Based Algorithm (FA) | Cui et al. (2006) |

| 206 | Flow Direction Algorithm (FDA) | Karami et al. (2021) |

| 207 | Flow Regime Algorithm (FRA) | Tahani and Babayan (2019) |

| 208 | Flower Pollination Algorithm (FPA) | Yang (2012) |

| 209 | Flying Elephant Algorithm (FEA) | Xavier and Xavier (2016) |

| 210 | Football Game Algorithm (FGA) | Fadakar and Ebrahimi (2016) |

| 211 | Forensic Based Investigation (FBI) | Chou and Nguyen (2020) |

| 212 | Forest Optimization Algorithm (FOA) | Ghaemi and Feizi-Derakhshi (2014) |

| 213 | Fox Optimizer (FOX) | Mohammed and Rashid (2022) |

| 214 | Fractal-Based Algorithm (FA) | Kaedi (2017) |

| 215 | Frog Call Inspired Algorithm (FCA) | Mutazono et al. (2009) |

| 216 | Fruit Fly Optimization Algorithm (FOA) | Pan (2012) |

| 217 | Gaining Sharing Knowledge Based Algorithm (GSK) | Mohamed et al. (2020) |

| 218 | Galactic Swarm Optimization (GSO) | Muthiah-Nakarajan and Noel (2016) |

| 219 | Galaxy Based Search Algorithm (GBS) | Shah-Hosseini (2011) |

| 220 | Gannet Optimization Algorithm (GOA) | Pan et al. (2022) |

| 221 | Gases Brownian Motion Optimization (GBMO) | Abdechiri et al. (2013) |

| 222 | Gene Expression (GE) | Ferreira (2002) |

| 223 | Genetic Algorithm (GA) | Holland (1991) |

| 224 | Genetic Programming (GP) | Koza et al. (1994) |

| 225 | Geometric Octal Zones Distance Estimation Algorithm (GOZDE) | Kuyu and Vatansever (2022) |

| 226 | Giza Pyramids Construction Algorithm (GPC) | Harifi et al. (2020) |

| 227 | Global Neighborhood Algorithm (GNA) | Alazzam and Lewis (2013) |

| 228 | Glowworm Swarm Optimization (GSO) | Zhou et al. (2014) |

| 229 | Golden Ball Algorithm (GB) | Osaba et al. (2014) |

| 230 | Golden Eagle Optimizer (GEO) | Mohammadi-Balani et al. (2021) |

| 231 | Golden Jackal Optimization (GJO) | Chopra and Ansari (2022) |

| 232 | Golden Search Optimization Algorithm (GSO) | Noroozi et al. (2022) |

| 233 | Golden Sine Algorithm (Gold-SA) | Tanyildizi and Demir (2017) |

| 234 | Good Lattice Swarm Optimization (GLSO) | Su et al. (2007) |

| 235 | Goose Team Optimizer (GTO) | Wang and Wang (2008) |

| 236 | Gradient Evolution Algorithm (GE) | Kuo and Zulvia (2015) |

| 237 | Gradient-Based Optimizer (GBO) | Ahmadianfar et al. (2020) |

| 238 | Grasshopper Optimization Algorithm (GOA) | Saremi et al. (2017) |

| 239 | Gravitational Clustering Algorithm (GCA) | Kundu (1999) |

| 240 | Gravitational Emulation Local Search (GELS) | Barzegar et al. (2009) |

| 241 | Gravitational Field Algorithm (GFA) | Zheng et al. (2010) |

| 242 | Gravitational Interactions Optimization (GIO) | Flores et al. (2011) |

| 243 | Gravitational Search Algorithm (GSA) | Rashedi et al. (2009) |

| 244 | Great Deluge Algorithm (GDA) | Dueck (1993) |

| 245 | Greedy Politics Optimization (GPO) | Melvix (2014) |

| 246 | Grenade Explosion Method (GEM) | Ahrari and Atai (2010) |

| 247 | Grey Wolf Optimizer (GWO) | Mirjalili et al. (2014) |

| 248 | Group Counseling Optimization (GCO) | Eita and Fahmy (2014) |

| 249 | Group Escape Behavior (GEB) | Min and Wang (2011) |

| 250 | Group Leaders Optimization Algorithm (GLOA) | Daskin and Kais (2011) |

| 251 | Group Mean-Based Optimizer (GMBO) | Dehghani et al. (2021) |

| 252 | Group Search Optimizer (GSO) | He et al. (2009) |

| 253 | Group Teaching Optimization Algorithm (GTOA) | Zhang and Jin (2020) |

| 254 | Harmony Element Algorithm (HEA) | Cui et al. (2008) |

| 255 | Harmony Search (HS) | Lee and Geem (2005) |

| 256 | Harris Hawks Optimizer (HHO) | Heidari et al. (2019) |

| 257 | Heart Optimization (HO) | Hatamlou (2014) |

| 258 | Heat Transfer Optimization Algorithm (HTOA) | Asef et al. (2021) |

| 259 | Heat Transfer Search Algorithm (HTS) | Patel and Savsani (2015) |

| 260 | Henry Gas Solubility Optimization (HGSO) | Hashim et al. (2019) |

| 261 | Hierarchical Swarm Model (HSM) | Chen et al. (2010) |

| 262 | Honey Badger Algorithm (HBA) | Hashim et al. (2022) |

| 263 | Honeybee Social Foraging (HSF) | Quijano and Passino (2007) |

| 264 | Honeybees Mating Optimization Algorithm (HMOA) | Haddad et al. (2006) |

| 265 | Hoopoe Heuristic (HH) | El-Dosuky et al. (2012) |

| 266 | Human Evolutionary Model (HEM) | Montiel et al. (2007) |

| 267 | Human Felicity Algorithm (HFA) | Veysari et al. (2022) |

| 268 | Human Group Formation (HGF) | Thammano and Moolwong (2010) |

| 269 | Human Mental Search (HMS) | Mousavirad and Ebrahimpour (2017) |

| 270 | Human-Inspired Algorithm (HIA) | Zhang et al. (2009) |

| 271 | Hunting Search (HuS) | Oftadeh et al. (2010) |

| 272 | Hurricane Based Optimization Algorithm (HOA) | Rbouh and El Imrani (2014) |

| 273 | Hydrological Cycle Algorithm (HCA) | Wedyan et al. (2017) |

| 274 | Hysteresis for Optimization (HO) | Zarand et al. (2002) |

| 275 | Ideology Algorithm (IA) | Huan et al. (2017) |

| 276 | Imperialist Competitive Algorithm (ICA) | Atashpaz-Gargari and Lucas (2007) |

| 277 | Improved Genetic Immune Algorithm (IGIA) | Tayeb et al. (2017) |

| 278 | Integrated Radiation Optimization (IRO) | Chuang and Jiang (2007) |

| 279 | Intelligent Ice Fishing Algorithm (IIFA) | Karpenko and Kuzmina (2021) |

| 280 | Intelligent Water Drop Algorithm (IWD) | Shah-Hosseini (2009) |

| 281 | Interactive Autodidactic School Algorithm (IAS) | Jahangiri et al. (2020) |

| 282 | Interior Search Algorithm (ISA) | Gandomi (2014) |

| 283 | Invasive Tumor Growth Optimization (ITGO) | Tang et al. (2015) |

| 284 | Invasive Weed Optimization Algorithm (IWO) | Karimkashi and Kishk (2010) |

| 285 | Ions Motion Optimization (IMO) | Javidy et al. (2015) |

| 286 | Jaguar Algorithm (JA) | Chen et al. (2015) |

| 287 | Japanese Tree Frogs Calling Algorithm (JTFCA) | Hernández and Blum (2012) |

| 288 | Jaya Algorithm (JA) | Rao (2016) |

| 289 | Kaizen Programming (KP) | De Melo (2014) |

| 290 | Kernel Search Optimization (KSO) | Dong and Wang (2020) |

| 291 | Keshtel Algorithm (KA) | Hajiaghaei and Aminnayeri (2014) |

| 292 | Killer Whale Algorithm (KWA) | Biyanto et al. (2017) |

| 293 | Kinetic Gas Molecules Optimization (KGMO) | Moein and Logeswaran (2014) |

| 294 | Komodo Mlipir Algorithm (KMA) | Suyanto et al. (2021) |

| 295 | Krill Herd (KH) | Gandomi and Alavi (2012) |

| 296 | Lambda Algorithm (LA) | Cui et al. (2010) |

| 297 | Laying Chicken Algorithm (LCA) | Hosseini (2017) |

| 298 | Leaders and Followers Algorithm (LFA) | Gonzalez-Fernandez and Chen (2015) |

| 299 | League Championship Algorithm (LCA) | Kashan (2014) |

| 300 | Lévy Flight Distribution (LFD) | Houssein et al. (2020) |

| 301 | Light Ray Optimization (LRO) | Shen and Li (2010) |

| 302 | Lightning Attachment Procedure Optimization (LAPO) | Nematollahi et al. (2017) |

| 303 | Lightning Search Algorithm (LSA) | Shareef et al. (2015) |

| 304 | Linear Prediction Evolution Algorithm (LPE) | Gao et al. (2021a) |

| 305 | Lion Algorithm (LA) | Rajakumar (2012) |

| 306 | Lion Optimization Algorithm (LOA) | Yazdani and Jolai (2016) |

| 307 | Locust Search (LS) | Cuevas et al. (2015) |

| 308 | Locust Swarm Optimization (LSO) | Chen (2009) |

| 309 | Ludo Game-Based Swarm Intelligence Algorithm (LGSI) | Singh et al. (2019) |

| 310 | Magnetic Charged System Search (MCSS) | Kaveh et al. (2013) |

| 311 | Magnetic Optimization Algorithm (MOA) | Tayarani-N and Akbarzadeh-T (2008) |

| 312 | Magnetotactic Bacteria Optimization Algorithm (MBOA) | Mo and Xu (2013) |

| 313 | Marine Predator Algorithm (MPA) | Faramarzi et al. (2020) |

| 314 | Marriage in Honey Bees Optimization (MHBO) | Abbass (2001) |

| 315 | Material Generation Algorithm (MGA) | Talatahari et al. (2021a) |

| 316 | Mean Euclidian Distance Threshold (MEDT) | Kaveh et al. (2022) |

| 317 | Meerkats Inspired Algorithm (MIA) | Klein and dos Santos Coelho (2018) |

| 318 | Melody Search (MS) | Ashrafi and Dariane (2011) |

| 319 | Membrane Algorithm (MA) | Nishida (2006) |

| 320 | Memetic Algorithm (MA) | Moscato et al. (1989) |

| 321 | Method of Musical Composition (MMC) | Mora-Gutiérrez et al. (2014) |

| 322 | Migrating Birds Optimization (MBO) | Duman et al. (2012) |

| 323 | Mine Blast Algorithm (MBA) | Sadollah et al. (2013) |

| 324 | Multi-Objective Evolutionary Algorithm Based on Decomposition (MOEA/D) | Zhang and Li (2007) |

| 325 | Momentum Search Algorithm (MSA) | Dehghani and Samet (2020) |

| 326 | Monarch Butterfly Optimization (MBO) | Feng et al. (2017) |

| 327 | Monkey Search (MS) | Mucherino and Seref (2007) |

| 328 | Mosquito Flying Optimization (MFO) | Alauddin (2016) |

| 329 | Moth Flame Optimization Algorithm (MFO) | Mirjalili (2015b) |

| 330 | Moth Search Algorithm (MSA) | Wang (2018) |

| 331 | Mouth Breeding Fish Algorithm (MBF) | Jahani and Chizari (2018) |

| 332 | Mox Optimization Algorithm (MOX) | Arif et al. (2011) |

| 333 | Multi-Objective Beetle Antennae Search (MOBAS) | Zhang et al. (2021) |

| 334 | Multi-Objective Trader algorithm (MOTR) | Masoudi-Sobhanzadeh et al. (2021) |

| 335 | Multi-Particle Collision Algorithm (M-PCA) | da Luz et al. (2008) |

| 336 | Multivariable Grey Prediction Evolution Algorithm (MGPEA) | Xu et al. (2020) |

| 337 | Multi-Verse Optimizer (MVO) | Mirjalili et al. (2016) |

| 338 | Naked Mole-Rat (NMR) | Salgotra and Singh (2019) |

| 339 | Namib Beetle Optimization (NBO) | Chahardoli et al. (2022) |

| 340 | Natural Aggregation Algorithm (NAA) | Luo et al. (2016) |

| 341 | Natural Forest Regeneration Algorithm (NFR) | Moez et al. (2016) |

| 342 | Neuronal Communication Algorithm (NCA) | Gharebaghi and Ardalan (2017) |

| 343 | New Caledonian Crow Learning Algorithm (NCCLA) | Al-Sorori and Mohsen (2020) |

| 344 | Newton Metaheuristic Algorithm (NMA) | Gholizadeh et al. (2020) |

| 345 | Nomadic People Optimizer (NPO) | Salih and Alsewari (2020) |

| 346 | Old Bachelor Acceptance (OBA) | Hu et al. (1995) |

| 347 | OptBees (OB) | Maia et al. (2013) |

| 348 | Optics Inspired Optimization (OIO) | Kashan (2015) |

| 349 | Optimal Foraging Algorithm (OFA) | Sayed et al. (2019a) |

| 350 | Optimal Stochastic Process Optimizer (OSPO) | Xu and Xu (2021) |

| 351 | Orca Optimization Algorithm (OOA) | Golilarz et al. (2020) |

| 352 | Orca Predation Algorithm (OPA) | Jiang et al. (2021) |

| 353 | Oriented Search Algorithm (OSA) | Zhang et al. (2008) |

| 354 | Paddy Field Algorithm (PFA) | Kong et al. (2012) |

| 355 | Parliamentary Optimization Algorithm (POA) | Borji and Hamidi (2009) |

| 356 | Particle Collision Algorithm (PCA) | Sacco et al. (2007) |

| 357 | Particle Swarm Optimization (PSO) | Eberhart and Kennedy (1995) |

| 358 | Passing Vehicle Search (PVS) | Savsani and Savsani (2016) |

| 359 | Pathfinder Algorithm (PFA) | Yapici and Cetinkaya (2019) |

| 360 | Pearl Hunting Algorithm (PHA) | Chan et al. (2012) |

| 361 | Pelican Optimization Algorithm (POA) | Trojovský and Dehghani (2022b) |

| 362 | Penguins Search Optimization Algorithm (PeSOA) | Gheraibia and Moussaoui (2013) |

| 363 | Photon Search Algorithm (PSA) | Liu and Li (2020) |

| 364 | Photosynthetic Algorithm (PA) | Murase (2000) |

| 365 | Pigeon Inspired Optimization (PIO) | Duan and Qiao (2014) |

| 366 | Pity Beetle Algorithm (PBA) | Kallioras et al. (2018) |

| 367 | Plant Competition Optimization (PCO) | Rahmani and AliAbdi (2022) |

| 368 | Plant Growth Optimization (PGO) | Cai et al. (2008) |

| 369 | Plant Propagation Algorithm (PPA) | Sulaiman et al. (2014) |

| 370 | Plant Self-Defense Mechanism Algorithm (PSDM) | Caraveo et al. (2018) |

| 371 | Plasma Generation Optimization (PGO) | Kaveh et al. (2020a) |

| 372 | Political Optimizer (PO) | Askari et al. (2020) |

| 373 | Poplar Optimization Algorithm (POA) | Chen et al. (2022) |

| 374 | POPMUSIC | Taillard and Voss (2002) |

| 375 | Population Migration Algorithm (PMA) | Zongyuan (2003) |

| 376 | Prairie Dog Optimization (PDO) | Ezugwu et al. (2022) |

| 377 | Predator–Prey Optimization (PPO) | Narang et al. (2014) |

| 378 | Prey Predator Algorithm (PPA) | Tilahun and Ong (2015) |

| 379 | Projectiles Optimization (PRO) | Kahrizi and Kabudian (2020) |

| 380 | Quantum-Inspired Bacterial Swarming Optimization (QBSO) | Cao and Gao (2012) |

| 381 | Queen-Bees Evolution (QBE) | Jung (2003) |

| 382 | Queuing Search Algorithm (QSA) | Zhang et al. (2018) |

| 383 | Raccoon Optimization Algorithm (ROA) | Koohi et al. (2018) |

| 384 | Radial Movement Optimization (RMO) | Rahmani and Yusof (2014) |

| 385 | Rain Optimization Algorithm (ROA) | Moazzeni and Khamehchi (2020) |

| 386 | Rain Water Algorithm (RWA) | Biyanto et al. (2019) |

| 387 | Rain-Fall Optimization (RFO) | Kaboli et al. (2017) |

| 388 | Raven Roosting Optimization Algorithm (RRO) | Brabazon et al. (2016) |

| 389 | Ray Optimization (RO) | Kaveh and Khayatazad (2012) |

| 390 | Red Deer Algorithm (RDA) | Fard and Hajiaghaei k (2016) |

| 391 | Reincarnation Algorithm (RA) | Sharma (2010) |

| 392 | Remora Optimization Algorithm (ROA) | Jia et al. (2021) |

| 393 | Reptile Search Algorithm (RSA) | Abualigah et al. (2021a) |

| 394 | Rhino Herd Behavior (RHB) | Wang et al. (2018b) |

| 395 | Ring Toss Game-Based Optimization Algorithm (RTGBO) | Doumari et al. (2021) |

| 396 | Ringed Seal Search (RSS) | Saadi et al. (2016) |

| 397 | River Formation Dynamics (RFD) | Rabanal et al. (2007) |

| 398 | Roach Infestation Optimization (RIO) | Havens et al. (2008) |

| 399 | Root Growth Optimizer (RGO) | He et al. (2015) |

| 400 | Root Tree Optimization Algorithm (RTO) | Labbi et al. (2016) |

| 401 | RUNge Kutta optimizer (RUN) | Ahmadianfar et al. (2021) |

| 402 | Runner Root Algorithm (RRA) | Merrikh-Bayat (2015) |

| 403 | SailFish Optimizer (SFO) | Shadravan et al. (2019) |

| 404 | Salp Swarm Algorithm (SSA) | Mirjalili et al. (2017) |

| 405 | SaMW | Tychalas and Karatza (2021) |

| 406 | Saplings Growing Up Algorithm (SGUA) | Karci (2007) |

| 407 | Satin Bowerbird Optimizer (SBO) | Moosavi and Bardsiri (2017) |

| 408 | Scatter Search Algorithm (SS) | Glover (1977) |

| 409 | Scientific Algorithm (SA) | Felipe et al. (2014) |

| 410 | Search Group Algorithm (SGA) | Gonçalves et al. (2015) |

| 411 | Search in Forest Optimizer (SFO) | Ahwazian et al. (2022) |

| 412 | Seed based Plant Propagation Algorithm (SPPA) | Sulaiman and Salhi (2015) |

| 413 | Seeker Optimization Algorithm (SOA) | Dai et al. (2006) |

| 414 | See-See Partridge Chicks Optimization (SSPCO) | Omidvar et al. (2015) |

| 415 | Self-Organizing Migrating Algorithm (SOMA) | Zelinka (2004) |

| 416 | Self-Driven Particles (SDP) | Vicsek et al. (1995) |

| 417 | Seven-Spot Ladybird Optimization (SLO) | Wang et al. (2013) |

| 418 | Shark Search Algorithm (SSA) | Hersovici et al. (1998) |

| 419 | Shark Smell Algorithm (SSA) | Abedinia et al. (2016) |

| 420 | Sheep Flock Heredity Model (SFHM) | Nara et al. (1999) |

| 421 | Sheep Flock Optimization Algorithm (SFOA) | Kivi and Majidnezhad (2022) |

| 422 | Shuffled Complex Evolution (SCE) | Duan et al. (1993) |

| 423 | Shuffled Frog Leaping Algorithm (SFLA) | Eusuff et al. (2006) |

| 424 | Shuffled Shepherd Optimization Algorithm (SSOA) | Kaveh and Zaerreza (2020) |

| 425 | Simple Optimization (SO) | Hasançebi and K Azad (2012) |

| 426 | Simulated Annealing (SA) | Kirkpatrick et al. (1983) |

| 427 | Simulated Bee Colony (SBC) | McCaffrey (2009) |

| 428 | Sine Cosine Algorithm (SCA) | Mirjalili (2016b) |

| 429 | Skip Salp Swarm Algorithm (SSSA) | Arunekumar and Joseph (2022) |

| 430 | Slime Mold Optimization Algorithm (SMOA) | Monismith and Mayfield (2008) |

| 431 | Small World Optimization (SWO) | Du et al. (2006) |

| 432 | Smart Flower Optimization Algorithm (SFOA) | Sattar and Salim (2021) |

| 433 | Snake Optimizer (SO) | Hashim and Hussien (2022) |

| 434 | Snap-Drift Cuckoo Search (SDCS) | Rakhshani and Rahati (2017) |

| 435 | Soccer Game Optimization (SGO) | Purnomo (2014) |

| 436 | Soccer League Competition (SLC) | Moosavian and Roodsari (2014) |

| 437 | Social Cognitive Optimization (SCO) | Xie et al. (2002) |

| 438 | Social Cognitive Optimization Algorithm (SCOA) | Wei et al. (2010) |

| 439 | Social Emotional Optimization Algorithm (SEOA) | Xu et al. (2010) |

| 440 | Social Spider Algorithm (SSA) | James and Li (2015) |

| 441 | Social Spider Optimization (SSO) | Cuevas et al. (2013) |

| 442 | Society and Civilization Algorithm (SCA) | Ray and Liew (2003) |

| 443 | Sonar Inspired Optimization (SIO) | Tzanetos and Dounias (2017) |

| 444 | Space Gravitational Algorithm (SGA) | Hsiao et al. (2005) |

| 445 | Special Relativity Search (SRS) | Goodarzimehr et al. (2022) |

| 446 | Sperm Motility Algorithm (SMA) | Raouf and Hezam (2017) |

| 447 | Sperm Swarm Optimization Algorithm (SSO) | Shehadeh et al. (2018) |

| 448 | Sperm Whale Algorithm (SWA) | Ebrahimi and Khamehchi (2016) |

| 449 | Spherical Search Algorithm (SSA) | Misra et al. (2020) |

| 450 | Spherical Search Optimizer (SSO) | Zhao et al. (2020a) |

| 451 | Spider Monkey Optimization (SMO) | Bansal et al. (2014) |

| 452 | Spiral Dynamics Optimization (SDO) | Tamura and Yasuda (2011) |

| 453 | Spiral Optimization Algorithm (SOA) | Jin and Tran (2010) |

| 454 | Spotted Hyena Optimizer (SHO) | Dhiman and Kumar (2017) |

| 455 | Spring Search Algorithm (SSA) | Dehghani et al. (2017) |

| 456 | Spy Algorithm (SA) | Pambudi and Kawamura (2022) |

| 457 | Squirrel Search Algorithm (SSA) | Jain et al. (2019) |

| 458 | Star Graph Algorithm (SGA) | Gharebaghi et al. (2017) |

| 459 | Starling Murmuration Optimizer (SMO) | Zamani et al. (2022) |

| 460 | States Matter Optimization Algorithm (SMOA) | Cuevas et al. (2014) |

| 461 | Stem Cells Algorithm (SCA) | Taherdangkoo et al. (2011) |

| 462 | Stochastic Diffusion Search (SDS) | Al-Rifaie and Bishop (2013) |

| 463 | Stochastic Focusing Search (SFS) | Weibo et al. (2008) |

| 464 | Stochastic Fractal Search (SFS) | Salimi (2015) |

| 465 | Stochastic Search Network (SSN) | Bishop (1989) |

| 466 | Strawberry Algorithm (SA) | Merrikh-Bayat (2014) |

| 467 | String Theory Algorithm (STA) | Rodriguez et al. (2021) |

| 468 | Student Psychology Based Optimization (SPBO) | Das et al. (2020) |

| 469 | Success History Intelligent Optimizer (SHIO) | Fakhouri et al. (2021) |

| 470 | Sunflower Optimization (SFO) | Gomes et al. (2019) |

| 471 | Superbug Algorithm (SA) | Anandaraman et al. (2012) |

| 472 | Supernova Optimizer (SO) | Hudaib and Fakhouri (2018) |

| 473 | Surface Simplex Swarm Evolution Algorithm (SSSE) | Quan and Shi (2017) |

| 474 | Swallow Swarm Optimizer (SWO) | Neshat et al. (2013) |

| 475 | Swarm Inspired Projection Algorithm (SIP) | Su et al. (2009) |

| 476 | Swine Influenza Models Based Optimization (SIMBO) | Pattnaik et al. (2013) |

| 477 | Symbiosis Organisms Search (SOS) | Cheng and Prayogo (2014) |

| 478 | Synergistic Fibroblast Optimization (SFO) | Subashini et al. (2017) |

| 479 | Tabu Search (TS) | Glover (1989) |

| 480 | Tangent Search Algorithm (TSA) | Layeb (2021) |

| 481 | Tasmanian Devil Optimization (TDO) | Dehghani et al. (2022a) |

| 482 | Teaching-Learning Based Optimization Algorithm (TLBO) | Rao et al. (2011) |

| 483 | Team Game Algorithm (TGA) | Mahmoodabadi et al. (2018) |

| 484 | Termite Colony Optimizer (TCO) | Hedayatzadeh et al. (2010) |

| 485 | Termite Life Cycle Optimizer (TLCO) | Minh et al. (2022) |

| 486 | Termite-hill Algorithm (ThA) | Zungeru et al. (2012) |

| 487 | The Great Salmon Run (TGSR) | Mozaffari et al. (2013) |

| 488 | Thermal Exchange Optimization (TEO) | Kaveh and Dadras (2017) |

| 489 | Tiki-Taka Algorithm (TTA) | Rashid (2020) |

| 490 | Transient Search Optimization Algorithm (TSO) | Qais et al. (2020) |

| 491 | Tree Growth Algorithm (TGA) | Cheraghalipour et al. (2018) |

| 492 | Tree Physiology Optimization (TPO) | Halim and Ismail (2018) |

| 493 | Tree Seed Algorithm (TSA) | Kiran (2015) |

| 494 | Trees Social Relations Optimization Algorithm (TSR) | Alimoradi et al. (2022) |

| 495 | Triple Distinct Search Dynamics (TDSD) | Li et al. (2020) |

| 496 | Tug of War Optimization (TWO) | Kaveh and Zolghadr (2016) |

| 497 | Tuna Swarm Optimization (TSO) | Xie et al. (2021) |

| 498 | Tunicate Swarm Algorithm (TSA) | Kaur et al. (2020) |

| 499 | Unconscious Search (US) | Ardjmand and Amin-Naseri (2012) |

| 500 | Vapor Liquid Equilibrium Algorithm (VLEA) | Taramasco et al. (2020) |

| 501 | Variable Mesh Optimization (VMO) | Puris et al. (2012) |

| 502 | Variable Neighborhood Descent Algorithm (VND) | Hertz and Mittaz (2001) |

| 503 | Vibrating Particles System (VPS) | Kaveh and Ghazaan (2017) |

| 504 | Virtual Ants Algorithm (VAA) | Yang et al. (2006) |

| 505 | Viral Systems Optimization (VS) | Cortés et al. (2008) |

| 506 | Virtual Bees Algorithm (VBA) | Yang (2005) |

| 507 | Virulence Optimization Algorithm (VOA) | Jaderyan and Khotanlou (2016) |

| 508 | Virus Colony Search (VCS) | Li et al. (2016c) |

| 509 | Virus Optimization Algorithm (VOA) | Juarez et al. (2009) |

| 510 | Virus Spread Optimization (VSO) | Li and Tam (2020) |

| 511 | Volcano Eruption Algorithm (VCA) | Hosseini et al. (2021) |

| 512 | Volleyball Premier League Algorithm (VPL) | Moghdani and Salimifard (2018) |

| 513 | Vortex Search Algorithm (VS) | Doğan and Ölmez (2015) |

| 514 | War Strategy Optimization (WSO) | Ayyarao et al. (2022) |

| 515 | Wasp Swarm Optimization (WSO) | Pinto et al. (2005) |

| 516 | Water Cycle Algorithm (WCA) | Eskandar et al. (2012) |

| 517 | Water Evaporation Algorithm (WEA) | Saha et al. (2017) |

| 518 | Water Evaporation Optimization (WEO) | Kaveh and Bakhshpoori (2016) |

| 519 | Water Flow Algorithm (WFA) | Basu et al. (2007) |

| 520 | Water Flow-Like Algorithm (WFA) | Yang and Wang (2007) |

| 521 | Water Optimization Algorithm (WAO) | Daliri et al. (2022) |

| 522 | Water Strider Algorithm (WSA) | Kaveh and Eslamlou (2020) |

| 523 | Water Wave Optimization (WWO) | Zheng (2015) |

| 524 | Water Wave Optimization.1 (WWO.1) | Kaur and Kumar (2021) |

| 525 | Water-Flow Algorithm Optimization (WFO) | Tran and Ng (2011) |

| 526 | Weed Colonization Optimization (WCO) | Mehrabian and Lucas (2006) |

| 527 | Weightless Swarm Algorithm (WSA) | Ting et al. (2012) |

| 528 | Whale Optimization Algorithm (WOA) | Mirjalili and Lewis (2016) |

| 529 | White Shark Optimizer (WSO) | Braik et al. (2022a) |

| 530 | Wind Driven Optimization (WDO) | Bayraktar et al. (2010) |

| 531 | Wingsuit Flying Search (WFS) | Covic and Lacevic (2020) |

| 532 | Wisdom of Artificial Crowds (WoAC) | Yampolskiy and El-Barkouky (2011) |

| 533 | Wolf Colony Algorithm (WCA) | Liu et al. (2011) |

| 534 | Wolf Pack Search (WPS) | Yang et al. (2007) |

| 535 | Wolf Search Algorithm (WSA) | Tang et al. (2012) |

| 536 | Woodpecker Mating Algorithm (WMA) | Karimzadeh Parizi et al. (2020) |

| 537 | Worm Optimization (WO) | Arnaout (2014) |

| 538 | Xerus Optimization Algorithm (XOA) | Samie Yousefi et al. (2019) |

| 539 | Yin-Yang-Pair Optimization (YYPO) | Punnathanam and Kotecha (2016) |

| 540 | Zombie Survival Optimization (ZSO) | Nguyen and Bhanu (2012) |

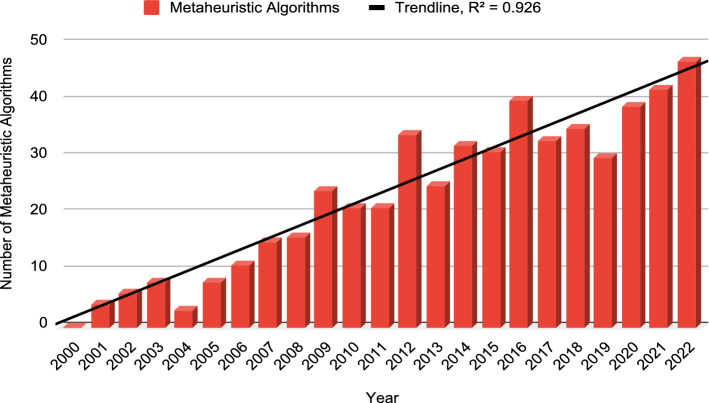

Not only the number of algorithms but also the volume of related research has increased rapidly in the last decade (Fig. 4). Apart from algorithm development, the literature in this field mainly includes the following categories of studies:

Fig. 4.

Number of published documents with the word ‘optimization’ in the title/abstract/keywords and at least one of the words ‘meta-heuristic’, ‘metaheuristic’, ‘bio-inspired optimization’, ‘bio inspired optimization’, ‘nature-inspired algorithm’, ‘nature inspired algorithm’, ‘nature-inspired technique’, ‘nature inspired technique’, and ‘evolutionary algorithm’ in the title/abstract/keywords over the period 2000–2022. Data source—Scopus on December 31, 2022

Enhancement of algorithms

Many techniques can be employed to enhance the average performance of an algorithm; several of them are described by Wang and Tan (2017). Numerous improved methods have been developed to obtain better results than the original algorithms. For example, Gupta and Deep (2019) proposed the random grey wolf optimizer, an efficient modified algorithm; Hegazy et al. (2020) proposed an enhanced salp swarm algorithm; and Sayed et al. (2019b) proposed an improved chaotic dragonfly algorithm. Many more modified algorithms are available in the literature, such as the improved genetic algorithm (Dandy et al. 1996) and improved particle swarm optimization (Jiang et al. 2007). To achieve high computational efficiency, researchers have also introduced the powerful notion of parallelism. Three main parallel models have been reported in the literature: (a) the parallel moves model, (b) the parallel multi-start model, and (c) the move acceleration model (Alba et al. 2005).
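The parallel multi-start model can be sketched as follows: several independent searches are launched from different random starting points and run concurrently, and the best result is kept. This is a minimal sketch, not the implementation described by Alba et al. (2005); the sphere objective, the simple hill climber, and the names `hill_climb` and `parallel_multi_start` are illustrative assumptions. Threads are used so that the example runs anywhere; because of CPython's global interpreter lock they give no real speed-up for a pure-Python objective, and `concurrent.futures.ProcessPoolExecutor` would be the usual substitute in practice.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def sphere(x):
    """Toy objective: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def hill_climb(seed, dim=5, iters=2000, step=0.1):
    """One independent search: random start, then accept only improving Gaussian moves."""
    rng = random.Random(seed)  # private RNG per start, so each run is reproducible
    x = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
    fx = sphere(x)
    for _ in range(iters):
        cand = [v + rng.gauss(0.0, step) for v in x]
        fc = sphere(cand)
        if fc < fx:
            x, fx = cand, fc
    return fx, x


def parallel_multi_start(n_starts=8, workers=4):
    """Run n_starts independent searches concurrently and return the best (f, x)."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(hill_climb, range(n_starts)))  # seeds 0..n_starts-1
    return min(results, key=lambda r: r[0])


if __name__ == "__main__":
    best_f, best_x = parallel_multi_start()
    print(f"best objective value: {best_f:.6f}")
```

Because each start owns its random generator, the parallel result is identical to running the same starts one after another, which makes the model easy to test.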

Hybridization of algorithms

The idea of hybridizing metaheuristics is not new; it dates back to their origins. Several classifications of hybrid metaheuristics can be found in the literature, based on criteria such as the level of hybridization, the order of execution, and the control strategy (Raidl 2006).

Level of hybridization

Hybrid MAs are divided into two types according to the level (or strength) at which the component algorithms are combined: high-level and low-level combinations. High-level combinations retain the individual identities of the original algorithms, which cooperate over a relatively well-defined interface. In contrast, in low-level combinations the algorithms rely heavily on each other and exchange individual components or functions. Because the original algorithms remain largely independent in high-level combinations, this type is sometimes referred to as ‘weak coupling’; low-level combinations are referred to as ‘strong coupling’ because the algorithms depend on each other.

Order of execution

Hybrid MAs can also be divided by their execution process into batch, interleaved, and parallel models. The batch model employs a one-way data flow in which the algorithms are executed sequentially. In contrast, in the interleaved and parallel models the algorithms may interact in more sophisticated ways (Alba 2005).
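As a rough illustration of the batch model, the sketch below runs two algorithms one after the other with a one-way data flow: a uniform random search explores the search space, and its best point is the only information handed to a coordinate-wise pattern search. The Rastrigin objective, both component algorithms, and all function names are assumptions chosen for brevity; any pair of metaheuristics could take their place.

```python
import math
import random


def rastrigin(x):
    """Multimodal test function; global minimum 0 at the origin."""
    return 10 * len(x) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in x)


def random_search(f, dim, n_samples, rng, lo=-5.12, hi=5.12):
    """Stage 1 (exploration): sample uniformly and return the best point found."""
    best, best_f = None, math.inf
    for _ in range(n_samples):
        x = [rng.uniform(lo, hi) for _ in range(dim)]
        fx = f(x)
        if fx < best_f:
            best, best_f = x, fx
    return best


def pattern_search(f, x, step=0.5, min_step=1e-4):
    """Stage 2 (exploitation): try +/- step along each axis; halve the step when no move helps."""
    x, fx = list(x), f(x)
    while step > min_step:
        improved = False
        for i in range(len(x)):
            for delta in (step, -step):
                cand = x.copy()
                cand[i] += delta
                fc = f(cand)
                if fc < fx:
                    x, fx, improved = cand, fc, True
                    break  # keep the first improving move on this axis
        if not improved:
            step /= 2
    return x, fx


def batch_hybrid(f, dim=3, n_samples=500, seed=0):
    """Batch model: stage 1 runs to completion, then its output alone seeds stage 2."""
    rng = random.Random(seed)
    start = random_search(f, dim, n_samples, rng)
    x, fx = pattern_search(f, start)
    return start, x, fx


if __name__ == "__main__":
    start, x, fx = batch_hybrid(rastrigin)
    print(f"after stage 1: {rastrigin(start):.4f}  after stage 2: {fx:.6f}")
```

Stage 2 never sends information back to stage 1; an interleaved model would instead alternate between the two and let each influence the other.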

Control strategy

Based on their control strategy, hybrid MAs can be further divided into integrative (coercive) and collaborative (cooperative) combinations. In integrative approaches, one algorithm is a subordinate, embedded component of another. This approach is quite common; for example, in a memetic algorithm, a local search procedure is embedded in an evolutionary algorithm to locally improve the candidate solutions produced by the variation operators. In collaborative combinations, the algorithms exchange information but neither is embedded in the other. For example, Klau et al. (2004) combined a memetic algorithm with integer programming to solve the prize-collecting Steiner tree problem heuristically.
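The integrative case can be illustrated with a small memetic-style algorithm, in which a local search is embedded inside the loop of a real-coded evolutionary algorithm and applied to every offspring produced by crossover and mutation. This is a minimal sketch under assumed choices (binary tournament selection, uniform crossover, Gaussian mutation, a Gaussian-perturbation hill climber, (μ + λ) survivor selection, and the sphere objective); it is not the design of any specific published memetic algorithm.

```python
import random


def sphere(x):
    """Toy objective: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def local_search(f, x, rng, step=0.05, tries=20):
    """Embedded component: a short hill climb that accepts only improving Gaussian moves."""
    fx = f(x)
    for _ in range(tries):
        cand = [v + rng.gauss(0.0, step) for v in x]
        fc = f(cand)
        if fc < fx:
            x, fx = cand, fc
    return x


def memetic(f, dim=4, pop_size=20, gens=50, seed=1):
    rng = random.Random(seed)
    pop = [[rng.uniform(-5.0, 5.0) for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(gens):
        children = []
        for _ in range(pop_size):
            # binary tournament selection for each parent
            p1 = min(rng.sample(pop, 2), key=f)
            p2 = min(rng.sample(pop, 2), key=f)
            # uniform crossover, then Gaussian mutation of each gene with probability 0.2
            child = [rng.choice(genes) for genes in zip(p1, p2)]
            child = [v + rng.gauss(0.0, 0.3) if rng.random() < 0.2 else v for v in child]
            # integrative hybridization: local search is a subordinate step of the EA loop
            children.append(local_search(f, child, rng))
        # (mu + lambda) survivor selection: keep the best pop_size of parents and children
        pop = sorted(pop + children, key=f)[:pop_size]
    return pop[0]


if __name__ == "__main__":
    best = memetic(sphere)
    print(f"best objective value: {sphere(best):.6f}")
```

A collaborative combination would instead keep the two algorithms as separate procedures that only exchange solutions, rather than calling one from inside the other's main loop.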

Comparison of MAs

In industry, a practical concern is determining which algorithm works best for a particular type of problem. The difficulty of an optimization task is generally assessed through its objective function. A fitness landscape consists essentially of the objective values of all points in the decision space, and fitness landscape analysis (FLA) is a valuable and powerful tool for characterizing the landscape of a particular optimization problem (Wang et al. 2017). FLA is therefore essential for studying how difficult complex problems are for MAs to solve, and many research papers compare MAs on this basis. The number of local optima is the first and most apparent landscape characteristic to consider when determining the difficulty of an optimization problem. However, Horn and Goldberg (1995) found that some multimodal problems in which half the points in the search space are local optima can be easier to solve than unimodal problems. That is, the number of local optima alone is neither a sufficient nor a necessary indicator of how hard a problem is for an optimization algorithm. Another significant characteristic of the fitness landscape is the basin of attraction of each local optimum. Basins of attraction are classified into two types (Pitzer et al. 2010): strong basins, in which every individual in the basin can reach only that single optimum, and weak basins, in which some individuals in the basin can also reach another optimum. When determining the difficulty of a specific optimization problem, basins of attraction can offer additional information about the size, shape, stability, and distribution of local optima. Recent developments in FLA are reviewed by Zou et al. (2022).
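To make local optima and basins of attraction concrete, the sketch below analyses a small, made-up one-dimensional discrete landscape (minimization; the neighbours of a point are the adjacent indices). It counts strict local minima and assigns every point to the optimum reached by deterministic steepest descent. With a deterministic descent rule, each point belongs to exactly one basin; under stochastic moves, points that can reach more than one optimum are what make a basin weak in the sense of Pitzer et al. (2010). The landscape values and function names are illustrative assumptions, and the code assumes that neighbouring points never have equal values.

```python
def neighbours(n, i):
    """Indices adjacent to i on a 1-D grid of n points."""
    return [j for j in (i - 1, i + 1) if 0 <= j < n]


def local_minima(values):
    """Indices whose value is strictly lower than all neighbouring values."""
    n = len(values)
    return [i for i in range(n) if all(values[i] < values[j] for j in neighbours(n, i))]


def steepest_descent(values, i):
    """Move to the lowest neighbour while it is strictly better; return the end point."""
    n = len(values)
    while True:
        nbrs = neighbours(n, i)
        if not nbrs:
            return i
        best = min(nbrs, key=lambda j: values[j])
        if values[best] >= values[i]:
            return i
        i = best


def basin_sizes(values):
    """Number of points whose steepest descent ends at each local minimum."""
    sizes = {}
    for i in range(len(values)):
        end = steepest_descent(values, i)
        sizes[end] = sizes.get(end, 0) + 1
    return sizes


if __name__ == "__main__":
    landscape = [5, 3, 4, 6, 2, 1, 7, 0, 8, 9]  # made-up objective values
    print(local_minima(landscape))  # [1, 5, 7]
    print(basin_sizes(landscape))   # {1: 3, 5: 3, 7: 4}
```

Three local minima with similar basin sizes say little on their own; the global minimum (index 7) having the largest basin is the kind of extra information that basin analysis adds to a simple count of optima.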

Multi/many objective optimization

Most real-life problems naturally involve multiple objectives, and conflicting objectives make optimization problems challenging to solve. For a problem with more than one conflicting objective, there is usually no single optimal solution; instead, there is a set of solutions that are all optimal. Without additional information, none of these optimal solutions can be deemed superior to the others. This is the fundamental difference between single-objective optimization (except in multimodal scenarios, where multiple optimal solutions exist) and multi-objective optimization. In multi-objective optimization, multiple optimal solutions arise from the trade-offs between conflicting objectives.
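The notion of several equally optimal trade-off solutions is usually formalized through Pareto dominance. The sketch below assumes that all objectives are minimized: a solution dominates another if it is no worse in every objective and strictly better in at least one, and the non-dominated solutions form the Pareto-optimal subset of the given points. The example objective vectors are made up.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated(points):
    """Points not dominated by any other point (a point never dominates itself)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


if __name__ == "__main__":
    # made-up (f1, f2) values of five candidate solutions
    candidates = [(1, 5), (2, 3), (3, 4), (4, 1), (5, 5)]
    print(non_dominated(candidates))  # [(1, 5), (2, 3), (4, 1)]
```

None of the three surviving vectors is better than the others in both objectives, which is exactly the situation described above; choosing among them requires additional preference information.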

Several extended versions of MAs have been proposed to address multi-objective optimization. A few of the most popular are the non-dominated sorting genetic algorithm II (NSGA-II) (Deb et al. 2002), the multi-objective evolutionary algorithm based on decomposition (MOEA/D) (Zhang and Li 2007), and the non-dominated sorting genetic algorithm III (NSGA-III) (Deb and Jain 2013). When the number of objectives exceeds three, most solutions in an NSGA-II population become non-dominated, which results in a rapid loss of search capability. MOEA/D decomposes a multi-objective optimization problem into a number of scalar optimization subproblems and optimizes them simultaneously. Each subproblem is optimized using only information from its neighbouring subproblems, which gives MOEA/D a lower computational complexity per generation. NSGA-III uses the basic framework of NSGA-II but maintains diversity with a set of well-spread reference points; it was developed for many-objective problems with four or more objectives.
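The decomposition idea behind MOEA/D can be sketched for two objectives: evenly spread weight vectors define the scalar subproblems, each subproblem's neighbourhood is the set of its closest weight vectors, and a scalarizing function turns an objective vector into a single value per subproblem. This sketch assumes the Tchebycheff function with an ideal point z* (one of the scalarizing approaches considered in the MOEA/D paper) and omits the full evolutionary loop (mating within neighbourhoods, updating z*, and replacing neighbours); the function names and candidate values are illustrative.

```python
import math


def weight_vectors(n):
    """n >= 2 evenly spaced weight vectors for two objectives, each summing to 1."""
    return [(i / (n - 1), 1 - i / (n - 1)) for i in range(n)]


def neighbourhoods(weights, t):
    """For each weight vector, the indices of its t nearest weight vectors (itself included)."""
    return [sorted(range(len(weights)), key=lambda j: math.dist(w, weights[j]))[:t]
            for w in weights]


def tchebycheff(fvals, w, z_star):
    """Tchebycheff scalarization g(x | w, z*) = max_i w_i * |f_i(x) - z*_i| (minimized)."""
    return max(wi * abs(fi - zi) for wi, fi, zi in zip(w, fvals, z_star))


if __name__ == "__main__":
    weights = weight_vectors(5)
    hoods = neighbourhoods(weights, 3)
    z_star = (0.0, 0.0)  # ideal point, assumed known here
    candidates = [(1.0, 5.0), (2.0, 3.0), (4.0, 1.0)]  # made-up objective vectors
    for k, w in enumerate(weights):
        best = min(candidates, key=lambda f: tchebycheff(f, w, z_star))
        print(f"subproblem {k}: weights={w}, neighbours={hoods[k]}, best={best}")
```

Different weight vectors prefer different candidates, so solving all subproblems together spreads the population along the trade-off front; restricting information exchange to the `t` neighbours is what keeps the per-generation cost low.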

Review articles

These studies offer young researchers a valuable perspective on the current state of existing work and its future prospects, which can be highly beneficial for their research. Some important articles are highlighted here. Molina et al. (2020) give a novel taxonomy of 100 algorithms based on the movement of the population, along with a few significant conclusions. Del Ser et al. (2019) address some significant future directions of metaheuristics. Tzanetos and Dounias (2021) strongly criticise unethical practices and offer a few ideas for the future. Ezugwu et al. (2021) provide a comprehensive overview and classification along with a bibliometric analysis. A recent survey of multi-objective optimization algorithms, their variants, applications, open challenges, and future directions is given by Sharma and Kumar (2022).

Benchmark test functions

Numerous test (benchmark) functions have been reported in the literature; however, no standard set of benchmark functions for evaluating the performance of an algorithm exists. To address this, benchmark suites for the IEEE Congress on Evolutionary Computation (CEC) competitions are published regularly (Liang et al. 2014). Jamil and Yang (2013) collected 175 benchmark functions, Mirjalili and Lewis (2019) provided a set of benchmark optimization functions with different levels of difficulty, and Gao et al. (2021b) collected 67 non-symmetric benchmark functions.
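For readers new to benchmarking, the sketch below defines three classic test functions of the kind found in such collections: the unimodal sphere function, the highly multimodal Rastrigin function, and the Rosenbrock function with its narrow curved valley. The definitions are the standard textbook forms; they are not taken from any particular CEC suite, which typically adds shifts, rotations, and bias values.

```python
import math


def sphere(x):
    """Unimodal, separable; minimum 0 at x = (0, ..., 0)."""
    return sum(v * v for v in x)


def rastrigin(x):
    """Highly multimodal; minimum 0 at x = (0, ..., 0); usual domain [-5.12, 5.12]^n."""
    return 10 * len(x) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in x)


def rosenbrock(x):
    """Narrow curved valley; minimum 0 at x = (1, ..., 1)."""
    return sum(100 * (x[i + 1] - x[i] ** 2) ** 2 + (1 - x[i]) ** 2 for i in range(len(x) - 1))


if __name__ == "__main__":
    for f, opt in ((sphere, [0.0] * 5), (rastrigin, [0.0] * 5), (rosenbrock, [1.0] * 5)):
        print(f.__name__, "at optimum:", f(opt), " at (0.5, ..., 0.5):", f([0.5] * 5))
```

Because the optimum of each function is known, the gap between the best value an algorithm finds and this optimum gives a direct, comparable error measure.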

Statistical analysis

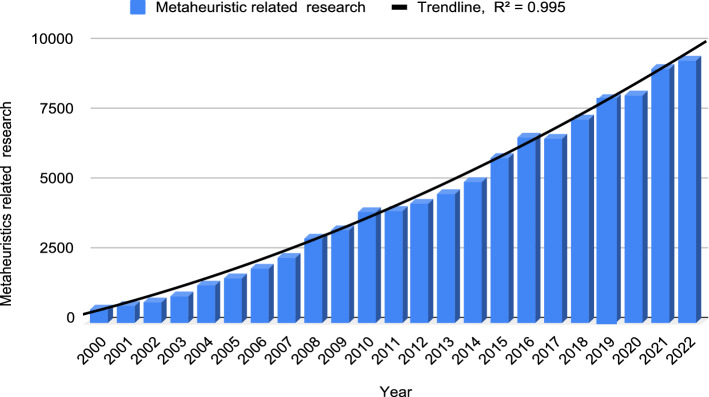

Approximately 540 new MAs have been developed, about 385 of them in the last decade. Furthermore, in 2022 alone, around 47 ‘novel’ MAs were proposed. A graphical representation is shown in Fig. 1, in which the fitted trend line rises steeply and has a high coefficient of determination $R^2$. $R^2$ measures the goodness of fit of a model; a trend line is most reliable when its $R^2$ value is at or near 1. The steep upward trend, together with the high value of $R^2$, shows that the development of ‘novel’ MAs is growing rapidly.

Fig. 1.

Number of metaheuristic algorithms developed during 2000–2022
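The trend-line reasoning above can be made explicit. Assuming an ordinary least-squares linear trend (the fitting method behind Fig. 1 is not stated), the slope tells whether the trend is upward, while $R^2 = 1 - SS_{res}/SS_{tot}$ tells how well the line fits; the two are separate pieces of evidence. The yearly counts below are made-up numbers for illustration, not the data behind Fig. 1.

```python
def linear_trend(xs, ys):
    """Least-squares line y = a + b*x and its coefficient of determination R^2."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx  # slope: its sign tells whether the trend is upward
    a = my - b * mx  # intercept
    ss_res = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return a, b, 1 - ss_res / ss_tot  # R^2 = 1 - SS_res / SS_tot


if __name__ == "__main__":
    years = list(range(2018, 2023))
    toy_counts = [10, 30, 20, 40, 50]  # made-up numbers, not the data behind Fig. 1
    a, b, r2 = linear_trend(years, toy_counts)
    print(f"slope = {b:.1f} per year, R^2 = {r2:.3f}")  # slope = 9.0 per year, R^2 = 0.810
```

A series that grows steadily but noisily can still have a modest $R^2$, and a flat series can have a high one, so both numbers are needed to support the claim of rapid growth.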

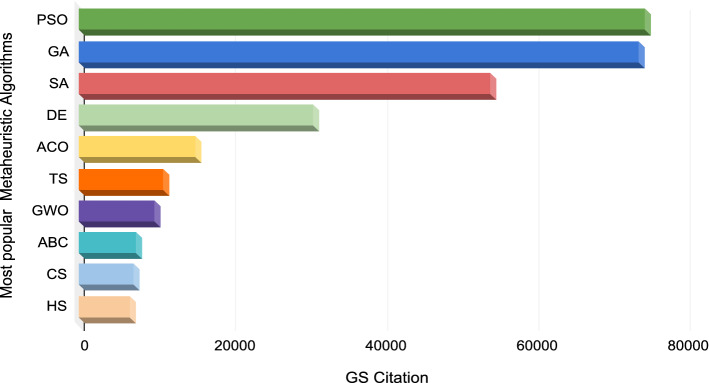

Figure 2 summarizes the ten most cited MAs according to Google Scholar (GS). The most cited algorithm is particle swarm optimization (PSO), with more than 75,000 citations on its own. The genetic algorithm (GA) ranks second, with more than 70,000 citations. Ant colony optimization (ACO), differential evolution (DE), and simulated annealing (SA) rank third, fourth, and fifth, with more than 50,000, 30,000, and 15,000 citations, respectively. Tabu search (TS), grey wolf optimizer (GWO), artificial bee colony (ABC), cuckoo search (CS), and harmony search (HS) rank sixth, seventh, eighth, ninth, and tenth, respectively.

Fig. 2.

Top ten cited MAs. Data source—Google Scholar (GS) on December 31, 2022

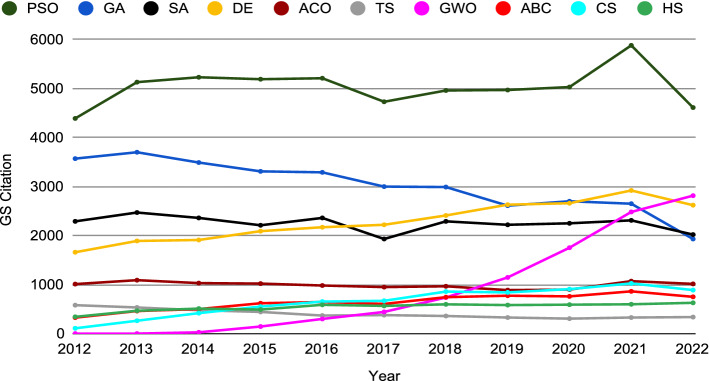

Additionally, Fig. 3 shows the GS citations of the most popular MAs during the last decade and how quickly these algorithms are gaining popularity. The grey wolf optimizer (GWO) has attracted researchers' attention and become one of the most popular algorithms in a short period. Other algorithms, such as particle swarm optimization (PSO), the genetic algorithm (GA), simulated annealing (SA), and differential evolution (DE), have attracted interest at a nearly steady pace during the last decade.

Fig. 3.

Citations of the top ten GS-cited MAs from 2012 to 2022. Data source—Scopus on December 31, 2022

Another interesting question is how much metaheuristic research is being carried out now. Answering it requires data, and extracting data from very different types of repositories is difficult. Nevertheless, we address this question and assess the present state of metaheuristic studies using the data available in Scopus. Although these statistics are not complete, they provide a picture of metaheuristics research and lead to significant insights. To identify metaheuristic documents, we use a two-step screening process. First, we list the publications whose titles, abstracts, or keywords include the term ‘optimization’. Second, to retain only the metaheuristic subdomain of optimization, we keep the publications that contain at least one of the terms ‘meta-heuristic’, ‘metaheuristic’, ‘bio-inspired optimization’, ‘bio inspired optimization’, ‘nature-inspired algorithm’, ‘nature inspired algorithm’, ‘nature-inspired technique’, ‘nature inspired technique’, and ‘evolutionary algorithm’ in their titles, abstracts, or keywords. The period covered is 2000–2022. Figure 4 depicts the search results: each year there are more publications on metaheuristics than the year before, and the fitted trend line and its coefficient of determination $R^2$ indicate that metaheuristic research is expanding significantly. Table 2 lists the document types. Articles and conference papers account for most of the publications in this domain (54.22% and 40.84%, respectively, or about 95% combined).

Table 2.

Different types of published documents with the word ‘optimization’ in the title/abstract/keywords and at least one of the words ‘meta-heuristic’, ‘metaheuristic’, ‘bio-inspired optimization’, ‘bio inspired optimization’, ‘nature-inspired algorithm’, ‘nature inspired algorithm’, ‘nature-inspired technique’, ‘nature inspired technique’, and ‘evolutionary algorithm’ in the title/abstract/keywords

| Document type | Total number | Percentage (%) |
|---|---|---|
| Article | 54,158 | 54.22 |
| Conference Paper | 40,788 | 40.84 |
| Conference Review | 1,292 | 1.29 |
| Review | 1,093 | 1.09 |
| Book | 145 | 0.15 |
| Editorial | 88 | 0.09 |
| Retracted | 61 | 0.06 |
| Erratum | 37 | 0.04 |
| Data Paper | 9 | 0.01 |
| Undefined | 18 | 0.02 |
| Total | 99,877 | 100 |

Data source—Scopus on December 31, 2022
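The two-step screening described above can be re-expressed as a small filter over bibliographic records. This is only an approximation of the logic, not how Scopus itself matches terms (Scopus applies its own handling of plurals, spelling variants, and field indexing); the record layout with `title`, `abstract`, `keywords`, and `year` fields and all function names are hypothetical.

```python
PRIMARY_TERM = "optimization"
SECONDARY_TERMS = (
    "meta-heuristic", "metaheuristic",
    "bio-inspired optimization", "bio inspired optimization",
    "nature-inspired algorithm", "nature inspired algorithm",
    "nature-inspired technique", "nature inspired technique",
    "evolutionary algorithm",
)


def searchable_text(record):
    """Lower-cased title + abstract + keywords, the fields both screening steps look at."""
    parts = [record.get("title", ""), record.get("abstract", "")] + list(record.get("keywords", []))
    return " ".join(parts).lower()


def screen(records, first_year=2000, last_year=2022):
    """Two-step screening: 'optimization' first, then at least one metaheuristic term."""
    kept = []
    for rec in records:
        if not first_year <= rec["year"] <= last_year:
            continue
        text = searchable_text(rec)
        if PRIMARY_TERM not in text:  # step 1: plain substring match
            continue
        if any(term in text for term in SECONDARY_TERMS):  # step 2
            kept.append(rec)
    return kept


if __name__ == "__main__":
    records = [  # hypothetical records
        {"title": "A metaheuristic for job-shop optimization", "abstract": "", "keywords": [], "year": 2015},
        {"title": "Convex optimization", "abstract": "Interior-point methods", "keywords": [], "year": 2015},
        {"title": "Evolutionary algorithm design", "abstract": "", "keywords": ["optimization"], "year": 1999},
    ]
    print([r["title"] for r in screen(records)])
```

Plain substring matching is deliberately simple: it also accepts forms such as ‘optimizations’ but misses the British spelling ‘optimisation’, which is one reason counts from such a filter can differ from a database's own search.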

Constructive criticism

According to these statistics, new MAs appear one after another; on average, approximately 38 algorithms have appeared each year during the last decade. This might suggest that metaheuristics research is nearing its peak, but is this really the case? Many members of the research community have expressed alarm about this unanticipated situation (Aranha et al. 2021; Del Ser et al. 2019). The genetic algorithm (GA), particle swarm optimization (PSO), ant colony optimization (ACO), and differential evolution (DE) were probably developed at a time when scientists lacked alternative optimization methods, and each has its own set of features and governing equations. Many algorithms, particularly those of the most recent generation, are alleged to be non-unique, and many of them fail to deliver a meaningful impact. Criticizing this overcrowded situation, Osaba et al. (2021) pointed out three issues: (a) the failure of many new algorithms to make a beneficial contribution, causing confusion in the field instead, (b) the authenticity of reported statistical data, and (c) unfair comparisons made to promote the authors' own algorithms. Readers should be aware that several front-line algorithms have been claimed to lack novelty. Notable cases are BHO vs PSO (Piotrowski et al. 2014), GWO vs PSO (Villalón et al. 2020), FA vs PSO (Villalón et al. 2020), BA vs PSO (Piotrowski et al. 2014), IWD vs ACO (Camacho-Villalón et al. 2018), and HS vs ES (Weyland 2010). Constructive debate is essential to strengthening this area. Steer et al. (2009) separate the sources of inspiration for NIAs into two groups. The first group includes ‘strong’ inspiration algorithms, which mimic mechanisms that address real-world phenomena. Algorithms with ‘weak’ inspiration fall into the second group, since they do not precisely adhere to the rules of a phenomenon. A significant proportion of these algorithms are remarkably similar to existing ones.
Algorithms with little originality usually rely on their names to distinguish themselves from popular metaheuristic approaches that function similarly. Tzanetos and Dounias (2021) describe many algorithms as PSO-like, including bacterial foraging optimization (BFO), birds swarm algorithm (BSA), krill herd (KH), cat swarm optimization (CSO), chicken swarm optimization (CSO), and blue monkey algorithm (BMA). Although numerous improved versions exist, new algorithms are frequently compared with the original versions of well-known algorithms such as GA and PSO. Moreover, each author typically implements these algorithms independently. Because the code is often kept private, the results are frequently questionable. In another study, Molina et al. (2020) determine which algorithms have been most influential in the development of others and compile algorithms that can be considered variants of the classical ones. Their analysis identifies about 57 algorithms similar to PSO, including African buffalo optimization (ABO) and bee colony optimization (BCO). It also identifies about 24 similar to GA, including crow search algorithm (CSA) and earthworm optimization algorithm (EOA), and about 24 similar to DE, including artificial cooperative search (ACS) and differential search algorithm (DSA). Research on ‘duplicate’ algorithms merely repeats concepts already investigated for the original algorithm, which wastes resources and time. However, several recent algorithms have demonstrated their efficacy on various real-world problems, opening up new avenues for research. A new algorithm is justified when existing algorithms cannot produce a satisfactory solution to a real-world optimization problem. It is also justified when a more intelligent mechanism is identified that makes the new algorithm more efficient than others.

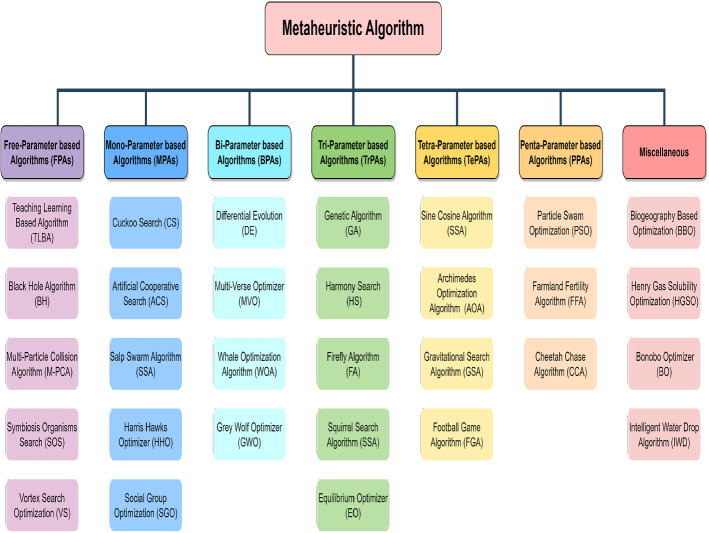

Taxonomy

In the literature, there are several classifications of MAs. For example, classification based on the source of inspiration (6.1) is the most common, but it provides no mathematical insight into the algorithms. Another classification, based on the number of search agents (6.2), indicates how many agents are deployed in each iteration. However, this classification is highly unbalanced: relatively few algorithms fall into one group, while the remainder fall into the other. Molina et al. (2020) categorize MAs by their behavior (6.3) rather than their source of inspiration, as (a) differential vector movement and (b) solution creation. This provides additional information about the inner workings of MAs. This study employs an additional criterion to classify existing MAs. Algorithm performance is highly sensitive to parameter values, and tuning parameters for a new problem is difficult because no general chart or set of guidelines exists. Owing to the lack of detailed mathematical analysis of algorithms and problems, the algorithms must be run many times with different parameter values. Thus, it is crucial to study the parameters of algorithms to improve results. This study presents a novel classification based on the number of parameters (6.4).
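The need to rerun an algorithm for many parameter values can be illustrated with a small sketch. It assumes a toy sphere objective and a hypothetical stochastic local search (`local_search`) whose only control parameter is the Gaussian step size. A hypothetical `sweep` helper averages the final error over repeated runs for each candidate value. None of these names come from the studies cited here. The sketch only illustrates the general workflow of tuning by repetition.

```python
import random
import statistics

def sphere(x):
    """Toy benchmark (assumed): sum of squares, global minimum 0 at the origin."""
    return sum(v * v for v in x)

def local_search(f, dim, step, iters, rng):
    """Hypothetical stochastic local search with one control parameter: the step size."""
    x = [rng.uniform(-5, 5) for _ in range(dim)]
    fx = f(x)
    for _ in range(iters):
        y = [v + rng.gauss(0, step) for v in x]  # Gaussian perturbation of every coordinate
        fy = f(y)
        if fy <= fx:  # keep the candidate only if it is no worse
            x, fx = y, fy
    return fx

def sweep(steps, runs=20, dim=5, iters=500, seed=0):
    """Run the algorithm repeatedly for each parameter value and return the mean final error."""
    rng = random.Random(seed)
    return {s: statistics.mean(local_search(sphere, dim, s, iters, rng) for _ in range(runs))
            for s in steps}

results = sweep([0.01, 0.1, 1.0, 3.0])
best_step = min(results, key=results.get)  # parameter value with the lowest mean error
```

Averaging over several runs matters because a single stochastic run can make a poor setting look good by chance.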

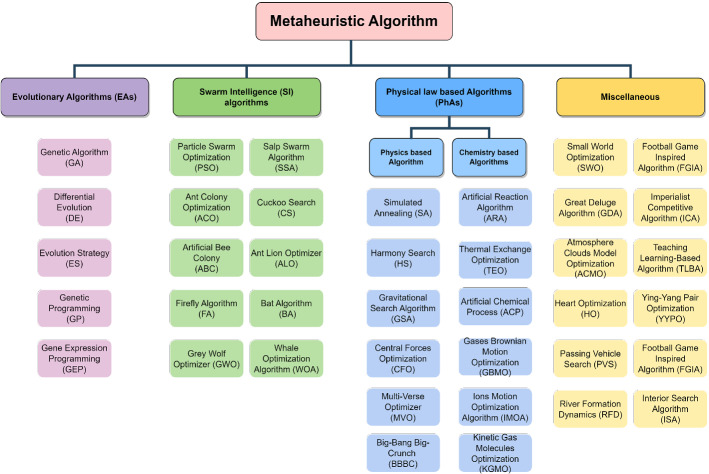

Taxonomy by source of inspiration

This is the oldest classification. It is also a useful one, because the concept of nature-inspired algorithms, or metaheuristics, is primarily based on natural or biological phenomena. Different authors have categorized MAs by source of inspiration in various ways. Fister Jr et al. (2013) classify them into four categories: swarm intelligence (SI) based algorithms, bio-inspired (non-SI) algorithms, physics- and chemistry-based algorithms, and other algorithms. Siddique and Adeli (2015) divide them into three subgroups: physics-based, chemistry-based, and biology-based algorithms. Molina et al. (2020) classify them into six subgroups: breeding-based evolutionary algorithms, SI-based algorithms, physics- and chemistry-based algorithms, human social behavior-based algorithms, plant-based algorithms, and miscellaneous. This study follows the widely recognized classification and divides MAs into four subgroups (Fig. 5), as follows:

Fig. 5.

Classification of MAs based on the source of inspiration

Evolutionary algorithms (EAs)

EAs are inspired by the Darwinian ideas of natural selection, or survival of the fittest. EAs start with a population of individuals and simulate sexual reproduction and mutation to create a generation of offspring. The process is repeated to preserve the genetic material that makes individuals better adapted to a particular environment while eliminating the material that makes them weaker.

Charles Darwin’s theory of natural evolution motivates the genetic algorithm (GA) and differential evolution (DE), while genetic programming (GP) applies the same paradigm of biological evolution to evolving computer programs. Other examples of EAs include gene expression programming (GEP), learning classifier systems (LCS), neuroevolution (NE), and evolution strategy (ES).
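The evolutionary cycle described above can be sketched as a minimal binary GA. The sketch assumes the OneMax toy problem (maximize the number of 1 bits), tournament selection, one-point crossover, bit-flip mutation, and single-individual elitism. These operator choices, together with all names and parameter values, are illustrative assumptions. A GA can be built from many other operators.

```python
import random

def onemax(bits):
    """Assumed toy fitness: number of ones; the optimum is the all-ones string."""
    return sum(bits)

def tournament(pop, fit, rng, k=3):
    """Selection: return the fittest of k randomly chosen individuals."""
    contenders = rng.sample(range(len(pop)), k)
    return pop[max(contenders, key=lambda i: fit[i])]

def genetic_algorithm(n_bits=30, pop_size=40, generations=60,
                      p_cross=0.9, p_mut=1 / 30, seed=1):
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    for _ in range(generations):
        fit = [onemax(ind) for ind in pop]
        elite = pop[max(range(pop_size), key=lambda i: fit[i])]
        children = [elite[:]]  # elitism: carry the best individual over unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(pop, fit, rng), tournament(pop, fit, rng)
            if rng.random() < p_cross:  # one-point crossover plays the role of reproduction
                cut = rng.randrange(1, n_bits)
                child = p1[:cut] + p2[cut:]
            else:
                child = p1[:]
            # bit-flip mutation introduces new genetic material
            child = [b ^ 1 if rng.random() < p_mut else b for b in child]
            children.append(child)
        pop = children  # the offspring replace the previous generation
    best = max(pop, key=onemax)
    return best, onemax(best)

best, score = genetic_algorithm()
```

Because the elite individual is copied unchanged, the best fitness in the population never decreases from one generation to the next.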

Swarm intelligence (SI) algorithms

Beni and Wang (1993) coined the term ‘Swarm Intelligence’ in 1989 in the context of cellular robotic systems, and SI has since become a popular topic in many fields. SI is defined as the collective behavior of a decentralized, self-organized system. The primary qualities of a swarm system are adaptability (learning by doing), extensive communication, and knowledge sharing. Individual organisms cannot defend themselves against a large predator or hunt for food on their own, so they rely heavily on swarming, even when searching for food. SI has inspired a vast number of MAs. For example, the intelligent social behavior of bird flocks motivates particle swarm optimization (PSO), and the way monkeys climb trees while looking for food motivates monkey search (MS). Similarly, the leadership hierarchy and hunting mechanism of grey wolves motivate the grey wolf optimizer (GWO). Other SI examples include, but are not limited to, ant lion optimizer (ALO), bat algorithm (BA), firefly algorithm (FA), ant colony optimization (ACO), cuckoo search (CS), artificial bee colony (ABC), and glowworm swarm optimization (GSO).
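As a concrete instance of swarm-based search, the following sketch implements the common global-best PSO update with an inertia weight. Each particle is pulled toward its own best position (its memory) and toward the best position found by the whole swarm (shared knowledge). The inertia weight `w`, the acceleration coefficients `c1` and `c2`, the sphere test function, and the bounds are assumed illustrative values. They are not settings taken from this review.

```python
import random

def sphere(x):
    """Assumed test function: global minimum 0 at the origin."""
    return sum(v * v for v in x)

def pso(f, dim=2, n_particles=20, iters=100, w=0.7, c1=1.5, c2=1.5,
        bounds=(-5.0, 5.0), seed=0):
    rng = random.Random(seed)
    lo, hi = bounds
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    pbest = [x[:] for x in xs]  # each particle's personal best position
    pbest_f = [f(x) for x in xs]
    g = min(range(n_particles), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]  # best position known to the whole swarm
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vs[i][d] = (w * vs[i][d]
                            + c1 * r1 * (pbest[i][d] - xs[i][d])  # cognitive term
                            + c2 * r2 * (gbest[d] - xs[i][d]))    # social term
                xs[i][d] += vs[i][d]
            fx = f(xs[i])
            if fx < pbest_f[i]:
                pbest[i], pbest_f[i] = xs[i][:], fx
                if fx < gbest_f:
                    gbest, gbest_f = xs[i][:], fx
    return gbest, gbest_f

best_x, best_f = pso(sphere)
```

Here the random factors `r1` and `r2` are drawn separately for each dimension. Some implementations draw them once per particle instead.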

Physical law-based algorithms (PhAs)

Algorithms inspired by physical and chemical laws fall into this subcategory. PhAs can be further subclassified as follows:

(i) Physics-based algorithms:

Gravitation, the big bang, black holes, galaxies, and fields are the main sources of ideas in this subcategory. The consumption of stars by a black hole and the generation of new stars motivate the black hole algorithm (BH). Harmony search (HS) is based on the improvisation process of musicians. Simulated annealing (SA) is based on the annealing process in metallurgy. In annealing, metal is heated to a high temperature and then cooled slowly, which reduces defects and makes the metal easier to work with. Other examples include the big bang-big crunch algorithm (BBBC), central forces optimization (CFO), charged systems optimization (CSO), electro-magnetism optimization (EMO), galaxy-based search algorithm (GBS), and gravitational search algorithm (GSA).

(ii) Chemistry-based algorithms:

MAs inspired by chemical principles and phenomena, such as molecular reactions, Brownian motion, and molecular radiation, fall into this category. Gases Brownian motion optimization (GBMO), artificial chemical process (ACP), ions motion optimization algorithm (IMOA), and thermal exchange optimization (TEO) are a few examples.

Miscellaneous

Algorithms based on miscellaneous ideas, such as human behavior, game strategies, mathematical theorems, politics, and artificial thought, fall into this category. The creation, movement, and spread of clouds inspire the atmosphere clouds model optimization algorithm (ACMO), whereas share trading on the stock market motivates the exchange market algorithm (EMA). Other examples are the grenade explosion method (GEM), heart optimization (HO), passing vehicle search (PVS), simple optimization (SO), small world optimization (SWO), yin-yang pair optimization (YYPO), and great deluge algorithm (GDA).

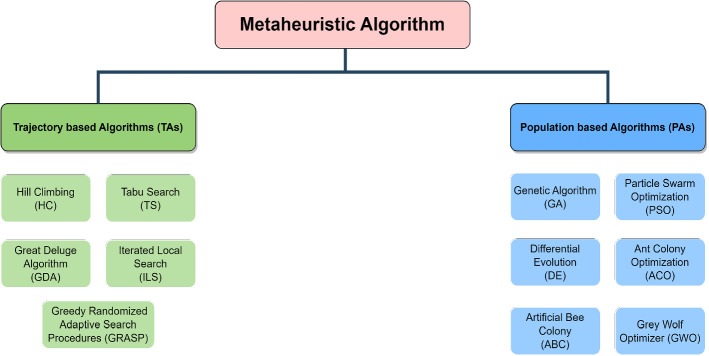

Taxonomy by population size

Multiple agents working together have several advantages over a single agent, such as information sharing and memory of previously found solutions. Inspired by this, researchers try to find the best solution using multiple agents. When exploring a region, several agents have been shown to be superior to a single agent. In the literature, existing algorithms are classified into two categories: trajectory-based and population-based algorithms (Fig. 6) (Yang 2020).

Fig. 6.

Classification of MAs based on the size of the population

Trajectory-based algorithms (TAs)

Most classical algorithms are trajectory-based: the movement of the single solution over the iterations traces one trajectory. The procedure starts from a random estimate, and the result is refined at each subsequent step. For example, simulated annealing (SA) involves a single agent, or solution, that moves piecewise through the design or search space. Better moves are always accepted, whereas worse moves are accepted only with a certain probability, which decreases as the temperature is lowered. These moves create a path through the search space, and there is a nonzero probability that this path will lead to the global optimum. Hill climbing (HC), tabu search (TS), great deluge algorithm (GDA), iterated local search (ILS), and greedy randomized adaptive search procedures (GRASP) are a few examples of this category.
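The acceptance rule that produces SA's single trajectory can be sketched as follows. The sketch makes several assumptions: a one-dimensional Rastrigin-type test function, uniform neighborhood moves, a geometric cooling schedule, and the Metropolis criterion exp(-Δ/T) for accepting worse moves. The schedule and all parameter values are illustrative assumptions, not recommended settings.

```python
import math
import random

def rastrigin_1d(x):
    """Assumed multimodal test function; global minimum 0 at x = 0."""
    return x * x - 10 * math.cos(2 * math.pi * x) + 10

def simulated_annealing(f, x0, t0=10.0, cooling=0.95, steps_per_t=50,
                        t_min=1e-3, step=0.5, seed=0):
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best_x, best_f = x, fx
    t = t0
    trajectory = [x]  # a single agent traces one path through the search space
    while t > t_min:
        for _ in range(steps_per_t):
            y = x + rng.uniform(-step, step)  # random neighbor of the current solution
            fy = f(y)
            delta = fy - fx
            # Better moves are always accepted; worse ones with probability exp(-delta / t)
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                x, fx = y, fy
                trajectory.append(x)
                if fx < best_f:
                    best_x, best_f = x, fx
        t *= cooling  # geometric cooling: worse moves become less likely over time
    return best_x, best_f, trajectory

best_x, best_f, path = simulated_annealing(rastrigin_1d, x0=4.3)
```

Recording `trajectory` makes the trajectory-based nature explicit, because only one current solution exists at any time.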

Population-based algorithms (PAs)

Most well-known algorithms fall into this category. Population-based algorithms use multiple search agents, so they maintain diversity and explore the search space extensively. For this reason, they are sometimes called exploration-based algorithms. Elitism, which retains the best solutions across iterations, is also easy to implement in this setting, which is an additional advantage. Genetic algorithm (GA), particle swarm optimization (PSO), ant colony optimization (ACO), and firefly algorithm (FA) are a few examples of this category.

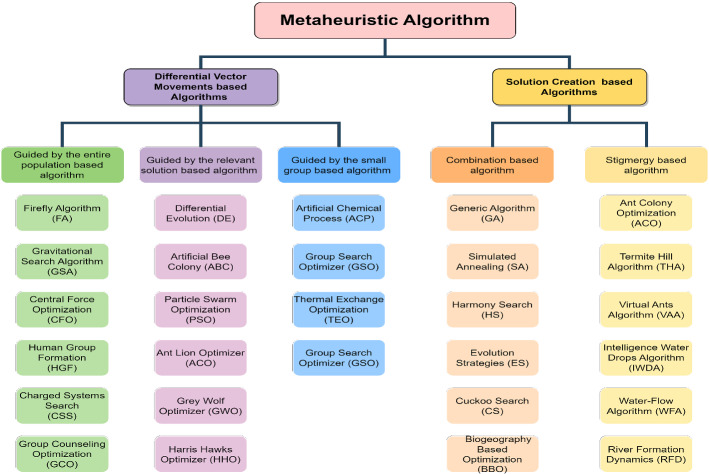

Taxonomy by movement of population

Molina et al. (2020) categorize MAs by their behavior rather than their source of inspiration. The key feature of this classification is how the population is updated for the next iteration, which makes it useful for identifying algorithms that behave alike. According to them, MAs can be classified as algorithms based on differential vector movement and algorithms based on solution creation (Fig. 7).

Fig. 7.

Classification of MAs based on the movement of the population

Differential vector movement (DVM)

DVM creates new solutions by shifting or mutating existing ones. A newly generated solution may compete against earlier solutions or against other members of the population to earn a place in the population and remain there in subsequent search cycles. This category is further subdivided according to the solutions that guide the movement, and hence the search: (i) the entire population; (ii) only the meaningful or relevant solutions, e.g., the best and/or worst candidates in the population; and (iii) a small group, which could represent the neighborhood around each solution or, in subpopulation-based algorithms, only the subpopulation to which each solution belongs.

Solution creation (SC)