Abstract

Accurately predicting peptide secondary structures remains a challenging task due to the lack of discriminative information in short peptides. In this study, PHAT is proposed, a deep hypergraph learning framework for the prediction of peptide secondary structures and the exploration of downstream tasks. The framework includes a novel interpretable deep hypergraph multi‐head attention network that uses residue‐based reasoning for structure prediction. The algorithm can incorporate sequential semantic information from large‐scale biological corpus and structural semantic information from multi‐scale structural segmentation, leading to better accuracy and interpretability even with extremely short peptides. The interpretable models are able to highlight the reasoning of structural feature representations and the classification of secondary substructures. The importance of secondary structures in peptide tertiary structure reconstruction and downstream functional analysis is further demonstrated, highlighting the versatility of our models. To facilitate the use of the model, an online server is established which is accessible via http://inner.wei‐group.net/PHAT/. The work is expected to assist in the design of functional peptides and contribute to the advancement of structural biology research.

Keywords: explainable deep hypergraph learning, hypergraph multihead attention network, peptide secondary structure prediction

Accurately predicting peptide secondary structures remains a challenging task due to the lack of discriminative information in short peptides. Based on transfer learning and hypergraph algorithm, sequential semantic information can be incorporated from large‐scale biological corpus and structural. semantic information from multi‐scale structural segmentation, leading to better accuracy and interpretability even with extremely short peptides.

1. Introduction

Peptides have recently emerged as potential therapeutic molecules against various diseases, and have garnered increasing attention due to their many advantages, including high specificity, high penetration, low production cost, and ease of manufacturing and modification.[ 1 ] Various disease‐specific functional peptides have entered the global market, including antiviral peptides (AVPs), antimicrobial peptides (AMPs), and anticancer peptides (ACPs).[ 2 , 3 , 4 ] Specifically, a family of peptides known as cell‐penetrating peptides (CPPs) has shown enormous success in the cellular uptake of therapeutic molecules.[ 5 ] Currently, over 40 cyclic peptide drugs are in clinical use, and approximately one new cyclic peptide drug is approved for clinical use each year on average.[ 6 ] Furthermore, predicting the secondary structure of bioactive peptides can provide key insights into the functional mechanisms of peptides and could serve as a basis for designing peptides with desired functions.[ 1 ] Predicting the secondary structure of peptides is an intermediate step in predicting 3D or tertiary structures, all of which are essential determinants of peptide bioactivity.[ 7 ] Therefore, reliable and accurate computational methods for predicting the secondary structures of peptides are urgently needed.

Many efforts have been made to predict the secondary structure of proteins through computational approaches, most of which are based on machine learning algorithms. For instance, Heffernan et al. developed a multi‐task deep learning model[ 8 ] in which a long‐ and short‐term memory bidirectional regression neural network (LSTM‐BRNNS) was constructed to capture both short‐term and long‐term residue interaction relationships.[ 9 ] Li et al. developed the diffusion convolutional recurrent neural network (DCRNN), a hybrid neural network that alleviates the local features derived from convolutional neural networks (CNNs) and the global features captured from stacked bi‐directional gated recurrent units (BIGRU) to predict the secondary structures of proteins.[ 10 ] Similarly, Busia et al. integrated CNN and residual connections to predict the secondary structures of peptides and achieved good performance, demonstrating the importance of the primary protein sequence information in secondary structure prediction.[ 11 ] In addition to the above methods, there are many other protein secondary structure predictors, such as DeepCNF, JPRED, PROTEUS2, RaptorX, and MUfold‐SSW, among others.[ 12 , 13 , 14 , 15 , 16 , 17 ] However, these methods are designed for the prediction of protein structures and are not applicable for secondary structure prediction due to the inherent structural differences between peptides and proteins. For example, evolutionary information is frequently integrated and used for model training in the prediction of protein secondary structures, and potential biases might be introduced when designing peptide secondary structure models due to the short length of peptides. Additionally, previous studies have demonstrated that even for identical segments of residues in proteins and peptides, their secondary structures might be quite different.[ 1 ] One possible reason is that proteins have more complex tertiary structures, which presumably leads to changes in secondary structures. Particularly, hydrophobic collapse is a major force responsible for a well‐defined tertiary structure. However, this phenomenon is only applicable to proteins and not peptides.[ 18 ] Therefore, developing a peptide‐specific secondary structure prediction method is urgently needed.

Singh et al.[ 1 ] proposed PEP2D, the first peptide‐specific secondary structure predictor that trains a random forest (RF) model with peptide sequential and evolutionary data and achieves good performance. Recently, Cao et al.[ 19 ] designed PSSP‐MVIRT (peptide secondary structure prediction based on multi‐view information, restriction, and transfer learning) for the prediction of peptide secondary structures, employing CNNs and BIGRU to learn high‐latent features and introducing transfer learning to overcome the lack of training data. In addition to the aforementioned methods, there are several other peptide structure prediction methods, such as PEP‐FOLD.[ 20 ] However, existing methods have several limitations. Particularly, most of them rely heavily on feature engineering to design handcrafted features, the quality of which might greatly impact the predictive performance because the feature design is based on the researchers’ prior knowledge. Additionally, existing protein‐specific secondary structure prediction methods focus on long‐distance dependence of sequences with hundreds of residues rather than local fragments, whereas peptide‐specific methods focus more on neighborhood information among residues, thus easily ignoring global information. Ultimately, although deep learning has been successfully used in secondary structure prediction, the current methods still follow a “black box” model and lack good interpretability. These shortcomings limit our ability to predict the relationships between peptide primary sequences and their secondary structures.

In this study, we propose an innovative deep learning framework called PHAT (hypergraph learning and transfer learning‐based framework for peptide structure exploration) to predict peptide secondary structures and explore the related downstream functional analysis. Importantly, our PHAT incorporates several novel features: i) we introduce a powerful pre‐trained protein language model[ 21 ] to transfer semantic knowledge from large‐scale proteins to peptides and learn high‐latent and long‐term features of peptide residues. ii) Considering the local continuity and diversity of peptide secondary structures,[ 22 , 23 ] we equip PHAT with a novel HyperGMA (hypergraph multihead attention network), in which we can encode peptide residues with multi‐semantic secondary structural information while capturing contextual features from consecutive regions using multi‐level attention mechanisms. Additionally, our constructed hypergraph effectively prevents over‐smoothing, which is a common issue in conventional graph networks (e.g., GCN,[ 24 ] GAT[ 25 ]). iii) To reveal the predicting mechanisms of PHAT, the transition and emission matrices were visualized in conditional random fields (CRFs) that can automatically learn a set of biologically meaningful knowledge on secondary substructures. This overcomes the limitations of “black‐box” approaches in deep learning‐based models to some extent and provides good interpretability of our PHAT framework. iv) We also demonstrated that the structural predictions obtained from our model can assist in peptide‐related downstream tasks, such as the prediction of peptide toxicity,[ 26 ] T‐cell receptor (TCR) interactions with MHC (major histocompatibility complex)–peptide complexes,[ 27 ] and protein–peptide binding sites. v) A case study demonstrated that our PHAT can also accurately predict distance map and contact map matrices, which can be further used for the reconstruction of peptide 3‐D structures. Benchmarking results indicated that the proposed PHAT significantly outperforms the state‐of‐the‐art methods in either 3‐state or 8‐state secondary structure prediction, demonstrating the superiority and robustness of our model. To facilitate the use of our method, we established a code‐free, interactive, and nonprogrammatic web interface of PHAT at http://inner.wei‐group.net/PHAT/, which can lessen the programming burden for biological and biomedical researchers.

2. Experimental Section

2.1. Datasets

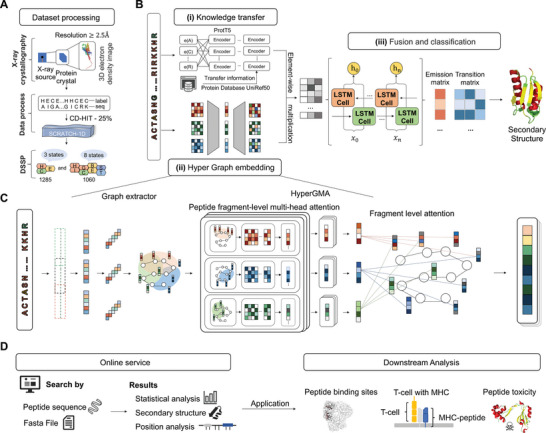

To evaluate the effectiveness of the model, the same benchmark dataset commonly used as a “gold standard” dataset in several studies is used.[ 19 , 28 ] The dataset contains 5772 secondary structures of protein data with three structural states: helix (H), strand (E), and coil (C). The dataset processing process is illustrated in Figure 1A. Specifically, the protein structures are derived from X‐ray crystallography, and this process is executed with a resolution of at least 2.5 Å, with no chain breaks and less than five unknown amino acids. The sequence similarity in this dataset is reduced to 25% to ensure a fair performance evaluation. Additionally, there are some sequences containing the “X” symbol, representing unnatural residues in this dataset. Following the same data pre‐processing in,[ 19 ] the unnatural sequences were removed including the “X” symbol, and 4542 protein and peptide sequences were retained. Afterward, among the remaining sequences, the sequences were selected with <100 residues lengths, finally yielding 1285 peptide sequences as the three‐structure‐state dataset. The length of the sequences ranged from 30 to 99 residues. Moreover, previous studies have demonstrated that the secondary structures of protein and peptides can also be defined with eight states, including H (alpha‐helix), G (310helix), I (π‐helix), E (extended beta‐strand), B (isolated beta‐strand), T (turns), S (bend), and others (C).[ 8 , 29 , 30 ] To account for this scenario, a new dataset of 1,060 peptide sequences was further constructed, derived from the DSSP (Dictionary of Protein Secondary Structure) structure database.[ 31 ]

Figure 1.

The workflow and framework of PHAT. A) Dataset processing. We extracted the benchmark datasets from SCRATCH‐1D, where the protein and peptide structures were derived with X‐ray crystallography and operated with a resolution of at least 2.5 angstroms, for three‐state and eight‐state secondary structures. B) Architecture of deep learning network in PHAT. The architecture consists of three modules: (i) Knowledge transfer module, (ii) Hyper Graph embedding module, and (iii) Fusion and classification module. In Knowledge transfer module, the original sequences are encoded by a pretrained protein model to gain the features of peptide residues. In Hyper Graph embedding module, the peptide sequences are constructed into hypergraph structures and embedded by HyperGMA. In fusion and classification module, the outputs of the Knowledge transfer module and the Hyper Graph embedding module are firstly fused through the element‐wise multiplication and better integrated by the Bi‐LSTM. Then the output of Bi‐LSTM is inputted into the CRF layer, and as a result, the secondary structure of related residues can be predicted. C) Illustrates the details of Hyper Graph embedding module. In the part of graph extractor, peptide sequences are firstly sliced into fragments with specific length and constructed as hyperedges of the hypergraph structure. Then the hyperedges are cut into residue groups to be built as hypernodes in the hypergraph structure. Next, the hypergraph structure from graph extractor is inputted into HyperGMA to capture the multi‐scale relationships in view of residue groups and peptide fragments by the multi‐scale attention mechanism. D) Online service. Our web server of PHAT is freely available to provide researchers with peptide details in three‐state or eight‐state secondary structures, statistical analysis, and position analysis. The predictions of our model can be applied in many downstream tasks as in Downstream Analysis.

2.1.1. Training and Testing Datasets

To account for the characteristics of short peptide sequences and fairly evaluate the performance of the methods, the dataset was divided into two categories: >50 residue sequences and ≤50 residue sequences. The sequences with ≤50 residues consisting of 257 peptide sequences (with H of 5,294, E of 1,119, and C of 3,733) were used as the test set. The remaining 1,028 peptide sequences were used as the training dataset. For model training, 10% peptide sequences was randomly selected as the validation set from the training dataset to adjust the parameters of the model. Additionally, the training and testing datasets were labeled with the three‐state secondary structures, with the sequence length of peptides ranging from 30 to 100. For the eight‐structure‐state dataset,1060 sequences were also collected to re‐train and test the model. The details of the datasets are summarized in Tables S1 and S2 (Supporting Information).

2.2. Architecture of the Deep Learning Network of PHAT

The overall network architecture of the deep learning network of PHAT is illustrated in Figure 1B with three main modules: i) knowledge transfer module, ii) hypergraph embedding module, and iii) feature fusion and classification module. Specifically, the PHAT framework only takes peptide sequences as input. In module (i), to address the scarcity of peptides, the model employs and fine‐tuned and pretrained large‐scale protein language model called ProtT5 for the analysis of the peptide datasets. By doing so, rich contextual information can be transferred from large‐scale protein sequences to the model and learn discriminative feature embeddings of peptide sequences. In module (ii), a HyperGMA (hyper graph multi‐head attention network) is proposed to learn local and global features. Specifically, given a peptide sequence, the model first exploits the graph extractor to divide the peptide sequence into fragments with particular lengths as hyperedges and residue groups as hypernodes. Then, by using the hyperedges and hypernodes, the hypergraph structure is constructed and passed it to the HyperGMA to integrate the sequence information of different scales in the hypergraph structure. The model can capture both local and global features at the residue group level and peptide fragment level through the multiscale hypergraph attention mechanism. Afterward, in module (iii), the feature embeddings is integrated from the above two channels (knowledge transfer module and hypergraph embedding module) through an element‐wise multiplication strategy. Furthermore, the model adopts Bi‐LSTM (bidirectional long short‐term memory networks)[ 32 ] to improve and optimize the feature representation ability and exploits CRFs to learn useful correlations among the sub‐secondary structures. Finally, PHAT takes the resulting features from module (iii) as the input of a Viterbi algorithm and predicts the structural state to which each peptide residue belongs.

2.2.1. Feature Embedding from the Pretrained Model ProtT5

Although there are some differences between proteins and peptides in terms of structure, they are similar in many aspects such as the transcription process and residue sequence composition. Therefore, the pre‐trained model ProtT5 was used based on the t5‐3b model,[ 33 ] which was pre‐trained using the UniRef50 database[ 34 ] (i.e., a database consisting of 45 million protein sequences), in a self‐supervised manner to transfer semantic knowledge from proteins to peptides. Its weight was pretrained with a BERT‐like mask language model denoising objective using raw protein sequences without labeling. The model can fully learn the semantic information and generate different residue features belonging to multiple expressions in different context scenarios.

The original peptide sequences are fed into ProtT5, and the output vectors are extracted from many encoder blocks that are dependent on the self‐attention mechanism. Each encoder block calculates the attention for each residue with all residues in the sequence, aiming to obtain the relevance and importance between every two residues. The calculation formula of the self‐attention mechanism is as follows:

| (1) |

where Q, K, and V are the query vector, key vector, and value vector of the corresponding individual residues in the peptide sequence, respectively, and d Key is the dimension of the input key vector.

2.2.2. Hypergraph Multihead Attention Networks

Inspired by the previous studies for hypergraphs in natural language processing,[ 35 ] a hypergraph structure was constructed by taking the peptide residue groups as nodes and the peptide fragments as edges. Based on this structure, a novel HyperGMA was proposed. Figure 1C shows the hypergraph construction process and HyperGMA architecture. i) The peptide sequence was inputted into the graph extractor, which takes a particular length as the sliding window size and moves the sliding window to select the sequence fragments with cross residues. ii) The sequence fragment is divided into smaller residue groups in a similar way as in step i) but with a smaller sliding window size. The residue groups are regarded as hypernodes and the peptide fragments are taken as the hyperedges. iii) The structure of the hypergraph is constructed using the hyperedges and hypernodes generated from steps (i) and (ii). iv) Then, the hypergraph structure is inputted into HyperGMA to extract the graph embeddings of the peptide sequence.

The context of residues in a peptide sequence describes the language characteristics of local co‐occurrence among residues, and its function in sequence representation learning has also been proved to be effective. In the model, two residues were established as a group, based on which 400 types of groups were identified. Moreover, a set of residue groups is regarded as a hyperedge, which is a sequence fragment with a specific length. This enables the model to simultaneously capture structural information both at the residue group level and peptide fragment level. Specifically, a hypergraph is defined as G = (ν, ε), where ν = {v 1, v 2, …, vn } represents a set of n nodes in the graph, and ε = {e 1, e 2, …, em } represents a set of m hyperedges. Moreover, the model can connect two or more nodes for any hyperedge ej .

2.2.3. Residue Group‐Level Multihead Attention

| (2) |

where k represents the index of the residue group (hypernode) in the fragment (hyperedge) ej , j indicates the index of the fragment in edge set ε, vk ∈ ej indicates that vk is contained in fragment ej , is the representation of residue group (hypernode) vk at layer l, σ is the activation function LeakyReLU, W m is the weight matrix trained in the m‐head attention, and m represents the head number of multi heads. α jk is the attention coefficient of the residue group vk in the fragment ej , which can be computed by:

| (3) |

where is a weight vector for measuring the importance of residue groups in the m‐head attention, vp ∈ ej represents that residue group vp is contained in fragment ej , and T means “transpose.” uk represents vk on the hypergraph defined as:

| (4) |

The expression represents hyperedge ej from m‐head attention at layer l. The multi‐head attention mechanism was constructed, connected it, and compressed it to the desired dimension after the layer was fully connected. This structure is aimed to capture residue context information. The output represents the connected representation of hyperedge ej at layer l.

2.2.4. Peptide Fragment‐Level Attention

With the representations of all peptide fragments (hyperedges) as connecting to residue group vi , the fragment level attention mechanism was introduced to capture the structural information of peptide fragments with distance interval for learning the next‐layer representation of residue group vi , which is expressed as follows:

| (5) |

where is the output representation of residue group (hypernode) vi (vi ∈ ν) at layer l, i represents the index of the residue group (hypernode) in the node set ν, all the hyperedges containing residue group vi are in ε i , and W fragment is a weight matrix. ej is a fragment (hyperedge) divided at a fixed length from peptide sequence, and ε i is the set of fragments of the peptide.

β ij shows the attention interaction of peptide fragment (hyperedge) ej on residue group (hypernode) vi . The computing process is described below:

| (6) |

where is a weight vector similar to but for measuring the importance of peptide fragments

| (7) |

| (8) |

|| represents the concatenation operation, · is matrix multiplication, and W d is a trainable matrix for dimensional reduction.

2.2.5. Bidirectional Long Short‐Term Memory and Conditional Random Field

The secondary structural information in peptide sequences is often related to the residues in the forward and backward peptide fragments. Therefore, Bi‐LSTM (bidirectional long short‐term memory networks) to extract information from two directions in the peptide sequence. Additionally, the previously learned features from ProtT5 and HyperGMA are fused in the form of element‐wise multiplication, which may introduce redundant information. Therefore, a layer of Bi‐LSTM was added to better integrate them and provide a sequence‐level view for the CRF layer. Bi‐LSTM is a deep‐learning architecture with two LSTM layers in different directions, which can capture the dependence of long‐distance residues, and selectively learn and forget information with corresponding importance through training.[ 36 ] Moreover, LSTM has three gate structures (inputting gate, forgetting gate, and outputting gate) and a Cell State's hiding state. In LSTM, the inputting gate is responsible for processing the input of the current sequence position, whereas the forgetting gate controls whether the hidden cell state of the upper layer must be forgotten based on probability. The results of the forgetting gate and inputting gate will act on the cell state. Then, information from the previous sequence, the current sequence, and the cell state will be combined with the activation function and weights to obtain the output. Therefore, the model can better capture semantic information of peptide sequences and the prediction can more accurately select Bi‐LSTM, as shown in Figure S1 (Supporting Information).

To the best of the knowledge, the model is the first to determine the probability of each residue belonging to specific secondary structures by adding a linear layer with the softmax function behind the Bi‐LSTM, after which the label with the highest probability can be obtained. However, this will ignore the correlation among secondary structures and decrease the prediction performance. Alternatively, the CRF approach was chosen, which is widely used in named entity recognition to predict secondary structures, while exploring the context‐related interactions between secondary structures and residue‐level contributions to all secondary structures.

CRFs consist of emission matrices including the probability of residues occupying different secondary structure states and transition matrices including the likelihood of transferring one secondary substructure state to another. During the training process, the model uses the forward and backward algorithms to infer the conditional probability of the secondary structures at each position of the sequence and finally predict the secondary structure by the scoring matrices. The specific calculation process is described below.

There are two kinds of feature functions. The first is referred to as the emission function, which is only related to the current position i in the peptide sequence:

| (9) |

where x represents all residues of the peptide, yi represents the secondary structure at position i, and L indicates the number of all secondary structures.

The second function is defined in the context of secondary structures and is referred to as the transition function, which is related to the current structure yi and the previous structure y i − 1:

| (10) |

where K indicates the number of all permutations of two secondary structure states, which is 9 for 3‐state secondary structures and 64 for 8‐state secondary structures.

Assuming thatK 1 transition functions and K 2 emission functions, there are a total of K 1 + K 2 feature functions. The formula fk (y i − 1, yi ,x, i) was then used to express them:

| (11) |

The weight coefficient fk (y i − 1, yi ,x, i) was also unified with wk:

| (12) |

where λ k represents the weight coefficient of the k‐th transition function and µ l represents the weight l‐th coefficient of the emission function.

The parametric form is simplified as:

| (13) |

Z(x) is the normalization factor:

| (14) |

In the traditional CRF, it is found that the only global transition matrix is easily affected by the noise from datasets, resulting in unstable prediction results. To solve this problem, the outputs from Bi‐LSTM into linear layers are first arranged, transferring the outputs to local transition matrices with the same dimension as the global transition matrix. Then, the local transition matrices were connected to the global transition matrix, as using the fused transition matrices improved the ability of the model to assess different datasets. The details of the CRF architecture are shown in Figure S2 (Supporting Information).

2.3. Model Training and Predicting Process

2.3.1. Training Process

The Bi‐LSTM‐CRF layer was introduced to fuse features and predict the secondary structure of peptides. In Bi‐LSTM‐CRF, the secondary structure label paths are constructed with the emission and transition matrices. The loss function of the model consists of two parts, the score of the real label path and the total score of all paths, with different secondary structure label combinations. The score of the real path should be the highest in all paths and the goal of the optimization is to minimize the gap between the predicted score and the real score.

If a certain path is a real path and the secondary structure label sequence is the correct prediction result, then it should have the highest score of all possible paths. According to the following loss function, the parameters of the model will be updated continuously with every iteration of the training process, making the ratio of the score of the real path to the total score larger.

| (15) |

Assuming that the score of each possible path is Si , and there are n paths in total, then the total score of all paths is (where e is Euler number):

| (16) |

Next, the composition of Sk can be expressed as follows:

| (17) |

| (18) |

The is the score function resulting in a probability to predict the current residue xi as the secondary structure yi .

| (19) |

where is the score function in support of generating the probability of transferring the secondary structure yi to yj .

2.3.2. Prediction Process

In the prediction process, the Viterbi algorithm[ 37 ] is used to obtain the secondary structure prediction. The Viterbi algorithm is a dynamic programming algorithm that uses the start and end states and the recurrence formula to gain the secondary structure labels. The input of the Viterbi algorithm consists of K feature functions of the model, K weights related to the functions, the observation peptide sequence x = (x 1,x 2,…, xn ), and the number of secondary structure states m. The output of this calculation is the optimal prediction secondary structure label sequence . The details of the prediction process of the Viterbi algorithm are described below.

First, the start recursive algorithm is initialized as:

| (20) |

| (21) |

where L is the number of secondary structure labels.

For i = 1, 2, …, n − 1, the recursion formula is performed as follows:

| (22) |

| (23) |

When the following condition occurs, program recursion is stopped:

| (24) |

Through the backtracking algorithm, the final prediction structure is obtained:

| (25) |

In the end, the prediction is:

| (26) |

2.3.3. Performance Metrics

The performance of our proposed PHAT is evaluated by the accuracy and SOV (segment overlap measure) for each secondary structure state. Acci, F1‐scorei {i represents the secondary structure element [H(Helix), E(Sheet) or C(Coil) for 3‐state and H(alpha‐helix), G(310helix), I(π‐helix), E(extended beta‐strand), B(isolated beta‐strand), T (turns), S (bend) and others (C) for 8‐state]}, the accuracy in all states (hereinafter referred to as Acc), and SOV are calculated as follows:

| (27) |

| (28) |

| (29) |

| (30) |

| (31) |

where Ai is the sum of correctly predicted residues in each state; Aii is the number of correctly predicted residues in state i; α i is the proportion of state i in the entire test set; Pi indicates the proportion of residues correctly predicted to be i among those predicted to be i; Ri is the proportion of residues correctly predicted to be i among residues with the actual i; s1 and s2 are segments corresponding to actual and predicted secondary structures; len(s1) represents the number of residues defining the segment s1; max ov(s1, s2) is the maximum length overlap of s1 and s2 for which either of the segments has a residue in state i; min ov(s1, s2) represents the length overlapping s1 segments and s2 segments. δ(s1, s2) is calculated as follows:

| (32) |

The normalization value N is a sum of N(i) over the entire set of conformational states:

| (33) |

SOV was introduced because the segment overlap measure treats H, E, and C on an equal basis (eight‐state assignment is the same). There are no arbitrary cutoffs on segment length, thus ensuring a consecutive and threshold‐free assessment of prediction accuracy.

3. Results

3.1. PHAT Outperforms Existing Methods When Analyzing an Independent Testing Set

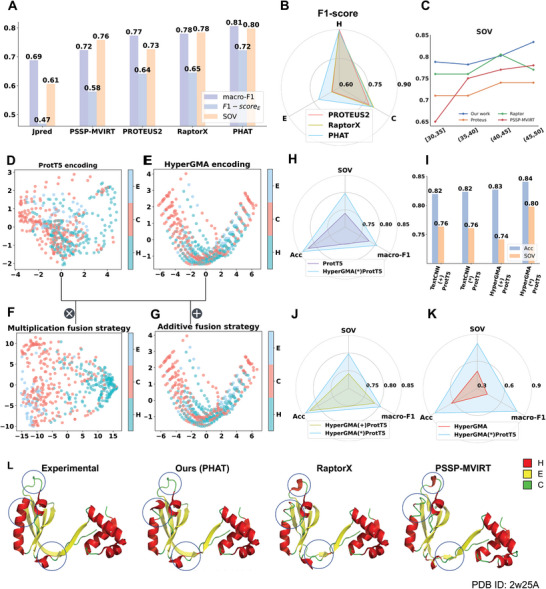

To evaluate the performance of the proposed PHAT in peptide secondary structure prediction, we compared it with four state‐of‐the‐art methods: PROTEUS2,[ 14 ] RaptorX,[ 16 ] Jpred,[ 12 ] and PSSP‐MVIRT.[ 19 ] The first three were designed for protein secondary structure prediction whereas the other is for peptide secondary structure prediction. To ensure a fair comparison, the models were executed and evaluated using the same independent test set. As shown in Table S3 (Supporting Information, PHAT achieved the best performance among all of the tested methods, with an Acc of 84.07%, AccH of 89.08%, AccE of 71.76%, AccC of 80.66%, and SOV of 79.78%. Specifically, compared to other existing methods, our method delivered 1.39% to 19.16% higher SOV values (see Figure 2A and Table S3, Supporting Information), which is an important metric at the segment level and evaluates the overall performance of the methods. The superior SOV performance of our proposed model might be related to the context information of the peptide sequences extracted by the Bi‐LSTM‐CRF layer and multi‐scale features captured by the hypergraph multi‐head attention network. Furthermore, all methods exhibited a relatively low accuracy in the prediction of the structural state E compared to the other two states (H and C). This was due to the low proportion of E in the dataset (see Figure 2B and Table S1, Supporting Information). Therefore, the existing models capture more information for the H and C states, rather than E, during model training. Nevertheless, our PHAT achieved the highest accuracy at E among all of the evaluated methods. This was likely because our multi‐head attention mechanism is capable of capturing a more informative structural representation of E. Additionally, the comparison results in the dataset of the eight‐state secondary structure shown in Table S10 (Supporting Information) also demonstrate the outstanding performance of our method. Therefore, we concluded that our method is more effective than Jpred, PSSP‐MVIRT, PROTEUS2, and RaptorX in the prediction of peptide secondary structures, especially for AccE, Acc, and SOV. We also input the testing set into Alphafold[ 38 ] and trRosetta,[ 39 ] which are the most popular software for protein structure prediction, and extract secondary structure from their predicted PDB files using the DSSP algorithm. Interestingly, our method achieves similar or even better performance compared with them while using less computational and time costs (see Table S4, Supporting Information).

Figure 2.

The performances of our method and existing methods on independent test subsets, comparison of different encoding strategies, and visualization of different methods on one peptide: A) SOV, macro‐F1, and F1‐scoreH are used as the evaluation metrics; B) F1‐scores under three sub‐structures are used as the evaluation metrics. C) SOV of four methods at the different length intervals. D–G) represent PCA visualization results of individual features of ProtT5, HyperGMA, and the fusion features in multiplication or additive respectively; H–K) represent the comparison between multiplication fusion strategy and other three strategies. I) represents performance comparison between HyperGMA and TextCNN. L) The visualization of predictions by our method and other two methods for the peptide with PDB ID: 2w25.

3.2. Length Preference Investigation for Peptide Secondary Structure Prediction

Previous studies have demonstrated that the functionality of peptides (e.g., affinity) is easily affected by the length of sequences, with most bioactive peptides being normally less than 40 residues long.[ 19 , 40 , 41 ] To investigate if our model had length biases for peptide secondary structure prediction, we further explored whether peptide length affected the performance of our model. We first divided the test set into four subsets with different length intervals ([ 30 , 35 ], [ 35 , 40 ], [ 40 , 45 ], and [ 45 , 50 ]), then separately evaluated our model and existing methods using the subsets. Figure 2C and Figure S3 (Supporting Information) show the SOV, Acc, and F1‐score of the different methods for the prediction of peptide secondary structures using the aforementioned subsets. As illustrated in Figure S3 (Supporting Information), the performance of all of the tested methods clearly decreased as the length of the sequences declined, which indicates that shorter sequences are more difficult to predict as their contextual information is less. Furthermore, as illustrated in Figure 2C, the SOV score of our method was higher than that of the other methods in almost all ranges of peptide sequence lengths. Particularly, our PHAT exhibited an outstanding performance, with average Acc, SOV, and F1‐score values up to 7.02%, 6.21%, and 3.33% higher than the runner‐up PSSP‐MVIRT in different sequence length intervals. These results demonstrate that our method is better at the prediction of shorter peptides.

3.3. Exploration of the Optimal Architecture of Our Model

To investigate the performances of our model using different encoding strategies, we compared the prediction results of different encoding strategies including the two individual feature extractors (HyperGMA and ProtT5) and their different fusion combinations. Table S5 (Supporting Information) shows that our final element‐wise multiplication strategy achieves an Acc of 84.07%, AccH of 89.08%, AccE of 71.76%, AccC of 80.66%, and SOV of 79.78%, outperforming the Acc and SOV of ProtT5 by 1.77% and 5.79% and the fused extractor in the additive strategy by 1.36% and 5.64%, respectively. Furthermore, although ProtT5 performed better than HyperGMA, the model performed better than the individual extractors and the fused extractor in the additive strategy after fusing the features from HyperGMA and ProtT5 with the element‐wise multiplication fusion strategy. This indicated that the different information is complementary to each other in the fusion strategy, thus effectively improving the predictive performance of the model. Moreover, it can be seen from Figure 2H–K that the element‐wise multiplication fusion strategy of HyperGMA and ProtT5 achieved better performance than the fusion strategies of TextCNN and ProtT5 in terms of Acc and SOV.

To further illustrate the effect of different encoding strategies more intuitively, we visualized the distribution of feature representations in the test set, which reveals the discriminability of features for distinguishing different secondary sub‐structure states through dimension reduction. In the principal component analysis (PCA)[ 42 ] in Figure 2D–G, each point represents a site in the peptide sequence and different colors are used to distinguish the helix (H), strand (E), and coil (C) secondary structures. Compared with the two fusion strategies, the distribution of the site samples belonging to different classes in the feature space from the individual ProtT5 and HyperGMA are almost connected, making it difficult to distinguish the region for each secondary sub‐structure class. Regarding the two fusion strategies, the site samples of three classes are more clearly distributed in the feature space of the multiplication fusion strategy (Figure 2F) than in the feature space of the additive fusion strategy (Figure 2G). Furthermore, to avoid biases between different dimension reduction methods, we also applied another non‐linear method T‐SNE[ 43 ] for dimension reduction, and similar results can be seen in Figure S3 (Supporting Information). In conclusion, our results demonstrate that our PHAT with the multiplication fusion strategy can capture more discriminative and high‐quality features.

3.4. The PHAT Has Good Interpretability in Terms of Extracting Multiscale Features and Making Classifications

To verify the effect of the Bi‐LSTM‐CRF layer in our model, we compared the performance of our model under two training strategies (Cross Entropy loss function and Bi‐LSTM‐CRF), and the results are shown in Table S6 (Supporting Information). Clearly, our model with Bi‐LSTM‐CRF layer performed better (especially in terms of SOV) than the model using the Cross‐Entropy loss function. To explain how the Bi‐LSTM‐CRF efficiently predicts the secondary structure at each site in the peptide sequence, we randomly selected and predicted the secondary structures of the peptide sequence with PDB ID 1edm chain B (Protein Data Bank Identity). Afterward, we chose several sites of this peptide and visualized the corresponding weights of the transition matrix and emission matrices from our model in Figure 3A. As illustrated in Figure 3A, the secondary structure labels corresponding to the highest values in the emission matrices match the real secondary structures of the residues. Moreover, the probability of transferring the labels of the current residues to the real labels of the adjacent residues was the highest in the transition matrices.

Figure 3.

The interpretability of our model. A) Visualization of the weights of transition matrix and emission matrix in Bi‐LSTM‐CRF layer. The emission matrix and transition matrix are calculated by our model. The emission matrix shows the possibilities of current site in different classes and the transition matrix indicates the possibility of the secondary structure transformation in adjacent positions. B,C) Visualization of the attention matrices in hypergraph multi‐head attention network, where B represents the attention of peptide fragments to residue groups and C represents the attention of residue groups to peptide fragments. Darker color means stronger attention.

To further explore the role HyperGMA of in our model, we visualized and analyzed the attention matrices from HyperGMA in Figure 3. The HyperGMA includes two main steps, the residue group level attention encoding and the peptide fragment level attention encoding. In the first step, the feature representations of peptide fragments are aggregated from the contained residue groups through the multi‐head attention mechanism at residue group level. The contribution of each residue group to corresponding peptide fragments is shown in Figure 3B. Moreover, Figure 3C illustrates that the peptide fragments are more likely to reflect the characteristics of specific residue groups, meaning that the peptide fragments are more strongly influenced by local information. In the second step, the feature representation of the residue group is encoded by the peptide fragments where it exists through the fragment level attention mechanism. The contribution of the peptide fragment to corresponding residue groups is shown in Figure 3C, which indicates that a given residue group can aggregate the information from different fragments where it exists. Therefore, our model can better capture the local and global information by collecting secondary structure information at the residue group level and peptide fragment level using HyperGMA.

3.5. Application of Our PHAT in Three Peptide‐Related Downstream Tasks

Several experiments were conducted to verify that the secondary structures predicted by our method can be useful for downstream tasks. Figure 4A–C shows the results of prediction of peptide toxicity, prediction of T‐cell receptor interactions with MHC‐peptide complexes, and prediction of protein‐peptide binding sites, respectively. In Figure 4, it can be seen that when fused with the structure predictions of our PHAT model, the evaluated methods (ATSE, NetTCR‐2.0, and PepBCL) achieve higher performance in terms of most metrics than without the PHAT predictions. Similar results were observed with the methods fused with structure predictions from PROTEUS2 and PSSP‐MVIRT in the corresponding task.

Figure 4.

Comparative results for three downstream tasks. A) The results on the task of prediction of peptide toxicity. B) The results on the task of prediction of T‐cell receptor interactions with MHC‐peptide complexes. C) The results on the task of prediction of protein‐peptide binding sites. D) ROC curve and Precision‐Recall cure of comparison experiment in ATSE. E,F) Density of positive and negative examples under different confidence in prediction of peptide toxicity. G) ROC curve and Precision‐Recall cure of comparison experiment in NetTCR‐2.0. H, I) Density of positive and negative examples under different confidence in prediction of TCR interactions with MHC‐peptide complexes.

3.5.1. PHAT Has an Outstanding Performance for Aiding in Predicting Peptide Toxicity

We first used the methods (PSSP‐MVIRT, PROTEUS2, and PHAT) to predict the secondary structures of the dataset in ATSE,[ 26 ] a peptide toxicity predictor, and add the secondary structures from the three methods to ATSE. As shown in Figure 4D and Table S7 (Supporting Information), ATSE with our PHAT model achieved an SN of 95.06%, SP of 93.4%, Acc of 94.74%, MCC of 89.62%, AUC of 96.7% (the definition of these metrics can be found in Supplementary metrics), which constituted a 0.17%, 0.18%, 0.43%, 0.5%, and 1.1% higher performance than ATSE with PROTEUS2, and a 0.25%, 0.37%, 0.88%, 1.87%, and 0.8% higher performance than ATSE with PSSP‐MVIRT, respectively. Additionally, Figure 4E,F shows PHAT had an outstanding performance for the prediction and classification of ATSE, and there was also a general improvement over the original method. These results demonstrate the efficiency of our model to predict secondary structures to assist in peptide toxicity prediction. Particularly, the higher SOV of our method reveals that our model can more accurately capture the integrity and continuity of secondary structures, which may explain the superior performance of our method.

Secondary structure is an important determinant of toxicity.[ 44 ] However, few studies have used the secondary structure of peptides to predict peptide toxicity. Predicting the secondary structures of peptides by various methods can compensate for these limitations and build a bridge between peptide secondary structure and peptide toxicity.

3.5.2. PHAT Achieves Superior Performance for the Prediction of T‐Cell Receptor Interactions with MHC–peptide Complexes

Our prediction of the secondary structure of peptides can also be applied to the study of T‐cell receptor interactions with MHC‐peptide complexes. Here, we used the NetTCR‐2.0 method,[ 27 ] which has a CNN architecture, to predict the interactions between the α/β TCR and MHC–peptide sequences and assess the effect of adding secondary structures predicted from the three methods (PSSP‐MVIRT, PROTEUS2, and our PHAT). As indicated in Figure 4G and Table S8 (Supporting Information), analysis of the NetTCR‐2.0 dataset with PHAT achieved an average Acc of 94.04%, a precision of 45.54%, a recall of 78.6%, an F1‐score of 57.29%, and an AUC of 92.7%, which was higher than the original method by 0.61%, 3.51%, 2.61%, and 2.4%, respectively. Furthermore, our model outperformed the Acc, Precision, F1‐score, and AUC of PSSP‐MVIRT by 0.38%, 1.61%, 1.28%, and 1%, as well as PROTEUS2 by 0.59%, 2.29%, 1.82%, and 1.3%, respectively. Moreover, Figure 4H,I shows that PHAT achieved a better prediction of NetTCR‐2.0 classification.

Additionally, we found that two groups of α/β TCR sequences, which have similar sequences but different secondary structures, cannot be classified correctly using NetTCR‐2.0 without adding secondary structures. Fortunately, they were accurately predicted after introducing the secondary structure features from our PHAT. In Figure S4 (Supporting Information), we visualized the secondary structures of the two peptide sequences predicted by our method. Therefore, our findings demonstrated that the secondary structures predicted by our method provide useful biochemical information and improve the performance of NetTCR‐2.0. In conclusion, the above results can prove that our prediction of peptide secondary structures has a positive effect on promoting the accuracy of TCR tasks and provide a new direction for TCR research.

3.5.3. PHAT Exhibited Competitive Performance for Assisting in the Prediction of Protein–Peptide Binding Sites

Protein–peptide interactions are involved in various fundamental cellular functions and are crucial for designing new peptide drugs. To explore the effect of the secondary structures from our model in the prediction of protein‐peptide binding sites, comparison experiments with the PepBCL model were conducted.[ 45 ] Specifically, we first combined our structure predictions with the features from the PepBCL model. Then, protein–peptide binding site predictions were conducted based on a random forest machine learning method.[ 46 ] In a previous study that used the PepBCL model,[ 36 ] the secondary structure from SPOT‐1D‐Single was introduced to generate structural features, which we generated in the same way. In this context, the efficiency of our prediction can be verified by comparing secondary structures from several different sources (Table S9, Supporting Information). Our findings indicated that the application of peptide secondary structures predicted by our PHAT achieves significantly better performance than other methods. Some researchers have already incorporated secondary structures into their predictions. Moreover, the prediction of more accurate and continuous secondary structures may enhance the efficiency of site mining. As illustrated in Figure 4C, the features from PepBCL combined with the prediction of PHAT can achieve higher AUC and MCC than using peptide secondary structures from other methods.

3.6. The Visualization of Two Cases Demonstrated That Our Proposed PHAT Method Performs Better Than Existing Methods

To intuitively assess the performance of existing methods, we first randomly selected two peptide chains (PDB ID: 2w25A and 1ejbA) with experimental secondary structures, and applied different methods (PHAT, RaptorX, PSSP‐MVIRT, PROTEUS2, and Jpred) for the prediction of the secondary structure of two peptides. As illustrated in Figure 2L and Figure S5 (Supporting Information), the secondary structures from different methods were mapped into the tertiary structures, where the red area represents helix (H), the yellow area represents strand (E), and the green area represents coil (C). The differences between the structures predicted by our method and the experimental ones were smaller than those of the predictions of the other methods described above. In Figure 2L, our model achieved more correct helix (H) and strand (E) predictions, whereas the other methods were more likely to identify the helix (H) and strand (E) structures as a coil (C). Furthermore, in Figure S5 (Supporting Information), the other four methods (RaptorX, PSSP‐MVIRT, PROTEUS2, and Jpred) tended to predict the coil (C) as helix (H), whereas our method made more correct predictions in local consecutive sequence regions. In conclusion, our method can achieve better performance in terms of continuity and accuracy compared to the existing methods.

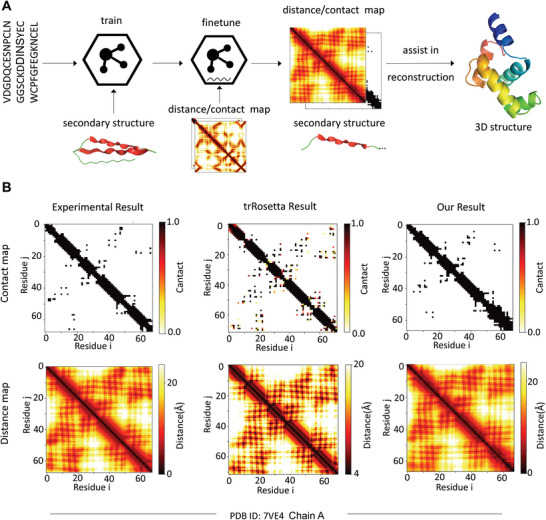

3.6.1. The Proposed PHAT Model Facilitates the Construction of 3‐D Peptide Structures

To explore the potential of PHAT in capturing 3‐D structure information of peptides, we used our model to predict the distance map and contact map matrices, which is an essential process in protein 3‐D structure prediction. The workflow of exploration is shown in Figure 5A. Specifically, our PHAT model was first trained using a secondary structure dataset to capture the 2‐D structure information of the peptide. Then, a fully connected network was added to our model and fine‐tuned using the contact map dataset (the details are shown in Table S11, Supporting Information) to obtain the distance information of the 3‐D structure. Next, we calculated the distance of each amino acid pair to construct the distance map and contact map of the peptide sequence. Compared with the experimental results from the test set, our model achieved an average variation of less than 1 Å for each amino acid pair in terms of distance map prediction. To intuitively assess the performance of our model, we visualized and compared our predictions with the state‐of‐the‐art method trRosetta[ 39 , 47 , 48 ] based on the experimental results from a randomly selected peptide with PDB ID 7ve4 (Figure 5B). Our predicted contact map is more accurate in terms of contacting amino acid pairs than the one obtained with trRosetta. Additionally, our predicted distance map is closer to the experimental result than the trRosetta‐generated map, indicating that our model can more accurately capture the distance between amino acids. With our predicted contact maps and distance maps, the 3‐D structures of corresponding peptides can be reconstructed more realistically by folding algorithms.[ 49 , 50 , 51 ] In this case, we extended our prediction of the secondary structure to the contact map and distance map, thus aiding in the prediction of the peptide 3‐D structure. Therefore, our PHAT model has the potential to promote the development of therapeutic molecules against various diseases, as well as the design of functional peptides.[ 42 ]

Figure 5.

The exploration in constructing 3‐D structure of peptide with our method. A) The workflow of assisting in building 3‐D peptide structure with our predicted contact and distance map matrices. B) The visualization of contact map and distance map matrices of experimental results, trRosetta prediction and our prediction for the peptide with PDB ID: 7ve4.

4. Discussion and Conclusion

In this study, we developed PHAT, a deep learning‐based framework for peptide secondary structure prediction, and systematically evaluated it using benchmark datasets. Compared with other methods designed for protein secondary structure prediction, our model achieved superior performance in most metrics, especially AccE and SOV. The conventional methods designed for the prediction of protein structure might be biased toward extracting long‐distance dependence within protein sequences with hundreds of residues. However, the peptides in our dataset are significantly shorter than most proteins, and therefore the neighborhood information in peptides may not be easily captured by these methods. In contrast, our method can capture more contextual information of peptide sequences through the hypergraph multi‐head attention network, and can thus make more correct predictions in local consecutive sequence regions, as demonstrated by the visualization of our predictions for two peptides (PDB ID: 2w25A and 1ejbA).

Similar results can be seen when comparing the peptide‐specific secondary structure predictors (e.g., PSSP‐MVIRT) with our method. This is likely because previous methods designed for peptides focus more on neighborhood information of peptide residues and therefore tend to ignore long‐term information. In contrast, in addition to being capable of capturing contextual information, our method can obtain long‐term and bio‐semantic knowledge for peptide sequences by using ProtT5, a model pre‐trained with millions of protein sequences, thus achieving a good prediction performance. The peptide length preference experiments for secondary structure prediction illustrated that although the prediction performance of the tested methods decreased as the length of the sequences declined, our method achieved better performance than other existing methods when analyzing shorter peptide sequences. This indicated that our model can integrate contextual information and long‐term knowledge to make predictions.

Moreover, to reveal the feature extraction and prediction mechanisms of our PHAT model, we visualized matrices of a hypergraph multi‐head attention network (HyperGMA) and Bi‐LSTM‐CRF, which provide good interpretability while achieving an outstanding prediction performance. Specifically, the visualization of attention matrices in HyperGMA demonstrated that our model can effectively capture the local and global features of peptides at the residue group level and the peptide fragment level, thus providing insights into its attention mechanisms. Similarly, the visualization of the classification layer in Bi‐LSTM‐CRF illustrates that CRFs can guide our model to efficiently predict the secondary structure for each site in the peptide sequences.

Furthermore, to verify the accuracy of the secondary structures predicted by our model in downstream tasks, we applied our predicted structural information to the prediction of peptide toxicity, T‐cell receptor interactions with MHC–peptide complexes, and identification of protein–peptide binding sites. Using the secondary structures predicted by our model enhanced the performances of these tasks, which indicated that our predicted structural information can assist in predicting more accurate properties and is complementary to sequential and evolutionary features in peptide‐related downstream tasks. Additionally, to explore the potential of PHAT in capturing 3‐D structural information of peptides, we applied our model to predict distance map and contact map matrices and achieved an outstanding performance, thus demonstrating that our model can help in the reconstruction of peptide 3‐D structures. We also developed an online service (the workflow is shown in Figure 1D) to implement our PHAT, thus saving researchers the need to write programs or scripts. We hope that this online tool will be helpful to the research community.

Although our PHAT model achieves improved performances for predicting peptide secondary structure, there is still room for improvement. For example, PHAT is meant to be used for general peptide secondary structure prediction, and therefore we focused particularly on sequences with lengths <50. However, for datasets with peptide sequences longer than 50, we cannot ensure that our method will have the same performance. Moreover, when interacting with other targets (e.g., protein, DNA, RNA, etc.), peptide sequences remain the same, but the secondary structure of the peptides may change considerably. However, our PHAT makes its predictions based on the sequence patterns and thus cannot make adjustments to account for potential molecular interactions. Therefore, we are planning to incorporate additional data such as interaction information with other targets to further improve the prediction of peptide secondary structures in different interacting scenarios.

Conflict of Interest

The authors declare no conflict of interest.

Supporting information

Supporting Information

Acknowledgements

Y.J. and R.W. contributed equally to this work. The work was supported by the National Natural Science Foundation of China (Nos. 62071278, 62250028, and 62072329), and Natural Science Foundation of Shandong Province (ZR2020ZD35).

Jiang Y., Wang R., Feng J., Jin J., Liang S., Li Z., Yu Y., Ma A., Su R., Zou Q., Ma Q., Wei L., Explainable Deep Hypergraph Learning Modeling the Peptide Secondary Structure Prediction. Adv. Sci. 2023, 10, 2206151. 10.1002/advs.202206151

Contributor Information

Qin Ma, Email: qinma@osumc.edu.

Leyi Wei, Email: weileyi@sdu.edu.cn.

Data Availability Statement

The data that support the findings of this study are openly available in SSpro/ACCpro 5: almost perfect prediction of protein secondary structure and relative solvent accessibility using profiles, machine learning and structural similarity at https://doi.org/10.1093/bioinformatics/btu352, reference number 28. The details of data and code can also be found in Supporting Information.

References

- 1. Singh H., Singh S., Raghava G. P. S., bioRxiv 2019, 558791. [Google Scholar]

- 2. Chowdhury A. S., Reehl S. M., Kehn‐Hall K., Bishop B., Webb‐Robertson B.‐J. M., Sci. Rep. 2020, 10, 19260. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3. He W., Wang Y., Cui L., Su R., Wei L., Bioinformatics 2021, 37, 4684. [DOI] [PubMed] [Google Scholar]

- 4. Huan Y., Kong Q., Mou H., Yi H., Front. Microbiol. 2020, 11, 2559. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Habault J., Poyet J.‐L., Molecules 2019, 24, 927. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Zorzi A., Deyle K., Heinis C., Curr. Opin. Chem. Biol. 2017, 38, 24. [DOI] [PubMed] [Google Scholar]

- 7. Ward K. B., Hendrickson W. A., Klippenstein G. L., Nature 1975, 257, 818. [DOI] [PubMed] [Google Scholar]

- 8. Heffernan R., Paliwal K., Lyons J., Dehzangi A., Sharma A., Wang J., Sattar A., Yang Y., Zhou Y., Sci. Rep. 2015, 5, 11476. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Heffernan R., Yang Y., Paliwal K., Zhou Y., Bioinformatics 2017, 33, 2842. [DOI] [PubMed] [Google Scholar]

- 10. Li Z., Yu Y., in Proc. Proceedings of the Twenty‐Fifth International Joint Conference on Artificial Intelligence, New York, USA 2016.

- 11. Busia A., Jaitly N., Next‐step conditioned deep convolutional neural networks improve protein secondary structure prediction, arXiv preprint arXiv:1702.03865, 2017.

- 12. Cole C., Barber J. D., Barton G. J., Nucleic Acids Res. 2008, 36, W197. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Fang C., Li Z., Xu D., Shang Y., Bioinformatics 2020, 36, 1293. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Montgomerie S., Cruz J. A., Shrivastava S., Arndt D., Berjanskii M., Wishart D. S., Nucleic Acids Res. 2008, 36, W202. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Rost B., Sander C., Schneider R., Bioinformatics 1994, 10, 53. [DOI] [PubMed] [Google Scholar]

- 16. Wang S., Li W., Liu S., Xu J., Nucleic Acids Res. 2016, 44, W430. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17. Wang S., Peng J., Ma J., Xu J., Sci. Rep. 2016, 6, 1.28442746 [Google Scholar]

- 18. Bradley P., Chivian D., Meiler J., Misura K. M. S., Rohl C. A., Schief W. R., Wedemeyer W. J., Schueler‐Furman O., Murphy P., Schonbrun J., Strauss C. E. M., Baker D., Proteins: Struct., Funct., Bioinf. 2003, 53, 457. [DOI] [PubMed] [Google Scholar]

- 19. Cao X., He W., Chen Z., Li Y., Wang K., Zhang H., Wei L., Cui L., Su R., Wei L., Briefings Bioinf. 2021, 22, bbab203. [DOI] [PubMed] [Google Scholar]

- 20. Maupetit J., Derreumaux P., Tuffery P., Nucleic Acids Res. 2009, 37, W498. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21. Elnaggar A., Heinzinger M., Dallago C., Rihawi G., Wang Y., Jones L., Gibbs T., Feher T., Angerer, Steinegger M., Bhowmik D., Rost B., IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 7112. [DOI] [PubMed] [Google Scholar]

- 22. Maity I., Parmar H. S., Rasale D. B., Das A. K., J. Mater. Chem. B 2014, 2, 5272. [DOI] [PubMed] [Google Scholar]

- 23. Metrano A. J., Abascal N. C., Mercado B. Q., Paulson E. K., Hurtley A. E., Miller S. J., J. Am. Chem. Soc. 2017, 139, 492. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24. Kipf T. N., Welling M., Semi‐supervised classification with graph convolutional networks, arXiv preprint arXiv:.02907 2016.

- 25. Velickovic P., Cucurull G., Casanova A., Romero A., Liò P., Bengio Y., in Proc. International Conference on Learning Representations, Vancouver, BC, Canada: 2018. [Google Scholar]

- 26. Wei L., Ye X., Xue Y., Sakurai T., Wei L., Briefings Bioinf. 2021, 22, bbab041. [DOI] [PubMed] [Google Scholar]

- 27. Montemurro A., Schuster V., Povlsen H. R., Bentzen A. K., Jurtz V., Chronister W. D., Crinklaw A., Hadrup S. R., Winther O., Peters B., Jessen L. E., Nielsen M., Commun. Biol. 2021, 4, 1060. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28. Magnan C. N., Baldi P., Bioinformatics 2014, 30, 2592. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29. Fang C., Shang Y., Xu D., Proteins 2018, 86, 592. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30. Torrisi M., Kaleel M., Pollastri G., Sci. Rep. 2019, 9, 12374. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31. Kabsch W., Sander C., Biopolymers 1983, 22, 2577. [DOI] [PubMed] [Google Scholar]

- 32. Graves A., Schmidhuber J., Neural Networks 2005, 18, 602. [DOI] [PubMed] [Google Scholar]

- 33. Raffel C., Shazeer N., Roberts A., Lee K., Narang S., Matena M., Zhou Y., Li W., Liu P. J., J. Mach. Learn. Res. 2020, 21, 1.34305477 [Google Scholar]

- 34. Suzek B. E., Wang Y., Huang H., Mcgarvey P. B., Wu C. H., Bioinformatics 2015, 31, 926. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35. Ding K., Wang J., Li J., Li D., Liu H., in Proc. 2020 Conference on Empirical Methods in Natural Language Processing, Virtual, Online, 2020.

- 36. Frishman D., Argos P., Protein Eng., Des. Sel. 1996, 9, 133. [DOI] [PubMed] [Google Scholar]

- 37. Forney G. D., Proc. IEEE 1973, 61, 268. [Google Scholar]

- 38. Jumper J., Evans R., Pritzel A., Green T., Figurnov M., Ronneberger O., Tunyasuvunakool K., Bates R., Žídek A., Potapenko A., Bridgland A., Meyer C., Kohl S. A. A., Ballard A. J., Cowie A., Romera‐Paredes B., Nikolov S., Jain R., Adler J., Back T., Petersen S., Reiman D., Clancy E., Zielinski M., Steinegger M., Pacholska M., Berghammer T., Bodenstein S., Silver D., Vinyals O., et al., Nature 2021, 596, 583. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39. Du Z., Su H., Wang W., Ye L., Wei H., Peng Z., Anishchenko I., Baker D., Yang J., Nat. Protoc. 2021, 16, 5634. [DOI] [PubMed] [Google Scholar]

- 40. O'brien C., Flower D. R., Feighery C., Immunome Res. 2008, 4, 6 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41. Roberts P. R., Burney J. D., Black K. W., Zaloga G. P., Digestion 1999, 60, 332. [DOI] [PubMed] [Google Scholar]

- 42. Abdi H., Williams L. J., Princ. Compon. Anal.: Eng. Appl. 2010, 2, 433. [Google Scholar]

- 43. Van der Maaten L., Hinton G., J. Mach. Learn. Res. 2008, 9, 2579. [Google Scholar]

- 44. Hideji T., Kazuo H., Toxicol. Lett. 1982, 11, 125.7090003 [Google Scholar]

- 45. Wang R., Jin J., Zou Q., Nakai K., Wei L., Bioinformatics 2022, 38, 3351. [DOI] [PubMed] [Google Scholar]

- 46. Qi Y., in Ensemble Machine Learning (Eds: Zhang C., Ma Y. Q.), Springer, New York: 2012, pp. 307–323. [Google Scholar]

- 47. Yang J., Anishchenko I., Park H., Peng Z., Ovchinnikov S., Baker D., Proc. Natl. Acad. Sci. USA 2020, 117, 1496. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48. Su H., Wang W., Du Z., Peng Z., Gao S.‐H., Cheng M.‐M., Yang J., Adv. Sci. 2021, 8, 2102592. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49. Aszódi A., Gradwell M. J., Taylor W. R., J. Mol. Biol. 1995, 251, 308. [DOI] [PubMed] [Google Scholar]

- 50. Skolnick J., Kolinski A., Ortiz A. R., J. Mol. Biol. 1997, 265, 217. [DOI] [PubMed] [Google Scholar]

- 51. Vendruscolo M., Kussell E., Domany E., Structure 1997, 2, 295. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Supporting Information

Data Availability Statement

The data that support the findings of this study are openly available in SSpro/ACCpro 5: almost perfect prediction of protein secondary structure and relative solvent accessibility using profiles, machine learning and structural similarity at https://doi.org/10.1093/bioinformatics/btu352, reference number 28. The details of data and code can also be found in Supporting Information.