Abstract

Information and communication technologies hold immense potential to enhance our lives and societal well-being. However, digital spaces have also emerged as fertile ground for fake news campaigns and hate speech, aggravating polarization and threatening societal harmony. Although this dark side is acknowledged in the literature, the complexity of polarization as a phenomenon, coupled with the socio-technical nature of fake news, calls for a novel approach to unravel its intricacies. Accordingly, the current study employs complexity theory and a configurational approach to investigate how diverse disinformation campaigns and hate speech polarize societies in a cross-country analysis of 177 countries. The results demonstrate the decisive role of disinformation and hate speech in polarizing societies. The findings also offer a balanced perspective on internet censorship and social media monitoring as necessary evils to combat the disinformation menace and control polarization, but suggest that such efforts may foster a milieu of hate speech that fuels polarization. Implications for theory and practice are discussed.

Keywords: Polarization, Disinformation, Fake news, Hate speech, Internet censorship, Social media monitoring

Introduction

Information and communication technologies (ICT) hold immense potential for individual and societal advancement in a multitude of avenues (Bentley et al., 2019; Parthasarathy & Ramamritham, 2009; Saha et al., 2022). On the flip side, however, ICTs also constitute a threat to society (Ahmed et al., 2022): digital spaces have been accused of providing fertile ground for fake news campaigns and online hate speech (Aanestad et al., 2021; Castaño-Pulgarín et al., 2021) while exacerbating societal polarization (Törnberg, 2022). Polarization is a social phenomenon characterized by the fragmentation of society into antagonistic factions with vehemently opposed values and identities that impede cooperation and the pursuit of a common good (Stewart et al., 2020). The threat is significant, and democracies worldwide are under siege and reeling from the impact of polarized societies. According to the latest World Economic Forum study on global risks, societal polarization is not only one of the world’s top 10 present concerns but also a long-term threat over the next decade (Zahidi, 2023). The phenomenon has impeded pandemic responses in several countries (Stuenkel, 2021), slowed consensus on critical global issues such as climate change (Sparkman et al., 2022), and continues to challenge the resilience of societies, resulting in a catastrophic loss of diversity in the social fabric (Kelly, 2021). Not only does polarization increase the likelihood of violence in societies (Piazza, 2022), but violent demonstrations may also exacerbate polarization and divide individuals along partisan lines (De Salle, 2020). These devastating effects have made polarization a top priority for scholars across disciplines who seek to understand its causes (Stewart et al., 2020).

Information technology, and social media in particular, play a crucial role in fostering polarization (Arora et al., 2022; Qureshi et al., 2022), with spillover effects on offline violence and protests (Gallacher et al., 2021). These platforms are not merely spaces of public discourse; they also raise free speech concerns, particularly when they amplify the most radical and polarizing content online (Riemer & Peter, 2021). Debates on free speech on platforms revolve predominantly around disinformation and hate speech (Riemer & Peter, 2021), both of which are considered to exacerbate polarization (Meneguelli & Ferré-Pavia, 2021; WEF, 2023). While governments and platforms pursue content moderation and censorship to combat fake news and hate speech (Riemer & Peter, 2021; Vese, 2022), such practices are regarded as a threat to free speech (Vese, 2022) and contribute to polarization (Frasz, 2022).

The role of fake news and hate speech in polarizing societies is widely acknowledged in prior literature (Au et al., 2021; Piazza, 2020). Their crippling effects are now accompanied by rising calls for action on online disinformation, which threatens to polarize society and destroy democratic life (The Express Tribune, 2022). The term fake news gained prominence during the 2016 U.S. presidential election (Allcott & Gentzkow, 2017), and the issue has since persisted as a grave threat to the societal fabric (Olan et al., 2022). The term overlaps with other forms of information disorder, such as misinformation and disinformation (Lazer et al., 2018). Whereas misinformation refers to false or misleading information (Lazer et al., 2018), disinformation is the deliberate fabrication and dissemination of falsehood with an intent to deceive (Zimdars & McLeod, 2020). In contrast to such falsehoods, hate speech refers to the use of offensive language directed against a specific group of individuals who share a common characteristic (Castaño-Pulgarín et al., 2021).

Politics remains a favored breeding ground for disinformation and hate speech (Freelon & Wells, 2020; Piazza, 2020). Social media and online platforms have played a pivotal role in amplifying both (Meske & Bunde, 2022; Olan et al., 2022), and recent reports reveal the rampant misuse of these platforms by governments and political parties engaged in political disinformation campaigns in over eighty countries (Alba & Satariano, 2019; Oxford Internet Institute, 2021). Likewise, there are global concerns over hate speech “super-charged by the internet,” which allows falsehoods and conspiracy theories to proliferate and provoke offline violence (United Nations, 2023).

While social networking sites (SNSes) have started to ramp up efforts to detect and curb this menace (Hatmaker, 2022; O’Regan & Theil, 2020), governments have also stepped up efforts to monitor social media actively, using sophisticated technology to track citizens’ behavior on these platforms (Shahbaz & Funk, 2019). Some governments have gone further by imposing censorship on the internet (Ovide, 2022). Such measures challenge freedom of expression online (González-Quiñones & Machin-Mastromatteo, 2019) and contribute to polarization and political animosity among citizens (Frasz, 2022).

Because the discourse on free speech online is embroiled in a conflict with surveillance and censorship efforts, even as disinformation and hate speech proliferate on these platforms, we assert that the combined effect of these core factors in the free speech debate on polarization merits exploration. This matters because extant literature has predominantly investigated polarization through linear models. Several studies have analyzed the relationship between polarization and factors such as internet and social media usage (Boxell et al., 2017), democratic erosion and media censorship (Arbatli & Rosenberg, 2021), media exposure (Hawdon et al., 2020), fake news (Piazza, 2022), and hate speech (Piazza, 2020). However, the vast majority of these studies have examined factors in isolation and assumed linear relationships between phenomena.¹ Political processes are predominantly non-linear (Richards & Doyle, 2000; Timofeev, 2014), and recent literature emphasizes the importance of non-linearity for understanding the factors that might explain and alleviate polarization (Kelly, 2021; Leonard et al., 2021).

Polarization is characterized by asymmetry and constitutes a collection of complex, ever-evolving systems whose complexity necessitates a novel approach to unravel its intricacies (Kelly, 2021; Leonard et al., 2021; Levin et al., 2021). In addition, the socio-technical character of fake news makes standard methods of investigation intrinsically complex (Au et al., 2021). Acknowledging this complexity, this paper draws on complexity theory and configurational analysis to decipher the causal pathways that influence societal polarization. In particular, this study seeks to illustrate how various sources of political disinformation, hate speech, social media monitoring efforts, and online censorship influence the degree of polarization in societies. Rather than focusing on the main effects of individual causes on polarization, the current study seeks to identify the configurations of conditions that explain societal polarization. Consequently, this study addresses the following research question:

RQ: What configurations of political disinformation, hate speech, social media monitoring, and internet censorship lead to polarization in societies?

This configurational conceptualization of polarization lends credence to recent assertions regarding the impact of fake news in fracturing societies and intensifying polarization. Specifically, the study’s findings point to a balanced view of government surveillance and censorship, which may be useful in combating disinformation campaigns on social media and controlling polarization, although such efforts may foster a milieu of hate speech that fuels polarization. Additionally, identifying configurations as causal pathways to polarization should offer social media platforms crucial insights into the varying manifestations of disinformation, hate speech, and monitoring efforts when dealing with societal polarization, while the findings should also give political scientists and complex systems theorists a more nuanced understanding of the dynamics of polarization in society.

The paper is structured as follows. In the next section, we review the literature on polarization, political disinformation, hate speech, internet censorship, and social media monitoring and develop the conceptual model based on complexity theory, which serves as the theoretical foundation for the study. Section 3 discusses the research methodology and the measures used for analysis. Section 4 describes the process of data analysis using the configurational approach. Section 5 analyzes the empirical results, and Section 6 discusses the implications for theory and practice. Section 7 highlights limitations and future research directions, and Section 8 concludes.

Theoretical Background and Conceptual Framework

This section reviews literature related to the key constructs (see Table 11 in the Appendix for details) and discusses the theoretical foundations for the study.

Table 11. Selected studies on the key constructs

| Sources | Key highlights |
|---|---|
| Polarization | |
| Baldassarri and Bearman (2007) | Discusses the dynamics surrounding polarization through two paradoxes, namely the simultaneous presence and absence of attitude polarization and social polarization. |
| Iyengar et al. (2012) | Demonstrates an increasing dislike and loathing of opponents by political factions in the United States, with partisan effects found only inconsistently in policy attitudes. |
| Gruzd and Roy (2014) | Examines user engagement on Twitter and posits that discussions on the platform may embed partisan loyalties and contribute to political polarization. |
| Boxell et al. (2017) | Examines the relationship between internet and social media usage and polarization, finding the largest increases in polarization among the groups least likely to use these technologies. |
| McCoy et al. (2018) | Posits a set of causal patterns linking polarization to its impact on democracies, illustrated with four nations as cases. |
| Enders and Armaly (2019) | Finds individual-level differences between actual and perceived polarization in terms of their links to attitudinal and behavioral outcomes of normative interest. |
| de Bruin et al. (2020) | Examines political polarization during the COVID-19 pandemic and finds variations in risk perception and risk mitigation preferences based on political inclination. |
| Piazza (2020) | Highlights the mediating role of polarization in the impact of hate speech on domestic terrorism. |
| Hawdon et al. (2020) | Examines the likelihood of polarization based on exposure to traditional and social media and further investigates the influence of polarization on social capital. |
| Heaney (2020) | Highlights political polarization as a key factor contributing to the ubiquity of protests in the United States. |
| Arbatli and Rosenberg (2021) | Examines links between polarization and government intimidation of the opposition by creating a new measure of political polarization. |
| Levin et al. (2021) | Discusses the dynamics of political polarization through an interdisciplinary approach and highlights the interplay of several processes at various levels. |
| Leonard et al. (2021) | Examines drivers of political polarization and factors that account for its asymmetry as a phenomenon. |
| Casola et al. (2022) | Finds increasing levels of political polarization surrounding the perceived importance of conservation issues during the COVID-19 pandemic. |
| Frasz (2022) | Highlights the role of increased censorship efforts by governments and other agents in exacerbating political polarization among citizens. |
| Argyle and Pope (2022) | Examines links between polarization and political participation, finding a higher likelihood of political participation among people with more extreme attitudes. |
| Ertan et al. (2023) | Highlights the issue of political polarization during extreme events and its negative impact on response and recovery operations. |
| Patkós (2023) | Highlights the need to revisit polarization indices in light of a shift in attention from ideological to partisan and affective aspects, introduces a partisan polarization index, and compares it with other indices. |
| Political disinformation | |
| Levendusky (2013) | Highlights the role of partisan media outlets and slanted news presentations in leading viewers to develop more negative perceptions of, and lower trust in, other parties, alongside lower support for bipartisanship. |
| Allcott and Gentzkow (2017) | Discusses the economics of fake news in light of concerns over falsehood amid the 2016 U.S. presidential election, with a key finding that people believed stories favoring their preferred political candidate. |
| Tandoc et al. (2018) | Defines a typology of fake news based on levels of facticity and deception. |
| Bradshaw and Howard (2018) | Highlights concerns over the use of social media for propaganda and analyzes how states and political parties use these platforms to shape public opinion at home and abroad. |
| Freelon and Wells (2020) | Discusses sociopolitical factors contributing to disinformation in recent times. |
| Humprecht et al. (2020) | Recognizes the menace of disinformation in democracies and analyzes conditions that contribute to resilience to disinformation through a cross-country study. |
| Au et al. (2021) | Proposes a multi-stage model depicting the pathways from online misinformation and fake news to ideological polarization. |
| Serrano-Puche (2021) | Highlights the reliance of fake news on emotionally provocative content to induce outrage and its subsequent virality on platforms. |
| Soares and Recuero (2021) | Analyzes political disinformation during the 2018 presidential election in Brazil, the role of hyper-partisan outlets in shaping discursive struggles, and the strategies used to disseminate disinformation. |
| Bringula et al. (2022) | Analyzes factors that contribute to individuals’ susceptibility to disinformation. |
| Piazza (2022) | Highlights the role of disinformation in fueling domestic terrorism, with political polarization mediating the relationship. |
| Shu (2022) | Provides a computational perspective on combating disinformation on digital media. |
| Davidson and Kobayashi (2022) | Investigates individual differences in recall and recognition based on exposure to factually correct and false content. |
| Pentney (2022) | Draws attention to government disinformation, which is less discussed than other forms of disinformation, and discusses it in conjunction with its regulation and freedom of expression. |
| Hate speech | |
| Papacharissi (2004) | Analyzes messages on online political newsgroups and finds that most messages are civil, while suggesting that the absence of face-to-face interaction may have resulted in heated discussions. |
| Waldron (2012) | Sheds light on the perils of hate speech and underscores the need for its regulation. |
| Cohen-Almagor (2014) | Defines hate speech, examines how it proliferates on the internet, and discusses how the issue can be mitigated. |
| Iginio et al. (2015) | Discusses measures to counter hate speech on digital channels. |
| Howard (2019) | Contends that the debate on banning hate speech needs disaggregation and discusses the phenomenon alongside freedom of expression. |
| Bilewicz and Soral (2020) | Highlights the impact of hate speech on emotions and behaviors, including its role in breeding intergroup contempt and desensitizing people, which reduces their ability to recognize the offensive character of such language. |
| Paz et al. (2020) | Reviews the literature on hate speech, particularly in the legal and communication fields. |
| Piazza (2020) | Finds that hate speech by political figures boosts domestic terrorism, with the relationship mediated by political polarization. |
| Kojan et al. (2020) | Examines the role of counter-speech as a mechanism for bolstering public deliberation and reducing polarization. |
| Matamoros-Fernández and Farkas (2021) | Reviews literature on hate speech and racism in the context of social media research. |
| Ali et al. (2022) | Discusses methods for hate speech detection on Twitter. |
| García-Díaz et al. (2022) | Analyzes strategies involving feature combinations to detect hate speech in the Spanish language. |
| Internet censorship and social media monitoring | |
| Lyon (2007) | Draws on global examples to analyze surveillance and offers tools for analyzing surveillance trends. |
| Marwick (2012) | Discusses the framing of social surveillance and its distinctions from traditional surveillance along the axes of power (hierarchy and reciprocity). |
| Arsan (2013) | Examines censorship and self-censorship in Turkey based on the experiences of journalists in the country. |
| Westcott and Owen (2013) | Examines how lateral surveillance can be leveraged to initiate friendships on social networks, with Twitter as the platform. |
| Richards (2013) | Recognizes the harms of surveillance and discusses legal aspects in light of the need to preserve civil liberties. |
| Staples (2014) | Discusses surveillance alongside the rise of social media and the impact of surveillance on how we understand individuals and our lives. |
| Trottier (2016) | Describes growing social media monitoring practices as a central tenet of surveillance. |
| Busch et al. (2018) | Examines internet censorship through blocking mechanisms in liberal democracies. |
| Kruse et al. (2018) | Finds that social media users avoid communicative action online for fear of being surveilled and engage only with politically similar individuals. |
| Chang and Lin (2020) | Demonstrates the use of internet censorship as a reactive mechanism in autocracies to suppress civil society. |
| Cobbe (2021) | Discusses algorithm-driven censorship on social media platforms as an approach to content moderation. |
| Büchi et al. (2022) | Discusses the negative impact of surveillance and the collection of digital traces on individuals’ communication behaviors online. |
| Chan et al. (2022) | Finds links between surveillance and censorship, with varying levels of each engendering or suppressing political engagement in different ways. |
| Zhai et al. (2022) | Discusses the prevalence of internet surveillance and examines why certain individuals approve of practices involving censorship and surveillance. |

Polarization of Society

Polarization refers to a widening gulf between societal groups in their perspectives on various political issues (Enders & Armaly, 2019) and represents the distance between opposing political orientations (Kearney, 2019). While some degree of polarization is observed in the majority of pluralistic democracies and can encourage political engagement, a high degree of polarization can lead to estrangement between social groupings and impede democratic dialogue (McCoy et al., 2018). In cases of extreme polarization, a society’s typical diversity of distinctions rapidly aligns along a single axis, and individuals increasingly view and describe politics and society in terms of split factions (McCoy et al., 2018). Extreme polarization tends to have a large affective component (Iyengar et al., 2012) and poses a grievous threat to democracy by eroding social cohesiveness and disrupting political stability (McCoy et al., 2018).

Polarization poses challenges that extend well beyond its impacts on political stability and democratic discourse. In recent years, polarization is believed to have hindered the public health response in several countries (de Bruin et al., 2020), abetted by disinformation surrounding the pandemic (Casola et al., 2022). Polarization has likewise produced divergent perspectives on major issues such as climate change (Hai & Perlman, 2022) and has been linked to domestic terrorism (Piazza, 2020) and the increased ubiquity of protests (Heaney, 2020). In an era of deep polarization, a central question concerns the contribution of fake news to polarization in society (Qureshi et al., 2020), while hate speech with partisan leanings is also believed to aggravate polarization (Piazza, 2020). Recent studies have also examined instances of social media censorship and highlight that countries with high levels of censorship tend to experience a high degree of polarization among their citizens (Frasz, 2022). Moreover, although censorship and surveillance are closely related, varying levels of the two can either promote or inhibit political participation (Chan et al., 2022), which is itself closely linked to polarization in the political realm (Argyle & Pope, 2022).

Extant literature has focused primarily on linear investigations of the relationship between polarization and factors such as media censorship (Arbatli & Rosenberg, 2021), fake news (Piazza, 2022), and hate speech (Piazza, 2020). However, these inquiries have predominantly examined components in isolation and assumed linear relationships. Political processes are characterized by non-linearity (Richards & Doyle, 2000; Timofeev, 2014), and recent literature underscores the importance of non-linearity in comprehending the factors that can explain and combat polarization (Kelly, 2021; Leonard et al., 2021). Given the complexity of polarization, understanding its intricacies requires an appreciation of its asymmetric nature and a view of it as a collection of complex systems comprising interactions between various components (Kelly, 2021; Leonard et al., 2021; Levin et al., 2021). To address this complexity, we first examine each of the constructs influencing polarization in the following sections.

Political Disinformation

Disinformation is regarded as false, inaccurate, or misleading information intentionally presented, disseminated, and exploited for a malicious purpose (Directorate-General for Communications Networks, 2018). The role of social media in propagating disinformation is widely acknowledged in the literature (Grossman & Goldstein, 2021; Shu, 2022), and research suggests that online falsehood has resulted in ideologically polarized societies (Au et al., 2021). While producers of disinformation seek to alter readers’ perceptions of issues and influence their opinions and actions (Allcott & Gentzkow, 2017), the production of polarizing news also serves commercial interests and helps generate revenue (Tandoc et al., 2018). Although polarized audiences may be motivated to watch partisan news (Arceneaux & Johnson, 2013), exposure to such news exacerbates existing polarization (Levendusky, 2013). Disinformation may exploit affective triggers to attract readers’ attention, using emotionally provocative content to elicit strong feelings of outrage and, in turn, virality on digital platforms (Serrano-Puche, 2021). Such mobilization of strong emotions is also associated with the rise of ideological polarization in the public sphere (Rosas & Serrano-Puche, 2018).

A wide range of actors may engage in disinformation warfare online. The modal actors in disinformation campaigns are generally government ministries in authoritarian regimes but political parties in democratic states (Bradshaw & Howard, 2018). The former’s disinformation campaigns operate alongside efforts to secure cyberinfrastructure and content and to exert pressure on the regulation of cyberspace, whereas the latter’s focus primarily on targeting domestic audiences during elections or major political events (Bradshaw & Howard, 2018). In this context, governments may employ disinformation as a tool for withholding information, which helps them maintain a monopoly over information and, because the state controls access to information, diminishes society’s power to hold them accountable for inaction (Pentney, 2022).

Beyond governments and political parties, foreign adversaries may also have vested interests in intervening in the affairs of another nation. Foreign governments may employ clandestine psychological warfare on social media for a variety of persuasion purposes, from influencing users’ attitudes toward certain politicians or policies to undermining trust in government (Arnold et al., 2021). For instance, Russia has been accused of engaging in propaganda in the run-up to the 2016 U.S. presidential election (de Groot, 2022), and Iranian state actors are said to have expanded their social media disinformation campaigns to sow dissension inside the U.S. (Bennett, 2021).

The discussion thus far reveals a complex web of disinformation operations by a range of players, including the state, political parties, and foreign governments. However, few studies to date have examined all of these campaigns in tandem (Ruohonen, 2021). In particular, the extent of influence that each type of disinformation wields on societal polarization remains largely underexplored in the extant literature.

Hate Speech

Hate speech refers to abusive or hostile remarks intended to demean a segment of the population on the basis of its actual or perceived innate characteristics (Cohen-Almagor, 2014). Continuous exposure to hate speech can have crippling consequences for an individual’s behavioral and emotional responses and breeds intergroup contempt as a result of the pejorative rhetoric that hate speech carries (Bilewicz & Soral, 2020). Hate speech can thus erode empathy and social norms, making society more receptive to offensive language and less tolerant of other communities.

The repercussions of hate speech include fostering an environment of prejudice and intolerance, promoting acts of violence (Iginio et al., 2015) and incivility, denying individuals their personal liberties, and stereotyping certain social groups (Papacharissi, 2004). Politicians who incite hate speech against specific ethnic or religious groups in society make those groups’ members more allied with other members of their own group and less tolerant of individuals from rival factions (Piazza, 2020). Hate speech thereby threatens the social fabric by increasing divisiveness (Piazza, 2020). While research highlights that both hate speech and disinformation result in polarization (Kojan et al., 2020), it can be challenging to distinguish between the two when political discourse becomes increasingly infused with hate (Stokel-Walker, 2019). Given this blurring distinction, we contend that the interactions between hate speech and disinformation in influencing polarization merit scrutiny.

Internet Censorship and Social Media Monitoring

Censorship involves governments’ efforts to suppress the free flow of ideas and information that deviate from the status quo and to delineate which communications are acceptable and unacceptable in society (Liu & Wang, 2021). Unlike censorship, which blocks online content, social media monitoring employs surveillance technologies. Online surveillance refers to governments’ ongoing automated efforts to gather, store, and analyze the digital traces of particular individuals or of the entire population (Büchi et al., 2022; Chan et al., 2022). Censorship and surveillance are inextricably linked today, considering that online surveillance may involve intercepting all outgoing internet requests and assessing whether they are for banned websites (Doctorow, 2012).

Because the internet can enhance democracy by expanding avenues for political participation (Margetts, 2013), states wary of this emancipatory potential have pursued censorship to bar users from material from sources the authorities object to (Busch et al., 2018). Under the guise of national security, governments have been accused of censoring the internet, and such actions are viewed as a danger to civil society in the digital age (Chang & Lin, 2020) because they interfere with citizens’ online freedom and access to information (Busch et al., 2018). Along similar lines, online surveillance is viewed as an advanced form of coercion and social control that hinders the exercise of basic civil liberties (Richards, 2013) by limiting participation and impeding the flow of knowledge in society (Marwick, 2012; Staples, 2014; Trottier, 2016; Westcott & Owen, 2013). The looming threat of social media surveillance may deter citizens from engaging in political debates and diminish the mobilizing power of online news content (Chan et al., 2022).

The spiral of silence theory offers a useful lens for unraveling the influence of censorship and surveillance on societal polarization. According to this theory of media effects on public opinion, people decide whether to speak out on issues based on the prevailing climate of opinion (Noelle-Neumann, 1993). Moreover, state censorship may be accompanied by self-censorship. For instance, while censorship may be imposed on the press (Arsan, 2013), journalists may also self-censor to avoid political pressure (Schimpfössl et al., 2020). When individuals self-censor, the opinion climate becomes increasingly homogeneous, and a dominant viewpoint in society is reinforced (Noelle-Neumann, 1993). Similarly, when individuals are aware that their social media activity is being monitored, they tend to recalibrate their behavior (Lyon, 2007), so monitoring content on SNSes leads to constrained behavior on these sites (Trottier, 2016). Such monitoring inhibits freedom of expression, as users hesitate to express their political leanings honestly for fear of grave consequences such as online harassment and its reverberating effects on their work and personal lives (Kruse et al., 2018).

Because publicly articulated viewpoints are restricted in this way, public discourse tends to be choked by a spiral of silence that threatens democratic systems (Dahlgren, 2002, 2005) and leads to polarization (Sunstein, 2018). Moreover, given the inseparability of surveillance and censorship in current times (Doctorow, 2012) and the tendency to discuss the two concepts in conjunction (Zhai et al., 2022), we contend that a combined perspective could shed light on how their interaction contributes to the polarization of societies.

Complexity Theory and Configurational Analysis

Our study is conceptually grounded in the core tenets of complexity theory and configurational analysis (Rihoux & Ragin, 2008; Woodside, 2017), which aid in building a thorough understanding of the interlinkages between decisions and individuals’ actions in a networked world (Merali, 2006). Complexity theory “suggests that the whole is greater than the sum of its parts in complex adaptive systems” (Hurlburt, 2013, p. 28) and provides an opportunity to streamline ostensibly complex systems. The theory draws predominantly on the studies of Byrne (1998) and Byrne and Callaghan (2013) and comprises two basic tenets: (a) the complex context comprises an open system with transformative ability, and (b) it has a pathway to accomplish a particular outcome and can be configured towards that purpose (Byrne, 2005). Hence, complex systems are understood as comprising several interacting elements (Frenken, 2006). The explicit modeling of “conjunctural causation, equifinality, causal asymmetry, and sufficiency” underpins configurational analysis as an approach (Fainshmidt et al., 2020, p. 456). Under conjunctural causation, the outcome depends on a combination of causes, and configurational analysis helps researchers theorize and empirically investigate such causal complexity (Fainshmidt et al., 2020).

Real-world problems are difficult to explain through precisely symmetrical relations between the antecedents and consequences of interest, as real-world problems have asymmetric linkages (Ragin, 2008). This asymmetry is used to demonstrate that simple linear models cannot account for complicated interactions (Anderson, 1999). Byrne (2005) expands on this by emphasizing the relationship between complexity theory and configurations, which are combinations of characteristics that illuminate the pathways to the outcome of interest. In this respect, causal complexity is closely related to complexity theory. It refers to circumstances in which numerous explanatory factors interact in sophisticated and, at times, divergent patterns, and it manifests as equifinality (i.e., an outcome can be explained through several pathways) (Furnari et al., 2021).

In the wake of recent debates in the IS discipline about the appropriateness of conventional statistical methods for modeling complex phenomena, questions have been raised about the assumption of simple causality² (Delgosha et al., 2021; El Sawy et al., 2010; Liu et al., 2017). Similarly, concerns have been raised about non-normality of datasets, insufficient attention to contrarian cases, and multicollinearity (Olya & Mehran, 2017). The configurational perspective is a welcome departure from these conventional approaches. It offers IS scholars a new set of tools for data analysis, a unique theoretical base, and a holistic approach to extend and enhance our understanding of constructs (Delgosha et al., 2021).

In the context of the current study, we contend that polarization is best analyzed through the lens of complexity theory (Kelly, 2021; Levin et al., 2021). We support our assertion with two reasons. First, incorporating the principle of equifinality, the outcome of interest can be equally characterized by various sets of condition variables that combine in sufficient configurations for the outcome to occur (Fiss, 2011; Woodside, 2014). In this regard, the significance of propaganda operations in generating societal polarization is obvious from our discussion thus far (Neyazi, 2020). Despite the fact that disinformation campaigns may be executed by a range of entities, including the state, political parties, and foreign governments, few studies have analyzed all of these efforts concurrently to date (Ruohonen, 2021). In particular, the extent of influence each type of disinformation wields on polarizing societies remains largely underexplored in the extant literature. Hate speech contributes to polarization as well (Piazza, 2020), while state-controlled media with censorship efforts could also polarize societies (Zhu, 2019). Hence, configurations resulting in polarization may include combinations of disinformation emerging from the state, political parties, and foreign adversaries coupled with hate speech, censorship, and surveillance efforts by the governments.

Second, according to the principle of causal asymmetry, the outcome’s occurrence depends on how the condition variables interact with one another and not just on their presence or absence (Woodside, 2014). In this regard, hate speech threatens the social fabric by deepening polarization (Piazza, 2020). Government efforts to censor online content and monitor social media may limit publicly expressed opinions through a spiral of silence, which could threaten democratic systems (Dahlgren, 2002, 2005) and lead to polarization (Sunstein, 2018). While nationalists may adhere to state-controlled media, liberals may seek less regulated media outlets (Zhu, 2019). In this respect, censorship creates political divisiveness and breeds extremism (Lane et al., 2021). However, citizens may fail to appreciate the significance of free speech, and certain segments may become accustomed to the stifling of other viewpoints and approve of government crackdowns on nonconformist beliefs (McCall, 2022). In addition, recent research highlights citizens’ awareness of the risks posed by free speech, such as disinformation (Knight Foundation, 2022). Moreover, research indicates that surveillance and censorship are closely connected, yet they can result in differing degrees of political engagement or suppression (Chan et al., 2022). This complex mix of phenomena opens up the possibility that they interact in a myriad of ways to produce polarization.

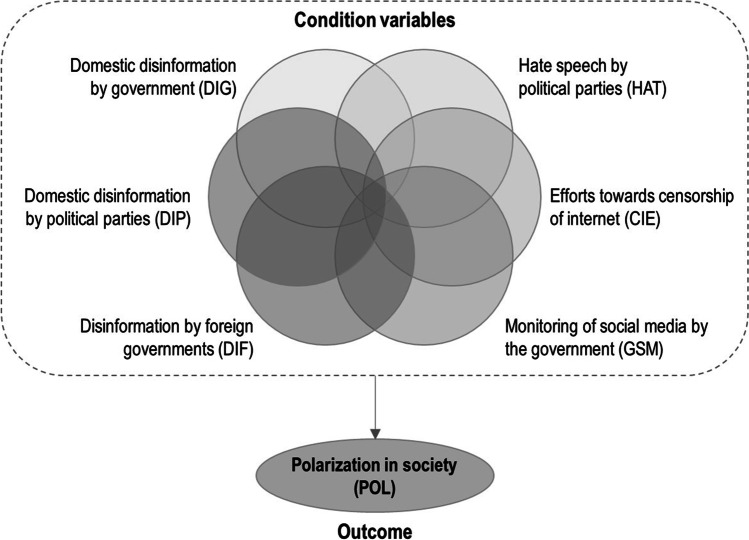

The discussion thus far demonstrates the complexity of polarization and its causal mechanisms. It also emphasizes the novelty of configurational analysis, in which the mutual influence of the variables enables researchers to study asymmetric relationships between condition variables and the outcome. In this regard, Fig. 1 presents our conceptual framework. It displays the condition variables (the various types of political disinformation, hate speech, censorship, and social media monitoring) that predict societal polarization, our outcome of interest.

Fig. 1.

Outcome of interest and condition variables

Methodology

Data

We rely on archival data from the Varieties of Democracy (V-Dem) database for each of the condition variables and the outcome of interest. The V-Dem project is headquartered at the Department of Political Science, University of Gothenburg, Sweden (Coppedge et al., 2022; Pemstein et al., 2022). Our reliance on this archival dataset is driven by two key reasons. (a) The cross-country nature of the current study constrains the collection of primary data, given the enormous effort such a study would require (Frankfort-Nachmias & Nachmias, 2007). (b) Publicly available archival data, as noted by researchers (e.g., Arayankalam & Krishnan, 2021; Jarvenpa, 1991), offers several benefits, including, but not limited to, easy replication (Calantone & Vickery, 2010) and robustness to the threat of common method bias (Woszczynski & Whitman, 2004).

Notably, the V-Dem project team comprises over 3,500 country experts who support the data collection process by providing expert information through online surveys. The project applies a rigorous process for recruiting country experts. The research team identifies between one hundred and two hundred potential experts in each country. To preserve objectivity, the team also avoids prominent individuals, such as political party members. In addition, individuals from varied backgrounds, including academia, the media, and the judicial system, are included to ensure a pool with diverse expertise. The project team also assesses the construct validity of its instruments through tests based on differential item functioning. This method evaluates the cross-cultural validity of measures and permits the pooling of data for macro-level investigations (Arayankalam & Krishnan, 2021; Tennant et al., 2004).

Following the initial identification of a pool of potential experts for each country, basic information about each expert (e.g., educational qualifications, current position, and area of documented expertise) is compiled (Coppedge et al., 2022). Before coding is completed, country coordinators interact multiple times with all the experts to ensure internal data consistency. The research team also ensures that at least three out of five experts for each country are natives or permanent residents (Coppedge et al., 2022). However, in some countries it is difficult to identify in-country experts who are qualified and independent of the prevailing regime. For these countries, allowances are made to steer clear of potential Western or Northern biases in coding (Coppedge et al., 2022). Despite these steps to minimize bias, the possibility that experts exhibit varying levels of reliability and bias cannot be ruled out (Coppedge et al., 2022). The V-Dem team therefore employs rigorous statistical techniques that leverage patterns of cross-rater disagreement to estimate variations in reliability and bias. It then adjusts the estimates of the latent, indirectly observed concepts being measured (Coppedge et al., 2022). In doing so, the process increases confidence in the reliability of the estimates and corrects for measurement errors, if any (Coppedge et al., 2022). As a final step, to account for any remaining errors, the research team administers a post-survey questionnaire to each expert. The questionnaire captures their understanding of democracy in the country and in other countries with diverse characteristics of democracy or autocracy. Some of these outputs are incorporated into the measurement model, and others are used ex post to examine the validity of the model’s output (Coppedge et al., 2022).

In summary, because the process adheres to strict protocols, the final values in the V-Dem database are adjusted for potential biases and are fit for empirical evaluation, with high levels of validity and reliability (Coppedge et al., 2022; Pemstein et al., 2022). The database has been used in studies published in reputed journals such as the British Journal of Political Science (e.g., Edgell et al., 2022) and the American Journal of Political Science (e.g., Claassen, 2020). It has also been leveraged for disinformation studies in the information systems (IS) domain (Arayankalam & Krishnan, 2021). Our dataset for the fuzzy-set qualitative comparative analysis (fsQCA) consists of data from 177 countries for 2021, with two countries excluded because of missing data.³ The complete list of countries included in the study is provided in Appendix A.

Condition Variables and Outcome

The outcome of interest in this study is polarization in society. The condition variables are (a) the government’s dissemination of disinformation on social media, (b) political parties’ dissemination of disinformation on social media, (c) disinformation by foreign governments, (d) hate speech by political parties, (e) censorship of the internet, and (f) monitoring of social media by the government. The condition variables related to disinformation and hate speech are derived from the measures provided by the Digital Society Project, which focuses on the political environment of the internet and social media (Mechkova et al., 2019). Throughout this article, we refer to the dissemination of disinformation to influence the population within the country as domestic disinformation. The three disinformation-related condition variables separately capture the extent to which the government, political parties, and foreign adversaries and their agents leverage social media to influence the populace and the political climate in the country. The hate speech condition measures how often major political parties use hate speech in their rhetoric. The censorship and social media monitoring conditions quantify the extent to which governments censor information on digital media and monitor political content on SNSs. Each condition variable and the outcome were operationalized using measures from the V-Dem database (Coppedge et al., 2022; Pemstein et al., 2022). Table 1 summarizes the measures, their descriptions, and the questions used to operationalize them.

Table 1.

Condition variables and outcome of interest

| Measure | Description | Question used to operationalize this measure |
|---|---|---|
| Conditions | | |
| DIG | Domestic disinformation by government | “How often do the government and its agents use social media to disseminate misleading viewpoints or false information to influence its own population?” (Coppedge et al., 2022, p. 319) |
| DIP | Domestic disinformation by political parties | “How often do major political parties and candidates for office use social media to disseminate misleading viewpoints or false information to influence their own population?” (Coppedge et al., 2022, p. 320) |
| DIF | Disinformation by foreign governments | “How routinely do foreign governments and their agents use social media to disseminate misleading viewpoints or false information to influence domestic politics in this country?” (Coppedge et al., 2022, p. 321) |
| HAT | Hate speech by political parties | “How often do major political parties use hate speech as part of their rhetoric?” (Coppedge et al., 2022, p. 334) |
| CIE | Efforts towards censorship of internet | “Does the government attempt to censor information (text, audio, or visuals) on the Internet?” (Coppedge et al., 2022, p. 202) |
| GSM | Monitoring of social media by the government | “How comprehensive is the surveillance of political content in social media by the government or its agents?” (Coppedge et al., 2022, p. 324) |
| Outcome of interest | | |
| POL | Polarization in society | “Is society polarized into antagonistic, political camps?” (Coppedge et al., 2022, p. 227) |

To make the standardized values of the condition variables more intuitive, we reversed the scale of each country’s score on these variables so that higher values indicate a greater degree of the condition. This follows prior literature that has used a similar approach (Arayankalam & Krishnan, 2021; MacCabe et al., 2012). Table 2 summarizes the variables and their descriptive statistics.

Table 2.

Condition variables influencing polarization as the outcome of interest

| Measures | Description | M | SD | Min | Max |
|---|---|---|---|---|---|
| DIG | Domestic disinformation by government | 0.196 | 1.551 | -2.858 | 3.555 |
| DIP | Domestic disinformation by political parties | 0.264 | 1.269 | -2.993 | 3.566 |
| DIF | Disinformation by foreign governments | 0.016 | 1.281 | -2.496 | 4.194 |
| HAT | Hate speech by political parties | -0.084 | 1.328 | -2.761 | 2.963 |
| CIE | Efforts towards censorship of internet | -0.351 | 1.398 | -2.215 | 4.147 |
| GSM | Monitoring of social media by the government | 0.117 | 1.402 | -2.885 | 3.689 |
| POL | Polarization in society | 0.290 | 1.396 | -3.033 | 4.085 |

DIG Government’s dissemination of disinformation (domestic), DIP Political parties’ dissemination of disinformation (domestic), DIF Disinformation by foreign governments, HAT Hate speech by political parties, GSM Monitoring of social media by the government, CIE Efforts towards censorship of internet, POL Polarization of society, M Mean, SD Standard deviation, Min Minimum value, Max Maximum value

Data Analyses and Results

Contrarian Case Analysis

When one variable is found to influence another as a main effect, that relationship holds for the majority of cases in the sample (Pappas et al., 2016a). However, an association of the opposite kind may also manifest in some cases, and such occurrences also need to be investigated (Woodside, 2014). Contrarian case analysis detects such relationships and thereby justifies the use of configurational analysis (Woodside, 2014), yet it is rarely carried out in research articles (Pappas & Woodside, 2021). Following Pappas et al. (2020), we created quintiles for each variable and cross-tabulated each condition against the outcome across these quintiles. The results are reported in Table 3. Contrarian cases are denoted in bold and the main effect in italics. The presence of a substantial number of contrarian cases signals the need for QCA.
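The quintile cross-tabulation used in the contrarian case analysis can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors’ code. Quintiles are assigned by rank, and tied values keep their input order. Phi is computed as the square root of chi-square divided by the number of cases, the usual generalization of phi to tables larger than 2 × 2. The function names and toy data are hypothetical.

```python
import math


def quintiles(values):
    """Assign each value to a quintile by rank: 1 = lowest 20%, 5 = highest 20%."""
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])  # stable sort: tied values keep input order
    groups = [0] * n
    for rank, idx in enumerate(order):
        groups[idx] = rank * 5 // n + 1
    return groups


def crosstab(cond_q, out_q, k=5):
    """k x k table of case counts: rows = condition quintile, columns = outcome quintile."""
    table = [[0] * k for _ in range(k)]
    for r, c in zip(cond_q, out_q):
        table[r - 1][c - 1] += 1
    return table


def phi(table):
    """Phi coefficient computed as sqrt(chi-square / N) for an r x c table."""
    n = sum(map(sum, table))
    row_tot = [sum(row) for row in table]
    col_tot = [sum(col) for col in zip(*table)]
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_tot[i] * col_tot[j] / n
            if expected > 0:
                chi2 += (observed - expected) ** 2 / expected
    return math.sqrt(chi2 / n)


# Hypothetical data: the outcome rises with the condition, except for the last case.
condition = [0.1, 0.3, 0.5, 0.7, 0.9, 1.1, 1.3, 1.5, 1.7, 1.9]
outcome = [0.2, 0.1, 0.4, 0.3, 0.6, 0.5, 0.8, 0.7, 1.0, -1.0]
table = crosstab(quintiles(condition), quintiles(outcome))
for row in table:
    print(row)
print(round(phi(table), 3))
```

In the toy output, one case falls in the highest condition quintile but the lowest outcome quintile. That case is a contrarian case. With this definition, phi is not bounded by 1 for tables larger than 2 × 2; its maximum for a 5 × 5 table is 2.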

Table 3.

Results from the contrarian case analysis

| Condition | Condition quintile | POL quintile 1 | POL quintile 2 | POL quintile 3 | POL quintile 4 | POL quintile 5 |
|---|---|---|---|---|---|---|
| GSM (phi = 0.467, p < 0.05) | 1 | 16 (9.04%) | 5 (2.82%) | 7 (3.95%) | 4 (2.26%) | 3 (1.69%) |
| | 2 | 4 (2.26%) | 11 (6.21%) | 10 (5.65%) | 5 (2.82%) | 6 (3.39%) |
| | 3 | 2 (1.13%) | 9 (5.08%) | 7 (3.95%) | 7 (3.95%) | 10 (5.65%) |
| | 4 | 3 (1.69%) | 9 (5.08%) | 7 (3.95%) | 10 (5.65%) | 7 (3.95%) |
| | 5 | 10 (5.65%) | 2 (1.13%) | 4 (2.26%) | 10 (5.65%) | 9 (5.08%) |
| CIE (phi = 0.422, p < 0.05) | 1 | 15 (8.47%) | 6 (3.39%) | 4 (2.26%) | 7 (3.95%) | 3 (1.69%) |
| | 2 | 6 (3.39%) | 7 (3.95%) | 9 (5.08%) | 9 (5.08%) | 5 (2.82%) |
| | 3 | 2 (1.13%) | 12 (6.78%) | 9 (5.08%) | 4 (2.26%) | 8 (4.52%) |
| | 4 | 5 (2.82%) | 4 (2.26%) | 8 (4.52%) | 11 (6.21%) | 8 (4.52%) |
| | 5 | 7 (3.95%) | 7 (3.95%) | 5 (2.82%) | 5 (2.82%) | 11 (6.21%) |
| DIG (phi = 0.534, p < 0.001) | 1 | 16 (9.04%) | 8 (4.52%) | 8 (4.52%) | 3 (1.69%) | 0 (0%) |
| | 2 | 5 (2.82%) | 11 (6.21%) | 12 (6.78%) | 4 (2.26%) | 4 (2.26%) |
| | 3 | 6 (3.39%) | 4 (2.26%) | 7 (3.95%) | 12 (6.78%) | 6 (3.39%) |
| | 4 | 3 (1.69%) | 8 (4.52%) | 4 (2.26%) | 9 (5.08%) | 12 (6.78%) |
| | 5 | 5 (2.82%) | 5 (2.82%) | 4 (2.26%) | 8 (4.52%) | 13 (7.34%) |
| DIP (phi = 0.589, p < 0.001) | 1 | 17 (9.6%) | 7 (3.95%) | 8 (4.52%) | 2 (1.13%) | 1 (0.56%) |
| | 2 | 9 (5.08%) | 11 (6.21%) | 10 (5.65%) | 2 (1.13%) | 4 (2.26%) |
| | 3 | 5 (2.82%) | 5 (2.82%) | 11 (6.21%) | 8 (4.52%) | 7 (3.95%) |
| | 4 | 2 (1.13%) | 8 (4.52%) | 2 (1.13%) | 11 (6.21%) | 12 (6.78%) |
| | 5 | 2 (1.13%) | 5 (2.82%) | 4 (2.26%) | 13 (7.34%) | 11 (6.21%) |
| HAT (phi = 0.766, p < 0.001) | 1 | 23 (12.99%) | 9 (5.08%) | 2 (1.13%) | 1 (0.56%) | 0 (0%) |
| | 2 | 7 (3.95%) | 11 (6.21%) | 10 (5.65%) | 6 (3.39%) | 2 (1.13%) |
| | 3 | 2 (1.13%) | 6 (3.39%) | 13 (7.34%) | 8 (4.52%) | 6 (3.39%) |
| | 4 | 2 (1.13%) | 8 (4.52%) | 7 (3.95%) | 7 (3.95%) | 12 (6.78%) |
| | 5 | 1 (0.56%) | 2 (1.13%) | 3 (1.69%) | 14 (7.91%) | 15 (8.47%) |
| DIF (phi = 0.408, p < 0.05) | 1 | 10 (5.65%) | 11 (6.21%) | 6 (3.39%) | 5 (2.82%) | 3 (1.69%) |
| | 2 | 6 (3.39%) | 6 (3.39%) | 10 (5.65%) | 7 (3.95%) | 7 (3.95%) |
| | 3 | 6 (3.39%) | 8 (4.52%) | 10 (5.65%) | 7 (3.95%) | 4 (2.26%) |
| | 4 | 5 (2.82%) | 8 (4.52%) | 8 (4.52%) | 9 (5.08%) | 6 (3.39%) |
| | 5 | 8 (4.52%) | 3 (1.69%) | 1 (0.56%) | 8 (4.52%) | 15 (8.47%) |

Cases in bold represent contrarian cases. Cases in italics represent the main effect. DIG Government’s dissemination of disinformation (domestic), DIP Political parties’ dissemination of disinformation (domestic), DIF Disinformation by foreign governments, HAT Hate speech by political parties, GSM Monitoring of social media by the government, CIE Efforts towards censorship of internet, POL Polarization of society

fsQCA Method

fsQCA is a set-theoretic method that integrates fuzzy logic and fuzzy sets with QCA (Ragin, 2000). Its advantages stem from overcoming the restrictions imposed by conventional regression-based techniques (Woodside, 2017). In regression-based methods, omitting a relevant variable reduces explanatory power. If the omitted variable is correlated with the included ones, the estimates are also biased. QCA, by contrast, relies on Boolean algebra rather than correlations, thereby eliminating the issue of omitted variable bias (Fainshmidt et al., 2020). One implication is the “absence of a methodological requirement for control variables” when evaluating QCA-based results (Fainshmidt et al., 2020, p. 458).

The method empirically investigates the relationship between the outcome and the multiple combinations of condition variables that can explain it, in terms of necessary and sufficient conditions. In this process, we first calibrate the raw data and then analyze it for the presence of necessary conditions, which form a superset of the outcome (Ragin, 2008). This implies that, for each case in the sample, the outcome’s fuzzy-set membership score is less than or equal to the condition’s membership score (Pappas et al., 2020). Next, we analyze the data for sets of sufficient conditions that combine to produce the outcome of interest. Finally, we interpret the results and test the predictive validity of the model.

Data Calibration

The first step in the fsQCA procedure is data calibration, which converts variable values into fuzzy-set membership scores (Ragin, 2008). Calibration transforms ordinal or interval-scale data into set membership scores through one of two methods: direct or indirect calibration. In the direct method, researchers select three qualitative breakpoints, or anchors, for full membership, full non-membership, and the crossover point. In the indirect method, by contrast, researchers rescale the data on the basis of a qualitative assessment. The choice of method depends on the data and the researchers’ expertise (Ragin, 2008). However, the direct method is recommended and more common. Because its thresholds are explicit, it bolsters the replicability and validity of the findings and leads to more rigorous studies (Pappas & Woodside, 2021).

In line with this guidance, we employed the direct method of calibration in the current study. Following Pappas and Woodside (2021), we used percentiles of each variable to compute the three thresholds (breakpoints). The 95th percentile serves as full membership, the 50th percentile as the crossover point, and the 5th percentile as full non-membership (see Table 4 for the threshold values). The direct method applies a log-odds transformation that yields membership scores between 0 and 1. In line with Fiss (2011), a constant of 0.001 was added to membership scores below 1, because fsQCA has difficulty evaluating cases with membership scores of exactly 0.5 (Delgosha et al., 2021; Pappas et al., 2020; Ragin, 2008). This correction ensures that no case is left out of the analysis while having no effect on the results (Pappas et al., 2020).
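The direct calibration step can be sketched briefly. This sketch assumes Ragin’s (2008) log-odds transformation, with the three anchors mapped to membership scores of 0.05, 0.5, and 0.95. The helper names are hypothetical. The interpolation used by Python’s `statistics.quantiles` may differ slightly from that of the software the authors used. The cap at 1 in `adjust` is our own safeguard rather than part of the described procedure.

```python
import math
import statistics


def percentile_anchors(values):
    """Return the 5th, 50th, and 95th percentiles (full non-membership, crossover, full membership)."""
    cuts = statistics.quantiles(values, n=20, method="inclusive")  # 19 cut points: 5%, 10%, ..., 95%
    return cuts[0], cuts[9], cuts[18]


def calibrate(x, full_non, crossover, full_mem):
    """Direct calibration: map a raw score to a fuzzy membership score via log odds."""
    scale = math.log(0.95 / 0.05)  # log odds of 0.95 membership (about 2.944)
    if x >= crossover:
        log_odds = (x - crossover) * scale / (full_mem - crossover)
    else:
        log_odds = (x - crossover) * scale / (crossover - full_non)
    return 1.0 / (1.0 + math.exp(-log_odds))


def adjust(membership, constant=0.001):
    """Add a small constant to scores below 1 so that no case sits at exactly 0.5 (capped at 1)."""
    return min(membership + constant, 1.0) if membership < 1.0 else membership


# POL anchors reported in Table 4: 5th, 50th, and 95th percentiles.
pol = (-2.114, 0.189, 2.854)
for raw in pol:
    m = calibrate(raw, *pol)
    print(raw, round(m, 3), round(adjust(m), 3))
```

Applied to the POL anchors in Table 4, the anchors map to membership scores of 0.05, 0.5, and 0.95. After the 0.001 adjustment, these become 0.051, 0.501, and 0.951.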

Table 4.

Percentile thresholds used for calibration of condition variables and outcome of interest

| Percentile thresholds | DIG | DIP | DIF | HAT | CIE | GSM | POL |
|---|---|---|---|---|---|---|---|
| 5% | -2.276 | -1.650 | -1.861 | -2.144 | -2.023 | -2.057 | -2.114 |
| 50% | 0.393 | 0.247 | -0.105 | -0.149 | -0.671 | 0.136 | 0.189 |
| 95% | 2.972 | 2.647 | 2.277 | 2.114 | 2.174 | 2.441 | 2.854 |

DIG Government’s dissemination of disinformation (domestic), DIP Political parties’ dissemination of disinformation (domestic), DIF Disinformation by foreign governments, HAT Hate speech by political parties, GSM Monitoring of social media by the government, CIE Efforts towards censorship of internet, POL Polarization of society. Values rounded to three decimal places

Analyzing Necessary Conditions

The necessary condition analysis evaluates whether an individual condition must be present for the outcome to occur. It examines the extent to which instances of the outcome consistently display the condition believed to be necessary. It also assesses the condition’s empirical relevance in terms of consistency and coverage (Muñoz et al., 2022). In other words, a necessary condition is present whenever the outcome is present, so that the outcome’s occurrence is contingent on that condition (Schneider et al., 2012). This section presents the results of the necessary condition analysis for high and low degrees of polarization (Ragin & Davey, 2016). Conditions with a consistency value above the threshold of 0.9 are regarded as necessary (Ragin, 2008). In the current study, however, no single condition is necessary for either a high or a low level of polarization. The highest consistency values (0.804 for HAT with POL and 0.816 for ~HAT with ~POL) remain below 0.9. The results are shown in Table 5.
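The consistency and coverage of a necessary condition can be computed directly from calibrated membership scores. The sketch below assumes the standard fuzzy-set formulas. Consistency is the sum of min(X, Y) divided by the sum of Y. Coverage is the same sum divided by the sum of X. Negation ~X is 1 − X. The function names and the four-case data are hypothetical.

```python
def necessity(condition, outcome):
    """Consistency and coverage of `condition` as a necessary condition for `outcome`."""
    overlap = sum(min(x, y) for x, y in zip(condition, outcome))
    consistency = overlap / sum(outcome)  # degree to which Y is a subset of X
    coverage = overlap / sum(condition)   # relevance: share of X that is matched by Y
    return consistency, coverage


def negate(memberships):
    """Fuzzy negation (~X): 1 minus each membership score."""
    return [1.0 - m for m in memberships]


# Hypothetical calibrated scores for four cases.
hat = [0.9, 0.8, 0.6, 0.2]
pol = [0.7, 0.8, 0.3, 0.1]
for label, cond in (("HAT", hat), ("~HAT", negate(hat))):
    cons, cov = necessity(cond, pol)
    verdict = "necessary" if cons >= 0.9 else "not necessary"  # 0.9 threshold (Ragin, 2008)
    print(label, round(cons, 3), round(cov, 3), verdict)
```

In this toy example, HAT passes the 0.9 consistency threshold (consistency 1.0, coverage 0.76), whereas ~HAT does not. In the study’s data, no condition reached 0.9 (Table 5).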

Table 5.

Analysis of necessity results for high and low levels of polarization

| Condition variables | POL consistency | POL coverage | ~ POL consistency | ~ POL coverage |
|---|---|---|---|---|
| DIG | 0.720 | 0.778 | 0.549 | 0.577 |
| ~ DIG | 0.609 | 0.581 | 0.789 | 0.733 |
| DIP | 0.745 | 0.787 | 0.549 | 0.564 |
| ~ DIP | 0.587 | 0.572 | 0.792 | 0.752 |
| DIF | 0.702 | 0.725 | 0.614 | 0.617 |
| ~ DIF | 0.629 | 0.626 | 0.726 | 0.703 |
| HAT | 0.804 | 0.818 | 0.530 | 0.525 |
| ~ HAT | 0.533 | 0.538 | 0.816 | 0.802 |
| CIE | 0.680 | 0.714 | 0.590 | 0.602 |
| ~ CIE | 0.621 | 0.608 | 0.720 | 0.687 |
| GSM | 0.702 | 0.726 | 0.597 | 0.601 |
| ~ GSM | 0.614 | 0.610 | 0.728 | 0.704 |

DIG Government’s dissemination of disinformation (domestic), DIP Political parties’ dissemination of disinformation (domestic), DIF Disinformation by foreign governments, HAT Hate speech by political parties, GSM Monitoring of social media by the government, CIE Efforts towards censorship of internet, POL Polarization of society, ~ denotes negation

Analyzing Sufficient Conditions

The necessary condition analysis examines each condition individually. In contrast, the sufficient condition analysis examines whether a particular condition or a set of conditions is capable of producing the outcome. After calibrating the data, we constructed a truth table with 2^k rows, each representing a possible configuration of the k condition variables (with the six conditions here, 2^6 = 64 rows). The truth table was then refined using the criteria of frequency and consistency (Ragin, 2008). The frequency cut-off is chosen to secure a minimum number of empirical cases for each configuration evaluated.
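A truth table can be built by enumerating all 2^k combinations of present (1) and absent (0) conditions. Each case’s membership in every row is then computed with the minimum (logical AND) rule. The sketch below assumes the common convention that a case counts toward a row’s frequency when its membership in that row exceeds 0.5. The functions and the three-case data are hypothetical. With the study’s six conditions, the same code would produce 64 rows.

```python
from itertools import product


def row_membership(case, names, combo):
    """Membership of a case in one truth-table row: logical AND (minimum) over the conditions,
    using x for a condition marked present (1) and 1 - x for one marked absent (0)."""
    return min(case[n] if present else 1.0 - case[n] for n, present in zip(names, combo))


def truth_table(cases, outcome, names):
    """Return {row: (frequency, raw consistency)} for all 2**k rows."""
    table = {}
    for combo in product((1, 0), repeat=len(names)):
        memb = [row_membership(c, names, combo) for c in cases]
        frequency = sum(m > 0.5 for m in memb)  # cases whose membership in this row exceeds 0.5
        total = sum(memb)
        overlap = sum(min(m, y) for m, y in zip(memb, outcome))
        table[combo] = (frequency, overlap / total if total else 0.0)
    return table


# Hypothetical example with two conditions and three cases.
names = ["DIG", "HAT"]
cases = [{"DIG": 0.8, "HAT": 0.3}, {"DIG": 0.9, "HAT": 0.2}, {"DIG": 0.1, "HAT": 0.7}]
pol = [0.9, 0.8, 0.2]
for row, (freq, cons) in truth_table(cases, pol, names).items():
    print(dict(zip(names, row)), freq, round(cons, 3))
```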

Setting the frequency threshold for the truth table involves a trade-off. Higher thresholds ensure that each retained configuration is represented by more cases in the sample. However, they reduce the percentage of the sample (coverage) explained by the retained configurations. Lower thresholds boost coverage but leave each configuration represented by fewer cases. Based on guidance from prior literature (Fiss, 2011; Pappas & Woodside, 2021; Ragin, 2008), samples with more than 150 cases may use a frequency threshold of 3. Our sample comprises 177 cases, and hence we opt for a frequency threshold of 3 to reduce the truth table.

Second, in accordance with recommendations from past research, a minimum consistency threshold of 0.75 must be specified after excluding configurations with low frequency (Rihoux & Ragin, 2008). At this stage, the researcher is required to identify natural breaking points in the consistency values and choose a suitable threshold with appropriate justification (Pappas & Woodside, 2021). To aid this decision, fsQCA provides another measure, the proportional reduction in inconsistency (PRI). PRI helps avoid configurations that are simultaneously subsets of both the outcome and its negation. It is recommended to set the PRI consistency threshold above 0.5, as values below this threshold indicate significant inconsistency (Greckhamer et al., 2018). On this basis, for a high degree of polarization as the outcome, we set the PRI consistency cut-off at 0.75 and the raw consistency threshold at 0.95. For a low degree of polarization as the outcome, raw consistency and PRI consistency values were lower, and only one configuration had a PRI consistency value above 0.75. We therefore chose a PRI consistency threshold of 0.5 and a raw consistency threshold of 0.9.
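The PRI consistency measure and the row-filtering rule can be sketched as follows. The PRI formula is the standard one: (Σ min(X, Y) − Σ min(X, Y, ~Y)) / (Σ X − Σ min(X, Y, ~Y)). The function names are hypothetical, and treating each cut-off as inclusive (≥) is an assumption. The default cut-offs mirror those reported above for a high degree of polarization.

```python
def pri_consistency(x, y):
    """PRI consistency of row membership x for outcome y:
    (sum min(X,Y) - sum min(X,Y,~Y)) / (sum X - sum min(X,Y,~Y))."""
    both = sum(min(a, b) for a, b in zip(x, y))
    contradictory = sum(min(a, b, 1.0 - b) for a, b in zip(x, y))  # overlap with Y and ~Y at once
    denominator = sum(x) - contradictory
    return (both - contradictory) / denominator if denominator else 0.0


def keep_row(frequency, raw_consistency, pri, min_freq=3, min_cons=0.95, min_pri=0.75):
    """Apply the frequency, raw consistency, and PRI cut-offs (defaults: high polarization)."""
    return frequency >= min_freq and raw_consistency >= min_cons and pri >= min_pri


print(keep_row(4, 0.96, 0.80))                             # passes the high-polarization cut-offs
print(keep_row(2, 0.99, 0.90))                             # fails: fewer than 3 cases
print(keep_row(5, 0.92, 0.55, min_cons=0.9, min_pri=0.5))  # passes the low-polarization cut-offs
```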

fsQCA computes three solutions for the outcome of interest: the complex, parsimonious, and intermediate solutions. The complex solution presents all possible combinations of conditions when traditional logical operations are used. The parsimonious solution is a simplified form of the complex solution. It presents the most important conditions, which cannot be excluded from any solution and are known as core conditions (Fiss, 2011). The intermediate solution includes the parsimonious solution and is, in turn, a component of the complex solution. Core conditions appear in both the intermediate and parsimonious solutions. Peripheral conditions are those that are eliminated in the parsimonious solution and appear only in the intermediate solution (Fiss, 2011). Core conditions therefore exhibit a strong causal relationship with the outcome, given their presence in both the intermediate and parsimonious solutions (Ho et al., 2016).
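The distinction between core and peripheral conditions reduces to a set comparison. A term in the intermediate solution is compared with the matching term in the parsimonious solution. The sketch below is illustrative only. The two terms are hypothetical and are not taken from Table 6, and the function name is our own.

```python
def classify_conditions(intermediate, parsimonious):
    """Split an intermediate-solution term into core and peripheral conditions.

    Core conditions also appear in the matching parsimonious term; peripheral
    conditions appear only in the intermediate term.
    """
    if not parsimonious <= intermediate:
        raise ValueError("the parsimonious term should be contained in the intermediate term")
    core = intermediate & parsimonious
    peripheral = intermediate - parsimonious
    return core, peripheral


# Hypothetical terms; "~" marks a negated (absent) condition.
intermediate = {"GSM", "CIE", "DIG", "DIP", "~DIF", "HAT"}
parsimonious = {"DIP", "HAT"}
core, peripheral = classify_conditions(intermediate, parsimonious)
print(sorted(core))        # ['DIP', 'HAT']
print(sorted(peripheral))  # ['CIE', 'DIG', 'GSM', '~DIF']
```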

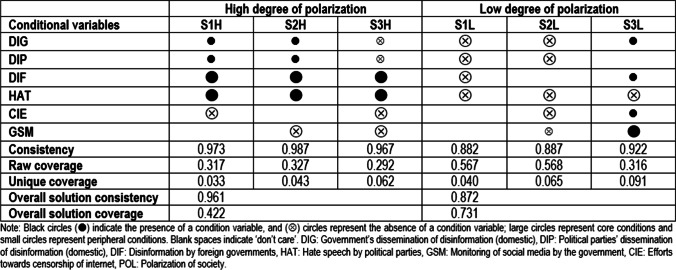

The results are presented in Table 6. For a configuration to be deemed sufficient, its consistency must exceed 0.75 (Pappas & Woodside, 2021) and its coverage must exceed 0.2 (Rasoolimanesh et al., 2021). In the current context, all configurations (or models) conform to this requirement and may be regarded as sufficient configurations. The analysis reveals three solutions each for high and low degrees of polarization. For a high degree of polarization, the raw coverage of the solutions in Table 6 ranges from 29 to 33 percent of the cases associated with the outcome. For a low degree of polarization, it ranges from 32 to 57 percent.
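Sufficiency consistency, raw coverage, and unique coverage can be computed from calibrated data once each configuration is written as a term in the notation of Table 7. The sketch below assumes the standard definitions:

- A term’s membership is the minimum over its conditions, with ~X = 1 − X.
- A solution’s membership is the maximum over its terms.
- Consistency is Σ min(X, Y) / Σ X, and coverage is Σ min(X, Y) / Σ Y.
- Unique coverage is the coverage lost when a term is removed from the solution.

The function names and toy data are hypothetical.

```python
def parse_term(term):
    """Parse notation such as '~ GSM*CIE*DIG' into {'GSM': False, 'CIE': True, 'DIG': True}."""
    literals = {}
    for part in term.split("*"):
        part = part.strip()
        literals[part.lstrip("~").strip()] = not part.startswith("~")
    return literals


def term_membership(case, literals):
    """Logical AND (minimum) over the term's conditions; negated conditions use 1 - x."""
    return min(case[n] if present else 1.0 - case[n] for n, present in literals.items())


def overlap(xs, ys):
    return sum(min(x, y) for x, y in zip(xs, ys))


def sufficiency(terms, cases, outcome):
    """Per-term consistency, raw coverage, and unique coverage, plus solution-level fit."""
    memb = {t: [term_membership(c, parse_term(t)) for c in cases] for t in terms}
    solution = [max(ms) for ms in zip(*memb.values())]  # logical OR = maximum
    sol_overlap = overlap(solution, outcome)
    fit = {}
    for t, xs in memb.items():
        others = [m for s, m in memb.items() if s != t]
        rest = [max(ms) for ms in zip(*others)] if others else [0.0] * len(xs)
        fit[t] = {
            "consistency": overlap(xs, outcome) / sum(xs),
            "raw_coverage": overlap(xs, outcome) / sum(outcome),
            # coverage lost if this term were dropped from the solution
            "unique_coverage": (sol_overlap - overlap(rest, outcome)) / sum(outcome),
        }
    return fit, sol_overlap / sum(solution), sol_overlap / sum(outcome)


# Hypothetical calibrated data for three cases and two terms.
cases = [{"A": 0.9, "B": 0.2}, {"A": 0.3, "B": 0.8}, {"A": 0.1, "B": 0.1}]
pol = [0.8, 0.7, 0.2]
fit, sol_consistency, sol_coverage = sufficiency(["A* ~ B", "~ A*B"], cases, pol)
for term, stats in fit.items():
    print(term, {k: round(v, 3) for k, v in stats.items()})
print(round(sol_consistency, 3), round(sol_coverage, 3))
```

Under the 0.75 consistency and 0.2 coverage thresholds, both toy terms would qualify as sufficient.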

Table 6.

Configurations for high and low degree of polarization

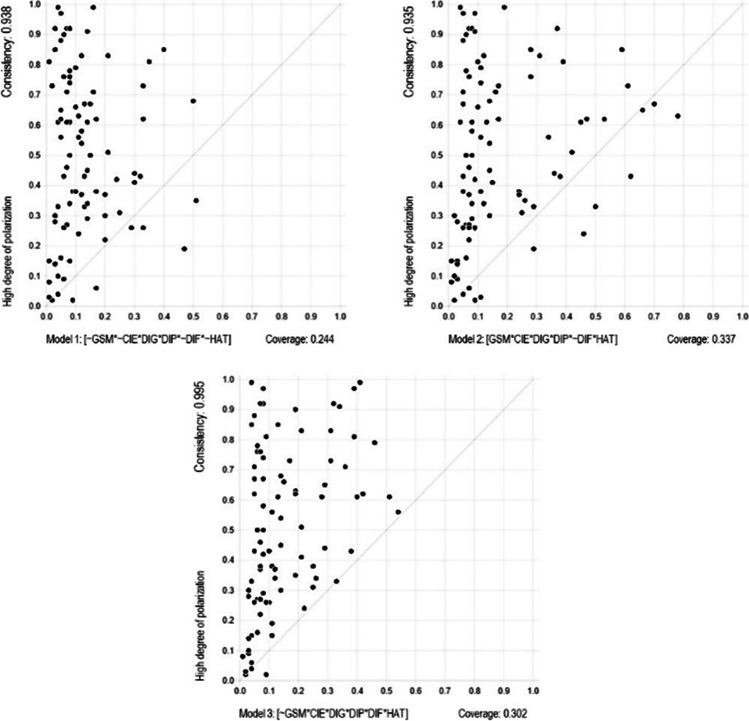

Assessing Predictive Validity

A key robustness test for fsQCA is to assess predictive validity, i.e., whether the model performs equally well on a different sample (Pappas et al., 2016b; Woodside, 2014). Although it is not frequently performed, this test is important because a model with good fit may not necessarily predict the outcome well in other samples (Pappas et al., 2020). Following Pappas et al. (2019), the test involves two steps. First, the sample is randomly partitioned into a subsample and a holdout sample, and the fsQCA analysis is conducted on the subsample. Second, the models obtained from the subsample are tested against the holdout sample. The models should explain the outcome to a similar extent in both samples.
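The random split and the holdout check can be sketched briefly. The source does not state the split proportion or how randomization was performed. The 50/50 split, the fixed seed, and the function names here are therefore hypothetical. `model` stands for a configuration’s membership scores for all cases, computed with the minimum rule.

```python
import random


def split_sample(n_cases, fraction=0.5, seed=1):
    """Randomly partition case indices into a subsample and a holdout sample."""
    indices = list(range(n_cases))
    random.Random(seed).shuffle(indices)
    cut = int(n_cases * fraction)
    return sorted(indices[:cut]), sorted(indices[cut:])


def fit_on_holdout(model, outcome, holdout):
    """Consistency and coverage of a model's membership scores on the holdout cases only."""
    xs = [model[i] for i in holdout]
    ys = [outcome[i] for i in holdout]
    both = sum(min(x, y) for x, y in zip(xs, ys))
    return both / sum(xs), both / sum(ys)


subsample, holdout = split_sample(177)
print(len(subsample), len(holdout))  # 88 89
```

Each model derived from the subsample would then be evaluated with `fit_on_holdout`. Its consistency and coverage would be compared with those obtained on the subsample.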

The configurations of complex antecedent conditions obtained from the subsample are consistent indicators of the outcome of interest, i.e., a high degree of polarization. Their overall solution consistency and coverage are 0.948 and 0.439, respectively (see Table 7). Each solution in Table 7 represents a model that is plotted against the outcome of interest using data from the holdout sample. These plots indicate highly consistent models, with consistency ranging from 0.935 to 0.995 and coverage from 0.244 to 0.337 (see Fig. 2).

Table 7.

Complex configurations indicating high degree of polarization for the subsample

| Models from subsample for high degree of polarization | Raw coverage | Unique coverage | Consistency |
|---|---|---|---|
| 1. ~ GSM* ~ CIE*DIG*DIP* ~ DIF* ~ HAT | 0.279 | 0.066 | 0.953 |
| 2. GSM*CIE*DIG*DIP* ~ DIF*HAT | 0.313 | 0.067 | 0.967 |
| 3. ~ GSM*CIE*DIG*DIP*DIF*HAT | 0.291 | 0.051 | 0.985 |
| Overall solution coverage | 0.439 | | |
| Overall solution consistency | | | 0.948 |

DIG Government’s dissemination of disinformation (domestic), DIP Political parties’ dissemination of disinformation (domestic), DIF Disinformation by foreign governments, HAT Hate speech by political parties, GSM Monitoring of social media by the government, CIE Efforts towards censorship of internet, POL Polarization of society, ~ denotes negation
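Scoring a subsample model on the holdout sample requires each case's membership in a configuration written in the notation of Table 7. The Python sketch below assumes the standard fuzzy-set operators, where `*` (logical AND) takes the minimum and `~` (negation) takes one minus the membership score, and then computes sufficiency consistency and coverage against the outcome. The helper names and the two holdout cases are hypothetical illustrations, not values from the study.

```python
from typing import Dict, List, Tuple

Case = Dict[str, float]  # calibrated membership scores keyed by variable name


def configuration_membership(expr: str, case: Case) -> float:
    """Membership of one case in a configuration such as 'GSM*CIE*DIG*DIP* ~ DIF*HAT'.

    '*' joins conditions with fuzzy AND (minimum); a leading '~' negates a
    condition (1 - membership). Spaces around '~' are tolerated.
    """
    scores = []
    for term in expr.split("*"):
        term = term.strip()
        if term.startswith("~"):
            scores.append(1.0 - case[term[1:].strip()])
        else:
            scores.append(case[term])
    return min(scores)


def holdout_fit(expr: str, cases: List[Case], outcome: str = "POL") -> Tuple[float, float]:
    """Sufficiency consistency and coverage of a configuration on a set of cases."""
    x = [configuration_membership(expr, c) for c in cases]
    y = [c[outcome] for c in cases]
    if sum(x) == 0:
        raise ValueError("no case has membership in this configuration")
    overlap = sum(min(a, b) for a, b in zip(x, y))
    return overlap / sum(x), overlap / sum(y)


if __name__ == "__main__":
    # Two hypothetical holdout cases with calibrated scores.
    holdout = [
        {"GSM": 0.8, "CIE": 0.9, "DIG": 0.7, "DIP": 0.95, "DIF": 0.2, "HAT": 0.85, "POL": 0.9},
        {"GSM": 0.3, "CIE": 0.6, "DIG": 0.4, "DIP": 0.5, "DIF": 0.7, "HAT": 0.2, "POL": 0.3},
    ]
    print(holdout_fit("GSM*CIE*DIG*DIP* ~ DIF*HAT", holdout))
```

Plotting the values of `x` against `y` for each model reproduces the kind of XY plot used to judge predictive validity.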

Fig. 2.

Testing for predictive validity

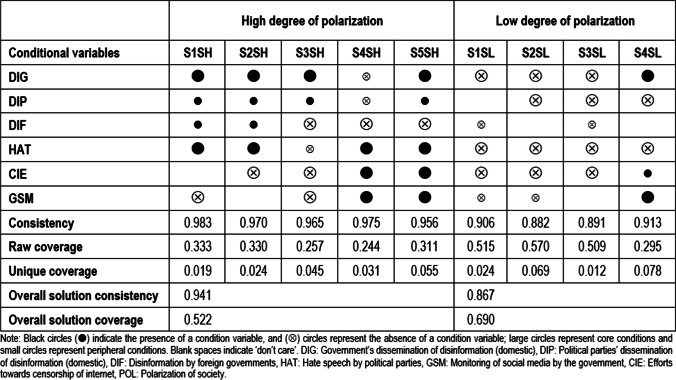

Supplemental Analysis

Prior political research recommends using lagged variables as a robustness check because of the dynamic nature of the data (Kenny, 2020). Hence, to check whether the results hold when the conditions precede the outcome by one year,4 we used the V-Dem database’s 2020 data for the condition variables (Coppedge et al., 2021; Pemstein et al., 2021) while retaining the polarization values from V-Dem’s 2021 data as the outcome (Coppedge et al., 2022; Pemstein et al., 2022).
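The lag structure amounts to pairing each country's condition scores from one year with its polarization score from the following year. The Python sketch below assumes both datasets have already been loaded into dictionaries keyed by a country identifier; the function name, the keys, and the toy values are hypothetical and do not reflect V-Dem's actual variable names or data.

```python
from typing import Dict

Scores = Dict[str, float]


def build_lagged_dataset(conditions_prev_year: Dict[str, Scores],
                         outcome_next_year: Dict[str, float],
                         outcome_name: str = "POL") -> Dict[str, Scores]:
    """Pair year t-1 condition scores with the year t outcome for each country.

    Countries missing from either year are dropped, so the lagged analysis
    uses only cases observed in both waves.
    """
    lagged: Dict[str, Scores] = {}
    for country in sorted(conditions_prev_year.keys() & outcome_next_year.keys()):
        row = dict(conditions_prev_year[country])  # copy to avoid mutating the input
        row[outcome_name] = outcome_next_year[country]
        lagged[country] = row
    return lagged


if __name__ == "__main__":
    # Hypothetical raw scores: 2020 conditions and 2021 polarization.
    conditions_2020 = {"AAA": {"DIG": 0.4, "HAT": -1.1}, "BBB": {"DIG": 1.2, "HAT": 0.3}}
    polarization_2021 = {"AAA": 0.9, "CCC": 2.0}
    print(build_lagged_dataset(conditions_2020, polarization_2021))
```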

We used the direct method of data calibration. The thresholds based on this method are listed in Table 8.

Table 8.

Percentile thresholds used for calibration of condition variables and outcome of interest

| Percentile thresholds | DIG | DIP | DIF | HAT | CIE | GSM | POL |
|---|---|---|---|---|---|---|---|
| 5% | -2.367 | -1.505 | -1.867 | -1.987 | -1.928 | -2.064 | -2.114 |
| 50% | 0.202 | 0.271 | -0.009 | -0.006 | -0.521 | 0.118 | 0.189 |
| 95% | 2.566 | 2.320 | 2.167 | 2.154 | 2.114 | 2.443 | 2.854 |

DIG Government’s dissemination of disinformation (domestic), DIP Political parties’ dissemination of disinformation (domestic), DIF Disinformation by foreign governments, HAT Hate speech by political parties, GSM Monitoring of social media by the government, CIE Efforts towards censorship of internet, POL Polarization of society. Values rounded to three decimal places
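The direct method converts raw scores into fuzzy membership using three anchors. The Python sketch below follows the usual log-odds formulation, assuming the 95th, 50th, and 5th percentiles in Table 8 serve as the full-membership, crossover, and full-non-membership anchors: the full-membership anchor maps to log-odds +3 (about 0.95), the crossover to 0 (0.5), and the full-non-membership anchor to -3 (about 0.05). The function name is hypothetical, and the sketch is not necessarily identical to the routine in the software the authors used.

```python
import math


def direct_calibration(x: float, full_non_membership: float,
                       crossover: float, full_membership: float) -> float:
    """Map a raw score to fuzzy-set membership with the three-anchor direct method."""
    if not full_non_membership < crossover < full_membership:
        raise ValueError("anchors must satisfy full_non < crossover < full")
    if x >= crossover:
        # Scale deviations above the crossover so full membership lands at log-odds +3.
        log_odds = 3.0 * (x - crossover) / (full_membership - crossover)
    else:
        # Scale deviations below the crossover so full non-membership lands at log-odds -3.
        log_odds = 3.0 * (x - crossover) / (crossover - full_non_membership)
    return 1.0 / (1.0 + math.exp(-log_odds))  # logistic transform back to [0, 1]


if __name__ == "__main__":
    # DIG anchors from Table 8: 5%, 50% and 95% percentile thresholds.
    dig_anchors = (-2.367, 0.202, 2.566)
    for raw in (-2.367, 0.202, 1.0, 2.566):
        print(raw, round(direct_calibration(raw, *dig_anchors), 3))
```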

The necessary condition analysis (see Table 9) reveals that none of the conditions is necessary on its own for the outcome, as no consistency value reaches the conventional necessity threshold of 0.9.

Table 9.

Analysis of necessity results

| Condition variables | POL consistency | POL coverage | ~ POL consistency | ~ POL coverage |
|---|---|---|---|---|
| DIG | 0.743 | 0.763 | 0.571 | 0.571 |
| ~ DIG | 0.581 | 0.582 | 0.763 | 0.743 |
| DIP | 0.751 | 0.774 | 0.555 | 0.557 |
| ~ DIP | 0.571 | 0.569 | 0.775 | 0.751 |
| DIF | 0.711 | 0.726 | 0.619 | 0.615 |
| ~ DIF | 0.623 | 0.627 | 0.724 | 0.709 |
| HAT | 0.775 | 0.803 | 0.516 | 0.520 |
| ~ HAT | 0.537 | 0.533 | 0.804 | 0.776 |
| CIE | 0.657 | 0.723 | 0.568 | 0.609 |
| ~ CIE | 0.645 | 0.605 | 0.741 | 0.678 |
| GSM | 0.712 | 0.728 | 0.609 | 0.606 |
| ~ GSM | 0.615 | 0.618 | 0.727 | 0.711 |

DIG Government’s dissemination of disinformation (domestic), DIP Political parties’ dissemination of disinformation (domestic), DIF Disinformation by foreign governments, HAT Hate speech by political parties, GSM Monitoring of social media by the government, CIE Efforts towards censorship of internet, POL Polarization of society, ~ denotes negation
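Necessity is tested with the same overlap term as sufficiency but with the denominators swapped: consistency asks whether the outcome is a subset of the condition. The Python sketch below computes both necessity measures and applies the 0.9 consistency threshold conventionally used in fsQCA (an assumption here, since the threshold is not stated in this section); negated conditions such as ~ HAT are obtained as one minus the membership score. Function names and toy scores are hypothetical.

```python
from typing import List, Sequence, Tuple


def negate(memberships: Sequence[float]) -> List[float]:
    """Fuzzy negation used for conditions such as '~ HAT': 1 - membership."""
    return [1.0 - m for m in memberships]


def necessity(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (consistency, coverage) of condition X as necessary for outcome Y.

    consistency = sum(min(x, y)) / sum(y)   (is Y a subset of X?)
    coverage    = sum(min(x, y)) / sum(x)   (how relevant, not trivial, X is)
    """
    overlap = sum(min(a, b) for a, b in zip(x, y))
    return overlap / sum(y), overlap / sum(x)


def is_necessary(x: Sequence[float], y: Sequence[float], threshold: float = 0.9) -> bool:
    """Flag X as necessary when its necessity consistency reaches the threshold."""
    consistency, _ = necessity(x, y)
    return consistency >= threshold


if __name__ == "__main__":
    # Hypothetical calibrated scores for one condition and the outcome.
    hat = [0.8, 0.6, 0.3]
    pol = [0.7, 0.9, 0.2]
    print(necessity(hat, pol), is_necessary(hat, pol))
    print(necessity(negate(hat), negate(pol)))  # ~ HAT against ~ POL
```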

As in the primary analysis, for the sufficiency analysis (see Table 10) we set the PRI consistency cut-off at 0.75 and the raw consistency threshold at 0.95 with a high degree of polarization as the outcome. With a low degree of polarization as the outcome, we chose a PRI consistency threshold of 0.5 and a raw consistency threshold of 0.9.
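The two cut-offs work together when selecting truth-table rows: raw consistency measures how far a configuration is a subset of the outcome, while PRI (proportional reduction in inconsistency) discounts cases that are simultaneously subsets of the outcome and its negation. The Python sketch below uses the standard PRI formula, (Σmin(X,Y) − Σmin(X,Y,~Y)) / (ΣX − Σmin(X,Y,~Y)), where X is case membership in one truth-table row; for the low-polarization outcome, Y would be the negated outcome ~ POL. Function names and scores are hypothetical illustrations.

```python
from typing import Sequence


def raw_consistency(x: Sequence[float], y: Sequence[float]) -> float:
    """Sufficiency consistency of truth-table row X for outcome Y."""
    return sum(min(a, b) for a, b in zip(x, y)) / sum(x)


def pri_consistency(x: Sequence[float], y: Sequence[float]) -> float:
    """Proportional reduction in inconsistency for X with respect to Y."""
    both = sum(min(a, b) for a, b in zip(x, y))
    # Membership that X shares with both Y and its negation (simultaneous subset relations).
    both_and_not = sum(min(a, b, 1.0 - b) for a, b in zip(x, y))
    denominator = sum(x) - both_and_not
    if denominator == 0:
        raise ValueError("PRI is undefined for this row")
    return (both - both_and_not) / denominator


def passes_thresholds(x: Sequence[float], y: Sequence[float],
                      raw_cut: float, pri_cut: float) -> bool:
    """Keep a truth-table row only if it meets both cut-offs."""
    return raw_consistency(x, y) >= raw_cut and pri_consistency(x, y) >= pri_cut


# Cut-offs reported in the text for each outcome.
HIGH_POL = {"raw_cut": 0.95, "pri_cut": 0.75}
LOW_POL = {"raw_cut": 0.9, "pri_cut": 0.5}

if __name__ == "__main__":
    # Hypothetical row memberships (x) and outcome memberships (y).
    x = [0.9, 0.7, 0.2]
    y = [0.95, 0.8, 0.1]
    print(raw_consistency(x, y), pri_consistency(x, y))
    print(passes_thresholds(x, y, **HIGH_POL), passes_thresholds(x, y, **LOW_POL))
```

With these toy values the row clears the laxer low-polarization cut-offs but not the stricter 0.95 raw consistency threshold used for high polarization.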

Table 10.

Configurations for high and low degree of polarization

Interpreting the Results

The results from the primary analysis reveal six combinations of disinformation, hate speech, internet censorship, and social media monitoring that are associated with high and low degrees of polarization. For a high degree of polarization, foreign disinformation and hate speech are core conditions. In two configurations, they are complemented by disinformation from the state and political parties, and all three configurations involve the absence of surveillance, censorship, or both. Solutions S1H and S2H present combinations in which all forms of disinformation (by the state, political parties, and foreign governments) and hate speech by political parties are present. In addition, internet censorship is absent in solution S1H, while government monitoring of social media is absent in solution S2H. Solution S3H combines disinformation by foreign governments with hate speech by political parties, while all other forms of disinformation are absent, as are both internet censorship and government monitoring of social media.

For a low degree of polarization, the absence of hate speech is a core condition common to all three configurations, complemented by peripheral conditions that vary across them. In solution S1L, all forms of disinformation and hate speech are absent, and a low degree of polarization occurs regardless of internet censorship and government monitoring of social media. In solution S2L, most conditions are absent, and the solution is indifferent to disinformation by foreign governments. In solution S3L, hate speech is absent, disinformation by the state and foreign governments is present, both social media monitoring and internet censorship are present, and a low degree of polarization occurs regardless of disinformation by political parties.

In summary, the results of the main analysis suggest that multiple, equally effective configurations of disinformation variants lead to polarization in societies. The configurations also reveal that a single condition may be present or absent depending on how it combines with other conditions, which indicates causal asymmetry. Lastly, at least one variant of disinformation is present in every configuration associated with a high degree of polarization.

Turning to the data with a one-year lag between the condition variables and the outcome, the analysis reveals two configurations associated with a high level of polarization (S1SH and S2SH) that are comparable to S1H and S2H from the primary analysis, although they differ in which conditions are core and which are peripheral. Configurations S3SH and S5SH reveal that disinformation by the government and political parties, combined with the absence of foreign disinformation operations, results in a high degree of polarization, while government censorship and social media monitoring, along with hate speech, may be either present (S5SH) or absent (S3SH). When all forms of disinformation are absent, however, censorship and social media monitoring combined with hate speech can still result in a high level of polarization (S4SH). By contrast, the three configurations associated with a low degree of polarization show that most conditions are absent or play a minor role (S1SL to S3SL). Moreover, even when governments engage in disinformation operations, social media monitoring and censorship, combined with the absence of both hate speech and disinformation by political parties, result in low levels of polarization (S4SL).

Discussion

The current study leverages complexity theory and argues that various forms of disinformation, hate speech, government monitoring of social media, and internet censorship combine into configurations that predict the degree of societal fragmentation. To this end, we constructed a conceptual model that serves as the basis for identifying these configurations.

The primary analysis underscores the core role of disinformation by foreign adversaries and of hate rhetoric in highly polarized societies. These conditions may or may not be complemented by disinformation by the state and political parties, while censorship, surveillance, or both are absent in every configuration (S1H to S3H). By contrast, the supplemental analysis of high polarization shows disinformation by the state and political parties becoming more prominent: it is present in four configurations (S1SH, S2SH, S3SH, S5SH), whereas foreign disinformation is present in only two (S1SH, S2SH). This supports recent claims that domestic disinformation is emerging as a greater threat than disinformation from foreign adversaries (Bentzen, 2021). At the same time, the possibility that the lines between the two variants are blurring, with foreign agents engaging local actors as proxies to carry out disinformation operations (Polyakova & Fried, 2020), cannot be ruled out. In addition, the supplemental analysis reveals that hate speech remains a core condition in four configurations (S1SH, S2SH, S4SH, S5SH). A key difference is that censorship and surveillance are present alongside hate speech in two configurations (S4SH and S5SH), which suggests that the role of hate speech in fragmenting societies may be bolstered by surveillance and censorship efforts. This aligns with recent literature highlighting that censorship may be used to suppress dissent and that hate speech laws could be misused to silence political viewpoints the state views unfavorably (Brown & Sinclair, 2019). In this regard, we contend that while opposing viewpoints are suppressed, a particular political faction may continue to engage in hate rhetoric, and the amplification of this narrative, with other views silenced by censorship, may fuel polarization.

In the context of low polarization, hate rhetoric is absent in all configurations of the primary analysis. In addition, while disinformation by the government and political parties is absent in two of the three configurations (S1L, S2L), polarization is contained even when the state and foreign adversaries engage in disinformation operations in the country (S3L). This containment is likely made possible by the presence of social media monitoring and internet censorship. The results of the supplemental analysis further substantiate this interpretation, highlighting the role of surveillance and censorship in containing polarization despite the state’s engagement in disinformation operations (S4SL).

In conclusion, although the role of propaganda in promoting societal polarization is well established in the literature (Neyazi, 2020), the differential influence of the various forms of disinformation is rarely addressed (Ruohonen, 2021). In this context, the current study emphasizes the central role of political disinformation on social media and the importance of hate speech in polarizing societies. Moreover, in contrast to the commonly held belief that internet censorship and social media monitoring stifle fundamental liberties (Richards, 2013; Zappin et al., 2022), this study highlights their role in keeping polarization in check despite the spread of disinformation by a variety of actors. At the same time, it shows that these surveillance and censorship measures, in combination with hate speech, may fuel polarization in societies.

Implications

Implications for Research

The findings of the study have four implications for research. First, our study offers a novel configural conceptualization of polarization by leveraging fsQCA as a method. Recent research emphasizes the intricacy of polarization as a phenomenon, which comprises a collection of complex systems with interactions among their numerous components (Kelly, 2021; Levin et al., 2021). Recognizing this complexity, the current study draws on complexity theory as its theoretical foundation and uses fsQCA, a set-theoretic method, to unravel how causal mechanisms combine in several ways to produce polarized societies. In doing so, we conduct an exploratory analysis of societal polarization and offer a configurational perspective on the phenomenon, which suggests that combinations of disinformation, hate speech, censorship, and surveillance explain polarization better than any individual condition in isolation. Through this configural analysis, we highlight the asymmetric and equifinal nature of the conditions that combine to produce polarization. We also pave the way for future studies to examine the specifics using symmetric methods, including experiments and observational research, while asymmetric methods may be further leveraged to expand on the conditions contributing to polarization.