Abstract

In this paper, we present a new ellipsoid-type algorithm for solving nonsmooth problems with convex structure. Examples of such problems include nonsmooth convex minimization problems, convex-concave saddle-point problems and variational inequalities with monotone operator. Our algorithm can be seen as a combination of the standard Subgradient and Ellipsoid methods. However, in contrast to the latter one, the proposed method has a reasonable convergence rate even when the dimensionality of the problem is sufficiently large. For generating accuracy certificates in our algorithm, we propose an efficient technique, which ameliorates the previously known recipes (Nemirovski in Math Oper Res 35(1):52–78, 2010).

Keywords: Subgradient method, Ellipsoid method, Accuracy certificates, Separating oracle, Convex optimization, Nonsmooth optimization, Saddle-point problems, Variational inequalities

Introduction

The Ellipsoid Method is a classical algorithm in Convex Optimization. It was proposed in 1976 by Yudin and Nemirovski [23] as a modified method of centered cross-sections and then independently rediscovered a year later by Shor [21] in the form of the subgradient method with space dilation. However, the Ellipsoid Method became popular only after Khachiyan used it in 1979 to prove his famous result on the polynomial solvability of Linear Programming [10]. Shortly afterwards, several polynomial algorithms based on the Ellipsoid Method were developed for some combinatorial optimization problems [9]. For more details and historical remarks on the Ellipsoid Method, see [2, 3, 14].

Despite its long history, the Ellipsoid Method still has some issues which have not been fully resolved or have been resolved only recently. One of them is the computation of accuracy certificates, which is important for generating approximate solutions to dual problems or for solving general problems with convex structure (saddle-point problems, variational inequalities, etc.). For a long time, the procedure for calculating an accuracy certificate in the Ellipsoid Method required solving an auxiliary piecewise linear optimization problem (see, e.g., sect. 5 and 6 in [14]). Although this auxiliary computation did not use any additional calls to the oracle, it was still computationally expensive and, in some cases, could take even more time than the Ellipsoid Method itself. Only recently has an efficient alternative been proposed [16].

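Another issue with the Ellipsoid Method is related to its poor dependency on the dimensionality of the problem. Consider, e.g., the minimization problem

$$\min_{x \in Q} f(x), \tag{1}$$

where $f : \mathbb{R}^n \to \mathbb{R}$ is a convex function and $Q := \{x \in \mathbb{R}^n : \|x\| \le R\}$ is the Euclidean ball of radius $R > 0$. The Ellipsoid Method for solving (1) can be written as follows (see, e.g., sect. 3.2.8 in [19]):

$$x_{k+1} := x_k - \frac{1}{n+1} \frac{H_k g_k}{\langle g_k, H_k g_k \rangle^{1/2}}, \qquad H_{k+1} := \frac{n^2}{n^2 - 1} \left( H_k - \frac{2}{n+1} \frac{H_k g_k g_k^T H_k}{\langle g_k, H_k g_k \rangle} \right), \qquad k \ge 0, \tag{2}$$

where $x_0 := 0$, $H_0 := R^2 I$ ($I$ is the identity matrix) and $g_k := f'(x_k)$ is an arbitrary nonzero subgradient if $x_k \in Q$, and $g_k$ is an arbitrary separator¹ of $x_k$ from $Q$ if $x_k \notin Q$.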
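Below is a minimal NumPy sketch of iteration (2). It assumes Euclidean coordinates, so that $\langle g, H g \rangle = g^T H g$, and it requires $n \ge 2$, because the factor $n^2/(n^2-1)$ is undefined for $n = 1$. The function name and the quadratic test problem are hypothetical. The sketch also keeps the best value seen at a productive point ($x_k \in Q$), which is the customary way to report a result and is not part of (2) itself.

```python
import numpy as np

def ellipsoid_method(f, subgrad, R, n, iters):
    """Iteration (2) for min f(x) over the ball ||x|| <= R (requires n >= 2)."""
    x = np.zeros(n)                       # x_0 := 0
    H = R**2 * np.eye(n)                  # H_0 := R^2 I
    best_x, best_f = None, np.inf
    for _ in range(iters):
        if np.linalg.norm(x) <= R:        # x_k in Q: use a subgradient of f
            g = subgrad(x)
            fx = f(x)
            if fx < best_f:
                best_x, best_f = x.copy(), fx
        else:                             # x_k outside Q: a multiple of x_k separates it from the ball
            g = x / np.linalg.norm(x)
        if not np.any(g):                 # zero subgradient: x_k is already optimal
            break
        Hg = H @ g
        gHg = g @ Hg
        x = x - Hg / ((n + 1) * np.sqrt(gHg))
        H = n**2 / (n**2 - 1.0) * (H - 2.0 / (n + 1) * np.outer(Hg, Hg) / gHg)
    return best_x, best_f

# Hypothetical test problem: a quadratic whose minimizer c lies inside the unit ball.
c = np.array([0.3, -0.4])
x_best, f_best = ellipsoid_method(lambda x: float(np.sum((x - c) ** 2)),
                                  lambda x: 2.0 * (x - c), R=1.0, n=2, iters=100)
```

Both updates in the loop use the old matrix $H_k$. This is why `Hg` and `gHg` are computed once, before either update.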

To solve problem (1) with accuracy $\epsilon > 0$ (in terms of the function value), the Ellipsoid Method needs

$$O\left(n^2 \ln \frac{MR}{\epsilon}\right) \tag{3}$$

iterations, where $M > 0$ is the Lipschitz constant of $f$ on $Q$ (see theorem 3.2.11 in [19]). This estimate reveals an immediate drawback: it depends directly on the dimension and becomes useless when $n \to \infty$. In particular, we cannot guarantee any reasonable rate of convergence for the Ellipsoid Method when the dimensionality of the problem is sufficiently large.

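Note that the aforementioned drawback is an artifact of the method itself, not its analysis. Indeed, when $n \to \infty$, we have $\frac{1}{n+1} \to 0$, $\frac{n^2}{n^2-1} \to 1$ and $\frac{2}{n+1} \to 0$, so iteration (2) reads

$$x_{k+1} = x_k, \qquad H_{k+1} = H_k, \qquad k \ge 0.$$

Thus, the method stays at the same point and does not make any progress.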

On the other hand, the simplest Subgradient Method for solving (1) possesses the “dimension-independent” $O(M^2 R^2 / \epsilon^2)$ iteration complexity bound (see, e.g., sect. 3.2.3 in [19]). Comparing this estimate with (3), we see that the Ellipsoid Method is significantly faster than the Subgradient Method only when $n$ is not too big compared to $\frac{MR}{\epsilon}$, and significantly slower otherwise. This situation is strange, because the Ellipsoid Method does much more work at every iteration by “improving” the “metric” used for measuring the norm of the subgradients.

In this paper, we propose a new ellipsoid-type algorithm for solving nonsmooth problems with convex structure, which does not have the drawback discussed above. Our algorithm can be seen as a combination of the Subgradient and Ellipsoid methods, and its convergence rate is basically as good as the best of the corresponding rates of these two methods (up to some logarithmic factors). In particular, when $n \to \infty$, the convergence rate of our algorithm coincides with that of the Subgradient Method.

Contents

This paper is organized as follows. In Sect. 2.1, we review the general formulation of a problem with convex structure and the associated notions of accuracy certificate and residual. Our presentation mostly follows [16], with examples taken from [18]. Then, in Sect. 2.2, we introduce the notions of accuracy semicertificate and gap and discuss how they relate to accuracy certificate and residual.

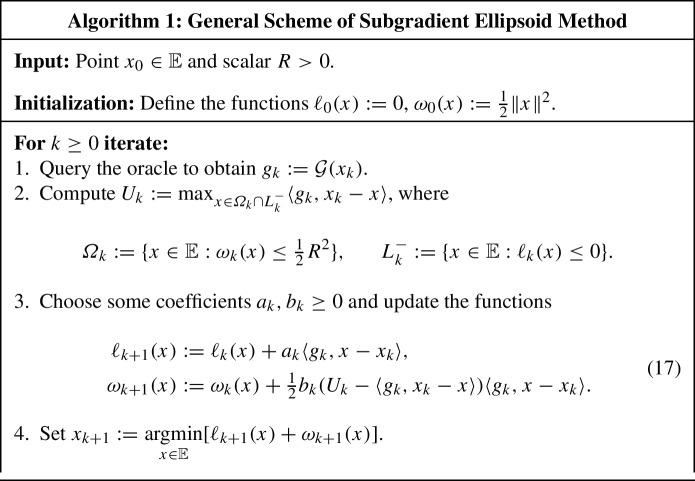

In Sect. 3, we present the general algorithmic scheme of our methods. To measure the convergence rate of this scheme, we introduce the notion of sliding gap and establish some preliminary bounds on it.

In Sect. 4, we discuss different choices of parameters in our general scheme. First, we show that setting some of the parameters to zero yields the standard Subgradient and Ellipsoid methods. Then we consider two less trivial choices, which lead to two new algorithms. The more important of these is the latter, which we call the Subgradient Ellipsoid Method. We demonstrate that the convergence rate of this algorithm is basically as good as the better of the rates of the Subgradient and Ellipsoid methods.

In Sect. 5, we show that, for both our new methods, it is possible to efficiently generate accuracy semicertificates whose gap is upper bounded by the sliding gap. We also compare our approach with the recently proposed technique from [16] for building accuracy certificates for the standard Ellipsoid Method.

In Sect. 6, we discuss how to efficiently implement our general scheme and the procedure for generating accuracy semicertificates. In particular, we show that the time and memory requirements of our scheme are the same as in the standard Ellipsoid Method.

Finally, in Sect. 7, we discuss some open questions.

Notation and generalities

In this paper, $E$ denotes an arbitrary $n$-dimensional real vector space. Its dual space, composed of all linear functionals on $E$, is denoted by $E^*$. The value of $s \in E^*$, evaluated at $x \in E$, is denoted by $\langle s, x \rangle$. See [19, sect. 4.2.1] for the supporting discussion of abstract real vector spaces in Optimization.

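Let us introduce a pair of conjugate Euclidean norms in the spaces $E$ and $E^*$. To this end, let us fix a self-adjoint positive definite linear operator $B : E \to E^*$ and define

$$\|x\| := \langle Bx, x \rangle^{1/2}, \quad x \in E, \qquad \|s\|_* := \langle s, B^{-1} s \rangle^{1/2}, \quad s \in E^*.$$

Note that, for any $s \in E^*$ and $x \in E$, we have the Cauchy-Schwarz inequality

$$|\langle s, x \rangle| \le \|s\|_* \|x\|,$$

which becomes an equality if and only if $s$ and $Bx$ are collinear. In addition to $\|\cdot\|$ and $\|\cdot\|_*$, we often work with other Euclidean norms defined in the same way but using another reference operator instead of $B$. In this case, we write $\|\cdot\|_G$ and $\|\cdot\|_G^*$, where $G : E \to E^*$ is the corresponding self-adjoint positive definite linear operator.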

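Sometimes, in formulas involving products of linear operators, it is convenient to treat $x \in E$ as a linear operator from $\mathbb{R}$ to $E$, defined by $x\alpha := \alpha x$, and $x^*$ as a linear operator from $E^*$ to $\mathbb{R}$, defined by $x^* s := \langle s, x \rangle$. Likewise, any $s \in E^*$ can be treated as a linear operator from $\mathbb{R}$ to $E^*$, defined by $s\alpha := \alpha s$, and $s^*$ as a linear operator from $E$ to $\mathbb{R}$, defined by $s^* x := \langle s, x \rangle$. Then, $xx^*$ and $ss^*$ are rank-one self-adjoint linear operators from $E^*$ to $E$ and from $E$ to $E^*$, respectively, acting as follows: $(xx^*)s = \langle s, x \rangle x$ and $(ss^*)x = \langle s, x \rangle s$ for any $x \in E$ and $s \in E^*$.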

For a self-adjoint linear operator $G : E \to E^*$, by $\operatorname{tr} G$ and $\det G$, we denote the trace and determinant of $G$ with respect to our fixed operator $B$:

$$\operatorname{tr} G := \operatorname{Tr}(B^{-1} G), \qquad \det G := \operatorname{Det}(B^{-1} G).$$

Note that, in these definitions, $B^{-1} G$ is a linear operator from $E$ to $E$, so $\operatorname{Tr}$ and $\operatorname{Det}$ are the standard well-defined notions of trace and determinant of a linear operator acting on the same space. For example, they can be defined as the trace and determinant of the matrix representation of $B^{-1} G$ with respect to an arbitrarily chosen basis in $E$ (the result is independent of the particular choice of basis). Alternatively, $\operatorname{tr} G$ and $\det G$ can be equivalently defined as the sum and product, respectively, of the eigenvalues of $G$ with respect to $B$.
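The following sketch checks these definitions numerically in matrix coordinates. It computes $\operatorname{tr} G$ and $\det G$ from $B^{-1} G$, compares them with the sum and product of the generalized eigenvalues ($G v = \mu B v$), and verifies that a change of basis $x = T y$, under which the matrices transform as $T^T G T$ and $T^T B T$, leaves both values unchanged. The matrices are random examples, and the function names are illustrative only.

```python
import numpy as np

def trace_det_wrt(G, B):
    """tr G := Tr(B^{-1} G) and det G := Det(B^{-1} G), in matrix coordinates."""
    M = np.linalg.solve(B, G)                      # matrix of B^{-1} G : E -> E
    return np.trace(M), np.linalg.det(M)

def eigenvalues_wrt(G, B):
    """Eigenvalues of G with respect to B, i.e. the roots mu of G v = mu B v."""
    L_inv = np.linalg.inv(np.linalg.cholesky(B))   # B = L L^T
    return np.linalg.eigvalsh(L_inv @ G @ L_inv.T) # similar to B^{-1} G

rng = np.random.default_rng(0)
n = 4
A = rng.standard_normal((n, n))
B = A @ A.T + n * np.eye(n)                        # reference operator B (positive definite)
C = rng.standard_normal((n, n))
G = C @ C.T + np.eye(n)                            # another self-adjoint operator G
T = rng.standard_normal((n, n)) + n * np.eye(n)    # change of basis x = T y

tr, det = trace_det_wrt(G, B)
mu = eigenvalues_wrt(G, B)
tr_T, det_T = trace_det_wrt(T.T @ G @ T, T.T @ B @ T)   # same operators, new basis
```

Basis independence holds because $(T^T B T)^{-1} T^T G T = T^{-1} (B^{-1} G) T$ is similar to $B^{-1} G$.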

For a point $x \in E$ and a real $r > 0$, by

$$B(x, r) := \{ y \in E : \|y - x\| \le r \}$$

we denote the closed Euclidean ball with center $x$ and radius $r$.

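Given two solids² $Q, Q_0 \subseteq E$, we can define the relative volume of $Q$ with respect to $Q_0$ by $\operatorname{vol}(Q / Q_0) := \operatorname{Vol}_n(Q^e) / \operatorname{Vol}_n(Q_0^e)$. Here $e$ is an arbitrary basis in $E$, $Q^e, Q_0^e \subseteq \mathbb{R}^n$ are the coordinate representations of the sets $Q, Q_0$ in the basis $e$, and $\operatorname{Vol}_n$ is the Lebesgue measure in $\mathbb{R}^n$. Note that the relative volume is independent of the particular choice of the basis $e$. Indeed, for any other basis $f$, we have $Q^e = T Q^f$ and $Q_0^e = T Q_0^f$, where $T$ is the change-of-basis matrix. Hence $\operatorname{Vol}_n(Q^e) = |\operatorname{Det} T| \operatorname{Vol}_n(Q^f)$ and $\operatorname{Vol}_n(Q_0^e) = |\operatorname{Det} T| \operatorname{Vol}_n(Q_0^f)$, so $\operatorname{Vol}_n(Q^e) / \operatorname{Vol}_n(Q_0^e) = \operatorname{Vol}_n(Q^f) / \operatorname{Vol}_n(Q_0^f)$.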

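It will be convenient for us to define the volume of a solid $Q \subseteq E$ as the relative volume of $Q$ with respect to the unit ball:

$$\operatorname{vol} Q := \operatorname{vol}(Q / B(0, 1)).$$

For an ellipsoid $W := \{x \in E : \langle Gx, x \rangle \le 1\}$, where $G : E \to E^*$ is a self-adjoint positive definite linear operator, we have $\operatorname{vol} W = (\det G)^{-1/2}$.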
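A quick Monte Carlo check of $\operatorname{vol} W = (\det G)^{-1/2}$ follows. To keep it simple, the sketch assumes $B = I$ in two dimensions, so that $\det$ is the ordinary matrix determinant. It also assumes that the ellipsoid fits inside the square $[-1, 1]^2$, which contains the unit disc as well. The matrix $G$ and the function name are arbitrary examples.

```python
import numpy as np

def relative_volume_mc(G, n_samples=400_000, seed=0):
    """Estimate vol W = vol(W / B(0,1)) for W = {x : x^T G x <= 1} in R^2 (B = I).

    Assumes W lies inside the square [-1, 1]^2, which also contains the unit disc,
    so the ratio of hit counts estimates the ratio of areas."""
    rng = np.random.default_rng(seed)
    X = rng.uniform(-1.0, 1.0, size=(n_samples, 2))
    in_W = np.einsum("ij,jk,ik->i", X, G, X) <= 1.0     # x^T G x <= 1
    in_disc = np.einsum("ij,ij->i", X, X) <= 1.0        # ||x|| <= 1
    return in_W.sum() / in_disc.sum()

G = np.array([[4.0, 1.0], [1.0, 2.0]])   # eigenvalues 3 -/+ sqrt(2), so W fits in the square
estimate = relative_volume_mc(G)
exact = np.linalg.det(G) ** -0.5          # (det G)^{-1/2} = 1/sqrt(7)
```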

Convex problems and accuracy certificates

Description and examples

In this paper, we consider numerical algorithms for solving problems with convex structure. The main examples of such problems are convex minimization problems, convex-concave saddle-point problems, convex Nash equilibrium problems, and variational inequalities with monotone operators.

The general formulation of a problem with convex structure involves two objects:

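- Solid $Q \subseteq E$ (called the feasible set), represented by the Separation Oracle: given any point $x \in E$, this oracle can check whether $x \in \operatorname{int} Q$, and if not, it reports a vector $g_Q(x) \in E^* \setminus \{0\}$ which separates $x$ from $Q$:

$$\langle g_Q(x), x - y \rangle \ge 0 \quad \forall y \in Q. \tag{4}$$

- Vector field $g : \operatorname{int} Q \to E^*$, represented by the First-Order Oracle: given any point $x \in \operatorname{int} Q$, this oracle returns the vector $g(x)$.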

In what follows, we only consider the problems satisfying the following condition:

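$$\exists x^* \in Q : \quad \langle g(x), x - x^* \rangle \ge 0 \quad \forall x \in \operatorname{int} Q. \tag{5}$$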

Remark 1

A careful reader may note that the notation $x^*$ in (5) overlaps with our general notation for the linear operator generated by a point $x$ (see Sect. 1). However, there should be no risk of confusion, since the precise meaning of $x^*$ can usually be easily inferred from the context.

A numerical algorithm for solving a problem with convex structure starts at some point $x_0 \in E$. At each step $k \ge 0$, it queries the oracles at the current test point $x_k$ to obtain new information about the problem, and then uses this information to form the next test point $x_{k+1}$. Depending on whether $x_k \in \operatorname{int} Q$, the $k$th step of the algorithm is called productive or nonproductive.

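The total information obtained by the algorithm from the oracles after $k \ge 1$ steps forms its execution protocol, which consists of:

- The test points $x_0, \dots, x_{k-1} \in E$.

- The set of productive steps $I_k := \{0 \le i \le k-1 : x_i \in \operatorname{int} Q\}$.

- The vectors $g_0, \dots, g_{k-1} \in E^*$ reported by the oracles: $g_i := g(x_i)$, if $i \in I_k$, and $g_i := g_Q(x_i)$, if $i \notin I_k$, $0 \le i \le k-1$.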

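An accuracy certificate, associated with the above execution protocol, is a nonnegative vector $\lambda := (\lambda_0, \dots, \lambda_{k-1}) \in \mathbb{R}^k_+$ such that $\sum_{i \in I_k} \lambda_i = 1$ (and, in particular, $I_k \neq \emptyset$). Given any solid $\Omega \subseteq E$ containing $Q$, we can define the following residual of $\lambda$ on $\Omega$:

$$\epsilon_k(\lambda) := \max_{x \in \Omega} \sum_{i=0}^{k-1} \lambda_i \langle g_i, x_i - x \rangle, \tag{6}$$

which is easily computable whenever $\Omega$ is a simple set (e.g., a Euclidean ball). By (4), $\langle g_i, x_i - x \rangle \ge 0$ for all $i \notin I_k$ and all $x \in Q$. Since $Q \subseteq \Omega$, this gives

$$\sum_{i \in I_k} \lambda_i \langle g_i, x_i - x \rangle \le \epsilon_k(\lambda) \quad \forall x \in Q, \tag{7}$$

and, in particular, $\epsilon_k(\lambda) \ge 0$ in view of (5).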
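When $\Omega$ is a Euclidean ball $B(c, \rho)$, the maximum in (6) has a closed form. With $s := \sum_i \lambda_i g_i$, it equals $\sum_i \lambda_i \langle g_i, x_i - c \rangle + \rho \|s\|_*$ and is attained at $x = c - \rho B^{-1} s / \|s\|_*$. The sketch below assumes $B = I$ and uses random, hypothetical test points and oracle outputs. It compares the closed form with the value at that maximizer and with a brute-force search over the sphere.

```python
import numpy as np

def residual_on_ball(lam, points, grads, center, radius):
    """max over x in B(center, radius) of sum_i lam_i <g_i, x_i - x>  (Euclidean case, B = I)."""
    s = lam @ grads                                            # s = sum_i lam_i g_i
    const = lam @ np.einsum("ij,ij->i", grads, points - center)
    return const + radius * np.linalg.norm(s)                  # max of -<s, x - center> is radius*||s||

rng = np.random.default_rng(1)
k, n = 6, 3
points = rng.standard_normal((k, n))       # hypothetical test points x_i
grads = rng.standard_normal((k, n))        # hypothetical oracle outputs g_i
lam = rng.random(k)
lam /= lam.sum()
center, radius = np.zeros(n), 2.0
eps = residual_on_ball(lam, points, grads, center, radius)

# Value at the analytic maximizer x = center - radius * s / ||s||.
s = lam @ grads
x_opt = center - radius * s / np.linalg.norm(s)
val_opt = lam @ np.einsum("ij,ij->i", grads, points - x_opt)

# Brute force over random points of the sphere (a linear function attains its max there).
u = rng.standard_normal((100_000, n))
xs = center + radius * u / np.linalg.norm(u, axis=1, keepdims=True)
vals = lam @ (np.einsum("ij,ij->i", grads, points)[:, None] - grads @ xs.T)
```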

In what follows, we will be interested in algorithms that can produce accuracy certificates $\lambda$ with $\epsilon_k(\lambda) \to 0$ at a certain rate. This is a meaningful goal because, for all known instances of problems with convex structure, the residual upper bounds a certain natural inaccuracy measure for the corresponding problem. Let us briefly review some standard examples (for more examples, see [16, 18] and the references therein).

Example 1

(Convex minimization problem) Consider the problem

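$$f^* := \min_{x \in Q} f(x), \tag{8}$$

where $Q \subseteq E$ is a solid and $f : E \to \mathbb{R} \cup \{+\infty\}$ is closed convex and finite on $\operatorname{int} Q$.

The First-Order Oracle for (8) is $g(x) := f'(x)$, $x \in \operatorname{int} Q$, where $f'(x)$ is an arbitrary subgradient of $f$ at $x$. Clearly, (5) holds for $x^*$ being any solution of (8).

One can verify that, in this example, the residual upper bounds the functional residual. Let $\bar{x}_k := \hat{x}_k := \sum_{i \in I_k} \lambda_i x_i$ or $\bar{x}_k := x_k^* := \operatorname{argmin}\{f(x) : x \in X_k\}$, where $X_k := \{x_i : i \in I_k\}$. Then $\bar{x}_k \in \operatorname{int} Q$ and $f(\bar{x}_k) - f^* \le \epsilon_k(\lambda)$. Indeed, by convexity and (7) with $x = x^*$, we have $f(x_k^*) - f^* \le f(\hat{x}_k) - f^* \le \sum_{i \in I_k} \lambda_i \langle g_i, x_i - x^* \rangle \le \epsilon_k(\lambda)$.

Moreover, $\epsilon_k(\lambda)$ in fact upper bounds the primal-dual gap for a certain dual problem for (8). Indeed, let $f_*(s) := \sup_{x \in E} \{\langle s, x \rangle - f(x)\}$ be the conjugate function of $f$. Then, we can represent (8) in the following dual form:

$$f^* = \max_{s \in E^*} \{ -f_*(s) - \xi_Q(-s) \}, \tag{9}$$

where $\xi_Q(s) := \max_{x \in Q} \langle s, x \rangle$ is the support function of $Q$. Denote $s_k := \sum_{i \in I_k} \lambda_i g_i$. Then, using (7) and the convexity of $f$ and $f_*$, we obtain

$$f(\hat{x}_k) + f_*(s_k) + \xi_Q(-s_k) \le \sum_{i \in I_k} \lambda_i [f(x_i) + f_*(g_i)] + \max_{x \in Q} \langle -s_k, x \rangle = \max_{x \in Q} \sum_{i \in I_k} \lambda_i \langle g_i, x_i - x \rangle \le \epsilon_k(\lambda),$$

where the equality follows from the identity $f(x_i) + f_*(g_i) = \langle g_i, x_i \rangle$, which holds because $g_i$ is a subgradient of $f$ at $x_i$.

Thus, $\hat{x}_k$ and $s_k$ are $\epsilon_k(\lambda)$-approximate solutions (in terms of function value) to problems (8) and (9), respectively. Note that the same is true if we replace $\hat{x}_k$ with $x_k^*$.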
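The chain of inequalities above can be checked on a hypothetical instance of our own choosing: $f(x) = \frac{1}{2}\|x - c\|^2$ on $Q = B(0, R)$ with $B = I$. For this instance, $f^* = 0$ when $\|c\| \le R$, $f_*(s) = \frac{1}{2}\|s\|^2 + \langle s, c \rangle$ and $\xi_Q(s) = R\|s\|$. The sketch below is only an illustration. The test points are random interior points, so every step is productive, and the weights are random.

```python
import numpy as np

rng = np.random.default_rng(2)
n, k, R = 3, 8, 1.0
c = np.array([0.2, -0.1, 0.3])                     # minimizer of f, inside Q, so f^* = 0
f = lambda x: 0.5 * np.sum((x - c) ** 2)
f_conj = lambda s: 0.5 * np.sum(s ** 2) + s @ c    # conjugate f_*(s)
xi_Q = lambda s: R * np.linalg.norm(s)             # support function of the ball B(0, R)

# Random productive test points strictly inside Q and their gradients g_i = f'(x_i).
u = rng.standard_normal((k, n))
points = 0.9 * R * u / np.linalg.norm(u, axis=1, keepdims=True) * rng.random((k, 1))
grads = points - c
lam = rng.random(k)
lam /= lam.sum()                                   # accuracy certificate: weights sum to 1

x_hat = lam @ points                               # primal approximate solution
s_k = lam @ grads                                  # dual approximate solution
eps = lam @ np.einsum("ij,ij->i", grads, points) + R * np.linalg.norm(s_k)   # (6) with Omega = Q
primal_dual_gap = f(x_hat) + f_conj(s_k) + xi_Q(-s_k)
```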

Example 2

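(Convex-concave saddle-point problem) Consider the following problem: Find $(u^*, v^*) \in U \times V$ such that

$$f(u^*, v) \le f(u^*, v^*) \le f(u, v^*) \quad \forall u \in U, \ \forall v \in V, \tag{10}$$

where $U, V$ are solids in some finite-dimensional vector spaces $E_u, E_v$, respectively, and $f : U \times V \to \mathbb{R}$ is a continuous function which is convex-concave, i.e., $f(\cdot, v)$ is convex and $f(u, \cdot)$ is concave for any $u \in U$ and any $v \in V$.

In this example, we set $E := E_u \times E_v$, $Q := U \times V$ and use the First-Order Oracle

$$g(x) := (f'_u(u, v), -f'_v(u, v)), \quad x := (u, v) \in \operatorname{int} Q,$$

where $f'_u(u, v)$ is an arbitrary subgradient of $f(\cdot, v)$ at $u$ and $f'_v(u, v)$ is an arbitrary supergradient of $f(u, \cdot)$ at $v$. Then, for any $x := (u, v) \in \operatorname{int} Q$ and any $x' := (u', v') \in Q$,

$$\langle g(x), x - x' \rangle = \langle f'_u(u, v), u - u' \rangle - \langle f'_v(u, v), v - v' \rangle \ge f(u, v') - f(u', v). \tag{11}$$

In particular, (5) holds for $x^* := (u^*, v^*)$ in view of (10).

Let $\phi : U \to \mathbb{R}$ and $\psi : V \to \mathbb{R}$ be the functions

$$\phi(u) := \max_{v \in V} f(u, v), \qquad \psi(v) := \min_{u \in U} f(u, v).$$

In view of (10), we have $\psi(v) \le f(u^*, v^*) \le \phi(u)$ for all $u \in U$ and $v \in V$. Therefore, the difference $\phi(u) - \psi(v)$ (called the primal-dual gap) can be used for measuring the quality of an approximate solution $x := (u, v) \in Q$ to problem (10).

Writing $x_i =: (u_i, v_i)$, denoting $\hat{x}_k := (\hat{u}_k, \hat{v}_k) := \sum_{i \in I_k} \lambda_i x_i$ and using (7), we obtain

$$\epsilon_k(\lambda) \ge \max_{x \in Q} \sum_{i \in I_k} \lambda_i \langle g_i, x_i - x \rangle \ge \max_{(u, v) \in Q} \sum_{i \in I_k} \lambda_i [f(u_i, v) - f(u, v_i)] \ge \max_{(u, v) \in Q} [f(\hat{u}_k, v) - f(u, \hat{v}_k)] = \phi(\hat{u}_k) - \psi(\hat{v}_k),$$

where the second inequality is due to (11) and the last one follows from the convexity-concavity of $f$. Thus, the residual $\epsilon_k(\lambda)$ upper bounds the primal-dual gap for the approximate solution $\hat{x}_k$.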
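As an illustration, take the hypothetical bilinear instance $f(u, v) = u^T A v$ on the boxes $U = [-1, 1]^p$, $V = [-1, 1]^q$, with coordinates such that $B = I$. Here $\phi(u) = \|A^T u\|_1$ and $\psi(v) = -\|A v\|_1$, and the residual (6) on $\Omega = Q$ is the maximum of a linear function over a box, i.e. an $\ell_1$ norm. In this bilinear case, every inequality in the chain above turns out to be an equality. The sketch below, which uses random test points and weights, confirms this numerically.

```python
import numpy as np

rng = np.random.default_rng(3)
p, q, k = 3, 4, 10
A = rng.standard_normal((p, q))            # f(u, v) = u^T A v is convex-concave (bilinear)
U_pts = rng.uniform(-1, 1, size=(k, p))    # hypothetical productive test points u_i
V_pts = rng.uniform(-1, 1, size=(k, q))    # and v_i, inside the open boxes
lam = rng.random(k)
lam /= lam.sum()

# Oracle outputs g_i = (f'_u, -f'_v) = (A v_i, -A^T u_i), stored row-wise.
G_u = V_pts @ A.T
G_v = -U_pts @ A

# Residual (6) on Omega = Q = [-1, 1]^(p+q): sum_i lam_i <g_i, x_i> + ||s||_1.
lin = lam @ (np.einsum("ij,ij->i", G_u, U_pts) + np.einsum("ij,ij->i", G_v, V_pts))
s_u, s_v = lam @ G_u, lam @ G_v
residual = lin + np.abs(s_u).sum() + np.abs(s_v).sum()

u_hat, v_hat = lam @ U_pts, lam @ V_pts
phi = np.abs(A.T @ u_hat).sum()            # phi(u) = max_{v in V} u^T A v
psi = -np.abs(A @ v_hat).sum()             # psi(v) = min_{u in U} u^T A v
```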

Example 3

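(Variational inequality with monotone operator) Let $Q \subseteq E$ be a solid and let $V : Q \to E^*$ be a continuous operator which is monotone, i.e., $\langle V(x) - V(y), x - y \rangle \ge 0$ for all $x, y \in Q$. The goal is to solve the following (weak) variational inequality:

$$\text{Find } x^* \in Q : \quad \langle V(x), x - x^* \rangle \ge 0 \quad \forall x \in Q. \tag{12}$$

Since $V$ is continuous, this problem is equivalent to its strong variant: find $x^* \in Q$ such that $\langle V(x^*), x - x^* \rangle \ge 0$ for all $x \in Q$.

A standard tool for measuring the quality of an approximate solution to (12) is the dual gap function, introduced in [1]:

$$f(x) := \max_{y \in Q} \langle V(y), x - y \rangle, \quad x \in Q.$$

It is easy to see that f is a convex nonnegative function which equals 0 exactly at the solutions of (12).

In this example, the First-Order Oracle is defined by $g(x) := V(x)$ for any $x \in \operatorname{int} Q$. Denote $\hat{x}_k := \sum_{i \in I_k} \lambda_i x_i$. Then, using (7) and the monotonicity of $V$, we obtain

$$\epsilon_k(\lambda) \ge \max_{y \in Q} \sum_{i \in I_k} \lambda_i \langle V(x_i), x_i - y \rangle \ge \max_{y \in Q} \sum_{i \in I_k} \lambda_i \langle V(y), x_i - y \rangle = \max_{y \in Q} \langle V(y), \hat{x}_k - y \rangle = f(\hat{x}_k).$$

Thus, $\epsilon_k(\lambda)$ upper bounds the dual gap function for the approximate solution $\hat{x}_k$.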
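The bound can be tested on a hypothetical affine operator $V(x) = Mx + b$, where $M$ is a positive semidefinite matrix plus a skew-symmetric one. Such an operator is monotone, since $\langle M(x - y), x - y \rangle \ge 0$. The sketch takes $Q = B(0, 1)$ with $B = I$. The exact dual gap $f(\hat{x}_k)$ would require a maximization over $Q$, so the sketch only evaluates $\langle V(y), \hat{x}_k - y \rangle$ at random points $y \in Q$. These values are lower bounds on $f(\hat{x}_k)$, and each one must stay below the residual.

```python
import numpy as np

rng = np.random.default_rng(4)
n, k = 3, 12
P = rng.standard_normal((n, n))
K = rng.standard_normal((n, n))
M = P @ P.T + (K - K.T)             # PSD part plus skew part: x -> M x + b is monotone
b = rng.standard_normal(n)
V = lambda x: x @ M.T + b           # applied row-wise to arrays of points

def in_ball(m, r):
    """m random points strictly inside the Euclidean ball of radius r."""
    u = rng.standard_normal((m, n))
    return r * rng.random((m, 1)) * u / np.linalg.norm(u, axis=1, keepdims=True)

points = in_ball(k, 1.0)            # hypothetical productive test points in int Q, Q = B(0, 1)
lam = rng.random(k)
lam /= lam.sum()
g = V(points)
s = lam @ g
residual = lam @ np.einsum("ij,ij->i", g, points) + np.linalg.norm(s)   # (6) with Omega = Q

x_hat = lam @ points
ys = in_ball(50_000, 1.0)
dual_gap_lower = np.max(np.einsum("ij,ij->i", V(ys), x_hat - ys))      # sampled lower bound on f(x_hat)
```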

Establishing convergence of residual

For the algorithms, considered in this paper, instead of accuracy certificates and residuals, it turns out to be more convenient to speak about closely related notions of accuracy semicertificates and gaps, which we now introduce.

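As before, let $x_0, \dots, x_{k-1} \in E$ be the test points generated by the algorithm after $k \ge 1$ steps, and let $g_0, \dots, g_{k-1} \in E^*$ be the corresponding oracle outputs. An accuracy semicertificate, associated with this information, is a nonnegative vector $\lambda := (\lambda_0, \dots, \lambda_{k-1}) \in \mathbb{R}^k_+$ such that $\sum_{i=0}^{k-1} \lambda_i = 1$. Given any solid $\Omega \subseteq E$ containing $Q$, the gap of $\lambda$ on $\Omega$ is defined in the following way:

$$\delta_k(\lambda) := \max_{x \in \Omega} \sum_{i=0}^{k-1} \lambda_i \langle g_i, x_i - x \rangle. \tag{13}$$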

Comparing these definitions with those of accuracy certificate and residual, we see that the only difference between them is that now we use a different “normalizing” coefficient: instead of . Also, in the definitions of semicertificate and gap, we do not make any distinction between productive and nonproductive steps. Note that .

Let us demonstrate that by making the gap sufficiently small, we can make the corresponding residual sufficiently small as well. For this, we need the following standard assumption about our problem with convex structure (see, e.g., [16]).

Assumption 1

The vector field $g$, reported by the First-Order Oracle, is semibounded:

$$\sup_{x, y \in \operatorname{int} Q} \langle g(x), y - x \rangle < +\infty.$$

A classical example of a semibounded field is a bounded one: if there is , such that for all , then g is semibounded with , where D is the diameter of Q. However, there exist other examples. For instance, if g is the subgradient field of a convex function , which is finite and continuous on Q, then g is semibounded with (variation of f on Q); however, g is not bounded if f is not Lipschitz continuous (e.g., on ). Another interesting example is the subgradient field g of a -self-concordant barrier for the set Q; in this case, g is semibounded with (see, e.g., [19, Theorem 5.3.7]), while at the boundary of Q.
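The following sketch illustrates how semiboundedness can hold without boundedness. The example is our own hypothetical choice: $f(x) = -\sqrt{x}$ on $Q = [0, 1]$. This $f$ is convex and continuous on $Q$, and its variation is $\max_Q f - \min_Q f = 1$. Its derivative $-1/(2\sqrt{x})$, however, is unbounded near $0$. The sketch evaluates $\langle g(x), y - x \rangle$ on a grid of interior points and checks that it never exceeds the variation.

```python
import numpy as np

f = lambda x: -np.sqrt(x)                   # hypothetical convex, continuous, non-Lipschitz f on [0, 1]
g = lambda x: -0.5 / np.sqrt(x)             # its derivative on int Q = (0, 1)

xs = np.linspace(1e-8, 1.0 - 1e-8, 801)     # grid of interior points of Q = [0, 1]
X, Y = np.meshgrid(xs, xs, indexing="ij")   # X[i, j] = xs[i], Y[i, j] = xs[j]
pairing = g(X) * (Y - X)                    # <g(x), y - x> over all grid pairs
variation = f(0.0) - f(1.0)                 # max_Q f - min_Q f = 0 - (-1) = 1
```

The check works because convexity gives $\langle g(x), y - x \rangle \le f(y) - f(x) \le \max_Q f - \min_Q f$.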

Lemma 1

Let be a semicertificate such that , where r is the largest of the radii of Euclidean balls contained in Q. Then, is a certificate and

Proof

Denote , , . Let be such that . For each , let be a maximizer of on . Then, for any , we have with . Therefore,

| 14 |

where the inequality follows from the separation property (4) and Assumption 1.

Let be arbitrary. Denoting , we obtain

| 15 |

where the inequalities follow from the definition (13) of and (14), respectively.

It remains to show that is a certificate, i.e., . But this is simple. Indeed, if , then, taking in (15) and using (14), we get , which contradicts our assumption that is a semicertificate, i.e., .

According to Lemma 1, from the convergence rate of the gap to zero, we can easily obtain the corresponding convergence rate of the residual . In particular, to ensure that for some , it suffices to make . For this reason, in the rest of this paper, we can focus our attention on studying the convergence rate only for the gap.

General algorithmic scheme

Consider the general scheme presented in Algorithm 1. This scheme works with an arbitrary oracle satisfying the following condition:

| 16 |

The point from (16) is typically called a solution of our problem. For the general problem with convex structure, represented by the First-Order Oracle g and the Separation Oracle for the solid Q, the oracle is usually defined as follows: , if , and , otherwise. To ensure that (16) holds, the constant R needs to be chosen sufficiently big so that .

Note that, in Algorithm 1, are strictly convex quadratic functions and are affine functions. Therefore, the sets are certain ellipsoids and are certain halfspaces (possibly degenerate).

Let us show that Algorithm 1 is a cutting-plane scheme in which the sets are the localizers of the solution .

Lemma 2

In Algorithm 1, for all , we have and , where .

Proof

Let us prove the claim by induction. Clearly, , , hence by (16). Suppose we have already proved that for some . Combining this with (16), we obtain , so it remains to show that . Let () be arbitrary. Note that . Hence, by (17), and , which means that .

Next, let us establish an important representation of the ellipsoids via the functions and the test points . For this, let us define for each . Observe that these operators satisfy the following simple relations (cf. (17)):

| 18 |

Also, let us define the sequence by the recurrence

| 19 |

Lemma 3

In Algorithm 1, for all , we have

In particular, for all and all , we have .

Proof

Let be the function . Note that is a quadratic function with Hessian and minimizer . Hence, for any , we have

| 20 |

where .

Let us compute . Combining (17), (18) and (20), for any , we obtain

| 21 |

Therefore,

| 22 |

where the last identity follows from the fact that (since in view of (18)). Since (22) is true for any and since , we thus obtain, in view of (19),

| 23 |

Let be arbitrary. Using the definition of and (23), we obtain

Thus, . In particular, for any , we have and .

Lemma 3 has several consequences. First, we see that the localizers are contained in the ellipsoids whose centers are the test points .

Second, we get a uniform upper bound on the function on the ellipsoid : for all . This observation leads us to the following definition of the sliding gap:

| 24 |

provided that . According to our observation, we have

| 25 |

At the same time, in view of Lemma 2 and (16),

Comparing the definition (24) of the sliding gap with the definition (13) of the gap for the semicertificate , we see that they are almost identical. The only difference between them is that the solid , over which the maximum is taken in the definition of the sliding gap, depends on the iteration counter k. This seems to be unfortunate because we cannot guarantee that each contains the feasible set Q (as required in the definition of gap) even if so does the initial solid . However, this problem can be dealt with. Namely, in Sect. 5, we will show that the semicertificate can be efficiently converted into another semicertificate for which when taken over the initial solid . Thus, the sliding gap is a meaningful measure of convergence rate of Algorithm 1, and it makes sense to call the coefficients a preliminary semicertificate.

Let us now demonstrate that, for a suitable choice of the coefficients and in Algorithm 1, we can ensure that the sliding gap converges to zero.

Remark 2

From now on, in order to avoid taking into account some trivial degenerate cases, it will be convenient to make the following minor technical assumption:

Indeed, when the oracle reports for some , it usually means that the test point , at which the oracle was queried, is, in fact, an exact solution to our problem. For example, if the standard oracle for a problem with convex structure has reported , we can terminate the method and return the certificate for which the residual .

Let us choose the coefficients and in the following way:

| 26 |

where are certain coefficients to be chosen later.

According to (25), to estimate the convergence rate of the sliding gap, we need to estimate the rate of growth of the coefficients and from above and below, respectively. Let us do this.

Lemma 4

In Algorithm 1 with parameters (26), for all , we have

| 27 |

where , , and can be chosen arbitrarily. Moreover, if for all , then, for all with .

Proof

By the definition of and Lemma 3, we have

| 28 |

At the same time, in view of Lemma 2 and (16). Hence,

where the identity follows from (26). Combining this with (19), we obtain

| 29 |

Note that, for any and any , we have

(look at the minimum of the right-hand side in ). Therefore, for arbitrary ,

where we denote and . Dividing both sides by , we get

Since this is true for any , we thus obtain, in view of (19), that

Multiplying both sides by and using that , we come to (27).

When for all , we have and for all . Therefore, by Lemma 3, and hence (28) is, in fact, an equality. Consequently, (29) becomes , where .

Remark 3

From the proof, one can see that the quantity in Lemma 4 can be improved up to .

Lemma 5

In Algorithm 1 with parameters (26), for all , we have

| 30 |

Proof

By the definition of and (26), we have

where . Let us estimate each sum from below separately.

For the first sum, we can use the trivial bound , which is valid for any (since in view of (18)). This gives us .

Let us estimate the second sum. According to (19), for any , we have . Hence, and it remains to lower bound . By (18) and (26), and for all . Therefore,

where we have applied the arithmetic-geometric mean inequality. Combining the obtained estimates, we get (30).

Main instances of general scheme

Let us now consider several possibilities for choosing the coefficients , and in (26).

Subgradient method

The simplest possibility is to choose

In this case, for all , so and for all and all (see (17) and (18)). Consequently, the new test points in Algorithm 1 are generated according to the following rule:

where . Thus, Algorithm 1 is the Subgradient Method: .

In this example, each ellipsoid is simply a ball: for all . Hence, the sliding gap , defined in (24), does not “slide” and coincides with the gap of the semicertificate on the solid :

In view of Lemmas 4 and 5, for all , we have

(tend in Lemma 4). Substituting these estimates into (25), we obtain the following well-known estimate for the gap in the Subgradient Method:

The standard strategies for choosing the coefficients are as follows (see, e.g., sect. 3.2.3 in [19]):

- We fix in advance the number of iterations of the method and use constant coefficients , . This corresponds to the so-called Short-Step Subgradient Method, for which we have

- Alternatively, we can use time-varying coefficients , . This approach does not require us to fix in advance the number of iterations k. However, the corresponding convergence rate estimate becomes slightly worse:

(Indeed, , while .)
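A minimal sketch of the two strategies is given below. It uses a normalized, projection-free subgradient iteration $x_{i+1} = x_i - \alpha_i R\, g_i / \|g_i\|$ with $B = I$, $x_0 = 0$ and $Q = B(0, R)$. The step normalization $\alpha_i R / \|g_i\|$ is an assumption of this sketch, chosen to mirror the coefficients above. The test function, the separator and all names are hypothetical. The sketch reports the best function value over productive steps.

```python
import numpy as np

def subgradient_method(f, subgrad, R, n, alphas):
    """x_{i+1} = x_i - alpha_i * R * g_i / ||g_i|| with x_0 = 0 and Q = B(0, R).

    Uses f'(x_i) when ||x_i|| < R (productive step) and the separator x_i otherwise;
    returns the best function value over the productive steps."""
    x = np.zeros(n)
    best = np.inf
    for alpha in alphas:
        if np.linalg.norm(x) < R:
            g = subgrad(x)
            best = min(best, f(x))
        else:
            g = x                                   # separates x from the ball Q
        x = x - alpha * R * g / np.linalg.norm(g)
    return best

c = np.array([0.3, -0.2, 0.1, 0.0, 0.25])           # hypothetical minimizer, ||c|| = 0.45
f = lambda x: float(np.abs(x - c).sum())             # f^* = 0, Lipschitz constant sqrt(5)
sg = lambda x: np.where(x >= c, 1.0, -1.0)           # a subgradient of f that is never zero
k = 10_000
best_short = subgradient_method(f, sg, 1.0, 5, np.full(k, 1 / np.sqrt(k)))          # constant alpha_i
best_vary = subgradient_method(f, sg, 1.0, 5, 1 / np.sqrt(np.arange(1, k + 1)))     # alpha_i = 1/sqrt(i+1)
```

With the constant coefficients, the number of iterations $k$ must be fixed in advance. The time-varying coefficients avoid this, at the price of the extra logarithmic factor noted above.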

Remark 4

If we allow projections onto the feasible set, then, for the resulting Subgradient Method with time-varying coefficients , one can establish the convergence rate for the “truncated” gap

where , . For more details, see sect. 5.2.1 in [2] or sect. 3.1.1 in [12].

Standard ellipsoid method

Another extreme choice is the following one:

| 31 |

For this choice, we have for all . Hence, and for all . Therefore, the localizers in this method are the following ellipsoids (see Lemma 3):

| 32 |

Observe that, in this example, for all , so there is no preliminary semicertificate and the sliding gap is undefined. However, we can still ensure the convergence to zero of a certain meaningful measure of optimality, namely, the average radius of the localizers :

| 33 |

Indeed, let us define the following functions for any real :

| 34 |

According to Lemma 4, for any , we have

| 35 |

At the same time, in view of (18) and (26), for all . Combining this with (32)–(34), we obtain, for any , that

| 36 |

Let us now choose which minimizes . For such computations, the following auxiliary result is useful (see Sect. A for the proof).

Lemma 6

For any and any , the function , defined in (34), attains its minimum at a unique point

| 37 |

with the corresponding value .

Applying Lemma 6 to (36), we see that the optimal value of is

| 38 |

for which . With this choice of , we obtain, for all , that

| 39 |

One can check that Algorithm 1 with parameters (26), (31) and (38) is, in fact, the standard Ellipsoid Method (see Remark 6).

Ellipsoid method with preliminary semicertificate

As we have seen, we cannot measure the convergence rate of the standard Ellipsoid Method using the sliding gap because there is no preliminary semicertificate in this method. Let us present a modification of the standard Ellipsoid Method which does not have this drawback but still enjoys the same convergence rate as the original method (up to some absolute constants).

For this, let us choose the coefficients in the following way:

| 40 |

Then, in view of Lemma 4, for all , we have

| 41 |

Also, by Lemma 5, for all . Thus, for each , we obtain the following estimate for the sliding gap (see (25)):

| 42 |

where and is defined in (34).

Note that the main factor in estimate (42) is . Let us choose by minimizing this expression. Applying Lemma 6, we obtain

| 43 |

Theorem 1

In Algorithm 1 with parameters (26), (40), (43), for all ,

Proof

Suppose . According to Lemma 6, we have . Hence, by (42), . It remains to estimate from below .

Since , we have . Hence, . Note that the function is increasing on . Therefore, using (43), we obtain . Thus, for our choice of .

Now suppose . Then, . Therefore, it suffices to prove that or, in view of (24), that , where and are arbitrary. Note that since (see (18)). Hence, it remains to prove that .

Recall from (18) and (19) that and . Therefore,

where the penultimate inequality follows from Lemmas 2 and 3. According to (41), (recall that ). Thus, it remains to show that . But this is immediate. Indeed, by (34) and (43), we have , so .

Subgradient ellipsoid method

The previous algorithm still shares the drawback of the original Ellipsoid Method, namely, it does not work when . To eliminate this drawback, let us choose similarly to how this is done in the Subgradient Method.

Consider the following choice of parameters:

| 44 |

where are certain coefficients (to be specified later) and is defined in (37).

Theorem 2

In Algorithm 1 with parameters (26) and (44), where , we have, for all ,

| 45 |

Proof

Applying Lemma 4 with and using (44), we obtain

| 46 |

At the same time, by Lemma 5, we have

| 47 |

Note that by (44). Since , we thus obtain

| 48 |

where the last two inequalities follow from (44). Therefore, by (25), (46) and (48),

where is defined in (34). Observe that, for our choice of , by Lemma 6, we have . This proves the second estimate³ in (45).

On the other hand, dropping the second term in (47), we can write

| 49 |

Suppose . Then, from (34) and (44), it follows that

Hence, by (46), . Combining this with (25) and (49), we obtain

By numerical evaluation, one can verify that, for our choice of , we have . This proves the first estimate in (45).

Exactly as in the Subgradient Method, we can use the following two strategies for choosing the coefficients :

- We fix in advance the number of iterations of the method and use constant coefficients , . In this case,

| 50 |

- We use time-varying coefficients , . In this case,

Let us discuss convergence rate estimate (50). Up to absolute constants, this estimate is exactly the same as in the Subgradient Method when and as in the Ellipsoid Method when . In particular, when , we recover the convergence rate of the Subgradient Method.

To provide a better interpretation of the obtained results, let us compare the convergence rates of the Subgradient and Ellipsoid methods:

To compare these rates, let us look at their squared ratio:

Let us find out for which values of k the rate of the Subgradient Method is better than that of the Ellipsoid Method and vice versa. We assume that .

Note that the function is strictly decreasing on and strictly increasing on (indeed, its derivative equals ). Hence, is strictly decreasing in k for and strictly increasing in k for . Since , we have . At the same time, when . Therefore, there exists a unique integer such that for all and for all .

Let us estimate . Clearly, for any , we have

while, for any , we have

Hence,

Thus, up to an absolute constant, is the switching moment, starting from which the rate of the Ellipsoid Method becomes better than that of the Subgradient Method.
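To get a feel for the switching moment, the sketch below assumes model rates of the form $MR/\sqrt{k}$ for the Subgradient Method and $MR\, e^{-k/(2n^2)}$ for the Ellipsoid Method. These exact forms and constants are an assumption made for illustration. Under this assumption, the squared ratio of the two rates is $e^{k/n^2}/k$. The sketch finds the first $k \ge 2$ from which this ratio is at least $1$, i.e. the point after which the ellipsoid rate is the smaller of the two, and then compares it with $n^2 \ln n$.

```python
import math

def switching_moment(n):
    """Smallest integer k >= 2 with exp(k / n**2) / k >= 1, under the assumed model rates.

    h(k) = k / n**2 - ln k is convex with h(2) < 0 and h(n**2) < 0 for n >= 2,
    so h < 0 on [2, n**2] and h increases afterwards: bracket, then bisect."""
    assert n >= 2
    h = lambda k: k / n**2 - math.log(k)
    lo, hi = n**2, 2 * n**2             # h(lo) < 0
    while h(hi) < 0:
        lo, hi = hi, 2 * hi
    while hi - lo > 1:                  # invariant: h(lo) < 0 <= h(hi)
        mid = (lo + hi) // 2
        if h(mid) < 0:
            lo = mid
        else:
            hi = mid
    return hi

for n in (10, 100, 1000):
    k0 = switching_moment(n)
    print(n, k0, round(k0 / (n**2 * math.log(n)), 2))
```

For these values of $n$, the printed ratio $k_0/(n^2 \ln n)$ stays between 2 and 3, which is consistent with the claim that $n^2 \ln n$ is the switching moment up to an absolute constant.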

Returning to our obtained estimate (50), we see that, ignoring absolute constants and ignoring the “small” region of the values of k between and , our convergence rate is basically the best of the corresponding convergence rates of the Subgradient and Ellipsoid methods.

Constructing accuracy semicertificate

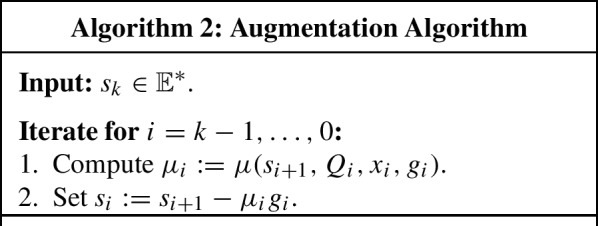

Let us show how to convert a preliminary accuracy semicertificate, produced by Algorithm 1, into a semicertificate whose gap on the initial solid is upper bounded by the sliding gap. The key ingredient here is the following auxiliary algorithm which was first proposed in [16] for building accuracy certificates in the standard Ellipsoid Method.

Augmentation algorithm

Let be an integer and let be solids in such that

| 51 |

where , . Further, suppose that, for any and any , we can compute a dual multiplier such that

| 52 |

(provided that certain regularity conditions hold). Let us abbreviate any solution of this problem by .

Consider now the following routine.

Lemma 7

Let be generated by Algorithm 2. Then,

Proof

Indeed, at every iteration , we have

Summing up these inequalities for , we obtain

where the identity follows from the fact that .

Methods with preliminary certificate

Let us apply the Augmentation Algorithm for building an accuracy semicertificate for Algorithm 1. We only consider those instances for which so that the sliding gap is well-defined:

Recall that the vector is called a preliminary semicertificate.

For technical reasons, it will be convenient to add the following termination criterion into Algorithm 1:

| 53 |

where is a fixed constant. Depending on whether this termination criterion has been satisfied at iteration k, we call it a terminal or nonterminal iteration, respectively.

Remark 5

In practice, one can set to an arbitrarily small value (within machine precision) if the desired target accuracy is unknown. As can be seen from the subsequent discussion, the main purpose of the termination criterion (53) is to ensure that never becomes equal to zero during the iterations of Algorithm 1. This guarantees the existence of dual multiplier in (52) for any at every nonterminal iteration. The case corresponds to the degenerate situation when Algorithm 1 has “accidentally” found an exact solution.

Let be an iteration of Algorithm 1. According to Lemma 2, the sets satisfy (51). Since the method has not been terminated during the course of the previous iterations, we have⁴ for all . Therefore, for any , there exists such that . This guarantees the existence of dual multiplier in (52).

Let us apply Algorithm 2 to in order to obtain dual multipliers . From Lemma 7, it follows that

(note that ). Thus, defining , we obtain and

Thus, is a semicertificate whose gap on is bounded by the sliding gap .

If is a terminal iteration, then, by the termination criterion and the definition of (see Algorithm 1), we have . In this case, we apply Algorithm 2 to to obtain dual multipliers . By the same reasoning as above but with the vector instead of , we can obtain that , where .

Standard ellipsoid method

In the standard Ellipsoid Method, there is no preliminary semicertificate. Therefore, we cannot apply the above procedure. However, in this method, it is still possible to generate an accuracy semicertificate, although the corresponding procedure is slightly more involved. Let us now briefly describe this procedure and discuss how it differs from the previous approach. For details, we refer the reader to [16].

Let be an iteration of the method. There are two main steps. The first step is to find a direction , in which the “width” of the ellipsoid (see (32)) is minimal:

It is not difficult to see that is given by the unit eigenvector⁵ of the operator , corresponding to the largest eigenvalue. For the corresponding minimal “width” of the ellipsoid, we have the following bound via the average radius:

| 54 |

where . Recall that in view of (39).
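The first step can be illustrated in ℝ² under simplifying assumptions. We take B to be the identity and write the ellipsoid as W = {x : (x - c)ᵀG(x - c) ≤ R²}, which is our stand-in for (32). The width of W along a unit direction e is 2R·sqrt(eᵀG⁻¹e), and this is smallest along the eigenvector of G with the largest eigenvalue λ_max. Since λ_max is at least the geometric mean of the eigenvalues, the minimal width is at most twice the geometric mean of the semi-axes R/sqrt(λ_i). We take that geometric mean to be the average radius, which gives a bound of the same flavor as (54). The function names are hypothetical.

```python
import math

def min_width_direction(G, R):
    """W = {x : (x - c)^T G (x - c) <= R^2} in R^2 with G = ((a, b), (b, d)) SPD.

    Returns the unit direction e minimizing the width 2 R sqrt(e^T G^{-1} e),
    i.e. the top eigenvector of G, together with the minimal width.
    """
    (a, b), (_, d) = G
    lam_max = 0.5 * (a + d) + math.hypot(0.5 * (a - d), b)  # largest eigenvalue
    if b != 0.0:
        v = (lam_max - d, b)  # solves (G - lam_max I) v = 0
    else:
        v = (1.0, 0.0) if a >= d else (0.0, 1.0)
    nv = math.hypot(v[0], v[1])
    return (v[0] / nv, v[1] / nv), 2.0 * R / math.sqrt(lam_max)

def width(G, R, e):
    """Width of W along the unit direction e: 2 R sqrt(e^T G^{-1} e)."""
    (a, b), (_, d) = G
    det = a * d - b * b
    quad = (d * e[0] ** 2 - 2.0 * b * e[0] * e[1] + a * e[1] ** 2) / det
    return 2.0 * R * math.sqrt(quad)

def avg_radius(G, R):
    """Geometric mean of the semi-axes R / sqrt(lambda_i) for n = 2."""
    (a, b), (_, d) = G
    return R / (a * d - b * b) ** 0.25

G, R = ((5.0, 2.0), (2.0, 2.0)), 1.5
e, w_min = min_width_direction(G, R)
```

Here G has eigenvalues 6 and 1. The minimal width is therefore 2R/√6, attained along (2, 1)/√5.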

At the second step, we apply Algorithm 2 twice with the sets : first, to the vector to obtain dual multipliers and then to the vector to obtain dual multipliers . By Lemma 7 and (54), we have

(note that ). Consequently, for , we obtain

Finally, one can show that

where D is the diameter of Q and r is the largest radius of a Euclidean ball contained in Q. Thus, whenever , is a semicertificate with the following gap on :

Compared with the standard Ellipsoid Method, in the Subgradient Ellipsoid methods the presence of the preliminary semicertificate removes the need to find the minimal-“width” direction and requires only one run of the Augmentation Algorithm.

Implementation details

Explicit representations

In the implementation of Algorithm 1, instead of the operators , it is better to work with their inverses . Applying the Sherman-Morrison formula to (18), we obtain the following update rule for :

| 55 |
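Since (18) is not reproduced here, the following sketch assumes a rank-one update of the generic form G₊ = αG + βggᵀ with α > 0. The symbols α and β are placeholders for the coefficients of (18). With H = G⁻¹ symmetric, the Sherman–Morrison formula gives H₊ = (H - β(Hg)(Hg)ᵀ/(α + βgᵀHg))/α. This costs O(n²) operations instead of the O(n³) needed for a fresh inversion. The usage lines check that G₊H₊ ≈ I on a small example.

```python
def sherman_morrison_update(H, g, alpha, beta):
    """Given H = G^{-1} (symmetric), return (alpha G + beta g g^T)^{-1} in O(n^2).

    (alpha G + beta g g^T)^{-1} = (H - beta (H g)(H g)^T / (alpha + beta g^T H g)) / alpha
    """
    n = len(g)
    Hg = [sum(H[i][j] * g[j] for j in range(n)) for i in range(n)]
    denom = alpha + beta * sum(gi * hi for gi, hi in zip(g, Hg))
    if alpha == 0.0 or denom == 0.0:
        raise ZeroDivisionError("the updated operator is singular")
    # H symmetric, so g^T H = (H g)^T and the correction is (Hg)(Hg)^T.
    return [[(H[i][j] - beta * Hg[i] * Hg[j] / denom) / alpha for j in range(n)]
            for i in range(n)]

# Usage: a diagonal G, so that H = G^{-1} is known exactly.
G = [[2.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 4.0]]
H = [[0.5, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.25]]
g, alpha, beta = [1.0, -2.0, 0.5], 0.9, 0.3
H_new = sherman_morrison_update(H, g, alpha, beta)
G_new = [[alpha * G[i][j] + beta * g[i] * g[j] for j in range(3)] for i in range(3)]
product = [[sum(G_new[i][k] * H_new[k][j] for k in range(3)) for j in range(3)]
           for i in range(3)]
```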

Let us now obtain an explicit formula for the next test point . This has already been partly done in the proof of Lemma 3. Indeed, recall that is the minimizer of the function . From (21), we see that . Combining it with (55), we obtain

| 56 |

Finally, one can obtain the following explicit representations for and :

| 57 |

where, for any ,

| 58 |

Indeed, recalling the definition of the functions , we see that for all . Therefore, . Further, by Lemma 3, . Note that for any . Hence, .

Remark 6

Now we can justify the claim made in Sect. 4.2 that Algorithm 1 with parameters (26), (31) and (38) is the standard Ellipsoid Method. Indeed, from (26) and (32), we see that and . Also, in view of (38), . Hence, by (56) and (55),

| 59 |

Further, according to (35) and (38), for any , we have , where . Thus, method (59) indeed coincides⁶ with the standard Ellipsoid Method (2) under the change of variables .
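For reference, here is the textbook central-cut update in the form we assume (2) takes. It is written for E = {x : (x - c)ᵀH⁻¹(x - c) ≤ 1}:

- c₊ = c - Hg/((n+1)·sqrt(gᵀHg))
- H₊ = n²/(n²-1)·(H - 2/(n+1)·HggᵀH/(gᵀHg))

This is a sketch, not a transcription of (2). It checks the update's two defining properties in ℝ². First, the new ellipsoid contains the half-ellipsoid {x ∈ E : ⟨g, x - c⟩ ≤ 0}. Second, the volume shrinks by the factor n/(n+1)·(n²/(n²-1))^((n-1)/2), which is below exp(-1/(2(n+1))).

```python
import math
import random

def ellipsoid_step(c, H, g):
    """Textbook central-cut update of E = {x : (x - c)^T H^{-1} (x - c) <= 1}."""
    n = len(c)
    Hg = [sum(H[i][j] * g[j] for j in range(n)) for i in range(n)]
    q = math.sqrt(sum(a * b for a, b in zip(g, Hg)))
    d = [v / q for v in Hg]
    c_new = [ci - di / (n + 1) for ci, di in zip(c, d)]
    f = n * n / (n * n - 1.0)
    H_new = [[f * (H[i][j] - 2.0 / (n + 1) * d[i] * d[j]) for j in range(n)]
             for i in range(n)]
    return c_new, H_new

def in_ellipsoid_2d(c, H, x, tol=1e-12):
    """(x - c)^T H^{-1} (x - c) <= 1 + tol, using the explicit 2x2 inverse."""
    (a, b), (_, d) = H
    u, v = x[0] - c[0], x[1] - c[1]
    return (d * u * u - 2.0 * b * u * v + a * v * v) / (a * d - b * b) <= 1.0 + tol

def det2(M):
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

c, H, g = [1.0, -1.0], [[3.0, 1.0], [1.0, 2.0]], [0.4, -1.0]
c1, H1 = ellipsoid_step(c, H, g)

# Sample the half-ellipsoid as x = c + L z with H = L L^T (Cholesky) and |z| <= 1.
random.seed(1)
l11 = math.sqrt(H[0][0])
l21 = H[1][0] / l11
l22 = math.sqrt(H[1][1] - l21 ** 2)
half = []
while len(half) < 5000:
    z0, z1 = random.uniform(-1.0, 1.0), random.uniform(-1.0, 1.0)
    if z0 * z0 + z1 * z1 > 1.0:
        continue
    x = [c[0] + l11 * z0, c[1] + l21 * z0 + l22 * z1]
    if g[0] * (x[0] - c[0]) + g[1] * (x[1] - c[1]) <= 0.0:
        half.append(x)

ratio = math.sqrt(det2(H1) / det2(H))  # vol(E_new) / vol(E) in R^2
```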

Computing support function

To calculate in Algorithm 1, we need to compute the following quantity (see (57)):

Let us discuss how to do this.

First, let us introduce the following support function to simplify our notation:

where is a self-adjoint positive definite linear operator, and . In this notation, assuming that , we have

Let us show how to compute . Dualizing the linear constraint, we obtain

| 60 |

provided that there exists some such that , (Slater condition). One can show that (60) has the following solution (see Lemma 10):

| 61 |

where is the unconstrained minimizer of the objective function in (60).
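As an illustration of (60)–(61), consider the one-cut case in an assumed form, not transcribed from the paper: the support function of the centered ellipsoid {x : xᵀH⁻¹x ≤ 1} cut by ⟨a, x⟩ ≤ b. Dualizing the cut gives the one-dimensional convex problem of minimizing μb + ‖s - μa‖_H over μ ≥ 0, with ‖v‖_H = sqrt(vᵀHv). This problem is solvable under the Slater condition b > -‖a‖_H. Splitting s into its H-orthogonal components along and across a yields a closed-form unconstrained minimizer. Clipping that minimizer at 0 gives the optimal μ, in line with the description of (61) via the unconstrained minimizer. The name `cut_support` is hypothetical. The usage lines compare the dual value with a brute-force maximization over the ellipse boundary in ℝ².

```python
import math

def matvec(H, v):
    return [sum(hij * vj for hij, vj in zip(row, v)) for row in H]

def hdot(H, u, v):
    """<u, H v> for a symmetric positive definite H."""
    return sum(a * b for a, b in zip(u, matvec(H, v)))

def cut_support(H, s, a, b):
    """max <s, x> s.t. x^T H^{-1} x <= 1 and <a, x> <= b, via the dual
    min_{mu >= 0} mu * b + ||s - mu * a||_H.  Returns (value, mu)."""
    na = math.sqrt(max(hdot(H, a, a), 0.0))  # max of <a, x> over the ellipsoid
    if b <= -na:
        raise ValueError("Slater condition fails: no point of the ellipsoid has <a, x> < b")
    if b >= na:
        mu = 0.0  # the whole ellipsoid already satisfies <a, x> <= b
    else:
        beta = hdot(H, s, a) / na ** 2  # component of s along a (H-geometry)
        s_perp = math.sqrt(max(hdot(H, s, s) - beta ** 2 * na ** 2, 0.0))
        mu_free = beta - b * s_perp / (na * math.sqrt(na ** 2 - b ** 2))
        mu = max(0.0, mu_free)  # convex in mu: clip the unconstrained minimizer at 0
    r = [si - mu * ai for si, ai in zip(s, a)]
    return mu * b + math.sqrt(max(hdot(H, r, r), 0.0)), mu

H, s, a, b = [[3.0, 1.0], [1.0, 2.0]], [1.0, 2.0], [1.0, 1.0], 0.5
value, mu = cut_support(H, s, a, b)

# Brute-force primal: x = L (cos t, sin t) with H = L L^T traces the ellipse boundary.
l11 = math.sqrt(H[0][0])
l21 = H[1][0] / l11
l22 = math.sqrt(H[1][1] - l21 ** 2)
best = -math.inf
for k in range(200000):
    t = 2.0 * math.pi * k / 200000
    x = (l11 * math.cos(t), l21 * math.cos(t) + l22 * math.sin(t))
    if a[0] * x[0] + a[1] * x[1] <= b:
        best = max(best, s[0] * x[0] + s[1] * x[1])
```

In this instance the cut is active. The primal maximum lies at an endpoint of the chord ⟨a, x⟩ = b and equals (10 + √135)/14.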

Let us present an explicit formula for . For future use, it will be convenient to write down this formula in a slightly more general form for the following multidimensional⁷ variant of problem (60):

| 62 |

where , is a self-adjoint positive definite linear operator, is a linear operator with trivial kernel and , . It is not difficult to show that problem (62) has the following unique solution (see Lemma 9):

| 63 |

Note that, in order for the above approach to work, we need to guarantee that the sets and satisfy a certain regularity condition, namely, . This condition is easily fulfilled by adding the termination criterion (53) to Algorithm 1.

Lemma 8

Consider Algorithm 1 with termination criterion (53). Then, at each iteration , at the beginning of Step , we have . Moreover, if k is a nonterminal iteration, we also have for some .

Proof

Note that . Now suppose for some nonterminal iteration . Denote . Since iteration k is nonterminal, and hence . Combining it with the fact that , we obtain and, in particular, . At the same time, slightly modifying the proof of Lemma 2 (using that for any since is a strictly convex quadratic function), it is not difficult to show that . Thus, , and we can continue by induction.

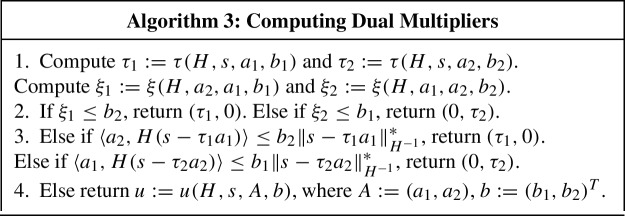

Computing dual multipliers

Recall from Sect. 5 that the procedure for generating an accuracy semicertificate for Algorithm 1 requires one to repeatedly carry out the following operation: given and some iteration number , compute a dual multiplier such that

This can be done as follows.

First, using (57), let us rewrite the above primal problem more explicitly:

Our goal is to dualize the second linear constraint and find the corresponding multiplier. However, for the sake of symmetry, it is better to dualize both linear constraints, find the corresponding multipliers and then keep only the second one.

Let us simplify our notation by introducing the following problem:

| 64 |

where is a self-adjoint positive definite linear operator, and . Clearly, our original problem can be transformed into this form by setting , , , , . Note that this transformation does not change the dual multipliers.

Dualizing the linear constraints in (64), we obtain the following dual problem:

| 65 |

which is solvable provided the following Slater condition holds:

| 66 |

Note that (66) can be ensured by adding the termination criterion (53) to Algorithm 1 (see Lemma 8).

A solution of (65) can be found using Algorithm 3. In this routine, , and are the auxiliary operations, defined in Sect. 6.2, and is the linear operator acting from to . The correctness of Algorithm 3 is proved in Theorem 3.
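Algorithm 3 solves (65) by case analysis. When implementing it, a slow brute-force reference is handy for testing. We assume, as our own reading rather than a transcription, that (64) is max{⟨s, x⟩ : xᵀH⁻¹x ≤ 1, ⟨a₁, x⟩ ≤ b₁, ⟨a₂, x⟩ ≤ b₂}. The dual is then to minimize μ₁b₁ + μ₂b₂ + ‖s - μ₁a₁ - μ₂a₂‖_H over μ ≥ 0. This objective is jointly convex, so nested ternary searches on the box [0, mu_max]² converge to its minimum, provided the box contains a minimizer. The parameter `mu_max` is a hypothetical bound. This is a testing aid, not Algorithm 3.

```python
import math

def dual_objective(H, s, cuts, mu):
    """mu_1 b_1 + mu_2 b_2 + ||s - mu_1 a_1 - mu_2 a_2||_H, cuts = [(a_1, b_1), (a_2, b_2)]."""
    r, val = list(s), 0.0
    for (a, b), m in zip(cuts, mu):
        r = [ri - m * ai for ri, ai in zip(r, a)]
        val += m * b
    Hr = [sum(hij * rj for hij, rj in zip(row, r)) for row in H]
    return val + math.sqrt(max(sum(x * y for x, y in zip(r, Hr)), 0.0))

def ternary_min(f, lo, hi, iters=80):
    """Minimize a convex function of one variable on [lo, hi]; returns (argmin, min)."""
    for _ in range(iters):
        m1, m2 = lo + (hi - lo) / 3.0, hi - (hi - lo) / 3.0
        if f(m1) <= f(m2):
            hi = m2  # by convexity, some minimizer lies in [lo, m2]
        else:
            lo = m1
    x = 0.5 * (lo + hi)
    return x, f(x)

def two_cut_dual(H, s, cuts, mu_max=10.0):
    """Reference solver on [0, mu_max]^2: the partial minimum over mu_2 is convex
    in mu_1, so two nested one-dimensional searches suffice."""
    def inner(m1):
        return ternary_min(lambda m2: dual_objective(H, s, cuts, (m1, m2)), 0.0, mu_max)
    m1, _ = ternary_min(lambda t: inner(t)[1], 0.0, mu_max)
    m2, value = inner(m1)
    return (m1, m2), value

# Usage: unit disk (H = I) with the cuts x_1 <= 0.5 and x_1 + x_2 <= 0.6.
H = [[1.0, 0.0], [0.0, 1.0]]
cuts = [((1.0, 0.0), 0.5), ((1.0, 1.0), 0.6)]
_, v_east = two_cut_dual(H, (1.0, 0.0), cuts)   # the primal optimum here is 0.5
_, v_diag = two_cut_dual(H, (1.0, 1.0), cuts)   # the primal optimum here is 0.6
_, v_north = two_cut_dual(H, (0.0, 1.0), cuts)  # primal optimum (1.2 + sqrt(6.56)) / 4
```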

Time and memory requirements

Let us discuss the time and memory requirements of Algorithm 1, taking into account the previously mentioned implementation details.

The main objects in Algorithm 1, which need to be stored and updated between iterations, are the test points , matrices , scalars , vectors and scalars (see (19), (55), (56) and (58) for the corresponding updating formulas). To store all these objects, we need O(n²) memory.

Consider now what happens at each iteration k. First, we compute . For this, we calculate and according to (58) and then perform the calculations described in Sect. 6.2. The most expensive operation there is computing the matrix-vector product, which takes O(n²) time. After that, we calculate the coefficients and according to (26), where , and are certain scalars, easily computable for all main instances of Algorithm 1 (see Sects. 4.1–4.4). The most expensive step there is computing the norm , which can be done in O(n²) operations by evaluating the product . Finally, we update our main objects, which takes O(n²) time.

Thus, each iteration of Algorithm 1 has O(n²) time and memory complexity, exactly as in the standard Ellipsoid Method.

Now let us analyze the complexity of the auxiliary procedure from Sect. 5 for converting a preliminary semicertificate into a semicertificate. The main operation in this procedure is running Algorithm 2, which iterates “backwards”, computing some dual multiplier at each iteration . Using the approach from Sect. 6.3, we can compute each such multiplier in O(n²) time, provided that the objects , , , , , , are stored in memory. Note, however, that, in contrast to the “forward” pass, when iterating “backwards”, there is no way to efficiently recompute all these objects without storing in memory a certain “history” of the main process from iteration 0 up to k. The simplest choice is to keep all the objects mentioned above in this “history”, which requires O(kn²) memory. A slightly more efficient idea is to keep the matrix-vector products instead of and then use (55) to recompute from in O(n²) operations. This allows us to reduce the size of the “history” to O(kn) while keeping the total time complexity of the auxiliary procedure unchanged. Note that these estimates are exactly the same as those for the best currently known technique for generating accuracy certificates in the standard Ellipsoid Method [16]. In particular, if we generate a semicertificate only once at the very end, then the time complexity of our procedure is comparable to that of running the standard Ellipsoid Method without computing any certificates. Alternatively, as suggested in [16], one can generate semicertificates, say, every iterations. Then, the total “overhead” of the auxiliary procedure for generating semicertificates will be comparable to the time complexity of the method itself.
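To illustrate the memory-saving idea, the sketch below uses the textbook central-cut shape update as a stand-in for (55):

- H₊ = f·(H - γ·ppᵀ/q), with p = Hg, q = ⟨g, p⟩, f = n²/(n²-1) and γ = 2/(n+1).

This is an assumption. The paper's (55) has its own coefficients, but it is likewise a rank-one modification obtained via Sherman–Morrison. Each forward step stores only (p_k, q_k), which is O(n) numbers. The backward pass inverts each step exactly as H = H₊/f + γ·ppᵀ/q in O(n²) operations. The forward pass additionally keeps every H_k, but only so that the example can check the reconstruction.

```python
import random

def forward(H0, gs):
    """Central-cut shape updates; the history keeps only p_k = H_k g_k and q_k = <g_k, p_k>."""
    n = len(H0)
    f, gam = n * n / (n * n - 1.0), 2.0 / (n + 1)
    H = [row[:] for row in H0]
    history, full = [], [H]  # `full` is kept only to check the reconstruction
    for g in gs:
        p = [sum(H[i][j] * g[j] for j in range(n)) for i in range(n)]
        q = sum(gi * pi for gi, pi in zip(g, p))
        history.append((p, q))  # O(n) numbers per iteration
        H = [[f * (H[i][j] - gam * p[i] * p[j] / q) for j in range(n)] for i in range(n)]
        full.append(H)
    return H, history, full

def backward(H_last, history):
    """Yield H_{k-1}, ..., H_0 (k = len(history)) by inverting each rank-one step in O(n^2)."""
    n = len(H_last)
    f, gam = n * n / (n * n - 1.0), 2.0 / (n + 1)
    H = H_last
    for p, q in reversed(history):
        H = [[H[i][j] / f + gam * p[i] * p[j] / q for j in range(n)] for i in range(n)]
        yield H

random.seed(2)
gs = [[random.gauss(0.0, 1.0) for _ in range(3)] for _ in range(15)]
H_last, history, full = forward([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]], gs)
recovered = list(backward(H_last, history))
```

Each backward step divides the previous matrix by f > 1 before adding a positive semidefinite term. As a result, rounding errors do not grow along the reconstruction.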

Conclusion

In this paper, we have addressed one of the issues of the standard Ellipsoid Method, namely, its poor convergence for problems of large dimension n. To this end, we have proposed a new algorithm, which can be seen as a combination of the Subgradient and Ellipsoid methods.

Our developments can be considered as a first step towards constructing universal methods for nonsmooth problems with convex structure. Such methods could significantly improve the practical efficiency of solving various applied problems.

Note that there are still some open questions. First, the convergence estimate of our method with time-varying coefficients contains an extra factor proportional to the logarithm of the iteration counter. We have seen that this logarithmic factor already originates in the Subgradient Method. However, as discussed in Remark 4, for the Subgradient Method, this issue can be easily resolved by allowing projections onto the feasible set and working with “truncated” gaps. An even better alternative, which does not require any of this machinery, is to use Dual Averaging [18] instead of the Subgradient Method. It is an interesting question whether one can combine Dual Averaging with the Ellipsoid Method in the same way as we have combined the Subgradient and Ellipsoid methods.

Second, the convergence rate estimate, which we have obtained for our method, is not continuous in the dimension n. Indeed, for small values of the iteration counter k, this estimate behaves as that of the Subgradient Method and then, at some moment (around ), it switches to the estimate of the Ellipsoid Method. As discussed at the end of Sect. 4.4, there exists some “small” gap between these two estimates around the switching moment. Nevertheless, the method itself is continuous in n and does not contain any explicit switching rules. Therefore, there should be some continuous convergence rate estimate for our method, and it is an open question to find it.

Another interesting question is to understand what happens to the proposed method on other (less general) classes of convex problems than those considered in this paper. For example, it is well known that, on smooth and/or strongly convex problems, (sub)gradient methods have much better convergence rates than on general nonsmooth problems. We expect that similar conclusions should also be valid for the proposed Subgradient Ellipsoid Method. However, to achieve the acceleration, it may be necessary to introduce some modifications in the algorithm such as using different step sizes. We leave this direction for future research.

Finally, apart from the Ellipsoid Method, there exist other “dimension-dependent” methods (e.g., the Center-of-Gravity Method⁸ [13, 20], the Inscribed Ellipsoid Method [22], the Circumscribed Simplex Method [6], etc.). Similarly, the Subgradient Method is not the only “dimension-independent” method, and there exist numerous alternatives that are better suited for certain problem classes (e.g., the Fast Gradient Method [17] for Smooth Convex Optimization or methods for Stochastic Programming [7, 8, 11, 15]). Of course, it is interesting to consider different combinations of the aforementioned “dimension-dependent” and “dimension-independent” methods. In this regard, it is also worth mentioning the works [4, 5], where the authors propose new variants of gradient-type methods for smooth strongly convex minimization problems inspired by the geometric construction of the Ellipsoid Method.

Acknowledgements

We would like to thank the anonymous reviewers for their valuable time and efforts spent on reviewing this manuscript. Their feedback was very useful.

Proof of Lemma 6

Proof

Everywhere in the proof, we assume that the parameter c is fixed and drop all the indices related to it.

Let us show that is a convex function. Indeed, the function , defined by , is convex. Hence, the function q, defined in (34), is also convex. Further, since is increasing in its first argument on , the function , defined by , is also convex as the composition of with the mapping , whose first component is convex (since ) and the second one is affine. Note that is increasing in its first argument. Hence, is indeed a convex function as the composition of with the mapping , whose first part is convex and the second one is affine.

Differentiating, for any , we obtain

Therefore, the minimizers of are exactly solutions to the following equation:

| 67 |

Note that (see (34)). Hence, (67) can be written as or, equivalently, . Clearly, is not a solution of this equation. Making the change of variables , , we come to the quadratic equation or, equivalently, to . This equation has two solutions: and . Note that . Hence, cannot be a minimizer of . Consequently, only is an acceptable solution (note that in view of our assumptions on c and p). Thus, (37) is proved.

Let us show that belongs to the interval specified in (37). For this, we need to prove that . Note that the function , where , is decreasing in t. Indeed, is an increasing function in t. Hence, . On the other hand, using that and denoting , we get , where . Note that g is decreasing in . Indeed, denoting , we obtain , which is a decreasing function in . Thus, .

It remains to prove that . Let be the function

| 68 |

We need to show that for all or, equivalently, that the function , defined by , satisfies for all . For this, it suffices to show that is convex, and . Differentiating, we see that and for all . Thus, we need to justify that

| 69 |

for all and that

| 70 |

Let be arbitrary. Differentiating and using (67), we obtain

| 71 |

Therefore,

where the inequality follows from (34) and the fact that for any . Thus, to show (69), we need to prove that or, equivalently, . But this is immediate. Indeed, using (37), we obtain since the function is decreasing. Thus, (69) is proved.

It remains to show (70). From (37), we see that and as . Hence, using (34), we obtain

Consequently, in view of (68) and (71), we have

which is exactly (70).

Support function and dual multipliers: proofs

For brevity, everywhere in this section, we write and instead of and , respectively. We also denote .

Auxiliary operations

Lemma 9

Let , let be a linear operator with trivial kernel and let , . Then, problem (62) has a unique solution given by (63).

Proof

Note that the sublevel sets of the objective function in (62) are bounded:

for all . Hence, problem (62) has a solution.

Let be a solution of problem (62). If , then , which coincides with the solution given by (63) (note that, in this case, ).

Now suppose . Then, from the first-order optimality condition, we obtain that , where . Hence, and

Thus, and given by (63).

Lemma 10

Let , be such that for some . Then, problem (60) has a solution given by (61). Moreover, this solution is unique if .

Proof

Let be the function . By our assumptions, if and if . If additionally , then .

If , then for all , so 0 is a solution of (60). Clearly, this solution is unique when because then .

From now on, suppose . Then, is differentiable at 0 with . If , then , so 0 is a solution of (60). Note that this solution is unique if because then , i.e., is strictly increasing on .

Suppose . Then, and thus . Note that, for any , we have . Hence, the sublevel sets of , intersected with , are bounded, so problem (60) has a solution. Since , any solution of (60) is strictly positive and so must be a solution of problem (62) for and . But, by Lemma 9, the latter solution is unique and equals .

We have proved that (61) is indeed a solution of (60). Moreover, when , we have shown that this solution is unique. It remains to prove the uniqueness of solution when , assuming additionally that . But this is simple. Indeed, by our assumptions, , so . Hence, a and s are linearly independent. But then is strictly convex, and thus its minimizer is unique.

Computation of dual multipliers

In this section, we prove the correctness of Algorithm 3.

For , let X(s) be the subdifferential of at the point s:

| 72 |

Clearly, for any . When , we denote the unique element of X(s) by x(s).

Let us formulate a convenient optimality condition.

Lemma 11

Let A be the linear operator from to , defined by , where , and let , . Then, is a minimizer of over if and only if , where, for each and , we denote , if , and , if .

Proof

Indeed, the standard optimality condition for a convex function over the nonnegative orthant is as follows: is a minimizer of on if and only if there exists such that and for all . It remains to note that .

Theorem 3

Algorithm 3 is well-defined and returns a solution of (65).

Proof

i. For each and , denote , , , if , and , if .

ii. From (66) and Lemma 10, it follows that Step is well-defined and, for each , is a solution of (60) with parameters . Hence, by Lemma 11,

| 73 |

iii. Consider Step . Note that the condition is equivalent to since . If , then, by (73), , so, by Lemma 11, is indeed a solution of (65).

Similarly, if , then and is a solution of (65).

iv. From now on, we can assume that , , where , . Combining this with (66), we obtain⁹

| 74 |

Suppose at Step . 1) If , then is a singleton, , so we obtain . Combining this with (73), we get . 2) If , then in view of the first claim in (74) (recall that ). Thus, in any case, , and so, by Lemma 11, is a solution of (65).

Similarly, one can consider the case when at Step .

Suppose we have reached Step . From now on, we can assume that

| 75 |

Indeed, since both conditions at Step have not been satisfied, , , and , . Also, by (73), , .

Let be any solution of (65). By Lemma 11, . Note that we cannot have . Indeed, otherwise, we get , so must be a solution of (60) with parameters . But, by Lemma 10, such a solution is unique (in view of the second claim in (75), for some , so ). Hence, , and we obtain a contradiction with (75). Similarly, we can show that . Consequently, , which means that is a solution of (62).

Thus, at this point, any solution of (65) must be a solution of (62). In view of Lemma 9, to finish the proof, it remains to show that the vectors , are linearly independent and . But this is simple. Indeed, from (75), it follows that

| 76 |

since and cannot both be equal to 0. Combining (76) and (74), we see that and, in particular, . Hence, , are linearly independent (otherwise, , which contradicts (76)). Taking any , we obtain and , hence , where we have used .

Footnotes

More precisely, must be a non-zero vector such that for all . In particular, for the Euclidean ball, one can take .

Hereinafter, a solid is any convex compact set with nonempty interior.

In fact, we have proved the second estimate in (45) for all (not only for ).

Recall that for all by (2).

Here eigenvectors and eigenvalues are defined with respect to the operator B inducing the norm .

Note that, in (2), we identify the spaces , with in such a way that coincides with the standard dot-product and coincides with the standard Euclidean norm. Therefore, B becomes the identity matrix and becomes .

Hereinafter, we identify with in such a way that is the standard dot product.

Although this method is not practical, it is still interested from an academic point of view.

Take an appropriate convex combination of two points from the specified nonempty convex sets.

This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (Grant agreement No. 788368).

Contributor Information

Anton Rodomanov, Email: anton.rodomanov@uclouvain.be.

Yurii Nesterov, Email: yurii.nesterov@uclouvain.be.

References

1. Auslender A. Résolution numérique d’inégalités variationnelles. RAIRO. 1973;7(2):67–72.

2. Ben-Tal, A., Nemirovski, A.: Lectures on modern convex optimization. Lecture notes (2021)

3. Bland R, Goldfarb D, Todd M. The ellipsoid method: a survey. Oper. Res. 1981;29(6):1039–1091. doi: 10.1287/opre.29.6.1039.

4. Bubeck, S., Lee, Y.T.: Black-box optimization with a politician. In: International Conference on Machine Learning, pp. 1624–1631. PMLR (2016)

5. Bubeck, S., Lee, Y.T., Singh, M.: A geometric alternative to Nesterov’s accelerated gradient descent. arXiv preprint arXiv:1506.08187 (2015)

6. Bulatov, V., Shepot’ko, L.: Method of centers of orthogonal simplexes for solving convex programming problems. Methods Optim. Appl. (1982)

7. Duchi, J., Hazan, E., Singer, Y.: Adaptive subgradient methods for online learning and stochastic optimization. J. Mach. Learn. Res. 12(7) (2011)

8. Dvurechensky P, Gasnikov A. Stochastic intermediate gradient method for convex problems with stochastic inexact oracle. J. Optim. Theory Appl. 2016;171(1):121–145. doi: 10.1007/s10957-016-0999-6.

9. Grötschel M, Lovász L, Schrijver A. The ellipsoid method and its consequences in combinatorial optimization. Combinatorica. 1981;1(2):169–197. doi: 10.1007/BF02579273.

10. Khachiyan L. A polynomial algorithm in linear programming. Soviet Math. Dokl. 1979;244(5):1093–1096.

11. Lan G. An optimal method for stochastic composite optimization. Math. Program. 2012;133(1):365–397. doi: 10.1007/s10107-010-0434-y.

12. Lan G. First-Order and Stochastic Optimization Methods for Machine Learning. Switzerland: Springer; 2020.

13. Levin A. An algorithm for minimizing convex functions. Soviet Math. Dokl. 1965;160(6):1244–1247.

14. Nemirovski, A.: Information-based complexity of convex programming. Lecture notes (1995)

15. Nemirovski A, Juditsky A, Lan G, Shapiro A. Robust stochastic approximation approach to stochastic programming. SIAM J. Optim. 2009;19(4):1574–1609. doi: 10.1137/070704277.

16. Nemirovski A, Onn S, Rothblum UG. Accuracy certificates for computational problems with convex structure. Math. Oper. Res. 2010;35(1):52–78. doi: 10.1287/moor.1090.0427.

17. Nesterov Y. A method for solving the convex programming problem with convergence rate O(1/k²). Soviet Math. Dokl. 1983;269:543–547.

18. Nesterov Y. Primal-dual subgradient methods for convex problems. Math. Program. 2009;120(1):221–259. doi: 10.1007/s10107-007-0149-x.

19. Nesterov Y. Lectures on convex optimization. Berlin: Springer; 2018.

20. Newman D. Location of the maximum on unimodal surfaces. J. ACM (JACM) 1965;12(3):395–398. doi: 10.1145/321281.321291.

21. Shor N. Cut-off method with space extension in convex programming problems. Cybernetics. 1977;13(1):94–96. doi: 10.1007/BF01071394.

22. Tarasov S, Khachiyan L, Erlikh I. The method of inscribed ellipsoids. Soviet Math. Dokl. 1988;37(1):226–230.

23. Yudin D, Nemirovskii A. Informational complexity and efficient methods for the solution of convex extremal problems. Matekon. 1976;13(2):22–45.