Abstract

Crossmodal plasticity is a textbook example of the ability of the brain to reorganize based on use. We review evidence from the auditory system showing that such reorganization has significant limits and depends on pre-existing circuitry and top-down interactions, and that extensive reorganization is often absent. We argue that the evidence does not support the hypothesis that crossmodal reorganization is responsible for closing critical periods in deafness; rather, it represents a dynamically adaptable neuronal process. We evaluate the evidence for crossmodal changes in both developmental and adult-onset deafness; these changes start as early as mild-to-moderate hearing loss and, in some cases, reverse with hearing restoration. Finally, crossmodal plasticity does not appear to affect the neuronal preconditions for successful hearing restoration, and, given its dynamic and versatile nature, we describe how it can be exploited to improve clinical outcomes after neurosensory restoration.

Keywords: deafness, cochlear implants, hearing aids, multisensory, connectivity, oscillations

Crossmodal Plasticity in the Auditory System

Crossmodal plasticity (see Glossary) is an adaptive change in the drive of neurons from one (deprived) sensory input towards another (non-deprived) sensory input, i.e. it is a form of between-sensory-systems (intermodal) plasticity [1]. Crossmodal plasticity represents a textbook example of the brain’s capacity for plastic changes.

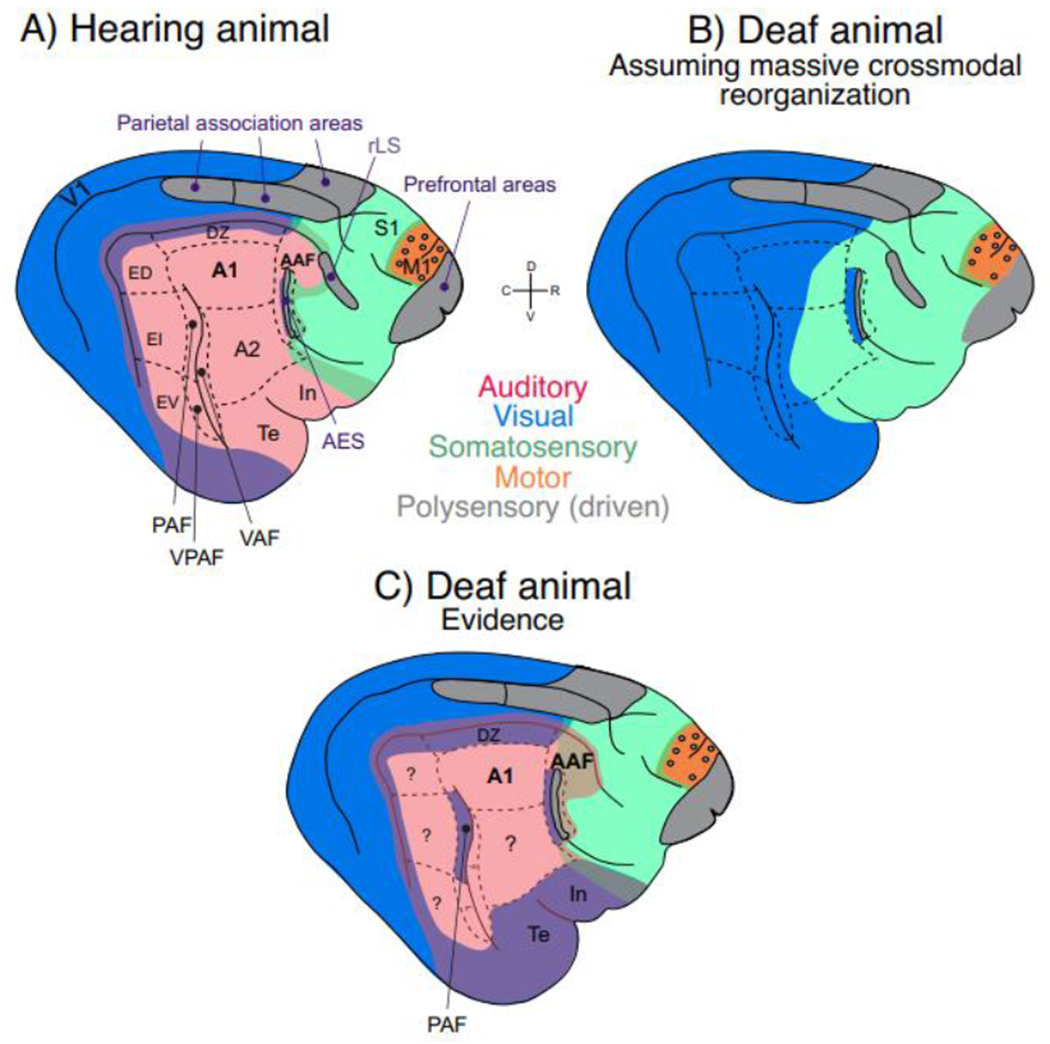

In early studies in animal models, a massive crossmodal visual takeover of the auditory cortex was found when early deafness was combined with aspiration of the auditory midbrain: this combined intervention led to reorganization of anatomical inputs to the thalamus and a large-scale remapping of the auditory cortex by visual inputs [2,3]. These studies probably demonstrated the maximal possible functional adaptation of the auditory cortex to novel input. What was subsequently often overlooked was that this extensive reorganization was made possible by the aspiration of the auditory midbrain, which allowed visual afferents to target the auditory thalamus (in addition to the visual thalamus) during development. That is, an anatomical re-routing of visual information to the auditory thalamus, which does not normally accompany sensory deprivation, was a precondition for this massive reorganization. Many textbooks subsequently suggested that, in auditory deprivation, the entire auditory cortex becomes a battlefield between sensory systems, with each of its areas available for recruitment to a new sensory function (Fig. 1). Such “colonization” of the auditory system is often considered a key reason why developmental critical periods for sensory restoration close: it would preclude the processing of auditory inputs after neurosensory restoration, e.g., via cochlear implants. This view has had important clinical consequences: to avoid fostering this effect, it was traditionally recommended not to use visual communication (sign language) before cochlear implantation. However, as this review will show, the aforementioned concept of massive crossmodal reorganization and its negative influence is not consistent with recent findings and requires modification.

Fig. 1: Schematic illustration of approximate locations of sensory brain areas in the cat and their reorganization in deafness.

Blue – visual; red – auditory; green – somatosensory; orange – motor; grey – association areas. A mixture of two colors at sensory borders (appearing as violet, light brown/golden or deeper green) depicts bimodal responsiveness. A) AES, the area of the anterior ectosylvian sulcus, together with area In, corresponds to human insular cortex [4]. The ectosylvian sulcus (divided into dorsal area, ED, intermediate area, EI, and ventral area, EV) corresponds to human superior temporal gyrus [5]. Feline prefrontal cortex is likely multisensory based on tracer studies [6]. Rostral lateral sulcus (rLS) and/or anterior ectosylvian sulcus (AES) allow multisensory integration in the superior colliculus [7]. The “parietal association cortex” comprises two separate areas within Brodmann area 7 [8,9]. Visual responsiveness was observed in the posterior belt in ED [5], but also in DZ. Auditory and visual responses have been described around the cruciate sulcus in M1. Visual and auditory responses were also reported in the pericruciate association cortex; these are shown as grey circles within the motor cortex (orange). Cingulate cortex on the medial hemisphere and ventral limbic areas (entorhinal cortex and parahippocampal gyri) are not shown. B) A putative cortical map under the assumption of massive crossmodal reorganization in congenital deafness. C) An approximate representation of actual cortical organization in congenitally deaf cats according to the evidence reviewed in the text.

We propose that crossmodal reorganization is a dynamically modified process that begins already with mild or unilateral hearing loss and continues into complete deafness, occurring in both congenital and adult-onset hearing loss (albeit with significant differences). It is a primarily top-down driven process that exploits pre-existing neuronal connections. In essence, we argue, crossmodal reorganization is not detrimental to neurosensory restoration; rather, it is compensatory and can be used to enhance communication before therapy or to aid real-world speech perception.

Crossmodal Effects in Deafness

Crossmodal ‘supranormal’ enhancements of visual and somatosensory modalities have been extensively documented in subjects who were deaf from childhood [10–18]. The extensively studied visual effects have been related to enhanced visual motion detection and localization abilities [12,19–23], better visual change detection [24] or faster reactions to visual stimuli in deaf subjects [25,26]. However, not all visual functions show such supranormal performance [27]: there was, e.g., no change in visual acuity, brightness discrimination, contrast sensitivity, or visual motion direction sensitivity. Mainly those functions that the visual and auditory systems have in common (such as localization, movement detection and change detection, i.e., ‘supramodal’ functions [28]) can be compensated by crossmodal plasticity. This specificity of crossmodal reorganization raises the question of the extent and limits of crossmodal reorganization in the brain.

In developmental deafness, in both humans and animal models, enhanced visual spatial localization and visual movement detection were associated with the auditory cortical areas that underlie localization and movement processing (congenitally deaf cats, CDCs [28]; early-deafened cats [29]; humans [23]). In prelingually deaf humans, face processing was observed in the temporal voice area [30]. An auditory cortex contribution to face processing has also been noted in postlingually deaf adults [31]. Given these findings, crossmodal reorganization appears to switch the sensory, but not the behavioral, role of the auditory cortex [23,28,29]. Supporting this, a recent human study emphasized the overlap of the crossmodally reorganized region with the region previously implicated in auditory motion detection in hearing subjects [32]. This is compatible with a change in the driving sensory input during crossmodal reorganization, from auditory to visual, while the subsequent corticocortical processing is in principle preserved.

The normal auditory system, characterized by exceptional timing precision, provides key timing “calibration” information to other sensory systems, much as the visual system, owing to its high spatial acuity, is key for spatial information [33,34]. Indeed, when timing conflicts, hearing can “override” visual information (e.g., the auditory capture effect [35,36]), whereas vision similarly “overrides” hearing when spatial locations are discrepant (e.g., the ventriloquist effect [37]). Accordingly, visual and somatosensory temporal processing are negatively affected by deafness [33,38]. Interestingly, when tested under well-controlled conditions, deaf individuals may also infer timing properties from spatial cues, and in these situations they perform well only if both cues are correlated [39]. Auditory feedback of motor actions through secondary auditory cortex has also been shown to be key for action timing [40]. Consequently, hearing loss may adversely affect spared sensorimotor functions.

Causality and Specificity in Crossmodal Reorganization

Causal evidence of crossmodal reorganization is difficult to obtain. Studies that directly test the causality of crossmodal reorganization have been conducted primarily in animal models, where invasive studies are feasible, for instance in the congenitally deaf cat (CDC), an important model of pediatric congenital deafness [41–43]. CDCs show supranormal performance in visual localization and visual motion detection compared with normal hearing cats [28]. With cooling deactivation, activity in specific auditory areas can be reversibly silenced while behavior is assessed, testing for causal links between the deactivated areas and their behavioral functions. The regions found to subserve supranormal visual performance were the posterior auditory field and the dorsal auditory cortex (dorsal zone), whereas the primary fields A1 and AAF were not involved [28]. The CDC thus replicates crossmodal effects observed in humans and allows exact identification of the cortical regions responsible for the behavioral effects. Taken together, the CDC data and the aforementioned human studies imply that crossmodal plasticity shows strict areal specificity and switches the sensory but not the behavioral role of a region, i.e., supranormal visual behavior in deafness is subserved by auditory cortical regions that underlie the same auditory behavior.

The areas involved in this crossmodal plasticity, when studied using retrograde tracers to reveal their anatomical connections (fiber tracts), generally showed only a small percentage of new (ectopic) connections to non-auditory areas. That is, the connectivity patterns to other sensory systems were similar in CDCs and hearing cats; only the strengths of individual connections differed in CDCs (dorsal cortex: [44]; posterior auditory field: [45]; for later deafening in cats see [46,47]). The ectopic connections, a small fraction of all connections, could not explain the supranormal visual motion detection and visual localization abilities of CDCs. The few ectopic projections observed are unlikely to result from new axonal sprouting towards new cortical areas; rather, they may represent exuberant developmental projections [48] that are normally pruned by experience but were preserved in congenital deafness [44,49]. These exuberant connections often form between anatomically bordering areas and may make such areas more susceptible to crossmodal change than distant areas. Only the data from the anterior auditory field (AAF) of early-deafened cats are not fully consistent with this concept: here, more anatomical reorganization was observed [47]. However, AAF is special because it lies close to a large number of somatosensory areas and fibers, which are potential candidates for tracer pickup and require further study.

However, this does not mean that congenital auditory deprivation has no effect on the auditory cortex (reviewed in [43]). Two consequences of deafness in CDCs are relevant in the present context: (i) a specific reduction of corticocortical interactions [50], and (ii) a reduction in functional intrinsic cortical connectivity between supragranular and infragranular layers [51]. These deficits could be related to a specific reduction of top-down interactions between secondary and primary auditory cortices [52], since infragranular layers are the source of top-down interactions. Indeed, dystrophic effects (reduced thickness) were observed in infragranular and granular, but not supragranular, layers in areas A1, DZ and AII [53]. Reduced activity in infragranular layers of area A1 has also been observed [54]. Together, this suggests that top-down interactions, which are known to develop after bottom-up interactions [55], are more sensitive to developmental alterations of hearing than bottom-up interactions. A change in the balance between bottom-up and top-down interactions may play an important role in crossmodal plasticity [53,56]. Functional connectivity between superficial and deep cortical layers (measured by spike-field coherence) appears to be a key element in integrating the cortical column, and its reduction in deafness appears to contribute to the reduced top-down interactions between primary and secondary cortical areas [51]. Taken together, the cortical column is reorganized in congenital deafness, potentially shifting the balance between thalamocortical and corticocortical inputs towards corticocortical inputs. It is important to emphasize that neither the thalamic input nor bottom-up interactions were eliminated in CDCs ([52]; posterior auditory field: [50]), which explains why auditory responsiveness is preserved when stimulated with a cochlear implant (ibid.).

Synaptogenesis in the feline auditory cortex spans the first 1-2 months of life and is followed by synaptic pruning [57]. In CDCs, this process was delayed by 1 month and pruning was significantly increased [57]. Such pruning likely eliminates predominantly inactive cortical synapses, which in deafness may include the majority of auditory corticocortical synapses. The more active ‘visual’ synapses, on the other hand, may survive and become stabilized in deafness. These visual synapses likely originate from other cortical areas and from multimodal thalamic regions such as the lateral posterior nucleus, and they may convey multimodal interactions in the auditory cortex of hearing animals.

Taken together, these lines of evidence indicate that crossmodal effects are conveyed predominantly not by reorganized fiber tracts but by synapses, their number and their efficacy. Compared with adult-onset deafness, congenital deafness additionally prevents the development of multimodal integration and potentially preserves exuberant heteromodal connections (see below). Future studies of functional connectivity could provide clearer insights into the changes related to sensory deprivation and crossmodal reorganization (see Outstanding Questions).

Outstanding Questions

What are the exact molecular mechanisms of gain change in cross-modal plasticity, and are they the same for the different cortical areas involved? Can they be leveraged clinically? Identifying such molecular mechanisms would open a wide field of pharmacological modulation of cortical plasticity.

Given its top-down nature, is cross-modal reorganization associated with up- or down-regulation of cognitive reserve in age-related hearing loss, and does it play any role in the link between hearing loss and cognitive decline?

How can multimodal representations be established at a later age in congenital deprivation? Are there ways of extending critical periods by behavioral or new molecular approaches?

What is the therapeutic potential of sensory substitution in hearing-impaired individuals (e.g., glasses translating speech into written text in real time) for auditory cortical representations? How effective are these approaches in clinical applications: do they support or prevent adaptation to the newly provided auditory input?

How different is cross-modal plasticity between sensory systems? Different developmental sequences and different roles of the sensory systems in cognition suggest that cross-modal plasticity may differ across sensory systems. Addressing this question is challenging, partly because reversing total deprivation is clinically most feasible in the auditory system, whereas it often remains an elusive goal in the other systems. New methodological approaches would be required for progress in this area.

Should oral language learning for deaf children with cochlear implants be multimodal, exploiting all cross-modal adaptations, or should it be primarily auditory-focused to prevent excessive cross-modal reliance?

Is cross-modal reorganization reversible even after very long periods of age-related hearing loss, given that older adults typically receive hearing aids 10 or more years after the onset of hearing loss?

Multimodal Integration and its Relation to Crossmodal Effects

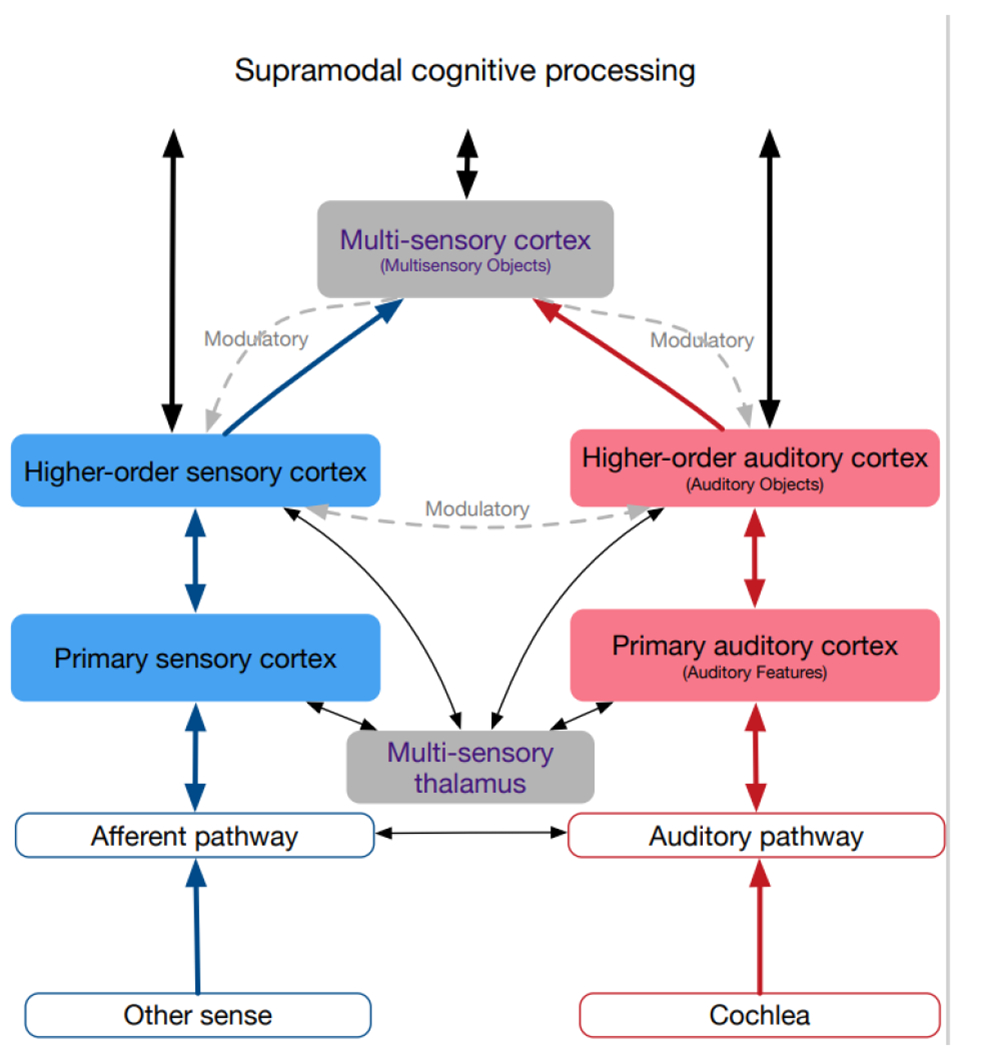

The high specificity of the crossmodal effects described above suggests a tight relation between crossmodal plasticity and regions that receive heteromodal (and even multimodal) inputs in hearing subjects (Fig. 2, [58–61]). Heteromodal influences in sensory cortices are modulatory rather than driving [62,63], often involve phase effects on oscillations [61,64,65] and reflect low-dimensional behavioral effects [66]. Multimodal integration requires higher-order associative areas with extensive functional interareal connectivity [67], which provide the top-down heteromodal inputs to sensory areas [68].

Fig. 2: Schematics of connections between sensory systems in hearing subjects, simplified.

Auditory system shown in red, non-auditory in blue, multisensory in grey. Connections are differentiated into driving connections (straight lines), defined as connections able to elicit action potentials in the absence of other active connections, and modulatory connections (curved dashed lines), which affect the activity of neurons but fail to cause postsynaptic action potentials in the absence of other inputs (for details, see text). Connections between primary areas are not shown, since these differ between sensory cortices. Multisensory information is observable in all cortical areas (filled background), but mainly as a modulatory influence. The main driving input comes from within the sensory system (the adequate input).
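The driving/modulatory distinction in Fig. 2 can be made concrete with a toy threshold-linear neuron. This is an illustration under our own assumptions, not a model from the cited studies: the functions `rate` and `classify_input`, the threshold of 1.0 and the input strengths are all hypothetical. An input counts as driving if it alone evokes spiking, and as modulatory if it is subthreshold on its own but changes the response evoked by a separate driving input.

```python
def rate(drive: float, threshold: float = 1.0) -> float:
    """Threshold-linear firing rate in arbitrary units: zero below threshold."""
    return max(0.0, drive - threshold)


def classify_input(strength: float, reference_drive: float, threshold: float = 1.0) -> str:
    """Label an input as 'driving', 'modulatory' or 'ineffective' (toy criterion).

    driving:    the input alone evokes spiking (rate > 0)
    modulatory: alone it is subthreshold, but it changes the response
                evoked by a separate reference (driving) input
    """
    if rate(strength, threshold) > 0.0:
        return "driving"
    with_input = rate(reference_drive + strength, threshold)
    without_input = rate(reference_drive, threshold)
    return "modulatory" if with_input != without_input else "ineffective"


# Hypothetical strengths: adequate (auditory) input vs. weaker heteromodal input.
AUDITORY = 1.5
VISUAL = 0.25

print(classify_input(AUDITORY, reference_drive=0.0))      # driving
print(classify_input(VISUAL, reference_drive=AUDITORY))   # modulatory
print(classify_input(-VISUAL, reference_drive=AUDITORY))  # modulatory (inhibitory)
print(rate(AUDITORY), rate(AUDITORY + VISUAL))            # 0.5 0.75
```

A negative (inhibitory) heteromodal input of the same size is also classified as modulatory, in line with the inhibitory heteromodal effects described in the text.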

Heteromodal influences are more pronounced in higher-order auditory areas [64,69,70]. They have been related in part to attention and motor activity, including corollary influences of movement [66,71]. Their effect is often inhibitory, possibly serving to suppress responses to self-produced or otherwise predicted sounds [71,72]. This explains why a sensory system cannot be completely encapsulated or isolated in the brain: it must be possible to modulate it depending on behavioral context. Overall, in a “normal” brain, heteromodal influences in auditory cortex are modulatory rather than driving, and they increase with increasing level of the “cortical hierarchy”.

Visual influences in auditory cortex have been observed in subjects with normal hearing when visual and auditory inputs were coherent, e.g., during lipreading [73–75]. Similar effects have been documented in hearing subjects using sign language [14]. Pre-existing heteromodal influences have been found in those areas of hearing animals that are known to undergo crossmodal plasticity (visual responses in cats: [76]; visual responses in mice: [70]; somatosensory responses in ferrets: [77]; visual responses in ferrets: [78]). These inputs modulated auditory responses [62], including through inhibitory effects [79].

These effects were sometimes reminiscent of the influence of attention on oscillatory responses [64], and they were stronger in higher-order auditory areas than in primary areas [80]. Similar subthreshold influences have also been described in higher-order somatosensory and multisensory regions [81]. Heteromodal top-down influences may be interpreted in terms of the prediction error hypothesis, in which predictions propagate hierarchically through the network from top to bottom [82,83] and modulate the information flow in the reverse direction.

Cortical multimodal structures are extensively connected with sensory areas via top-down modulatory projections [67,84] and thus provide multimodal and heteromodal inputs to the auditory cortex (Fig. 2). Interestingly, in early deafness, higher-order auditory cortex additionally takes over functions related to cognitive processes [85–87]. In age-related hearing loss, cognitive processes compensate for degraded auditory input [88]. Reduced auditory acuity may reorganize the interaction between sensory and higher-order cortices, such that cognitive and crossmodal processes are upregulated to compensate for effortful listening, depleting cognitive reserve [89] and possibly underlying the association between hearing loss and cognitive decline in adults [90].

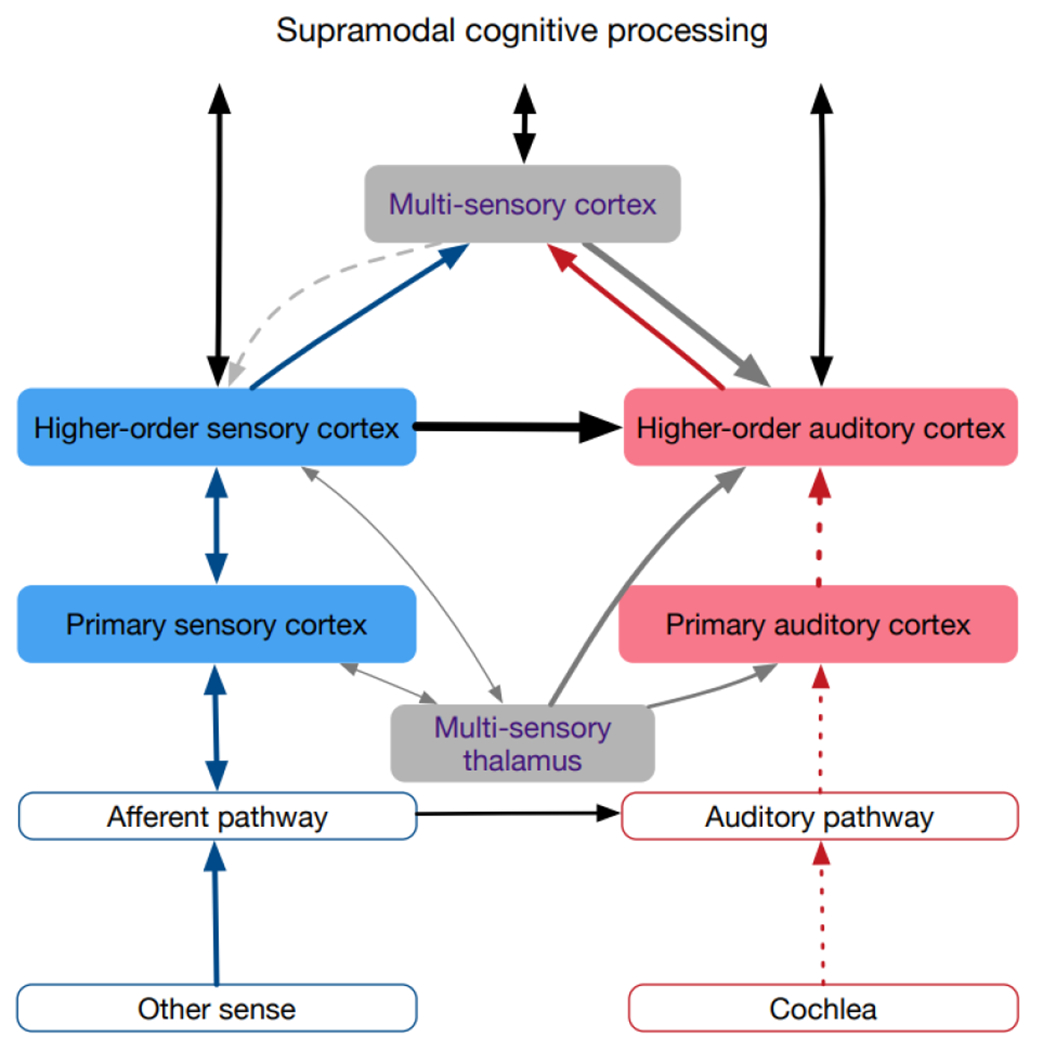

The existing evidence thus suggests that heteromodal activity in deafness rests on pre-existing modulatory influences on neurons, partly caused by top-down inputs from associative (multisensory) areas (Fig. 3). The areal specificity of crossmodal plasticity is therefore likely due to fiber tracts that normally connect these areas to heteromodal and multimodal areas and provide heteromodal information also in hearing animals. These influences become stronger and turn into driving inputs only when the cortical areas are deprived of their dominant driving input.

Fig. 3: Schematics of the crossmodally reorganized hearing-impaired auditory system.

The main reorganization is the change of the modulatory heteromodal inputs into driving inputs, both from the secondary heteromodal areas as well as from multimodal areas. The effect is assumed to rest on resetting the working point of neurons in the auditory cortex. The absent auditory (adequate) input is shown by dashed lines.

Studies on hearing restoration following congenital deafness additionally document deficits in multimodal binding, particularly in the fusion of visual with auditory inputs; if hearing is restored later in life, the processing of multimodal stimuli is instead dominated by visual inputs [91]. Correspondingly, in CDCs the neurons in the crossmodally reorganized dorsal cortex did not show evidence of audiovisual fusion [49]. Similar outcomes have recently been reported in congenital blindness [92]. This is consistent with studies showing that early multimodal experience is essential for the development of multimodal processing capabilities [93–95]. The full potential of multisensory rehabilitation cannot be realized if sensory restoration occurs too late in congenital deafness. In humans, the time window for fully effective restoration is probably the first two years of life [91]. These considerations also explain why developmental deprivation has more severe consequences than late (adult-onset) deprivation.

Crossmodal Plasticity: A Dynamic, Flexible and Reversible Process?

There is growing evidence of crossmodal effects in mild and age-related hearing loss. This is particularly relevant given the high incidence of hearing loss in aging and the key importance of hearing loss in the pathophysiology of age-related cognitive decline [90]. In rats, crossmodal visual plasticity has been observed in secondary auditory areas following partial hearing loss in adulthood [96], suggesting that even partial adult hearing loss can induce crossmodal plasticity. Indeed, crossmodal effects have been described in a series of studies in people with mild-to-moderate age-related hearing loss [89,97–102]. Recent evidence shows that crossmodal reorganization appears early, within 3 months of adult-onset hearing loss [89], and can be reversed with as little as 6 months of hearing aid use [102]. Adding to its versatility, crossmodal plasticity reported in unilateral hearing loss can be reversed after cochlear implant use, although somatosensory crossmodal effects appear to reverse more completely than visual effects [103]; this is consistent with a continued reliance on the visual modality for disambiguating the auditory signal delivered through a hearing aid or cochlear implant [91]. However, reversibility is more limited in congenital than in adult-onset hearing loss [104], with an abnormal dominance of visual inputs and an absence of multisensory fusion if hearing is restored after the second year of life [91].

These studies demonstrate that crossmodal reorganization is a dynamic and rather fast process. It does not require structural changes, and it waxes and wanes with mild sensory deprivation. The same neuronal circuitry can thus serve both crossmodal effects and the physiological processing of multimodal inputs.

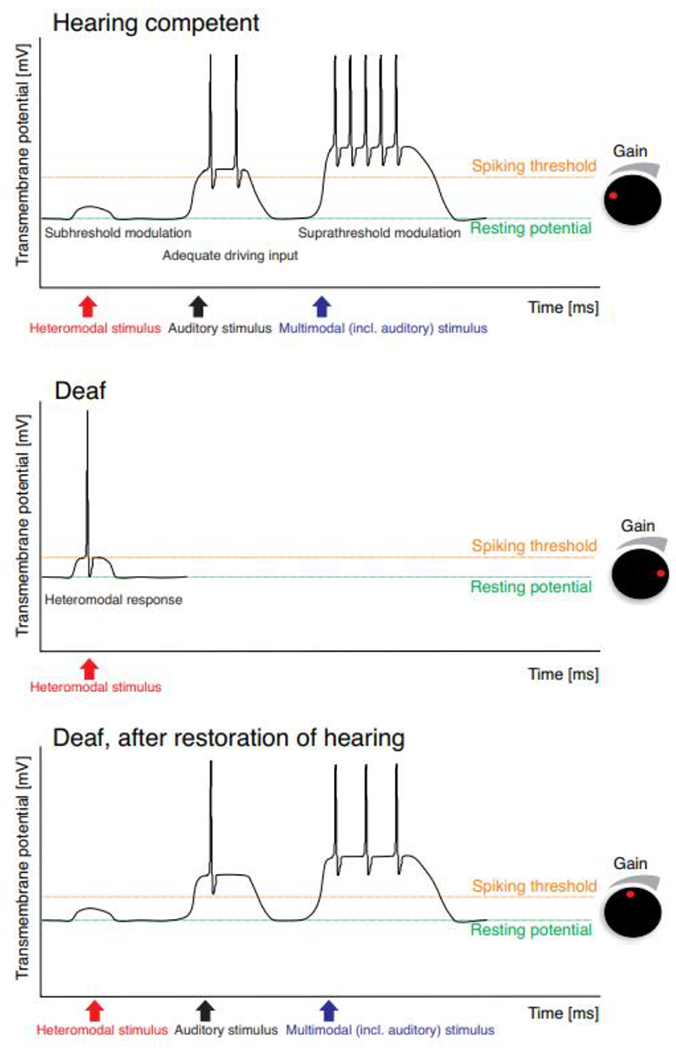

We propose that the first neuronal step in fast crossmodal changes is a change in overall neuronal responsiveness (Fig. 4). Responsiveness is finely regulated in cortical neurons, and in experimental models changes in neuronal excitability can be induced through various paradigms, e.g., using acetylcholine, which can enhance intrinsic excitability [105], or through manipulations that alter the excitatory-inhibitory balance [106,107]. In deafness, the main driving input to auditory neurons is eliminated, and in auditory regions heteromodal inputs are typically too weak to drive neurons. As a first step, therefore, the neurons are silenced, which triggers an inevitable sequence of adaptations counteracting this effect. Homeostatic plasticity [107–110] adjusts the working point of synapses and the spiking threshold of neurons, and synaptic changes shift the excitatory-inhibitory balance so that neurons can again generate action potentials. In our view, reduced neuronal input causes neuronal responsiveness to adapt through these cellular and network mechanisms. In the absence of the adequate sensory input, this increases sensitivity to the remaining inputs. This cannot fully compensate for the missing auditory inputs, but heteromodal inputs may transition from an originally modulatory to a driving role and activate deprived cortical areas.

Fig. 4: Suggested mechanism of dynamic crossmodal plasticity.

In a hearing auditory cortex (A), the heteromodal inputs are only modulatory and therefore depend on the driving auditory input; provided this is present, responses can be significantly modified by heteromodal inputs. In hearing loss (B), driving input is reduced or absent, and homeostatic plasticity may increase excitability to such an extent that the previously weak modulatory input becomes driving. Thereby both the heteromodal response and neuronal sensitivity increase, i.e., the spiking threshold for an input decreases. After hearing restoration (C), the gain is reduced owing to the restoration of a strong driving input, and the heteromodal input becomes modulatory again. Since hearing restoration is rarely complete, the gain of cortical neurons lies between that of deaf and hearing subjects. In the illustrated schematics, the changes are modeled only as an effect on spiking threshold and response increase; this is a simplification of the multiplicity of homeostatic processes present physiologically.
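The mechanism in Fig. 4 can be sketched as a homeostatic loop in which the spiking threshold of a threshold-linear neuron drifts until its mean rate reaches a set point. This is a deliberately simplified toy under stated assumptions: all names, the set point `TARGET`, the adaptation rate `ETA`, the input strengths and the restored hearing level of 0.6 are hypothetical, and, as in the figure, only the threshold is adapted rather than the full range of homeostatic processes.

```python
def rate(drive: float, threshold: float) -> float:
    """Threshold-linear firing rate in arbitrary units."""
    return max(0.0, drive - threshold)


AUDITORY = 1.5   # hypothetical adequate input at normal hearing
VISUAL = 0.25    # hypothetical heteromodal input
TARGET = 0.1     # hypothetical homeostatic set point for the mean rate
ETA = 0.5        # hypothetical adaptation rate of the threshold


def drives(hearing_level: float) -> list[float]:
    """Drive on auditory-only, visual-only and audiovisual events."""
    a = AUDITORY * hearing_level
    return [a, VISUAL, a + VISUAL]


def adapt_threshold(hearing_level: float, threshold: float, steps: int = 200) -> float:
    """Move the threshold until the mean rate over all events matches TARGET."""
    for _ in range(steps):
        events = drives(hearing_level)
        mean_rate = sum(rate(d, threshold) for d in events) / len(events)
        # Too little activity lowers the threshold; too much raises it.
        threshold += ETA * (mean_rate - TARGET)
    return threshold


theta = 1.0
for label, level in [("hearing", 1.0), ("deaf", 0.0), ("restored", 0.6)]:
    theta = adapt_threshold(level, theta)
    visual_alone = rate(VISUAL, theta)
    role = "driving" if visual_alone > 0.0 else "modulatory"
    print(f"{label:9s} threshold={theta:.3f} visual-only rate={visual_alone:.3f} ({role})")
```

With these numbers the threshold settles near 1.475 with normal hearing, falls to about 0.1 in deafness and returns to about 0.875 after partial restoration, so the heteromodal input is modulatory, then driving, then modulatory again, and the restored threshold lies between the hearing and deaf values.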

Upregulation of excitability is well documented in the cortex of deaf cats ([57,111]; reviewed in [42]) and of hearing-impaired rodents [96,112,113]. Provided that the inputs to neurons include heteromodal sensory information, a previously modulatory input may become driving. Such responses will be consistent with the auditory responses normally observed in these regions and can be processed well by the same auditory circuitry. In milder hearing impairment, these changes will provide a-priori information (in the Bayesian sense) and will thus be naturally adaptive. In complete deafness, crossmodal effects will only affect those functions that the auditory system shares with other sensory systems and that are normally used to form multimodal representations.
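The Bayesian reading of this paragraph can be illustrated with the standard precision-weighted combination of two independent Gaussian cues, in which each cue is weighted by its inverse variance. This is our illustrative assumption, not an analysis from the cited studies; the `Estimate` class, the `combine` function and all numbers (a visual location estimate at 10 deg with variance 4, auditory variances of 1, 4 and 16) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    mean: float      # e.g., estimated stimulus location in degrees
    variance: float  # uncertainty of the estimate


def combine(auditory: Estimate, visual: Estimate) -> tuple[Estimate, float]:
    """Precision-weighted fusion of two independent Gaussian cues.

    Returns the fused estimate and the weight assigned to the visual cue.
    """
    p_a = 1.0 / auditory.variance
    p_v = 1.0 / visual.variance
    w_v = p_v / (p_a + p_v)
    fused_mean = (1.0 - w_v) * auditory.mean + w_v * visual.mean
    return Estimate(fused_mean, 1.0 / (p_a + p_v)), w_v


visual = Estimate(mean=10.0, variance=4.0)  # hypothetical visual estimate
for label, var_a in [("normal hearing", 1.0), ("mild loss", 4.0), ("severe loss", 16.0)]:
    fused, w_v = combine(Estimate(mean=0.0, variance=var_a), visual)
    print(f"{label}: visual weight={w_v:.2f}, fused location={fused.mean:.1f} deg")
```

As the auditory variance grows, the visual weight rises from 0.2 to 0.5 to 0.8 and the fused location moves toward the visual estimate, mirroring how a less reliable auditory signal shifts the balance toward the spared modality (compare the ventriloquist effect).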

In this concept of dynamic adaptive crossmodal plasticity, homeostatic excitability adaptations act like the volume knob on a hi-fi audio system: when input is reduced, excitability is turned up to ensure that all available information, including non-auditory information, is optimally exploited for behavioral advantage, and functional connectivity can be strengthened by synaptic plasticity. When input to the auditory cortex is appropriately restored via hearing aids and/or cochlear implants, excitability is dynamically downregulated, reversing the crossmodal changes to some extent.

A direct consequence of such adaptation in neuronal responsiveness is a change in functional connectivity. The appearance of heteromodal responses opens a window of opportunity for strengthening functional interactions with other regions [114,115] that are structurally connected but normally functionally coupled only under specific conditions (multimodal stimulation). Neuronal oscillations, which are ubiquitous owing to the properties of neuronal membranes and to environmental or self-produced rhythms [116], provide an effective mechanism of long-distance coupling through synchronized activity. Increased responsiveness also facilitates such coupling under unimodal stimulation of the spared modality. Phase synchronization of such oscillations has been demonstrated in between-modality coupling in hearing subjects [61,116], and phase synchrony between primary and secondary auditory areas has been observed during top-down interactions in CDCs [51,52]. This mechanism is thus a plausible candidate for crossmodal reorganization in hearing loss: oscillatory synchronization, particularly in the form of phase reset, is under top-down control (reviewed in [116]). Dynamic crossmodal plasticity based on increased gain in the impaired sensory system allows top-down influences from multisensory and cognitive centers to synchronize the increased heteromodal responses with activity from the spared modalities. This leads to the picture of a crossmodally reorganized functional connectome. Increased functional coupling can lead to new axon collaterals and increase the synaptic counts and synaptic efficacies of heteromodal inputs in the sensory-deprived areas. This would also explain the easier retrograde tracer pickup in anatomical tracer studies, and thus the more abundant (“stronger”) existing connections without ectopic connections observed in cats.
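The "window of opportunity" argument can be sketched with a bounded Hebbian rule applied to a single heteromodal synapse during heteromodal-only stimulation. All names and parameters here are hypothetical; the thresholds 1.475 and 0.1 are simply illustrative "hearing-like" and "deaf-like" values for a threshold-linear neuron. The point is qualitative: a Hebbian update requires postsynaptic activity, so the synapse can strengthen only once increased excitability lets the heteromodal input drive the neuron.

```python
def rate(drive: float, threshold: float) -> float:
    """Threshold-linear firing rate in arbitrary units."""
    return max(0.0, drive - threshold)


def hebbian_training(threshold: float, w: float = 0.25, x: float = 1.0,
                     eta: float = 0.1, w_max: float = 1.0, trials: int = 100) -> float:
    """Repeated heteromodal-only stimulation of one synapse (toy model).

    Bounded Hebbian rule: dw = eta * pre * post * (w_max - w).
    The weight changes only on trials in which the neuron fires,
    and it saturates below w_max.
    """
    for _ in range(trials):
        post = rate(w * x, threshold)
        w += eta * x * post * (w_max - w)
    return w


print(hebbian_training(threshold=1.475))  # hearing-like threshold: stays at 0.25
print(hebbian_training(threshold=0.1))    # deaf-like threshold: grows toward w_max
```

The bound `(w_max - w)` is one common way to keep a Hebbian weight finite; it is a modeling choice for this sketch, not a claim about the synaptic mechanism in deaf auditory cortex.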

Functional connectivity can be studied using many methods (Box 1), including some that are applicable in humans. Functional connectivity analyses thus allow crossmodal effects to be studied in humans both in the resting state and in response to stimuli (reviewed in [114,117,118]).

BOX 1: Connectivity quantification.

The connectome, defined here as the totality of all synaptic connections between neurons in the brain, can be studied using many different methods [150]:

- Structural connectivity defines the totality of fiber tracts connecting the brain. It can be analyzed using diffusion tensor imaging in humans or anatomical tracers in animals. Fiber tracts are established early in development, driven mainly by genetic makeup. Fiber tracts are a precondition for functional interactions, but structural and functional connectivity correlate only weakly [151]. Functional connectivity additionally depends on synaptic counts, synaptic efficacies, and excitatory-inhibitory balance.

- Functional connectivity (FC) defines statistical dependencies of neural activity. It can be subdivided into stimulus-independent and stimulus-related FC. Effective connectivity refers to the directed influence that one system exerts over another.

  - Stimulus-independent FC can be assessed from ongoing activity, which reveals the different brain networks active at rest or in attentive conditions, such as the default mode network [118]. This method provides general information on brain networks and their hubs.

  - Stimulus-dependent connectivity includes the effects of stimuli on the networks. The overall brain networks can reconfigure depending on stimulation (reviewed in [118,153]). Stimulus-dependent connectivity must disentangle common input from direct interactions. It allows conclusions on how stimuli propagate in the brain.

Several measures allow quantifying functional connectivity:

Correlations [154] have the disadvantage of depending on the temporal profile of the response, but historically provided pioneering insights into brain networks.
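A minimal Python sketch of why a plain correlation cannot by itself separate direct interactions from common input, the problem noted above for stimulus-dependent connectivity. The signals are synthetic and the noise levels hypothetical: two sites share a common drive but have no direct connection, yet correlate at about 0.5; regressing out the common input (known here only because it is simulated) removes the spurious coupling. In real recordings the common input is rarely observed directly, which is one motivation for the induced-activity approach shown in Fig. 5.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 5000

common = rng.normal(size=n)            # shared drive, e.g. common thalamic input
site_a = common + rng.normal(size=n)   # site A: common input + private noise
site_b = common + rng.normal(size=n)   # site B: no direct connection to site A

r = np.corrcoef(site_a, site_b)[0, 1]  # about 0.5 despite no direct interaction


def residual(y, x):
    """Remove the least-squares projection of y onto x (both roughly zero-mean here)."""
    beta = np.dot(x, y) / np.dot(x, x)
    return y - beta * x


# With the common input known, regressing it out removes the spurious coupling.
r_partial = np.corrcoef(residual(site_a, common), residual(site_b, common))[0, 1]
print(f"raw r = {r:.2f}, r after removing common input = {r_partial:.2f}")
```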

Granger causality [155,156] quantifies effective connectivity, both ongoing and stimulus-dependent. It provides the directionality of the connection but is influenced by signal power and requires longer time windows for calculation.
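The sketch below illustrates the classic bivariate, linear, time-domain formulation of Granger causality in Python with NumPy: the past of a source signal "Granger-causes" a target if adding it to an autoregressive model of the target reduces the residual variance. The model order (p = 2), the simulated coupling and the function names are assumptions for illustration; practical analyses of neural data typically use multivariate or spectral variants with model-order selection and statistical testing.

```python
import numpy as np


def lagged(x, p):
    """Columns are x delayed by 1..p samples, rows aligned with x[p:]."""
    n = len(x)
    return np.column_stack([x[p - k:n - k] for k in range(1, p + 1)])


def residual_variance(y, predictors):
    """Variance of the least-squares residual of y regressed on predictors plus an intercept."""
    design = np.column_stack([np.ones(len(y)), predictors])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return np.var(y - design @ beta)


def granger(source, target, p=2):
    """Bivariate Granger causality source -> target: log ratio of restricted to full residual variance."""
    y = target[p:]
    own_past = lagged(target, p)
    full_past = np.column_stack([own_past, lagged(source, p)])
    return float(np.log(residual_variance(y, own_past) / residual_variance(y, full_past)))


# Simulated pair in which x drives y with a one-sample delay, but not vice versa.
rng = np.random.default_rng(2)
n = 5000
x = rng.normal(size=n)
y = np.zeros(n)
for t in range(1, n):
    y[t] = 0.5 * y[t - 1] + 0.8 * x[t - 1] + rng.normal()

gc_xy = granger(x, y)  # clearly positive: x's past improves prediction of y
gc_yx = granger(y, x)  # close to zero: y's past does not help predict x
```

The asymmetry between the two values is what gives Granger causality its directionality.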

Mutual information and transfer entropy [157,158] directly quantify information transfer. They are influenced by signal power and require longer time windows for calculation.
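A simple plug-in estimate of mutual information from a two-dimensional histogram, sketched in Python under the assumption of a fixed number of bins (16, chosen arbitrarily). Besides showing that dependent signals share information while independent ones do not, the example illustrates why such measures require longer time windows: with few samples, the plug-in estimate is biased upward even for independent signals. Transfer entropy extends this idea by conditioning on the target's own past and is not shown here.

```python
import numpy as np


def mutual_information(x, y, bins=16):
    """Plug-in mutual information (bits) from a 2-D histogram; biased upward for small samples."""
    joint, _, _ = np.histogram2d(x, y, bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)   # marginal of x, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)   # marginal of y, shape (1, bins)
    nonzero = pxy > 0
    return float(np.sum(pxy[nonzero] * np.log2(pxy[nonzero] / (px * py)[nonzero])))


rng = np.random.default_rng(3)
n_long, n_short = 10000, 200

x = rng.normal(size=n_long)
y_dep = x + 0.5 * rng.normal(size=n_long)  # depends on x
y_ind = rng.normal(size=n_long)            # independent of x

mi_dep = mutual_information(x, y_dep)
mi_ind = mutual_information(x, y_ind)
# Same independent signals, but a short window: the estimate is inflated by sampling bias.
mi_ind_short = mutual_information(x[:n_short], y_ind[:n_short])
print(f"dependent: {mi_dep:.2f} bits, independent: {mi_ind:.3f} bits, "
      f"independent (short window): {mi_ind_short:.2f} bits")
```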

Phase-based measures such as phase coherence, pairwise phase consistency or spike-field coherence [159,160] are independent of signal power and, when computed on induced activity, also independent of common input. Their standout advantage is the short time window required for quantification; however, they do not provide directionality.
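Pairwise phase consistency (PPC), as described for Fig. 5 (panels D and E), is the average cosine of the difference between the phase differences of two trials (i.e., the dot product of their unit vectors), taken over all pairs of trials. This Python sketch computes it both by explicit pair enumeration and by an algebraically equivalent closed form, (|Σj exp(iθj)|² - N) / (N(N - 1)); the simulated phase differences, the 30-trial count and the noise level are hypothetical.

```python
import numpy as np
from itertools import combinations


def ppc_pairwise(theta):
    """Mean of cos(theta_j - theta_k), i.e. the dot product of unit vectors, over all trial pairs j < k."""
    return float(np.mean([np.cos(theta[j] - theta[k])
                          for j, k in combinations(range(len(theta)), 2)]))


def ppc_fast(theta):
    """Equivalent closed form: (|sum of unit vectors|^2 - N) / (N (N - 1))."""
    n = len(theta)
    resultant = np.abs(np.sum(np.exp(1j * np.asarray(theta)))) ** 2
    return float((resultant - n) / (n * (n - 1)))


rng = np.random.default_rng(5)
n_trials = 30

# theta_j: phase difference between two sites in trial j (hypothetical values).
coupled = 0.3 + rng.normal(0.0, 0.4, n_trials)     # stable phase difference across trials
uncoupled = rng.uniform(-np.pi, np.pi, n_trials)   # random phase difference across trials

ppc_coupled = ppc_pairwise(coupled)
ppc_uncoupled = ppc_pairwise(uncoupled)
print(f"PPC coupled: {ppc_coupled:.2f}, uncoupled: {ppc_uncoupled:.2f}")
```

Because only pairs of different trials enter the average, the expected PPC for uncoupled sites is zero regardless of trial count, which is one reason it suits short windows with few trials.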

Several methods can also be combined. For example, phase-based methods can first identify, at high temporal resolution, the time-frequency bands in which connectivity is present; directional information in the corresponding bands can then be extracted using effective connectivity measures (e.g. Granger causality) [52].

A number of publications have revealed increased functional connectivity between visual, multisensory and auditory cortex following hearing loss [119–121], with a contribution of multisensory parietal areas [122]. A decrease in intramodal connectivity and an increase in crossmodal connectivity have been reported [120]. Crossmodal reorganization was sensitive to compensation of hearing loss by hearing aids [123] and was predictive of, or related to, outcomes after hearing compensation [121,124,125]. These findings support the concept of a dynamic, flexible cross-modal plasticity that appears with hearing loss and partially subsides after hearing therapy.

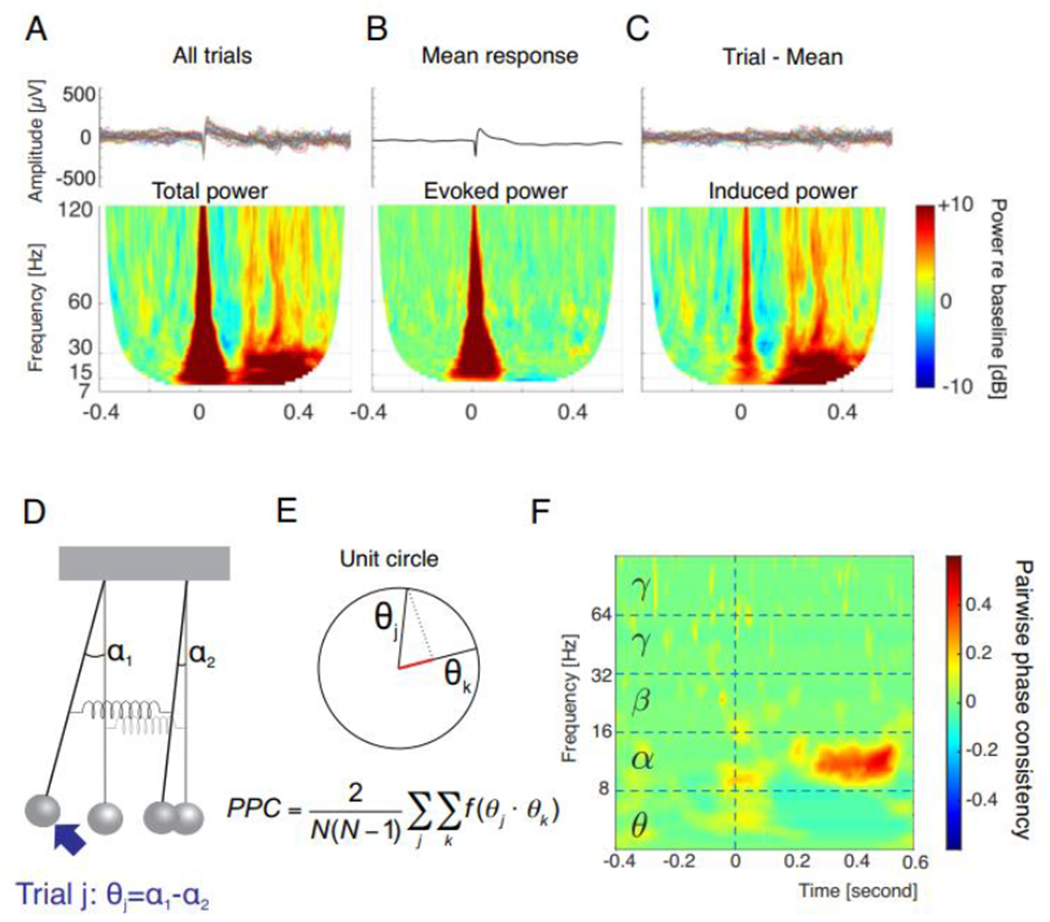

In CDCs, reduced ongoing alpha-band activity has been found in the cross-modally reorganized posterior auditory area but not in area A1 [50] (for similar visual effects in congenitally blind humans, see [126]). Alpha oscillations have additionally been related to changes in neuronal excitability [115]. At the same time, CDCs showed a loss of top-down interactions between secondary and primary auditory cortex that was prominent in the alpha band [52]. The loss of alpha power could therefore reflect a loss of top-down influence on early areas rather than crossmodal reorganization per se. Similarly, alpha oscillations convey top-down interactions in humans [127], and their power has been related to speech intelligibility in hearing subjects [128,129]. Adult-onset hearing loss may also produce a different oscillatory signature than congenital hearing loss (e.g. theta oscillations [130] or delta oscillations [131]). Methodologically, oscillatory activity has the advantage of allowing a focus on different frequency bands, thus separating parallel neural processes [117], and of allowing common input to be separated from corticocortical interaction by calculating induced oscillations (Fig. 5, [114,116,132]). This is essential for studying corticocortical interactions separately from common thalamic input, which is to a large extent reflected in the traditional evoked response components. Current neuroscience techniques provide the tools required for a more detailed analysis of these effects in the future (Box 1).

Fig. 5: Functional connectivity related to an auditory stimulus determined from oscillatory activity.

(A) Primary auditory cortex response to an auditory stimulus (click train, 3 clicks, train duration 0.006 sec.) as observed in local field potentials recorded with a microelectrode. Top: 30 trials of the stimulus presented at 0 sec. result in a response that is reproducible (phase-locked) in each trial (within 0-0.1 sec. post stimulus) and a response that jitters in time from trial to trial (0.2-0.6 sec.). Bottom: The time-frequency representation (TFR) allows computation of the mean total power, revealing both responses across all relevant frequency ranges. (B) Computing an average LFP in the time domain preserves mainly the phase-locked response (0-0.1 sec.); the TFR of this average reveals the evoked power. (C) Subtracting the average from each trial better isolates the non-phase-locked induced activity. This activity is well preserved in the TFR because power, not amplitude, is averaged, so the response does not cancel out. (D) To compute connectivity between two oscillators, the trial-to-trial stability of the phase differences between their oscillations quantifies the coupling strength (analogous to spring stiffness). The phase difference in trial j is denoted θj. (E) When the phase differences of two different trials (denoted j, k) are plotted on the unit circle, the projection of one onto the other reveals their stability. PPC is obtained by summing the dot products of the unit vectors over all trial pairs and normalizing by the number of trial pairs. PPC = pairwise phase consistency. (F) Example of two sites recorded in primary and secondary auditory cortex of a hearing cat, demonstrating a PPC increase between 0.2 and 0.5 sec. after presentation of the brief click train. Using this approach, the synchrony of activity between cortical sites can be determined with frequency specificity and millisecond precision. Using induced activity for PPC computation additionally eliminates common input. Data from [52].
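Panels B and C can be mimicked with synthetic data. In this Python sketch (all parameters hypothetical: a 40 Hz phase-locked burst at 0-0.1 s, a 20 Hz burst with random phase at 0.2-0.6 s, 30 trials, sampling at 1 kHz), averaging in the time domain keeps the phase-locked component but nearly cancels the jittered one, while subtracting the average from each trial isolates the induced component. For simplicity, the mean squared amplitude within a window stands in for the time-frequency power shown in the figure.

```python
import numpy as np

rng = np.random.default_rng(4)
fs, n_trials = 1000, 30
t = np.arange(0, 0.8, 1 / fs)
early = t < 0.1                       # window of the phase-locked response
late = (t >= 0.2) & (t < 0.6)         # window of the jittered response

trials = np.empty((n_trials, t.size))
for i in range(n_trials):
    locked = np.sin(2 * np.pi * 40 * t) * early               # same phase in every trial
    phase = rng.uniform(0, 2 * np.pi)                          # phase jitters across trials
    jittered = np.sin(2 * np.pi * 20 * t + phase) * late
    trials[i] = locked + jittered + 0.1 * rng.normal(size=t.size)

evoked = trials.mean(axis=0)          # (B) average in the time domain
induced = trials - evoked             # (C) subtract the average from each trial


def mean_power(x, window):
    """Mean squared amplitude within a time window (crude stand-in for TFR power)."""
    return float(np.mean(x[..., window] ** 2))


total_late = mean_power(trials, late)
evoked_early, evoked_late = mean_power(evoked, early), mean_power(evoked, late)
induced_early, induced_late = mean_power(induced, early), mean_power(induced, late)
print(f"early: evoked {evoked_early:.2f}, induced {induced_early:.2f}; "
      f"late: evoked {evoked_late:.2f}, induced {induced_late:.2f}")
```

In the late window the total power splits exactly into evoked plus induced parts, and almost all of it ends up in the induced part.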

Crossmodal plasticity due to gain changes and their subsequent effects on functional connectivity, while adaptive, has limitations: in congenital deafness it cannot provide the substrate for multisensory integration and cannot guarantee normal development of the auditory system. Critical periods in the auditory system are not due to colonization by the visual or somatosensory systems through crossmodal processes; rather, they are the consequence of the absence of adequate sensory input, the resulting abnormal development and a pronounced loss of cortical auditory synapses (reviews in [42,43]). Therefore, predominantly corticocortical interactions are affected by early deprivation [50]. Only some auditory areas take over specific visual and somatosensory functions. Reduced thickness of the deep cortical layers (a predominant source of top-down interactions) throughout all studied auditory cortical areas [53] suggests that, despite crossmodal reorganization in congenital hearing loss, massive alterations of the cortical microcircuitry are present [50,52]. Furthermore, dystrophic changes in deep layers were observed in all studied auditory areas, including those not involved in crossmodal reorganization. This means that the effect is due to auditory deprivation per se and is not compensated by crossmodal reorganization. In vision loss, processing within the auditory cortex is strengthened in the bottom-up direction, consistent with a shift in the sensory balance in favor of hearing [56,79]. This supports the present concept and represents another interesting aspect for future cross-modal research in hearing loss.

There are also limits to the compensatory nature of this plasticity. Visual stimuli may help disambiguate the auditory inputs (in the Bayesian sense). Visual stimulation alone, however, cannot compensate for the lack of auditory experience, nor can it interfere negatively after early restoration of hearing. Finally, the early developmental effects of deafness on the auditory system are subject to critical periods, and this may compromise the ability to form multimodal representations that make use of the deprived modality.

Clinical implications

A dynamic, flexible crossmodal plasticity has several clinical implications. In adult-onset hearing loss, cross-modal plasticity can be adaptive and compensatory in many ways. When it is not possible to restore the deprived input, a ‘sensory substitution’ approach can be used to transform information from the spared modality (within certain limits, and using the specific properties of that modality) to compensate for deficits in the deprived modality [133]. Such an approach has been reported in blind subjects using acoustic sonification of the visual information stream [134,135]. Similarly, eyeglasses that convert speech to subtitles for persons with hearing loss have recently become technically feasible and represent a future commercial cross-modal application. In hearing loss, lipreading, which activates the auditory cortex, is routinely used in rehabilitation [104,136–141]. Somatosensory enhancement of speech perception with cochlear implants has been reported to aid speech understanding in deaf patients [142,143]. Thus, crossmodal stimulation via the spared modalities can be harnessed for clinical applications [144].

In prelingual (congenital) deafness, including visual cues in communication is adaptive and may be leveraged clinically, particularly in the time before intervention. In our view, the disputed maladaptive consequences of visual cross-modal plasticity are unlikely to be an issue if hearing is restored as early as feasible (within the critical period) to ensure a functional auditory system and multisensory interactions that include the auditory modality.

Consistent Observations in Other Sensory Systems

The notions discussed here are further supported by observations from other sensory systems. In blindness, rewiring does not appear to be necessary for the reported crossmodal effects [145]; however, response properties and, implicitly, functional interactions in the occipitotemporal network are affected by blindness [146–148]. Similarly, somatomotor reorganization following amputation is rather limited, consistent with the present considerations [149]. Taken together, the textbook examples of brain plasticity after loss of function appear to need revision: crossmodal adaptations do not rest on the ability of the brain to take over (any) functions, but rather use preexisting circuitry that is modified depending on the overall input to the given areas, governed by excitatory-inhibitory (E-I) balance and homeostatic plasticity. From this perspective, crossmodal plasticity is exploitable for therapeutic approaches and can serve as an objective measure for monitoring the efficacy of neurosensory restoration and subsequent rehabilitation.

Concluding Remarks

The brain can use the same neuronal circuitry for qualitatively different functions by dynamically adapting synaptic gain (synaptic rescaling) and inhibition, causing stimulus- and condition-dependent reorganization of functional connectivity in the absence of structural changes. This is particularly advantageous in the case of reduced sensory input. The evidence discussed in this article is consistent with the notion that the substrate of crossmodal reorganization is essentially synaptic and functional, operates at the microscopic scale, and leads to functional connectivity changes in the absence of large-scale rewiring of the brain. Auditory responsiveness is generally preserved in the absence of hearing experience, both in primary and in higher-order auditory cortical areas. Crossmodal reorganization is likely supported by (i) few exuberant connections formed during development and abnormally persisting into adulthood in congenital hearing loss and (ii) local increases in axonal collaterals and synaptic efficacy of heteromodal inputs, facilitated by (iii) increased sensitivity of target neurons deprived of their natural (adequate) auditory input and by attentional modulation, and (iv) their influences on neuronal oscillations and their interareal coupling. Heteromodal inputs originate predominantly from top-down interactions that can similarly affect neuronal oscillations. This explains why even adult-onset mild-to-moderate hearing loss can facilitate crossmodal reorganization that is reduced or reversed after hearing restoration. Understanding the neuronal mechanisms by which functional connectivity, and thus the flow of information, can be reversed from bottom-up to top-down will provide further insight into the constraints of crossmodal plasticity.
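The idea that gain changes alone can increase heteromodal responsiveness without any new connections can be illustrated with a toy model. The Python sketch below assumes a single rectified-linear neuron with one auditory and one pre-existing heteromodal (visual) weight, and homeostatic synaptic scaling that multiplies all excitatory weights by a common factor until the firing rate returns to its set point. The weights, input levels and learning rate are hypothetical, and inhibition is not modeled.

```python
import numpy as np


def rate(weights, inputs):
    """Rectified-linear firing rate of a model neuron (arbitrary units)."""
    return max(0.0, float(np.dot(weights, inputs)))


def synaptic_scaling(weights, inputs, target, eta=0.1, steps=500):
    """Multiply all excitatory weights by a common factor until the rate approaches the target."""
    w = np.array(weights, dtype=float)
    for _ in range(steps):
        w *= 1.0 + eta * (target - rate(w, inputs)) / target
    return w


w0 = np.array([1.0, 0.2])              # [auditory, heteromodal visual] weights (hypothetical)
normal_input = np.array([1.0, 1.0])    # typical audiovisual drive
target = rate(w0, normal_input)        # homeostatic set point = rate with normal hearing

impaired_input = np.array([0.2, 1.0])  # auditory drive reduced by hearing loss
w_scaled = synaptic_scaling(w0, impaired_input, target)

visual_only = np.array([0.0, 1.0])
response_before = rate(w0, visual_only)
response_after = rate(w_scaled, visual_only)
print(f"visual-only response: {response_before:.2f} -> {response_after:.2f}")
```

Because the scaling is multiplicative, the ratio between the two weights is unchanged; only the overall gain rises, so in this toy setting the response to visual input alone roughly triples.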

Highlights.

Crossmodal plasticity is a textbook example of the ability of the brain to reorganize based on use. In the auditory system, crossmodal plasticity is evident in all degrees of auditory deprivation and may be reversed in some cases of adult-onset hearing loss.

Neither developmental nor adult crossmodal plasticity appears to involve extensive reorganization of structural connectivity. Instead, both typically involve strengthening and weakening of existing connections, often top-down heteromodal projections.

The auditory system is a model system for neurosensory restoration with neuroprostheses, and the dynamic and versatile nature of crossmodal plasticity can be exploited clinically for improving outcomes of neurosensory restoration.

Acknowledgements

The authors thank the anonymous reviewers for their valuable comments. AK has been supported by Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany’s Excellence Strategy – EXC 2177/1 - Project ID 390895286 and DFG project Kr 3370/5-1, the EU International Training Network “Communication for Children with Hearing Impairment to Optimize Language Development” (No. 860755, Comm4CHILD) and the National Science Foundation, USA, (DLR # 01GQ1703). AS has been supported by United States National Institutes of Health grants R01DC16346, R01DC06257, U01DC13529 and R01DC006251 and a grant by the Hearing Industry Research Consortium.

Glossary:

- Crossmodal reorganization / plasticity

A change in the properties of neurons leading to stronger responsiveness to stimuli of the non-deprived modality. In the present text, the term “crossmodal” is reserved for conditions with hearing loss. It can refer to structural reorganization, which involves the formation of new fiber tracts, or to functional reorganization, which refers primarily to changes resulting from the unmasking of latent, existing pathways.

- Multimodal reorganization / plasticity

A change in properties of neurons due to multimodal stimulation in subjects without sensory deprivation.

- Multimodal areas

Cortical areas responsive to stimulation of more than one modality; the neurons in these areas can even be non-responsive to stimulation of a single modality. Multimodal responses are classified into subadditive, additive and superadditive depending on the amount of change caused by adding another modality.

- Heteromodal inputs

Inputs into a given sensory area originating from another modality; i.e. visual or somatosensory inputs into the primary or secondary auditory cortex.
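The classification of multimodal responses into subadditive, additive and superadditive (see Multimodal areas above) can be sketched with the commonly used additive criterion, which compares the bimodal response with the sum of the two unimodal responses. In this Python sketch the responses are assumed to be baseline-subtracted (e.g. spike counts), and the ±10% tolerance band and function names are hypothetical stand-ins for the statistical test that would be used with real data.

```python
def classify_multisensory(r_a, r_v, r_av, tolerance=0.1):
    """Label a bimodal response relative to the sum of the two unimodal responses."""
    predicted = r_a + r_v
    if r_av > predicted * (1 + tolerance):
        return "superadditive"
    if r_av < predicted * (1 - tolerance):
        return "subadditive"
    return "additive"


def additivity_index(r_a, r_v, r_av):
    """Percent deviation of the bimodal response from the additive prediction (undefined if the sum is 0)."""
    predicted = r_a + r_v
    return 100.0 * (r_av - predicted) / predicted


print(classify_multisensory(5.0, 3.0, 12.0))  # superadditive
print(classify_multisensory(5.0, 3.0, 8.0))   # additive
print(classify_multisensory(5.0, 3.0, 6.0))   # subadditive
print(classify_multisensory(0.0, 0.0, 4.0))   # superadditive: no response to either modality alone
```

The last case corresponds to neurons that respond only to combined stimulation, as mentioned in the glossary entry.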

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Declaration of Interests: AK received consultation fees from Cochlear Ltd. and Advanced Bionics GmbH. AS declares no competing interests.

References

- 1. Rauschecker JP (1995) Compensatory plasticity and sensory substitution in the cerebral cortex. Trends Neurosci 18, 36–43

- 2. Sur M, Pallas SL et al. (1990) Cross-modal plasticity in cortical development: differentiation and specification of sensory neocortex. Trends Neurosci 13, 227–233

- 3. von Melchner L, Pallas SL et al. (2000) Visual behaviour mediated by retinal projections directed to the auditory pathway. Nature 404, 871–876

- 4. Clascá F, Llamas A et al. (1997) Insular cortex and neighboring fields in the cat: a redefinition based on cortical microarchitecture and connections with the thalamus. J Comp Neurol 384, 456–482

- 5. Bowman EM and Olson CR (1988) Visual and auditory association areas of the cat’s posterior ectosylvian gyrus: cortical afferents. J Comp Neurol 272, 30–42

- 6. Musil SY and Olson CR (1988) Organization of cortical and subcortical projections to medial prefrontal cortex in the cat. J Comp Neurol 272, 219–241

- 7. Jiang W, Jiang H et al. (2002) Two corticotectal areas facilitate multisensory orientation behavior. J Cogn Neurosci 14, 1240–1255

- 8. Irvine DR and Huebner H (1979) Acoustic response characteristics of neurons in nonspecific areas of cat cerebral cortex. J Neurophysiol 42, 107–122

- 9. Irvine DR and Phillips DP (1982) in Cortical Sensory Organization: Multiple Auditory Areas (Woolsey CN ed.), pp. 111–156, Humana Press

- 10. Nishimura H, Hashikawa K et al. (1999) Sign language ‘heard’ in the auditory cortex. Nature 397, 116

- 11. Petitto LA, Zatorre RJ et al. (2000) Speech-like cerebral activity in profoundly deaf people processing signed languages: implications for the neural basis of human language. Proc Natl Acad Sci U S A 97, 13961–13966

- 12. Finney EM, Fine I et al. (2001) Visual stimuli activate auditory cortex in the deaf. Nat Neurosci 4, 1171–1173

- 13. Lee DS, Lee JS et al. (2001) Cross-modal plasticity and cochlear implants. Nature 409, 149–150

- 14. Emmorey K and McCullough S (2009) The bimodal bilingual brain: effects of sign language experience. Brain Lang 109, 124–132

- 15. Levänen S, Jousmäki V et al. (1998) Vibration-induced auditory-cortex activation in a congenitally deaf adult. Curr Biol 8, 869–872

- 16. Auer ET, Bernstein LE et al. (2007) Vibrotactile activation of the auditory cortices in deaf versus hearing adults. Neuroreport 18, 645–648

- 17. Hauthal N, Debener S et al. (2014) Visuo-tactile interactions in the congenitally deaf: a behavioral and event-related potential study. Front Integr Neurosci 8, 98

- 18. Anderson CA, Lazard DS et al. (2017) Plasticity in bilateral superior temporal cortex: Effects of deafness and cochlear implantation on auditory and visual speech processing. Hear Res 343, 138–149

- 19. Neville HJ and Lawson D (1987) Attention to central and peripheral visual space in a movement detection task: an event-related potential and behavioral study. II. Congenitally deaf adults. Brain Res 405, 268–283

- 20. Neville HJ and Lawson D (1987) Attention to central and peripheral visual space in a movement detection task. III. Separate effects of auditory deprivation and acquisition of a visual language. Brain Res 405, 284–294

- 21. Finney EM, Clementz BA et al. (2003) Visual stimuli activate auditory cortex in deaf subjects: evidence from MEG. Neuroreport 14, 1425–1427

- 22. Fine I, Finney EM et al. (2005) Comparing the effects of auditory deprivation and sign language within the auditory and visual cortex. J Cogn Neurosci 17, 1621–1637

- 23. Benetti S, Zonca J et al. (2021) Visual motion processing recruits regions selective for auditory motion in early deaf individuals. Neuroimage 230, 117816

- 24. Bottari D, Heimler B et al. (2014) Visual change detection recruits auditory cortices in early deafness. Neuroimage 94, 172–184

- 25. Bottari D, Nava E et al. (2010) Enhanced reactivity to visual stimuli in deaf individuals. Restor Neurol Neurosci 28, 167–179

- 26. Bottari D, Caclin A et al. (2011) Changes in early cortical visual processing predict enhanced reactivity in deaf individuals. PLoS One 6, e25607

- 27. Bavelier D, Dye MWG et al. (2006) Do deaf individuals see better? Trends Cogn Sci 10, 512–518

- 28. Lomber SG, Meredith MA et al. (2010) Cross-modal plasticity in specific auditory cortices underlies visual compensations in the deaf. Nat Neurosci 13, 1421–1427

- 29. Meredith MA, Kryklywy J et al. (2011) Crossmodal reorganization in the early deaf switches sensory, but not behavioral roles of auditory cortex. Proc Natl Acad Sci U S A 108, 8856–8861

- 30. Benetti S, van Ackeren MJ et al. (2017) Functional selectivity for face processing in the temporal voice area of early deaf individuals. Proc Natl Acad Sci U S A

- 31. Stropahl M, Plotz K et al. (2015) Cross-modal reorganization in cochlear implant users: Auditory cortex contributes to visual face processing. Neuroimage 121, 159–170

- 32. Battal C, Rezk M et al. (2019) Representation of auditory motion directions and sound source locations in the human planum temporale. J Neurosci 39, 2208–2220

- 33. Gori M, Sandini G et al. (2010) Poor haptic orientation discrimination in nonsighted children may reflect disruption of cross-sensory calibration. Curr Biol 20, 223–225

- 34. Gori M, Sandini G et al. (2014) Impairment of auditory spatial localization in congenitally blind human subjects. Brain 137, 288–293

- 35. Recanzone GH (2003) Auditory influences on visual temporal rate perception. J Neurophysiol 89, 1078–1093

- 36. Guttman SE, Gilroy LA et al. (2005) Hearing what the eyes see: auditory encoding of visual temporal sequences. Psychol Sci 16, 228–235

- 37. Slutsky DA and Recanzone GH (2001) Temporal and spatial dependency of the ventriloquism effect. Neuroreport 12, 7–10

- 38. Barakat B, Seitz AR et al. (2015) Visual rhythm perception improves through auditory but not visual training. Curr Biol 25, R60–R61

- 39. Amadeo MB, Campus C et al. (2019) Spatial Cues Influence Time Estimations in Deaf Individuals. iScience 19, 369–377

- 40. Cook JR, Li H et al. (2022) Secondary auditory cortex mediates a sensorimotor mechanism for action timing. Nat Neurosci 25, 330–344

- 41. Ryugo DK, Rosenbaum BT et al. (1998) Single unit recordings in the auditory nerve of congenitally deaf white cats: Morphological correlates in the cochlea and cochlear nucleus. J Comp Neurol 397, 532–548

- 42. Kral A and Sharma A (2012) Developmental neuroplasticity after cochlear implantation. Trends Neurosci 35, 111–122

- 43. Kral A, Dorman MF et al. (2019) Neuronal Development of Hearing and Language: Cochlear Implants and Critical Periods. Annu Rev Neurosci 42, 47–65

- 44. Barone P, Lacassagne L et al. (2013) Reorganization of the Connectivity of Cortical Field DZ in Congenitally Deaf Cat. PLoS One 8, e60093

- 45. Butler BE, Chabot N et al. (2017) Origins of thalamic and cortical projections to the posterior auditory field in congenitally deaf cats. Hear Res 343, 118–127

- 46. Butler BE, de la Rua A et al. (2017) Cortical and thalamic connectivity to the second auditory cortex of the cat is resilient to the onset of deafness. Brain Struct Funct 223, 819–835

- 47. Wong C, Chabot N et al. (2015) Amplified somatosensory and visual cortical projections to a core auditory area, the anterior auditory field, following early- and late-onset deafness. J Comp Neurol 523, 1925–1947

- 48. Innocenti GM and Price DJ (2005) Exuberance in the development of cortical networks. Nat Rev Neurosci 6, 955–965

- 49. Land R, Baumhoff P et al. (2016) Cross-Modal Plasticity in Higher-Order Auditory Cortex of Congenitally Deaf Cats Does Not Limit Auditory Responsiveness to Cochlear Implants. J Neurosci 36, 6175–6185

- 50. Yusuf PA, Hubka P et al. (2017) Induced Cortical Responses Require Developmental Sensory Experience. Brain 140, 3153–3165

- 51. Yusuf PA, Lamuri A et al. (2022) Deficient Recurrent Cortical Processing in Congenital Deafness. Front Syst Neurosci 16, 806142

- 52. Yusuf PA, Hubka P et al. (2021) Deafness weakens interareal couplings in the auditory cortex. Front Neurosci 14, 1476

- 53. Berger C, Kühne D et al. (2017) Congenital deafness affects deep layers in primary and secondary auditory cortex. J Comp Neurol 525, 3110–3125

- 54. Kral A, Tillein J et al. (2006) Cochlear implants: cortical plasticity in congenital deprivation. Prog Brain Res 157, 283–313

- 55. Batardiere A, Barone P et al. (2002) Early specification of the hierarchical organization of visual cortical areas in the macaque monkey. Cereb Cortex 12, 453–465

- 56. Petrus E, Rodriguez G et al. (2015) Vision loss shifts the balance of feedforward and intracortical circuits in opposite directions in mouse primary auditory and visual cortices. J Neurosci 35, 8790–8801

- 57. Kral A, Tillein J et al. (2005) Postnatal Cortical Development in Congenital Auditory Deprivation. Cereb Cortex 15, 552–562

- 58. Ghazanfar AA and Schroeder CE (2006) Is neocortex essentially multisensory? Trends Cogn Sci 10, 278–285

- 59. Meredith MA and Allman BL (2009) Subthreshold multisensory processing in cat auditory cortex. Neuroreport 20, 126–131

- 60. Allman BL, Bittencourt-Navarrete RE et al. (2008) Do cross-modal projections always result in multisensory integration? Cereb Cortex 18, 2066–2076

- 61. Mégevand P, Mercier MR et al. (2020) Crossmodal Phase Reset and Evoked Responses Provide Complementary Mechanisms for the Influence of Visual Speech in Auditory Cortex. J Neurosci 40, 8530–8542

- 62. Kayser C, Petkov CI et al. (2008) Visual modulation of neurons in auditory cortex. Cereb Cortex 18, 1560–1574

- 63. Morrill RJ and Hasenstaub AR (2018) Visual Information Present in Infragranular Layers of Mouse Auditory Cortex. J Neurosci 38, 2854–2862

- 64. Lakatos P, O’Connell MN et al. (2009) The leading sense: supramodal control of neurophysiological context by attention. Neuron 64, 419–430

- 65. Schroeder CE, Lakatos P et al. (2008) Neuronal oscillations and visual amplification of speech. Trends Cogn Sci 12, 106–113

- 66. Stringer C, Pachitariu M et al. (2019) Spontaneous behaviors drive multidimensional, brainwide activity. Science 364, 255

- 67. Wolff A, Berberian N et al. (2022) Intrinsic neural timescales: temporal integration and segregation. Trends Cogn Sci 26, 159–173

- 68. Michail G, Senkowski D et al. (2021) Memory Load Alters Perception-Related Neural Oscillations during Multisensory Integration. J Neurosci 41, 1505–1515

- 69. Calvert GA, Campbell R et al. (2000) Evidence from functional magnetic resonance imaging of crossmodal binding in the human heteromodal cortex. Curr Biol 10, 649–657

- 70. Merrikhi Y, Kok MA et al. (2022) Multisensory responses in a belt region of the dorsal auditory cortical pathway. Eur J Neurosci 55, 589–610

- 71. Schneider DM and Mooney R (2018) How Movement Modulates Hearing. Annu Rev Neurosci 41, 553–572

- 72. Numminen J, Salmelin R et al. (1999) Subject’s own speech reduces reactivity of the human auditory cortex. Neurosci Lett 265, 119–122

- 73. Calvert GA, Brammer MJ et al. (1999) Response amplification in sensory-specific cortices during crossmodal binding. Neuroreport 10, 2619–2623

- 74. Van Wassenhove V, Grant KW et al. (2005) Visual speech speeds up the neural processing of auditory speech. Proc Natl Acad Sci U S A 102, 1181–1186

- 75. Kauramäki J, Jääskeläinen IP et al. (2010) Lipreading and covert speech production similarly modulate human auditory-cortex responses to pure tones. J Neurosci 30, 1314–1321

- 76. Allman BL and Meredith MA (2007) Multisensory processing in “unimodal” neurons: cross-modal subthreshold auditory effects in cat extrastriate visual cortex. J Neurophysiol 98, 545–549

- 77. Allman BL, Keniston LP et al. (2009) Adult deafness induces somatosensory conversion of ferret auditory cortex. Proc Natl Acad Sci U S A 106, 5925–5930

- 78. Bizley JK, Nodal FR et al. (2007) Physiological and anatomical evidence for multisensory interactions in auditory cortex. Cereb Cortex 17, 2172–2189

- 79. Whitt JL, Ewall G et al. (2022) Visual deprivation selectively reduces thalamic reticular nucleus-mediated inhibition of the auditory thalamus in adults. J Neurosci 42, 7921–7930

- 80. Ghazanfar AA, Maier JX et al. (2005) Multisensory integration of dynamic faces and voices in rhesus monkey auditory cortex. J Neurosci 25, 5004–5012

- 81.Meredith MA, Keniston LR et al. (2006) Crossmodal projections from somatosensory area SIV to the auditory field of the anterior ectosylvian sulcus (FAES) in Cat: further evidence for subthreshold forms of multisensory processing. Exp Brain Res 172, 472–484 [DOI] [PubMed] [Google Scholar]

- 82.Stuckenberg MV, Schröger E et al. (2021) Modulation of early auditory processing by visual information: Prediction or bimodal integration. Attention, Perception, & Psychophysics 83, 1538–1551 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Mikulasch FA, Rudelt L et al. (2023) Where is the error? Hierarchical predictive coding through dendritic error computation. Trends Neurosci 46, 45–59 [DOI] [PubMed] [Google Scholar]

- 84.Pardi MB, Vogenstahl J et al. (2020) A thalamocortical top-down circuit for associative memory. Science 370, 844–848 [DOI] [PubMed] [Google Scholar]

- 85.Cardin V, Rudner M et al. (2017) The Organization of Working Memory Networks is Shaped by Early Sensory Experience. Cereb Cortex 28, 1–15

- 86.Manini B, Vinogradova V et al. (2022) Sensory experience modulates the reorganization of auditory regions for executive processing. Brain awac205

- 87.Kral A, Kronenberger WG et al. (2016) Neurocognitive factors in sensory restoration of early deafness: a connectome model. Lancet Neurol 15, 610–621

- 88.Griffiths TD, Lad M et al. (2020) How Can Hearing Loss Cause Dementia? Neuron 108, 401–412

- 89.Glick H and Sharma A (2017) Cross-modal plasticity in developmental and age-related hearing loss: Clinical implications. Hear Res 343, 191–201

- 90.Livingston G, Huntley J et al. (2020) Dementia prevention, intervention, and care: 2020 report of the Lancet Commission. Lancet 396, 413–446

- 91.Schorr EA, Fox NA et al. (2005) Auditory-visual fusion in speech perception in children with cochlear implants. Proc Natl Acad Sci U S A 102, 18748–18750

- 92.Rączy K, Hölig C et al. (2022) Typical resting-state activity of the brain requires visual input during an early sensitive period. Brain Commun 4, fcac146

- 93.Xu J, Yu L et al. (2012) Incorporating cross-modal statistics in the development and maintenance of multisensory integration. J Neurosci 32, 2287–2298

- 94.Wallace MT, Woynaroski TG et al. (2020) Multisensory Integration as a Window into Orderly and Disrupted Cognition and Communication. Annu Rev Psychol 71, 193–219

- 95.Bean NL, Smyre SA et al. (2022) Noise-rearing precludes the behavioral benefits of multisensory integration. Cereb Cortex bhac113

- 96.Schormans AL, Typlt M et al. (2018) Adult-Onset Hearing Impairment Induces Layer-Specific Cortical Reorganization: Evidence of Crossmodal Plasticity and Central Gain Enhancement. Cereb Cortex

- 97.Campbell J and Sharma A (2014) Cross-modal re-organization in adults with early stage hearing loss. PLoS One 9, e90594

- 98.Rosemann S and Thiel CM (2018) Audio-visual speech processing in age-related hearing loss: Stronger integration and increased frontal lobe recruitment. Neuroimage 175, 425–437

- 99.Puschmann S, Daeglau M et al. (2019) Hearing-impaired listeners show increased audiovisual benefit when listening to speech in noise. Neuroimage 196, 261–268

- 100.Cardon G and Sharma A (2019) Somatosensory cross-modal reorganization in children with cochlear implants. Front Neurosci 13, 469

- 101.Campbell J and Sharma A (2020) Frontal Cortical Modulation of Temporal Visual Cross-Modal Re-organization in Adults with Hearing Loss. Brain Sci 10, 498

- 102.Glick HA and Sharma A (2020) Cortical Neuroplasticity and Cognitive Function in Early-Stage, Mild-Moderate Hearing Loss: Evidence of Neurocognitive Benefit From Hearing Aid Use. Front Neurosci 14, 93

- 103.Sharma A, Glick H et al. (2016) Cortical plasticity and re-organization in pediatric single-sided deafness pre- and post-cochlear implantation: a case study. Otol Neurotol 37, e26

- 104.Strelnikov K, Rouger J et al. (2013) Visual activity predicts auditory recovery from deafness after adult cochlear implantation. Brain 136, 3682–3695

- 105.Ye H, Liu ZX et al. (2022) Effects of M currents on the persistent activity of pyramidal neurons in mouse primary auditory cortex. J Neurophysiol 127, 1269–1278

- 106.Froemke RC (2015) Plasticity of cortical excitatory-inhibitory balance. Annu Rev Neurosci 38, 195–219

- 107.McFarlan AR, Chou CYC et al. (2022) The plasticitome of cortical interneurons. Nat Rev Neurosci

- 108.Barnes SJ, Franzoni E et al. (2017) Deprivation-Induced Homeostatic Spine Scaling In Vivo Is Localized to Dendritic Branches that Have Undergone Recent Spine Loss. Neuron 96, 871–882.e5

- 109.Zhou M, Liang F et al. (2014) Scaling down of balanced excitation and inhibition by active behavioral states in auditory cortex. Nat Neurosci 17, 841–850

- 110.Bridi MCD, de Pasquale R et al. (2018) Two distinct mechanisms for experience-dependent homeostasis. Nat Neurosci 21, 843–850

- 111.Raggio MW and Schreiner CE (1999) Neuronal responses in cat primary auditory cortex to electrical cochlear stimulation. III. Activation patterns in short- and long-term deafness. J Neurophysiol 82, 3506–3526

- 112.Mowery TM, Kotak VC et al. (2015) Transient Hearing Loss Within a Critical Period Causes Persistent Changes to Cellular Properties in Adult Auditory Cortex. Cereb Cortex 25, 2083–2094

- 113.Takesian AE, Kotak VC et al. (2012) Age-dependent effect of hearing loss on cortical inhibitory synapse function. J Neurophysiol 107, 937–947

- 114.Siegel M, Donner TH et al. (2012) Spectral fingerprints of large-scale neuronal interactions. Nat Rev Neurosci 13, 121–134

- 115.Iemi L, Gwilliams L et al. (2022) Ongoing neural oscillations influence behavior and sensory representations by suppressing neuronal excitability. Neuroimage 247, 118746

- 116.Lakatos P, Gross J et al. (2019) A New Unifying Account of the Roles of Neuronal Entrainment. Curr Biol 29, R890–R905

- 117.Akam T and Kullmann DM (2014) Oscillatory multiplexing of population codes for selective communication in the mammalian brain. Nat Rev Neurosci 15, 111–122

- 118.Uddin LQ, Yeo BTT et al. (2019) Towards a universal taxonomy of macro-scale functional human brain networks. Brain Topogr 32, 926–942

- 119.Bola Ł, Zimmermann M et al. (2017) Task-specific reorganization of the auditory cortex in deaf humans. Proc Natl Acad Sci U S A 114, E600–E609

- 120.Chen L-C, Puschmann S et al. (2017) Increased cross-modal functional connectivity in cochlear implant users. Sci Rep 7, 10043

- 121.Fullerton AM, Vickers DA et al. (2022) Cross-modal functional connectivity supports speech understanding in cochlear implant users. Cereb Cortex bhac277

- 122.Hauthal N, Thorne JD et al. (2013) Source Localisation of Visual Evoked Potentials in Congenitally Deaf Individuals. Brain Topogr 27, 412–424

- 123.Rosemann S, Gieseler A et al. (2021) Treatment of age-related hearing loss alters audiovisual integration and resting-state functional connectivity: A randomized controlled pilot trial. eNeuro 8

- 124.Schierholz I, Finke M et al. (2017) Auditory and audio-visual processing in patients with cochlear, auditory brainstem, and auditory midbrain implants: An EEG study. Hum Brain Mapp 38, 2206–2225

- 125.Radecke J-O, Schierholz I et al. (2022) Distinct multisensory perceptual processes guide enhanced auditory recognition memory in older cochlear implant users. Neuroimage Clin 33, 102942

- 126.Bottari D, Troje NF et al. (2016) Sight restoration after congenital blindness does not reinstate alpha oscillatory activity in humans. Sci Rep 6, 24683

- 127.Fontolan L, Morillon B et al. (2014) The contribution of frequency-specific activity to hierarchical information processing in the human auditory cortex. Nat Commun 5, 4694

- 128.Dimitrijevic A, Smith ML et al. (2017) Cortical Alpha Oscillations Predict Speech Intelligibility. Front Hum Neurosci 11, 88

- 129.Prince P, Paul BT et al. (2021) Neural correlates of visual stimulus encoding and verbal working memory differ between cochlear implant users and normal-hearing controls. Eur J Neurosci 54, 5016–5037

- 130.Zoefel B (2021) Visual speech cues recruit neural oscillations to optimise auditory perception: Ways forward for research on human communication. Curr Res Neurobiol 2, 100015

- 131.Morillon B, Arnal LH et al. (2019) Prominence of delta oscillatory rhythms in the motor cortex and their relevance for auditory and speech perception. Neurosci Biobehav Rev 107, 136–142

- 132.Morillon B, Hackett TA et al. (2015) Predictive motor control of sensory dynamics in auditory active sensing. Curr Opin Neurobiol 31, 230–238

- 133.Maidenbaum S, Abboud S et al. (2014) Sensory substitution: closing the gap between basic research and widespread practical visual rehabilitation. Neurosci Biobehav Rev 41, 3–15

- 134.Striem-Amit E and Amedi A (2014) Visual cortex extrastriate body-selective area activation in congenitally blind people “seeing” by using sounds. Curr Biol 24, 687–692

- 135.Vetter P, Bola Ł et al. (2020) Decoding natural sounds in early “visual” cortex of congenitally blind individuals. Curr Biol 30, 3039–3044.e2

- 136.Calvert GA, Bullmore ET et al. (1997) Activation of auditory cortex during silent lipreading. Science 276, 593–596

- 137.Crosse MJ, Butler JS et al. (2015) Congruent Visual Speech Enhances Cortical Entrainment to Continuous Auditory Speech in Noise-Free Conditions. J Neurosci 35, 14195–14204

- 138.Rouger J, Lagleyre S et al. (2007) Evidence that cochlear-implanted deaf patients are better multisensory integrators. Proc Natl Acad Sci U S A 104, 7295–7300

- 139.Barone P, Chambaudie L et al. (2016) Crossmodal interactions during non-linguistic auditory processing in cochlear-implanted deaf patients. Cortex 83, 259–270

- 140.Sandmann P, Dillier N et al. (2012) Visual activation of auditory cortex reflects maladaptive plasticity in cochlear implant users. Brain 135, 555–568

- 141.von Eiff CI, Frühholz S et al. (2022) Crossmodal benefits to vocal emotion perception in cochlear implant users. iScience 25, 105711

- 142.Fletcher MD, Thini N et al. (2020) Enhanced pitch discrimination for cochlear implant users with a new haptic neuroprosthetic. Sci Rep 10, 1–10

- 143.Huang J, Lu T et al. (2020) Electro-tactile stimulation enhances cochlear-implant melody recognition: Effects of rhythm and musical training. Ear Hear 41, 106–113

- 144.Cramer SC, Sur M et al. (2011) Harnessing neuroplasticity for clinical applications. Brain 134, 1591–1609

- 145.Fine I and Park J-M (2018) Blindness and human brain plasticity. Annu Rev Vis Sci 4, 337–356

- 146.Bola Ł (2022) Rethinking the representation of sound. eLife 11, e82747

- 147.Battal C, Gurtubay-Antolin A et al. (2022) Structural and Functional Network-Level Reorganization in the Coding of Auditory Motion Directions and Sound Source Locations in the Absence of Vision. J Neurosci 42, 4652–4668

- 148.Mowad TG, Willett AE et al. (2020) Compensatory Cross-Modal Plasticity Persists After Sight Restoration. Front Neurosci 14, 291

- 149.Makin TR and Flor H (2020) Brain (re)organisation following amputation: Implications for phantom limb pain. Neuroimage 218, 116943

- 150.Friston KJ (2011) Functional and effective connectivity: a review. Brain Connect 1, 13–36

- 151.Suárez LE, Markello RD et al. (2020) Linking Structure and Function in Macroscale Brain Networks. Trends Cogn Sci 24, 302–315

- 152.Avena-Koenigsberger A, Misic B et al. (2018) Communication dynamics in complex brain networks. Nat Rev Neurosci 19, 17–33

- 153.Uddin LQ (2021) Cognitive and behavioural flexibility: neural mechanisms and clinical considerations. Nat Rev Neurosci 22, 167–179

- 154.Tomita M and Eggermont JJ (2005) Cross-correlation and joint spectro-temporal receptive field properties in auditory cortex. J Neurophysiol 93, 378–392

- 155.Dhamala M, Rangarajan G et al. (2008) Estimating Granger causality from Fourier and wavelet transforms of time series data. Phys Rev Lett 100, 018701

- 156.Dhamala M, Rangarajan G et al. (2008) Analyzing information flow in brain networks with nonparametric Granger causality. Neuroimage 41, 354–362