Abstract

In this article, a chaotic computing paradigm is investigated for the parameter estimation of the autoregressive exogenous (ARX) model by exploiting the optimization strength of an improved chaotic grey wolf optimizer (ICGWO). The identification problem is formulated by defining a mean square error-based fitness function between the true and estimated responses of the ARX system. The decision parameters of the ARX model are calculated by ICGWO for various populations, generations, and noise levels. The comparative performance analyses with standard counterparts indicate the worth of the ICGWO for ARX model identification, while the statistical analyses endorse the efficacy of the proposed chaotic scheme in terms of accuracy, robustness, and reliability.

Keywords: chaos, parameter estimation, ARX, grey wolf optimizer

1. Introduction

Parameter estimation plays an important role in system identification, which is at the frontier of research in signal processing [1]. It is widely applied in areas such as Hammerstein autoregressive systems [2], water turbines [3], electrical machines [4], fuel cells [5], recurrent neural networks [6], health [7], Hammerstein–Wiener systems [8], computer-aided design [9], renewable energy resources [10], honey production [11], magnetorheological dampers [12], and smart grids [13]. Various techniques have been proposed in the literature for parameter estimation, such as metaheuristics [14], fractional algorithms [15], least mean square [16], fuzzy logic [17], analytical methods [18], and machine learning [19].

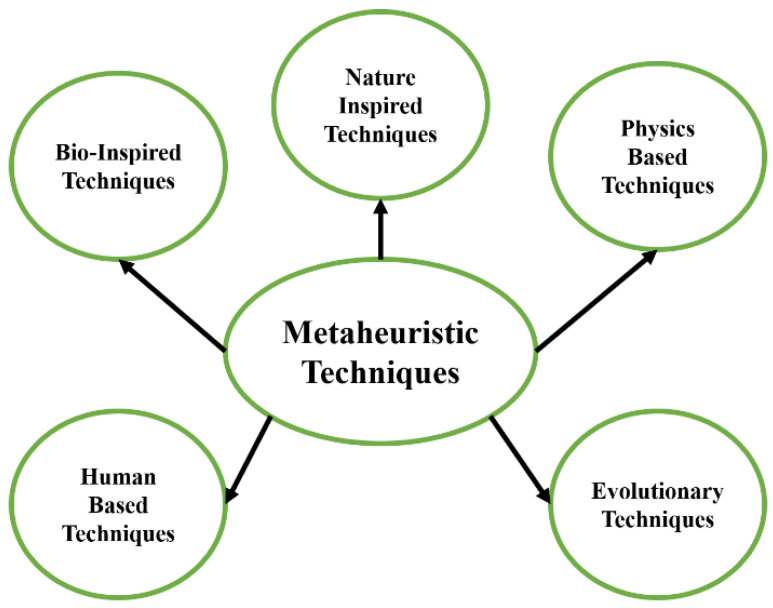

Among these techniques, metaheuristics have gained significant attention in recent decades for system identification. As presented in Figure 1, metaheuristic techniques are classified into five domains. The first domain is bio-inspired techniques, and various techniques have been proposed in this domain. Particle swarm optimization (PSO) [20] is inspired by the movement and intelligence of swarms. Artificial rabbits optimization [21] is inspired by the survival strategies of rabbits, which include detour foraging and random hiding. Grey wolf optimization (GWO) [22] mimics the hunting behavior and leadership hierarchy of grey wolves. Manta ray foraging optimization [23] mimics three unique foraging strategies of manta rays, namely chain, cyclone, and somersault, for solving optimization problems. The artificial hummingbird algorithm [24] uses the flight skills and foraging strategies of hummingbirds.

Figure 1.

Classification of metaheuristic techniques.

The second domain is human-based techniques used for optimization. Teaching–learning-based optimization [25] is inspired by a classroom environment in which the optimal solution is calculated through knowledge sharing between the teacher and the students. In city councils evolution [26], the councils evolve from the smallest to the largest neighborhoods, and council members are promoted to larger councils based on their performance. Mountaineering team-based optimization [27] is inspired by the guided and regular movement of climbers, who rely on the leader’s experience to reach the mountain top. In the political optimizer [28], each party member is considered a solution, and election behavior is used for evaluation. In the parliamentary optimization algorithm [29], intra- and inter-group competitions are conducted for taking control of parliament.

The third domain is evolutionary techniques applied to optimization problems. In differential evolution [30], an optimization solution is obtained by using mutation, crossover, and selection operators. In egret swarm optimization [31], a sit-and-wait strategy, an aggressive strategy, and discriminant conditions are used for finding the optimal solution. The genetic algorithm [32] uses the concepts of genetics and natural selection for solving optimization problems. The evolutionary mating algorithm [33] adopts the Hardy–Weinberg equilibrium and a crossover index in finding solutions to optimization problems.

The fourth domain includes physics-based techniques applied for optimization. In big bang big crunch [34], two phases, namely, big bang and big crunch, were used for randomness and ordered particle distribution in solving optimization problems. In the circle search algorithm [35], features of a circle such as a diameter, radius, perimeter, tangent lines, and angle were used for obtaining optimization solutions. Newton’s metaheuristic algorithm [36] uses Newton’s gradient-based method for population update and incorporates a term containing the best solution in its update rule. Transit search [37] uses the exoplanet exploration method for finding the best optimal solution.

The fifth domain is nature-inspired techniques used in optimization. In the water cycle algorithm [38], the behavior of water flow in rivers, streams, and the sea is formulated for solving optimization problems. Farmland fertility [39] divides farmland into different parts for increasing optimal efficiency in internal and external memory. The sunflower optimizer [40] mimics the movement of sunflowers towards the sun, accounting for the distance between each sunflower and the sun, to find the best solution. In wind-driven optimization [41], velocity and position are updated based on atmospheric motion.

Chaos theory relates the chaotic dynamics of systems with initial conditions and unstable periodic motions [42]. It is applied in various applications such as biometric security [43], embedded systems [44], communications [45], lasers [46], pumped storage units [47], encryption systems [48], the Internet of Things [49], image processing [50], and image encryption [51].

Combining chaos with metaheuristics can improve the exploration and exploitation of optimization techniques, and various chaotic metaheuristics have been presented in the literature. In [52], a chaotic biogeography-based optimizer is proposed in which chaotic maps are incorporated in the migration, selection, and mutation operations of the optimizer. In [53], an improved version of manta ray foraging, called the elite chaotic manta ray algorithm, is proposed in which chaotic maps and opposition-based learning are implemented to avoid becoming trapped in local minima. In [54], a chaotic version of the bonobo optimizer is proposed and applied for optimal power flow analysis in renewable energy sources. In [55], a chaotic variant of the salp swarm algorithm is used for the solution of the economic dispatch problem for different combinations of renewable energy resources. In [56], a chaotic variant of fruit fly optimization is proposed which incorporates fourteen chaotic maps and is tested on ten benchmark problems. In [57], an enhanced version of krill herd optimization is proposed by incorporating sine, circle, and tent chaotic maps. In [58], a chaotic version of invasive weed optimization is proposed for solving optimization problems. In [59], a chaotic quasi-oppositional arithmetic optimization algorithm is proposed for the thermo-economic design of tube and shell. In [60], a chaotic billiards optimization is proposed for optimum parameter estimation of a solar hydrogen variable speed induction motor.

Grey wolf optimizer (GWO) has gained significant attention in recent years due to its flexibility, scalability, and few parameters [61]. It is applied in various applications such as gait analysis [62], structural strain reconstruction [63], engines [64], renewable energy systems [65], robotics [66], deep learning [67], wireless sensor networks [68], smart grid [69], medical [70], and energy management [71]. Even though GWO has been utilized in different applications, due to the complexity of real-world optimization problems, various improvements have been made in GWO in terms of updating mechanisms, hybridization, encoding schemes, multi-objective, and new operators.

In [72], a modified GWO for a wireless sensor network is presented. In this work, the weights are dynamically updated based on the distance between the wolves, their prey, and coefficient vectors to improve the optimization ability of GWO. In [73], a chaotic GWO is proposed in which chaotic maps are incorporated into GWO to accelerate its convergence; it is then applied to thirteen constrained benchmark problems and five constrained engineering problems. In [74], an improved GWO is proposed by incorporating variable weights along with a new governing equation for the controlling parameters. In [75], a hybrid of the sparrow search algorithm and GWO is proposed and applied for gain optimization of the proportional–integral–derivative controller. In [76], a hybrid algorithm called GWOPSO is proposed and applied for optimal parameter estimation of the proportional–integral–derivative controller used for the controlled pump-motor servo system. In [77], an improved chaotic GWO (ICGWO) is proposed by incorporating an adaptive convergence factor and a chaotic map in GWO, and is further applied to the extraction of supercritical carbon dioxide from a multi-herbal formula.

The autoregressive exogenous (ARX) model is used in different engineering problems such as time series data prediction [78], pneumatic positioning systems [79], wheeled robots [80], multiple-input–multiple-output (MIMO) systems [81], and human driving behavior modeling [82]. Various identification techniques have been proposed for the parameter estimation of ARX. In [83], a modified momentum gradient descent algorithm is proposed which uses two gradient directions and sizes in each iteration for ARX identification. In [84], recursive least squares, decomposition least squares, and interval-varying least squares are used for ARX identification. In [85], dwarf mongoose optimization is used for system identification of the ARX model. In [86], multi-innovation fractional least mean squares is used in estimation. In [87], an Aquila optimizer is used in parameter estimation of the ARX model. In [88], a Kalman filter-based multi-step length gradient iterative algorithm with missing outputs is used for parameter estimation of ARX models. In [89], a Renyi square error entropy is used, and the fourth-order statistic of the error (kurtosis) is incorporated into the variable step size, for ARX model identification.

The current study is a novel investigation exploring the potential of chaotic maps through an ICGWO for effective parameter estimation of the ARX structure. The innovative contributions of the proposed study are as follows:

- The parameter estimation problem of a system represented by the ARX model is investigated through the optimization strength of an improved chaotic grey wolf optimizer (ICGWO).
- The performance of the proposed ICGWO scheme is examined in detail through comparison with the conventional counterparts for various generations, populations, and noise levels.
- The statistical analysis through multiple independent trials confirms the accurate and robust performance of the ICGWO over the GWO, CGWO, and AGWO.
- The accurate estimation for a practical example of a temperature process system further validates the convergent performance of the ICGWO.

The remainder of the article is structured as follows: the ARX mathematical structure is presented in Section 2. In Section 3, the proposed ICGWO-based scheme is provided. Section 4 presents the performance comparison of ICGWO, GWO, AGWO, and CGWO. The article is concluded in Section 5.

2. ARX Mathematical Model

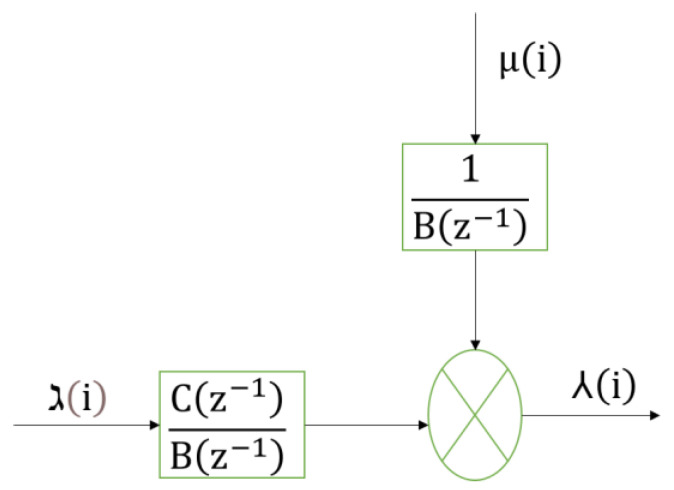

The ARX structure effectively models various engineering and applied sciences problems such as time series prediction, pneumatic positioning systems, wheeled robots, MIMO systems, and behavior modeling [78,79,80,81,82]. The block diagram of the ARX model is presented in Figure 2. It comprises two polynomials of given degrees, defined in (1) and (2), together with the random noise, the input, and the output signals.

| (1) |

| (2) |

Figure 2.

ARX model block diagram.

The output from Figure 2 is presented in (3).

| (3) |

Solving (3) gives (4).

| (4) |

Equation (4) can be rearranged as presented in (5).

| (5) |

The information vectors are defined in (6) and (7).

| (6) |

| (7) |

The parameter vectors are presented in (8) and (9).

| (8) |

| (9) |

The overall information and parameter vectors are given in (10) and (11), respectively.

| (10) |

| (11) |

The identification model of the ARX system presented in Figure 2 is given in (12), and the parameter vector of ARX provided in (11) is estimated through the proposed optimization heuristics.

| (12) |
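The regression form described in this section can be illustrated with a minimal sketch. It assumes the common ARX convention in which the output is the inner product of an information vector of past outputs (negated) and past inputs with the parameter vector, plus noise. The names `information_vector` and `simulate_arx`, the zero initial conditions, and the treatment of the noise level as a standard deviation are assumptions made for illustration; the parameter values are the true parameters listed in Table 2.

```python
import random

def information_vector(u, y, t, na, nb):
    """Return [-y(t-1), ..., -y(t-na), u(t-1), ..., u(t-nb)], with zeros before t = 0."""
    past_y = [-y[t - i] if t - i >= 0 else 0.0 for i in range(1, na + 1)]
    past_u = [u[t - j] if t - j >= 0 else 0.0 for j in range(1, nb + 1)]
    return past_y + past_u

def simulate_arx(a, b, n_samples, noise_std, seed=0):
    """Generate input u, output y, and noise v for an ARX system (assumed convention)."""
    rng = random.Random(seed)
    theta = list(a) + list(b)                      # overall parameter vector
    u = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]   # zero-mean, unit-variance input
    v = [rng.gauss(0.0, noise_std) for _ in range(n_samples)]
    y = [0.0] * n_samples
    for t in range(n_samples):
        phi = information_vector(u, y, t, len(a), len(b))
        # Output = information vector times parameter vector, plus noise.
        y[t] = sum(p * c for p, c in zip(phi, theta)) + v[t]
    return u, y, v

# True parameters taken from the "True Parameters" row of Table 2.
u, y, v = simulate_arx([-1.53, 0.66], [0.25, 0.30], n_samples=200, noise_std=0.05)
print(len(y), y[:3])
```

Writing the model in this linear-in-parameters form is what allows any candidate parameter vector proposed by an optimizer to be scored directly against recorded input and output data.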

3. An Improved Chaotic Grey Wolf Optimization (ICGWO)

GWO is a recently proposed metaheuristic inspired by the social hierarchy and hunting behavior of grey wolves. Grey wolves are apex predators and prefer to live in packs of five to twelve on average, with a strict dominance hierarchy. The leaders are a male and a female, who are responsible for decisions regarding hunting, the place to sleep, the time to wake up, etc. The leader wolf is dominant, and the pack should follow his/her orders. The leader wolf may not be the strongest, but it is the best in terms of management. Hunting is the second interesting behavior of grey wolves after social hierarchy. The main steps of hunting in grey wolves are tracking, chasing, and approaching the prey; pursuing, encircling, and harassing the prey until it stops moving; and, finally, attacking the prey.

ICGWO is an improved version of GWO for solving optimization problems. Its mathematical model is presented below.

3.1. Social Hierarchy

In this step, the fittest solution $\alpha$, along with the second and third fittest solutions $\beta$ and $\delta$, respectively, is considered. The rest of the solutions are presumed to be $\omega$.

3.2. Encircling Prey

In this step, the wolves encircle the prey as presented in (13) and (14).

| (13) |

| (14) |

Here, (13) and (14) depend on the prey’s position and on two vectors of coefficients, which are defined in (15) and (16).

| (15) |

| (16) |

Here, the coefficient vectors are built from two random vectors and an improved convergence factor whose value decreases non-linearly from 2 to 0, as presented in (17).

| (17) |

Here, (17) is a function of the current generation and the maximum number of generations.
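The encircling step can be sketched as follows, using the update rules of the standard GWO formulation: a distance term scaled by one coefficient vector, and a move relative to the prey scaled by the other. The function names are hypothetical, and `nonlinear_factor` is only an illustrative quadratic decay from 2 to 0; it is an assumption and not necessarily the form used in (17).

```python
import random

def linear_factor(t, t_max):
    """Convergence factor of standard GWO: decreases linearly from 2 to 0."""
    return 2.0 * (1.0 - t / t_max)

def nonlinear_factor(t, t_max):
    """Illustrative non-linear decay from 2 to 0 (assumed form, not the paper's (17))."""
    return 2.0 * (1.0 - (t / t_max) ** 2)

def encircle(x, x_prey, a, rng):
    """Move a wolf at position x relative to the prey position x_prey."""
    new_x = []
    for xi, pi in zip(x, x_prey):
        r1, r2 = rng.random(), rng.random()   # random numbers in [0, 1)
        A = 2.0 * a * r1 - a                  # coefficient in [-a, a)
        C = 2.0 * r2                          # coefficient in [0, 2)
        D = abs(C * pi - xi)                  # distance term
        new_x.append(pi - A * D)
    return new_x

rng = random.Random(0)
for t in (0, 100, 200):
    a = nonlinear_factor(t, 200)
    print(t, a, encircle([1.0, -1.0], [0.2, 0.3], a, rng))
```

A large convergence factor lets the first coefficient exceed 1 in magnitude, which pushes wolves away from the prey (exploration); as the factor approaches 0, every wolf collapses onto the prey position (exploitation).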

3.3. Hunting

In this step, the positions of the three best solutions $\alpha$, $\beta$, and $\delta$ are considered, while the rest of the solutions are required to follow the best solutions. It is presented in (18)–(24).

| (18) |

| (19) |

| (20) |

| (21) |

| (22) |

| (23) |

| (24) |

Here, the three coefficient vectors, one for each of the leading solutions, are calculated from (16).
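A minimal sketch of the hunting step is given below, following the standard GWO rule in which each wolf computes one candidate position per leader and then moves to the average of the three candidates. The function name `hunt` and the list-based representation are assumptions for illustration.

```python
import random

def hunt(x, leaders, a, rng):
    """Update one wolf from the three leading solutions (alpha, beta, delta)."""
    new_x = []
    for d, xi in enumerate(x):
        candidates = []
        for leader in leaders:
            r1, r2 = rng.random(), rng.random()
            A = 2.0 * a * r1 - a                 # fresh coefficients per leader
            C = 2.0 * r2
            D = abs(C * leader[d] - xi)          # distance to this leader
            candidates.append(leader[d] - A * D)
        new_x.append(sum(candidates) / 3.0)      # average of the three candidates
    return new_x

alpha, beta, delta = [1.0, 2.0], [2.0, 4.0], [3.0, 6.0]
print(hunt([0.0, 0.0], [alpha, beta, delta], a=1.0, rng=random.Random(0)))
```

Averaging over three leaders rather than following only the best one reduces the risk that a single misleading solution drags the whole pack with it.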

3.4. Attacking

In this step, the hunting step is terminated based on the convergence factor presented in (17), as it decreases non-linearly over generations for better exploration and exploitation in ICGWO.

3.5. Chaotic Map

To maintain the diversity, a logistic map is used such that the algorithm avoids the local minimum values during optimization. Its mapping is presented in (25).

| (25) |

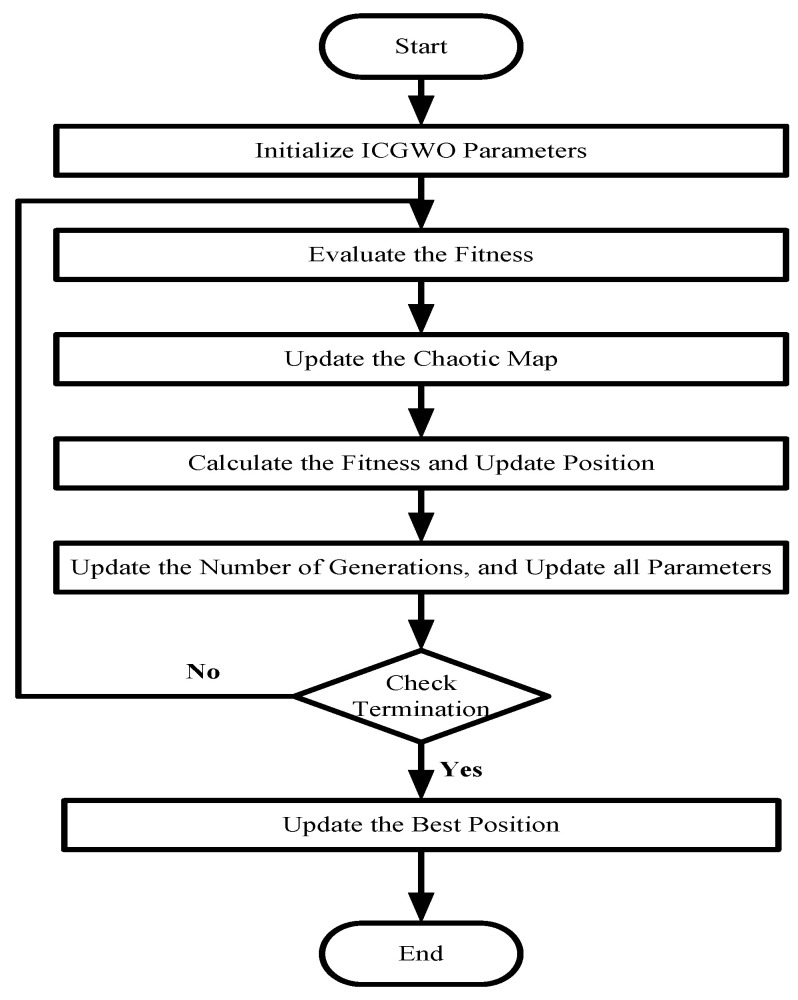

Here, the parameter of the map is chosen so that the population is in a chaotic state. The flowchart of ICGWO is shown in Figure 3.

Figure 3.

ICGWO Flowchart.
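The logistic map of Section 3.5 can be sketched as follows, assuming its usual form in which each value is the control parameter times the previous value times one minus the previous value. The control parameter value of 4 (the fully chaotic regime of the logistic map), the starting value 0.7, and the helper `chaotic_population`, which scales the chaotic sequence to the search bounds, are assumptions for illustration; the source does not state how the map is wired into the population.

```python
def logistic_sequence(x0, n, mu=4.0):
    """Iterate x <- mu * x * (1 - x) n times, starting from x0 in (0, 1)."""
    seq = []
    x = x0
    for _ in range(n):
        x = mu * x * (1.0 - x)
        seq.append(x)
    return seq

def chaotic_population(n_wolves, dim, lower, upper, x0=0.7):
    """Spread wolves over [lower, upper] using logistic-map values instead of uniform draws."""
    values = logistic_sequence(x0, n_wolves * dim)
    return [[lower + (upper - lower) * values[i * dim + d] for d in range(dim)]
            for i in range(n_wolves)]

print(logistic_sequence(0.7, 5))
print(chaotic_population(3, 2, -2.0, 2.0))
```

Starting values such as 0.25, 0.5, and 0.75 should be avoided with a control parameter of 4, since they lead to a fixed point and the sequence stops changing.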

First, the parameters of ICGWO are set. Then, the best fitness solutions are assigned to $\alpha$, $\beta$, and $\delta$. Afterward, the convergence factor and the logistic chaotic map are updated. Finally, the parameters are updated, and an optimal solution is obtained.

4. Experimental Analysis

In this section, the experimental analysis of ICGWO for the ARX model is presented. The analysis was conducted on several variations of populations, generations, and noise levels. The simulations were conducted in a MATLAB environment with a zero-mean, unit-variance input signal and a normally distributed noise signal with constant variance. The accuracy is evaluated in terms of the fitness given in (26).

| (26) |

Here, (26) compares the estimated/approximated response with the true/actual response. The model used for simulations is taken from [90] and presented in (27)–(28).

| (27) |

| (28) |
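A mean square error fitness of the kind described for (26) can be sketched as below. The sketch assumes that the estimated response is the one-step prediction obtained from past measured outputs and inputs, that the model is second order in both polynomials, and that the noise level acts as a standard deviation; the function names are hypothetical, and the true parameters are those listed in Table 2.

```python
import random

def one_step_prediction(theta, u, y, t, na=2, nb=2):
    """Predict y(t) from past outputs and inputs for a candidate parameter vector."""
    pred = 0.0
    for i in range(1, na + 1):
        if t - i >= 0:
            pred -= theta[i - 1] * y[t - i]
    for j in range(1, nb + 1):
        if t - j >= 0:
            pred += theta[na + j - 1] * u[t - j]
    return pred

def arx_fitness(theta, u, y, na=2, nb=2):
    """Mean square error between the measured and the predicted response."""
    errors = [y[t] - one_step_prediction(theta, u, y, t, na, nb) for t in range(len(y))]
    return sum(e * e for e in errors) / len(errors)

def make_data(theta, n, noise_std, seed=0):
    """Generate data from the assumed ARX convention (illustrative only)."""
    rng = random.Random(seed)
    u = [rng.gauss(0.0, 1.0) for _ in range(n)]
    y = []
    for t in range(n):
        y.append(one_step_prediction(theta, u, y, t) + rng.gauss(0.0, noise_std))
    return u, y

theta_true = [-1.53, 0.66, 0.25, 0.30]   # true parameters from Table 2
u, y = make_data(theta_true, 500, noise_std=0.05)
print(arx_fitness(theta_true, u, y))              # close to the noise power
print(arx_fitness([-1.4, 0.6, 0.2, 0.4], u, y))   # a perturbed candidate for comparison
```

Under these assumptions the fitness cannot fall much below the noise power even at the true parameters, which is one way to read the growth of the best fitness values with the noise level.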

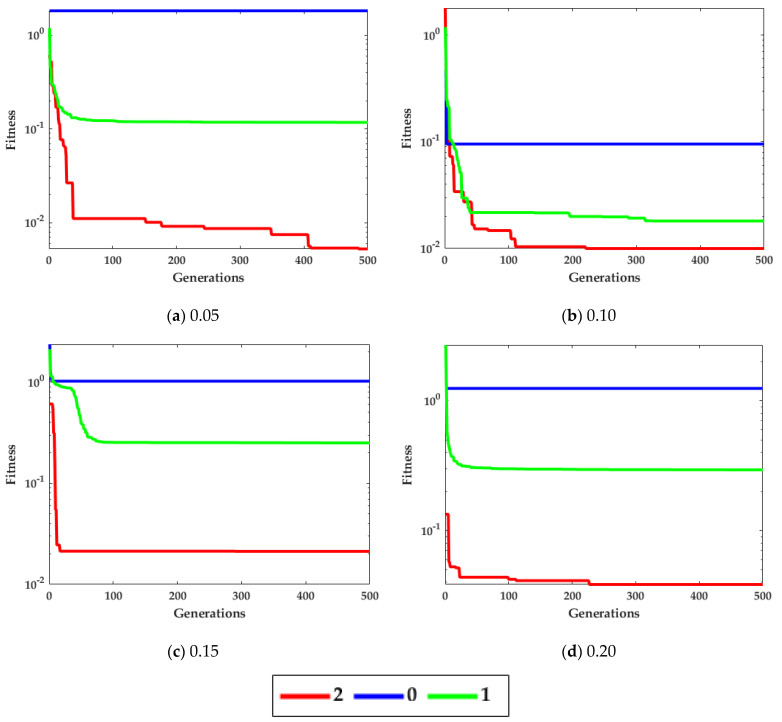

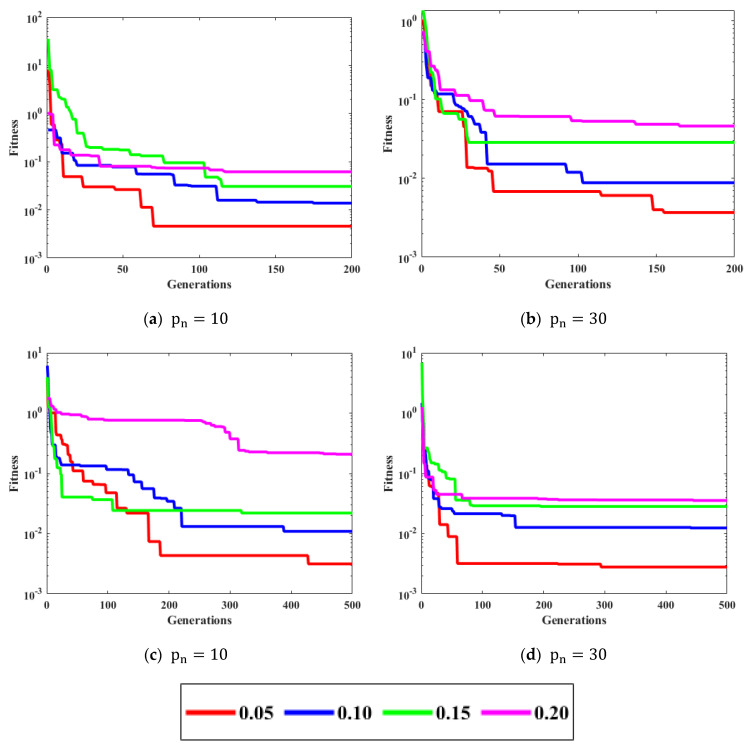

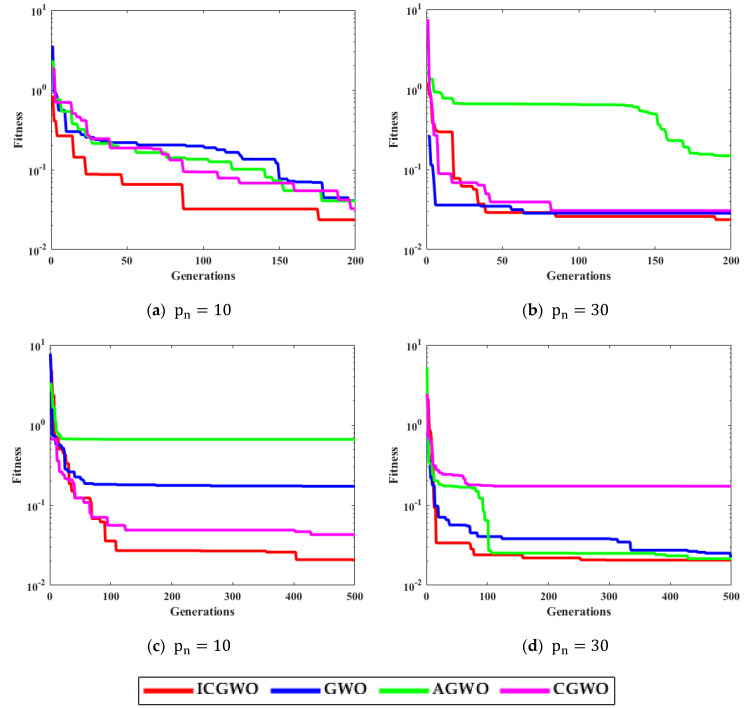

The noise is taken as white Gaussian with variances [0.05, 0.10, 0.15, 0.20]. The performance is evaluated for populations of 10 and 30 and for 200 and 500 generations. Figure 4 shows the curves for different variations of the convergence factor of ICGWO. Figure 4a–d indicates that ICGWO balances exploration and exploitation when the convergence factor decreases nonlinearly from 2 to 0, for all noise variations. Table 1 shows the difference between the variants of GWO. In AGWO, an adaptive convergence factor, which decreases nonlinearly from 2 to 0, is incorporated in GWO. In CGWO, a logistic chaotic map is incorporated in GWO to balance exploration and exploitation. In ICGWO, both the adaptive convergence factor and the chaotic map are incorporated in GWO.

Figure 4.

ICGWO curves with respect to convergence factor.

Table 1.

Parameter settings.

| Method | Parameter |
|---|---|
| GWO | Convergence factor decreases linearly from 2 to 0 |
| AGWO | Convergence factor decreases non-linearly from 2 to 0 |
| CGWO | Convergence factor decreases linearly from 2 to 0, with the chaotic map given in (25) |
| ICGWO | Convergence factor decreases non-linearly from 2 to 0, with the chaotic map given in (25) |
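The four variants in Table 1 differ only in two switches, which the following sketch makes explicit. It is a simplified illustration and not the authors' implementation: the quadratic decay used for the adaptive factor, the use of logistic-map iterates in place of uniform random numbers, the starting state 0.7, and the name `gwo_variant` are all assumptions. A simple sphere function stands in for the ARX fitness.

```python
import random

def gwo_variant(fitness, dim, lower, upper, n_wolves=10, n_gen=200,
                adaptive=False, chaotic=False, seed=0):
    """Minimize `fitness`; the flags select GWO, AGWO, CGWO, or ICGWO behavior."""
    rng = random.Random(seed)
    state = 0.7  # logistic-map state; the starting value is an assumption

    def draw():
        # Uniform random number (GWO, AGWO) or logistic-map iterate (CGWO, ICGWO).
        nonlocal state
        if not chaotic:
            return rng.random()
        state = 4.0 * state * (1.0 - state)
        if not 0.0 < state < 1.0:          # guard against numerical collapse
            state = rng.uniform(0.1, 0.9)
        return state

    wolves = [[lower + (upper - lower) * rng.random() for _ in range(dim)]
              for _ in range(n_wolves)]
    best_x, best_f = list(wolves[0]), fitness(wolves[0])
    for t in range(n_gen):
        ranked = sorted(wolves, key=fitness)
        leaders = [list(w) for w in ranked[:3]]        # alpha, beta, delta
        f_alpha = fitness(leaders[0])
        if f_alpha < best_f:
            best_x, best_f = list(leaders[0]), f_alpha
        frac = t / n_gen
        # Linear decay (GWO, CGWO) or illustrative non-linear decay (AGWO, ICGWO).
        a = 2.0 * (1.0 - frac ** 2) if adaptive else 2.0 * (1.0 - frac)
        for w in wolves:
            for d in range(dim):
                total = 0.0
                for leader in leaders:
                    A = 2.0 * a * draw() - a
                    C = 2.0 * draw()
                    total += leader[d] - A * abs(C * leader[d] - w[d])
                w[d] = min(upper, max(lower, total / 3.0))   # keep within bounds
    for w in wolves:                                   # score the final positions
        f = fitness(w)
        if f < best_f:
            best_x, best_f = list(w), f
    return best_x, best_f

def sphere(x):
    """Stand-in objective; an ARX mean square error fitness could be used instead."""
    return sum(v * v for v in x)

for name, (adaptive, chaotic) in {"GWO": (False, False), "AGWO": (True, False),
                                  "CGWO": (False, True), "ICGWO": (True, True)}.items():
    x, f = gwo_variant(sphere, 2, -2.0, 2.0, adaptive=adaptive, chaotic=chaotic)
    print(name, f)
```

Keeping the variants behind two flags makes a like-for-like comparison straightforward, since population size, generations, bounds, and seed can be held fixed while only the convergence factor and the source of randomness change.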

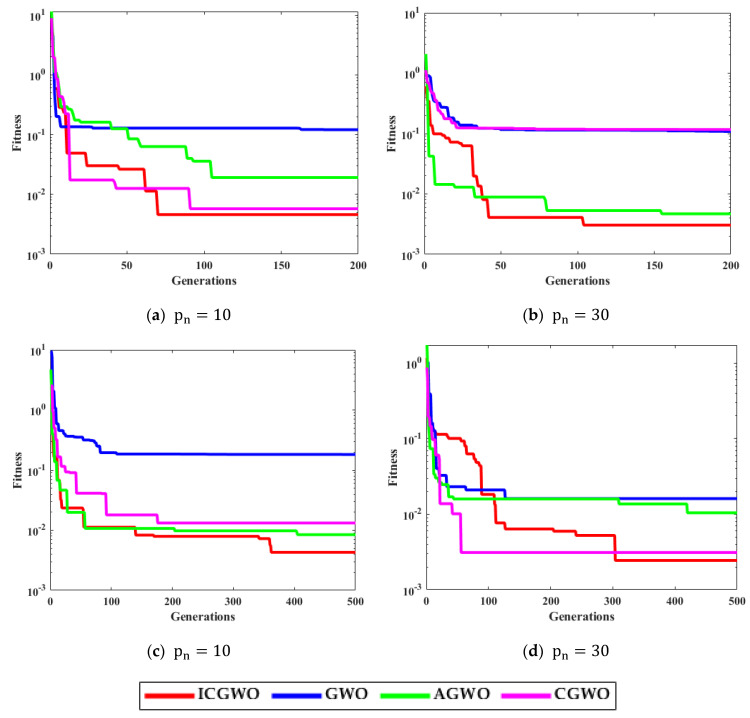

Figure 5 displays the convergence curves of ICGWO for all noise variances. It is perceived from Figure 5a–d that upon increasing the population and the number of generations, the value of fitness reduces. However, the fitness value increases for higher noise variances.

Figure 5.

ICGWO convergence curves with respect to noise variances.

Table 2, Table 3, Table 4 and Table 5 compare ICGWO with GWO, AGWO, and CGWO in terms of the best-estimated parameters and the corresponding fitness values for the [0.05, 0.10, 0.15, 0.20] noise levels. It is notable that for a low noise level, i.e., 0.05, the outcomes of ICGWO are better than those for higher noise levels. It is also notable from Table 2, Table 3, Table 4 and Table 5 that the best fitness values of ICGWO for the 0.05, 0.10, 0.15, and 0.20 noise levels are 0.00222, 0.00863, 0.01946, and 0.03440, respectively. Therefore, it is established from Table 2, Table 3, Table 4 and Table 5 that the accuracy of ICGWO reduces, i.e., its fitness value increases, with an increase in noise levels.

Table 2.

Parameter estimates with respect to generations and population at the 0.05 noise level.

| Method | Generations | Population | Parameters | | | | Best Fitness |
|---|---|---|---|---|---|---|---|
| GWO | 200 | 10 | −1.5201 | 0.6553 | 0.2973 | 0.2588 | 0.00409 |
| GWO | 200 | 30 | −1.5418 | 0.6695 | 0.2617 | 0.2880 | 0.00232 |
| GWO | 500 | 10 | −1.5435 | 0.6772 | 0.2232 | 0.3523 | 0.00235 |
| GWO | 500 | 30 | −1.5542 | 0.6810 | 0.2287 | 0.3179 | 0.00219 |
| AGWO | 200 | 10 | −1.4913 | 0.6427 | 0.2357 | 0.3969 | 0.00428 |
| AGWO | 200 | 30 | −1.5459 | 0.6733 | 0.2059 | 0.3416 | 0.00229 |
| AGWO | 500 | 10 | −1.5658 | 0.6916 | 0.2346 | 0.3067 | 0.00228 |
| AGWO | 500 | 30 | −1.5339 | 0.6619 | 0.2320 | 0.3209 | 0.00218 |
| CGWO | 200 | 10 | −1.5785 | 0.7069 | 0.2165 | 0.3121 | 0.00293 |
| CGWO | 200 | 30 | −1.5304 | 0.6617 | 0.2482 | 0.3239 | 0.00223 |
| CGWO | 500 | 10 | −1.5496 | 0.6705 | 0.2117 | 0.2990 | 0.00285 |
| CGWO | 500 | 30 | −1.5517 | 0.6775 | 0.2176 | 0.3160 | 0.00231 |
| ICGWO | 200 | 10 | −1.5363 | 0.6676 | 0.3003 | 0.2620 | 0.00294 |
| ICGWO | 200 | 30 | −1.5345 | 0.6664 | 0.2631 | 0.3037 | 0.00226 |
| ICGWO | 500 | 10 | −1.5363 | 0.6675 | 0.2230 | 0.3278 | 0.00241 |
| ICGWO | 500 | 30 | −1.5495 | 0.6806 | 0.2308 | 0.3242 | 0.00222 |
| True Parameters | | | −1.5300 | 0.6600 | 0.2500 | 0.3000 | 0 |

Table 3.

Parameter estimates with respect to generations and population at the 0.10 noise level.

| Method | Generations | Population | Parameters | | | | Best Fitness |
|---|---|---|---|---|---|---|---|
| GWO | 200 | 10 | −1.5547 | 0.6779 | 0.2139 | 0.3290 | 0.00886 |
| GWO | 200 | 30 | −1.559 | 0.6862 | 0.2218 | 0.3388 | 0.00879 |
| GWO | 500 | 10 | −1.5729 | 0.6983 | 0.2152 | 0.3265 | 0.00884 |
| GWO | 500 | 30 | −1.5298 | 0.6582 | 0.2119 | 0.3581 | 0.00875 |
| AGWO | 200 | 10 | −1.5088 | 0.6453 | 0.2003 | 0.3967 | 0.00899 |
| AGWO | 200 | 30 | −1.5602 | 0.6902 | 0.2292 | 0.3390 | 0.00867 |
| AGWO | 500 | 10 | −1.5698 | 0.6989 | 0.2378 | 0.3221 | 0.00881 |
| AGWO | 500 | 30 | −1.5425 | 0.6681 | 0.2086 | 0.3481 | 0.00878 |
| CGWO | 200 | 10 | −1.5740 | 0.7116 | 0.2677 | 0.3247 | 0.00975 |
| CGWO | 200 | 30 | −1.5661 | 0.6938 | 0.2319 | 0.3349 | 0.00892 |
| CGWO | 500 | 10 | −1.5030 | 0.6384 | 0.2214 | 0.3758 | 0.00892 |
| CGWO | 500 | 30 | −1.5507 | 0.6806 | 0.2144 | 0.3549 | 0.00861 |
| ICGWO | 200 | 10 | −1.5907 | 0.7213 | 0.2108 | 0.3339 | 0.00975 |
| ICGWO | 200 | 30 | −1.5511 | 0.6785 | 0.2473 | 0.3088 | 0.00881 |
| ICGWO | 500 | 10 | −1.5486 | 0.6831 | 0.2512 | 0.3257 | 0.00889 |
| ICGWO | 500 | 30 | −1.5317 | 0.6641 | 0.2405 | 0.3409 | 0.00863 |
| True Parameters | | | −1.5300 | 0.6600 | 0.2500 | 0.3000 | 0 |

Table 4.

Parameter estimates with respect to generations and population at the 0.15 noise level.

| Method | Generations | Population | Parameters | | | | Best Fitness |
|---|---|---|---|---|---|---|---|
| GWO | 200 | 10 | −1.5511 | 0.6743 | 0.2067 | 0.3709 | 0.02077 |
| GWO | 200 | 30 | −1.5597 | 0.6875 | 0.2056 | 0.3773 | 0.01984 |
| GWO | 500 | 10 | −1.5266 | 0.6530 | 0.2330 | 0.3289 | 0.02018 |
| GWO | 500 | 30 | −1.5427 | 0.6776 | 0.2099 | 0.3823 | 0.01940 |
| AGWO | 200 | 10 | −1.5655 | 0.6914 | 0.1608 | 0.3953 | 0.01997 |
| AGWO | 200 | 30 | −1.5164 | 0.6568 | 0.2318 | 0.3900 | 0.01959 |
| AGWO | 500 | 10 | −1.5524 | 0.6787 | 0.2076 | 0.3679 | 0.01975 |
| AGWO | 500 | 30 | −1.5420 | 0.6715 | 0.2111 | 0.3621 | 0.01943 |
| CGWO | 200 | 10 | −1.5034 | 0.6401 | 0.2316 | 0.3693 | 0.01994 |
| CGWO | 200 | 30 | −1.5457 | 0.6790 | 0.2354 | 0.3592 | 0.01944 |
| CGWO | 500 | 10 | −1.5394 | 0.6723 | 0.1991 | 0.3866 | 0.01938 |
| CGWO | 500 | 30 | −1.5129 | 0.6483 | 0.2231 | 0.3865 | 0.01951 |
| ICGWO | 200 | 10 | −1.5041 | 0.6408 | 0.1903 | 0.4027 | 0.02031 |
| ICGWO | 200 | 30 | −1.5163 | 0.6495 | 0.2248 | 0.3755 | 0.01953 |
| ICGWO | 500 | 10 | −1.5496 | 0.6803 | 0.2131 | 0.3565 | 0.01958 |
| ICGWO | 500 | 30 | −1.5382 | 0.6739 | 0.1925 | 0.4079 | 0.01946 |
| True Parameters | | | −1.5300 | 0.6600 | 0.2500 | 0.3000 | 0 |

Table 5.

Parameter estimates with respect to generations and population at the 0.20 noise level.

| Method | Generations | Population | Parameters | | | | Best Fitness |
|---|---|---|---|---|---|---|---|
| GWO | 200 | 10 | −1.5064 | 0.6478 | 0.2525 | 0.3872 | 0.03496 |
| GWO | 200 | 30 | −1.5504 | 0.6782 | 0.1934 | 0.3844 | 0.03470 |
| GWO | 500 | 10 | −1.5498 | 0.6788 | 0.1838 | 0.3972 | 0.03464 |
| GWO | 500 | 30 | −1.5342 | 0.6703 | 0.2040 | 0.4188 | 0.03446 |
| AGWO | 200 | 10 | −1.5016 | 0.6498 | 0.2500 | 0.4098 | 0.03550 |
| AGWO | 200 | 30 | −1.5572 | 0.6877 | 0.2002 | 0.3906 | 0.03461 |
| AGWO | 500 | 10 | −1.5637 | 0.6967 | 0.2228 | 0.3752 | 0.03483 |
| AGWO | 500 | 30 | −1.5370 | 0.6689 | 0.1895 | 0.4069 | 0.03444 |
| CGWO | 200 | 10 | −1.4745 | 0.6126 | 0.1896 | 0.4320 | 0.03577 |
| CGWO | 200 | 30 | −1.5228 | 0.6583 | 0.1983 | 0.4179 | 0.03437 |
| CGWO | 500 | 10 | −1.5052 | 0.6419 | 0.2009 | 0.4272 | 0.03460 |
| CGWO | 500 | 30 | −1.5305 | 0.6617 | 0.1899 | 0.4073 | 0.03449 |
| ICGWO | 200 | 10 | −1.5753 | 0.7045 | 0.1642 | 0.4106 | 0.03520 |
| ICGWO | 200 | 30 | −1.5329 | 0.6646 | 0.1877 | 0.4102 | 0.03445 |
| ICGWO | 500 | 10 | −1.5610 | 0.6916 | 0.1865 | 0.4064 | 0.03470 |
| ICGWO | 500 | 30 | −1.5261 | 0.6627 | 0.2060 | 0.4179 | 0.03440 |
| True Parameters | | | −1.5300 | 0.6600 | 0.2500 | 0.3000 | 0 |

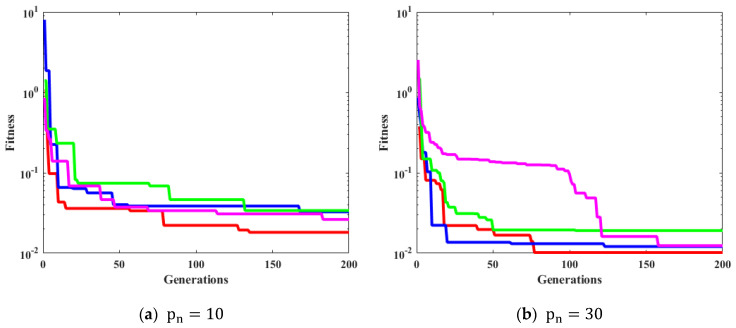

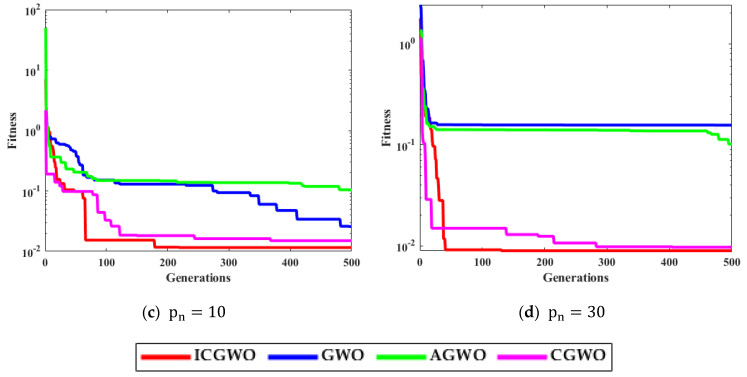

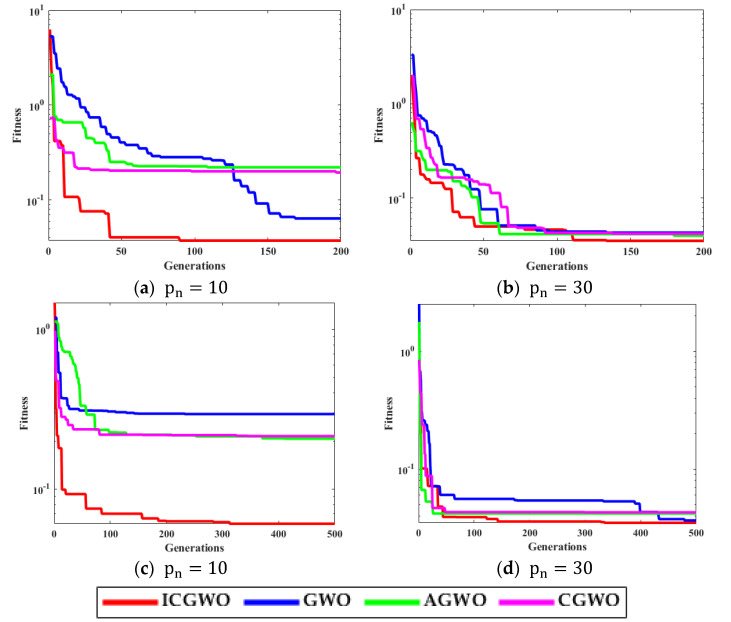

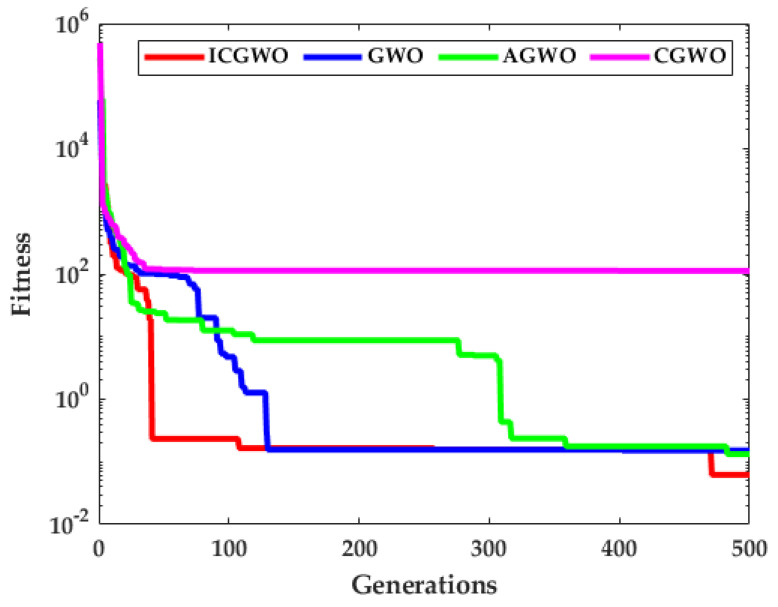

Figure 6, Figure 7, Figure 8 and Figure 9 compare the convergence of ICGWO with that of GWO, AGWO, and CGWO for all levels of noise. Figure 6 shows the convergence for the 0.05 noise level. Figure 7 shows the convergence for the 0.10 noise level. Similarly, Figure 8 and Figure 9 show the convergence for the 0.15 and 0.20 noise levels, respectively. It is notable from Figure 6, Figure 7, Figure 8 and Figure 9 that the fitness value increases as the noise level rises. For the noise levels shown in Figure 6, Figure 7, Figure 8 and Figure 9, it is evident that the convergence of ICGWO is consistent and that it achieves a lower fitness value than GWO, AGWO, and CGWO for all scenarios.

Figure 6.

Convergence curves with respect to 0.05 noise.

Figure 7.

Convergence curves with respect to 0.10 noise.

Figure 8.

Convergence curves with respect to 0.15 noise.

Figure 9.

Convergence curves with respect to 0.20 noise.

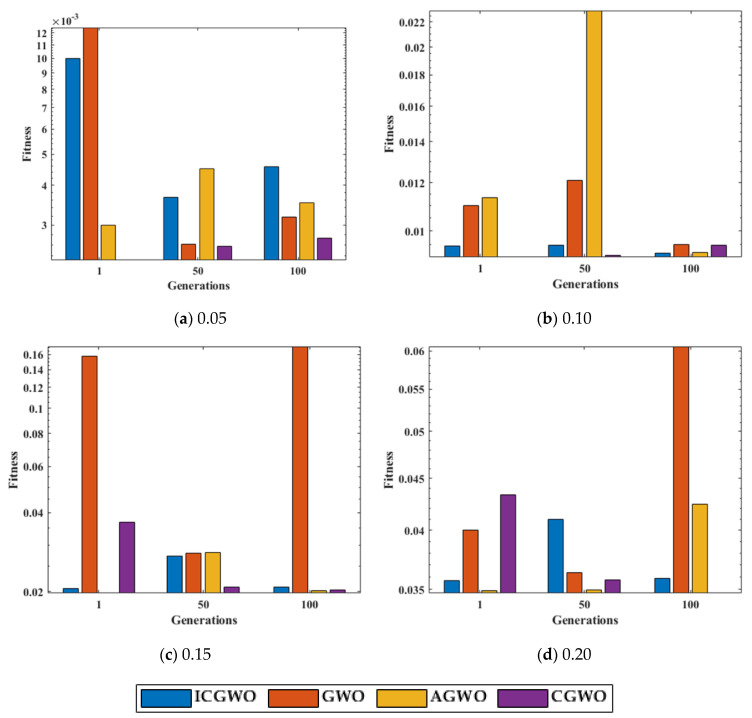

A statistical study of ICGWO against GWO, AGWO, and CGWO over 100 independent runs, at a fixed setting of population and generations, is displayed in Figure 10. Figure 10a shows the performance for the 0.05 noise level. Similarly, Figure 10b–d shows the performance for noise levels 0.10, 0.15, and 0.20, respectively. It is perceived from Figure 10 that the fitness value of ICGWO is lower than that of GWO, AGWO, and CGWO on run#1, run#50, and run#100 for all levels of noise.

Figure 10.

Run# fitness value comparison of ICGWO with GWO, AGWO, and CGWO at a fixed setting of population and generations.

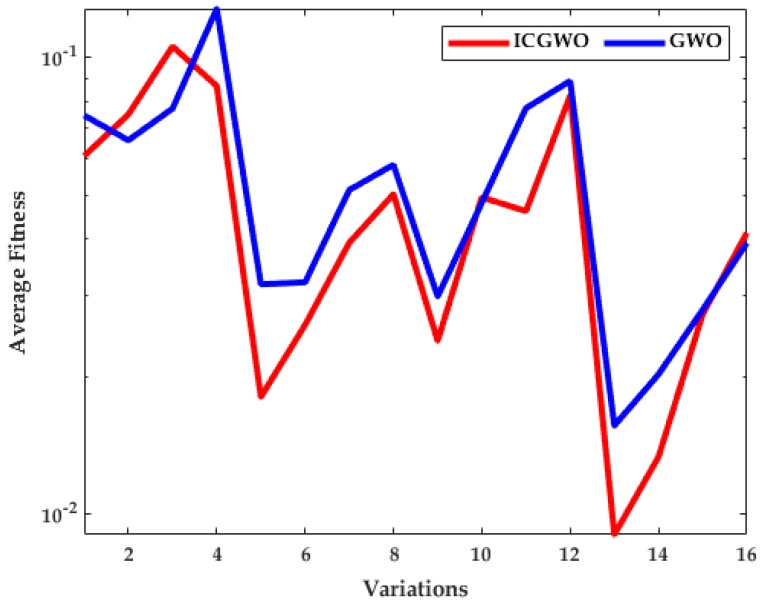

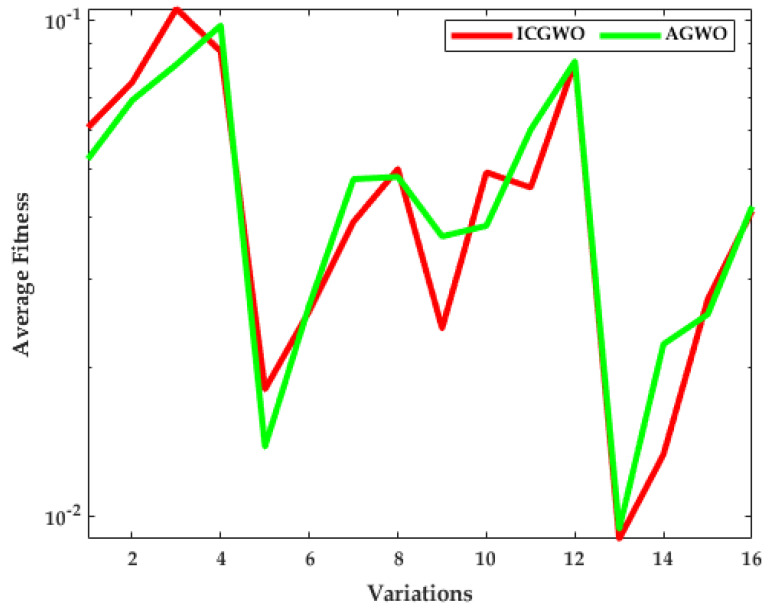

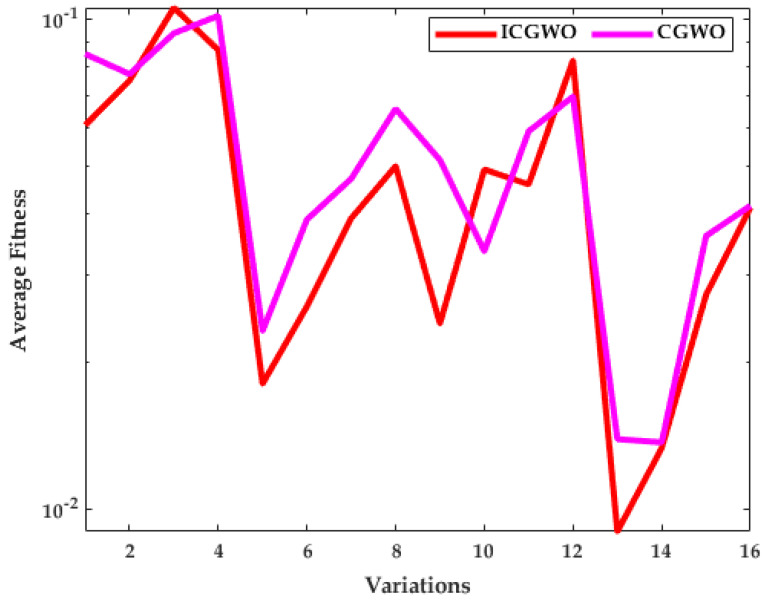

The investigation of ICGWO is further explored in terms of average fitness values for all scenarios of population and generations, as revealed in Figure 11, Figure 12 and Figure 13. Figure 11 shows the values of average fitness for noise variances [0.05, 0.10, 0.15, 0.20], populations of 10 and 30, and 200 and 500 generations, for ICGWO and GWO. Similarly, Figure 12 and Figure 13 show these variations for ICGWO vs. AGWO and ICGWO vs. CGWO, respectively. In Figure 11, it is established that ICGWO achieves lower fitness values than GWO for all sixteen variations. In Figure 12, ICGWO still performs better than AGWO. Similarly, ICGWO outperforms CGWO in all variations in Figure 13. Therefore, it is established from Figure 11, Figure 12 and Figure 13 that ICGWO accomplishes a better performance than GWO, AGWO, and CGWO for all scenarios.

Figure 11.

ICGWO vs. GWO statistical curve with respect to average fitness.

Figure 12.

ICGWO vs. AGWO statistical curve with respect to average fitness.

Figure 13.

ICGWO vs. CGWO statistical curve with respect to average fitness.

Application to LD-Didactic Temperature Process Plant

To validate the performance of the proposed methodology, an ARX-based LD-Didactic temperature process plant model is considered. The authors of [91] described that the LD-Didactic temperature process consists of a pre-processing unit, a model selection unit, model estimation, and model validation. During pre-processing, noise is filtered from the temperature data. In model selection, ARX is considered due to its low complexity [91]. The true parameters of the ARX structure reflecting the actual dynamics of the temperature process system are taken from real-time experimentation [91]. These parameters are presented in (29). The model is estimated by using GWO and its variants, i.e., AGWO, CGWO, and ICGWO.

| (29) |

The convergence curves at a noise level of 0.05, for a fixed setting of population and generations, are displayed in Figure 14.

Figure 14.

Convergence curves for LD-Didactic temperature process plant model.

The results presented in Figure 14 further validate the inferences drawn from the detailed analyses of the numerical example: the proposed ICGWO provides a better performance than the conventional GWO, AGWO, and simple CGWO counterparts for parameter estimation of the temperature process plant model.

5. Conclusions

In this article, the strength of GWO and its variants CGWO, AGWO, and ICGWO is exploited for parameter estimation of the ARX structure required to model various engineering and applied sciences processes. The decision parameters of the ARX model were optimized over various populations, generations, and noise levels. The logistic chaotic map along with the improved convergence factor were fused in GWO. The ICGWO is robust, accurate, and convergent for the parameter estimation of the ARX system. The convergence plots and the statistical analysis over a large number of independent trials confirmed that ICGWO performs better in terms of convergence and robustness than the conventional counterparts, namely the standard GWO, an improved GWO, and a simple chaotic GWO. The accurate estimation of ARX parameters reflecting the LD-Didactic temperature process plant model further validates the better performance of ICGWO. Future studies can extend the application of the proposed scheme to solve problems such as PV solar panels, constraint-preserving mixers, and real-time estimation of harmonics in nonlinear loads [92,93,94,95,96].

Acknowledgments

The authors would like to thank the support of National Yunlin University of Science and Technology through project 112T25.

Author Contributions

Methodology, K.M.; visualization, K.M.C. and Z.A.K.; formal analysis, Z.A.K., N.I.C. and M.A.Z.R.; writing (original draft preparation), K.M.; writing (review and editing), N.I.C., Z.A.K. and M.A.Z.R.; project administration, K.M.C., Z.A.K. and N.I.C. All authors have read and agreed to the published version of the manuscript.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research received no external funding.


References

- 1.Young P.C. Nonlinear Nonstationary Signal Processing. The Press Syndicate of the University of Cambridge; Cambridge, UK: 2000. Stochastic, Dynamic Modelling and Signal Processing: Time Variable and State Dependent Parameter Estimation; pp. 4–114. [Google Scholar]

- 2.Mehmood K., Chaudhary N.I., Khan Z.A., Cheema K.M., Raja M.A.Z., Milyani A.H., Azhari A.A. Nonlinear Hammerstein System Identification: A Novel Application of Marine Predator Optimization Using the Key Term Separation Technique. Mathematics. 2022;10:4217. doi: 10.3390/math10224217. [DOI] [Google Scholar]

- 3.Zhao W., Zhang H., Zhang Z., Zhang K., Wang L. Parameters Tuning of Fractional-Order Proportional Integral Derivative in Water Turbine Governing System Using an Effective SDO with Enhanced Fitness-Distance Balance and Adaptive Local Search. Water. 2022;14:3035. doi: 10.3390/w14193035. [DOI] [Google Scholar]

- 4.Shadab S., Revati G., Wagh S.R., Singh N.M. Finite-time parameter estimation for an online monitoring of transformer: A system identification perspective. Int. J. Electr. Power Energy Syst. 2023;145:108639. doi: 10.1016/j.ijepes.2022.108639. [DOI] [Google Scholar]

- 5.Ćalasan M., Aleem S.H.A., Hasanien H.M., Alaas Z.M., Ali Z.M. An innovative approach for mathematical modeling and parameter estimation of PEM fuel cells based on iterative Lambert W function. Energy. 2023;264:126165. doi: 10.1016/j.energy.2022.126165. [DOI] [Google Scholar]

- 6.Diao Y., Jelescu I. Parameter estimation for WMTI-Watson model of white matter using encoder–decoder recurrent neural network. Magn. Reson. Med. 2023;89:1193–1206. doi: 10.1002/mrm.29495. [DOI] [PubMed] [Google Scholar]

- 7.Jumamyradov M., Craig B.M., Munkin M., Greene W. Comparing the Conditional Logit Estimates and True Parameters under Preference Heterogeneity: A Simulated Discrete Choice Experiment. Econometrics. 2023;11:4. doi: 10.3390/econometrics11010004. [DOI] [Google Scholar]

- 8.Mehmood A., Raja M.A.Z. Novel design of weighted differential evolution for parameter estimation of Hammerstein-Wiener systems. J. Adv. Res. 2023;43:123–136. doi: 10.1016/j.jare.2022.02.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Gao J., Cui Y., Ji X., Wang X., Hu G., Liu F. A Parametric Identification Method of Human Gait Differences and its Application in Rehabilitation. Appl. Sci. 2019;9:4581. doi: 10.3390/app9214581.

- 10. Waleed U., Haseeb A., Ashraf M.M., Siddiq F., Rafiq M., Shafique M. A Multiobjective Artificial-Hummingbird-Algorithm-Based Framework for Optimal Reactive Power Dispatch Considering Renewable Energy Sources. Energies. 2022;15:9250. doi: 10.3390/en15239250.

- 11. Atanasov A.Z., Georgiev S.G., Vulkov L.G. Parameter Estimation Analysis in a Model of Honey Production. Axioms. 2023;12:214. doi: 10.3390/axioms12020214.

- 12. Liao Y., Zhao W., Wang L. Improved Manta Ray Foraging Optimization for Parameters Identification of Magnetorheological Dampers. Mathematics. 2021;9:2230. doi: 10.3390/math9182230.

- 13. Malik N.A., Chang C.-L., Chaudhary N.I., Khan Z.A., Raja M.A.Z., Kiani A.K., Milyani A.H., Azhari A.A. Parameter estimation of harmonics arising in electrical instruments of smart grids using cuckoo search heuristics. Front. Energy Res. 2022;10:1059132. doi: 10.3389/fenrg.2022.1059132.

- 14. Sun J., Garibaldi J.M., Hodgman C. Parameter estimation using metaheuristics in systems biology: A comprehensive review. IEEE/ACM Trans. Comput. Biol. Bioinform. 2011;9:185–202. doi: 10.1109/TCBB.2011.63.

- 15. Bardet J.-M., Lang G., Oppenheim G., Philippe A., Stoev S., Taqqu M.S. Semi-parametric estimation of the long-range dependence parameter: A survey. Theory Appl. Long-Range Depend. 2003:557–577.

- 16. Raja M.A.Z., Chaudhary N.I. Two-stage fractional least mean square identification algorithm for parameter estimation of CARMA systems. Signal Process. 2015;107:327–339. doi: 10.1016/j.sigpro.2014.06.015.

- 17. Jabri M., Chouiref H., Jerbi H., Braiek N.B. Fuzzy Logic Parameter Estimation of an Electrical System; Proceedings of the 2008 5th International Multi-Conference on Systems, Signals and Devices; Amman, Jordan. 20–22 July 2008.

- 18. Venkateswari R., Rajasekar N. Review on parameter estimation techniques of solar photovoltaic systems. Int. Trans. Electr. Energy Syst. 2021;31:e13113. doi: 10.1002/2050-7038.13113.

- 19. Chen Y., Zhou Y. Machine learning based decision making for time varying systems: Parameter estimation and performance optimization. Knowl.-Based Syst. 2020;190:105479. doi: 10.1016/j.knosys.2020.105479.

- 20. Kennedy J., Eberhart R. Particle Swarm Optimization; Proceedings of the ICNN’95 - International Conference on Neural Networks; Perth, WA, Australia. 27 November–1 December 1995.

- 21. Wang L., Cao Q., Zhang Z., Mirjalili S., Zhao W. Artificial rabbits optimization: A new bio-inspired meta-heuristic algorithm for solving engineering optimization problems. Eng. Appl. Artif. Intell. 2022;114:105082. doi: 10.1016/j.engappai.2022.105082.

- 22. Mirjalili S., Mirjalili S.M., Lewis A. Grey wolf optimizer. Adv. Eng. Softw. 2014;69:46–61. doi: 10.1016/j.advengsoft.2013.12.007.

- 23. Zhao W., Zhang Z., Wang L. Manta ray foraging optimization: An effective bio-inspired optimizer for engineering applications. Eng. Appl. Artif. Intell. 2020;87:103300. doi: 10.1016/j.engappai.2019.103300.

- 24. Zhao W., Wang L., Mirjalili S. Artificial hummingbird algorithm: A new bio-inspired optimizer with its engineering applications. Comput. Methods Appl. Mech. Eng. 2022;388:114194. doi: 10.1016/j.cma.2021.114194.

- 25. Rao R.V., Savsani V.J., Vakharia D. Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput. Aided Des. 2011;43:303–315. doi: 10.1016/j.cad.2010.12.015.

- 26. Pira E. City councils evolution: A socio-inspired metaheuristic optimization algorithm. J. Ambient Intell. Humaniz. Comput. 2022:1–50. doi: 10.1007/s12652-022-03765-5.

- 27. Faridmehr I., Nehdi M.L., Davoudkhani I.F., Poolad A. Mountaineering Team-Based Optimization: A Novel Human-Based Metaheuristic Algorithm. Mathematics. 2023;11:1273. doi: 10.3390/math11051273.

- 28. Askari Q., Younas I., Saeed M. Political Optimizer: A novel socio-inspired meta-heuristic for global optimization. Knowl.-Based Syst. 2020;195:105709. doi: 10.1016/j.knosys.2020.105709.

- 29. Borji A. MICAI 2007: Advances in Artificial Intelligence, Proceedings of the 6th Mexican International Conference on Artificial Intelligence, Aguascalientes, Mexico, 4–10 November 2007. Springer; Berlin/Heidelberg, Germany: 2007. A New Global Optimization Algorithm Inspired by Parliamentary Political Competitions.

- 30. Price K.V. Handbook of Optimization. Springer; Berlin/Heidelberg, Germany: 2013. Differential Evolution; pp. 187–214.

- 31. Chen Z., Francis A., Li S., Liao B., Xiao D., Ha T.T., Li J., Ding L., Cao X. Egret Swarm Optimization Algorithm: An Evolutionary Computation Approach for Model Free Optimization. Biomimetics. 2022;7:144. doi: 10.3390/biomimetics7040144.

- 32. Holland J.H. Genetic Algorithms. Sci. Am. 1992;267:66–73. doi: 10.1038/scientificamerican0792-66.

- 33. Sulaiman M.H., Mustaffa Z., Saari M.M., Daniyal H., Mirjalili S. Evolutionary mating algorithm. Neural Comput. Appl. 2023;35:487–516. doi: 10.1007/s00521-022-07761-w.

- 34. Erol O.K., Eksin I. A new optimization method: Big bang–big crunch. Adv. Eng. Softw. 2006;37:106–111. doi: 10.1016/j.advengsoft.2005.04.005.

- 35. Qais M.H., Hasanien H.M., Turky R.A., Alghuwainem S., Tostado-Véliz M., Jurado F. Circle Search Algorithm: A Geometry-Based Metaheuristic Optimization Algorithm. Mathematics. 2022;10:1626. doi: 10.3390/math10101626.

- 36. Gholizadeh S., Danesh M., Gheyratmand C. A new Newton metaheuristic algorithm for discrete performance-based design optimization of steel moment frames. Comput. Struct. 2020;234:106250. doi: 10.1016/j.compstruc.2020.106250.

- 37. Mirrashid M., Naderpour H. Transit search: An optimization algorithm based on exoplanet exploration. Results Control Optim. 2022;7:100127. doi: 10.1016/j.rico.2022.100127.

- 38. Eskandar H., Sadollah A., Bahreininejad A., Hamdi M. Water cycle algorithm–A novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput. Struct. 2012;110:151–166. doi: 10.1016/j.compstruc.2012.07.010.

- 39. Shayanfar H., Gharehchopogh F.S. Farmland fertility: A new metaheuristic algorithm for solving continuous optimization problems. Appl. Soft Comput. 2018;71:728–746. doi: 10.1016/j.asoc.2018.07.033.

- 40. Yuan Z., Wang W., Wang H., Razmjooy N. A new technique for optimal estimation of the circuit-based PEMFCs using developed Sunflower Optimization Algorithm. Energy Rep. 2020;6:662–671. doi: 10.1016/j.egyr.2020.03.010.

- 41. Bayraktar Z., Komurcu M., Werner D.H. Wind Driven Optimization (WDO): A Novel Nature-Inspired Optimization Algorithm and Its Application to Electromagnetics; Proceedings of the IEEE Antennas and Propagation Society International Symposium; Toronto, ON, Canada. 11–17 July 2010.

- 42. Yang D., Li G., Cheng G. On the efficiency of chaos optimization algorithms for global optimization. Chaos Solitons Fractals. 2007;34:1366–1375. doi: 10.1016/j.chaos.2006.04.057.

- 43. Liu J., Wang Y., Wang K., Liu Z. An Irreversible and Revocable Template Generation Scheme Based on Chaotic System. Entropy. 2023;25:378. doi: 10.3390/e25020378.

- 44. Dridi F., El Assad S., El Hadj Youssef W., Machhout M. Design, Hardware Implementation on FPGA and Performance Analysis of Three Chaos-Based Stream Ciphers. Fractal Fract. 2023;7:197. doi: 10.3390/fractalfract7020197.

- 45. Andreyev Y. Analytical Model of an Energy Detector for Ultra-Wideband Chaotic Communications. Electronics. 2023;12:954. doi: 10.3390/electronics12040954.

- 46. Bian H., Zhang X., Li P., Jia Z., Ma L., Xu B., Shore K.A., Qin Y., Wang Y. Sub-40 GHz Broadband Polarization Chaos Generation Using Mutually Coupled Free-Running VCSELs. Photonics. 2023;10:219. doi: 10.3390/photonics10020219.

- 47. Wang L., Zhang L., Zhao W., Liu X. Parameter Identification of a Governing System in a Pumped Storage Unit Based on an Improved Artificial Hummingbird Algorithm. Energies. 2022;15:6966. doi: 10.3390/en15196966.

- 48. Jirjees S.W., Alkalid F.F., Shareef W.F. Image Encryption Using Dynamic Image as a Key Based on Multilayers of Chaotic Permutation. Symmetry. 2023;15:409. doi: 10.3390/sym15020409.

- 49. Babajans R., Cirjulina D., Capligins F., Kolosovs D., Grizans J., Litvinenko A. Performance Analysis of Vilnius Chaos Oscillator-Based Digital Data Transmission Systems for IoT. Electronics. 2023;12:709. doi: 10.3390/electronics12030709.

- 50. Song S., Jia H., Ma J. A Chaotic Electromagnetic Field Optimization Algorithm Based on Fuzzy Entropy for Multilevel Thresholding Color Image Segmentation. Entropy. 2019;21:398. doi: 10.3390/e21040398.

- 51. Firdous A., ur Rehman A., Saad Missen M.M. A highly efficient color image encryption based on linear transformation using chaos theory and SHA-2. Multimed. Tools Appl. 2019;78:24809–24835. doi: 10.1007/s11042-019-7623-3.

- 52. Saremi S., Mirjalili S., Lewis A. Biogeography-based optimisation with chaos. Neural Comput. Appl. 2014;25:1077–1097. doi: 10.1007/s00521-014-1597-x.

- 53. Yang J., Liu Z., Zhang X., Hu G. Elite Chaotic Manta Ray Algorithm Integrated with Chaotic Initialization and Opposition-Based Learning. Mathematics. 2022;10:2960. doi: 10.3390/math10162960.

- 54. Hassan M.H., Elsayed S.K., Kamel S., Rahmann C., Taha I.B.M. Developing chaotic Bonobo optimizer for optimal power flow analysis considering stochastic renewable energy resources. Int. J. Energy Res. 2022;46:11291–11325. doi: 10.1002/er.7928.

- 55. Azeem M., Malik T.N., Muqeet H.A., Hussain M.M., Ali A., Khan B., Rehman A.U. Combined Economic Emission Dispatch in Presence of Renewable Energy Resources Using CISSA in a Smart Grid Environment. Electronics. 2023;12:715. doi: 10.3390/electronics12030715.

- 56. Mitić M., Vuković N., Petrović M., Miljković Z. Chaotic fruit fly optimization algorithm. Knowl.-Based Syst. 2015;89:446–458. doi: 10.1016/j.knosys.2015.08.010.

- 57. Saremi S., Mirjalili S.M., Mirjalili S. Chaotic krill herd optimization algorithm. Procedia Technol. 2014;12:180–185. doi: 10.1016/j.protcy.2013.12.473.

- 58. Ahmadi M., Mojallali H. Chaotic invasive weed optimization algorithm with application to parameter estimation of chaotic systems. Chaos Solitons Fractals. 2012;45:1108–1120. doi: 10.1016/j.chaos.2012.05.010.

- 59. Turgut M.S., Turgut O.E., Abualigah L. Chaotic quasi-oppositional arithmetic optimization algorithm for thermo-economic design of a shell and tube condenser running with different refrigerant mixture pairs. Neural Comput. Appl. 2022;34:8103–8135. doi: 10.1007/s00521-022-06899-x.

- 60. Elnaghi B.E., Abelwhab M.N., Ismaiel A.M., Mohammed R.H. Solar Hydrogen Variable Speed Control of Induction Motor Based on Chaotic Billiards Optimization Technique. Energies. 2023;16:1110. doi: 10.3390/en16031110.

- 61. Faris H., Aljarah I., Al-Betar M.A., Mirjalili S. Grey wolf optimizer: A review of recent variants and applications. Neural Comput. Appl. 2018;30:413–435. doi: 10.1007/s00521-017-3272-5.

- 62. Hu G., Qiao Y., Qin X., Wei G. Approximate Multi-Degree Reduction of SG-Bézier Curves Using the Grey Wolf Optimizer Algorithm. Symmetry. 2019;11:1242. doi: 10.3390/sym11101242.

- 63. Zhang M., Wang J., Xiong X., Chen Z., Gong Y., Gao S., Zhang W. The Strain Distribution Reconstructions Using GWO Algorithm and Verification by FBG Experimental Data. Appl. Sci. 2023;13:1259. doi: 10.3390/app13031259.

- 64. Alahmer H., Alahmer A., Alkhazaleh R., Alrbai M., Alamayreh M.I. Applied Intelligent Grey Wolf Optimizer (IGWO) to Improve the Performance of CI Engine Running on Emulsion Diesel Fuel Blends. Fuels. 2023;4:35–57. doi: 10.3390/fuels4010004.

- 65. Hossain M.A., Ahmed A., Tito S.R., Ahshan R., Sakib T.H., Nengroo S.H. Multi-Objective Hybrid Optimization for Optimal Sizing of a Hybrid Renewable Power System for Home Applications. Energies. 2023;16:96. doi: 10.3390/en16010096.

- 66. Ou Y., Yin P., Mo L. An Improved Grey Wolf Optimizer and Its Application in Robot Path Planning. Biomimetics. 2023;8:84. doi: 10.3390/biomimetics8010084.

- 67. ZainEldin H., Gamel S.A., El-Kenawy E.-S.M., Alharbi A.H., Khafaga D.S., Ibrahim A., Talaat F.M. Brain Tumor Detection and Classification Using Deep Learning and Sine-Cosine Fitness Grey Wolf Optimization. Bioengineering. 2023;10:18. doi: 10.3390/bioengineering10010018.

- 68. Rami Reddy M., Ravi Chandra M.L., Venkatramana P., Dilli R. Energy-Efficient Cluster Head Selection in Wireless Sensor Networks Using an Improved Grey Wolf Optimization Algorithm. Computers. 2023;12:35. doi: 10.3390/computers12020035.

- 69. Abdullah M., Malik T.N., Ashraf M.M., Shah M.A., Iqbal Q., Sabir M. Optimal Evaluation of Power System Harmonics in Smart Grid Based on Grey Wolf Optimizer; Proceedings of the 2020 3rd International Conference on Computing, Mathematics and Engineering Technologies (iCoMET); Sukkur, Pakistan. 29–30 January 2020; pp. 1–6.

- 70. Babu S.B., Suneetha A., Babu G.C., Kumar Y.J.N., Karuna G. Medical disease prediction using grey wolf optimization and auto encoder based recurrent neural network. Period. Eng. Nat. Sci. 2018;6:229–240. doi: 10.21533/pen.v6i1.286.

- 71. Ayub S., Ayob S.M., Tan C.W., Arif S.M., Taimoor M., Aziz L., Bukar A.L., Al-Tashi Q., Ayop R. Multi-Criteria Energy Management with Preference Induced Load Scheduling Using Grey Wolf Optimizer. Sustainability. 2023;15:957. doi: 10.3390/su15020957.

- 72. Zhao X., Ren S., Quan H., Gao Q. Routing Protocol for Heterogeneous Wireless Sensor Networks Based on a Modified Grey Wolf Optimizer. Sensors. 2020;20:820. doi: 10.3390/s20030820.

- 73. Kohli M., Arora S. Chaotic grey wolf optimization algorithm for constrained optimization problems. J. Comput. Des. Eng. 2018;5:458–472. doi: 10.1016/j.jcde.2017.02.005.

- 74. Gao Z.-M., Zhao J. An improved grey wolf optimization algorithm with variable weights. Comput. Intell. Neurosci. 2019;2019:2981282. doi: 10.1155/2019/2981282.

- 75. Fadheel B.A., Wahab N.I.A., Mahdi A.J., Premkumar M., Radzi M.A.B.M., Soh A.B.C., Veerasamy V., Irudayaraj A.X.R. A Hybrid Grey Wolf Assisted-Sparrow Search Algorithm for Frequency Control of RE Integrated System. Energies. 2023;16:1177. doi: 10.3390/en16031177.

- 76. Lu S., Wang H., Zhao G., Zhou G. Grey Wolf Particle Swarm Optimized Pump–Motor Servo System Constant Speed Control Strategy. Machines. 2023;11:178. doi: 10.3390/machines11020178.

- 77. He M., Hong L., Yang Z.-Y., Yang T.-B., Zeng J. Bioactive assay and hyphenated chromatography detection for complex supercritical CO2 extract from Chaihu Shugan San using an experimental design approach. Microchem. J. 2018;142:394–402. doi: 10.1016/j.microc.2018.07.016.

- 78. Li H., Liu X., Chen Y., Fan Y. Testing for Serial Correlation in Autoregressive Exogenous Models with Possible GARCH Errors. Entropy. 2022;24:1076. doi: 10.3390/e24081076.

- 79. Muftah M.N., Faudzi A.A.M., Sahlan S., Shouran M. Modeling and Fuzzy FOPID Controller Tuned by PSO for Pneumatic Positioning System. Energies. 2022;15:3757. doi: 10.3390/en15103757.

- 80. Khan M.A., Baig D.-E.-Z., Ashraf B., Ali H., Rashid J., Kim J. Dynamic Modeling of a Nonlinear Two-Wheeled Robot Using Data-Driven Approach. Processes. 2022;10:524. doi: 10.3390/pr10030524.

- 81. Piñón A., Favela-Contreras A., Beltran-Carbajal F., Lozoya C., Dieck-Assad G. Novel Strategy of Adaptive Predictive Control Based on a MIMO-ARX Model. Actuators. 2022;11:21. doi: 10.3390/act11010021.

- 82. Nwadiuto J.C., Okuda H., Suzuki T. Driving Behavior Modeling Based on Consistent Variable Selection in a PWARX Model. Appl. Sci. 2021;11:4938. doi: 10.3390/app11114938.

- 83. Tu Q., Rong Y., Chen J. Parameter identification of ARX models based on modified momentum gradient descent algorithm. Complexity. 2020;2020:9537075. doi: 10.1155/2020/9537075.

- 84. Ding F., Wang F., Xu L., Hayat T., Alsaedi A. Parameter estimation for pseudo-linear systems using the auxiliary model and the decomposition technique. IET Control Theory Appl. 2017;11:390–400. doi: 10.1049/iet-cta.2016.0491.

- 85. Mehmood K., Chaudhary N.I., Khan Z.A., Cheema K.M., Raja M.A.Z., Milyani A.H., Azhari A.A. Dwarf Mongoose Optimization Metaheuristics for Autoregressive Exogenous Model Identification. Mathematics. 2022;10:3821. doi: 10.3390/math10203821.

- 86. Chaudhary N.I., Raja M.A.Z., He Y., Khan Z.A., Machado J.T. Design of multi innovation fractional LMS algorithm for parameter estimation of input nonlinear control autoregressive systems. Appl. Math. Model. 2021;93:412–425. doi: 10.1016/j.apm.2020.12.035.

- 87. Mehmood K., Chaudhary N.I., Khan Z.A., Raja M.A.Z., Cheema K.M., Milyani A.H. Design of Aquila Optimization Heuristic for Identification of Control Autoregressive Systems. Mathematics. 2022;10:1749. doi: 10.3390/math10101749.

- 88. Chen J., Zhu Q., Liu Y. Modified Kalman filtering based multi-step-length gradient iterative algorithm for ARX models with random missing outputs. Automatica. 2020;118:109034. doi: 10.1016/j.automatica.2020.109034.

- 89. Jing S. Identification of an ARX model with impulse noise using a variable step size information gradient algorithm based on the kurtosis and minimum Renyi error entropy. Int. J. Robust Nonlinear Control. 2022;32:1672–1686. doi: 10.1002/rnc.5903.

- 90. Filipovic V.Z. Outlier robust identification of dual-rate Hammerstein models in the presence of unmodeled dynamics. Int. J. Robust Nonlinear Control. 2022;32:1162–1179. doi: 10.1002/rnc.5865.

- 91. Ashar N.D.B.K., Yusoff Z.M., Ismail N., Hairuddin M.A. ARX model identification for the real-time temperature process with Matlab-arduino implementation. ICIC Express Lett. 2020;14:103–111.

- 92. Chaudhary N.I., Raja M.A.Z., Khan Z.A., Mehmood A., Shah S.M. Design of fractional hierarchical gradient descent algorithm for parameter estimation of nonlinear control autoregressive systems. Chaos Solitons Fractals. 2022;157:111913. doi: 10.1016/j.chaos.2022.111913.

- 93. Malik N.A., Chaudhary N.I., Raja M.A.Z. Firefly Optimization Heuristics for Sustainable Estimation in Power System Harmonics. Sustainability. 2023;15:4816. doi: 10.3390/su15064816.

- 94. Satria H., Syah R.B.Y., Nehdi M.L., Almustafa M.K., Adam A.O.I. Parameters Identification of Solar PV Using Hybrid Chaotic Northern Goshawk and Pattern Search. Sustainability. 2023;15:5027. doi: 10.3390/su15065027.

- 95. Fuchs F.G., Lye K.O., Møll Nilsen H., Stasik A.J., Sartor G. Constraint Preserving Mixers for the Quantum Approximate Optimization Algorithm. Algorithms. 2022;15:202. doi: 10.3390/a15060202.

- 96. Haseeb A., Waleed U., Ashraf M.M., Siddiq F., Rafiq M., Shafique M. Hybrid Weighted Least Square Multi-Verse Optimizer (WLS–MVO) Framework for Real-Time Estimation of Harmonics in Non-Linear Loads. Energies. 2023;16:609. doi: 10.3390/en16020609.

Data Availability Statement

Not applicable.