Abstract

Background

Medical artificial intelligence (AI) in varying degrees has exerted significant influence on many medical fields, especially in the midst of the COVID-19 pandemic. However, little is known regarding how to address the reluctance of medical staff to use AI technology. While recent research has highlighted the importance of medical staff participation in the development of AI, the current understanding of influence of medical staff participation on acceptance of AI is limited.

Objectives

To provide insights into the mechanism that how medical staff participation impacts on the medical staff’s acceptance of AI and to examine the moderating effect of speciesism.

Methods

This study was conducted from 6th August to 3rd September. Data was collected from doctors and nurses and a total of 288 valid questionnaires were obtained. Smart PLS 3.2.8 was used as partial least square (PLS) software to validate the research model.

Results

The study determined that medical staff participation had a significant impact on acceptance of medical AI-IDT (β = 0.35, p ≤ 0.001) and acceptance of medical AI-ADT (β = 0.44, p ≤ 0.001). The results also show that AI self-efficacy and AI anxiety have significant mediating effects and speciesism has significant moderating effects among the theoretical model.

Conclusions

This study provides insights into ways to explore influence factors of acceptance of AI based on user participation perspective. The results indicate that medical staff participation enhances acceptance of medical AI through the cognitive path (i.e., AI self-efficacy) and the affective path (i.e., AI anxiety). These results have practical implications for how organizations assist the staff to accommodate themselves to AI technology in the future.

Keywords: Medical staff participation, Acceptance of artificial intelligence, AI self-efficacy, AI anxiety, Speciesism

1. Introduction

World Health Organization reported a global shortage about 13 million in healthcare workers by 2035 [1]. Medical artificial intelligence (AI) refers to the application of artificial intelligence (e.g., machine learning, representation learning, deep learning and other intelligent algorithms and technologies) in the medical scenarios such as auxiliary diagnosis, risk prediction, triage, health and hospital management [2]. During the period of COVID-19, medical AI alleviates the workload of medical staff [3], through medical AI imaging and AI triage [4], [5].

Although medical AI benefits the process of healthcare system, there are still many obstacles in motivating medical staff to accept AI [6]. For some medical staff, engagement with AI demands higher level of capabilities to deal with extra and unpredictable work tasks [7], [8] and the possibility of being replaced by AI has triggered considerable stress [9], [10]. Thus, it is imperative for organizations to know how to boost acceptance of artificial intelligence for medical staff in the healthcare industry.

Previous studies mainly focused on the impacts of technical characteristics (e.g., intelligence, anthropomorphism) [11], user’s cognition (e.g., perceived usefulness; perceived privacy risk) [12], [13] and AI ethics (e.g., information transparency, algorithm discrimination) [14] on user’s acceptance of AI. Although the importance of participation in successful adoption has been examined in the context of general technology [10], previous studies ignored the perspective of user participation when discussing the impact mechanism of users’ acceptance of AI [15], [16]. Meanwhile, with regard to research on the ethical AI, the importance of user participation has been highlighted [17], [18]. The pattern of user involving in development process by performing various activities is namely user participation [19]. In application scenarios of medical AI, medical staff participation refers to the mode that medical staff participate in development process of AI by providing information resources, such as their demands and feedback.

Although employees’ acceptance of AI differs from customers’ acceptance, scholars have paid limited attention to employees’ acceptance [15], [20]. For medical staff, the application of medical AI has changed their working scenarios. That is, human relationships have been substituted by human-AI relationships [20], [21]. As a result, it is critical to consider the change of working scenarios when discussing acceptance of medical AI. When medical staff use or cooperate with AI in their work, they have the dual roles of employees providing medical service and AI technology users. According to the stimulus-organism-response (SOR) framework, medical staff participation could be regarded as a stimulus, which might influence employees’ internal psychological states towards medical AI, subsequently impacting their work behavior [22]. Drawing on the SOR framework, our research considers the dual roles of medical staff, and discusses how medical staff participation in AI development impacts acceptance of medical AI.

Our research aims to make the following contributions. First, by investigating the impacts of medical staff participation on acceptance of medical AI, we advance a potential perspective for future research on acceptance of AI. Previous research explored the influencing factors of AI acceptance which were confined to technical characteristics, cognition of customers and ethics of AI [11], [13]. Going beyond the traditional standpoints, we respond to the calls of the scholars [15] and deepen the understanding of how medical staff participation in development process of AI influences their attitude towards AI. Second, we found a dual-path model to demonstrate the psychological mechanism of how medical staff participation influences their acceptance of AI based on the SOR framework (i.e., the cognitive path & the affective path), and extended the SOR framework in terms of employee-AI interaction. Third, by examining speciesism as a boundary condition of the relationship between medical staff participation and AI anxiety, we highlight the importance of group threat viewpoint in shaping employees’ responses to the new technologies.

2. Theoretical background

2.1. Acceptance of medical AI

Surveying the AI Health Care Landscape and AI released by American Medical Association provided examples of medical AI application for diagnosis and treatment, such as medical triage, diagnosis of diseases, intelligent CT image recognition, etc. [23]. Scholars classified the application of medical AI into medical AI for independent diagnosis and treatment (medical AI-IDT) and medical AI for assistive diagnosis and treatment (medical AI-ADT) [24]. According to their study, patients showed different acceptance of medical AI-IDT and medical AI-ADT due to their unique self-responsibility attribution.

In application scenarios of medical AI, medical staff have the dual roles of medical institution employees and AI technology users. Employees’ acceptance of AI is defined as “employees’ willingness to accept, supervise, manage, and cooperate with service AI” [25]. Therefore, this study classifies acceptance of medical AI into “acceptance of medical AI-IDT” and “acceptance of medical AI-ADT” based on the prior literature [24], taking account of the working scenarios where medical staff use AI. Medical AI-IDT defines a sort of artificial intelligence making diagnosis, treating diseases and analyzing medical cases without help from medical staff. Medical AI-ADT defines a sort of artificial intelligence assisting medical staff to complete non-treatment medical work, such as leading the ways, helping with the enquiries, disinfecting the wards and assisting the operations.

2.2. Medical staff participation and acceptance of medical AI

User participation in development process refers to the mode in which users provide information and other related resources for product development on the basis of their own demands [26]. Considering our research context, we define medical staff participation in AI development process as the mode that medical staff participate in the development of medical AI by offering needs, feedback and relevant resources.

The SOR model has been used in AI scenarios in previous studies. For example, Scholars have examined the impact of AI decision-making transparency on employee trust based on the SOR model [27]. Meanwhile, a few scholars have examined the stimulus in the field of technology acceptance and organizational behavior, such as the characteristics of products and work environment [28], [29], [30]. According to the SOR framework, work environment and individual situational factors will stimulate employees’ cognitive and affective state, and thus affecting their behavioral outcomes. Specifically, positive reactions will lead to approach behaviors while adverse reactions may cause avoidance behaviors [22], [27]. Recent research on hotel information management systems (HIMS), telemedicine services presented participation as a mechanism through which stimuli can sustain usage intentions [19], [31]. Under application scenarios of medical AI, medical staff participation in the development process of medical AI can be regarded as a stimulus. By participating in AI development, medical staff can have AI co-workers fitter for them by providing feedback and their demands [32]. In addition, previous studies have pointed out that medical staff participation in AI development is helpful for the improvement of the transparency and interpretability of ethical medical AI [33], [34], which is key for accelerating users’ adoption of medical AI. Therefore, drawing on the SOR framework, we argue that the positive consequences of medical staff participation in AI development are likely to improve their attitudes towards medical AI.

Notably, owing to the differences of technology development and application conditions of medical AI, when medical staff make a decision of adopting robotics, the psychological processes on their acceptance of robots may vary between different types of robots [35], [36]. For instance, the level of AI autonomy can impact AI acceptance for customers [35]. Specifically, users prefer non-autonomous AI to autonomous AI in work and living environments [37], [38]. In the study, we propose the following hypotheses:

Hypothesis 1a

Medical staff participation is positively related to acceptance of medical AI-IDT.

Hypothesis 1b

Medical staff participation is positively related to acceptance of medical AI-ADT.

2.3. The mediator role of AI self-efficacy

According to the SOR framework, organism, which is composed of individual cognitive and emotional states, plays a mediating role between stimulus and response variables [29]. The cognitive states mainly represent individual beliefs or thoughts [27]. As a cognitive state, self-efficacy is defined as the degree of confidence of whether people can perform well in their work though their skills [39]. AI self-efficacy refers to the belief that individuals possess to achieve work goals in the field of application of AI [35].

Medical staff participation in AI development process enriches their work-related knowledge. Besides that, employees who engage in the development of new technologies may have a clearer understanding of work tasks. Meanwhile, by providing advice for AI development process, the autonomy that medical staff perceived in their work may also be improved [32]. Therefore, medical staff participation in AI development process drives medical staff to be more competent and intrinsically motivated to behave proactively [40]. According to prior studies, when the employees involve in development process as users, they may experience a variety of positive psychological changes, including satisfaction, belonging, achievement, and psychological ownership [41], [42], [43].

Based on the SOR framework, when employees anticipate that new technology may enhance their ability on work, they are more likely to show a positive attitude towards adopting this technology [44], [45]. Namely, the individual’s self-efficacy might lead to a positive attitude towards work and proactive behavior [10], [46]. For example, scholars stated that the self-efficacy of medical staff might enhance the application of integrated clinical and administrative information system [47].

Thus, due to participating in AI development process, medical staff are more competent and confident to behave proactively under application scenarios of medical AI. This leads to more positive attitudes to acceptance of medical AI for medical staff. We hypothesize that:

Hypothesis 2a

AI self-efficacy plays a mediating role in the relationship between medical staff participation and acceptance of medical AI-IDT.

Hypothesis 2b

AI self-efficacy plays a mediating role in the relationship between medical staff participation and acceptance of medical AI-ADT.

2.4. The mediator role of AI anxiety

According to the SOR framework, emotional states refer to individual emotional responses to stimuli [27]. Organizational change and work environment often affect employee behavior through two independent paths (i.e., the cognition path and the affect path) [48]. Through AI self-efficacy, medical staff participation impacts acceptance of medical AI cognitively. Besides that, the change of the work environments might influence affective feelings of employees, which further impacts behaviors. Technology anxiety, as a negative affective response [49], is affected by many factors, such as job replacement [10], familiarity with technology [50] and so on. AI anxiety was defined as the individual’s fear and anxiety that AI technology could be beyond their control [51]. Meanwhile, several factors like human sociotechnical blindness (i.e., ignoring human participation in AI operation), confusion about autonomy (i.e., the confusion of autonomy between AI and human beings) may lead to AI anxiety.

Scholars argued that general attitudinal and affective tendencies are essential topics for predicting behaviors and attitudes towards certain types of AI in specific contexts [52]. As a negative affective state in the workplace, employees’ technology anxiety might impair their willingness of proactive behavior [53], [54]. Especially when anxiety arises from the application of new technologies in the organization, acceptance of new technologies for employees may be greatly reduced [55]. Under the medical AI scenarios, the technology anxiety which results from lack of experience and knowledge regarding AI technologies is likely to hinder medical staff’s adoption of medical AI. Medical staff participation in AI development process can improve their familiarity to technology of medical AI and their autonomy when using medical AI, which helps address the difficulties and disconnection in technical application.

In conclusion, medical staff participation in AI development process can enrich medical staff’s knowledge about AI technology and operational experience, which might effectively alleviate their technology anxiety and enhance acceptance of medical AI for medical staff. Hence, we propose the following assumptions:

Hypothesis 3a

AI anxiety plays a mediating role in the relationship between medical staff participation and acceptance of medical AI-IDT.

Hypothesis 3b

AI anxiety plays a mediating role in the relationship between medical staff participation and acceptance of medical AI-ADT.

2.5. The moderating effect of speciesism

Prior studies regard speciesism as a kind of widespread discrimination that is practiced by man against other species [56]. Individuals with high speciesism tend to hold a belief that humans are superior to other species and have a higher moral status than other species. Meanwhile, they prefer and respect their own ingroups, however, treat outgroups unfairly, such as prejudice, discrimination and so on [57], [58], [59]. The idea behind the concept of speciesism is that the outgroup of non-human species is denigrated as “less human”, which is called “dehumanization”. Specifically, animals and machines are the prime categories regarded as “less human”, which are “animalistic” dehumanization and “mechanistic” dehumanization [60], [61]. Since AI has gradually acquired the ability to “think” as humans [62], the boundaries separating human from the outgroup of machines have shifted. Therefore, some scholars believe that it is necessary to redefine the concept of speciesism and expand the research scope of speciesism from human-animal relationships to human-AI relationships [63], [64], [65]. For instance, scholars have redefined speciesism as “the result of a fundamental, categorical comparison of human and machine” and suggested assessing the effect of speciesism on the adoption of AI [60], [65].

Medical staff with high-level speciesism are more inclined to consider that human employees (themselves) are more competent than AI technologies or robots. Specifically, in terms of medical staff with a high level of speciesism, as they regard themselves as a more competent group than medical AI, they are more likely to experience the improvement of skills, the accumulation of knowledge and positive psychological changes when involving in development process [42], [43], which in turn produces higher self-efficacy. On the contrary, for medical staff who have a low level of speciesism, as they don’t have great perception of superiority of human beings, though the process of involving in medical AI development will provide AI knowledge and perception of control for them, their stimulated AI self-efficacy will still be lower than medical staff with a high level of speciesism. In general, medical staff with a higher level of speciesism are more likely to feel high-level self-efficacy when they participate in AI development process. Thus, we propose the following hypothesis:

Hypothesis 4a

Speciesism moderates the effect of medical staff participation on the AI self-efficacy, such that the relationship is stronger with a higher level of speciesism.

The level of speciesism is highly correlated with the level of prejudice against outgroups [66]. The prejudice against outgroups originates from perceived intergroup threats, which can trigger human anxiety, fear and other feelings [67]. Hence, compared to individuals with low-level speciesism, individuals with high-level speciesism tend to be more sensitive to the perception of intergroup threats.

Prior studies have illuminated that the increasing exposure with outgroups and the accumulation of knowledge and experience can mitigate the individuals’ prejudice against outgroups and the perceived anxiety and threat [68]. When medical staff involve in development process, their affective and energy investment will be increased [69], [70]. Specifically, in terms of medical staff with high-level speciesism, as they are more sensitive to the perception of intergroup threats, when they participate in AI development process, their exposure with medical AI and understanding of medical AI can be enhanced, which results in a greater decrease in AI anxiety. Therefore, medical staff with a higher level of speciesism are more likely to perceive low-level AI anxiety when they engage in development process of AI technology. Based on the argument, we propose the following hypothesis:

Hypothesis 4b

Speciesism moderates the effect of medical staff participation on the AI anxiety, such that the relationship is stronger with a higher level of speciesism.

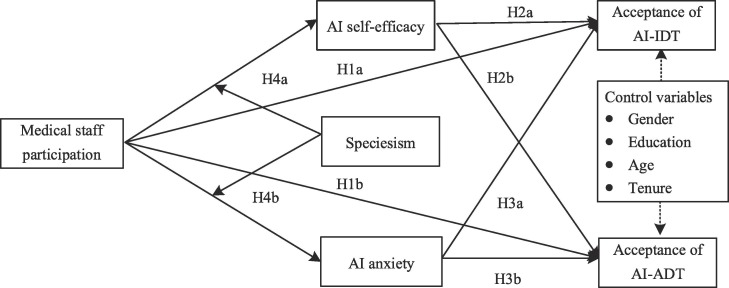

Our model is summarized in Fig. 1 as follows.

Fig. 1.

Research model.

3. Methods

3.1. Samples and procedures

This study was conducted among medical staff which widely adopted medical AI. We collected data from doctors and nurses and a total of 428 questionnaires were received by three times. At Time 1, medical staff participation and control variables were collected. At Time 2, participants answered the questions of AI self-efficacy, AI anxiety and speciesism. At Time 3, participants reported acceptance of medical AI-IDT and acceptance of medical AI-ADT. After matching and eliminating invalid questionnaires, a total of 288 valid questionnaires were obtained. The samples consisted of 26.4 percent of males and 73.6 percent of females, mainly aged from 21 to 30 (SD = 0.87). In general, their education level was mainly undergraduate (SD = 0.72). Specifically, 13.2 percent of participants were high school and below, 81.9 percent of participants were junior college and undergraduate, 4.9 percent of participants were at the master’s degree level. This paper was approved by the Institutional Review Board of Shanghai University (No. 2019-001).

3.2. Measures

We adopted all constructs from the existing studies. All the variables were measured based on a five-point Likert scale ranging from “strongly disagree” to “strongly agree”. The variables were tested based on the published sources, which ensures internal consistency, test–retest reliability and so on. The constructs, questions and sources are shown in Table 1 .

Table 1.

Measurement items of constructs.

| Construct | Variables | Measurement Items | Source |

|---|---|---|---|

| Medical Staff Participation (MSP) | MSP1 | I can participate in the development process of medical AI and share my needs and professional opinions. | [89] |

| MSP2 | When participating in the development process of medical AI, I am willing to put in effort to express my needs. | ||

| MSP3 | I have the opportunity to be highly involved in the research and development process with medical AI technology developers. | ||

| MSP4 | I could take part in the decision making of medical AI development. | ||

| AI Self-efficacy (AS) | AS1 | I feel confident of learning how to use medical AI by equipped with necessary instructions and training. | [90], [91] |

| AS2 | I am capable of using medical AI. | ||

| AS3 | I believe that I can obtain the information I need though medical AI. | ||

| AS4 | It is easy for me to use medical AI. | ||

| AI Anxiety (AA) | AA1 | The unique features of medical AI products can make me feel anxious. | [92] |

| AA2 | I feel worried that medical staff might be replaced by medical AI. | ||

| AA3 | With the large scale of medical AI application, I am concerned that medical staff lose control in the process of medical services. | ||

| AA4 | It is scary that medical AI products are similar to human and even cannot be distinguished from human. | ||

| Acceptance of Medical AI-IDT (AMAI) | AMAI1 | I am willing to apply AI technologies to design clinical protocol for patients. | [24] |

| AMAI2 | I am willing to use AI technologies to assist in preoperative three-dimensional reconstruction and accurate analysis of lesions. | ||

| AMAI3 | I am willing to take advantage of big data to search cases and clinical protocol. | ||

| AMAI4 | I am willing to adopt AI technologies to assist in surgery plans. | ||

| AMAI5 | I am willing to apply big data and machine learning to development of clinical protocol. | ||

| Acceptance of Medical AI-ADT (AMAA) | AMAA1 | I am willing to use AI to disinfect a surface in the hospital. | [24] |

| AMAA2 | I am willing to apply AI robots to the location guidance for the patients. | ||

| AMAA3 | I am willing to use AI triage for my patients. | ||

| Speciesism (SPE) | SPE1 | Interacting with robots rather than human might make me angry. | [60] |

| SPE2 | When I interact with AI, I don't feel as good as I do with human. | ||

| SPE3 | I think there's a big difference between talking to real people or talking to AI robots. |

4. Analyses and results

A structural equation model (SEM) was used to validate the research model (Fig. 1). As the sample size was smaller than 500, we used Smart PLS 3.2.8 as partial least square (PLS) software with bootstrapping technique, which is especially suited for smaller sample sizes [71], [72].

4.1. Measurement model testing

First, the measurement model was evaluated. Reliability, convergent validity and discriminant validity were measured in Table 2 . The composite reliability (CR) and Cronbach’s α were above 0.70 which can indicate internal consistency [73]. Meanwhile, we used Fornell-Larcker criterion [74] to assess the convergent validity and the discriminant validity. Specifically, the average variance extracted (AVE) of each construct was all above the threshold (i.e., 0.50), which supported the convergent validity. Table 3 exhibits that the square root of the AVE was larger than any inter-factor correlation, which shows discriminant validity was satisfied. Additionally, the inner variance inflations (VIF) were examined to test the common method bias. Values of the VIF were all below 3.30 for PLS-based SEM [75], which showed absence of collinearity and no common method bias. The evaluation results were satisfactory as summarized in Table 2, Table 3.

Table 2.

Convergent validity and internal consistency reliability.

| Construct | Indicators | Loading | Indicator Reliability | Cronbach's α | Composite Reliability | AVE |

|---|---|---|---|---|---|---|

| Medical Staff Participation (MSP) | MSP1 | 0.91 | 0.82 | 0.93 | 0.95 | 0.82 |

| MSP2 | 0.91 | 0.82 | ||||

| MSP3 | 0.89 | 0.80 | ||||

| MSP4 | 0.92 | 0.85 | ||||

| AI Self-efficacy (AS) | AS1 | 0.81 | 0.65 | 0.83 | 0.88 | 0.66 |

| AS2 | 0.82 | 0.66 | ||||

| AS3 | 0.79 | 0.62 | ||||

| AS4 | 0.83 | 0.69 | ||||

| AI Anxiety (AA) | AA1 | 0.89 | 0.78 | 0.91 | 0.94 | 0.80 |

| AA2 | 0.91 | 0.82 | ||||

| AA3 | 0.84 | 0.70 | ||||

| AA4 | 0.94 | 0.88 | ||||

| Acceptance of Medical AI-IDT (AMAI) | AMAI1 | 0.92 | 0.85 | 0.94 | 0.96 | 0.82 |

| AMAI2 | 0.92 | 0.85 | ||||

| AMAI3 | 0.87 | 0.75 | ||||

| AMAI4 | 0.92 | 0.84 | ||||

| AMAI5 | 0.89 | 0.79 | ||||

| Acceptance of Medical AI-ADT (AMAA) | AMAA1 | 0.92 | 0.85 | 0.91 | 0.94 | 0.85 |

| AMAA2 | 0.92 | 0.85 | ||||

| AMAA3 | 0.91 | 0.84 | ||||

| Speciesism (SPE) | SPE1 | 0.93 | 0.87 | 0.91 | 0.95 | 0.85 |

| SPE2 | 0.92 | 0.84 | ||||

| SPE3 | 0.92 | 0.85 |

Table 3.

Inter-construct correlations and summary statistics.

| Variables | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

|---|---|---|---|---|---|---|---|---|---|---|

| Medical staff participation | 0.91 | |||||||||

| AI self-efficacy | 0.64*** | 0.81 | ||||||||

| AI anxiety | -0.39*** | -0.42*** | 0.89 | |||||||

| Acceptance of medical AI-IDT | 0.67*** | 0.69*** | -0.37*** | 0.90 | ||||||

| Acceptance of medical AI-ADT | 0.66*** | 0.59*** | -0.40*** | 0.68*** | 0.92 | |||||

| Speciesism | 0.37*** | 0.32*** | -0.37*** | 0.42*** | 0.42*** | 0.92 | ||||

| Gender | -0.06 | -0.02 | 0.04 | -0.01 | -0.00 | -0.08 | 1 | |||

| Education | 0.08 | -0.03 | 0.06 | 0.10 | -0.02 | -0.04 | -0.07 | 1 | ||

| Age | 0.08 | 0.02 | 0.02 | 0.06 | 0.07 | 0.08 | -0.09 | -0.06 | 1 | |

| Job title | 0.06 | -0.01 | 0.01 | 0.03 | 0.03 | -0.01 | 0.05 | 0.23*** | 0.03 | 1 |

Note: Diagonal bold numbers are the square root of the AVE; *p <.05; **p <.01; ***p <.001.

4.2. Structural model testing

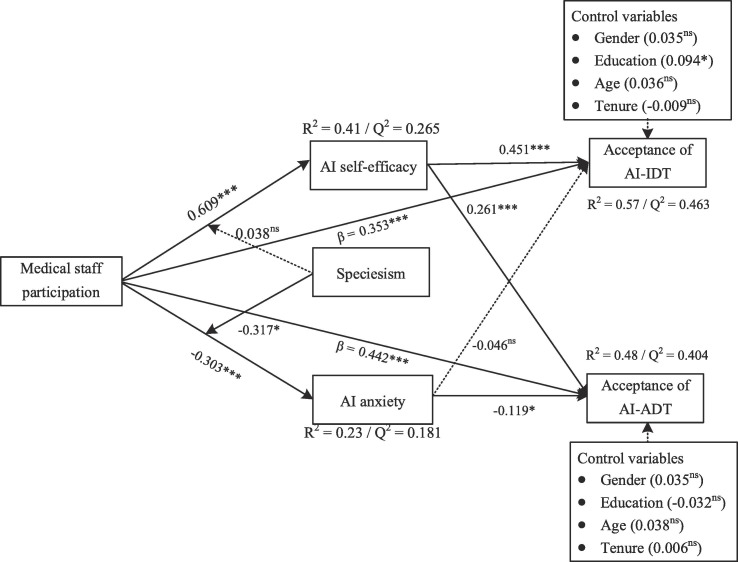

Fig. 2 shows the results of the paths. The exploratory power was examined by R2 values ranging between 0 and 1. In IT-related research, the effect size is large if it is over 0.36 when PLS is used [76]. Besides, Q2 values were assessed via blindfolding and are reported. The suitable predictive accuracy is shown by Q2 values above zero in general (we regard Q2 ≥ 0 as small, 0.25 as medium, 0.5 as large) [77].

Fig. 2.

Results (n = 288). *p <.05; **p <.01; ***p <.001; ns = not significant.

Most paths were significant on a level of p < 0.05 or below. Generally, 57 % in the variance of acceptance of medical AI-IDT was explained by the model explains (R2 = 0.57). Medical staff participation (β = 0.35, p ≤ 0.001) and AI self-efficacy (β = 0.45, p ≤ 0.001) showed a positive impact on acceptance of medical AI-IDT. However, AI anxiety (β = -0.05, p = 0.321) didn’t show the significant negative impact on acceptance of medical AI-IDT.

In addition, 48 % in the variance of acceptance of medical AI-ADT was explained (R2 = 0.48). Moreover, medical staff participation (β = 0.44, p ≤ 0.001), AI self-efficacy (β = 0.26, p ≤ 0.001) and AI anxiety (β = −0.12, p ≤ 0.05) showed a significant effect on acceptance of medical AI-ADT.

4.3. Mediation effect

To further test the indirect effects of AI self-efficacy and AI anxiety, this study adopted Bootstrapping method by referring to the recommendations of scholars [78]. Table 4 shows the results. After 5000 iterations, the analysis reveals that the indirect effect of AI self-efficacy between medical staff participation and acceptance of medical AI-IDT was significant, and the mediating effect of AI self-efficacy between medical staff participation and acceptance of medical AI-ADT was also significant. H2a and H2b were supported. At the same time, while the relationship between medical staff participation and acceptance of medical AI-IDT was not significantly mediated by AI anxiety, which means H3a was not supported, AI anxiety significantly mediated the impact of medical staff participation on acceptance of medical AI-ADT, H3b was verified.

Table 4.

The mediation effects of AI self-efficacy and AI anxiety.

| Path | Coefficient | Total Effect | 95 % Confidence Interval | |

|---|---|---|---|---|

| Low | High | |||

| Medical staff participation → AI self-efficacy | 0.609 | 0.642 | 0.194 | 0.373 |

| AI self-efficacy → Acceptance of medical AI-IDT | 0.450 | |||

| Medical staff participation → AI anxiety | -0.303 | -0.012 | 0.045 | |

| AI anxiety → Acceptance of medical AI-IDT | -0.046 | |||

| Medical staff participation → AI self-efficacy | 0.609 | 0.637 | 0.079 | 0.249 |

| AI self-efficacy → Acceptance of medical AI-ADT | 0.261 | |||

| Medical staff participation → AI anxiety | -0.303 | 0.009 | 0.081 | |

| AI anxiety → Acceptance of medical AI-ADT | -0.119 | |||

Note: Medical AI-IDT: Medical AI for independent diagnosis and treatment; Medical AI-ADT: Medical AI for assistive diagnosis and treatment.

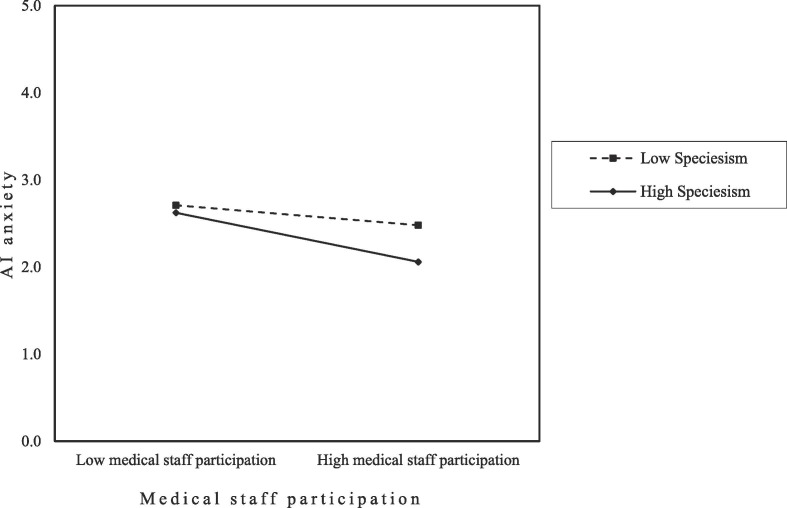

4.4. Moderation effect

Based on H4a and H4b, we examined the moderating role of speciesism on the relationship between medical staff participation and AI self-efficacy and the relationship between medical staff participation and AI anxiety. The results of the moderating effect test are presented in Table 5 . Table 5 shows that the interaction terms of medical staff participation and speciesism had a significant negative effect on AI anxiety (β = −0.2, p < 0.05) while the interaction’s effect on AI self-efficacy was not significant. The moderating effect of speciesism on the relationship between medical staff participation and AI anxiety are visualized in Fig. 3 . The results of the Johnson-Neyman floodlight effect test demonstrates a significant effect of medical staff participation on AI anxiety for levels of speciesism greater than 3.33. Therefore, H4b was supported while H4a was not supported.

Table 5.

The moderation test.

| Regression Path | Effect | Standard Error | 95 % Confidence Interval |

|---|---|---|---|

| medical staff participation × speciesism → AI self-efficacy | -0.01 | 0.06 | [-0.13, 0.11] |

| medical staff participation × speciesism → AI anxiety | -0.21* | 0.08 | [-0.37, -0.04] |

Note: *p <.05.

Fig. 3.

The moderating effect of speciesism on AI anxiety.

5. Discussion

Based on the SOR framework, we explored the positive impact of medical staff participation on acceptance of medical AI-IDT and medical AI-ADT. The results showed that both cognitive and affective attitudes had a certain impact on acceptance of medical AI for medical staff, which responded to calls made from scholars [20]. AI self-efficacy (as a positive cognitive belief) and AI anxiety (as a negative affect) respectively played a mediating role between medical staff participation and acceptance of medical AI-IDT as well as medical AI-ADT. However, there were certain differences. Specifically, acceptance of medical AI-IDT was only affected by the medical staff participation via the cognitive path (i.e., AI self-efficacy), while the medical staff participation improved their acceptance of medical AI-ADT through the positive cognitive path (i.e., AI self-efficacy) and the negative affective path (i.e., AI anxiety). This difference proved that it was necessary to differentiate acceptance of medical AI-IDT and acceptance of medical AI-ADT.

Speciesism, as an individual characteristic of human beings, moderated the effect of medical staff participation on the AI anxiety. This relationship was enhanced with a higher level of speciesism. Specifically, the medical staff with a higher level of speciesism participating in AI development process tend to reduce their AI anxiety.

5.1. Implications for theory and research

Our findings have several theoretical implications. First, by investigating the impacts of medical staff participation on their acceptance of medical AI based on the SOR framework, we provided insights into ways to explore influence factors of acceptance of AI based on user participation perspective. Most of prior researches about acceptance of AI focused on the influence of AI technical characteristics, social norms and cognition of customers [79], [80], [81], but ignored the impacts of user participation [82]. Indeed, scholars specifically called for the research to investigate the role of participation on the employees’ acceptance of new technologies [26]. Our findings revealed that there was a positive relationship between medical staff participation in AI development and acceptance of medical AI. Meanwhile, our research takes a work context into consideration where human employees work with AI, which extends the scope of research on the acceptance of AI to the context with medical staff and AI technology.

Second, based on the SOR framework, this study advanced a potential dual-path perspective for future research on acceptance of AI and deepened the understanding of how employee behavior influenced acceptance of AI. We responded to the calls of scholars [83] and explored a dual-path mechanism (i.e., the cognition and the affect path) to illuminate how medical staff participation in AI development influenced their acceptance of medical AI. Specifically, when employees participate in AI development process, the stimulus might arouse medical staff’s internal positive cognitive attitude (i.e., cognitive path, AI self-efficacy) and repress negative affective states (i.e., affective path, AI anxiety). These two paths ultimately impacted acceptance of medical AI for medical staff.

Third, our results extend the group threat viewpoint by showing that speciesism was a boundary condition in the relationship between medical staff participation and AI anxiety. The majority of previous research haven’t explored whether social identity of AI affected user’s attitudes towards AI in the context of human–computer and human-AI interaction [21], [60], [84], [85]. Meanwhile, scholar proposed that speciesism might influence the users’ attitudes of new technologies, which has not been confirmed yet [60]. Our findings responded to this assumption and explored that speciesism strengthened the negative relationship between medical staff participation and AI anxiety.

5.2. Managerial implications

In terms of practice, our findings provide implications for managers during and even after the COVID-19 pandemic. First, we found that medical staff participation was positively related to their acceptance of AI. Therefore, technology development company on medical AI should widely solicit relevant suggestions from medical staff. For instance, on the one hand, opening the “Accompanying AI Growth-Medical AI Design Suggestions Collection Section” on their company’s official website and social media, and actively interacting with the medical staff to enhance their participation in the development process of medical AI. On the other hand, medical institutions should encourage medical staff to participate in the development of medical AI proactively, including providing information of their demands in the development process of medical AI.

Second, our results also provide insights for organizations to improve the medical staff’s acceptance of medical AI. According to our findings, medical staff participation in AI development impacts acceptance of medical AI-IDT and medical AI-ADT through “the cognitive path” and “the affective path”. Therefore, while encouraging medical staff to participate in development process, developers of medical AI and managers of medical institutions should focus on the internal cognitive attitudes and affective status of employees. Specifically, the developers of medical AI should offer timely feedback on medical staff’s suggestions and popularize relevant medical AI knowledge when inviting medical staff to involve in the development of medical AI. Medical institutions could set up “a medical AI product development project team”, “a medical AI technical knowledge training project” and so on. These could contribute to medical staff participation in the development process of medical AI, thereby improving the self-efficacy of medical staff and reducing anxiety of using medical AI.

Furthermore, managers should strengthen the personalized management of employees in the medical institution, such as providing personalized AI technical support and training for employees based on different employee’s individual characteristics. Our findings suggested that the differences of employee traits were important contextual factors impacting employees’ acceptance of AI. Namely, for medical staff with a higher level of speciesism, their anxiety tends to drop when involving in medical AI development process. Therefore, managers in medical institutions should select employees with high-level speciesism as much as possible to actively make their employees involve in development process of medical AI. Meanwhile, for the employees with low-level speciesism, the managers should pay attention to their possible negative emotions.

5.3. Limitations and future research

First of all, our study considers acceptance of medical AI by the sample of healthcare workers, which may engender concerns regarding the generalizability of the results. Future research should explore the impact of employee participation on AI acceptance from the perspective of multiple types of samples, such as fund managers using AI decision-making services or HR managers using AI recruitment services. Meanwhile, it is already found that cross-cultural factors such as religions and regions influence individual’s acceptance of AI [86]. Therefore, future research could enlarge the sample size and explore the impact of user participation on acceptance of AI in different countries or regions. Second, in this study, we used a method designed with multiple time points, which overcomes the limitations of cross-sectional research and is widely accepted [24], [87]. For future research, we encourage to try to use more kinds of methods to collect data, such as collecting health data from the fitness band or designing behavioral experiments. Furthermore, this study discussed the impact of employees’ speciesism between medical staff participation and AI technology anxiety based on the group threat viewpoint. In the future, scholars could try to explore more mediating mechanism and boundary conditions from other perspectives such as the diffusion of innovation theory [88].

6. Conclusion

Over the coming years, it will be common that the medical staff cooperate with AI for AI-IDT and AI-ADT. Not only the AI producers but managers of the medical institutions should take action in supporting medical staff to adapt to work with their “AI coworkers”. There is an urgent need for both academic research and managerial practice to know which factors influence acceptance of AI based on user participation perspective. This study not only extended the previous research on acceptance of medical AI, but introduced the emerging context of AI into the SOR framework. We explored that medical staff participation in AI development impacted acceptance of medical AI through the cognitive path (i.e., AI self-efficacy) and the affective path (i.e., AI anxiety). Our study suggests that medical staff should take part in development of medical AI, which is helpful to rise acceptance of medical AI. Meanwhile, speciesism is a key individual characteristic to study the interaction between AI and humans, which moderates the relationship between medical staff participation and AI anxiety. In the future, how to motivate employees to better cooperate with AI technologies will be a topic with great potential.

Funding information

This work was supported by the National Natural Science Foundation of China [grant number 72072110]; the Shanghai Philosophy and Social Science Planning Project [grant number 2019BGL001]; the Shanghai Science and Technology Innovation Action Plan Soft Science Project (Grant No. 23692110000); the Social Science Foundation of the Ministry of Education of China (Grant No. 21YJC630067) and Project of Excellent Young Talents Support Program in Anhui Higher Education Institutions (Grant No. gxyqZD2022033).

What already known on the topic:

-

•

The acceptance of AI is affected by the technical characteristics (e.g., intelligence, anthropomorphism), user’s cognition (e.g., perceived usefulness; perceived privacy risk) and AI ethics (e.g., information transparency, algorithm discrimination).

-

•

Medical AI alleviates the workload of medical staff, through medical AI imaging and AI triage.

-

•

Medical AI benefits the process of healthcare system, there are still many obstacles in motivating medical staff to accept AI.

-

•

What this study added to our knowledge:

-

•

We confirm that medical staff participation in AI development impacts acceptance of medical AI.

-

•

We extend the SOR framework by finding a dual-path model to demonstrate the psychological mechanism of how medical staff participation in AI development influences their acceptance of AI.

-

•

This study highlights the importance of group threat viewpoint in shaping employees’ responses to the new technologies.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Biographies

Weiwei Huo (huoweiwei-2008@163.com) is an associate professor for Technological Innovation at Shanghai University, Shanghai, China. Her research focuses on the impact of technological innovation on employee and consumer behavior.

Xinze Yuan (18800205075@163.com) is a post-graduate for Technological Innovation at Shanghai University, Shanghai, China. Her research focuses on the impact of technological innovation on employee and consumer behavior.

Xianmiao Li (xianmiao@aust.edu.cn) is the associate professor at the School of Economics and Management, Anhui University of Science and Technology, Anhui Province, China. Her primary research interests include leadership, knowledge management, and organizational behavior.

Wenhao Luo (luowh1008@163.com) is an undergraduate for Technological Innovation at Shanghai University, Shanghai, China. Her research focuses on the impact of technological innovation on employee and consumer behavior.

Jiaying Xie (xiejiaying1027@163.com) is an undergraduate for Technological Innovation at Shanghai University, Shanghai, China. Her research focuses on the impact of technological innovation on employee and consumer behavior.

Bowen Shi (bowenshi0127@gmail.com) is a student of Shanghai World Foreign Language Academy. His research focuses on Artificial Intelligence and Business applications.

References

- 1.Fernandes C., de Silva C.M.O., Segundo G.R.S. Prevalence of IgE-mediated latex allergy at a university hospital. Br. J. Allergy Immunol. 2014;2(6) doi: 10.5935/2318-5015.20140033. [DOI] [Google Scholar]

- 2.He J., Baxter S.L., Xu J., Xu J., Zhou X., Zhang K. The practical implementation of artificial intelligence technologies in medicine. Nat. Med. 2019;25(1):30–36. doi: 10.1038/s41591-018-0307-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Ahmed A., Boopathy P., Rajan S.S. Artificial intelligence for the novel corona virus (COVID-19) pandemic: opportunities, challenges, and future directions. Int. J. E-Health Med. Commun. 2022;13(2):1–21. doi: 10.4018/IJEHMC.20220701.oa5. [DOI] [Google Scholar]

- 4.Li J., Zhang C., Li X., Zhang C. Patients’ emotional bonding with MHealth apps: an attachment perspective on patients’ use of MHealth applications. Int. J. Inf. Manag. 2020;51 doi: 10.1016/j.ijinfomgt.2019.102054. [DOI] [Google Scholar]

- 5.Delshad S., Dontaraju V.S., Chengat V. Artificial intelligence-based application provides accurate medical triage advice when compared to consensus decisions of healthcare providers. Curēus (Palo Alto, CA) 2021;13(8):e16956. doi: 10.7759/cureus.16956. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Briganti G., Le Moine O. Artificial intelligence in medicine: today and tomorrow. Front. Med. 2020;7 doi: 10.3389/fmed.2020.00027. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Liu C.F., Chen Z.C., Kuo S.C., Lin T.C. Does AI explainability affect physicians’ intention to use AI? Int. J. Med. Inf. 2022;168 doi: 10.1016/j.ijmedinf.2022.104884. [DOI] [PubMed] [Google Scholar]

- 8.Melkas H., Hennala L., Pekkarinen S., Kyrki V. Impacts of robot implementation on care personnel and clients in elderly-care institutions. Int. J. Med. Inf. 2020;134 doi: 10.1016/j.ijmedinf.2019.104041. [DOI] [PubMed] [Google Scholar]

- 9.Frey C.B., Osborne M.A. The future of employment: How susceptible are jobs to computerisation? Technol. Forecast. Soc. Chang. 2017;114:254–280. doi: 10.1016/j.techfore.2016.08.019. [DOI] [Google Scholar]

- 10.Wang W., Chen L., Xiong M., Wang Y. Accelerating AI adoption with responsible AI signals and employee engagement mechanisms in health care. Inf. Syst. Front. 2021:1–18. doi: 10.1007/s10796-021-10154-4. [DOI] [Google Scholar]

- 11.Sinha N., Singh P., Gupta M., Singh P. Robotics at workplace: an integrated Twitter analytics-SEM based approach for behavioral intention to accept. Int. J. Inf. Manag. 2020;55 doi: 10.1016/j.ijinfomgt.2020.102210. [DOI] [Google Scholar]

- 12.Heerink M., Kröse B., Evers V., Wielinga B. Assessing acceptance of assistive social agent technology by older adults: the Almere model. Int. J. Soc. Robot. 2010;2(4):361–375. doi: 10.1007/s12369-010-0068-5. [DOI] [Google Scholar]

- 13.Vimalkumar M., Sharma S.K., Singh J.B., Dwivedi Y.K. ‘Okay google, what about my privacy?’: User’s privacy perceptions and acceptance of voice based digital assistants. Comput. Hum. Behav. 2021;120 doi: 10.1016/j.chb.2021.106763. [DOI] [Google Scholar]

- 14.Kelly C.J., Karthikesalingam A., Suleyman M., Corrado G., King D. Key challenges for delivering clinical impact with artificial intelligence. BMC Med. 2019;17:195. doi: 10.1186/s12916-019-1426-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Lu V.N., Wirtz J., Kunz W.H., Paluch S., Gruber T., Martins A., Patterson P.G. Service robots, customers and service employees: what can we learn from the academic literature and where are the gaps? J. Serv. Theory Pract. 2020;30(3):361–391. doi: 10.1108/JSTP-04-2019-0088. [DOI] [Google Scholar]

- 16.Tambe P., Cappelli P., Yakubovich V. Artificial intelligence in human resources management: challenges and a path forward. Calif. Manage. Rev. 2019;61(4):15–42. doi: 10.1177/0008125619867910. [DOI] [Google Scholar]

- 17.Muller H., Mayrhofer M.T., Van Veen E.B., Holzinger A. The ten commandments of ethical medical AI. Computer. 2021;54(7):119–123. doi: 10.1109/mc.2021.3074263. [DOI] [Google Scholar]

- 18.Holzinger A., Dehmer M., Emmert-Streib F., Cucchiara R., Augenstein I., Del Ser J., Samek W., Jurisica I., Díaz-Rodríguez N. Information fusion as an integrative cross-cutting enabler to achieve robust, explainable, and trustworthy medical artificial intelligence. Inf. Fusion. 2022;79:263–278. doi: 10.1016/j.inffus.2021.10.007. [DOI] [Google Scholar]

- 19.Martikainen S., Kaipio J., Lääveri T. End-user participation in health information systems (HIS) development: physicians’ and nurses’ experiences. Int. J. Med. Inf. 2020;137 doi: 10.1016/j.ijmedinf.2020.104117. [DOI] [PubMed] [Google Scholar]

- 20.Chiu Y.T., Zhu Y.Q., Corbett J. In the hearts and minds of employees: a model of pre-adoptive appraisal toward artificial intelligence in organizations. Int. J. Inf. Manag. 2021;60 doi: 10.1016/j.ijinfomgt.2021.102379. [DOI] [Google Scholar]

- 21.Holzinger A., Mueller H. Toward human-AI interfaces to support explicability and causability in medical AI. IEEE Comput. 2021;54(10):78–86. doi: 10.1109/MC.2021.3092610. [DOI] [Google Scholar]

- 22.Tang J., Zhang P. The impact of atmospheric cues on consumers’ approach and avoidance behavioral intentions in social commerce websites. Comput. Hum. Behav. 2018 doi: 10.1016/j.chb.2018.09.038. [DOI] [Google Scholar]

- 23.AHA Center for Health Innovation . American Hospital Association; Washington, DC: 2019. Surveying the AI Health Care Landscape. [Google Scholar]

- 24.Huo W.W., Zheng G.H., Yan J.Q., Sun L., Han L.Y. Interacting with medical artificial intelligence: Integrating self-responsibility attribution, human–computer trust, and personality. Comput. Hum. Behav. 2022;132 doi: 10.1016/j.chb.2022.107253. [DOI] [Google Scholar]

- 25.Xiao L., Kumar V. Robotics for customer service: a useful complement or an ultimate substitute? J. Serv. Res. 2019;24(1):9–29. doi: 10.1177/1094670519878881. [DOI] [Google Scholar]

- 26.McGill T., Klobas J. User developed application success: sources and effects of involvement. Behav. Inform. Technol. 2008;27(5):407–422. doi: 10.1080/01449290601110715. [DOI] [Google Scholar]

- 27.Yu L., Li Y. Artificial intelligence decision-making transparency and employees’ trust: the parallel multiple mediating effect of effectiveness and discomfort. Behav. Sci. 2022;12(5):127. doi: 10.3390/bs12050127. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Tuan Mansor T.M., Mohamad Ariff A., Hashim H.A., Ngah A.H. External whistle blowing intentions of auditors: a perspective based on stimulus-organism-response theory. Corporate Governance. 2022;22(4):871–897. doi: 10.1108/CG-03-2021-0116. [DOI] [Google Scholar]

- 29.Lee S., Ha S., Widdows R. Consumer responses to high-technology products: product attributes, cognition, and emotions. J. Bus. Res. 2011;64(11):1195–1200. doi: 10.1016/j.jbusres.2011.06.022. [DOI] [Google Scholar]

- 30.Tang Z., Warkentin M., Wu L. Understanding employees’ energy saving behavior from the perspective of stimulus-organism-responses. Resour. Conserv. Recycl. 2019;140:216–223. doi: 10.1016/j.resconrec.2018.09.030. [DOI] [Google Scholar]

- 31.Amin R., Hossain M.A., Uddin M.M., Jony M.T.I., Kim M. Stimuli influencing engagement, satisfaction, and intention to use telemedicine services: an integrative model. Healthcare. 2022;10:1327. doi: 10.3390/healthcare10071327. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Helman S., Terry M.A., Pellathy T., Williams A., Dubrawski A., Clermont G., Hravnak M. Engaging clinicians early during the development of a graphical user display of an intelligent alerting system at the bedside. Int. J. Med. Inf. 2022;159 doi: 10.1016/j.ijmedinf.2021.104643. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Minh D., Wang H.X., Li Y.F., Nguyen T.N. Explainable artificial intelligence: a comprehensive review. Artif. Intell. Rev. 2022;55:3503–3568. doi: 10.1007/s10462-021-10088-y. [DOI] [Google Scholar]

- 34.Winfield A.F., Michael K., Pitt J., Evers V. Machine ethics: the design and governance of ethical AI and autonomous systems. Proc. IEEE. 2019;107(3):509–517. doi: 10.1109/JPROC.2019.2900622. [DOI] [Google Scholar]

- 35.Latikka R., Savela N., Koivula A., Oksanen A. Attitudes toward robots as equipment and coworkers and the impact of robot autonomy level. Int. J. Soc. Robot. 2021;13(7):1747–1759. doi: 10.1007/s12369-020-00743-9. [DOI] [Google Scholar]

- 36.Latikka R., Turja T., Oksanen A. Self-efficacy and acceptance of robots. Comput. Hum. Behav. 2019;93:157–163. doi: 10.1016/j.chb.2018.12.017. [DOI] [Google Scholar]

- 37.Esmaeilzadeh P., Mirzaei T., Dharanikota S. Patients’ perceptions toward human-artificial intelligence interaction in health care: experimental study. J. Med. Internet Res. 2021;23(11):e25856. doi: 10.2196/25856. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Złotowski J., Yogeeswaran K., Bartneck C. Can we control it? Autonomous robots threaten human identity, uniqueness, safety, and resources. Int. J. Hum Comput Stud. 2017;100:48–54. doi: 10.1016/j.ijhcs.2016.12.008. [DOI] [Google Scholar]

- 39.Xiong H., Yi S., Lin Y. The psychological status and self-efficacy of nurses during COVID-19 outbreak: a cross-sectional survey. INQUIRY: J. Health Care Organ. Prov. Financ. 2020;57:020957114. doi: 10.1177/0046958020957114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Humphrey S.E., Nahrgang J.D., Morgeson F.P. Integrating motivational, social, and contextual work design features: a meta-analytic summary and theoretical extension of the work design literature. J. Appl. Psychol. 2007;92(5):1332–1356. doi: 10.1037/0021-9010.92.5.1332. [DOI] [PubMed] [Google Scholar]

- 41.Schreier M., Fuchs C., Dahl D.W. The innovation effect of user design: exploring consumers' innovation perceptions of firms selling products designed by users. J. Mark. 2012;76(5):18–32. doi: 10.1509/jm.10.0462. [DOI] [Google Scholar]

- 42.Franke N., Schreier M., Kaiser U. The ‘I designed it myself’ effect in mass customization. Manag. Sci. 2010;56(1):125–140. doi: 10.1287/mnsc.1090.1077. [DOI] [Google Scholar]

- 43.Harrigan P., Evers U., Miles M., Daly T. Customer engagement with tourism social media brands. Tour. Manag. 2017;59:597–609. doi: 10.1016/j.tourman.2016.09.015. [DOI] [Google Scholar]

- 44.Chen L., Hsieh J.J.P.A., Rai A. How does intelligent system knowledge empowerment yield payoffs? Uncovering the adaptation mechanisms and contingency role of work experience. Inf. Syst. Res. 2022 doi: 10.1287/isre.2021.1097. [DOI] [Google Scholar]

- 45.Shahbaz M., Gao C., Zhai L., Shahzad F., Luqman A., Zahid R. Impact of big data analytics on sales performance in pharmaceutical organizations: The role of customer relationship management capabilities. PLoS One. 2021;16(4):e0250229. doi: 10.1371/journal.pone.0250229. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Clegg C., Spencer C. A circular and dynamic model of the process of job design. J. Occup. Organ. Psychol. 2007;80(2):321–339. doi: 10.1348/096317906X113211. [DOI] [Google Scholar]

- 47.Dillon T.W., Lending D., Crews T.R., Blankenship R. Nursing self-efficacy of an integrated clinical and administrative information system. CIN. Comput. Inform. Nurs. 2003;21(4):198–205. doi: 10.1097/00024665-200307000-00010. [DOI] [PubMed] [Google Scholar]

- 48.Pan Z., Lu Y., Gupta S., Hu Q. You change, I change: an empirical investigation of users’ supported incremental technological change in mobile social media. Internet Res. 2021;31(1):208–233. doi: 10.1108/INTR-06-2019-0226. [DOI] [Google Scholar]

- 49.Ha J.G., Page T., Thorsteinsson G. A study on technophobia and mobile device design. Int. J. Cont. 2011;7(2):19–25. doi: 10.5392/IJoC.2011.7.2.017. [DOI] [Google Scholar]

- 50.Lazar I.M., Panisoara G., Panisoara I.O. Digital technology adoption scale in the blended learning context in higher education: development, validation and testing of a specific tool. PLoS One. 2020;15(7):e0235957. doi: 10.1371/journal.pone.0235957. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Johnson D.G., Verdicchio M. AI anxiety. J. Assoc. Inf. Sci. Technol. 2017;68(9):2267–2270. doi: 10.1002/asi.23867. [DOI] [Google Scholar]

- 52.Savela N., Oksanen A., Pellert M., Garcia D. Emotional reactions to robot colleagues in a role-playing experiment. Int. J. Inf. Manag. 2021;60 doi: 10.1016/j.ijinfomgt.2021.102361. [DOI] [Google Scholar]

- 53.Fisher C.D., Minbashian A., Beckmann N., Wood R.E. Task appraisals, emotions, and performance goal orientation. J. Appl. Psychol. 2013;98(2):364–373. doi: 10.1037/a0031260. [DOI] [PubMed] [Google Scholar]

- 54.Kwak Y., Seo Y.H., Ahn J.W. Nursing students' intent to use AI-based healthcare technology: path analysis using the unified theory of acceptance and use of technology. Nurse Educ. Today. 2022;119 doi: 10.1016/j.nedt.2022.105541. [DOI] [PubMed] [Google Scholar]

- 55.Suseno Y., Chang C., Hudik M., Fang E.S. Beliefs, anxiety and change readiness for artificial intelligence adoption among human resource managers: the moderating role of high-performance work systems. Int. J. Hum. Resour. Manag. 2021;33(6):1209–1236. doi: 10.1080/09585192.2021.1931408. [DOI] [Google Scholar]

- 56.Ryder R.D. Speciesism: the original leaflet. Crit. Soc. 2010;2:1–2. [Google Scholar]

- 57.Costello K., Hodson G. Explaining dehumanization among children: the interspecies model of prejudice. Br. J. Soc. Psychol. 2014;53(1):175–197. doi: 10.1111/bjso.12016. [DOI] [PubMed] [Google Scholar]

- 58.Dhont K., Hodson G. Why do right-wing adherents engage in more animal exploitation and meat consumption? Pers. Individ. Differ. 2014;64:12–17. doi: 10.1016/j.paid.2014.02.002. [DOI] [Google Scholar]

- 59.Dhont K., Hodson G., Costello K., MacInnis C.C. Social dominance orientation connects prejudicial human-human and human-animal relations. Pers. Individ. Differ. 2014;61:105–108. doi: 10.1016/j.paid.2013.12.020. [DOI] [Google Scholar]

- 60.Schmitt B. Speciesism: an obstacle to AI and robot adoption. Mark. Lett. 2019;31(1):3–6. doi: 10.1007/s11002-019-09499-3. [DOI] [Google Scholar]

- 61.Haslam N. Dehumanization: an integrative review. Pers. Soc. Psychol. Rev. 2006;10(3):252–264. doi: 10.1207/s15327957pspr1003_4. [DOI] [PubMed] [Google Scholar]

- 62.Zhou G., Chu G., Li L., Meng L. The effect of artificial intelligence on China’s labor market. China Econ. J. 2020;13(1):24–41. doi: 10.1080/17538963.2019.1681201. [DOI] [Google Scholar]

- 63.Horta O., Albersmeier F. Defining speciesism. Phil. Compass. 2020;15(11):1–9. doi: 10.1111/phc3.12708. [DOI] [Google Scholar]

- 64.Bryson K., Soligo C., Sommer V. Interrogating boundaries against animals and machines: human speciesism in British newspapers. J. Posthuman Stud. 2020;4(2):129. doi: 10.5325/jpoststud.4.2.0129. [DOI] [Google Scholar]

- 65.Huo W., Zhang Z., Qu J., Yan J., Yan S., Yan J., Shi B. Speciesism and preference of human-artificial intelligence interaction: a study on medical artificial intelligence. Int. J. Hum.-Comput. Interact. 2023 doi: 10.1080/10447318.2023.2176985. [DOI] [Google Scholar]

- 66.Caviola L., Everett J.A.C., Faber N.S. The moral standing of animals: towards a psychology of speciesism. J. Pers. Soc. Psychol. 2019;116(6):1011–1029. doi: 10.1037/pspp0000182. [DOI] [PubMed] [Google Scholar]

- 67.Riek B.M., Mania E.W., Gaertner S.L. Intergroup threat and outgroup attitudes: a meta-analytic review. Pers. Soc. Psychol. Rev. 2006;10(4):336–353. doi: 10.1207/s15327957pspr1004_4. [DOI] [PubMed] [Google Scholar]

- 68.Stephan W.G., Renfro C.L. Psychology Press; New York: 2002. From Prejudice to Intergroup Emotions: Differentiated Reactions to Social Groups. The Role of Threat in Intergroup Relations; p. 299. [Google Scholar]

- 69.Silpakit P., Fisk R.P. Services Marketing in a Changing Environment. American Marketing Association; Chicago, IL: 1985. Participatizing the service encounter: a theoretical framework; pp. 117–121. [Google Scholar]

- 70.Shah S., Robinson I. Benefits of and barriers to involving users in medical device technology development and evaluation. Int. J. Technol. Assess. Health Care. 2007;23(1):131–137. doi: 10.1017/S0266462307051677. [DOI] [PubMed] [Google Scholar]

- 71.Hair J.F., Hult G.T.M., Ringle C.M., Sarstedt M. 2nd ed. Sage Publications Ltd; 2016. A Primer on Partial Least Squares Structural Equation Modeling. [Google Scholar]

- 72.Hair J.F., Ringle C.M., Sarstedt M. PLS-SEM: Indeed a silver bullet. J. Market. Theory Pract. 2011;19(2):139–152. doi: 10.2753/MTP1069-6679190202. [DOI] [Google Scholar]

- 73.Nunnally J.C., Bernstein I.H. 3rd ed. McGraw-Hill; New York: 1994. Psychometric Theory. [Google Scholar]

- 74.Fornell C., Larcker D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981;18:39–50. doi: 10.1177/002224378101800104. [DOI] [Google Scholar]

- 75.Kock N. Common method bias in PLS-SEM: a full collinearity assessment approach. Int. J. E-Collab. 2015;11(4):1–10. doi: 10.4018/ijec.2015100101. [DOI] [Google Scholar]

- 76.Wetzels M., Odekerken-Schröder G., van Oppen C. Using PLS path modeling for assessing hierarchical construct models: guidelines and empirical illustration. MIS Q. 2009;33(1):177–195. doi: 10.2307/20650284. [DOI] [Google Scholar]

- 77.Hair J.F., Risher J.R., Sarstedt M., Ringle C.M. When to use and how to report the results of PLS-SEM. Eur. Bus. Rev. 2019;31(1):2–24. doi: 10.1108/EBR-11-2018-0203. [DOI] [Google Scholar]

- 78.Preacher K.J., Selig J.P. Advantages of Monte Carlo confidence intervals for indirect effects. Commun. Methods Meas. 2012;6(2):77–98. doi: 10.1080/19312458.2012.679848. [DOI] [Google Scholar]

- 79.Gursoy D., Chi O.H., Lu L., Nunkoo R. Consumers acceptance of artificially intelligent (AI) device use in service delivery. Int. J. Inf. Manag. 2019;49:157–169. doi: 10.1016/j.ijinfomgt.2019.03.008. [DOI] [Google Scholar]

- 80.Tamilmani K., Rana N.P., Wamba S.F., Dwivedi R. The extended unified theory of acceptance and use of technology (UTAUT2): a systematic literature review and theory evaluation. Int. J. Inf. Manag. 2021;57 doi: 10.1016/j.ijinfomgt.2020.102269. [DOI] [Google Scholar]

- 81.Venkatesh V., Thong J.Y.L., Xu X. Unified theory of acceptance and use of technology: a synthesis and the road ahead. J. Assoc. Inf. Syst. 2016;17(5):328–376. doi: 10.17705/1jais.00428. [DOI] [Google Scholar]

- 82.Turan A., Tunc A.O., Zehir C. A theoretical model proposal: personal innovativeness and user involvement as antecedents of unified theory of acceptance and use of technology. Proc. Soc. Behav. Sci. 2015;210:43–51. doi: 10.1016/j.sbspro.2015.11.327. [DOI] [Google Scholar]

- 83.Glikson E., Woolley A.W. Human trust in artificial intelligence: review of empirical research. Acad. Manag. Ann. 2020;14(2):627–660. doi: 10.5465/annals.2018.0057. [DOI] [Google Scholar]

- 84.Edwards C., Edwards A., Stoll B., Lin X., Massey N. Evaluations of an artificial intelligence instructor’s voice: social identity theory in human-robot interactions. Comput. Hum. Behav. 2019;90:357–362. doi: 10.1016/j.chb.2018.08.027. [DOI] [Google Scholar]

- 85.Wysocki O., Davies J.K., Vigo M., Armstrong A.C., Landers D., Lee R.B.C., Freitas A. Assessing the communication gap between AI models and healthcare professionals: explainability, utility and trust in AI-driven clinical decision-making. Artif. Intell. 2023;316 doi: 10.1016/j.artint.2022.103839. [DOI] [Google Scholar]

- 86.Ho M., Mantello P., Ghotbi N., Nguyen M., Nguyen H.T., Vuong Q. Rethinking technological acceptance in the age of emotional AI: Surveying Gen Z (Zoomer) attitudes toward non-conscious data collection. Technol. Soc. 2022;70 doi: 10.1016/j.techsoc.2022.102011. [DOI] [Google Scholar]

- 87.Baysari M.T., Hardie R.A., Lake R., Richardson L., McCullagh C., Gardo A., Westbrook J. Longitudinal study of user experiences of a CPOE system in a pediatric hospital. Int. J. Med. Inf. 2018;109:5–14. doi: 10.1016/j.ijmedinf.2017.10.018. [DOI] [PubMed] [Google Scholar]

- 88.Chen H., Li L., Chen Y. Explore success factors that impact artificial intelligence adoption on telecom industry in China. J. Manage. Anal. 2021;8(1):36–68. doi: 10.1080/23270012.2020.1852895. [DOI] [Google Scholar]

- 89.Chan K.W., Yim C.K., Lam S.S.K. Is customer participation in value creation a double-edged sword? Evidence from professional financial services across cultures. J. Mark. 2010;74(3):48–64. doi: 10.1509/jmkg.74.3.48. [DOI] [Google Scholar]

- 90.Rahman M.S., Ko M., Warren J., Carpenter D. Healthcare Technology Self-Efficacy (HTSE) and its influence on individual attitude: An empirical study. Comput. Hum. Behav. 2016;58:12–24. doi: 10.1016/j.chb.2015.12.016. [DOI] [Google Scholar]

- 91.Turja T., Rantanen T., Oksanen A. Robot use self-efficacy in healthcare work (RUSH): development and validation of a new measure. AI & Soc. 2019;34:137–143. doi: 10.1007/s00146-017-0751-2. [DOI] [Google Scholar]

- 92.Brougham D., Haar J. Smart technology, artificial intelligence, robotics, and algorithms (STARA): employees’ perceptions of our future workplace. J. Manag. Organ. 2018;24(2):239–257. doi: 10.1017/jmo.2016.55. [DOI] [Google Scholar]