Abstract

The ability to detect liquid argon scintillation light from within a densely packed high-purity germanium detector array allowed the Gerda experiment to reach an exceptionally low background rate in the search for neutrinoless double beta decay of $^{76}$Ge. Proper modeling of the light propagation throughout the experimental setup, from any origin in the liquid argon volume to its eventual detection by the novel light read-out system, provides insight into the rejection capability and is a necessary ingredient to obtain robust background predictions. In this paper, we present a model of the Gerda liquid argon veto, as obtained by Monte Carlo simulations and constrained by calibration data, and highlight its application for background decomposition.

Introduction

Provided with an array of germanium detectors, made from isotopically enriched high-purity germanium (HPGe) material suspended in a clean liquid argon (LAr) bath, the Germanium Detector Array (Gerda) experiment set out to probe the neutrino’s particle nature in a search for the neutrinoless double beta ($0\nu\beta\beta$) decay of $^{76}$Ge [1]. The ability to detect scintillation light emerging from coincident energy depositions in the LAr, combined with pulse shape discrimination (PSD) techniques [2], made it possible to cut the background level of the second phase (Phase II) to a record low [3]. No signal was found, which translates into one of the most stringent lower limits on the half-life of the $0\nu\beta\beta$ decay of $^{76}$Ge, at $1.8 \times 10^{26}$ years at 90% C.L. [4].

Based on dedicated Monte Carlo simulations of the scintillation light propagation, the model of the LAr veto rejection grants insight into the light collection from various regions of the highly heterogeneous setup. The full methodology and its first application are described in this document, which is structured as follows: Sect. 2 offers a brief description of the Gerda instrumentation, focusing on the Phase II light read-out system. In Sect. 3 the connection between photon detection probabilities and event rejection is made. Section 4 summarizes the Monte Carlo implementation and the chosen optical properties, while Sect. 5 describes the tuning of the model parameters on calibration data. In Sect. 6 photon detection probability maps are introduced, and in Sect. 7 their application for background decomposition is highlighted. In Sect. 8 conclusions are drawn.

Instrumentation

The Gerda experimental site was Hall A of the INFN Laboratori Nazionali del Gran Sasso (LNGS) underground laboratory in central Italy. Equipped with a large-scale shielding infrastructure (a 64 m$^3$ cryostat inside a 590 m$^3$ water tank), Gerda enclosed a low-background 5.0-grade LAr environment, which from December 2015 to November 2019 housed the heart of Phase II: 40, later 41, HPGe detectors in a 7-string array configuration, surrounded by a light read-out instrumentation. The veto design comprised two sub-systems: low-activity photomultiplier tubes (PMTs) [5] and wavelength-shifting (WLS) fibers coupled to silicon photomultipliers (SiPMs) [6]. The latter was upgraded in spring 2018. The reader is referred to [7] for a detailed description of the Gerda experimental setup.

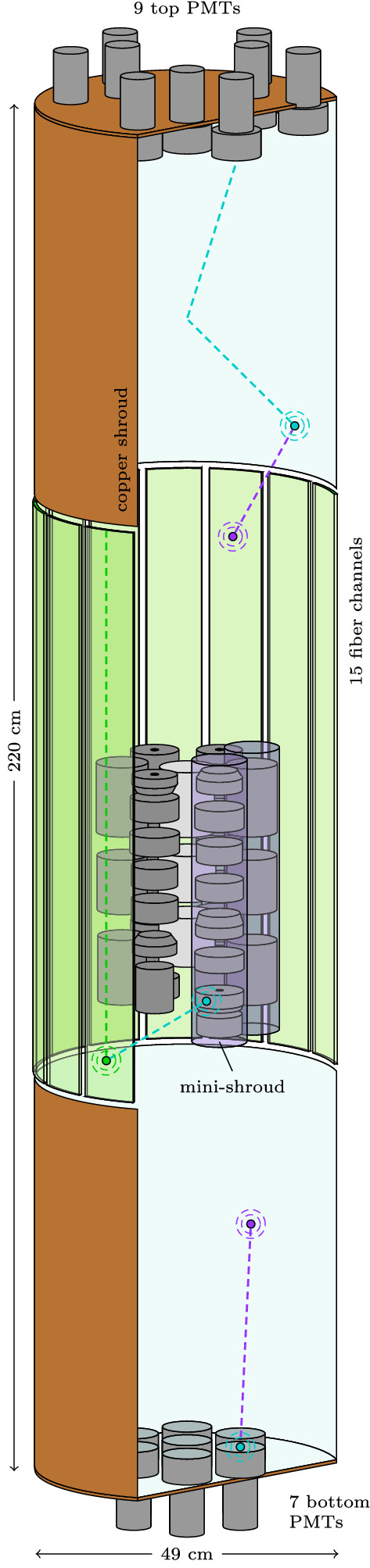

The 3-inch Hamamatsu R11065-20 Mod PMTs were selected for their performance at LAr temperature and enhanced radiopurity (< 2 mBq activity in both $^{228}$Th and $^{226}$Ra) [7–9]. Still, they contributed significantly to the background within the inner Phase II setup and were thus placed at >1 m from any HPGe detector, giving the LAr instrumentation, together with the limited cryostat entrance width, its elongated cylindrical shape. With space for support and calibration sources to enter, 3 off-center groups of 3 PMTs each were installed on top, whereas the bottom plate held 7 centrally mounted PMTs. Each PMT’s entrance window was covered with tetraphenyl butadiene (TPB) embedded in polystyrene, in order to shift the incident vacuum-ultraviolet (VUV) scintillation light from LAr to a detectable wavelength. On the inside, the horizontal copper support plates were covered with a highly reflective TPB-painted VM2000 multi-layer polymer, whereas lateral guidance of light towards the top/bottom was ensured by a TPB dip-coated diffuse-reflecting polytetrafluoroethylene (PTFE) foil [10], stitched to the 100 µm-thin copper shrouds. A sketch of the setup is shown in Fig. 1.


Fig. 1.

LAr veto instrumentation concept. Transport of light signals towards the PMTs or SiPMs relies on WLS processes in the TPB layers or optical fibers. Several potential light paths are indicated. Support structure details, electronics as well as individual fibers are not drawn

With typical SiPMs having a photo-sensitive area of $\mathcal{O}(1)$ cm$^2$, large-scale installations of >1 m$^2$ photo coverage still represent a technological challenge [11]. Nonetheless, coupled to WLS fibers of < 0.1 mBq/kg activity in both $^{228}$Th and $^{226}$Ra, which serve as radio-pure light collectors [12], the detection power of a single device is largely enhanced. The active chip size of the KETEK PM33100 SiPMs is 3 × 3 mm$^2$; they feature a 100 µm micro-cell pitch and were purchased “in die”, i.e. without packaging, allowing for a custom low-activity housing. Each of the 15 channels comprised 6 SiPMs, connected in parallel on copper-laminated PTFE holders and cast into optical cement, amounting to a total active surface of 8.1 cm$^2$. The doubly-cladded BCF-91A fibers of square-shaped 1 × 1 mm$^2$ cross section were covered with TPB by evaporation, routed vertically to cover the central veto section, bent by 180° at the bottom and coupled to different SiPMs on both top ends. Guidance of the individual fibers was ensured by micro-machined copper holders, attempting to keep them at a 45° rotation, facing their full $\sqrt{2}$ mm diagonal towards the center. The total length of the 405 fibers was about 730 m.

Each of the 40 cm-long HPGe strings was enclosed in a nylon “mini shroud”, transparent to visible light and covered on both sides with TPB. It provided a mechanical barrier that limited the accumulation of $^{42}$K ions, a progeny of cosmogenic $^{42}$Ar, on the HPGe detector surfaces [13].

The data acquisition of the entire array, based on SIS3301 Struck [14] FADCs, including the LAr veto photo sensors, was triggered once the signal of a single HPGe detector exceeded a pre-set online threshold. No independent trigger on the light read-out was implemented. The veto condition was evaluated offline, allowing for time-dependent channel-specific thresholds right above the respective noise pedestals and an anti-coincidence window that takes into account the characteristic scintillation emission timing as well as the HPGe detector signal formation dynamics. The typical thresholds were set at about 0.5 photo-electrons within to + 5 around the HPGe detector trigger. Any light signal over threshold in any channel was sufficient to classify the event as background.

Photon detection probabilities

Upon interaction of ionizing radiation, ultra-pure LAr scintillates with a light yield of 40 photons/keV [15]. There is an ongoing discussion whether this number could be smaller [16], but in any case, the actual light output is strongly reduced in the presence of trace contaminants and a priori not precisely known for many experiments, including Gerda. An estimate based on the measured triplet lifetime of the argon excimer state of about 1.0 µs [7] limits the Gerda light yield to < 71% of the nominal pure-argon value, or < 28 photons/keV.

Given a light yield of this order, the number of primary VUV photons produced in a typical Gerda background event can be enormous. Coincident energy depositions in the LAr (e.g. from Th or U trace impurities) frequently reach MeV energies, which at the above light yield corresponds to up to tens of thousands of photons per event. The computational effort to track all of these optical photons represents a challenge, especially when considering the feedback between rejection power and required statistics: the larger the coincident energy release, the larger the suppression, and the larger the statistics required to obtain a proper prediction of the HPGe spectrum after veto application. However, there is a workaround for this problem: the light propagation can be separated from the simulations that provide the energy depositions in the LAr.

The number of primary photons $N$ generated from a single energy deposition $E$ in the LAr follows a Poisson distribution with expectation value $\bar{N} = E \cdot L'$, where $L'$ is the primary light yield. Each of these photons has the opportunity to get detected with a photon detection probability $\xi(\vec{x})$, specific for interaction point $\vec{x}$. Accordingly, the number of detected photons $n$ is the result of $N$ Bernoulli trials and stays Poisson distributed with expectation value $\bar{n} = E \cdot L' \cdot \xi(\vec{x})$. Given a full event, with total coincident energy in the LAr distributed over several interaction points $\vec{x}_i$, the probability mass function (pmf) for the total number of signal photons reads

$$\Lambda(n) = \mathrm{Pois}\!\left(n;\ \sum_i E_i \cdot L' \cdot \xi(\vec{x}_i)\right) \tag{1}$$

As the convolution of several independent Poisson processes, it stays a Poisson distribution described by the sum of the expectation values. Provided that $\xi(\vec{x})$ is known, veto information can be provided on the basis of the underlying energy depositions $E_i$, and does not require optical simulations. It relies on the assumption that each set of photons, born from a particle’s energy depositions $E_i$, solely depends on the primary light yield and is emitted isotropically.
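The separation of light propagation from energy deposition described above can be illustrated with a minimal sketch. It assumes that a detection probability is available as a function of position and that a single detected photon is enough to veto an event; the function names, the toy map `toy_xi` and the example energy depositions are hypothetical and only serve the illustration.

```python
import math

def veto_probability(depositions, xi, light_yield=28.0):
    """Probability to detect at least one photon for one event.

    depositions: iterable of (position, energy_keV) pairs in the LAr
    xi:          callable mapping a position to a photon detection probability
    light_yield: primary photons per keV (28 is the upper estimate quoted above)
    """
    # Expectation values of independent Poisson processes simply add up
    mean_photons = sum(energy * light_yield * xi(pos) for pos, energy in depositions)
    # P(n >= 1) = 1 - P(n = 0) for a Poisson distribution
    return 1.0 - math.exp(-mean_photons)

def toy_xi(pos):
    # Hypothetical map: 0.1% outside a radius of 100 mm, 0.01% inside
    x, y, _ = pos
    return 1e-3 if math.hypot(x, y) > 100.0 else 1e-4

# Two made-up energy depositions: 50 keV outside and 10 keV inside the array
event = [((150.0, 0.0, 0.0), 50.0), ((20.0, 10.0, 5.0), 10.0)]
p_veto = veto_probability(event, toy_xi)
```

In this toy case the expected number of detected photons is 1.428, so the event is rejected in roughly three out of four cases, without tracking a single optical photon.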

Experiments using scintillation detectors traditionally quote the yield of detected photo-electrons (p.e.) per unit of deposited energy, i.e. the experimental light yield, in e.g. p.e./keV. This number is only meaningful when considering a homogeneous detector with uniform response over most of its volume. By construction this is not the case for the Gerda LAr light read-out system, whose purpose is to detect light that emerges from within and around the optically dense HPGe detector array. With this in mind, good veto performance does not necessarily go hand-in-hand with maximum experimental light yield, especially when considering background sources (e.g. residual natural radioactivity of the HPGe detector support structure) that deposit energy in the “darkest” corners of the array (e.g. between an HPGe detector and its holder plate), where the detection probabilities are minimal or even zero. Hence, it is necessary to determine the full three-dimensional map of light detection probabilities $\xi(\vec{x})$, with special emphasis on the areas from which little light is collected. This can only be done in a dedicated Monte Carlo study that takes into account the full photon detection chain of the Gerda LAr instrumentation.

A simple estimate

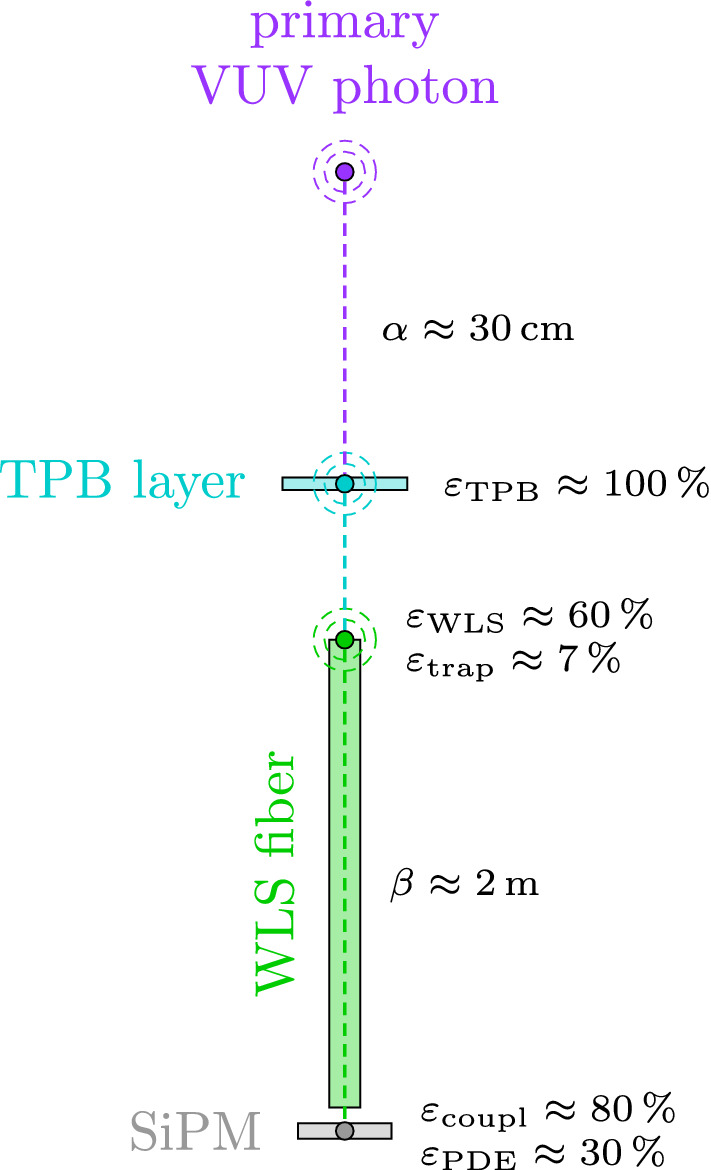

Before running such simulations, it is worthwhile to evaluate the impact of the various steps a primary VUV photon undergoes until its detection. Gerda uses a hybrid system consisting of TPB-coated WLS fibers with SiPM read-out and PMTs to detect the LAr scintillation light that emerges from in and around the HPGe detector array. If we neglect most geometric effects, the photon detection probability can be broken down into factors that depend on basic properties of the materials and components involved. Given a primary photon that is emitted in the system LAr-TPB-fiber-SiPM, as depicted in Fig. 2, the probability for its detection can be described by

$$\xi \propto e^{-x/\alpha(\lambda)} \cdot \epsilon_{\mathrm{TPB}} \cdot \epsilon_{\mathrm{WLS}} \cdot \epsilon_{\mathrm{trap}} \cdot e^{-y/\beta(\lambda)} \cdot \epsilon_{\mathrm{coupl}} \cdot \epsilon_{\mathrm{PDE}}(\lambda)$$

where $\lambda$ is the photon wavelength. First, the VUV photon has to travel a certain distance $x$ in LAr, at the risk of being absorbed in interactions with residual impurities. The absorption length $\alpha$ at LAr peak emission is on the order of tens of centimeters, depending on the argon purity [16, 18, 19]. The moment the VUV photon reaches and gets absorbed in any TPB layer, a blue photon with peak emission at 420 nm is re-emitted [20]. The efficiency $\epsilon_{\mathrm{TPB}}$ for this process is close to 100% [9, 21]. Since the typical distance for a first encounter with a TPB-coated surface is of similar order as the absorption length itself, about 1/e of the primary photons make it through this first part of the journey. Once a photon is shifted to blue, absorption in the LAr becomes negligible, as the absorption length for visible light exceeds the actual system size. Hence, it does not necessarily matter whether the blue photon enters a fiber directly at this point or later. As soon as it does, the photon undergoes a second wavelength-shifting step and is shifted to green with peak emission at 494 nm [22]. The corresponding efficiency $\epsilon_{\mathrm{WLS}}$, i.e. the overlap between the TPB emission and fiber absorption spectrum, is about 60%. The green photon will stay trapped within the fiber with a trapping efficiency $\epsilon_{\mathrm{trap}}$ of about 7% [22] and arrive at its end after a distance $y$ of about half of its absorption length $\beta$, which adds another factor of $e^{-1/2} \approx 0.6$. The probability $\epsilon_{\mathrm{coupl}}$ to successfully couple the photon into the SiPM enters as a further factor, whereas the photon detection efficiency (PDE) $\epsilon_{\mathrm{PDE}}$ of being detected as a photo-electron signal is about 30% at the green fiber emission [23]. Multiplication of all individual contributions results in an overall detection probability of not more than 0.2%, and it can be anticipated that, including geometric effects (e.g. shadowing or optical coverage), the light collection will not exceed 0.1% for most regions of the Gerda LAr volume.

Fig. 2.

Simplified light collection chain. This one-dimensional representation depicts the main material properties that affect the light collection with the Gerda fiber-SiPM instrumentation. The overall light collection efficiency for the primary VUV photon is of $\mathcal{O}(0.1)$%. In practice, effects such as shadowing, reflections and optical coverage also contribute.
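As a quick cross-check of the simple estimate, the factors quoted in the text can be multiplied out. This is only a sketch: the fiber transit factor is taken as exp(-1/2), following the statement that the photon travels about half an absorption length, and the fiber-to-SiPM coupling efficiency is left as an input, with the 70% used in the example being a purely hypothetical value.

```python
import math

# Factors quoted in the text
p_reach_tpb = math.exp(-1.0)      # about 1/e of the VUV photons reach a TPB layer
eff_tpb = 1.0                     # VUV-to-blue shifting efficiency, "close to 100%"
eff_fiber_wls = 0.60              # overlap of TPB emission and fiber absorption
eff_trapping = 0.07               # fraction of green photons trapped in the fiber
p_fiber_transit = math.exp(-0.5)  # travel over about half an absorption length
pde_sipm = 0.30                   # SiPM photon detection efficiency for green light

def detection_probability(eff_coupling):
    """Product of all factors; eff_coupling is an assumed input."""
    return (p_reach_tpb * eff_tpb * eff_fiber_wls * eff_trapping
            * p_fiber_transit * eff_coupling * pde_sipm)

upper_bound = detection_probability(1.0)  # perfect fiber-to-SiPM coupling
example = detection_probability(0.7)      # hypothetical 70% coupling
```

Even with perfect coupling the product stays below 0.3%, and a coupling efficiency somewhat below unity brings it to the quoted level of about 0.2%.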

Monte Carlo implementation

The Gerda instrumentation is implemented in the Geant4-based [24–26] Majorana-Gerda (MaGe) simulation framework [27]. For what concerns the propagation of optical photons from typical background processes, most important are the geometries enclosed by the LAr veto instrumentation as well as the optical properties of the corresponding materials.

Geometry

The HPGe detector array, including all auxiliary components, is implemented to the best available knowledge, but making reasonable approximations. The reader may find detailed technical specifications such as dimensions and materials documented in [7]. The simulated setup includes: individually sized and placed HPGe detectors in their silicon/copper mounts, TPB-covered nylon mini-shrouds around each string, high-voltage and signal flat cables running from each detector to the front-end electronics, the front-end electronics themselves as well as copper structural components. Approximations are made when full degeneracy of events originating from the respective parts is expected, e.g. the level of detail of the electronics boards is low and no detailed cable routing is implemented. Details are discussed in [28]. As a consequence, shadowing effects that impact the optical photon propagation, but not the standard background studies, may not be captured perfectly.

The PMTs are implemented as cylinders, with a quartz entrance window and a photo-sensitive cathode. They are placed at their respective 9 (7) positions in the top (bottom) copper plate, which to the inside is covered with a specular reflector that emulates VM2000. In contrast, the inside of the copper shrouds is lined with a diffuse PTFE reflector that represents the reflector foil. All reflector surfaces, as well as the PMT entrance windows, are covered with a wavelength-shifting TPB layer.


The fiber shroud is modeled as cylinder segments covering the central part of the veto volume. Every segment contains a core, two claddings and one thin TPB layer, just as the real fibers. Their bottom ends have reflective surfaces attached, whereas optical photons reaching the upper ends are registered by photosensitive surfaces, each of them representing one SiPM. This differs from the real-world implementation, where the fibers are bent, up-routed and read-out on both ends. To avoid any misinterpretation of in-fiber correlations between channels that are connected to the same fibers, both the Monte Carlo and data signals are re-grouped to represent one channel per fiber module, resulting in a total of 9 fully independent channels. Due to sagging and uneven tensioning the optical coverage of the fibers was reduced in comparison to the maximum possible value of 75%. In the simulation, a gap between the fiber segments parametrizes the coverage of the fiber shroud. Analyzing photos of the mounted fiber modules the real coverage was estimated to be around 50%.

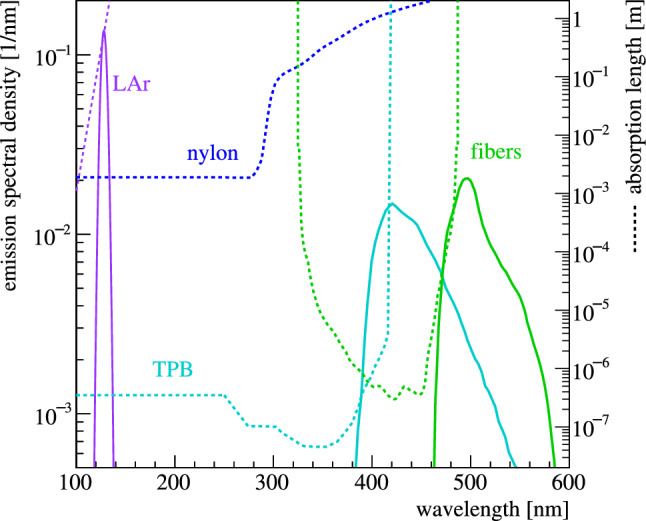

Optical properties

Figure 3 compiles the relevant emission and absorption features implemented for the various materials. The emission of VUV scintillation photons from the LAr follows a simple Gaussian distribution centered at 128 nm with a standard deviation of 2.9 nm. It neglects contributions at longer wavelengths, which have orders of magnitude lower intensity for pure LAr [29]. The refractive index of the LAr is implemented using the empirical Sellmeier formalism, with the coefficients obtained in [30]. Building on this, the wavelength-dependent Rayleigh scattering length is derived [31]. It corresponds to about 70 cm at LAr peak emission, which is shorter than recently suggested [32]. Operation of the LEGEND Liquid Argon Monitoring Apparatus (LLAMA) during the Gerda decommissioning points towards a VUV attenuation length of about 30 cm [33], and the absorption length was set accordingly. The absorption length is modeled over the full wavelength range by extending it from 128 nm with an ad-hoc exponential function. The primary VUV scintillation yield is considered a free parameter and by default set to 28 photons/keV. A dependence of the photon yield on the incident particle, i.e. quenching, as well as characteristic singlet and triplet timing are implemented. The TPB absorption length is taken from [9], the emission spectrum from [20]. Individual emission spectra, where available, are implemented for TPB on nylon [13], VM2000 [34] as well as the reflector foil [10]. The absorption length of nylon is taken from [35]. Absorption and emission of the fiber material use the data presented in [22], normalized to measurements at 400 nm. The quantum efficiency of the PMTs [36] and PDE of the SiPMs [23] have been extracted from the product data sheets provided by the vendors. The reflectivities of germanium, copper, silicon and PTFE above 280 nm are taken from [37], whereas their values at VUV wavelengths are largely based on assumptions. The reflectivity of VM2000 is taken from [34], the one of the reflector foil from [38]. The exact optical property values implemented in the simulation have been reported in [39].

Fig. 3.

Emission spectra (solid lines) and absorption length (dashed lines) of indicated materials. The primary emission from the LAr follows a simple Gaussian distribution centered at 128 nm. Its absorption length connects to larger wavelength with an ad-hoc exponential scaling. Absorption and re-emission appears in TPB and the polystyrene fiber material. Nylon is only transparent to larger wavelength

Uncertainties

A priori, the bare simulations are not expected to reproduce the data. Details like partially inactive SiPM arrays, coating non-uniformities and shadowing by real-life cable management are not captured by the Monte Carlo implementation. Similarly, input parameters measured under conditions differing from those in Gerda, e.g. at room temperature or at a different wavelength, introduce additional uncertainty.

Back to Eq. 1 and the photon detection probabilities $\xi(\vec{x})$: as the number of primary VUV photons is itself uncertain, any linear effect that is constant across the LAr volume is degenerate with the primary light yield $L'$, and thus only the product $L' \cdot \xi(\vec{x})$ can be constrained by data-Monte Carlo comparison. It follows that, if a fixed primary light yield is assumed, its true value is fully absorbed in a global scaling of the efficiencies $\epsilon_i$, individual to each light detection channel $i$. The set $\{\epsilon_i\}$ may further absorb any other global effect, e.g. an inaccurate TPB quantum efficiency, as well as any local channel-specific feature, e.g. varying photon detection efficiencies of the photo sensors. Given the large set of potential uncertainties, the $\epsilon_i$ are treated as unconstrained and may take any value between zero and unity.

Parameter optimization

To obtain a predictive model of the performance of the LAr veto system, residual degrees of freedom must be removed. In the following, we describe the methodology employed to statistically infer the value of the efficiencies $\epsilon_i$ by comparing simulated to experimental data. The full evidence of the model parameters is contained in a likelihood function, which has been maximized for special calibration data.

Given a class of events, the pmf $\Lambda(n)$ that describes the number of photons $n$ detected by some LAr veto channel is the convolution of two contributions:

$$\Lambda(n) = (\Lambda_S * \Lambda_R)(n) = \sum_{k=0}^{n} \Lambda_S(k)\, \Lambda_R(n-k) \tag{2}$$

It is a simultaneous measurement of light from true coincidences, $\Lambda_S$, that accompany the corresponding HPGe energy deposition, as well as random coincidences, $\Lambda_R$, largely produced by spectator decays such as $^{39}$Ar in the LAr. While $\Lambda_S$ may be provided from simulations, randomly triggered events allow an evaluation of $\Lambda_R$ from data. However, as the measured signal amplitudes suffer from non-linear effects, e.g. afterpulsing and optical crosstalk, that are themselves under study and at present not implemented in the simulation, no direct pmf comparison is possible and instead the binary projection of Eq. 2 is used. In the binary “light/no-light” projection, where $n = 0$ corresponds to no light and $n > 0$ to a positive light detection, the pmf breaks down to a single expectation value, given by

$$p = p_S\,(1 - p_R) + (1 - p_S)\, p_R + p_S\, p_R = 1 - (1 - p_S)(1 - p_R) \tag{3}$$

A positive light detection is either truly coincident without random contribution, fully random or a simultaneous detection of both. It is complementary to no detection, neither as true nor as random coincidence. $p_S$ ($p_R$) is the detection probability for true (random) coincidences, and $1 - p_S$ quantifies the true survival probability of the underlying class of events, corrected for random coincidences.
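The binary projection can be checked numerically with a few lines. The sketch below assumes independent true and random coincidences, as described above; the function names and the 60% signal detection probability are made up, while the 7.5% random coincidence probability is the value listed for the first source position in Tab. 1.

```python
def observed_probability(p_true, p_random):
    """Chance to see light from true coincidences, random coincidences or both."""
    return 1.0 - (1.0 - p_true) * (1.0 - p_random)

def true_survival(observed_survival, p_random):
    """Survival probability corrected for random coincidences."""
    return observed_survival / (1.0 - p_random)

p_rand = 0.075  # random coincidence probability of 7.5%, as in Tab. 1
p_sig = 0.60    # hypothetical signal detection probability
p_obs = observed_probability(p_sig, p_rand)

# The three exclusive cases named in the text add up to the same number
three_terms = p_sig * (1 - p_rand) + (1 - p_sig) * p_rand + p_sig * p_rand
corrected = true_survival(1.0 - p_obs, p_rand)
```

Dividing the observed survival fraction by the probability of seeing no random light recovers the true survival probability, here 40%.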

Even though the data is reduced to binary information, the simulated pmf $\Lambda_S(n)$ allows the additional detection efficiency $\epsilon$ to be folded into the Monte Carlo expectation. Given a count of $n$ photons in the bare simulation, an effective detection of $m \le n$ photons can be represented as a sequence of Bernoulli trials with probability $\epsilon$. The pmf $\Lambda'_S(m)$ is the result of binomial re-population throughout all $n \ge m$:

$$\Lambda'_S(m) = \sum_{n \ge m} \Lambda_S(n) \binom{n}{m}\, \epsilon^{m}\, (1-\epsilon)^{n-m} \tag{4}$$

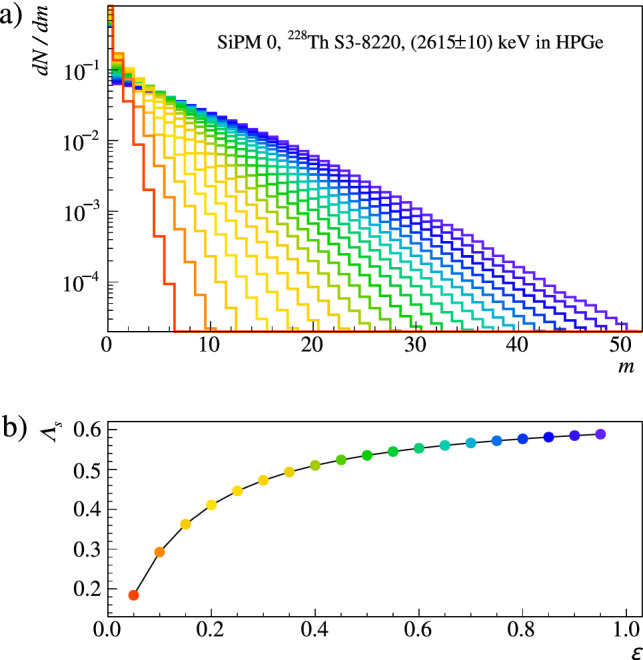

This technique avoids re-simulation for different values of $\epsilon$. Figure 4 depicts Monte Carlo spectra processed for different efficiencies. Back in binary space, the detection probability $p_S$, i.e. the chance to see one photon or more as true coincidence, is

$$p_S(\epsilon) = 1 - \sum_{n} \Lambda_S(n)\,(1-\epsilon)^{n} \tag{5}$$

with $\Lambda_S(n)$ the bare simulated pmf. It is the complement of no detection, i.e. of the population of the “zero bin” in Eq. 4, and allows uncertainties on the bare frequencies $\Lambda_S(n)$ to be propagated into $p_S$.
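A minimal sketch of the binomial re-population is given below. It assumes the bare simulation output is available as a list of probabilities indexed by photon count; the function names and the three-bin toy pmf are illustrative only.

```python
from math import comb

def repopulate(pmf, eff):
    """Fold a detection efficiency into a photon-count pmf (binomial thinning).

    pmf: list where pmf[n] is the probability of n photons in the bare simulation
    eff: probability for each of those photons to be detected
    """
    out = [0.0] * len(pmf)
    for n, p_n in enumerate(pmf):
        for m in range(n + 1):
            # n simulated photons end up as m detected photons
            out[m] += p_n * comb(n, m) * eff**m * (1.0 - eff) ** (n - m)
    return out

def detection_probability(pmf, eff):
    """Chance to see at least one photon: complement of the zero bin."""
    return 1.0 - repopulate(pmf, eff)[0]

bare = [0.2, 0.3, 0.5]  # toy pmf for n = 0, 1, 2 photons
folded = repopulate(bare, 0.5)
p_detect = detection_probability(bare, 0.5)
```

For the toy input, half of the one-photon events and a quarter of the two-photon events move into the zero bin, which lowers the detection probability from 0.8 to 0.525 without any re-simulation.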

Fig. 4.

Binomial repopulation. a The pmf defined in Eq. 4 can be obtained for any value of the detection efficiency $\epsilon$ from the unaltered simulation output. The example shows the pmf for a specific SiPM channel as obtained for $^{228}$Th decays in a calibration source at the top of the array (see also Tab. 1) depositing 2615 ± 10 keV in the HPGe detectors. The result is plotted for a selection of efficiency values, reported in the bottom panel. b The panel shows the non-linear dependence of the light detection probability (defined in Eq. 5) on the efficiency. The color coding relates data points with corresponding distributions in the top panel.

The likelihood for the observation of light in $N$ Monte Carlo events out of $N_{\mathrm{tot}}$ simulated in total, given the aforementioned expectation value $p$, is described by a binomial distribution $\mathcal{B}(N;\, N_{\mathrm{tot}},\, p)$. Maximizing its value allows $\epsilon$ to be inferred, whereas taking into account the limited statistics of the random coincidence dataset, with $M$ light detections over $M_{\mathrm{tot}}$ random events, makes it a combined fit of both the data and the random coincidence sample. This combined likelihood reads:

$$\mathcal{L} = \mathcal{B}\big(N;\, N_{\mathrm{tot}},\, 1 - (1 - p_S)(1 - p_R)\big) \cdot \mathcal{B}\big(M;\, M_{\mathrm{tot}},\, p_R\big) \cdot \mathcal{G}\big(p_S;\, p_S(\epsilon),\, \sigma_{p_S}\big) \tag{6}$$

The signal expectation $p_S$ is given flexibility according to its uncertainty $\sigma_{p_S}$ using a Gaussian pull term $\mathcal{G}$, which accounts for limited simulation statistics and additional systematics. Equation 6 has zero degrees of freedom, and hence model discrimination can only be obtained through a combination of multiple datasets, i.e. calibration source positions, or by exploiting channel event correlations. While the former sounds trivial, e.g. an absorption length can be estimated from measurements at different distances, the latter requires explanation. Let us imagine two photosensors $A$ and $B$, each probing the LAr with a certain photon detection probability $\xi_A(\vec{x})$ and $\xi_B(\vec{x})$. Considering an energy deposition at $\vec{x}$, the probability to see light in both channels depends on $\xi_A \cdot \xi_B$, while events triggering only channel A test $\xi_A \cdot (1 - \xi_B)$. Both of them probe distinct regions of the LAr volume. The probability to see no light from a certain volume of the LAr, i.e. the corresponding survival probability, depends on $(1 - \xi_A) \cdot (1 - \xi_B)$.
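The structure of such a combined binomial fit can be sketched as follows. This is a simplified illustration, not the analysis code: the Gaussian pull term is omitted, the maximization is a plain grid scan, and the toy pmf, event counts and all function names are assumptions made for the example.

```python
from math import lgamma, log

def log_binomial(k, n, p):
    """Log of the binomial probability of k successes in n trials."""
    p = min(max(p, 1e-12), 1.0 - 1e-12)  # guard against log(0)
    log_comb = lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
    return log_comb + k * log(p) + (n - k) * log(1.0 - p)

def p_signal(pmf, eff):
    """Detection probability for true coincidences from a bare simulated pmf."""
    return 1.0 - sum(p_n * (1.0 - eff) ** n for n, p_n in enumerate(pmf))

def log_likelihood(eff, p_rand, pmf, n_light, n_tot, m_light, m_tot):
    # Observed light is either true, random or both (binary projection)
    p_obs = 1.0 - (1.0 - p_signal(pmf, eff)) * (1.0 - p_rand)
    return (log_binomial(n_light, n_tot, p_obs)
            + log_binomial(m_light, m_tot, p_rand))

bare_pmf = [0.1, 0.2, 0.3, 0.4]  # toy pmf for n = 0..3 photons
counts = dict(n_light=620, n_tot=1000, m_light=80, m_tot=1000)  # made-up counts
grid = [i / 100 for i in range(1, 100)]
best_eff, best_rand = max(
    ((e, r) for e in grid for r in grid),
    key=lambda er: log_likelihood(er[0], er[1], bare_pmf, **counts),
)
```

With two observed fractions and two free parameters the fit has no remaining degrees of freedom, which is why the text turns to multiple datasets and channel correlations for model discrimination.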

Given the full set of veto channels $S$ of size $n$, each event will come as a certain subset, i.e. pattern, of triggered channels. The total number of possible patterns is $2^n$, where each of them comes with its own unique expectation value derived from signal as well as random coincidences. A pattern’s signal expectation can be evaluated much like Eq. 4, however starting from an $n$-dimensional hyper-spectrum evaluated for the full vector of efficiencies $\vec{\epsilon}$. When folding in the random coincidences, it has to be considered that a certain signal pattern may be elevated to a larger pattern by random coincidences in additional channels. Each pattern occurrence expectation value is hence a sum over all possible generator combinations of signal and random patterns that result in the pattern in question. The full likelihood reads

| 7 |

The number of degrees of freedom is , where n is the number of channels. Several datasets may be combined as the product of the individual likelihoods. Equation 7 implicitly includes correlations between photosensors, which avoids a potential overestimation of the overall veto efficiency that could arise from unaccounted systematics. Given a large set of channels the number of possible patterns may be immense, but can be truncated by e.g. neglecting events with detection pattern higher than a certain multiplicity.
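The sum over generator combinations can be made concrete with a small sketch. It encodes patterns as bitmasks and assumes that signal and random patterns are statistically independent; the function name and the two-channel toy probabilities are hypothetical.

```python
from itertools import product

def combine_patterns(signal_pmf, random_pmf):
    """Pattern pmf observed when random coincidences overlay the signal.

    Patterns are bitmasks: bit i is set if veto channel i saw light.
    Both inputs map pattern -> probability and are assumed independent.
    """
    observed = {}
    for (s, p_s), (r, p_r) in product(signal_pmf.items(), random_pmf.items()):
        pattern = s | r  # a channel fires if signal OR random light hit it
        observed[pattern] = observed.get(pattern, 0.0) + p_s * p_r
    return observed

# Toy two-channel example (channel A = bit 0, channel B = bit 1)
signal = {0b00: 0.5, 0b01: 0.3, 0b10: 0.1, 0b11: 0.1}
randoms = {0b00: 0.9, 0b01: 0.06, 0b10: 0.04}
observed = combine_patterns(signal, randoms)
```

In the toy example the two-channel pattern gains weight from single-channel signal patterns that are completed by a random hit in the other channel, which is exactly the elevation effect described in the text.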

Application

In order to constrain the aforementioned effective channel efficiencies $\epsilon_i$, dedicated data taken under conditions that could be clearly reproduced with a Monte Carlo simulation were needed. As the Gerda background data is a composition of various contributions and itself under study, only the peculiar conditions of a calibration run, where the energy depositions originate from a well-characterized source, allow for such studies. However, usual calibrations were taken with the purpose of guaranteeing a properly defined energy scale of all HPGe detectors and were performed with three $^{228}$Th calibration sources of $\mathcal{O}(10)$ kBq activity each. The resulting rate in the LAr was far too high to study the veto response, and the veto signals were hence not even recorded during those calibrations. For this reason, special calibration data using one of the former Phase I $^{228}$Th sources with an activity of < 2 kBq was taken in July 2016. The source was moved to 3 different vertical positions (see [40] for details about the calibration system). The characteristics of the datasets are compiled in Tab. 1. To avoid $\beta$ particles contributing to the coincident light production and enable a clean $\gamma$-only signature, an additional 3 mm copper housing was placed around the source container. Each configuration was simulated with $10^8$ primary decays in the source volume.

Table 1.

Calibration data taken with a < 2 kBq $^{228}$Th source placed at different heights. The reported position corresponds to the absolute distance moved from the parking position on top of the experiment. The upper-most and lower-most HPGe detectors are situated at about 8180 and 8560 mm respectively.

| Position [mm] | Live time [h] | Random coincidences |
|---|---|---|
| 8220 | 6.4 | 7.5(6)% |
| 8405 | 4.3 | 7.2(10)% |
| 8570 | 3.6 | 10.2(14)% |

The maximum likelihood analysis was performed on $^{208}$Tl full energy peak (FEP) events with an energy deposit of 2615(10) keV in a single HPGe detector. As no direct transitions to the ground state or the first excited state of the $^{208}$Pb daughter nucleus are allowed, a minimum of 3.2 MeV is released in $\gamma$’s, which almost always includes a transition to the intermediate 2615 keV state. Selecting full absorption events of the corresponding $\gamma$ results in an event sample virtually independent of HPGe detector details: the HPGe array is solely used to tag the $^{208}$Tl transition. The coincident energy depositions in the LAr originate to a large extent from coincident $\gamma$’s of 583 keV or more, and only marginally from Bremsstrahlung. Figure 5a shows their energy distribution in the LAr. The random coincidence samples were obtained by test pulse injection at 50 mHz as well as an early evaluation of the veto condition. The random coincidence appearance is unique to each source configuration, as the energy depositions from independent decays in the source contribute.

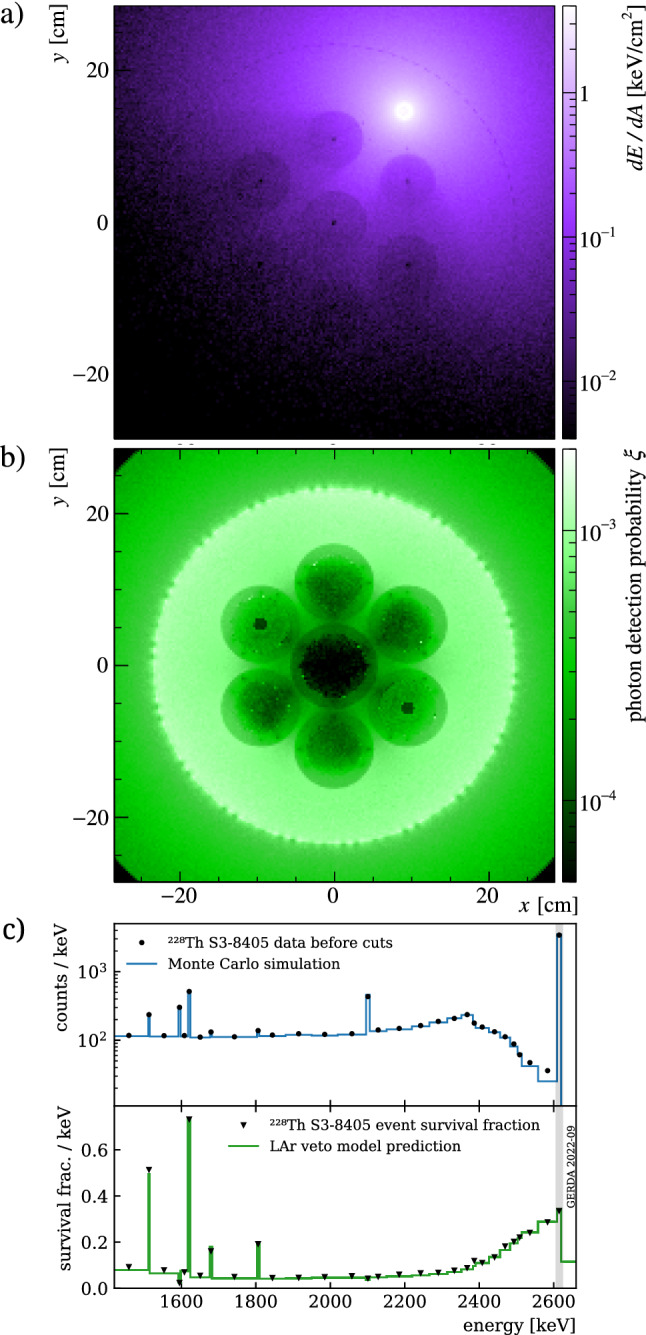

Fig. 5.

Data/Monte Carlo comparison. a Projected distribution of the LAr energy depositions for simulated $^{208}$Tl 2615 keV FEP-events from a calibration source at position 8405 mm. Darker circles correspond to the volume occupied by the HPGe detectors. b Photon detection probability in the same region. c Top panel: the energy spectrum of the $^{228}$Th data corresponding to panel a, before the LAr veto cut, compared to the Monte Carlo prediction. The pdf is normalized to reproduce the total count rate in data. Despite small shape discrepancies, the predicted LAr veto survival probability (bottom panel) matches the data over a wide range of energies, even far from the model optimization energy region (gray band). A variable binning is adopted for visualization purposes.

To reduce the dimensionality of Eq. 7, the top/bottom PMT channels were regrouped to represent one single large top/bottom PMT, whereas the generally less uniform fiber/SiPM channels were kept separate. Accordingly, the likelihood had to be evaluated in $2 + 9 = 11$ dimensions. The pattern space was truncated so that only channel combinations present in data had to be calculated. The extracted channel efficiencies are 13% for the top PMTs, 29% for the bottom PMTs and range from 21 to 37% for the SiPM channels, with uncertainties of about ± 1%. Given an additional systematic uncertainty of 20% on the observation of the various veto patterns (i.e. on the pattern expectation values in Eq. 7), the p-value amounts to 0.2. The differences in the SiPM efficiencies match the expectation for problematic channels with potentially broken chips. The reduced value for the top PMTs was anticipated, as additional shadowing effects from cabling are only present at the top.

Probability maps

To finally evaluate the three-dimensional photon detection probability $\xi(\vec{x})$, a dedicated simulation of VUV photons sampled uniformly over the LAr volume around the HPGe array was performed. Positive light detections with any photosensor $i$ were determined taking into account the efficiencies $\epsilon_i$ as obtained in Sect. 5.1. For convenience, $\xi(\vec{x})$ is stored as a discrete map, partitioned into cubic “voxels” of millimeter-scale size. This size matches the characteristic scale of the probability map gradients expected in Gerda. Hence, the detection probability $\xi_k$ associated with voxel $k$ corresponds to the ratio between positive light detections and total scintillation photons generated in the voxel volume. Figure 5b shows a projection of this object. As outlined in Sect. 3, it allows one to determine the expected number of signal photons and the corresponding event rejection probability, solely based on energy depositions in the LAr. Outside the densely packed array, $\mathcal{O}(0.1)$%-level values are reached. Figure 5c compares the energy distribution, before and after the LAr veto cut, of the $^{228}$Th source data with the model prediction. Small discrepancies are expected from geometry inaccuracies and the modeling of charge collection at the HPGe surface. However, the event suppression (shown in the bottom panel) is reproduced over a wide range of energies far off the $^{208}$Tl FEP-events used for model optimization. As expected, the rejection power is reduced for single-$\gamma$ FEPs without significant coincidences in the decay scheme, e.g. for $^{212}$Bi at 1621 keV, and enhanced for the double escape peak (DEP) at 1593 keV, where two 511 keV light quanta leave the HPGe detectors.
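The construction of such a voxelized map can be sketched in a few lines. The example assumes that the optical simulation provides, for each generated photon, its origin and a flag telling whether it was detected; the voxel edge length `VOXEL_MM`, the function names and the five toy photons are hypothetical.

```python
from collections import Counter

VOXEL_MM = 3.0  # hypothetical voxel edge length in mm, chosen for illustration

def voxel_index(pos, size=VOXEL_MM):
    """Integer (i, j, k) index of the cubic voxel containing a position in mm."""
    return tuple(int(c // size) for c in pos)

def build_map(photons, size=VOXEL_MM):
    """Ratio of detected to generated photons per voxel.

    photons: iterable of (origin_position, detected_flag) pairs
    """
    generated, detected = Counter(), Counter()
    for pos, was_detected in photons:
        idx = voxel_index(pos, size)
        generated[idx] += 1
        if was_detected:
            detected[idx] += 1
    return {idx: detected[idx] / n for idx, n in generated.items()}

toy_photons = [
    ((1.0, 1.0, 1.0), True),
    ((2.0, 2.0, 2.0), False),
    ((1.0, 2.0, 0.5), False),
    ((1.0, 0.0, 1.0), False),
    ((4.0, 1.0, 1.0), False),
]
prob_map = build_map(toy_photons)
```

A dictionary keyed by voxel index keeps the sketch short; for a realistic number of voxels a dense three-dimensional array would be the more natural container.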

Distortion studies

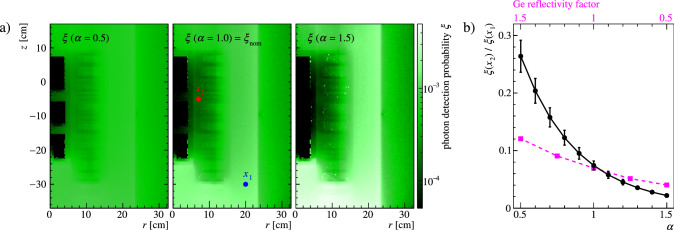

As anticipated in Sect. 4, many uncertainties affect the simulation of the LAr scintillation in the Gerda setup. The channel efficiencies extracted from calibration data, as described in Sect. 5, absorb systematic biases that scale the detection probability by global factors (e.g. the LAr scintillation yield and the TPB quantum efficiency), but cannot cure local uncertainties (e.g. due to incorrect germanium reflectivity or fiber shroud coverage). A heuristic approach has been formulated to estimate the impact of such simulation uncertainties on the LAr veto model. The distortion of the detection probability induced by varying input parameters can be conservatively parametrized by means of an analytical transformation T acting on the nominal probability map.

As an additional constraint, the transformed probability map must still reproduce the calibration data presented in Sect. 5, with which the original map was optimized. As a consequence, the LAr volumes probed by calibration data (see Fig. 5) act as a fixed point of the transformation T, while the detection probability is allowed to deviate from its nominal value in all other regions of the setup. T may take various analytical forms, depending on the desired type of induced local distortions. This procedure avoids having to study the dependence of the detection probability on numerous optical parameters through many computationally expensive simulations of the scintillation light propagation.

In the context of this work, we shall focus on transformations that make the detection probability less or more homogeneous, i.e. that make “dark” areas (e.g. the HPGe array) “darker” compared to areas with high detection probability, or vice versa. A simple transformation that meets this requirement is the following power-law scaling:

$$
\xi \;\longmapsto\; T[\xi] = N \cdot \xi^{\alpha} \tag{8}
$$

where $\xi$ denotes the photon detection probability, $\alpha$ is a real coefficient controlling the magnitude of the distortion and N is a normalization constant adjusted to reproduce the event suppression observed in calibration data. The action of the transformation in Eq. 8 on the detection probability is depicted in Fig. 6. In the same figure, the size of power-law distortions is compared to that induced by uncertainties on the HPGe detectors’ reflectivity in the VUV region. The impact of the latter has been evaluated by scaling its value by in dedicated optical simulations. The power-law distortions significantly exceed the effect of a potential reflectivity bias and can therefore be used as a conservative estimate of the uncertainty on the light collection probability.
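As an illustration of the power-law scaling of Eq. 8, the following Python sketch raises every voxel probability to a power and rescales the result. The normalization used here is a simplified stand-in: it keeps the weighted mean probability over the calibration-probed voxels unchanged, whereas in the analysis N is adjusted to reproduce the event suppression observed in calibration data. The function name and the `calibration_weights` input are hypothetical, and a positive exponent is assumed.

```python
def distort_map(prob_map, alpha, calibration_weights):
    """Apply p -> N * p**alpha to every voxel of a probability map.

    `prob_map` maps a voxel key to its nominal detection probability.
    `calibration_weights` maps voxel keys to the relative weight with
    which calibration data probe them (hypothetical input).
    N is chosen so that the weighted mean probability over the
    calibration-probed voxels is left unchanged (simplified proxy for
    matching the measured event suppression). Assumes alpha > 0.
    """
    nominal = sum(w * prob_map[k] for k, w in calibration_weights.items())
    powered = sum(
        w * prob_map[k] ** alpha for k, w in calibration_weights.items()
    )
    if powered == 0.0:
        raise ValueError("calibration region has zero detection probability")
    norm = nominal / powered
    # Clip at 1 so that every entry remains a valid probability.
    return {k: min(1.0, norm * p ** alpha) for k, p in prob_map.items()}
```

With a single calibration-probed voxel, that voxel is an exact fixed point of the transformation, while the contrast between bright and dark voxels grows for exponents above one and shrinks for exponents below one.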

Fig. 6.

Modification of the photon detection probability through analytical power-law distortions defined in Eq. 8. a Inhomogeneities that are present in the nominal map are amplified with increasing power-law exponent, leading to a more homogeneous (exponent below one, i.e. less color contrast) or less homogeneous (exponent above one, i.e. more color contrast) response. b The probability ratio between two sample points, one outside and one within the array, highlights this modification (black data points). The comparison with an altered germanium reflectivity (magenta data points) shows that the distortions conservatively exceed the effect of uncertainties on the reflectivity

Background decomposition

As an application of the light collection and veto model, we shall now present the results of the background decomposition of the HPGe energy spectrum recorded during physics data taking after the application of the LAr veto cut. This background model serves as a fundamental input for various physics analyses whose sensitivity is enhanced with the LAr veto background reduction.

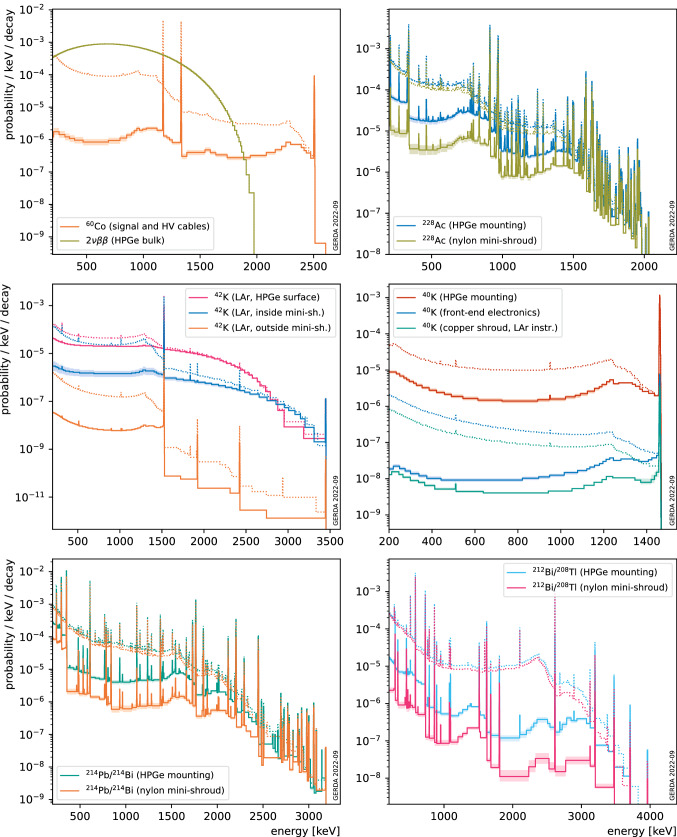

Previous work [28] has proven successful in describing the Gerda data in terms of background components, but only before the LAr veto and PSD cuts, referred to as “analysis” cuts. Probability density functions (pdfs) for various background sources were produced with dedicated simulations of radioactive decays in the inner Gerda setup. A linear combination of these pdfs was then fitted to the first 60.2 kg years of data from Gerda Phase II in order to infer the various contributions to the total background energy spectrum. Since the data were considered before the application of the LAr veto cut, the propagation of scintillation photons was disabled in these simulations. The LAr detector model developed here makes it possible to incorporate this missing information into the existing simulations and to compute background expectations after the LAr veto cut. The pdfs for a representative selection of signal and background sources in the Gerda Phase II setup are reported in Fig. 7.

Fig. 7.

Probability density functions (pdfs, normalized to the number of simulated primary decays) for a representative selection of background and signal event sources in the Gerda Phase II setup as detected by HPGe detectors and surviving the LAr veto cut, as predicted by the model presented in this document. Model uncertainties are shown as bands of lighter color. The pdfs before the cut [28] (dotted lines) are overlaid for comparison. The reader is referred to [28, Figure 1] for a detailed documentation of the simulated setup. A variable binning is adopted for visualization purposes

The LAr veto model is applied to each background contribution before analysis cuts, as established by the background model. The distribution of $\alpha$ events originating from the HPGe readout contact is negligibly affected by the LAr veto cut, as the $\alpha$ particles are expected to originate from the germanium surfaces themselves, where the light collection is poor and any remaining light output from the recoiling nucleus would be quenched [15]. Thus, the corresponding model has been imported as-is from [28]. All predictions after the LAr veto cut are corrected for the accidental rejection of 2.7% due to random coincidences [4]. As in [28], a Poisson likelihood is used to compare the detector-type specific energy spectra of single-detector events that survive the LAr veto cut, corresponding to an exposure of 61.4 kg years of Gerda Phase II data (footnote 6), with a linear combination of background pdfs. Statistical inference is carried out to determine the coefficients of the admixture that best describe the data. In a Bayesian setting, posterior probability distributions of background source intensities resulting from the model before cuts are fed as prior information (footnote 7), with the exception of $^{42}$K. The distribution of $^{42}$K ions in LAr is known to be inhomogeneous, as ion drifts are induced by electric fields (generated by high-voltage cables and detectors) and convection. The spatial distribution is at present unknown, and only rough approximations have been adopted to describe it in the background model [28]. Given the inhomogeneity of the LAr veto response itself, a significant mismatch of the predicted event suppression between simulation and data is expected. Hence, we adopt uninformative, uniform priors for the $^{42}$K source intensities. Markov chain Monte Carlo sampling is run with the BAT software [41] to compute posterior distributions and build knowledge update plots.
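The likelihood term of such a fit can be sketched in a few lines of Python. This is only an illustration of a binned Poisson likelihood for a linear combination of pdfs, including the correction for accidental rejection quoted above; it does not reproduce the Bayesian machinery, the priors or the BAT interface used in the analysis. The function names are hypothetical, and each pdf is assumed here to be normalized to unity, so that a coefficient is directly an expected number of events.

```python
import math

ACCIDENTAL_REJECTION = 0.027  # random-coincidence rejection quoted in the text


def model_expectation(coefficients, pdfs, survival=1.0 - ACCIDENTAL_REJECTION):
    """Expected counts per bin for a linear combination of pdfs.

    `pdfs[j][i]` is the fraction of events of source j falling in bin i
    (after the LAr veto cut), `coefficients[j]` the number of events of
    source j. `survival` scales the prediction for accidental rejection.
    """
    n_bins = len(pdfs[0])
    return [
        survival * sum(c * pdf[i] for c, pdf in zip(coefficients, pdfs))
        for i in range(n_bins)
    ]


def poisson_log_likelihood(observed, expected):
    """Sum of the log Poisson probabilities of the observed bin counts."""
    total = 0.0
    for n, nu in zip(observed, expected):
        if nu <= 0.0:
            if n > 0:
                return -math.inf  # events observed where none are expected
            continue              # empty bin with zero expectation
        total += n * math.log(nu) - nu - math.lgamma(n + 1)
    return total
```

In a Bayesian fit, this log-likelihood would be added to the log-prior of the coefficients and explored with a Markov chain sampler.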

Substantial agreement between the event suppression predicted by the LAr veto model and data is found: the posterior distributions are compatible with the priors, where non-uniform, at the 1–2$\sigma$ level. As anticipated, the $^{42}$K activity differs significantly from that obtained from the data before the LAr veto cut. Based on this background decomposition, the event survival probability after LAr veto predicted in the $0\nu\beta\beta$ decay analysis window (footnote 8) is about 0.3% for Th, 15% for U and 10% for Co. As a final remark, we stress that the quoted event suppression in the $0\nu\beta\beta$ decay region can be affected by background modeling uncertainties (e.g. source location, surface-to-volume activity or the exact $^{42}$K spatial distribution) whose evaluation is beyond the scope of this work. As such, the quoted values must be taken cum grano salis and cannot be generalized to different experimental conditions.

In the energy region dominated by the two-neutrino double beta ($2\nu\beta\beta$) decay, i.e. from the $^{39}$Ar endpoint at 565 keV to the double beta Q-value ($Q_{\beta\beta}$) at 2039 keV, excluding the intense but narrow potassium peaks, the ratio between the number of $2\nu\beta\beta$ events and the residual background influences the sensitivity of searches for exotic decay modes [42]. The signal-to-background ratio is about 2 in data before analysis cuts [28] and improves to about 18 after applying the LAr veto cut.

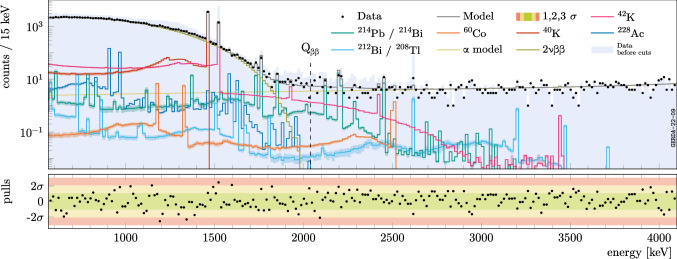

The obtained background decomposition is displayed in Fig. 8. Bands constructed by using maximally distorted maps (i.e. distortion parameter and 1.5), obtained with the procedure described in Sect. 6.1, are displayed for every background contribution to represent the systematic uncertainty. The ratio between data and best-fit model, normalized by the expected statistical fluctuation in each bin, is shown in the bottom panel. No significant deviations beyond the expected statistical fluctuations are observed.

Fig. 8.

Background decomposition of the first 61.4 kg years of data from Gerda Phase II surviving the LAr veto cut (black dots). The veto model is applied to the existing background pdfs before the cut [28] by folding in the probability map. Data before the cut are shown as a light blue filled histogram. Shaded bands constructed with the maximally distorted probability maps provide a visualization of the systematic uncertainty affecting each pdf

Conclusions

This paper describes the methodology, optimization and application of the light collection model developed for the Gerda LAr scintillation light read-out. It is based on an ansatz that decouples the light propagation from non-optical simulations, using photon detection probability maps. The model has been optimized using low-activity $^{228}$Th calibration data. It allows predictions of the LAr veto event rejection, which is central for analyses of the $2\nu\beta\beta$ spectrum [42], including a precise determination of the $^{76}$Ge half-life as well as a search for new physics phenomena. Even though detailed insight into the heterogeneous setup was gained, one shortcoming is evident: certain parts of the probability maps are extrapolated, as they remain largely unprobed by the available calibration data. The systematic uncertainty associated with this problem has been evaluated using analytical distortions of the veto response. It is left to the upcoming Large Enriched Germanium Experiment for Neutrinoless double beta Decay (LEGEND) to reduce this uncertainty with dedicated calibration measurements, which will elevate its LAr instrumentation from a binary light/no-light veto to a full-fledged detector.

Acknowledgements

The Gerda experiment is supported financially by the German Federal Ministry for Education and Research (BMBF), the German Research Foundation (DFG), the Italian Istituto Nazionale di Fisica Nucleare (INFN), the Max Planck Society (MPG), the Polish National Science Centre (NCN, Grant number UMO-2020/37/B/ST2/03905), the Polish Ministry of Science and Higher Education (MNiSW, Grant number DIR/WK/2018/08), the Russian Foundation for Basic Research, and the Swiss National Science Foundation (SNF). This project has received funding/support from the European Union’s Horizon 2020 research and innovation programme under the Marie Sklodowska-Curie Grant agreements No 690575 and No 674896. This work was supported by the Science and Technology Facilities Council, part of the U.K. Research and Innovation (Grant No. ST/T004169/1). The institutions acknowledge also internal financial support. The Gerda collaboration thanks the directors and the staff of LNGS for their continuous strong support of the Gerda experiment.

Data Availability Statement

This manuscript has associated data in a data repository. [Authors’ comment: The data shown in Fig. 8 is available in ASCII format as Supplemental Material [43].]

Footnotes

1. This estimate only considers contaminant-induced non-radiative de-excitation to compete with the triplet decay and neglects impurities that affect the initial excimer production.

2. These assumptions break down in the case of particle-type dependent quenching or Cherenkov radiation emission. Both effects, as well as an explicit Fano factor, could be added to Eq. 1 for future studies. Recent studies suggest the absence of further energy-dependent quenching effects in LAr [17].

3. It is not the pure Poisson distribution of Eq. 1, as it arises from all different realizations of coincident energy deposition in the LAr as .

4. Defined as , where is the uncertainty of the unaltered n-photon observations in a total of Monte Carlo events.

5. The suppression achieved in this configuration is not representative of backgrounds detected during physics data taking, which mostly originate from thin low-mass structural components, where particles contribute to the energy depositions in the LAr.

6. An additional 1.2 kg years of data from the last physics run of Phase II, neglected in previous works [28], are considered here.

7. The procedure is not statistically rigorous, since data before and after the cut are not fully independent. Nevertheless, the methodology is considered acceptable, as the intention is to carry out a qualitative comparison.

8. The $0\nu\beta\beta$ decay analysis window is defined as the energy window from 1930 keV to 2190 keV, excluding the region around $Q_{\beta\beta}$ ( keV) and the intervals 2104 ± 5 keV and keV, which correspond to known lines from $^{208}$Tl and $^{214}$Bi.

Contributor Information

K. T. Knöpfle, Email: ktkno@mpi-hd.mpg.de

Gerda collaboration, Email: gerda-eb@mpi-hd.mpg.de.

References

- 1. Ackermann KH, et al. Eur. Phys. J. C. 2013;73(3):1–29. doi: 10.1140/epjc/s10052-013-2330-0.

- 2. Agostini M, et al. Eur. Phys. J. C. 2022;82(4):284. doi: 10.1140/epjc/s10052-022-10163-w.

- 3. Agostini M, et al. Nature. 2017;544:47. doi: 10.1038/nature21717.

- 4. Agostini M, et al. Phys. Rev. Lett. 2020;125(25):252502. doi: 10.1103/PhysRevLett.125.252502.

- 5. Agostini M, et al. Eur. Phys. J. C. 2015;75(10):506. doi: 10.1140/epjc/s10052-015-3681-5.

- 6. Janicskó Csáthy J, et al. arXiv:1606.04254 [physics] (2016).

- 7. Agostini M, et al. Eur. Phys. J. C. 2018;78(5):388. doi: 10.1140/epjc/s10052-018-5812-2.

- 8. Acciarri R, et al. J. Instrum. 2012;7:P01016. doi: 10.1088/1748-0221/7/01/P01016.

- 9. Benson C, Orebi Gann GD, Gehman V. Eur. Phys. J. C. 2018;78(4):329. doi: 10.1140/epjc/s10052-018-5807-z.

- 10. Baudis L, et al. J. Instrum. 2015;10(9):P09009. doi: 10.1088/1748-0221/10/09/P09009.

- 11. Kochanek I. Nucl. Instrum. Methods A. 2020;980:164487. doi: 10.1016/j.nima.2020.164487.

- 12. Janicskó Csáthy J, et al. Nucl. Instrum. Methods A. 2011;654(1):225–232. doi: 10.1016/j.nima.2011.05.070.

- 13. Lubashevskiy A, et al. Eur. Phys. J. C. 2018;78(1):15. doi: 10.1140/epjc/s10052-017-5499-9.

- 14. G. STRUCK Innovative Systeme GmbH, Hamburg.

- 15. Doke T, et al. Jpn. J. Appl. Phys. 2002;41(Part 1, No. 3A):1538–1545. doi: 10.1143/JJAP.41.1538.

- 16. Neumeier A, et al. EPL. 2015;111(1):12001. doi: 10.1209/0295-5075/111/12001.

- 17. Agnes P, et al. Phys. Rev. D. 2018;97(11):112005. doi: 10.1103/PhysRevD.97.112005.

- 18. Calvo J, et al. Astropart. Phys. 2018;97:186–196. doi: 10.1016/j.astropartphys.2017.11.009.

- 19. Barros N, et al. Nucl. Instrum. Methods A. 2020;953:163059. doi: 10.1016/j.nima.2019.163059.

- 20. Gehman VM, et al. Nucl. Instrum. Methods A. 2011;654(1):116–121. doi: 10.1016/j.nima.2011.06.088.

- 21. Araujo GR, et al. Eur. Phys. J. C. 2022;82(5):442. doi: 10.1140/epjc/s10052-022-10383-0.

- 22. Scintillating Optical Fibers, Saint-Gobain Crystals. 2016. Available: https://www.crystals.saint-gobain.com/products/scintillating-fiber

- 23. PM33100 Product Data Sheet, KETEK. Available: https://www.ketek.net

- 24. Agostinelli S, et al. Nucl. Instrum. Methods A. 2003;506(3):250–303. doi: 10.1016/S0168-9002(03)01368-8.

- 25. Allison J, et al. IEEE Trans. Nucl. Sci. 2006;53(1):270–278. doi: 10.1109/TNS.2006.869826.

- 26. Allison J, et al. Nucl. Instrum. Methods A. 2016;835:186–225. doi: 10.1016/J.NIMA.2016.06.125.

- 27. Boswell M, et al. IEEE Trans. Nucl. Sci. 2011;58(3):1212–1220. doi: 10.1109/TNS.2011.2144619.

- 28. Agostini M, et al. J. High Energy Phys. 2020;2020(3):139. doi: 10.1007/JHEP03(2020)139.

- 29. Heindl T, et al. EPL. 2010;91(6):62002. doi: 10.1209/0295-5075/91/62002.

- 30. Bideau-Mehu A, et al. J. Quant. Spectrosc. RA. 1981;25(5):395–402. doi: 10.1016/0022-4073(81)90057-1.

- 31. Seidel GM, Lanou RE, Yao W. Nucl. Instrum. Methods A. 2002;489(1–3):189–194. doi: 10.1016/S0168-9002(02)00890-2.

- 32. Babicz M, et al. Nucl. Instrum. Methods A. 2019;936:178–179. doi: 10.1016/j.nima.2018.10.082.

- 33. Schwarz M, et al. EPJ Web Conf. 2021;253:11014. doi: 10.1051/epjconf/202125311014, and private communications.

- 34. Francini R, et al. J. Instrum. 2013;8(09):P09006. doi: 10.1088/1748-0221/8/09/p09006.

- 35. Agostini M, et al. Astropart. Phys. 2018;97:136–159. doi: 10.1016/j.astropartphys.2017.10.003.

- 36. R11065-20 Mod Product Data Sheet, Hamamatsu. Available: https://www.hamamatsu.com

- 37. Wegmann A. Characterization of the liquid argon veto of the GERDA experiment and its application for the measurement of the 76Ge half-life. PhD Thesis, University of Heidelberg, 2017.

- 38. Janecek M. IEEE Trans. Nucl. Sci. 2012;59(3):490–497. doi: 10.1109/TNS.2012.2183385.

- 39. Pertoldi L. Search for new physics with two neutrino double-beta decay using GERDA data. Appendix C.1, PhD Thesis, University of Padova, 2020.

- 40. Agostini M, et al. Eur. Phys. J. C. 2021;81(8):682. doi: 10.1140/epjc/s10052-021-09403-2.

- 41. Beaujean F, et al. BAT release, version 1.0.0 (2018). doi: 10.5281/zenodo.1322675.

- 42. Agostini M, et al. arXiv:2209.01671 [nucl-ex] (2022).

- 43. Agostini M, et al. Supplementary material for “Liquid argon light collection and veto modeling in GERDA Phase II”. 2022. doi: 10.5281/zenodo.7417788.
