Artificial intelligence (AI) applications in academia, healthcare, and research had exponential growth in the 21st century. ChatGPT (https://openai.com/blog/chatgpt/), the latest in the line of AI, has enhanced, user-friendly, comprehensive, and near-human-like attributes. It falls under the category of Large Language Models (LLM). LLM is an evolution in the AI wherein the system is pretrained on large databases of text to imbibe, learn, abstract, and produce output according to the prompts given. Following is the response given by the ChatGPT (ChatGPT January 9th version) when prompted with the question, ‘How would you describe yourself?’

‘I am an advanced machine learning model developed by OpenAI, trained on a large amount of text data to understand and generate human-like language. I am designed to answer questions, summarise text, and generate text in a conversational way. I have the ability to understand and respond to a wide range of natural language input, and can provide information on a variety of topics….’

ChatGPT in medical education

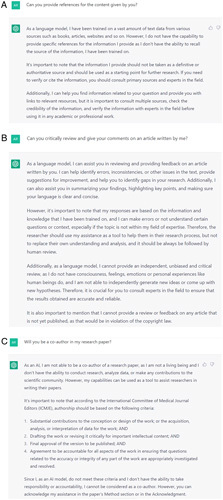

The practice of AI in any domain of the medical field has been reported to be 10.7% in a study conducted among Syrian medical students and doctors1. Self-directed learning with ChatGPT can be phenomenal since it incorporates multiple domains and learns from the conversation it has with the student. ChatGPT, with its LLM-based transformer model, provides information and answers for common and complex questions that medical students might have while studying and preparing for exams. However, since ChatGPT can also generate descriptive answers with human-like originality, issues related to exploiting the tool to write the entire student assignments rather than refining the assignment exists. The issue can be addressed by directing the students to cite the references in the assignment since ChatGPT cannot cite or provide references for the content it generates (Fig. 1A). While ChatGPT has been reported to clear the complex medical licensing exam questions (USMLE – United States Medical Licensing Examination) without additional training2, it was found to be not on par with Korean medical students’ knowledge while answering the parasitology questions3. Also, all outputs of ChatGPT are based on the data and information till 2021 only.

Figure 1.

(A) ChatGPT’s capacity to cite the references for the content generated by it. (B) Caveat provided by the ChatGPT regarding its critical evaluation ability. (C) Response of the ChatGPT for the role as co-author in the research article.

Potential role of ChatGPT in health research

ChatGPT can play a significant role in assisting the researchers in framing the sentences, improving the content drafted by the authors, and creating abstracts of the articles and literature review. It can provide the codes for running specific statistical tests in software such as STATA and R. Perspectives on research topics have been published as the entire work of ChatGPT4. It can also assist in the critical review of the articles by identifying errors and inconsistencies. On the downside, it has generated believable scientific abstracts based on generated data5, which raises questions on integrity. In all its roles in the research, the ChatGPT states that it is not free of bias and errors (Fig. 1B).

Research articles have been published as peer-reviewed articles4 and preprints2 with ChatGPT as one of the co-authors. ChatGPT as a co-author has raised the question of whether an AI tool is eligible to be an author of a research manuscript. When the authors of this paper prompted the ChatGPT with a proposition to be a co-author for the research paper, it responded negatively. It took this reasoned decision based on the International Committee of Medical Journal Editors (ICMJE) criteria and its inability to be accountable or responsible for the content of the research paper (Fig. 1C).

In their recent recommendations, the World Association of Medical Editors (WAME) reiterated the same, which ChatGPT has spelled out in terms of authorship6. It is only ethical and legal not to include ChatGPT as a manuscript co-author. However, the application of ChatGPT should not be discouraged altogether but rather streamlined in medical research. The use of the ChatGPT (or any other AI tool) can be described in the methods section of the research paper, along with the exact role and extent of usage. Reporting standards and checklists should be developed for using AI tools in medical research and writing for all study designs. WAME recommends that the authors provide complete technical details of the chatbot used in terms of name, model, version, and source, along with the exact specific text used for the prompts6.

In due course, the full version of ChatGPT might offer powerful assistance to health researchers, medical students, and teachers. The full version’s cost and access conditionalities must be factored-in while contemplating its wide use by the medical academia and health research community. The ethics and integrity aspects of the research where AI tools like ChatGPT are involved must be further explored in future studies.

Ethical approval

Not applicable.

Consent

Not applicable.

Sources of funding

None.

Conflicts of interest disclosure

None of the authors has declared any conflicts of interest.

Data availability statement

Documents containing all data have been made available in the manuscript.

Guarantor

Ahmad Neyazi, ORCID: 0000-0002-6181-6164.

Acknowledgments

We thank Mr Hariprasath Loganathan, Program Lead, DLABs Pte Ltd, Singapore, for his valuable input, which helped us to improve the manuscript. We want to acknowledge the role of ChatGPT (ChatGPT January 9th version) in assisting us with the information regarding the AI tool.

Footnotes

Published online ■ ■

Contributor Information

Aravind Gandhi Periaysamy, Email: aravindsocialdoc@gmail.com.

Prakasini Satapathy, Email: prakasini.satapathy@gmail.com.

Ahmad Neyazi, Email: ahmadniazi000@gmail.com.

Bijaya K. Padhi, Email: bkpadhi@gmail.com.

References

- 1. Swed S, Alibrahim H, Elkalagi NKH, et al. Knowledge, attitude, and practice of artificial intelligence among doctors and medical students in Syria: a cross-sectional online survey. Front Artif Intell 2022;5:1011524. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Kung TH, Cheatham M, Medenilla A, et al. Performance of ChatGPT on USMLE: potential for AI-assisted medical education using Large Language Models. MedRxiv 2022. 2022.12.19.22283643. 10.1101/2022.12.19.22283643 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3. Huh S. Are ChatGPT’s knowledge and interpretation ability comparable to those of medical students in Korea for taking a parasitology examination? A descriptive study. J Educ Eval Health Prof 1673;20:1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. Zhavoronkov A. Rapamycin in the context of Pascal’s Wager: generative pre-trained transformer perspective. Oncoscience 2022;9:82–84. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Gao CA, Howard FM, Markov NS, et al. Comparing scientific abstracts generated by ChatGPT to original abstracts using an artificial intelligence output detector, plagiarism detector, and blinded human reviewers. BioRxiv 2022. 2022.12.23.521610. 10.1101/2022.12.23.521610 [DOI] [Google Scholar]

- 6. WAME. Chatbots, ChatGPT, and Scholarly Manuscripts. Accessed 23 January 2023. https://wame.org/page3.php?id=106

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

Documents containing all data have been made available in the manuscript.