Abstract

Organoids are advancing the accurate in vitro prediction of drug efficacy and toxicity. These advances are attributed to the ability of organoids to recapitulate key structural and functional features of organs and parent tumors. However, organoids are self-organized assemblies with a multi-scale structure of 30–800 μm, which makes non-destructive three-dimensional (3D) imaging, tracking and classification analysis of organoid clusters difficult for traditional microscopy techniques. Here, we devise a 3D imaging, segmentation and analysis method for printed organoid clusters based on optical coherence tomography (OCT) and deep convolutional neural networks (CNNs) (Organoid Printing and optical coherence tomography-based analysis, OPO). The results demonstrate that the organoid scale influences the segmentation performance of the neural network. The EGO-Net we designed, optimized with multi-scale information guidance, achieves the best results and, in particular, recognizes the biologically significant organoids with diameter ≥50 μm better than the other neural networks. Moreover, OPO reconstructs the multi-scale structure of organoid clusters within printed microbeads and calibrates printing errors by segmenting the edges of the printed microbeads. Overall, image-based classification, tracking and quantitative analysis reveal that growing organoids undergo morphological changes such as volume growth, cavity formation and fusion, and quantitative calculation of the volume demonstrates that the growth rate of an organoid is associated with its initial scale. The proposed method enables the study of the growth, structural evolution and heterogeneity of organoid clusters, which is valuable for organoid-based drug screening and tumor drug sensitivity detection.

Keywords: organoid, optical coherence tomography, tracking, convolutional neural network, deep learning

1 Introduction

Organoids are 3D self-organized assemblies of stem cells or of neoplastic cells derived from patient tumors (Shahbazi et al., 2019) that closely resemble their source organs in morphological and functional characteristics (Tuveson & Clevers, 2019), providing a new platform for precise drug screening and tumor drug sensitivity detection in vitro (Takebe & Wells, 2019). In recent years, an increasing variety of cancer organoids has been successfully established, including but not limited to liver and bile duct (Broutier et al., 2017), bladder (Lee et al., 2018; Mullenders et al., 2019), esophagus (Li et al., 2018), lung (Sachs et al., 2019), intestine (Boehnke et al., 2016), and stomach (Seidlitz et al., 2019). Organoids undergo self-organogenesis when dispersed in the culture matrix and have a multi-scale histomorphology of 30–800 μm that is closely related to functional and tumor heterogeneity. Hence, traditional techniques such as fluorescence microscopy and laser scanning confocal microscopy usually cannot achieve long-term, non-destructive 3D imaging and classification analysis of organoid clusters. In addition, traditional biochemical detection techniques such as the detection of adenosine triphosphate (ATP) are affected by the diffusion of culture media and substances, making it difficult to distinguish the functional states of organoids of different scales. This distinction matters because, for instance, cancer organoids with diameter ≥50 μm are reported to predict the effects of antitumor drugs more accurately (Nuciforo et al., 2018; Chung et al., 2022; de Medeiros et al., 2022; Kang et al., 2022). Therefore, there is an urgent need for a label-free and non-invasive method that can perform 3D imaging, classification, tracking and functional analysis of organoid clusters.

Optical coherence tomography (OCT) is an emerging biomedical imaging modality that enables high-resolution, label-free, non-destructive and real-time 3D imaging of biological tissues and has been widely used in ophthalmology (Ngo et al., 2020) and dermatology (Pfister et al., 2019). With millimeter-level penetration depth and micrometer-level resolution, OCT can characterize the internal structures of organoids. In addition, because OCT is label-free, it allows longitudinal monitoring of changes in organoid number and morphology. For instance, Deloria et al. (2021) used ultra-high resolution 3D OCT to observe the internal structure of human placenta-derived trophoblast organoids. Gil et al. (2021) used OCT to track the volumetric growth of patient-derived intestinal cancer organoids, employing k-means clustering to segment the organoids in OCT images and quantitatively tracking the volumes of individual organoids with diameters larger than 100 μm. However, the existing studies (Oldenburg et al., 2015; Gil et al., 2021) both analyzed the heterogeneity of drug responses in mature organoids through traditional image processing combined with OCT, without considering the morphological changes during organoid development. Revealing tissue morphology in a timely manner during organoid growth and evaluating appropriate developmental time scales would help guide organoid culturing (Yavitt et al., 2021). Assessing morphological changes during organoid growth requires more accurate organoid quantification, especially for organoids with diameters around 50 μm (Gjorevski et al., 2016; Arora et al., 2017). However, poor contrast, unclear boundaries, noise, and the small size of the organoid targets in OCT images make accurate organoid identification challenging for current methods.

Recently, convolutional neural networks (CNNs) have emerged as a powerful tool in image classification (Cai et al., 2020), object detection (Xiao et al., 2020) and image segmentation (Hesamian et al., 2019). For segmentation, these networks exploit an encoder-decoder architecture: the encoder produces low-resolution feature maps, and the decoder projects them back to high-resolution feature maps to achieve pixel-wise classification. In organoid image analysis, CNNs have achieved automatic segmentation of organoids in 2D microscope images and confocal images, and the corresponding segmentation results outperform traditional image processing and machine learning methods (Kassis et al., 2019; MacDonald et al., 2020; Bian et al., 2021; Abdul et al., 2022). CNNs have also shown good results in many OCT image processing tasks. For example, they have been employed for segmentation of choroidal vessels (Liu et al., 2019), capillaries (Mekonnen et al., 2021), retinal layer boundaries (Shah et al., 2018) and skin (Kepp et al., 2019). Although CNNs show considerable promise in OCT image analysis, their application to OCT images is still in the early stages (Pekala et al., 2019; Badar et al., 2020).

To better detect and track morphological changes of printed patient-derived tumor organoid clusters, we propose a 3D imaging, segmentation and analysis method based on OCT technology with CNNs (Organoid printing and OCT-based analysis, OPO). Specifically, we use a purpose-built inverted OCT system to perform automated, high-throughput imaging of organoids derived from patient cancerous tissues such as liver, colon, and stomach. Moreover, we design an organoid segmentation and classification algorithm that combines two neural networks to identify multi-scale organoids in OCT images with high accuracy. By aligning the centers of mass of organoids, we track individual organoids and the multi-scale structure of organoid clusters within microbeads, and we analyze the changes in morphology and number of individual organoids of the same type and of organoid clusters of different types, providing a new method to study the growth, structural evolution and heterogeneity of organoid clusters.

2 Methods

2.1 Patient-derived cancer organoids preparation and culture

Multiple patient-derived cancer organoids (PCOs) were prepared by our previously proposed dot extrusion bioprinting method (Wei et al., 2022), and a total of 52 samples of microbead organoid clusters from different patients were obtained, covering three types of cancer organoids: liver, stomach, and intestine. Cells from patient-derived cancerous tissues were first digested with 0.25% trypsin, then suspended in base medium (DMEM/F12 with 10% FBS and 1% penicillin-streptomycin), and mixed with Matrigel in a 1:2 ratio to prepare the bioink for printing. Briefly, dot extrusion printing is a bioprinting method in which an extrusion printhead operating in intermittent mode generates microbeads on the target substrate surface by transient contact. The droplet-shaped microbeads are deposited on the substrate owing to the surface tension between the hydrogel and the substrate. The bioink was loaded into the extrusion printhead and kept at 37°C. Subsequently, the cell-laden bioink was printed into 96-well plates using a micro-extrusion nozzle under pre-designed G-code commands, with the pneumatic pressure set to 100 kPa and the dispensing time set to 1,000 ms. The plates were incubated at 37°C for 2–3 min to solidify the mixture and then inverted to ensure free growth of the PCOs suspended in the 3D environment of the Matrigel. The mixture was left to solidify for at least 20 min, then base medium supplemented with Wnt3a medium (1:1 ratio) was added to each well, and the medium was renewed every 2 days during long-term incubation.

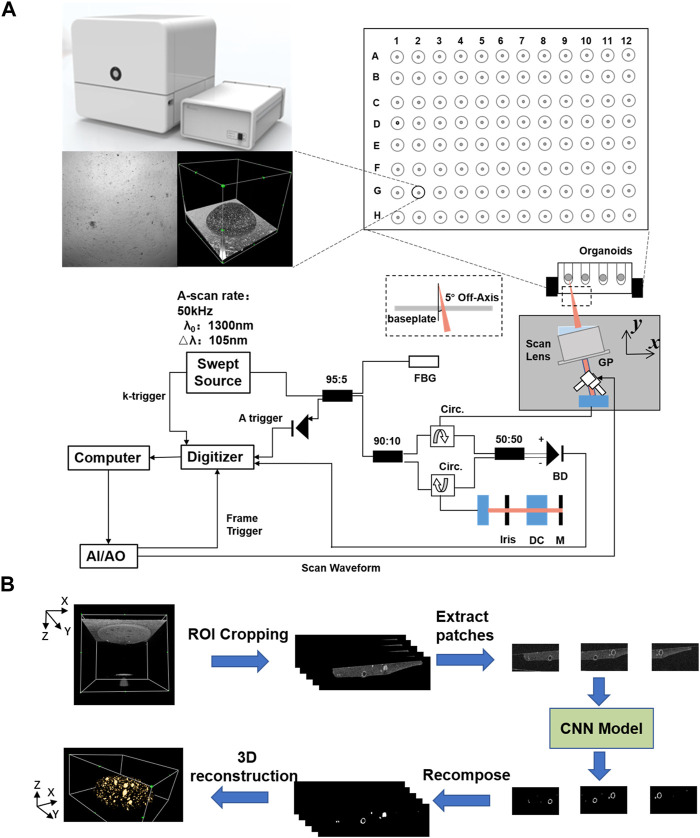

2.2 Image acquisition and preprocessing

We applied the SS-OCT system developed by Regenovo (Figure 1, Bio-Architect@ Tomography, Regenovo, China) to acquire 3D images of the organoids. The system has a central wavelength of 1,310 nm, an axial resolution of 7.6 μm and a lateral resolution of 15 μm. The OCT images were resized in the axial direction to yield isometric voxel spacing. Notably, the data used in this paper were acquired by inverted imaging with a tilt of 5–10° in order to reduce noise. For organoid clusters within printed microbeads, OCT data acquisition and storage took no more than 30 s in total, and each OCT monitoring session did not exceed 1 h, to reduce the impact of imaging on organoid growth. OCT monitoring started once most of the organoids were observed sprouting under a 4× microscope and lasted for 7 days, with a 24 h interval between acquisitions. Thus, a total of 364 sets of 3D OCT data were obtained for the 52 samples of printed organoid clusters. The size of each 3D OCT volume was 715 (z) × 800 (x) × 800 (y) voxels, and the total field of view was 3.58 mm (z) × 4 mm (x) × 4 mm (y).

FIGURE 1.

Schematic diagram of the SS-OCT system and the data processing flowchart for organoid imaging and segmentation. (A) Schematic diagram of the SS-OCT system for organoid imaging, (B) The data processing flowchart for organoid segmentation.

We selected 3–4 well-developed organoid samples for each of the three tumor types and chose different time points to cover the entire culture cycle. The final dataset contains 10 samples with a total of 24 OCT volumes, which is about 1/5 of all samples. Supervised machine learning algorithms require manually annotated data for training and testing, and the workload of segmenting every frame of the OCT data by hand is not feasible. Therefore, each of the 24 OCT volumes was annotated every four frames, yielding 3,729 B-scan images. We adjusted the contrast of the images and denoised them by filtering so that the organoids could be identified more easily. The ground truth images were manually annotated by experts under the guidance of bright-field images, and the data were divided into two parts that were annotated along orthogonal directions. We first used the bright-field images to determine the position of each organoid in the OCT images. The ground truth was then established from the morphological characteristics of the organoid, its signal intensity, and its continuity across adjacent B-scan images. Considering that organoids below 32 μm are not biologically significant and are prone to labeling errors, we set the lower bound of organoid size to 32 μm (Gil et al., 2021). The software tool ITK-SNAP was used for this work.

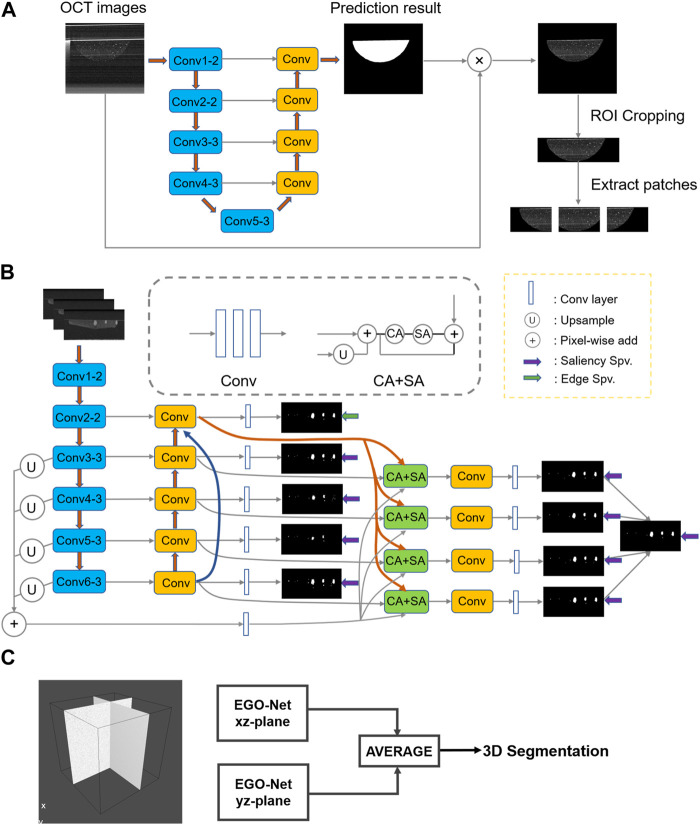

2.3 Designing convolutional neural network models to segment organoids

During 3D printing, deviations in microbead position and differences in manual handling between operators may cause the organoids to appear at different locations in the OCT images. In this paper, a simple neural network, VGG-Unet (Simonyan & Zisserman, 2014; Ronneberger et al., 2015), was first used to extract the printed microbead matrix as the region of interest (Figure 2A). The printing errors (volume and position deviations) were then calibrated and the interference signals were removed, so that the multi-scale structure of the organoid clusters within the printed microbeads could be reconstructed accurately.

FIGURE 2.

The network structures and methods used in the entire segmentation process. (A) The VGG-Unet structure used to determine the microbead region of interest. (B) Architecture of the proposed EGO-Net. (The left side shows the encoder and decoder combination with a U-shaped structure. The encoder receives the image as input and generates multi-level and multi-resolution feature representations, while fusing the features after Conv2-2 to obtain multi-scale features. The decoder then receives the feature representation from the encoder to generate the corresponding predicted output, and uses the output corresponding to Conv2-2 to generate edge features. The segmentation results are obtained on the right side by fusing the multi-scale features, predicted outputs and edge features and decoding them accordingly.) (C) The final 3D segmented image obtained by averaging the results of two orthogonal directions.

After obtaining OCT images [240 (z) × 800 (x) pixels] containing only Matrigel and organoid clusters, we performed preprocessing such as cropping and grayscale transformation to compensate for the differing grayscale levels and non-uniform contrast of OCT images of different organoids. Each B-scan image containing organoid clusters was then divided into three image blocks of 240 (z) × 400 (x) pixels, which preserved organoid integrity while reducing the size of the input image and thereby improved the segmentation efficiency and accuracy of the neural network. Next, we applied four CNN-based methods, U-Net, U2-Net (Qin et al., 2020), EG-Net (Zhao et al., 2019) and nnU-net (Isensee et al., 2018), to multi-scale organoid segmentation of the pre-processed OCT images. The first three are 2D neural networks, and nnU-net is the 3D neural network with the best segmentation performance so far (Antonelli et al., 2021).
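The tiling step can be illustrated with a short sketch. The text does not state how three 400-pixel-wide blocks are cut from an 800-pixel-wide B-scan, so the version below assumes evenly spaced, overlapping blocks (start columns 0, 200 and 400); the function names `tile_starts` and `split_bscan` are hypothetical and not taken from the authors' code.

```python
def tile_starts(width, tile_width, n_tiles):
    """Evenly spaced start columns so that n_tiles tiles span [0, width)."""
    if n_tiles == 1:
        return [0]
    step = (width - tile_width) / (n_tiles - 1)
    return [round(i * step) for i in range(n_tiles)]


def split_bscan(bscan, tile_width=400, n_tiles=3):
    """Split a B-scan (list of rows of pixel values) into overlapping tiles.

    Assumption: tiles overlap by half their width, which keeps an organoid
    that straddles one tile border fully visible in the neighboring tile.
    """
    width = len(bscan[0])
    return [[row[start:start + tile_width] for row in bscan]
            for start in tile_starts(width, tile_width, n_tiles)]


# Dummy 240 (z) x 800 (x) B-scan whose pixel value equals its column index.
bscan = [list(range(800)) for _ in range(240)]
tiles = split_bscan(bscan)
print(tile_starts(800, 400, 3))                      # [0, 200, 400]
print(len(tiles), len(tiles[0]), len(tiles[0][0]))   # 3 240 400
```

With overlapping blocks, predictions in the shared columns would need to be merged (for example by averaging) when the blocks are stitched back together; that merging rule is likewise not specified in the text.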

The morphological sprouting of the organoid generally occurs when the diameter reaches 50 μm. To better evaluate the morphological changes during organoid growth, we designed EGO-Net to improve the segmentation accuracy for organoids in the morphological sprouting state, as shown in Figure 2B. Because the lower levels of the network have a small receptive field, they capture only local information, whereas accurate organoid segmentation also requires high-level semantic and positional information. As information passes from the high levels back to the low levels, the high-level information is gradually diluted, so we fuse the feature maps obtained from each convolutional block of the down-sampling part of the baseline EG-Net to obtain multi-scale features. The multi-scale features are then fused with the features of the side path outputs, so that each side path output contains both high-level and low-level information. Channel Attention (CA) and Spatial Attention (SA) were applied after fusing the edge features to make the network focus on the organoids to be segmented and suppress irrelevant information (Woo et al., 2018). Since this network (Figure 2B) is still a 2D neural network, the correlation between different directions of the 3D OCT data is easily lost, which leads to problems such as deformation and discontinuity in the 3D reconstruction of the segmented organoids. To address this, we propose a method that combines unidirectional input of three consecutive OCT frames (Li et al., 2017) with averaging of two predictions made in orthogonal directions (Prasoon et al., 2013; Pfister et al., 2019) (Figure 2C). This method takes the correlation between different directions of the 3D OCT data into account and uses orthogonal continuous input so that the two directions complement each other, which effectively mitigates the problems of unidirectional 2D segmentation while avoiding the efficiency cost of 3D neural networks.
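As a minimal sketch of the two ideas in this paragraph, the code below builds the three-frame input indices for one slicing direction and averages two probability volumes predicted along orthogonal directions. The clamping of indices at the volume ends, the 0.5 binarization threshold, and the function names are assumptions made for illustration; the text does not specify them.

```python
def three_frame_indices(n_frames):
    """For each B-scan index, return (previous, current, next) frame indices.

    Assumption: at the first and last frame the missing neighbor is replaced
    by the frame itself (index clamping).
    """
    last = n_frames - 1
    return [(max(i - 1, 0), i, min(i + 1, last)) for i in range(n_frames)]


def average_orthogonal(prob_a, prob_b, threshold=0.5):
    """Average two probability volumes voxel by voxel and binarize.

    Both volumes are nested lists indexed [z][y][x]. prob_a is assumed to come
    from slicing along one lateral axis and prob_b from slicing along the
    orthogonal one, with both already re-stacked into the same orientation.
    """
    return [[[1 if (a + b) / 2 >= threshold else 0
              for a, b in zip(row_a, row_b)]
             for row_a, row_b in zip(plane_a, plane_b)]
            for plane_a, plane_b in zip(prob_a, prob_b)]


print(three_frame_indices(4))  # [(0, 0, 1), (0, 1, 2), (1, 2, 3), (2, 3, 3)]

# Toy 1 x 1 x 3 volumes: the two directions disagree on the middle voxel.
pred_x = [[[0.9, 0.8, 0.1]]]
pred_y = [[[0.7, 0.3, 0.2]]]
print(average_orthogonal(pred_x, pred_y))  # [[[1, 1, 0]]]
```

In the toy example, the middle voxel is kept because the confident prediction from one direction outweighs the weak prediction from the other, which is how averaging can reconnect fragments that a single direction would miss.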

We trained the network using a hybrid loss function that combines the BCE loss function (de Boer et al., 2005) and the IoU loss function (Mattyus et al., 2017), both of which are computed from the ground truth label of each pixel and the predicted probability of that pixel being organoid. We used six-fold cross-validation with 60 training epochs per fold and applied data augmentation such as rotation and horizontal flipping during training. An adaptive moment estimation (Adam) solver was used to optimize the network with a learning rate of 5 × 10⁻⁵ and momentum of 0.90. The process was implemented in Python 3.7 with a PyTorch backend on a single NVIDIA Quadro RTX 4000.
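For orientation, the commonly used forms of the two loss terms are written out below. This is a sketch under stated assumptions rather than the authors' exact definition: the symbols $G(x,y)$ (ground truth label of a pixel) and $P(x,y)$ (predicted probability of that pixel being organoid) are introduced here for illustration, and an unweighted sum of the two terms is assumed because the weighting is not given in the text.

```latex
\begin{aligned}
L &= L_{\mathrm{bce}} + L_{\mathrm{iou}} \\
L_{\mathrm{bce}} &= -\sum_{(x,y)} \Bigl[ G(x,y)\,\log P(x,y)
      + \bigl(1 - G(x,y)\bigr)\,\log\bigl(1 - P(x,y)\bigr) \Bigr] \\
L_{\mathrm{iou}} &= 1 - \frac{\sum_{(x,y)} P(x,y)\,G(x,y)}
      {\sum_{(x,y)} \bigl[ P(x,y) + G(x,y) - P(x,y)\,G(x,y) \bigr]}
\end{aligned}
```

The BCE term penalizes each pixel independently, while the IoU term scores the overlap of the whole predicted region, so the combination is sensitive to both pixel-level errors and region-level agreement.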

2.4 Organoid tracking and quantitative analysis

Based on the segmentation results of the proposed CNN model, we can track individual organoids within microbeads, measure individual organoid volume and the overall organoid number and volume, and perform clustering analysis of volume. The organoid volume is calculated only for the solid part, not for the whole region enclosed by the outer contour. A three-point localization method was used for OCT imaging of the organoid clusters in the culture well plate to keep the position of each data acquisition as consistent as possible. Moreover, we propose an approach that combines ICP alignment with center-of-mass pairing to track individual organoids within a cluster over time. Specifically, we first identified and labeled the organoids within the microbeads based on the segmentation results of the CNN model, and then used a simplified point cloud-based iterative closest point (ICP) algorithm to align the organoid segmentation datasets from different time points. Next, we extracted the center of mass of each organoid from the aligned datasets and created a pairing table by matching each organoid to the organoid with the closest center of mass at the adjacent time point. Chaining the pairing tables across multiple time points enabled the tracking of a single organoid. The results of individual organoid tracking were used to demonstrate the change of organoid number and morphology over time.
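The pairing step can be sketched as follows, assuming the two time points have already been aligned (the ICP step is not reproduced here). The greedy closest-first matching, the distance cutoff `max_dist`, and all function names are illustrative assumptions; the text only states that the closest centers of mass at adjacent time points are matched.

```python
import math


def center_of_mass(voxels):
    """Mean coordinate of a list of (z, y, x) voxel positions."""
    n = len(voxels)
    return tuple(sum(v[i] for v in voxels) / n for i in range(3))


def distance(p, q):
    """Euclidean distance between two 3D points."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def pair_centroids(prev, curr, max_dist):
    """Greedy nearest-centroid matching between two aligned time points.

    prev and curr map organoid labels to centroids. Returns a pairing table
    {prev_label: curr_label}. Organoids without an unmatched neighbor within
    max_dist (a hypothetical cutoff) stay unpaired, e.g. newly appeared ones.
    """
    candidates = sorted(
        (distance(p, c), prev_label, curr_label)
        for prev_label, p in prev.items()
        for curr_label, c in curr.items()
    )
    pairs, used = {}, set()
    for dist, prev_label, curr_label in candidates:
        if dist > max_dist:
            break  # remaining candidates are even farther apart
        if prev_label not in pairs and curr_label not in used:
            pairs[prev_label] = curr_label
            used.add(curr_label)
    return pairs


# Toy centroids in voxel units; label 9 on day 2 is a newly detected organoid.
day1 = {1: (10.0, 10.0, 10.0), 2: (50.0, 50.0, 50.0)}
day2 = {7: (51.0, 49.0, 50.0), 8: (11.0, 10.0, 9.0), 9: (200.0, 200.0, 200.0)}
pairs = pair_centroids(day1, day2, max_dist=20.0)
print(pairs)  # {1: 8, 2: 7}
```

Chaining such tables (day 1 to day 2, day 2 to day 3, and so on) gives the multi-time-point track of each organoid.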

2.5 Evaluation metrics

Four different metrics were used to evaluate the accuracy of the segmentation. Dice and Jaccard were used to measure the similarity between the prediction and the ground truth, and are defined as:

$$\mathrm{Dice} = \frac{2\,TP}{2\,TP + FP + FN} \tag{1}$$

$$\mathrm{Jaccard} = \frac{TP}{TP + FP + FN} \tag{2}$$

where true positives (TP) are the number of pixels for which the model correctly predicts the positive class, false positives (FP) are the number of pixels for which the model incorrectly predicts the positive class, and false negatives (FN) are the number of pixels for which the model incorrectly predicts the negative class.

Dice and Jaccard focus on the overlap between the prediction and the ground truth, and the size of the segmented region also affects their values. Therefore, we additionally selected precision and sensitivity to characterize the classification of positive samples. Precision indicates the probability of a correct prediction among the samples predicted as organoid, and a higher precision indicates fewer cases of background being predicted as organoid. It is defined as:

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{3}$$

Sensitivity indicates the probability of a correct prediction among the samples that are actually organoid, and a higher sensitivity indicates fewer cases of organoid being predicted as background. It is defined as:

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN} \tag{4}$$
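The four metrics can be computed directly from the pixel counts TP, FP and FN. The sketch below works on flattened binary masks; the function name and the convention of returning 0.0 when a denominator is zero are assumptions made for this example.

```python
def safe_ratio(numerator, denominator):
    """Return numerator / denominator, or 0.0 for an empty denominator (assumed convention)."""
    return numerator / denominator if denominator else 0.0


def segmentation_metrics(pred, truth):
    """Dice, Jaccard, precision and sensitivity for two flat binary masks."""
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    return {
        "dice": safe_ratio(2 * tp, 2 * tp + fp + fn),
        "jaccard": safe_ratio(tp, tp + fp + fn),
        "precision": safe_ratio(tp, tp + fp),
        "sensitivity": safe_ratio(tp, tp + fn),
    }


# Toy masks with TP = 3, FP = 1, FN = 1.
pred = [1, 1, 1, 0, 0, 0, 1, 0]
truth = [1, 1, 0, 0, 0, 1, 1, 0]
print(segmentation_metrics(pred, truth))
# {'dice': 0.75, 'jaccard': 0.6, 'precision': 0.75, 'sensitivity': 0.75}
```

The toy case also shows the fixed relation between the two overlap metrics, Dice = 2·Jaccard / (1 + Jaccard): 2 × 0.6 / 1.6 = 0.75.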

3 Results

3.1 Quantitative analysis of segmentation performance

Previous studies have revealed that organoid morphogenesis occurs at a diameter of about 50 μm (Gjorevski et al., 2016; Arora et al., 2017), which was also observed in our study. Therefore, we compared our proposed method with other single neural networks for different classes of organoid equivalent diameter, and the metrics of all models on the test set are shown in Table 1. It should be pointed out that the other neural networks do not use the series of optimized processing steps, for example, VGG-Unet to extract the printed microbead matrix region and orthogonal continuous input prediction to address deformation and dislocation in the 3D reconstruction of the segmented organoids. The table shows that our proposed method outperforms the other neural networks, obtaining the highest scores in Dice, Jaccard, Precision and Sensitivity. When the organoid diameter is between 32 and 50 μm, our method achieves a Dice score above 80%. When the organoid diameter is between 50 and 90 μm, the segmentation performance of every model improves, with our method exceeding nnUnet, U2Net and EG-Net by 3 to 6 percentage points in Dice and exceeding U-net by 9.6 percentage points. When the organoid diameter is larger than 90 μm, our method improves Dice by 4.8 percentage points over EG-Net, the best performing of the other single neural networks, and by 8.4 percentage points over U-net. Our method has the best segmentation performance across organoid diameters, indicating that it can accurately identify organoids and effectively improve the segmentation accuracy.

TABLE 1.

Comparison between the proposed segmentation method and other single neural networks.

| Metric | Diameter | U-net | nnUnet | U2Net | EG-net | Our method |
|---|---|---|---|---|---|---|
| Dice | 50 > d ≥ 32 μm | 0.680 | 0.710 | 0.753 | 0.758 | 0.801 |
| Dice | 90 > d ≥ 50 μm | 0.743 | 0.785 | 0.786 | 0.809 | 0.839 |
| Dice | d ≥ 90 μm | 0.820 | 0.828 | 0.850 | 0.856 | 0.904 |
| Jaccard | 50 > d ≥ 32 μm | 0.546 | 0.552 | 0.635 | 0.635 | 0.683 |
| Jaccard | 90 > d ≥ 50 μm | 0.619 | 0.624 | 0.683 | 0.701 | 0.737 |
| Jaccard | d ≥ 90 μm | 0.707 | 0.718 | 0.763 | 0.767 | 0.832 |
| Precision | 50 > d ≥ 32 μm | 0.722 | 0.681 | 0.777 | 0.781 | 0.824 |
| Precision | 90 > d ≥ 50 μm | 0.808 | 0.759 | 0.821 | 0.843 | 0.854 |
| Precision | d ≥ 90 μm | 0.892 | 0.861 | 0.899 | 0.901 | 0.902 |
| Sensitivity | 50 > d ≥ 32 μm | 0.700 | 0.715 | 0.779 | 0.780 | 0.802 |
| Sensitivity | 90 > d ≥ 50 μm | 0.733 | 0.657 | 0.791 | 0.809 | 0.844 |
| Sensitivity | d ≥ 90 μm | 0.765 | 0.853 | 0.837 | 0.829 | 0.921 |

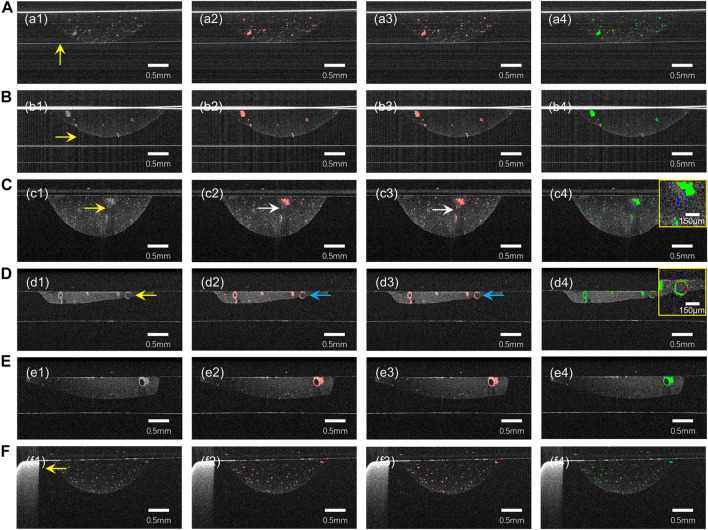

As can be seen from Figure 3, our model provides satisfactory segmentation despite the problems that arise in OCT imaging of organoid clusters in their normal culture environment, such as differences in growth density and morphology (Figure 3E), limitations of the preparation process (Figure 3F), strong reflection noise and autocorrelation noise (Figures 3A, B), and low contrast (Figures 3C, D). The model is prone to errors only in cases that are also difficult for experts, such as organoids with diameters less than 50 μm (Figure 3A), a Matrigel signal that is strong compared with the surrounding signal (Figure 3C), or similar signal intensities of organoid and Matrigel (arrow in Figure 3D).

FIGURE 3.

The prediction results of our model for some challenging cases of B-Scan. (A) autocorrelation noise; (B) strong reflection noise; (C,D) low contrast and blurred boundaries; (E) different density and morphology of organoid. (F) Well plates. (a1)–(f1) are OCT images, (a2)–(f2) are ground truth, (a3)–(f3) are neural network prediction results, and (a4)–(f4) are fusion results (green) of ground truth (red) and prediction results (blue). The yellow arrows indicate possible problems in the OCT images. The white arrow in row 3 points out that Matrigel was misclassified as an organoid. The blue arrow in row 4 points out that the organoid was not successfully segmented.

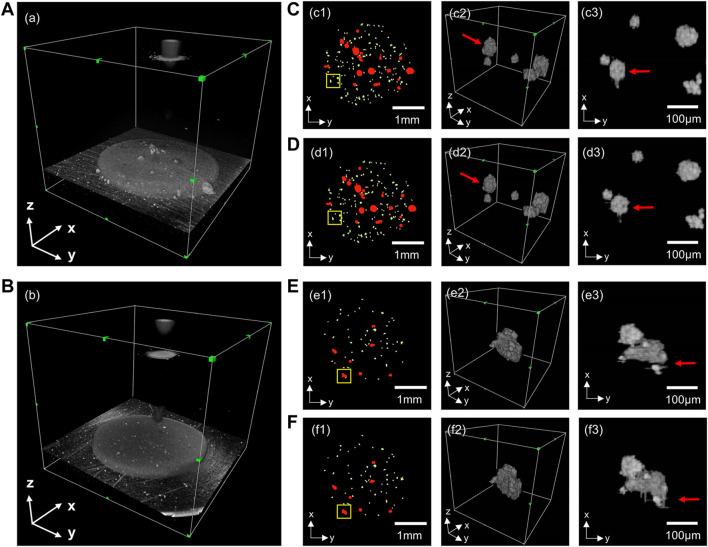

We used the orthogonal continuous input prediction method to reduce the errors of unidirectional prediction by a 2D neural network, and compared unidirectional prediction with orthogonal continuous input prediction, as shown in Figure 4. Because information in the third dimension is missing, unidirectional prediction by the 2D neural network leads to problems such as deformation, missing edges (Figure 4C3) and discontinuity (Figure 4E3) in some of the organoids. As a result, the morphological changes of the organoids cannot be shown accurately, and the quantitative analysis of multi-scale organoid clusters also becomes erroneous. The orthogonal continuous input prediction method effectively compensates for the missing information in the third dimension. As can be seen from Figure 4D3, this method yields more rounded and complete organoid shapes; as can be seen from Figure 4F3, it reconnects into a whole the fragmented organoid produced by unidirectional 2D prediction, which improves the accuracy of organoid identification.

FIGURE 4.

Comparison of the 3D segmentation results of unidirectional prediction and orthogonal continuous input prediction. (A,B) are two sets of 3D OCT examples; (C,E) are 3D segmentations with unidirectional prediction; (D,F) are 3D segmentations with orthogonal continuous input prediction. (c1–f1) are top views of the 3D reconstructions of the organoid segmentations; (c2–f2) are enlarged 3D reconstructions of the yellow boxes in (c1–f1); (c3–f3) are the top views corresponding to (c2–f2).

3.2 Analysis of organoid growth based on segmentation results

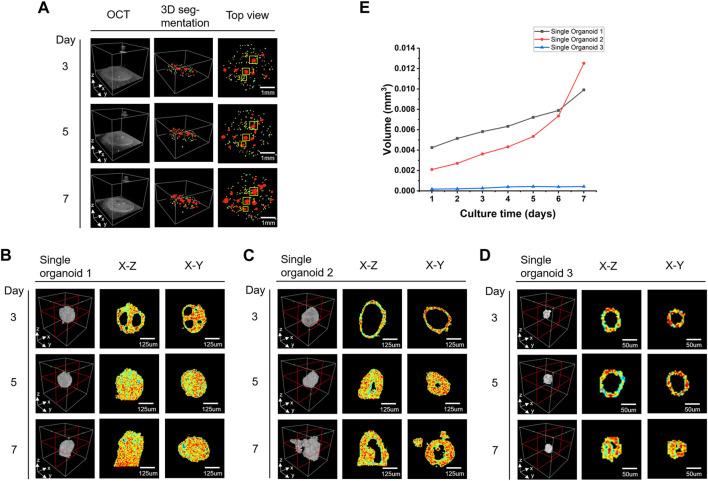

Figure 5A shows the growth of the colon cancer organoids during the monitoring period, with organoids of different sizes shown in different colors. Three individual organoids were selected for display (Figures 5B–D) and volume analysis (Figure 5E). Different organoids from the same patient showed different morphologies in the same well. Organoids with an initial diameter of around 150 μm may look almost identical from the outside yet differ markedly in internal structure, as in the gradual disappearance of the cavity of organoid 1 (Figure 5B) and the disappearance and regrowth of the cavity of organoid 2 (Figure 5C). Morphological sprouting started when the initial diameter of the organoid was around 50 μm (Figure 5D). The volume monitoring of the three organoids over 7 days (Figure 5E) shows that the solid volume of organoids with an initial diameter over 90 μm, such as organoids 1 and 2, grew rapidly over time, while organoid 3, which had an initial diameter near 50 μm, grew more slowly, increasing in volume only for the first 4 days and then remaining essentially unchanged.

FIGURE 5.

Shape and size changes of colon cancer organoids during the monitoring period. (A) Colon cancer organoids at different time points. From left to right are 3D OCT images, 3D segmentation results and the top views. Organoids of different diameters are marked with different colors (yellow: 50 μm > d ≥ 32 μm, green: 90 μm > d ≥ 50 μm, red: d ≥ 90 μm). (B–D) Enlarged views of the three organoids marked as 1, 2, and 3 by the yellow boxes in (A). (E) The volume growth analysis of the three organoids in (B–D) over 7 days.

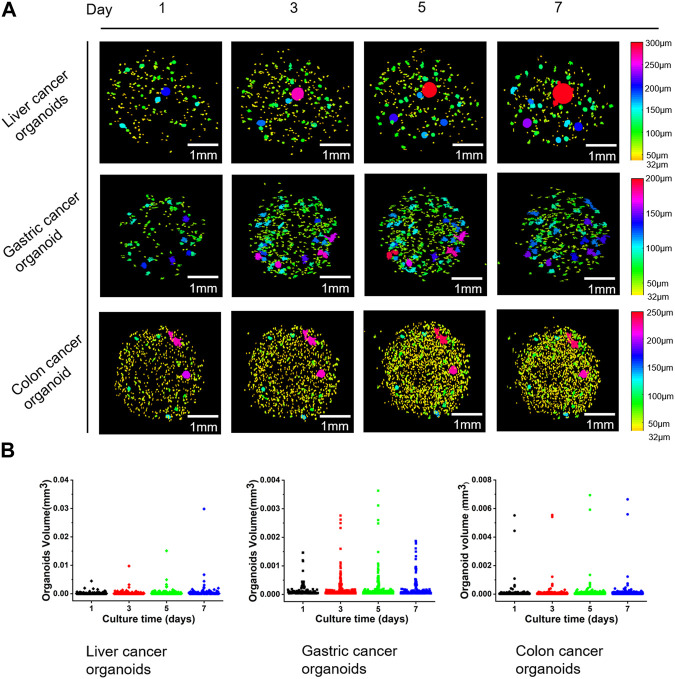

Based on the accurate identification of the internal cavities of the organoids, we achieved a more accurate quantitative analysis of the organoids and monitored the changes in the number and volume of all organoids in the well plate over time. To visualize the growth and development of the organoids, we first filled the inside of each organoid, then calculated the equivalent diameter from the organoid volume and assigned a color to each organoid according to its diameter. Figure 6 shows the growth status and the scatter plots of volume changes for three groups of different types of organoid clusters at different culture time points. Organoids with diameters less than 32 μm are not shown in Figure 6A so that the organoid clusters are displayed more clearly. According to Figures 6A, B, on day 1 of culture the percentage of organoids with diameters less than 50 μm exceeded 50% in the liver and gastric cancer organoid clusters and exceeded 80% in the intestinal cancer organoid clusters. During the subsequent growth, the scale and volume of the liver cancer organoids grew significantly while their number did not change significantly, and the percentage of organoids less than 50 μm in diameter continued to decrease. The diameter and number of the gastric cancer organoids continued to increase, while the percentage of organoids less than 50 μm in diameter increased slowly in the first 5 days and then decreased slightly. The diameter of the intestinal cancer organoids grew slowly, and the percentage of organoids with diameters less than 50 μm decreased significantly in the first 5 days and then increased slightly. In addition, the statistics of the last day showed that even the lowest percentage of organoids with diameters less than 50 μm among the three types was 37.4%, indicating that a considerable proportion of the organoids did not grow significantly.
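The conversion from segmented volume to equivalent diameter can be sketched as below. The text does not give the formula, so the sketch assumes the usual definition (the diameter of a sphere with the same volume as the filled organoid) and an isotropic voxel edge of 5 μm, derived from the stated 4 mm field of view over 800 voxels; the function names and bin labels are illustrative.

```python
import math

VOXEL_UM = 5.0  # assumed isotropic voxel edge: 4 mm field of view / 800 voxels


def equivalent_diameter_um(n_voxels, voxel_um=VOXEL_UM):
    """Diameter of the sphere whose volume equals that of the filled organoid."""
    volume_um3 = n_voxels * voxel_um ** 3
    return (6.0 * volume_um3 / math.pi) ** (1.0 / 3.0)


def size_class(d_um):
    """Diameter bins matching Table 1 and the Figure 5 color code."""
    if d_um < 32:
        return "below 32 um (not analyzed)"
    if d_um < 50:
        return "32-50 um"
    if d_um < 90:
        return "50-90 um"
    return ">= 90 um"


# An organoid occupying 524 voxels has a volume of 65,500 cubic micrometers.
d = equivalent_diameter_um(524)
print(round(d, 1), size_class(d))  # 50.0 50-90 um
```

Under these assumptions, an organoid at the 32 μm lower bound occupies only about 137 voxels, which helps explain why segmentation of the smallest size class is the most error-prone.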

FIGURE 6.

Examples of the changes of three different types of organoids over time and scatter plots of organoid volumes. (A) Three-dimensional reconstruction results of organoid volume changes over time for liver, gastric, and colon cancer organoids. (B) Scatter plots corresponding to the volume of the organoids in (A).

4 Discussion

Patient-derived cancer organoids (PCOs) are 3D in vitro miniaturized models that display a spatial architecture strongly resembling the corresponding tumor tissues and recapitulate the physiological functions of the parent tissue, offering unprecedented opportunities for disease mechanism research, drug screening and personalized medicine. However, current detection methods for PCOs are usually destructive or provide only planar information, making it difficult to achieve long-term, non-destructive 3D monitoring and analysis of PCO clusters. In this study, we propose the OPO method for 3D imaging, segmentation and analysis of printed organoid clusters based on OCT imaging with deep CNNs, which can reconstruct the multi-scale structure of organoid clusters within printed microbeads and visualize information about organoids, cavities and volumes.

The advantages of OCT, such as three-dimensional, non-invasive, and high-resolution imaging, allow it to monitor the growth and drug response of organoid clusters and capture the heterogeneity of PCOs. However, the sensitivity fall-off effect of OCT may cause signal variations at different depths of the organoid, and the low contrast and unavoidable speckle noise in OCT images hamper organoid identification. Previous studies (Nuciforo et al., 2018; Ngo et al., 2020) that used conventional image processing to analyze organoid OCT images had difficulty segmenting organoids smaller than 90 μm. In contrast, the CNN model designed in this paper first extracts the printed microbead matrix region of interest with a simple VGG-Unet (Figure 2A), and the designed EGO-Net then improves the segmentation accuracy for organoids with diameters around 50 μm (Table 1). Moreover, feeding three consecutive frames in one direction and averaging the predictions of two neural networks in orthogonal directions introduces 3D information and ensures the continuity of the segmentation (Figure 4), which reduces the deformation (Figure 4D3) and recognition deficits (Figure 4F3) in the 3D organoid reconstruction. The CNN model designed in this paper exhibits good robustness and adaptability. It provides satisfactory segmentation results for multi-scale organoids in OCT images with low contrast and heavy noise (Figure 3), shows excellent segmentation accuracy for small-scale organoids (d < 90 μm), and identifies organoids from different patient origins (liver, gastric and colon cancers) despite their large differences in morphology and density (Figure 6A).

The CNN model proposed in this paper can also identify the edges of the printed microbead matrix, which can be used to calibrate the printing position. Moreover, the volume error of microbead printing can be calibrated by quantifying the volume of the segmented region, which is paramount for automating organoid preparation. The proposed organoid tracking analysis method visualizes the evolution of the morphology and internal structure of individual organoids at different time points (Figures 5B–D), and the 3D volume mapping helps to understand the underlying cellular dynamics, such as fusion, luminal dynamics, migration, and rotation, in more detail and from different perspectives. The quantitative tracking analysis of the morphological parameters of individual organoids (Figure 5E) helps to explore the heterogeneity of PCOs for precise tumor drug use (Boehnke et al., 2016). Although the current organoid tracking analysis method achieved the expected results, it still has limitations. Tracking accuracy depends directly on segmentation accuracy, so segmentation errors propagate into tracking errors. Large organoids (d > 90 μm) are tracked accurately because they are easy to segment and their errors are concentrated mainly at the edges. For organoids with diameters between 50 and 90 μm, the segmentation accuracy of the model is 83.9%; despite a small number of identification errors, these organoids can still be tracked accurately in most cases. For organoids with diameters below 50 μm, the identification accuracy of our segmentation algorithm is not yet high enough, and some organoids below 32 μm grow to about 50 μm, so identification errors for these small organoids can easily lead to tracking errors.

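To make the three diameter ranges discussed above concrete, the sketch below converts a segmented voxel count into a diameter and assigns it to a range. It is only an illustration under stated assumptions: the equivalent-sphere definition of diameter, the function names `equivalent_diameter_um` and `size_class`, and the 8 μm³ voxel volume are hypothetical choices made for the example, not details taken from the method itself.

```python
import math


def equivalent_diameter_um(voxel_count: int, voxel_volume_um3: float) -> float:
    """Diameter of the sphere whose volume equals the segmented volume."""
    volume_um3 = voxel_count * voxel_volume_um3
    return (6.0 * volume_um3 / math.pi) ** (1.0 / 3.0)


def size_class(diameter_um: float) -> str:
    """Assign one of the three diameter ranges discussed in the text."""
    if diameter_um < 50.0:
        return "small (<50 um)"
    if diameter_um <= 90.0:
        return "medium (50-90 um)"
    return "large (>90 um)"


# Hypothetical voxel volume of 8 um^3 (2 um isotropic sampling)
for voxels in (1_000, 20_000, 100_000):
    d = equivalent_diameter_um(voxels, 8.0)
    print(f"{voxels:>7d} voxels -> d = {d:5.1f} um, {size_class(d)}")
```

Because diameter scales with the cube root of volume, a segmentation error of a few voxels shifts the diameter of a small organoid far more than that of a large one, which is consistent with tracking being least reliable below 50 μm.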
In this paper, we further demonstrated how different types of patient-derived cancer organoid clusters change over time (Figure 6A), and quantified and analyzed the changes in the number and volume of organoids in the clusters (Figure 6B). The results show that the growth rate of an organoid is influenced by its initial scale, which is closely associated with organoid viability. In fact, the diameter and morphological characteristics of an organoid are key indicators of its maturation; accurate assessment of them would therefore help guide organoid culture. In addition, accurate quantification and tracking of organoids at multiple scales helps to observe the growth and structural evolution of organoid clusters and to assess the appropriate developmental time scale (Yavitt et al., 2021), which enhances the understanding of early organoid formation mechanisms and helps guide large-scale organoid culture. For example, we observed an overall shrinking of the organoid (Figure 6A), known as size oscillation, which occurs when the diameter of intestinal and gastric organoids approaches 200 μm (Hof et al., 2021). As a next step, we will consider combining classification algorithms to distinguish organoids of different morphologies and introducing OCT attenuation coefficients and dynamic OCT (Scholler et al., 2020; Yang et al., 2020; Ming et al., 2022) to further characterize the viability and motility of organoids, providing powerful tools for studying organoid growth and for drug screening based on structure-function imaging.
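One way to compare growth across initial scales is sketched below. It is a hedged example rather than the calculation used in this study: the exponential (specific) growth rate, the single 90 μm cutoff, the function names `specific_growth_rate` and `mean_rate_by_initial_size`, and the track values are all assumptions, and the numbers are made up purely for illustration.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def specific_growth_rate(v_start: float, v_end: float, days: float) -> float:
    """Exponential growth rate per day: ln(V_end / V_start) / days."""
    return math.log(v_end / v_start) / days


def mean_rate_by_initial_size(
    tracks: Iterable[Tuple[float, float, float]],
    days: float,
    cutoff_um: float = 90.0,
) -> Dict[str, float]:
    """Average the growth rate separately for small and large starters.

    Each track is (initial diameter in um, start volume, end volume).
    """
    groups = defaultdict(list)
    for d0_um, v_start, v_end in tracks:
        label = "initial d > cutoff" if d0_um > cutoff_um else "initial d <= cutoff"
        groups[label].append(specific_growth_rate(v_start, v_end, days))
    return {label: sum(r) / len(r) for label, r in groups.items()}


# Made-up tracks for illustration only (not measured data)
tracks = [(40.0, 3.0e4, 6.0e4), (60.0, 1.0e5, 3.0e5), (120.0, 9.0e5, 3.6e6)]
rates = mean_rate_by_initial_size(tracks, days=4.0)
```

A logarithmic rate is used here so that organoids of very different starting volumes can be compared on the same scale; a simple relative volume change would serve equally well for a first look.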

5 Conclusion

In this study, we propose a three-dimensional imaging, segmentation and analysis method for printed organoid clusters based on OCT with CNNs (OPO), which reconstructs the multi-scale structure of organoid clusters within printed microbeads and achieves tracking and quantitative analysis of organoids. By tracking individual organoids and quantifying morphological parameters such as organoid number and volume from the CNN predictions, the growth, structural evolution and heterogeneity of organoid clusters can be analyzed. The proposed method is valuable for the study of organoid growth, drug screening and tumor drug sensitivity detection based on organoids.

Acknowledgments

We thank Regenovo Ltd. (Hangzhou, China) for providing organoid samples.

Funding Statement

This work was supported by the National Key Research and Development Program of China (No. 2022YFA1104600), the National Natural Science Foundation of China (No. 31927801), the Key Research and Development Foundation of the Science and Technology Department of Zhejiang Province (No. 2022C01123), and the Natural Science Foundation of Zhejiang Province (No. LQ20H100002).

Data availability statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by Research Ethics Committee of the First Affiliated Hospital, College of Medicine, Zhejiang University. The patients/participants provided their written informed consent to participate in this study.

Author contributions

DB and XZ participated in the algorithm design. DB participated in experiments, and wrote the manuscript. LW and SY conceived the study and revised the manuscript. MX directed the whole work. DB, KH, LW, and SY participated in the analysis of the experimental results. All authors read the final manuscript and agreed to submit it.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

- Abdul L., Xu J., Sotra A., Chaudary A., Gao J., Rajasekar S., et al. (2022). D-CryptO: Deep learning-based analysis of colon organoid morphology from brightfield images. Lab Chip 22 (21), 4118–4128. doi:10.1039/d2lc00596d

- Antonelli M., Reinke A., Bakas S., Farahani K., Kopp-Schneider A., Landman B. A., et al. (2021). The medical segmentation decathlon. Nat. Commun. 13, 4128. doi:10.1038/s41467-022-30695-9

- Arora N., Alsous J. I., Guggenheim J. W., Mak M., Munera J., Wells J. M., et al. (2017). A process engineering approach to increase organoid yield. Dev. Camb. 144 (6), 1128–1136. doi:10.1242/dev.142919

- Badar M., Haris M., Fatima A. (2020). Application of deep learning for retinal image analysis: A review. Comput. Sci. Rev. 35, 100203. doi:10.1016/j.cosrev.2019.100203

- Bian X., Li G., Wang C., Liu W., Lin X., Chen Z., et al. (2021). A deep learning model for detection and tracking in high-throughput images of organoid. Comput. Biol. Med. 134, 104490. doi:10.1016/j.compbiomed.2021.104490

- Boehnke K., Iversen P. W., Schumacher D., Lallena M. J., Haro R., Amat J., et al. (2016). Assay establishment and validation of a high-throughput screening platform for three-dimensional patient-derived colon cancer organoid cultures. J. Biomol. Screen. 21 (9), 931–941. doi:10.1177/1087057116650965

- Broutier L., Mastrogiovanni G., Verstegen M. M. A., Francies H. E., Gavarró L. M., Bradshaw C. R., et al. (2017). Human primary liver cancer-derived organoid cultures for disease modeling and drug screening. Nat. Med. 23 (12), 1424–1435. doi:10.1038/nm.4438

- Cai L., Gao J., Zhao D. (2020). A review of the application of deep learning in medical image classification and segmentation. Ann. Transl. Med. 8 (11), 713. doi:10.21037/atm.2020.02.44

- Chung P. H. Y., Babu R. O., Wu Z., Wong K. K. Y., Tam P. K. H., Lui V. C. H. (2022). Developing biliary atresia-like model by treating human liver organoids with polyinosinic:polycytidylic acid (poly (I:C)). Curr. Issues Mol. Biol. 44 (2), 644–653. doi:10.3390/cimb44020045

- de Boer P. T., Kroese D. P., Mannor S., Rubinstein R. Y. (2005). A tutorial on the cross-entropy method. Ann. operations Res. 134 (1), 19–67. doi:10.1007/s10479-005-5724-z

- de Medeiros G., Ortiz R., Strnad P., Boni A., Moos F., Repina N., et al. (2022). Multiscale light-sheet organoid imaging framework. Nat. Commun. 13 (1), 4864. doi:10.1038/s41467-022-32465-z

- Deloria A. J., Haider S., Dietrich B., Kunihs V., Oberhofer S., Knofler M., et al. (2021). Ultra-high-resolution 3D optical coherence tomography reveals inner structures of human placenta-derived trophoblast organoids. IEEE Trans. Biomed. Eng. 68 (8), 2368–2376. doi:10.1109/TBME.2020.3038466

- Gil D. A., Deming D. A., Skala M. C. (2021). Volumetric growth tracking of patient-derived cancer organoids using optical coherence tomography. Biomed. Opt. Express 12 (7), 3789. doi:10.1364/boe.428197

- Gjorevski N., Sachs N., Manfrin A., Giger S., Bragina M. E., Ordóñez-Morán P., et al. (2016). Designer matrices for intestinal stem cell and organoid culture. Nature 539 (7630), 560–564. doi:10.1038/nature20168

- Hesamian M. H., Jia W., He X., Kennedy P. (2019). Deep learning techniques for medical image segmentation: Achievements and challenges. J. Digital Imaging 32 (4), 582–596. doi:10.1007/s10278-019-00227-x

- Hof L., Moreth T., Koch M., Liebisch T., Kurtz M., Tarnick J., et al. (2021). Long-term live imaging and multiscale analysis identify heterogeneity and core principles of epithelial organoid morphogenesis. BMC Biol. 19 (1), 37. doi:10.1186/s12915-021-00958-w

- Isensee F., Petersen J., Klein A., Zimmerer D., Jaeger P. F., Kohl S., et al. (2018). “nnU-Net: Self-adapting framework for U-Net-Based medical image segmentation,” in 6th International Conference, Held as Part of the Services Conference Federation, SCF 2021, Virtual Event, December 10–14, 2021.

- Kang Y., Deng J., Ling J., Li X., Chiang Y.-J., Koay E. J., et al. (2022). 3D imaging analysis on an organoid-based platform guides personalized treatment in pancreatic ductal adenocarcinoma. J. Clin. Investigation 132 (24), e151604. doi:10.1172/jci151604

- Kassis T., Hernandez-Gordillo V., Langer R., Griffith L. G. (2019). OrgaQuant: Human intestinal organoid localization and quantification using deep convolutional neural networks. Sci. Rep. 9 (1), 12479. doi:10.1038/s41598-019-48874-y

- Kepp T., Droigk C., Casper M., Evers M., Hüttmann G., Salma N., et al. (2019). Segmentation of mouse skin layers in optical coherence tomography image data using deep convolutional neural networks. Biomed. Opt. Express 10 (7), 3484. doi:10.1364/boe.10.003484

- Lee S. H., Hu W., Matulay J. T., Silva M. v., Owczarek T. B., Kim K., et al. (2018). Tumor evolution and drug response in patient-derived organoid models of bladder cancer. Cell 173 (2), 515–528.e17. doi:10.1016/j.cell.2018.03.017

- Li X., Chen H., Qi X., Dou Q., Fu C.-W., Heng P. A. (2018). H-DenseUNet: Hybrid densely connected UNet for liver and tumor segmentation from CT volumes. IEEE Trans. Med. Imaging 37 (12), 2663–2674. doi:10.1109/tmi.2018.2845918

- Li X., Francies H. E., Secrier M., Perner J., Miremadi A., Galeano-Dalmau N., et al. (2018). Organoid cultures recapitulate esophageal adenocarcinoma heterogeneity providing a model for clonality studies and precision therapeutics. Nat. Commun. 9 (1), 2983. doi:10.1038/s41467-018-05190-9

- Liu X., Bi L., Xu Y., Feng D., Kim J., Xu X. (2019). Robust deep learning method for choroidal vessel segmentation on swept source optical coherence tomography images. Biomed. Opt. Express 10 (4), 1601. doi:10.1364/boe.10.001601

- MacDonald M., Fennel R., Singanamalli A., Cruz N., YousefHussein M., Al-Kofahi Y., et al. (2020). Improved automated segmentation of human kidney organoids using deep convolutional neural networks. Med. Imaging 2020 Image Process. 11313, 832–839. doi:10.1117/12.2549830

- Mattyus G., Luo W., Urtasun R. (2017). “DeepRoadMapper: Extracting road topology from aerial images,” in Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, October 2017, 3458–3466. doi:10.1109/ICCV.2017.372

- Mekonnen B. K., Hsieh T. H., Tsai D. F., Liaw S. K., Yang F. L., Huang S. L. (2021). Generation of augmented capillary network optical coherence tomography image data of human skin for deep learning and capillary segmentation. Diagnostics 11 (4), 685. doi:10.3390/diagnostics11040685

- Ming Y., Hao S., Wang F., Lewis-Israeli Y. R., Volmert B. D., Xu Z., et al. (2022). Longitudinal morphological and functional characterization of human heart organoids using optical coherence tomography. Biosens. Bioelectron. 207, 114136. doi:10.1016/j.bios.2022.114136

- Mullenders J., de Jongh E., Brousali A., Roosen M., Blom J. P. A., Begthel H., et al. (2019). Mouse and human urothelial cancer organoids: A tool for bladder cancer research. Proc. Natl. Acad. Sci. U. S. A. 116 (10), 4567–4574. doi:10.1073/pnas.1803595116

- Ngo L., Cha J., Han J. H. (2020). Deep neural network regression for automated retinal layer segmentation in optical coherence tomography images. IEEE Trans. Image Process. 29, 303–312. doi:10.1109/TIP.2019.2931461

- Nuciforo S., Fofana I., Matter M. S., Blumer T., Calabrese D., Boldanova T., et al. (2018). Organoid models of human liver cancers derived from tumor needle biopsies. Cell Rep. 24 (5), 1363–1376. doi:10.1016/j.celrep.2018.07.001

- Oldenburg A. L., Yu X., Gilliss T., Alabi O., Taylor R. M., Troester M. A. (2015). Inverse-power-law behavior of cellular motility reveals stromal–epithelial cell interactions in 3D co-culture by OCT fluctuation spectroscopy. Optica 2 (10), 877. doi:10.1364/optica.2.000877

- Pekala M., Joshi N., Liu T. Y. A., Bressler N. M., Cabrera DeBuc D., Burlina P. (2019). OCT segmentation via deep learning: A review of recent work. Lect. Notes Comput. Sci. Incl. Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinforma. 11367, 316–322. doi:10.1007/978-3-030-21074-8_27

- Pfister M., Schützenberger K., Pfeiffenberger U., Messner A., Chen Z., dos Santos V. A., et al. (2019). Automated segmentation of dermal fillers in OCT images of mice using convolutional neural networks. Biomed. Opt. Express 10 (3), 1315. doi:10.1364/boe.10.001315

- Prasoon A., Petersen K., Igel C., Lauze F., Dam E., Nielsen M. (2013). Deep feature learning for knee cartilage segmentation using a triplanar convolutional neural network. Lect. Notes Comput. Sci. Incl. Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinforma. 8150, 246–253. doi:10.1007/978-3-642-40763-5_31

- Qin X., Zhang Z., Huang C., Dehghan M., Zaiane O. R., Jagersand M. (2020). U2-Net: Going deeper with nested U-structure for salient object detection. Pattern Recognit. 106, 107404. doi:10.1016/j.patcog.2020.107404

- Ronneberger O., Fischer P., Brox T. (2015). U-Net: Convolutional networks for biomedical image segmentation. Lect. Notes Comput. Sci. 9351, 234–241. doi:10.1007/978-3-319-24574-4_28

- Sachs N., Papaspyropoulos A., Zomer‐van Ommen D. D., Heo I., Böttinger L., Klay D., et al. (2019). Long‐term expanding human airway organoids for disease modeling. EMBO J. 38 (4), e100300. doi:10.15252/embj.2018100300

- Scholler J., Groux K., Goureau O., Sahel J. A., Fink M., Reichman S., et al. (2020). Dynamic full-field optical coherence tomography: 3D live-imaging of retinal organoids. Light Sci. Appl. 9, 140. doi:10.1038/s41377-020-00375-8

- Seidlitz T., Merker S. R., Rothe A., Zakrzewski F., von Neubeck C., Grützmann K., et al. (2019). Human gastric cancer modelling using organoids. Gut 68 (2), 207–217. doi:10.1136/gutjnl-2017-314549

- Shah A., Zhou L., Abrámoff M. D., Wu X. (2018). Multiple surface segmentation using convolution neural nets: Application to retinal layer segmentation in OCT images. Biomed. Opt. Express 9 (9), 4509. doi:10.1364/boe.9.004509

- Shahbazi M. N., Siggia E. D., Zernicka-Goetz M. (2019). Self-organization of stem cells into embryos: A window on early mammalian development. Science 364, 948–951. doi:10.1126/science.aax0164

- Simonyan K., Zisserman A. (2014). Very deep convolutional networks for large-scale image recognition.

- Takebe T., Wells J. M. (2019). Organoids by design. Science 364, 956–959. doi:10.1126/science.aaw7567

- Tuveson D., Clevers H. (2019). Cancer modeling meets human organoid technology. Science 364, 952–955. doi:10.1126/science.aaw6985

- Wei X., Huang B., Chen K., Fan Z., Wang L., Xu M. (2022). Dot extrusion bioprinting of spatially controlled heterogenous tumor models. Mater. Des. 223, 111152. doi:10.1016/j.matdes.2022.111152

- Woo S., Park J., Lee J.-Y., Kweon I. S. (2018). “CBAM: Convolutional block attention module,” in Proceedings of the 15th European Conference on Computer Vision (ECCV 2018), Munich, Germany, September 2018. Lecture Notes in Computer Science. doi:10.1007/978-3-030-01234-2_1

- Xiao Y., Tian Z., Yu J., Zhang Y., Liu S., Du S., et al. (2020). A review of object detection based on deep learning. Multimedia Tools Appl. 79 (33–34), 23729–23791. doi:10.1007/s11042-020-08976-6

- Yang L., Yu X., Fuller A. M., Troester M. A., Oldenburg A. L. (2020). Characterizing optical coherence tomography speckle fluctuation spectra of mammary organoids during suppression of intracellular motility. Quantitative Imaging Med. Surg. 10 (1), 76–85. doi:10.21037/qims.2019.08.15

- Yavitt F. M., Kirkpatrick B. E., Blatchley M. R., Anseth K. S. (2021). 4D materials with photoadaptable properties instruct and enhance intestinal organoid development. ACS Biomaterials Sci. Eng. 8 (11), 4634–4638. doi:10.1021/acsbiomaterials.1c01450

- Zhao J.-X., Liu J., Fan D.-P., Cao Y., Yang J., Cheng M.-M. (2019). “EGNet: Edge guidance network for salient object detection,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea (South), October 2019, 8778–8787. doi:10.1109/ICCV.2019.00887
