Abstract

Communication research often focuses on processes of communication, such as how messages impact individuals over time or how interpersonal relationships develop and change. Despite their importance, these change processes are often implicit in much theoretical and empirical work in communication. Intensive longitudinal data are becoming increasingly feasible to collect and, when coupled with appropriate analytic frameworks, enable researchers to better explore and articulate the types of change underlying communication processes. To facilitate the study of change processes, we (a) describe advances in data collection and analytic methods that allow researchers to articulate complex change processes of phenomena in communication research, (b) provide an overview of change processes and how they may be captured with intensive longitudinal methods, and (c) discuss considerations of capturing change when designing and implementing studies. We are excited about the future of studying processes of change in communication research, and we look forward to the iterations between empirical tests and theory revision that will occur as researchers delve into studying change within communication processes.

Keywords: communication processes, communication theory, dynamics, intensive longitudinal data, longitudinal analyses

How do individuals and bots build their audience of followers on social media over time (e.g., Zhang et al., 2021)? When do media effects appear and fade away (e.g., Thomas, 2022)? What interpersonal communication behaviors change individuals’ emotions over the course of a conversation (e.g., Rains & High, 2021)? These are just a few questions asked in contemporary research concerning how communication changes over time or how communication leads to changes in other constructs. The field of communication has a long-standing interest in change (Kelly & McGrath, 1988; McGrath & Kelly, 1986), but new opportunities to collect and analyze data well suited to examining change are facilitating greater theoretical articulation and empirical analysis of how phenomena change (e.g., in a linear or nonlinear way) or fluctuate (e.g., in brief or long deviations from an equilibrium) and when phenomena occur (e.g., after a particular experience or state, after a certain amount of time). A greater ability to articulate change will increase the precision with which we test existing theories and will strengthen theories that lack specificity about how phenomena manifest over time or about the time scale at which the implied change occurs (Boker & Martin, 2018). For instance, social penetration theory (Altman & Taylor, 1973) posits that the breadth and depth of information individuals exchange should increase as a relationship develops, which implies positive linear change in breadth and depth. However, this theory does not specify the time scale of change. Do breadth and depth increase with the number of days, the number of conversations, or the number of messages exchanged? Although all three time-based metrics are likely to be correlated, the operationalization of time will affect how relevant data are collected and modeled.
Even when theory does explicate in detail how change manifests, research on change processes has often been limited by reliance on cross-sectional data rather than on longitudinal data capable of capturing and testing change. Our goals in this paper are to bring renewed attention to change processes in communication and to increase the feasibility and implementation of capturing and quantifying change. In service of these goals, we (a) describe advances in data collection and analytic methods that allow researchers to articulate complex change processes of phenomena in communication research, (b) provide an overview of change processes and how they may be captured with intensive longitudinal methods, and (c) discuss considerations of capturing change when designing and implementing studies.

Advances in Data Collection and Analytic Methods to Capture Change

We conceptualize change as a within-person (or within-dyad, within-network, or within-entity) phenomenon, in which there is time-dependent variation within a single person’s or unit’s time series (Baltes & Nesselroade, 1979; Molenaar, 2004). Time may be defined in several ways, including clock time (e.g., time of day) or individuals’ subjective experiences of time (Ancona, Goodman et al., 2001; Ancona, Okhuysen, & Perlow, 2001). Because we emphasize intensive longitudinal (i.e., time series) data, we concentrate on time-ordered sequences of measurement occasions, with each measurement occasion typically marked by the passage of an amount of clock or event time designated by the researcher.

Research questions that ask “how” and “when” are inquiries about process or timing. They necessitate an examination of within-person (or within-unit) change over time, which has implications for how data are collected and analyzed to appropriately articulate that change. Sometimes, researchers assume that the between-person effects obtained from cross-sectional data provide evidence for within-person processes. For example, researchers may assume that between-person findings indicating that people who spend more time on social networking sites also have more depression symptoms (e.g., Cunningham et al., 2021) can be used to make inferences at the within-person level, such that a within-person change in time spent on social media (i.e., more time spent) is related to a within-person change in severity of depression (i.e., an increased number of symptoms). The ability to extract insights about within-person change from cross-sectional data would greatly simplify the complex task of collecting the intensive longitudinal data necessary to quantify within-person variation across time points. However, moving from between-person results to conclusions about within-person change is rarely warranted (Molenaar, 2004; Molenaar & Campbell, 2009).

Hamaker (2012) provides an intuitive example of what may happen when trying to generalize results from cross-sectional (i.e., between-person) data to within-person processes. Specifically, she describes how typing speed and percentage of typos may be negatively associated at the between-person level; that is, people with better typing skills type faster and make fewer mistakes. Generalizing this between-person finding to the within-person level would lead us to infer that when a person types faster, they will make fewer mistakes. However, typing behavior reflects a speed-accuracy trade-off: an individual is likely to make more mistakes when trying to type faster than they usually do. Thus, generalizing an association that holds between individuals to within-person change may lead to inappropriate inferences (i.e., a form of the ecological fallacy; Firebaugh, 1978; Robinson, 1950; Schwartz, 1994). If communication researchers are interested in within-person processes, then they must collect intensive longitudinal data to move from asking between-person questions (i.e., those possible in cross-sectional surveys) such as “do people who use more social media have poorer well-being?” to within-person questions such as “when an individual uses more social media than they typically use, do they also experience lower levels of well-being than usual?”
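The typing example can be made concrete with a small simulation. The Python sketch below is illustrative only: the data are hypothetical, generated so that more skilled typists are faster and make fewer typos on average, while each person makes more typos on occasions when they type faster than their own average. It then contrasts the correlation of person means (a between-person association) with the pooled correlation of person-mean-centered scores (a within-person association). The parameter values, units, and function names are our assumptions, not Hamaker's.

```python
import random
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def simulate_typing(n_people=50, n_occasions=30, seed=1):
    """Hypothetical data: skilled typists are faster with fewer typos on
    average, but each person makes MORE typos when typing faster than usual."""
    rng = random.Random(seed)
    data = []  # one list of (speed, typos) pairs per person
    for _ in range(n_people):
        skill = rng.gauss(0, 1)
        mu_speed = 50 + 10 * skill  # words per minute (illustrative units)
        mu_typos = 15 - 3 * skill   # typos per 100 words (illustrative units)
        person = []
        for _ in range(n_occasions):
            dev = rng.gauss(0, 5)  # typing faster or slower than usual
            speed = mu_speed + dev
            # Speed-accuracy trade-off: faster than usual -> more typos.
            typos = mu_typos + 0.3 * dev + rng.gauss(0, 1)
            person.append((speed, typos))
        data.append(person)
    return data


def between_within(data):
    """Return (between-person r, pooled within-person r)."""
    # Between-person: correlate each person's mean speed with mean typos.
    means = [(mean(s for s, _ in p), mean(t for _, t in p)) for p in data]
    r_between = pearson([m[0] for m in means], [m[1] for m in means])
    # Within-person: correlate person-mean-centered scores, pooled over people.
    centered_speed, centered_typos = [], []
    for p, (ms, mt) in zip(data, means):
        centered_speed.extend(s - ms for s, _ in p)
        centered_typos.extend(t - mt for _, t in p)
    r_within = pearson(centered_speed, centered_typos)
    return r_between, r_within


if __name__ == "__main__":
    r_between, r_within = between_within(simulate_typing())
    print(f"between-person r = {r_between:.2f}")
    print(f"within-person r  = {r_within:.2f}")
```

With these settings, the between-person correlation is negative and the within-person correlation is positive, mirroring the reversal described above. Person-mean centering is only one way to separate the two levels; multilevel models (e.g., Bolger & Laurenceau, 2013) can estimate both within a single model.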

Although collecting the intensive longitudinal data needed to answer within-person questions is more arduous than collecting cross-sectional data, we are optimistic about the emerging opportunities to articulate and explicitly study change in communication processes with such data. Studying change within communication phenomena is increasingly feasible. In particular, intensive longitudinal data, consisting of at least five repeated assessments per individual (Bolger & Laurenceau, 2013) but typically encompassing tens of measurement occasions (Collins, 2006; Walls & Schafer, 2005), are now easier to gather and more amenable to analysis. This feasibility stems partly from the digitalization of data collection. For example, self-report forms, long administered in paper booklets that participants carried around and completed in response to beeps from paging devices (Csikszentmihalyi & Kubey, 1981), may now be completed via smartphones, which exhibit high rates of ownership and use in everyday life (Olmstead, 2017) and allow self-reports to be collected multiple times per day, often in situ (Shiffman et al., 2008). Wearable devices also contribute to the ease of data collection (Ometov et al., 2021), as does increasing access to the digital breadcrumbs of everyday life created through online browsing and computer-mediated communication (Brinberg et al., 2021; Lydon-Staley, Barnett et al., 2019; Torous et al., 2017). Intensive longitudinal data are also abundant in traditional forms of data collection: they can be found by considering single trials on reaction time tasks (Peng et al., 2021) or by zooming in on second-to-second fluctuations in brain signals (Sajda et al., 2012) instead of aggregating such data at the minute or hour level. Change also may be studied with non-intensive longitudinal data (fewer than five time points; King et al., 2018).
For example, data consisting of three time points from the same participant allow a linear change trajectory to be estimated. With four time points, a quadratic (i.e., nonlinear) change trajectory may be estimated. Intensive longitudinal data, however, facilitate the examination of more complex and precise change processes and are often better able to capture these processes than non-intensive data.
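One common way to write these trajectories, shown here as a sketch with notation of our choosing, is for person i at occasion t:

```latex
\begin{aligned}
\text{Linear:}\quad    & y_{ti} = \beta_{0i} + \beta_{1i}\,t + e_{ti}\\
\text{Quadratic:}\quad & y_{ti} = \beta_{0i} + \beta_{1i}\,t + \beta_{2i}\,t^{2} + e_{ti}
\end{aligned}
```

Counting parameters per person gives a rough intuition for the minimum number of occasions. A straight line has two parameters (intercept and slope), so a third occasion leaves at least one observation for estimating residual variation; the quadratic adds a third parameter, which is why a fourth occasion is needed. Multilevel growth models pool information across people, so this per-person count is a heuristic rather than a formal identification rule.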

In parallel to the increased accessibility of intensive longitudinal data, analytic methods to study change are becoming more prevalent in the field, including multilevel models (e.g., Bolger & Laurenceau, 2013), time series models (e.g., Ariens et al., 2020), network models (e.g., Beltz & Gates, 2017), dynamic structural equation models (e.g., McNeish & Hamaker, 2020), and growth models (e.g., Grimm et al., 2016). Open-source statistical programs, online tutorials, and peer-reviewed primers for methods that capture change all contribute to the burgeoning use of these approaches (see Table 1). Furthermore, these advanced methodological approaches are necessary to capture the complexity in intensive longitudinal data and address the field’s increasingly nuanced theoretical questions.

Table 1.

Types of Dynamics and Methods to Capture Each Dynamic

Note. The online resources reflect sites as of Summer 2022. We recognize that the location and availability of these resources may change over time.

Articulating Change in Communication Research

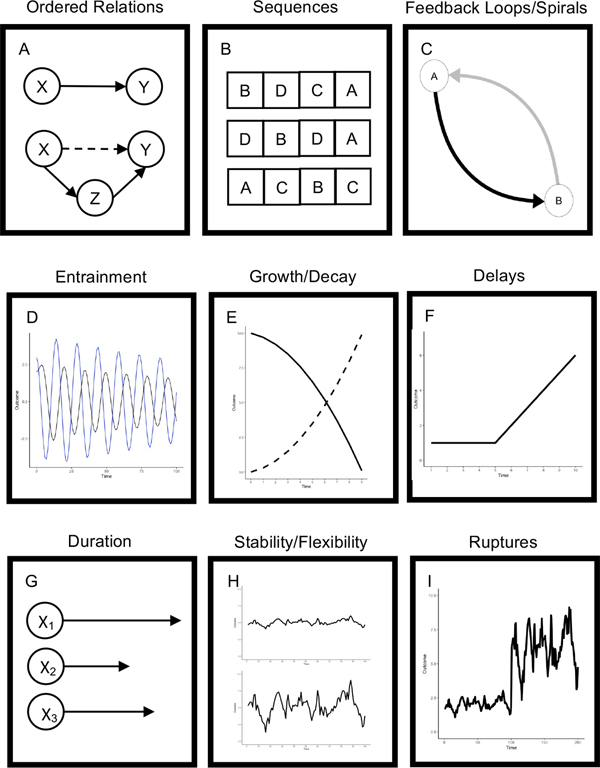

By combining intensive longitudinal data with appropriate analytic methods, many change processes of interest to communication researchers may be quantified. In this section, we review nine change processes (see Figure 1) and summarize methods and resources (see Table 1) to help researchers examine each change process. We provide (a) a general description of each change process and an exemplar study illustrating that process, (b) considerations for researchers studying each type of process (e.g., sampling), and (c) a brief description of relevant methods and potential challenges when analyzing each change process. We note that communication phenomena may exhibit elements of multiple types of change, that there are a multitude of ways in which different types of change may be combined, and that an array of analytic approaches lend themselves to capturing many types of change (see McCormick et al., 2021, for guidance on model selection). Furthermore, the facets of change we describe are non-exhaustive and not the only way to characterize change. We have chosen change processes that are familiar to most communication researchers and have sufficient development in theory, data, and method to be promising avenues for immediate advancements. Finally, our illustrations span the gamut from interpersonal communication to mediated communication to reflect the extent to which considerations of change are relevant to all aspects of communication research. By highlighting the advances in data and methods capable of articulating change in communication, we hope to encourage the field to advance theory with a sophistication that matches, and is potentially inspired by, current data and methods (Boker & Martin, 2018; Collins, 2006).

Figure 1.

Types of Change Processes.

Ordered Relations

The ability to identify the cause of an outcome, or whether one construct or event precedes another, motivates many research questions. Attending to ordered relations facilitates a quantification of the increased or decreased (i.e., change in) likelihood of a behavior, emotion, or thought occurring after a particular behavior, emotion, or thought, which we depict in Figure 1A. For example, researchers have sought to determine the order of the association between social media use and mental health, asking whether social media use leads to poorer mental health or whether poor mental health leads to increased social media use (for a review, see Orben, 2020). In the field of communication, we are often unable to experimentally manipulate individuals’ behavior or experiences to examine causal effects and are instead left to examine the ordered relationships or associations between constructs. Although direct evidence for causality may be difficult to obtain, researchers can use longitudinal designs to gain insights into causality. For example, longitudinal designs enable tests of Granger causality (Granger, 1969; Molenaar, 2019), which can identify processes in which there are cross-lagged relations between a set of variables in one direction (e.g., poor mental health at one time point is associated with social media use at the following time point) but not in the other direction (e.g., social media use at one time point is not associated with poor mental health at the following time point). Coyne et al. (2020), for example, used an eight-year longitudinal study of adolescents to address the question of ordering between social media use and poor mental health.
By capturing year-to-year fluctuations in time spent on social media and in depression and anxiety, these researchers were able to estimate the extent to which time spent on social media in one year was associated with mental health the following year (using autoregressive latent change score models with structured residuals; Curran et al., 2014). There was no evidence that time spent on social media in one year was associated with depression or anxiety the following year. Similarly, adolescents’ depression and anxiety were not associated with their social media use the following year, leading these researchers to infer that causal within-person associations between social media use and mental health, in either direction, were unlikely. These findings build on prior cross-sectional work (which cannot establish ordered relations) that has often found between-person associations among social media use, depression, and anxiety.
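To illustrate the logic of a one-lag Granger test for a single unit's time series (rather than the panel models Coyne et al. used), the Python sketch below simulates hypothetical data in which x at one occasion predicts y at the next but y does not predict later x. For each direction, it compares a restricted regression (the outcome on its own lag) with a full regression that adds the other variable's lag and summarizes the improvement with an F statistic on 1 and n - 3 degrees of freedom. The simulation settings and function names are assumptions for illustration; in practice, an established statistics package would be used.

```python
import random


def ols_rss(X, y):
    """Residual sum of squares from ordinary least squares via normal equations."""
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    # Solve (X'X) b = X'y by Gauss-Jordan elimination with partial pivoting.
    a = [r[:] + [v] for r, v in zip(xtx, xty)]
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(a[r][c]))
        a[c], a[p] = a[p], a[c]
        for r in range(k):
            if r != c:
                f = a[r][c] / a[c][c]
                a[r] = [ar - f * ac for ar, ac in zip(a[r], a[c])]
    b = [a[i][k] / a[i][i] for i in range(k)]
    resid = [yi - sum(bj * xj for bj, xj in zip(b, row)) for row, yi in zip(X, y)]
    return sum(e * e for e in resid)


def granger_f(cause, effect):
    """F statistic: does lag-1 `cause` improve prediction of `effect`
    beyond lag-1 `effect` alone?"""
    y = effect[1:]
    restricted = [[1.0, effect[t - 1]] for t in range(1, len(effect))]
    full = [[1.0, effect[t - 1], cause[t - 1]] for t in range(1, len(effect))]
    rss_r, rss_u = ols_rss(restricted, y), ols_rss(full, y)
    df_num, df_den = 1, len(y) - 3  # one added predictor; 3 parameters in full model
    return ((rss_r - rss_u) / df_num) / (rss_u / df_den)


def simulate(n=500, seed=7):
    """Hypothetical series in which x(t-1) predicts y(t), but y(t-1) does not predict x(t)."""
    rng = random.Random(seed)
    x, y = [0.0], [0.0]
    for _ in range(n - 1):
        x.append(0.4 * x[-1] + rng.gauss(0, 1))
        # x[-2] is now the previous occasion's x; y[-1] is the previous y.
        y.append(0.3 * y[-1] + 0.5 * x[-2] + rng.gauss(0, 1))
    return x, y


if __name__ == "__main__":
    x, y = simulate()
    print("x -> y: F =", round(granger_f(x, y), 1))
    print("y -> x: F =", round(granger_f(y, x), 1))
```

The x → y statistic is large, whereas the y → x statistic should typically be small, which is the one-directional pattern described above. Because the test asks only whether lagged information improves prediction, a large F is evidence of temporal precedence, not proof of causation.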

Study Design Considerations for Examining Ordered Relations

Ordered relations can be examined using a variety of longitudinal approaches. Ultimately, researchers need to obtain multiple measurements that allow them to identify or deduce the order of behaviors, emotions, or thoughts and to rule out other potential orders. Multiple measurements can be collected using a range of approaches, including wearable devices and unobtrusive observations of mobile device use over time (e.g., changes in skin conductance preceding a switch in media content, Yeykelis et al., 2014); multiple surveys over short time scales, such as experience sampling methods or daily diaries (e.g., the consequences and antecedents of day-to-day changes in parent-adolescent conflict and anger, LoBraico et al., 2020); and assessments over long time scales, such as annual longitudinal panel surveys (e.g., the directional impact of social media use and well-being, Coyne et al., 2020).

Methods for Examining Ordered Relations

Given the wide range of research questions and study designs that focus on the ordering of constructs, researchers can employ a variety of methodological approaches to capture order effects (see Table 1 for a list of methods and relevant resources). Although the foundations of these models may differ, all of them can be constructed to examine autoregressive and cross-regressive effects, with autoregressive parameters representing the lag-1 association between the same variable across time (e.g., how does an individual’s experience of anxiety last year predict their anxiety this year?) and cross-regressive parameters representing the lag-1 association between different variables across time (e.g., how does an individual’s time spent on social media last year predict their anxiety this year?). For simplicity, we focus on lag-1 associations but note that higher-order lags (e.g., in an annual panel design, behaviors two years prior may be associated with current behaviors) are also possible.
The main considerations when choosing an appropriate model include (a) how much data are available: time series models and their variants (e.g., vector autoregressive models; Bringmann et al., 2013; Chatfield, 2003; Haslbeck et al., 2021; Velicer & Molenaar, 2012) require a large number of time points, whereas models fit in the multilevel and structural equation modeling frameworks, such as the longitudinal panel model (Little et al., 2007; Mulder & Hamaker, 2021; Selig & Little, 2012), require fewer time points (Bolger & Laurenceau, 2013; Hoffman, 2015; Hox et al., 2017; Little, 2013; McNeish & Hamaker, 2020; Newson, 2015; Snijders & Bosker, 2011); (b) whether measurement models and measurement error should be accommodated, which would point toward a structural equation model or one of its variants; and (c) what types of model extensions may be of interest (e.g., mediation effects, which are more easily accommodated within structural equation models; Goldsmith et al., 2018; Selig & Preacher, 2009).
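For two variables measured repeatedly on the same person, for example anxiety (A) and time spent on social media (S), the lag-1 autoregressive and cross-regressive parameters described above can be written as a bivariate first-order vector autoregression. The notation is our own sketch:

```latex
\begin{aligned}
A_t &= c_A + \phi_{AA}\,A_{t-1} + \phi_{AS}\,S_{t-1} + \varepsilon_{A,t}\\
S_t &= c_S + \phi_{SS}\,S_{t-1} + \phi_{SA}\,A_{t-1} + \varepsilon_{S,t}
\end{aligned}
```

Here φ_AA and φ_SS are the autoregressive parameters, φ_AS is the cross-regressive effect of last year's social media use on this year's anxiety, φ_SA is the reverse cross-regressive effect, and the ε terms are residuals. In multilevel and panel variants, these parameters may additionally be allowed to vary across people, or be estimated after separating stable between-person differences from within-person fluctuations (e.g., Mulder & Hamaker, 2021).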

If researchers are working with categorical data (e.g., conflict tactics enacted during a conversation between romantic partners) rather than continuous data (e.g., hours per week spent using social media), they may consider using sequential analysis (Bakeman & Gottman, 1997) or (hidden) Markov models (Visser et al., 2002; Visser, 2011), which obtain estimates of the probability of lag-1 transitions between categories. Markov models may examine a greater number of transitions than sequential analysis models (i.e., they can accommodate more categories), but the number of time points required is high and the results are person-specific (i.e., each individual or entity in the study obtains their own transition probabilities), which may complicate the interpretation of results. Sequential analysis can make estimates about transition probabilities between categories across the sample but is unable to easily examine more than a 2×2 transition probability matrix. Given these differences, the selection of the appropriate method to infer ordered relations will depend on the researcher’s goals.
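The lag-1 transition probabilities targeted by both approaches can be illustrated with a short Python sketch. It estimates a first-order Markov transition matrix for observed (not hidden) states by counting adjacent pairs in a single coded sequence; the conflict-tactic codes are hypothetical. A hidden Markov model would additionally infer latent states from the observed codes, which this sketch does not attempt.

```python
from collections import Counter, defaultdict


def transition_probabilities(sequence):
    """Estimate lag-1 transition probabilities P(next = b | current = a)
    by counting adjacent pairs in one categorical sequence."""
    counts = defaultdict(Counter)
    for current, nxt in zip(sequence, sequence[1:]):
        counts[current][nxt] += 1
    probs = {}
    for a, c in counts.items():
        total = sum(c.values())  # number of transitions out of state a
        probs[a] = {b: n / total for b, n in c.items()}
    return probs


# Hypothetical coded turns from one couple's conversation.
turns = ["criticize", "defend", "criticize", "defend",
         "validate", "validate", "criticize"]

if __name__ == "__main__":
    for state, row in transition_probabilities(turns).items():
        print(state, row)
```

In this example, every instance of criticism is followed by defense, so P(defend | criticize) = 1.0, whereas defense is followed equally often by criticism and by validation (0.5 each).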

Sequences

In contrast to ordered relations, behaviors, thoughts, emotions, or events may be independent of one another, such that the occurrence of one is not hypothesized to lead to the other, yet they may still occur in a particular order or sequence, as depicted in Figure 1B. The exact sequence in which a stream of information, events, or behaviors occurs has substantial implications for an individual’s experience, encouraging a consideration of sequences across many domains of communication. In narratives, for instance, the sequencing of events may affect reader outcomes even when the story elements do not affect one another: revealing the culprit at the end of a narrative makes an effective plotline for a mystery, whereas revealing the culprit at the beginning makes an effective plotline for a court case. The sequencing of behaviors may also be important for understanding how behaviors occur together as a regularized part of individuals’ lives. For example, researchers have examined how individuals organize their use of content on mobile devices to meet their needs by unobtrusively tracking individuals’ mobile app use (Peng & Zhu, 2020). Specifically, researchers installed “on-phone meters” on individuals’ smartphones to passively track the start and end times of app use over the course of five months. Using sequence analysis (Studer & Ritschard, 2016) and k-medoid cluster analysis (Kaufman & Rousseeuw, 1987), these researchers identified common multi-app usage patterns within sessions (i.e., on-to-off periods of mobile use). They found common sequential patterns of app use across individuals, including communication → social network sites and tools → communication (Peng & Zhu, 2020), thus informing media repertoire theory by helping to explain how individuals construct and organize their media use to satisfy their needs.

Study Design Considerations for Examining Sequences

The examination of sequences requires data that preserve the order of the events of interest, such as unobtrusive observation of media use (e.g., the observation of app use described above, Peng & Zhu, 2020) or observations of behavior in the laboratory (e.g., examining whether the parent or the adolescent is the first to up- or down-regulate emotional expression, Lougheed et al., 2020). Regardless of the time scale of sequencing, it is crucial to ensure that all events of interest are observed. If sampling is too infrequent, researchers may miss events in the sequence and obtain a misleading representation of the sequencing pattern. For example, if researchers are interested in the sequencing of mobile app use, data collection methods that automatically capture each switch in app content may be more effective than regular interval sampling (e.g., screenshots every five seconds), which may unintentionally overlook switches that occur between observations.
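The risk of missing events under interval sampling can be shown with a toy example. In the Python sketch below, the app-switch log is hypothetical: the full, event-based record contains five app visits in a 20-second session, whereas sampling the current app every five seconds recovers only two. The function names are our own.

```python
# Hypothetical log of (start_time_in_seconds, app) switches, sorted by time.
SWITCHES = [(0, "messaging"), (3, "social"), (4, "messaging"),
            (12, "browser"), (14, "social")]


def app_at(switches, t):
    """Return the app in use at time t."""
    current = None
    for start, app in switches:
        if start <= t:
            current = app
        else:
            break
    return current


def collapse(seq):
    """Drop consecutive repeats so each visit is listed once."""
    out = []
    for item in seq:
        if not out or out[-1] != item:
            out.append(item)
    return out


def event_sequence(switches):
    """Sequence recovered when every switch is logged."""
    return collapse(app for _, app in switches)


def interval_sample(switches, end, every):
    """Sequence recovered by checking the current app every `every` seconds."""
    return collapse(app_at(switches, t) for t in range(0, end, every))


if __name__ == "__main__":
    print("event-based:", event_sequence(SWITCHES))
    print("every 5 s:  ", interval_sample(SWITCHES, end=20, every=5))
```

Event-based logging recovers messaging → social → messaging → browser → social, whereas five-second sampling yields only messaging → social, missing both the brief social visit and the browser visit.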

Methods for Examining Sequences

Researchers working with categorical sequence data can consider using sequence analysis (MacIndoe & Abbott, 2004; Studer & Ritschard, 2016), with resources provided in Table 1. Broadly, sequence analysis uses a data-driven approach to identify common patterns of ordered categories. Sequence analysis is a flexible and general tool that can be used to identify sub-sequences within longer sequences (e.g., reoccurring patterns of app use within an entire use session) or to compare entire sequences of events (e.g., events across the span of a relationship). In turn, sequences can be examined in relation to antecedents and outcomes, for instance, does the ordering of events within a narrative affect persuasion? Despite not making claims about direction, examining sequences of communication phenomena furthers understanding about how these processes unfold.
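Many sequence analysis workflows first compute a dissimilarity between every pair of sequences and then cluster the resulting matrix. One widely used dissimilarity is optimal matching: the minimum cost of transforming one sequence into another through insertions, deletions, and substitutions. The Python sketch below implements a basic version with constant costs (an insertion/deletion cost of 1 and a substitution cost of 2 are assumed here, not recommended) and identifies the medoid, the sequence with the smallest total distance to all others, which is the building block of k-medoid clustering. The session data are hypothetical.

```python
def optimal_matching(s1, s2, indel=1.0, sub=2.0):
    """Minimum cost of turning s1 into s2 using insertions/deletions (cost
    `indel`) and substitutions (cost `sub`), via dynamic programming."""
    n, m = len(s1), len(s2)
    d = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        d[i][0] = i * indel
    for j in range(1, m + 1):
        d[0][j] = j * indel
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            same = s1[i - 1] == s2[j - 1]
            d[i][j] = min(
                d[i - 1][j] + indel,                       # delete from s1
                d[i][j - 1] + indel,                       # insert into s1
                d[i - 1][j - 1] + (0.0 if same else sub),  # match or substitute
            )
    return d[n][m]


def medoid(sequences, **costs):
    """Index of the sequence with the smallest summed distance to all others."""
    totals = [sum(optimal_matching(s, t, **costs) for t in sequences)
              for s in sequences]
    return totals.index(min(totals))


# Hypothetical app-use sessions.
sessions = [
    ["communication", "sns", "communication"],
    ["communication", "sns", "tools", "communication"],
    ["games", "video"],
]

if __name__ == "__main__":
    for i, a in enumerate(sessions):
        print([optimal_matching(a, b) for b in sessions])
    print("medoid:", medoid(sessions))
```

Here the first two sessions differ by a single inserted "tools" visit (distance 1), while the third shares no states with them, so the first session is the medoid. Dedicated sequence analysis software offers many more cost schemes and clustering options than this sketch.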

Feedback Loops and Spirals

Circularly causal processes (i.e., event A influences event B, which, in turn, influences event A), as depicted in Figure 1C, are common in the field of communication. For example, there is a vast literature providing support for effects of media on a wide variety of outcomes, including emotions, aggression, and attitudes (Bryant & Oliver, 2009; Bryant & Zillmann, 2002). There is also substantial work on media selection, that is, the reasons people select the media they use (e.g., because of their current emotions and attitudes; LaRose, 2009; Robinson & Knobloch-Westerwick, 2021). Increasingly, media selection and media effects are considered reciprocal, dynamic processes such that the selection of and attention to media content can be affected by the chosen media content itself (Fisher & Hamilton, 2021; Lang & Ewoldsen, 2009; Slater, 2007; Wang et al., 2012). A network perspective (Lydon-Staley, Cornblath et al., 2021) is one way to quantify circularly causal processes such as those underlying the interplay between media selection and media effects. In its simplest form, a network is composed of nodes, which represent a system’s units, and edges, which represent a system’s inter-unit links, relations, or interactions (Bassett et al., 2018). In a recent example of the interplay between affective states and valenced media consumption (Shaikh et al., 2022), a network integrating media selection and media effects was constructed in which affective states (i.e., intensity of positive and negative affect) and news consumption (i.e., valence of news story consumed) represented network nodes and edges represented temporal associations among these units across time.
This approach made use of the temporal precedence afforded by intensive longitudinal data (self-reports of current positive affect, current negative affect, and valence of recently consumed news collected four times per day over 14 days) and multilevel vector autoregressive models (Bringmann et al., 2013) to model how encountering positively valenced news at a previous time point predicted the level of positive and negative affect at the current time point. Simultaneously, the approach allowed the assessment of selection effects by modeling how the level of positive and negative affect at a previous time point predicted the valence of news stories consumed at the current time point. Findings indicated that reading positively valenced news stories was associated with increased positive affect and decreased negative affect (media effects), and current affective states predicted later valenced media consumption (selection effects). These results were consistent with mood management theory, such that people seek media experiences that allow them to attain and maintain positive affective states (Zillmann, 1988).

The network framework is sufficiently flexible to capture a range of circularly causal processes that are common in the field of communication, including parent-youth conflict processes, whereby conflict is both a consequence of past anger and an antecedent of future anger (LoBraico et al., 2020; Wang et al., 2022), or self and other interactions, whereby one’s emotions are both cause and consequence of interpersonal experiences (Yang et al., 2019). Additionally, once data are in network form, they are amenable to interrogation of an important extension of feedback loops: the theoretical notion of spirals that emerge due to the presence of positive feedback loops in a network (Fredrickson & Joiner, 2018; Slater, 2007). For example, the spirals perspective captures the notion that influence may flow from media use to beliefs (media effects) or from beliefs to media use (selective exposure) and extends it by considering an ongoing chain of influence between selection and media effects whereby beliefs become more extreme and media use more selective over time (Feldman et al., 2014; Slater et al., 2003). Bidirectional associations between media selection and media effects in panel studies encompassing as few as two time points one year apart have been taken as evidence for the presence of spirals (Bleakley et al., 2008; see Slater, 2015, for an overview). Such bidirectional associations establish important order effects (see the section on Ordered Relations above), but this evidence can be extended with intensive longitudinal data that capture the longer time scale process of spirals and the ongoing chain of influence.

Study Design Considerations for Examining Feedback Loops and Spirals

The examination of feedback loops and spirals via this network perspective requires intensive longitudinal data to capture fluctuations in the phenomena making up the network nodes (e.g., valence of encountered media, positive affect). This is commonly achieved by collecting daily diary or experience sampling data via smartphone as individuals go about their daily lives. Given that network edges represent statistical estimates of how a rise in the activity in one node is accompanied by change in the activity of another node, phenomena that are not expected to fluctuate over the course of the data collection period would not make effective nodes in such a network. Indeed, if a node exhibits no variability across the study protocol (i.e., an intraindividual standard deviation equal to zero), then edges involving that node cannot be estimated.
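Before estimating a person-specific or multilevel network, a simple screening step can flag nodes that never vary for a given person. The Python sketch below, with hypothetical variable names and values, returns the nodes whose intraindividual standard deviation is zero (or at or below an optional tolerance).

```python
from statistics import pstdev


def flag_constant_nodes(series_by_node, tol=0.0):
    """Return names of nodes whose intraindividual SD is <= tol.

    series_by_node maps a (hypothetical) variable name to one person's
    repeated measurements of that variable.
    """
    return [node for node, values in series_by_node.items()
            if pstdev(values) <= tol]


# Hypothetical experience sampling data for one participant.
person = {
    "positive_affect": [3, 4, 2, 5, 4],
    "negative_affect": [1, 1, 1, 1, 1],
    "news_valence": [2, 3, 3, 1, 2],
}

if __name__ == "__main__":
    print(flag_constant_nodes(person))
```

Here negative affect never changes for this participant, so it is flagged; whether to drop such a node or to treat it as a sign that the sampling window was too short is a design judgment.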

Methods for Examining Feedback Loops and Spirals

Once these data have been gathered, feedback loops and spirals may be best modeled using analytic approaches capable of capturing the interplay between two or more events across time. Such feedback loops and spirals have been estimated using multilevel vector autoregressive models (Bringmann et al., 2013; Wang et al., 2022) as well as unified structural equation modeling approaches capable of fitting person-specific networks (Kim et al., 2007; Lydon-Staley, Leventhal et al., 2021; Yang et al., 2019). Person-specific networks allow an appreciation for the heterogeneity in communication phenomena by obtaining individually derived networks that showcase the between-person differences in experiences and their interplay. However, estimating well-fitting person-specific models requires substantially more data than approaches that aggregate data across groups of people (e.g., multilevel vector autoregressive models), with at least 20 measurement occasions recommended for estimating person-specific models (Beltz et al., 2016). One additional network approach, impulse response analysis (Lütkepohl, 2005), can capture the temporally extended effects of feedback loops that lead to spirals. Specifically, impulse response analysis moves beyond identifying instances whereby event A may influence event B, which in turn may influence event A, towards a more continuous interplay between events A and B across time, resulting in a closer match to theoretical notions of spirals. Once a temporal network indicating the predictive associations between key system variables has been constructed, impulse response analysis simulates an instantaneous, exogenous impulse to certain network nodes. Through simulation, the propagation of this impulse through the network, along time-lagged network edges, is charted. The impulse response function shows the hypothetical change in a node in response to one of the other nodes over a horizon of several time points. 
Importantly, activity in a node observed after the network receives the impulse contains system-level information. The response of non-shocked nodes is due to the flow of activity along the network’s edges emanating from the shocked node, including activity that gets caught up in feedback loops. Notably, the network models covered in this section are amenable not only to intensive longitudinal data collected on time scales of seconds, minutes, and days, as in the examples discussed, but also to panel data featuring at least three waves of measurement across months or years in instances where feedback loops are theorized to take place on longer time scales (Epskamp, 2020). In Table 1, we provide a list of recommended readings and coding resources to learn more about the array of methods available to model feedback loops and spirals.
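The propagation described above can be sketched for a lag-1 network in a few lines of Python. Assuming a matrix A whose entry A[i][j] is the lag-1 effect of node j on node i, a one-time unit impulse to one node is pushed forward by repeated multiplication by A, so the response at horizon h equals A raised to the power h times the impulse vector. The coefficients below are hypothetical, loosely patterned on the affect and news-valence example, and the sketch computes simple (non-orthogonalized) responses; many applications instead orthogonalize impulses using the residual covariance (Lütkepohl, 2005).

```python
NODES = ["positive_affect", "negative_affect", "news_valence"]

# Hypothetical lag-1 coefficients: row = node at t, column = node at t-1.
A = [
    [0.4, 0.0, 0.3],   # positive affect <- PA, NA, news valence
    [0.0, 0.4, -0.2],  # negative affect <- PA, NA, news valence
    [0.2, 0.0, 0.3],   # news valence    <- PA (selection), NA, news valence
]


def impulse_response(A, shocked, size=1.0, horizon=5):
    """Propagate a one-time shock to node `shocked` through a lag-1 network.

    Returns the state of every node at t = 0, 1, ..., horizon
    (simple, non-orthogonalized impulse responses).
    """
    k = len(A)
    state = [0.0] * k
    state[shocked] = size
    path = [state]
    for _ in range(horizon):
        # Each node's next value is a weighted sum of all nodes' current values.
        state = [sum(A[i][j] * state[j] for j in range(k)) for i in range(k)]
        path.append(state)
    return path


if __name__ == "__main__":
    for t, state in enumerate(impulse_response(A, NODES.index("news_valence"), horizon=3)):
        print(t, [round(v, 3) for v in state])
```

A shock to news valence raises positive affect and lowers negative affect one step later. At the second step, news valence itself is 0.15, of which 0.06 arrives through the loop running from news valence to positive affect and back to news valence; this returning activity is the feedback-loop contribution that impulse response analysis makes visible.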

Entrainment

Entrainment describes phenomena in which one cyclic process aligns and oscillates with another cyclic process (McGrath & Kelly, 1986) and is depicted in Figure 1D. We interpret “cycle” broadly, such that two processes do not necessarily have to exhibit sinusoidal (i.e., wave-like) patterns. Instead, we view entrainment as two processes that move in tandem (either in the same or opposite directions), whether in cycles, ups and downs, or onsets and offsets. Entrainment has often been examined when studying dyadic phenomena, particularly whether and how dyad members change in similar ways or in response to one another over time, described with terms such as synchrony and coregulation. For example, researchers have examined romantic partners’ coregulation through physiological synchrony during conversations in the laboratory (Helm et al., 2014). Using bivariate multilevel models (also referred to as cross-lagged panel models in the original paper) with autoregressive and cross-partner parameters from the same and previous time points, these researchers examined couple members’ respiratory sinus arrhythmia in 30-second epochs during four tasks (i.e., a baseline resting task, a positive conversation, a negative conversation, and a neutral conversation). They found that the conversation tasks differed from the baseline task in their cross-partner effects, but the different types of conversations (i.e., positive, negative, neutral) did not produce significantly different cross-partner effects, and thus concluded there was evidence of physiological coregulation as measured by respiratory sinus arrhythmia.

Study Design Considerations for Examining Entrainment

The examination of entrainment requires intensive longitudinal data from at least two entities, and the observations typically occur at the level of seconds (e.g., physiological recordings in a laboratory setting, Helm et al., 2014) or from day to day (e.g., daily diary reports of positive and negative affect to examine romantic couples’ affective coregulation, Butner et al., 2007). Regardless of the time scale, studies of entrainment require simultaneous (or at least near-simultaneous) measurement of individuals or entities because the question of interest is whether they change together. Although much work on entrainment has focused on social entrainment, this type of change is also applicable to any two processes, even within an individual. For example, using multiple brief surveys over the course of five days and multilevel models, Hall (2018) examined how perceived closeness and affective well-being were associated during recent interpersonal interactions. Within-person, entrainment captures two behaviors, emotions, or thoughts that change in tandem, without any specification of direction or order, unlike the Ordered Relations and Sequences change processes.

Methods for Examining Entrainment

Researchers can model entrainment using a range of methods (Gates & Liu, 2016), with the choice of method depending on the specific pattern of entrainment (e.g., linear association, cyclical) and the sampling frequency (i.e., number of measurement occasions). Methods that are well-suited to examine linear associations from study designs with fewer time points (e.g., obtained from longitudinal panel or daily diary studies that last a week or two) include bivariate/multivariate multilevel models (Bolger & Laurenceau, 2013; MacCallum et al., 1997) and cross-lagged panel models (Mulder & Hamaker, 2021). Methods for examining linear associations from study designs with many time points (e.g., obtained from laboratory observations of physiology, experience sampling studies with several observations a day for many days) include vector autoregressive models (Chatfield, 2003; Haslbeck et al., 2021; Velicer & Molenaar, 2012), multilevel vector autoregressive models (Bringmann et al., 2013; Li et al., 2022), and dynamic factor models (Ferrer & Nesselroade, 2003). Finally, methods for examining cyclical entrainment include coupled differential equation models (Boker & Laurenceau, 2007), cross-recurrence quantification analysis (Brick et al., 2017), spectral analysis (Vowels et al., 2021; Warner, 1998), and windowed cross-correlation analysis (Boker et al., 2002). In Table 1, we provide a list of recommended readings and coding resources to learn more about methods to model entrainment.
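Windowed cross-correlation (Boker et al., 2002) asks how strongly, and at what lag, two series are associated within short, moving windows, so that entrainment can strengthen, weaken, or shift in lead-lag order over time. A bare-bones Python sketch follows; the window length, maximum lag, and step are hypothetical choices, the data are simulated so that `y` echoes `x` two samples later, and published implementations add refinements (such as peak-picking rules) that are omitted here.

```python
import numpy as np


def windowed_cross_correlation(x, y, window, max_lag, step):
    """Pearson correlation between x and lagged y inside sliding windows.

    Returns (starts, lags, r) where r[i, j] correlates x[s:s+window] with
    y[s+lag:s+lag+window] for s = starts[i] and lag = lags[j].
    Positive lags mean y follows x.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    lags = np.arange(-max_lag, max_lag + 1)
    # Only use window starts where every lagged segment stays inside the series.
    starts = np.arange(max_lag, len(x) - window - max_lag + 1, step)
    r = np.empty((len(starts), len(lags)))
    for i, s in enumerate(starts):
        xs = x[s:s + window]
        for j, lag in enumerate(lags):
            ys = y[s + lag:s + lag + window]
            r[i, j] = np.corrcoef(xs, ys)[0, 1]
    return starts, lags, r


# Simulated illustration: a noisy cycle x, and y repeating x two samples later.
rng = np.random.default_rng(7)
x = np.sin(np.linspace(0, 12 * np.pi, 600)) + 0.3 * rng.normal(size=600)
y = np.roll(x, 2) + 0.3 * rng.normal(size=600)
starts, lags, r = windowed_cross_correlation(x, y, window=100, max_lag=5, step=50)
peak_lags = lags[r.argmax(axis=1)]  # lag of strongest coupling in each window
```

In real dyadic data, `peak_lags` would be expected to vary across windows; tracking that variation is what distinguishes this approach from a single whole-series correlation.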

Growth and Decay

Growth and decay describe directional change in phenomena over time and are depicted in Figure 1E. These trajectories of change can follow different linear and nonlinear pathways. For example, researchers have examined changes in the strategies parents enact to enhance or restrict children’s media use by using a longitudinal design in which parents of three- to seven-year-olds completed surveys every year for four years (Beyens et al., 2019). Using multiple group latent growth models that accounted for the different age cohorts, these researchers compared linear and quadratic growth curves of parental mediation behaviors over early and middle childhood and found that quadratic (i.e., inverted U-shaped) curves best described the increase and then decrease of parental mediation behaviors over time.

Study Design Considerations for Examining Growth and Decay

Growth and decay processes require intensive longitudinal data, regardless of whether those data points are collected over minutes or years, with more complex trajectories requiring a greater number of data points. The complexity of trajectories can range from linear growth, which requires at least three measurement occasions, to growth that follows a sigmoid function, for which at least six measurement occasions are needed (Ram & Grimm, 2015). Researchers have used a variety of study designs to study growth and decay processes, such as unobtrusive observations of media use (e.g., exponential change in new romantic couples’ communication accommodation within text message exchanges, Brinberg & Ram, 2021), daily (or weekly) diary designs (e.g., curvilinear change in romantic couples’ intimacy and relational uncertainty as observed in weekly assessments, Solomon & Theiss, 2008), and longitudinal panel assessments (e.g., quadratic changes in parental mediation behaviors of technology during early and middle childhood as described above, Beyens et al., 2019). Experimental studies can also be used to examine processes of growth and decay, such as the effects of repeated message exposures. For example, extending research that typically uses three repetitions or fewer, researchers examined how a larger number of repeated exposures, up to 27 times in a single session, affected truth judgments (Hassan & Barber, 2021). The high number of repetitions, as well as the high number of truth ratings at multiple time points, allowed complex shapes of repetition effects to be captured, including logarithmic increases in truth ratings. When designing a study to examine growth or decay processes, the more measurement occasions are collected, the greater the range of nonlinear models that can be fit.

Methods for Examining Growth and Decay

Researchers can examine processes of growth and decay using an assortment of methods, but, as their name implies, growth models (and their decay counterparts) are a good place to start (Grimm et al., 2016; McCormick et al., 2021). The choice of trajectory will depend on the hypothesized growth or decay process, but current theoretical descriptions may lack specificity about change trajectories. For example, although a theory might specify that a construct should increase over time, it may not specify whether that change is linear, is sudden and then flattens out, or is S-shaped, just to name a few forms. When this ambiguity occurs, we encourage researchers to plot their data to obtain visual clues, fit multiple growth or decay models, and compare the fit of each model. The specific framework that researchers can use to fit their growth or decay models, whether a multilevel modeling framework or a structural equation modeling framework, will depend on a number of factors, including how researchers treat missing data (multilevel modeling allows for missing data, while structural equation modeling may require imputation) and whether researchers want to incorporate measurement models to define latent constructs (which is possible in structural equation modeling, but not multilevel modeling). To help researchers determine the appropriate model for a specific research question, we suggest reading materials and resources for coding growth and decay models in R in Table 1.
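The advice to fit several trajectories and compare their fit can be sketched for a single simulated trajectory. The example assumes 27 repeated truth ratings generated from a logarithmic curve plus noise (loosely echoing the 27 exposures in Hassan & Barber, 2021, but not their data) and compares linear, quadratic, and logarithmic models with the Akaike information criterion (AIC). A multilevel or structural equation growth model would add person-level random effects, which this sketch omits.

```python
import numpy as np


def fit_and_aic(y, design):
    """Least-squares fit of y on a design matrix; returns (coef, AIC).

    AIC uses the Gaussian log-likelihood with the maximum-likelihood
    residual variance, counted as one extra parameter.
    """
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    n, k = design.shape
    sigma2 = resid @ resid / n
    loglik = -0.5 * n * (np.log(2 * np.pi * sigma2) + 1)
    return coef, 2 * (k + 1) - 2 * loglik


# Simulated truth ratings across 27 exposures (illustrative only),
# generated from a logarithmic trajectory plus noise.
rng = np.random.default_rng(3)
t = np.arange(1, 28, dtype=float)
y = 2.0 + 1.2 * np.log(t) + rng.normal(scale=0.2, size=t.size)

ones = np.ones_like(t)
candidates = {
    "linear": np.column_stack([ones, t]),
    "quadratic": np.column_stack([ones, t, t**2]),
    "logarithmic": np.column_stack([ones, np.log(t)]),
}
aic = {name: fit_and_aic(y, X)[1] for name, X in candidates.items()}
best = min(aic, key=aic.get)  # lowest AIC = preferred trajectory
```

AIC penalizes the extra quadratic term, so a more flexible curve wins only if it improves fit enough to justify its added parameter.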

Delays

When examining the effects of stimuli (e.g., media exposure), especially in laboratory studies, data are generally collected almost immediately after exposure. While largely a matter of convenience, the practice of collecting participant responses immediately after exposure speaks to an implicit assumption that effects will be most intense in the moments following exposure. There are instances, however, where this assumption is not met, and effects are experienced at a delayed time interval, as depicted in Figure 1F. The potential for delayed effects should lead researchers to consider when the most appropriate time(s) to investigate exposure effects may be. A well-studied example is the sleeper effect (Kumkale & Albarracín, 2004; Pratkanis et al., 1988), whereby recipients of a persuasive message may not be immediately persuaded after they receive the communication. Instead, a delayed increase in persuasion occurs minutes, hours, days, or weeks later (Kumkale & Albarracín, 2004). This delayed increase in persuasion may occur in at least two ways. The most common sleeper effect occurs when a message source with low credibility results in little or no persuasion soon after exposure. Over time, however, the reason for discounting the message (i.e., the source) becomes less accessible in memory, and a sleeper effect occurs whereby an otherwise strong message leads the viewer to be persuaded. A second sleeper effect, for the source, is also possible, such that persuasion may increase after a delay when credible sources present weak arguments (Albarracín et al., 2017). This is especially likely when viewers attend to the source more than the argument during initial message exposure.

Study Design Considerations and Methods for Examining Delays

The presence of sleeper effects, and other examples of delayed effects in the field of communication (e.g., Austin & Johnson, 1997), encourages researchers to consider the frequency and timing of measurement of the outcomes targeted by persuasive, and potentially other, media or messages. With intensive repeated measurement of key variables, including the outcome and memory of key message and source characteristics, data amenable to growth curve modeling (see Growth and Decay section above) could capture more precise shapes of within-person delay effects over time.
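As one example of how a growth curve could locate a delayed effect, the sketch below fits a quadratic trajectory to simulated daily persuasion ratings for one hypothetical participant and computes when the fitted curve peaks. The quadratic shape, the 15 measurements (immediately after exposure on day 0, then daily through day 14), and the parameter values are all assumptions for illustration; a real sleeper effect may follow another shape.

```python
import numpy as np

# Simulated (illustrative) persuasion ratings: measured right after exposure
# (day 0) and then daily through day 14. The generating curve rises and then
# falls, peaking at day 6, i.e., a delayed rather than immediate effect.
rng = np.random.default_rng(11)
days = np.arange(15, dtype=float)
persuasion = 3.0 + 0.6 * days - 0.05 * days**2 + rng.normal(scale=0.15, size=days.size)

# Quadratic growth curve y = b0 + b1*t + b2*t^2
# (np.polyfit returns the highest-order coefficient first).
b2, b1, b0 = np.polyfit(days, persuasion, deg=2)

# For b2 < 0 the fitted curve peaks at its vertex, t* = -b1 / (2 * b2).
peak_day = -b1 / (2 * b2)
immediate_effect = b0  # fitted value at day 0: what a post-test alone would see
peak_effect = b0 + b1 * peak_day + b2 * peak_day**2
```

Comparing `immediate_effect` with `peak_effect` makes the cost of measuring only immediately after exposure explicit: under this assumed trajectory, a day-0 post-test would miss most of the effect.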

Duration

Duration refers to the length of time a behavior, emotion, or thought occurs, or, more broadly, to the on-off interval of an event (McGrath & Kelly, 1986), and is depicted in Figure 1G. Duration also encompasses the length of time until an event occurs. For example, researchers have been interested in the duration of time that individuals stay engaged with media content. In one line of this work, researchers observed participants in the laboratory while they watched television and recorded how long participants looked at the television screen before diverting their gaze (Richards & Anderson, 2004). These researchers fit different types of distributions (e.g., exponential, log-normal) using survival analyses (Tableman & Kim, 2003) to capture the duration of attentional engagement. They found that the log-normal distribution best described the distribution of looking times, which the researchers took as evidence of attentional inertia because one characteristic of the distribution is that the likelihood of an event occurring (in this case, disengaging from the media content) diminishes over time. Recently, researchers found similar evidence of attentional inertia on laptops using frequently collected screenshots of individuals’ laptops and multilevel survival models (Lougheed et al., 2019), whereby the duration of time spent on specific screen content (i.e., the time before a content switch) followed a log-normal distribution (Brinberg et al., 2022).

Study Design Considerations for Examining Duration

The duration of communication phenomena can be captured using a range of study designs, from laboratory observations that capture the length of an event or the time until the event of interest occurs at the second-by-second level (like in the attentional inertia work described above) to longitudinal panel studies that examine the amount of time that passes until relational events occur, such as relational dissolution (e.g., Eastwick & Neff, 2012). Importantly, the study sampling scheme must collect observations frequently enough, or over a long enough time span, to capture differences in duration. For example, studies of media engagement may overlook important qualities such as content switching, commenting on or “liking” posts, or scrolling if these behaviors are only summarized or observed once every minute, given that prior work has shown individuals switch between content more often than once every 20 seconds (Yeykelis et al., 2017). Generally, more frequent observations are preferred if feasible, since they allow researchers greater precision in calculating duration.
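To illustrate why sampling frequency matters for duration, the hypothetical Python sketch below converts a sampled record of on-screen content into (state, duration) runs and compares second-by-second sampling with one observation every 20 seconds. The content labels and timings are invented.

```python
def run_lengths(states, interval):
    """Collapse a sampled sequence of states into (state, duration) runs.

    `interval` is the time between consecutive observations (e.g., seconds
    between screenshots); each run's duration is its length times interval.
    """
    runs = []
    for s in states:
        if runs and runs[-1][0] == s:
            runs[-1][1] += interval  # same state continues: extend the run
        else:
            runs.append([s, interval])  # state changed: start a new run
    return [(s, d) for s, d in runs]


# Hypothetical second-by-second record of on-screen content:
# 10 s email, 15 s news, 5 s chat, 30 s news.
per_second = ["email"] * 10 + ["news"] * 15 + ["chat"] * 5 + ["news"] * 30

fine = run_lengths(per_second, interval=1)           # observe every second
coarse = run_lengths(per_second[::20], interval=20)  # one screenshot per 20 s
```

With 20-second sampling, the 5-second chat episode disappears, the two news episodes merge into a single 40-second run, and the email episode doubles to 20 seconds.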

Methods for Examining Duration

Once researchers have data capturing the duration of a phenomenon of interest, they can then model duration using a range of methods, including survival analysis and sequence analysis. First, (multilevel) survival analysis, also referred to as event history analysis, models the probability of a qualitative change (e.g., switch of media content, end of a relationship) at a specific point in time (Allison, 2014; Cox, 1972; Lougheed et al., 2019). These models can be non-parametric and make few assumptions about the distribution of the timing of events, or they can be parametric and make specific assumptions about the distributional form of the timing of events (e.g., exponential, log-normal). One advantage of using parametric survival models is that it is easier to make specific inferences about the timing of events, such as with the exponential survival curve, in which the hazard (the instantaneous probability of the event, given that it has not yet occurred) stays constant over time. Second, aspects of sequence analysis allow researchers to examine the duration of events (Studer & Ritschard, 2016) by capturing the ordering of states and the length of time spent in each state. Due to its flexibility in capturing duration in multiple states, sequence analysis is likely better suited to examining the duration and change of multiple states, whereas survival analysis is better suited to examining the duration of a single state. Finally, we want to note that duration can be examined in traditional analysis of variance (ANOVA) and regression frameworks. However, one limitation of these approaches, particularly ANOVA when examining group differences in duration, is that they do not produce an overall model that allows for predictions. For example, parametric survival analysis models produce a formula that allows researchers to make predictions of duration for new instances, whereas a group comparison using ANOVA does not facilitate these predictions.
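A minimal sketch of the simplest parametric survival model, the exponential, shows both the prediction formula and the handling of censored cases. Assuming a constant hazard, the maximum-likelihood estimate of the hazard is the number of observed events divided by the total time at risk, and the survival function is S(t) = exp(-hazard * t). The dwell times below are hypothetical; a log-normal model, as in the attentional inertia work, would require numerical optimization instead of this closed form.

```python
import math


def exponential_survival_fit(durations, observed):
    """Maximum-likelihood exponential survival model with right censoring.

    durations: time each case was followed (until the event, or until
               observation stopped).
    observed:  True if the event occurred, False if the case was censored.
    Returns (hazard, survival function, median survival time).
    """
    events = sum(1 for o in observed if o)
    total_time = sum(durations)
    hazard = events / total_time  # constant hazard: events per unit time at risk

    def survival(t):
        return math.exp(-hazard * t)  # P(no event by time t)

    median = math.log(2) / hazard  # time at which survival(t) = 0.5
    return hazard, survival, median


# Hypothetical dwell times (seconds) on screen content; False marks cases in
# which the session ended before the participant switched content.
durations = [4, 7, 12, 3, 20, 9, 15, 6]
observed = [True, True, True, True, False, True, False, True]
hazard, survival, median = exponential_survival_fit(durations, observed)
```

For these numbers the estimated hazard is 6/76 (about 0.079 switches per second), so the model predicts a median dwell time of ln(2)/hazard, about 8.8 seconds, for new instances.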
An additional benefit of using survival analysis over ANOVA and regression frameworks is the ability to accommodate instances where the duration extends beyond the sampling frame. For example, in studying the duration of smoking lapses (Lydon-Staley, MacLean et al., 2021), the dissolution of relationships (Eastwick & Neff, 2012), or the expression of anger during an interaction between parent and child (Lougheed et al., 2019), some participants may never lapse, may have relationships that do not end, or may never express anger. Survival analysis can accommodate such instances, termed censored cases, whereas regression frameworks cannot. Along with these considerations, we provide recommended reading materials and resources for coding in R on survival analysis and sequence analysis in Table 1.
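The non-parametric counterpart, the Kaplan-Meier estimator, makes no distributional assumption and shows directly how censored cases contribute: they count as at risk until they leave observation, but never as events. The minimal Python sketch below uses hypothetical relationship durations in months, where `False` marks relationships still intact when the study ended; established implementations (e.g., the R survival package) add confidence intervals and other features omitted here.

```python
def kaplan_meier(durations, observed):
    """Kaplan-Meier estimate of the survival function with right censoring.

    Returns a list of (time, S(time)) pairs at each distinct event time.
    Censored cases stay in the risk set up to their censoring time, then
    leave it without contributing an event. At tied times, events are
    processed before censorings (the usual convention).
    """
    data = sorted(zip(durations, observed))
    at_risk = len(data)
    surv = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        events = censored = 0
        while i < len(data) and data[i][0] == t:  # group ties at time t
            if data[i][1]:
                events += 1
            else:
                censored += 1
            i += 1
        if events:
            surv *= 1 - events / at_risk
            curve.append((t, surv))
        at_risk -= events + censored
    return curve


# Hypothetical relationship durations (months); False = still together at study end.
months = [3, 5, 5, 8, 12, 12, 15]
ended = [True, True, False, True, False, True, False]
curve = kaplan_meier(months, ended)
```

Here the survival estimate drops only at months 3, 5, 8, and 12; the censored relationships at months 5, 12, and 15 shrink the risk set without lowering the curve.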

Stability and Flexibility

Stability and flexibility describe how phenomena may exhibit consistency or variability over time and are depicted in Figure 1H. The concepts of stability and flexibility are emphasized in the dynamic systems literature (Han & Lang, 2019; Hollenstein, 2013) and focus not on the direction, length, or concordance of change, but on the general consistency or variability of phenomena (Nesselroade, 1991). Typically, researchers quantify the stability (or lack thereof) of a system (i.e., phenomenon), inferring that these dynamics are representative of, or associated with, certain qualities of the system. For example, researchers have examined the stability of adolescent emotion dynamics during parent-child interactions as a predictor of depressive symptoms several years later (Kuppens et al., 2012). Using second-by-second coded emotional expression during a parent-child conflict resolution interaction, these researchers operationalized emotional inertia using the autoregressive parameter (i.e., the regression of emotion at time t on emotion at time t-1) from multilevel models as an indicator of emotional stability. They found that adolescents who exhibited greater emotional inertia (i.e., stability) were at greater risk of developing depression several years later (Kuppens et al., 2012).
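Emotional inertia, as operationalized above, is the slope from regressing emotion at time t on emotion at time t-1. The sketch below estimates that slope by ordinary least squares for two simulated (hypothetical) adolescents with true autoregressive parameters of 0.8 and 0.2. It is a single-person illustration; Kuppens et al. (2012) estimated the parameter within multilevel models across many participants.

```python
import numpy as np


def inertia(series):
    """Lag-1 autoregressive slope from regressing x[t] on x[t-1] (OLS)."""
    x = np.asarray(series, dtype=float)
    prev, curr = x[:-1], x[1:]
    prev_c = prev - prev.mean()
    return float(prev_c @ (curr - curr.mean()) / (prev_c @ prev_c))


def simulate_ar1(phi, n, rng):
    """Simulate a mean-zero AR(1) series with autoregressive parameter phi."""
    x = np.zeros(n)
    for t in range(1, n):
        x[t] = phi * x[t - 1] + rng.normal()
    return x


rng = np.random.default_rng(5)
# Two hypothetical adolescents, each observed for 600 seconds of interaction.
sticky = simulate_ar1(0.8, 600, rng)    # emotions carry over: high inertia
flexible = simulate_ar1(0.2, 600, rng)  # emotions reset quickly: low inertia
```

Calling `inertia(sticky)` and `inertia(flexible)` should recover values near 0.8 and 0.2; person-specific estimates like these are what a multilevel model would then relate to later outcomes.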

Study Design Considerations for Examining Stability and Flexibility

Similar to other types of change reviewed, examining stability and flexibility processes can be accomplished with different study designs, from observations in the laboratory to longitudinal panel studies. The number of occasions observed is a key consideration in studying stability and flexibility. Researchers must obtain reliable metrics of the stability and flexibility of a phenomenon. Metrics of stability and flexibility can be obtained directly from statistical models (as in the emotional inertia example above) or by calculating intraindividual variability metrics (Ram & Gerstorf, 2009), such as individuals’ standard deviation of the measured phenomenon over repeated measures.¹ The number of measurement occasions will impact the reliability of intraindividual variability metrics. Prior studies have found that at least seven repeated measures are needed to obtain reliable intraindividual metrics of emotion (Benson et al., 2018; Eid & Diener, 1999), and simulation studies have found that tens of assessment occasions may be needed (Wang & Grimm, 2012). Thus, researchers can ensure their intraindividual variability metrics are reliable by increasing the number of measurement occasions.
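The link between the number of occasions and the reliability of intraindividual variability metrics can be illustrated with a small simulation. Assuming one hypothetical person whose reports are normally distributed with a true intraindividual standard deviation (iSD) of 1, the sketch repeats the study 2,000 times at 7, 20, and 50 occasions and records how much the estimated iSD varies across replications. It is not a reproduction of the cited simulation studies.

```python
import numpy as np

rng = np.random.default_rng(2)
true_isd = 1.0  # hypothetical person's true intraindividual SD
n_reps = 2000   # simulated replications of the same study

spread = {}
for n_occasions in (7, 20, 50):
    # Each row is one replicate study: n_occasions normally distributed reports.
    reports = rng.normal(loc=4.0, scale=true_isd, size=(n_reps, n_occasions))
    isd = reports.std(axis=1, ddof=1)       # estimated iSD in each replicate
    spread[n_occasions] = float(isd.std())  # how much the estimate fluctuates
```

The spread shrinks as occasions increase (roughly in proportion to one over the square root of the number of occasions under these assumptions), which is the sense in which more occasions yield more reliable variability metrics.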

Methods for Examining Stability and Flexibility

Researchers have access to a range of methods to help them quantify stability and flexibility. The choice of method will depend on the measurement scale of the phenomenon of interest (continuous/interval or ordinal/categorical) and whether researchers want to quantify holistic or time-specific (e.g., from one time point to the following time point) characteristics of stability and flexibility. First, methods that are suitable to examine stability and flexibility in a holistic manner for continuous metrics include various intraindividual variability metrics (Ram & Gerstorf, 2009), such as the intraindividual standard deviation, the coefficient of variation (i.e., individuals’ standard deviation divided by their mean), and the signal-to-noise ratio (i.e., individuals’ mean divided by their standard deviation). Second, methods that are applicable to examine stability and flexibility in a holistic manner for categorical data include state space grids and their accompanying metrics (e.g., entropy, dispersion; Hollenstein, 2013), categorical intraindividual variability metrics (e.g., spread, i.e., how evenly the observations are distributed across categories; Koffer et al., 2018), and metrics that fall under the umbrella of sequence analysis (e.g., turbulence; Gabadinho et al., 2011). Several of these intraindividual metrics capture the regularity or predictability of behaviors in a system. For example, turbulence is calculated by considering the number of distinct subsequences in a time-ordered sequence of categories (e.g., individuals’ app use across a study), with greater turbulence indicating more distinct subsequences and less predictability/regularity in moving from category to category (e.g., greater idiosyncrasy in how individuals use and move between apps).
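Several of the holistic metrics above are short computations. The sketch below uses the conventional definitions of the coefficient of variation (SD / mean) and the signal-to-noise ratio (mean / SD), and counts the distinct subsequences in a categorical sequence with a standard dynamic-programming recurrence. That count is one ingredient of turbulence: as usually defined in sequence analysis, turbulence computes the count on the sequence of distinct successive states and combines it with the variance of the time spent in each state, which this sketch does not do. All data are hypothetical.

```python
import numpy as np


def continuous_ivv(x):
    """Intraindividual variability metrics for one person's repeated measures.

    Uses the sample SD (ddof=1). The coefficient of variation and the
    signal-to-noise ratio are only meaningful when the mean is positive.
    """
    x = np.asarray(x, dtype=float)
    mean, isd = x.mean(), x.std(ddof=1)
    return {"iSD": isd, "CV": isd / mean, "SNR": mean / isd}


def count_distinct_subsequences(seq):
    """Number of distinct (not necessarily contiguous) subsequences of seq,
    including the empty one, via dynamic programming."""
    total = 1        # the empty subsequence
    last_total = {}  # value of `total` just before the previous occurrence of a state
    for s in seq:
        prev = total
        # Appending s doubles the count, minus duplicates created at s's last occurrence.
        total = 2 * total - last_total.get(s, 0)
        last_total[s] = prev
    return total


metrics = continuous_ivv([2, 4, 4, 4, 5, 5, 7, 9])  # hypothetical mood ratings
routine = ["mail", "news", "mail", "news"]           # hypothetical app sequences
varied = ["mail", "news", "chat", "game"]
phi_routine = count_distinct_subsequences(routine)   # 12
phi_varied = count_distinct_subsequences(varied)     # 16
```

The alternating "routine" sequence yields fewer distinct subsequences than the sequence that visits four different apps, matching the idea that fewer distinct subsequences signal more regular movement between categories.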
Finally, methods that can examine stability and flexibility in a phenomenon from one time point to the next for both continuous and categorical data have been discussed for other dynamics, including (multivariate) multilevel models and cross-lagged panel models with a lag-1 variable capturing stability/flexibility (Bolger & Laurenceau, 2013; MacCallum et al., 1997; Mulder & Hamaker, 2021) and (multilevel) vector autoregressive models with the autoregressive parameters capturing stability/flexibility (Bringmann et al., 2013; Chatfield, 2003; Haslbeck et al., 2021; Velicer & Molenaar, 2012). Specifically, and similar to the Ordered Relations process, lag-1 associations within one construct over time have been interpreted as an indicator of the extent of stability or flexibility in a construct. Table 1 reiterates and expands on the resources discussed here for examining stability and flexibility.

Ruptures

Experiments, both manipulated and natural, create conditions that may foster a rupture in a process. Specifically, a phenomenon may exhibit one set of change processes, which may abruptly shift as a result of an internal or external event, such as the one depicted in Figure 1I. For example, researchers used a natural experiment to observe a couple prior to and following the birth of their child and modeled the differences in their emotion dynamics using coupled differential equation models (Ram et al., 2014). For happiness, they found that the husband in this couple showed a decrease in regulatory tendencies and an increase in matching his wife’s mood following the birth of their child. In contrast, they found that the wife showed an increase in regulatory tendencies and that her mood had the tendency to move away from her husband’s following the birth of their child. Researchers have also used multilevel spline models to examine differences in the number of individuals who avoid the news across a 20+ year time span in the European Union, comparing timeframes when people generally received their news from television, radio, and newspapers with those when they received it from the internet (Gorski & Thomas, 2021). They found that the number of news avoiders seemed to increase following the introduction of the internet.

Study Design Considerations and Methods for Examining Ruptures

In addition to coupled differential equation models (Boker & Laurenceau, 2007), multilevel spline models (Grimm et al., 2016), and other regression-based methods that account for phase shifts, regime-switching (i.e., threshold autoregressive) models (Hamaker et al., 2009) and the cusp catastrophe model (Chow et al., 2015; Grasman et al., 2009) can capture ruptures in processes, with specific resources included in Table 1. Generally, these analytic techniques require multiple (if not many) observations across phases to establish the observed change processes in each phase, and the change processes in each phase need to be distinct from one another. Although ruptures may conceptually capture communication phenomena, we anticipate this change process to be more difficult to observe in practice given the challenges in collecting data around transitions, especially when the timing of those transitions is unknown.
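A linear spline with a single knot is one simple way to let a trajectory change slope at a hypothesized rupture. The sketch below assumes the timing of the rupture is known (a hypothetical event at year 10) and fits one simulated series by least squares; the multilevel spline models cited above add person- or country-level random effects, and unknown transition times call for methods such as the regime-switching models mentioned above.

```python
import numpy as np


def fit_linear_spline(t, y, knot):
    """One-knot piecewise-linear regression:
    y = b0 + b1*t + b2*max(0, t - knot).

    b1 is the slope before the knot and b1 + b2 the slope after it,
    so b2 estimates the change in trajectory at the hypothesized rupture.
    """
    t = np.asarray(t, dtype=float)
    X = np.column_stack([np.ones_like(t), t, np.maximum(0.0, t - knot)])
    coef, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return coef


# Simulated yearly percentages (illustrative only): nearly flat before a
# hypothetical event in year 10, rising by an extra 1.5 points per year after it.
rng = np.random.default_rng(4)
years = np.arange(0, 21)
pct = 20 + 0.1 * years + 1.5 * np.maximum(0, years - 10) + rng.normal(scale=0.5, size=years.size)
b0, slope_before, slope_change = fit_linear_spline(years, pct, knot=10)
```

Testing whether `slope_change` differs from zero is then a test of whether the process ruptured at the hypothesized time.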

Considering Change in Study Design

We reviewed nine common change processes that communication phenomena may exhibit and outlined several approaches to observe and describe those changes. There is no one correct way to study change. Any specific study design will depend on the research question and the change processes of the phenomenon under examination. Despite the potential for variety and creativity in the implementation of studies to observe change in communication processes, there are a few points that researchers may consider when collecting the intensive longitudinal data needed to study change.

First, researchers can consider the time scale of the phenomenon. As discussed previously, conceptualizing time is complex, and it is important for researchers to reflect upon the change processes they are interested in studying and how time anchors the study of that change. Does the phenomenon of interest unfold in linear (i.e., chronological) time or in cyclical time? Does it unfold from second to second, day to day, or year to year? Some processes may occur at a “fast” time scale, such as switching between content on mobile devices or physiological changes occurring on the scale of seconds (e.g., Helm et al., 2014; Yeykelis et al., 2017), whereas other processes may occur at a “slow” time scale, such as how relationships end over the course of months or years (Eastwick & Neff, 2012) or changes in how women are portrayed in video games over decades (Lynch et al., 2016). The time scale of a phenomenon does not necessarily determine which methods are applicable, as that will be driven by the change process of interest. Instead, the time scale of a phenomenon will influence the planned sampling or observations in the study design. Processes that occur at a faster time scale will require more frequent sampling over a short period of time to capture the hypothesized change, whereas processes that occur at a slower time scale may require the same number of samples spread over a longer time period. The Nyquist-Shannon sampling theorem (Nyquist, 2002; Shiyko & Ram, 2011) is often used to suggest sampling at more than twice the frequency of the process under examination to sufficiently capture the purported change. The time scale of a process may currently be unknown; if so, we recommend collecting data at the most granular level of time that is (reasonably) possible and aggregating the data as needed.
Altogether, researchers can consider the time scale at which the process of interest unfolds to determine an appropriate sampling and observation scheme for their study.
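The sampling-theorem guidance can be illustrated by what goes wrong without it. The sketch below assumes a hypothetical 24-hour cycle, samples it hourly and every 18 hours over 30 days, and uses NumPy's FFT to find the dominant period in each record. Sampling every 18 hours (fewer than two samples per cycle) produces aliasing: the undersampled record shows a spurious 72-hour cycle instead of the true 24-hour one.

```python
import numpy as np


def dominant_period(signal, dt):
    """Period (in the units of dt) of the largest non-zero frequency component."""
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), d=dt)
    k = 1 + np.argmax(spectrum[1:])  # skip the zero-frequency (mean) term
    return 1.0 / freqs[k]


period = 24.0  # hypothetical daily (24-hour) cycle
# Adequate sampling: hourly, far more than two samples per cycle.
t_fast = np.arange(0, 720, 1.0)
# Inadequate sampling: every 18 hours, fewer than two samples per cycle.
t_slow = np.arange(0, 720, 18.0)

fast = dominant_period(np.sin(2 * np.pi * t_fast / period), dt=1.0)   # about 24
slow = dominant_period(np.sin(2 * np.pi * t_slow / period), dt=18.0)  # about 72
```

Nothing in the undersampled record signals the error; the 72-hour cycle looks as real as the true one would, which is why sampling decisions must be made before data collection.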

Second, researchers can consider when and how the phenomenon of interest is measured because these decisions will impact the types of change that can be modeled and the conclusions about change that researchers can draw from these models. Three common sampling schemes for obtaining intensive longitudinal data are interval-contingent, signal-contingent, and event-contingent schemes, which can also be combined (Shiffman et al., 2008). Both interval-contingent and signal-contingent schemes refer to measurement approaches whereby participants respond to prompts sent at times specified by the investigator. In interval-contingent sampling, measurements are completed at set times of the day. In signal-contingent sampling, by contrast, measurements are completed at semi-random times. For instance, an experience sampling study that asks adolescents to complete a set of measures about their media use and experiences with friends six times a day, at semi-random intervals, uses a signal-contingent sampling scheme (e.g., Pouwels et al., 2021). An example of interval-contingent sampling is a daily diary study that asks participants to rate their curiosity at 6:30PM each evening for 21 days (Drake et al., 2022). Interval-contingent designs allow participants to know when they will be prompted, which may make the protocol easier to accommodate into their daily lives. However, a limitation of interval-contingent sampling, and a reason for selecting signal-contingent sampling, is that reports may be susceptible to mental preparation since participants know when they will be asked to report on their experiences (Conner & Lehman, 2012). A limitation of signal-contingent designs, in turn, is that participants may miss unannounced assessments, so researchers may not always capture the constructs of interest in the context in which they occur.
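The difference between interval-contingent and signal-contingent schemes can be expressed as two schedule generators. In the Python sketch below, the signal-contingent rule (one uniformly random time in each of six equal blocks of a 9:00 to 21:00 day, with at least 30 minutes between prompts) and all dates are hypothetical assumptions, not the protocols of the studies cited above; the interval-contingent schedule simply fixes one prompt at 6:30PM each day for 21 days.

```python
import random
from datetime import datetime, timedelta


def signal_contingent_schedule(day_start, day_end, n_prompts, min_gap, rng):
    """Semi-random prompt times: one uniformly random time inside each of
    n_prompts equal blocks of the day, redrawn until consecutive prompts
    are at least min_gap (a timedelta) apart."""
    block = (day_end - day_start) / n_prompts
    if min_gap >= block:
        raise ValueError("min_gap must be shorter than one block")
    while True:
        times = [day_start + i * block + rng.random() * block
                 for i in range(n_prompts)]
        if all(b - a >= min_gap for a, b in zip(times, times[1:])):
            return times


def interval_contingent_schedule(day_start, n_days, hour, minute):
    """Fixed-time prompts: one report at the same clock time each day."""
    return [(day_start + timedelta(days=d)).replace(hour=hour, minute=minute)
            for d in range(n_days)]


rng = random.Random(42)
start = datetime(2024, 1, 1, 9, 0)  # hypothetical waking window: 9:00 to 21:00
end = datetime(2024, 1, 1, 21, 0)
signal_times = signal_contingent_schedule(start, end, 6, timedelta(minutes=30), rng)
diary_times = interval_contingent_schedule(start, 21, 18, 30)  # 6:30PM, 21 days
```

Randomizing within blocks keeps prompts unpredictable while still spreading them across the day, one common way to balance the concerns about mental preparation and coverage raised above.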

In other cases, researchers may be interested in capturing participant responses around the occurrence of a particular behavior or event of interest, such as understanding individuals’ perceptions following interpersonal interactions (Brinberg et al., 2022) or quantifying people’s increases in negative emotions after they consume bad news (Shaikh et al., 2022). If the phenomenon of interest is infrequent and memorable, then an event-contingent sampling scheme may be most fitting as it does not burden participants by collecting observations when the phenomenon of interest has not occurred. In contrast, if the goal is to obtain snapshots of experiences, then researchers may want to consider using an interval-contingent or a signal-contingent sampling scheme. The sampling scheme impacts the potential methods researchers can use as some approaches require (approximately) equally spaced observations, which researchers may want to consider when designing their study.

In addition to the sampling scheme, and recalling our discussion on time, researchers can consider whether their observations represent a moment in time or a span (i.e., interval) of time (Ram & Reeves, 2018). A momentary sampling scheme measures the presence, level, or intensity of a specific behavior, emotion, or thought at the time of observation, such as asking participants to rate their current mood or report whether and what type of media they are currently using. In contrast, an interval sampling scheme takes measurements over a specific time frame. For instance, researchers may use wearable devices (e.g., FitBit, Apple Watch) to capture physiological signals between experience sampling surveys, or researchers may ask participants to reflect and report on their experiences since the past assessment. Each approach has its own advantages and disadvantages. Momentary sampling schemes may place less burden on participants because they are not required to monitor and recall their behaviors or thoughts since the previous report, as in an interval sampling scheme. However, asking participants to only reflect on the present moment may miss events of interest that occurred since the previous report. We encourage researchers to consider the inferences they would like to make when selecting a scheme. For instance, if Ordered Relations or Sequences are important, then interval sampling may be a better fit, whereas if Stability/Flexibility is important, then momentary sampling may be more appropriate.
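A small simulation can show what momentary and interval reports each capture. The sketch assumes a hypothetical 12-hour day with 20 brief (3-minute) events at random times and a report every two hours; the momentary report records whether an event is ongoing at the prompt, while the interval report records whether any event occurred since the previous report (assuming perfect recall).

```python
import numpy as np

rng = np.random.default_rng(8)
minutes = 12 * 60  # hypothetical 12-hour waking day
# Simulated brief events (e.g., 3-minute social media checks) starting at
# 20 random minutes; True marks minutes in which an event is ongoing.
active = np.zeros(minutes, dtype=bool)
for start in rng.choice(minutes - 3, size=20, replace=False):
    active[start:start + 3] = True

prompts = np.arange(60, minutes + 1, 120)  # a report every 2 hours, from minute 60

# Momentary report: "Is it happening right now?" (the minute just before the prompt).
momentary = [bool(active[p - 1]) for p in prompts]
# Interval report: "Did it happen at any point since the last report?"
edges = np.concatenate([[0], prompts])
interval = [bool(active[a:b].any()) for a, b in zip(edges[:-1], edges[1:])]
```

By construction, any event caught by a momentary report is also caught by the interval report for that period, but brief events that fall between prompts are visible only to interval reports.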

Third, we recognize that processes of change, and the collection of intensive longitudinal data to capture these changes, can occur anywhere, even in the laboratory, for both stimuli and intended outcomes. We encourage researchers to consider the change processes produced by their experimental stimuli (when applicable) that may impact the phenomenon of interest (Kelly & McGrath, 1988). Specifically, how does an experimental stimulus affect the state it is intended to manipulate? In the case of mood induction: (a) Is the stimulus presented once and assumed to have an immediate effect on mood that does not decay over time? (b) Is the stimulus presented continuously and assumed to have an immediate effect on mood that does not decay? (c) Is the stimulus presented and assumed to have a linear, accumulating effect over time, such that mood slowly becomes more intense? (d) Is the stimulus presented once and assumed to have an immediate effect on mood, with that mood decaying over time? These are just a few examples, but we hope future work further explores the dynamics of stimuli used in research studies because they will, in turn, likely impact the change processes of the observed phenomenon.
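The four assumptions listed above imply different expected mood trajectories, which in turn imply different best times for a manipulation check. The sketch below writes them as simple functions of time since stimulus onset; the effect size, accumulation rate, and 5-minute half-life are hypothetical values chosen only for illustration.

```python
# Hypothetical mood-response shapes for a stimulus beginning at t = 0
# (t in minutes). All parameter values are illustrative assumptions.

def immediate_no_decay(t, size=1.0):
    """(a) and (b): full effect immediately, sustained with no decay.
    The shapes coincide; (a) assumes one presentation, (b) continuous exposure."""
    return size if t >= 0 else 0.0


def linear_accumulation(t, rate=0.1):
    """(c): effect grows linearly with time since stimulus onset."""
    return rate * t if t >= 0 else 0.0


def immediate_then_decay(t, size=1.0, half_life=5.0):
    """(d): full effect immediately, then exponential decay."""
    return size * 0.5 ** (t / half_life) if t >= 0 else 0.0


# The same manipulation check at different delays tells different stories.
for t in (0, 5, 10, 20):
    print(t, immediate_no_decay(t), linear_accumulation(t), immediate_then_decay(t))
```

Under these assumptions, a check at minute 10 would find the full effect under (a) and (b), an effect that has just reached the same size under (c), and only a quarter of the initial effect under (d).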

Final Thoughts

We are excited about the new opportunities afforded by intensive longitudinal data to describe and test change processes of communication phenomena, which in turn can inform theory creation and revision. Here, we briefly offer a few final considerations when researchers engage in work studying change and discuss limitations of the conceptual framework described here.

First, we recognize that much initial work on change processes of communication phenomena may be exploratory. Given the lack of specificity around change processes that may be present in many theoretical frameworks, we anticipate and look forward to descriptive and exploratory work as researchers articulate their change processes of interest; such work is valuable in and of itself (Munger et al., 2021). Our general recommendation is that, when in doubt, it is better to collect more granular data, which can later be aggregated to slower time scales, than to make inferences about a process that was not observed. Second, we recognize that our descriptions of methods that are well-suited to articulating certain change processes are incomplete. There is a large array of analytic methods to examine longitudinal data (across continuous, ordinal, and categorical data), and researchers continue to develop the capabilities of these methods. We have chosen to discuss methods that are likely to be more familiar to researchers, in the hope that this familiarity will make immediate advancements in the study of change more likely. We look forward to further identification and description of change in theoretical and empirical work and to new extensions and developments of methods to capture these dynamics.

In conclusion, we have provided a conceptual overview of how communication researchers can think about the change processes of communication phenomena in their research. We described the many ways communication processes can manifest over time, illustrated these processes with current communication work, and suggested analytic methods suitable for testing each process with intensive longitudinal data. We hope the terminology on time and change introduced here enables researchers to better articulate change in their own theoretical work, and that our methodological guidance helps researchers match their analytic approach to their theoretical question. We are excited about the future of studying change in communication research, and we look forward to the iterations between empirical tests and theory revision that will occur as researchers delve into studying change in communication processes.

Acknowledgements

Thank you to Nilam Ram, Mia Jovanova, and Amanda L. McGowan for their feedback on an early version of this manuscript.

Funding

DML acknowledges support from the National Institute on Drug Abuse (K01 DA047417) and the Brain & Behavior Research Foundation. The content is solely the responsibility of the authors and does not necessarily represent the official views of any of the funding agencies.

Footnotes

We note that these metrics may have their own measurement error, and new methods are being developed to treat variability as a latent variable with measurement error (e.g., Feng & Hancock, 2022).

Disclosure Statement

The authors report there are no competing interests to declare.

References

- Albarracín D, Kumkale GT, & Poyner-Del Vento P (2017). How people can become persuaded by weak messages presented by credible communicators: Not all sleeper effects are created equal. Journal of Experimental Social Psychology, 68, 171–180. 10.1016/j.jesp.2016.06.009

- Allison PD (2014). Event history and survival analysis: Regression for longitudinal event data (Vol. 46). SAGE Publications. 10.4135/9781452270029

- Altman I, & Taylor DA (1973). Social penetration: The development of interpersonal relationships. Holt, Rinehart & Winston.

- Ancona DG, Goodman PS, Lawrence BS, & Tushman ML (2001a). Time: A new research lens. The Academy of Management Review, 26(4), 645–663. 10.2307/3560246

- Ancona DG, Okhuysen GA, & Perlow LA (2001b). Taking time to integrate temporal research. The Academy of Management Review, 26(4), 512–529. 10.2307/3560239

- Ariens S, Ceulemans E, & Adolf JK (2020). Time series analysis of intensive longitudinal data in psychosomatic research: A methodological overview. Journal of Psychosomatic Research, 137, 110191. 10.1016/j.jpsychores.2020.110191

- Austin EW, & Johnson KK (1997). Immediate and delayed effects of media literacy training on third grader’s decision making for alcohol. Health Communication, 9(4), 323–349. 10.1207/s15327027hc0904_3

- Bakeman R, & Gottman JM (1997). Observing interaction: An introduction to sequence analysis. Cambridge University Press. 10.1017/CBO9780511527685

- Baltes PB, & Nesselroade JR (1979). History and rationale of longitudinal research. In Nesselroade JR, & Baltes PB (Eds.), Longitudinal research in the study of behavior and development (pp. 1–39). Academic Press.

- Bassett DS, Zurn P, & Gold JI (2018). On the nature and use of models in network neuroscience. Nature Reviews Neuroscience, 19, 566–578. 10.1038/s41583-018-0038-8

- Beltz AM, & Gates KM (2017). Network mapping with GIMME. Multivariate Behavioral Research, 52(6), 789–804. 10.1080/00273171.2017.1373014

- Beltz AM, Wright AG, Sprague BN, & Molenaar PC (2016). Bridging the nomothetic and idiographic approaches to the analysis of clinical data. Assessment, 23(4), 447–458. 10.1177/1073191116648209

- Benson L, Ram N, Almeida DM, Zautra AJ, & Ong AD (2018). Fusing biodiversity metrics into investigations of daily life: Illustrations and recommendations with emodiversity. The Journals of Gerontology: Series B, 73(1), 75–86. 10.1093/geronb/gbx025

- Beyens I, Valkenburg PM, & Piotrowski JT (2019). Developmental trajectories of parental mediation across early and middle childhood. Human Communication Research, 45(2), 226–250. 10.1093/hcr/hqy016

- Bleakley A, Hennessy M, Fishbein M, & Jordan A (2008). It works both ways: The relationship between exposure to sexual content in the media and adolescent sexual behavior. Media Psychology, 11(4), 443–461. 10.1080/15213260802491986

- Boker SM, & Laurenceau J-P (2007). Coupled dynamics and mutually adaptive context. In Little TD, Bovaird JA, & Card NA (Eds.), Modeling contextual effects in longitudinal studies (pp. 299–324). Lawrence Erlbaum Associates.

- Boker SM, & Martin M (2018). A conversation between theory, methods, and data. Multivariate Behavioral Research, 53(6), 806–819. 10.1080/00273171.2018.1437017

- Boker SM, Rotondo JL, Xu M, & King K (2002). Windowed cross-correlation and peak picking for the analysis of variability in the association between behavioral time series. Psychological Methods, 7(3), 338–355. 10.1037/1082-989x.7.3.338

- Bolger N, & Laurenceau JP (2013). Intensive longitudinal methods: An introduction to diary and experience sampling research. Guilford.

- Brick TR, Gray AL, & Staples AD (2017). Recurrence quantification for the analysis of coupled processes in aging. The Journals of Gerontology: Series B, 73(1), 134–147. 10.1093/geronb/gbx018

- Brinberg M, & Ram N (2021). Do new romantic couples use more similar language over time? Evidence from intensive longitudinal text messages. Journal of Communication, 71(3), 454–477. 10.1093/joc/jqab012

- Brinberg M, Ram N, Conroy DE, Pincus AL, & Gerstorf D (2022). Dyadic analysis and the reciprocal one-with-many model: Extending the study of interpersonal processes with intensive longitudinal data. Psychological Methods, 27(1), 65–81. 10.1037/met0000380

- Brinberg M, Ram N, Wang J, Sundar SS, Cummings JJ, Yeykelis L, & Reeves B (in press). Screenertia: Understanding “stickiness” of media through temporal changes in screen use. Communication Research. 10.1177/00936502211062778

- Brinberg M, Vanderbilt RR, Solomon DH, Brinberg D, & Ram N (2021). Using technology to unobtrusively observe relationship development. Journal of Social and Personal Relationships, 38(12), 3429–3450. 10.1177/02654075211028654

- Bringmann LF, Vissers N, Wichers M, Geschwind N, Kuppens P, Peeters F, ... & Tuerlinckx F (2013). A network approach to psychopathology: New insights into clinical longitudinal data. PLoS ONE, 8(4), e60188. 10.1371/journal.pone.0060188

- Bryant J, & Oliver MB (2009). Media effects: Advances in theory and research (3rd ed.). Routledge. 10.4324/9780203877111

- Bryant J, & Zillmann D (2002). Media effects: Advances in theory and research (2nd ed.). Lawrence Erlbaum. 10.4324/9781410602428

- Butner J, Diamond LM, & Hicks AM (2007). Attachment style and two forms of affect coregulation between romantic partners. Personal Relationships, 14(3), 431–455. 10.1111/j.1475-6811.2007.00164.x

- Chatfield C (2003). The analysis of time series: An introduction. Chapman and Hall/CRC. 10.4324/9780203491683

- Chow SM, Witkiewitz K, Grasman RPPP, & Maisto SA (2015). The cusp catastrophe model as cross-sectional and longitudinal mixture structural equation models. Psychological Methods, 20(1), 142–164. 10.1037/a0038962

- Collins LM (2006). Analysis of longitudinal data: The integration of theoretical model, temporal design, and statistical model. Annual Review of Psychology, 57, 505–528. 10.1146/annurev.psych.57.102904.190146

- Conner TS, & Lehman B (2012). Getting started: Launching a study in daily life. In Mehl MR, & Conner TS (Eds.), Handbook of research methods for studying daily life (pp. 89–107). Guilford Press.

- Cox DR (1972). Regression models and life-tables. Journal of the Royal Statistical Society. Series B (Methodological), 34(2), 187–220.

- Coyne SM, Rogers AA, Zurcher JD, Stockdale L, & Booth M (2020). Does time spent using social media impact mental health?: An eight year longitudinal study. Computers in Human Behavior, 104, 106160. 10.1016/j.chb.2019.106160

- Csikszentmihalyi M, & Kubey R (1981). Television and the rest of life: A systematic comparison of subjective experience. Public Opinion Quarterly, 45(3), 317–328. 10.1086/268667

- Cunningham S, Hudson CC, & Harkness K (2021). Social media and depression symptoms: A meta-analysis. Research on Child and Adolescent Psychopathology, 49(2), 241–253. 10.1007/s10802-020-00715-7

- Curran PJ, Howard AL, Bainter SA, Lane ST, & McGinley JS (2014). The separation of between-person and within-person components of individual change over time: A latent curve model with structured residuals. Journal of Consulting and Clinical Psychology, 82(5), 879–894. 10.1037/a0035297

- Drake A, Doré BP, Falk EB, Zurn P, Bassett DS, & Lydon-Staley DM (2022). Daily stressor-related negative mood and its associations with flourishing and daily curiosity. Journal of Happiness Studies, 23(2), 423–438. 10.1007/s10902-021-00404-2

- Eastwick PW, & Neff LA (2012). Do ideal partner preferences predict divorce? A tale of two metrics. Social Psychological and Personality Science, 3(6), 667–674. 10.1177/1948550611435941

- Eid M, & Diener E (1999). Intraindividual variability in affect: Reliability, validity, and personality correlates. Journal of Personality and Social Psychology, 76(4), 662–676. 10.1037/0022-3514.76.4.662

- Epskamp S (2020). Psychometric network models from time-series and panel data. Psychometrika, 85(1), 206–231. 10.1007/s11336-020-09697-3

- Feldman L, Myers TA, Hmielowski JD, & Leiserowitz A (2014). The mutual reinforcement of media selectivity and effects: Testing the reinforcing spirals framework in the context of global warming. Journal of Communication, 64(4), 590–611. 10.1111/jcom.12108

- Feng Y, & Hancock GR (2022). A structural equation modeling approach for modeling variability as a latent variable. Psychological Methods. 10.1037/met0000477

- Ferrer E, & Nesselroade JR (2003). Modeling affective processes in dyadic relations via dynamic factor analysis. Emotion, 3(4), 344–360. 10.1037/1528-3542.3.4.344

- Firebaugh G (1978). A rule for inferring individual-level relationships from aggregate data. American Sociological Review, 43(4), 557–572. 10.2307/2094779

- Fisher JT, & Hamilton KA (2021). Integrating media selection and media effects using decision theory. Journal of Media Psychology: Theories, Methods, and Applications, 33(4), 215–225. 10.1027/1864-1105/a000315

- Fredrickson BL, & Joiner T (2018). Reflections on positive emotions and upward spirals. Perspectives on Psychological Science, 13(2), 194–199. 10.1177/1745691617692106

- Gabadinho A, Ritschard G, Müller NS, & Studer M (2011). Analyzing and visualizing state sequences in R with TraMineR. Journal of Statistical Software, 40(4), 1–37. 10.18637/jss.v040.i04

- Gates KM, & Liu S (2016). Methods for quantifying patterns of dynamic interactions in dyads. Assessment, 23(4), 459–471. 10.1177/1073191116641508

- Goldsmith KA, MacKinnon DP, Chalder T, White PD, Sharpe M, & Pickles A (2018). Tutorial: The practical application of longitudinal structural equation mediation models in clinical trials. Psychological Methods, 23(2), 191–207. 10.1037/met0000154

- Gorski LC, & Thomas F (2021). Staying tuned or tuning out? A longitudinal analysis of news-avoiders on the micro and macro-level. Communication Research. 10.1177/00936502211025907

- Granger CWJ (1969). Investigating causal relations by econometric models and cross-spectral methods. Econometrica, 37, 424–438. 10.1017/ccol052179207x.002

- Grasman R, van der Maas HL, & Wagenmakers E-J (2009). Fitting the Cusp Catastrophe in R: A cusp Package Primer. Journal of Statistical Software, 32(8), 1–27. 10.18637/jss.v032.i08

- Grimm KJ, Ram N, & Estabrook R (2016). Growth modeling: Structural equation and multilevel modeling approaches. Guilford Publications.