ChatGPT (Chat Generative Pre-trained Transformer), a product of OpenAI, has hit the academic world like a bolt from the blue and has created considerable turmoil in the scientific and academic arenas. It is an artificial intelligence (AI) platform based on natural language processing technology. It is a sibling model built on its predecessor, InstructGPT, which filters out socially improper responses and those with deceitful racial or sexual overtones. It draws on eclectic domains of knowledge and produces meaningful, articulate and pithy essays on the desired subject matter, utilising machine learning paradigms such as ‘transfer learning’, ‘supervised learning’ and ‘reinforcement learning’. Transfer learning applies knowledge gained in one situation to a different but similar scenario. Supervised learning is based on the premise that data exist in pairs, with each input data point having an associated label, a kind of input–output pair. Reinforcement learning is a reward-punishment technique, with recompense for appropriate behaviour and a penalty for undesirable behaviour. The model was trained in collaboration with Microsoft on its Azure supercomputers. The model is self-informing and self-rewarding, so that it evolves continuously. Besides producing straight algorithmic answers, it can also generate imaginative and intuitive outputs such as music, plots for plays, poetry and song lyrics, a testament to its versatility.

Its versatility notwithstanding, purists and ethicists, especially in academia, are uneasy at the viral success of ChatGPT. The academic world fears that such non-human authors may have serious implications for the integrity, authenticity and validity of scientific publications. Their use may lead to an escalation of academic plagiarism, which is already high even without ChatGPT. Scientific journals like the respected ‘Nature’ and the ‘JAMA Network Science’ have therefore decided that they would not accept ChatGPT-generated articles at all, while others demand that its use be disclosed. The reputed journal ‘Science’ has an avowed policy that any manuscript with an AI program as an author ‘will constitute scientific misconduct, no different from altered images or plagiarism from existing works’, and that scientific publishing must remain a ‘human endeavour’ [1].

Equally worried has been the response of a section of the non-medical intelligentsia and the political class. In February 2023, the University of Hong Kong banned its use by students and stated that its use would be treated as plagiarism. Julian Hill, an Australian member of parliament, stated in parliament that AI itself could cause ‘mass destruction’. In his speech, which ironically was written in part by ChatGPT, he warned parliamentarians that it could result in ‘cheating, job losses, discrimination, disinformation and uncontrollable military applications’ [2]. Indeed, in Elon Musk’s opinion, ‘ChatGPT is scary good. We are not far from dangerously strong AI’.

Are these concerns well-founded, or are they an over-reaction, a kind of reluctance to leave the comfort cocoon and embrace a new technology? History bears testimony that whenever a disruptive technology has been conceptualised, there has been great resistance to its adoption. Wasn’t the invention of the light bulb by Edison ridiculed by a British Parliamentary Committee in 1878 as ‘… good enough for our trans-Atlantic friends but unworthy of the attention of practical or scientific men’? Billroth, in the 1880s, spoke rather condescendingly: ‘Any surgeon who wishes to preserve the respect of his colleagues would never attempt to suture the heart’. Paget followed suit with his opinion on cardiac surgery in 1896: ‘Surgery of the heart has probably reached the limits set by nature to all surgeries. No new method and no new discovery can overcome the natural difficulties that attend a wound of the heart’. Closer in time, even a genius like Albert Einstein erred on nuclear technology in 1932: ‘There is not the slightest indication that nuclear energy will ever be obtainable. It would mean that the atom would have to be shattered at will’. Commenting on the first computers, Thomas Watson, Chairman of IBM, said in 1943, ‘I think there is a world market for maybe five computers’! The Beatles were rejected by the Decca recording company in 1962 with the admonition, ‘We don’t like the sound and guitar music is on the way out’. Lord Kelvin, President of the Royal Society in 1883, blundered, ‘X-ray will prove to be a hoax’. A Western Union internal memo in 1872 observed, ‘This telephone has too many shortcomings to be seriously considered as a means of communication. The device is inherently of no value to us.’ Even the first television was decried in no uncertain terms: ‘Television won’t last because people will soon get tired of staring at a plywood box every night’, said Darryl Zanuck, movie producer at 20th Century Fox, in 1946. One can go on ad nauseam with such blunders, but suffice it to say that history may be repeating itself.

Granted, every coin has its flip side and every ‘good’ may have some ‘evil’ hidden in it, and advanced technologies are no exception. Sometimes the responses from ChatGPT are ambiguous, nonsensical or undesirable, a phenomenon interestingly labelled ‘AI hallucination’. Authorship, accountability for AI-generated content and legal issues may provide headwinds, as transparency and traceability are lacking in AI platforms. The fact that OpenAI, the owner of ChatGPT, stores data raises issues of security, privacy and confidentiality. It may even pose cyber-security risks. The reward model of ChatGPT entails human oversight, which, if over-optimised, is subject to Goodhart’s law: ‘When a measure becomes a target, it ceases to be a good measure’. The adage was formulated by the British economist Charles Goodhart against the monetary policy of the Thatcher government, but it applies in generic terms to other situations, including AI. Needless to say, all the biases to which a normal human being may fall victim creep into ChatGPT too. Additionally, because it learns from training data, it also suffers from algorithmic bias. As the output of ChatGPT depends on the data previously presented to it, there is every chance that old text will be repeated, and the novelty and creativity of the human mind will be difficult to match. Being gratis for the moment, the system does seem to choke under heavy traffic and breaks down often, but the latency may improve once OpenAI decides to monetise it.

However, to me, the greater issues are those of critical thinking, empathy and the power of touch, which human interaction provides and which artificial technologies, whatever their level of sophistication, may find difficult to replicate. Will short-circuiting the learning process, especially at school level, be detrimental to the development of the child’s mind and brain, leading to constrained abilities? Will this weaken the academic foundation of the child and, as Californian high school teacher and author Daniel Herman puts it, ‘Usher in the end of high schooling’? This would even include the power of memorisation, which, though often played down as rote learning, has its own applications in real life. Will these AI-based technologies stifle innovation, critical thinking and introspection, and be antithetical to the larger cause of ‘Human Evolution’? These are certainly serious questions meriting careful contemplation before the genie is let out of the bottle. The bottom line is that foundational learning must not be sacrificed at the altar of these technologies, lest we lose the human advantage over technology, viz. critical thinking. LA Celi from the Harvard TH Chan School of Public Health, Boston, however, sounds an optimistic note: ‘It will never replace us because AI is very bad with nuance and context. That’s something that requires a human mind’ [3].

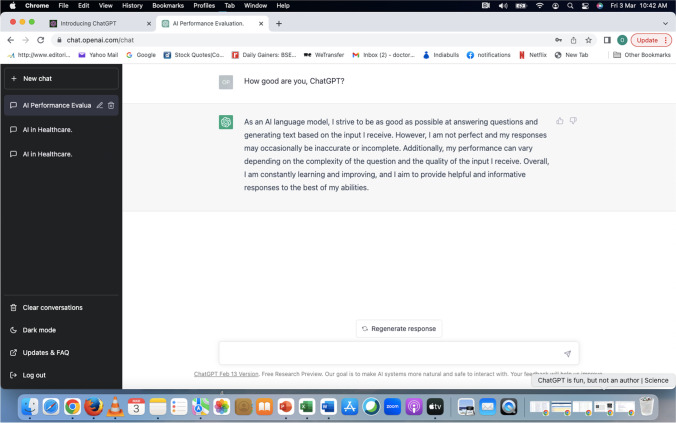

I agree that these are valid and pertinent issues with all AI platforms, and ChatGPT is not immune to them. I have no intent to belittle them; but, in the same breath, there is no point in bemoaning and resisting technology. As an age-old adage goes, ‘a known devil is better than an unknown one’; all these issues are well recognised, and even acknowledged by ChatGPT itself (Fig. 1). Let us be sensitised to these aspects and be on guard, always mindful of Thomas Jefferson’s admonition, ‘Eternal vigilance is the price of liberty’. We need to acknowledge ChatGPT as an important technological ally, albeit always guarding against its weaknesses and misuse, whether by design or default. I therefore plead: embrace these new-world technologies and build on them, rather than decrying them. Nobel laureate Ernest Hemingway once said, ‘The first draft of anything is shit’; ChatGPT is no exception, and is being improved incrementally. OpenAI itself is working on newer avatars in the form of GPT-4 and ChatGPT Plus. To address matters of plagiarism, OpenAI is developing a digital tool christened ‘AI Classifier’, to identify AI-generated text. Moreover, this is just the beginning, and competition and innovation will soon follow. Microsoft is already working on its ‘Microsoft Bing’ platform, which can generate ‘Human-Like’ text. Others, like Google, are alive to the lead that Microsoft may take over them and are reorganising their work to meet this challenge with a competing version, ‘Google Bard’, which is based on another program called the ‘LaMDA AI platform’. For the moment, ‘Meta’ is keeping out of the race, calling ChatGPT ‘not particularly innovative’ and citing the reputational risk it carries. Crystal-gazing, sooner rather than later we shall see ‘Meta’ and many other companies join the bandwagon, in what ‘Time’ magazine calls an ‘AI Arms race’.

Fig. 1. ChatGPT response to: How good are you?

Can ChatGPT be used in academics?

ChatGPT, even in its nascent stage, has displayed enough domain knowledge to earn a ‘C+’ grade in University of Minnesota law school exams and ‘B’ to ‘B−’ grades in a Wharton business school exam [4]. ‘ChatGPT performed at or near the passing threshold for all three exams (USMLE: US medical licensing exam) without any specialised training or reinforcement’ [5]. On the flip side, Sarraju et al. qualitatively assessed the appropriateness of ChatGPT’s responses to fundamental questions on cardiovascular disease prevention [6]. It did not pass the test with flying colours, as some of the answers lacked context. Moreover, there is a fair chance that AI-generated responses, even to patients’ own queries, may not be well accepted by them if they come to know that no human interaction is involved and that they are receiving only mechanistic replies. This was observed by a company that used ChatGPT in its mental health app to counsel users, but had to suspend the practice because ‘it felt kind of sterile’. Such applications of AI give rise to a slew of ethical dilemmas: if ChatGPT is used, either independently or to assist a physician in delivering care, would informed consent be required from the patient, and would these technologies win the approval of institutional review boards? These, and many similar questions, are pertinent issues needing careful deliberation and resolution.

Having proven its value and versatility as a source of knowledge, the technology needs to be reined in when it comes to its use by students in examinations. Wharton’s Terwiesch opines, ‘Bans are needed. After all, when you give a medical doctor a degree, you want them to know medicine, not how to use a bot’ [4]. Daniel S Chow, Co-director of the Centre for AI in Diagnostic Medicine at the University of California Irvine, offers a more nuanced perspective, using the analogy of a calculator in an algebra class: ‘If a student has already demonstrated their expertise of a concept without a calculator, then the use of one is considered supplemental. However, if a student uses a calculator instead of mastering a concept, then it would be cheating’ [3].

Can ChatGPT be listed as a co-author in scientific publications?

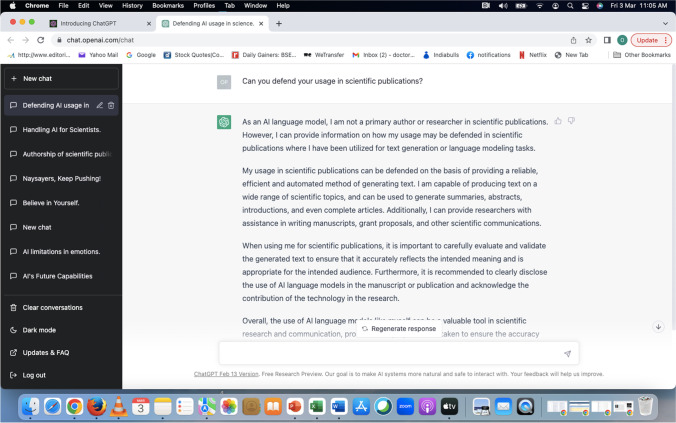

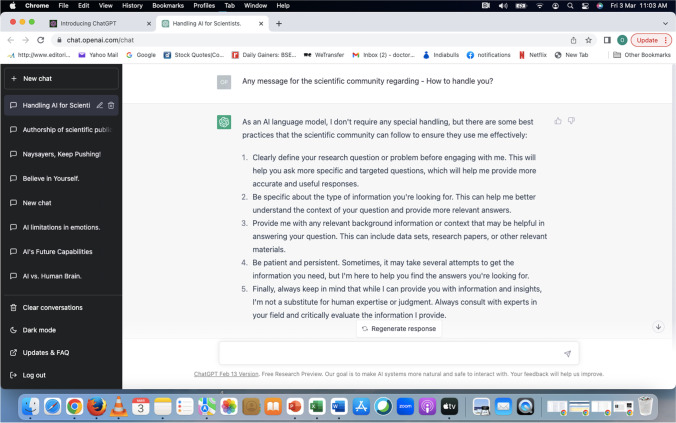

I posed related provocative questions to ChatGPT itself, and its responses were germane and balanced (Figs. 2, 3). It has been listed as a co-author in at least four scientific publications [7], and a reputed journal, ‘Radiology’, has published a peer-reviewed paper with text generated entirely by AI, albeit under sub-headings provided by a human author [8]. However, ‘Science’ and ‘Nature’ have not yet yielded. As for us, the Indian Journal of Thoracic and Cardiovascular Surgery (IJTC) does not yet support granting authorship to ChatGPT, but allows its use as long as it is acknowledged, there is ‘human oversight’ and the human authors assume ‘accountability’ for any scientific misdemeanours.

Fig. 2. ChatGPT response to: Can you defend your usage in scientific publications?

Fig. 3. ChatGPT response to a question: Any message for the scientific community regarding how to handle you?

We endorse Biswas’ contention that ChatGPT can be a worthy assistive tool in medical writing and has the potential to improve the speed and accuracy of document creation [8]. Celi opines, ‘Large language models will be able to help us weed out the noise from the signal … help us navigate and swim through the data we’re collecting for our patients in the process of caring for them’ [3]. It will democratise scientific writing and take much of the drudgery out of tasks such as reference compilation and the writing of first drafts, which can then be built upon and fine-tuned with human oversight. Many mundane administrative tasks can also be taken over by these technologies, making them a symbiotic foil to clinicians in their protean roles as clinician committed to the patient, researcher and administrator.

Obviously, only time will tell whether ChatGPT is an ally or an adversary. Maybe it will turn out to be yet another dual-use technology, where the intent and ethics behind its use decide its merits. No wonder, then, that Shen et al., in an editorial, call ChatGPT and other large language models ‘Double-Edged Swords’ [9]. Regardless of the pros and cons, and the high-decibel debate around them, the tempest has begun, whether we like it or not. AI-driven deep machine learning models and large ‘language models’ optimised for dialogue and interaction are a reality, albeit with a rider: ‘human oversight’. Therefore, we must make the best of them, always keeping clinical decision-making in the human domain. ‘Instead of having humans in the loop, humans should be in charge, with AI in the loop’ [10].

Victor Hugo, the French poet and novelist, once said, ‘Nothing is more powerful than an idea whose time has come’, and the time for ‘Artificial Intelligence’ is now. A ‘No Brainer’ then: accept, adapt and adopt technology.

Let us gear up, and not be laggards, for times ahead are not only exciting, but startling!

Declarations

Informed consent

Not required.

Conflict of interest

The authors declare no competing interests.

References

- 1. Thorp HH. ChatGPT is fun, but not an author. Science. 2023;379:313. doi: 10.1126/science.adg7879.

- 2. Wikipedia. ChatGPT. https://en.wikipedia.org/wiki/ChatGPT#cite_note-99. Accessed 8 Mar 2023.

- 3. DePeau-Wilson M. Is ChatGPT becoming synonymous with plagiarism in scientific research? MedPage Today. 2023.

- 4. Kelly SM. ChatGPT passes exams from law and business schools. CNN Business. 2023. Accessed 6 Mar 2023.

- 5. Kung TH, Cheatham M, Medenilla A, Sillos C, Leon LD, Elepano C, et al. Performance of ChatGPT on USMLE: potential for AI-assisted medical education using large language models. PLoS Digital Health. 2023. doi: 10.1371/journal.pdig.0000198.

- 6. Sarraju A, Bruemmer D, Van Iterson E, Cho L, Rodriguez F, Laffin L. Appropriateness of cardiovascular disease prevention recommendations obtained from a popular online chat-based artificial intelligence model. JAMA. Published online 3 Feb 2023. doi: 10.1001/jama.2023.1044.

- 7. Tolchin B. Are AI chatbots in healthcare ethical? Their use must require informed consent and independent review. MedPage Today. 2023.

- 8. Biswas S. ChatGPT and the future of medical writing. Radiology. 2023. doi: 10.1148/radiol.223312.

- 9. Shen Y, Heacock L, Elias J, Hentel KD, Reig B, Shih G, et al. ChatGPT and other large language models are double-edged swords. Radiology. 2023. doi: 10.1148/radiol.230163.

- 10. Kitamura FC. ChatGPT is shaping the future of medical writing but still requires human judgment. Radiology. Published online Feb 2023. doi: 10.1148/radiol.230171.