Abstract

Deep learning has become a popular tool for medical image analysis, but the limited availability of training data remains a major challenge, particularly in the medical field where data acquisition can be costly and subject to privacy regulations. Data augmentation techniques offer a solution by artificially increasing the number of training samples, but these techniques often produce limited and unconvincing results. To address this issue, a growing number of studies have proposed the use of deep generative models to generate more realistic and diverse data that conform to the true distribution of the data. In this review, we focus on three types of deep generative models for medical image augmentation: variational autoencoders, generative adversarial networks, and diffusion models. We provide an overview of the current state of the art in each of these models and discuss their potential for use in different downstream tasks in medical imaging, including classification, segmentation, and cross-modal translation. We also evaluate the strengths and limitations of each model and suggest directions for future research in this field. Our goal is to provide a comprehensive review of the use of deep generative models for medical image augmentation and to highlight the potential of these models for improving the performance of deep learning algorithms in medical image analysis.

Keywords: data augmentation, deep learning, medical imaging, generative models, variational autoencoders, diffusion models

1. Introduction

In recent years, advances in deep learning have been remarkable in many fields, including medical imaging. Deep learning is used to solve a wide variety of tasks such as classification [1,2], segmentation [3], and detection [4] using different types of medical imaging modalities, for instance, magnetic resonance imaging (MRI) [5], computed tomography (CT) [6], and positron emission tomography (PET) [7]. Most of these modalities produce very high-dimensional data, and the number of training samples is often limited in the medical domain (e.g., because of the rarity of certain diseases). As deep learning algorithms rely on large amounts of data, running such applications in a low-sample-size regime can be very challenging. Data augmentation can increase the size of the training set by artificially synthesizing new samples. It is a very popular technique in computer vision [8] and has become inseparable from deep learning applications when rich training sets are not available. Data generation is also used in the case of missing modalities for multimodal image segmentation [9]. As a result, the model can be trained to generalize better and avoid overfitting. In addition, some deep learning frameworks, including PyTorch [10], allow for on-the-fly data augmentation during training, rather than physically expanding the training dataset. Basic data augmentation operations include random rotations, cropping, flipping, and noise injection. However, these simple operations are not sufficient when dealing with complex data such as medical images.
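The basic operations mentioned above can be sketched in a few lines. The example below is a dependency-free illustration on a tiny 2D image stored as nested lists; the function names (`hflip`, `rot90`, `add_gaussian_noise`, `augment`) and the noise level are assumptions made for this sketch and do not come from any framework. In a PyTorch pipeline, an equivalent function would typically be applied each time a sample is loaded, which is what makes the augmentation on-the-fly.

```python
import random


def hflip(img):
    """Mirror a 2D image (a list of pixel rows) left to right."""
    return [row[::-1] for row in img]


def rot90(img):
    """Rotate a 2D image by 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def add_gaussian_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma to every pixel."""
    return [[px + rng.gauss(0.0, sigma) for px in row] for row in img]


def augment(img, rng, sigma=0.01):
    """Return a randomly flipped, rotated, and noised copy of img."""
    if rng.random() < 0.5:
        img = hflip(img)
    for _ in range(rng.randrange(4)):  # rotate by 0, 90, 180, or 270 degrees
        img = rot90(img)
    return add_gaussian_noise(img, sigma, rng)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
image = [[0.0, 0.1, 0.2],
         [0.3, 0.4, 0.5]]
# On-the-fly augmentation: a fresh variant is drawn every time the sample is used,
# so the stored dataset itself never grows.
epoch_variants = [augment(image, rng) for _ in range(3)]
```

Such transformations only rearrange or perturb existing pixels, which is one way to see why they cannot add genuinely new anatomical variability to the training set.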

Several studies have been conducted to propose data augmentation schemes more suitable for the medical domain. The ultimate goal would be to reproduce a data distribution as close as possible to the real data, such that it is impossible, or at least difficult, to distinguish the newly sampled data from the real data. Recent performance improvements in deep generative models have made them particularly attractive for data augmentation. For example, generative adversarial networks (GANs) [11] have demonstrated their ability to generate realistic images. As a result, this architecture has been widely used in the medical field [12,13] and has been included in several data augmentation reviews [14,15,16]. Nevertheless, GANs also have their drawbacks, such as training instability, difficulty in converging, and mode collapse [17], a state in which the generator produces only a limited variety of samples. Variational autoencoders (VAEs) [18] are another type of deep generative model that has received less attention in data augmentation. VAEs outperform GANs in terms of output diversity and are free of mode collapse. However, their major problem is a tendency to produce blurry and hazy output images. This undesirable effect is due to the regularization term in the loss function. Recently, a new type of deep generative model called diffusion models (DMs) [19,20] has emerged and promises remarkable results with a great ability to generate realistic and diverse outputs. DMs are still in their infancy and are not yet well established in the medical field, but they are expected to be a promising alternative to previous generative models. One of the drawbacks of DMs is their high computational cost and long sampling time.

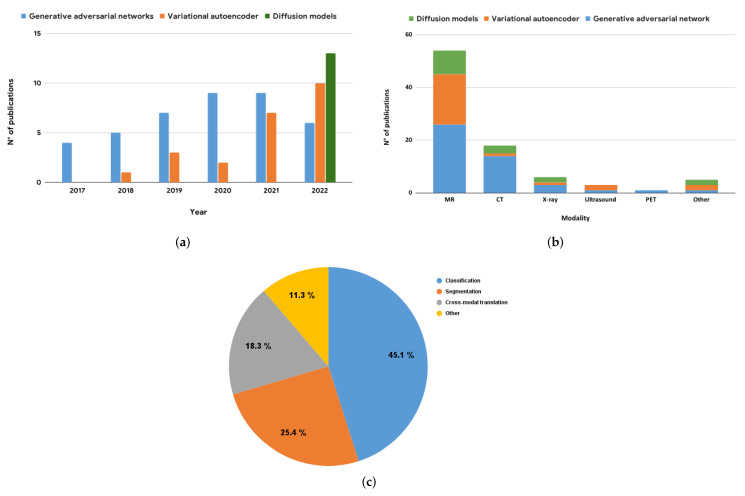

Different approaches have been proposed to solve this generative learning trilemma of quality sampling, fast sampling, and diversity [21]. In this paper, we review the state of the art of deep learning architectures for data augmentation, focusing on three types of deep generative models for medical image augmentation: VAEs, GANs, and DMs. To provide an accurate review, we harvested a large number of publications via the PubMed and Google Scholar search engines. We selected only publications from 2017 onwards using various keywords related to data augmentation in medical imaging. Following this, a second manual filtering was performed to eliminate all false positives (publications not related to the medical field and/or data augmentation). In total, 72 publications were retained, mainly from journals such as IEEE Transactions on Medical Imaging or Medical Image Analysis and conferences such as Medical Image Computing and Computer Assisted Intervention and IEEE International Symposium on Biomedical Imaging. Some publications will be described in more detail in Section 3; these have been selected according to two criteria: date of publication and number of citations. Nevertheless, all the articles are listed in descriptive tables together with other information such as the datasets used for training. The papers were organized according to the deep generative model employed and the main downstream tasks targeted by the generated data (i.e., classification, segmentation, and cross-modal translation). Given the dominance of GANs for data augmentation in the medical imaging domain, the objective of this article is to highlight other generative models. To the best of our knowledge, this is the first review article that compares different deep generative models for data augmentation in medical imaging and does not focus exclusively on GANs [14,15,16], nor traditional data augmentation methods [22,23]. 
The quantitative ratio of GAN-based articles to the rest of the deep generative models is very unbalanced; nevertheless, we try to bring some equilibrium to this ratio in the hope that an unbiased comparative study following this paper may be possible in the future. To further illustrate our findings, we present a graphical representation of the selected publications in Figure 1. This figure provides a comprehensive overview of the number of publications per year, per modality, and per downstream task. By analyzing these graphics, we can observe the trends and the preferences of the scientific community in terms of the use of deep generative models for data augmentation in medical imaging.

Figure 1.

Distribution of publications on deep generative models applied to medical imaging data augmentation as of 2022. (a) The number of publications per architecture type and year. (b) The distribution of publications by modality, with CT and MRI being the most-commonly studied imaging modalities. Note that for cross-modal translation tasks, both the source and target modalities are counted in this plot. (c) The distribution of publications by downstream task, with segmentation and classification being the most common tasks in medical imaging. This figure illustrates the increasing interest in using deep generative models for data augmentation in medical imaging and highlights the diversity of tasks and modalities that have been addressed in the literature.

This article is organized as follows: Section 2 presents a brief theoretical view of the above deep generative models. Section 3 reviews deep generative models for medical imaging data augmentation, grouped by the targeted application. Section 4 discusses the advantages and disadvantages of each architecture and proposes a direction for future research. Finally, Section 5 concludes the paper.

2. Background

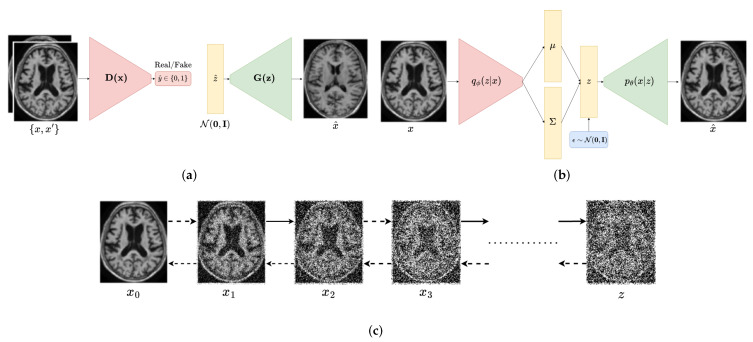

The main goal of deep generative models is to learn the underlying distribution of the data and to generate new samples that are similar to the real data. A deep generative model can be represented as a function $g$ that maps a low-dimensional latent vector $z \in \mathbb{R}^{d}$ to a high-dimensional data point $x \in \mathbb{R}^{D}$, such that $d \ll D$. The latent variable $z$ is a realization of a random vector that is sampled from a prior distribution $p(z)$. The data point $x$ is another realization sampled from the data distribution $p_{\text{data}}(x)$. The goal of the deep generative model is to learn the mapping function $g$ such that the generated data $\hat{x} = g(z)$ are similar to the real data $x$ associated with $z$. Each deep generative model proposes its own approach to learn the mapping function $g$. In this section, we present a brief overview of the most popular deep generative models. Figure 2 provides a visual representation of their respective architectures.

Figure 2.

Illustration of the three deep generative models that are commonly used for medical image augmentation: (a) generative adversarial networks (GANs), which consist of a generator and a discriminator network trained adversarially to generate realistic data; (b) variational autoencoders (VAEs), which consist of an encoder and a decoder network trained to reconstruct data and learn a compact latent representation; and (c) diffusion models, which consist of a forward process that gradually adds noise to the data over a series of steps and a learned reverse process that removes it to model the data distribution.

2.1. Generative Adversarial Networks

A GAN [11] is a deep generative model composed of two separate networks: a generator and a discriminator. The generator can be seen as a mapping function $G$ from a random latent vector $z$ to a data point $x$, where $z$ is sampled from a fixed prior distribution $p(z)$, commonly modelled as a Gaussian distribution. The discriminator $D$ is a binary classifier that takes a data point $x$ as input and outputs the probability $D(x)$ that $x$ is a real data point. During training, the generator $G$ is trained to replicate data points so that the discriminator cannot distinguish between real data points $x$ and generated data points $G(z)$. The discriminator $D$, on the other hand, is trained to differentiate the fake from the real data points. The two networks are trained simultaneously in an adversarial manner, hence the name generative adversarial network. The loss functions of $G$ and $D$ can be expressed as follows:

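$$
\begin{aligned}
\mathcal{L}_D(\theta_D) &= -\mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D_{\theta_D}(x)\right] - \mathbb{E}_{z \sim p(z)}\left[\log\left(1 - D_{\theta_D}(G_{\theta_G}(z))\right)\right], \\
\mathcal{L}_G(\theta_G) &= \mathbb{E}_{z \sim p(z)}\left[\log\left(1 - D_{\theta_D}(G_{\theta_G}(z))\right)\right],
\end{aligned}
\tag{1}
$$

where $\theta_G$ and $\theta_D$ are the corresponding learnable parameters for the generator and discriminator neural networks, respectively.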

This adversarial learning has proven to be effective in capturing the underlying distribution of the real data, $p_{\text{data}}(x)$. It is inspired by game theory and can be seen as a minimax game between the generator and the discriminator. It is ultimately desirable to reach a Nash equilibrium where both the generator and discriminator are equally effective at their tasks. The loss function can be summarized as follows:

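$$
\min_{G} \max_{D} V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p(z)}\left[\log\left(1 - D(G(z))\right)\right] \tag{2}
$$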

Once trained, new data points can be synthesized by sampling a random latent vector z from the prior distribution and feeding it to the generator.
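The two adversarial objectives can be made concrete with a small numerical sketch. It assumes the standard formulation of [11], in which the discriminator is rewarded for assigning a high probability to real points and a low probability to generated ones, while the generator minimizes log(1 − D(G(z))). The function names and the example probabilities are illustrative and are not taken from any reviewed implementation.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Negative of mean log D(x) + mean log(1 - D(G(z))).

    d_real: discriminator outputs (probabilities) on real samples.
    d_fake: discriminator outputs (probabilities) on generated samples.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake):
    """Mean log(1 - D(G(z))): low when the discriminator is fooled."""
    return sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)


# A discriminator that separates real from generated samples well ...
confident = discriminator_loss(d_real=[0.9, 0.95], d_fake=[0.1, 0.05])
# ... versus one that outputs 0.5 everywhere and can no longer tell them apart.
undecided = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
```

When the discriminator outputs 0.5 for every input, its loss equals 2 log 2 ≈ 1.386, which corresponds to the equilibrium in which generated and real points are indistinguishable.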

2.2. Variational Autoencoders

Variational inference is a Bayesian inference technique that allows us to approximate the posterior distribution $p_\theta(z \mid x)$ with a simpler distribution $q_\phi(z \mid x)$. The aim of variational inference is to minimize a divergence between the posterior distribution $p_\theta(z \mid x)$ and the variational distribution $q_\phi(z \mid x)$, where $\theta$ and $\phi$ are the posterior and variational distribution parameters, respectively. The Kullback–Leibler (KL) divergence is the most commonly used. The loss function based on the Kullback–Leibler divergence is defined as follows:

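$$
\mathcal{L}_{\text{KL}} = D_{\text{KL}}\left(q_\phi(z \mid x) \,\|\, p_\theta(z \mid x)\right) = \mathbb{E}_{z \sim q_\phi(z \mid x)}\left[\log \frac{q_\phi(z \mid x)}{p_\theta(z \mid x)}\right] \tag{3}
$$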

With further simplifications, and applying Jensen’s inequality, we can rewrite the above equation as:

$$
D_{\text{KL}}\left(q_\phi(z \mid x) \,\|\, p_\theta(z \mid x)\right) = \log p_\theta(x) - \mathbb{E}_{z \sim q_\phi(z \mid x)}\left[\log p_\theta(x \mid z)\right] + D_{\text{KL}}\left(q_\phi(z \mid x) \,\|\, p(z)\right) \tag{4}
$$

$$
\log p_\theta(x) \geq \mathbb{E}_{z \sim q_\phi(z \mid x)}\left[\log p_\theta(x \mid z)\right] - D_{\text{KL}}\left(q_\phi(z \mid x) \,\|\, p(z)\right) = \text{ELBO} \tag{5}
$$

where $\log p_\theta(x)$ is the marginal log likelihood of the data $x$, $p(z)$ is the prior distribution of the latent variable $z$, generally modeled as a Gaussian distribution, and ELBO is the evidence lower bound. The variational distribution $q_\phi(z \mid x)$ can be learned by minimizing $D_{\text{KL}}\left(q_\phi(z \mid x) \,\|\, p_\theta(z \mid x)\right)$, which is equivalent to maximizing the ELBO given a fixed $\theta$. The ELBO can be further decomposed into two terms: the reconstruction term and the regularization term. The reconstruction term measures the difference between the input data and its reconstruction, and it is typically calculated using binary cross-entropy loss. The regularization term ensures that the latent variables follow a desired distribution, such as a normal distribution, and it is calculated using the Kullback–Leibler divergence between the latent distribution and the desired distribution. Together, these two terms form the ELBO loss function, which is used to train the VAE model. The VAE is composed of an encoder $q_\phi(z \mid x)$ and a decoder $p_\theta(x \mid z)$. The encoder is a neural network that maps the data $x$ to the latent variable $z$. The decoder is a neural network that maps the latent variable $z$ to the data $x$. The VAE is trained by minimizing the sum of the reconstruction and regularization terms, i.e., the negative ELBO (6).

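$$
\mathcal{L}_{\text{VAE}}(\theta, \phi) = -\mathbb{E}_{z \sim q_\phi(z \mid x)}\left[\log p_\theta(x \mid z)\right] + D_{\text{KL}}\left(q_\phi(z \mid x) \,\|\, p(z)\right) \tag{6}
$$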

Once trained, new data points can be synthesized by sampling a random latent vector z from the prior distribution and feeding it to the decoder. In other words, the decoder represents the generative model.
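The two terms of the VAE objective can be sketched numerically for a single sample. The example assumes a diagonal Gaussian encoder with a standard normal prior, for which the Kullback–Leibler term has a closed form, and a binary cross-entropy reconstruction term as mentioned above. The reparameterized sampling step is the one commonly used with VAEs [18] and is an addition of this sketch; all names and numbers are hypothetical, and the encoder and decoder networks are replaced by fixed example outputs.

```python
import math
import random


def sample_latent(mu, log_var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, I) (reparameterized sampling)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(exp(log_var))) || N(0, I)): the regularization term."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))


def binary_cross_entropy(x, x_hat, eps=1e-7):
    """Reconstruction term, summed over pixels; x and x_hat hold values in [0, 1]."""
    total = 0.0
    for target, pred in zip(x, x_hat):
        pred = min(max(pred, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= target * math.log(pred) + (1.0 - target) * math.log(1.0 - pred)
    return total


def vae_loss(x, x_hat, mu, log_var):
    """Negative ELBO for one sample: reconstruction term + regularization term."""
    return binary_cross_entropy(x, x_hat) + kl_to_standard_normal(mu, log_var)


# Hypothetical encoder and decoder outputs for a 4-pixel image and a 2-D latent space.
x = [0.0, 1.0, 1.0, 0.0]
mu, log_var = [0.3, -0.2], [-0.1, 0.2]
z = sample_latent(mu, log_var, random.Random(0))
x_hat = [0.1, 0.8, 0.9, 0.2]  # stands in for the decoder output given z
loss = vae_loss(x, x_hat, mu, log_var)
```

The regularization term vanishes only when the encoder output equals the prior (zero mean, unit variance), which illustrates the tension between reconstructing each input faithfully and keeping the latent space close to the prior.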

2.3. Diffusion Probabilistic Models

Diffusion models [19,20] are a class of generative models based on the diffusion process, a stochastic process that can be seen as a parameterized Markov chain. Each transition in the chain gradually adds Gaussian noise to an initial data point $x_0$ drawn from the distribution $q(x_0)$. The diffusion process can be expressed as follows:

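$$
q(x_t \mid x_{t-1}) = \mathcal{N}\left(x_t;\ \sqrt{1 - \beta_t}\, x_{t-1},\ \beta_t I\right) \tag{7}
$$

where $\beta_t$ is the predefined noise variance at step $t$, $t \in \{1, \dots, T\}$, and $T$ is the total number of steps. The diffusion model is trained to reverse the diffusion process, starting with a noise input $x_T \sim \mathcal{N}(0, I)$ and reconstructing the initial data point $x_0$. This denoising process can be seen as a generative model. The reverse diffusion process can be expressed as follows: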

$$
p_\theta(x_{t-1} \mid x_t) = \mathcal{N}\left(x_{t-1};\ \mu_\theta(x_t, t),\ \Sigma_\theta(x_t, t)\right) \tag{8}
$$

where $\mu_\theta(x_t, t)$ and $\Sigma_\theta(x_t, t)$ are the mean and the variance of the denoising model at step $t$. Similarly to the VAE, diffusion models learn to recreate the true sample $x_0$ at each step by maximizing the evidence lower bound (ELBO), matching the true denoising distribution $q(x_{t-1} \mid x_t)$ and the learned denoising distribution $p_\theta(x_{t-1} \mid x_t)$. By the end of training, the diffusion model is able to map a noise input $x_T$ to the initial data point $x_0$ through reverse diffusion; hence, new data points can be synthesized by sampling a random noise vector from the prior distribution and feeding it to the model.
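The forward (noising) half of the process can be simulated directly, which helps to see why the chain ends in approximately pure Gaussian noise. The sketch below assumes the Gaussian transition described above, in which each step scales the previous point by sqrt(1 − β_t) and adds noise of variance β_t. The linear schedule and its endpoint values are illustrative assumptions rather than settings prescribed by this review, and the learned reverse model is not implemented here.

```python
import math
import random


def linear_beta_schedule(T, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T, linearly spaced (illustrative values)."""
    if T == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (T - 1)
    return [beta_start + i * step for i in range(T)]


def forward_step(x_prev, beta_t, rng):
    """One Markov transition: x_t ~ N(sqrt(1 - beta_t) * x_{t-1}, beta_t * I)."""
    scale, sigma = math.sqrt(1.0 - beta_t), math.sqrt(beta_t)
    return [scale * v + sigma * rng.gauss(0.0, 1.0) for v in x_prev]


def signal_fraction(betas, t):
    """Product of (1 - beta_s) for s = 1..t: share of the original signal variance left."""
    prod = 1.0
    for b in betas[:t]:
        prod *= 1.0 - b
    return prod


def diffuse(x0, betas, rng):
    """Run the whole forward chain from x_0 to x_T."""
    x = list(x0)
    for b in betas:
        x = forward_step(x, b, rng)
    return x


betas = linear_beta_schedule(1000)
x_T = diffuse([5.0, -3.0, 0.5], betas, random.Random(0))
remaining = signal_fraction(betas, len(betas))  # close to 0: x_T is almost pure noise
```

With this schedule, almost none of the original signal survives after 1000 steps, so sampling can start from standard Gaussian noise. The same step count must be traversed in reverse at generation time, which is the source of the long sampling time of DMs.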

2.4. Exploring the Trade-Offs in Deep Generative Models: The Generative Learning Trilemma

2.4.1. Generative Adversarial Networks

The design and training of VAEs, GANs, and DMs are often subject to trade-offs between fast sampling, high-quality samples, and mode coverage, known as the generative learning trilemma [21]. Among these models, GANs have received particular attention due to their ability to generate realistic images and were the first deep generative models to be extensively used for medical image augmentation. They are known for generating high-quality samples that are difficult to distinguish from real data. However, they may suffer from mode collapse, a phenomenon where the model only generates samples from a limited number of modes or patterns in the data distribution, potentially leading to poor coverage of the data distribution and a lack of diversity in the generated samples. To address mode collapse, several variations of the GAN have been proposed. One popular approach is the Wasserstein GAN (WGAN) [24], which replaces the Jensen–Shannon divergence used in the original GAN with the Wasserstein distance, a metric that measures the distance between two probability distributions. This has the benefit of improving training stability and the quality of the generated samples. Another widely used extension is the conditional GAN (CGAN) [25], which adds a conditioning variable $y$ to the latent vector $z$ in the generator, allowing for more control over the generated samples and partially mitigating mode collapse. The CGAN can be seen as a generative model that generates data points $x$ conditioned on $y$ and models the conditional distribution $p(x \mid y)$. A GAN with a conditional generator (Pix2Pix) was introduced by Isola et al. [26] to learn to translate images from one domain to another by replacing the traditional noise-to-image generator with a U-Net [27]. The adversarial learning process allows the U-Net to generate more realistic images based on a better understanding of the underlying data distribution.
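The motivation for replacing the Jensen–Shannon divergence can be illustrated with a toy one-dimensional example, in the spirit of the argument usually given for the WGAN. Two distributions with non-overlapping support have the same Jensen–Shannon divergence (log 2) however far apart they are, whereas the Wasserstein distance grows with the gap and therefore still tells the generator in which direction to move. The helper names and the binned toy distributions are assumptions made for this sketch.

```python
import math


def kl(p, q):
    """KL divergence between two discrete distributions over the same bins."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence (natural log) between two discrete distributions."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def wasserstein_1d(a, b):
    """Wasserstein-1 distance between two equal-size 1-D empirical samples."""
    assert len(a) == len(b), "this sketch assumes samples of equal size"
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)


# "Real" data sits in bin 0; the "generated" data sits in bin 1 or in bin 3.
real = [1.0, 0.0, 0.0, 0.0]
near = [0.0, 1.0, 0.0, 0.0]
far = [0.0, 0.0, 0.0, 1.0]

js_near, js_far = js_divergence(real, near), js_divergence(real, far)  # both log 2
w_near = wasserstein_1d([0, 0, 0], [1, 1, 1])  # 1.0
w_far = wasserstein_1d([0, 0, 0], [3, 3, 3])   # 3.0
```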

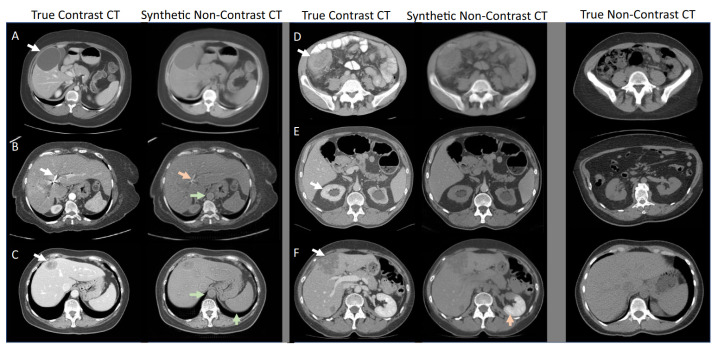

Other variations of the GAN include deep convolutional GAN (DCGAN) [28], progressive growing GAN (PGGAN) [29], CycleGAN [30], auxiliary classifier GAN (ACGAN) [31], VAE-GAN [32], and many others, which have been proposed to address various issues such as training stability, scalability, and quality of the generated samples. While these variants have achieved good results in a variety of tasks, they also come with their own set of trade-offs. Despite these limitations, GANs are generally fast at generating new images, making them a good choice for data augmentation when well-trained. As an example, Figure 3 showcases the capacity of a CycleGAN to generate realistic synthetic medical images.

Figure 3.

Examples of true contrast CT scans and synthetic non-contrast CT scans generated using a CycleGAN, adapted from Sandfort et al. [12]. The left columns show the true contrast CT scans, while the right columns show the synthetic non-contrast CT scans. The synthetic non-contrast images generated with the CycleGAN appear convincing, even in the presence of significant abnormalities in the contrast CT scans. The last column on the right displays unrelated examples of non-contrast images. The letters A to F mark various abnormalities/pathologies, and the arrows indicate their counterparts in the synthetic non-contrast CT images. These annotations are not essential for understanding the main purpose of the figure, which is to demonstrate the generator's ability to produce realistic images.

2.4.2. Variational Autoencoders

VAEs are another type of deep generative model that has gained popularity for its ease of training and good coverage of the data distribution. Unlike GANs, VAEs are trained to maximize a lower bound on the likelihood of the data rather than adversarially, making them a good choice for tasks that require fast sampling and good coverage of the data distribution. Using variational inference methods, VAEs are able to better approximate the real data distribution given a random noise vector, thus making them less vulnerable to mode collapse. Moreover, VAEs enable the extraction of relevant features and can learn a smooth latent representation of the data, which allows for the interpolation of points in the latent space, providing more control over the generated samples [33].
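Latent-space interpolation can be sketched as follows. The example assumes simple linear interpolation between two latent codes; the latent vectors are made-up values, and the trained decoder that would turn each intermediate code into an image is not shown.

```python
def interpolate_latents(z_a, z_b, num_steps):
    """Return num_steps latent vectors evenly spaced on the segment from z_a to z_b."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2 to include both endpoints")
    path = []
    for i in range(num_steps):
        t = i / (num_steps - 1)  # goes from 0.0 (z_a) to 1.0 (z_b)
        path.append([(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)])
    return path


# Hypothetical latent codes of two encoded images.
z_first = [0.0, 2.0]
z_second = [1.0, 0.0]
path = interpolate_latents(z_first, z_second, num_steps=3)
# Each vector in `path` would then be passed to the trained decoder to obtain an image.
```

Because the latent space is regularized towards a smooth prior, decoding the intermediate points is expected to give plausible samples that blend the characteristics of the two endpoints.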

VAEs have not been as commonly used for data augmentation compared to GANs due to the blurry and hazy nature of the generated samples. However, several proposals, such as inverse autoregressive flow [34], InfoVAE [35], or VQ-VAE2 [36], have been made to improve the quality of VAE-generated samples as well as the variational aspect of the model. Despite this, most of these extensions have not yet been applied to medical image augmentation. A more effective approach to addressing the limitations of VAEs in this context is to utilize a hybrid model called a VAE-GAN, which combines the strengths of both VAEs and GANs to generate high-quality, diverse, and realistic synthetic samples. While VAE-GANs cannot fully fix the low-quality generation of VAEs, they do partially address this issue by incorporating the adversarial training objective of GANs, which allows for the improvement of visual quality and sharpness of the generated samples while still preserving the ability of VAEs to learn a compact latent representation of the data. In addition to VAE-GANs, another common architecture for medical image augmentation is the use of conditional VAEs (CVAEs), which allows for the control of the output samples by conditioning the generation process on additional information, such as class labels or attributes. This can be particularly useful in medical imaging, as it allows for the generation of synthetic samples that are representative of specific subgroups or conditions within the data. By using conditional VAEs, it is possible to generate synthetic samples that are more targeted and relevant to specific tasks or analyses. In summary, VAEs, VAE-GANs, and conditional VAEs are all viable approaches for medical image augmentation, each offering different benefits and trade-offs in terms of diversity, quality, and fidelity of the generated samples.

2.4.3. Diffusion Models

There has been a recent surge in the use of DMs for image synthesis in the academic literature due to their superior performance in generating high-quality and realistic synthesized images compared to other deep generative models such as VAEs and GANs [37]. This success can be attributed to the way in which DMs model the data distribution by approximating it using a series of simple distributions combined through the diffusion process, allowing them to capture complex, high-dimensional distributions and generate samples that are highly representative of the underlying data. This is especially useful for synthesizing images as natural images often have a wide range of textures, colors, and other visual features that can be difficult to model using simpler parametric models. This can also be applied to medical imaging where data tends to be complex. However, DMs can also have some limitations, such as being computationally intensive to solve, especially for large or complex systems, and requiring a significant amount of data to be accurately calibrated. In addition, DMs have a long sampling time compared to other deep generative models such as VAEs and GANs due to the high number of steps in the reverse diffusion process (ranging from several hundreds to thousands). This issue is compounded when the model is being used in real-time applications or when it is necessary to generate large numbers of samples. As a result, researchers have proposed several solutions and variants of diffusion models that aim to improve the sampling speed while maintaining high-quality and diverse samples. These include strategies such as progressive distillation [38]. This method involves distilling a trained deterministic diffusion sampler, using many steps, into a new diffusion model that takes half as many sampling steps. 
Another way to improve the sampling time is to use faster variants such as the Fast Diffusion Probabilistic Model (FastDPM) [39], which uses a modified optimization algorithm to reduce the sampling time and introduces the concept of a continuous diffusion process, or non-Markovian diffusion models such as the Denoising Diffusion Implicit Model (DDIM) [40]. Similarly to the VAE-GAN, the authors of [21] propose the denoising diffusion GAN, a hybrid architecture between DMs and multimodal conditional GANs [25], which has been shown to produce high-quality and diverse samples at a much faster sampling speed than the original diffusion models (by a factor of 2000). Overall, while diffusion models have demonstrated great potential in the field of image synthesis, their long sampling time remains a challenge that researchers are actively working to address.
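The effect of repeatedly halving the number of sampling steps, as in progressive distillation [38], can be illustrated with simple arithmetic. The sketch below only tracks the step counts; the starting and target values are arbitrary examples, and the distillation training itself is not shown.

```python
def distillation_schedule(initial_steps, target_steps):
    """Sampling-step counts after each distillation round, halving at every round."""
    if target_steps < 1 or initial_steps < target_steps:
        raise ValueError("expected 1 <= target_steps <= initial_steps")
    schedule = [initial_steps]
    while schedule[-1] > target_steps:
        schedule.append(max(target_steps, schedule[-1] // 2))
    return schedule


schedule = distillation_schedule(initial_steps=1024, target_steps=4)
rounds = len(schedule) - 1  # number of distillation rounds needed
```

Going from 1024 to 4 sampling steps takes 8 rounds under this halving rule, i.e., the number of rounds grows only logarithmically with the desired speed-up.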

3. Deep Generative Models for Medical Image Augmentation

Medical image processing and analysis using deep learning has developed rapidly in recent years and has achieved state-of-the-art results in many tasks. However, the lack of data is still a major issue in this field. To address this, medical image augmentation has become a crucial task, and many studies have been conducted in this direction. In this section, we review the different deep generative models that have been proposed to generate synthetic medical images. This review is organized into three categories, one for each of the deep generative models. The publications are further classified according to the downstream task targeted by the generated images. We address here the most common tasks in medical imaging: classification, segmentation, and cross-modal image translation, which are summarized in the form of tables.

3.1. Generative Adversarial Networks

As part of their study, Han et al. [41] proposed the use of two variants of GANs for generating 2D MRI sequences: a WGAN [24] and a DCGAN [28], in which combinations of convolutions and batch normalizations replace the fully-connected layers. The results of this study were presented in the form of a visual Turing test where an expert physician was asked to classify real and synthetic images. For all MRI sequences except FLAIR images, WGAN was significantly more successful at deceiving the physician than DCGAN (62% compared to 54%). The same authors further proposed using PGGAN [29] combined with traditional data augmentation techniques such as geometric transformations. PGGAN is a GAN with a multi-stage training strategy that progressively increases the resolution of the generated images. The results indicate that combining PGGAN with traditionally augmented data can slightly improve the performance of the classifier when compared to using PGGAN alone.

Conditional synthesis is a technique that allows the generation of images conditioned on a specific variable $y$. This is particularly useful in medical imaging, where tasks such as segmentation or cross-modal translation are widespread. The variable $y$ serves as the ground truth for the generated images and can be expressed in various ways, including class labels, segmentation maps, or translation maps. In this context, Frid-Adar et al. [42] propose to use an ACGAN [31] for synthesizing liver lesions in CT images. The ACGAN is a GAN whose generator is conditioned on a class label and whose discriminator additionally predicts that label through an auxiliary classifier. Three label classes were considered: cysts, metastases, and hemangiomas. With conventional data augmentation alone, classification reached a sensitivity of 78.6% and a specificity of 88.4%. Adding the synthetic data augmentation increased these results to a sensitivity of 85.7% and a specificity of 92.4%. Guibas et al. [43] propose a two-stage pipeline for generating synthetic images of fundus photographs with associated blood vessel segmentation masks. In the first stage, synthetic segmentation masks are generated using DCGAN, and in the second stage, these synthetic masks are translated into photorealistic fundus images using CGAN. Comparing the Kullback–Leibler divergence between the real and synthetic images revealed no significant differences between the two distributions. In addition, the authors evaluated the generated images on a segmentation task using only synthetic images, showing an F1 score of 0.887 versus 0.898 when using real images. This negligible difference indicates the quality of the generated images. By the same token, Platscher et al. [44] propose using a two-step image translation approach to generate MRI images with ischemic stroke lesion masks. The first step consists of generating synthetic stroke lesion masks using a WGAN. The newly generated fake lesions are implanted on healthy brain anatomical segmentation masks. 
Finally, those segmentation masks are fed into a pretrained image-translation model that maps the mask into a real ischemic stroke MRI. The authors studied three different image translation models, CycleGAN [30], Pix2Pix [26], and SPADE [45], and reported that Pix2Pix was the most successful in terms of visual quality. A U-Net [27] was trained using both clinical and generated images and showed an improvement in the Dice score compared to the model trained only on clinical images (63.7% to 72.8%).
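The evaluation metrics quoted in this section (sensitivity and specificity for classification, the Dice score for segmentation) can be computed as in the following sketch. It assumes binary labels and flattened binary masks; the function names and the toy inputs are illustrative, and the sketch assumes that both classes occur in the labels.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def sensitivity(y_true, y_pred):
    """Fraction of positive cases that are detected: TP / (TP + FN)."""
    tp, _, _, fn = confusion_counts(y_true, y_pred)
    return tp / (tp + fn)


def specificity(y_true, y_pred):
    """Fraction of negative cases that are correctly rejected: TN / (TN + FP)."""
    _, tn, fp, _ = confusion_counts(y_true, y_pred)
    return tn / (tn + fp)


def dice_score(mask_a, mask_b):
    """Dice overlap 2|A and B| / (|A| + |B|) between two flattened binary masks."""
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a == 1 and b == 1)
    total = sum(mask_a) + sum(mask_b)
    return 1.0 if total == 0 else 2.0 * intersection / total


# Toy classification labels and toy segmentation masks.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 1]
sens, spec = sensitivity(y_true, y_pred), specificity(y_true, y_pred)
dice = dice_score([1, 1, 0, 0], [1, 0, 1, 0])
```

In an augmentation study, these metrics are computed on a held-out set of real images, once for a model trained without synthetic data and once for a model trained with it, so that any difference can be attributed to the generated samples.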

Regarding cross-modal translation, Yurt et al. [46] propose a multi-stream approach for generating missing or corrupted MRI contrasts from other high-quality ones using a GAN-based architecture. The generator is composed of multiple one-to-one streams and a joint many-to-one stream, which are designed to learn latent representations sensitive to unique and common features of the source, respectively. The complementary feature maps generated in the one-to-one streams and the shared feature maps generated in the many-to-one stream are combined with a fusion block and fed to a joint network that infers the final image. In their experiments, the authors compare their approach to other state-of-the-art translation GANs and show that the proposed method is more effective in terms of quantitative and radiological assessments. The synthesized images presented in this study demonstrate the effectiveness of deep learning approaches applied to data augmentation in medical imaging. Specifically, the study investigated two tasks: (a) T1-weighted image synthesis from T2- and PD-weighted images and (b) PD-weighted image synthesis from T1- and T2-weighted images. The results obtained from the proposed method outperformed other variants of GANs such as pGAN [47] and MM-GAN [48], highlighting its effectiveness for image synthesis in medical imaging.

In summary, the use of GANs for data augmentation has been demonstrated to be a successful approach. The studies discussed in this section have employed some of the most innovative and best-known GAN architectures in the medical field, including WGAN, DCGAN, and Pix2Pix, and have primarily focused on three tasks: classification, segmentation, and cross-modal translation. Custom-made GAN variants have also been proposed in the current state of the art, some of which could be explored further. Notably, conditional synthesis has proven to be particularly useful, as seen with the ACGAN for classification and Pix2Pix for segmentation, where it improved the downstream performance. Additionally, two-stage pipeline approaches have been proposed for generating synthetic images conditioned on segmentation masks. To further illustrate the use of GANs for medical image augmentation, we present a summary of the relevant studies in Table 1. This table includes information about the dataset, imaging modality, and evaluation metrics used in each study, as well as the specific type of GAN architecture employed. A further discussion will be presented in Section 4.

Table 1.

Overview of GAN-based architectures for medical image augmentation, including hybrid status of architectures (if applicable), indicating used combinations of VAEs, GANs, and DMs.

| Reference | Architecture | Hybrid Status | Dataset | Modality | 3D | Eval. Metrics |
|---|---|---|---|---|---|---|
| Classification | | | | | | |
| [42] | DCGAN, ACGAN | | Private | CT | | Sens., Spec. |
| [41] | DCGAN, WGAN | | BraTS2016 | MR | | Acc. |
| [49] | PGGAN, MUNIT | | BraTS2016 | MR | ✓ | Acc., Sens., Spec. |
| [50] | AE-GAN | Hybrid (V + G) | BraTS2018, ADNI | MR | ✓ | MMD, MS-SSIM |
| [51] | ICW-GAN | | OpenfMRI, HCP, NeuroSpin, IBC | MR | ✓ | Acc., Prec., F1, Recall |
| [52] | ACGAN | | IEEE CCX | X-ray | | Acc., Sens., Spec., Prec., Recall, F1 |
| [53] | PGGAN | | BraTS2016 | MR | | Acc., Sens., Spec. |
| [54] | ANT-GAN | | BraTS2018 | MR | | Acc. |
| [55] | MG-CGAN | | LIDC-IDRI | CT | | Acc., F1 |
| [56] | FC-GAN | Hybrid (V + G) | ADHD, ABIDE | MR | | Acc., Sens., Spec., AUC |
| [57] | TGAN | | Private | Ultrasound | | Acc., Sens., Spec. |
| [58] | AAE | | Private | MR | | Prec., Recall, F1 |
| [59] | DCGAN, InfillingGAN | | DDSM | CT | | LPIPS, Recall |
| [60] | SAGAN | | COVID-CT, SARS-COV2 | CT | | Acc. |
| [61] | StyleGAN | | Private | MR | | - |
| [62] | DCGAN | | PPMI | MR | | Acc., Spec., Sens. |
| [63] | TMP-GAN | | CBIS-DDSM, Private | CT | | Prec., Recall, F1, AUC |
| [64] | VAE-GAN | Hybrid (V + G) | Private | MR | | Acc., Sens., Spec. |
| [65] | CounterSynth | | UK Biobank, OASIS | MR | ✓ | Acc., MSE, SSIM, MAE |
| Segmentation | | | | | | |
| [43] | CGAN | | DRIVE | Fundus photography | | KLD, F1 |
| [66] | DCGAN | | SCR | X-ray | | Dice, Hausdorff |
| [67] | CB-GAN | | BraTS2015 | MR | | Dice, Prec., Sens. |
| [68] | Pix2Pix | | BraTS2015, ADNI | MR | ✓ | Dice |
| [12] | CycleGAN | | NIHPCT | CT | | Dice |
| [69] | CM-GAN | | Private | MR | | KLD, Dice, Hausdorff |
| [70] | CGAN | | COVID-CT | CT | | FID, PSNR, SSIM, RMSE |
| [71] | Red-GAN | | BraTS2015, ISIC | MR | | Dice |
| [44] | Pix2Pix, SPADE, CycleGAN | | Private | MR | | Dice |
| [72] | StyleGAN | | LIDC-IDRI | CT | | Dice, Prec., Sens. |
| [73] | DCGAN, GatedConv | | Private | X-ray | | MAE, PSNR, SSIM, FID, AUC |
| Cross-modal translation | | | | | | |
| [74] | CycleGAN | | Private | MR ↔ CT | ✓ | Dice |
| [75] | CycleGAN | | Private | MR → CT | | MAE, PSNR |
| [76] | Pix2Pix | | ADNI, Private | MR → CT | ✓ | MAE, PSNR, Dice |
| [77] | MedGAN | | Private | PET → CT | | SSIM, PSNR, MSE, VIF, UQI, LPIPS |
| [47] | pGAN, CGAN | | BraTS2015, MIDAS, IXI | T1 ↔ T2 | | SSIM, PSNR |
| [69] | CM-GAN | | Private | MR | | KLD, Dice, Hausdorff |
| [46] | mustGAN | | IXI, ISLES | T1 ↔ T2 ↔ PD | | SSIM, PSNR |
| [78] | CAE-ACGAN | Hybrid (V + G) | Private | CT → MR | ✓ | PSNR, SSIM, MAE |
| [79] | GLA-GAN | | ADNI | MR → PET | | SSIM, PSNR, MAE, Acc., F1 |
| Other | | | | | | |
| [80] | VAE-CGAN | Hybrid (V + G) | ACDC | MR | ✓ | - |

Note: V = variational autoencoders, G = generative adversarial networks.

3.2. Variational Autoencoders

Zhuang et al. [51] present an empirical evaluation of 3D functional MRI data augmentation using deep generative models such as VAEs and GANs. The results indicate that the CVAE and the conditional WGAN can produce diverse, high-quality brain images. A 3D convolutional neural network (CNN) was trained on the original and augmented data to further evaluate the generated samples in a classification task, and accuracy improved with both CVAE-augmented and CWGAN-augmented data. Pesteie et al. [81] propose a revised variant of the CVAE, called the ICVAE, which separates the embedding space of the input data from that of the conditioning variables. This allows the generated image characteristics to be independent of the conditioning variables, resulting in a more diverse output. In contrast, the standard CVAE encodes the data and conditioning variables in a shared embedding space. The authors evaluate the ICVAE on classification and segmentation tasks using transverse ultrasound images of the spine and FLAIR MRI images of the brain, respectively. The results demonstrate improvements in both classification accuracy and the Dice score compared to the model trained on real images only. The ICVAE model is able to generate more realistic MRI images by encoding appearance features independently of the structures in its latent space. The authors demonstrate the generation of synthetic MRI and ultrasound images using the ICVAE architecture, conditioned on a tumor segmentation mask and a label indicating the center-line of the spine, respectively. The CVAE architecture is also shown for comparison. Chadebec et al. [82] introduce a novel geometry-aware VAE for high-dimensional data augmentation in low-sample-size settings. This model combines Riemannian metric learning with normalizing flows to improve the expressiveness of the posterior distribution and learn meaningful latent representations of the data.
Additionally, the authors propose a new non-prior sampling scheme based on Hamiltonian Monte Carlo, since the standard procedure utilizing the prior distribution is highly dependent upon the data, especially for small datasets. As a result, the generated samples are remarkably more realistic than those generated by a conventional VAE, and the model is more resilient to the lack of data. An evaluation of the synthetic data on a classification task shows an improvement in accuracy from 66.3% to 74.3% using 50 real + 5000 synthetic MRIs, compared to using only the original data. The original paper by Chadebec et al. [82] includes a challenge in which readers are invited to identify the real brain MRIs from fake ones.

Other studies suggest the use of VAEs to improve segmentation performance. Huo et al. [83] introduce a progressive VAE-based architecture (PAVAE) for generating synthetic brain lesions with associated segmentation masks. The authors propose a two-step pipeline in which the first step generates synthetic segmentation masks with a conditional adversarial VAE. The CVAE is assisted by a “condition embedding block” that encodes high-level semantic information of the lesion into the feature space. The second step generates photorealistic lesion images conditioned on the lesion mask using “mask embedding blocks”, which encode the lesion mask into the feature space during generation, similar to SPADE. The authors compare their approach to other state-of-the-art methods and show that PAVAE can produce more realistic synthetic lesions with associated segmentation masks. A segmentation network trained on both real and synthetic lesions improves the Dice score compared to the model trained only on real images (from 66.69% to 74.18%).

In a recent paper, Yang et al. [78] propose a new model for cross-domain translation called conditional variational autoencoding GAN (CAE-ACGAN). CAE-ACGAN combines the advantages of both VAEs and GANs in a single end-to-end architecture. The integration of VAE and GAN, along with the implementation of an auxiliary discriminative classifier network, allows for a partial resolution of the challenges posed by image blurriness and mode collapse. Moreover, the VAE incorporates skip connections between the encoder and decoder, which enhances the quality of the images generated. In addition to translating 3D CT images into their corresponding MR, the CAE-ACGAN generates more realistic images as a result of its discriminator, which serves as a quality-assurance mechanism. Based on PSNR and SSIM scores, the CAE-ACGAN model showed a mild improvement over other state-of-the-art architectures, such as Pix2Pix and WGAN-GP [84].

Table 2 compiles a summary of the relevant studies using VAEs in medical data augmentation. In contrast to GANs, the number of studies employing VAEs for data augmentation in medical imaging is relatively low. However, almost half of these studies have used hybrid architectures that combine VAEs with adversarial learning. Interestingly, unlike GANs, there are not many VAE variants in medical imaging. The most commonly used VAE architectures are either conditional, such as vanilla CVAE and ICVAE, or hybrid, such as IntroVAE, PAVAE, and ALVAE. Further discussion on the effectiveness of VAEs for medical image augmentation and the specific architectures used in previous studies will be presented in Section 4.

Table 2.

Overview of VAE-based architectures for medical image augmentation, including hybrid status of architectures (if applicable), indicating the combination of VAEs and GANs used in each study.

| Reference | Architecture | Hybrid Status | Dataset | Modality | 3D | Eval. Metrics |
|---|---|---|---|---|---|---|
| Classification | | | | | | |
| [81] | ICVAE | | Private | MR, Ultrasound | | Acc., Sens., Spec., Dice, Hausdorff, … |
| [51] | CVAE | | OpenfMRI, HCP, NeuroSpin, IBC | MR | ✓ | Acc., Prec., F1, Recall |
| [82] | GA-VAE | | ADNI, AIBL | MR | ✓ | Acc., Spec., Sens. |
| [85] | MAVENs | Hybrid (V + G) | APCXR | X-ray | | FID, F1 |
| [61] | IntroVAE | Hybrid (V + G) | Private | MR | | - |
| [86] | DR-VAE | | HCP | MR | | - |
| [64] | VAE-GAN | Hybrid (V + G) | Private | MR | | Acc., Sens., Spec. |
| [87] | VAE | | Private | MR | | Acc. |
| [88] | RH-VAE | | OASIS | MR | ✓ | Acc. |
| Segmentation | | | | | | |
| [89] | VAE-GAN | Hybrid (V + G) | Private | Ultrasound | | MMD, 1-NN, MS-SSIM |
| [90] | AL-VAE | Hybrid (V + G) | Private | OCT ¹ | | MMD, MS, WD |
| [83] | PA-VAE | Hybrid (V + G) | Private | MR | ✓ | PSNR, SSIM, Dice, NMSE, Jacc., … |
| Cross-modal translation | | | | | | |
| [78] | CAE-ACGAN | Hybrid (V + G) | Private | CT → MR | ✓ | PSNR, SSIM, MAE |
| [91] | 3D-UDA | | Private | FLAIR ↔ T1 ↔ T2 | ✓ | SSIM, PSNR, Dice |
| Other | | | | | | |
| [92] | CVAE | | ACDC, Private | MR | ✓ | - |
| [92] | CVAE | | Private | MR | ✓ | Dice, Hausdorff |
| [93] | Slice-to-3D-VAE | | HCP | MR | ✓ | MMD, MS-SSIM |
| [94] | GS-VDAE | | MLSP | MR | | Acc. |
| [80] | VAE-CGAN | Hybrid (V + G) | ACDC | MR | ✓ | - |
| [95] | MM-VAE | | UK Biobank | MR | ✓ | MMD |
| [96] | DM-VAE | | Private | Otoscopy | | - |

1 OCT stands for “esophageal optical coherence tomography”. V = variational autoencoders, G = generative adversarial networks.

3.3. Diffusion Models

In their study, Pinaya et al. [97] introduce a new approach for generating high-resolution 3D MR images using a latent diffusion model (LDM) [98]. LDMs are a type of generative model that combine autoencoders and diffusion models to synthesize new data. The autoencoder component of the LDM compresses the input data into a lower-dimensional latent representation, while the diffusion model component generates new data samples based on this latent representation. The LDM in this work was trained on data from the UK Biobank dataset and conditioned on clinical variables such as age and sex. The authors compare the performance of their LDM to VAE-GAN [32] and LSGAN [99], using the Fréchet inception distance [100] as the evaluation metric. The results show that the LDM outperforms the other models, with an FID of 0.0076 compared to 0.1567 for VAE-GAN and 0.0231 for LSGAN (where a lower FID score indicates a better performance). Even when conditioned on specific variables, the synthetic MRIs generated by this model demonstrate its ability to produce diverse and realistic brain MRI samples based on the ventricular volume and brain volume. As a valuable contribution to the scientific community, the authors also created a dataset of 100,000 synthetic MRIs that was made openly available for further research.
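As a rough illustration of the diffusion component described above, the sketch below implements the closed-form forward (noising) step of a DDPM-style process, x_t = sqrt(ᾱ_t) x_0 + sqrt(1 − ᾱ_t) ε. It is not the implementation of Pinaya et al. [97]: the linear noise schedule, the number of steps, the function names, and the toy four-element vector standing in for an autoencoder latent code are all assumptions made for illustration. In an LDM this step would be applied to the latent code, whereas a plain DDPM would apply it to the image itself.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_1..beta_T (an assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def forward_diffuse(x0, t, alpha_bars, rng):
    """Draw x_t ~ q(x_t | x_0) in closed form for a flat list of values."""
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    return [signal_scale * v + noise_scale * rng.gauss(0.0, 1.0) for v in x0]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)

# Hypothetical stand-in for an image or an autoencoder latent code.
latent = [0.5, -1.0, 0.25, 2.0]
slightly_noisy = forward_diffuse(latent, 10, alpha_bars, rng)
almost_pure_noise = forward_diffuse(latent, 999, alpha_bars, rng)
```

Because ᾱ_t shrinks toward zero as t grows, early steps keep most of the signal while the last steps are dominated by noise; the generative model is trained to reverse this process.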

Fernandez et al. [101] introduce a generative model, named brainSPADE, for synthesizing labeled brain MRI images that can be used for training segmentation models. The model combines a diffusion model with a VAE-GAN, with the GAN component particularly utilizing SPADE normalization to incorporate the segmentation mask. The model consists of two components: a segmentation map generator and an image generator. The segmentation map generator is a VAE that takes as input a segmentation map, then encodes and builds a latent space from it. To focus on semantic information and disregard insignificant details, the latent code is then diffused and denoised using LDMs. This creates an efficient latent space that emphasizes meaningful information while filtering out noise and other unimportant details. A VAE decoder then generates an artificial segmentation map from this latent space. The image generator is a SPADE model that builds a style latent space from an arbitrary style and combines it with the artificial segmentation map to decode the final output image. The performance of the brainSPADE model is evaluated on a segmentation task using nnU-Net [102], and the results show that the model performs comparably when trained on synthetic data compared to when it is trained on real data, and that using a combination of both significantly improves the model’s performance.

Lyu and Wang [103] investigated the use of diffusion models for image translation in medical imaging, specifically the conversion of MRI to CT scans. The authors used two diffusion-based approaches: a conditional DDPM and a conditional score-based model that relies on stochastic differential equations [104]. Both methods condition the reverse process on T2-weighted MRI images. The authors ran experiments on the Gold Atlas male pelvis dataset [105] using three novel sampling methods and compared the diffusion models against a GAN-based baseline (conditional WGAN) and a CNN-based baseline (U-Net). The results indicated that the diffusion models outperformed both baselines in terms of the structural similarity index (SSIM) and peak signal-to-noise ratio (PSNR).

We present a summary of the relevant studies utilizing diffusion models for medical image augmentation in Table 3. This table includes details about the dataset, imaging modality, and evaluation metrics used in each study, as well as the specific diffusion model employed. Upon examining this table, we notice that all the studies included are relatively recent, with the earliest study dating back to 2022. This suggests that diffusion models have gained increasing attention in the field of medical image augmentation and synthesis in recent years. Additionally, we see that in 2022, diffusion models received more attention for these tasks compared to GANs and VAEs, highlighting their growing popularity and potential for use in various scenarios.

Table 3.

Overview of the diffusion-model-based architectures for medical image augmentation that have been published to date (to our knowledge, no such studies were released before 2022). The table includes the reference, architecture name, and hybrid status (if applicable), indicating the combination of VAEs, GANs, and DMs used in each study. The table provides a useful summary of the current state of the art in this area and can help guide researchers in selecting appropriate approaches for their specific needs.

| Reference | Architecture | Hybrid Status | Dataset | Modality | 3D | Eval. Metrics |
|---|---|---|---|---|---|---|
| Classification | | | | | | |
| [97] | CLDM | | UK Biobank | MR | ✓ | FID, MS-SSIM |
| [106] | DDPM | | ICTS | MR | ✓ | MS-SSIM |
| [107] | LDM | | CXR8 | X-ray | | AUC |
| [108] | MF-DPM | | TCGA | Dermoscopy | | Recall |
| [109] | RoentGen | Hybrid (D + V) | MIMIC-CXR | X-ray | | Accuracy |
| [110] | IITM-Diffusion | | BraTS2020 | MR | | - |
| [111] | DALL-E2 | | Fitzpatrick | Dermoscopy | | Accuracy |
| [112] | CDDPM | | ADNI | MR | ✓ | MMD, MS-SSIM, FID |
| [113] | DALL-E2 | | Private | X-ray | | - |
| [114] | DDPM | | OPMR | MR | ✓ | Acc., Dice |
| [115] | LDM | | MaCheX | X-ray | | MSE, PSNR, SSIM |
| Segmentation | | | | | | |
| [116] | DDPM | | ADNI, MRNet, LIDC-IDRI | MR, CT | | Dice |
| [101] | brainSPADE | Hybrid (V + G + D) | SABRE, BraTS2015, OASIS, ABIDE | MR | | Dice, Accuracy, Precision, Recall |
| [110] | IITM-Diffusion | | BraTS2020 | MR | | - |
| Cross-modal translation | | | | | | |
| [117] | SynDiff | Hybrid (D + G) | IXI, BraTS2015, MRI-CT-PTGA | CT → MR | | PSNR, SSIM |
| [118] | UMM-CSGM | | BraTS2019 | FLAIR ↔ T1 ↔ T1c ↔ T2 | | PSNR, SSIM, MAE |
| [103] | CDDPM | | MRI-CT-PTGA | CT ↔ MR | | PSNR, SSIM |
| Other | | | | | | |
| [119] | DDM | | ACDC | MR | ✓ | PSNR, NMSE, Dice |

Note: V = variational autoencoders, G = generative adversarial networks, D = diffusion models.

4. Key Findings and Implications

In this review, we focused on deep generative models applied to medical data augmentation, specifically VAEs, GANs, and diffusion models. These approaches each have their own strengths and limitations, as described by the generative learning trilemma [21], which states that it is generally difficult to achieve high-quality sampling, fast sampling, and mode coverage simultaneously. As illustrated in Figure 1a, the number of publications on data augmentation using VAEs increased by approximately 81% from 2017 to 2022, while the number using GANs has remained relatively stagnant. This trend may be because most research directions using GANs have already been explored, making it difficult to go beyond current methods with these architectures. However, we have also seen an increase in the use of more complex architectures combining multiple generative models [64,78], which have shown promising results in terms of both quality and mode coverage. On the other hand, the number of studies using diffusion models has increased drastically since 2022, and these models have shown particular potential for synthesizing high-quality images with good mode coverage [120].

Basic data augmentation operators such as Gaussian noise addition, cropping, and padding are commonly used to augment data and generate new images for training [8]. However, the complex structures of medical images, which encompass anatomical variation and irregular tumor shapes, may render these basic operations unsuitable: they can produce irrelevant images that disrupt the logical image structure [22], and they can introduce deformations and aberrant data that adversely affect model performance. One basic operator that is poorly suited to medical images is flipping, which can cause anatomical inconsistencies [121]. To overcome this issue, deformable augmentation techniques such as random displacement fields and spline interpolation have been introduced to augment the data more realistically. These techniques have proved useful [22]; however, they are strongly dependent on the data and limited in some cases. Recent advances in deep learning have led to generative models that can be trained to generate realistic images and simulate the underlying data distribution. These synthesized images are more faithful than those produced by traditional data augmentation techniques. They better preserve the coherence of the general structure of medical images and offer greater variability, providing a more effective way to generate realistic and diverse data.
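To make the basic operators discussed above concrete, the following minimal sketch applies flipping, Gaussian noise addition, center cropping, and zero padding to a toy 4 × 4 "image" stored as nested lists. The function names, the toy image, and the single bright pixel standing in for a lateralized finding are hypothetical and only meant to show why a flip can be anatomically misleading; in practice these operations would typically come from an image-processing or deep learning library.

```python
import random


def horizontal_flip(image):
    """Mirror each row; on a medical image this swaps left and right."""
    return [row[::-1] for row in image]


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma to every pixel."""
    return [[pixel + rng.gauss(0.0, sigma) for pixel in row] for row in image]


def center_crop(image, out_h, out_w):
    """Keep the central out_h x out_w window of the image."""
    top = (len(image) - out_h) // 2
    left = (len(image[0]) - out_w) // 2
    return [row[left:left + out_w] for row in image[top:top + out_h]]


def zero_pad(image, pad):
    """Surround the image with a border of `pad` zero-valued pixels."""
    width = len(image[0]) + 2 * pad
    border = [[0.0] * width for _ in range(pad)]
    body = [[0.0] * pad + list(row) + [0.0] * pad for row in image]
    return border + body + [[0.0] * width for _ in range(pad)]


# Toy image: one bright pixel on the left edge (hypothetical lateralized finding).
toy = [
    [0.0, 0.0, 0.0, 0.0],
    [9.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]

rng = random.Random(0)
flipped = horizontal_flip(toy)            # the finding now sits on the right edge
noisy = add_gaussian_noise(toy, 0.1, rng)
cropped = center_crop(toy, 2, 2)          # the finding is cropped away entirely
padded = zero_pad(toy, 1)
```

In this toy case the flip moves the finding to the opposite side and the crop removes it altogether, which illustrates how label-preserving assumptions behind basic augmentation can fail on medical images.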

The use of GANs in medical imaging, as seen in Table 1, has been widespread and applied to a variety of modalities and datasets, demonstrating their versatility and potential for various applications within the field. For classification, DCGAN and WGAN have been the most commonly used architectures and are considered safe bets in this domain. For example, Zhuang et al. [51] demonstrated a 3% accuracy improvement when augmenting fMRI data with an improved WGAN. These architectures, with their capacity for high-quality generation and good mode coverage, offer significant potential for generating synthetic images for medical image classification. For segmentation and translation, the architectures that have shown the most promise include Pix2Pix, CycleGAN, and SPADE, all of which have proven their potential for conditional generation and cross-modal translation. Platscher et al. [44] conducted a comparative study of these three architectures, demonstrating their capacity to generate high-quality images suitable for medical image segmentation and translation tasks (improvement of 9.1% in Dice score). These architectures can significantly reduce the need for manual annotation of medical images and thus the time and cost required for data annotation.

On the other hand, VAEs have been utilized in fewer studies for medical image augmentation, as shown in Table 2. They have been employed in other tasks such as reconstruction, as demonstrated by Biffi et al. [92] and Volokitin et al. [93], who used CVAE for 3D volume reconstruction, and interpretability of features, as exemplified by Hyang et al. [94], who identified biomarkers using VAEs. Furthermore, VAEs are often used in hybrid architectures with adversarial learning techniques. The most promising architectures include PAVAE [83] and IntroVAE [122], alongside conditional VAEs, for various purposes including classification, segmentation, and translation tasks. However, while VAEs have shown potential in these areas, there is still room for improvement. One study that particularly shows promising results is that of Chadebec and Allassonnière [88], who propose to model the latent space of a VAE as a Riemannian manifold, allowing high-quality image generation comparable to GANs. Chadebec and Allassonnière [88] demonstrated an improvement of 8% in accuracy using synthetic images generated with their proposed VAE model. Nevertheless, this architecture requires a high computational cost and time, which is a significant drawback in practical applications.

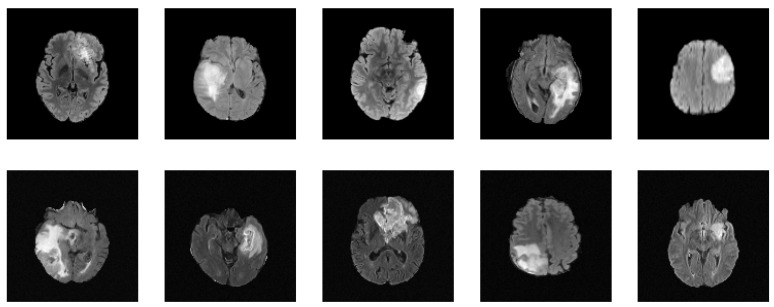

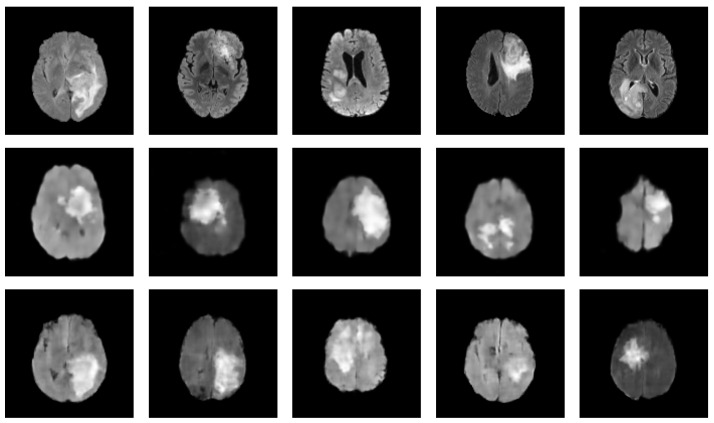

Table 3 presents a summary of the relevant studies utilizing diffusion models for medical image augmentation. These studies, all of which are relatively recent, with the earliest dating back to 2022, suggest that diffusion models have gained increasing attention in medical image augmentation and synthesis in recent years. Furthermore, in 2022, diffusion models were the most commonly used generative models for medical image augmentation compared to GANs and VAEs, highlighting their growing popularity and potential for use in various scenarios. Of the diffusion models studied, DDPM and LDM are the most prevalent, alongside conditional variants such as CDDPM [103] and CLDM [97]. Notably, LDMs differ from DDPMs in that they construct a low-dimensional latent representation and diffuse it, which helps model long-range dependencies within the data, whereas DDPMs apply the diffusion process directly to the input images. This can be especially useful for medical image augmentation tasks that require capturing complex patterns and structures. For instance, Saeed et al. [114] demonstrated the capacity of LDM conditioned on text for a task of lesion identification, achieving an accuracy improvement of 5.8%. These findings suggest that diffusion models have promising potential for future medical image augmentation and synthesis research. To further exemplify the potential of diffusion models in generating realistic medical images, we present in Figure 4 a set of MRI images synthesized using a DDPM. These generated images exhibit high visual fidelity and are almost indistinguishable from the real images. One of the reasons for this high quality is the DDPM’s ability to model the diffusion process of the image density function. By doing so, the DDPM can generate images with increased sharpness and fine details, as seen in the synthesized MRI images.

Figure 4.

Synthesized MRIs using a denoising diffusion probabilistic model (DDPM) [20] trained on the BraTS2020 dataset. The first row shows a sample of original images, while the second row shows a sample of synthesized images generated using the DDPM.

These studies have covered a range of modalities, including MRI, CT, and ultrasound, as well as dermoscopy and otoscopy. Classification is the most common downstream task targeted in these studies, but multiple state-of-the-art solutions have also been proposed for more complex tasks such as generating missing modalities (e.g., from CT to MRI) and multi-contrast MRI images. To provide ground-truth masks for tasks such as segmentation, most studies have explored conditional synthesis. This allows for greater control over the synthesized images and can help to stabilize training [25], as the model is given explicit guidance on the desired output. For our discussion on medical image augmentation, we have also compiled two summary tables to provide a comprehensive overview of the datasets and metrics used in the reviewed studies. Table 4 presents a summary of the datasets used in the reviewed studies. This table includes the title of the dataset, a reference, and a link to the public repository if available, as well as the studied modality and anatomy. From this table, we see that MRI is the most commonly used modality, followed by CT. In terms of anatomy, brain studies dominate, with lung studies coming in second. It is worth noting that the BraTS dataset is widely used across multiple studies, highlighting its importance in the field. Additionally, we notice the presence of private datasets in this table, which is not surprising given that many medical studies are associated with specific medical centers and their data may not be publicly available.

When we consider the state of the art of medical imaging studies (see Figure 1b), we notice that the PET and ultrasound modalities are less represented than the others. One reason for the scarcity of PET studies is the limited availability of nuclear medicine physicians compared to radiologists. These physicians specialize in nuclear medicine, and PET is one such imaging modality that uses radioactive tracers to produce 3D images of the body. Because there are few nuclear medicine physicians, fewer medical exams use PET, leading to less publicly available data for research purposes [123]. Ultrasound, on the other hand, is an operator-dependent modality and requires a certain level of field knowledge. Additionally, ultrasound is not as effective as other modalities such as CT and MRI in detecting certain pathologies, which may also contribute to its lower representation in the state of the art. Despite these limitations, both PET and ultrasound remain important imaging modalities in clinical practice, and future research should aim to explore their full potential in the field of medical imaging.

Table 4.

Summary of the datasets utilized in various publications of deep generative models, organized by modality and body part. For each dataset, the corresponding availability is indicated as public, private, or under certain conditions (UC). Additionally, if a public link for the dataset is available, it is provided.

| Abbreviation | Reference | Availability | Dataset | Modality | Anatomy |
|---|---|---|---|---|---|
| ADNI | | UC | Alzheimer’s Disease Neuroimaging Initiative | MR, PET | Brain |
| BraTS2015 | | Public | Brain tumor segmentation challenge | MR | Brain |
| BraTS2016 | | Public | Brain tumor segmentation challenge | MR | Brain |
| BraTS2017 | | Public | Brain tumor segmentation challenge | MR | Brain |
| BraTS2019 | | Public | Brain tumor segmentation challenge | MR | Brain |
| BraTS2020 | | Public | Brain tumor segmentation challenge | MR | Brain |
| IEEE CCX | | Public | IEEE Covid Chest X-ray dataset | X-ray | Lung |
| UK Biobank | | UC | UK Biobank | MR | Brain, Heart |
| NIHPCT | | Public | National Institutes of Health Pancreas-CT dataset | CT | Pancreas |
| DataDecathlon | | Public | Medical Segmentation Decathlon dataset | CT | Liver, Spleen |
| MIDAS | [124] | Public | Michigan institute for data science | MR | Brain |
| IXI | | Public | Information eXtraction from Images Dataset | MR | Brain |
| DRIVE | [125] | Public | Digital Retinal Images for Vessel Extraction | Fundus photography | Retinal fundus |
| ACDC | [126] | Public | Automated Cardiac Diagnosis Challenge | MR | Heart |
| MRI-CT-PTGA | [105] | Public | MRI-CT Part of the Gold Atlas project | CT, MR | Pelvis |
| ICTS | [50] | Public | National Taiwan University Hospital’s Intracranial Tumor Segmentation dataset | MR | Brain |
| CXR8 | [127] | Public | ChestX-ray8 | X-ray | Lung |
| C19CT | | Public | COVID-19 CT segmentation dataset | CT | Lung |
| TCGA | | Private | The Cancer Genome Atlas Program | Microscopy | - |
| UKDHP | [128] | UC | UK Digital Heart Project | MR | Heart |
| SCR | [129] | Public | SCR database: Segmentation in Chest Radiographs | X-ray | Lung |
| HCP | [130] | Public | Human Connectome Project dataset | MR | Brain |
| AIBL | | UC | Australian Imaging Biomarkers and Lifestyle Study of Ageing | MR, PET | Brain |
| OpenfMRI | | Public | OpenfMRI | MR | Brain |
| IBC | | Public | Individual Brain Charting | MR | Brain |
| NeuroSpin | | Private | Institut des sciences du vivant Frédéric Joliot | MR | Brain |
| OASIS | | Public | The Open Access Series of Imaging Studies | MR | Brain |
| APCXR | [131] | Public | The anterior-posterior Chest X-Ray dataset | X-ray | Lung |
| Fitzpatrick | [132] | Public | Fitzpatrick17k dataset | Dermoscopy | Skin |
| ISIC | | Public | The International Skin Imaging Collaboration dataset | Dermoscopy | Skin |
| DDSM | | Public | The Digital Database for Screening Mammography | CT | Breast |
| CBIS-DDSM | | Public | Curated Breast Imaging Subset of DDSM | CT | Breast |
| LIDC-IDRI | | Public | The Lung Image Database Consortium (LIDC) and Image Database Resource Initiative (IDRI) | CT | Lung |
| COVID-CT | [133] | Public | - | CT | Lung |
| SARS-COV2 | [134] | Public | | CT | Lung |
| MIMIC-CXR | [135] | Public | Massachusetts Institute of Technology | X-ray | Lung |
| PPMI | | Public | Parkinson’s Progression Markers Initiative | MR | Brain |
| ADHD | | Public | Attention Deficit Hyperactivity Disorder | MR | Brain |
| MRNet | | Public | MRNet dataset | MR | Knee |
| MLSP | | Public | MLSP 2014 Schizophrenia Classification Challenge | MR | Brain |
| SABRE | [136] | Public | The Southall and Brent Revisited cohort | MR | Brain, Heart |
| ABIDE | | Public | The Autism Brain Imaging Data Exchange | MR | Brain |
| OPMR | [137] | Public | Open-source prostate MR data | MR | Pelvis |
| MaCheX | [115] | Public | Massive Chest X-ray Dataset | X-ray | Lung |

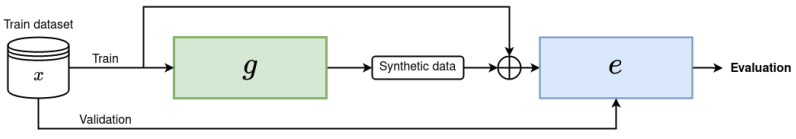

Second, Table 5 provides a summary of the metrics used to evaluate the performance of the various models discussed in the review. It is clear from this table that a variety of metrics are employed, ranging from traditional evaluation measures to more recent ones. Currently, many studies rely on shallow metrics such as the mean absolute error, peak signal-to-noise ratio [138], or structural similarity [139], which do not accurately reflect the visual quality of the image. For instance, while optimizing an adversarial loss can produce a sharper image, it may result in lower numerical scores than optimizing a pixel-wise loss [140]. To address this challenge, researchers have proposed different methods for evaluation. The most well-known approach is to validate the quality of the generated samples through downstream tasks such as segmentation or classification. An overview of the augmentation process using a downstream task is depicted in Figure 5. Another approach is to use deep-learning-based metrics such as the learned perceptual image patch similarity (LPIPS) [141], Fréchet inception distance (FID) [100], or inception score (IS) [142], which are designed to better reflect human judgments of image quality. These metrics take into account not only pixel-wise similarities but also high-level features and semantic information in the images, making them more effective in evaluating the visual quality of the generated images. LPIPS, for instance, measures the perceptual similarity between two images using a pretrained deep neural network. FID and IS have been widely used in various image generation tasks to assess the quality and diversity of the generated samples. However, these metrics may not always align perfectly with human perception, and further studies are needed to assess their effectiveness for different types of medical images.
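For reference, the FID discussed above is commonly written as the Fréchet distance between two Gaussians fitted to Inception features of real and generated images. The symbols below are notation introduced here for illustration rather than taken from the reviewed studies: $\mu_r, \Sigma_r$ denote the mean and covariance of the real-image features, and $\mu_g, \Sigma_g$ those of the generated-image features.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left( \Sigma_r + \Sigma_g - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)
$$

Lower values indicate closer feature distributions, and the score is zero only when both the means and the covariances coincide.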

Table 5.

Summary of quantitative measures used in the reviewed publications.

| Abbrv. | Reference | Metric Name | Description |
|---|---|---|---|
| Dice | [143] | Sørensen–Dice coefficient | A measure of the similarity between two sets of data, calculated as twice the size of the intersection of the two sets divided by the sum of the sizes of the two sets |
| Hausdorff | [144] | Hausdorff distance | A measure of how far apart two sets of points are in a metric space, given by the largest distance from a point in one set to the nearest point in the other set |
| FID | [100] | Fréchet inception distance | A measure of the distance between the distributions of features extracted from real and generated images, based on the activation patterns of a pretrained Inception model |
| IS | [142] | Inception score | A measure of the quality and diversity of generated images, based on the class probabilities predicted by a pretrained Inception model |
| MMD | [145] | Maximum mean discrepancy | A measure of the difference between two probability distributions, defined as the largest difference between the expectations of a function under the two distributions, taken over the unit ball of a reproducing kernel Hilbert space |
| 1-NN | [146] | 1-nearest neighbor score | A two-sample test based on the leave-one-out accuracy of a 1-nearest neighbor classifier that separates real from generated samples; an accuracy close to 50% indicates that the two sets are hard to distinguish |
| (MS-)SSIM | [139] | (Multi-scale) structural similarity | A measure of the similarity between two images based on their structural information, taking into account luminance, contrast, and structure |
| MS | [147] | Mode score | A measure of the quality of samples generated with two probabilistic generative models based on the difference in maximum mean discrepancies between a reference distribution and simulated distribution |
| WD | [148] | Wasserstein distance | A measure of the distance between two probability distributions, defined as the minimum amount of work required to transform one distribution into the other |
| PSNR | [138] | Peak signal-to-noise ratio | A measure of the quality of an image or video, based on the ratio between the maximum possible power of a signal and the power of the noise that distorts the signal |
| (N)MSE | - | (Normalized) mean squared error | A measure of the average squared difference between the predicted and actual values |
| Jacc. | [143] | Jaccard index | A measure of the overlap between two sets of data, calculated as the ratio of the size of the intersection to the size of the union |
| MAE | - | Mean absolute error | A measure of the average magnitude of the errors between the predicted and actual values |
| AUC | [149] | Area under the curve | A measure of the performance of a binary classifier, calculated as the area under the receiver operating characteristic curve |
| LPIPS | [141] | Learned perceptual image patch similarity | An evaluation metric that measures the distance between two images in a perceptual space based on the activations of a deep CNN |
| KLD | [150] | Kullback–Leibler divergence | An asymmetric measure of how one probability distribution differs from a reference distribution, with a smaller value indicating a greater similarity |
| VIF | [151] | Visual information fidelity | A measure that quantifies the Shannon information that is shared between the reference and the distorted image |
| UQI | [152] | Universal quality index | A measure of image quality that models distortion as a combination of loss of correlation, luminance distortion, and contrast distortion between the reference and the test image |
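
As an illustration of the two overlap metrics in Table 5 that are most often reported for segmentation as a downstream task, the sketch below computes the Dice coefficient and the Jaccard index for flattened binary masks. The function names and the convention of returning 1.0 for two empty masks are assumptions made for this example.

```python
def dice(pred, target):
    """Dice coefficient of two flattened binary masks: 2|A ∩ B| / (|A| + |B|)."""
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    size_sum = sum(1 for p in pred if p) + sum(1 for t in target if t)
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if size_sum == 0 else 2.0 * intersection / size_sum


def jaccard(pred, target):
    """Jaccard index of two flattened binary masks: |A ∩ B| / |A ∪ B|."""
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    union = sum(1 for p, t in zip(pred, target) if p or t)
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if union == 0 else intersection / union
```

The two scores are monotonically related through J = D / (2 - D), so they rank segmentation results identically and differ only in scale.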

Figure 5.

Illustration of the augmentation pipeline for a generative-model-based data augmentation. The input data, x, are fed into the generative model, g, which synthesizes additional data samples to augment the training set. The downstream architecture, e, which may take the form of a convolutional neural network or U-Net, is then trained on a combination of the synthesized data and real data from the training set. The training set is split into training and validation sets, where the validation set contains only real data for evaluation purposes. After training, the model can be evaluated using various test sets.
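
The data handling described in this caption can be sketched as follows. This is a minimal illustration under stated assumptions: `build_splits`, `val_fraction`, and `seed` are hypothetical names, and the synthetic samples are assumed to have already been produced by the generative model g.

```python
import random


def build_splits(real_samples, synthetic_samples, val_fraction=0.2, seed=0):
    """Return (training, validation) where validation holds real data only."""
    rng = random.Random(seed)
    real = list(real_samples)
    rng.shuffle(real)

    # Hold out a share of the real data before any synthetic data is added.
    n_val = max(1, int(len(real) * val_fraction))
    validation = real[:n_val]

    # Synthetic samples are added to the training portion only.
    training = real[n_val:] + list(synthetic_samples)
    rng.shuffle(training)
    return training, validation
```

Keeping the validation set free of synthetic samples means that model selection for the downstream architecture e is driven by its performance on real data rather than on generated data.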

Despite the advances made by generative models in medical data augmentation, several challenges remain. A common issue in GANs, known as mode collapse, occurs when the generator produces only a limited range of outputs rather than the full range of possibilities. Techniques such as minibatch discrimination and the incorporation of auxiliary tasks [142] have been suggested as potential solutions, but further research is needed to address this issue effectively. Another approach for stabilizing the training of GANs is to use WGAN [24], which improves upon the original GAN by using the Wasserstein distance instead of the Jensen–Shannon divergence as the training objective. While these approaches have improved the quality of GAN-generated images and partially addressed mode collapse and training instability, there is still room for improvement. In addition, a balance must be struck between sample quality and generation speed, which affects all generative models. GANs are known for their ability to generate high-quality samples quickly, which has allowed them to be widely used in medical imaging and data augmentation [14,15,153]. Diffusion models have overshadowed GANs in recent years, particularly owing to the success of text-to-image generation architectures such as DALL-E [154], Imagen [155], and Stable Diffusion [98]. These diffusion models tend to produce more realistic images than GANs. However, in our view, GANs have only been set aside and not entirely disregarded. With the recent release of GigaGAN [156] and StyleGAN-T [157], GANs have made a resurgence by producing results comparable to or even better than those of diffusion models. This renewed interest in GANs demonstrates the continued relevance of this approach to image generation and indicates that GANs may still have much to offer in advancing the field.
Future research could explore hybrid models that combine the strengths of both GANs and diffusion models to create even more realistic and high-quality images.
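
To give some intuition for the Wasserstein distance that WGAN substitutes for the Jensen–Shannon divergence, the sketch below computes the empirical Wasserstein-1 distance between two one-dimensional samples of equal size. It is not the WGAN training procedure, which estimates the distance with a critic network; the function name is an assumption made for this example.

```python
def wasserstein_1d(samples_a, samples_b):
    """Empirical Wasserstein-1 distance between two equally sized 1-D samples.

    In one dimension the optimal transport plan matches sorted values,
    so the distance is the mean absolute difference of the order statistics.
    """
    if len(samples_a) != len(samples_b):
        raise ValueError("this sketch assumes samples of equal size")
    a, b = sorted(samples_a), sorted(samples_b)
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)
```

For two samples with non-overlapping supports, this value keeps growing as the samples move further apart, whereas the Jensen–Shannon divergence saturates at log 2. This behavior is the usual argument for why the Wasserstein distance provides a more informative training signal.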

VAEs have not gained as much attention in the medical imaging field because of their tendency to produce blurry and hazy images. However, some studies have used conditional VAEs or hybrid architectures to address this issue and improve the quality of the generated samples. Hybrid architectures such as VAE-GANs [32] have demonstrated the potential to partially address the issues of both VAEs and GANs, allowing better-quality generation and good mode coverage. In fact, many VAEs used in medical imaging are hybrid architectures, as they offer a good balance between the strengths and weaknesses of both VAEs and GANs [85,101]. Interestingly, recent research has even combined all three generative models into a single pipeline [101]. That study reported comparable results on a segmentation task when training on a fully synthetic dataset instead of the real dataset. These promising results suggest that hybrid architectures could open up new possibilities. However, these models can be complex and challenging to train, and more research is needed to fully realize their potential. It is important to note that VAEs have an advantage over GANs in operating better with smaller datasets owing to the presence of an encoder [158], which can extract relevant features from the input images and significantly reduce the search space required for generating new images through the process of reconstruction. This feature also makes VAEs a form of dimensionality reduction, and the representation obtained by the encoder can provide a better starting point for the decoder to approximate the real data distribution more accurately. In contrast, GANs have a wider search space, which may lead to challenges in learning features effectively.
As an illustration, Figure 6 compares MRIs synthesized using a vanilla VAE [18] and the Hamiltonian VAE [159]. In addition to operating better with smaller datasets, VAEs can offer a disentangled, interpretable, and editable latent space. This means that the encoded representation of an input image can be separated into independent and interpretable features, allowing for better understanding and manipulation of the underlying data. Another option is to use improved VAE variants such as VQ-VAE2 [36], IAF-VAE [34], or Hamiltonian VAE [159]. These variants have received limited attention in the medical imaging field, but they have shown promise in generating high-quality images in other domains. It may be worth exploring their potential for medical image augmentation, as they offer the possibility of improving the quality of the generated images without sacrificing other important characteristics such as fast sampling and good mode coverage.

Figure 6.

Comparison between synthesized MRIs generated by a VAE and a Hamiltonian VAE [159]. Both models were trained on a limited training set of 100 images from the BraTS2020 Challenge dataset. The first row shows original images, while the second and third rows show synthesized images generated by the VAE and the Hamiltonian VAE, respectively. While the images generated by both models appear slightly fuzzy, the Hamiltonian VAE generates more realistic images. This comparison highlights the robustness of the VAE and Hamiltonian VAE for generating new images from a small dataset [158].

Diffusion models have more recently been applied to medical imaging [120], and some studies have demonstrated high-quality results [106]. These models are capable of synthesizing highly realistic images and have good mode coverage while keeping the training stable, but they suffer from a long sampling time because of the high number of steps in the diffusion process. This limitation may be less significant in medical imaging applications, which are not typically used in real time, but researchers are likely to continue working on optimizing diffusion models for faster sampling. It may also be possible to trade off some sample quality for faster sampling in diffusion models [21], although realism remains a key requirement for data augmentation in medical imaging. For example, Song et al. [40] propose the denoising diffusion implicit model (DDIM), a training-free variant that speeds up the sampling process by replacing the Markovian process of the DDPM with a non-Markovian one. This results in a faster sampling procedure that does not significantly compromise the quality of the samples. The fast diffusion probabilistic model (FastDPM) [39] introduces the concept of a continuous diffusion process, which allows a shorter sampling schedule and thus reduces the sampling time. These efforts to improve the efficiency of diffusion models demonstrate the ongoing interest in balancing sample quality and generation speed in medical imaging applications.
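
The speed-up obtained with DDIM can be illustrated with a minimal sketch of its deterministic update (the case with no added noise), applied over a shortened timestep schedule. All names are hypothetical, the linear noise schedule with endpoints 1e-4 and 0.02 is an assumed common default, and `eps_model` stands in for a trained noise-prediction network; images are represented as flat lists of floats to keep the example self-contained.

```python
import math


def linear_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for an assumed linear beta schedule."""
    alpha_bars, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def sample_schedule(num_train_steps, num_sample_steps):
    """Evenly strided, decreasing subsequence of the training timesteps."""
    if not 1 <= num_sample_steps <= num_train_steps:
        raise ValueError("num_sample_steps must be in [1, num_train_steps]")
    stride = num_train_steps // num_sample_steps
    return list(range(num_train_steps - 1, -1, -stride))[:num_sample_steps]


def ddim_step(x_t, eps_pred, alpha_bar_t, alpha_bar_prev):
    """One deterministic DDIM update from noise level alpha_bar_t to alpha_bar_prev."""
    # Estimate the clean image implied by the predicted noise.
    x0_pred = [(x - math.sqrt(1.0 - alpha_bar_t) * e) / math.sqrt(alpha_bar_t)
               for x, e in zip(x_t, eps_pred)]
    # Re-noise the estimate to the (lower) target noise level.
    return [math.sqrt(alpha_bar_prev) * x0 + math.sqrt(1.0 - alpha_bar_prev) * e
            for x0, e in zip(x0_pred, eps_pred)]


def ddim_sample(x_T, eps_model, alpha_bars, schedule):
    """Run the reverse process over the shortened schedule only."""
    x = x_T
    for i, t in enumerate(schedule):
        # The last step targets the noise-free level (alpha_bar = 1).
        alpha_bar_prev = alpha_bars[schedule[i + 1]] if i + 1 < len(schedule) else 1.0
        x = ddim_step(x, eps_model(x, t), alpha_bars[t], alpha_bar_prev)
    return x
```

With `sample_schedule(1000, 50)`, the noise-prediction network is evaluated 50 times instead of 1000, which is where the reduction in sampling time comes from. The sample quality then depends on how well the network's predictions hold up across the larger jumps between noise levels.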

There are several other factors to consider when discussing the use of generative models for medical data augmentation. One important factor is the incorporation of domain-specific techniques and knowledge into the design of these models [160]. By incorporating knowledge of anatomy and physiology, for example, researchers can improve the realism and utility of the generated data. Ethical considerations are another important factor when using synthetic data for medical applications, including the potential for biased or unrealistic generated data and the need for proper validation and testing. To further improve the performance and efficiency of medical data augmentation, researchers are also exploring the use of generative models in combination with other techniques such as transfer learning [23,161] or active learning [162,163]. The interpretability and explainability of these models are also important to consider, particularly in the context of clinical decision making and regulatory requirements. In addition to data augmentation, generative models have the potential to be used for other medical applications such as generating synthetic patient records or synthesizing medical images from non-image data [109].

5. Conclusions