Abstract

Objectives:

Mobile applications (apps) are multiplying in laryngology, with little standardization of content, functionality, or accessibility. The purpose of this study is to evaluate the quality, functionality, health literacy, readability, accessibility and inclusivity of laryngology mobile applications.

Methods:

Of the 3,230 apps identified from the Apple App Store and Google Play store, 28 patient-facing apps met inclusion criteria. Apps were evaluated using validated scales assessing quality and functionality: the Mobile App Rating Scale (MARS) and the IMS Institute for Healthcare Informatics App Functionality Scale. The CDC Clear Communication Index, the Institute of Medicine Strategies for Creating Health Literate Mobile Applications, and the Patient Education Materials Assessment Tool (PEMAT) were used to evaluate the apps' health literacy. Readability was assessed using established readability formulas. Apps were also evaluated for language options, accessibility features, and representation of diverse populations.

Results:

Twenty-six apps (93%) had adequate quality (MARS score >3). The mean PEMAT score was 89% for actionability and 86% for understandability. On average, apps used 26 of 33 health literacy strategies. Twenty-two apps (79%) did not reach the CDC index passing threshold of 90%. Twenty-four app descriptions (86%) were written above an 8th grade reading level. Only 4 apps (14%) showed diverse representation, 3 (11%) had non-English language functions, and 2 (7%) offered subtitles. Inter-rater reliability for MARS was good (CA-ICC=0.715).

Conclusion:

While most apps scored well in quality and functionality, many laryngology apps did not meet standards for health literacy. Most apps were written at a reading level above the national average, lacked accessibility features and did not represent diverse populations.

Keywords: Laryngology, Digital Health, Dysphagia, Dysphonia

Lay Summary

While the quality and functionality of most laryngology apps were found to be acceptable, the vast majority of apps did not meet recommended standards of health literacy or provide accessibility and inclusivity features. This likely reflects the lack of regulation of health apps.

Introduction

Mobile health application (mHealth or health app) development and use have skyrocketed over the past few years, holding the promise of more accessible and affordable care for all in the setting of limited healthcare resources.1 As of 2021, over 350,000 health apps were available for consumer use, with over 10 billion downloads.2 mHealth can contribute greatly to patient care, providing relatively low-cost disease management, education, and communication with providers.3 However, mHealth, like other informatics interventions, can also produce intervention-generated inequalities by disproportionately benefiting more advantaged people.4

Though numerous guidelines exist for health information communication, few are dedicated to digital health technology, and there is currently little guidance on the health literacy and readability of mHealth.5 Yet limited health literacy is a major barrier to shared understanding between health providers and patients in multiple domains, including reading, writing, listening, speaking, and interpreting numerical values.6 Low health literacy leads to detrimental health outcomes, such as delayed care,7 poor medication adherence, less productive communication with providers, and limited self-efficacy in disease management.6,8 In the social sciences literature, low health literacy is viewed as an explanatory factor connecting social disadvantage, health outcomes, and health disparities.9,10 Since the 1990s, health literacy has been used to describe an individual’s ability to find health information, interpret it, and apply it to health-related decisions.10 Recently, however, the US Department of Health and Human Services has proposed redefining the term to place the responsibility on society and organizations to provide accessible and comprehensible information. Readability, in contrast to literacy, is a narrower concept and refers to the reading grade level required to correctly understand and engage with written material.11 The average American patient reads at an 8th grade reading level, and 20% of Americans read at a 5th grade level.12 Despite National Institutes of Health and American Medical Association recommendations13,14 to write health content at a 5th to 7th grade reading level, the majority of mHealth content does not meet these criteria.15-18

Accessible technology is traditionally defined as “technology that can be utilized effectively by people with disabilities, at the time they want to utilize the technology, without any modifications”.19,20 Accessibility issues with regard to technology may also arise from socioeconomic, racial, and cultural circumstances, in the form of literacy, language, and/or infrastructure barriers for people without disabilities. Such digital exclusion affects those in poor or less integrated communities, who have less access to information and communication technologies.21 Inclusivity is usually defined with respect to an institution or organization, as the intentional effort to ensure that diverse people with different identities are able to fully participate in all aspects of the work of an organization, including leadership positions and decision-making processes.22 In digital media, inclusivity may be manifested by graphical representations of a diverse population and by language that is acceptable and engaging for people of different genders and of different racial, ethnic, cultural, and socioeconomic groups. Even when health apps contain evidence-based and comprehensive health information, they remain ineffective if their content is not accessible and inclusive. The medical informatics literature has an increasing number of publications on the importance of making digital material accessible and usable by diverse users.15,16 Inclusivity considerations also include image representation of diverse populations, content availability in languages other than English, and accommodations for people with disabilities, such as hearing or voice impairments.

In Otolaryngology - Head and Neck Surgery (OHNS), there are currently over 1000 mobile applications spanning most subspecialties.38 Despite this large number, there are fewer than 200 clinical studies of these apps, and among those, only 33 focused on their validation.38,39 When reviewing the study quality of the validated apps, there was only 84% adherence to the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) guidelines, suggesting that the published studies had incomplete or inadequate reporting.38 Furthermore, the content of OHNS apps scored inconsistently in quality grading on the Mobile App Rating Scale (MARS), and the majority did not involve otolaryngologists in content creation.38,40-42 To date, only one study in the English-language literature has evaluated the quality of a subset of mobile apps in OHNS. This prior systematic review graded only the 10 most-reviewed apps in each subspecialty, offering a partial quality survey. No prior investigation has examined OHNS mHealth for health literacy, accessibility, and inclusivity. Only GERD management apps have been reviewed for readability by otolaryngologists, and none of those apps specifically targeted OHNS-specific disorders, such as laryngopharyngeal reflux.18

In light of the rapid expansion of mHealth, it is high time for otolaryngologists to study mHealth in their discipline and advocate for improved quality, evidence-base, accessibility, and inclusivity standards for these apps to optimize patient outcomes in an equitable fashion. We hypothesize that the majority of mHealth in otolaryngology does not meet the recommended guidelines for communicating health information. We also suspect that the quality of mobile apps, including their functionality and accessibility features, is limited, and that most apps do not show a diverse representation of the population. This study aims to provide a landscape of mobile apps in laryngology, providing an assessment of quality and functionality, health literacy, accessibility, readability, and inclusivity.

Materials and Methods

Systematic Search and Screening of Available Applications

A systematic review of mHealth apps was conducted in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines.47 AppAGG (a mobile application metadata resource) was used to identify laryngology applications in the US Apple App Store and Google Play store between June 2021 and March 2022. These stores were selected because together they provide comprehensive coverage of both iOS and Android devices. A set of search terms (Appendix 1) was developed based on a previous study38 and on expert input from the senior author, a laryngologist, and two academic speech pathologists, and was used to identify eligible apps related to voice, dysphagia, gastroesophageal reflux disease, airway, and cough care management. We further refined the list of terms in collaboration with our multidisciplinary team, including a laryngologist, a voice speech pathologist, and a dysphagia speech pathologist. Our team held weekly multidisciplinary meetings to review the screening process and the included and excluded apps. Notably, the term “voice” was excluded because it retrieved an extensive number of apps unrelated to laryngology. “Shortness of breath” returned only five apps, all relevant only to pulmonology, so it was excluded from our initial search. No apps were found with the search term “subglottic stenosis”.

After removal of duplicate records, apps underwent two rounds of screening. First, the title, description, and screenshots of the app interface were used to assess relevance. Apps were also excluded if they were games, provider-facing, unavailable in English, or designed for children (under the age of 18). Voice training apps for singers were excluded, reflecting laryngology practice, in which voice coaching for performers is not standard of care. Additionally, Augmentative and Alternative Communication (AAC) apps were not considered clinically relevant within laryngology, as they are geared to individuals with speech disorders, which are distinct from voice disorders. Second, two speech-language pathologists and a laryngologist evaluated each app's content to ensure relevance for clinical care in laryngology. Discrepancies were resolved as a group.

Evaluation Measures

An online survey was generated using Google Forms (2022; Mountain View, CA) and distributed to raters for the evaluation of each app. The survey consisted of 103 items, which included questions from five scales: 1) the Mobile App Rating Scale (MARS),42 2) the Intercontinental Medical Statistics (IMS) Institute for Healthcare Informatics Functionality score,48 3) the Centers for Disease Control and Prevention (CDC) Modified Clear Communication Index,49 4) the Institute of Medicine (IoM) Strategies for Creating Health Literate mHealth Applications,50 and 5) the Patient Education Materials Assessment Tool (PEMAT).51 Additional questions were added to assess representation of diverse populations, availability of non-English languages, and presence of accessibility features, such as subtitles. Inclusivity was assessed with three items, based on prior recommendations in the literature:52 1) Does the app show a representation of diverse populations (race and ethnicity, and gender)? 2) Is the app available in non-English languages? 3) Is the app accessible to patients with disabilities (such as hearing or voice impairment)? Each item was coded as yes=1 or no=0. App characteristics such as App Store star rating and number of reviews were also recorded.

MARS is a validated and widely used tool for assessing the quality of mobile health apps.42 MARS includes Likert-scale questions in the categories of engagement, functionality, aesthetics, and information quality. In addition to MARS, we used the IMS Institute for Healthcare Informatics Functionality Score to assess app functionality.48 This score relies on 7 functions: inform, instruct, record (divided into collect data, share data, evaluate data, and intervene), display, guide, remind/alert, and communicate. One point is assigned to each function and subfunction, for a total potential score of 11 when all functionalities are offered.
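Both quality scores above reduce to simple arithmetic once items are rated. The sketch below is a minimal illustration, not the raters' actual workflow. It assumes MARS items are rated 1 to 5 with `None` for items rated not applicable, takes the overall MARS app quality score as the mean of the four section means, and encodes the IMS scale as seven functions plus four record subfunctions so that the maximum matches the 11 points described above. The criterion strings and function names are hypothetical.

```python
from statistics import mean
from typing import Optional, Sequence


def mars_section_mean(item_ratings: Sequence[Optional[float]]) -> float:
    """Mean of one MARS section; items rated not applicable (None) are skipped."""
    rated = [r for r in item_ratings if r is not None]
    if not rated:
        raise ValueError("section has no applicable items")
    return mean(rated)


def mars_app_quality(section_means: Sequence[float]) -> float:
    """Overall app quality: mean of the engagement, functionality,
    aesthetics, and information section means."""
    if len(section_means) != 4:
        raise ValueError("expected four MARS section means")
    return mean(section_means)


# Hypothetical encoding of the 11 IMS criteria: 7 functions + 4 "record" subfunctions.
IMS_CRITERIA = frozenset({
    "inform", "instruct", "record", "display", "guide", "remind/alert", "communicate",
    "record:collect", "record:share", "record:evaluate", "record:intervene",
})


def ims_functionality_score(features: set) -> int:
    """One point per IMS criterion the app offers (maximum 11)."""
    unknown = features - IMS_CRITERIA
    if unknown:
        raise ValueError(f"unrecognized criteria: {sorted(unknown)}")
    return len(features)


# Section means reported in the Results (engagement, functionality, aesthetics, information).
print(f"{mars_app_quality([3.50, 4.09, 3.82, 3.72]):.4f}")  # prints 3.7825
print(ims_functionality_score({"inform", "record", "record:collect", "display"}))  # prints 4
```

Applied to the four section means reported in the Results, the first call returns 3.7825, consistent with the reported overall mean of 3.78.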

Health literacy was assessed using the Modified Centers for Disease Control and Prevention Clear Communication Index.49 While the original index was designed for printed material, the modified index was intended for short forms and oral communications and was explicitly created to be applicable to digital media, such as social media posts. This evidence-based tool contains 20 items in 7 categories (Main Message and Call to Action, Language, Information Design, State of the Science, Behavioral Recommendations, Numbers, and Risk) and is scored on a scale of 0 to 100, with 90 as the passing score. A health app-specific literacy assessment guide was used to complement the CDC index: the Institute of Medicine (IoM) Strategies for Creating Health Literate mHealth Applications. The IoM Strategies were designed as part of a Roundtable on Health Literacy in 2014 and encompass six overarching strategies adapted from evidence for health literate websites: Learn About Your Users, Write Actionable Content, Display Content Clearly, Organize and Simplify, Engage Users, and Evaluate and Revise Your Site. While these strategies do not have a scoring system, they have previously been used to evaluate the health literacy of apps for diabetes management53 and breast cancer54 with simple yes/no coding (yes=1, no=0). To complete the literacy assessment, we used the Patient Education Materials Assessment Tool (PEMAT), a systematic method developed by the Agency for Healthcare Research and Quality to examine the understandability and actionability of audio-visual materials.51 The PEMAT has 17 items: 13 assessing understandability and 4 assessing actionability.
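Both the CDC index and the PEMAT yield a percentage of items met. The sketch below assumes each item is coded 1 (met/agree) or 0 (not met/disagree), that items judged not applicable are dropped from the denominator (check the respective user manuals before relying on this), and applies the 90% CDC passing threshold stated above. The function names and the ratings in the example are hypothetical.

```python
from typing import Optional, Sequence

CDC_PASSING_SCORE = 90.0  # passing threshold stated for the Clear Communication Index


def percent_score(items: Sequence[Optional[int]]) -> float:
    """Percent of applicable items met.

    1 = met / agree, 0 = not met / disagree, None = not applicable.
    Not-applicable items are dropped from the denominator (assumption).
    """
    applicable = [x for x in items if x is not None]
    if not applicable:
        raise ValueError("no applicable items to score")
    if any(x not in (0, 1) for x in applicable):
        raise ValueError("items must be coded 0, 1, or None")
    return 100.0 * sum(applicable) / len(applicable)


def passes_cdc_index(items: Sequence[Optional[int]]) -> bool:
    """True when the CDC index score reaches the passing threshold."""
    return percent_score(items) >= CDC_PASSING_SCORE


# Hypothetical ratings for one app.
cdc_items = [1] * 17 + [0] * 2 + [None]   # 20 items, one not applicable
pemat_actionability = [1, 1, 0, None]      # 4 items, one not applicable
print(round(percent_score(cdc_items), 1), passes_cdc_index(cdc_items))  # prints 89.5 False
print(round(percent_score(pemat_actionability), 1))                     # prints 66.7
```

In the example, meeting 17 of 19 applicable CDC items gives 89.5%, which narrowly fails the 90% threshold; a single not-applicable item changes the denominator and therefore the result.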

All app store descriptions were downloaded and evaluated using five readability formulas on the website “Readable.io”:55 1) Flesch-Kincaid Grade Level and Flesch Reading Ease,56 2) Gunning Fog Index,57 3) Coleman-Liau Index,58 4) SMOG Index,59 and 5) New Dale-Chall.60 Readability formulas vary in purpose and calculation, focusing on different elements of the text, such as the number of letters or syllables, sentence length, syntactic complexity, and others.61 There is no gold standard, and it is common practice to evaluate written materials using multiple scales.
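The formulas named above can be written directly in terms of text counts. The sketch below uses the standard published coefficients for each index and takes the counts as given, since syllable counting and the Dale-Chall familiar-word list are where tools diverge; Readable.io's exact implementation may differ. The Dale-Chall function is the raw-score formula with the adjustment for more than 5% difficult words, as commonly implemented in software, not the table-based 1995 New Dale-Chall procedure, and Gunning Fog's exclusions (proper nouns, certain suffixes) are simplified by reusing one polysyllable count. `TextCounts` and the sample counts are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class TextCounts:
    """Counts for one passage; how they are obtained (e.g. syllable counting) is out of scope."""
    words: int
    sentences: int
    syllables: int
    letters: int
    polysyllables: int    # words of 3+ syllables (used here for both Gunning Fog and SMOG)
    difficult_words: int  # words not on the Dale-Chall familiar-word list


def flesch_kincaid_grade(c: TextCounts) -> float:
    return 0.39 * c.words / c.sentences + 11.8 * c.syllables / c.words - 15.59


def flesch_reading_ease(c: TextCounts) -> float:
    return 206.835 - 1.015 * c.words / c.sentences - 84.6 * c.syllables / c.words


def gunning_fog(c: TextCounts) -> float:
    return 0.4 * (c.words / c.sentences + 100 * c.polysyllables / c.words)


def coleman_liau(c: TextCounts) -> float:
    letters_per_100_words = 100 * c.letters / c.words
    sentences_per_100_words = 100 * c.sentences / c.words
    return 0.0588 * letters_per_100_words - 0.296 * sentences_per_100_words - 15.8


def smog(c: TextCounts) -> float:
    # Normalizes the polysyllable count to a 30-sentence sample.
    return 1.0430 * math.sqrt(c.polysyllables * 30 / c.sentences) + 3.1291


def dale_chall(c: TextCounts) -> float:
    pct_difficult = 100 * c.difficult_words / c.words
    score = 0.1579 * pct_difficult + 0.0496 * c.words / c.sentences
    if pct_difficult > 5:
        score += 3.6365  # adjustment applied when more than 5% of words are unfamiliar
    return score


sample = TextCounts(words=100, sentences=5, syllables=150, letters=470,
                    polysyllables=10, difficult_words=8)
for name, fn in [("Flesch-Kincaid", flesch_kincaid_grade), ("Reading Ease", flesch_reading_ease),
                 ("Gunning Fog", gunning_fog), ("Coleman-Liau", coleman_liau),
                 ("SMOG", smog), ("Dale-Chall", dale_chall)]:
    print(f"{name}: {fn(sample):.1f}")
```

With 100 words, 5 sentences, and 150 syllables, for example, the Flesch-Kincaid grade is 0.39 × 20 + 11.8 × 1.5 − 15.59 = 9.91, which is why longer sentences and more syllables per word both push the grade level up.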

Data Extraction Procedures and Analysis

In an effort to reach agreement on the use of the app assessment tools, the raters read the CDC Clear Communication Index and PEMAT user manuals. All authors reviewed the first two apps together to reduce bias in the rating process and improve consistency. The remaining apps were reviewed independently by two raters. All ratings were entered into a Google Sheets spreadsheet (2022; Mountain View, CA), where the analysis was also performed. Descriptive statistics and correlation analyses were used to summarize app ratings and to compare each scale rating with app characteristics, such as star rating and number of reviews in the App Store. An intraclass correlation coefficient (CA-ICC) was calculated to establish interrater reliability for all scales. After all apps had been evaluated, raters discussed any discrepancies in the functionality ratings to ensure consistency across app features, and reached consensus ratings for the Institute of Medicine Strategies for Creating Health Literate mHealth Applications and the IMS Institute for Healthcare Informatics Functionality scales.
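The Methods do not state which ICC model "CA-ICC" denotes, so the sketch below computes two common single-rater forms from a two-way ANOVA of a complete apps-by-raters matrix: Shrout and Fleiss ICC(3,1) for consistency and ICC(2,1) for absolute agreement. It also maps coefficients onto Cicchetti's (1994) bands, which appear consistent with the poor/fair/good/excellent labels used in the Results; that mapping is an assumption. Function names and example ratings are hypothetical, and a statistics package would normally be used instead.

```python
from typing import Sequence


def _mean_squares(ratings: Sequence[Sequence[float]]):
    """Two-way ANOVA mean squares for an n-apps x k-raters matrix (no missing cells)."""
    n, k = len(ratings), len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between apps
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between raters
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    return (n, k, ss_rows / (n - 1), ss_cols / (k - 1),
            ss_error / ((n - 1) * (k - 1)))


def icc_consistency(ratings) -> float:
    """ICC(3,1): two-way mixed, single rater, consistency."""
    n, k, msr, msc, mse = _mean_squares(ratings)
    return (msr - mse) / (msr + (k - 1) * mse)


def icc_agreement(ratings) -> float:
    """ICC(2,1): two-way random, single rater, absolute agreement."""
    n, k, msr, msc, mse = _mean_squares(ratings)
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


def cicchetti_label(icc: float) -> str:
    """Cicchetti (1994) interpretation bands."""
    if icc < 0.40:
        return "poor"
    if icc < 0.60:
        return "fair"
    if icc < 0.75:
        return "good"
    return "excellent"


# Hypothetical ratings from two raters for four apps; rater B is always 1 point higher.
scores = [[1, 2], [2, 3], [3, 4], [4, 5]]
print(icc_consistency(scores), round(icc_agreement(scores), 3))  # prints 1.0 0.769
print([cicchetti_label(v) for v in (0.33, 0.434, 0.715, 0.798)])
```

Because rater B is always exactly one point higher, consistency is perfect (1.0) while absolute agreement is lower (about 0.769), so the choice of ICC form matters whenever raters differ systematically.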

Results

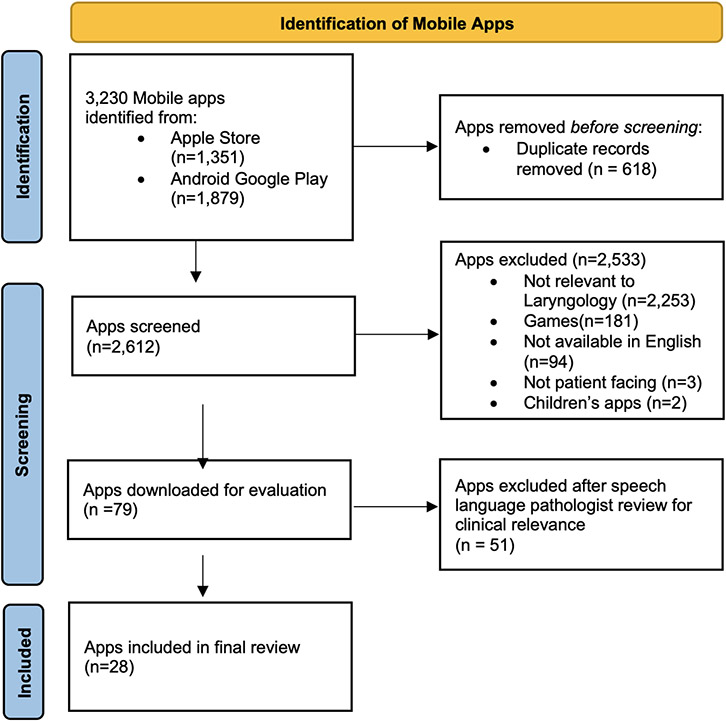

Our search queries identified a total of 3,230 potentially relevant applications, of which 28 met the inclusion criteria for analysis. The PRISMA flow diagram (Figure 1) shows an overview of the screening process and the categories of exclusion criteria. Table 1 summarizes the final list of included applications, their characteristics, and scale ratings. Twenty-seven (96%) mobile applications were available on the Apple App Store, 20 (71%) were available in both the Apple and Google stores, and one application (4%) was available exclusively in the Google Play store. A total of 24 (86%) apps were available for free, 9 (32%) offered a free trial or subscription, and 4 (14%) had to be purchased, with prices ranging from $2.99 to $4.99. There were five apps in the category of gastroesophageal reflux disease, one in tracheostomy care, two in cough, eleven in voice therapy, and nine in swallow therapy. The average star rating of the laryngology mobile applications in the app stores was 3.628 out of 5. Apps had an average of 99 ratings, with a median of 6 ratings. The most recent app updates ranged from 2016 to early 2022, with nine (32%) of the apps not having been updated in more than two years. Interrater reliability coefficients ranged from poor (PEMAT Understandability=0.33) to excellent (PEMAT Actionability=0.798). Interrater reliability was good for MARS ratings (ICC=0.715) and fair for the CDC Index (ICC=0.434).

Figure 1:

PRISMA Diagram for mobile application selection

Table 1:

Description of Included Apps

| App Name | Brief App Store Description | Cost | App Store Star Rating | Mean MARS | Strategies for Creating Health Literate Apps | IMS Institute for Healthcare Informatics Functionality | PEMAT Actionability % | PEMAT Understandability % | CDC Clear Communication Index % | Flesch-Kincaid Reading Level |
|---|---|---|---|---|---|---|---|---|---|---|
| Acid Reflux Diet Helper | Diet and food choice helper tool for acid reflux, GERD, and heartburn symptoms | Free | 4.5 | 3.4 | 21 | 4 | 85 | 66.67 | 65.8 | 11.9 |
| Astound - Voice &Speech Coach | Coaching app with personalized warmups, breathing, and vocal exercises | Free | 4.4 | 4.5 | 29 | 7 | 100 | 75 | 85.3 | 6.9 |
| Breather Coach | Respiratory muscle training companion app | Free | 1.9 | 4.4 | 28 | 7 | 95 | 87.5 | 85.3 | 8.9 |
| Christella VoiceUp | Voice feminization course | Free | 4.3 | 4.0 | 28 | 6 | 100 | 100 | 91.2 | 9.8 |
| Cough Tracker & Reporting | Tool for tracking and graphically representing the frequency and triggers of cough | Free | N/A | 3.8 | 25 | 4 | 100 | 87.5 | 76.5 | 6.5 |
| Dysphagia Training | Exercises aimed at improving functions related to eating and drinking | Free | 4 | 4.3 | 30 | 5 | 86.36 | 100 | 78.9 | 9.6 |
| Emotion Food Company | Educational materials on meal preparation using Easy-Base®, a binding agent for people with dysphagia | Free | N/A | 3.5 | 27 | 2 | 72.73 | 50 | 88.2 | 10.3 |
| Estill Exercises | Voice training and rehabilitation exercises | Free | 3.9 | 4.1 | 28 | 5 | 100 | 100 | 97.1 | 13.5 |
| EvaF | Voice feminization training program | Free | 2.4 | 3.9 | 28 | 4 | 100 | 100 | 97.4 | 10.5 |
| EvaM | Voice masculinization training program | Free | 1.5 | 3.8 | 28 | 4 | 100 | 100 | 97.4 | 12.3 |
| GERD, Heartburn and Acid Reflux Symptoms & Remedies | Educational tool for GERD symptoms, diagnosis, and management | Free | 1 | 3.8 | 25 | 1 | 100 | 100 | 67.6 | 10.5 |
| GERDHelp | Personalized digital health diary for GERD | Free | 4.8 | 4.4 | 30 | 8 | 100 | 100 | 78.9 | 13.1 |
| GERD Tools | Digital diary of reflux symptoms | $4.99 | 1 | 2.7 | 24 | 3 | 77.27 | 62.5 | 64.7 | 6.9 |
| Heartburn: Acid reflux Journal | Symptom and trigger tracker for GERD and reflux | Free | 3.5 | 2.8 | 15 | 4 | 80 | 50 | 50 | 14.8 |
| HNC Virtual Coach | Customized therapy regimen for improving swallowing in patients undergoing head and neck cancer treatment | Free | 5 | 4.8 | 30 | 9 | 100 | 100 | 97.1 | 11.1 |
| Hyfe Cough Tracker | AI-based tool for tracking cough frequency and trends | Free | 4.5 | 3.4 | 19 | 7 | 95 | 87.5 | 41.2 | 6.7 |
| Loud and Clear Voice Fitness | Voice and communication program for people with Parkinson’s disease | Free | 3.7 | 3.9 | 29 | 5 | 100 | 100 | 70.0 | 12.2 |
| LSVT Loud | Education and voice training exercises for patients with Parkinson’s disease and other neurological conditions, including stroke, multiple sclerosis, and cerebral palsy | Free | 2 | 3.2 | 27 | 1 | 72.73 | 83.33 | 64.7 | 12.9 |
| Mobili-T | Swallowing exercise tool using the Mobili-T device (under-the-chin sensor) | Free | N/A | 4.1 | 26 | 8 | 80 | 100 | 64.7 | 11.7 |
| Pryde Voice &Speech Therapy | Voice modification training program | Free | 5 | 3.9 | 28 | 2 | 90.91 | 100 | 80 | 9.6 |
| Reflux Tracker | Diary for recording symptoms, medications, and eating habits related to reflux and heartburn | Free | 1.7 | 3.5 | 27 | 6 | 95.45 | 100 | 76.5 | 9.8 |
| SpeechVive | Simulates the effect of the SpeechVive, a wearable prosthetic device that improves speech loudness for people with Parkinson’s disease | Free | 5 | 3.2 | 17 | 3 | 95 | 100 | 57.1 | 14.9 |
| Swallow Prompt: Saliva Control | Notification tool that prompts swallowing in people with neurological conditions causing salivary pooling | $2.99 | 5 | 4.1 | 20 | 3 | 66.67 | 66.67 | 41.2 | 10.4 |
| Swallow RehApp | Customizable plan of dysphagia management through education and exercises | $3.99 | 3 | 3.1 | 27 | 3 | 83.33 | 100 | 73.5 | 13.5 |
| Tongueometer | Tongue strength assessment and exercises with the use of an external Tongueometer device | Free | 5 | 3.5 | 21 | 7 | 45.45 | 33.33 | 52.9 | 12.3 |
| TRACHTOOLS | Communication and tracheostomy information tool | Free | 4.7 | 3.8 | 25 | 1 | 95.83 | 100 | 77.3 | 15.3 |
| Vocular | Voice analysis, including a pitch tracker and celebrity voice matching | $3.99 | 4.5 | 3.7 | 25 | 7 | 68.18 | 50 | 75 | 9.7 |
| Voice Coach | Voice exercises aimed at professional actors and public speakers | Free | 3.4 | 4.1 | 29 | 3 | 95.45 | 100 | 97.1 | 9.8 |

If an application was available in both the Apple and Google stores, it was evaluated and recorded (for characteristics such as price, ratings, and name) from the Apple store. Acid Reflux Diet Helper was the only application not available in the Apple store, so it was assessed from the Google Play store. Maximum scores differ by scale: MARS mean score, out of 5; Strategies for Creating Health Literate Apps, out of 33; IMS Institute for Healthcare Informatics Functionality, out of 11; PEMAT Actionability, PEMAT Understandability, and CDC Clear Communication Index, out of 100%. Flesch-Kincaid Reading Level corresponds to the grade level of the app store description.

Quality and Functionality

The overall mean MARS quality score for all applications was 3.78/5, ranging from 2.7 (GERD Tools) to 4.8 (HNC Virtual Coach). The mean MARS scores for the Engagement, Functionality, Aesthetics, and Information categories were 3.50, 4.09, 3.82, and 3.72, respectively. Of note, few apps (14%, n=4) offered customization options, and only one offered complete tailoring to individual preferences. Fewer than half of the apps (43%, n=12) had an affiliation with an academic center, and only five (18%) had been evaluated in a clinical trial. The mean IMS Institute for Healthcare Informatics App Functionality score was 4.6 (range 1 to 9), and no app included all 11 recommended functions. Of the 28 apps, 24 (86%) provided information in a variety of formats, 21 (75%) could capture user-entered data, 18 (64%) displayed user-entered data, 11 (39%) provided reminders, 7 (25%) allowed communication with providers, 6 (21%) allowed transmission of health data, and 4 (14%) provided guidance, such as a diagnosis or recommendation based on user-entered data. None of the apps could intervene with alerts based on the data entered. Spearman rank correlation showed weak positive correlations between star rating and both the MARS score and the IMS functionality score, with coefficients of 0.31 and 0.33, respectively, suggesting that apps with more functionality may be rated higher in the app store.
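Spearman's coefficient is Pearson's correlation computed on ranks. The sketch below assumes tied values receive the average of the ranks they span and that apps without a star rating (N/A in Table 1) are dropped pairwise; the text does not state how missing ratings were handled. In practice `scipy.stats.spearmanr` performs the same calculation. The data in the usage lines are hypothetical.

```python
from statistics import mean
from typing import Optional, Sequence


def average_ranks(values: Sequence[float]) -> list:
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x: Sequence[Optional[float]], y: Sequence[Optional[float]]) -> float:
    """Spearman's rho as Pearson's r on average ranks, dropping pairs with a missing value."""
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    if len(pairs) < 3:
        raise ValueError("need at least three complete pairs")
    xs, ys = zip(*pairs)
    return pearson(average_ranks(xs), average_ranks(ys))


# Hypothetical data; None marks an app with no star rating.
star_rating = [4.5, None, 1.9, 4.3, 5.0, 3.4]
functionality = [4, 4, 7, 6, 9, 3]
print(round(spearman(star_rating, functionality), 2))  # prints 0.3
```

Ranking first makes the coefficient depend only on ordering, which suits skewed quantities such as review counts (mean 99, median 6).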

Health Literacy

The mean CDC Clear Communication Index score was 76%, with only six apps (21%) reaching the passing score of 90%. The lowest-scoring apps did not include a main message statement at the beginning of the material, did not explain what authoritative sources, such as subject matter experts, know and do not know about the topic, and did not provide a summary of the information. IoM scores ranged from 15 to 30, with apps using an average of 26 of the 33 recommended strategies. Only 39% (n=11) of the apps included simple search and browse options, and 28% (n=8) integrated with email, calendar, or other phone apps. No app included a link to social media, in-app text messaging, accessibility features for people with disabilities, or printable tools and resources. The mean PEMAT actionability score was 89% and the mean PEMAT understandability score was 86%. While most apps followed the PEMAT guidelines, only two used tables with clear headings to present material, and only three explained how to use charts, graphs, tables, or diagrams to take action.

Readability

App descriptions from the Apple store were assessed for readability (Table 2). The average US grade reading level of the app descriptions was 11th grade using the Flesch-Kincaid Grade Level and 13th grade using the Gunning Fog Index and Coleman-Liau Index, all well above both the average 8th grade reading level of American adults and the recommended 5th to 7th grade level. Similarly, the mean SMOG index was 12.6, indicating that 12.6 years of education are needed to understand the text. Only four app descriptions (14%) were written below an 8th grade reading level. The mean Flesch Reading Ease score was 44.7, which is classified as difficult to read. Additional analysis showed that all of the readability scales had weak to moderate negative correlations with the number of reviews (Spearman rank coefficients: Flesch-Kincaid: −0.39, Gunning Fog: −0.38, Coleman-Liau: −0.34, SMOG: −0.42, New Dale-Chall: −0.37), suggesting that apps whose descriptions were written at a lower reading level, in easier-to-understand text, tended to have more reviews.
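As a consistency check, the sketch below recomputes three of the summary figures above from the values in Table 2: the mean Flesch-Kincaid grade, the number of descriptions below 8th grade, and the mean Flesch Reading Ease with its descriptive band. The band cut-offs are those of Flesch's original interpretation table, supplied here as an assumption about how "difficult" was assigned; the helper name is hypothetical.

```python
from statistics import mean

# Values transcribed from Table 2, in table order.
fk_grade = [11.9, 6.9, 8.9, 9.8, 6.5, 9.6, 10.3, 13.5, 10.5, 12.3, 10.5, 13.1, 6.9, 14.8,
            11.1, 6.7, 12.2, 12.9, 11.7, 9.6, 9.8, 14.9, 10.4, 13.5, 12.3, 15.3, 9.7, 9.8]
reading_ease = [35.9, 63.4, 50.4, 50.6, 76, 51.9, 43.3, 32.8, 50.9, 47.6, 49.7, 32.5, 63.3, 36,
                43.9, 70.1, 43, 27.3, 30.5, 41.6, 49.3, 32.6, 51.6, 24.4, 25.9, 17.5, 61.2, 48.7]


def flesch_band(score: float) -> str:
    """Descriptive category for a Flesch Reading Ease score (Flesch's original table)."""
    for floor, label in [(90, "very easy"), (80, "easy"), (70, "fairly easy"), (60, "standard"),
                         (50, "fairly difficult"), (30, "difficult")]:
        if score >= floor:
            return label
    return "very difficult"


below_8th = sum(1 for g in fk_grade if g < 8)
print(f"mean FK grade {mean(fk_grade):.1f}; below 8th grade: {below_8th}/{len(fk_grade)}")
print(f"mean Reading Ease {mean(reading_ease):.1f} ({flesch_band(mean(reading_ease))})")
```

It prints a mean grade of 10.9 with 4 of 28 descriptions below 8th grade, and a mean Reading Ease of 44.7 in the "difficult" band, matching the figures reported in the text.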

Table 2:

App Store Description Readability Assessment

| Name of App | Flesch-Kincaid Grade Level | Flesch Reading Ease | Gunning Fog Index | Coleman-Liau Index | SMOG Index | New Dale-Chall |
|---|---|---|---|---|---|---|
| Acid Reflux Diet Helper | 11.9 | 35.9 | 12.3 | 14.7 | 12.6 | 8.1 |
| Astound - Voice &Speech Coach | 6.9 | 63.4 | 8.4 | 9.9 | 9.8 | 5.1 |
| Breather Coach | 8.9 | 50.4 | 8 | 13.5 | 10.6 | 7.9 |
| Christella VoiceUp | 9.8 | 50.6 | 13 | 12.8 | 13 | 6 |
| Cough Tracker & Reporting | 6.5 | 76 | 8.3 | 7.3 | 9.4 | 2.8 |
| Dysphagia Training | 9.6 | 51.9 | 12.7 | 10.5 | 12.3 | 5.8 |
| Emotion Food Company | 10.3 | 43.3 | 11.8 | 12.7 | 12.4 | 4.8 |
| Estill Exercises | 13.5 | 32.8 | 14.4 | 14.8 | 14.6 | 8.5 |
| EvaF | 10.5 | 50.9 | 12.4 | 11.7 | 12.8 | 6.4 |
| EvaM | 12.3 | 47.6 | 14.3 | 12.5 | 13.8 | 6.6 |
| GERD, Heartburn and Acid Reflux Symptoms & Remedies | 10.5 | 49.7 | 13 | 11.2 | 12.6 | 6.8 |
| GERDHelp | 13.1 | 32.5 | 15.7 | 15.4 | 15.1 | 8.1 |
| GERD Tools | 6.9 | 63.3 | 8.5 | 10.3 | 9.8 | 6.5 |
| Heartburn: Acid reflux Journal | 14.8 | 36 | 15.5 | 12.1 | 14.2 | 6.6 |
| HNC Virtual Coach | 11.1 | 43.9 | 13.5 | 13.1 | 13.7 | 7 |
| Hyfe Cough Tracker | 6.7 | 70.1 | 9.7 | 9.2 | 10 | 4 |
| Loud and Clear Voice Fitness | 12.2 | 43 | 14.2 | 12.9 | 13.3 | 6.8 |
| LSVT Loud | 12.9 | 27.3 | 14.2 | 19.2 | 13.3 | 9.4 |
| Mobili-T | 11.7 | 30.5 | 12.8 | 14.6 | 12.9 | 7.9 |
| Pryde Voice &Speech Therapy | 9.6 | 41.6 | 8.1 | 13.8 | 10.8 | 8.3 |
| Reflux Tracker | 9.8 | 49.3 | 12.1 | 11.1 | 12.1 | 6.2 |
| SpeechVive | 14.9 | 32.6 | 17.2 | 16.9 | 16.5 | 8.8 |
| Swallow Prompt | 10.4 | 51.6 | 10.9 | 11.7 | 13.1 | 6.9 |
| Swallow RehApp | 13.5 | 24.4 | 16.1 | 16.5 | 14.9 | 8.8 |
| Tongueometer | 12.3 | 25.9 | 14.1 | 15.8 | 13 | 8.3 |
| TRACHTOOLS | 15.3 | 17.5 | 16.1 | 17.7 | 14.8 | 9.3 |
| Vocular | 9.7 | 61.2 | 12 | 9 | 11.6 | 5.3 |
| Voice Coach | 9.8 | 48.7 | 10.8 | 13.4 | 12 | 7 |

The Flesch-Kincaid Grade Level, Gunning Fog Index, and Coleman-Liau Index correspond to US school grade levels. The Flesch Reading Ease score typically ranges from 0 to 100, with higher scores indicating easier text; scores between 60 and 70 correspond to roughly an 8th to 9th grade level. The SMOG Index estimates the years of education needed to comprehend the text. The New Dale-Chall readability score measures a text against a list of words considered familiar to fourth-graders; the more unfamiliar words used, the higher the reading level.

Accessibility and Inclusivity

Only three apps (11%) had a non-English language function (Estill Exercises, Emotion Food Company, and TRACHTOOLS). Three apps (11%) included Spanish, two apps (7%) included French and German, while one app (4%) also included German and Russian. Four apps (14%) provided a diverse representation of populations (Loud and Clear Voice Fitness, Vocular, Breather Coach, and GERDHelp). Finally, only two apps (7%), Dysphagia Training and TRACHTOOLS, included subtitles, and no other accessibility features were recorded.

Discussion

In this systematic review, we identified and evaluated consumer mHealth in laryngology, focusing on quality, functionality, literacy, readability, accessibility, and inclusivity. While mHealth has the potential to provide accessible and affordable healthcare, our findings show substantial limitations in the design of these apps, which may foster disparities in care.

While most apps scored well on measures of quality (MARS>3/5), the majority did not allow for advanced customization, and few had an academic affiliation or clinical trial evaluation. These findings are consistent with an earlier review of a sample of OHNS apps, which highlighted the discrepancy between the large number of apps and the small number of corresponding published research studies, as well as limited collaboration between app developers and healthcare professionals.38,40 Furthermore, this previous study emphasized that a higher MARS score did not always equate to higher app efficiency. To better understand this finding, we used the IMS Institute for Healthcare Informatics Functionality score to evaluate app functionality in more detail. The functionality ratings showed that even though three quarters of the apps collected data, only 25% (n=7) allowed for communication with a provider and just 14% (n=4) provided guidance based on the entered data. As a result, although laryngology apps have good functionality and data collection capabilities, patients may be left to interpret the data on their own or with limited guidance of questionable medical validity.

In the last decade, there have been growing efforts in improving communication guidelines and adapting systems approaches to addressing health literacy.49,62-64 These resources are based on the understanding that health literacy is not only a patient-level phenomenon.65-68 Instead, healthcare institutions are advised to implement a systems approach to addressing health literacy, placing a large emphasis on the design of information systems.69 However, most mHealth is not developed in collaboration with healthcare professionals, or communication experts, raising concerns surrounding apps health literacy. We used readability scales to assess app store descriptions and health literacy scales to evaluate the content of laryngology mHealth. All readability formulas found app descriptions to be at a higher reading level than that of the average American. App content scored poorly on measures of health literacy, with only six of the apps passing the CDC index score of 90%, indicating that laryngology apps content is not adequately formulated and presented for a broad population. The PEMAT scores indicate that the apps are more actionable than understandable, meaning that while users can identify action steps to take, they do not necessarily understand the education materials to the same extent. Interestingly, IoM scale ratings highlighted that over 60% of the apps did not include any search and browse options, such that if users did have questions about app content, answers would not be easily accessible via internet browsing within the app. 
This was also identified as a concern in the early use of patient portals.70 To mitigate this issue, the Institute for Family Health, the National Library of Medicine, and Epic Systems developed MedlinePlus, which integrated a look-up system within electronic patient portals so that patients could search medical terms without using an external search engine.71 Although studies have shown that this feature increases patients' health literacy,69 such a feature is mostly missing in laryngology mHealth. This may limit users' understanding of app content and their ability to make informed health decisions.
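For readers unfamiliar with how readability formulas turn text features into a U.S. school grade level, the sketch below implements two widely used formulas whose sources appear in the reference list, the Flesch-Kincaid Grade Level (reference 56) and the Gunning Fog index (reference 57), with their standard published coefficients. It is a minimal illustration, not the implementation of the online scoring tool used in this study: it takes word, sentence, syllable, and complex-word counts as inputs rather than raw text (automated syllable counting differs between tools), and the counts in the example are hypothetical.

```python
def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level computed from raw text counts."""
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")
    # Longer sentences and more syllables per word both raise the grade level
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def gunning_fog(words: int, sentences: int, complex_words: int) -> float:
    """Gunning Fog index; complex words are roughly those of 3+ syllables."""
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")
    return 0.4 * ((words / sentences) + 100 * (complex_words / words))


# Hypothetical counts for a 100-word app store description (not study data)
fk = flesch_kincaid_grade(words=100, sentences=5, syllables=150)
fog = gunning_fog(words=100, sentences=5, complex_words=10)
print(f"FKGL = {fk:.2f}, Fog = {fog:.2f}, above 8th grade: {fk > 8}")
```

With these hypothetical counts, both scores exceed the 8th grade reading level used as the benchmark in this study. Because both formulas depend only on sentence length and word length, plain-language guidance that favors short sentences and common words lowers the computed grade level.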

The IoM scale highlighted the lack of accessibility features, which our accessibility evaluation further confirmed. Of the 28 apps, only three (11%) offered an additional language. This falls short of the needs of the nearly 9% of the U.S. population who cannot communicate effectively in English and are considered to have limited English proficiency (LEP),72 and does not meet the regulatory requirements of Title VI of the Civil Rights Act of 1964,73 which requires health systems and clinicians to provide equal treatment to people with LEP. Equal access to information is also pertinent for people with disabilities. Only two (7%) of the apps in our review included subtitles, which may be used by people with hearing impairment. OHNS patients are more likely to have hearing or voice impairment, which may limit their ability to use interactive features of health apps unless subtitles are available or voice-recognition technology is adapted to those with voice and speech disabilities.74 Technology companies now recognize the need for such adaptations, as seen with Google's Project Euphonia, which focuses on making voice-recognition technology inclusive of people with voice and speech impairments. Finally, representation of diverse populations was markedly limited across laryngology apps, with only four apps (14%) including images of racial, ethnic, and gender minorities. This is concerning given the growing recognition that representing diversity in images may promote use of and adherence to digital health resources. For example, prior studies have found that Black patients are more likely to follow culturally appropriate health information.75-77

Our study identified major gaps in the design of laryngology apps. While we used standardized tools, one limitation of this project is the subjectivity of some of the rating scales. To mitigate bias, the raters were trained on the assessment tools and reached consensus on any items on which they disagreed. Furthermore, because no validated health literacy scale specific to mHealth exists, the CDC Clear Communication Index, the Institute of Medicine Strategies for Creating Health Literate Mobile Applications, and the PEMAT tools were used as proxy scales. In contrast with the low CDC index scores, the mean PEMAT scores for understandability and actionability were high at 86% and 89%, respectively, though PEMAT has no defined passing score. One possible explanation for the discrepancy between CDC and PEMAT scores is the large number of "not applicable" answer options in the PEMAT scales; because such items are excluded from scoring, they can raise the rating. While the health literacy of app content was evaluated using the CDC and PEMAT scales, readability formulas were applied only to the app store descriptions, not to the app content. Another limitation is the lack of guidelines in otolaryngology and/or speech-language pathology for the development of digital health tools, which makes it difficult to assess whether apps achieved acceptable standards of care. A related challenge was evaluating the accuracy and evidence base of the information presented in the apps. In this study we used the MARS, the standard tool for evaluating mobile app quality, which includes a section on information quality but does not assess the accuracy and evidence base of all presented information. Additionally, while our search strategy was based on previously published search terms and further refined in collaboration with speech-language pathologists and a laryngologist, patients may use different search terms to find apps.
Finally, the raters in this study are not patients or app users, and there may be some bias in how they describe the patient or user experience with these apps. To adequately evaluate the health literacy, accessibility and inclusivity of health apps, it will be critical to engage target users. Partnering with stakeholder groups of clinicians and patients, who can share their expertise and lived experience, could provide important insights in future studies.
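The scoring mechanism behind the discrepancy between CDC and PEMAT scores discussed above can be illustrated with a short worked example. The sketch below follows the PEMAT percent-score rule described in AHRQ's PEMAT user guide: each item is rated Agree (1) or Disagree (0), and Not Applicable items are dropped from both the numerator and the denominator. The item ratings are hypothetical, not data from this study, and the comparison function that counts N/A items as unmet is an illustrative assumption, not part of PEMAT.

```python
from typing import Optional, Sequence

# Rating convention assumed here: 1 = Agree, 0 = Disagree, None = Not Applicable
Rating = Optional[int]


def pemat_percent(ratings: Sequence[Rating]) -> float:
    """PEMAT-style percent score: N/A items are excluded before dividing."""
    applicable = [r for r in ratings if r is not None]
    if not applicable:
        raise ValueError("no applicable items to score")
    return 100.0 * sum(applicable) / len(applicable)


def percent_if_na_counted_as_unmet(ratings: Sequence[Rating]) -> float:
    """Hypothetical comparison only: every N/A item is scored as Disagree (0)."""
    if not ratings:
        raise ValueError("no items to score")
    return 100.0 * sum(r or 0 for r in ratings) / len(ratings)


# Hypothetical app rated on 12 items: 6 Agree, 2 Disagree, 4 Not Applicable
ratings = [1] * 6 + [0] * 2 + [None] * 4
print(f"PEMAT rule: {pemat_percent(ratings):.0f}%")
print(f"N/A counted as unmet: {percent_if_na_counted_as_unmet(ratings):.0f}%")
```

With these hypothetical ratings, the PEMAT rule yields 75% (6 of 8 applicable items), whereas counting the four N/A items as unmet would yield 50% (6 of 12). A scale that offers many N/A options can therefore report a higher percentage for the same content, which is one plausible reading of the gap between PEMAT and CDC index scores.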

Health apps in laryngology will only continue to multiply, with many patients turning to them for management of their voice, swallowing and airway concerns. The current lack of guidelines for mHealth design needs to be addressed in OHNS and beyond. Prior OHNS authors have raised similar concerns regarding the lack of guidelines and have recommended the creation of an editorial "App Board" to validate app quality.38,40 We suggest that essential components of patient-centered care, such as health literacy, readability, accessibility and inclusivity, also be included in the development of such guidelines or validation processes. The paucity of health-literate and accessible mHealth not only burdens patients but also puts them at risk. While mHealth developers should ideally create digital platforms that can be accessed and used by all, in the absence of enforceable standards it is critical that healthcare providers advocate for safe and equitable paths to the expansion of digital technologies in their disciplines. This requires increased engagement with developers, interdisciplinary research to systematically evaluate newly developed apps, and concerted efforts toward the development of equity-driven mHealth guidelines.

Conclusion

While the quality and functionality of most laryngology apps were found to be acceptable, the vast majority of apps did not meet recommended standards of health literacy or provide accessibility and inclusivity features. This likely reflects the lack of regulation and guidelines for the development of mHealth, and can be ultimately harmful to vulnerable patient populations. Healthcare providers ought to work with mHealth developers to ensure equitable access to the digital environment, so that all patients are able to engage with these resources.

Supplementary Material

Appendix – App search terms

Funding:

Anaïs Rameau’s participation was supported by a Paul B. Beeson Emerging Leaders Career Development Award in Aging (K76 AG079040) from the National Institute on Aging.

Footnotes

Conflicts of Interest: Anaïs Rameau is a medical advisor for Perceptron Health, Inc. and Virufy.org.

This project was presented as a poster at the American Laryngological Association meeting in April 2022 in Dallas, TX.

References

1. Llorens-Vernet P, Miró J. Standards for Mobile Health–Related Apps: Systematic Review and Development of a Guide. JMIR Mhealth Uhealth. 2020;8(3):e13057. doi: 10.2196/13057
2. IQVIA Institute for Human Data Science. Digital Health Trends 2021: Innovation, Evidence, Regulation and Adoption. https://www.iqvia.com/-/media/iqvia/pdfs/institute-reports/digital-health-trends-2021/iqvia-institute-digital-health-trends-2021.pdf?_=1628089218603. Accessed July 7, 2022.
3. Kao CK, Liebovitz DM. Consumer Mobile Health Apps: Current State, Barriers, and Future Directions. PM&R. 2017;9:S106–S115. doi: 10.1016/j.pmrj.2017.02.018
4. Veinot TC, Mitchell H, Ancker JS. Good intentions are not enough: How informatics interventions can worsen inequality. Journal of the American Medical Informatics Association. 2018;25(8):1080–1088. doi: 10.1093/jamia/ocy052
5. Baur C, Harris L, Squire E. The U.S. National Action Plan to Improve Health Literacy: A Model for Positive Organizational Change. Stud Health Technol Inform. 2017;240:186–202.
6. Berkman ND, Davis TC, McCormack L. Health Literacy: What Is It? Journal of Health Communication. 2010;15(sup2):9–19. doi: 10.1080/10810730.2010.499985
7. Levy H, Janke A. Health Literacy and Access to Care. Journal of Health Communication. 2016;21(sup1):43–50. doi: 10.1080/10810730.2015.1131776
8. Weekes CV. African Americans and health literacy: a systematic review. ABNF J. 2012;23(4):76–80.
9. Schillinger D. Social Determinants, Health Literacy, and Disparities: Intersections and Controversies. HLRP: Health Literacy Research and Practice. 2021;5(3). doi: 10.3928/24748307-20210712-01
10. Ancker JS, Grossman LV, Benda NC. Health Literacy 2030: Is It Time to Redefine the Term? Journal of General Internal Medicine. 2020;35(8):2427–2430. doi: 10.1007/s11606-019-05472-y
11. Albright J, de Guzman C, Acebo P, Paiva D, Faulkner M, Swanson J. Readability of patient education materials: implications for clinical practice. Applied Nursing Research. 1996;9(3):139–143. doi: 10.1016/S0897-1897(96)80254-0
12. Safeer RS, Keenan J. Health literacy: the gap between physicians and patients. Am Fam Physician. 2005;72(3):463–468.
13. Hutchinson N, Baird GL, Garg M. Examining the Reading Level of Internet Medical Information for Common Internal Medicine Diagnoses. The American Journal of Medicine. 2016;129(6):637–639. doi: 10.1016/j.amjmed.2016.01.008
14. Weiss BD. Health Literacy and Patient Safety: Help Patients Understand. Manual for Clinicians. 2nd ed. Chicago, IL: American Medical Association Foundation; 2007.
15. Dunn Lopez K, Chae S, Michele G, et al. Improved readability and functions needed for mHealth apps targeting patients with heart failure: An app store review. Research in Nursing & Health. 2021;44(1):71–80. doi: 10.1002/nur.22078
16. McDarby M, Llaneza D, George L, Kozlov E. Mobile Applications for Advance Care Planning: A Comprehensive Review of Features, Quality, Content, and Readability. American Journal of Hospice and Palliative Medicine®. 2021;38(8):983–994. doi: 10.1177/1049909120959057
17. Robillard JM, Feng TL, Sporn AB, et al. Availability, readability, and content of privacy policies and terms of agreements of mental health apps. Internet Interventions. 2019;17:100243. doi: 10.1016/j.invent.2019.100243
18. Bobian M, Kandinov A, El-Kashlan N, et al. Mobile applications and patient education: Are currently available GERD mobile apps sufficient? Laryngoscope. 2017;127(8):1775–1779. doi: 10.1002/lary.26341
19. Lazar J, Goldstein D, Taylor A. Ensuring Digital Accessibility through Process and Policy. 1st ed. 2015.
20. W3C Web Accessibility Initiative (WAI). Mobile Accessibility at W3C. https://www.w3.org/WAI/standards-guidelines/mobile/. Published April 14, 2021. Accessed July 30, 2022.
21. Abascal J, Barbosa SDJ, Nicolle C, Zaphiris P. Rethinking universal accessibility: a broader approach considering the digital gap. Universal Access in the Information Society. 2016;15(2):179–182. doi: 10.1007/s10209-015-0416-1
22. Tan TQ. Principles of Inclusion, Diversity, Access, and Equity. The Journal of Infectious Diseases. 2019;220(Supplement_2):S30–S32. doi: 10.1093/infdis/jiz198
23. Benda NC, Veinot TC, Sieck CJ, Ancker JS. Broadband Internet Access Is a Social Determinant of Health! American Journal of Public Health. 2020;110(8):1123–1125. doi: 10.2105/AJPH.2020.305784
24. Greenberg-Worisek AJ, Kurani S, Finney Rutten LJ, Blake KD, Moser RP, Hesse BW. Tracking Healthy People 2020 Internet, Broadband, and Mobile Device Access Goals: An Update Using Data From the Health Information National Trends Survey. Journal of Medical Internet Research. 2019;21(6):e13300. doi: 10.2196/13300
25. Drake C, Zhang Y, Chaiyachati KH, Polsky D. The Limitations of Poor Broadband Internet Access for Telemedicine Use in Rural America: An Observational Study. Annals of Internal Medicine. 2019;171(5):382. doi: 10.7326/M19-0283
26. Singh GK, Girmay M, Allender M, Christine RT. Digital Divide: Marked Disparities in Computer and Broadband Internet Use and Associated Health Inequalities in the United States. International Journal of Translational Medical Research and Public Health. 2020;4(1):64–79. doi: 10.21106/ijtmrph.148
27. Ekezue BF, Bushelle-Edghill J, Dong S, Taylor YJ. The effect of broadband access on electronic patient engagement activities: Assessment of urban-rural differences. The Journal of Rural Health. 2022;38(3):472–481. doi: 10.1111/jrh.12598
28. Statistics Research Department. Share of U.S. health apps that can be downloaded at no cost 2015. https://www.statista.com/statistics/624104/share-of-health-apps-free/. Published September 21, 2015. Accessed July 30, 2022.
29. Hillyer GC, Beauchemin M, Garcia P, et al. Readability of Cancer Clinical Trials Websites. Cancer Control. 2020;27(1):107327481990112. doi: 10.1177/1073274819901125
30. Shneyderman M, Snow GE, Davis R, Best S, Akst LM. Readability of Online Materials Related to Vocal Cord Leukoplakia. OTO Open. 2021;5(3):2473974X2110326. doi: 10.1177/2473974X211032644
31. Kauchak D, Leroy G. Moving Beyond Readability Metrics for Health-Related Text Simplification. IT Professional. 2016;18(3):45–51. doi: 10.1109/MITP.2016.50
32. Yu B, He Z, Xing A, Lustria MLA. An Informatics Framework to Assess Consumer Health Language Complexity Differences: Proof-of-Concept Study. Journal of Medical Internet Research. 2020;22(5):e16795. doi: 10.2196/16795
33. Digital Health Literacy. Proceedings of the First Meeting of the WHO GCM/NCD Working Group on Health Literacy for NCDs. https://www.who.int/global-coordination-mechanism/working-groups/digital_hl.pdf. Published 2017. Accessed July 30, 2022.
34. Borg K, Boulet M, Smith L, Bragge P. Digital Inclusion & Health Communication: A Rapid Review of Literature. Health Communication. 2019;34(11):1320–1328. doi: 10.1080/10410236.2018.1485077
35. Dunn P, Hazzard E. Technology approaches to digital health literacy. International Journal of Cardiology. 2019;293:294–296. doi: 10.1016/j.ijcard.2019.06.039
36. Conard S. Best practices in digital health literacy. International Journal of Cardiology. 2019;292:277–279. doi: 10.1016/j.ijcard.2019.05.070
37. Md Shamsujjoha, Grundy J, Li L, Khalajzadeh H, Lu Q. Human-Centric Issues in eHealth App Development and Usage: A Preliminary Assessment. In: 2021 IEEE International Conference on Software Analysis, Evolution and Reengineering (SANER). IEEE; 2021:506–510. doi: 10.1109/SANER50967.2021.00055
38. Trecca EMC, Lonigro A, Gelardi M, Kim B, Cassano M. Mobile Applications in Otolaryngology: A Systematic Review of the Literature, Apple App Store and the Google Play Store. Annals of Otology, Rhinology and Laryngology. 2021;130(1):78–91. doi: 10.1177/0003489420940350
39. Andersen SAW, Hsueh WD. Evidence of Mobile Applications in Otolaryngology Targeted at Patients. Annals of Otology, Rhinology & Laryngology. 2021;130(1):118. doi: 10.1177/0003489420951113
40. Casale M, Costantino A, Rinaldi V, et al. Mobile applications in otolaryngology for patients: An update. Laryngoscope Investigative Otolaryngology. 2018;3(6):434–438. doi: 10.1002/lio2.201
41. Vandenbroucke JP, von Elm E, Altman DG, et al. Strengthening the Reporting of Observational Studies in Epidemiology (STROBE): Explanation and elaboration. International Journal of Surgery. 2014;12(12):1500–1524. doi: 10.1016/j.ijsu.2014.07.014
42. Stoyanov SR, Hides L, Kavanagh DJ, Zelenko O, Tjondronegoro D, Mani M. Mobile App Rating Scale: A New Tool for Assessing the Quality of Health Mobile Apps. JMIR Mhealth Uhealth. 2015;3(1):e27. doi: 10.2196/mhealth.3422
43. Doruk C, Enver N, Çaytemel B, Azezli E, Başaran B. Readibility, Understandability, and Quality of Online Education Materials for Vocal Fold Nodules. Journal of Voice. 2020;34(2):302.e15–302.e20. doi: 10.1016/j.jvoice.2018.08.015
44. Wong K, Levi JR. Readability of pediatric otolaryngology information by children’s hospitals and academic institutions. Laryngoscope. 2017;127(4):E138–E144. doi: 10.1002/lary.26359
45. Wozney L, Chorney J, Huguet A, Song JS, Boss EF, Hong P. Online Tonsillectomy Resources: Are Parents Getting Consistent and Readable Recommendations? Otolaryngology–Head and Neck Surgery. 2017;156(5):844–852. doi: 10.1177/0194599817692529
46. Bailey CE, Kohler WJ, Makary C, Davis K, Sweet N, Carr M. eHealth Literacy in Otolaryngology Patients. Annals of Otology, Rhinology & Laryngology. 2019;128(11):1013–1018. doi: 10.1177/0003489419856377
47. Page MJ, McKenzie JE, Bossuyt PM, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71. doi: 10.1136/bmj.n71
48. Aitken M, Gauntlett C. Patient apps for improved healthcare: from novelty to mainstream. Parsippany, NJ: IMS Institute for Healthcare Informatics; 2013.
49. CDC. The CDC Clear Communication Index.
50. Broderick J, Devine T, Langhans E, Lemerise AJ, Lier S, Harris LM. Designing Health Literate Mobile Apps. 2014.
51. PEMAT Tool for Audiovisual Materials. https://www.ahrq.gov/health-literacy/patient-education/pemat-av.html. Accessed July 7, 2022.
52. Psihogios AM, Stiles-Shields C, Neary M. The Needle in the Haystack: Identifying Credible Mobile Health Apps for Pediatric Populations during a Pandemic and beyond. Journal of Pediatric Psychology. 2020;45(10):1106–1113. doi: 10.1093/jpepsy/jsaa094
53. Caburnay CA, Graff K, Harris JK, et al. Evaluating Diabetes Mobile Applications for Health Literate Designs and Functionality, 2014. Preventing Chronic Disease. 2015;12:140433. doi: 10.5888/pcd12.140433
54. Ginossar T, Shah SFA, West AJ, et al. Content, Usability, and Utilization of Plain Language in Breast Cancer Mobile Phone Apps: A Systematic Analysis. JMIR Mhealth Uhealth. 2017;5(3):e20. doi: 10.2196/mhealth.7073
55. Readability score. https://readable.com/. Published January 18, 2018. Accessed July 7, 2022.
56. Kincaid JP, Fishburne RP, Rogers RL, Chissom BS. Derivation of New Readability Formulas (Automated Readability Index, Fog Count and Flesch Reading Ease Formula) for Navy Enlisted Personnel. 1975.
57. Gunning R. The Technique of Clear Writing. McGraw-Hill; 1952.
58. Coleman M, Liau TL. A computer readability formula designed for machine scoring. Journal of Applied Psychology. 1975;60(2):283–284. doi: 10.1037/h0076540
59. McLaughlin GH. SMOG Grading - A New Readability Formula. The Journal of Reading. 1969.
60. Chall JS, Dale E. Readability Revisited: The New Dale-Chall Readability Formula. Cambridge, Mass.: Brookline Books; 1995.
61. Ley P, Florio T. The use of readability formulas in health care. Psychology, Health & Medicine. 1996;1(1):7–28. doi: 10.1080/13548509608400003
62. Federal Plain Language Guidelines. https://www.plainlanguage.gov/media/FederalPLGuidelines.pdf. Published March 2011. Accessed July 7, 2022.
63. The Joint Commission. Advancing Effective Communication, Cultural Competence, and Patient- and Family-Centered Care. https://www.jointcommission.org/-/media/tjc/documents/resources/patient-safety-topics/health-equity/aroadmapforhospitalsfinalversion727pdf.pdf?db=web&hash=AC3AC4BED1D973713C2CA6B2E5ACD01B&hash=AC3AC4BED1D973713C2CA6B2E5ACD01B. Published 2010. Accessed July 7, 2022.
64. Agency for Healthcare Research and Quality. Strategy 2: Communicating to Improve Quality. https://www.ahrq.gov/patient-safety/patients-families/engagingfamilies/strategy2/index.html. Published June 2013. Accessed July 7, 2022.
65. Baker DW. The meaning and the measure of health literacy. Journal of General Internal Medicine. 2006;21(8):878–883. doi: 10.1111/j.1525-1497.2006.00540.x
66. Ancker JS, Kaufman D. Rethinking Health Numeracy: A Multidisciplinary Literature Review. Journal of the American Medical Informatics Association. 2007;14(6):713–721. doi: 10.1197/jamia.M2464
67. DeWalt DA, Broucksou KA, Hawk V, et al. Developing and testing the health literacy universal precautions toolkit. Nursing Outlook. 2011;59(2):85–94. doi: 10.1016/j.outlook.2010.12.002
68. Rudd RE. Improving Americans’ Health Literacy. New England Journal of Medicine. 2010;363(24):2283–2285. doi: 10.1056/NEJMp1008755
69. Ancker JS. Addressing Health Literacy and Numeracy Through Systems Approaches. In: Patel VL, Arocha JF, Ancker JS, eds. Cognitive Informatics in Health and Biomedicine: Understanding and Modeling Health Behaviors. Cham: Springer International Publishing; 2017:237–251. doi: 10.1007/978-3-319-51732-2_11
70. Ahmed T. MedlinePlus at 21: A Website Devoted to Consumer Health Information. Stud Health Technol Inform. 2020;269:303–312. doi: 10.3233/SHTI200045
71. Miller N, Lacroix EM, Backus JE. MEDLINEplus: building and maintaining the National Library of Medicine’s consumer health Web service. Bull Med Libr Assoc. 2000;88(1):11–17.
72. Agency for Healthcare Research and Quality. Improving Patient Safety Systems for Patients With Limited English Proficiency. https://www.ahrq.gov/health-literacy/professional-training/lepguide/chapter1.html. Published 2012. Accessed July 7, 2022.
73. HHS. Limited English Proficiency (LEP). https://www.hhs.gov/civil-rights/for-individuals/special-topics/limited-english-proficiency/index.html. Published November 2, 2020. Accessed July 7, 2022.
74. MacDonald B, Jiang PP, Cattiau J, et al. Disordered speech data collection: Lessons learned at 1 million utterances from Project Euphonia. 2021.
75. Prather C, Fuller TR, Jeffries WL, et al. Racism, African American Women, and Their Sexual and Reproductive Health: A Review of Historical and Contemporary Evidence and Implications for Health Equity. Health Equity. 2018;2(1):249–259. doi: 10.1089/heq.2017.0045
76. Tucker L, Villagomez AC, Krishnamurti T. Comprehensively addressing postpartum maternal health: a content and image review of commercially available mobile health apps. BMC Pregnancy and Childbirth. 2021;21(1):311. doi: 10.1186/s12884-021-03785-7
77. Blacklow SO, Lisker S, Ng MY, Sarkar U, Lyles C. Usability, inclusivity, and content evaluation of COVID-19 contact tracing apps in the United States. Journal of the American Medical Informatics Association. 2021;28(9):1982–1989. doi: 10.1093/jamia/ocab093
