Abstract

Objective. The aim of this mixed-methods study was to examine the effect of disabled backward navigation on computerized calculation examinations in multiple courses.

Methods. Student performance on comprehensive pharmacy calculation examinations before and after implementation of disabled backward navigation was compared. Deidentified data from ExamSoft were used to determine median examination scores, passing rates, and time to completion for all three attempts given on comprehensive calculation examinations held in a pharmacy calculations course (PharDSci 504) and in three applied patient care laboratory courses (Pharm 531, 541, and 551). An anonymous, voluntary student survey gathered student perceptions of disabled backward navigation. Qualitative data were evaluated for thematic findings.

Results. The impact of disabled backward navigation on test scores and passing rates varied by course and test attempt. Students in Pharm 541 and 551 performed significantly worse on the initial test attempt after backward navigation was disabled compared to the previous year, with no significant differences in student performance seen on the retakes. Performance in PharDSci 504 and Pharm 531 followed the opposite pattern, with no significant difference in performance for the initial tests but significantly increased performance on the retakes. The amount of time spent on examinations either significantly decreased or remained the same. Student perceptions were generally consistent across all cohorts, with at least 74% agreeing that disabling backward navigation increased examination difficulty.

Conclusion. Disabling backward navigation had a mixed effect on student examination performance. This may highlight how student behaviors change as backward navigation is disabled.

Keywords: computerized examinations, backward navigation, mixed-methods study, pharmaceutical calculations, academic integrity

INTRODUCTION

Computer-based examinations are now commonplace throughout Doctor of Pharmacy (PharmD) programs. Although computer-based testing has been rapidly adopted in the last decade, many PharmD programs still face questions on best practices for using available features in ways that maintain test security while minimizing test anxiety for students. Of the many computer-based testing features available, one of the most contested is disabled backward navigation. When an instructor disables backward navigation, students are given only one opportunity to answer a question before moving on to the next assessment item. Alternatively, instructors can choose to allow backward navigation, which allows students to skip a question and then return to that question later in the examination, as on paper-based examinations.

When Washington State University College of Pharmacy and Pharmaceutical Sciences adopted computer-based testing using ExamSoft (ExamSoft Worldwide LLC) in 2013, the college elected to allow backward navigation to previously viewed question items. In the years following adoption of computer-based testing, examination integrity concerns were raised by faculty and students. There were growing concerns over academic dishonesty on examinations related to syncing test questions between students who sat near each other during proctored examinations. These academic integrity concerns were occurring despite the nearly universal adoption of randomizing test questions and answer options. In addition, faculty wanted to promote better study habits and test-taking skills that would prepare the students for their North American Pharmacist Licensure Examination (NAPLEX) and Multistate Pharmacy Jurisprudence Examination (MPJE), which prohibit backward navigation. After thorough discussion by a committee consisting of faculty and students, the college’s faculty voted to change the testing policy to disable backward navigation on all examinations beginning in the 2018-2019 academic year. To prevent accidental movement on to the next question, all examinations also required students to select/input an answer to each question before moving on. This change was implemented collegewide in every course. While the primary goal was to reduce academic dishonesty during testing, there was some concern from faculty and students that this feature would make examinations more difficult and increase testing anxiety.

Prior to starting this study, limited information was available in the literature about how the ability to freely navigate on a computerized test can impact test performance within higher education. Since the initiation of these research efforts, two studies have been published that have investigated the effect of disabling backward navigation on pharmacy student examination performance. These studies looked at different courses, but identical sets of questions were asked between the years with and without backward navigation. Neither study found a significant impact on examination results.1,2 A third, recently published study collected student perceptions related to the implementation of computer-based testing at their institution. The authors reported that 62% of surveyed pharmacy students believed that the ability to navigate to previous questions either significantly improved or slightly improved their performance on examinations.3 Outside of the pharmacy education literature, Elsalem and colleagues investigated the stress levels of undergraduate medical students taking remote electronic examinations during the COVID-19 pandemic versus previous in-person examinations. Students who reported a greater level of stress with remote electronic examinations reported that the mode of question navigation was a main factor of their stress, along with examination duration and technical problems.4

Herein, we describe a study to determine the impact that collegewide implementation of disabled backward navigation had on students in their first (P1), second (P2), and third (P3) years of pharmacy school. To assess the impact on student examination performance, we retrospectively compared comprehensive pharmacy calculation examination scores between years where backward navigation was enabled versus disabled. Our study focused on comprehensive pharmacy calculation examinations, as these are consistently given each semester to ensure students understand calculation concepts. Because our PharmD program is competency based and allows multiple attempts to show competency, we also compared how the testing change impacted student performance on each of the three testing attempts allowed. We further compared the length of time students spent completing the examinations before and after the testing policy change. In addition to investigating examination performance measures, we collected student perceptions of the collegewide switch to disabled backward navigation, and we present both quantitative and qualitative survey findings.

METHODS

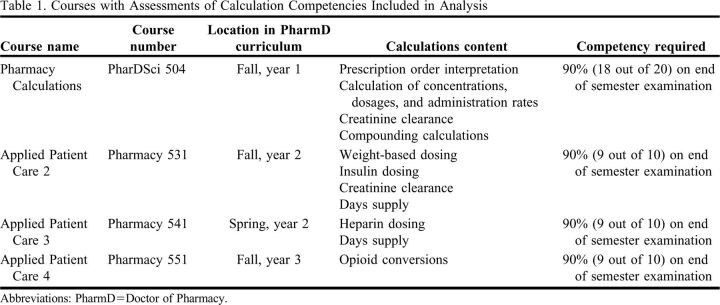

A mixed-methods approach was used to examine the impact of disabled backward navigation on student performance. Calculation examination scores from four courses throughout the curriculum were included in this analysis. An additional applied patient care course (Pharmacy 561) also includes a comprehensive calculations assessment, but it was not included in the analysis due to major changes in the course and instructor between the years of comparison. The courses included in this research are displayed in Table 1. Additionally, we gathered student perceptions of the change to disabled backward navigation. The Washington State University Office of Research Assurances has found that the project is exempt from the need for review by an institutional review board.

Table 1. Courses with Assessments of Calculation Competencies Included in Analysis

Before describing the specific courses included in the analysis, we first give an overview of the PharmD program. Washington State University College of Pharmacy and Pharmaceutical Sciences offers a unique active-learning curricular approach where introductory materials are provided to students as preclass content (short videos, readings, worksheets, etc), and the in-class time is dedicated to collaborative exercises facilitated by faculty. In addition to an active-learning curricular delivery model, the college uses a three-tiered grading scheme, in which the grades given are “honors,” “satisfactory,” and “failure” (H-S-F system). Important aspects of the model include frequent testing, testing over smaller amounts of material, and multiple attempts to meet competency.5 The competency-based assessment model requires that students achieve at least 80% on each assessment, but some assessments, including calculation comprehensive examinations, have a more stringent competency bar of 90%. This higher competency bar for calculation-focused comprehensive examinations emphasizes that accurate completion of calculations is critical for a pharmacist. Students who fail to demonstrate competency on the first attempt of an assessment have two additional opportunities to remediate. The three attempts are the initial test, a retake, and an extended learning experience. If students fail to show competency after three attempts on an assessment, they fail the course. The questions on each assessment are unique but maintain similar difficulty.
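The three-attempt competency rule described above can be expressed as a short sketch. The function name, the tuple of attempt labels, and the 0-100 score scale are illustrative assumptions and do not reflect any system used by the college; only the competency bars (80% and 90%) and the three attempt types come from the text.

```python
from typing import Optional, Sequence

# Attempt order as described in the text; the tuple itself is illustrative.
ATTEMPTS = ("initial test", "retake", "extended learning experience")


def first_passing_attempt(
    scores: Sequence[float], threshold: float = 80.0
) -> Optional[str]:
    """Return the first attempt that meets the competency bar, or None.

    `scores` holds percentage scores in attempt order (at most three).
    None means competency has not been shown; once all three attempts
    are used, the text states that the student fails the course.
    """
    for attempt, score in zip(ATTEMPTS, scores):
        if score >= threshold:
            return attempt
    return None


# Hypothetical student on a calculation comprehensive examination (90% bar).
print(first_passing_attempt([86.0, 93.0], threshold=90.0))  # retake
```

A score of 86% would meet the general 80% bar but not the 90% bar used for calculation comprehensive examinations, which is why the hypothetical student above needs the retake.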

The first course included in this analysis was Pharmacy Calculations (PharDSci 504), which provides first-year student pharmacists with the opportunity to learn essential pharmacy calculations within their first semester. Students are given three attempts to demonstrate competency on three quizzes (80% competency required) and a final comprehensive examination (90% competency required). After completing PharDSci 504, students are required to maintain and demonstrate skills on calculations topics throughout the curriculum in a laboratory course series on applied patient care (Pharm 531, 541, and 551). Each of these laboratory courses offers multiple opportunities to practice calculation skills during the semester, culminating in a comprehensive calculation examination at the end of the semester. Student pharmacists must demonstrate competency on this examination by achieving a minimum score of 90%. Students have three opportunities to reach this competency threshold.

Our analysis included all students enrolled in one of these noted courses during the 2017-2018 academic year when backward navigation was enabled and the 2018-2019 academic year when backward navigation was disabled. Assessment questions were not identical between academic years. Instead, questions were designed to be similar in difficulty, as assessed by Bloom’s taxonomy coding, and to assess the same learning objectives. Student performance on each examination attempt was downloaded from ExamSoft and inputted into a Microsoft Excel file, after which all student identifiers, including name and student ID number, were removed. Similarly, reports of the total time taken on each examination attempt were downloaded from ExamSoft and deidentified.

First-, second-, and third-year student pharmacists in the program were offered the opportunity to participate in an online survey using Qualtrics (Qualtrics International Inc). This survey was administered at the beginning of the spring 2019 semester, following the first semester in which backward navigation was disabled on examinations. The goal of the survey was to determine students’ perceptions of how disabling backward navigation has affected them on examinations. The surveys for second- and third-year students included 15 questions, of which two were about campus and professional year, nine were Likert-scale questions, and four were free-response questions. As the first-year pharmacy students had no prior experience with enabled backward navigation, the survey for this cohort only included eight questions (two questions about campus and professional year and six Likert-scale questions).

All data and statistical analyses were completed using either Microsoft Excel or Prism 6 (GraphPad Software LLC). The D’Agostino-Pearson normality test was used to determine the normality of data. Results of the normality tests indicated almost all of the data were not normally distributed; as a result, nonparametric statistical tests were used throughout. Mann-Whitney tests were used when comparing test scores between academic years and when assessing differences in length of time spent on examinations. The Fisher exact test was used to compare statistical differences in passing rates. Differences in perceptions by professional year from the student surveys were compared using the Kruskal-Wallis test followed by the Dunn post hoc multiple comparisons test. The Mann-Whitney test was used to determine whether there were significant differences between cohorts for questions asked only to the P2 and P3 students.
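To illustrate what two of these nonparametric tests compute, the following is a minimal plain-Python sketch. It is not the analysis pipeline used in the study, which was run in Microsoft Excel and Prism 6; the function names and the example counts are hypothetical. The first function computes a two-sided Fisher exact p-value for a 2x2 table of pass/fail counts by year, and the second computes the Mann-Whitney U statistic only (a p-value would normally come from a statistics package).

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]].

    For example, rows could be academic years and columns pass/fail counts.
    """
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(k: int) -> float:
        # Hypergeometric probability of k first-column counts in row 1.
        return comb(row1, k) * comb(row2, col1 - k) / denom

    observed = prob(a)
    lowest, highest = max(0, col1 - row2), min(row1, col1)
    # Sum all tables that are at least as unlikely as the observed one
    # (small tolerance guards against floating-point ties).
    return sum(
        p for p in map(prob, range(lowest, highest + 1))
        if p <= observed * (1 + 1e-9)
    )


def mann_whitney_u(x, y) -> float:
    """U statistic for sample x: pairs where x exceeds y, ties count half."""
    u = 0.0
    for xi in x:
        for yi in y:
            if xi > yi:
                u += 1.0
            elif xi == yi:
                u += 0.5
    return u


# Hypothetical counts, chosen only to show the calculation.
print(round(fisher_exact_two_sided(3, 1, 1, 3), 4))  # 0.4857
print(mann_whitney_u([95, 90, 85], [90, 80, 75]))    # 7.5
```

The pairwise form of U is quadratic in sample size, which is acceptable for class-sized cohorts; rank-based formulas give the same value more efficiently.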

Data from the free-response survey questions were exported into Microsoft Excel and coded to identify themes.6 First-level coding (identifying text that is meaningful and recurrent) was performed by two researchers. The researchers coded the first question together using inductive coding methods and coded the remaining questions independently. The results of the first-level coding were compared and discussed to develop a codebook, which was stored in Excel and continuously modified as needed to improve the code names and organization. Second-level coding (grouping codes into meaning units and organizing the units into larger thematic areas) was performed by the two researchers together to determine themes. Disagreements were reconciled via discussion.

RESULTS

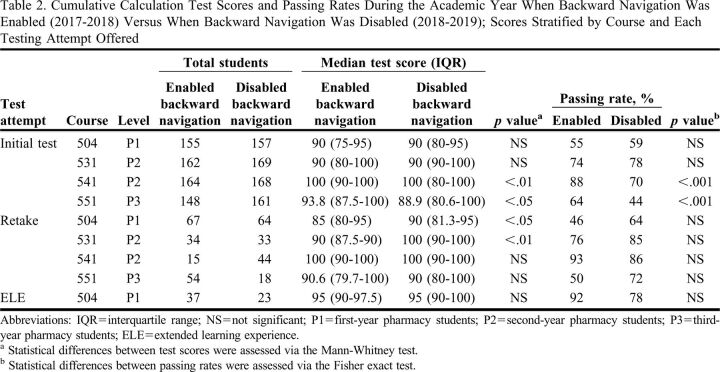

Student test scores and passing rates on comprehensive calculation examinations administered in different courses throughout the professional program are noted in Table 2. As our program is competency based, students needed to score a 90% or better on each calculation examination to pass the assessment. The extended learning experience data (third attempt) from only one class are shown due to the low numbers of students completing an extended learning experience test in other courses.

Table 2. Cumulative Calculation Test Scores and Passing Rates During the Academic Year When Backward Navigation Was Enabled (2017-2018) Versus When Backward Navigation Was Disabled (2018-2019); Scores Stratified by Course and Each Testing Attempt Offered

The impact of disabled backward navigation varied by course. In the first-year pharmacy calculations course (PharDSci 504) and the second-year fall applied patient care laboratory course (Pharm 531), student performance was not affected by disabled backward navigation on the initial attempt or the extended learning experience attempt. Interestingly, students in the first-year calculations course and second-year fall applied patient care course performed significantly better when backward navigation was disabled on the retakes (p<.05 and <.01 for first-year calculations and second-year fall applied patient care courses, respectively). Students with disabled backward navigation on the initial tests in the second-year spring and third-year fall applied patient care laboratory courses (Pharm 541 and Pharm 551) performed significantly worse than the prior year when backward navigation was allowed (p<.01 and <.05, respectively). However, there were no significant differences in performance on the retakes for these courses.

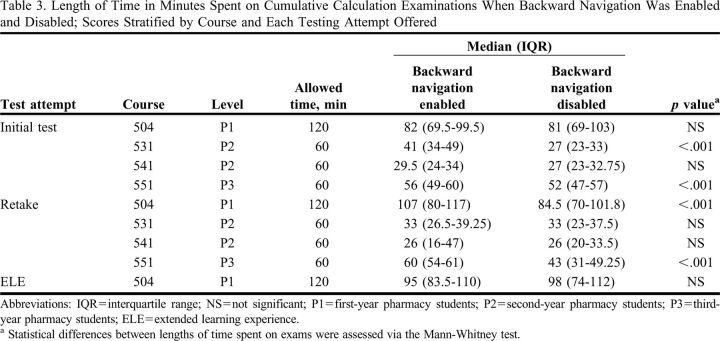

The duration of time students spent on their cumulative calculation examinations when backward navigation was enabled and disabled is reported in Table 3. The amount of time spent on examinations either significantly decreased or had no change following the disabling of backward navigation.

Table 3. Length of Time in Minutes Spent on Cumulative Calculation Examinations When Backward Navigation Was Enabled and Disabled; Scores Stratified by Course and Each Testing Attempt Offered

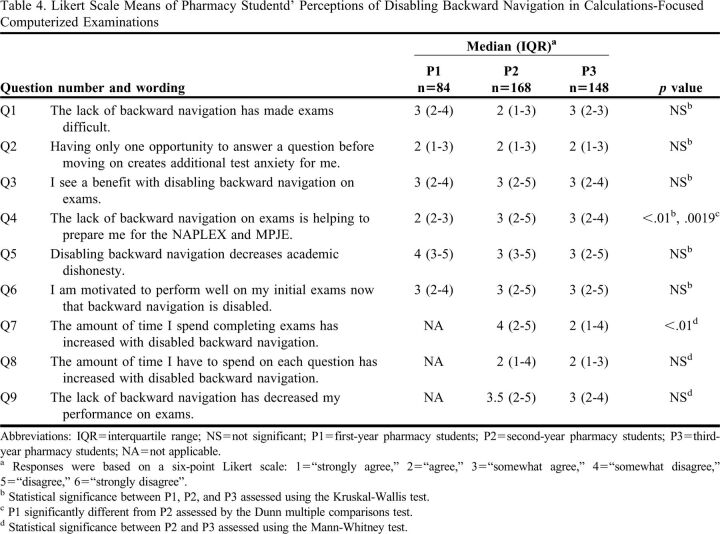

Quantitative results of student perceptions collected through the administered survey are presented in Table 4. The response rates to the survey were 50% (84/168) for P1 students, 99% (168/169) for P2 students, and 91% (148/162) for P3 students. The higher response rates for the P2 and P3 students were likely the result of their cohorts being involved in providing input on the testing policy change. As the P1 students were new to the program and likely had no previous experience in pharmacy school with enabled backward navigation, they were less vocal about the change. Perceptions were generally consistent between the three professional years, even though the P1 students had no past experience with enabled navigation within pharmacy curricula. Students perceived that disabled backward navigation increased examination difficulty, with 74% of P1, 79% of P2, and 80% of P3 students responding that they “strongly agree,” “agree,” or “somewhat agree” with the statement, “The lack of backward navigation has made examinations difficult.” When it came to the benefits of the testing change, 66% of students agreed that they saw a benefit, but opinions were divided on whether disabled backward navigation helped to decrease academic dishonesty (50% reported that they “somewhat disagree,” “disagree,” or “strongly disagree”). A greater number of P1 students (52%) responded “agree” or “strongly agree” with the idea that having one attempt at a question helps to prepare them for the NAPLEX/MPJE in comparison to P2 students (33% of whom responded “agree” or “strongly agree”; p=.0019). Fifty-eight percent of students agreed (“strongly agree,” “agree,” or “somewhat agree”) that they were motivated to perform well on initial examinations now that backward navigation was disabled.

Test anxiety was a major concern noted by the students, with 84% of students reporting that they agreed (“strongly agree,” “agree,” or “somewhat agree”) with the statement, “Having only one opportunity to answer a question before moving on creates additional test anxiety for me.” The P2 and P3 students, who could compare with the previous academic year, reported that they spent more time on each question but not necessarily more time completing examinations. Interestingly, 51% of P3 students agreed (“strongly agree” or “agree”) with the statement, “The amount of time I spend completing examinations has increased with disabled backward navigation,” versus 38% of P2 students (p<.01).
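The response rates reported above follow directly from the respondent and enrollment counts. The short sketch below reproduces that arithmetic; the dictionary and function names are illustrative, and only the counts come from the text.

```python
# (respondents, enrolled) per professional year, as reported in the text.
SURVEY_COUNTS = {"P1": (84, 168), "P2": (168, 169), "P3": (148, 162)}


def response_rate(respondents: int, enrolled: int) -> float:
    """Response rate as a percentage of enrolled students."""
    return 100 * respondents / enrolled


for cohort, (respondents, enrolled) in SURVEY_COUNTS.items():
    print(f"{cohort}: {response_rate(respondents, enrolled):.0f}%")
# P1: 50%
# P2: 99%
# P3: 91%
```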

Table 4. Likert Scale Means of Pharmacy Students’ Perceptions of Disabling Backward Navigation in Calculations-Focused Computerized Examinations

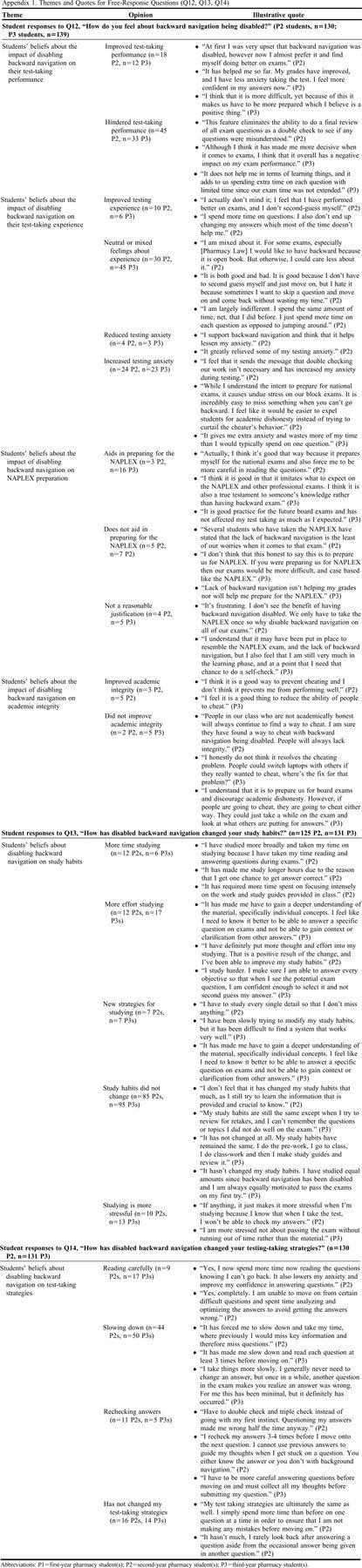

To understand how the move to disabled backward navigation impacted the P2 and P3 students who had previous experience with enabled backward navigation, free-response questions were included on the P2 and P3 surveys. Student pharmacist responses to Question 12 (“How do you feel about backward navigation being disabled?”), Question 13 (“How has disabled backward navigation changed your study habits?”), and Question 14 (“How has disabled backward navigation changed your test-taking strategies?”) are displayed in Appendix 1. The final free-response question, Question 15 (“What are other ways the college could help prepare students for the NAPLEX?”), was used for informational purposes for the college and for improving student preparedness, so these results were not included in this paper.

DISCUSSION

The decision to disable backward navigation on all examinations collegewide required considerable efforts to gain student buy-in. In the semester prior to instituting the change, student thoughts and ideas were gathered about how to address academic dishonesty concerns and also how to best prepare students for future licensure examinations. A committee was created in which students had an integral role. Having student input was pivotal in getting student buy-in with the removal of backward navigation. This did not completely alleviate student anxiety or pushback, however. As a result of these student concerns, a student and faculty group formed that led to the development of this study to evaluate student examination performance and perceptions following the disabling of backward navigation.

As shown in Table 2, the impact of disabled backward navigation on student performance varied by year in the program. No significant differences in initial test performance were seen for students earlier in the program (PharDSci 504 and Pharm 531 courses), while significant decreases in initial performance were seen for P2 and P3 applied patient care courses (Pharm 541 and Pharm 551). One possible explanation for this observed difference is related to engrained test-taking strategies, where the more senior students had at least one year of experience with being able to go back and review questions before submitting the examination. The senior students, particularly the P3 students, may have developed a test-taking routine when backward navigation was enabled that was disrupted by the change in testing policy. Interestingly, the median test score for the Pharm 541 course was identical between the years when backward navigation was enabled versus disabled, but the lower end of the interquartile range was much lower when backward navigation was disabled. This highlights that disabling backward navigation impacted the lower-performing students more than the higher-performing students within the same cohort.
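The observation that a median can stay the same while the lower quartile drops can be illustrated with a small sketch. The scores below are entirely hypothetical and are not the study data (which appear in Table 2); the function name is also illustrative.

```python
from statistics import median, quantiles


def median_and_quartiles(scores):
    """Return (median, first quartile, third quartile) for a list of scores."""
    q1, _, q3 = quantiles(scores, n=4)
    return median(scores), q1, q3


# Hypothetical percentage scores for two cohorts of seven students each.
enabled = [80, 90, 90, 90, 100, 100, 100]
disabled = [60, 70, 90, 90, 100, 100, 100]

med_enabled, q1_enabled, _ = median_and_quartiles(enabled)
med_disabled, q1_disabled, _ = median_and_quartiles(disabled)

# Same median, but the lower quartile is lower for the second cohort,
# meaning the change is concentrated among lower-scoring students.
print(med_enabled == med_disabled)  # True
print(q1_disabled < q1_enabled)     # True
```

In this constructed example only the two lowest scores differ between cohorts, so a comparison of medians alone would miss the shift.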

An opposite pattern emerged for students completing retakes. Students had significantly better performance on retakes in the PharDSci 504 and Pharm 531 courses earlier in the program when backward navigation was disabled, while no differences were seen for retake performance for the later Pharm 541 and Pharm 551 courses. This difference is possibly due to the perceived difference in the stakes of the examinations, as shown by the student perceptions of increased examination difficulty after disabling backward navigation (Table 4). The student perceptions of increased difficulty likely led to changes in student behavior related to studying for examinations.

The mixed results of this study contrast with recently published reports that have indicated that eliminating backward navigation on examinations does not significantly affect examination scores. A study by Caetano and colleagues showed no significant differences in overall item difficulty before and after disabling backward navigation. When comparing examination scores, they found a significant decrease of 0.95% in examination scores for the highest-performing students, but the study did not demonstrate that disabling backward navigation had a significant impact on overall item performance or examination results.2 Another study by Cochran and colleagues looked at six examinations in which backward navigation had been eliminated, and they found no significant reduction in examination scores.1 Importantly, though, these studies are from programs that use a fundamentally different grading model, specifically a traditional A-F grading scheme, in comparison to our competency-based model. As the design of assessments varies between traditional and competency-based programs, the results presented herein may only be generalizable to other competency-based programs.

The overall time needed for students to complete their examinations (Table 3) was generally lower after disabling backward navigation, despite students’ perception that they spent longer on each examination question. This may be explained by the fact that disabling backward navigation stopped students from reviewing their examination again before submitting. Our results align with data reported by Cochran and colleagues that showed the average time that students spent on each question was significantly reduced on two of the six examinations.1

When backward navigation was enabled, students freely navigated between questions on an examination. This allowed for potential academic dishonesty by letting students skip to the same question as their neighbor. Disabling backward navigation along with instituting question randomization greatly decreases the likelihood of being on the same question as one’s neighbor at the same time. Pinpointing the best way to address academic dishonesty can be difficult given how prevalent it may be among pharmacy students. One study that assessed the prevalence of academic dishonesty found that 16.3% of students admitted to cheating during pharmacy school, and approximately 74% admitted that they or their classmates worked on an individual assignment with a friend.7 Another study examined gender-based differences in cheating;8 it found no gender-based differences in cases of admitted cheating or academic dishonesty. Yet, that study did find that the female students surveyed reported witnessing cheating more than male students, and the male students surveyed may have had a more lenient perception toward academically dishonest behavior.8 Another study by Monteiro and colleagues reviewed social networks involved in cheating, and they concluded that medical students are involved in social networks of cheating that increase over time and are more prevalent in the fifth year (17.3%) compared with the first year (3.4%).9 However, if educators continue to raise awareness of how testing modifications can be perceived by students in order to foster a professional environment while simultaneously decreasing stress associated with pharmacy school, a positive shift in culture could result.10

Limitations of this study include that only one set of data was collected at one institution, and we only measured performance on comprehensive calculation examinations with assessments that were not the same between years. Although the same learning objectives were assessed, it is possible that differences between the cohorts led to the differences seen on the examination scores. We did not attempt to stratify student performance by incoming grade point average or performance in math-focused prerequisite courses. In addition, our use of historical controls could also be impacted by a multitude of other factors, such as slight variations in teaching strategies and/or use of different student resources between years of data collection. It may also be possible that the number of students who had previous experience with backward navigation on computerized examinations varied between compared cohorts. As our study focused solely on calculation assessments, it is also possible that math-based classes may be impacted differently by disabled backward navigation than other course types within a PharmD program. Further, our findings may not be generalizable to other institutions because the competency-based assessment model is inherently different from standard grading. Furthermore, our competency-based assessment model provides students with three attempts to demonstrate competency, while other competency-based programs have their own unique systems to remediate students. The number of attempts given to demonstrate competency likely impacts students’ motivations as the stakes of the assessment increase. In addition, the student survey only assessed students’ opinions after backward navigation was disabled and did not assess students’ opinions prior to the testing policy change. Other limitations related to the qualitative data analysis include researchers’ personal biases and the volume of data associated with the study.11

CONCLUSION

The impact of disabled backward navigation was evaluated in four courses, each of which assessed calculations through a comprehensive examination. When backward navigation was disabled on initial attempts, students performed worse in two of the four classes evaluated. However, when backward navigation was disabled on assessment retakes, students performed better in two of the four classes. This may highlight how student behaviors may change as the stakes of an assessment increase. The time students took completing an assessment either stayed the same or decreased significantly when backward navigation was disabled depending on the specific course and assessment attempt. Student perceptions of the disabling of backward navigation were negative in all professional years. Although most students believed their study habits did not change, almost all students (90%) noted changes in their test-taking strategies, specifically that they read questions more carefully and no longer second-guessed their answers.

Appendix 1. Themes and Quotes for Free-Response Questions (Q12, Q13, Q14)

REFERENCES

- 1. Cochran G, Foster J, Klepser D, et al. The impact of eliminating backward navigation on computerized exam scores and completion time. Am J Pharm Educ. 2020;84(12):8034. doi: 10.5688/ajpe8034. Accessed November 15, 2022.

- 2. Caetano M, Pawasauskas J. A retrospective analysis of the impact of disabling item review on item performance on computerized fixed-item tests in a doctor of pharmacy program. Curr Pharm Teach Learn. 2020;12(5):539-543. doi: 10.1016/j.cptl.2020.01.018. Accessed November 15, 2022.

- 3. Karibyan A, Sabnis G. Student perceptions of computer-based testing using ExamSoft. Curr Pharm Teach Learn. 2021. doi: 10.1016/j.cptl.2021.06.018. Accessed November 15, 2022.

- 4. Elsalem L, Al-Azzam N, Jum’ah A, et al. Stress and behavioral changes with remote e-exams during the COVID-19 pandemic: a cross sectional study among undergraduates of medical sciences. Annals of Medicine and Surgery. 2020;60:271-279. doi: 10.1016/j.amsu.2020.10.058. Accessed November 15, 2022.

- 5. Bray BS, Remsberg CM, Robinson JD, Wright SK, Muller SJ, Maclean LG, Pollack GM. Implementation and preliminary evaluation of an honours-satisfactory-fail competency-based assessment model in a doctor of pharmacy programme. FIP Pharmacy Education. 2017;17(1):143-153.

- 6. Ulin PR, Robinson ET, Tolley EE. Qualitative methods in public health: a field guide for applied research. San Francisco, CA: Jossey-Bass; 2005.

- 7. Rabi SM, Patton LR, Fjortoft N, Zgarrick DP. Characteristics, prevalence, attitudes, and perceptions of academic dishonesty among pharmacy students. Am J Pharm Educ. 2006;70(4):73. doi: 10.5688/aj700473

- 8. Ip EJ, Pal J, Doroudgar S, Bidwal MK, Shah-Manek B. Gender-based differences among pharmacy students involved in academically dishonest behavior. Am J Pharm Educ. 2018;82(4):6274. doi: 10.5688/ajpe6274

- 9. Monteiro J, Silva-Pereira F, Severo M. Investigating the existence of social networks in cheating behaviors in medical students. BMC Med Educ. 2018;18(1):193. Published August 9, 2018. doi: 10.1186/s12909-018-1299-7

- 10. Tak C, Henchey C, Feehan M, Munger MA. Modeling doctor of pharmacy students’ stress, satisfaction, and professionalism over time. Am J Pharm Educ. 2019;83(9):7432. doi: 10.5688/ajpe7432

- 11. Anderson C. Presenting and evaluating qualitative research. Am J Pharm Educ. 2010;74(8):141. doi: 10.5688/aj7408141