Abstract

Objective. To determine the association between pharmacy practice didactic course examinations and performance-based assessments with students’ performance during their advanced pharmacy practice experiences (APPEs).

Methods. This retrospective analysis included data from the graduating classes of 2018 to 2020. Students were coded as APPE poor performers (final course grade <83%) or acceptable performers. Assessments in pharmacy practice didactic and skills-based courses in students’ second and third years were included in the analysis, with thresholds corresponding to grade cutoffs. The association of poor performance on mean examination scores and performance-based assessments with APPE poor performance was calculated.

Results. The three classes included 403 graduates; sample sizes for individual analyses ranged from 254 to 403. There were 49 students (12%) who met the criteria for poor performance in the APPE year. The proportion of students with mean examination scores <83% was significantly higher among APPE poor performers for six of the seven pharmacy practice didactic courses; at the <78% threshold, this was true for five of the seven courses. Performance-based assessments that were significantly associated with APPE poor performance often required critical thinking.

Conclusion. This evaluation demonstrated a gap in the identification of students with APPE poor performance who did not fail a didactic course. The findings suggest that the pre-APPE curriculum should focus on assessments that include critical thinking. These methods could be used by other pharmacy programs to find components of their curricula that help identify students who need additional support prior to the APPE year.

Keywords: advanced pharmacy practice experience, skills-based assessment, academic performance, pharmacy practice

INTRODUCTION

It is crucial for schools and colleges of pharmacy to promote student success in advanced pharmacy practice experiences (APPEs) by monitoring student performance in the pre-APPE curriculum and providing remediation as needed.1 When students require remediation prior to or during APPEs, that remediation requires intensive time and resources.2,3 Determining a process that guides remediation decisions could help programs in planning for and appropriately allocating limited resources. This begins with identifying factors within the pre-APPE curriculum that are associated with student performance during APPEs.

Thus far, evaluations that have examined performance in the pre-APPE curriculum have centered on entrance demographics and end-of-semester outcomes, such as a failed course or examination, and their relationships to APPE readiness or performance. Call and colleagues examined a range of factors, including grade point average (GPA), course grades, performance-based assessment and examination scores, professionalism issues on introductory pharmacy practice experiences (IPPEs), and academic honor code violations.4 Nyman and colleagues analyzed the predictive value of student demographics, along with admission and didactic performance measures.5 Both studies found that two factors, aggregate pharmacy education knowledge-based variables and the entering age of students, correlated with APPE readiness more strongly than skills-based variables, admission measures, or other student demographics.4,5

Medical educators have also examined indicators that are predictive of medical student performance. Efforts have focused on four general categories: demographics (eg, gender), other background factors (eg, college major), performance/aptitude (eg, medical college admission test scores), and noncognitive factors (eg, curiosity).6-10 The pass-fail grading system used by many medical schools makes it challenging to identify performance predictors within preclerkship curricula. An evaluation at Harvard Medical School examined the association between how often first-year medical students scored in the bottom quartile on major examinations and their subsequent academic and clinical performance. The number of appearances in the bottom quartile in year one was associated with poor performance on knowledge-based assessments and poor clinical performance during experiential rotations.11

The literature has provided mixed results on the association between performance-based assessments within skills-based courses, which are fundamental components of Doctor of Pharmacy (PharmD) curricula, and APPE readiness. Additionally, despite these previous evaluations, some students perform poorly during their APPE years and are not identified in the pre-APPE curriculum. This current evaluation aims to determine the association between pharmacy practice didactic course examinations and performance-based assessments with students’ performance during their APPEs.

METHODS

The University of Wisconsin-Madison School of Pharmacy is a four-year PharmD program. The first three years of the program include didactic coursework, skills-based coursework, and IPPEs. Throughout their second (P2) and third (P3) years in the program, students are required to take seven didactic pharmacy practice courses: pharmacokinetics, pharmacotherapy (four courses), nonprescription medications, and drug literature evaluation. Across the didactic courses, student assessments consist of examinations and lower-stakes assignments, such as problem sets and quizzes. The pharmacy practice skills-based course series, which begins in the second year of the program, includes the Integrated Pharmacotherapy Skills courses (I-IV). The skills-based courses prepare students for APPEs through practice and skill development, along with formative and summative assessment of pharmacy practice skills through simulated patient and provider encounters.12 Each of the Integrated Pharmacotherapy Skills courses consists of approximately eight one-hour discussions and eight three-hour laboratory sessions spaced throughout the semester. For this analysis, didactic and skills-based courses related to the pharmacy practice sequence were included. The courses and variables are further described in Appendix 1.13-15 Briefly, for this analysis, unweighted mean examination scores were used as the primary indicator for didactic course performance. During the fourth year of the program (the APPE year), students are expected to care for patients in various clinical settings, primarily across Wisconsin, with supervision from a licensed pharmacist. The APPE year is made up of the four required core APPEs per the 2016 Standards (ie, acute care, ambulatory care, community pharmacy, and health-system pharmacy) and three to four elective rotations, which are each six weeks in duration.

This evaluation is a retrospective analysis of data from the graduating classes of 2018, 2019, and 2020. Data were extracted from the University of Wisconsin-Madison’s learning management system and an established online clerkship database. The last APPE for the graduating class of 2020 was dropped from this analysis, given the changes to rotations and stress associated with the onset of the COVID-19 pandemic. To preserve the greatest number of students, all students were included in the analysis, regardless of progression irregularities. Because of progression irregularities and the mixed availability of data (resulting from a campus change in learning management systems and curricular changes), there were several instances of missing data, including one semester’s data in one course. While all students were retained in the analysis, there was no imputation for missing data.

To prepare the data for analysis, the student database with final APPE scores was coded to differentiate APPE poor performers from APPE acceptable performers. To better identify students at risk of poor performance, higher mean examination-score thresholds of <78% and <83% were used rather than a traditional definition of failure. For APPEs, students with a final grade <83% on at least one APPE were coded as poor performers. All other students (ie, those who scored ≥83%) were considered acceptable performers. Similarly, data were coded to identify poor performers in seven didactic courses and four skills-based courses (Appendix 1). For didactic courses using mean examination scores, two thresholds of <83% and <78% were used to define poor performers, as these are the lower thresholds used by the campus for a grade of B (ie, GPA of 3.0) and BC (ie, GPA of 2.5), respectively (ie, with <83%, a student would earn less than a B and be considered a poor performer for this analysis). For performance-based assessments, two thresholds of <78% and <70% were used, as these are the cutoffs used by the campus for a BC and C (ie, GPA of 2.0), respectively. Compared to the didactic cutoffs, the thresholds for performance-based assessments were lowered to allow for better identification of students who were struggling and for greater generalizability to institutions that use a pass-fail grading system for higher-stakes performance-based examinations. Students who received less than a C in at least one didactic course were also identified as poor performers.
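The coding rules in this paragraph can be illustrated with a short sketch. This is not the authors' code (the analysis was run in Stata); the function names, course labels, and example scores are hypothetical. It assumes that a student counts as an APPE poor performer when any final APPE grade falls below 83%, and it skips courses with no recorded examinations to mirror the decision not to impute missing data.

```python
from statistics import mean

# Thresholds taken from the text (percent scores).
APPE_POOR_THRESHOLD = 83.0
DIDACTIC_THRESHOLDS = (83.0, 78.0)  # lower bounds for a B and a BC


def is_appe_poor_performer(appe_grades):
    """Assumption: any final APPE grade below 83% marks a poor performer."""
    return any(grade < APPE_POOR_THRESHOLD for grade in appe_grades)


def didactic_flags(exam_scores_by_course, threshold):
    """Flag each course whose unweighted mean examination score is below
    the threshold. Courses without scores are skipped (no imputation)."""
    return {
        course: mean(scores) < threshold
        for course, scores in exam_scores_by_course.items()
        if scores
    }


def count_poor_courses(exam_scores_by_course, threshold):
    """Number of courses (0-7 in the paper) with a poor mean examination score."""
    return sum(didactic_flags(exam_scores_by_course, threshold).values())


# Hypothetical student with three of the seven didactic courses recorded.
student = {
    "pharmacokinetics": [80, 84],      # mean 82.0
    "pharmacotherapy_1": [76, 79],     # mean 77.5
    "nonprescription": [90, 88],       # mean 89.0
    "drug_literature": [],             # missing data, skipped
}

for threshold in DIDACTIC_THRESHOLDS:
    print(threshold, count_poor_courses(student, threshold))
```

The same student can be a poor performer at the <83% threshold in a course and an acceptable performer at the <78% threshold, which is why the two thresholds are reported separately.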

Descriptive statistics were calculated. Fisher exact tests were used to evaluate the relationship of poor performance in didactic courses and on performance-based assessments with APPE poor performance, as both the independent and dependent variables were binary. A subanalysis using the Fisher exact test was performed to understand the relationship between the number of didactic courses in which a student performed poorly on mean examination scores and APPE poor performance. A sensitivity analysis was performed using logistic regression, including all didactic courses for the outcome of APPE poor performance. All data management and analyses were conducted using StataSE 16 (StataCorp LLC). The University of Wisconsin-Madison Educational Institutional Review Board certified this work as a quality assurance project.
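For readers unfamiliar with the Fisher exact test, the following is a minimal standard-library sketch of the two-sided test for a 2x2 table. It is an illustration only, not the authors' Stata procedure. It uses the common convention of summing the probabilities of all tables that are no more likely than the observed one, which may differ from other two-sided definitions. The example table is built from counts reported in the Results (9 of 49 APPE poor performers and 21 of 354 APPE acceptable performers earned less than a C in at least one didactic course).

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]].

    With row and column totals fixed, the top-left cell follows a
    hypergeometric distribution. The p-value sums the probabilities of
    every table that is as likely as, or less likely than, the observed one.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total = row1 + row2
    denominator = comb(total, col1)

    def prob(x):
        # Probability that the top-left cell equals x.
        return comb(row1, x) * comb(row2, col1 - x) / denominator

    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    p_observed = prob(a)
    tolerance = 1 + 1e-7  # guards against floating-point ties
    p_value = sum(
        p
        for p in (prob(x) for x in range(lowest, highest + 1))
        if p <= p_observed * tolerance
    )
    return min(1.0, p_value)


# Rows: APPE poor performers, APPE acceptable performers.
# Columns: less than a C in at least one didactic course, otherwise.
p = fisher_exact_two_sided(9, 49 - 9, 21, 354 - 21)
print(f"p = {p:.4f}")
```

Exact tests of this kind are a natural choice when some cells are small, as is the case here with only nine students in the first cell.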

RESULTS

For the graduating classes of 2018, 2019, and 2020, 403 students graduated from the program. However, due to progression and data availability, sample sizes for individual analyses ranged from 254 to 403. There were 49 students (12%) who met the criteria for poor performance in the APPE year. Of those 49 students, 38 students performed poorly in one APPE, six performed poorly in two APPEs, four performed poorly in three APPEs, and one performed poorly in five APPEs. There were six unique students who failed one APPE during their fourth year. The number of APPE rotations in which a student received a grade <83% was 18 for the graduating class of 2018, 20 for 2019, and 29 for 2020. During this period, all students who began APPEs graduated.
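The counts in this paragraph can be cross-checked with simple arithmetic. The sketch below uses only the figures reported above; the variable names are illustrative.

```python
# Students grouped by how many APPEs they performed poorly in (from the text).
students_by_poor_appe_count = {1: 38, 2: 6, 3: 4, 5: 1}
# Rotations graded <83%, by graduating class (from the text).
poor_rotations_by_class = {2018: 18, 2019: 20, 2020: 29}
graduates = 403

poor_students = sum(students_by_poor_appe_count.values())
poor_rotations = sum(
    appes * students for appes, students in students_by_poor_appe_count.items()
)

# The per-student breakdown and the per-class breakdown should agree.
assert poor_students == 49
assert poor_rotations == sum(poor_rotations_by_class.values())

percent_poor = round(100 * poor_students / graduates, 1)
print(poor_students, poor_rotations, percent_poor)
```

Both breakdowns give the same total number of poorly performed rotations, and the share of APPE poor performers rounds to the 12% reported.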

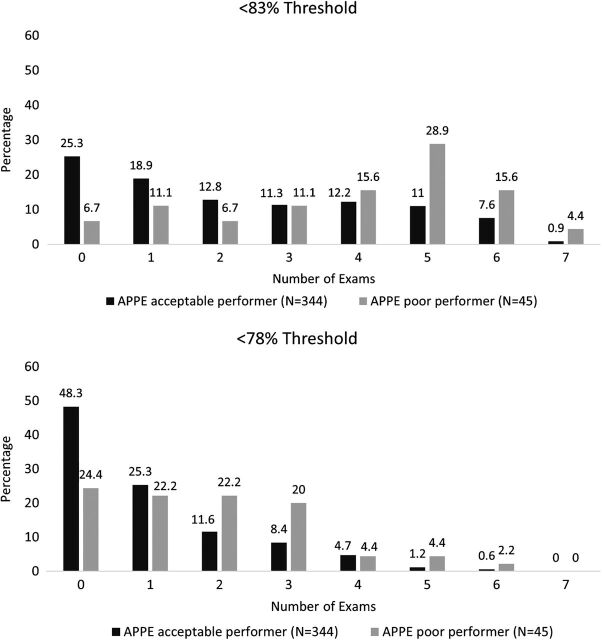

When comparing didactic course performance to APPE performance, nine APPE poor performers (18.4%) and 21 APPE acceptable performers (6%) earned less than a C in at least one didactic course (p=.006). At both the <83% and <78% thresholds, there was a significant association between the number of pharmacy practice didactic courses in which students had poor mean examination scores and poor performance in APPEs (Figure 1).

Figure 1.

Number of poor didactic examination means by APPE performance. The numbers 0-7 on the x-axes indicate the number of courses with mean examination scores less than the defined threshold. The numbers above the bar chart indicate percentage of students. Abbreviations: APPE=advanced pharmacy practice experience.

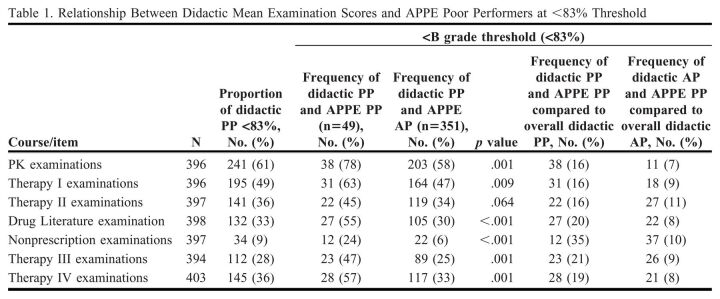

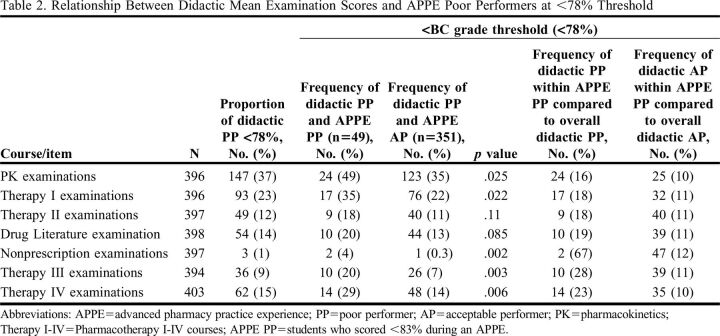

When reviewing the relationship between didactic mean examination scores and APPE performance, the proportion of didactic poor performers was significantly higher among APPE poor performers compared to APPE acceptable performers (Tables 1 and 2). This held for six of the seven pharmacy practice didactic courses at the <83% threshold and for five of the seven courses at the <78% threshold. The sensitivity analysis using logistic regression confirmed the primary analysis in that the same six courses were significant and maintained a positive relationship between didactic and APPE performance (ie, students with a <83% mean examination score were more likely to perform poorly in APPEs).

Table 1.

Relationship Between Didactic Mean Examination Scores and APPE Poor Performers at <83% Threshold

Table 2.

Relationship Between Didactic Mean Examination Scores and APPE Poor Performers at <78% Threshold

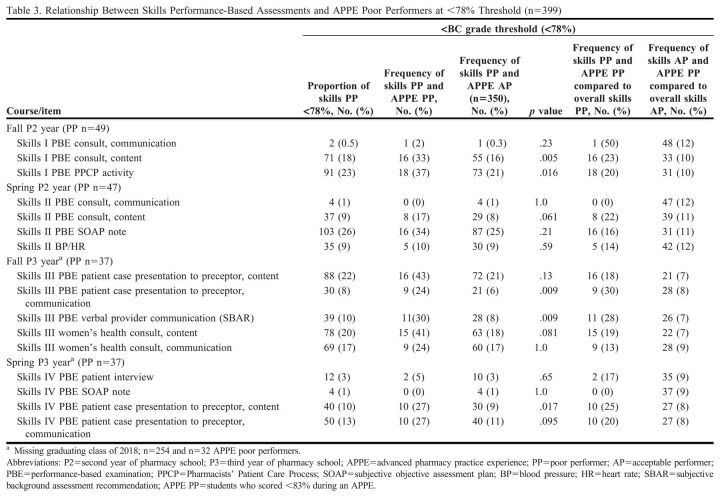

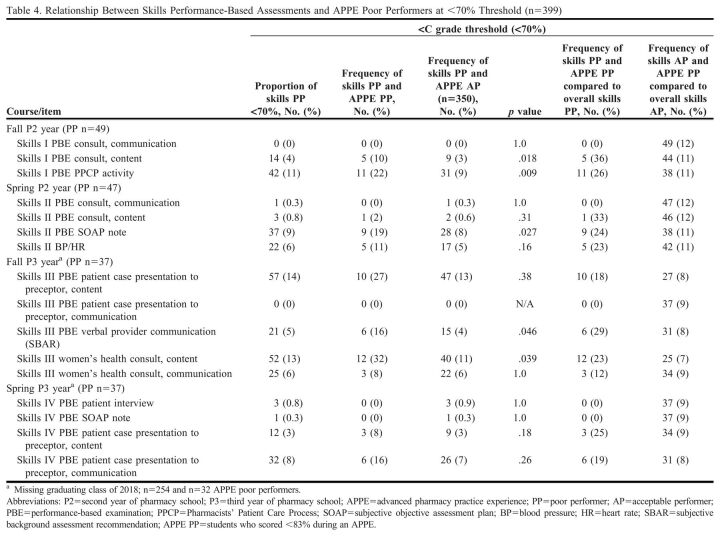

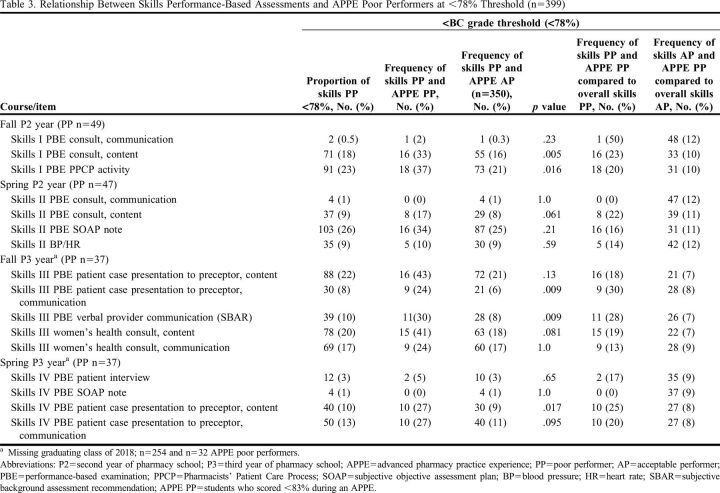

Each semester of the skills-based curriculum included performance-based assessments that were significantly associated with APPE poor performance as well as assessments that were not (Tables 3 and 4). Assessments that tended to be significant required critical clinical thinking by the student. Additionally, assessments were more likely to be significant the first time a skill was summatively assessed, while performance on subsequent similar assessments tended not to differentiate APPE poor performers from APPE acceptable performers.

Table 3.

Relationship Between Skills Performance-Based Assessments and APPE Poor Performers at <78% Threshold (n=399)

Table 4.

Relationship Between Skills Performance-Based Assessments and APPE Poor Performers at <70% Threshold (n=399)

DISCUSSION

This evaluation found that students at risk of future poor performance on APPEs may be identified by assessing multiple markers of performance within didactic and skills-based courses. Specifically, this analysis highlights that there are opportunities to identify students at risk of poor performance on APPEs in the absence of failing a course, suggesting that there has been a gap in identifying these students. To better identify students at risk of poor performance in an APPE, we used higher mean examination-score thresholds of <78% and <83%. The risk of APPE poor performance for students in this new score range was greater when students repeatedly scored between 70% and 83%. We also found that poor didactic performance was associated with APPE poor performance, strengthening this known relationship.4,5 By using a higher threshold, additional at-risk students could be easily identified through early warning and intervention systems. Pharmacy programs could intervene earlier to help at-risk students address deficits in knowledge and skills in a consistent and coordinated manner.

Skills-based items that correlated with APPE performance were related to the application of content and critical thinking. Examples include identification and resolution of drug-related problems and clinical documentation, as compared to communication and physical assessment skills (ie, blood pressure and heart rate measurement), which do not require the same level of critical thinking. This illustrates the importance of assessment using direct observation of skills, and the importance of these activities in the curriculum. This finding is inconsistent with the conclusions of Call and colleagues, who did not find an association between skills practicum failure and poor performance on APPEs and therefore recommended against the use of performance-based assessments as a determinant of progression.4

Compared with previously published literature, we evaluated variables within pharmacy practice courses at a more granular level, particularly within skills-based courses. We were able to determine that some activities that were significant for APPE performance in earlier skills-based courses were no longer significant in later courses.4 This suggests that students who struggle with critical thinking and the application of new skills in the pre-APPE curriculum may struggle when progressing to the APPE year, when they are expected to perform skills in a new environment with potentially new disease states and higher patient complexity, and with higher stress from patient care implications. Additionally, the discrepancy between this work and that of Call and colleagues may be due to how performance-based assessments were scored and which assessments were evaluated. Call and colleagues used a pass-fail grading scheme, while our evaluation used point-based grading, which allowed for evaluation at specific and higher thresholds (<78% and <70%).4 By using a point-based system, applying higher performance thresholds, and assessing multiple skills within a semester, programs have an opportunity to identify students at risk of poor performance on APPEs, beyond their performance on a single summative assessment.

As required by accreditation standards, our institution’s early warning and intervention policy allows faculty to identify and address academic deficiencies on a course level to promote successful student completion of a course.1 The results of this evaluation suggest these current early warning policies could be refined to look beyond course-level student deficiencies and track student performance on a variety of formative and summative evaluations longitudinally across the curriculum. Other schools and colleges of pharmacy can apply similar analyses to determine specific assessments that correlate to their student APPE performance, with the goal of incorporating the assessments they identify as predictive into their early warning systems. However, other programs will need to identify meaningful and useful thresholds for their courses.

These items can be shared with multiple stakeholders, with the goal of improving identification of students who may benefit from early intervention. For example, instructors may improve student outcomes by focusing resources on support and remediation for students with poor performance on those assessments that correlate with poor performance on APPEs. Student advisors and course coordinators can share their findings with students to encourage student self-reflection, metacognition, and improved performance. Curriculum and assessment committees may wish to undertake curricular mapping efforts and annual surveying of the faculty to ensure identified assessments correlated with APPE poor performance are maintained within a course over time.

There were several variables impacting student performance that we were unable to systematically evaluate. These include students' preferred learning styles, practice experience (eg, internships), mental or physical health challenges, fixed or growth mindset, resilience and grit, and professionalism. The underlying factors contributing to poor performance vary from student to student and may be multifaceted, complex, and interconnected, making them difficult to quantify. This provides an opportunity for pharmacy programs to monitor student performance holistically across the curriculum and identify underlying factors, both academic and nonacademic, for student poor performance. Other factors to explore with a student include their study methods, learning needs, and potential assessment accommodations.16,17 This evaluation also showed that students performing consistently in the BC/C range may perform reasonably well on APPEs, which may be due to a variable not measured or to the complexity of predicting performance. We did not assess student performance in pharmaceutical sciences or social and administrative sciences courses, because our project focused on pharmacy practice courses. It may be valuable to assess student performance across the entire curriculum for a more holistic view.

Future directions of this work include refinement of our current early warning and intervention policy, including the addition or removal of items. Monitoring the rate of APPE poor performers could substantiate the effectiveness of the tracking, interventions, and remediation. Additionally, assessment tools, rubrics, and weighting could be evaluated. Lastly, assessment of student self-efficacy in pharmacy practice skills could be used to triangulate the relationship between didactic scores and performance in practice.

CONCLUSION

This evaluation demonstrated a gap in the identification of students with APPE poor performance who did not fail a didactic course or performance-based assessment. These results could be used to facilitate student self-reflection and improve motivation to promote successful performance in APPEs. This process could also be used by other schools and colleges of pharmacy to identify crucial components within their curriculum in an effort to recognize students in need of additional support and remediation prior to the APPE year.

ACKNOWLEDGMENTS

The authors thank Kim Lintner for her contributions. Funding from the University of Wisconsin Educational Innovation and the Wisconsin Pharmacy Practice Research Initiative provided support for this project.

Appendix 1.

Pharmacy Practice Didactic and Skills-Based Course Descriptions and Related Variable Definitions

REFERENCES

- 1. Accreditation Council for Pharmacy Education. Accreditation Standards and Key Elements for the Professional Program in Pharmacy Leading to the Doctor of Pharmacy Degree. Accreditation Council for Pharmacy Education; 2015. https://www.acpe-accredit.org/pdf/Standards2016FINAL.pdf. Accessed March 16, 2023.

- 2. Martin RD, Wheeler E, White A, Killam-Worrall LJ. Successful remediation of an advanced pharmacy practice experience for an at-risk student. Am J Pharm Educ. 2018;82(9):1051-1057. doi: 10.5688/ajpe6762

- 3. Davis LE, Miller ML, Raub JN, Gortney JS. Constructive ways to prevent, identify, and remediate deficiencies of “challenging trainees” in experiential education. Am J Health Syst Pharm. 2016;73(13):996-1009. doi: 10.2146/ajhp150330

- 4. Call WB, Grice GR, Tellor KB, Armbruster AL, Spurlock AM, Berry TM. Predictors of student failure or poor performance on advanced pharmacy practice experiences. Am J Pharm Educ. 2020;84(10):1363-1370. doi: 10.5688/ajpe7890

- 5. Nyman H, Moorman K, Tak C, Gurgle H, Henchey C, Munger MA. A modeling exercise to identify predictors of student readiness for advanced pharmacy practice experiences. Am J Pharm Educ. 2020;84(5):605-610. doi: 10.5688/ajpe7783

- 6. Adam J, Bore M, Childs R, et al. Predictors of professional behaviour and academic outcomes in a UK medical school: a longitudinal cohort study. Med Teach. 2015;37(9):868-880. doi: 10.3109/0142159X.2015.1009023

- 7. Smith SR. Effect of undergraduate college major on performance in medical school. Acad Med. 1998;73(9):1006-1008. doi: 10.1097/00001888-199809000-00023

- 8. Dunleavy DM, Kroopnick MH, Dowd KW, Searcy CA, Zhao X. The predictive validity of the MCAT exam in relation to academic performance through medical school: a national cohort study of 2001-2004 matriculants. Acad Med. 2013;88(5):666-671. doi: 10.1097/ACM.0b013e3182864299

- 9. Adam J, Bore M, McKendree J, Munro D, Powis D. Can personal qualities of medical students predict in-course examination success and professional behaviour? An exploratory prospective cohort study. BMC Med Educ. 2012;12:69. doi: 10.1186/1472-6920-12-69

- 10. Lee KB, Vaishnavi SN, Lau SKM, Andriole DA, Jeffe DB. “Making the grade:” noncognitive predictors of medical students’ clinical clerkship grades. J Natl Med Assoc. 2007;99(10):1138-1150. https://pubmed.ncbi.nlm.nih.gov/17987918/. Accessed March 13, 2023.

- 11. Krupat E, Pelletier SR, Dienstag JL. Academic performance on first-year medical school exams: how well does it predict later performance on knowledge-based and clinical assessments? Teach Learn Med. 2017;29(2):181-187. doi: 10.1080/10401334.2016.1259109

- 12. Gallimore CE, Porter AL, Barnett SG. Development and application of a stepwise assessment process for rational redesign of sequential skills-based courses. Am J Pharm Educ. 2016;80(8):136. doi: 10.5688/ajpe808136

- 13. Barnett SG, Porter AL, Allen SM, et al. Expert consensus to finalize a universal evaluator rubric to assess pharmacy students’ patient communication skills. Am J Pharm Educ. 2020;84(12):848016. doi: 10.5688/ajpe848016

- 14. Barnett SG, Gallimore C, Kopacek KJ, Porter AL. Evaluation of electronic SOAP note grading and feedback. Curr Pharm Teach Learn. 2014;6(4):516-526. doi: 10.1016/j.cptl.2014.04.010

- 15. Barnett S, Nagy MW, Hakim RC. Integration and assessment of the situation-background-assessment-recommendation framework into a pharmacotherapy skills laboratory for interprofessional communication and documentation. Curr Pharm Teach Learn. 2017;9(5):794-801. doi: 10.1016/j.cptl.2017.05.023

- 16. Chen JS, Matthews DE, Van Hooser J, et al. Improving the remediation process for skills-based laboratory courses in the doctor of pharmacy curriculum. Am J Pharm Educ. 2021;85(7):533-536. doi: 10.5688/ajpe8447

- 17. Vos S, Kooyman C, Feudo D, et al. When experiential education intersects with learning disabilities. Am J Pharm Educ. 2019;83(8):1660-1663. doi: 10.5688/ajpe7468