To the Editor,

We recently read the thought-provoking paper by Azamfirei et al. [1], which delved into the limitations and ethical implications of using ChatGPT in critical decision-making processes. While we appreciate the authors' concerns and the importance of exercising caution when using language models like ChatGPT, we would like to highlight some alternative perspectives on this rapidly evolving technology.

To begin, we agree with the authors that new technologies should not be blindly adopted without proper understanding and evaluation. It is indeed crucial to be aware of the strengths and limitations of tools like ChatGPT, as well as their potential impact on various fields. However, it is also important to consider the remarkable advancements that have been made in recent years and the beneficial applications of language models.

For instance, following two major earthquakes in Turkey, OpenAI's GPT model [2] was employed to identify survivors' locations, as reported on Twitter. As a result, nearly a thousand people were reportedly rescued from the wreckage. This example demonstrates that when used appropriately and with human supervision, language models like ChatGPT can play a significant role in disaster management, public health, and other critical areas.

Furthermore, the authors' comparison of ChatGPT to a self-driving system navigating a rocket to low earth orbit offers a valuable perspective. While it is true that we should not use a tool designed for one purpose in an entirely different context, it is also essential to recognize the potential of these models to be adapted and improved for specific tasks. The development of specialized systems for summarizing scientific articles, for example, is not far-fetched and can significantly benefit researchers and practitioners across disciplines [3].

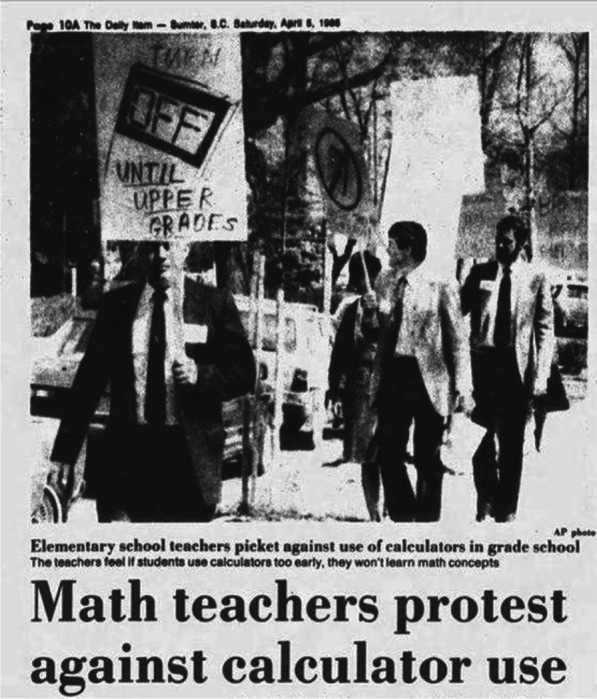

It is worth noting that the adoption of technology often follows a pattern of initial resistance, gradual acceptance, and eventual obsolescence as newer, better solutions emerge [4]. A prime example of this can be seen in the 1988 protest actions by mathematics teachers in the USA, who sought to ban the use of calculators in primary schools (Fig. 1) [5]. Fast forward to the present day, and the use of calculators has become far less prevalent, particularly in educational settings, as more advanced devices have rendered them almost obsolete.

Fig. 1. Teachers protesting against the early use of calculators in elementary schools, stating that it may hinder students' understanding of mathematical concepts [5]

In conclusion, we would like to express our gratitude to Azamfirei et al. for raising essential questions and concerns about the use of language models like ChatGPT. As technology advances at an accelerating pace, it is our collective responsibility to ensure that we understand, evaluate, and harness these tools for the betterment of society. By engaging in constructive discussions like the one initiated by the authors, we can work together to strike a balance between caution and innovation, ultimately making the most of the potential offered by emerging technologies.

Author contributions

Akbasli and Bayrakci collaborated in the conceptualization, design, and writing of the manuscript. Both authors read and approved the final manuscript.

Availability of data and materials

Not applicable, as no datasets were used in the creation of this manuscript.

Declarations

Ethical approval

As this manuscript does not involve the use of human participants, patients, or animals, ethical approval is not applicable.

Competing interests

The authors declare that they have no competing interests of a financial or personal nature that could have influenced the outcome of this study.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Izzet Turkalp Akbasli, Email: izzetakbasli@gmail.com.

Benan Bayrakci, Email: bbenan@yahoo.com.

References

- 1. Azamfirei R, Kudchadkar SR, Fackler J. Large language models and the perils of their hallucinations. Crit Care. 2023;27:120. doi: 10.1186/s13054-023-04393-x.

- 2. Brown T, Mann B, Ryder N, Subbiah M, Kaplan JD, Dhariwal P, et al. Language models are few-shot learners. Adv Neural Inf Process Syst. 2020;33:1877–1901.

- 3. Kieuvongngam V, Tan B, Niu Y. Automatic Text Summarization of COVID-19 Medical Research Articles using BERT and GPT-2. 2020.

- 4. Cascella M, Montomoli J, Bellini V, Bignami E. Evaluating the feasibility of ChatGPT in healthcare: an analysis of multiple clinical and research scenarios. J Med Syst. 2023;47:33. doi: 10.1007/s10916-023-01925-4.

- 5. Lawrance J. Math teachers protest against calculator use. The Daily Item. 1988;10.
