Abstract

Reliably measuring eye movements and determining where the observer looks are fundamental needs in vision science. A classical approach to achieve high-resolution oculomotor measurements is the so-called dual Purkinje image (DPI) method, a technique that relies on the relative motion of the reflections generated by two distinct surfaces in the eye, the cornea and the back of the lens. This technique has traditionally been implemented in fragile, difficult-to-operate analog devices, which have remained the exclusive province of specialized oculomotor laboratories. Here we describe progress on the development of a digital DPI, a system that builds on recent advances in digital imaging to enable fast, highly precise eye-tracking without the complications of previous analog devices. This system integrates an optical setup with no moving components with a digital imaging module and dedicated software on a fast processing unit. Data from both artificial and human eyes demonstrate subarcminute resolution at 1 kHz. Furthermore, when coupled with previously developed gaze-contingent calibration methods, this system enables localization of the line of sight within a few arcminutes.

Keywords: eye movements, saccade, microsaccade, ocular drift, gaze-contingent display

Introduction

Measuring eye movements is a cornerstone of much vision research. Although the human visual system can monitor a large field, examination of fine spatial detail is restricted to a tiny portion of the retina, the foveola, a region that only covers approximately 1° in visual angle, approximately the size of a dime at arm's length. Thus, humans need to continually move their eyes to explore visual scenes: rapid gaze shifts, known as saccades, redirect the foveola every few hundred milliseconds, and smooth pursuit movements keep objects of interest centered on the retina when they move. Oculomotor activity, however, is not restricted to this scale. Surprisingly, eye movements continue to occur even during the so-called periods of fixation, when a stimulus is already examined with the foveola. During fixation, saccades that are just a fraction of a degree (microsaccades) alternate with an otherwise incessant jittery movement (ocular drift) that yields seemingly erratic motion trajectories on the retina (Rolfs, 2009; Kowler, 2011; Poletti & Rucci, 2015).

Thus, eye movements vary tremendously in their characteristics, ranging by over two orders of magnitude in amplitude and three orders of magnitude in speed, from a few arcminutes per second to hundreds of degrees per second. This wide range of motion, together with the growing need to resolve very small movements, poses serious challenges to eye-tracking systems. Although various methods for measuring eye movements have been developed, several factors limit their range of optimal performance. Such factors include changes in the features that are tracked (e.g., the pupil, the iris), translations of the eye such as those caused by head movements, and uncertainty in converting raw measurements into gaze positions in the scene.

A technique that tends to be particularly robust to artifacts from head translation and changes in pupil size, and that combines high resolution with fast dynamics, has long been in use, but it has remained confined to specialized oculomotor laboratories. This method, known as dual Purkinje imaging (DPI), does not track the pupil, as is often done by video eye-trackers (Hooge, Holmqvist, & Nyström, 2016), and relies on cues from more than one surface in the eye, rather than just the cornea (Figure 1A). Specifically, it compares the positions of reflections of an infrared beam of light from the anterior surface of the cornea (the first Purkinje image) and the posterior surface of the lens (the fourth Purkinje image; Figure 1B). As explained elsewhere in this article, these two images move relative to each other as the eye rotates, and their relative motion is little affected by eye translations (Cornsweet & Crane, 1973). Thus, the DPI method enables oculomotor measurements with high spatial and temporal resolution while offering robustness to common potential confounds (Wilson, Campbell, & Simonet, 1992; Yang, Dempere-Marco, Hu, & Rowe, 2002; Drewes, Zhu, Hu, & Hu, 2014).

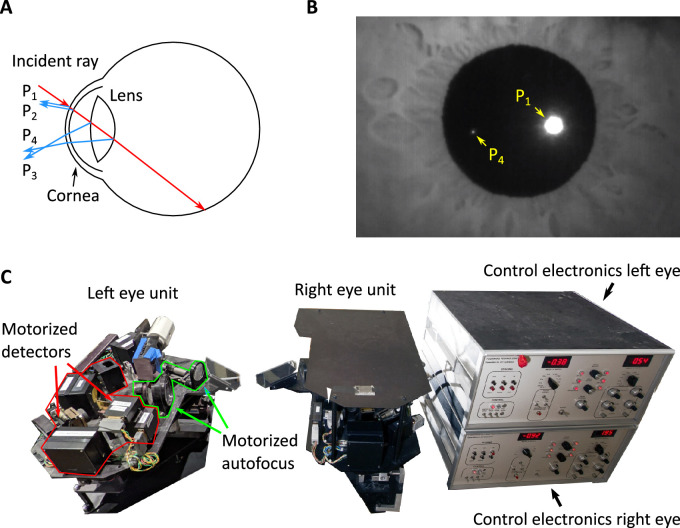

Figure 1.

Purkinje reflections and eye tracking. (A) As it travels through the eye, an incident ray of light gets reflected by the structures that it encounters. The first and second Purkinje reflections (P1 and P2) result from the anterior and posterior surfaces of the cornea. The third and the fourth Purkinje reflections (P3 and P4) originate from the anterior and posterior surfaces of the lens. (B) An image of the Purkinje reflections captured with the prototype described in this article. P1 and P4 can be imaged simultaneously. Here P3 is out of focus, and P2 largely overlaps with P1. Note that P1 is larger and has a higher intensity than P4. (C) An example of an eye-tracker that uses Purkinje reflections, the Generation V Dual Purkinje Eye-tracker (DPI) from Fourward Technologies. This optoelectronic device has mobile components that, when properly tuned, follow P1 and P4 as the eye rotates.

The DPI method has been used extensively in vision science. Applications include examination of the processes underlying the establishment of visual representations (e.g., Deubel & Schneider, 1996; Karn, Møller, & Hayhoe, 1997; Tong, Stevenson, & Bedell, 2008), the perceptual consequences of various types of eye movements (e.g., Hawken & Gegenfurtner, 2001; Hyona, Munoz, Heide, & Radach, 2002; Kowler, 2011), and their motor characteristics (e.g., Kowler, 2011; Najemnik & Geisler, 2008; Snodderly, 1987). Furthermore, this method can deliver measurements with minimal delay, paving the way for procedures of gaze-contingent display control—the updating of the stimulus in real time according to eye movements (McConkie, 1997; Pomplun, Reingold, & Shen, 2001; Geisler & Perry, 1998; Rayner, 1997; Santini, Redner, Iovin, & Rucci, 2007). This is important not just for experimental manipulations, but also because gaze-contingent calibrations allow translating precision into accuracy, that is, they lead to a better determination of where the observer looks in the scene (Poletti & Rucci, 2016). This approach has been used extensively to investigate the purpose of small saccades (Ko, Poletti, & Rucci, 2010; Poletti, Intoy, & Rucci, 2020), the characteristics of foveal vision (Shelchkova, Tang, & Poletti, 2019; Intoy & Rucci, 2020), and the dynamics of vision and attention within the fovea (Intoy, Mostofi, & Rucci, 2021; Zhang, Shelchkova, Ezzo, & Poletti, 2021).

Although several laboratories have performed digital imaging of Purkinje images (Mulligan, 1997; Rosales & Marcos, 2006; Lee, Cho, Shin, Lee, & Park, 2012; Tabernero & Artal, 2014; Abdulin, Friedman, & Komogortsev, 2019), the standard implementation of the DPI principle for eye-tracking relies on analog opto-electronic devices. These systems, originally conceived by Cornsweet and Crane (Cornsweet & Crane, 1973; Crane & Steele, 1985), use servo feedback provided by analog electronics to control the motion of detectors so that they remain centered on the two Purkinje images (Figure 1C). DPI eye-trackers are capable of resolving eye movements with arcminute resolution, but several features have prevented their widespread use. A major limiting factor has been the difficulty of both operating and maintaining the device. Sophisticated calibration procedures are required for establishing the proper alignment of the optical elements, preserving their correct motion, and tuning the system to individual eye characteristics. Because several components move during eye-tracking, these devices are fragile and suffer from wear and stress, requiring frequent maintenance. As a consequence, few DPI eye-trackers are currently in use, most of them in specialized oculomotor laboratories.

The recent technological advances in digital cameras, image acquisition boards, and computational power of digital electronics now enable implementation of the DPI approach in a much more robust and easily accessible device. Here we report recent progress in the development of a digital DPI eye-tracker (the dDPI), a system that uses a fast camera, high-speed image analysis on a dedicated processing unit, and a simple but robust tracking algorithm to yield oculomotor measurements with precision and accuracy comparable to those of the original analog device. In the following sections, we first review the principles underlying DPI eye-tracking. We then describe a prototype dDPI and examine its performance with both artificial and real human eyes.

Measuring eye movements from Purkinje reflections

As shown in Figure 1A, the Purkinje images are the reflections given by the various eye structures encountered by light as it travels toward the retina. Because of the shape of the cornea and lens, the first and fourth Purkinje images, P1 and P4, form on nearby surfaces and can be imaged together without the need for complex optics. Because these two reflections originate from interfaces at different spatial locations and with different shapes, they move at different speeds as the eye rotates. Thus, the relative motion between P4 and P1 provides information about eye rotation. Below we examine the main factors that need to be taken into consideration for using this signal to measure eye movements.

As originally noted by Cornsweet and Crane (1973), the relative motion of P1 and P4 follows a monotonic relation with eye rotation. An approximation of this relation can be obtained, for small eye movements, by using a paraxial model (Cornsweet & Crane, 1973), in which the anterior surface of the cornea and the posterior surface of the lens are treated as a convex and a concave mirror (MC and ML in Figure 2A). Under the assumption of collimated illumination, this model enables analytical estimation of the relative positions, X1 and X4, of the two Purkinje images on the image plane I:

$$X_4 - X_1 \approx d \sin\Delta \tag{1}$$

where d represents the distance between the anterior surface of the cornea and the posterior surface of the lens, and Δ is the angle between the incident beam of light and the optic axis of the eye, which represents here the angle of eye rotation (see Figure 2A).

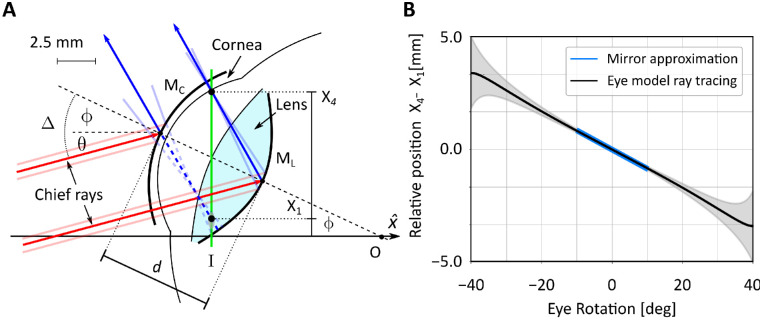

Figure 2.

A paraxial model of Purkinje image formation. (A) The schematic model proposed by Cornsweet and Crane (1973). The anterior surface of the cornea and the posterior surface of the lens are approximated by two mirrors (MC and ML, respectively). The optical axis of the eye is aligned initially with that of the imaging system and is illuminated by a collimated beam tilted by θ. The eye then rotates by ϕ around its rotation center O. Light (here represented by the chief rays in red) is reflected by the cornea and the lens (blue lines) so that it intersects the image plane I at locations X1 and X4, respectively. These are the positions where the Purkinje reflections P1 and P4 form in the image. (B) The predicted relative motion of the two Purkinje reflections as the eye rotates. Both the motion predicted for small eye rotations by the paraxial mirror model in A (blue line) and the motion predicted over a broader range of eye rotations by a more sophisticated model (black line; see Figure 3) are shown. Shaded regions represent standard errors obtained by varying eye model parameters to reflect normal individual variability. Incident light is here assumed parallel to the optical axis of the imaging system (i.e., θ = 0 in A).

In this study, we focus on the case of the unaccommodated eye. Inserting in Equation 1 the value of d taken from the Gullstrand eye model (d = 7.7 mm) and assuming light to be parallel to the optical axis of the imaging system (θ = 0 in Figure 2A), we obtain the blue curve in Figure 2B. These data indicate that for small eye movements (that is, the range of gaze displacement for which the paraxial assumption holds), the relative position of the two Purkinje images varies almost linearly with eye rotation.
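The near-linearity of the paraxial prediction can be checked numerically. The following is a minimal sketch, assuming that the paraxial relation takes the form offset ≈ d·sin(Δ), as in the two-mirror analysis of Cornsweet and Crane, with d = 7.7 mm as quoted above. The function names are illustrative and not part of the original work.

```python
import math

D_MM = 7.7  # cornea-to-posterior-lens distance quoted in the text (Gullstrand model)


def paraxial_offset_mm(rotation_deg, d_mm=D_MM):
    """P4-P1 offset under the ASSUMED paraxial relation: d * sin(rotation)."""
    return d_mm * math.sin(math.radians(rotation_deg))


def linear_offset_mm(rotation_deg, d_mm=D_MM):
    """Small-angle (linear) approximation of the same relation: d * rotation."""
    return d_mm * math.radians(rotation_deg)


def relative_deviation(rotation_deg):
    """Fractional departure of the sine relation from a straight line."""
    lin = linear_offset_mm(rotation_deg)
    return (lin - paraxial_offset_mm(rotation_deg)) / lin


# Slope of the relation near zero, in micrometers per arcminute of rotation.
gain_um_per_arcmin = linear_offset_mm(1.0 / 60.0) * 1000.0

if __name__ == "__main__":
    print(f"gain near 0 deg: {gain_um_per_arcmin:.2f} um/arcmin")
    for angle in (1, 5, 10, 20):
        print(f"{angle:>3} deg: offset {paraxial_offset_mm(angle):.3f} mm, "
              f"nonlinearity {100 * relative_deviation(angle):.2f}%")
```

Under this assumption, the slope near zero is roughly 2.2 µm per arcminute, and the departure from a straight line stays below 1% up to 10° of rotation.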

Although the two-mirror approximation of Figure 2A is intuitive, it holds only for small angular deviations in the line of sight. Furthermore, it ignores several factors of Purkinje image formation, including refraction at the cornea and the lens. To gain a deeper understanding of the positions and shapes of the Purkinje images and how they move across a wide range of eye rotations, we simulated a more sophisticated eye model in optical design software (Zemax OpticStudio). A primary goal of these simulations was to determine the expected sizes, shapes, and strengths of the Purkinje images as the angle of incident light changes because of eye rotations. Because the Purkinje images are reflections, these characteristics depend on both the source of illumination and the optical and geometrical properties of the eye.

We used a well-known schematic eye model (Atchison & Thibos, 2016), developed on the basis of multiple anatomical and optical measurements of human eyes (Atchison, 2006). This model has been previously compared with other eye models and validated against empirical data (Bakaraju, Ehrmann, Papas, & Ho, 2008; Akram, Baraas, & Baskaran, 2018). Briefly, the model consists of four refracting surfaces (anterior and posterior cornea and lens) and features a lens with a gradient refractive index designed to capture the spatial nonhomogeneity of the human lens. In our implementation, we set the model eye parameters to simulate an unaccommodated emmetropic eye with a pupil of 6 mm, as described in Atchison (2006). Because our goal is to develop a system that can be used in vision research experiments, we used light in the infrared range (850 nm), so that it would not interfere with visual stimulation. Finally, because the Purkinje images are formed via reflection, we applied the reflection coefficients of the eye’s multiple layers (e.g., see Chapter 6 in Orfanidis, 2016).

Under exposure to collimated light, eye rotations only change the angle of illumination relative to the optical axis of the eye. We, therefore, modeled eye movements by keeping the model stationary and rotating the illumination and imaging system relative to the eye's center of rotation, a point on the optical axis 13.8 mm behind the cornea (Fry & Hill, 1962). As with most eye-trackers, this simplification assumes a gaze shift to consist of a pure rotation of the eye and neglects the small translations of the eye in the orbit that accompany rotations (∼0.1 mm per 30°) (Demer & Clark, 2019) (see also the analysis of the consequences of eye translations for our prototype in Figure 7). In all cases, we modeled Purkinje image formation on the best focus plane, the plane orthogonal to the optical axis of the eye (I in Figure 2A), where P4 is formed when the illumination source is right in front of the eye.

Figure 7.

Consequences of eye translation. (A) Changes in position of the two Purkinjes as a function of eye translation. (B) Spurious eye rotation (the difference between P1 and P4) measured for a translating eye. (C) Apparatus for head immobilization used in our experiments. The head is immobilized by both a custom dental-imprint bite bar and a magnetic head-rest. (D) Residual eye translations as subjects (N = 3) fixated on a small cue for 5 seconds. Head movements were measured by means of a high-resolution motion capture system that tracked markers placed on the helmet. The three panels show head positions on the three axes.

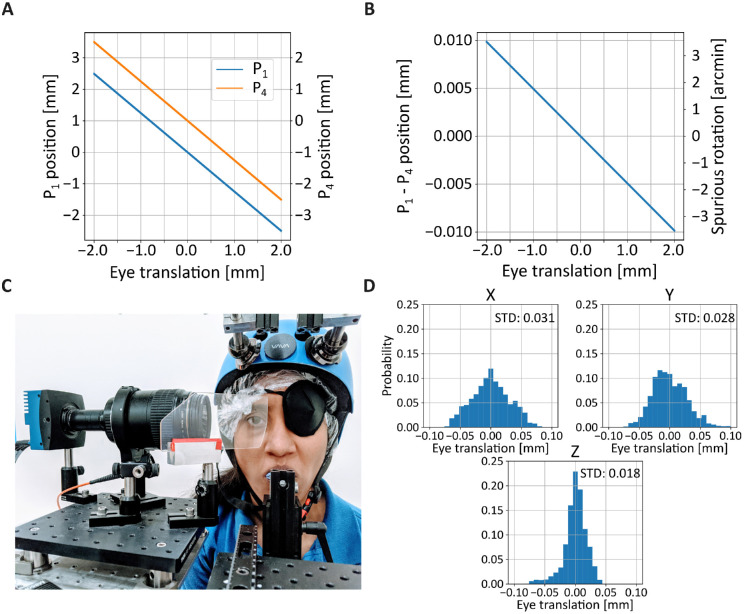

We first examined the impact of the power of the illumination on Purkinje image formation. Figure 3A and B show one of the primary difficulties of using Purkinje images for eye-tracking: the irradiance of P1 and P4 differs considerably, by almost two orders of magnitude. As expected, both vary proportionally with the power of the source and decrease with increasing angle between the optical axis of the eye and the illuminating beam. However, their ratio remains constant as the power of the source varies, and the vast difference in irradiance between the two makes it impossible—in the absence of additional provisions—to image P4 without saturating P1.

Figure 3.

Expected characteristics of Purkinje images from ray-tracing a model of the eye. (A, B) Maximum irradiance of the two Purkinje images as a function of the irradiance of the illumination source. Each curve shows data for a specific angle of eye rotation, measured as deviation between the optical axes of the eye and the imaging system (the latter assumed to be coincident with the illumination axis, θ = 0 as in Figure 2B). The two horizontal dashed lines represent the minimum and maximum intensity measurable with the sensor in our prototype. (C) Locations of the peak intensity of the two Purkinje images as a function of eye rotation. Shaded regions (not visible for P4) represent standard errors obtained by varying eye model parameters to reflect normal individual variability. (D) Relative position of P1 and P4 as a function of both eye rotation and illumination angle.

These data are informative for selecting the power of the illumination. Two primary constraints need to be satisfied. On the one hand, the power delivered to the eye needs to be sufficiently low to meet safety standards for prolonged use. On the other, it needs to be sufficiently high to enable reliable detection of the Purkinje images. The latter requirement is primarily determined by the noise level of the camera, which sets a lower bound for the irradiance of P4, the weaker reflection. Figure 3A and B show the saturation and noise-equivalent levels of irradiance for the camera used in our prototype (see Section 3). P4 is detectable when its irradiance exceeds the noise level (Figure 3B), but the eye-tracking algorithm needs to be able to cope with the simultaneous saturation of large portions of P1 (Figure 3A).

This model also allows for a more rigorous simulation of how the two Purkinje images move as the eye rotates than the paraxial approximation of Figure 2A. Figure 3C shows the intersections of the chief rays with the image plane, X1 and X4, for eye rotations of ±40°. Note that both images are displaced almost linearly as a function of eye rotation. However, the rate of change differs between P1 and P4 (the different slopes of the curves in Figure 3C). Specifically, P4 moves substantially faster and, therefore, travels over a much larger extent than P1. The data in Figure 3C indicate that the imaging system should capture approximately 20 mm in the object space of plane I to enable measurement of eye movements over this range. In practice, with a real eye, the imaged space may be smaller than this, because the occlusion of P4 by the pupil will limit the range of measurable eye movements, as happens with the analog DPI.

These simulations also allowed examination of how individual changes in eye morphology and optical properties affect the motion of the Purkinje images. This variability is represented by the error bars in Figure 3C, which were obtained by varying model parameters according to their range measured in healthy eyes (Atchison, 2006). These data show that the trajectories of both P1 and P4 are minimally influenced by individual changes in eye characteristics.

Because both Purkinje images move proportionally to eye rotation, the difference in their positions maintains an approximately linear relation with eye movements. This function closely matches the paraxial two-mirror approximation of Equation 1 for small eye movements and extends the prediction to a much wider range of eye rotations (the black line in Figure 2B). In the simulation of this figure, the direction of illumination and the optical axes of both the eye and the imaging system are aligned. However, this configuration is not optimal, because the two Purkinje images overlap when the optic axis of the eye is aligned with the illumination axis. This makes it impossible to detect P4, which is smaller and weaker than P1. As shown in Figure 3D, the specific position of the illuminating beam plays an important role, both in determining departures from linearity and in placing the region where P4 will be obscured by P1. In this figure, the offset between the two Purkinje images is plotted for a range of eye rotations (x-axis) and for various angular positions of the source (distinct curves). In the system prototype described in this article (Figure 5), we placed the source at θ = 20° on both the horizontal and vertical axes. This selection provides a good linear operating range, while placing the region in which P4 is not visible away from the most common directions of gaze.

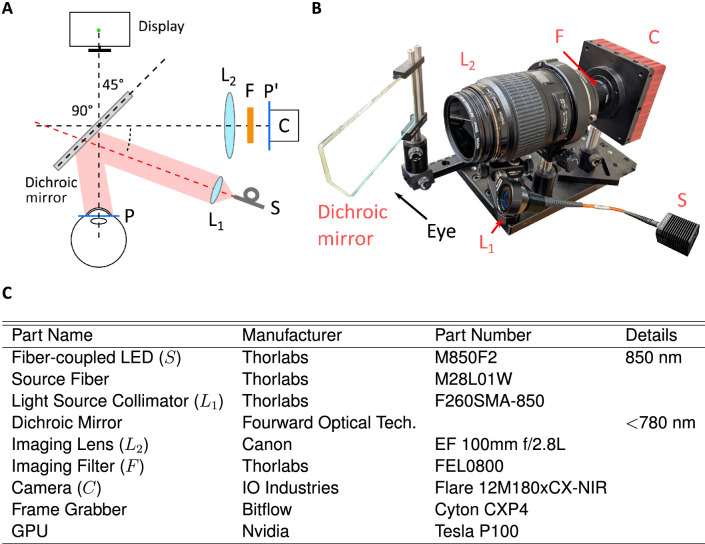

Figure 5.

A dDPI prototype. (A) General architecture and (B) hardware implementation. Infrared light from the source (S) is collimated (lens L1). The beam is directed toward the eye via a dichroic mirror. Purkinje reflections are acquired by a high-speed camera (C) equipped with a long-pass filter (F). The camera lens (L2) relays the plane of the Purkinje images P to the sensor plane P′. (C) List of components in the prototype tested in this study.

A robust tracking algorithm

Once the Purkinje images are acquired in a digital format, a variety of methods are available for their localization and tracking. In the traditional analog DPI device, the system dynamics is primarily determined by the inertia of the mobile components and the filtering of the servo signals, which yield a broad temporal bandwidth (Crane & Steele, 1985). As a consequence, analog DPI data are normally sampled at 0.5 to 1.0 kHz following analog filtering at the Nyquist frequency (Deubel & Bridgeman, 1995; Hayhoe, Bensinger, & Ballard, 1998; Mostofi, Boi, & Rucci, 2016; Yu, Gill, Poletti, & Rucci, 2018). A strength of the analog DPI is the overall minimal delay with which data are made available, which is sufficiently small to enable procedures of gaze-contingent display control, experiments in which the stimulus is modified in real time according to the eye movements performed by the observer. Here we describe a simple tracking algorithm that mimics the processing of the analog DPI and is capable of delivering data at high speed.

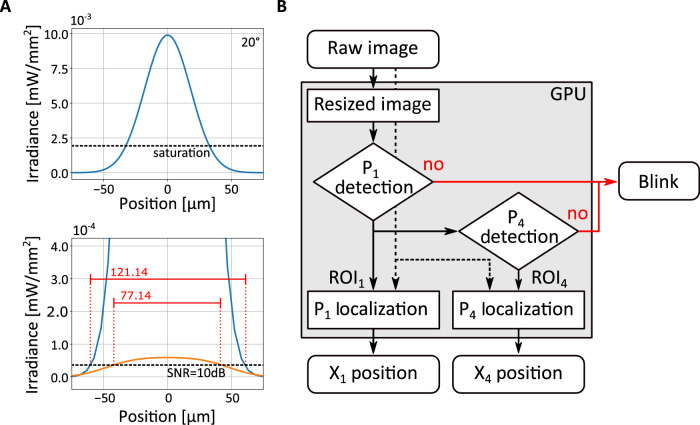

The design of an efficient tracking algorithm needs to take into consideration the different characteristics of the two Purkinje images. Figure 4A shows the images predicted by the model eye explained in Section 2 (the optical model simulated in Figure 3) when a low-power (2 mW) source illuminates the eye from 20° off the camera’s axis. The exposure time was set to 1 ms. Two immediate observations emerge. First, the two reflections differ considerably in size, as P4 has much lower intensity than P1 and will appear in the image as a small blob compared to the first reflection. Second, as pointed out in the previous section, direct imaging of the eye will yield an image of P1 that is largely saturated and an image of P4 close to the noise level. Thus, the localization algorithm should be both tolerant to saturation effects and insensitive to noise.

Figure 4.

A tracking algorithm emulating the analog DPI. (A) Characteristics of Purkinje images. Data are obtained from the model in Figure 3, using a collimated 10 mm beam provided by a 2 mW source at 20° on both axes. Exposure time was set to 1 ms. The top panel shows the profile of P1 before clipping intensity at the saturation level. The bottom panel shows both P1 (with saturated intensity; blue curve) and P4 (orange curve). The red horizontal bars represent the diameters of the reflections exceeding 10 dB. (B) Flowchart of the tracking algorithm. A low-resolution image of the eye (solid line) is first used to detect P1 and P4 and determine their approximate locations. Failure in detecting one of the two reflections is labeled as blink. High-resolution localization of the Purkinje images (dashed lines) is then obtained from selected regions of interest (ROI1 and ROI4).

The algorithm used in this study is shown in Figure 4B. It operates directly on the digital images of the two Purkinjes and yields as output the differences between the Purkinje positions on each of the two Cartesian axes of the image. To ensure low latency, the algorithm operates in two phases, detection and localization. In the first phase, it defines regions of interest (ROIs) in which P1 and P4 are likely to be found. In the localization phase, it then operates on the selected regions at high resolution to determine the coordinates of P1 and P4 precisely.

The detection phase operates on downsampled versions of the acquired images, which are for this purpose decimated by a factor of 8. Different approaches are used for the two reflections, given their distinct characteristics in the images. Because P1 is relatively large and typically the only saturated object in the image, its region of interest (ROI1, a 256 × 256 pixel square) can be directly estimated by computing the center of mass of a binary thresholded image, as long as a sufficiently high threshold is used to avoid influences from other possible reflections in the image. To further speed up computations, this approach can be applied to a restricted portion of the input image, because P1 moves less than P4 and remains within a confined region of the image.
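The P1 detection step can be illustrated with a short NumPy sketch. It is a simplified stand-in rather than the authors' GPU implementation: plain subsampling replaces proper decimation, the threshold is left to the caller, and the function name `detect_p1_roi` is hypothetical. The frame is assumed to be at least as large as the ROI.

```python
import numpy as np


def detect_p1_roi(frame, threshold, decimation=8, roi_size=256):
    """Return (top, left) of a roi_size x roi_size window around P1, or None.

    Sketch under stated assumptions: the frame is subsampled by `decimation`,
    thresholded into a binary mask, and the center of mass of the mask is
    mapped back to full-resolution coordinates.
    """
    small = frame[::decimation, ::decimation]      # coarse copy of the frame
    mask = small >= threshold                      # binary thresholded image
    if not mask.any():
        return None                                # no P1 found (e.g., a blink)

    rows, cols = np.nonzero(mask)
    center_row = rows.mean() * decimation          # back to full-resolution pixels
    center_col = cols.mean() * decimation

    half = roi_size // 2
    # Keep the window fully inside the frame.
    top = int(np.clip(round(float(center_row)) - half, 0, frame.shape[0] - roi_size))
    left = int(np.clip(round(float(center_col)) - half, 0, frame.shape[1] - roi_size))
    return top, left


if __name__ == "__main__":
    yy, xx = np.mgrid[0:1024, 0:1024]
    frame = np.zeros((1024, 1024), dtype=np.uint8)
    frame[(yy - 400) ** 2 + (xx - 600) ** 2 <= 40 ** 2] = 255   # synthetic saturated P1
    print(detect_p1_roi(frame, threshold=250))
```

Because the mask is binary, the center of mass ignores intensity values and is therefore unaffected by saturation, which is why a high threshold suffices for this coarse step.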

Detection of P4 is more complicated, given its lower intensity and smaller size. The algorithm in Figure 4B uses template matching, a method suitable for parallel execution on a GPU. Specifically, the algorithm estimates the location in the image that best matches, in the least-squares sense, a two-dimensional Gaussian template of P4, the parameters of which had been previously calibrated for the individual eye under observation. This yields a 64 × 64 pixel square region centered around P4 (ROI4). As with the computation of ROI1, this operation can, if needed, be restricted to a selected portion of the image to further speed up processing, in this case using knowledge of the position of P1 to predict the approximate position of P4.
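Least-squares template matching can be sketched on the CPU as an exhaustive search over the downsampled image. The template size, width, and amplitude below are placeholders for the per-subject calibration mentioned above, and the helper names are hypothetical. The actual system runs this step in parallel on a GPU.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def gaussian_template(size, sigma, amplitude):
    """Isotropic 2D Gaussian patch; parameters stand in for calibrated values."""
    ax = np.arange(size) - (size - 1) / 2.0
    return amplitude * np.exp(-(ax[:, None] ** 2 + ax[None, :] ** 2) / (2.0 * sigma ** 2))


def detect_p4(small, template):
    """Return (row, col) of the patch center minimizing the squared error."""
    small = np.asarray(small, dtype=float)
    windows = sliding_window_view(small, template.shape)    # shape (H-h+1, W-w+1, h, w)
    sse = ((windows - template) ** 2).sum(axis=(2, 3))      # squared error at each offset
    r, c = np.unravel_index(np.argmin(sse), sse.shape)
    h, w = template.shape
    return r + (h - 1) / 2.0, c + (w - 1) / 2.0


if __name__ == "__main__":
    tmpl = gaussian_template(size=7, sigma=1.5, amplitude=40.0)
    img = np.zeros((128, 128))
    img[30:50, 30:50] = 255.0          # bright, saturated stand-in for P1
    img[67:74, 87:94] += tmpl          # faint P4 centered at row 70, column 90
    print(detect_p4(img, tmpl))        # coordinates in the downsampled image
```

Multiplying the returned coordinates by the decimation factor gives the center of ROI4 in the full-resolution frame. Unlike plain correlation, the squared-error criterion penalizes the bright P1 region, so the saturated reflection is not mistaken for P4.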

In the second phase of the algorithm, ROI1 and ROI4, now sampled at high resolution, are used for fine localization of the two reflections. To this end, the position of each Purkinje image is marked by its center of symmetry (the radial symmetry center), a robust feature that can be reliably estimated via a noniterative algorithm developed for accurate tracking of particles (Parthasarathy, 2012). This algorithm enables subpixel resolution, is robust to saturation effects, and has a fast execution time.
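The idea behind the radial symmetry center can be sketched as follows. For a radially symmetric spot, the intensity gradient at every pixel points along a line through the center, so the center can be found in closed form as the point closest, in a weighted least-squares sense, to all such lines. This is a simplified variant written for illustration, not the published algorithm of Parthasarathy (2012) nor the authors' implementation: it uses plain central differences, weights each line by the squared gradient magnitude, and discards pixels touching the saturated plateau. All of these choices are assumptions.

```python
import numpy as np


def radial_symmetry_center(roi, sat_level=None):
    """Estimate the (x, y) center of a radially symmetric blob, in pixels."""
    roi = np.asarray(roi, dtype=float)
    gy, gx = np.gradient(roi)                 # derivatives along rows (y) and columns (x)
    mag2 = gx ** 2 + gy ** 2
    weights = mag2.copy()                     # assumed weighting: squared gradient magnitude

    if sat_level is not None:
        # Ignore saturated pixels and their 4-neighbors, whose finite-difference
        # gradients are distorted by the clipped plateau.
        sat = roi >= sat_level
        near = sat.copy()
        near[1:, :] |= sat[:-1, :]
        near[:-1, :] |= sat[1:, :]
        near[:, 1:] |= sat[:, :-1]
        near[:, :-1] |= sat[:, 1:]
        weights[near] = 0.0

    keep = weights > 0
    if not keep.any():
        raise ValueError("no usable gradient in ROI")
    ys, xs = np.nonzero(keep)
    w = weights[keep]
    norm = np.sqrt(mag2[keep])
    ux, uy = gx[keep] / norm, gy[keep] / norm  # unit gradient directions

    # Projector onto the normal of each gradient line: P = I - u u^T.
    pxx, pxy, pyy = 1.0 - ux ** 2, -ux * uy, 1.0 - uy ** 2
    A = np.array([[np.sum(w * pxx), np.sum(w * pxy)],
                  [np.sum(w * pxy), np.sum(w * pyy)]])
    b = np.array([np.sum(w * (pxx * xs + pxy * ys)),
                  np.sum(w * (pxy * xs + pyy * ys))])
    cx, cy = np.linalg.solve(A, b)            # noniterative, closed-form solution
    return float(cx), float(cy)


if __name__ == "__main__":
    yy, xx = np.mgrid[0:64, 0:64]
    blob = np.exp(-((xx - 30.3) ** 2 + (yy - 33.6) ** 2) / (2 * 4.0 ** 2))
    print(radial_symmetry_center(blob))
    print(radial_symmetry_center(np.minimum(blob, 0.5), sat_level=0.5))
```

The flat, saturated core of a clipped spot has zero gradient and therefore contributes nothing to the estimate, which is one way to see why this kind of estimator tolerates saturation better than a center of mass.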

This is shown in Table 1, which compares three different methods for estimating the position of P4 in images obtained from ray-tracing the model described in Section 2. In these images, the exact position of P4 is known, providing a ground truth to compare approaches. As shown by these data, Gaussian fitting via maximum likelihood gives the highest accuracy. However, this method is computationally expensive and difficult to implement in parallel hardware, hence the missing entry in the GPU column of Table 1. In contrast, estimation of the center of mass can be performed rapidly, but it yields a much larger error. Estimation of the radial symmetry center provides a trade-off between accuracy and speed, with an error comparable to that of Gaussian fitting and a computation time lower than 0.5 ms when implemented on a good-quality GPU, like the Nvidia Tesla P100 used in our prototype.

Table 1.

Performance of three methods for localization of Purkinje reflections.

| Method | RMS error (arcmin) | CPU time (ms) | GPU time (ms) |
|---|---|---|---|
| Gaussian fitting | 0.02 | 3,327 | – |
| Center of mass | 0.22 | 34 | 0.05 |
| Radial symmetry center | 0.06 | 521 | 0.32 |

System prototype

The data obtained from the model eye and simulations of the tracking algorithm enabled design and implementation of a prototype. A major requirement in the development of this apparatus was that it could be used successfully in vision research experiments in place of its analog counterpart; that is, that the device would be capable of replacing the traditional analog DPI in most of the relevant functional aspects. The system shown in Figure 5 is based on a simple optical/geometrical arrangement and consists of just a few important components: a camera, an illuminator, and a dichroic mirror, in addition to several accessory elements. Unlike its analog predecessor, none of the components move, guaranteeing robustness and limited maintenance.

The heart of the apparatus is a digital camera that acquires images of the Purkinje reflections. Selection of the camera and its operating characteristics imposes a trade-off between spatial and temporal resolutions. This happens because of the hardwired limits in the camera’s transmission bandwidth, which forces the acquisition of larger images to occur at slower rates. In the prototype of Figure 5, based on our experimental needs, we selected a camera that enables high sampling rates (IO Industries Flare 12Mx180CX-NIR). This camera has 5.5-µm-wide pixels and delivers 187 frames per second at 12 MP. It can achieve a 1-kHz acquisition rate at 2 MP.
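The trade-off between image size and frame rate can be made concrete with a back-of-the-envelope calculation. The sketch below assumes that the camera's pixel throughput is constant and that readout overheads are negligible, which is a simplification and not a manufacturer specification. The function name is illustrative.

```python
FULL_RES_MP = 12.0     # full sensor resolution quoted in the text (megapixels)
FULL_RES_FPS = 187.0   # frame rate at full resolution quoted in the text

# Assumed constant pixel throughput of the link, in megapixels per second.
THROUGHPUT_MP_PER_S = FULL_RES_MP * FULL_RES_FPS


def max_frame_rate(image_mp):
    """Upper bound on frames per second for an image of `image_mp` megapixels."""
    return THROUGHPUT_MP_PER_S / image_mp


if __name__ == "__main__":
    for mp in (12.0, 4.0, 2.0):
        print(f"{mp:4.1f} MP -> up to {max_frame_rate(mp):6.0f} frames/s")
```

Under this assumption, a 2 MP region of interest leaves room for roughly 1,100 frames per second, consistent with the 1-kHz acquisition rate stated above.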

Positioning of the camera was based on two factors. To reach the necessary magnification and be able to track relatively large eye movements in all directions, the camera was placed optically in front of the eye. To avoid obstructing the field of view and to enable clear viewing of the display in front of the subject, the camera was physically placed laterally to the eye, at a 90° angle from the eye’s optical axis at rest. This was achieved by means of a custom short-pass dichroic mirror that selectively reflected infrared light at a 45° angle while letting through visible light (see Figure 5A).

Illumination is provided by a fiber-coupled LED at 850 nm delivering a maximum power of 2 mW over a 10-mm collimated beam. The resulting irradiance is well below the safety limits for extended use specified in the relevant standards (IEC 62471 and EU Directive 2006/25/EC). Based on the simulation data from Figure 3D, we placed the illuminator 20° off the optical axis of the camera on both the horizontal and vertical axes. This ensures that P1 and P4 will never overlap in the central frontal region of the visual field. This configuration also guarantees good linearity of the system in the range of considered eye movements.

Our simulations indicate that, in the object space, a) the first and fourth Purkinje images move relative to each other at a rate of 2.4 µm per arcminute of eye rotation; and b) the reflection that moves the most as the eye rotates, P4, covers about ±4.7 mm for ±20° of eye rotation. To be able to achieve a 1-kHz sampling rate, we only used a portion of our camera’s sensor (11.26 mm wide). This led to the choice of an optical magnification of 1.25, with each pixel covering approximately 1′ and a trackable range of approximately ±19°. Based on the ray-tracing data (Figure 4A), P4 covers approximately 15 pixels with these settings, which should yield a resolution higher than 1′ according to the simulations of Table 1. Selection of different parameters would provide different trade-offs between spatial and temporal performance. For example, a larger field can be tracked at high spatial resolution by acquiring data at 500 Hz.
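The figures quoted above can be cross-checked with simple arithmetic. The sketch below uses only the numbers reported in the text (pixel pitch, magnification, sensor width, and Purkinje motion rates). It assumes that P4 moves linearly with rotation and that the "approximately 1′ per pixel" figure refers to the motion of P4; both are interpretations, not statements from the original. The helper `offset_px_to_arcmin` is hypothetical.

```python
PIXEL_PITCH_UM = 5.5           # camera pixel width
MAGNIFICATION = 1.25           # optical magnification of the prototype
SENSOR_WIDTH_MM = 11.26        # portion of the sensor used at 1 kHz
P4_TRAVEL_MM = 4.7             # P4 displacement in object space ...
P4_TRAVEL_DEG = 20.0           # ... for this amount of eye rotation
RELATIVE_GAIN_UM_PER_ARCMIN = 2.4   # P4-P1 relative motion per arcminute

# Size of one pixel projected back onto the plane of the Purkinje images.
pixel_object_um = PIXEL_PITCH_UM / MAGNIFICATION

# P4 motion per arcminute of rotation, assuming linearity.
p4_gain_um_per_arcmin = P4_TRAVEL_MM * 1000.0 / (P4_TRAVEL_DEG * 60.0)

# Eye rotation needed to move P4 by one pixel.
arcmin_per_pixel = pixel_object_um / p4_gain_um_per_arcmin

# Half of the imaged field, converted to degrees of rotation via P4 travel.
field_mm = SENSOR_WIDTH_MM / MAGNIFICATION
trackable_range_deg = (field_mm / 2.0) / P4_TRAVEL_MM * P4_TRAVEL_DEG


def offset_px_to_arcmin(offset_px):
    """Convert a P4-P1 offset in pixels to eye rotation in arcminutes (linear gain)."""
    return offset_px * pixel_object_um / RELATIVE_GAIN_UM_PER_ARCMIN


if __name__ == "__main__":
    print(f"pixel in object space: {pixel_object_um:.2f} um")
    print(f"rotation per pixel of P4 motion: {arcmin_per_pixel:.2f} arcmin")
    print(f"trackable range: +/-{trackable_range_deg:.1f} deg")
    print(f"0.1-pixel offset change: {offset_px_to_arcmin(0.1):.2f} arcmin")
```

With these inputs, one pixel corresponds to about 1.1′ of rotation in terms of P4 motion and the field spans about ±19.2°, in line with the values stated above. The conversion also shows why subpixel localization matters: a one-pixel change in the P4−P1 offset corresponds to almost 2′.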

The tracking algorithm shown in Figure 4 was implemented in parallel processing (CUDA platform) in a GPU (Nvidia Tesla P100), and ran under the supervision of EyeRIS, our custom system for gaze-contingent display (Santini et al., 2007). We have recently fully redesigned this system to be integrated with the dDPI (see section on Accuracy and gaze-contingent display control).

Results

To thoroughly characterize the performance of our prototype, we examined measurements obtained with both artificial and human eyes. Artificial eyes were used to provide ground truth baselines both in stationary tests and in the presence of precisely controlled rotations. Human eye movements were recorded in a variety of conditions that are known to elicit stereotypical and well-characterized oculomotor behaviors.

Performance with artificial eyes

We first examined the noise of the system in the absence of any movements of the eye. As pointed out before, human eyes are always in motion, even when attempting to maintain steady fixation on a point. Thus, an artificial eye is necessary to evaluate stationary steady-state performance. To this end, we used an artificial eye from Ocular Instruments (OEMI-7 model), which is designed to faithfully replicate the size, shape, and refraction characteristics of the human eye. This model eye includes an external transparent interface replicating the cornea, anterior and posterior chambers filled with 60% glycerine–water solutions (Wang, Mulvey, Pelz, & Holmqvist, 2017), and a lens made of polymethyl methacrylate that mimics the crystalline lens of the human eye.

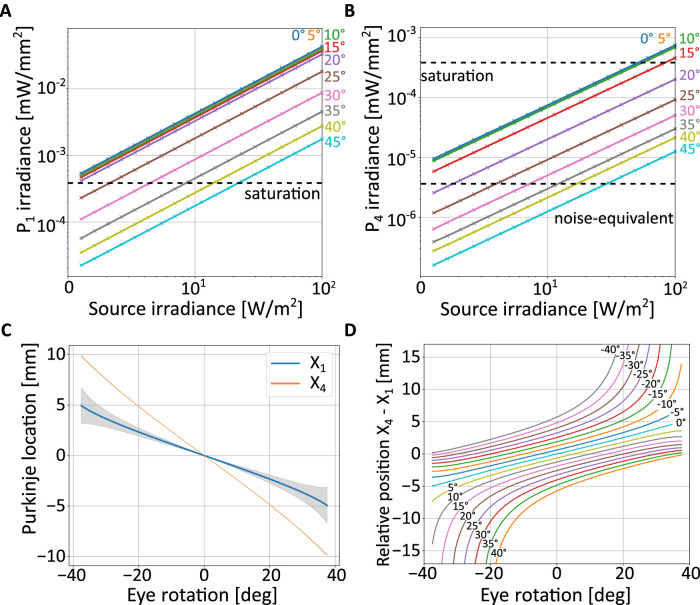

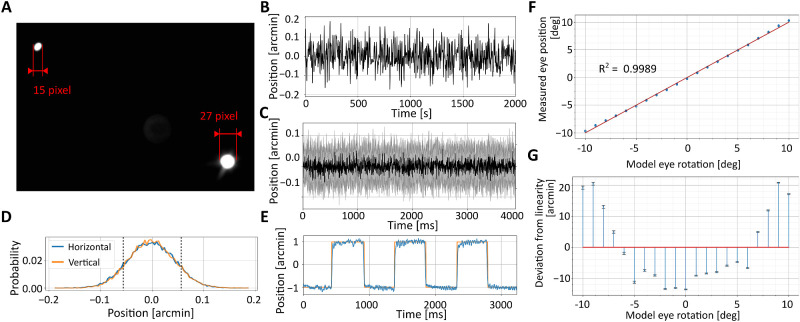

We mounted the model eye on a dovetail rail attached to the eye-tracker's stage so as to place it at the position where the subject’s eye is normally at rest, aligned with the optical axis of the apparatus. We then tuned the eye-tracker to obtain clear and sharp Purkinje images, as we would do with a human eye. The eye-tracker’s output signal was recorded as the artificial eye remained immobile in this position. The variability of this signal sets a limit to the precision (i.e., the repeatability of measurements) that can be achieved by the system and provides information on its resolution, the minimum rotation that can be reliably detected. The data in Figure 6B–D show that the noise of the system is within 0.1′, allowing in principle measurements of extremely small displacements.
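As an illustration of how precision can be summarized from a stationary recording, the sketch below computes the standard deviation of a position trace. It is a minimal example and not the analysis code used in this study: the trace is simulated Gaussian noise, and the noise level of 0.05′ is a hypothetical value chosen only for the demonstration.

```python
import random
import statistics


def precision_arcmin(trace):
    """Standard deviation of a stationary trace, one common summary of precision."""
    return statistics.pstdev(trace)


def simulated_stationary_trace(n_samples, noise_sd_arcmin, seed=0):
    """Hypothetical stand-in for recorded data: zero-mean Gaussian noise."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, noise_sd_arcmin) for _ in range(n_samples)]


if __name__ == "__main__":
    # One second of data at 1 kHz with an assumed noise level of 0.05 arcmin.
    trace = simulated_stationary_trace(n_samples=1000, noise_sd_arcmin=0.05)
    print(f"precision: {precision_arcmin(trace):.3f} arcmin")
```

The same function would be applied separately to the horizontal and vertical components of a recorded trace.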

Figure 6.

Performance with artificial eyes. (A) Example of Purkinje images acquired at a 1-kHz sampling rate. (B–E) dDPI resolution. (B) An example trace with a stationary artificial eye. (C) Average dDPI output (black line) ± 1 standard deviation (shaded region; N = 30) measured with a stationary eye. (D) The resulting output distributions on both horizontal and vertical axes. Dashed lines represent 1 standard deviation. (E) Measurements obtained when the eye moves following a 1′-amplitude square wave at 1 Hz. The orange line represents the ground truth, as measured by the encoders of the galvanometer controlling the eye. (F, G) dDPI linearity. (F) Output measured when the artificial eye was rotated over a 20° range. The red line is the linear regression of the data. (G) Deviation from linearity in the measured eye position.

We then used the artificial eye to examine performance under controlled rotations. To confirm that the system is indeed capable of resolving very small eye movements, we placed the eye at the same position as before, but now mounted on a custom stage controlled by a galvanometer (GSI MG325DT) rather than on the stationary rail. This apparatus had been calibrated previously by a laser to map driving voltages into very precise measurements of displacements, enabling rotations of the model eye with subarcminute precision. Figure 6E shows the response of the eye-tracker when the artificial eye was rotated by a 1-Hz square wave with amplitude of only 1′. Measurements from the eye-tracker closely followed the displacements recorded by the galvo encoders, showing that the system possesses both excellent resolution and sensitivity.

Our calibrated galvanometer could only rotate the artificial eye over a relatively small amplitude range. To test performance over a wider range, we mounted the artificial eye on a manual rotation stage (Thorlabs PR01/M) and systematically varied its orientation in 4° steps over 20°. The data points in Figure 6F show that the eye-tracker correctly measures rotations over the entire range tested. In fact, as expected from the ray-tracing simulations, the system operates almost linearly in this range, with deviations from linearity smaller than 2% (20′) (Figure 6G). During tracking of human eyes, these deviations are further compensated by calibration. Nevertheless, these data show that the need for nonlinear compensation is minimal, at least with an artificial eye.
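A linearity test of this kind can be summarized by fitting a line to the measured output as a function of the imposed rotation and expressing the largest residual as a fraction of the tested range. The sketch below shows one way to do this. The readings in the example are hypothetical values in pixels, not the data of Figure 6, and the function names are ours.

```python
def fit_line(x, y):
    """Ordinary least-squares line; returns (slope, intercept)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def linearity_error(imposed_deg, measured):
    """Largest deviation from the fitted line, in degrees and as a fraction of range."""
    slope, intercept = fit_line(imposed_deg, measured)
    residuals = [m - (slope * a + intercept) for a, m in zip(imposed_deg, measured)]
    # Residuals are in output units; dividing by the slope converts them to degrees.
    max_dev_deg = max(abs(r) for r in residuals) / abs(slope)
    full_range_deg = max(imposed_deg) - min(imposed_deg)
    return max_dev_deg, max_dev_deg / full_range_deg


if __name__ == "__main__":
    imposed_deg = [0.0, 4.0, 8.0, 12.0, 16.0, 20.0]
    # Hypothetical readings in pixels with a slight bow; not recorded data.
    measured_px = [0.0, 241.0, 481.5, 721.5, 961.0, 1200.0]
    dev_deg, fraction = linearity_error(imposed_deg, measured_px)
    print(f"max deviation: {dev_deg * 60:.1f} arcmin ({fraction:.2%} of range)")
```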

Performance with human eyes

Given the positive outcome of the tests with the artificial eye, we then examined performance with human eyes. We collected oculomotor data as observers engaged in a variety of tasks previously examined with analog DPIs and compared analog and digital data. Here we focus on the characteristics of eye movements measured by the dDPI. Details on the tasks and the experimental procedures with the analog devices can be found in the publications cited in this section.

Methods. Thirty-three subjects (21 females, 12 males, aged 24 ± 5 years) with normal vision participated in the dDPI recordings. Subjects either observed the stimulus directly or, if they were not emmetropic, through a Badal optometer that corrected for their refraction. This device was placed immediately after the dichroic mirror and did not interfere with eye-tracking. Subjects provided their informed consent and were compensated according to the protocols approved by the University of Rochester Institutional Review Board.

Eye movements were recorded from the right eye while the left eye was patched. Data were acquired at 1 kHz to match the sampling frequency of the data previously collected with analog DPIs. As is customary with most eye-trackers, before collecting data, preliminary steps were taken to ensure optimal tracking. These steps included positioning the subject in the apparatus and fine-tuning the offsets and focal length of the imaging system to obtain sharp, centered images of the eye.

Conversion of the dDPI’s raw output in units of camera pixels into visual angle was achieved via the same two-step gaze-contingent procedure that we have used previously to map the voltage signal from the analog DPI into visual angles (Ko et al., 2010). This method yields highly accurate gaze localization (Poletti & Rucci, 2016). It consists of two phases. In the first phase, a rough mapping was established via bilinear interpolation of the mean eye positions measured as the subject fixated on a grid of nine points on the display. In the second phase, subjects fine-tuned the gaze-to-pixel mapping by correcting the position of a retinally stabilized marker while fixating on various locations marked on the screen. This calibration procedure was performed at the beginning of each experimental session and repeated several times during a session for the marker at the center of the display.
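To make the first calibration phase more concrete, the sketch below fits a mapping from raw tracker coordinates to display coordinates using nine fixation points. It is a simplified illustration and not the procedure implemented in EyeRIS: we assume a single global bilinear model, target = a0 + a1·u + a2·v + a3·u·v, fitted by least squares independently for each display axis, whereas the actual method may interpolate locally between grid points. All names and numerical values are hypothetical, and the second, gaze-contingent refinement phase is not modeled.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        partial = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - partial) / m[r][r]
    return x


def basis(u, v):
    """Bilinear basis evaluated at raw tracker coordinates (u, v)."""
    return [1.0, u, v, u * v]


def fit_bilinear(raw_points, targets):
    """Least-squares coefficients of target = a0 + a1*u + a2*v + a3*u*v."""
    rows = [basis(u, v) for u, v in raw_points]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(4)]
    return solve_linear(ata, atb)


def apply_bilinear(coeffs, u, v):
    """Map one raw sample to a display coordinate with fitted coefficients."""
    return sum(c * b for c, b in zip(coeffs, basis(u, v)))


if __name__ == "__main__":
    # Hypothetical mean raw positions (pixels) for a 3 x 3 fixation grid.
    raw = [(u, v) for v in (-60.0, 0.0, 60.0) for u in (-60.0, 0.0, 60.0)]
    # Hypothetical horizontal target positions (arcmin) for the same grid.
    target_x = [1.0 * u + 0.02 * v + 0.5 for u, v in raw]
    coeffs_x = fit_bilinear(raw, target_x)
    print(apply_bilinear(coeffs_x, 30.0, -15.0))
```

In use, one set of coefficients would be fitted for the horizontal display axis and another for the vertical axis, and both would be applied to every raw sample.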

Need for head immobilization. The architecture described in Figure 5A is both practical and flexible: all its elements are off-the-shelf components, and the choice of camera and optics enables selecting the appropriate, experiment-dependent trade-off between resolution and operational range. However, because the camera is not at optical infinity, the question arises of how measurements could be affected by optical misalignments that may result from movements of the head of the subject.

Figure 7 shows the error that can be expected from simulations of our apparatus. Images of the Purkinje reflections were generated in Zemax OpticStudio and tracked by the algorithm described in Figure 4 as the eye translated on the frontoparallel plane (the plane orthogonal to the camera). As a consequence of the translation of the eye, both P1 and P4 move (Figure 7A). Although their speeds are very similar, they are not identical, yielding spurious eye rotations (Figure 7B). As shown by these data, the expected error in this configuration of the apparatus is approximately 2′ per millimeter of head translation. This error may be tolerable for a broad range of applications. In this study, however, to optimize the quality of the measurements, we made sure to strictly immobilize the head of the observer. This was achieved by a custom dental-imprint bite-bar coupled with a magnetic head rest (Figure 7C). Subjects wore a tightly fitting helmet with embedded magnetic plaques that locked with the plates of the head rest. With this apparatus, head movements were virtually eliminated (Figure 7D).

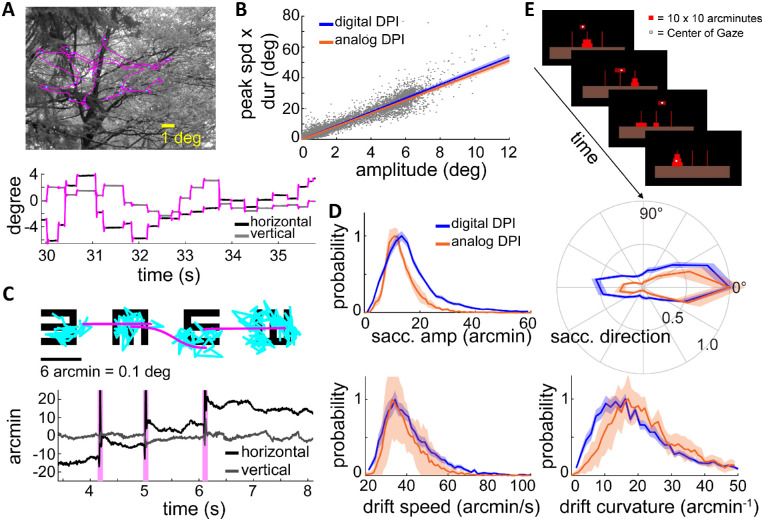

Quality of oculomotor data. In the first set of tests, we examined the robustness of the system to saccades, the abrupt, high-speed gaze shifts that occur frequently during natural tasks (Figure 8A). We recorded saccades as observers freely viewed natural images as in Kuang, Poletti, Victor, and Rucci (2012), searched for targets in noise fields (Intoy et al., 2021), and executed cued saccades (Boi, Poletti, Victor, & Rucci, 2017; Poletti et al., 2020). These tasks elicited saccades of all possible sizes, with amplitudes reaching over 12° and peak speeds up to 400°/s. The dDPI exhibited no difficulty in tracking saccades, yielding traces that were qualitatively indistinguishable from those recorded with the analog device (see example in Figure 8A). The measured saccade dynamics exhibited the stereotypical linear relationship between saccade peak speed, duration, and saccade amplitude known as the main sequence (Bahill, Clark, & Stark, 1975). These characteristics were virtually identical to those previously recorded with the analog device (Mostofi et al., 2020) (cf. blue and orange lines in Figure 8B).

Figure 8.

Human eye movements recorded by the dDPI. (A) Example of eye movements during exploration of a natural scene. An eye trace is shown both superimposed on the image (top) and displayed as a function of time (bottom). (B) Saccade main sequence. Data were collected from N = 14 observers during directed saccades or free viewing of natural images. The orange line represents the regression line of the main sequence for saccades measured in an analog DPI (Mostofi et al., 2020). (C, D) Eye movements recorded during examination of the 20/20 line of an acuity eye-chart. (C) An example of oculomotor trace displayed as in A. Microsaccades and drifts are marked in pink and blue, respectively. (D) Characteristics of fixational eye movements. Data represent distributions of saccade amplitude, saccade direction, ocular drift speed, and drift curvature averaged across N = 26 subjects. Data are very similar to those recorded by means of the analog DPI. Lines and shaded errors represent averages and SEM across observers. (E) Gaze-contingent control of a miniaturized version of the Tower of Hanoi game. All participants were able to effortlessly pick up disks by fixating on them, move them by shifting their gaze, and drop them by blinking. Very accurate gaze localization is necessary for this task, since the thickness of each disk was only 10′.

We next examined the performance of the dDPI when observers executed small eye movements. Previous studies with search coil and analog DPI systems have extensively characterized how the eyes move during periods of fixation, when occasional small saccades (microsaccades) (Steinman, Haddad, Skavenski, & Wyman, 1973; Collewijn, Martins, & Steinman, 1981; Cherici, Kuang, Poletti, & Rucci, 2012) separate periods of slower motion (ocular drift) (Cornsweet, 1956; Fiorentini & Ercoles, 1966; Kuang et al., 2012). We have recently shown that both microsaccades and ocular drift are controlled in high-acuity tasks (Ko et al., 2010; Havermann, Cherici, Rucci, & Lappe, 2014; Poletti, Aytekin, & Rucci, 2015; Shelchkova et al., 2019), often yielding highly predictable behaviors. A particularly clear example is given by a standard acuity test: the examination of the 20/20 line of a Snellen eye chart (Intoy & Rucci, 2020). In this task, microsaccades systematically shift gaze from one optotype to the next, and the drifts that occur on each optotype are smaller than those present during maintained fixation on a marker (Intoy & Rucci, 2020).

Given the robustness and repeatability of these effects, we repeated this task using the dDPI. Observers (N = 26) were instructed to report the orientations of tumbling Es in the 20/20 line of a Snellen eye chart. Optotypes were separated by just 10 to 12′ center to center, and each optotype only spanned 5 to 6′. All subjects exhibited the typical oculomotor pattern previously reported (Intoy & Rucci, 2020) with microsaccades systematically shifting gaze rightwards from one optotype to the next (pink curves) and drifts focused on each optotype (blue points, Figure 8C). The characteristics of microsaccades and ocular drift measured by the dDPI were almost identical to those measured with the analog DPI (top in Figure 8D). Ocular drifts recorded with the digital and analog devices also exhibited similar distributions of speed and curvature (bottom in Figure 8D). These results corroborate the observations made with artificial eyes and show that the dDPI has sufficient temporal and spatial resolution to capture the characteristics of fixational eye movements.

Accuracy and gaze-contingent display control. We also tested the accuracy of the system—the capability of localizing gaze in the scene—by implementing a task that could only be carried out with accuracy better than a few arcminutes (Figure 8E). In this task, observers performed a miniature version of the Tower of Hanoi game, a game in which disks need to be piled on poles. However, rather than moving disks with a mouse, participants used their gaze. They picked an object by fixating on it, moved it by shifting their gaze, and dropped it at a desired location by looking there and blinking. Because the disks were only 10′ thick, execution of the task was only possible with gaze localization more accurate than this.

We implemented the task in a new version of EyeRIS, our custom system for gaze-contingent display (Santini et al., 2007), which we have recently redeveloped to operate in real-time Linux. EyeRIS and all associated I/O boards were located in the same computer that hosted the dDPI GPU, so that oculomotor data were acquired directly in digital form. As explained in Santini et al. (2007), EyeRIS signals if the refresh of the monitor exceeds two frames from the acquisition of oculomotor data. Here, experiments were run at a refresh rate of 240 Hz, so that the average delay between measurement of eye position and update of the display was less than 8 ms. This was also confirmed by mounting the artificial eye on a motorized stage (M-116 precision rotation stage; Physik Instrumente) and comparing the input control signals to the motorized stage, the output signals from the motor encoders, and the output of the photocell on the display.
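The timing figures above follow from simple arithmetic on the refresh rate. The sketch below is a back-of-the-envelope check, assuming that the two-frame criterion is the only constraint on the delay; it does not model acquisition or processing time, and the function names are ours.

```python
def frame_period_ms(refresh_hz):
    """Duration of one display frame in milliseconds."""
    return 1000.0 / refresh_hz


def max_display_delay_ms(refresh_hz, max_frames=2):
    """Delay bound if an update must land within `max_frames` refreshes (assumed criterion)."""
    return max_frames * frame_period_ms(refresh_hz)


if __name__ == "__main__":
    print(f"frame period: {frame_period_ms(240):.2f} ms")
    print(f"two-frame bound: {max_display_delay_ms(240):.2f} ms")
```

At 240 Hz, one frame lasts about 4.2 ms and two frames about 8.3 ms, so an average delay below 8 ms is consistent with updates completing within the two-frame window.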

All subjects were able to execute the task without any training. They learned to interact with the scene in a matter of a few seconds and were able to position objects at the desired locations reliably and effortlessly. Thus, the accuracy afforded by the dDPI enables robust gaze-contingent control of the display.

Discussion

In the past two decades, major advances in the development of camera sensors and digital image processing have led to the flourishing of video-based eye-trackers. These systems have extended the use of eye-tracking far beyond laboratory use, enabling broad ranges of applications. However, most systems fall behind the resolution and accuracy achieved by the most sophisticated traditional approaches, so that the resulting performance is not yet at the level required by all vision science studies. In this article, we have described a digital implementation of the Dual Purkinje Images approach, a method originally developed by Crane and Steele that delivers oculomotor measurements with high temporal and spatial resolution. Our working prototype demonstrates that digital imaging technology has reached a point of maturity where the DPI strategy can be applied effectively.

Several factors contribute to the need for eye-trackers that possess both high precision and accuracy. A strong push for precision comes from the growing understanding of the importance of small eye movements for human visual functions. The last decade has seen a surge of interest in how humans move their eyes at fixation, the very periods in which visual information is acquired and processed, and considerable effort has been dedicated to studying the characteristics and functions of these movements and the resulting motion on the retina. Precision and sensitivity are needed in these studies to reliably resolve the smallest movements. Furthermore, when combined with minimal delay, high resolution also opens the door for gaze-contingent display control, a powerful experimental tool that enables control of retinal stimulation during normal oculomotor behavior (Santini et al., 2007). This approach allows a rigorous assessment of the consequences of the visual flow impinging onto the retina, as well as decoupling visual contingencies and eye movements.

In terms of accuracy, the capability of finely localizing the line of sight in the visual scene is critically important not just in vision science, but also in a variety of applications. Multiple studies have shown that vision is not uniform within the foveola (Poletti, Listorti, & Rucci, 2013; Shelchkova & Poletti, 2020; Intoy et al., 2021) and humans tend to fixate using a very specific subregion within this area (the preferred retinal locus of fixation), a locus which appears consistently stable across tasks and stimuli (Shelchkova et al., 2019; Intoy & Rucci, 2020; Kilpeläinen, Putnam, Ratnam, & Roorda, 2021; Reiniger, Domdei, Holz, & Harmening, 2021). Accurate localization of the point in the scene projecting to this locus is essential in research questions that involve precise absolute positioning of stimuli (e.g., presentation of stimuli at desired eccentricities), as well as in applications that require fine visuomotor interaction with the scene (e.g., using the eyes as a mouse to interact with a computer).

Unfortunately, gaze localization represents an insidious challenge, because most eye-trackers do not measure gaze position in space directly, but need to convert their raw measurements into visual coordinates, an operation that adds uncertainty. The parameters of this conversion are usually estimated via calibrations with observers fixating at known locations. However, both inaccuracies in the data points themselves and interpolation errors, particularly in highly nonlinear eye-trackers, contribute to lower accuracy. As mentioned, in normal, untrained observers, just the error caused by eye movements during fixation on each of the points of a calibration grid can be as large as the entire foveola (Cherici et al., 2012). In keeping with these considerations, accuracy is reported to be approximately 0.3° to 1.0° for video eye-trackers (Holmqvist, 2015; Blignaut & Wium, 2014), values that likely underestimate the actual errors.

Here, we report that the dDPI can be used in conjunction with the same techniques we previously used with the analog DPI to increase gaze localization accuracy. These procedures consist of the iterative refinement of the mapping from the eye position measurements given by the eye-tracker into screen coordinates on the basis of real-time visual feedback (Santini et al., 2007) (see also Supplementary Information in Rucci, Iovin, Poletti, & Santini [2007]; Poletti, Burr, & Rucci [2013]). This gaze-contingent calibration effectively improves accuracy by almost one order of magnitude (Poletti & Rucci, 2016). As demonstrated empirically by the real-time scene interaction described in Figure 8E, gaze localization in our apparatus is sufficiently accurate to enable observers to use their gaze to effortlessly pick and drop objects as small as 10′.

The analog DPI’s ability to combine resolution, precision, and real-time stimulation control has been widely applied in vision science. In our laboratory, these techniques have been used, among other things, to examine the influence of eye movements on spatial vision (Rucci & Victor, 2015; Rucci, Ahissar, & Burr, 2018), map visual functions across the fovea (Intoy et al., 2021), study the interaction between eye movements and attention (Zhang et al., 2021; Poletti, Rucci, & Carrasco, 2017), and elucidate oculomotor strategies (Poletti & Rucci, 2016; Yu et al., 2018; Poletti et al., 2020). For example, accurate gaze localization has been instrumental in demonstrating that eye movements improve fine spatial vision (Rucci et al., 2007; Intoy & Rucci, 2020) and that microsaccades precisely position the center of gaze on relevant features of the stimulus (Ko et al., 2010; Poletti et al., 2020). These studies were possible thanks to the capability of reliably resolving eye movements at the arcminute level, accurately localizing gaze, and modifying stimuli in real time, all of which are now afforded by the system described here.

The dDPI presents several advantages over its analog predecessor. Although very precise, the analog DPI is cumbersome both to use and maintain. Maintenance requires frequent tuning for optimal performance, and the experimenter needs to have good technical knowledge of the apparatus to operate it effectively. In contrast, the dDPI does not rely on the mechanical motion of detectors. It therefore requires little maintenance and is easy to operate, like most video-based eye-trackers (Holmqvist & Blignaut, 2020). Furthermore, the dDPI likely achieves higher resolution than its analog predecessor, as the estimation of the positions of the Purkinje images is based on many pixels, rather than just the four quadrants of a detector. Our data indicate that resolution already outperforms the motor precision by which we can rotate an artificial eye (Figure 6). It is, however, important to point out that various factors will contribute to lower resolution in real eyes, including the optical quality of the images, changes in accommodation, and possible movements of the head (Figure 7).
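The benefit of estimating position from many pixels can be illustrated with a simple subpixel localization example. The sketch below uses an intensity-weighted centroid on a synthetic Gaussian spot. This is a deliberately simpler estimator than the radial-symmetry method on which our tracking algorithm relies, and the spot size, width, and position are hypothetical values chosen for the demonstration.

```python
import math


def gaussian_spot(size, cx, cy, sigma):
    """Synthetic image (list of rows) with a Gaussian blob centered at (cx, cy)."""
    return [
        [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))
         for x in range(size)]
        for y in range(size)
    ]


def weighted_centroid(image):
    """Intensity-weighted center of mass, returned as (x, y) in pixel units."""
    total = sum_x = sum_y = 0.0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            total += value
            sum_x += value * x
            sum_y += value * y
    return sum_x / total, sum_y / total


if __name__ == "__main__":
    # A 15 x 15 pixel window with a noise-free spot at a non-integer position.
    spot = gaussian_spot(size=15, cx=7.3, cy=6.8, sigma=2.0)
    print(weighted_centroid(spot))
```

Because every pixel in the window contributes to the estimate, the recovered center lies well within a fraction of a pixel of the true position in this noise-free case. With real images, noise and background light would reduce this performance.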

A further advantage of the dDPI is that it can be flexibly configured to the needs of the study under consideration. In our prototype, we used a high-end camera that enabled imaging at high speed of a large portion of the pupil. However, the approach we described can also be implemented with a less expensive camera, paying attention to identifying the best trade-off between spatial resolution, temporal resolution, and trackable range for the specific experiments of interest. Resolution can be traded easily for width of the field of view by modifying the optics. For example, in studies that focus selectively on small eye movements, narrowing the imaged portion of the eye yields more pixels in the regions of interest, and therefore higher resolution. Similarly, temporal resolution can be flexibly modified and traded with spatial resolution by varying the size of the output image. In fact, the dDPI allows higher temporal acquisition rates than those afforded by the analog DPI, which is limited by its internal electrical and mechanical filtering.

To summarize, the digital extension of the DPI approach enables high-resolution eye-tracking while avoiding some of the challenges associated with the traditional analog device. It is worth emphasizing that the prototype described in this study is only a proof of concept that can be improved in a variety of ways. For example, as with the analog DPI, we tested the system with the head immobilized. However, relatively simple improvements in the optics can decrease artifacts caused by head movements. Furthermore, the processing algorithm can be significantly enhanced. To emulate the process of the analog machine, our tracking algorithm relies on a well-tested method for centroid estimation (Parthasarathy, 2012). We did not investigate other algorithms or add new features. However, the flexibility and intelligence provided by software programming allow for the development of more robust algorithms. This is especially true if time is not critical and eye movements can be processed offline.

Acknowledgments

The authors thank Martina Poletti, Soma Mizobuchi, and the other members of the Active Perception Laboratory for helpful comments, discussions, and technical assistance during the course of this work.

Supported by Reality Labs and by NIH grants R01 EY18363 and P30 EY001319.

A Matlab implementation of the eye-tracking algorithm can be found at https://github.com/rueijrwu/dDPIAlgorithm.

Commercial relationships: RW, ZZ, and MR are inventors of a patent on digital DPI eye-tracking granted to the University of Rochester.

Corresponding author: Michele Rucci.

Email: mrucci@ur.rochester.edu.

Address: Department of Brain & Cognitive Sciences, Meliora Hall, University of Rochester, Rochester, NY 14627, USA.

References

- Abdulin, E., Friedman, L., & Komogortsev, O. (2019). Custom video-oculography device and its application to fourth Purkinje image detection during saccades. arXiv, 10.48550/arXiv.1904.07361.

- Akram, M. N., Baraas, R. C., & Baskaran, K. (2018). Improved wide-field emmetropic human eye model based on ocular wavefront measurements and geometry-independent gradient index lens. Journal of the Optical Society of America, 35(11), 1954–1967.

- Atchison, D. A. (2006). Optical models for human myopic eyes. Vision Research, 46(14), 2236–2250.

- Atchison, D. A., & Thibos, L. N. (2016). Optical models of the human eye. Clinical and Experimental Optometry, 99(2), 99–106.

- Bahill, A. T., Clark, M. R., & Stark, L. (1975). The main sequence, a tool for studying human eye movements. Mathematical Biosciences, 24(3–4), 191–204.

- Bakaraju, R. C., Ehrmann, K., Papas, E., & Ho, A. (2008). Finite schematic eye models and their accuracy to in-vivo data. Vision Research, 48(16), 1681–1694.

- Blignaut, P. J., & Wium, D. (2014). Eye-tracking data quality as affected by ethnicity and experimental design. Behavior Research Methods, 46, 67–80.

- Boi, M., Poletti, M., Victor, J. D., & Rucci, M. (2017). Consequences of the oculomotor cycle for the dynamics of perception. Current Biology, 27(9), 1268–1277.

- Cherici, C., Kuang, X., Poletti, M., & Rucci, M. (2012). Precision of sustained fixation in trained and untrained observers. Journal of Vision, 12(6), 1–16.

- Collewijn, H., Martins, A. J., & Steinman, R. M. (1981). Natural retinal image motion: Origin and change. Annals of the New York Academy of Sciences, 374, 312–329.

- Cornsweet, T. N. (1956). Determination of the stimuli for involuntary drifts and saccadic eye movements. Journal of the Optical Society of America, 46(11), 987–993.

- Cornsweet, T. N., & Crane, H. D. (1973). Accurate two-dimensional eye tracker using first and fourth Purkinje images. Journal of the Optical Society of America, 63(8), 921–928.

- Crane, H. D., & Steele, C. M. (1985). Generation-V dual-Purkinje-image eyetracker. Applied Optics, 24(4), 527–537.

- Demer, J. L., & Clark, R. A. (2019). Translation and eccentric rotation in ocular motor modeling. Progress in Brain Research, 248, 117–126.

- Deubel, H., & Bridgeman, B. (1995). Perceptual consequences of ocular lens overshoot during saccadic eye movements. Vision Research, 35(20), 2897–2902.

- Deubel, H., & Schneider, W. X. (1996). Saccade target selection and object recognition: Evidence for a common attentional mechanism. Vision Research, 36(12), 1827–1837.

- Drewes, J., Zhu, W., Hu, Y., & Hu, X. (2014). Smaller is better: Drift in gaze measurements due to pupil dynamics. PLoS One, 9(10), 1–6.

- Fiorentini, A., & Ercoles, A. M. (1966). Involuntary eye movements during attempted monocular fixation. Atti della Fondazione Giorgio Ronchi, 21, 199–217.

- Fry, G. A., & Hill, W. W. (1962). The center of rotation of the eye. Optometry and Vision Science, 39(11), 581–595.

- Geisler, W. S., & Perry, J. S. (1998). Real-time foveated multiresolution system for low-bandwidth video communication. SPIE, 3299, 294–305.

- Havermann, K., Cherici, C., Rucci, M., & Lappe, M. (2014). Fine-scale plasticity of microscopic saccades. Journal of Neuroscience, 34(35), 11665–11672.

- Hawken, M., & Gegenfurtner, K. (2001). Pursuit eye movements to second-order motion targets. Journal of the Optical Society of America, 18(9), 2282–2296.

- Hayhoe, M. M., Bensinger, D. G., & Ballard, D. H. (1998). Task constraints in visual working memory. Vision Research, 38(1), 125–137.

- Holmqvist, K. (2015). Eye tracking: A comprehensive guide to methods and measures. Oxford, UK: Oxford University Press.

- Holmqvist, K., & Blignaut, P. (2020). Small eye movements cannot be reliably measured by video-based P-CR eye-trackers. Behavior Research Methods, 52(5), 2098–2121.

- Hooge, I., Holmqvist, K., & Nyström, M. (2016). The pupil is faster than the corneal reflection (CR): Are video based pupil-CR eye trackers suitable for studying detailed dynamics of eye movements? Vision Research, 128, 6–18.

- Hyona, J., Munoz, D., Heide, W., & Radach, R. (2002). Transsaccadic memory of position and form. Progress in Brain Research, 140, 165–180. [DOI] [PubMed] [Google Scholar]

- Intoy, J., Mostofi, N., & Rucci, M. (2021). Fast and nonuniform dynamics of perisaccadic vision in the central fovea. Proceedings of the National Academy of Science of the United States of America, 118(37). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Intoy, J., & Rucci, M. (2020). Finely tuned eye movements enhance visual acuity. Nature Communications, 11(795), 1–11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Karn, K. S., Møller, P., & Hayhoe, M. M. (1997). Reference frames in saccadic targeting. Experimental Brain Research, 115(2), 267–282. [DOI] [PubMed] [Google Scholar]

- Kilpeläinen, M., Putnam, N. M., Ratnam, K., & Roorda, A. (2021). The retinal and perceived locus of fixation in the human visual system. Journal of Vision, 21(11), 9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ko, H. K., Poletti, M., & Rucci, M. (2010). Microsaccades precisely relocate gaze in a high visual acuity task. Nature Neuroscience, 13(3), 1549–1553. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kowler, E. (2011). Eye movements: The past 25 years. Vision Research, 51(13), 1457–83. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kuang, X., Poletti, M., Victor, J. D., & Rucci, M. (2012). Temporal encoding of spatial information during active visual fixation. Current Biology, 22(6), 510–514. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lee, J. W., Cho, C. W., Shin, K. Y., Lee, E. C., & Park, K. R. (2012). 3D gaze tracking method using Purkinje images on eye optical model and pupil. Optics and Lasers in Engineering, 50(5), 736–751. [Google Scholar]

- McConkie, G. W. (1997). Eye movement contingent display control: Personal reflections and comments. Scientific Studies of Reading, 1(4), 303–316. [Google Scholar]

- Mostofi, N., Boi, M., & Rucci, M. (2016). Are the visual transients from microsaccades helpful? Measuring the influences of small saccades on contrast sensitivity. Vision Research, 118, 60–69. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mostofi, N., Zhao, Z., Intoy, J., Boi, M., Victor, J. D., & Rucci, M. (2020). Spatiotemporal content of saccade transients. Current Biology, 30(20), 3999–4008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mulligan, J. B. (1997). Image processing for improved eye-tracking accuracy. Behavior Research Methods, 29(1), 54–65. [DOI] [PubMed] [Google Scholar]

- Najemnik, J., & Geisler, W. S. (2008). Eye movement statistics in humans are consistent with an optimal search strategy. Journal of Vision, 8(3), 1–14.

- Orfanidis, S. J. (2016). Electromagnetic waves and antennas. Available: https://www.ece.rutgers.edu/~orfanidi/ewa/.

- Parthasarathy, R. (2012). Rapid, accurate particle tracking by calculation of radial symmetry centers. Nature Methods, 9(7), 724–726.

- Poletti, M., Aytekin, M., & Rucci, M. (2015). Head/eye coordination at the microscopic scale. Current Biology, 25, 3253–3259.

- Poletti, M., Burr, D. C., & Rucci, M. (2013). Optimal multimodal integration in spatial localization. Journal of Neuroscience, 33(35), 14259–14268.

- Poletti, M., Intoy, J., & Rucci, M. (2020). Accuracy and precision of small saccades. Scientific Reports, 10(16097), 1–13.

- Poletti, M., Listorti, C., & Rucci, M. (2013). Microscopic eye movements compensate for nonhomogeneous vision within the fovea. Current Biology, 23(17), 1691–1695.

- Poletti, M., & Rucci, M. (2015). Control and function of fixational eye movements. Annual Review of Vision Science, 1, 499–518.

- Poletti, M., & Rucci, M. (2016). A compact field guide to the study of microsaccades: Challenges and functions. Vision Research, 118, 83–97.

- Poletti, M., Rucci, M., & Carrasco, M. (2017). Selective attention within the foveola. Nature Neuroscience, 20(10), 1413–1417.

- Pomplun, M., Reingold, E. M., & Shen, J. (2001). Peripheral and parafoveal cueing and masking effects on saccadic selectivity in a gaze-contingent window paradigm. Vision Research, 41(21), 2757–2769.

- Rayner, K. (1997). Understanding eye movements in reading. Scientific Studies of Reading, 1(4), 317–339.

- Reiniger, J. L., Domdei, N., Holz, F. G., & Harmening, W. M. (2021). Human gaze is systematically offset from the center of cone topography. Current Biology, 31(18), 4188–4193.e3.

- Rolfs, M. (2009). Microsaccades: Small steps on a long way. Vision Research, 49(20), 2415–2441.

- Rosales, P., & Marcos, S. (2006). Phakometry and lens tilt and decentration using a custom-developed Purkinje imaging apparatus: Validation and measurements. Journal of the Optical Society of America A, 23(3), 509–520.

- Rucci, M., Ahissar, E., & Burr, D. (2018). Temporal coding of visual space. Trends in Cognitive Sciences, 22(10), 883–895.

- Rucci, M., Iovin, R., Poletti, M., & Santini, F. (2007). Miniature eye movements enhance fine spatial detail. Nature, 447(7146), 852–855.

- Rucci, M., & Victor, J. D. (2015). The unsteady eye: An information processing stage, not a bug. Trends in Neurosciences, 38(4), 195–206.

- Santini, F., Redner, G., Iovin, R., & Rucci, M. (2007). EyeRIS: A general-purpose system for eye movement contingent display control. Behavior Research Methods, 39(3), 350–364.

- Shelchkova, N., & Poletti, M. (2020). Modulations of foveal vision associated with microsaccade preparation. Proceedings of the National Academy of Sciences of the United States of America, 117(20), 11178–11183.

- Shelchkova, N., Tang, C., & Poletti, M. (2019). Task-driven visual exploration at the foveal scale. Proceedings of the National Academy of Sciences of the United States of America, 116(12), 5811–5818.

- Snodderly, D. M. (1987). Effects of light and dark environments on macaque and human fixational eye movements. Vision Research, 27(3), 401–415.

- Steinman, R. M., Haddad, G. M., Skavenski, A. A., & Wyman, D. (1973). Miniature eye movement. Science, 181(4102), 810–819.

- Tabernero, J., & Artal, P. (2014). Lens oscillations in the human eye. Implications for post-saccadic suppression of vision. PLoS One, 9, 1–6.

- Tong, J., Stevenson, S. B., & Bedell, H. E. (2008). Signals of eye-muscle proprioception modulate perceived motion smear. Journal of Vision, 8(14), 1–16.

- Wang, D., Mulvey, F. B., Pelz, J. B., & Holmqvist, K. (2017). A study of artificial eyes for the measurement of precision in eye-trackers. Behavior Research Methods, 49(3), 947–959.

- Wilson, M. A., Campbell, M. C., & Simonet, P. (1992). Change of pupil centration with change of illumination and pupil size. Optometry and Vision Science, 69(2), 129–136.

- Yang, G., Dempere-Marco, L., Hu, X., & Rowe, A. (2002). Visual search: Psychophysical models and practical applications. Image and Vision Computing, 20(4), 291–305.

- Yu, F., Gill, C., Poletti, M., & Rucci, M. (2018). Monocular microsaccades: Do they really occur? Journal of Vision, 18(3), 1–14.

- Zhang, Y., Shelchkova, N., Ezzo, R., & Poletti, M. (2021). Transient perceptual enhancements resulting from selective shifts of exogenous attention in the central fovea. Current Biology, 31(12), 2698–2703.e2.