PURPOSE

Arkansas is one of only four known states that have linked their All-Payer Claims Database (APCD) to the state's cancer registry (Arkansas Cancer Registry [ACR]). We evaluated the consistency of radiation therapy (RT) reporting between the two sources.

METHODS

Women age ≥ 18 years diagnosed in 2013-2017 with early-stage hormone receptor–positive breast cancer who received breast-conserving surgery were identified. Patients were required to have continuous insurance coverage (any private plans, Medicaid, and Medicare) for 13 months (the month of diagnosis and the 12 months after). Receipt of RT was identified independently from ACR and APCD. We calculated sensitivity, specificity, positive predictive value, and negative predictive value for receipt of RT coded by the registry compared with APCD billing claims as the gold standard. We assessed the degree of concordance between the data sources using Cohen's kappa statistic.

RESULTS

The final sample included 2,695 patients who were in both databases and satisfied our inclusion/exclusion criteria. Using APCD as the gold standard, sensitivity (88.1%) and positive predictive value (87.7%) were high, and specificity (71.1%) and negative predictive value (71.8%) were moderate. The overall agreement between the two sources was 83.0%, with a kappa statistic of 0.59 (95% CI, 0.56 to 0.63). Consistency measures varied by age, stage, and insurance type, with Medicare fee-for-service coverage only having the best consistency and private insurance only the worst.

CONCLUSION

In patients with early-stage hormone receptor–positive breast cancer who received breast-conserving surgery, recording of RT receipt was moderately consistent between Arkansas APCD and ACR. Future studies are needed to identify factors affecting reporting consistency to better use this unique resource in addressing population health problems.

INTRODUCTION

All-payer claims databases (APCDs) are large state databases that include medical, pharmacy, and dental claims and eligibility and provider files collected from private and/or public payers.1 In an effort to increase price transparency, 18 states currently have legislation mandating the creation and use of APCDs or are actively establishing APCDs, and more than 30 states are maintaining or developing an APCD or have a strong interest in developing one.2 State APCDs may include individuals of all ages and can capture health care utilization longitudinally across settings and payers, providing a more complete picture of care even when patients switch insurance plans or are covered concurrently by multiple plans or payers.2 Arkansas is a pioneer in developing a state APCD. Arkansas' APCD included claims from commercial plans, State Employee plans, Medicaid, and Medicare, covering 80% of Arkansans. Although many states have developed APCDs, Arkansas is one of only a few states that have linked their APCD to the state's central cancer registry,3 which provides the critical tumor-related information for cancer research.

CONTEXT

Key Objective

To evaluate reporting consistency of radiation therapy (RT) between Arkansas' All-Payer Claims Database (APCD) and Arkansas Cancer Registry in adult women with early-stage hormone receptor–positive breast cancer who received breast-conserving surgery.

Knowledge Generated

Recording of RT receipt was moderately consistent between APCD and Arkansas Cancer Registry. Consistency measures varied by age, stage, and insurance type, with Medicare fee-for-service coverage only having the best consistency and private insurance only the worst.

Relevance

Cancer registries document only the first course of treatment and may underreport nonsurgical treatment. APCD can track patients longitudinally across settings and payers throughout the cancer care continuum and may improve documentation of treatment among patients with continuous insurance coverage. Our findings showed good consistency between the two sources, and APCD can be a valuable resource to determine RT received as part of first-course treatment by patients with early-stage hormone receptor–positive breast cancer who received breast-conserving surgery.

Although APCD offers many potential advantages over one-payer claims databases, many challenges have been identified that could threaten the completeness of data on treatment patterns.4 Therefore, it is important to assess the quality of the linked database. Only one other state has compared reported cancer treatments in a linked APCD-registry database. Utah linked its APCD to the state's registry and found that APCD improved cancer treatment data collection; however, identifying treatment using both databases increased the false-positive rate when compared with manual abstraction as the gold standard.5

In this study, we assessed the quality of reporting radiation therapy (RT) in the linked Arkansas APCD-Arkansas Cancer Registry (ACR) database among females with early-stage hormone receptor–positive (HR+) breast cancer (BC) who received breast-conserving surgery (BCS). Multiple randomized trials comparing BCS with mastectomy showed no differences in overall survival.6-10 Radiation after BCS is recommended11 and has been shown to significantly reduce the rates of ipsilateral breast tumor recurrence and improve BC-specific and overall survival.12 The specific aims of the study were to (1) compare the consistency in documented RT receipt between APCD and ACR and (2) identify factors associated with discordance between the two sources.

METHODS

This study used only deidentified secondary databases and was determined by our institution's institutional review board not to be a human subject study (IRB# 274492).

Data Sources

We used Arkansas APCD linked to ACR, established by the Arkansas Center for Health Improvement, a nonpartisan, independent health policy center dedicated to improving the health of Arkansans.13 Enrollee's last name, birth date, and sex were used to achieve this linkage, with an estimated matching rate of 96.5%.14 This study included BC patients diagnosed from 2013 to 2017 in ACR and claims from January 1, 2013, to December 31, 2019.

Patient Selection

From ACR, we identified Arkansas women age ≥ 18 years diagnosed in 2013-2017 with early-stage (stages 0-3) HR+ (ie, estrogen receptor– and/or progesterone receptor–positive) BC (ICD-O: C50.0x-C50.9x) who received BCS. Stage was based on either pathologic or clinical stage, whichever was higher. BCS recorded in ACR was defined as partial or less than total mastectomy with/without dissection of axillary lymph nodes. Patients were excluded if they (1) received no surgery, received mastectomy, or had unknown surgery status; (2) were HR− or had unknown HR status; (3) had multiple cancer diagnoses in ACR; (4) were diagnosed from autopsy or death certificate; or (5) had stage 4 BC or unknown stage. Date of cancer diagnosis was from ACR, excluding patients with missing year and/or month of diagnosis. For those missing only day of diagnosis, we used the first day of the recorded diagnosis month as the day of diagnosis.

We then used the unique alias patient IDs created by the Arkansas Center for Health Improvement to identify patients' claims in APCD. The following additional inclusion criteria were implemented to ensure complete claims for all individuals in the study. For private plans and Medicaid, we required continuous medical insurance coverage in the month of BC diagnosis and 12 months after, for a total of 13 months of continuous coverage. Medicare claims were from the Centers for Medicare & Medicaid Services and do not include claims from Medicare Advantage (MA) plans. Therefore, for Medicare claims, we required continuous part A and B coverage and no MA coverage (ie, hereafter Medicare fee-for-service [FFS]) throughout the 13 months. However, claims from MA plans can be part of Arkansas APCD private plan claims if these plans were administered through insurers in Arkansas. For this study, we consider them as private plans.
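The 13-month continuous coverage rule described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names and the `covered_months` structure (a set of `(year, month)` pairs built from an eligibility file) are assumptions made for the example.

```python
from __future__ import annotations

from datetime import date


def month_index(d: date) -> int:
    """Map a date to a running month number so months can be stepped through."""
    return d.year * 12 + (d.month - 1)


def has_continuous_coverage(
    dx_date: date,
    covered_months: set[tuple[int, int]],
    n_months: int = 13,
) -> bool:
    """Return True if the diagnosis month and the following months are all covered.

    covered_months is a hypothetical set of (year, month) pairs in which the
    patient had medical coverage; n_months=13 mirrors the month of diagnosis
    plus 12 months after.
    """
    start = month_index(dx_date)
    for offset in range(n_months):
        year, month0 = divmod(start + offset, 12)
        if (year, month0 + 1) not in covered_months:
            return False
    return True


# Example: diagnosis in March 2015, coverage from March 2015 through March 2016.
full = {(2015, m) for m in range(3, 13)} | {(2016, m) for m in range(1, 4)}
gap = full - {(2015, 12)}  # same patient with one uncovered month

print(has_continuous_coverage(date(2015, 3, 10), full))
print(has_continuous_coverage(date(2015, 3, 10), gap))
```

For Medicare FFS, the same check would be applied to months with both part A and part B coverage and no MA enrollment, which would require additional flags not shown here.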

Receipt of RT

Receipt of RT was identified independently from ACR and APCD to facilitate comparison. ACR collects information on the first course of therapy as documented in the treatment plan. Six variables in ACR could inform RT receipt: RADDOSE (regional dose [cGy]), RADMODALITY (regional RX modality), RADVOL (treatment volume/anatomic site), REASONNORAD (reason for no radiation), RXDATERAD (first date of radiation), and RXDTRADEND (end date of radiation), and the information can differ slightly across variables. We considered a patient to have received RT if any of the six variables indicated it, and not to have received RT if none did (including when the variables indicated no radiation or unknown radiation status).

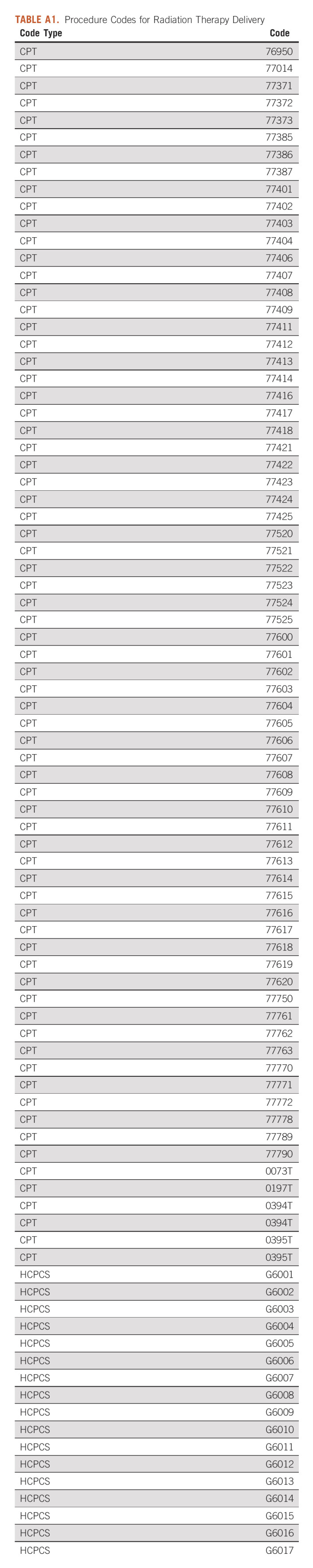

In APCD, RT was identified using procedure codes for radiation delivery (Appendix Table A1). Following previous studies,5,15,16 we chose a 1-year postdiagnosis window to identify RT as part of the first course of treatment in APCD. We considered a patient to have received RT if she had at least one claim with these procedure codes. For sensitivity analyses, we used windows of 6 months and 2 years to look for RT claims. In an additional sensitivity analysis, we relaxed the continuous insurance coverage requirement.
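The claims-based definition above (at least one radiation delivery claim within a postdiagnosis window) might be implemented along these lines. This is a sketch under stated assumptions: the codes in `EXAMPLE_RT_CODES` are placeholders rather than the codes in Appendix Table A1, claims are represented as hypothetical `(service_date, procedure_code)` pairs, and the 1-year window is approximated as 365 days.

```python
from datetime import date, timedelta

# Placeholder codes for illustration only; the study's list is in Appendix Table A1.
EXAMPLE_RT_CODES = {"RT-A", "RT-B"}


def first_rt_claim_date(dx_date, claims, rt_codes, window_days=365):
    """Return the earliest RT delivery claim date within the window, or None.

    claims is a hypothetical iterable of (service_date, procedure_code) pairs.
    window_days=365 approximates the 1-year window; roughly 183 or 730 days
    would approximate the 6-month and 2-year sensitivity analyses.
    """
    window_end = dx_date + timedelta(days=window_days)
    rt_dates = [
        service_date
        for service_date, code in claims
        if code in rt_codes and dx_date <= service_date <= window_end
    ]
    return min(rt_dates) if rt_dates else None


example_claims = [
    (date(2015, 4, 1), "OFFICE-VISIT"),  # not an RT delivery code
    (date(2015, 5, 1), "RT-A"),          # first RT delivery claim
    (date(2016, 6, 1), "RT-B"),          # outside the 1-year window
]
dx = date(2015, 3, 10)

start = first_rt_claim_date(dx, example_claims, EXAMPLE_RT_CODES)
received_rt = start is not None
print(received_rt, start)
```

Returning the first claim date rather than a bare yes/no lets the same function supply both the RT receipt flag and the claims-based RT starting date.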

Radiation to Breast

In ACR, the variable RADVOL identifies the volume or anatomic target of the most clinically significant regional radiation delivered to the patient during the first course of therapy.17 We considered a patient to have received radiation to the breast if the recorded site was 18, breast (the primary target is the intact breast, and no attempt has been made to irradiate the regional lymph nodes), or 19, breast/lymph nodes (a deliberate attempt has been made to include regional lymph nodes in the treatment of an intact breast).17

In APCD, radiation procedure codes are not specific to any anatomic site. To identify radiation to breast, we required a diagnosis of BC (malignant neoplasm of female breast: ICD9 174.xx/ICD10 C50.xx; carcinoma in situ of breast: ICD9 233.0x/ICD10 D05.xx) on the day of the procedure to minimize the probability of identifying radiation directed to a metastatic site or for other cancers.
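The diagnosis-code filter described above can be expressed as a simple prefix check. The sketch below assumes diagnosis codes arrive as strings with or without the decimal point and ignores the ICD version flag that a real claims file would carry; the function name is hypothetical.

```python
# Prefixes taken from the code ranges named in the text (dots removed):
# ICD-9 174.xx and 233.0x; ICD-10 C50.xx and D05.xx.
BC_PREFIXES = ("174", "2330", "C50", "D05")


def is_breast_cancer_dx(code: str) -> bool:
    """Return True if the diagnosis code falls in the breast cancer ranges."""
    normalized = code.replace(".", "").strip().upper()
    return normalized.startswith(BC_PREFIXES)


def rt_to_breast(dx_codes_on_rt_day) -> bool:
    """Return True if any diagnosis on the RT procedure date is breast cancer."""
    return any(is_breast_cancer_dx(code) for code in dx_codes_on_rt_day)


# An RT claim with an RT-encounter code as principal diagnosis and breast cancer as secondary.
print(rt_to_breast(["Z51.0", "C50.911"]))
# An RT claim with no breast cancer diagnosis on the day of the procedure.
print(rt_to_breast(["Z51.0", "C79.51"]))
```

Checking every diagnosis on the claim, not only the principal one, matters here because the Results report that a large share of RT claims carried breast cancer as a secondary diagnosis.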

Radiation Starting Date

ACR records the first date when RT was delivered as part of the first course of therapy (RXDATERAD). In APCD, we used the date of the first radiation delivery claim within 1 year after BC diagnosis as the RT starting date.

Key Covariates

Covariates included patient's age at diagnosis (< 64, 64-65, and > 65 years), race/ethnicity (Hispanic, non-Hispanic White, non-Hispanic Black, non-Hispanic Others), stage (0-3), insurance type during the 13 months (private plans only, Medicaid only, Medicare FFS only, private/Medicaid, private/Medicare FFS, Medicaid/Medicare FFS, or all three), residence at time of diagnosis (rural/urban), and year of diagnosis. Age group 64-65 years was studied separately because insurance coverage during this time is expected to change because of age-related Medicare eligibility. Rurality was based on the 2013 National Center for Health Statistics' continuum codes of rurality.18

Analysis

We calculated sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV) for RT receipt documented in the registry compared with APCD billing claims as the gold standard. We chose APCD as the gold standard because we required all patients to have continuous insurance coverage during the 13 months; thus, APCD should capture all RT received during this time. Billing claims were also used as the gold standard in previous studies evaluating quality of RT information in cancer registries.15,16 Nonetheless, PPV and NPV when using APCD as the gold standard are the same as sensitivity and specificity when ACR is used as the gold standard.
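A short sketch may make these definitions, and the swap between gold standards, concrete. The 2 × 2 counts below are not taken from Table 2; they are approximate values back-calculated from figures reported in the Results (2,695 patients, 1,896 with RT in ACR, 1,888 with RT claims, and 61.7% recorded as RT in both sources) and should be read as an assumption.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Accuracy measures for a test source against a reference (gold) source."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


# Approximate counts reconstructed from reported percentages (assumption).
both_yes = 1663   # ACR yes, APCD yes
acr_only = 233    # ACR yes, APCD no
apcd_only = 225   # ACR no, APCD yes
both_no = 574     # ACR no, APCD no

# ACR evaluated against APCD as the gold standard.
apcd_gold = diagnostic_metrics(tp=both_yes, fp=acr_only, fn=apcd_only, tn=both_no)
# APCD evaluated against ACR as the gold standard: the off-diagonal cells swap roles.
acr_gold = diagnostic_metrics(tp=both_yes, fp=apcd_only, fn=acr_only, tn=both_no)

for name, value in apcd_gold.items():
    print(f"{name}: {100 * value:.1f}%")
```

With these reconstructed counts, the values round to the reported 88.1%, 71.1%, 87.7%, and 71.8%, and `apcd_gold["ppv"]` equals `acr_gold["sensitivity"]` (likewise NPV and specificity), which is the identity stated in the paragraph above.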

In addition, we calculated the proportion of patients having records in agreement (ACR yes and APCD yes; ACR no and APCD no) and assessed the degree of agreement using Cohen’s kappa statistic.20 Neither statistic assumes a gold standard. The following scale was used to interpret kappa values: no agreement: ≤ 0, slight agreement: 0.01-0.20, fair agreement: 0.21-0.40, moderate agreement: 0.41-0.60, substantial agreement: 0.61-0.80, and almost perfect agreement: 0.81-1.00.21 We also conducted these analyses for radiation to breast.
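The agreement measures can be illustrated with a small function. As a sketch only: the counts are approximate values back-calculated from the reported percentages rather than taken from Table 2, and the confidence interval uses a simple large-sample standard error, which is an assumption and may differ from the method SAS or Stata applied in the study.

```python
import math


def cohen_kappa_2x2(both_yes, a_only, b_only, both_no):
    """Return (observed agreement, kappa, approximate 95% CI) for two binary sources."""
    n = both_yes + a_only + b_only + both_no
    p_obs = (both_yes + both_no) / n
    a_yes = (both_yes + a_only) / n   # proportion "yes" in source A
    b_yes = (both_yes + b_only) / n   # proportion "yes" in source B
    # Agreement expected by chance if the two sources were independent.
    p_exp = a_yes * b_yes + (1 - a_yes) * (1 - b_yes)
    kappa = (p_obs - p_exp) / (1 - p_exp)
    # Simple large-sample standard error (assumed, not confirmed by the source).
    se = math.sqrt(p_obs * (1 - p_obs) / n) / (1 - p_exp)
    return p_obs, kappa, (kappa - 1.96 * se, kappa + 1.96 * se)


def interpret_kappa(kappa):
    """Map kappa to the interpretation scale quoted in the text."""
    if kappa <= 0:
        return "no agreement"
    for upper, label in [
        (0.20, "slight agreement"),
        (0.40, "fair agreement"),
        (0.60, "moderate agreement"),
        (0.80, "substantial agreement"),
    ]:
        if kappa <= upper:
            return label
    return "almost perfect agreement"


# Approximate counts reconstructed from reported percentages (assumption).
p_obs, kappa, (ci_low, ci_high) = cohen_kappa_2x2(1663, 233, 225, 574)
print(f"agreement={p_obs:.3f} kappa={kappa:.2f} CI=({ci_low:.2f}, {ci_high:.2f})")
print(interpret_kappa(kappa))
```

Because kappa subtracts chance agreement, it is noticeably lower than the raw proportion in agreement when most patients are "yes" in both sources, as is the case for RT after BCS.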

We assessed the concordance in the recorded first day of RT by calculating the proportion of patients with reported RT initiation (1) on the same day, (2) 1-7 days apart, (3) 8-15 days apart, (4) 16-30 days apart, (5) 31-60 days apart, (6) 61-90 days apart, or (7) 91-180 days apart among patients with documented RT in both sources. We also determined the sensitivity, specificity, PPV, NPV, proportion in agreement, and kappa statistics separately by the key covariates and performed a logistic regression of reporting disagreement (ref = in agreement) on the key covariates.
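The start-date comparison amounts to binning the absolute difference in days between the two recorded dates. The following sketch uses the seven categories listed above; the final catch-all label for gaps beyond 180 days is an assumption added so the function always returns a value.

```python
from datetime import date

# (lower bound, upper bound, label) in days, following the categories in the text.
GAP_BINS = [
    (0, 0, "same day"),
    (1, 7, "1-7 days apart"),
    (8, 15, "8-15 days apart"),
    (16, 30, "16-30 days apart"),
    (31, 60, "31-60 days apart"),
    (61, 90, "61-90 days apart"),
    (91, 180, "91-180 days apart"),
]


def start_date_gap_category(acr_start: date, apcd_start: date) -> str:
    """Classify the absolute gap between registry and claims RT start dates."""
    gap_days = abs((acr_start - apcd_start).days)
    for lower, upper, label in GAP_BINS:
        if lower <= gap_days <= upper:
            return label
    return "more than 180 days apart"  # assumed catch-all, not a category in the text


print(start_date_gap_category(date(2015, 6, 1), date(2015, 6, 1)))
print(start_date_gap_category(date(2015, 6, 9), date(2015, 6, 1)))
```

Using the absolute difference treats a registry date that precedes the first claim the same as one that follows it, which matches the "days apart" wording of the categories.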

All analyses were conducted using SAS software, version 9.4 (SAS Institute, Cary, NC) and Stata software, version 17 (StataCorp LLC, College Station, TX). P < .05 for a two-sided test was considered statistically significant.

RESULTS

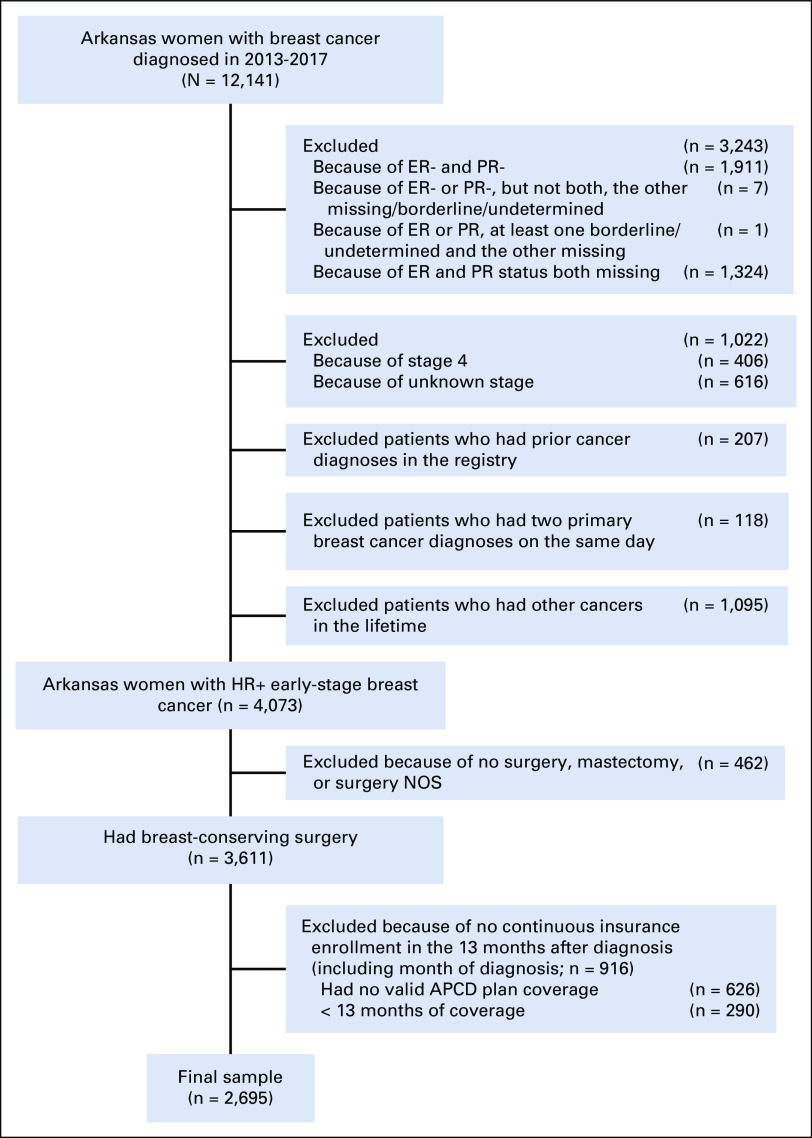

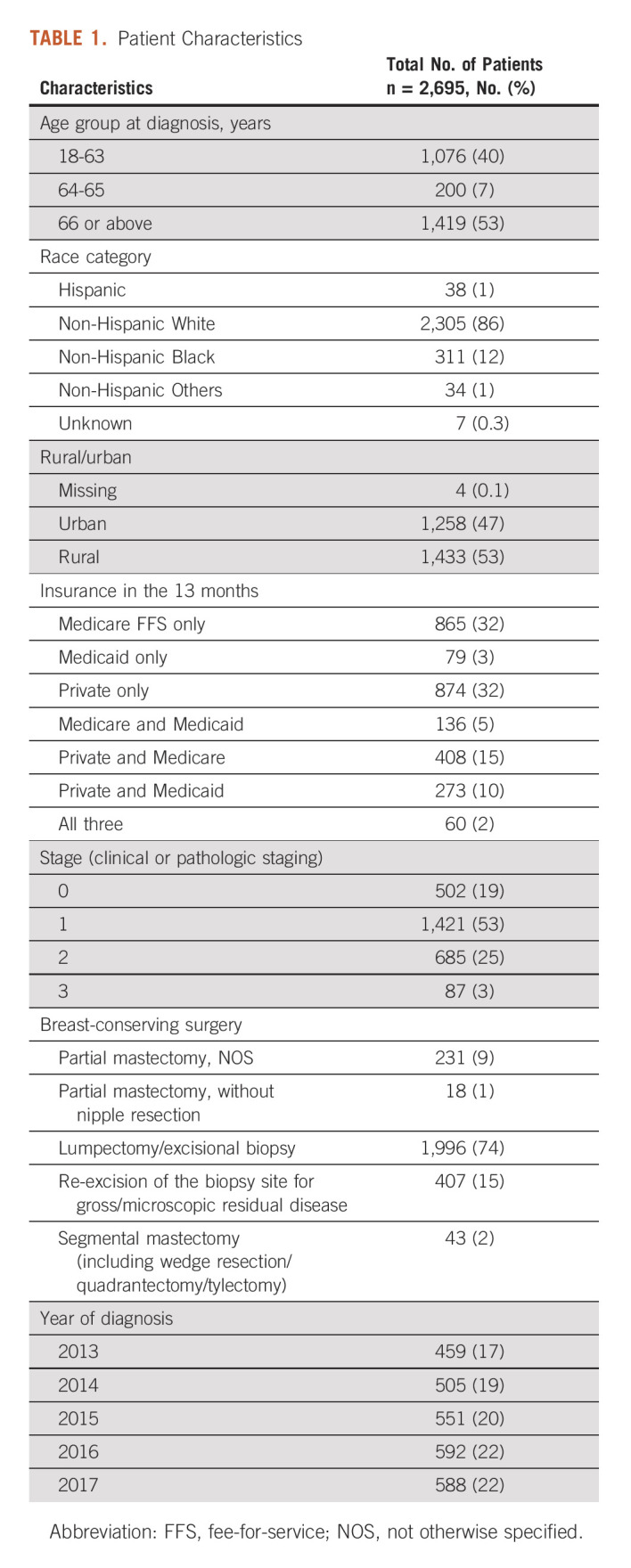

The final cohort included 2,695 patients. Figure 1 shows patients excluded because of various selection criteria. Half of the patients were diagnosed at age ≥ 66 years, and 7% were diagnosed around the Medicare eligibility age (64-65 years). The majority of patients were White (86%) and living in rural areas (53%). During the 13 months, 67% had one type of coverage (Medicare FFS only: 32%, Medicaid only: 3%, and private plans only: 32%) and 33% had a combination of coverages. Stage 0, 1, 2, and 3 disease accounted for 19%, 53%, 25%, and 3% of patients, respectively (Table 1).

FIG 1.

Patient selection flowchart. APCD, All-Payer Claims Database; ER, estrogen receptor; NOS, not otherwise specified; PR, progesterone receptor.

TABLE 1.

Patient Characteristics

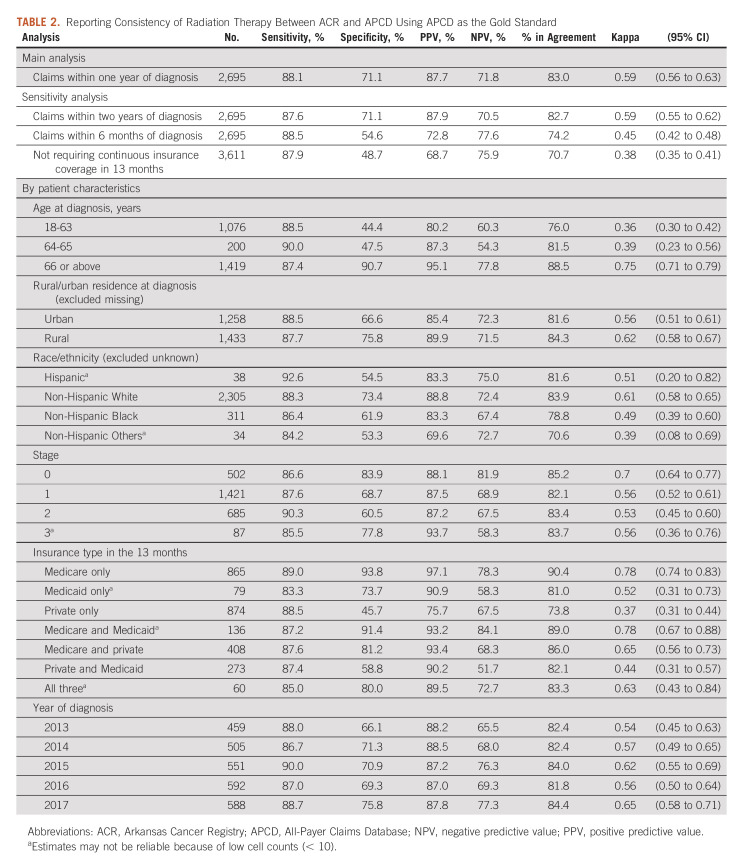

Using claims within 1 year after diagnosis, we found 83.0% agreement in documented RT receipt (ACR yes and APCD yes, 61.7%; ACR no and APCD no, 21.3%). Using APCD as the gold standard, sensitivity, specificity, PPV, and NPV were 88.1%, 71.1%, 87.7%, and 71.8%, respectively. The kappa statistic was 0.59 (95% CI, 0.56 to 0.63). Using claims within 2 years did not significantly affect these statistics. However, using claims within 6 months significantly reduced specificity (54.6%), overall agreement (74.2%), and kappa statistic (0.45; 95% CI, 0.42 to 0.48). Not requiring 13 months of continuous coverage further reduced specificity (48.7%), overall agreement (70.7%), and kappa statistic (0.38; 95% CI, 0.35 to 0.41; Table 2).

TABLE 2.

Reporting Consistency of Radiation Therapy Between ACR and APCD Using APCD as the Gold Standard

Consistency measures varied by age at diagnosis, with patients diagnosed at age ≥ 66 years having the highest specificity (90.7%) and PPV (95.1%), whereas specificity (44.4%) and PPV (80.2%) were lowest in 18-63 years age group. Across insurance groups, patients having Medicare FFS–only coverage in the 13 months had the best consistency with the highest sensitivity (89.0%), specificity (93.8%), PPV (97.1%), second best NPV (78.3%), and highest overall agreement (90.4%) and kappa statistic (0.78; 95% CI, 0.74 to 0.83); patients with private insurance only had the lowest specificity (45.7%), PPV (75.7%), overall agreement (73.8%), and kappa statistic (0.37; 95% CI, 0.31 to 0.73). By stage, patients with stage 0 cancer had the most consistency. Consistency differed by race/ethnicity, with non-Hispanic White patients having the most consistent records; however, estimates for Hispanic patients and non-Hispanic Others may be unreliable because of small numbers (Table 2).

Of all RT claims (n = 106,989) within 1 year after diagnosis, nearly all (98.6%) claims had a BC diagnosis (Medicaid: 97.4%, private: 98.6%, and Medicare: 98.9%) as either the principal (Medicaid: 70.1%, private: 68.2%, and Medicare: 63.7%) or a secondary diagnosis. Among claims with BC as a secondary diagnosis, encounter for antineoplastic RT codes (ICD9 V58.0x, ICD10 Z51.0x) were most frequently used as the principal diagnosis (Medicaid: 94.2%, private: 97.5%, and Medicare: 96.7%).

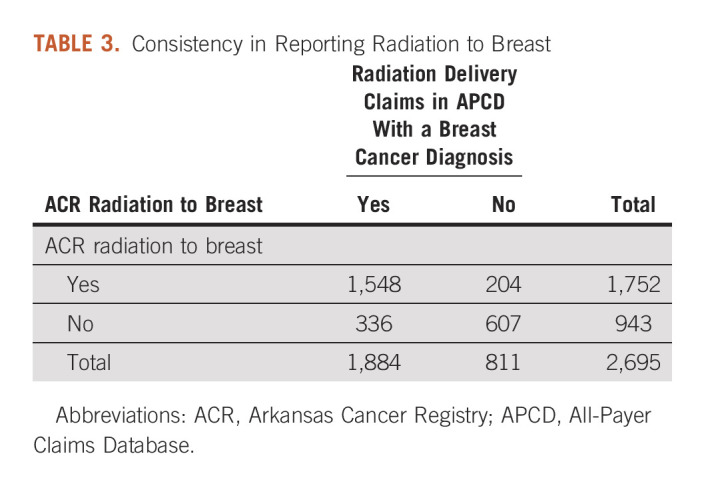

Of the 1,896 patients with documented RT in the ACR, 92.4% were documented as having received radiation to the breast and/or regional lymph nodes. Among the 1,888 patients with RT claims within 1 year after cancer diagnosis, 99.8% had claims with BC as a diagnosis. The overall agreement was 80.0% (ACR breast yes and APCD breast yes, 57.4%; ACR breast no and APCD breast no, 22.5%). The sensitivity, specificity, PPV, and NPV were 82.2%, 74.8%, 88.4%, and 64.4%, respectively, with a kappa statistic of 0.55 (95% CI, 0.52 to 0.58; Table 3).

TABLE 3.

Consistency in Reporting Radiation to Breast

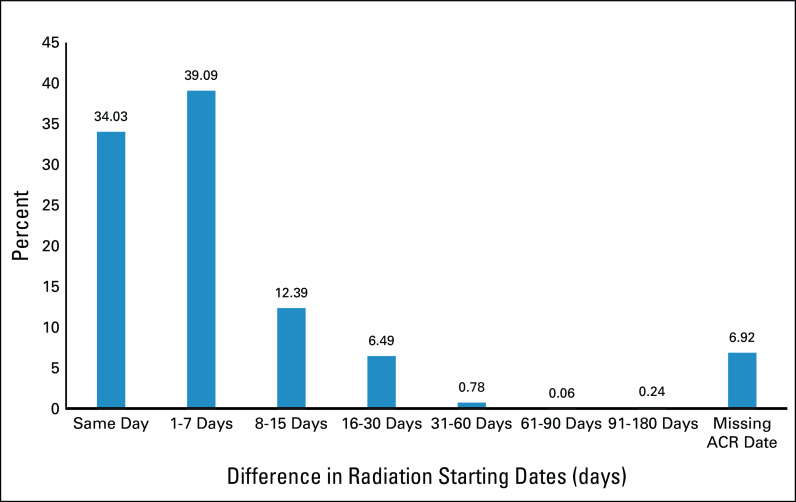

The mean documented RT starting date was 113 days after BC diagnosis in the ACR (n = 1,749) and 111 days in the APCD (n = 1,888). Of the 1,548 patients with a nonmissing starting date in both databases, the mean difference between the two sources was only 2 days; 92.0% had starting dates within 30 days of each other (same day, 34.0%; 1-7 days apart, 39.1%; 8-15 days apart, 12.4%; and 16-30 days apart, 6.5%; Fig 2).

FIG 2.

Absolute difference in radiation starting dates (in days) between ACR and APCD claims. ACR, Arkansas Cancer Registry; APCD, All-Payer Claims Database.

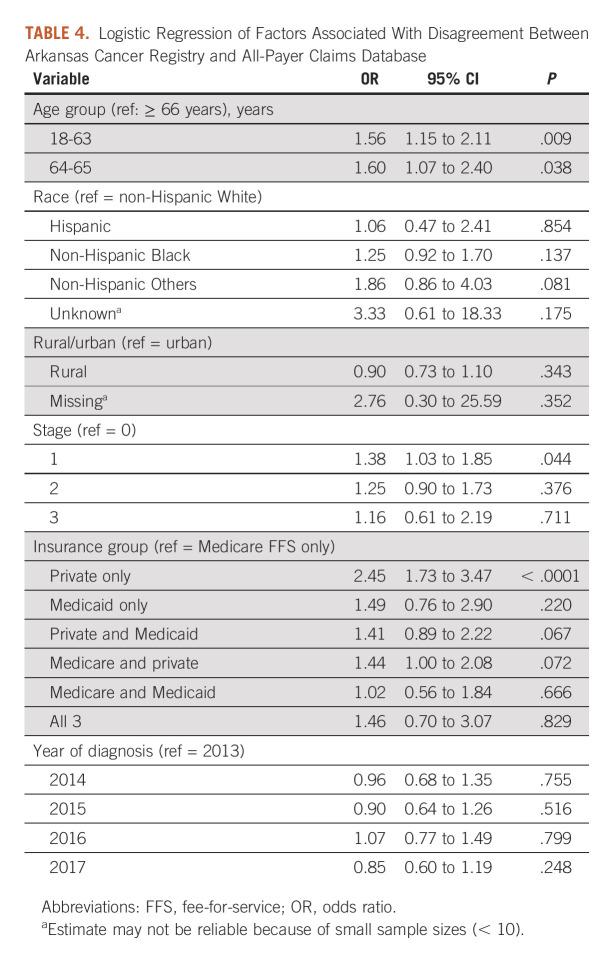

Using logistic regression on disagreement between the two sources, younger age at diagnosis versus age ≥ 66 years (odds ratio; 95% CI: 18-63 years, 1.56; 1.15 to 2.11 and 64-65 years, 1.60; 1.07 to 2.40), stage 1 versus 0 cancer (1.38; 1.03 to 1.85), and private insurance only (2.45; 1.73 to 3.47) versus Medicare FFS only in the 13 months were independently associated with a higher risk of disagreement (Table 4).

TABLE 4.

Logistic Regression of Factors Associated With Disagreement Between Arkansas Cancer Registry and All-Payer Claims Database

DISCUSSION

To our knowledge, this is the first study assessing the quality of RT reporting in Arkansas's APCD linked with the state's cancer registry among females with early-stage HR+ BC who received BCS. Overall, we found good concordance between APCD and ACR, with high sensitivity and PPV and moderate specificity and NPV. Medicare FFS claims had the best consistency with the registry, whereas private plan claims, which included claims from multiple private payers, had the worst consistency. Only two other states have linked their APCD and cancer registry to facilitate cancer-related research.5,22 APCDs can complement state cancer registries beyond the first course of treatment by tracking patients longitudinally for subsequent care throughout cancer survivorship and by supporting assessment of patterns and quality of care and outcomes.

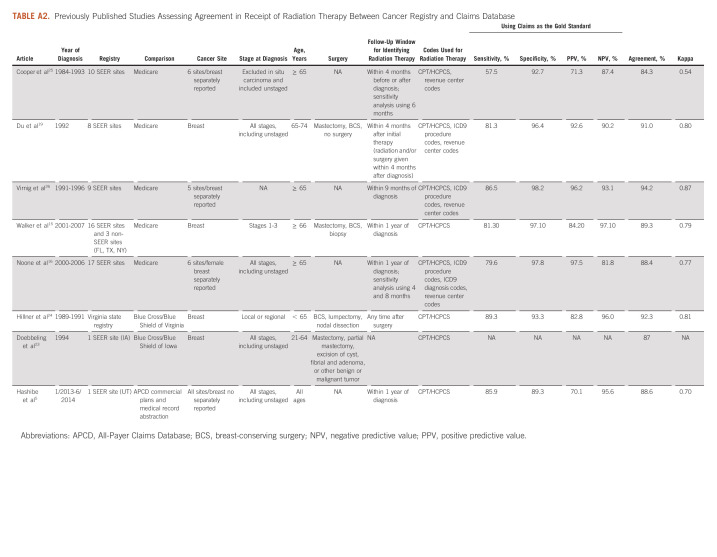

Our findings are generally consistent with previous findings. We conducted a review of published studies of registry-claims–linked databases that have compared RT documented in cancer registry with claims when BC was either the only cancer site15,19,23,24 or one of several cancer sites studied5,16,25,26 (Appendix Table A2). On the basis of reported data in these studies, we calculated sensitivity, specificity, PPV, and NPV using claims as the gold standard and proportion in agreement and kappa statistic (if not already reported in a study and feasible) to facilitate comparison with our findings. Of the eight registry-claims–linked studies identified, five assessed SEER-Medicare–linked database.15,16,19,25,26 These studies varied by years of data used (therefore varying number of SEER sites included), the follow-up window for identifying RT claims, and codes used to identify RT claims. Overall, there was a good agreement between SEER registries and Medicare claims, with 84.3%-94.2% agreement and kappa statistics ranging from 0.54 to 0.87 across the studies, which are consistent with our findings of Medicare FFS claims compared with ACR. However, agreement varied by SEER site,15,16,19 cancer stage,15,16,19 and cancer site.16,26 Moreover, since only Medicare claims were used, these studies were limited to patients age ≥ 65 years with Medicare FFS coverage.

Only three studies have assessed consistency of cancer registries with claims other than Medicare and included patients younger than age 65 years.5,23,24 All three studies used state-specific data. Two studies used earlier data and compared state's cancer registries with claims from a large private payer, Blue Cross/Blue Shield (BCBS) in their states, both restricted to patients under age 65 years.23,24 Doebbeling et al23 matched Iowa cancer registry with Iowa's BCBS for women with in situ or invasive BC from 1989 to 1996. Claims for incident cancer patients in 1994 (n = 445) were examined for consistency with cancer registry; an agreement of 87% was reported for RT. Hillner et al linked claims from Virginia's BCBS with state's cancer registry from 1989 to 1991 and included 918 female patients with BC without in situ or metastatic disease.24 Using claims as the gold standard, the sensitivity, specificity, PPV, and NPV for RT were 89.3%, 93.3%, 82.8%, and 96.0% respectively; 92% were in agreement with a kappa statistic of 0.81 (95% CI, 0.76 to 0.85).24 Similar to Iowa's study,23 no fixed window after surgery was specified to search for RT, which may identify subsequent treatment that was not part of the first course of therapy.24

Only one study compared a cancer registry with an APCD. The study used Utah's SEER registry linked with the state's APCD, which covers approximately 90% of the commercially insured population in Utah.5 Across all cancer sites, agreement for RT was 88.6%. Using APCD as the gold standard, sensitivity, specificity, PPV, and NPV were 85.9%, 89.3%, 70.1%, and 95.6%, respectively; APCD identified an additional 4.4% of patients with RT who were documented as no RT in Utah's SEER.5 For 198 patients with BC under age 65 years, manual abstraction by a certified tumor registrar was performed using SEER's Patterns of Care abstraction form.5 Compared with manual abstraction, SEER demonstrated excellent sensitivity (95.4%) and specificity (100%). Adding APCD improved sensitivity (97.7%) but reduced specificity (85.5%). APCD alone had moderate sensitivity (74.6%) and high specificity (85.7%).5

We found that risk of disagreement in RT differed by age at diagnosis and was lowest among patients age ≥ 66 years. Previous studies also found that concordance varies by age but were limited to patients age ≥ 65 years.15,16,19 Only the Utah study included all ages.5 Although separate consistency measures by age were not reported, the likelihood of identifying additional patients with RT claims in APCD missed by the registry was lower in younger groups (< 50 and 50-64 years) compared with those age ≥ 75 years, suggesting a better concordance among younger groups.5 However, claims for patients age ≥ 65 years in this study may be incomplete because only those with commercial plans at some time after cancer diagnosis were included. Another study compared RT documented in two large SEER sites (Los Angeles and Detroit) with self-report through a survey of patients age 21-79 years.27 Using self-report as the gold standard, risk of unascertainment (defined as self-reported RT not documented in SEER) was higher among younger patients (age < 50 years) compared with those ≥ 65 years (Los Angeles: 43% v 23%; Detroit: 14% v 9%).27 We also found better agreement in patients with stage 0 cancer compared with those with more advanced stages. This is consistent with previous studies16,19 and has been attributed to more complex treatment plans for advanced disease that could delay RT.

Assessing time to treatment is an important aspect of cancer care delivery. Most published studies relied on claims database to assess time to RT initiation.28-31 Some used RT starting date in cancer registry.32,33 However, no studies have compared the documented starting date between cancer registries and claims. In this study, we found high consistency in RT starting dates between the two sources. Although only 34% had first RT claim on the same day as the RT starting date in ACR, the average gap between the two sources was only 2 days. About 7% of patients with documented RT in both sources had missing RT starting date in ACR, which can be supplemented with information from APCD. This study shows that both data sources can be used to reliably document RT initiation when only one data source is available. APCD can supplement ACR when dates are missing in ACR.

Similar to our study, most published studies used CPT/HCPCS codes in outpatient settings to document RT in claims databases.5,15,23,24 Some also used ICD diagnosis codes for general radiation oncology services,16 revenue codes,16,19,25,26 and ICD procedure codes,16,19,26 which are used to document procedures performed in the inpatient setting. In our cohort, adding those codes identified only 27 additional patients in Medicare inpatient claims. However, these codes are not specific to any anatomic site. Any delivered RT should have a physician claim with a radiation-related CPT/HCPCS code. Clinically, it is also very unlikely that RT aimed at treating early-stage BC is delivered in an inpatient setting. Thus, the nature of procedures identified only by ICD diagnosis codes, revenue codes, and/or ICD procedure codes is unclear, and including claims identified solely by these codes increased the gap between the starting dates documented in ACR and APCD (average 6 days). Therefore, we recommend against using these codes to identify RT receipt.

A major limitation is that data were from one state. However, given that an increasing number of states have developed or are developing APCDs and may link them to their state cancer registries, our findings have important implications and could be compared in the future with those from other states when such data become available. Findings from this study may not generalize to patients with breast cancer excluded from this study: those who were not in the APCD, did not receive surgery, were HR− or had unknown HR status, had stage 4 cancer or unknown stage, or had multiple cancers. Findings also may not generalize to small private plans with < 2,000 enrollees or self-insured plans, which are not mandated to submit claims to APCD, or to care provided at the Veterans Health Administration. Because all patients had continuous insurance coverage during the 13 months on/after BC diagnosis, findings cannot be generalized to those with interruptions in coverage, who were uninsured, or who received uncompensated care during this time.

In conclusion, to our knowledge, this is the first study comparing RT in Arkansas's APCD linked with the state's cancer registry. Overall, we found good consistency between the sources, with high sensitivity and PPV and moderate specificity and NPV. Medicare FFS claims had the best consistency with the cancer registry, whereas private claims, which included claims from multiple private payers, had the worst consistency. Consistency was lower among younger patients, privately insured patients, and those with more advanced disease.

APPENDIX

TABLE A1.

Procedure Codes for Radiation Therapy Delivery

TABLE A2.

Previously Published Studies Assessing Agreement in Receipt of Radiation Therapy Between Cancer Registry and Claims Database

PRIOR PRESENTATION

Presented in part at the ASCO 2022 Annual Meeting, June 3-7, 2022, Chicago, IL.

SUPPORT

C.L. was supported by the National Center for Advancing Translational Sciences of the National Institutes of Health under Award No. UL1 TR003107-02S2. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. Support for the data has been provided in part by the Arkansas Biosciences Institute, the major research component of the Arkansas Tobacco Settlement Proceeds Act of 2000.

AUTHOR CONTRIBUTIONS

Conception and design: Chenghui Li, Laura F. Hutchins

Collection and assembly of data: Chenghui Li, Laura F. Hutchins

Data analysis and interpretation: All authors

Manuscript writing: All authors

Final approval of manuscript: All authors

Accountable for all aspects of the work: All authors

AUTHORS' DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

The following represents disclosure information provided by authors of this manuscript. All relationships are considered compensated unless otherwise noted. Relationships are self-held unless noted. I = Immediate Family Member, Inst = My Institution. Relationships may not relate to the subject matter of this manuscript. For more information about ASCO's conflict of interest policy, please refer to www.asco.org/rwc or ascopubs.org/cci/author-center.

Open Payments is a public database containing information reported by companies about payments made to US-licensed physicians (Open Payments).

Chenghui Li

Research Funding: AstraZeneca (Inst)

John T. Guire

Employment: UAMS

Laura F. Hutchins

Research Funding: Abbott/AbbVie (Inst), Celldex (Inst), Amgen (Inst), Bristol Myers Squibb (Inst), Polynoma (Inst)

No other potential conflicts of interest were reported.

REFERENCES

- 1.All-Payer Claims Databases. Rockville, MD: Agency for Healthcare Research and Quality; https://www.ahrq.gov/data/apcd/index.htmlhttps://www.ahrq.gov/data/apcd/index.html . [Google Scholar]

- 2.Introduction: Inventory and Prioritization of Measures to Support the Growing Effort in Transparency Using All-Payer Claims Databases. Rockville, MD: Agency for Healthcare Research and Quality; https://www.ahrq.gov/data/apcd/backgroundrpt/intro.html . [Google Scholar]

- 3.McCarthy D. The Commonwealth Fund Report. 2020. State All-Payer Claims Databases: Tools for Improving Health Care Value, Part 1 — How States Establish an APCD and Make It Functional. [Google Scholar]

- 4.Reviewing the Landscape of All-Payer Claims Databases. Rockville, MD: Agency for Healthcare Research and Quality; https://www.ahrq.gov/data/apcd/backgroundrpt/review.html . [Google Scholar]

- 5. Hashibe M, Ou JY, Herget K, et al. Feasibility of capturing cancer treatment data in the Utah All-Payer Claims Database. JCO Clin Cancer Inform. 2019;3:1–10. doi: 10.1200/CCI.19.00027. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Fisher B, Redmond C, Poisson R, et al. Eight-year results of a randomized clinical trial comparing total mastectomy and lumpectomy with or without irradiation in the treatment of breast cancer. N Engl J Med. 1989;320:822–828. doi: 10.1056/NEJM198903303201302. [DOI] [PubMed] [Google Scholar]

- 7. Veronesi U, Cascinelli N, Mariani L, et al. Twenty-year follow-up of a randomized study comparing breast-conserving surgery with radical mastectomy for early breast cancer. N Engl J Med. 2002;347:1227–1232. doi: 10.1056/NEJMoa020989. [DOI] [PubMed] [Google Scholar]

- 8. Wang J, Deng JP, Sun JY, et al. Noninferior outcome after breast-conserving treatment compared to mastectomy in breast cancer patients with four or more positive lymph nodes. Front Oncol. 2019;9:143. doi: 10.3389/fonc.2019.00143. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Vila J, Gandini S, Gentilini O. Overall survival according to type of surgery in young (≤40 years) early breast cancer patients: A systematic meta-analysis comparing breast-conserving surgery versus mastectomy. Breast. 2015;24:175–181. doi: 10.1016/j.breast.2015.02.002. [DOI] [PubMed] [Google Scholar]

- 10. Ye JC, Yan W, Christos PJ, et al. Equivalent survival with mastectomy or breast-conserving surgery plus radiation in young women aged < 40 years with early-stage breast cancer: A national registry-based stage-by-stage comparison. Clin Breast Cancer. 2015;15:390–397. doi: 10.1016/j.clbc.2015.03.012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Salerno KE. NCCN guidelines update: Evolving radiation therapy recommendations for breast cancer. J Natl Compr Canc Netw. 2017;15:682–684. doi: 10.6004/jnccn.2017.0072.

- 12. Buchholz TA, Theriault RL, Niland JC, et al. The use of radiation as a component of breast conservation therapy in National Comprehensive Cancer Network Centers. J Clin Oncol. 2006;24:361–369. doi: 10.1200/JCO.2005.02.3127.

- 13. ACHI: Arkansas Center for Health Improvement. https://achi.net/

- 14. Money K. Using APCD Unique IDs for Data Linkage. Arkansas Center for Health Improvement; 2018. https://www.nahdo.org/sites/default/files/Kenley%20Money%20%20NAHDO%20AR%20APCD%20Unique%20id%20presentation%2020181003%20FINAL.pdf

- 15. Walker GV, Giordano SH, Williams M, et al. Muddy water? Variation in reporting receipt of breast cancer radiation therapy by population-based tumor registries. Int J Radiat Oncol Biol Phys. 2013;86:686–693. doi: 10.1016/j.ijrobp.2013.03.016.

- 16. Noone AM, Lund JL, Mariotto A, et al. Comparison of SEER treatment data with Medicare claims. Med Care. 2016;54:e55–e64. doi: 10.1097/MLR.0000000000000073.

- 17. Facility Oncology Registry Data Standard (FORDS) for 2016. American College of Surgeons; 2016. https://www.facs.org/media/r5rl5scw/fords-2016.pdf

- 18. Ingram DD, Franco SJ. 2013 NCHS urban–rural classification scheme for counties. National Center for Health Statistics. Vital Health Stat 2. 2014:1–73.

- 19. Du X, Freeman JL, Goodwin JS. Information on radiation treatment in patients with breast cancer: The advantages of the linked Medicare and SEER data: Surveillance, Epidemiology and End Results. J Clin Epidemiol. 1999;52:463–470. doi: 10.1016/s0895-4356(99)00011-6.

- 20. Cohen J. A coefficient of agreement for nominal scales. Educ Psychol Meas. 1960;20:37–46.

- 21. McHugh ML. Interrater reliability: The kappa statistic. Biochem Med (Zagreb) 2012;22:276–282.

- 22. Perraillon MC, Liang R, Sabik LM, et al. The role of all-payer claims databases to expand central cancer registries: Experience from Colorado. Health Serv Res. 2022;57:703–711. doi: 10.1111/1475-6773.13901.

- 23. Doebbeling BN, Wyant DK, McCoy KD, et al. Linked insurance-tumor registry database for health services research. Med Care. 1999;37:1105–1115. doi: 10.1097/00005650-199911000-00003.

- 24. Hillner BE, McDonald MK, Penberthy L, et al. Measuring standards of care for early breast cancer in an insured population. J Clin Oncol. 1997;15:1401–1408. doi: 10.1200/JCO.1997.15.4.1401.

- 25. Cooper GS, Yuan Z, Stange KC, et al. Agreement of Medicare claims and tumor registry data for assessment of cancer-related treatment. Med Care. 2000;38:411–421. doi: 10.1097/00005650-200004000-00008.

- 26. Virnig BA, Warren JL, Cooper GS, et al. Studying radiation therapy using SEER-Medicare-linked data. Med Care. 2002;40(8 suppl):IV-49–IV-54. doi: 10.1097/00005650-200208001-00007.

- 27. Jagsi R, Abrahamse P, Hawley ST, et al. Underascertainment of radiotherapy receipt in Surveillance, Epidemiology, and End Results registry data. Cancer. 2012;118:333–341. doi: 10.1002/cncr.26295.

- 28. Gomez DR, Liao KP, Swisher SG, et al. Time to treatment as a quality metric in lung cancer: Staging studies, time to treatment, and patient survival. Radiother Oncol. 2015;115:257–263. doi: 10.1016/j.radonc.2015.04.010.

- 29. Nadpara P, Madhavan SS, Tworek C. Guideline-concordant timely lung cancer care and prognosis among elderly patients in the United States: A population-based study. Cancer Epidemiol. 2015;39:1136–1144. doi: 10.1016/j.canep.2015.06.005.

- 30. Onukwugha E, Yong C, Naslund M, et al. Specialist visits and initiation of cancer-directed treatment among a large cohort of men diagnosed with prostate cancer. Urol Oncol. 2017;35:150.e17–150.e23. doi: 10.1016/j.urolonc.2016.11.012.

- 31. Stokes WA, Hendrix LH, Royce TJ, et al. Racial differences in time from prostate cancer diagnosis to treatment initiation: A population-based study. Cancer. 2013;119:2486–2493. doi: 10.1002/cncr.27975.

- 32. Jabo B, Lin AC, Aljehani MA, et al. Impact of breast reconstruction on time to definitive surgical treatment, adjuvant therapy, and breast cancer outcomes. Ann Surg Oncol. 2018;25:3096–3105. doi: 10.1245/s10434-018-6663-7.

- 33. Bui A, Yang L, Myint A, et al. Race, ethnicity, and socioeconomic status are associated with prolonged time to treatment after a diagnosis of colorectal cancer: A large population-based study. Gastroenterology. 2021;160:1394–1396.e3. doi: 10.1053/j.gastro.2020.10.010.