Abstract

The analysis of omic data depends on machine‐readable information about protein interactions, modifications, and activities as found in protein interaction networks, databases of post‐translational modifications, and curated models of gene and protein function. These resources typically depend heavily on human curation. Natural language processing systems that read the primary literature have the potential to substantially extend knowledge resources while reducing the burden on human curators. However, machine‐reading systems are limited by high error rates and commonly generate fragmentary and redundant information. Here, we describe an approach to precisely assemble molecular mechanisms at scale using multiple natural language processing systems and the Integrated Network and Dynamical Reasoning Assembler (INDRA). INDRA identifies full and partial overlaps in information extracted from published papers and pathway databases, uses predictive models to improve the reliability of machine reading, and thereby assembles individual pieces of information into non‐redundant and broadly usable mechanistic knowledge. Using INDRA to create high‐quality corpora of causal knowledge we show it is possible to extend protein–protein interaction databases and explain co‐dependencies in the Cancer Dependency Map.

Keywords: curation, databases, modeling, networks, text mining

Subject Categories: Computational Biology, Methods & Resources

INDRA assembles molecular mechanisms from text mining and databases by normalizing entities, resolving redundancies and estimating technical reliability. These mechanisms can be used to extend existing databases and generate mechanistic explanations for high‐throughput experimental data.

Introduction

Molecular biology is characterized by a sustained effort to acquire and organize mechanistic information about the molecules governing the behavior of cells, tissues, and organisms (Craver & Darden, 2013). “Mechanism” is used rather loosely in this context since it operates on multiple scales from the structural transitions of individual molecules to the myriad interactions mediating signal transduction or tissue morphology, but it is generally understood to involve a description of the properties, modifications, and behaviors of biomolecules in terms of physical and chemical principles. Individual mechanistic discoveries are reported in the biomedical literature, which, with over 3·107 articles indexed in PubMed as of 2022, constitutes a substantial public investment in science and an essential source of knowledge. However, results in research papers are generally described in natural language designed for human—not machine—consumption. As the literature has grown, and methods of experimental data collection become more diverse, it has become increasingly difficult for any individual scientist to acquire all of the background knowledge necessary to be an expert in a particular problem and fully interpret complex experimental results (Forscher, 1963). Biomedicine is therefore faced with a substantial problem of knowledge aggregation, harmonization, and assembly.

The bioinformatics community has actively worked to make knowledge more accessible by curating information about molecular mechanisms in a machine‐readable form suitable for computational data analysis (Ashburner et al, 2000; Schaefer et al, 2009; Perfetto et al, 2016; Fabregat et al, 2018). This has led to the creation of standard representation languages (Hucka et al, 2003; Demir et al, 2010), and databases that aggregate curated knowledge from multiple primary sources (Jensen et al, 2009; Cerami et al, 2011; Türei et al, 2016). Curated databases form the backbone of many widely used methods of high‐throughput data analysis, including gene set and pathway enrichment, and prior knowledge‐guided network inference (Babur et al, 2021; Dugourd et al, 2021). However, the creation of these databases has largely involved human curation of the literature, which is costly and difficult to sustain (Bourne et al, 2015). As a result, most databases and online resources are incomplete; for example, the creators of Pathway Commons (which aggregates pathway knowledge from 22 primary human‐curated databases) have estimated that their resource covers only 1–3% of the available literature (Valenzuela‐Escárcega et al, 2018). At the same time, databases such as Pathway Commons contain redundant or conflicting information about the same sets of mechanisms because assembling knowledge into a coherent whole is difficult and is dependent on human expertise and curation. Compounding these difficulties is the increasing volume of published scientific articles, which makes ongoing maintenance of a previously created resource necessary to prevent obsolescence; the fact that curation standards and languages evolve along with methods of data collection and analysis further complicates the task of knowledge assembly.

Automated extraction of mechanistic information through literature mining (using natural language processing) has the potential to address many of the challenges associated with manual curation (Ananiadou et al, 2015). However, the precision of machine reading systems remains lower than that of human curators, particularly for complex relationships and the subtle language in specific statements about the mechanism (Allen et al, 2015; Islamaj Doğan et al, 2019; Madan et al, 2019). Nevertheless, at the current state of the art, machine reading can extract simple relations (e.g., post‐translational modifications and binding and regulatory events) at the literature scale (i.e., from a substantial fraction of the body of 3·107 biomedical publications currently available). To accomplish this, a variety of text mining systems have been developed, each with different designs, strengths, and weaknesses. Common steps in these systems include grammatical parsing of sentences, named entity recognition and normalization, also called grounding (i.e., associating entities with a standardized identifier in controlled vocabularies such as HGNC), and event extraction (identifying interactions, transformations, or regulation involving grounded entities). Much of the research in text mining for biology to date has focused on small‐scale studies for method validation, but a handful of efforts have aimed to create large‐scale resources available for use in data analysis by the broader computational biology community (Yuryev et al, 2006; Van Landeghem et al, 2013).

A key requirement for the broader use of text mining in biological data analysis is overcoming the relatively low technical precision of current systems. One way to mitigate the effect of text mining errors is to filter out low‐confidence extractions based on reliability estimates. General reliability estimates can be derived a priori from the published precision scores for specific text mining systems (e.g., Torii et al, 2015; Valenzuela‐Escárcega et al, 2018), but these figures do not account for the fact that error rates can differ substantially for different types of information or sentence structures. An alternative approach is to cross‐reference text‐mined information against previously curated databases (Holtzapple et al, 2020) which yields high‐confidence interactions at the expense of the breadth provided by text mining. For single reading systems, redundancy among extractions (i.e., extracting the same information repeatedly from different spans of text) has been shown to associate positively with reliability (Valenzuela‐Escárcega et al, 2018) but this has not as yet been quantitatively characterized or used to derive reliability scores. In principle, the integration of multiple distinct reading systems with different types and rates of error could provide the information needed to estimate interaction reliability but this has not been previously explored.

Overall, what is still needed are computational tools for the large‐scale assembly of both text‐mined and curated mechanisms in databases to generate knowledge resources with mechanistic detail and genome scale. Human‐generated resources such as Reactome (Fabregat et al, 2018) aspire to this but would benefit in scope and currency from human‐in‐the‐loop collaboration with machines. To accomplish this, machine assembly must overcome challenges associated with combining noisy information about mechanisms at different levels of specificity in the face of the technical errors in grounding and event extraction mentioned above. Users of the resulting knowledge will often have different end goals but still need reliable networks and models. Particularly challenging is the assembly of information that can be used to investigate specific mechanisms at the level of the individual reactions, mutations, or drug‐binding events—something currently possible on a smaller scale using dynamical systems analysis (Lopez et al, 2013) and logic‐based modeling (Saez‐Rodriguez et al, 2009). These more mechanistic networks and models contrast with existing genome‐scale networks that commonly involve unsigned node‐edge graphs that aggregate diverse types of interactions (genetic, physical, co‐localization, etc.) using the simplest possible abstraction.

We previously described a software system, the Integrated Network and Dynamical Reasoning Assembler (INDRA), able to read simplified declarative language and create relatively small mechanistic models that could be executed using dynamical, logic‐based, or causal formalisms (Gyori et al, 2017). This version of INDRA could, for example, convert “word models” such as “Active ATM activates p53. Active p53 transcribes MDM2, etc.” into dynamical ODE‐based models. INDRA accomplishes this using an intermediate representation to decouple the process of knowledge collection from the construction of specific models. More specifically, INDRA normalizes mechanistic information expressed in natural (English) language into a high‐level intermediate machine representation called Statements. Statements can then be used directly to create executable models, for example in rule‐based languages such as BioNetGen or PySB. The current taxonomy of INDRA Statements accounts for the types of biomolecular processes most commonly involved in intracellular biological networks and signal transduction (e.g., post‐translational modifications, positive and negative regulation, binding, and transcriptional regulation) but is extensible to other domains of natural science.

Here, we describe a major extension of the INDRA architecture that allows it to tackle the harder and more generally applicable problem of assembling mechanistic information extracted from the primary research literature at scale (hundreds of thousands of publications). This task presents a set of challenges that are very different from those encountered when converting declarative language into ODE models (Gyori et al, 2017). We accomplished reading at scale by combining the results of multiple reading systems with curated mechanisms from a wide range of databases and structured knowledge sources. Used in this way, INDRA identifies duplicate and partially overlapping Statements, allowing for the automated assembly of mechanistic fragments into a nonredundant and coherent set of interactions and subsequently into large‐scale knowledge assemblies for use in biocuration and data analysis. We illustrate these capabilities of end‐to‐end assembly in INDRA by processing publications and databases relevant to human genomics to create a corpus of ~900,000 unique and specified interactions and regulations among human proteins. We found that the overlap between different machine reading systems was surprisingly small (highlighting both the readers' complementarity and their limitations), but for a given INDRA Statement, the existence of supportive evidence from multiple reading systems was informative of reliability. We used manual curation to quantify the technical error and overlap characteristics of different machine reading systems and then developed predictive models that estimate the reliability of text‐mined extractions in the form of a “belief score.” Finally, to evaluate the utility of machine‐extracted mechanisms, we used the INDRA‐assembled corpus of Statements to prioritize the curation of protein–protein interactions (PPIs) that are not yet captured in the widely used structured knowledgebase, BioGRID (Oughtred et al, 2019). We then used the same assembled corpus to identify and explain gene dependency relationships in the Cancer Dependency Map (DepMap) dataset (Meyers et al, 2017; Tsherniak et al, 2017). In this case, an INDRA‐assembled network helped determine statistically significant codependencies between genes, thus allowing for the detection of new codependencies in cancer. INDRA also provided possible mechanistic explanations rooted in the scientific literature for observed DepMap codependencies.

Results

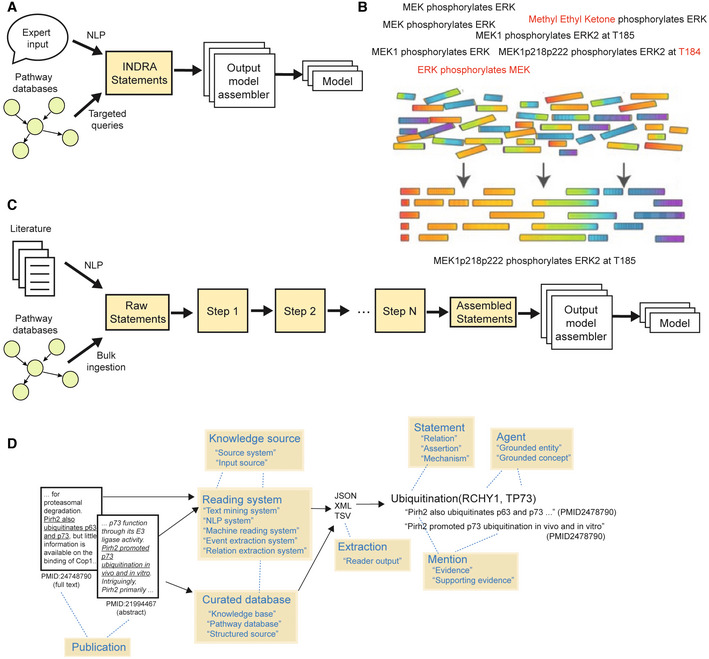

Automated assembly of large knowledgebases from curated databases and machine reading systems raises a series of interconnected, conceptual issues not arising in the conversion of simple declarative natural language into machine readable mechanisms, a problem we previously tackled using the INDRA architecture shown in Fig 1A (Gyori et al, 2017). In particular, each source of information yields many mechanistic fragments that capture only a subset of the underlying process, often at different levels of abstraction. For example, one source might describe the MEK1 (HUGO name MAP2K1) phosphorylation of ERK2 (MAPK1) on a specific threonine residue (T185), whereas another source might describe the same process at the protein family level, stating that MEK phosphorylates ERK, without mentioning a specific isoform, residue or site position (Fig 1B). Individual mechanisms obtained from machine reading are not only fragmented but they also include different types of technical errors that must be overcome (Fig 1B, red font). One familiar analogy to the process of assembling mechanistic fragments into useful knowledge is the assembly of a genome sequence from many noisy, overlapping sequencing reads (Fig 1B). The goal of knowledge assembly is similarly to achieve the best “consensus” representation of the underlying processes, incorporating as much mechanistic detail as possible while minimizing errors. Ultimately, the process is expected to yield computational approaches for finding truly missing or discrepant information, by analogy with variant calling.

Figure 1. Conceptual overview of knowledge assembly.

- Assembly of models from diverse knowledge sources. Structured (pathway databases) and unstructured (literature, expert input in natural language) biological knowledge is converted into machine‐readable, mechanistic fragments. These fragments must be assembled into a coherent corpus before the generation of specific models for data analysis.

- Mechanistic “fragments” capture incomplete but overlapping aspects of an underlying molecular mechanism (here, the phosphorylation of ERK by MEK). Fragments may also contain errors (highlighted in red). Assembly involves identifying relationships between fragments to arrive at a consensus representation that captures available information.

- Artifacts involved in the collection of mechanisms from knowledge sources by INDRA, and their representation as INDRA Statements. Yellow boxes show key terminology used to refer to different artifacts with additional synonyms provided in quotes.

- INDRA knowledge assembly transforms raw statements into assembled statements from which models can be generated. The individual steps of the assembly pipeline (Steps 1 to N, yellow background) operate on INDRA Statements and are configurable from a library of built‐in or user‐defined functions.

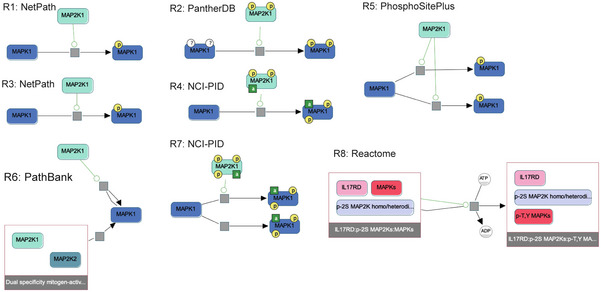

When attempting to scale the process of assembly from curated natural language to scientific publications, we identified multiple technical and conceptual problems that needed to be addressed to assemble coherent knowledge at scale. These included (i) inconsistent use of identifiers for biological entities among different sources, (ii) full or partial redundancy between representations of the same mechanisms, and (iii) technical errors in named entity recognition and relation extraction. Such problems are particularly salient when integrating literature‐mined interactions, but they also exist when aggregating interactions from multiple curated databases, due to differences in curation practices. For example, in Pathway Commons v12 there are at least eight different curated representations of the process by which MAP2K1 phosphorylates MAPK1, each at a different level of detail (Fig EV1A). We developed a set of INDRA algorithms for addressing each of these assembly challenges. These algorithms are general‐purpose and can be configured into custom assembly pipelines (Fig 1C) to support a wide range of modeling applications, as illustrated in the following examples of machine reading, assembly, and data analysis (Box 1).

Figure EV1. Differences in curation practices across databases integrated by Pathway Commons.

A subgraph of the “paths‐from‐to” query between MAP2K1 and MAPK1 obtained from Pathway Commons and visualized using the ChiBE software (Babur et al, 2009). Each biochemical reaction (R1–R8) depicts a different curation of the same reaction in which MAP2K1 phosphorylates MAPK1. The original source database (e.g., NetPath) is shown next to each reaction. Inconsistencies include (i) the reaction structure itself, with some specifying a single step phosphorylation of two sites (e.g., R4) while others specify single‐site phosphorylation (e.g., R1), or the explicit representation of ADP and ATP as part of the reaction (R8); (ii) the phosphorylation status of MAP2K1, with no phosphorylation status given in R1, R3, R5, R6, and R8, two phosphorylation sites indicated in R2, R4, and three phosphorylation sites in R7; (iii) the initial state of MAPK1, with R2 explicitly indicating unphosphorylated status, while other reactions do not make this explicit; (iv) the final state of MAPK1, with some reactions representing MAPK1 phosphorylation on an unspecified site (R1 and R3), and others providing specific phosphorylation sites (e.g., R2); (v) the specification of active states, with R4 being the only reaction representing MAP2K1 explicitly as active, while R4 and R7 are the only reactions specifying that MAPK1 is active after phosphorylation; and (vi) the presence of other co‐factors such as IL17RD (R8) as part of the reaction.

Box 1. Representing knowledge captured from multiple sources in INDRA (Fig 1D).

Scientific publications contain descriptions of mechanisms (interaction, regulation, etc.) involving biological entities. These descriptions can be extracted either by human experts and stored in curated databases or by automated reading systems using natural language processing. Collectively, reading systems and curated databases serve as knowledge sources for INDRA. Extractions from knowledge sources are made available to INDRA in a variety of custom machine‐readable formats such as JSON, XML, and TSV. INDRA processes such extractions into a standardized representation, a set of INDRA Statements. Each Statement represents a type of mechanism (e.g., Ubiquitination), and has multiple elements, including Agents representing biological entities such as proteins or small molecules, and potentially also mechanistic detail such as an amino acid residue for a modification. Each Statement can be supported by one or more mentions, each representing a single curated database entry or a single extraction by a reading system from a sentence in a given publication. Mentions are represented by INDRA as Evidence objects that have a multitude of properties representing rich provenance for each mention, including the source sentence and the identifiers of the source publication.

INDRA integrates mechanisms from pathway databases and machine reading

We used six machine reading systems, Reach (Valenzuela‐Escárcega et al, 2018), Sparser (McDonald et al, 2016), MedScan (Novichkova et al, 2003), TRIPS/DRUM (Allen et al, 2015), RLIMS‐P (Torii et al, 2015), and the ISI/AMR system (Garg et al, 2016) to process 567,507 articles (using full‐text content when available, and allowed by copyright restrictions, and abstracts otherwise; Table 1) curated as having relevance to human protein function (see the “Article corpus for event extraction” section of Materials and Methods). Reader output was normalized to INDRA Statements (see “INDRA Statement representation” in Materials and Methods), yielding ~5.9·106 unassembled or “raw” Statements (Fig 2A). Readers differed in the types of relations they extracted: Reach, Sparser, MedScan, and TRIPS/DRUM produced a multitude of different INDRA Statement types (each between 19 and 28 different types, depending on the reader) while RLIMS‐P is limited to extracting Phosphorylation Statements, and ISI/AMR to Complex Statements. Overall, readers extracted 31 different Statement types (Table EV1). These were combined with approximately 7.3·105 INDRA Statements extracted from structured sources such as Pathway Commons and the BEL Large Corpus; this used previously described extraction logic (a means of converting structured information of different types into INDRA Statements; Gyori et al, 2017) but extended to multiple additional sources including SIGNOR (Perfetto et al, 2016). In combination, reading and databases yielded a total of ~6.7·106 raw Statements. We then processed these raw Statements using an assembly process as described below and illustrated schematically in Fig 2A. In what follows, we refer to the resulting set of assembled INDRA Statements as the INDRA Benchmark Corpus.

Table 1.

Distribution of content types for literature corpus.

| Content type | Count | Percentage |

|---|---|---|

| PubMed abstract | 384,628 | 67.8% |

| Elsevier | 81,567 | 14.4% |

| PMC open access | 74,654 | 13.2% |

| PMC author's manuscript | 25,950 | 4.6% |

| Missing | 707 | 0.1% |

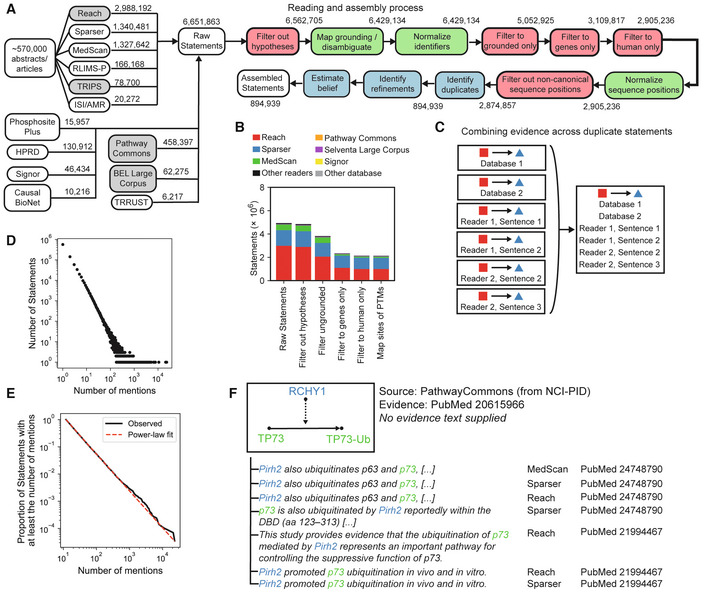

Figure 2. The INDRA knowledge assembly pipeline was used to create a Benchmark Corpus.

- The INDRA assembly pipeline for the Benchmark Corpus. The pipeline starts with ~570 thousand publications processed by multiple reading systems, as well as structured database sources including Pathway Commons and SIGNOR. Raw Statements extracted from these sources proceed through filtering (red), normalization (green), and assembly (blue) steps. Gray shading on input modules indicates modules that were originally introduced in Gyori et al (2017).

- Number of INDRA Statements, by source, at key stages of the assembly pipeline shown in panel (A).

- Combining duplicate Statements. INDRA identifies raw Statements that are identical and creates a single unique Statement with all of the associated mentions.

- Distribution of mention counts (including both mentions in text and database entries) across all Statements in the Benchmark Corpus. Each point in the scatterplot represents the number of Statements with a given number of mentions.

- Complement cumulative distribution of Statements as a function of the number of mentions supporting them (black) and the maximum likelihood estimate of a power‐law fit to the distribution (red).

- Assembly of Statements enriches curated mechanisms in pathway databases with literature evidence from text mining. Here, a reaction in Pathway Commons represents the ubiquitination of TP73 (p73) by the ubiquitin ligase RCHY1 (Pirh2). Reach, Sparser, and MedScan each extract statements matching the one from Pathway Commons and provide references to PubMed identifiers and specific evidence sentences as provenance.

After collecting information from each source, a pipeline involving a series of normalization and filtering procedures was applied (green and red boxes, respectively, in Fig 2A). These processing steps are also available as individual and reusable software modules in INDRA. First, we removed Statements that were supported by mentions indicative of a hypothesis rather than an assertion (for instance, including sentences phrased as “we tested whether…”). Next, “grounding mapping” was performed to correct systematic errors in named entity normalization, which often arise due to the ambiguity of biomedical naming conventions. INDRA integrates a manually curated mapping table that fixes those entities frequently misidentified by reading systems (described in detail in Bachman et al (2018)) and a set of machine‐learned models that perform disambiguation based on text context (by integrating the Adeft (Steppi et al, 2020) and Gilda (Gyori et al, 2022) systems). “ER” is an example of a common but ambiguous entity: it can stand for endoplasmic reticulum, estrogen receptor, estradiol receptor, emergency room, and a variety of other entities and concepts depending on context. As currently implemented, Reach, Sparser, and other reading systems ground “ER” deterministically to a single identifier (e.g., estrogen receptor) irrespective of context. In contrast, the machine‐learned disambiguation models integrated into INDRA predict the most likely meaning of entities such as ER based on surrounding text; this is then used to correct the results of text reading systems.

The next step of the grounding mapping process normalizes identifiers for individual entities using a network of cross‐references between equivalent identifiers in different namespaces (Fig EV2A). This addresses the opposite problem from the one described above (i.e., one name corresponding to multiple entities), namely that a single entity can have multiple identifiers in different namespaces, and these identifiers can be assigned inconsistently across machine reading systems and curated database sources. For example, a metabolite such as prostaglandin E‐2 identified using a Chemical Entities of Biological Interest identifier (ChEBI; Hastings et al, 2016) will be assigned additional equivalent identifiers and a standard name so that it has the same canonical form as an equivalent metabolite identified using an NCBI Medical Subject Heading identifier (MESH; Fig EV2A and B). This procedure ensures that Agents in INDRA Statements take on canonical identifiers in multiple namespaces, irrespective of the identifier used in the original source of knowledge.

Figure EV2. Ontology graph guiding INDRA knowledge assembly.

- A subgraph of the INDRA ontology graph showing the neighborhood of the node representing “prostaglandin E2” in the ChEBI database (CHEBI: 15551). Edges represent “isa” relationships to more general terms (and from more specific terms), and “xref” edges represent identifier equivalence to nodes representing entries in other databases including MeSH, DrugBank, ChEMBL, CAS, PubChem, and NCIT. Each ontology graph node also provides a name that can be used for standardization and display purposes.

- Example of three entities with inconsistent names and identifiers which, when standardized by INDRA using the ontology graph, are normalized to consistent entities with identical names and sets of identifiers.

After these filtering and correction steps were performed, 38% of Statements contained Agents with ungrounded entities; these were filtered out. In examining entity texts corresponding to Agents that were ungrounded and therefore filtered out, we found that the most commonly occurring unnormalized Agent texts were generic, high‐level terms such as “expression,” “activity,” “cytokine,” “growth,” and “signaling” consistent with our previous finding that ungrounded entities often correspond to generic concepts lacking context (Bachman et al, 2018). Because the current study focuses on biology involving human genes, we also filtered Statements to include only those containing human genes and their families or multi‐protein complexes. Each of these processing and filtering steps operates at the level of individual Statements and changes both the overall number of Statements and the proportion of Statements in the corpus from each input source, as shown in Fig 2B.

The final normalization procedure we performed was sequence position normalization. This accounts for inconsistencies in attributed sequence positions of post‐translational modifications, some of which involve outright errors in residue numbers, while others involve the implicit, interchangeable use of residue numbers between human and model organism reference sequences (preprint: Bachman et al, 2022). Human and mouse residue numbers are also used interchangeably in many papers even though residue numbering in orthologous proteins frequently differs. In all cases, a method for sequence normalization is necessary for accurate knowledge assembly. After normalization, we filtered out Statements containing non‐canonical sequence positions (about 1% of all Statements) as these likely arose from machine reading errors. This yielded a final corpus of ~2.9·106 filtered and normalized Statements.

We then used INDRA to combine Statements representing equivalent mechanisms from different sources into a single unique Statement; each unique Statement was associated with the supporting mentions from all contributing knowledge sources including curated databases and reading systems (Fig 2C). In some cases, multiple readers will have extracted the same mechanisms from the same sentence, but different reading systems often generated mentions supporting a specific Statement from different sentences in given publications or even from different publications (Fig 2C). This highlights the substantial differences between reading systems and the benefits of the multi‐reader approach used in this paper. For the Benchmark Corpus, ~2.9·106 filtered Statements yielded ~9·105 unique Statements after combining duplicates (Fig 2A), with an average of ~3 supporting mentions per Statement. However, the distribution of mentions per Statement was highly non‐uniform, with a large number of Statements (63%) attributable to a single sentence or database entry, and a small number of Statements (82 in total) having > 1,000 supporting mentions (Fig 2D). For example, the Statement that “TP53 binds MDM2” has 2,494 distinct pieces of evidence. Although the data are noisy for high mention counts, the distribution of mentions per Statement appears linear on a log–log plot (Fig 2D) implying a long‐tailed distribution potentially following a power law. To confirm this, we fitted the observed mention distribution using two approaches: (i) linear regression of the complement cumulative distribution of mention counts on a log scale, which showed a strong linear relationship (r 2 = 0.999, P < 10−17), and implied a power law exponent of α = 2.33 and (ii) fitting directly to a power law using the powerlaw software package (Alstott et al, 2014), which showed that the distribution was fit by a power law with exponent α = 2.38 (standard error σ = 0.008; Fig 2E) and was more likely than alternative distributions such as exponential (P < 10−38) or positive log‐normal (P < 10−30). Thus, the distribution of Statements having a given number of supporting mentions is similar to long‐tailed distributions observed in a variety of domains including linguistics, computer networking, and demographics (Clauset et al, 2009).

A significant benefit of jointly assembling mechanisms from both databases and literature is that curated interactions from databases become linked to textual evidence that supports the interaction (Fig 2F). For example, the fact that RCHY1 ubiquitinates TP73 appears as a curated interaction in the NCI‐PID database (Schaefer et al, 2009) with reference to PMID20615966 (Sayan et al, 2010), but without providing specific supporting text within that publication. In the Benchmark Corpus, INDRA aligns seven mentions obtained from text mining with the ubiquitination of TP73 by RCHY1 and these are derived from four sentences found in two more recent publications (Wu et al, 2011, 2; Coppari et al, 2014; Fig 2F). Such aggregation of evidence across curated databases and text‐mining systems is highly beneficial because it increases our confidence in the accuracy and relevance of a mechanism (Kemper et al, 2010). In these cases, INDRA, due to its automated nature, provides a substantial advantage for linking literature sources to specific interactions compared to comparable manual curation, which would be laborious and time consuming.

Detecting hierarchical relationships between mechanisms

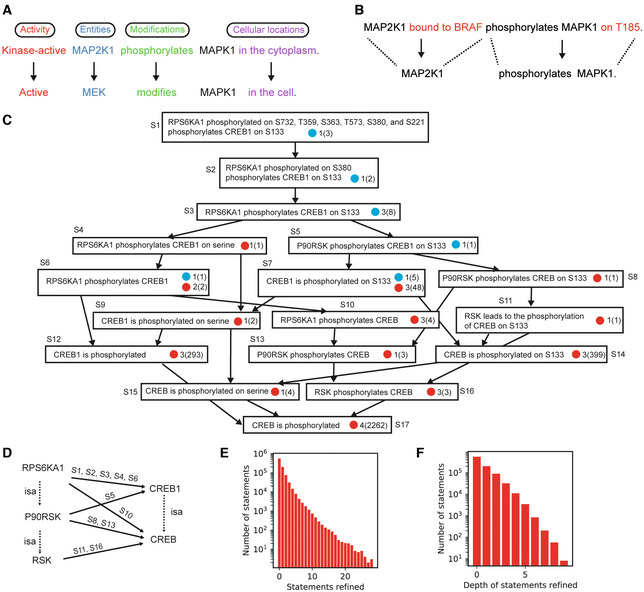

Following processing, filtering, and the identification of duplicate Statements it is necessary to identify relationships among “overlapping” Statements (Fig 3A). A pair of Statements is considered to be overlapping when one functions as a refinement (i.e., adds additional mechanistic detail) to the other. Although the analogy in this case is not perfect, something similar is required in genome assembly—if a shorter sequence is fully contained in a longer sequence, the shorter one is redundant. When such a relationship exists between two Statements, we say that the more detailed one “refines” the less detailed one. Refinement can happen at the level of entities (e.g., one Agent representing a protein family and another Agent a specific member of that family), or molecular states and context (e.g., an explicit reference to a site of post‐translational modification in one Statement and its omission in another). The refinement relationship between Statements is determined using a partial ordering logic that compares pairs of Statements based on their individual elements (where elements include the Agents involved in the Statement, and, depending on the type of Statement, post‐translational modifications, cellular locations, types of molecular activity, etc.) and determines whether each element is either equivalent to or a refinement of the other (Fig 3A). To accomplish this, INDRA makes use of hierarchies of each relevant type of element, including proteins and their families and complexes drawn from FamPlex (Bachman et al, 2018), combined with chemical and bioprocess taxonomies from ChEBI and the Gene Ontology (Ashburner et al, 2000; e.g., MAP2K1 is a specific gene in the MEK family, Fig 3A, blue), protein activity types (e.g., kinase activity is a specific type of molecular activity, Fig 3A, red), post‐translational modifications (e.g., phosphorylation is a type of modification, Fig 3A, green), and cellular locations (also obtained from the Gene Ontology; e.g., that the cytoplasm is a compartment of the cell, Fig 3A, purple). A Statement is also considered a refinement of another if it contains additional contextual details but is otherwise a match across corresponding elements. One example of such a refinement relationship is shown in Fig 3B, in which the first Statement (Fig 3B, top) describes an additional molecular state (MAP2K1 being bound to BRAF) and mechanistic detail (T185 as the specific site of modification of MAPK1) over another Statement (Fig 3B, bottom) which omits these contextual details.

Figure 3. Identifying refinement relationships among Statements.

- Refinement by hierarchies of Statement elements as defined by INDRA. The two Statements shown contain the same number and types of information but all elements in the top Statement are refinements of the corresponding elements in the bottom Statement according to the INDRA Statement hierarchies.

- Refinement by additional context. The upper Statement contains all information in the lower one but also provides additional detail, making it a refinement of the one below.

- Example refinement graph for a Statement from the example corpus. For clarity, the transitive reduction of the hierarchy is shown, and each Statement object is displayed via its English language equivalent. Each node in the graph represents a statement with blue or red circles representing evidence from pathway databases or mentions extracted by machine reading systems, respectively. Next to each blue or red circle, the number of different sources is shown with the overall number of mentions from these sources in parentheses. For example, the statement “CREB1 is phosphorylated on S133” has five pieces of evidence from one pathway database source, and 48 mentions extracted by three reading systems. Edges represent refinement relationships and point from more specific to less specific Statements.

- Graph of family relationships (dotted isa edges) and Statements representing phosphorylation (solid edges, annotated with Statement identifiers from panel C), between different levels of specificity of the RSK and CREB protein families.

- Number of Statements based on the total number of other Statements that they refine.

- Number of Statements with different depths of Statements that they refine (i.e., the length of the longest path in the graph of refinement relations starting with the given Statement).

Pairwise refinement of relationships among Statements is most easily represented using a graph in which nodes represent Statements and directed edges point from a node representing a Statement to another node representing the Statement that it refines. Such Statement refinement graphs can be quite deep (i.e., the length of a directed path starting from a Statement can consist of a large number of edges going through many refined Statements). For example, the refinement subgraph for RPS6KA1 phosphorylated on S732, T359, S363, T573, S380, and S221 phosphorylates CREB1 on S133 (Fig 3C, where RPS6KA1 encodes the ribosomal S6 kinase and CREB1 a transcription factor) has nine levels. The refinement relationships for this Statement reveal the varying levels of specificity at which a given mechanism is described in sources: CREB is phosphorylated has 2,268 mentions in the literature collected by four reading systems, RPS6KA1 phosphorylates CREB1 has three mentions in total from both literature and curated databases, and CREB1 is phosphorylated on S133 has 399 mentions. It is also worth noting that support from curated databases for these Statements (Fig 3C, blue circles) is not attributable to a single database source. For example, the Statement labeled S1 in Fig 3C is derived only from Pathway Commons, S5 only from SIGNOR, and S7 only from HPRD (Mishra, 2006). Thus, human‐curated databases are individually incomplete and mutually inconsistent with respect to the way they report specific mechanisms and the literature they cite as supporting evidence.

Organizing Statements hierarchically helps to ensure that an assembled model does not contain information that is mechanistically redundant. For instance, when the Statements in Fig 3C are viewed as a graph with nodes representing entities (RPS6KA1, CREB1, etc.) and edges representing phosphorylation reactions (Fig 3D, solid arrows), five partially redundant edges can be identified (e.g., RPS6KA1 → CREB1, P90RSK → CREB1, and P90RKS → CREB) connecting members of the RSK and CREB protein families at different levels of specificity (e.g., P90RSK is a member of the RSK family, Fig 3D, dashed arrows). A key feature of INDRA is that it can recover Statement refinement relationships, enabling principled resolution of complex redundancies, for example, by retaining only Statements that are not refined by any other Statements (in the case of Fig 3C, the Statement labeled as S1 at the top of the graph). The refinement graph in Fig 3C also reveals how a highly specific Statement can serve as evidence for all the other Statements it subsumes, a relationship that is exploited when estimating Statement reliability.

We found that refinement relationships were common in the Benchmark Corpus: 38% of Statements refined at least one other Statement, and some Statements refined a large number of other Statements, including 89 Statements that refined at least 20 other Statements (Fig 3E). These Statements are typically ones that represent a canonical (i.e., often described) mechanism (e.g., the mechanism by which members of the AKT protein family phosphorylate GSK3 proteins) at a high level of detail and subsume multiple variants of the same mechanism described at a lower level of detail. We also found that the Benchmark Corpus contained tens of thousands of refinements involving three or more levels (Fig 3F), emphasizing that many mechanisms across databases and literature are described at many levels of specificity. INDRA assembly can reconstruct these relationships and allow resolving the corresponding redundancy.

Modeling the reliability of INDRA Statements with the help of a curated corpus

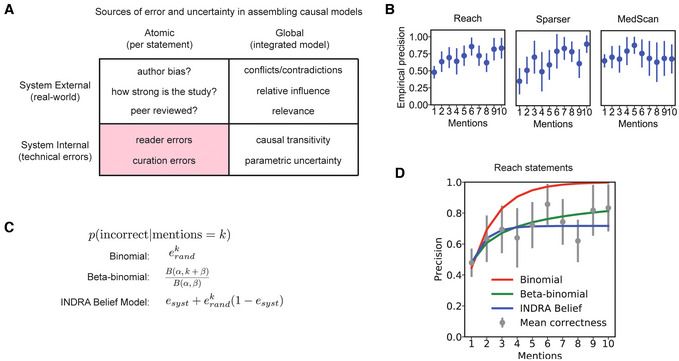

One of the most challenging problems in using mechanisms generated by text mining is the unknown reliability of the extracted information. While the notion of “reliability” includes conventional scientific concerns, such as the strength of the evidence supporting a particular finding or study (Fig 4A, upper left quadrant), in practice the overwhelming majority of incorrect assertions result from technical errors in machine reading (Fig 4A, lower left quadrant). Common reading errors include systematic misidentification of named entities, incorrect polarity assignment (e.g., classifying activation as inhibition), failure to recognize negative evidence (e.g., “A does not cause B"), and difficulty distinguishing hypotheses from assertions and conclusions (e.g., “we tested whether A causes B" as opposed to “A causes B"; Valenzuela‐Escárcega et al, 2018; Noriega‐Atala et al, 2019). These errors arise primarily because scientific texts use a wide range of non‐standard naming conventions to refer to genes, proteins, and other entities, as well as complex grammatical structures to convey the confidence associated with a result or data point. Indeed, much of the art in scientific writing is to generate text that appears to progress inexorably from a hypothesis to the description of supporting evidence to a conclusion and its caveats. This type of writing can be difficult even for humans to fully understand. However, addressing the technical errors of reading systems at the level of individual Statements is a prerequisite for addressing the additional issues that arise when Statements are combined into causal models (Fig 4A, right quadrants). Additional challenges with integrated models include dealing with contradictions between Statements, assessing the relative influence or relevance of multiple Statements in a given context, as well as issues surrounding causal transitivity across multiple Statements.

Figure 4. Estimating statement belief for a single machine reader.

- A classification of sources of error and uncertainty in assembling causal models. Sources are classified according to whether they are external or internal to the INDRA system, and whether they arise at the level of individual Statements (atomic) or an integrated network or model (global).

- Empirical precision of three reading systems based on the number of mentions supporting a given Statement extracted by that reader.

- Mathematical formulas for Statement correctness for three different Belief Models. Each model specifies the probability that a Statement is incorrect overall given that a specific number k of mentions support it from a given source. e rand : random error for the source; e syst : systematic error for the source; B(α, β): Beta function.

- Fits of the three belief models in (C) plotted against the empirical precision of Reach‐extracted Statements.

To study the reliability of our assembled Statements, we sampled a set of Statements from the Benchmark Corpus. The sampled Statements had between 1 and 10 mentions per Statement and arose from five reading systems (Reach, Sparser, MedScan, RLIMS‐P, and TRIPS; we excluded the ISI/AMR system from this analysis due to the low number of extractions it produced). Two of the authors, both of whom are PhD biomedical research scientists, used this to develop a Curated Corpus from the sampled Statements. Curation involved determining whether a given mention correctly supported a specific Statement based on human understanding of the sentence containing the mention and the overall context of the publication. Statements were sampled by mention count in a stratified manner to establish the relationship between mention count and reliability; high mention‐count Statements are therefore overrepresented relative to their baseline frequency in the Benchmark Corpus (see “Statement Curation” section of Materials and Methods). The resulting data set covers 1,800 Statements with a combined total of 6,022 unique mentions a subset of which (see Table 2 and “Statement Curation” section of Materials and Methods) was used to assess the individual technical reliability of reading systems.

Table 2.

Summary of Statement curation dataset for the purposes of single reader belief assessment.

| Reader | Mention curation | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Reach | Complete | 57 (119) | 26 (41) | 25 (36) | 16 (25) | 26 (36) | 24 (28) | 26 (35) | 31 (50) | 18 (22) | 20 (24) |

| Incomplete | 57 (119) | 26 (41) | 25 (36) | 16 (25) | 26 (36) | 24 (28) | 26 (36) | 31 (50) | 18 (22) | 20 (24) | |

| RLIMS‐P | Complete | 87 (109) | 24 (26) | 23 (25) | 10 (10) | 6 (6) | 6 (6) | 6 (6) | 6 (6) | 7 (7) | 25 (25) |

| Incomplete | 87 (109) | 24 (26) | 23 (25) | 10 (10) | 10 (10) | 11 (11) | 12 (12) | 11 (11) | 12 (12) | 25 (25) | |

| TRIPS | Complete | 158 (199) | 46 (51) | 28 (29) | 3 (3) | 7 (7) | 12 (12) | 24 (26) | 12 (13) | 9 (11) | 9 (9) |

| Incomplete | 158 (199) | 46 (51) | 28 (29) | 9 (11) | 10 (10) | 12 (12) | 24 (26) | 12 (13) | 9 (11) | 9 (10) | |

| Sparser | Complete | 9 (25) | 13 (25) | 9 (13) | 6 (12) | 11 (19) | 6 (8) | 16 (19) | 2 (3) | 3 (7) | 11 (12) |

| Incomplete | 9 (25) | 13 (25) | 10 (14) | 6 (12) | 12 (20) | 8 (10) | 16 (19) | 23 (29) | 13 (21) | 19 (21) | |

| MedScan | Complete | 63 (96) | 22 (31) | 4 (6) | 0 (0) | 2 (2) | 0 (0) | 0 (0) | 0 (0) | 0 (0) | 13 (19) |

| Incomplete | 63 (96) | 22 (31) | 15 (22) | 12 (15) | 23 (26) | 13 (17) | 9 (13) | 7 (11) | 9 (13) | 13 (19) |

Entries are formatted as “number correct (total curated).” Each column shows the number of mentions (between 1 and 10) supporting a given Statement in the curation dataset. Rows marked as “Complete” show counts only for Statements for which all mentions were curated while “Incomplete” also includes Statements where less than the total number of mentions was curated. Counts across readers are not unique; if a Statement has mentions from multiple readers, it is counted in multiple rows.

For a single reading system, the reliability of an extracted Statement has been observed to increase with the number of different supporting mentions (Valenzuela‐Escárcega et al, 2018). We hypothesized that a Statement with multiple mentions would be more reliable if the mentions had been independently extracted by more than one reading system. To test this idea, we used two complementary approaches to create models of Statement reliability: (i) structured probability models that build on empirical error characteristics of individual reading systems based on the Curated Corpus and (ii) machine learning (ML) models trained on the Curated Corpus. Structured probability models require much less training data, but, given sufficient training data, machine‐learned models are generally more expressive and likely to be more accurate in predicting Statement reliability.

Modeling the reliability of Statements from individual reading systems

We first examined the error characteristics of individual reading systems. For individual readers, analysis of the Curated Corpus showed that while Statements with more mentions are generally more reliable, in many cases Statements supported by many sentences were still incorrect due to the presence of systematic errors (Fig 4B). For example, the Sparser reading system extracted the Statement MAOA binds MAOB with 10 mentions from 10 different publications, but all extractions were incorrect because the system incorrectly interpreted “association” as referring to a physical interaction rather than a statistical association between MAOA and MAOB (monoamine oxidase A and B), which is what the original publications described. We compared three alternative probability models for their ability to capture the dependence of sentence reliability on mention count: (i) a simple binomial model, (ii) a beta‐binomial model (a binomial model in which the probability of success at each trial follows a beta distribution), and (iii) a two‐parameter model that captures both random and systematic errors—we termed this latter model the INDRA Belief Model (Fig 4C; see “The INDRA Belief Model” section of Materials and Methods). Parameters for each of the three models were estimated from the data from the Curated Corpus using Markov chain Monte–Carlo (MCMC; see “Parameter estimation for INDRA Belief, Binomial, and Beta‐Binomial Models” section of Materials and Methods). Both the beta‐binomial model and the INDRA Belief Model outperformed the binomial model at predicting Statement correctness from mention counts, primarily due to their ability to capture the empirical observation that even high‐mention Statements do not approach an accuracy of 100% (a phenomenon accounted for by modeling systematic reader errors; Fig 4D, Table 3). The INDRA Belief Model performed slightly better than the beta‐binomial model at predicting Statement correctness for both the Reach and Sparser reading systems (Table 3) due to its better fit to low mention‐count Statements (Fig 4D, mentions 1, 2, and 3). An additional advantage of the INDRA Belief Model is that the random and systematic error rates e rand and e syst are interpretable and can be estimated heuristically by examining a small number of high‐mention Statements (with precision approximately equal to e syst ) and 1‐mention Statements (with precision equal to e syst + (1 − e syst )e rand ). This makes it possible to set reasonable parameters for the INDRA Belief Model based on prior intuition or examination of a small number of exemplary Statements. Since the INDRA Belief Model performed the best overall, it is used as the default model in INDRA when no curation data are available. However, the beta‐binomial model more accurately fit the underlying distribution of correct mentions for each Statement, suggesting that further research is needed on such error models (Fig EV3).

Table 3.

Maximum likelihood values for alternative belief models using best‐fit parameters (lower values indicate a better fit).

| Model | Reach, −log(Max likelihood) | Sparser, −log(Max likelihood) | MedScan, −log(Max likelihood) |

|---|---|---|---|

| Binomial (1 param) | 375.4 | 104.7 | 150.1 |

| Beta‐binomial (2 params) | 260.2 | 90.7 | 97.4 |

| INDRA Belief Model (2 params) | 259.5 | 91.2 | 96.9 |

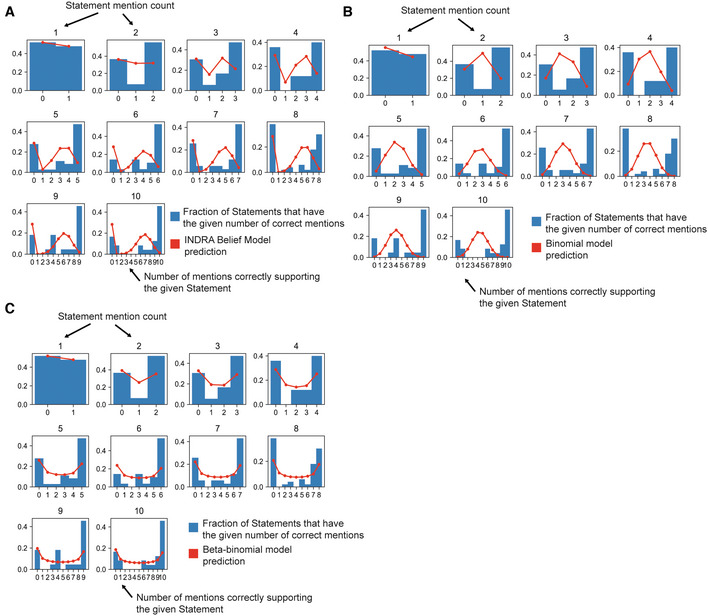

Figure EV3. Observed and predicted distributions of mentions correctly extracted by Reach for Statements supported by up to 10 Reach mentions.

- Frequencies of correct mentions predicted by the INDRA Belief Model. The blue bars in each subplot show the frequencies of statements with k correctly extracted mentions for n total mentions for the Statement (considering mentions from the Reach reader only). The red line in each subplot shows the frequencies of correct mentions expected by the INDRA Belief Model. The INDRA Belief Model expects a substantial proportion of Statements to have an intermediate number of correctly extracted mentions, whereas the empirical data suggests that Statements are more likely to be associated with mentions that are either all correct or incorrect.

- Frequencies of correct mentions expected by the Binomial model. Blue bars are identical to (A).

- Frequencies of correct mentions expected by the Beta‐binomial model. Blue bars are identical to (A) and (B). The Beta‐binomial model differs from the INDRA Belief Model and Binomial models in that it predicts relatively greater proportions of Statements with mentions that are either all correct or incorrect.

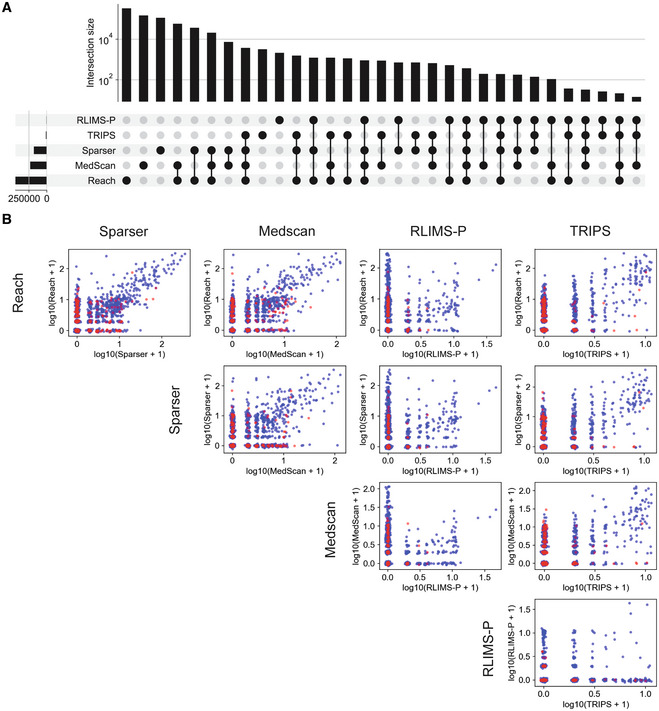

Multi‐reader overlap is associated with higher Statement frequency and reliability

To better understand the potential for multi‐reader reliability assessment, we characterized the extent of reader overlap in the Benchmark Corpus (i.e., when two or more readers produce mentions supporting the same Statement). We found that 19% of assembled Statements had supporting mentions from two or more reading systems (Table 4; Figs 5A and EV4A), but the bulk of Statements was supported exclusively by either Reach, Sparser, or MedScan (Fig 5A). The low overlap between readers is attributable to differences in their design, including their approaches to grammatical parsing, named entity recognition, associated resources (i.e., which lexical sources each reader incorporates), and the types of grammatical or semantic patterns that can be recognized. Low overlap among readers implies that using multiple reading systems in an integrated fashion via INDRA can increase coverage relative to any single reading system.

Table 4.

Frequencies of relations in the corpus by the total number of sources.

| Num. readers | Freq. (%) |

|---|---|

| 1 | 81.3% |

| 2 | 14.42% |

| 3 | 3.55% |

| 4 | 0.67% |

| 5 | 0.05% |

Figure 5. Estimating Statement belief with multiple machine readers combined.

- Upset plot (equivalent to a Venn diagram with more than three sets) of Statement support for five machine reading systems integrated by INDRA. For a given Statement, two or more readers intersect if they each provide supporting mentions for it. The top 10 subsets are shown; for a full upset plot of all subsets, see Fig EV4A.

- Number of mentions from Reach and Sparser (left) and Reach and MedScan (right) for a given Statement, each Statement being represented by a red dot. Mention counts are plotted on a logarithmic scale.

- The percentage of Statements for which an intersection (i.e., any overlap) between reading systems is observed as a function of the number mentions from a given reader; the data are plotted separately for each of the five reading systems.

- Empirical Statement precision as a function of the number of mentions from Reach (left) and Sparser (right), plotting the cases for which only Reach or Sparser provides supporting mentions for a Statement (red) and the case where all Statements are taken into account (blue).

Figure EV4. Reader overlap and Statement correctness.

- Upset plot (equivalent to a Venn diagram with more than three sets) of Statement support for five machine reading systems integrated by INDRA. Data are identical to Fig 5A but intersection sizes are plotted on a log scale and all 32 possible reader combinations are shown.

- Multi‐reader mention counts and Statement correctness. Each subplot shows the relationship between mention counts from a combination of two readers for manually curated Statements. Blue points represent Statements that were curated as correct; red points were curated as incorrect. A small amount of random jitter has been added to each point to indicate the density of points with fewer mention counts.

Despite the relatively small overlap among readers, the number of mentions from each reader supporting a Statement showed substantial correlation, with both ρ(Reach, Sparser) and ρ(Reach, MedScan) > 0.6 (Table 5). We found, however, that these correlations in mention counts among reading systems were primarily driven by a subset of relations with very high numbers of mentions (Fig 5B). More generally, we found that reader overlaps for a Statement increases as a function of the number of supporting mentions an individual reader extracted for the Statement (Fig 5C). Overall, these data support the observation that, if a mechanism represented by a Statement is described in many different sentences across input documents, multiple systems are likely to extract supporting mentions, and these will often come from different sentences and publications (as we showed in Fig 2C and E).

Table 5.

Correlations between reader mention counts.

| Sparser | MedScan | RLIMS‐P | TRIPS | |

|---|---|---|---|---|

| Reach | 0.611 | 0.633 | 0.072 | 0.374 |

| Sparser | 0.454 | 0.114 | 0.420 | |

| MedScan | 0.034 | 0.338 | ||

| RLIMS‐P | 0.096 |

When we examined the relationship between reader overlap and Statement correctness using the Curated Corpus, we found that Statements supported by many mentions were more likely to overlap with other readers and be correct (Fig EV4B, blue points along diagonals). Notably, in the case of Reach, the reader for which the most extensive subset of curated Statements was generated, we found that the probability of Statement correctness increased with the overall number of Reach mentions, but only for high‐mention Statements that also included support from other readers (Fig 5D, blue points). For relations with support only from Reach, empirical correctness increased from 1 to 2 mentions (an observation consistent with the findings regarding the Reach system's precision (Valenzuela‐Escárcega et al, 2018)), but additional Reach‐only mentions were not associated with substantial further increases in precision (Fig 5D, red points). Thus, in a multi‐reader setting, the absence of reader overlap also plays a key role in assessing Statement reliability. These observations imply that combining multiple reading systems can be highly valuable when assessing Statement correctness based on supporting mentions. It also provides information that can be used by developers of reading systems to increase recall and precision.

To characterize systematic issues affecting multiple readers, we also examined sentences associated with Statements that were incorrectly extracted by more than one reader. Recurring errors included misgrounding due to overlapping aliases (e.g., grounding—by four readers—of “TPP1” to gene TPP1 rather than gene ACD for which “TPP1” is an alias), incorrect extraction of negative results (e.g., “our preliminary attempts have not identified direct phosphorylation of PPARγ by MST2,” extracted by three readers as a Phosphorylation statement in which MST2 modified PPARγ), unrelated subclauses being causally linked (e.g., “quiescent cells attenuate eIF2α phosphorylation and induction of the ER stress proapoptotic gene GADD153” incorrectly extracted by three readers as a phosphorylation of GADD153 by eIF2α), incomplete named entity recognition (e.g., “Shc associates with epidermal growth factor (EGF) receptor,” incorrectly extracted by two readers as binding between Shc and EGF, not EGFR), and extraction of protein–DNA binding as protein–protein binding (phrases similar to “c‐Jun binds to AP‐1 sites to regulate gene expression” incorrectly extracted by four readers as binding between c‐Jun and the AP‐1 complex, which includes c‐Jun as a component). In many of these cases, human readers are able to recognize subtleties in the language that are difficult for machines to parse correctly.

Two approaches to modeling the reliability of Statements from multiple readers

We evaluated two strategies for assessing the reliability of Statements using mention counts from multiple readers: (i) extending the INDRA Belief Model and (ii) training machine learning models on the Curated Corpus. Even though reader errors were not fully independent of each other (Fig EV4B), we assumed independence between different reading systems (Zhang, 2004) to extend the INDRA Belief Model while adding the fewest additional model parameters. Specifically, we adjusted how the model formulated error estimates to express the probability that all mentions extracted by the readers were jointly incorrect (see “The INDRA Belief Model” section of Materials and Methods). We also assessed how well the extended INDRA Belief Model could predict Statement correctness based on mention counts per reading system compared to several different machine‐learned classifiers. These classifiers included Logistic Regression on log‐transformed mention counts, k‐Nearest Neighbors, support vector classifiers, and Random Forests (see the “Machine‐learned models of Statement reliability” of Materials and Methods). Models were compared based on the area under the precision‐recall curve (AUPRC), which is a more robust metric for class‐imbalanced data (~73% of Statements in our curated corpus were correct) than the area under the receiver‐operator curve (AUROC). In interpreting the AUPRC values, note that the curated corpus is, by construction, biased toward Statements with higher mention counts and, therefore, greater reader overlap. For example, Statements supported by only a single reader constitute 81% of the Benchmark corpus (Table 4) but only 35% of the curated corpus (see “Statement Curation” section of Materials and Methods). As such, reported AUPRCs should be interpreted as a measure of the relative performance of each model across Statements supported by different combinations of readers rather than measures of general performance.

We found that, when mention counts were the only input feature, the INDRA Belief Model yielded the greatest AUPRC, followed by the Logistic Regression and Random Forest models (Table EV2, rows 1, 3, and 2, respectively). However, machine learning models outperformed the INDRA Belief Model when they were extended to use additional Statement features, such as the Statement type, the number of supporting articles (i.e., the number of distinct publications from which mentions were extracted), the average length of the mention texts (longer sentences were more likely to be incorrectly interpreted), and the presence of the word “promoter” in the sentence (a frequent indicator that a sentence describing a protein to DNA promoter interaction had been mis‐extracted as a PPI; Table EV2, rows 8–13; see the “Encoding of features for Statement belief prediction” section of Materials and Methods). This implies that—as long as sufficient training data are available—machine‐learned classifiers can use additional Statement‐associated features to boost performance relative to the INDRA Belief Model which relies solely on mention counts.

Additionally, since INDRA can identify refinement relationships among Statements (Fig 3), mentions can be combined across different levels of detail for use in reliability estimation. For example, evidence supporting the specific Statement, “MAP2K1 phosphorylates MAPK1 on T185,” also supports the more generic Statement, “MEK phosphorylates ERK.” Combining these refining mentions improved precision and recall: the AUPRC of the Random Forest model increased from 0.893 to 0.913 when using only mention counts (Table EV2, row 2 vs. 17), and from 0.932 to 0.937 when using all features (Table EV2, row 9 vs. 24). Further when we incorporated overlapping mentions from curated databases as features alongside reader mentions, we found that the Random Forest model's AUPRC increased to 0.942—the highest AUPRC reached across all models and conditions. Because mentions from more specific Statements flow to more general ones but not the reverse, the belief estimates for the most specific Statements are determined only by their directly supporting evidence. This leads to an overall inverse relationship between specificity and belief that allows Statements to be filtered to the most specific statement lying above a certain threshold of belief, thereby excluding potentially unreliable and highly specific Statements in which extracted details may reflect technical errors rather than meaningful additional context.

Because readers perform differently on the same input text, Statements supported by multiple readers are less common than Statements supported by a single reader, but our analysis showed that both the existence of reader overlap as well as lack of overlap for a given Statement can be informative in predicting Statement correctness. Moreover, in the absence of human‐curated data across multiple Statement features—a type of data that is laborious to generate—a parametric model (such as the INDRA Belief Model) based on the error profiles of individual readers can perform well from a precision‐recall perspective. When sufficient curated training data are available, machine learning models such as Random Forests can achieve greater performance, obtaining the highest AUPRCs in several different configurations. These findings provide empirical support for INDRA's approach to assembling sets of Statements from multiple text mining and curated database sources with principled estimates of correctness. Both the INDRA Belief Model and the machine‐learned classifier models are available in the belief submodule of INDRA and allow parameters to be either manually set or estimated from curation data.

Validation of assembled mechanisms and comparison against curated resources

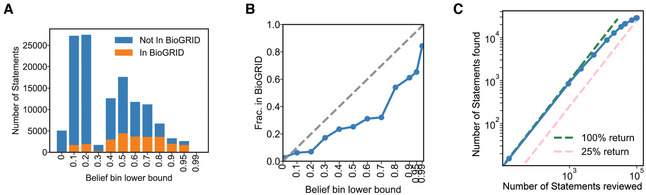

To test INDRA on a prototypical biocuration task, we compared the subset of Statements representing human PPIs in the Benchmark Corpus to the BioGRID database (Oughtred et al, 2019). BioGRID is a curated public database containing structured information on protein–protein and protein–small molecule interactions, as well as genetic interactions obtained from multiple organisms. These interactions were extracted by expert curators from a combination of high‐throughput datasets and focused studies. As a measure of the utility of INDRA for biocuration, we determined (i) the number of previously‐uncurated PPIs that the INDRA Benchmark Corpus could add to BioGRID and (ii) the amount of new literature evidence that it could add to PPIs currently in BioGRID. We used our best‐performing Random Forest model to assign a belief to each INDRA Statement in the Benchmark Corpus.

The Benchmark Corpus contained ~26,000 Statements representing PPIs already in BioGRID and ~101,000 PPIs that were absent (Fig 6A); the latter potentially represent known but previously uncurated interactions. By grouping all PPIs in bins defined by belief score, we found that belief score was highly correlated with whether a PPI was curated in BioGRID (Fig 6B). This provides a quantitative corroboration of the belief scores and, by extension, suggests that a substantial number of the PPIs absent from BioGRID involve reading errors that are associated with low belief scores. The belief scores obtained from the Random Forest model can be interpreted as calibrated probabilities of correctness, allowing belief scores to estimate the number of correct Statements in each bin. The proportion of Statements in BioGRID was consistently below the belief score for the bin, suggesting that each bin contained correctly extracted but uncurated PPIs (Fig 6B, blue line below diagonal). Assuming that all Statements found in BioGRID were correctly extracted, we estimated a lower bound of 28,600 correct but uncurated PPIs in the Benchmark Corpus, a 6% increase over the ~480,000 unique human PPIs in BioGRID.

Figure 6. Comparison of INDRA‐assembled mechanisms with a curated resource, BioGRID.

- Number of INDRA Statements representing PPIs (i.e., complex formation between two human proteins) grouped into bins by their belief score (as determined by a random forest belief model), differentiating whether the PPI represented by the Statement appears in BioGRID (orange) or not (blue).

- Fraction of INDRA Statements representing PPIs that appear in BioGRID grouped into bins by their belief score. A gray dashed line shows the expected fraction of correct Statements in each belief bin. The space between the gray and blue lines (i.e., between the expected fraction of correct Statements in each bin and the fraction of Statements that appear in BioGRID) represents an estimate of the set of correct Statements missing from BioGRID.

- Plot showing estimated curation yield if Statements were reviewed by decreasing belief score for inclusion into a curated resource. The blue line plots the number of correct Statements expected to be found as a function of the number of Statements reviewed, with green and pink dashed lines serving as guides showing 100% return (i.e., every reviewed Statement is correct) and 25% return (i.e., 1 out of 4 reviewed Statements is correct).

As a practical matter, extending a curated resource such as BioGRID would logically involve focusing first on Statements with the highest belief scores. The ~2,200 uncurated Statements with belief scores > 0.9 would be expected to yield > 1,870 PPIs or roughly six correct for every seven reviewed. Statements with lower belief scores are more numerous but also have a lower expected yield: 18,700 correct Statements would be expected among the 41,600 uncurated Statements with belief scores between 0.4 and 0.9, with the curation yield starting at 67% (for Statements with belief between 0.8 and 0.9) to 29% (for Statements with belief between 0.4 and 0.5) (Fig 6C). To illustrate this, we examined one PPI not currently in BioGRID that involved binding of the KIF1C kinesin to RAB6A, a GTPase and regulator of membrane trafficking. INDRA assembled a total of 40 mentions supporting this PPI, extracted by two machine reading systems (Reach and Sparser), into a Statement with a belief score of 0.82. Human curation confirmed that the interaction had been reliably demonstrated using both co‐immunoprecipitation and reconstitution experiments (Lee et al, 2015).

A second application of INDRA is to add evidence for PPIs already in BioGRID and thereby (i) provide different types of evidence for an existing PPI (e.g., mass spectrometry vs. 2‐hybrid interaction), (ii) reveal additional biological settings or cell types in which a PPI might occur, and (iii) provide additional mechanistic detail about a particular PPI. As an example of (i) and (ii), BioGRID lists only three publications as a reference for the interaction between brain‐derived neurotrophic factor (BDNF) and the NTRK2 receptor tyrosine kinase, whereas the INDRA Benchmark Corpus contains 168 mentions of this interaction from a total of 94 publications. Some of these additional publications provide primary experimental evidence for this interaction (e.g., Vermehren‐Schmaedick et al, 2014) whereas Wang et al (2009a) discuss the role of the BDNF‐NTRK2 interaction in important clinical settings. As an example of (iii), the interaction between paxillin (PXN) and the tyrosine kinase PTK2B is supported by six references in BioGRID; INDRA not only identified 49 mentions from 18 different publications supporting this PPI but assembled a Statement with substantially more mechanistic information than BioGRID: namely that PTK2B, when phosphorylated on Y402, phosphorylates PXN on Y118 (Park et al, 2006; Moody et al, 2012, 2). This example shows that for a PPI lacking mechanistic detail, INDRA can illuminate the directionality and type of regulation, as well as the amino acids involved in posttranslational modifications.

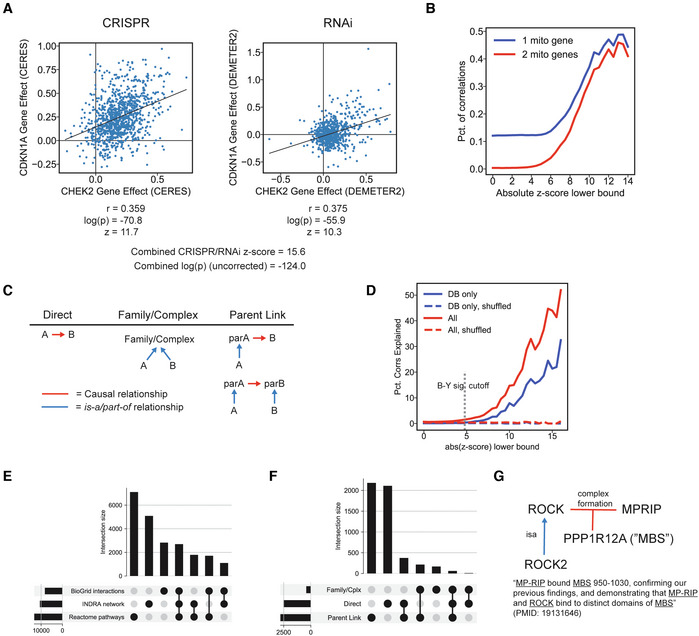

Detecting and explaining gene dependency correlations with an assembled causal network

To study how networks that incorporate text‐mined information can aid in the interpretation of functional genomic datasets, we used INDRA to detect and explain significant gene dependencies in the Cancer Dependency Map (https://depmap.org; Meyers et al, 2017; Tsherniak et al, 2017). The DepMap reports the effects of RNAi or CRISPR‐Cas9 mediated gene inactivation on cell viability and growth in > 700 cancer cell lines using a competition assay. In this assay, the effect of gene inactivation is assessed by determining the rate at which a specific knockout (or knockdown) disappears from a co‐culture comprising cells transfected with a genome‐scale RNAi or CRISPR‐Cas9 library. It has previously been observed that genes whose knockouts have similar effects on viability across a large number of cell lines—a phenomenon known as codependency—frequently participate in the same protein complex or pathway (Meyers et al, 2017; Tsherniak et al, 2017; Pan et al, 2018; Doherty et al, 2021; Rahman et al, 2021; Shimada et al, 2021). For example, CHEK2 and CDKN1A have a correlation coefficient of 0.359 and 0.375 in DepMap CRISPR and RNAi data, respectively (Fig 7A), and this codependency can be explained by the fact that the CHEK2 kinase is an activator of CDKN1A (also known as p21) and that the two genes jointly regulate cell cycle progression. To obtain robust measures of gene co‐dependencies, we combined the CRISPR and RNAi perturbation data by converting the Pearson correlation coefficients for each gene pair into signed z‐scores and computing the combined z‐score between the two datasets using Stouffer's method (Fig 7A). In analyzing the data, we first accounted for a bias also observed by others (Dempster et al, 2019; Rahman et al, 2021), namely that many of the strongest correlations are between mitochondrial genes (Fig 7B). These correlations have been described as an artifact of the screening method (such as the timepoint of the viability measurements relative to cell doubling time) rather than reflecting true co‐dependencies (Rahman et al, 2021). We considered the correlations among these genes to be “explained” a priori due to their shared mitochondrial function. Using the mitochondrial gene database MitoCarta as a reference (Rath et al, 2021), we excluded correlations among them from subsequent analysis.

Figure 7. Detecting and explaining gene codependency in cancer cell lines using an INDRA‐assembled network.

- CRISPR (left) and RNAi (right) data from DepMap showing the codependency of the CHEK2 and CDKN1A genes across a panel of cancer cell lines (each blue dot represents a cell line, placed according to normalized cell viability change upon gene perturbation). Black lines show the linear regression plot over the cell line viability values.

- Percent of gene codependencies (i.e., correlations) involving one or two mitochondrial genes as a function of the absolute z‐score corresponding to the codependency.

- Patterns of network nodes and edges that constitute an “explanation” for an observed DepMap codependency, including “Direct” (a direct edge between two specific genes A and B), “Family/Complex” (two genes A and B are part of the same family or complex), and “Parent Link” (where one or both of the specific genes A and B are related via a parent family/complex they are part of).

- Percent of codependencies/correlations explained using the INDRA network when considering all edges (red) or only edges supported by curated databases, excluding text mining (blue), with randomly shuffled controls shown.

- Upset plot showing the intersection of explanations for DepMap codependencies provided by three networks: BioGRID interactions, the INDRA network, and Reactome pathways.

- Upset plot showing the intersection of three types of explanation for DepMap codependencies provided by the INDRA Network, corresponding to explanation patterns shown in panel (C).

- An example explanation for the codependency between ROCK2 and MPRIP derived from the INDRA network. INDRA provides evidence for a complex in which ROCK (the protein family of which ROCK2 is a member) binds MPRIP in a three‐way complex with PPP1R12A (also called MBS) through the mention shown at the bottom (extracted from Wang et al, 2009a; Wang et al, 2009b).

From the Benchmark Corpus of assembled INDRA Statements, we generated a network model in which each node represents a human gene and each directed edge corresponds to an INDRA Statement (such as Phosphorylation, Activation, etc.) connecting two nodes. We used the resulting network for two tasks: first, to constrain the number of hypotheses tested when determining the statistical significance of codependency correlations and second, to find mechanistic explanations for the observed codependencies.

For the first task, we calculated the number of codependencies that were significant at a false discovery rate (FDR) of 0.05 using three methods for controlling FDR with and without the use of the network to limit the number of hypotheses tested (Table 6). Overall, fewer codependencies were significant when we restricted comparisons to relationships in the INDRA‐assembled network, both because the network is incomplete and because many codependencies reflect indirect functional relationships (which are not captured by a single direct edge in the network). However, many codependencies (4,007 using Benjamini–Yekutieli FDR correction) were detected as significant only when using the network (Table 6, “INDRA only”) due to the smaller number of hypotheses tested. Moreover, the majority of these (2,729) were based on interactions obtained only from machine reading, of which > 60% were supported by a Statement with a belief score greater than 0.5.

Table 6.

Number of codependencies detected at a significance cutoff of P < 0.05 without multiple hypothesis correction or after one of three methods for multiple hypothesis testing correction (Bonferroni, Benjamini–Hochberg, and Benjamini–Yekutieli).

| No prior | INDRA prior | ||

|---|---|---|---|

| Total | INDRA only | ||

| Number of comparisons (non‐mitochondrial) | 121,778,711 | 265,874 | N/A a |

| Correlations with uncorrected P < 0.05 | 21,526,511 | 63,926 a | N/A a |

| Significant corrs after Bonferroni | 99,544 | 4,982 | 1,836 |

| Significant corrs after Benjamini–Hochberg | 5,025,535 | 30,127 | 7,506 |

| Significant corrs after Benjamini–Yekutieli | 972,831 | 12,812 | 4,007 |

Results are shown for a case in which no prior is used and data are analyzed directly (“No prior”), or when an INDRA prior is used (“INDRA prior/Total”). The rightmost column shows the number of novel codependencies recovered exclusively when an INDRA prior was used along with correction for multiple testing (“INDRA prior/INDRA only”).

Figures for uncorrected P‐values do not apply to the “INDRA prior/INDRA‐only” case because without correction for multiple testing, the prior does not play a role in determining significance. Figures are shown for the “INDRA prior/Total” case to establish the number of codependencies with uncorrected P‐values > 0.05 that fall within the scope of the INDRA network; this serves as an upper bound for the number of correlations determined to be significant with the different approaches to multiple testing shown in the bottom three rows.