Abstract

According to the Behavior Analyst Certification Board (BACB), services commonly provided by behavior analysts include writing and revising protocols for teaching new skills. To our knowledge, there are currently no published, peer-reviewed articles or texts focused on developing skill acquisition protocols. The purpose of this study was to develop and evaluate the effectiveness of a computer-based instruction (CBI) tutorial on acquisition of skills related to writing an individualized protocol based on a research article. The tutorial was developed based on a variety of expert samples recruited by the experimenters. Fourteen students enrolled in a university behavior analysis program participated in a matched-subjects group experimental design. The training was separated into three modules on protocol components, identifying important information in a research article, and individualizing the protocol for a learner. Training was self-paced and completed in the absence of a trainer. The training included the following behavioral skills training components: instruction, modeling, individualized pacing, opportunities to actively respond and rehearse skills, and frequent specific feedback. The tutorial resulted in a significant increase in accuracy of protocols during posttest when compared to a textual training manual. This study contributes to the literature by applying CBI training procedures to a complex skill, as well as evaluating training in the absence of a trainer, and provides a technology for clinicians to learn effectively and efficiently to write a technological, individualized, and empirically based protocol.

Keywords: Applied behavior analysis, Computer-based instruction, Protocol, Skill acquisition, Staff training

According to Baer et al. (1968), a defining feature of behavior analytic interventions is that they are technological; that is, they describe all relevant contingencies, stimuli, and responses such that the procedures can be replicated. In behavioral intervention programs, it remains important that acquisition and reduction programs include detailed information to ensure that the programs will be implemented accurately. The Behavior Analyst Certification Board (BACB, 2022a) outlines services commonly provided by behavior analysts, including writing and revising protocols for both teaching new skills and reducing problem behavior. At a minimum, such protocols provide a description of target client behaviors and intervention procedures for instructors to follow but may include additional details such as prerequisite skills (Maurice et al., 1996; Miltenberger, 2007). A protocol may be used as a (1) training tool for behavior technicians; (2) job aid to reference while implementing programming; (3) record of intervention procedures; and (4) the framework for developing procedural integrity checklists to measure accountability.

A line of research exists evaluating the contents of behavior support plans intended to address behavior reduction in particular (Quigley et al., 2018; Vollmer et al., 1991; Williams & Vollmer, 2015). These studies used a combination of indirect methods to make recommendations, including surveying behavior analysts, or examining the content of existing documents. For example, Williams and Vollmer (2015) developed a list of 20 essential components of written behavior reduction protocols based on expert evaluation. These components were identified by surveying 36 experts across several journal editorial boards who rated 28 items from essential to nonessential using a five-point Likert scale. Although some of the 20 items identified as essential could apply to skill acquisition programs (i.e., defined target behaviors, measurable objectives, detailed data collection procedures, specific generalization and maintenance strategies, and consequences for target behavior), most apply primarily to behavior reduction programming. In addition, skill acquisition programs require different components such as prompts, prompt fading, and reinforcement. Although not completely aligned with skill acquisition, the research on behavior reduction provides us with insight on how to evaluate content of clinical documentation. In addition to demonstrating that survey results often match the content of existing quality documents, repeated measures on behavior plan documents over time demonstrate little change. For example, Quigley et al. (2018) state that “the survey data indicate much of what was considered essential for 25 years is still considered essential by behavior analysts today. This consistency of opinion over time could help facilitate practice guidelines for essential components of written behavior plans” (p. 442). They also support the notion that investigations of this nature can be used to develop practice guidelines, trainings, and job expectations.

To our knowledge, there are currently no published, peer-reviewed articles focused on developing skill acquisition protocols. Although it is common for these documents to interchangeably be referred to as protocols, programs, or curricula, the term “protocol” will be used for the purpose of this article. In our experience, we have anecdotally noted that skill acquisition protocols are often based on in-house or commercially available templates or preexisting protocols. For example, a clinic may maintain an in-house database of templates for various commonly implemented programs (e.g., gross motor imitation, visual–visual matching) that an instructor can individualize for a specific learner. As an alternative, an instructor might use a protocol previously written for a different learner and modify it as needed. Commercially available resources include books or curricula that guide providers on how to progress from assessment results to quality goals and programs and often provide detailed protocols to use (e.g., Fovel, 2001; Knapp & Turnbull, 2014a, 2014b, 2014c; Leaf et al., 1999; Maurice et al., 1996; Mueller & Nkosi, 2010). For example, The Big Book of ABA Programs (Mueller & Nkosi, 2010) includes over 500 protocols that can be photocopied and used for learners. Multiple online programs also provide detailed program protocols and templates (e.g., ABADat, 2022; The New England Center for Children, 2020; Rethink Behavioral Health, 2022). As another example of ready-made skill acquisition programming, the Autism Curriculum Encyclopedia (The New England Center for Children, 2020) provides subscribers with more than 2,000 customizable lesson plans based on behavior analytic strategies across a wide array of skills. 
These types of curricula may be helpful in that they often (1) offer a variety of skills to teach; (2) break down teaching steps; (3) suggest prompts and error correction; (4) streamline program development; and (5) align with the organization’s preferred approach, which makes the steps easier for the behavior technician to follow.

Despite some benefits, using previously existing protocols presents several challenges. For one, such protocols are not individualized for the learner. Templated acquisition protocols require modification to individualize them for each learner based on learning history, patterns of responding, and potential unintentional stimulus control problems (Grow & LeBlanc, 2013). For example, Maurice et al. (1996) describe how the teaching procedures included in their curriculum are “generic and may need to be modified for your child . . . although prompting suggestions are provided, there are many error-correction procedures that could be used when your child responds incorrectly or fails to respond at all” (p. 65). It is important to note that section 2.14 of the Ethics Code for Behavior Analysts (BACB, 2020) states not only that behavior analysts summarize behavior-change intervention procedures in writing, but also that those procedures must be designed to meet the diverse needs, benefits, context, and resources of the client; therefore, using a generic program without any modification for the client may be an ethical violation and, most important, may prevent the client from reaching their potential for progress.

Because relying on preexisting documents saves clinicians time, response effort, and resources, it is possible that this method may prevent clinicians from seeking out or maintaining skills related to designing unique, client-centered programming. For reasons outlined above, this is an important skill, and also a complex one. Writing a skill acquisition protocol requires competency across many areas, including creating operational definitions, selecting and describing the best prompt and prompt fading procedures, error correction, generalization, and maintenance, and designing a method of data collection and analysis. The process of writing such a protocol involves many decision points, in addition to the skill of simply assembling the components into an effective plan.

In addition, the Ethics Code for Behavior Analysts (BACB, 2020) emphasizes the need for using data-based decisions for programming, and using interventions based on scientific evidence. Published research continually provides updated methods and refinements to teaching procedures, and available curricula or templates may not be updated as quickly as this information becomes available. Therefore, basing protocols on recent literature, meaning that ABA providers can contact an article and then read, analyze, and synthesize that research into teaching procedures, is an important and valuable skill for behavior analysts who write protocols.

Altogether, these skills of identifying important teaching program components, including recent research considerations, and individualizing protocols as needed, may be time consuming for providers if not taught to fluency. Demand for behavior analysts has increased by 5,852% in the last 12 years (BACB, 2022b), and availability of behavior analysts has historically struggled to keep up with that demand. An analysis by Zhang and Cummings (2019) found that the per capita supply of certified ABA providers fell below the benchmark informed by the BACB guidelines in 49 states and Washington, DC, and that even if the recommended caseloads were doubled, they would still fall below the benchmark in 42 states and Washington, DC. It is evident that demand for behavior analysts is high, and workloads are heavy. Therefore, a training program to teach these skills is more important than ever, and must be efficient if it is to fit into a clinician’s multitude of responsibilities. Because designing and monitoring acquisition programming is one of the primary responsibilities of certified ABA providers, the target audience for this research includes behavior technicians, board certified behavior analysts (BCBAs), board certified assistant behavior analysts (BCaBAs), and students of behavior analysis.

A body of research has demonstrated the effectiveness of behavioral skills training (BST; instructions, modeling, rehearsal, feedback) for teaching clinicians, parents, teachers, and others to implement new protocols (e.g., Gianoumis et al., 2012; Sarokoff & Sturmey, 2004; Ward-Horner & Sturmey, 2012). Studies have also evaluated the efficacy of using individual components of BST (e.g., Drifke et al., 2017; Jenkins & DiGennaro Reed, 2016; Ward-Horner & Sturmey, 2012) and delivering components of BST via computer (e.g., Higgins et al., 2017; Hirst et al., 2013; Knowles et al., 2017; Schnell et al., 2017; Shea et al., 2020; Walker & Sellers, 2021). For example, Hirst et al. (2013) evaluated the effects of varied computer-delivered feedback accuracy on task acquisition. Participants were taught to match symbols to nonsense words on a computer program and feedback of varied accuracy was provided. The authors found that inaccurate feedback not only negatively affected participant performance during teaching trials but that exposure to inaccurate feedback also negatively affected later learning trials in which inaccuracies were corrected, highlighting the importance of frequent and correct feedback during computer-based learning. Computer-based instruction (CBI) makes training materials and administration accessible from any computer with internet connection, eliminates the need for an in-vivo trainer, and offers self-pacing and simultaneous completion for trainees (Pear et al., 2011). Due to a relative deficit of trainers compared to staff requiring training (Graff & Karsten, 2012), staff training methods that reduce the need for a trainer to be physically present can be more widely disseminated. Research also suggests that these types of learning interfaces may be more effective than passive, indirect training in which learners simply read or listen to content and are not required to actively emit a response.

For example, “traditional” methods of instruction generally consist of either written instructions or a structured oral presentation intended to present didactic information about a particular subject (Williams & Zahed, 1996). Direct training, such as CBI, may include features such as (1) interactivity; (2) feedback; (3) independent administration in the absence of an instructor; and (4) self-paced instruction; and may include strategies of programmed instruction, including (1) operationally defining skills to be learned; (2) small units of instruction; (3) systematic presentation of material; and (4) opportunities for active responding (Marano et al., 2020).

The efficacy and efficiency of computer- and web-based instruction to teach students and clinicians have been evaluated across a variety of clinical skills, including identifying and analyzing functional analysis data (Chok et al., 2012; Schnell et al., 2017), visually analyzing baseline treatment graphs (O'Grady et al., 2018; Wolfe & Slocum, 2015), implementing discrete trial and backward training protocols (e.g., Eldevik et al., 2013; Geiger et al., 2018; Gerencser et al., 2018; Higbee et al., 2016; Nosik et al., 2013; Nosik & Williams, 2011; Pollard et al., 2014), implementing photographic activity schedules (Gerencser et al., 2017), interacting appropriately with consumers’ parents (Ingvarsson & Hanley, 2006), completing preference assessments (Marano et al., 2020), detecting antecedents and consequences of problem behavior (Scott et al., 2018), implementing imitation interventions (Wainer & Ingersoll, 2013), and appropriately receiving feedback (Walker & Sellers, 2021). CBI offers several advantages for training clinical skills: (1) trainees can complete training at their own pace according to a flexible, cost-effective training schedule; and (2) employers may also benefit from this format of training in that it is convenient, does not require the presence of a trainer or supervisor, and can collect training data automatically.

CBI often incorporates components of BST including instruction, modeling (i.e., examples), individualized pacing, opportunities to actively respond and rehearse skills, and frequent and specific feedback. Although Skinner (1954, 1958) recommends that training include features such as active engagement, self-paced instruction, immediate feedback, and systematic steps, a review of asynchronous staff training methods recommends these components be evaluated due to an overall lack of research (Marano et al., 2020). Including each of these components and requiring completion within a specific duration may facilitate the acquisition of a repertoire of skills necessary to create a technological and effective skill acquisition protocol within a reasonable timeframe.

Despite behavior analytic skills having been taught via CBI, there are currently no empirically based resources for training ABA providers to write a skill acquisition protocol. Therefore, the purpose of this study was twofold. First, we aimed to design a computer-based tutorial to teach the skills related to writing an individualized acquisition protocol based on a research article. According to a review of asynchronous training methods by Marano et al. (2020), common features of CBI include self-paced instruction, interactive activities, feedback, examples and nonexamples, and mastery criteria; therefore, these features were included in the training. Second, we wished to pilot the efficacy of this training program. Participants were exposed to a pretest, training, and a posttest, and a matched-subjects two-group design evaluated outcomes against a control group. Social validity of goals, procedures, and outcomes (Wolf, 1978) was evaluated by the participants and practicing behavior analysts.

Tutorial Development

Protocol Sampling

To identify quality practices in writing acquisition protocols, we solicited the assistance of 24 behavior analysts identified as directing clinical centers for children with autism spectrum disorder (ASD). These were all individuals or organizations known by the authors or colleagues in their department, and recruitment emails requested that they redirect us to the individual most involved in reviewing or training on skill acquisition. Recruits were excluded if their role did not include a focus on skill acquisition, or if they were not in a supervisory role. Eight of these recruits responded and were included, representing a variety of programs, training backgrounds, and geographical locations within the United States (see Table 1). Two behavior analysts recorded their process of writing an individualized skill acquisition protocol based on a published research article. Using the Adobe Captivate screen recording feature, these participants recorded videos of the activity on their computer screen while they opened documents and wrote their protocols. The remaining six participants provided samples of skill acquisition protocols used in their practice and answered questions related to their process of developing those protocols. All files from these participants were received by a research assistant and coded for anonymity before being reviewed by the authors.

Table 1.

Expert sample participant characteristics, N (%)

| Characteristic | Response | N (%) |
|---|---|---|
| Years of experience writing programs | 5–9 | 2 (25%) |
| | 10–14 | 3 (37.5%) |
| | 15+ | 3 (37.5%) |
| Frequency of writing or editing programs | Multiple times per week | 3 (37.5%) |
| | A few times per month | 1 (12.5%) |
| | Very rarely or never | 4 (50%) |
| How often programs are based on research | Almost always (75% or more) | 4 (50%) |
| | Frequently (30–75%) | 3 (37.5%) |
| | Rarely (less than 30%) | 1 (12.5%) |
| Do they teach others to write programs | Yes | 6 (75%) |
| | No | 2 (25%) |
| Primary work setting | University | 3 (37.5%) |
| | Center | 2 (25%) |
| | Home or Outpatient | 3 (37.5%) |
| Highest academic degree | Masters | 2 (25%) |
| | Doctorate | 6 (75%) |

Task Analysis of Components

Two independent data collectors then created a task analysis for each sample protocol by listing all components or details included. Two of the authors then compared these task analyses for similarity. If both agreed that a component appeared across two or more samples, it was included in the final synthesized task analysis (see Appendix A). If sections of the sample protocols were named differently but contained similar content (e.g., target, goal, operational definition), they were considered the same component, and the most common term was used. If the authors disagreed on whether a component appeared across sample protocols, a faculty member within their department who had published in skill acquisition was identified to provide input and determine inclusion or exclusion; however, no disagreements occurred.
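The inclusion rule described above (a component is retained if it appears in two or more samples, with differently named but equivalent sections counted as one) can be illustrated with a minimal sketch. The sample data, the `SYNONYMS` map, and the function names are hypothetical and are not the authors' materials. As a simplification, the sketch maps synonyms to one fixed label rather than selecting the most common term.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical synonym map: section names with similar content are
# collapsed into a single component label (illustrative labels only).
SYNONYMS: Dict[str, str] = {
    "target": "operational definition",
    "goal": "operational definition",
}


def normalize(label: str) -> str:
    """Lowercase a section name and map known synonyms to one label."""
    key = label.strip().lower()
    return SYNONYMS.get(key, key)


def synthesize(samples: List[List[str]], min_samples: int = 2) -> List[str]:
    """Return components that appear in at least `min_samples` protocols."""
    counts: Counter = Counter()
    for sample in samples:
        # A set ensures each component is counted once per sample protocol.
        counts.update({normalize(label) for label in sample})
    return sorted(c for c, n in counts.items() if n >= min_samples)


# Made-up sample protocols, for illustration only.
samples = [
    ["Target", "Prompts", "Mastery criteria"],
    ["Goal", "Prompts", "Data sheet"],
    ["Operational definition", "Generalization"],
]
print(synthesize(samples))
```

In this made-up example, only the operational definition and prompts components meet the two-sample threshold.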

Table 6.

Datasheet

| Creating a Skill Acquisition Program | Complete and Accurate (1) | Partially Complete/Accurate (.5) | Not Included (0) |
|---|---|---|---|
| Program name is specific to skill being taught and would be able to include multiple exemplars or targets. | | | |
| Learner name or initials are correct. | | | |
| Operational definition is concise, specific, objective, and reliably measurable. | | | |
| Operational definition includes topography of behavior, latency, onset/offset criteria (if appropriate) and examples/nonexamples OR correct/incorrect responses. | | | |
| Measurement section accurately describes how data are collected clearly and completely enough for replication. | | | |
| Measurement section includes how data are summarized; summary method is appropriate. | | | |
| Procedures section includes all appropriate components (antecedents, consequences, prompts, generalization, maintenance, and targets) as based on the research article. | | | |
| Antecedents section describes when and how to present auditory stimuli technically. | | | |
| Antecedents section describes when and how to present visual stimuli technically, including placement and rotation. | | | |
| Antecedents section describes when and how to establish attention, eye contact, and/or observing responses technically. | | | |
| Procedure includes clear and complete instructions on how instructor responds to a correct response (i.e., praise, preferred items, latency, schedule of reinforcement). | | | |
| Procedure includes description of an error. | | | |
| Procedure includes clear and complete instructions on how instructor responds to an incorrect response including error correction, re-presentation, consequence following re-presentations. | | | |
| Procedure includes clear and complete instructions on how instructor responds to no response. | | | |
| Prompt and prompt fading strategies include relevant, appropriate, clear instructions on type of prompt. | | | |
| Prompt and prompt fading strategies include relevant, appropriate, clear, and complete instructions on how to fade and/or increase prompts. | | | |
| Mastery criteria section includes measurable criteria for advancement, including accuracy, duration, independence, and number of sessions. | | | |
| Mastery criteria are appropriate for target skill. | | | |
| Generalization section includes how to program for generalization; method of programming for generalization is appropriate and accurate. | | | |
| Generalization section includes procedures for assessing generalization. | | | |
| Maintenance section includes how to program maintenance; method of programming for maintenance is appropriate and accurate. | | | |
| Maintenance section includes how to assess maintenance, including follow-up schedule. | | | |
| Targets section includes targets and/or stimuli that will be taught or used for generalization. | | | |
| Program is appropriately individualized according to details from client vignette. | | | |
| Program details are accurate according to method from research article. Variations are made according to learner characteristics and Discussion recommendations/limitations. | | | |

Computer-Based Tutorial Programming

The computer training was designed based on the synthesized task analysis. Adobe Captivate was used to develop content specific to each component of the task analysis. This software allows for the designer to add text, graphics, sound, video, and features that the user can interact with. Timing features allow for the designer to choose if content transitioned at a certain speed or transitioned when the user clicked to interact with the training. For example, some media appeared on the page after the user was provided a few seconds to read displayed content, but pages would not advance without the user clicking a “next” icon. This software requires publishing and must be hosted on a website or compatible platform. For the purpose of this study, a university Blackboard account was used. An entire course was created to host the tutorial, and logins were created for each trainee.

Experimenters identified objectives related to each component and used a backward course design strategy (McKeachie & Svinicki, 2014) to develop content. The tutorial included textual instruction, questions with immediate feedback, rehearsal, and examples/nonexamples and was separated into three modules. Thirteen gradable quiz questions were included throughout the tutorial. Every multiple-choice question had four response options, and only one answer could be chosen. Multiple selection questions also had four possible responses and required participants to select check boxes next to each correct answer. Matching questions required participants to either drag and drop answers into columns or cells or select a letter representing the matching item for program components from a drop-down menu. Each question was programmed to be worth 10 points (the default point value for the quizzing software).

Incorrect quiz answers resulted in an incorrect message with specific feedback consisting of the correct answer and an explanation of why that answer was correct. Participants had the opportunity to respond correctly before moving on but were not required to. This feature was chosen for efficiency, as a previous study found no difference in performance when participants were required to respond correctly before proceeding (O'Grady et al., 2018). Correct responses immediately resulted in text and animation-based feedback (e.g., “you got it!” and a gif of popular TV characters dancing). Following each section of the module, 5-min breaks were prompted via embedded text.

Module 1

In Module 1, participants were introduced to acquisition protocols, and were taught to list all sections of a protocol in order. After a brief introduction to the purpose of a protocol, some learning objectives, and navigation instructions, trainees progressed through each component of the task analysis in sequential order. Each section of this module provided the content of that component, some examples, as well as important considerations. For example, the section on generalization stressed the need to both program for and assess generalization, provided examples of each written into a protocol, and then gave lists of methods to achieve this. Once all components were introduced, trainees learned to list all 13 independently using stimulus prompt fading. First, they typed each component in text fields alongside the full list as it appeared on screen. Next, they typed each component into text fields alongside the first letter of each component. Trainees could click “check my answer” to view the full list and clear their answers to try again. Finally, participants listed each component into text fields without any stimulus prompt. Again, they had the option to check answers and respond again before proceeding but were not required to.
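The three-step stimulus prompt fading sequence described above (full list, first letter only, no prompt) could be sketched as follows. This is only an illustration: the three component names are a subset drawn from the datasheet rather than the full list of 13, and the function names and case-insensitive answer checking are assumptions, not features of the Adobe Captivate tutorial.

```python
from typing import List

# Illustrative subset only; the tutorial taught 13 components.
COMPONENTS: List[str] = ["Operational definition", "Generalization", "Maintenance"]


def prompt_for(component: str, step: int) -> str:
    """Return the on-screen stimulus prompt for one component at a fading step."""
    if step == 1:
        return component      # Step 1: full component name is displayed
    if step == 2:
        return component[0]   # Step 2: only the first letter is displayed
    if step == 3:
        return ""             # Step 3: no stimulus prompt
    raise ValueError("step must be 1, 2, or 3")


def check_answers(typed: List[str]) -> List[bool]:
    """Compare typed entries with the full list (case-insensitive by assumption)."""
    return [t.strip().lower() == c.lower() for t, c in zip(typed, COMPONENTS)]


for step in (1, 2, 3):
    print(step, [prompt_for(c, step) for c in COMPONENTS])

# A trainee's hypothetical attempt; the second entry is misspelled.
print(check_answers(["operational definition", "Generalisation", "Maintenance"]))
```

Consistent with the tutorial as described, checking answers here returns feedback only; nothing in the sketch forces a correct response before moving on.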

Module 2

In Module 2, participants were oriented to an empirical research article, where to find information, and how to use the information to support their protocol. The home page displayed screenshots of the four main sections of an article, and trainees navigated by clicking to expand each section, numbered in order. They would first click “Introduction” and be directed to a page with the Introduction on the right and click-to-view questions on the left. From top to bottom, each section had questions that included where to find that section (e.g., the introduction is the first major section of the article, and appears immediately after the title and abstract), what information could be found in that section, and how to use that section of the article to gather information for a teaching protocol. For example, trainees learned that the introduction frequently provides an overview of previous research, and that learning what has and has not been successful for teaching certain skills could help them make an informed decision on their teaching strategies.

Module 3

Finally, in Module 3, participants were taught to individualize the sections of an acquisition protocol that they had outlined according to example learner characteristics and considerations, including how to find and individualize prompt and error correction methods. First, trainees were provided with a research article and a client vignette. Client vignettes were developed by the experimenters, and included information typically provided to clinicians about new clients, as well as details important to skill acquisition development. These were developed to determine if participants incorporated learning history and preferences or reinforcers into their programs. The vignette described a hypothetical client’s gender, age, interfering behavior, vocal-verbal skills, prompt and error correction history, and preferred items. The tutorial instructed them to read both documents in their entirety before returning to Module 3.

They were then taken through each component, one by one, again. Before beginning writing, trainees were provided with some basic writing tips, including writing in a specific but concise manner, and keeping tense consistent throughout the document. In each component section, they were given brief reminders of considerations and a long text field to write out the details of that protocol section for the specified client in the vignette using the methods from the article. Once they finished writing, they clicked “next” and the tutorial provided a sample written by one of the authors. They could then go back to check and edit their own response, or proceed to the next section, where the same text fields and samples were provided for each component. The samples served as models from expert protocol samples for trainees to imitate and included a rationale as to why the author included the information they did. Once trainees wrote a completed protocol, they were guided through the end of the tutorial. They were congratulated for completing the content, provided with some follow up considerations (e.g., continuing to collect procedural integrity data after protocols are written, trained, and implemented), and the training concluded.

Evaluation of Staff Training

Method

Participants, Setting, and Materials

Participants were 14 students enrolled in an undergraduate or graduate (masters or postmasters) behavior analysis program who had not received formal training on writing an acquisition protocol. The only exclusion criterion was scoring greater than 75% on the pretest. Pretest scores were collected and ranked into three equal percentile ranges (1%–25%, 26%–50%, 51%–75%). Pretest, treatment, and posttest components were conducted in participant homes on personal computers. Participants completed a questionnaire to provide information on relevant characteristics related to tutorial performance (see Table 2).
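The exclusion criterion and the sorting of pretest scores into three ranges can be expressed as a short sketch. The participant IDs and scores are made up, and the function names are hypothetical. Placing a score of 0% in the lowest range is an assumption, because the stated ranges begin at 1%.

```python
from typing import Dict, List, Optional


def pretest_range(score_pct: float) -> Optional[str]:
    """Return the pretest range label, or None if the participant is excluded."""
    if score_pct > 75:
        return None  # exclusion criterion: scored greater than 75% on the pretest
    if score_pct <= 25:
        return "1%-25%"  # assumption: a score of 0% would also fall here
    if score_pct <= 50:
        return "26%-50%"
    return "51%-75%"


def group_by_range(scores: Dict[str, float]) -> Dict[str, List[str]]:
    """Group eligible participant IDs by pretest range."""
    groups: Dict[str, List[str]] = {"1%-25%": [], "26%-50%": [], "51%-75%": []}
    for participant, score in scores.items():
        label = pretest_range(score)
        if label is not None:
            groups[label].append(participant)
    return groups


# Made-up pretest percentages, for illustration only.
example_scores = {"P1": 12.0, "P2": 40.0, "P3": 68.0, "P4": 80.0, "P5": 24.0}
print(group_by_range(example_scores))
```

In this example, the hypothetical participant P4 is excluded for scoring above 75%, and the remaining participants are grouped for later matching.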

Table 2.

Participant questionnaire and results N (%)

| Question | Response | Treatment | Control |
|---|---|---|---|
| How often do you write programs to teach clients skills? | Never | 3 (43%) | 3 (43%) |
| | Rarely | 2 (29%) | 2 (29%) |
| | Sometimes | 1 (14%) | 1 (14%) |
| | Often | 1 (14%) | 1 (14%) |
| In your current position, is one of your responsibilities to write programs to teach clients skills? | No, someone else has this responsibility | 2 (29%) | 4 (57%) |
| | No, but I often help with this task | 2 (29%) | 1 (14%) |
| | Yes, I am expected to do this under close supervision | 3 (43%) | 2 (29%) |
| | Yes, this is part of my independent responsibilities | 0 | 0 |
| How many years have you worked in the field of ABA? | I don’t work in ABA | 1 (14%) | 0 |
| | Less than 1 year | 2 (29%) | 1 (14%) |
| | 1–2 years | 4 (57%) | 1 (14%) |
| | More than 2 years | 0 | 5 (71%) |
| What is your current GPA? | Less than 3.5 | 0 | 0 |
| | 3.5–3.7 | 0 | 2 (29%) |
| | 3.7–3.8 | 2 (29%) | 1 (14%) |
| | 3.9–4.0 | 5 (71%) | 4 (57%) |
| What is your highest degree held? | Bachelors | 5 (71%) | 5 (71%) |
| | Masters degree in an unrelated field | 0 | 0 |
| | Masters in another related field | 2 (29%) | 0 |
| | Masters in ABA | 0 | 2 (29%) |
| How confident do you feel about your skills in developing research-based skill acquisition programs for others? | Not confident at all | 2 (29%) | 1 (14%) |
| | Somewhat confident | 5 (71%) | 5 (71%) |
| | Very confident | 0 | 1 (14%) |
| | Extremely confident | 0 | 0 |

Dependent Variables and Data Collection

During the pretest and posttest, data were collected on correct completion of each component of a skill acquisition protocol based on a research article and a client vignette (see Appendix B). Articles used were chosen based on author agreement that they were recently published in a major peer-reviewed behavior analytic journal, described procedures thoroughly and clearly, and targeted a skill commonly taught by ABA providers. Vignettes were created by the authors to contrive details to guide individualization of protocols, for example the client’s successful prompt history, imitation skills, and reinforcers. These data were summarized as the percentage of correct components of a skill acquisition protocol. Percentages were calculated by dividing the number of correct components by the total number of components. Individual scores were graphically represented on a dot plot, and data were summarized as group means and visually analyzed using bar graphs.

Data were also collected on quiz performance during the tutorial and reported as mean percentage of correct responses per participant across quizzes. Data were summarized by dividing the total number of points earned by the number of total points possible and multiplying by 100%. Quiz scores were automatically scored by the computer program and saved by the experimenter. Finally, data were collected on the duration to complete the tutorial for each participant, as well as to complete the pretest and posttest, by using a timing feature on session recordings and recording total time at the end of each task. All primary data were collected and summarized by the experimenters.
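The two percentage measures described above can be sketched in a few lines of Python. This is a minimal illustration only: the function name, the 25-item task analysis, and the quiz point values are hypothetical and are not taken from the study materials.

```python
def percent_correct(correct: float, total: float) -> float:
    """Return correct / total expressed as a percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= correct <= total:
        raise ValueError("correct must be between 0 and total")
    return 100.0 * correct / total


# Hypothetical protocol scored against a 25-component task analysis.
protocol_score = percent_correct(correct=20, total=25)

# Hypothetical quiz results as (points earned, points possible) pairs.
quiz_points = [(9, 10), (10, 10), (8, 10)]
earned = sum(e for e, _ in quiz_points)
possible = sum(p for _, p in quiz_points)

# Total points earned divided by total points possible, times 100.
quiz_score = percent_correct(earned, possible)
```

Pooling points across quizzes before dividing, as the text describes, weights each quiz by its length rather than averaging per-quiz percentages.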

Experimental Design

Participants from each pretest range were randomly assigned to treatment or control groups. A matched-subjects group experimental design was used to compare mean test scores between the treatment group and the control group. A between-subjects two-group design compares two samples of individuals deemed equivalent on specific variables, one of which receives the intervention. This design controls for extraneous variables by recruiting individuals from the same population and matching subjects before randomizing assignment to control and treatment groups to minimize differences across groups. A limitation of this design is that participants cannot be matched across all variables due to availability constraints. Therefore, the variable considered most important for reducing variability due to individual differences is often chosen. For the purpose of this study, pretest scores were chosen as the matching variable because doing so controlled for performance-related variance across participants within each group. A functional relation is then demonstrated if changes are observed postintervention for the treatment group, and those changes are significantly different from pretest, significantly different from those of the control group, and unlikely to be due to chance (Jackson, 2012). An independent-groups t test, a parametric statistical test that compares the means of two different samples, was used to compare group scores pre- and postintervention. Effect size was also calculated to determine the proportion of variance in the dependent variable accounted for by the manipulation of the independent variable. Effect size indicates how large a role the conditions of the independent variable played in determining scores on the dependent variable (Jackson, 2012). It was predicted that the treatment group would have significant improvement in posttest scores compared to pretest scores, and that this improvement would be significantly different from any changes observed for the control group.
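As a rough illustration of the analysis described above, the following sketch computes an independent-groups t statistic with pooled variance and one common proportion-of-variance effect size, r² = t² / (t² + df). The difference scores are hypothetical, the function names are invented for this example, and the choice of r² is an assumption, because the text does not state which effect size index was used.

```python
import math
from statistics import mean, variance


def independent_t(a, b):
    """Student's independent-groups t test with pooled variance.

    Returns the t statistic and its degrees of freedom (n1 + n2 - 2).
    """
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / standard_error, df


def r_squared(t, df):
    """Proportion of variance accounted for, derived from t and df."""
    return t ** 2 / (t ** 2 + df)


# Hypothetical difference scores (posttest minus pretest), seven per group.
treatment_gain = [45, 38, 52, 30, 41, 36, 44]
control_gain = [12, 20, 8, 25, 15, 10, 18]

t_stat, df = independent_t(treatment_gain, control_gain)
effect = r_squared(t_stat, df)
```

With seven participants per group, the degrees of freedom are 12, which matches the form in which the t statistic is reported in the Results. The p value would then be read from a t distribution with 12 degrees of freedom, for example from a statistical table or a statistics library.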

Experimental Procedure

Pretest

During the pretest condition, participants met with the experimenter via Zoom or Google Meet. Participants were instructed to create a protocol based on the method in a research article using a script (Appendix C). All participants were then given a research article and a new client vignette (see Appendix B). Half of the participants (quasirandomly selected) received the first article and client vignette for the pretest and the second article and client vignette for the posttest. For the other half of the participants, the order of document presentation was reversed to control for difficulty of materials. All participants were proctored live by using the screen share feature as they completed the pretest. The experimenter viewed the participant’s desktop throughout the task. No additional instructions, materials, or feedback were provided.

Training

After the pretest, participants were asked to complete the electronic participant demographic survey sent to them via email while the experimenter scored the pretest. Participants were then randomly assigned to control or treatment group based on score range. At first, participants in each percentile range (1%–25%, 26%–50%, 51%–75%) were assigned using a random generator. As subsequent participants scored within those ranges, they were matched to randomly assigned participants, and placed in the other group. Individuals in the control group were provided with textual self-instructional materials that matched instructional content on how to write a skill acquisition protocol. A PDF “manual” was sent via email, and participants were told that they could review the manual for as long as they’d like, and that they may leave and come back to it as they wish. They were also asked to email the experimenter when they finished reviewing the manual. Participants in the treatment group were asked to complete a learning tutorial that would take 1–2 hr to complete; they were provided with login information to the computer-based tutorial. They were also told that they could leave and come back to the tutorial and complete it at their own pace. For both groups, instructions were given vocally during video conferencing immediately following the demographic survey, as well as in written format in an email containing the necessary files and links. Textual prompts for 5-min breaks were embedded following each section of the tutorial. This study aimed to evaluate the effectiveness of the tutorial in the absence of trainer presence; therefore, participants completed the tutorial/manual on their own time following the pretest meeting. Quiz scores and duration of interaction with the tutorial were automatically recorded. Control group participants were asked to record how long in minutes they spent reviewing the manual.
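The matched assignment procedure described above can be sketched as follows. This is one possible reading of the procedure, not the authors' actual tool: the function names, participant identifiers, scores, and the handling of scores at band boundaries are all assumptions made for illustration.

```python
import random


def band_for(score):
    """Map a pretest percentage to a matching band (boundary handling assumed)."""
    if score > 75:
        return None  # excluded: scored greater than 75% on the pretest
    if score > 50:
        return "51-75"
    if score > 25:
        return "26-50"
    return "1-25"


def assign(participants, rng):
    """Assign participants to groups in enrollment order.

    The first unmatched participant in a band is assigned at random; the next
    participant in that band is matched to them and placed in the other group.
    """
    unmatched = {}  # band -> group of the participant still awaiting a match
    groups = {}
    for pid, score in participants:
        band = band_for(score)
        if band is None:
            continue
        if band not in unmatched:
            group = rng.choice(["treatment", "control"])
            unmatched[band] = group
        else:
            group = "control" if unmatched.pop(band) == "treatment" else "treatment"
        groups[pid] = group
    return groups


# Hypothetical enrollment order with pretest scores (percent correct).
sample = [("P01", 20), ("P02", 45), ("P03", 60), ("P04", 30), ("P05", 24),
          ("P06", 71), ("P07", 40), ("P08", 35), ("P09", 21), ("P10", 55),
          ("P11", 50), ("P12", 28), ("P13", 66), ("P14", 25), ("P15", 80)]

assignment = assign(sample, random.Random(0))
```

Because every second participant in a band is placed opposite their match, group sizes stay balanced whenever each band contains an even number of participants, regardless of the random draws.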

Posttest

Once participants completed training and contacted the experimenter, a posttest meeting was set up in the same manner as the pretest. All participants were given a research article and client vignette comparable to pretest materials. Procedures remained identical to the pretest. Control group participants were not allowed to use the manual or any notes taken from it. Treatment group participants were also not permitted to use the tutorial or any notes. This was ensured by having participants use the webcam to show the experimenter their immediate area, as well as use the screen share feature to allow the experimenter to view their entire desktop. Participants were monitored throughout their training to ensure they did not use supplemental materials or leave the area.

Interobserver Agreement and Procedural Integrity

A second, independent blind data collector scored 50% of randomly selected protocols during pretest and posttest. Agreement was defined as both observers scoring a component as correct or incorrect. Interobserver agreement was calculated for each protocol by dividing the number of components with agreement by the total number of components and multiplying by 100. Mean interobserver agreement was 90% (range: 83%–96%). The software was programmed to automatically record duration measures and quiz responses; therefore, calculation of interobserver agreement was unnecessary for these dependent variables.
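The component-by-component agreement calculation described above can be expressed as a short sketch. The function name and the two observers' scores below are hypothetical; only the formula (components with agreement divided by total components, multiplied by 100) comes from the text.

```python
def interobserver_agreement(primary, secondary):
    """Percentage of components that both observers scored the same way."""
    if not primary or len(primary) != len(secondary):
        raise ValueError("both observers must score the same nonempty set of components")
    agreements = sum(p == s for p, s in zip(primary, secondary))
    return 100.0 * agreements / len(primary)


# Hypothetical scoring of a 10-component protocol (True = scored correct).
primary_scores = [True, True, False, True, False, True, True, True, False, True]
secondary_scores = [True, True, False, True, True, True, True, True, False, True]

# The observers disagree on one component (the fifth), so 9 of 10 agree.
ioa = interobserver_agreement(primary_scores, secondary_scores)
```

Note that an agreement is counted whenever the two scores match, whether both observers marked the component correct or both marked it incorrect.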

Procedural integrity data were collected via recordings of pretest and posttest meetings to ensure that the experimenter provided instructions via the provided script, live proctored the participant throughout, and that no additional instructions or resources were provided or used. Procedural integrity data were collected for 33% of sessions evenly across pretest and posttest of both treatment and control groups. Data were collected on each study component along a task analysis and summarized as percentage of steps performed correctly. Mean procedural integrity was 97% (range: 83%–100%).

Social Validity

Social validity of the goals of this study was determined via a questionnaire administered to BCBAs asking questions regarding current protocol development practices and the utility of a training system to teach these skills, as well as the importance of the skills targeted. These BCBAs were selected by again emailing those identified as being directors of ABA centers or programs and did not include the same experts sampled for protocols during tutorial development. In total, five respondents provided their feedback on the importance of goals identified in this study. The same respondents completed a separate questionnaire to evaluate the social validity of outcomes. They rated five randomly selected treatment group posttest protocols on thoroughness, quality, ease of implementation, and acceptability. All 14 participants completed an anonymous electronic questionnaire at their convenience regarding the social validity of the procedures following their participation in intervention. They rated the ease and acceptability of the training system they experienced, either the tutorial or the control group manual. All questionnaires included questions with clearly defined items on a Likert scale and options for free response (see Tables 1, 2 and 3).

Table 3.

Social validity of goals survey results

| Statement | Strongly Disagree | Disagree | Agree | Strongly Agree |
| --- | --- | --- | --- | --- |
| Writing a skill acquisition protocol based on current research is a skill that behavior technicians and new BCBAs are well versed in | 1 (20%) | 3 (60%) | 1 (20%) | 0 |
| Trainings and instruction on how to write research-based acquisition protocols are widely available | 0 | 5 (100%) | 0 | 0 |
| Available trainings on how to write these protocols are good quality and based on staff training best practice | 0 | 2 (40%) | 3 (60%) | 0 |
| This is an important skill for behavior technicians and BCBAs | 0 | 0 | 0 | 5 (100%) |
| If a quality computer-based training were available to teach this skill, I would consider using it | 0 | 0 | 1 (20%) | 4 (80%) |

Results

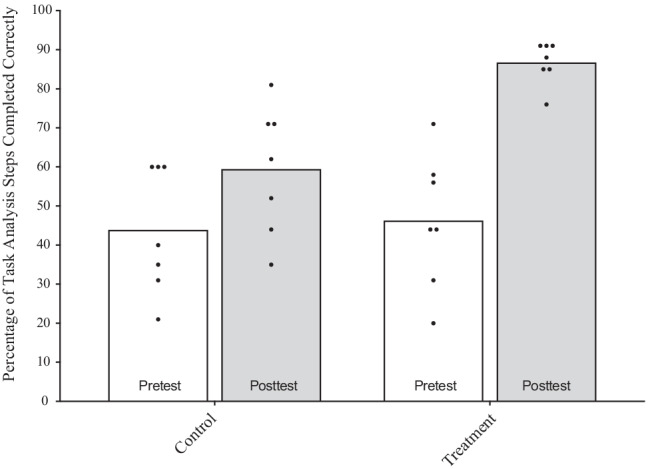

Figure 1 shows individual participant and mean group scores on percentage of protocol steps correctly completed. During the pretest, control and treatment group means were comparably low (44% [range: 21%–60%] and 46% [range: 20%–71%], respectively). Following training with the PDF manual, the control group mean increased to 59% (range: 35%–81%), a mean increase of 16%. Following training with the computer-based tutorial, the treatment group mean increased to 87% (range: 76%–91%), a mean increase of 40%. Individual data points for the treatment group clustered tightly, indicating consistent posttest performance. Mean difference scores were compared for control and treatment groups using an independent-groups t test. Difference scores were significantly higher for the treatment group, t(12) = 3.27, p < 0.003 (one-tailed).

Fig. 1.

Group and individual performance on pretests and posttests for control and treatment groups. Note. The bars represent mean scores for each group, and the data points within each of those bars represent individual scores within that group.

Participant duration on study tasks varied slightly across groups, with control group participants spending more time on both writing and self-paced training. During pretest, treatment group participants spent an average of 64 min (range: 47–81 min) writing their protocols, whereas control group participants took an average of 78 min (range: 58–90 min). During the training phase, treatment group participants spent an average of 119 min (range: 70–175 min) completing self-paced training, whereas control group participants took an average of 136 min (range: 68–140 min). During posttest, treatment group participants spent an average of 68 min (range: 60–88 min) writing their protocols, whereas control group participants took an average of 78 min (range: 42–90 min). Quiz scores for treatment group participants averaged 95% (range: 87%–100%).

Responses to the social validity of goals survey are displayed in Table 3. Eighty percent of respondents disagreed when asked whether writing research-based acquisition goals is a skill in which behavior technicians and new BCBAs are well-versed. All respondents indicated that trainings on this skill are not widely available, that this is an important skill for technicians and BCBAs, and that they would consider using a quality computer-based training to teach this skill. One respondent commented, “Although resources and some manuals include information on the procedures or ‘should dos’ of creating protocols, I am not aware of a training manual related to creating full protocols, and this is an area the field should address.”

Table 4 shows the results of surveys used to assess social validity of procedures across all participants. Responses to each question are displayed for the control and treatment groups. Most participants in both groups agreed that the content of the training was at an appropriate difficulty level, and all agreed that the training was applicable to their work and that they felt confident in writing protocols following the training. All participants in the treatment group agreed that they enjoyed the format of the tutorial and that it was preferable to other training formats, whereas responses from control group participants on these two questions were mixed.

Table 4.

Social validity of procedures survey results

| The content of the training was at an appropriate difficulty level | Strongly Disagree | Disagree | Agree | Strongly Agree |
| --- | --- | --- | --- | --- |
| Treatment | 0 | 0 | 2 (29%) | 5 (71%) |
| Control | 1 (14%) | 0 | 2 (29%) | 4 (57%) |

| I will apply the content of the training when I write programs for learners as part of my clinical work | Strongly Disagree | Disagree | Agree | Strongly Agree |
| --- | --- | --- | --- | --- |
| Treatment | 0 | 0 | 0 | 7 (100%) |
| Control | 0 | 0 | 0 | 7 (100%) |

| I enjoyed the format of the training | Strongly Disagree | Disagree | Agree | Strongly Agree |
| --- | --- | --- | --- | --- |
| Treatment | 0 | 0 | 2 (29%) | 5 (71%) |
| Control | 3 (43%) | 0 | 0 | 4 (57%) |

| The training was preferable to other training formats | Strongly Disagree | Disagree | Agree | Strongly Agree |
| --- | --- | --- | --- | --- |
| Treatment | 0 | 0 | 1 (14%) | 6 (86%) |
| Control | 2 (29%) | 0 | 2 (29%) | 3 (43%) |

| After the training, I feel confident in writing skill acquisition protocols | Strongly Disagree | Disagree | Agree | Strongly Agree |
| --- | --- | --- | --- | --- |
| Treatment | 0 | 0 | 3 (43%) | 4 (57%) |
| Control | 0 | 0 | 3 (43%) | 4 (57%) |

Survey results for social validity of outcomes are displayed in Table 5. Respondents scored all five protocols favorably, indicating that they included all components important to teaching the skill and that procedures could be easily implemented. Respondents also agreed that the protocols were high quality and would be acceptable in their practice.

Table 5.

Social validity of outcomes survey results

| Statement | Strongly Disagree | Disagree | Agree | Strongly Agree |
| --- | --- | --- | --- | --- |
| This protocol includes all components important to teaching the skill | 0 | 0 | 5 (100%) | 0 |
| This protocol is written in a way that procedures could easily be implemented | 0 | 0 | 0 | 5 (100%) |
| This protocol is of high quality | 0 | 0 | 3 (60%) | 2 (40%) |
| I would accept and use this protocol in my practice | 0 | 0 | 2 (40%) | 3 (60%) |

Discussion

To our knowledge, there are no existing investigations on packages designed to explicitly teach ABA providers to create skill acquisition protocols from scratch with only minimal resources available. Many providers likely rely on curricula or templates for client programs; however, the related protocols may not meet employer or funder expectations, or may not be guided by current research. Therefore, the purpose of this study was to evaluate the effectiveness of a computer-based tutorial on teaching skills related to writing an individualized acquisition protocol based on a research article. The tutorial resulted in a significant increase in accuracy of protocols during posttest when compared to a textual training manual. This study contributes to the literature by applying CBI training procedures to a complex skill, as well as evaluating training in the absence of a trainer (Marano et al., 2020).

Although the BACB (2022a) identifies writing protocols as a primary job responsibility of ABA providers, no clear guidelines on this skill exist in the literature or guiding textbooks. Such a skill is important because it ensures that practitioners write protocols that are individualized and based on current skill acquisition research. CBI training on this skill is particularly helpful in a growing, high-demand field in which the qualified trainers are unable to meet training needs (Zhang & Cummings, 2019). This tutorial was completed by all participants in under 2 hr, without a trainer present, and was rated as a favorable format by participants. In addition, training was more efficient for the intervention group, whose participants spent less overall time completing training than participants in the control group.

In developing this research question, the authors found that a multitude of resources and literature exists on the contents of behavior plans focused primarily on behavior reduction. Although the content did not translate well to skill acquisition, this line of research informed our methods and warrants a comprehensive evaluation of its own. Future research should focus on a review of the literature evaluating and advising on behavior plan content, as well as a comparison of contents across types of documentation used in the field.

This training was based upon a sample of protocols submitted by experts in the field; participants were taught to include specific components, and the details of those components were drawn from the provided samples. Due to this design, posttest protocols were similar in content, with variations in detail. Future research may focus on analyzing protocols across a wider population of individuals and include multiple exemplar training to promote generalized responding. Providers may have varied expectations as to the content of protocols, and it is important that providers can write protocols in varied ways while still including critical implementation details. For example, our participants were taught to outline components first, resulting in similar ordering of component sections, whereas expert samples varied in the order in which components appeared. Wording also varied across expert samples; for example, teaching targets were sometimes called “stimuli” or “teaching sets.” In addition, although prerequisites did not appear across two or more of our expert samples, they did appear on at least one, indicating that some employers may expect clinicians to demonstrate generalized responding with components included on protocols.

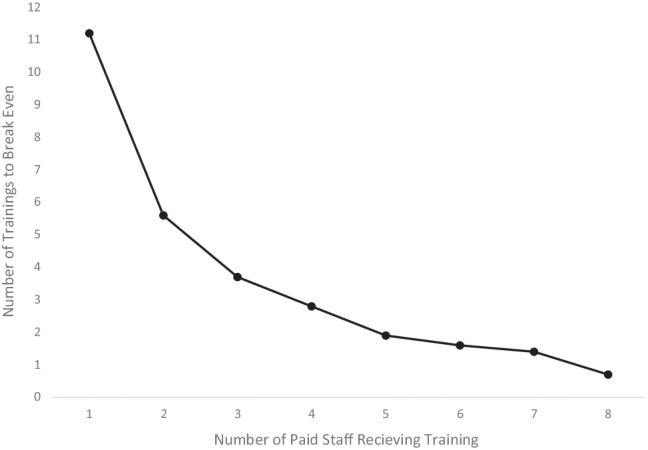

Development of the tutorial required training in the software, quizzing features, and hosting requirements. In total, learning and developing the tutorial took roughly 40 hr. The time and training requirements of developing these training materials may be considered a limitation; however, these materials can continue to be administered to ongoing cohorts of trainees in the absence of the trainer. We conducted a return-on-investment analysis as in Walker and Seller’s (2021) article on computer-based training. The purpose of such an analysis is to demonstrate how the initial cost of developing a CBI training compares to ongoing trainings that require the presence of a paid trainer. To do so, the initial investment is calculated; its cost per use diminishes each time the training is used, typically at a lower operating cost than that of an in-person trainer. The investigators spent 40 hr in total developing the tutorial, including learning the software, developing content, and corresponding with colleagues to facilitate hosting it on Blackboard. The cost of training development was estimated at $1,680. This cost was calculated by multiplying the average hourly wage of an eLearning Developer (Glassdoor, 2022) by the 40 hr spent on development.

Operating costs calculated for in-person trainings of a similar duration were then compared to the development cost to determine how many trainings, per number of employees, would be needed to break even, not including training space overhead or materials (Figure 2). If eight employees participated in training at a time, the cost of in-person training would surpass the cost of tutorial development after a single training. If only one employee was trained at a time, the tutorial development cost would be surpassed after 12 trainings. This indicates that the tutorial is likely a cost-effective staff training method for most organizations.
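The break-even logic above can be sketched as a short calculation. Only the $1,680 development cost and the 40 hr of development time come from the text (together they imply a rate of $42 per hour). The cost model, wages, and session length below are hypothetical placeholders, so this sketch is not intended to reproduce the values plotted in Figure 2.

```python
import math

DEVELOPMENT_COST = 1680.0  # reported development cost in dollars
DEVELOPMENT_HOURS = 40     # reported hours spent on development

# Hourly developer rate implied by the two reported figures.
developer_hourly = DEVELOPMENT_COST / DEVELOPMENT_HOURS


def in_person_session_cost(trainer_hourly, staff_hourly, n_staff, hours):
    """Assumed cost model: paid trainer time plus paid time for each attendee."""
    return hours * (trainer_hourly + staff_hourly * n_staff)


def sessions_to_break_even(development_cost, cost_per_session):
    """Smallest number of in-person sessions whose total cost reaches the development cost."""
    return math.ceil(development_cost / cost_per_session)


# Hypothetical wages and duration, chosen only to show the shape of the analysis.
TRAINER_HOURLY = 40.0
STAFF_HOURLY = 20.0
SESSION_HOURS = 2.0

break_even = {
    n_staff: sessions_to_break_even(
        DEVELOPMENT_COST,
        in_person_session_cost(TRAINER_HOURLY, STAFF_HOURLY, n_staff, SESSION_HOURS),
    )
    for n_staff in (1, 8)
}
```

Under any model of this kind, the number of sessions needed to break even falls as more staff attend each in-person session, which is the pattern the analysis describes.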

Fig. 2.

Return on investment. Note. Each data point in this figure represents the number of in-person trainings that would need to be provided, as a function of the number of paid staff receiving the training, to recoup the $1,680 initial development cost of the CBI.

In addition, the primary dependent variable was accuracy of protocol components, determined by rating protocols across a task analysis. The qualitative nature of each task analysis item resulted in some subjectivity (e.g., “mastery criterion is appropriate for this skill”). Although IOA averaged 90%, agreement amongst data collectors required general retraining on IOA procedures due to initial low agreement scores. Future research may consider other methods of data collection to reduce subjectivity and reliably determine outcomes of training.

This training system may be a feasible replacement for or supplement to existing methods of editing templates, and may lead to more effective and efficient protocol-writing repertoires. Because the program is easy to administer, can be completed within several hours in the absence of a trainer, and is accessible from any computer with an internet connection, it may be a viable training option for employers and instructors. This training may also encourage ABA providers to contact the research literature to guide protocol development, an effortful requirement of our field that has warranted previous research (Briggs & Mitteer, 2021).

This study was the first to investigate methods to teach ABA providers to create skill acquisition protocols. To our knowledge, no guidelines or empirically validated trainings exist to teach this skill. The feasibility of a training program was demonstrated and validated by experienced BCBAs evaluating outcomes. Because this is the first study of its kind, the results should be viewed with measured caution, and future research is needed to replicate and extend the findings. Future research suggestions include administering training to a larger and more diverse group of behavior analysts and students, improving upon the training by including more content and making training development and completion more efficient, and evaluating different types of research and acquisition protocols. Such research will further inform how clinicians learn complex skills that are integral to the success of their clients in a manner that is preferred, efficient, and effective. Finally, the authors urge those charged with designing coursework, supervised fieldwork, and professional development to incorporate topics related to writing programs for skill acquisition.

Appendix A

Appendix B

Training Vignette

Mary Jones is a 5-year-old girl who follows one-step instructions, has a generalized imitation repertoire, mands for a variety of preferred items, and displays moderate levels of motor stereotypy and eye gazing. Her IEP goals include auditory-visual matching of objects and pictures. This will be her first auditory-visual matching program. In Mary’s visual-visual matching program with objects, errorless learning procedures are used and have been effective. She uses a token board, and tokens are established as conditioned reinforcers.

Write an acquisition program for Mary using the more effective procedure from the study. No computer is available for this program, so pictures will be printed. When writing details for your program, aside from the information provided about the client, you may include any other hypothetical details you like.

Testing Vignettes

John Douglas is a 12-year-old boy diagnosed with ASD. He has extensive experience with discrete trial instruction. He has a strong imitation repertoire, vocally communicates via simple sentences and can follow a 12-component visual activity schedule. He has had difficulty progressing with auditory-visual tasks. Errors vary with teaching targets, and he rarely demonstrates correct responding with novel generalization targets. Anecdotally, his teacher reports that his expressive skills are stronger than his receptive skills.

Write an acquisition program for John using the most effective procedures from the study. When writing details for your program, aside from the information provided about the client, you may include any other hypothetical details you like.

Matthew Jordan is a 4-year-old boy with some experience with discrete trial instruction. He has good attending, communicates vocally, has a generalized manding repertoire, demonstrates a variety of intraverbals, and has some overselectivity with listener responding activities. No challenging behavior is reported, but he has a history with prompt dependency. He loves sports and uses a Philadelphia Eagles token board with his instructor.

Write an acquisition program for Matthew using the procedure from the study. When writing details for your program, aside from the information provided about the client, you may include any other hypothetical details you like.

Appendix C

Participant Instructions Script

Shortly you will be asked to read an article and a client description and create a protocol based on the article. A protocol outlines specifically how to run a teaching program for a client. You will not be allowed to use any template, aids, or other resources to complete this task. You can use a blank Word document to write your protocol. If you need to leave the computer, you will not be able to continue your participation, so please take time now to settle in. You will be given an hour and thirty minutes to complete this task. I won’t be able to answer any questions about the task until you have completed all parts of the study. If you do not know how to do any part of this task, please do your best and submit the parts that you can complete. When you’re ready to start, please share your screen with me.

Authors' Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Allison Parker. The first draft of the manuscript was written by Allison Parker, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Funding

The authors did not receive support from any organization for the submitted work.

Data Availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Code Availability

Information regarding software used in this study is available from the corresponding author on reasonable request.

Declarations

Conflicts of Interest/Competing Interests

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Ethics Approval

This study was reviewed and approved by the Caldwell University Institutional Review Board and performed in accordance with the ethical standards as laid down in the 1964 Declaration of Helsinki and its later amendments.

Consent to Participate

Freely given, informed consent to participate in the study was obtained from all participants.

Consent for Publication

Freely given, informed consent to use data obtained during the study for publication purposes was obtained from all participants.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- ABADat. (2022). ABADat.

- Baer DM, Wolf MM, Risley TR. Some current dimensions of applied behavior analysis. Journal of Applied Behavior Analysis. 1968;1:91–97. doi: 10.1901/jaba.1968.1.91. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Behavior Analyst Certification Board. (2020). Ethics code for behavior analysts. https://bacb.com/wp-content/ethics-code-for-behavior-analysts/

- Behavior Analyst Certification Board. (2022a). Board Certified Behavior Analyst Handbook.https://www.bacb.com/wp-content/uploads/2022/01/BCBAHandbook_220601.pdf

- Behavior Analyst Certification Board. (2022b). US employment demand for behavior analysts: 2010–2021.

- Briggs, A. M., & Mitteer, D. R. (2021). Updated strategies for making regular contact with the scholarly literature. Behavior Analysis in Practice, 8, –1, 12. Advance online publication. 10.1007/s40617-021-00590-8 [DOI] [PMC free article] [PubMed]

- Chok JT, Shlesinger A, Studer L, Bird FL. Description of a practitioner training program on functional analysis and treatment development. Behavior Analysis in Practice. 2012;5(2):25–36. doi: 10.1007/BF03391821. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Drifke MA, Tiger JH, Wierzba BC. Using behavioral skills training to teach parents to implement three-step prompting: A component analysis and generalization assessment. Learning & Motivation. 2017;57:1–14. doi: 10.1016/j.lmot.2016.12.001. [DOI] [Google Scholar]

- Eldevik S, Ondire I, Hughes JC, Grindle CF, Randell T, Remington B. Effects of computer simulation training on in vivo discrete trial teaching. Journal of Autism & Developmental Disorders. 2013;43(3):569–578. doi: 10.1007/s10803-012-1593-x. [DOI] [PubMed] [Google Scholar]

- Fovel, J. T. (2001). The ABA program companion: Organizing quality programs for children with autism and PDD. DRL Books.

- Geiger KB, LeBlanc LA, Hubik K, Jenkins SR, Carr JE. Live training versus e-learning to teach implementation of listener response programs. Journal of Applied Behavior Analysis. 2018;51(2):220–235. doi: 10.1002/jaba.444.

- Gerencser KR, Higbee TS, Akers JS, Contreras BP. Evaluation of interactive computerized training to teach parents to implement photographic activity schedules with children with autism disorder. Journal of Applied Behavior Analysis. 2017;50(3):567–581. doi: 10.1002/jaba.386.

- Gerencser KR, Higbee TS, Contreras BP, Pellegrino AJ, Gunn SL. Evaluation of interactive computerized training to teach paraprofessionals to implement errorless discrete trial instruction. Journal of Behavioral Education. 2018;27(4):461–487. doi: 10.1007/s10864-018-9308-9.

- Gianoumis S, Seiverling L, Sturmey P. The effects of behavior skills training on correct teacher implementation of natural language paradigm teaching skills and child behavior. Behavioral Interventions. 2012;27:57–74. doi: 10.1002/bin.1334.

- Glassdoor. (2022). Salary: Elearning developer. https://www.glassdoor.com/Salaries/philadelphia-elearning-developer-salary-SRCH_IL.0,12_IM676_KO13,32.htm?clickSource=searchBtn

- Graff RB, Karsten AM. Evaluation of a self-instruction package for conducting stimulus preference assessments. Journal of Applied Behavior Analysis. 2012;45(1):69–82. doi: 10.1901/jaba.2012.45-69.

- Grow L, LeBlanc L. Teaching receptive language skills: Recommendations for instructors. Behavior Analysis in Practice. 2013;6:56–75. doi: 10.1007/BF03391791.

- Higbee TS, Aporta AP, Resende A, Nogueira M, Goyos C, Pollard JS. Interactive computer training to teach discrete-trial instruction to undergraduates and special educators in Brazil: A replication and extension. Journal of Applied Behavior Analysis. 2016;49(4):780–793. doi: 10.1002/jaba.329.

- Higgins WJ, Luczynski KC, Carroll RA, Fisher WW, Mudford OC. Evaluation of a telehealth training package to remotely train staff to conduct a preference assessment. Journal of Applied Behavior Analysis. 2017;50(2):238–251. doi: 10.1002/jaba.370.

- Hirst JM, DiGennaro Reed F, Reed DD. Effects of varying feedback accuracy on task acquisition: A computerized translational study. Journal of Behavioral Education. 2013;22:1–15. doi: 10.1007/s10864-012-9162-0.

- Ingvarsson ET, Hanley GP. An evaluation of computer-based programmed instruction for promoting teachers’ greetings of parents by name. Journal of Applied Behavior Analysis. 2006;39(2):203–214. doi: 10.1901/jaba.2006.18-05.

- Jackson, S. L. (2012). Research methods and statistics (4th ed.). Thomson Wadsworth.

- Jenkins SR, DiGennaro Reed FD. A parametric analysis of rehearsal opportunities on procedural integrity. Journal of Organizational Behavior Management. 2016;36:255–281. doi: 10.1080/01608061.2016.1236057.

- Knapp, J., & Turnbull, C. (2014a). A complete ABA curriculum for individuals on the autism spectrum with a developmental age of 1–4 years: A step-by-step treatment manual including materials for teaching 140 foundational skills. Jessica Kingsley.

- Knapp, J., & Turnbull, C. (2014b). A complete ABA curriculum for individuals on the autism spectrum with a developmental age of 3–5 years: A step-by-step treatment manual including materials for teaching 140 foundational skills. Jessica Kingsley.

- Knapp, J., & Turnbull, C. (2014c). A complete ABA curriculum for individuals on the autism spectrum with a developmental age of 7 years up to young adulthood: A step-by-step treatment manual including materials for teaching 140 foundational skills. Jessica Kingsley.

- Knowles C, Massar M, Raulston TJ, Machalicek W. Telehealth consultation in a self-contained classroom for behavior: A pilot study. Preventing School Failure. 2017;61(1):28–38. doi: 10.1080/1045988X.2016.1167012.

- Leaf, R., McEachin, J., & Harsh, J. D. (1999). A work in progress: Behavior management strategies & a curriculum for intensive behavioral treatment of autism. DRL Books.

- Marano KE, Vladescu JC, Reeve KF, Sidener TM, Cox DJ. A review of the literature on staff training strategies that minimize trainer involvement. Behavioral Interventions. 2020;35(4):604–641. doi: 10.1002/bin.1727.

- Maurice, C. E., Green, G. E., & Luce, S. C. (1996). Behavioral intervention for young children with autism: A manual for parents and professionals. PRO-ED.

- McKeachie, W. J., & Svinicki, M. (2014). Teaching tips: Strategies, research, and theory for college and university teachers (14th ed.). Houghton Mifflin.

- Miltenberger, R. G. (2007). Behavioral skills training procedures. In R. G. Miltenberger (Ed.), Behavior modification: Principles and procedures (5th ed., pp. 251–266). Wadsworth/Cengage Learning.

- Mueller, M. M., & Nkosi, A. (2010). The big book of ABA programs. Stimulus Publications.

- Nosik MR, Williams WL, Garrido N, Lee S. Comparison of computer based instruction to behavior skills training for teaching staff implementation of discrete-trial instruction with an adult with autism. Research in Developmental Disabilities. 2013;34(1):461–468. doi: 10.1016/j.ridd.2012.08.011.

- Nosik MR, Williams WL. Component evaluation of a computer based format for teaching discrete trial and backward chaining. Research in Developmental Disabilities. 2011;32(5):1694–1702. doi: 10.1016/j.ridd.2011.02.022.

- O'Grady, A. C., Reeve, S. A., Reeve, K. F., Vladescu, J. C., & (Jostad) Lake, C. M. (2018). Evaluation of computer-based training to teach adults visual analysis skills of baseline-treatment graphs. Behavior Analysis in Practice, 11, 254–266. https://doi.org/10.1007/s40617-018-0266-4

- Pear, J. J., Schnerch, G. J., Silva, K. M., Svenningsen, L., & Lambert, J. (2011). Web-based computer-aided personalized system of instruction. In W. Buskist & J. E. Groccia (Eds.), New directions for teaching and learning (pp. 85–94). Jossey-Bass.

- Pollard JS, Higbee TS, Akers JS, Brodhead MT. An evaluation of interactive computer training to teach instructors to implement discrete trials with children with autism. Journal of Applied Behavior Analysis. 2014;47(4):1–12. doi: 10.1002/jaba.152.

- Quigley SP, Ross RK, Field S, Conway AA. Toward an understanding of the essential components of behavior analytic service plans. Behavior Analysis in Practice. 2018;11:436–444. doi: 10.1007/s40617-018-0255-7.

- Rethink Behavioral Health. (2022). Rethink. https://www.rethinkbehavioralhealth.com/

- Sarokoff RA, Sturmey P. The effects of behavioral skills training on staff implementation of discrete-trial teaching. Journal of Applied Behavior Analysis. 2004;37(4):535–538. doi: 10.1901/jaba.2004.37-535.

- Schnell LK, Sidener TM, DeBar RM, Vladescu JC, Kahng S. Effects of computer-based training on procedural modifications to standard functional analyses. Journal of Applied Behavior Analysis. 2017;51:87–98. doi: 10.1002/jaba.423.

- Scott, J., Lerman, D. C., & Luck, K. (2018). Computer-based training to detect antecedents and consequences of problem behavior. Journal of Applied Behavior Analysis, 51(4), 784–801. https://doi.org/10.1002/jaba.495

- Shea KA, Sellers TP, Smith SG, Bullock AJ. Self-guided behavioral skills training: A public health approach to promoting nurturing care environments. Journal of Applied Behavior Analysis. 2020;53(4):1889–1903. doi: 10.1002/jaba.769.

- Skinner, B. F. (1954). The science of learning and the art of teaching. Harvard Educational Review, 24, 86–97.

- Skinner BF. Teaching machines. Science. 1958;128(3330):969–977. doi: 10.1126/science.128.3330.969.

- The New England Center for Children. (2020). Autism curriculum encyclopedia. https://www.acenecc.org

- Vollmer TR, Iwata BA, Zarcone JR, Rodgers RA. A content analysis of written behavior management programs. Research in Developmental Disabilities. 1991;13:429–441. doi: 10.1016/0891-4222(92)90001-M.

- Wainer AL, Ingersoll BR. Disseminating ASD interventions: A pilot study of a distance learning program for parents and professionals. Journal of Autism & Developmental Disorders. 2013;43(1):11–24. doi: 10.1007/s10803-012-1538-4.

- Walker S, Sellers T. Teaching appropriate feedback reception skills using computer-based instruction: A systematic replication. Journal of Organizational Behavior Management. 2021;41(3):1–19. doi: 10.1080/01608061.2021.1903647.

- Ward-Horner J, Sturmey P. Component analysis of behavior skills training in functional analysis. Behavioral Interventions. 2012;27:75–92. doi: 10.1002/bin.1339.

- Williams TC, Zahed H. Computer-based training versus traditional lecture: Effect on learning and retention. Journal of Business & Psychology. 1996;11:297–310. doi: 10.1007/BF02193865.

- Williams DE, Vollmer TR. Essential components of written behavior treatment programs. Research in Developmental Disabilities. 2015;36:323–327. doi: 10.1016/j.ridd.2014.10.003.

- Wolf MM. Social validity: The case for subjective measurement or how applied behavior analysis is finding its heart. Journal of Applied Behavior Analysis. 1978;11(2):203–214. doi: 10.1901/jaba.1978.11-203.

- Wolfe K, Slocum TA. A comparison of two approaches to training visual analysis of AB graphs. Journal of Applied Behavior Analysis. 2015;48(2):472–477. doi: 10.1002/jaba.212.

- Zhang YX, Cummings JR. Supply of certified applied behavior analysts in the United States: Implications for service delivery for children with autism. Psychiatric Services in Advance. 2019;71(4):385–388. doi: 10.1176/appi.ps.201900058.

Data Availability Statement

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Information regarding software used in this study is available from the corresponding author on reasonable request.