Abstract

Animal survival necessitates adaptive behaviors in volatile environmental contexts. Virtual reality (VR) technology is instrumental to study the neural mechanisms underlying behaviors modulated by environmental context by simulating the real world with maximized control of contextual elements. Yet current VR tools for rodents have limited flexibility and performance (e.g., frame rate) for context-dependent cognitive research. Here, we describe a high-performance VR platform with which to study contextual behaviors immersed in editable virtual contexts. This platform was assembled from modular hardware and custom-written software with flexibility and upgradability. Using this platform, we trained mice to perform context-dependent cognitive tasks with rules ranging from discrimination to delayed-sample-to-match while recording from thousands of hippocampal place cells. By precise manipulations of context elements, we found that the context recognition was intact with partial context elements, but impaired by exchanges of context elements. Collectively, our work establishes a configurable VR platform with which to investigate context-dependent cognition with large-scale neural recording.

Supplementary Information

The online version contains supplementary material available at 10.1007/s12264-022-00964-0.

Keywords: Virtual reality, Spatial context, Contextual behavior, Hippocampus, Place cell, Learning and memory, Ca2+ imaging

Introduction

Mammals associate the environmental context with emotional experiences and express adaptive defensive behaviors during future exposure to the context [1–4]. The environmental context consists of various individual elements and is enriched with multisensory information. Together with the immersive nature of contextual behaviors, it is a great challenge to quantitatively control the specific contextual elements and quickly switch between distinct contexts in freely-moving animals. Moreover, it is difficult to recapitulate the real world (e.g., spatial geometry and size) in standard neuroscience research laboratories. Virtual reality (VR) is a powerful technology with which to tackle these challenges and has been widely used in neuroscience research and human therapy such as treating phobias and Alzheimer’s disease, and stroke rehabilitation [5–7]. Among the wide variety of VR systems, many commercial VR programs for 3D graphics are tailored for human use with complex 3D rendering and lack flexibility or compatibility with specific experimental needs such as large-scale neural recording. Further limitations include the high cost and lack of standardized VR solutions. Nevertheless, custom-made VR systems in rodents enable the manipulation of virtual context and combination with head-fixed neural recording, including intracellular recording and 2-photon imaging [8–10]. However, these systems require substantial modifications to implement new types of experiment. Efforts have been made to develop general VR software such as the ViRMeN graphics package [11], but many improvements are still needed. For instance, the 30-Hz frame rate in ViRMeN is close to the lower bound for VR display requirements and 3D graphics editing is still not straightforward, requiring laborious programing. In fact, editing a 3D map is highly sophisticated and almost impossible to implement in lightweight software with comparable capability as dedicated professional software. 
Therefore, it is desirable to develop a flexible and affordable VR platform to train context-dependent cognitive behaviors, which can be readily used by small laboratories without the need for sophisticated programing. We previously engineered a VR system with hardware modules including VR display and multisensory presentation modules which can be replaced and upgraded independently [12]. This system has a real-time interactive VR display, but it requires multiple algorithms and complex mechanisms to establish behavioral protocols and does not support advanced functions such as trial-by-trial switching between more contexts, trial-by-trial changes in specific context elements, recording precise timestamps of animal behavior and system actions, integrating different types of I/O data, and streaming the data live and continuously into a local disk. These functionalities are critical for high performance of a VR system and establishing sophisticated behavioral tasks.

In this study, we have developed software with advanced functions, integrated new interface hardware for the software, and reorganized the hardware modules adapted from our previous VR system. More importantly, we have established a pipeline to set up sophisticated protocols for contextual behaviors and continuously streaming data at a high sampling rate. We have also provided detailed descriptions for the design and implementation of our new VR platform to ease the replication of this platform in small laboratories. This platform supports the following technological features: (1) real-time processing in external devices and a high sampling rate of data acquisition; (2) lightweight software and a high frame rate of VR display; (3) flexible assembly of VR modules to control sensory stimuli and external devices; (4) independent editing of virtual 3D maps; and (5) cost-effectiveness and ease of use. Using this VR platform, we have successfully trained animals to learn context-dependent tasks and recorded thousands of place cells in the hippocampus across hundreds of trials. We have further set up more sophisticated behavioral protocols for the spatial context-dependent delayed-sample-to-match task and unveiled the functional roles of individual contextual elements in context-discrimination tasks.

Materials and Methods

The VR Platform

The software package for the VR platform, device drivers, and documents are available from https://github.com/XuChunLab/VR_platform/. In addition, the following are available from this GitHub repository: two DLL files (folder Drivers/Rapoo V310/) to retrieve laser mouse data for 1D and 2D navigation; the list of hardware with parameters and ordering information; and the design of the head plate and its adaptor (see Supplemental Materials).

Animals and Surgeries

Animals were housed under a 12-h light/dark cycle and provided with food and water ad libitum in the animal facility of the Institute of Neuroscience (Center for Excellence in Brain Science and Intelligence Technology, CAS). All animal procedures were carried out in accordance with institutional guidelines and were approved by the Institutional Animal Care and Use Committee (IACUC No. NA-047-2020) of the Institute of Neuroscience. Adult (>8 weeks) wild-type C57BL/6J mice (Shanghai Laboratory Animal Center, Shanghai, China) were used.

Mice were anesthetized with isoflurane (induction 5%, maintenance 2%; RWD R510IP, RWD, Shenzhen, China) and fixed in a stereotactic frame (RWD, China). Local analgesic (lidocaine, Shandong Hualu Pharmaceutical, China) was administered before surgery. Body temperature was maintained at 35°C by a feedback-controlled heating pad (FHC Inc., Bowdoin, ME, USA). A custom-made head plate was fixed to the skull by dental acrylic (Super Bond C&B, Moriyama-shi, Shiga, Japan) for head-fixed behavioral training.

For Ca2+ imaging, the AAV2/9-CaMKII-GCaMP6f vector (500 µL, 1.82 × 10^13 GC/mL; Taitool Bioscience, Shanghai, China) was loaded into a glass pipette (tip diameter 10–20 µm) connected to a Picospritzer III (Parker Hannifin Corp., Hollis, NH, USA) and injected into hippocampal CA1 (AP, posterior to bregma; ML, lateral to the midline; DV, below the brain surface; in mm: AP −1.82, ML −1.5, DV −1.5). The pipette was left in the injection site for at least 3 min after injection. Two weeks after AAV injection, a gradient index lens (GRIN lens, 64519, 1.8 mm 0.25 pitch 0.55 NA, Edmund, Shenzhen, China) was implanted above the injection site during a second surgery. Briefly, a small craniotomy was made above the hippocampus and the brain tissue above the target was aspirated with a 100-µL pipette attached to a vacuum pump (BT100-1F, LongerPump, Hebei, China). PBS was repeatedly applied to the exposed tissue to prevent drying. Aspiration was stopped once a thin layer of horizontal fibers on the hippocampal surface was visualized. Once the surface of the hippocampus was clear of blood, the GRIN lens was slowly lowered to above CA1 with a custom holder (RWD, China) on the stereotaxic arm (−1.6 mm from brain surface) and fixed to the skull using light-curing dental resin (CharmFil Flow, DentKist Inc., Korea). Finally, a custom-made head plate was fixed to the skull using dental acrylic (Super Bond C&B, Japan).

Behavioral Training in the VR Platform

Pre-training

The animal was water-restricted for 1–2 days until the body weight was reduced to 80%–90%. After this, the body weight was maintained above 85% and water (0.5 mL–0.8 mL) was provided each day. One week after head-plate implantation, each mouse was head-fixed and habituated to a rotating Styrofoam cylinder (diameter 20 cm and width 10 cm) and to running on it by itself. This phase lasted for a week with daily training for 15 min–30 min. Then each mouse was habituated to black-and-white gratings on the wall in a 25-cm VR linear track that was shorter than the VR linear track used in formal training. A maximum of 4 drops of water reward (1.5 µL–2 µL per drop, minimal interval 0.1 s) was available after each trial. The total water reward in a typical session was in the range of 0.8 mL–1 mL. The animal was given extra water in the home cage if it did not get enough water reward (0.8 mL) in a behavioral session. In this stage, mice learned to continuously run and lick. Once the animal could complete >70 trials in one session per day, the length of the linear track was extended to 50 cm. Next, the length of the linear track was extended to 100 cm once the animal completed at least 70 trials in the previous stage. The pre-training was complete if the animal finished at least 70 trials in one session in the last stage.

Place-dependent Reward Task

After the pre-training, each mouse was first habituated to a linear track (100 cm) consisting of an 80-cm context and a 20-cm corridor and then formally trained in the new track with context and corridor components (Fig. 3A). A session was run daily and typically had 80–120 trials running on a complete linear track. Two cohorts of mice were trained with a reward (as in pre-training) in the context or corridor. The last two-thirds of the trials in each session were included for analysis. If the context was associated with the water reward, the mouse received the reward if it licked in the context. Over the course of learning, a mouse would start to lick as soon as it reached the beginning of the context. When the corridor was associated with the water reward, the mouse would gradually concentrate its licks in the corridor across learning sessions.

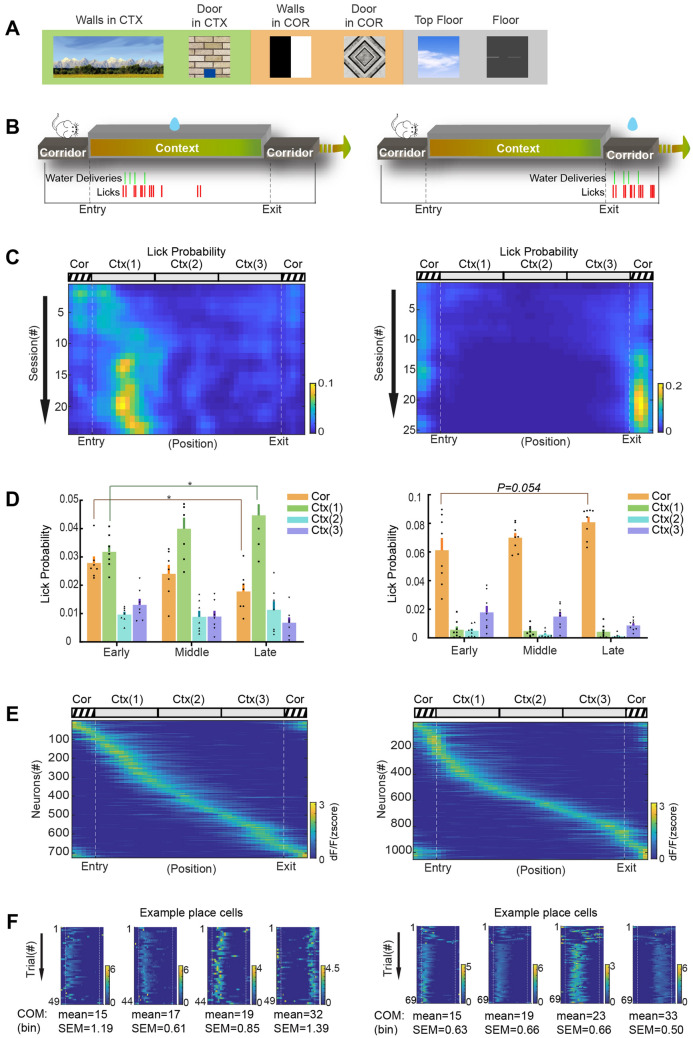

Fig. 3.

Context-dependent behavioral training. A Example images of the walls, door, floor, and ceiling used in the virtual context (Ctx) and corridor (Cor). B Upper, cartoon of the trial structure with water reward available in the context or corridor; lower, example licking rasters (red ticks, licks; green ticks, water deliveries). C Example heat maps showing the lick probability (normalized by lick number in the whole trial) in the corridor and context (3 equal parts). The lick probability in each spatial bin (50 bins in total) is color-coded. Each row represents the session number after pre-training. D Summary of lick probability (mean ± SEM) in the corridor and 3 parts of context in the early, middle, and late stages of the behavioral sessions. Left, water is rewarded in the context (n = 7 mice). The lick probabilities in the early and late stages are significantly different in the corridor (0.028 ± 0.002 early vs 0.018 ± 0.003 late, P = 0.0176, unpaired t-test) and context part 1 (0.032 ± 0.002 early vs 0.045 ± 0.004 late, P = 0.0157, unpaired t-test). Right, water is rewarded in the corridor (n = 8 mice). The lick probability in the corridor is higher in the late than in the early stage (0.061 ± 0.008 early vs 0.081 ± 0.004 late, P = 0.054, unpaired t-test). E Heatmaps of z-scored Ca2+ signals of CA1 neurons when animals are navigating in context-dependent tasks. Left, 725 place cells out of 1735 cells recorded from 4 mice. Right, 1054 place cells out of 1467 cells from 3 mice. F Heatmaps of z-scored Ca2+ signals of example place cells across trials in one session. The COM (center of mass) is summarized as spatial bins (mean ± SEM).
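The lick probability described in panels C and D (licks per spatial bin, normalized by the lick number in the whole trial) can be sketched as follows. This is a minimal illustration, not the analysis code used in the study; the function name `lick_probability`, the assumption that lick positions are logged in cm along a 100-cm track, and the example values are all hypothetical.

```python
import numpy as np

def lick_probability(lick_positions_cm, track_length_cm=100.0, n_bins=50):
    """Fraction of a trial's licks that fall in each spatial bin."""
    counts, _ = np.histogram(
        lick_positions_cm, bins=n_bins, range=(0.0, track_length_cm)
    )
    total = counts.sum()
    if total == 0:
        # No licks in this trial: return all zeros rather than dividing by 0.
        return np.zeros(n_bins)
    return counts / total

# Made-up example: five licks in one trial, positions in cm.
probs = lick_probability([81.0, 83.5, 90.2, 95.0, 12.0])
```

Averaging these per-trial vectors over the trials of a session would give one row of a heat map like the one in panel C.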

Context Discrimination Task

The context was composed of four visual elements on the left/right walls, top floor (ceiling), and front door. The left/right wall had 2 versions: a black-and-white striped background with a parallelogram pattern (A/B), and the same background with a dot pattern (a/b). The front wall had a gray background with pentagons (C) or concentric circles (c). The top floor had a white background with black stars (D) or gray pebble shapes (d). The total volume of water reward was 0.8–1 mL in a typical session. The animal was given extra water in the home cage if the volume of reward in the behavioral task did not reach 0.8 mL. CO2 was used for air-puffs 100–300 ms in duration at 5–10 pounds per square inch.

After pre-training, each mouse was trained with interleaved trials of contexts 1 and 2. Upon detection of licking in the context, context 1 was paired with water reward and context 2 was paired with an air-puff to the eye. To encourage the animal to run as many trials as possible, the maximal water reward in one trial was 4 drops even if the animal kept making correct choices. To avoid excessive punishment, an air-puff in one trial was only delivered once even if the animal kept making wrong choices. These settings were each preconfigured into the Arduino-based modules for water reward and air puff.
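The per-trial limits described above (at most 4 water drops in context 1, at most one air-puff in context 2) amount to a small state machine. The sketch below restates that logic in Python for clarity only; the actual settings run on the Arduino-based modules, and the class name `TrialFeedback`, its fields, and the string return values are hypothetical.

```python
from dataclasses import dataclass

MAX_DROPS_PER_TRIAL = 4  # maximal water reward per trial (from the text)
MAX_PUFFS_PER_TRIAL = 1  # one air-puff per trial at most (from the text)

@dataclass
class TrialFeedback:
    context: int = 0       # 0 = outside any context; 1 or 2 = current context
    drops_given: int = 0
    puffs_given: int = 0

    def new_trial(self):
        """Reset the counters at the start of each trial."""
        self.context = 0
        self.drops_given = 0
        self.puffs_given = 0

    def on_lick(self):
        """Return 'water', 'airpuff', or None for one detected lick."""
        if self.context == 1 and self.drops_given < MAX_DROPS_PER_TRIAL:
            self.drops_given += 1
            return "water"
        if self.context == 2 and self.puffs_given < MAX_PUFFS_PER_TRIAL:
            self.puffs_given += 1
            return "airpuff"
        return None

# Six licks in context 1, then three licks in context 2 on the next trial.
fb = TrialFeedback()
fb.context = 1
go_outcomes = [fb.on_lick() for _ in range(6)]
fb.new_trial()
fb.context = 2
nogo_outcomes = [fb.on_lick() for _ in range(3)]
```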

For the animal, it was the correct rejection of licking in context 2 that required learning. The training continued until the animal reliably showed good rejection accuracy (60%–70%) in context 2 for at least 5 sessions (Fig. 4). Then, the training was moved to the next stage of the discrimination task.

Fig. 4.

Context discrimination training. A Diagram of the Go/No-Go task based on context discrimination. B Cartoon of the four types of elements to compose the two contexts. C Summary of behavioral accuracy (mean ± SEM) in context 1 (hit rate) and context 2 (rejection rate) in the beginning and well-trained stages (n = 5 sessions). The rejection rate significantly increases after learning (unpaired t-test: mouse 1, 0.10 ± 0.055 vs 0.60 ± 0.055, P = 0.00020; mouse 2, 0.080 ± 0.049 vs 0.74 ± 0.051, P <0.0001).

In the stage of the discrimination task with test contexts, one session was run each day and contained 5 probe trials in addition to the standard trials (15 Go trials and 10 No-Go trials). The probe and standard trials were randomly interleaved. The context elements were changed as designed and each session only tested one type of changed context in the probe trials. The omission types were tested in a random sequence and repeated for multiple rounds. Next, the switch types were tested in a random sequence and repeated for multiple rounds. The animal’s licks in the probe trials did not lead to any consequence (Fig. 5).
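A session with this composition (15 Go, 10 No-Go, and 5 probe trials, randomly interleaved) could be generated along the following lines. This is only a sketch: the function name `build_session`, the trial labels, and the use of a seeded shuffle are assumptions, not the authors' procedure.

```python
import random

def build_session(n_go=15, n_nogo=10, n_probe=5, seed=None):
    """Return a randomly interleaved list of trial labels for one session."""
    trials = ["go"] * n_go + ["nogo"] * n_nogo + ["probe"] * n_probe
    rng = random.Random(seed)  # a fixed seed makes the order reproducible
    rng.shuffle(trials)
    return trials

session = build_session(seed=0)
```

Each session would use a single type of test context for all of its probe trials, as described above.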

Fig. 5.

Context recognition task with element changes. A Cartoon of the behavioral outcomes in trials with different contexts in the context recognition task. The probe trials for a test context are interleaved with Go and No-Go trials with contexts 1 and 2. A session typically consists of 15 Go trials, 10 No-Go trials, and 5 Test trials. B Diagram of how test contexts are constructed. Context 2’ is modified from context 2 with element omissions (types 1–5). Context 3 is a combination of elements from contexts 1 and 2 (types 6–10). C Summary of the rejection rate (mean ± SEM) in different types of test contexts with element omissions (n = 5 sessions). Compared to trials of the No-Go context, the rejection rates in test contexts are all non-significant, except for context type 4 in mouse 1 (paired t-test, P = 0.0240). D Summary of the rejection rate (mean ± SEM) in different types of test contexts with elements interchanged (n = 3 sessions). Compared to trials of the No-Go context, the rejection rates for context type 10 in both mice are significantly lower (paired t-test: P = 0.0099, mouse 1; P = 0.014, mouse 2) but not for the rest.

Context-Dependent Delayed-Sample-to-Match Task

After pre-training as above, the animal had learned running and licking in the linear track. The pre-training continued by habituating the animal to a new spatial environment consisting of contexts and a corridor. The animal learned to lick for reward at the left or right ports after entry into the corridor. The water reward was randomly available in either of these ports. The animal was expected to show comparable licks for the two sides (40%–60%) after some habituation sessions.

Next, the animal went through the training of stage 1. The animal was trained in a block design to learn a delayed-sample-to-match task (Fig. 6A). While each session consisted of multiple blocks, every two blocks were composed of neighboring contexts (AA in the first block, AB in the second). To facilitate the behavioral training, some teaching trials were introduced, in which the water reward was delivered at the correct side as soon as the animal entered the corridor regardless of licking behavior. To ensure successful learning with randomly-interleaved trials, the trial number in one block was progressively reduced. With these two considerations, the whole training process was divided into several steps and trained with decreasing trial numbers and numbers of teaching trials in one block. The steps were as follows: 20 trials in one block, one teaching trial in every 5 trials; 10 trials in one block, one teaching trial in every 8 trials; 10 trials in one block without a teaching trial. Finally, the animal was trained with randomly-interleaved trials of AA and AB contexts.

Fig. 6.

Context-dependent delayed-sample-to-match task. A Cartoon of the behavioral outcomes in trials with different combinations of two consecutive contexts in the delayed-sample-to-match task. B Diagram of how the two contexts are constructed. Contexts 1 and 2 differ in the left and right walls. C Schema of training stages in the delayed-sample-to-match task. D Summary of behavioral accuracy (mean ± SEM) in training stages 1 and 2 (n = 5 sessions). Both mice learn the tasks with context configurations of AA and AB at stage 1 (unpaired t-test: P = 0.015, mouse 1; P <0.0001, mouse 2) and with additional context configurations of BB and BA at stage 2 (unpaired t-test: P = 0.0061, mouse 1; P = 0.046, mouse 2).

After the behavioral accuracy in stage 1 reached 70%–80%, the animal entered the next training stage (stage 2). In stage 2, the context configurations BB and BA were added to the training block. The animal was trained in a strategy similar to stage 1 with four blocks in a random sequence and repeated for multiple rounds. In the end, mice were able to accomplish the task in random trial switching between context configurations AA, AB, BB, and BA (Fig. 6).

Miniscope Ca2+ Imaging

Two weeks after GRIN lens implantation, we started to check for GCaMP6f fluorescence using a miniature epifluorescence microscope (Miniscope, UCLA V2, Labmaker, Berlin, Germany). The Miniscope was connected to a portable computer for live viewing of the fluorescence imaging to guide the alignment and the choice of focal plane. Once a clear field of view with sufficient expression was observed, the Miniscope was fixed to the skull via a baseplate using UV glue and dental acrylic under isoflurane anesthesia. The Miniscope was then detached, the baseplate was sealed with a baseplate cover, and the animal was returned to its home cage for recovery. Imaging experiments were performed at least four weeks after virus expression. The Miniscope imaging was triggered by the start of VRrun via a TTL signal. Digital imaging data were acquired from a CMOS imaging sensor (Aptina, MT9V032, Suzhou, China) and transmitted to the computer via the DAQ box and a USB connection. The Miniscope data were acquired at 30 frames per second and recorded to uncompressed AVI files by DAQ software (MiniScopeControl, UCLA, USA).

Ca2+ Imaging Analysis

The field of view was cropped and then 2× spatially and 3× temporally down-sampled using the moviepy Python package to reduce the subsequent computational load. The pre-processed data were processed by the CaImAn (1.8.5) toolbox [13] using its constrained non-negative matrix factorization extension (CNMF-E) [14]. Motion correction and source extraction were done with default parameter settings except for the following: down-sampling factor in time for initialization (tsub) 4; neuron diameter (gSiz) 13 pixels; 2D Gaussian kernel smoothing (gSig) 3 pixels; minimum peak-to-noise ratio (min_pnr) 10; and spatial consistency (rval_thr) 0.85. The accepted raw traces (estimates.C) of neuronal activity were manually checked for abnormal baseline shifts. The raw traces were further analyzed in MATLAB.
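To make the 2× spatial and 3× temporal down-sampling concrete, the sketch below block-averages a movie stored as a NumPy array. It is an assumption-laden illustration: the study used moviepy, whose resampling method may differ from block averaging, and the function name `downsample_movie` and the toy movie are hypothetical.

```python
import numpy as np

def downsample_movie(movie, spatial=2, temporal=3):
    """Block-average a (frames, height, width) array in time and space."""
    t, h, w = movie.shape
    t2, h2, w2 = t // temporal, h // spatial, w // spatial
    # Drop trailing frames/pixels that do not fill a complete block.
    trimmed = movie[: t2 * temporal, : h2 * spatial, : w2 * spatial]
    blocks = trimmed.reshape(t2, temporal, h2, spatial, w2, spatial)
    return blocks.mean(axis=(1, 3, 5))

# Toy movie: 6 frames of 4 x 4 pixels.
movie = np.arange(6 * 4 * 4, dtype=float).reshape(6, 4, 4)
small = downsample_movie(movie)
```

With data acquired at 30 frames per second, a 3× temporal down-sampling leaves an effective rate of 10 frames per second.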

Place cells were identified by calculating the spatial information (SI), adapted from a previous study [15]:

$$\mathrm{SI} = \sum_{i=1}^{N} p_i \, \frac{\lambda_i}{\lambda} \, \log_2 \frac{\lambda_i}{\lambda}$$

The whole VR linear track was divided into $N = 50$ spatial bins. Here $\lambda$ is the overall average Ca2+ activity, $\lambda_i$ is the average activity in spatial bin $i$, and $p_i$ is the probability that the mouse stayed in bin $i$. Place cells were accepted if the SI passed the bootstrap test. For bootstrapping, the ΔF/F trace of each trial was split into 10 segments. The SI was recalculated with randomly-shuffled data segments. The SI of the cell was considered significant if it was >95% of the shuffled SI after 1,000 iterations.
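The place-cell criterion can be sketched in Python as follows. Several points are assumptions rather than the authors' implementation: the SI is taken in the common Skaggs-style form, the sum over bins of p_i (λ_i/λ) log2(λ_i/λ); activity is assumed non-negative; the shuffle permutes the 10 segments within each trial while the position trace stays fixed; and all function names are hypothetical.

```python
import numpy as np

def spatial_information(activity, bins, n_bins=50):
    """Skaggs-style SI from per-sample activity and per-sample bin indices."""
    occupancy = np.bincount(bins, minlength=n_bins).astype(float)
    summed = np.bincount(bins, weights=activity, minlength=n_bins)
    visited = occupancy > 0
    p = occupancy[visited] / occupancy.sum()       # occupancy probability p_i
    lam_i = summed[visited] / occupancy[visited]   # mean activity per bin
    lam = np.sum(p * lam_i)                        # overall mean activity
    if lam <= 0:
        return 0.0
    ratio = lam_i / lam
    pos = ratio > 0                                # skip bins where log2 is undefined
    return float(np.sum(p[pos] * ratio[pos] * np.log2(ratio[pos])))

def shuffle_trial(trace, rng, n_segments=10):
    """Split one trial's trace into segments and permute their order."""
    segments = np.array_split(trace, n_segments)
    order = rng.permutation(n_segments)
    return np.concatenate([segments[k] for k in order])

def is_place_cell(trial_traces, trial_bins, n_shuffles=1000, seed=0):
    """Compare the observed SI with the 95th percentile of shuffled SIs."""
    rng = np.random.default_rng(seed)
    bins = np.concatenate(trial_bins)
    observed = spatial_information(np.concatenate(trial_traces), bins)
    null = np.array([
        spatial_information(
            np.concatenate([shuffle_trial(t, rng) for t in trial_traces]), bins
        )
        for _ in range(n_shuffles)
    ])
    return bool(observed > np.percentile(null, 95)), observed

# Synthetic cell with a firing field near bin 25, repeated over 20 trials.
positions = np.repeat(np.arange(50), 2)            # 100 samples per trial
bump = np.exp(-0.5 * ((positions - 25) / 2.0) ** 2)
trial_traces = [bump.copy() for _ in range(20)]
trial_bins = [positions.copy() for _ in range(20)]
significant, si = is_place_cell(trial_traces, trial_bins, n_shuffles=200)
```

A spatially uniform trace gives an SI of zero under this definition, so it cannot exceed the shuffled distribution.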

The center of mass (COM) for each place cell was calculated by the equation:

$$\mathrm{COM} = \frac{\sum_{i=1}^{n} \Delta F_i \, x_i}{\sum_{i=1}^{n} \Delta F_i}$$

where $n$ is the number of bins with Ca2+ signals >0, $\Delta F_i$ is the fluorescence in the $i$-th bin of Ca2+ signals, and $x_i$ is the distance of the $i$-th bin from the start position.
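As an illustration of this definition, the sketch below computes an activity-weighted mean position over the bins with positive signal. The function name `center_of_mass` and the example values are hypothetical, and the weighted-mean form is the standard reading of the variables defined above rather than code from the study.

```python
import numpy as np

def center_of_mass(bin_activity, bin_positions):
    """Activity-weighted mean position over bins with Ca2+ signal > 0."""
    bin_activity = np.asarray(bin_activity, dtype=float)
    bin_positions = np.asarray(bin_positions, dtype=float)
    active = bin_activity > 0
    if not active.any():
        return float("nan")  # undefined when no bin has positive signal
    weights = bin_activity[active]
    return float(np.sum(weights * bin_positions[active]) / np.sum(weights))

# Made-up example with four bins: (1*3 + 3*5) / (1 + 3) = 4.5
com = center_of_mass([0.0, 1.0, 3.0, 0.0], [1.0, 3.0, 5.0, 7.0])
```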

Quantification and Statistical Analysis

The summary of quantification was reported as the mean value with the standard error of the mean (SEM). The numbers of cells and animals are indicated by n. Statistical analysis was performed in GraphPad Prism 8 or MATLAB. The two-sided paired t-test, unpaired t-test, and one-way ANOVA test were used to test for statistical significance. Statistical parameters including the exact value of n, precision measures (mean ± SEM) and statistical significance are reported in the text and in the figure legends (see individual sections). The significance threshold was set to 0.05 (n.s., P >0.05; *P <0.05; **P <0.01; ***P <0.001; ****P <0.0001).

Results

Design and Hardware for the VR Platform

The central goal of the VR platform was to enable immersive and interactive virtual experiences in rodents by real-time interactions between the virtual context and animals and to be compatible with neural recording and manipulation. To this end, we designed the VR platform with separable modules for flexibility and replaceability (see overview in Fig. 1A). As shown in Fig. 1B, the working loop for the VR platform was as follows. While the animal was freely running on the Styrofoam cylinder, its locomotion was detected by the laser mouse (6#) and sent to the computer (4#). Meanwhile, the custom-written VR software (named VRrun) on the computer (4#) updated the animal’s VR position and the VR display (2#) in real time. Depending on the experimental protocol and settings for the virtual context, various event markers with timestamps (e.g., context entry and exit) were sent to different functional modules (3#) via a data acquisition (DAQ) card (1#). The neural recording system (5#) was synchronized by TTL signals from the DAQ card.

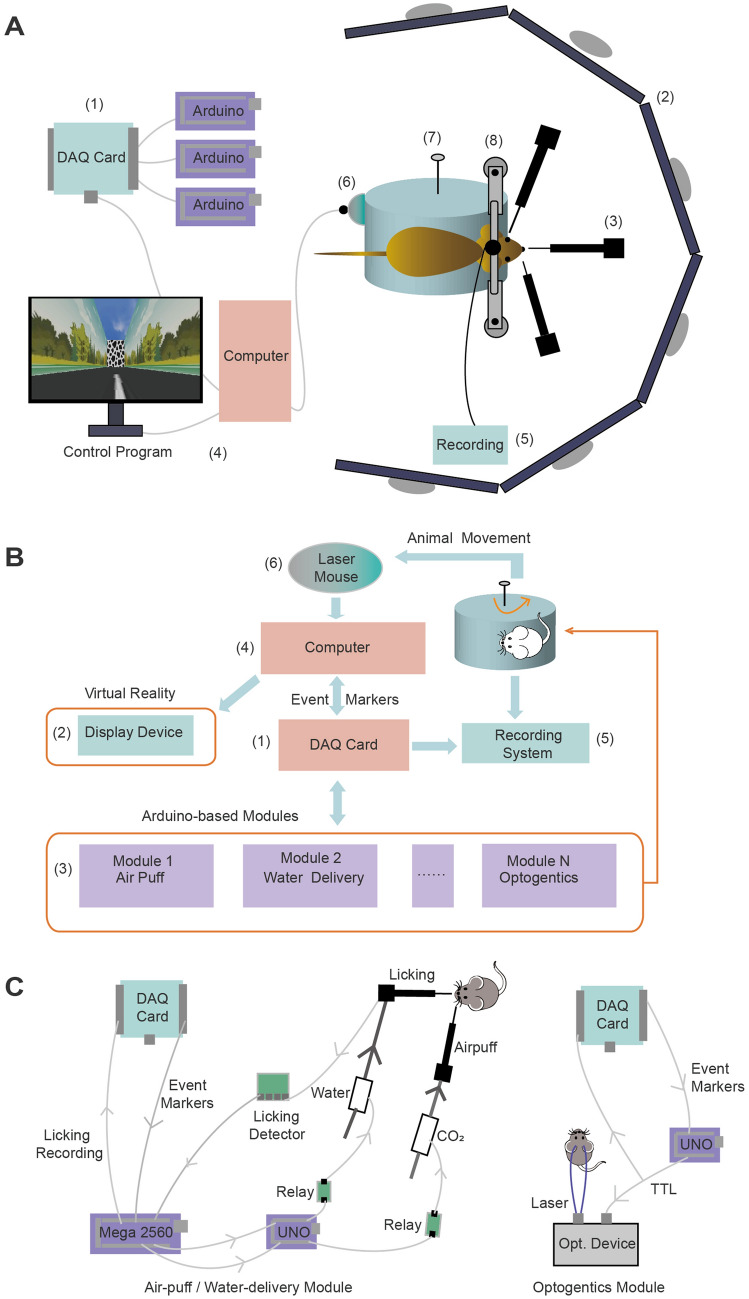

Fig. 1.

Hardware for the VR platform. A Cartoon showing the assembly of the VR platform. The components are (1) a DAQ card, (2) 6 LCD screens covering a 270° view for VR display, (3) Arduino-based modules connected with the DAQ card, (4) a computer, (5) a neural recording system, (6) a laser mouse, (7) a Styrofoam cylinder, and (8) a head-fixation setup. B Organization chart of the VR platform. (1) A DAQ card to acquire data and send TTL signals. (2) A VR display device controlled by the computer. (3) Arduino-based and DAQ-connected modules for lick detection, water delivery, and air-puffs. (4) Computer to run VRrun (main program) and communicate with the DAQ card. (5) Neural recording such as Ca2+ imaging and electrophysiological recording synchronized with VRrun. (6) A laser mouse to detect the head-fixed animal’s locomotion on the customized Styrofoam cylinder (7–8). C Scheme of the design for the air-puff/water delivery and optogenetic modules.

The three key modules in the hardware were the locomotion detection, VR display, and optional function modules. The first was the real-time detection of the animal’s locomotion on a rotating cylinder, which allowed it to actively interact with the VR platform. A commercial laser mouse was sufficient for fast, sensitive, and accurate detection of the rotation speed of the Styrofoam cylinder as a proxy for the animal’s locomotion. In general, most laser mice on the market can do this job with a relatively good working distance and tolerance of the somewhat uneven surface of the cylinder. To make use of the laser mouse, we rewrote its driver so that its data could be retrieved by VRrun and the Windows operating system (Win OS) would not treat it as a pointing device.

The second was the interactive VR display based on real-time locomotion. The hardware for VR display communicated with VRrun using standard interfaces including a video graphics array (VGA) and a high-definition multimedia interface (HDMI). We used an array of six LCD (liquid crystal display) screens covering 270° of view to update in real time the VR scenes controlled by a powerful graphics card. Alternatively, we used a commercial projector to project onto a curved back-projecting screen after adaptive adjustment by an integrative instrument; defining the display area on a curved screen was fast, reliable, and customizable.

The third comprised various functional modules that executed the experimental protocol, which defined when and how to reward or punish the animal and when to send TTL triggers for optogenetics and for other modules delivering auditory, olfactory, and somatosensory stimuli. Depending on the type of event markers sent from the DAQ card, these modules executed pre-defined actions accordingly. It was the user’s choice to construct specific modules. The key module to interact with the animal was the one controlling feedback, including water rewards and air-puffs (Fig. 1C). We chose an Arduino Mega 2560 (hereafter, the Mega) as the master to control the feedback with pre-defined settings. Whenever licking was detected, the Mega instantaneously made a feedback decision based on the status updated by the event markers from the DAQ card. For example, if the protocol defined context 1 as a rewarding context and context 2 as a non-rewarding context, the Mega, acting as the upper computer, sent a command to a lower computer (Arduino UNO) only when the animal was inside context 1 and not when it was inside context 2. This UNO controlled the onset and volume of water reward via a pump with pre-defined settings. It also controlled the air-puffs based on the command from the Mega. This module and other optional modules (e.g., an optogenetic module) operated independently and in parallel, and interacted with VRrun via a DAQ card. Powered by Arduino microcontrollers that are simple and easy to use, these modules were capable of real-time processing and responding with minimal delays (<1 ms) without compromising VRrun performance such as the frame rate of the VR display. In principle, more modules and DAQ cards can be added in the same manner and the VR system can be easily scaled up to control more devices and execute more complicated tasks.

Owing to the high sampling rate of the DAQ card, VRrun was capable of saving data that the DAQ card acquired from various functional modules with high temporal precision. We set the DAQ sampling rate at 1 kHz, which ensured a temporal precision much higher than that of the external devices connected to the DAQ card. If needed, the temporal resolution can be further increased to 0.1 ms as long as the buffer of the DAQ card is large enough to keep I/O data in the communication with VRrun. In principle, each specific module could be replaced and upgraded according to the user’s choice. Taken together, our modular design for the hardware allows for building a VR platform with high flexibility and performance to fulfill specific experimental needs at a modest cost (about CNY 60,000).

Software for the VR Platform

VRrun (main program) is the heart of the software for the VR platform. It was developed in C++ using multithread programing and has four parallel threads in order to control the VR display and process the I/O of the DAQ card efficiently. The principle of modular design also applies to the framework of VRrun. It used the main thread (thread #1) to initialize the VR display with the program setting and then synchronized external devices by sending out TTL signals via the DAQ card. In addition, it has five functional modules (Fig. 2A). The first module (thread #2) continuously monitored the animal’s locomotion by retrieving data from a laser mouse. The second module (thread #1) updated the VR display based on the real-time locomotion and saved VR spatial locations. The third module (thread #3) continuously saved the data with high temporal precision processed by a DAQ card. The fourth module (thread #4) saved the VR information (timestamps and VR locations) for specific VR events (e.g., entry and exit of a context) defined in the setting file. The fifth module (thread #4) controlled external hardware devices with specific experimental operations (e.g., water reward and air-puff) and saved their timestamps.

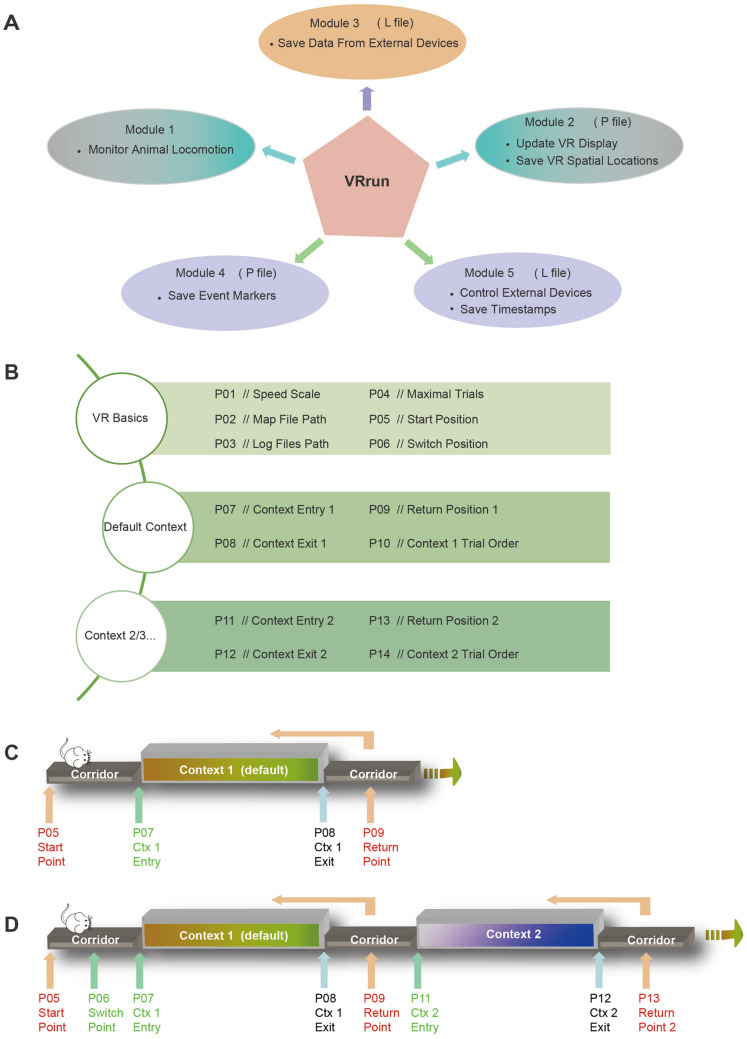

Fig. 2.

Software design and trial structure for the VR platform. A Five functional modules of the control program (VRrun). Module 1 monitors the animal’s locomotion in real time. Module 2 updates the VR display in real time and saves the animal’s spatial locations in the VR into the P file. Module 3 saves the data acquired by the DAQ card into the L file. Module 4 records the time-stamps for specific event markers into the P file. Module 5 controls external hardware devices (e.g., water delivery and air-puff) and records the corresponding timestamps into the L file. B The setting file for VRrun consists of two basic sections and others for specific contexts. C, D Parameters to define the VR spatial positions in the trial structure with a default context (C) and two contexts (D).

VRrun requires a setting file to determine the basic parameters including speed scale factor, trial number, context information, and file paths of a log file and a 3D map file (Fig. 2B). The 3D map file contains a 3D virtual space and should be readable by Irrlicht Engine, which is an open-source and real-time 3D engine written in C++ (irrlicht.sourceforge.net, OpenGL 4.6.0, irrlicht-1.8.4). The 3D map file can be independently created and edited by third-party software such as 3ds Max. In our experience, this independent editing of 3D maps is very handy because it allows for map editing without changing VRrun. The context information in the 3D map needs to be correctly defined in the setting file so that VRrun sends out context markers precisely.

The frame rate for VR display is determined by the main process in VRrun and is equivalent to the behavioral sampling rate in the VR platform. On a standard laptop computer (CPU 2.8 GHz, RAM 16 GB), this sampling rate averages 300 Hz (260 Hz–330 Hz). This can be higher on a computer with better hardware configurations. Standard behavioral recording has a sampling rate of at least 30 Hz, which is acceptable for the behavioral research of free exploration and relatively slow spatial navigation. Behavioral recording at a higher sampling rate (~120 Hz is sufficient) is needed for fast running and fine behaviors such as food pellet fetching and licking [16, 17]. In summary, the sampling rate in our VR platform is far more than enough for most behavioral research. Taken together, the modular design and parallel processing in the VR software allow for efficient data handling and compatibility with various 3D maps and external devices.

Trial Structure and Data for Contextual Behaviors

Each trial is composed of a starting point (P05), a returning point (P09), and a context in between (Fig. 2C). Once the animal reaches the returning point, its VR position is teleported back to the starting point so that the next trial starts from the same position (Fig. 2C). In this way, many virtual contexts are connected in an endless chain by the corridor. From the animal’s point of view, it is like running through an unlimited number of contexts one after another (Video S1). Each context needs two parameters that define its entry and exit positions (P07 and P08 for the default context, Fig. 2B, C).
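The teleport rule described above can be sketched as a per-frame position update. This is a minimal illustration under stated assumptions, not the VRrun implementation. The position is treated as a single coordinate along the corridor, the numeric values assigned to P05, P07, P08, and P09 are invented, and the function names are hypothetical.

```python
def step_position(pos, displacement, speed_scale, start_pos, return_pos):
    """Advance the VR position by one frame.

    Returns (new_position, trial_finished). When the returning point is
    reached, the position is reset to the starting point.
    """
    new_pos = pos + displacement * speed_scale
    if new_pos >= return_pos:
        return start_pos, True
    return new_pos, False


def in_context(pos, entry_pos, exit_pos):
    """True while the position lies between the context entry and exit."""
    return entry_pos <= pos < exit_pos


# Hypothetical positions in arbitrary VR units (not values from the study).
P05, P07, P08, P09 = 0.0, 100.0, 300.0, 400.0

pos, trials_done = P05, 0
for _ in range(100):  # 100 frames with a constant displacement per frame
    pos, finished = step_position(pos, 15.0, 1.0, P05, P09)
    trials_done += finished
print(trials_done, pos, in_context(pos, P07, P08))
```

Because the reset happens inside the same update that detects the returning point, the next frame already belongs to the following trial, which is what produces the endless chain of contexts.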

The trial structure also supports switching between distinct contexts. To do so, a switch point must be defined before the entry to the default context (P06, Fig. 2D). In each trial, when the animal approaches this switch point (P06), VRrun exposes the animal to the entry point of one of the contexts (e.g., P07 or P11) according to the setting file. In the current version of VRrun, up to 6 contexts can be added in the setting file. For instance, if the 3D map has two types of virtual context, an additional returning point (P13) and additional context entry and exit points (P11 and P12) need to be defined (Fig. 2B, D). The user defines in the setting file which context to enter in a specific trial (P10 and P14). As such, the trials that visit a certain context can be arranged in a pseudorandom fashion. Generally, all the parameters in Fig. 2C and D can be calculated by the map-editing software and verified by another custom-written program named VRset.
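One way to prepare a pseudorandom trial order for the setting file is sketched below. It is an assumption-laden example rather than the procedure used in this study. The balanced trial counts, the cap on consecutive repeats (`max_run`), and the function names are all hypothetical choices. Only the entry-point labels P07 and P11 and the limit of six contexts come from the text.

```python
import random


def longest_run(seq):
    """Length of the longest run of identical consecutive items."""
    if not seq:
        return 0
    best = run = 1
    for prev, cur in zip(seq, seq[1:]):
        run = run + 1 if cur == prev else 1
        best = max(best, run)
    return best


def make_context_sequence(n_trials, n_contexts, max_run=3, seed=None,
                          max_attempts=10000):
    """Balanced pseudorandom context order with limited repeats."""
    if not 1 <= n_contexts <= 6:  # VRrun currently supports up to 6 contexts
        raise ValueError("n_contexts must be between 1 and 6")
    if n_contexts == 1:
        return [1] * n_trials
    rng = random.Random(seed)
    order = [i % n_contexts + 1 for i in range(n_trials)]
    for _ in range(max_attempts):  # rejection sampling on the run length
        rng.shuffle(order)
        if longest_run(order) <= max_run:
            return list(order)
    raise RuntimeError("no valid sequence found; relax max_run")


# Entry point used at the switch point (P06) for each context.
ENTRY_POINT = {1: "P07", 2: "P11"}

sequence = make_context_sequence(n_trials=20, n_contexts=2, seed=0)
entries = [ENTRY_POINT[c] for c in sequence]
```

Fixing the seed makes the order reproducible across sessions, and capping the run length is a common way to keep an animal from predicting the next context from the recent history.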

Three files (P, L, and log files) are created by each run of VRrun. The P file saves the VR spatial location and VR event markers, and the L file saves the data acquired by the DAQ card. The P and L files are synchronized by VRrun. The log file saves basic information (e.g., experiment time, settings, and file paths) and trial information (e.g., VR context settings) for the behavioral session.
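Because the two data files are synchronized, a VR event marker from the P file can be matched to the nearest DAQ sample in the L file. The sketch below shows one generic way to do this lookup. It assumes that both files provide timestamps in seconds on a common clock and that the DAQ samples are sorted in time. The actual file layouts are not described here, and the function name is hypothetical.

```python
from bisect import bisect_left


def nearest_sample_index(sample_times, event_time):
    """Index of the sample whose timestamp is closest to event_time.

    sample_times must be sorted in ascending order and non-empty.
    """
    if not sample_times:
        raise ValueError("sample_times is empty")
    i = bisect_left(sample_times, event_time)
    if i == 0:
        return 0
    if i == len(sample_times):
        return len(sample_times) - 1
    before, after = sample_times[i - 1], sample_times[i]
    return i if (after - event_time) < (event_time - before) else i - 1


# One second of DAQ samples at 1 kHz (synthetic timestamps).
daq_times = [k / 1000 for k in range(1000)]

# Hypothetical VR event marker at 10.4 ms.
idx = nearest_sample_index(daq_times, 0.0104)
```

A binary search keeps the lookup fast even for long sessions, since each event costs a number of comparisons that grows only with the logarithm of the number of samples.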

Context-dependent Behavioral Training and Place Cells in the Hippocampus

To determine whether and how the animals recognize the virtual context and corridor in this VR platform (Fig. 3A), we trained animals to run forward and collect a water reward in a place-dependent manner (Fig. 3B). Over the course of training, the animals learned to discriminate the context from the corridor and exhibited place-specific licking behaviors (Fig. 3C, D). Using head-mountable Miniscope Ca2+ imaging, we recorded from thousands of hippocampal CA1 pyramidal neurons in some animals performing these tasks. The CA1 neurons showed clear and stable place fields covering the entire length of the virtual context and corridor (Fig. 3E, F). These results demonstrate that the animals can recognize the virtual context and corridor in the VR platform and associate the reward with them, and that these locations are well represented by the place fields of hippocampal CA1 neurons.
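A place field of the kind described above is commonly estimated by averaging a neuron's activity within spatial bins along the track. The sketch below illustrates this generic binning step on synthetic data. It is not the analysis pipeline of this study, and the bin count, track length, and function name are assumptions.

```python
import math


def place_field_map(positions, activity, track_length, n_bins=50):
    """Mean activity per spatial bin; None for bins that were never visited."""
    bin_width = track_length / n_bins
    occupancy = [0] * n_bins
    summed = [0.0] * n_bins
    for pos, act in zip(positions, activity):
        # Clamp so that samples at or beyond the track ends fall in edge bins.
        idx = min(max(int(pos // bin_width), 0), n_bins - 1)
        occupancy[idx] += 1
        summed[idx] += act
    return [s / n if n > 0 else None for s, n in zip(summed, occupancy)]


# Synthetic example: one pass along a 100-unit track and a model neuron
# that is most active around position 40.
positions = [i * 0.1 for i in range(1000)]
activity = [math.exp(-((p - 40.0) ** 2) / (2 * 5.0 ** 2)) for p in positions]

field_map = place_field_map(positions, activity, track_length=100.0)
peak_bin = max(
    range(len(field_map)),
    key=lambda i: field_map[i] if field_map[i] is not None else float("-inf"),
)
```

Dividing by occupancy matters because the time spent in each bin is rarely uniform, for example when the animal slows down near a reward location.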

Context Recognition Task with Element Changes

VRrun supports trial-by-trial switching between contexts with precise changes of elements. This feature is critical for probing an animal’s context recognition with precise changes of context elements. The first step is to train the animal to discriminate different contexts in a context-dependent Go/No-Go task (Fig. 4A). We created two virtual contexts differing in the elements of left and right wall, front door, and ceiling (Fig. 4B). As shown in Fig. 4C, the animals showed similar hit rates in the two contexts at the beginning of the training (after the pre-training), suggesting that they had not yet learned to withhold licking to avoid air-puffs. In the well-trained stage, both animals showed significantly lower hit rates in context 2 to avoid an air-puff (Fig. 4C), indicating that they successfully accomplished the context discrimination task after learning.
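The per-context response rates discussed above can be computed from a simple list of trial outcomes. In the sketch below, the rate for each context is taken to be the fraction of trials with a lick response, and the rejection rate for the No-Go context is one minus that fraction. These definitions, the function name, and the example data are assumptions made for illustration.

```python
from collections import defaultdict


def response_rates(trials):
    """Fraction of trials with a lick response, per context.

    trials: iterable of (context_id, licked) pairs.
    """
    counts = defaultdict(lambda: [0, 0])  # context -> [licks, total]
    for context_id, licked in trials:
        counts[context_id][0] += int(bool(licked))
        counts[context_id][1] += 1
    return {c: licks / total for c, (licks, total) in counts.items()}


# Hypothetical session: context 1 is Go (water), context 2 is No-Go (air-puff).
session = [
    (1, True), (1, True), (1, False),
    (2, False), (2, False), (2, True), (2, False),
]

rates = response_rates(session)
rejection_rate = 1.0 - rates[2]
```

Comparing `rates[1]` with `rates[2]` across training days gives the kind of learning curve described for the two contexts.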

Next, we went on to assess the animal’s context recognition by measuring its licking responses to a test context. Each session consisted of probe trials with a test context and standard trials with Go or No-Go contexts (context 1 or context 2) that the animal had already learned (Fig. 5A). These probe and standard trials were interleaved in a random fashion to avoid a potential learning effect in probe trials. The animals went through many sessions with one type of test context per session. The test contexts were constructed either by omitting No-Go context elements (context 2’) or by combining individual elements of the Go and No-Go contexts (context 3) (Fig. 5B). When the animals were tested with the omission contexts, the rejection rates were mostly at the same level as those in trained contexts, except for one test context (abc0) in mouse 1 (Fig. 5C). When the animals were tested with the interchange contexts, the rejection rates dropped to zero with the interchange of two elements but not one element (Fig. 5D). These results demonstrate that animals can retrieve the contextual memory with partial cues or with a small proportion (1/4) of interfering elements from an opposite context. This discrimination task allows us to investigate the neural mechanism by which individual context elements are encoded and integrated in the brain to generate context recognition.
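The two kinds of test context can be described as simple operations on a set of four elements. The sketch below enumerates omission and interchange variants and computes the proportion of elements taken from the opposite context. It is an illustration only: the dictionary representation, the element labels, the choice of the No-Go context as the base for interchange, and the omission of one element at a time are assumptions, not details reported in this study.

```python
from itertools import combinations

ELEMENTS = ("left_wall", "right_wall", "front_door", "ceiling")

# Hypothetical labels for the element variants of the two trained contexts.
GO = {e: f"go_{e}" for e in ELEMENTS}
NO_GO = {e: f"nogo_{e}" for e in ELEMENTS}


def omit(context, names):
    """Copy of a context with the named elements removed (set to None)."""
    return {e: (None if e in names else v) for e, v in context.items()}


def interchange(base, other, names):
    """Copy of base with the named elements replaced by those of other."""
    return {e: (other[e] if e in names else v) for e, v in base.items()}


def foreign_fraction(test, other):
    """Proportion of elements in test that come from the other context."""
    return sum(test[e] == other[e] for e in ELEMENTS) / len(ELEMENTS)


omission_tests = [omit(NO_GO, (e,)) for e in ELEMENTS]
one_swap_tests = [interchange(NO_GO, GO, c) for c in combinations(ELEMENTS, 1)]
two_swap_tests = [interchange(NO_GO, GO, c) for c in combinations(ELEMENTS, 2)]
```

With four elements, swapping one element gives a foreign proportion of 1/4 and swapping two gives 1/2, which corresponds to the two interchange conditions compared in the text.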

Context-Dependent Delayed-Sample-to-Match Task

Rodents are valuable models in which to study the neural mechanisms underlying complex cognitive functions beyond simple sensory-response associations [18]. However, it remains to be shown whether a complex cognitive task in the spatial domain can be studied in head-fixed animals, a preparation that is compatible with large-scale recording such as two-photon Ca2+ imaging. To this end, we sought to establish a delayed-sample-to-match cognitive task based on the spatial context in our VR platform. In each trial of this task, another cohort of mice sequentially went through two spatial contexts and made the decision to lick the left or right water port by judging whether or not the two contexts were the same (Fig. 6A, B). After pre-training, the animals first learned to discriminate the two context configurations AA and AB and to lick left or right accordingly (Fig. 6C). Once the animal’s performance reached the learning criterion (70%–80%), the training moved into the final stage with two additional context configurations, BB and BA. Ultimately, the animals learned to discriminate these four context configurations with good performance (70%–80%), suggesting successful learning of the delayed-sample-to-match task (Fig. 6D). These results suggest that our VR platform is fully capable of training animals to perform context-dependent complex cognitive tasks.
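The decision rule of this task can be written down in a few lines. In the sketch below, the assignment of the left port to matching configurations (AA, BB) and the right port to non-matching ones (AB, BA) is an assumption, since the text states only that the animals licked left or right accordingly. The function names and the example session are hypothetical.

```python
def correct_port(sample, test, match_port="left"):
    """Port that is rewarded for a given pair of contexts.

    match_port is the port assigned to matching pairs (assumed, see lead-in).
    """
    if match_port not in ("left", "right"):
        raise ValueError("match_port must be 'left' or 'right'")
    nonmatch_port = "right" if match_port == "left" else "left"
    return match_port if sample == test else nonmatch_port


def performance(trials, match_port="left"):
    """Fraction of correct choices; trials are (sample, test, licked_port)."""
    trials = list(trials)
    if not trials:
        raise ValueError("no trials given")
    correct = sum(
        licked == correct_port(sample, test, match_port)
        for sample, test, licked in trials
    )
    return correct / len(trials)


# Hypothetical session covering the four configurations AA, AB, BB, and BA.
session = [
    ("A", "A", "left"),
    ("A", "B", "right"),
    ("B", "B", "left"),
    ("B", "A", "left"),   # error: a non-matching pair requires the right port
    ("A", "A", "left"),
]

score = performance(session)
reached_criterion = score >= 0.7  # lower bound of the 70%-80% range in the text
```

Because the rule depends only on whether the two contexts are identical, the same function covers the first training stage (AA, AB) and the final stage with all four configurations.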

Discussion

In this study, we developed a VR platform with high-frame-rate VR display, real-time VR interaction, and precise control of the context elements on a trial-by-trial basis. This platform is tailored for small neuroscience laboratories with modular designs in both hardware and software. It can be quickly assembled from a list of cost-effective components to flexibly fulfill the user’s specific experimental needs regarding virtual spatial contexts.

Novel Technological Features and Applications of the VR Platform

At the core of our VR platform is the custom-written software VRrun, which displays the VR and connects with a laser mouse and other external devices via the DAQ card. VRrun refreshes the display at an average frame rate of 300 Hz, which can be even higher on computers with more advanced configurations. Taking advantage of the high sampling rate of the DAQ card, VRrun saves organized data from the various functional modules in real time at a sampling rate of 1 kHz. It reads a VR map that is editable with third-party software and controls Arduino-based hardware that is easy to implement in the lab. This combination of technological features has not yet been provided for spatial cognitive research by current VR tools. Together with the hardware modules we developed in this study, this VR platform offers a complete package to establish various kinds of cognitive behaviors in virtual spatial contexts.

When the animal was engaged in immersive contextual behaviors in our VR system, its hippocampal place cells developed typical place fields (Fig. 3E, F). Notably, this VR platform has enabled us to successfully train mice to perform context-dependent tasks that require fast and precise switching of context. In particular, we have trained animals to perform the classical delayed-match-to-sample task based on the spatial context (Fig. 6), which involves advanced cognition and has not yet been accomplished in the spatial domain. Large-scale neural recording is immediately applicable in this head-fixed preparation [19]. Therefore, the development of this VR platform opens new possibilities for the study of neural mechanisms underlying cognitive behaviors in the spatial domain.

Limitation and Future Direction of the VR Platform

The settings of VRrun are currently defined in a TXT file with strict format requirements. It would be worth developing a graphical user interface to set the parameters for the basic settings and the different contexts intuitively. Each run of VRrun reads only one VR map, which must be edited before the experimental session. Together with the fact that the number of virtual contexts in a single VR map is limited, this means that our VR platform does not support an interactive VR map that allows the temporary appearance of an object or visual stimulus.

Animal locomotion is currently translated from the rotation speed of a Styrofoam cylinder and detected by a laser mouse. While this is the only form of locomotion supported in this VR platform, it would be useful to accommodate more forms in the future, including treadmills and joysticks [20, 21]. With such an update of the hardware module for measuring locomotion, this VR platform would be readily applicable to other experimental subjects such as non-human primates or human participants. In summary, our VR platform can be quickly replicated in standard labs from the details reported in this study and paves the way to investigating cognitive functions in rodents using virtual spatial contexts.


Acknowledgements

We thank all members of the Xu lab for helpful discussions and comments. We thank Drs. Chengyu Li and Haohong Li for technical support and sharing resources. This work was supported by the National Science and Technology Innovation 2030 Major Program (2022ZD0205000), the National Key R&D Program of China, the Strategic Priority Research Program of the Chinese Academy of Sciences (XDB32010105, XDBS01010100), Shanghai Municipal Science and Technology Major Project (2018SHZDZX05), Lingang Lab (LG202104-01-08), the National Natural Science Foundation of China (31771180 and 91732106), and an International Collaborative Project of the Shanghai Science and Technology Committee (201978677).

Conflict of interest

The authors declare that there are no conflicts of interest.

Footnotes

Xue-Tong Qu, Jin-Ni Wu and Yunqing Wen have contributed equally to this work.

Change history

3/28/2025

A Correction to this paper has been published: 10.1007/s12264-025-01382-8

Contributor Information

Hua He, Email: hehua1624@smmu.edu.cn.

Yu Liu, Email: yu.liu@ia.ac.cn.

Chun Xu, Email: chun.xu@ion.ac.cn.

References

1. LeDoux JE. Emotion circuits in the brain. Annu Rev Neurosci 2000, 23: 155–184.

2. Maren S, Phan KL, Liberzon I. The contextual brain: Implications for fear conditioning, extinction and psychopathology. Nat Rev Neurosci 2013, 14: 417–428.

3. Fanselow MS, Poulos AM. The neuroscience of mammalian associative learning. Annu Rev Psychol 2005, 56: 207–234.

4. Maren S. Neurobiology of Pavlovian fear conditioning. Annu Rev Neurosci 2001, 24: 897–931.

5. Bohil CJ, Alicea B, Biocca FA. Virtual reality in neuroscience research and therapy. Nat Rev Neurosci 2011, 12: 752–762.

6. Laver KE, George S, Thomas S, Deutsch JE, Crotty M. Virtual reality for stroke rehabilitation. Cochrane Database Syst Rev 2011, 11: CD008349.

7. García-Betances RI, Arredondo Waldmeyer MT, Fico G, Cabrera-Umpiérrez MF. A succinct overview of virtual reality technology use in Alzheimer’s disease. Front Aging Neurosci 2015, 7: 80.

8. Dombeck DA, Harvey CD, Tian L, Looger LL, Tank DW. Functional imaging of hippocampal place cells at cellular resolution during virtual navigation. Nat Neurosci 2010, 13: 1433–1440.

9. Hölscher C, Schnee A, Dahmen H, Setia L, Mallot HA. Rats are able to navigate in virtual environments. J Exp Biol 2005, 208: 561–569.

10. Harvey CD, Collman F, Dombeck DA, Tank DW. Intracellular dynamics of hippocampal place cells during virtual navigation. Nature 2009, 461: 941–946.

11. Aronov D, Tank DW. Engagement of neural circuits underlying 2D spatial navigation in a rodent virtual reality system. Neuron 2014, 84: 442–456.

12. Liu L, Wang ZY, Liu Y, Xu C. An immersive virtual reality system for rodents in behavioral and neural research. Int J Autom Comput 2021, 18: 838–848.

13. Giovannucci A, Friedrich J, Gunn P, Kalfon J, Brown BL, Koay SA. CaImAn an open source tool for scalable calcium imaging data analysis. Elife 2019, 8: e38173.

14. Zhou PC, Resendez SL, Rodriguez-Romaguera J, Jimenez JC, Neufeld SQ, Giovannucci A, et al. Efficient and accurate extraction of in vivo calcium signals from microendoscopic video data. Elife 2018, 7: e28728.

15. Gonzalez WG, Zhang HW, Harutyunyan A, Lois C. Persistence of neuronal representations through time and damage in the Hippocampus. Science 2019, 365: 821–825.

16. Reis PM, Jung S, Aristoff JM, Stocker R. How cats lap: Water uptake by Felis catus. Science 2010, 330: 1231–1234.

17. Ruder L, Schina R, Kanodia H, Valencia-Garcia S, Pivetta C, Arber S. A functional map for diverse forelimb actions within brainstem circuitry. Nature 2021, 590: 445–450.

18. Wu Z, Litwin-Kumar A, Shamash P, Taylor A, Axel R, Shadlen MN. Context-dependent decision making in a premotor circuit. Neuron 2020, 106: 316–328.e6.

19. Tang YJ, Li L, Sun LQ, Yu JS, Hu Z, Lian KQ, et al. In vivo two-photon calcium imaging in dendrites of rabies virus-labeled V1 corticothalamic neurons. Neurosci Bull 2020, 36: 545–553.

20. Liu QQ, Yang X, Song R, Su JY, Luo MX, Zhong JL, et al. An infrared touch system for automatic behavior monitoring. Neurosci Bull 2021, 37: 815–830.

21. Huang K, Yang Q, Han YN, Zhang YL, Wang ZY, Wang LP, et al. An easily compatible eye-tracking system for freely-moving small animals. Neurosci Bull 2022, 38: 661–676.
