Abstract

Background

Existing literature on online reviews of healthcare providers generally portrays online reviews as a useful way to disseminate information on quality. However, it remains unknown whether online reviews for assisted living (AL) communities reflect AL care quality. This study examined the association between AL online review ratings and residents’ home time, a patient-centered outcome.

Methods

Medicare beneficiaries who entered AL communities in 2018 were identified. The main outcome was resident home time in the year following AL admission, calculated as the percentage of days alive spent at home (i.e., not in an institutional care setting). Additional outcomes were the percentages of time spent in the emergency room, inpatient hospital, nursing home, and inpatient hospice. AL online Google reviews for 2013–2017 were linked to 2018–2019 Medicare data. AL average rating score (ranging 1–5) and rating status (no-rating, low-rating, and high-rating) were generated using Google reviews. Linear regression models and propensity score weighting were used to examine the association between online reviews and outcomes. The study sample included 59,831 residents in 12,143 ALs.

Results

Residents were predominately older (average 81.2 years), non-Hispanic White (90.4%), and female (62.9%), with 17% being dually eligible for Medicare and Medicaid. From 2013 to 2017, ALs received an average rating of 4.1 on Google, with a standard deviation of 1.1. Each one-unit increase in the AL’s average online rating was associated with an increase in residents’ risk-adjusted home time by 0.33 percentage points (P<0.001). Compared with residents in ALs without ratings, residents in high-rated ALs (average rating >=4.4) had a 0.64 pp (P<0.001) increase in home time.

Conclusions

Higher online rating scores were positively associated with residents’ home time, while absence of ratings was associated with reduced home time. Our results suggest that online reviews may be a quality signal with respect to home time.

Keywords: assisted living, patient-centered outcome, home time, online reviews

INTRODUCTION

Assisted living (AL) communities are an important and growing component of the U.S. residential care system. An estimated 28,000 ALs currently serve close to 1,000,000 residents who are typically older and have multiple co-morbidities and significant physical and cognitive impairments, similar to nursing home residents.1–3 However, unlike nursing homes, which are required to disclose quality information through public reporting websites (e.g., Nursing Home Compare, NHC), ALs face no state or federal mandatory reporting requirements. Thus, when choosing an AL community, customers are able to make decisions based on price and amenities, but not on quality. Although online reviews of ALs have become quite common, the extent to which prospective AL customers may rely on existing online reviews as a source of information regarding AL care quality is currently unknown.

Whether online reviews reflect care quality remains highly debated. Some studies found that online ratings were correlated with quality measures such as physician quality,4 number of deficiencies and complaints reported by NHC,5 rating scores from patient surveys,6 and patient outcomes such as mortality rates, hospitalization rates, and postoperative complications.7,8 Yet, other studies reported no clear relationship between online reviews and outcomes such as 30-day coronary artery bypass graft mortality9 and readmission rates.10 To date, there have been no studies of online reviews for ALs and it remains unknown whether such reviews are indicative of care quality provided in ALs.

One key feature of online reviews in healthcare is the large proportion of patients who do not write feedback following their healthcare encounters. A recent survey reported that only 44% of patients provide such reviews.11 Consequently, a significant proportion of healthcare providers do not receive online reviews. For example, previous work on hospital online reviews suggests that only 31% of hospitals in the Hospital Compare dataset had reviews.12 It is, therefore, important to understand whether no-review is a positive or a negative signal or something else entirely.

In recent years, there has been a growing interest in measuring outcomes that align with patients’ values and preferences, and home time, as well as healthy home time, has emerged as an important patient-centered metric for evaluating the quality of care and life.13,14 Home time is most often defined as days alive and not in healthcare institutions over a given time period.15,16 In this study, we use home time as the main outcome and examine its association with online AL ratings. There are several advantages of using home time as the metric for evaluating resident outcomes. First, home time indicates the absence of institutional care, which is often highly valued by older adults, including AL residents.17,18 Second, home time is a good predictor of other patient-centered outcomes such as mobility impairment, depression, and difficulty in self-care.15 Third, home time can be a valuable outcome measure from the perspective of policymakers, as it accounts for days spent in healthcare institutions such as hospitals and nursing homes and, therefore, reflects the overall burden on the health system.19

Our study’s objectives were to examine: 1) the association between online consumer ratings and home time in ALs with at least 1 review, and 2) differences in resident home time between ALs with and without online ratings.

METHODS

This study was reviewed and approved by the University of Rochester Institutional Review Board.

Data and Sample

We used a previously described method to identify Medicare fee-for-service (FFS) beneficiaries residing in ALs, by matching their residential 9-digit zip codes to the zip codes of ALs.20 We restricted the sample to residents who entered AL between January and December of 2018 (i.e., new residents). We excluded those who were enrolled in Medicare Advantage (MA) plans in any month of the study period because their chronic condition information and outpatient claims data tend to be incomplete.21 A total of 59,831 residents in 12,143 ALs were identified.

On Google, people can search for a place to read and write reviews. To add ratings or reviews for a place, users must sign into their Google account. After logging in, they can select a number of stars (ranging from 1 being the worst to 5 being the best), and have the option to write details about their experience. Importantly, all reviews are public, and users are unable to add anonymous reviews. To ensure that the reviews are authentic and useful, Google uses an automated detection system that either removes inappropriate content automatically or flags it for further manual review.22 In addition to the individual ratings, Google also shows an average rating of all historical ratings for the place.

AL online review data were obtained using the Google Maps Application Programming Interface (API) and a commercial service, Outscraper.23 Using the list of ALs where Medicare beneficiaries resided, we obtained Google reviews posted between January 1, 2013, and December 31, 2017. Among the 12,143 ALs included in the study cohort, 7,250 communities were rated during the study period (~60%).

To construct the outcome variable, home time, we followed residents for 365 days following AL admission. We identified their hospital stays from the Medicare Provider Analysis and Review (MedPAR) file, nursing home stays from the Minimum Data Set 3.0 (MDS), emergency room (ER) stays from outpatient claims, and institutional hospice stays from hospice claims. Residents’ characteristics, e.g., socio-demographics and chronic conditions, were obtained from the Medicare Beneficiary Summary File (MBSF). AL location (urban/rural) and bed size information were obtained from the national directory of AL communities.20

Dependent Variables

Home time was the main outcome of interest and was defined as the percentage of days alive spent at “home” (i.e., outside of institutional settings, including ER, hospital, nursing home, and inpatient hospice) in the year following AL admission. Home time was constructed this way to account for residents who died in the year following AL admission. To calculate home time, we counted the number of days alive (365 minus the number of days after death) and the number of days spent in hospitals, ER, nursing homes, and institutional hospice. We then subtracted the number of days spent in the aforementioned settings from the number of days alive to obtain the number of days at home. In calculating home time, the numerator was the number of days at home and the denominator was the number of days alive. Therefore, home time ranges from 0% (spending all days alive in healthcare institutions) to 100% (spending all days alive at home). We constructed similar measures for each of the care settings as our secondary outcomes of interest, including the percentage of days alive in ER, hospital, nursing home, and inpatient hospice.
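The calculation described above can be sketched in a few lines of Python. This is an illustration only, not the authors' code: the function name, the setting labels, and the input layout are hypothetical, and the sketch assumes that days in different settings have already been de-duplicated so that no day is counted twice.

```python
# Institutional settings named in the home-time definition.
SETTINGS = ("er", "hospital", "nursing_home", "hospice")


def home_time_pct(days_alive, days_by_setting):
    """Return the percentage of days alive spent outside institutional settings.

    days_alive: days alive during the 365-day follow-up (1 to 365).
    days_by_setting: hypothetical dict mapping a setting name to days spent there
    (assumed non-overlapping across settings).
    """
    if not 0 < days_alive <= 365:
        raise ValueError("days_alive must be between 1 and 365")
    unknown = set(days_by_setting) - set(SETTINGS)
    if unknown:
        raise ValueError(f"unknown settings: {sorted(unknown)}")
    institutional = sum(days_by_setting.values())
    if institutional > days_alive:
        raise ValueError("institutional days exceed days alive")
    days_at_home = days_alive - institutional  # numerator
    return 100.0 * days_at_home / days_alive   # denominator is days alive


# A resident who died on day 200 after 10 hospital days and 40 nursing home days.
print(home_time_pct(200, {"hospital": 10, "nursing_home": 40}))  # 75.0
```

Using days alive as the denominator means a decedent with no institutional stays still scores 100%, which is why the measure does not penalize early death by itself.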

Independent Variables

Our main explanatory variables of interest were AL average rating score (1–5, a higher score indicating more positive review) and rating status (no-rating, low-rating, and high-rating). For ALs with at least 1 review, we calculated the mean rating scores between 2013 and 2017. The rating score was constructed this way because the goal was to examine whether existing reviews (reviews that were available at the time of AL admission) were predictive of future outcomes (i.e., home time for residents who entered ALs in 2018). The forward-looking outcome variables and the backward-looking explanatory variables help to mitigate the potential bias arising from simultaneity.10

AL review status was defined as a categorical variable with 3 levels: no-rating, low-rating (average rating score below the median score of 4.4), and high-rating (average rating score at or above the median). This 3-level categorical variable allowed us to test differences in performance not only between ALs with and without ratings, but also between ALs with low and high rating scores.
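As a minimal sketch of this classification rule, the snippet below assigns a rating status from a list of star ratings. The function name and input format are hypothetical; the 4.4 cutoff is the median reported in the paper, and treating a score of exactly 4.4 as high-rating follows the ">=4.4" labels used in Tables 1 and 2.

```python
from statistics import mean

MEDIAN_CUTOFF = 4.4  # median average rating among rated ALs, as reported in the paper


def rating_status(stars):
    """Classify an AL from its 2013-2017 star ratings (each 1 to 5).

    stars: hypothetical list of individual star ratings for one AL.
    """
    if not stars:
        return "no-rating"
    average = mean(stars)
    # Scores at or above the cutoff are treated as high-rating (assumption
    # based on the ">=4.4" table labels).
    return "high-rating" if average >= MEDIAN_CUTOFF else "low-rating"


print(rating_status([]))         # no-rating
print(rating_status([5, 4, 5]))  # high-rating
print(rating_status([4, 4, 5]))  # low-rating
```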

Statistical Analysis

Analyses were performed at the resident level. Descriptive statistics of resident characteristics were summarized by the rating status of the AL community where they resided.

Multiple linear regression models were used to examine the associations between average online ratings and resident home time and time spent in other settings. To account for resident-level risks, we adjusted each model for resident age, sex, race and ethnicity, Medicare-Medicaid dually eligible status at AL admission, the presence of chronic conditions such as Alzheimer’s disease or other dementia (ADRD) and heart failure, the total number of chronic conditions (see full list of chronic conditions in Table 1), and whether the resident died in the year following AL admission (as a proxy for unmeasured health status). We also adjusted for AL location (rural/urban) and size (number of beds >=25 or not), as these variables are likely to relate to the availability and range of services provided in ALs. Because prior studies have suggested that state-level factors such as regulations may impact resident outcomes and care utilization,24,25 we included state fixed effects in the regression models to adjust for state-level differences. Standard errors were clustered at the AL level. As a robustness check, we included AL random effects in addition to resident characteristics, AL characteristics, and state fixed effects. Analyses were conducted using Stata version 17 (Stata Corp LP, College Station, Texas).
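For readers who prefer notation, the model described above can be written roughly as follows. The equation is not printed in the paper; the symbols are assumptions introduced here for illustration, with $i$ indexing residents, $j$ ALs, and $s$ states.

```latex
Y_{ijs} = \beta_0 + \beta_1\,\mathrm{Rating}_{j}
        + \mathbf{X}_{i}^{\top}\boldsymbol{\gamma}
        + \mathbf{Z}_{j}^{\top}\boldsymbol{\delta}
        + \mu_{s} + \varepsilon_{ijs}
```

Here $Y_{ijs}$ is the outcome (e.g., percent of days alive at home), $\mathrm{Rating}_{j}$ is the AL's 2013–2017 average rating, $\mathbf{X}_{i}$ collects the resident-level covariates, $\mathbf{Z}_{j}$ the AL location and size indicators, and $\mu_{s}$ the state fixed effects. The coefficient of interest is $\beta_1$, and the errors $\varepsilon_{ijs}$ are allowed to be correlated within an AL, consistent with clustering standard errors at the AL level.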

Table 1.

Sample characteristics by AL rating status

| Sample Characteristics (%) | No-rating AL | Low-rating AL (rating <4.4) | High-rating AL (rating >=4.4) | Total |
|---|---|---|---|---|
| N | 16,691 | 22,707 | 20,433 | 59,831 |
| Outcome measures, mean (SD) | | | | |
| Percent of days alive at home | 93.4 (15.7) | 93.9 (14.7) | 94.7 (13.4) | 94.0 (14.6) |
| Percent of days alive in ER | 0.4 (1.0) | 0.4 (0.9) | 0.3 (0.8) | 0.4 (0.9) |
| Percent of days alive in hospital | 1.9 (5.3) | 1.8 (5.0) | 1.6 (4.4) | 1.8 (4.9) |
| Percent of days alive in nursing home | 4.1 (13.4) | 3.7 (12.1) | 3.2 (11.2) | 3.6 (12.2) |
| Percent of days alive in hospice | 0.2 (2.5) | 0.2 (2.8) | 0.2 (2.4) | 0.2 (2.6) |
| Resident characteristics | | | | |
| Age, mean (SD) | 78.3 (13.7) | 81.7 (10.9) | 82.9 (9.9) | 81.2 (11.6) |
| <65 | 13.1 | 6.4 | 4.4 | 7.6 |
| 65–74 | 19.2 | 14.4 | 11.9 | 14.9 |
| 75–84 | 27.2 | 31.1 | 31.6 | 30.2 |
| 85+ | 40.5 | 48.1 | 52.1 | 47.3 |
| Female | 60.8 | 62.8 | 64.7 | 62.9 |
| Race | | | | |
| Non-Hispanic White | 86.1 | 90.9 | 93.4 | 90.4 |
| Non-Hispanic Black | 6.6 | 4.7 | 2.8 | 4.6 |
| Hispanic | 3.8 | 2.3 | 1.5 | 2.4 |
| Other Race | 3.6 | 2.2 | 2.2 | 2.6 |
| Dually eligible | 26.3 | 15.9 | 10.5 | 17 |
| Number of Chronic Conditions | | | | |
| <=10 | 32.6 | 28 | 28.9 | 29.6 |
| 11–19 | 50.9 | 55.7 | 56.8 | 54.7 |
| >=20 | 16.5 | 16.3 | 14.3 | 15.7 |
| Chronic conditions | | | | |
| Alzheimer’s Disease and Related Dementias | 37.7 | 40.3 | 39.6 | 39.3 |
| Acute Myocardial Infarction | 7.4 | 7.5 | 7.8 | 7.6 |
| Atrial Fibrillation | 24 | 28.1 | 28.8 | 27.2 |
| Chronic Kidney Disease | 45.9 | 48.8 | 48.6 | 47.9 |
| Chronic Obstructive Pulmonary Disease | 36.3 | 37.5 | 36.3 | 36.8 |
| Heart Failure | 38.7 | 42.1 | 41.5 | 40.9 |
| Diabetes | 40 | 42.5 | 39.5 | 40.8 |
| Ischemic Heart Disease | 56.6 | 62.7 | 62.3 | 60.8 |
| Osteoporosis | 31.5 | 37.6 | 39 | 36.4 |
| Rheumatoid Arthritis/Osteoarthritis | 68.5 | 75.2 | 76.9 | 73.9 |
| Mobility Impairments | 10 | 10.3 | 9.5 | 9.9 |
| Obesity | 27 | 26.2 | 25.2 | 26.1 |
| Cancer a | 18.3 | 21.7 | 22.3 | 21 |
| Anxiety/Depression | 61.8 | 62.4 | 61 | 61.8 |
| Mental Illness b | 24.3 | 19.3 | 15.9 | 19.5 |
| Hip/Pelvic Fracture | 2.8 | 3.1 | 3.3 | 3.1 |
| Stroke/Transient Ischemic Attack | 9.3 | 10.1 | 9.7 | 9.7 |
| Pressure Ulcers and Chronic Ulcers | 11.7 | 13 | 12.1 | 12.3 |
| Drug Use Disorder | 11.4 | 8.8 | 7 | 8.9 |
| Decedent | 11.2 | 12.3 | 12.8 | 12.2 |
| AL characteristics | | | | |
| Large AL (number of beds >= 25) | 80.4 | 96.2 | 94.9 | 91.3 |
| AL location | | | | |
| Urban | 70.3 | 87.1 | 83.2 | 81.1 |
| Large rural | 9.7 | 5.5 | 7.9 | 7.5 |
| Small rural | 10.5 | 2.3 | 3.8 | 5.1 |
| Missing | 9.5 | 5 | 5.1 | 6.3 |

Abbreviations: AL, assisted living; ER, emergency room.

a Cancer includes breast cancer, colorectal cancer, endometrial cancer, lung cancer, and prostate cancer.

b Mental illness includes bipolar disorders, personality disorders, schizophrenia, and schizophrenia and other psychotic disorders.

To assess whether residents in ALs without ratings had worse outcomes, we first used propensity score weighting to balance the distribution of resident and AL characteristics across AL categories (no-rating, low-rating, and high-rating). Resident characteristics included age, sex, race and ethnicity, dual eligibility, and health conditions. AL characteristics included the location and size of the AL. We then used doubly robust regression models with state dummies to examine the difference in outcomes among residents in no-rating, low-rating, and high-rating ALs. Propensity scores were estimated using the R package Toolkit for Weighting and Analysis of Nonequivalent Groups (TWANG).26 A separate R package, survey, was then used to perform the weighted outcome analyses.27
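To make the weighting step concrete, the sketch below shows one common form of propensity score weighting for three groups: each resident is weighted by the inverse of the estimated probability of being in the group they were actually in. This is an assumption for illustration; the paper does not state which estimand or weight formula was used, and the propensity scores here are taken as given rather than estimated with TWANG. All names and numbers are hypothetical.

```python
def inverse_probability_weights(groups, propensities):
    """Weight each resident by 1 / P(observed group | covariates).

    groups: list of observed group labels, one per resident.
    propensities: list of dicts mapping every group label to its estimated
    probability for that resident (assumed to come from a fitted model).
    """
    weights = []
    for group, probs in zip(groups, propensities):
        p = probs[group]
        if not 0.0 < p <= 1.0:
            raise ValueError("propensity must be in (0, 1]")
        weights.append(1.0 / p)
    return weights


def weighted_mean(values, weights):
    """Weighted average of an outcome or covariate."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# Two hypothetical residents with made-up propensity scores.
groups = ["no-rating", "high-rating"]
propensities = [
    {"no-rating": 0.5, "low-rating": 0.3, "high-rating": 0.2},
    {"no-rating": 0.25, "low-rating": 0.5, "high-rating": 0.25},
]
w = inverse_probability_weights(groups, propensities)
print(w)                               # [2.0, 4.0]
print(weighted_mean([90.0, 96.0], w))  # 94.0
```

Residents who were unlikely to be in their observed group receive larger weights, so each weighted group more closely resembles the full sample on the modeled covariates.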

Sensitivity Analysis

Review Fraud

Providers’ incentive to manipulate online reviews can lead to biased ratings and impede review usefulness.28 Although Google uses the automated detection system and manual review to remove inappropriate content considered to be fake reviews and spam, one may still be concerned that undetected fake reviews can dilute the true quality information and therefore weaken the results. To address this concern, we made an extreme assumption that all 5-star reviews were fake and excluded these reviews.7 We then re-calculated the average ratings and compared the results with findings from the main analyses.

Number of Reviews

Another concern about the review dataset is related to the limited number of reviews that ALs received over the study period, where one extreme review can heavily skew the average rating of reviews. On average, ALs in the study sample received 4 reviews (SD 5.6). Quality information of ALs with a small number of reviews may be more prone to bias and including those ALs in the analyses may lead to unreliable estimation. To gain some insights on this issue, we re-estimated the models on the subsample of ALs with at least 5 reviews.
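The two sensitivity rules can be sketched as small filters applied before averaging. The function names are hypothetical, and returning `None` for an AL left with no usable reviews is an assumption; the paper does not say how such ALs were handled.

```python
from statistics import mean


def mean_without_five_star(stars):
    """Average rating after dropping every 5-star review (review-fraud check)."""
    kept = [s for s in stars if s != 5]
    # Assumption: an AL whose reviews were all 5-star has no recalculated score.
    return mean(kept) if kept else None


def mean_if_enough_reviews(stars, min_reviews=5):
    """Average rating only for ALs with at least `min_reviews` reviews."""
    return mean(stars) if len(stars) >= min_reviews else None


print(mean_without_five_star([5, 5, 4, 2]))     # 3
print(mean_without_five_star([5, 5]))           # None
print(mean_if_enough_reviews([4, 4, 5]))        # None
print(mean_if_enough_reviews([5, 4, 4, 3, 4]))  # 4
```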

RESULTS

Sample Characteristics

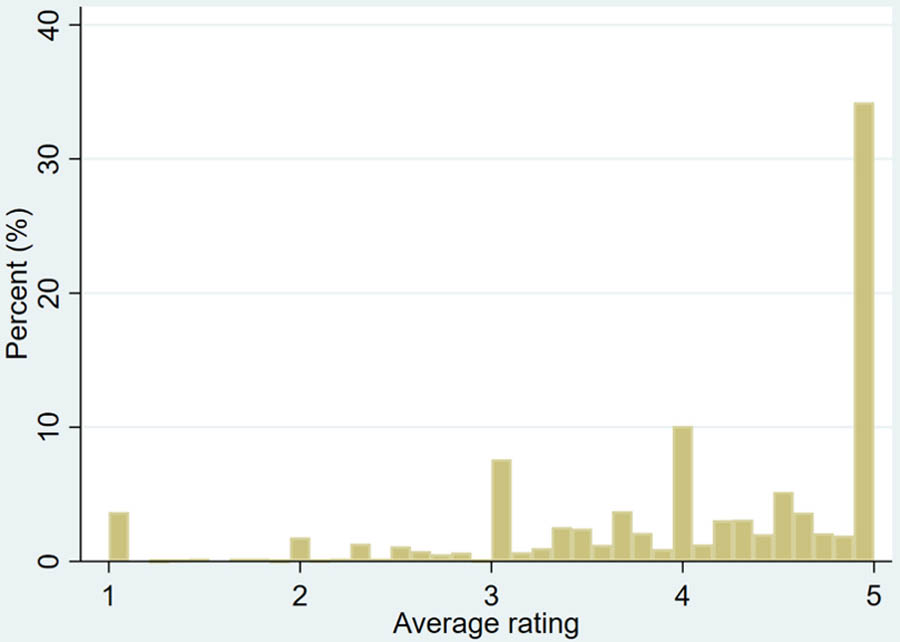

The study sample included 59,831 residents in 12,143 ALs. Overall, 40.3% of ALs did not receive any reviews (no-rating), 30.6% were low-rating ALs, and 29.1% were high-rating ALs. Figure 1 shows that for ALs with at least 1 review, the distribution of mean ratings was skewed, with the majority receiving relatively high ratings (e.g., above 4).

Figure 1.

The distribution of AL average rating

Table 1 presents sample characteristics by AL rating status. Without considering any adjustments, residents in high-rating ALs had longer home time (94.7%), whereas, in no-rating ALs and low-rating ALs, residents spent 93.4% to 93.9% of their time at home. Residents in high-rating ALs also tended to spend less time in other settings, including ER (0.3%), hospitals (1.6%), and nursing homes (3.2%).

Compared with residents in no-rating and low-rating ALs, residents in high-rating ALs tended to be older (52.1% aged 85 years and older), more likely to be female (64.7%) and non-Hispanic White (93.4%), less likely to be dually eligible (10.5%), more likely to have chronic conditions such as atrial fibrillation (28.8%) and osteoporosis (39%), and less likely to have mental illness (15.9%). Compared with ALs with ratings, ALs without ratings tended to be smaller (80.4% had 25 or more beds, vs. 96.2% and 94.9% for low- and high-rating ALs) and were less likely to be located in urban areas (70.3%).

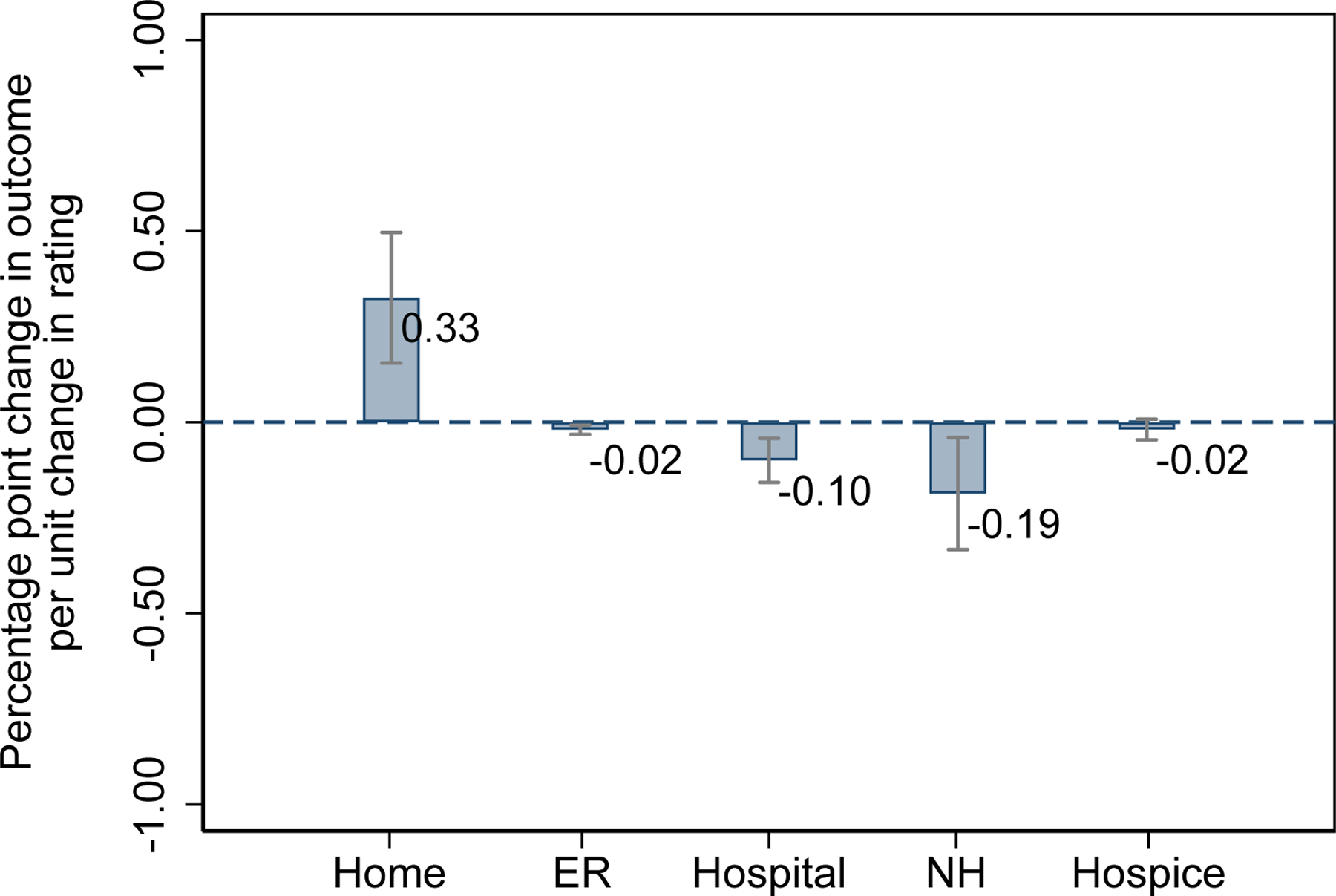

AL Online Rating and Resident Outcomes

Controlling for resident and AL characteristics and state fixed effects, we found that a one-unit increase in average rating was associated with a 0.33 percentage point (pp; 95% CI, 0.15 to 0.50; P<0.001) increase in home time, a 0.02 pp (95% CI, −0.03 to −0.01; P<0.01) decrease in the percent of time spent in ER, a 0.1 pp (95% CI, −0.16 to −0.04; P<0.001) decrease in the percent of time spent in hospital, and a 0.19 pp (95% CI, −0.33 to −0.04; P<0.05) decrease in the percent of time spent in nursing homes (Figure 2). Given that on average residents spent 342.9 days at home in the year following AL admission, the 0.33 pp increase in home time can be translated to 1.1 additional days at home (0.33*342.9/100). In Supplementary Table S1, we present the results with additional adjustment for AL random effects. These results are consistent with the main findings.
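Written out, the conversion from percentage points to days uses the 342.9-day average reported above; the symbol $\Delta_{\text{days}}$ is introduced here only for illustration.

```latex
\Delta_{\text{days}} = \frac{0.33}{100} \times 342.9 \approx 1.13 \text{ days}
```

Applying the same conversion to the 0.64 pp estimate reported for high-rating ALs gives $0.64 \times 342.9 / 100 \approx 2.19$, which rounds to the 2.2 additional days cited in the paper.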

Figure 2.

Associations of AL average rating score with resident home time and time in ER, hospital, nursing home, and hospice in the year following AL admission. Models adjusted for resident age, sex, dual status, and chronic conditions. AL location (urban/rural), AL size, and state fixed effects were included in all models. Standard errors were clustered at the AL level. The vertical lines show the robust 95% CI.

Abbreviations: AL, assisted living; ER, emergency room.

In Supplementary Figure S1, we present the balance diagnostics for propensity score estimation. All the standardized differences were below 0.1, suggesting that propensity score weighting improved the balance in every pretreatment covariate included in the model. Table 2 reports the results from regression models that examined the association between AL rating status and residents’ outcomes using the weighted sample, where the reference group is residents in ALs without ratings. Compared with residents in ALs without ratings, residents in low-rating ALs spent more time at home (0.15 pp; 95% CI, −0.16 to 0.48; P = 0.34), although the difference was not statistically significant. Residents in low-rating ALs also spent less time in ER (−0.03 pp; 95% CI, −0.04 to −0.01; P<0.01) compared with residents in no-rating ALs. Residing in high-rating ALs was associated with more time at home (0.64 pp, or 2.2 additional days; 95% CI, 0.31 to 0.97; P<0.001) and less time in ER (−0.03 pp; 95% CI, −0.05 to −0.02; P<0.001), hospital (−0.16 pp; 95% CI, −0.26 to −0.06; P<0.01), and nursing home (−0.43 pp; 95% CI, −0.71 to −0.15; P<0.01).
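The balance check against the 0.1 threshold can be illustrated with a simplified standardized difference between two weighted groups. This is a sketch under stated assumptions: it divides the weighted mean difference by a pooled unweighted standard deviation, which may differ from the exact diagnostic TWANG reports, and all names and inputs are hypothetical.

```python
from math import sqrt
from statistics import variance


def weighted_mean(values, weights):
    """Weighted average of a covariate."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def standardized_difference(x_a, w_a, x_b, w_b):
    """Absolute weighted mean difference in pooled (unweighted) SD units."""
    diff = weighted_mean(x_a, w_a) - weighted_mean(x_b, w_b)
    pooled_sd = sqrt((variance(x_a) + variance(x_b)) / 2)
    return abs(diff) / pooled_sd


# Hypothetical covariate values for two groups, before and after weighting.
before = standardized_difference([0, 2], [1, 1], [1, 3], [1, 1])
after = standardized_difference([0, 2], [1, 1], [1, 3], [3, 1])
print(round(before, 3), round(after, 3))  # 0.707 0.354
```

A covariate is usually considered balanced when this value falls below 0.1, the threshold applied in the paragraph above.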

Table 2.

Associations of AL rating status with resident home time and time in ER, hospital, nursing home, and hospice in the year following AL admission

Percentage point change in the share of days alive in:

| Rating (ref: No-rating AL) | Home | ER | Hospital | Nursing home | Hospice |
|---|---|---|---|---|---|
| Low-rating AL (rating <4.4) | 0.15 | −0.03 ** | −0.05 | −0.12 | 0.04 |
| | (−0.16 to 0.48) | (−0.04 to −0.01) | (−0.15 to 0.06) | (−0.39 to 0.15) | (−0.02 to 0.09) |
| High-rating AL (rating >=4.4) | 0.64 *** | −0.03 *** | −0.16 ** | −0.43 ** | −0.02 |
| | (0.31 to 0.97) | (−0.05 to −0.02) | (−0.26 to −0.06) | (−0.71 to −0.15) | (−0.07 to 0.04) |
| Observations | 59,831 | 59,831 | 59,831 | 59,831 | 59,831 |
| State fixed effects | Y | Y | Y | Y | Y |

Abbreviations: AL, assisted living; ER, emergency room.

Results were obtained from regressions based on propensity score weighted sample and adjustment for state fixed effects. Robust 95% CI shown in parentheses below estimates.

Significance: *** P<0.001; ** P<0.01; * P<0.05.

Sensitivity Analyses

After removing all 5-star reviews and recalculating the average rating, we found that a one-unit increase in an AL’s rating score was associated with a 0.23 pp (95% CI, 0.08 to 0.39; P<0.01) increase in home time (Supplementary Table S2), which is smaller than the main finding (0.33 pp). This smaller yet significant coefficient suggests that even if there were some fake reviews, online reviews are still informative about AL quality.

When restricted to ALs with at least 5 reviews, we found that the relationship between AL average rating score and resident home time was still positive and significant (Supplementary Table S3). The estimated coefficient was larger than the main finding (0.56 pp vs. 0.33 pp), suggesting that the quality difference among ALs in the new sample is likely to be larger than in the main analyses.

DISCUSSION

We found that residents of ALs with higher online ratings had significantly longer home time. On average, a one-unit increase in rating was associated with more than one additional day spent at home. Furthermore, we found that residing in high-rating ALs versus no-rating ALs was associated with 2.2 additional days at home, suggesting that no-rating ALs performed worse than high-rating ALs. The practical importance of the extra 1.1 to 2.2 days spent at home may vary from person to person. Some may argue that the effect size of these results is not very large. However, given the importance of being free from institutionalized settings, any time away from these settings is likely to be valued by older adults. Moreover, the combined effect of “institution free” days on the healthcare system is not trivial.

A number of prior studies have explored the association between online ratings and quality of care, although not in ALs. Most relevant to our study are those conducted in nursing homes. Johari et al. examined the association between online ratings and conventional NHC ratings and reported a weak correlation.29 Li et al. found that social media ratings of nursing homes were significantly correlated with and predictive of NHC quality measures.5 However, neither study examined the direct association between online ratings and resident-centered outcomes. Our study fills this gap in the literature by exploring the association between online ratings and resident home time. The positive and statistically significant association between online AL ratings and home time suggests that online ratings may be an important source for stakeholders to gain insights into the quality of care from residents’ perspective. However, it is important to note that information from online AL ratings should not replace, but rather complement, conventional measures of resident experience (e.g., obtained by surveys) because online rating systems have their own limitations.30 For example, online ratings are not risk-adjusted and can be affected by factors other than the quality of care (e.g., accessibility).31

Our finding that no-review signals poor quality is consistent with the results from a prior study in which the authors reported that primary care physicians (PCPs) with low perceived quality are less likely to be rated online.32 Our finding, however, differs from another study that reported (using the RateMDs platform) that while no-rating surgeons deliver better patient outcomes than low-rating surgeons, they perform no worse than high-rating surgeons.7 There are several possible reasons for these inconsistencies. One may be the different feedback mechanisms used by Google and RateMDs. Using a large data set of online reviews obtained from eBay, Dellarocas and Wood found that dissatisfied customers are less willing to provide feedback after they receive poor products or services, likely for fear of retaliation because reviews are not anonymous.33 Similar phenomena may also exist in online healthcare ratings. On Google, users must log in to write a review, and the review is therefore linked to a Google profile that may include their name and pictures; on RateMDs, users may write reviews without an account, and reviews are anonymous. It is also possible that social interactions cause patients to feel empathy towards providers and make them more likely to omit negative feedback online, even if they had bad experiences.34,35 Unlike settings such as AL or primary care, where customers and providers often have frequent social interactions, patients may see cardiac surgeons only once or twice in their life, as noted by Lu and Rui,7 and are therefore less likely to feel awkward about reporting negative experiences online. Hence, in settings where interactions are less common (e.g., surgeons), absence of reviews may simply reflect a lack of willingness to provide feedback online. However, in settings where customers have frequent interactions with providers (e.g., ALs and PCPs), absence of reviews may actually signal poor quality.

Lastly, potential resident selection may also affect the probability of providers being rated online. In Lu and Rui’s paper, the authors focused on patients who arrived at hospitals through the ER, and therefore the surgeon-patient assignment was likely to be random. In the present study, although propensity score weighting was used to mitigate selection bias, systematic differences may still exist because of possible non-random provider-resident assignment. If observably poor-quality AL communities disproportionately attract residents who are less able to leave reviews, this could cause differences in the observed patterns.

Our findings on online AL reviews have some practical implications for customers, AL providers, and policymakers. AL’s online reviews may signal quality of care and customers may use such information in searching for AL care. AL providers who seek to improve the care they provide, especially those with lower quality, may want to learn from online reviews and find areas for improvement. Lastly, because currently there are no mandatory reporting requirements for AL communities, online reviews may be a valuable source for policymakers to gain some insights into the care provided in ALs as it is reported by the consumers. In addition, efforts to make online reviews more accessible may help potential consumers to make more informed decisions in their AL selection.

Limitations

This study has a few limitations. First, we focused on Medicare FFS beneficiaries residing in AL communities, and the results might not be generalizable to AL residents who participated in MA plans. Second, possible selection bias could lead to systematic differences among residents across different AL categories. Selection bias can originate from both the AL community and residents. Customers with better financial and cognitive capacities may be more likely to check online ratings and make selections based on that search, and those residents may be in better overall health. Alternatively, AL communities with better quality are likely to attract more residents, allowing them to cherry-pick residents who have better health and therefore need less care. Third, our analyses were based on reviews from Google, and the results may not extrapolate to other online review platforms that use different feedback mechanisms. This is because the design of the feedback system (e.g., anonymous or not) may affect customers’ reviewing behavior and therefore the study conclusions. Additional validation studies using data from other platforms may be helpful. Finally, because online ratings have not been validated, it is possible they do not accurately reflect the views of all AL residents and their families.

CONCLUSIONS

In this study, we examined the association between online AL ratings and a patient-centered outcome, home time. We found a significant positive association between online rating and resident home time and that no-rating signals worse quality. Our results suggest that online reviews may be a valuable source for obtaining information on AL care.

Supplementary Material

IMPACT STATEMENT.

We certify that this work is novel. This is the first study in the United States that used national data to assess the association between assisted living (AL) online ratings and residents’ home time, a patient-centered outcome. We found that each one-unit increase in the AL’s average online rating (ranging from 1 to 5) was associated with an increase in residents’ risk-adjusted home time by 0.33 percentage points (pp; P<0.001; or 1.1 days). Furthermore, compared with residents in ALs without ratings, residents in high-rated ALs (average rating >=4.4) had a 0.64 pp (P<0.001; or 2.2 days) increase in home time. Our findings have some practical implications for customers, AL providers, and policymakers.

Key Points

In this observational study that included 59,831 residents in 12,143 ALs, we found that higher online rating scores were positively associated with residents’ home time, while the absence of ratings was associated with reduced home time.

Why does this paper matter?

It remains unknown in the existing literature whether AL’s online reviews are associated with resident outcomes. Findings from this study suggest that AL’s online reviews may signal quality of care issues, offering consumers information to make more informed decisions in their AL selection.

ACKNOWLEDGMENT

Sponsor’s Role

This study was conducted with the support of the following funders: Agency for Healthcare Research and Quality (AHRQ) (R01HS026893 [HTG]). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Funding Source:

This work was supported by the Agency for Healthcare Research and Quality grant number R01HS026893.

Footnotes

Conflict of Interest

The authors have indicated they have no potential conflicts of interest to disclose.
