Abstract

The predictive maintenance of electrical machines is a critical issue for companies, as it can greatly reduce maintenance costs, increase efficiency, and minimize downtime. In this paper, the issue of predicting electrical machine failures by predicting possible anomalies in the data is addressed through time series analysis. The time series data are from a sensor attached to an electrical machine (motor) measuring vibration variations in three axes: X (axial), Y (radial), and Z (radial X). The dataset is used to train a hybrid convolutional neural network with long short-term memory (CNN-LSTM) architecture. By employing quantile regression at the network output, the proposed approach aims to manage the uncertainties present in the data. The application of the hybrid CNN-LSTM attention-based model, combined with the use of quantile regression to capture uncertainties, yielded superior results compared to traditional reference models. These results can benefit companies by optimizing their maintenance schedules and improving the overall performance of their electric machines.

Keywords: electrical machines, empirical wavelet transform, fault detection, Savitzky–Golay filter, temporal fusion transformer

1. Introduction

Given the potential for significant reductions in maintenance costs, increased productivity, and reduced downtime, predictive maintenance of electrical machinery has become a top priority for companies [1]. Over the last few years, there has been much attention to applying predictive maintenance methods to predict electrical machine breakdowns by locating anomalies [2]. Identifying anomalous behavior in equipment is increasingly recognized as a crucial factor in anticipating maintenance actions [3] and achieving gains by avoiding unplanned downtime [4].

This paper thoroughly examines this critical topic by focusing on predicting electrical machine failures by examining time series data collected from sensors attached to the electrical machines. Optimizing maintenance schedules, increasing equipment lifespan, and enhancing the overall performance of electrical machines are some of the objectives of this study. Maintenance optimization is increasingly being explored using deep learning models [5,6,7,8], which is the focus of the method presented in this paper.

A major component of predictive maintenance is anomaly detection, which enables businesses to spot possible breakdowns quickly [9]. Time series data, such as the data gathered for this study, is particularly well suited for this kind of analysis since it enables us to look at how a given statistic changes over time [10]. Quantile regression, a statistical technique, was used to handle uncertainties in the time series data [11].

Real-world time series data generally exhibit non-linearities [12], making it challenging to apply conventional prediction techniques [13]. Therefore, advanced techniques were employed to address this issue, including convolutional neural network (CNN) [14], long short-term memory (LSTM) attention [15], and quantile regression [11], to accurately predict machine failures and manage uncertainties present in the data.

In this paper, we propose a novel approach to predicting electrical machine failures by forecasting possible anomalies in the data. Specifically, we utilize time series data from a vibration sensor attached to a real electrical machine, measuring variations in three axes (axial, radial, and radial X). Features are extracted from the data with a CNN, and the time series is forecast with a hybrid model based on LSTM with an attention mechanism. The resulting solution for time series anomaly prediction can be extended to other engineering fields.

Based on a hybrid CNN-LSTM attention model, an anomaly detection algorithm called empirical-cumulative-distribution-based outlier detection (ECOD) is applied to leverage the predictions in the 10% and 90% quantiles, providing the machine operator with the probability levels of faults. The resulting neural-based predictive maintenance tool can help companies make informed decisions about their maintenance processes.

This paper has the following contributions to improving fault detection based on time-based analyses:

The hybrid LSTM-CNN architecture with attention and gated residual networks (GRN) enhances the accuracy of the predictions.

The quantile regression at the network output helps to manage uncertainties present in the data.

The use of the empirical wavelet transform and the Savitzky–Golay filter assists in reducing noise in the signal and extracting relevant features for the analysis.

The remainder of this paper is organized as follows: Section 2 presents a review of the related work in predictive maintenance for electrical machines. Section 3 overviews the proposed methodology, including data collection and pre-processing, the custom hybrid CNN-LSTM attention model, quantile regression, and the ECOD anomaly detection algorithm. Section 4 presents the experimental results and analysis of the proposed approach, and Section 5 concludes the paper and discusses future research directions.

2. Related Works

There is a growing effort to improve ways of diagnosing electrical machines [16]; in this context, several approaches have been used to predict engine failures based on time series data. One is to use vibration analysis techniques to detect changes in the vibration signature of an engine [17], which can indicate misalignment, excessive wear, or other mechanical problems. Machine learning algorithms [18], such as decision trees [19], can be used to identify patterns in sensor readings and make predictions based on this information, while deep learning techniques have been widely used [20].

Time series spectrum analysis can be used to identify changes in the machine’s electrical signals, which may indicate failures in internal components such as bearings or windings [21]. Signal processing algorithms, such as the Fourier transform, can extract relevant information from these electrical signals and predict potential failures [22]. Furthermore, using time series forecasting, the increase in the number of failures can be monitored to assess the condition of the system being monitored [23].

State-of-the-art techniques have been applied to improve the prediction capability, such as the attention mechanism combined with AdaBoost proposed by Long et al. [24] for machine fault diagnosis. Yang et al. [25] proposed an ensemble empirical mode decomposition (EEMD) for the fault diagnosis of asynchronous machines, showing that their approach had a recognition rate of 99%, considering broken rotor bars, air gap eccentricity, and normal state.

A wide range of models have been successfully applied in time series forecasting. However, choosing the appropriate model is a challenging task [26], considering that the characteristics of the data influence model performance and since some methods have specific properties that can be helpful for non-linear forecasting. For improved signal analysis with non-linearity, techniques such as seasonality decomposition [27], wavelet transform [28], and empirical wavelet transform [29] show promise for denoising.

Hybrid models which combine noise suppression methods such as seasonal decomposition or wavelet transforms with forecasting models have been increasingly employed [30]. The advantage of using these approaches is that high-frequency variations are disregarded. The model has more effective results because it focuses on the variation trend and not on the signal noise [31]. An important observation to consider is that the filters cannot be too coarse to reduce all the variation in the signal, so a proper case evaluation must be performed [32].

Regarding noise reduction, Faysal et al. [33] proposed a noise-eliminated ensemble empirical mode decomposition (NEEEMD) method for fault diagnosis in rotating machinery. They proved that the NEEEMD could be more generalized and robust for the problem. In addition, using an ensemble-based method with wavelet packet transform (WPT), Chui et al. [34] showed that the signal-to-noise ratio could be improved using an optimized ensemble empirical model combined with WPT.

A technique that has been highlighted for noise reduction in time series forecasting is the empirical wavelet transform (EWT) [35]. Zhao et al. [36] and Xu et al. [37] applied the EWT considering an adaptive spectrum segmentation for the improvement in signal processing and fault diagnosis. Fault detection using EWT has proven to be promising, as presented by Xin et al. [38] for rotating machinery and by Xu et al. [39] for rolling bearings. Deng et al. [40], and Huang et al. [41] applied the EWT for machine bearing fault detection. The application of EWT for failure diagnosis extends to other types of machines, such as wind turbines [42], and other forecasting applications [43].

Among the time series forecasting models, there are several approaches such as neuro-fuzzy systems [44], autoregressive integrated moving average (ARIMA) [45], LSTM [46], ensemble learning methods [47], and the temporal fusion transformer (TFT) [48]. According to Li et al. [49], the TFT can improve the reliability and compactness of the forecasting and can even be applied to medium-term hourly time series data.

The wavelet neuro-fuzzy method was used by Stefenon et al. [50], who proposed a time series forecasting model and assessed its capability for predicting solar power generation. Wavelets were incorporated into the model for feature extraction, and the authors analyzed whether a hybrid model can anticipate, with a sufficient degree of precision, the production of electrical power when solar trackers are taken into account. With such a forecast, it can be decided whether using solar tracking is worthwhile.

A novel hybrid model that considers the benefits of linearity and non-linearity, as well as the effect of manual operations, was proposed by Fan et al. [51], combining the LSTM and ARIMA models. The LSTM model clearly outperforms the ARIMA model regarding fluctuating non-linear data. Results from coupling models outperform separate ones, with the ARIMA-LSTM model performing even better when production is adversely affected by frequent manual procedures.

Feng et al. [52] used an enhanced TFT model to predict the supply air temperature in high-speed train carriages. The model outperformed seven prominent methods in time series computing tasks, as shown by empirical simulations using a dataset comprising high-speed rail air-conditioning operations at a specific site in China. The focus of the prediction problem in the time dimension was also examined.

By combining machine learning classifiers with the feature extraction method wavelet scattering transform (WST), Toma et al. [53] proposed a system for classifying bearing faults. The experimental results showed that WST might improve bearing fault classification accuracy when compared to EWT, information fusion, and wavelet packet decomposition, achieving good classification accuracy for the fault diagnosis of rotating machinery.

To diagnose bearing faults, Van et al. [54] proposed the particle swarm optimization least-squares wavelet support vector machine classifier. One essential part of a spinning machine is the bearing; hence, it is crucial to maintain the bearing’s health. A thorough comparison of the suggested approach with existing approaches was conducted using a benchmark-bearing dataset.

To reduce noise in both the frequency and time domains, Tian et al. [55] introduced the wavelet-SANet anti-noise, a wavelet-based self-attention network for machinery malfunction diagnostics. This approach combines frequency-oriented fusion modules and transformer modules. The experimental findings on two open-bearing datasets show good performance for identifying machine faults.

Wang et al. [56] used the dual-tree complex WPT with the sub-band averaging kurtogram to diagnose problems with spinning machinery. Their approach divides a signal into sub-signals using a sliding window, then the sub-band kurtosis is computed. The efficacy and advancements of the suggested method were validated by a simulation case and two applications for fault diagnosis of a planetary gearbox and a rolling bearing.

The normal multi-component signal produced by machinery vibration frequently has various interference components that obscure defective features. Zhang et al. [57] presented a weak feature augmentation method based on EWT and improved adaptive bistable stochastic resonance (IABSR) to extract faulty features in precision machinery. The approach achieved fault feature improvement in the low-frequency band of the harmonic spectrum by fully utilizing the signal decomposition capability of EWT and the signal enhancement of IABSR. Two case studies on the identification of machinery faults illustrated the usefulness and superiority of the suggested method.

Machine faults can be accurately diagnosed by using vibration signal properties such as instantaneous frequency, instantaneous amplitude, or spectral kurtosis. Shi et al. [58] developed a wavelet-based technique, dubbed wavelet-based synchro extracting transform (WSET), and applied it to fault diagnosis. Two rotor and rolling bearing benchmarks were used to test the efficacy of WSET in identifying failure features for malfunction identification.

An important subsystem of a high-speed train is the wheelset bearing system, and its service safety significantly depends on identifying and treating any compound problems in this system. In this sense, Ding [59] proposed a double impulsiveness measurement indices bilaterally driven EWT method to detect and diagnose defects. Additional demodulation was performed on the signals found in the sideband lower–upper boundary pairs of the EWT to find compound faults in the wheelset bearing system. Simulation, bench, and running tests validated the proposed method.

By analyzing the inter-harmonic content of the current signal, Gadanayak and Mallick [60] established a method for arcing high-impedance fault (HIF) identification in distribution feeders. The newly created unique knot-based empirical mode decomposition and maximum overlap discrete WPT was employed to separate the inter-harmonic components. The findings showed that the suggested method can detect HIFs quickly while achieving good security against failure.

Liu et al. [61] developed an approach to enhance EWT to address the spectrum segmentation flaw and improve the method’s capacity to extract bearing fault data. The maximum envelope-fitting method highlighted each mode and reduced the number of point extremes that were not useful. Reducing the number of filters suppresses noise interference on the modal. Data on gearbox bearing faults in wind turbines and locomotive bearings confirmed the method’s efficacy.

To fuse three-channel vibration signals for the weak failure detection of hydraulic pumps, Yu et al. [62] presented a novel vibration signal fusion approach combining the improved EWT and the variance contribution rate. Simulation and experiment analyses showed that the fusion method effectively detects weak faults in hydraulic pumps. From the literature, EWT has advanced the field of machine fault diagnostics.

While the above-mentioned related works employ various techniques to predict machine failures, our work differs by combining the strengths of both CNNs and LSTMs in a hybrid model. Using LSTM allows us to model patterns of the time series data. At the same time, CNN extracts important features such as trend changes and other patterns commonly observed in time series data, which are often variable.

The CNN-LSTM hybrid model has been successfully applied in many domains, including natural language processing and computer vision. Still, its application in time series forecasting, particularly in the context of predictive maintenance, has not been extensively explored. Our approach leverages these two architectures to identify potential anomalies. Additionally, we employ quantile regression to manage uncertainties present in the data. The approach enables us to make better predictions and identify potential anomalies, which may be challenging with traditional methods.

3. Dataset

The dataset used in this research is a time series collected from a sensor attached to the machine’s structure. The machine is a three-phase, synchronous, alternating-current motor installed in an industrial plant, with a vibration sensor attached to its casing. The two-pole motor drives an exhaust fan located near a furnace and is powered by a frequency inverter that controls its speed. The findings can be evaluated for other types of equipment, such as hydraulic pumps, if vibration analysis is of interest.

The sensor is attached to the machine housing and can be either glued or bolted, ensuring proper contact to avoid noise in the data. The sensor measures the temperature on the surface of the housing and in the environment, rotation speed, frequency, vibrations, etc. For this research, only the vibrations in three axes, namely X, Y, and Z, are used as the input for the model, as they are relevant to detecting anomalies in the equipment, particularly in monitoring vibrations and imbalances.

After filtering and removing null values, the dataset comprised 7675 records between August 2021 and August 2022 (one year). It is worth noting that even though the data period is relatively long and vibration data is reported hourly, there are some periods within this interval where no data was collected for various reasons. Therefore, data pre-processing is necessary to generate more robust and reliable results.

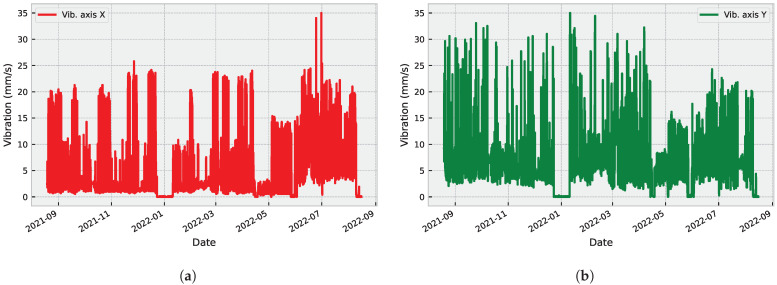

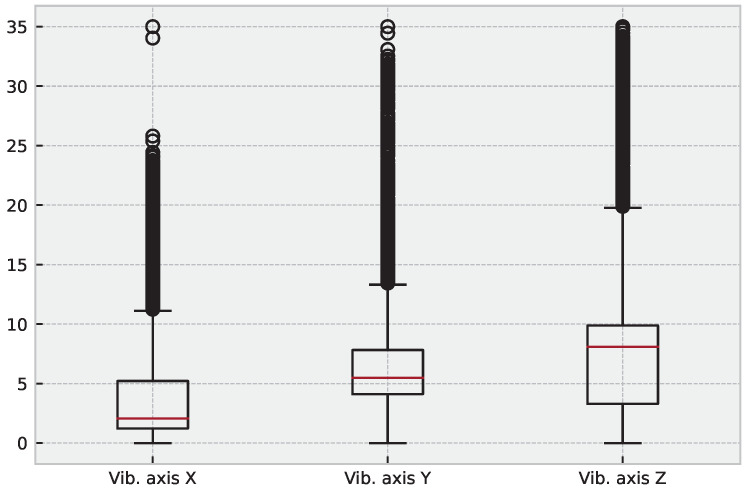

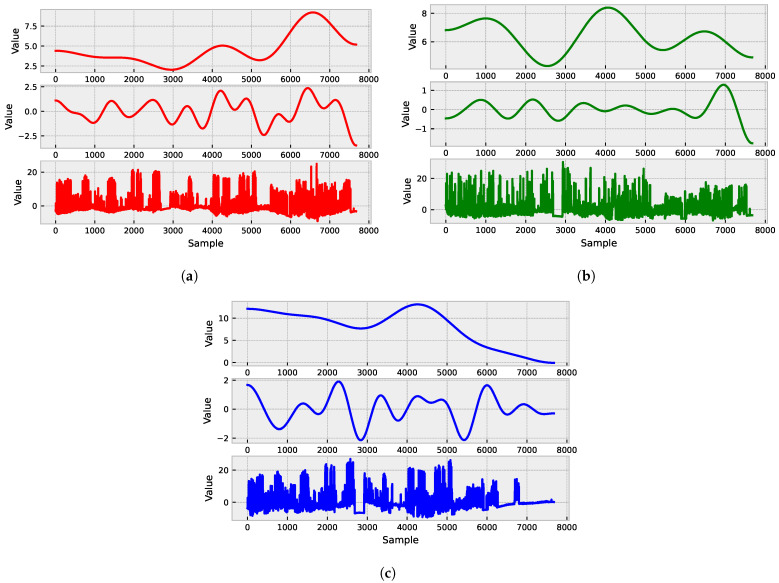

Figure 1 shows the raw vibration signals collected from three different axes: X (Figure 1a), Y (Figure 1b), and Z (Figure 1c). These signals display varying levels of amplitude and frequency, indicating different types of vibration patterns.

Figure 1.

Raw vibration signals collected from three different axes: X (a), Y (b), and Z (c).

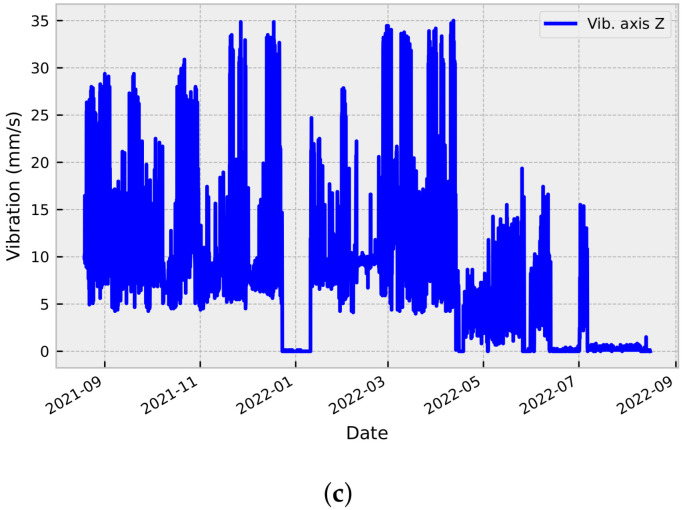

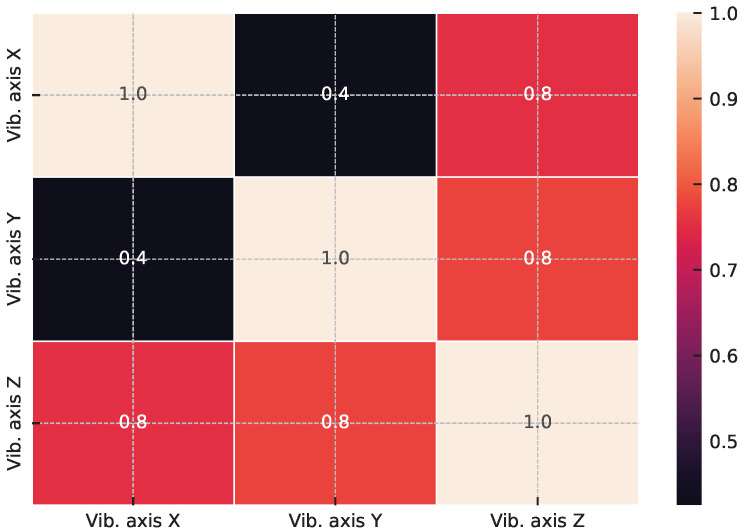

Moreover, abnormal vibration records can lead to important conclusions about operating load, useful life, imbalance, and others. We present the dataset characteristics in Table 1 to further analyze the signals, and additional statistical characteristics of the considered dataset are presented in Figure 2 and Figure 3.

Table 1.

Dataset characteristics.

| Statistic | Vib. Axis X | Vib. Axis Y | Vib. Axis Z |
|---|---|---|---|
| Mean | 4.5519 | 6.3701 | 7.9366 |
| Median | 2.0588 | 5.4902 | 8.0980 |
| Mode | 1.3725 | 0.0000 | 0.1373 |
| Range | 35.0000 | 35.0000 | 35.0000 |
| Variance | 29.8170 | 20.0177 | 46.2006 |
| Std. Dev. | 5.4605 | 4.4741 | 6.7971 |
| 25th %ile | 1.2353 | 4.1176 | 3.2941 |
| 50th %ile | 2.0588 | 5.4902 | 8.0980 |
| 75th %ile | 5.2157 | 7.8235 | 9.8824 |
| IQR | 3.9804 | 3.7059 | 6.5882 |
| Skewness | 1.7963 | 2.3620 | 1.4814 |
| Kurtosis | 2.4265 | 8.7628 | 3.0811 |

Figure 2.

PhiK correlation matrix for the analyzed signals. The matrix shows the pair-wise correlations between signals, with warmer colors indicating stronger positive correlations and cooler colors indicating stronger negative correlations.

Figure 3.

Boxplot for the analyzed signals. The plot displays the distribution of signal values, with each box representing the interquartile range (IQR) and the median line. The whiskers extend to the minimum and maximum values within 1.5 times the IQR, and any outliers beyond this range are shown as individual points.

4. Methodology

This section presents the proposed method and summarizes the techniques it employs: the empirical wavelet transform, the Savitzky–Golay filter, anomaly detection, and quantile regression.

4.1. Empirical Wavelet Transform

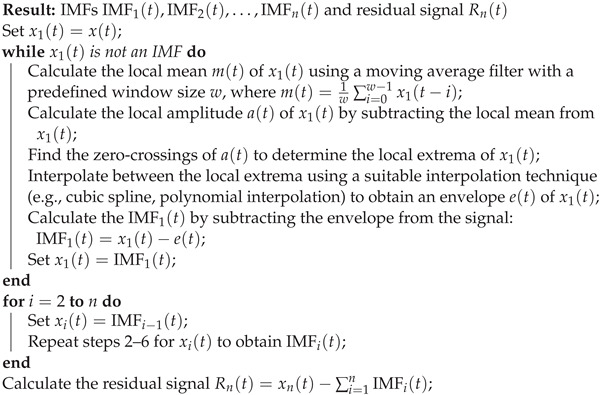

The EWT is a signal processing technique that decomposes a signal into oscillatory modes with different scales and frequencies [63]. Given an input signal and a mother wavelet , the EWT first generates a set of n non-linear and non-stationary functions called intrinsic mode functions (IMFs) using Algorithm 1 [64,65].

| Algorithm 1: Empirical wavelet transform |

|

After obtaining the set of IMFs, the EWT applies a Fourier transform to each IMF to obtain a set of n spectrograms, which are used to visualize the time–frequency content of the signal. The EWT can be expressed mathematically as follows:

where is the ith filter defined as the convolution of the scaling function and the mother wavelet scaled by a factor of :

| (1) |

A major advantage of EWT is its ability to adaptively decompose a signal into a set of components, each representing a distinct frequency band. This adaptability allows EWT to accurately capture a signal’s local and global characteristics, making it well-suited to analyze complex and irregular data patterns. EWT enables the extraction of information from signals with low signal-to-noise ratios [66].

The ability of EWT to handle non-stationary signals makes it a promising choice for analyzing time-varying data, such as those typically encountered in predictive maintenance tasks. Another benefit of EWT is its computational efficiency, important when working with large datasets or when real-time processing is required. Its flexibility in selecting wavelet functions allows for the optimal representation of the signal under analysis, further increasing its effectiveness in a wide range of applications [67].
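The idea of adaptively splitting the spectrum into bands can be illustrated with a deliberately simplified sketch. It is not the EWT itself: it replaces the smooth wavelet filters with ideal (brick-wall) filters and places the band boundaries midway between the strongest spectral peaks. The function name `band_split`, the parameter `n_modes`, and the synthetic two-tone signal are assumptions made for illustration only.

```python
import numpy as np

def band_split(signal, n_modes=3):
    """Split a 1-D signal into modes using brick-wall filters between spectral peaks."""
    n = len(signal)
    spectrum = np.fft.rfft(signal)
    magnitude = np.abs(spectrum)
    # Local maxima of the magnitude spectrum (first and last bins excluded).
    k = np.arange(1, len(magnitude) - 1)
    peaks = k[(magnitude[k] > magnitude[k - 1]) & (magnitude[k] >= magnitude[k + 1])]
    # Keep the n_modes strongest peaks, ordered by frequency.
    top = np.sort(peaks[np.argsort(magnitude[peaks])[-n_modes:]])
    # Band boundaries sit midway between consecutive retained peaks.
    cuts = [int(b) + 1 for b in (top[:-1] + top[1:]) // 2]
    edges = [0] + cuts + [len(spectrum)]
    modes = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        band = np.zeros_like(spectrum)
        band[lo:hi] = spectrum[lo:hi]  # keep only this frequency band
        modes.append(np.fft.irfft(band, n=n))
    return np.array(modes)

# Synthetic example: a slow and a fast oscillation (made-up data).
t = np.arange(512) / 512.0
slow = np.sin(2 * np.pi * 5 * t)
fast = 0.5 * np.sin(2 * np.pi * 40 * t)
modes = band_split(slow + fast, n_modes=2)
```

Because the bands partition the spectrum, the modes add up to the original signal. An actual EWT implementation would use smooth transition filters at the boundaries, which reduces ringing.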

Savitzky–Golay Filter

The Savitzky–Golay filter is a polynomial smoothing filter often used to remove noise from time series data while preserving the underlying trends in the data [68]. The filter works by fitting a polynomial of a specified order to a local window of the data and using this polynomial to estimate the smoothed values at each point in the time series [69].

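Given a time series $y_1, \dots, y_N$ with N data points, the Savitzky–Golay filter estimates the smoothed value $\hat{y}_i$ at each point using a polynomial of order p and a local window of size $2m+1$:

$$\hat{y}_i = \sum_{j=-m}^{m} c_j\, y_{i+j} \tag{2}$$

where the coefficients $c_j$ are obtained by solving a least-squares problem that minimizes the sum of the squared errors between the polynomial fit and the original data:

$$\min_{a_0, \dots, a_p} \sum_{j=-m}^{m} \left( y_{i+j} - \sum_{k=0}^{p} a_k\, j^{k} \right)^{2} \tag{3}$$

The solution to this least-squares problem can be written in terms of a set of pre-computed coefficients that only depend on the order of the polynomial p and the size of the local window $2m+1$. These coefficients can be pre-computed and stored in a matrix M for efficient computation of the smoothed values:

$$\hat{\mathbf{y}} = M \mathbf{y} \tag{4}$$

where $\hat{\mathbf{y}}$ is a vector of length N containing the estimated smoothed values and $\mathbf{y}$ is a vector of length N containing the original data. The coefficients in the matrix M can be obtained from the least-squares solution:

$$(a_0, \dots, a_p)^{\top} = (X^{\top} X)^{-1} X^{\top}\, (y_{i-m}, \dots, y_{i+m})^{\top} \tag{5}$$

where X is a matrix with dimensions $(2m+1) \times (p+1)$ containing the powers of the time variable t for the local window of size $2m+1$ and the polynomial order p. Specifically, $X_{jk} = t_j^{k}$ for $j = -m, \dots, m$ and $k = 0, \dots, p$, with $t_j = j$. Since $\hat{y}_i = a_0$, the first row of $(X^{\top} X)^{-1} X^{\top}$ holds the weights $c_j$ that populate each row of M.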
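The least-squares construction of the filter weights can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation used in this study: the names `savgol_weights` and `savgol_smooth`, the half-window of 2, the polynomial order of 2, and the edge-padding strategy are all choices made here for the example.

```python
import numpy as np

def savgol_weights(half_window, order):
    """Weights for the centre point of a window of size 2 * half_window + 1."""
    j = np.arange(-half_window, half_window + 1, dtype=float)
    X = np.vander(j, order + 1, increasing=True)  # column k holds j**k
    # Row 0 of the pseudo-inverse maps the window onto a_0,
    # the value of the fitted polynomial at the window centre.
    return np.linalg.pinv(X)[0]

def savgol_smooth(y, half_window=2, order=2):
    """Smooth y with a Savitzky-Golay filter; edges are padded by repetition."""
    c = savgol_weights(half_window, order)
    padded = np.pad(np.asarray(y, dtype=float), half_window, mode="edge")
    # correlate evaluates sum_j c_j * y[i + j] at every window position.
    return np.correlate(padded, c, mode="valid")

# A quadratic is reproduced exactly by an order-2 filter away from the edges.
t = np.arange(20, dtype=float)
quadratic = 0.5 * t**2 - 3.0 * t + 1.0
smoothed = savgol_smooth(quadratic, half_window=2, order=2)
weights = savgol_weights(2, 2)
```

For a five-point window and a quadratic fit, `weights` matches the classical values (-3, 12, 17, 12, -3)/35. In practice a library routine such as `scipy.signal.savgol_filter` would normally be preferred.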

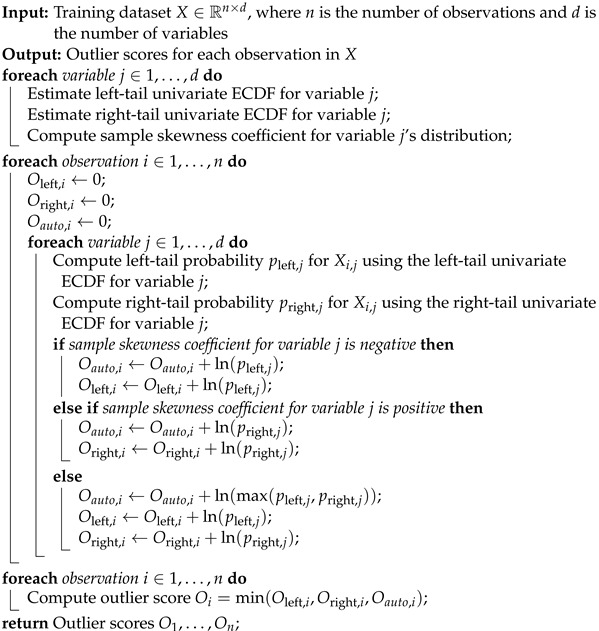

4.2. Anomaly Detection

The empirical-cumulative-distribution-based outlier detection (ECOD) algorithm detects outliers in a dataset as rare events located in the low-density tail regions of the probability distribution. The algorithm estimates the distribution of each variable separately through its empirical cumulative distribution function and aggregates the resulting tail probabilities across variables. Formally, let $X = \{x_1, \dots, x_n\}$ be a set of n d-dimensional observations, where each observation $x_i$ is a vector of d real-valued variables. The ECOD algorithm proceeds as follows:

For each variable $j = 1, \dots, d$, the left and right tails of the empirical cumulative distribution functions (ECDFs) are estimated. Next, the ECOD computes the sample skewness coefficient for the jth feature distribution, used to determine whether to use the left- or right-tail probability in computing the outlier score.

An assessment of the observation $x_i$ is attained by computing three values: the O-left score, the O-right score, and the O-auto score. The O-left score constitutes an assessment of the outliers located in the lower tail of the distribution for each variable; the O-right score quantifies outliers situated in the upper tail of the distribution for each variable; and the O-auto score implements an adaptive adjustment of the tail probabilities based on the distribution’s skewness. The procedure is summarized in Algorithm 2.

| Algorithm 2: ECOD outlier detection algorithm |

|
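The three scores described above can be sketched with NumPy as follows. This is an illustrative reading of the ECOD procedure, assuming that the tail probabilities are aggregated as sums of negative logarithms and that the final score is the largest of the three; the function name `ecod_scores` and the synthetic data are hypothetical.

```python
import numpy as np

def ecod_scores(X):
    """Outlier score per row of X (n samples, d features); larger is more anomalous."""
    X = np.asarray(X, dtype=float)
    n, d = X.shape
    o_left = np.zeros(n)
    o_right = np.zeros(n)
    o_auto = np.zeros(n)
    for j in range(d):
        col = X[:, j]
        ordered = np.sort(col)
        # Left-tail ECDF: share of samples <= x. Right-tail ECDF: share of samples >= x.
        left = np.searchsorted(ordered, col, side="right") / n
        right = (n - np.searchsorted(ordered, col, side="left")) / n
        centred = col - col.mean()
        skew = np.mean(centred**3) / (np.mean(centred**2) ** 1.5 + 1e-12)
        o_left += -np.log(left)
        o_right += -np.log(right)
        # Skewness selects the tail: left for negative skew, right otherwise.
        o_auto += -np.log(left if skew < 0 else right)
    return np.maximum(np.maximum(o_left, o_right), o_auto)

# Synthetic three-axis data with one injected anomaly (made-up values).
rng = np.random.default_rng(0)
data = rng.normal(size=(200, 3))
data[17] = [8.0, -8.0, 8.0]
scores = ecod_scores(data)
```

The injected row receives the highest score because it lies in an extreme tail of every variable. A maintained implementation is available in the PyOD library and would be the natural choice outside of a teaching example.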

4.3. Quantile Regression

Quantile regression is an extension of the linear regression model that estimates the conditional quantiles of the response variable. It estimates the values of the response variable at various quantiles of the response’s conditional distribution given the predictor variables. The method is beneficial when the mean regression function does not represent the relationship between the response and predictor variables and when the response distribution is asymmetric or has heavy tails.

Let Y be the response variable and let $X$ represent a vector of p predictor variables. The quantile regression model can be formulated as follows:

$$Y = X^{\top} \beta_{\tau} + \varepsilon_{\tau}, \qquad Q_{\tau}(Y \mid X) = X^{\top} \beta_{\tau} \tag{6}$$

where $Q_{\tau}(Y \mid X)$ is the conditional $\tau$-quantile of Y given $X$, $\tau$ is the quantile level (with $0 < \tau < 1$), $\beta_{\tau}$ is the vector representing the $\tau$-quantile intercept and slope parameters, and $\varepsilon_{\tau}$ is the error term, which follows a $\tau$-dependent distribution whose conditional $\tau$-quantile is zero. The regression coefficients $\beta_{\tau}$ quantify the impact of the predictor variables on the $\tau$-quantile of the response variable.

To estimate the quantile regression coefficients, one typically minimizes the following objective function:

$$\hat{\beta}_{\tau} = \arg\min_{\beta} \sum_{i=1}^{n} \rho_{\tau}\left( y_i - x_i^{\top} \beta \right) \tag{7}$$

where $y_i$ is the observed response for the ith observation, $x_i$ is the vector of predictor variables for the ith observation, $\beta$ is the vector of quantile regression coefficients, and $\rho_{\tau}(u) = u\,(\tau - I(u < 0))$ is the check function. Here, $I(u < 0)$ is an indicator function that equals 1 when $u < 0$ and 0 otherwise.
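The check function can be made concrete with a short sketch. It assumes the standard pinball form, in which a residual u is weighted by the quantile level when it is non-negative and by the quantile level minus one when it is negative. It then verifies numerically that the constant minimizing the loss is an empirical quantile. The helper name `pinball_loss` and the sample 1, 2, ..., 100 are illustrative assumptions.

```python
import numpy as np

def pinball_loss(y_true, y_pred, tau):
    """Mean check-function loss u * (tau - 1[u < 0]) with u = y_true - y_pred."""
    u = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(u * (tau - (u < 0))))

# Illustrative sample and a grid of constant predictions.
y = np.arange(1.0, 101.0)
candidates = np.arange(0.0, 101.0, 0.5)
best = {
    tau: float(candidates[np.argmin([pinball_loss(y, q, tau) for q in candidates])])
    for tau in (0.1, 0.9)
}
```

With these data, the minimizers fall near the 10th and 90th percentiles of the sample, which is why training a network with this loss at the 10% and 90% levels yields a prediction band rather than a single point forecast.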

4.4. Limitations

A limitation in applying the proposed method is that the anomaly conditions can be related to high frequencies, and the use of filters can hide these patterns; therefore, an analysis of the relationship between identifying what is noise and what is a failure characteristic should be conducted.

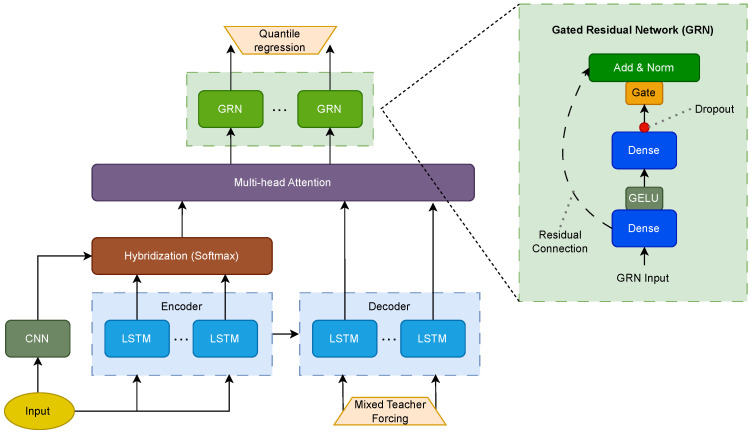

5. Proposed Architecture

The proposed architecture for time series forecasting is a hybrid neural network that combines the strengths of LSTM and CNN. The network is designed to capture complex temporal dependencies in time series data by leveraging the complementary strengths of LSTM and CNN, while using attention mechanisms and gated residual units to improve the accuracy and stability of the predictions. Table 2 summarizes the main parameters and variables employed in this section.

Table 2.

Summary of parameters and variables in the proposed architecture.

| Symbol | Description |
|---|---|
| X | Input sequence (time series of interest) |
|  | Input at time step t |
| h | Sequence of hidden states from LSTM encoder |
|  | Hidden state at time step t |
| W | Weight matrices |
| b | Bias vectors |
|  | Input gate vector at time step t |
|  | Forget gate vector at time step t |
|  | Output gate vector at time step t |
|  | Candidate gate vector at time step t |
|  | Candidate memory cell at time step t |
| c | Feature map of the input sequence from CNN |
|  | Attention weights for encoder hidden state and CNN output |
|  | Context vector at time step t |
|  | Query matrix at time step t |
|  | Key matrix at time step t |
|  | Value matrix at time step t |
|  | Multi-head attention weights for key-value pairs |
|  | Multi-head context vector at time step t |
|  | Intermediate output of the GRN |
|  | Intermediate output of the GRN |
|  | Quantile regression output at time step t |

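First, the LSTM encoder processes the input sequence $X = (x_1, \dots, x_T)$ (given by the time series of interest) to produce a sequence of hidden states $h = (h_1, \dots, h_T)$, which summarize the temporal information of the input. The LSTM equations for computing the hidden states are:

$$i_t = \sigma\left( W_{xi} x_t + W_{hi} h_{t-1} + b_i \right) \tag{8}$$

$$f_t = \sigma\left( W_{xf} x_t + W_{hf} h_{t-1} + b_f \right) \tag{9}$$

$$o_t = \sigma\left( W_{xo} x_t + W_{ho} h_{t-1} + b_o \right) \tag{10}$$

$$g_t = \tanh\left( W_{xg} x_t + W_{hg} h_{t-1} + b_g \right) \tag{11}$$

$$\tilde{c}_t = f_t \odot \tilde{c}_{t-1} + i_t \odot g_t \tag{12}$$

$$h_t = o_t \odot \tanh\left( \tilde{c}_t \right) \tag{13}$$

in which $\sigma$ is the sigmoid function, ⊙ is element-wise multiplication, and W and b are weight matrices and bias vectors, respectively. The input $x_t$ and hidden state $h_{t-1}$ are multiplied by different weight matrices $W_{xi}$, $W_{hi}$, $W_{xf}$, $W_{hf}$, $W_{xo}$, $W_{ho}$, $W_{xg}$, and $W_{hg}$, and added to the bias vectors $b_i$, $b_f$, $b_o$, and $b_g$, to produce input, forget, output, and candidate gate vectors $i_t$, $f_t$, $o_t$, and $g_t$. The memory cell is written here as $\tilde{c}_t$ to distinguish it from the CNN feature map c. The output gate $o_t$ controls which part of the candidate memory cell $\tilde{c}_t$ is passed through the hyperbolic tangent activation function to produce the hidden state $h_t$ [70].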
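The gate computations described above can be followed step by step in a small NumPy sketch. It assumes the standard LSTM formulation, with a hyperbolic tangent on the candidate gate, and uses random weights, three input channels (one per vibration axis), and four hidden units purely for illustration. The function name `lstm_step` and the dictionary layout of the parameters are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W_x, W_h, b):
    """One LSTM step; W_x, W_h, b are dicts keyed by gate name 'i', 'f', 'o', 'g'."""
    pre = {k: W_x[k] @ x_t + W_h[k] @ h_prev + b[k] for k in "ifog"}
    i_t = sigmoid(pre["i"])            # input gate
    f_t = sigmoid(pre["f"])            # forget gate
    o_t = sigmoid(pre["o"])            # output gate
    g_t = np.tanh(pre["g"])            # candidate gate
    c_t = f_t * c_prev + i_t * g_t     # memory cell update
    h_t = o_t * np.tanh(c_t)           # hidden state
    return h_t, c_t

rng = np.random.default_rng(0)
n_in, n_hidden = 3, 4
W_x = {k: rng.normal(scale=0.1, size=(n_hidden, n_in)) for k in "ifog"}
W_h = {k: rng.normal(scale=0.1, size=(n_hidden, n_hidden)) for k in "ifog"}
b = {k: np.zeros(n_hidden) for k in "ifog"}

# Run the cell over a made-up sequence of 10 three-axis readings.
series = rng.normal(size=(10, n_in))
h, c = np.zeros(n_hidden), np.zeros(n_hidden)
hidden_states = []
for x_t in series:
    h, c = lstm_step(x_t, h, c, W_x, W_h, b)
    hidden_states.append(h)
hidden_states = np.array(hidden_states)
```

The array `hidden_states` plays the role of the encoder output h: one hidden vector per time step, each bounded in magnitude by one because of the output gate and the hyperbolic tangent.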

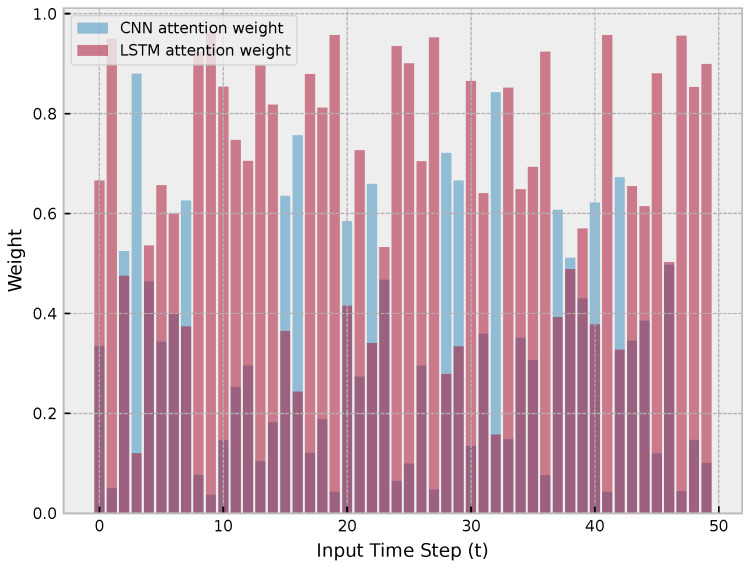

Next, an attention mechanism combines the encoder output h with the output of a CNN, denoted as c, which is a feature map of the input sequence obtained by applying convolutional filters to the time series. The attention mechanism computes a context vector as a weighted sum of the encoder output h and the CNN output c, where the weights are learned dynamically based on the final error through backpropagation. The attention weights for each encoder hidden state and CNN output are computed as:

| (14) |

and the context vector is computed as follows:

| (15) |

After the attention mechanism combines the encoder output h and the CNN output c to produce the context vector , the multi-head attention mechanism is used to link the decoder output to the hybridized LSTM output .

The query matrix corresponds to the decoder output at time step t, and has dimensions , where is the dimension of the query vector and m is the number of attention heads. The key and value matrices and correspond to the hybridized encoder and CNN output at time step t, respectively, and have dimensions and , where and are the dimensions of the key and value vectors, respectively, and T is the length of the input time series. The multi-head attention weights for each key–value pair are then computed as follows:

| (16) |

where is the hth attention head of the query matrix , and is the ith column of the key matrix . The multi-head context vector is then computed as a weighted sum of the value matrix , using the attention weights :

| (17) |

where is the ith column of the value matrix .
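The weighted-sum structure of multi-head attention can be sketched as follows. The scoring function is an assumption: the sketch uses scaled dot-product scores followed by a softmax, and it omits the learned projection matrices, so it illustrates the mechanism rather than reproducing the exact layer of the proposed model. The function name `multi_head_attention` and all dimensions are hypothetical.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(query, keys, values, n_heads):
    """query: (d_model,); keys, values: (T, d_model).

    Returns the context vector (d_model,) and the weights (n_heads, T).
    """
    T, d_model = keys.shape
    d_head = d_model // n_heads
    q = query.reshape(n_heads, d_head)
    k = keys.reshape(T, n_heads, d_head).transpose(1, 0, 2)    # (heads, T, d_head)
    v = values.reshape(T, n_heads, d_head).transpose(1, 0, 2)  # (heads, T, d_head)
    scores = np.einsum("hd,htd->ht", q, k) / np.sqrt(d_head)
    weights = softmax(scores, axis=1)                          # one distribution per head
    context = np.einsum("ht,htd->hd", weights, v).reshape(d_model)
    return context, weights

rng = np.random.default_rng(0)
T, d_model, n_heads = 6, 8, 2
query = rng.normal(size=d_model)
keys = rng.normal(size=(T, d_model))
v0 = rng.normal(size=d_model)
values = np.tile(v0, (T, 1))  # identical value rows make the result easy to check
context, weights = multi_head_attention(query, keys, values, n_heads)
```

Each head produces a probability distribution over the T time steps, and the context vector is the corresponding weighted average of the values. With identical value rows, as in this toy input, the context equals that row regardless of the weights.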

After the multi-head attention mechanism links the decoder output to the hybridized encoder and the CNN output, the output is passed through gated residual networks (GRN) to produce the final quantile regression outputs.

Specifically, the GRN takes the multi-head context vector as the input and first applies two separate linear transformations, denoted as and , to the input vector . The resulting output is then passed through a Gaussian error linear unit (GELU) activation function, followed by another linear transformation, denoted as , to produce the intermediate output :

| (18) |

| (19) |

where and are bias terms; and the GELU activation function is given by:

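$$\mathrm{GELU}(x) = x\, \Phi(x) \tag{20}$$

where $\Phi(x)$ is the cumulative distribution function (CDF) of the standard normal distribution, i.e.,

$$\Phi(x) = \frac{1}{2} \left[ 1 + \operatorname{erf}\left( \frac{x}{\sqrt{2}} \right) \right] \tag{21}$$

The GELU function weights each input by $\Phi(x)$: it approaches the identity function for large positive inputs and smoothly maps large negative inputs to zero, using the CDF of the standard normal distribution to introduce non-linearity. The resulting function is continuous and differentiable everywhere [71].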
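A few evaluations help to show how the activation behaves. The sketch below assumes the standard definition of GELU as the input multiplied by the standard normal CDF, computed through the error function from the Python standard library. The sample points are arbitrary.

```python
import math

def gelu(x):
    """GELU(x) = x * Phi(x), with Phi the standard normal CDF."""
    return x * 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

# Evaluate at a few arbitrary points to see the limiting behaviour.
values = {x: gelu(x) for x in (-6.0, -1.0, 0.0, 1.0, 6.0)}
```

Large positive inputs pass through almost unchanged, large negative inputs are pushed towards zero, and moderately negative inputs yield small negative outputs, which is what distinguishes GELU from a rectified linear unit.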

The intermediate output is then passed through the gated linear unit (GLU) transformation, allowing for the suppression of unnecessary parts of the GRN. The GLU transformation is defined as follows:

| (22) |

where is the sigmoid activation function, and , , , and are learned parameters.

Finally, the output of the GLU transformation is added to the input vector and passed through layer normalization to produce the final quantile regression output :

| (23) |

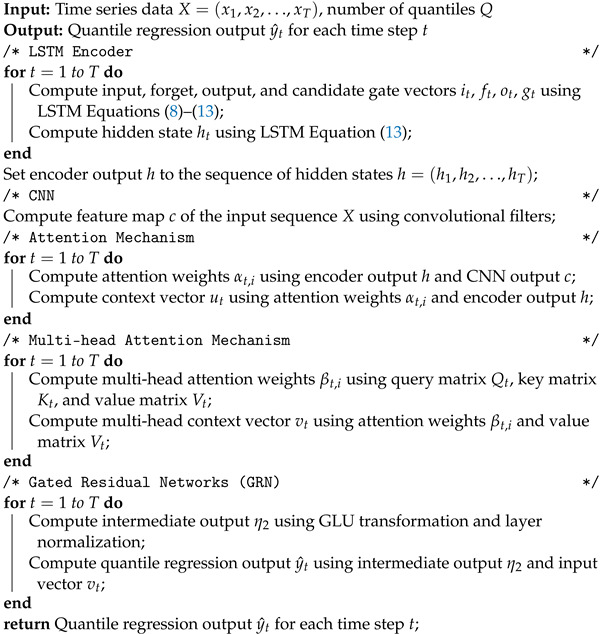

where layer normalization helps to stabilize network training. The procedure is summarized in Algorithm 3. The structure of the proposed method is shown in Figure 4.
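The flow through the GRN (linear transformations, GELU, a further linear transformation, the gated linear unit, the residual connection, and layer normalization) can be sketched as below. The parameter names `W1` to `W5` and `b1`, `b2`, `b4`, `b5` are hypothetical labels, the two input transformations are simply summed, and the layer normalization omits the learnable scale and shift. All of these are assumptions made for the example.

```python
import math
import numpy as np

def gelu(x):
    # x * Phi(x), with Phi evaluated through the error function
    return 0.5 * x * (1.0 + np.vectorize(math.erf)(x / math.sqrt(2.0)))

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def layer_norm(x, eps=1e-5):
    # Normalization without the learnable scale and shift
    return (x - x.mean()) / np.sqrt(x.var() + eps)

def grn(a, p):
    """Gated residual network applied to vector a; p holds hypothetical parameters."""
    hidden = gelu(p["W2"] @ a + p["W3"] @ a + p["b2"])  # two input transformations + GELU
    eta = p["W1"] @ hidden + p["b1"]                    # intermediate output
    gate = sigmoid(p["W4"] @ eta + p["b4"])             # GLU gate in (0, 1)
    glu = gate * (p["W5"] @ eta + p["b5"])              # gated linear unit
    return layer_norm(a + glu)                          # residual connection + normalization

rng = np.random.default_rng(0)
d = 8
params = {name: rng.normal(scale=0.3, size=(d, d)) for name in ("W1", "W2", "W3", "W4", "W5")}
params.update({name: np.zeros(d) for name in ("b1", "b2", "b4", "b5")})
a = rng.normal(size=d)
out = grn(a, params)
```

When the gate saturates near zero, the block reduces to a normalized copy of its input, which is how the GLU can suppress parts of the network that are not needed.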

| Algorithm 3: Hybrid LSTM-CNN with attention and GRN for time series forecasting |


Figure 4.

Graphical representation of the hybrid LSTM-CNN with attention and GRN for time series forecasting.

6. Results

In this study, the time series signals were pre-processed using several techniques to enhance the accuracy of the forecasting model. This step is considered crucial, since time series data may have characteristics that can affect forecasting models, such as noise, missing values, and seasonality. Data pre-processing improves model accuracy and interpretability.

Data noise can arise from measurement errors, recording inconsistencies, or random fluctuations. Smoothing techniques, such as moving averages or exponential smoothing, can reduce the impact of noise and improve model pattern capture. Missing values in time series data can lead to gaps in the input sequence, resulting in poor predictions.

Thus, we pre-processed the data to ensure the input sequences were clean and suitable for our hybrid LSTM-CNN architecture. To this end, the signals were first normalized using min–max normalization so that all data points fell within the same range, preventing differences in scale between signals from dominating the model.
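A minimal sketch of min–max normalization is given below. The sample readings are invented for illustration, and mapping a constant signal to zeros is a convention chosen here, not something specified in the paper.

```python
def min_max_normalize(values):
    """Scale a sequence linearly to [0, 1]; a constant sequence maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

vibration_x = [0.12, 0.18, 0.15, 0.95, 0.14]  # made-up readings
scaled = min_max_normalize(vibration_x)
```

In a forecasting setting, the minimum and maximum would typically be computed on the training split only and then reused for validation and test data.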

To capture the trend of the signals, we used the EWT (see Figure 5) to decompose each signal into different frequency bands and capture any trends in the low-frequency components. This allowed us to de-trend the signals and remove any long-term patterns or irregularities that could affect the model’s accuracy.

Figure 5.

EWT decomposition of the signal captures any trends in the low-frequency components and allows for the de-trending of the signals to remove long-term patterns or irregularities that could affect the model’s accuracy. Resulting EWT decomposition for axes: X (a), Y (b), and Z (c).

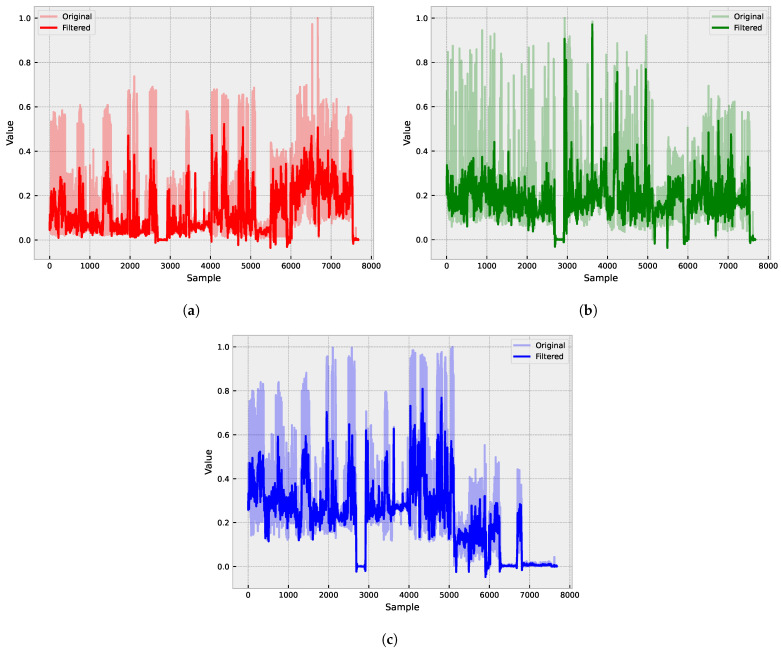

Finally, we applied a Savitzky–Golay filter to the signals to remove any remaining high-frequency noise or fluctuations. Figure 6 shows the effect of the filter on the time series data by plotting the original, noisy signal alongside the filtered signal. The comparison shows that the filter reduces high-frequency noise while preserving the overall shape and trend of the original signal. The filtered signal is smoother and less affected by noise, making it a more suitable input for the forecasting model.

Figure 6.

Application of a smoothing filter to improve signal quality. The Savitzky–Golay filter removes high-frequency noise and fluctuations while preserving the signal’s shape and trend. This results in a cleaner and more accurate signal that can be used as input for the forecasting model. Original and filtered signals for axes: X (a), Y (b), and Z (c).
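To make the smoothing step concrete, the sketch below applies a Savitzky–Golay filter with a 5-point window and a quadratic polynomial, for which the convolution coefficients are (-3, 12, 17, 12, -3)/35. The window length and polynomial order are assumptions chosen for illustration, since the settings used in this work are not given here, and the edge samples are simply copied.

```python
# 5-point, quadratic Savitzky-Golay smoothing coefficients (they sum to 1).
SG_COEFFS = [-3 / 35, 12 / 35, 17 / 35, 12 / 35, -3 / 35]

def savgol_smooth_5pt(signal):
    """Smooth interior samples with a 5-point quadratic Savitzky-Golay kernel.

    The first and last two samples are copied unchanged (a simplification).
    """
    half = len(SG_COEFFS) // 2
    smoothed = list(signal)
    for i in range(half, len(signal) - half):
        window = signal[i - half:i + half + 1]
        smoothed[i] = sum(c * s for c, s in zip(SG_COEFFS, window))
    return smoothed

noisy = [0.0, 1.2, 1.8, 3.3, 3.9, 5.1, 5.8, 7.2]  # made-up noisy ramp
smooth = savgol_smooth_5pt(noisy)
```

Because the kernel fits a local quadratic, a signal that is itself quadratic passes through unchanged, which is why the filter preserves shape better than a plain moving average. For real data, `scipy.signal.savgol_filter(x, window_length, polyorder)` offers the general case with proper edge handling.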

6.1. Time Series Forecasting

First, we compared the proposed model with traditional regression methods available in most off-the-shelf forecasting libraries, using the mean squared error (MSE) as the metric. As Table 3 shows, the proposed model outperforms all of the analyzed regression methods. The following configuration was used: a sequence of 50 input time steps to predict the next 5 time steps, resulting in a network with 11,343,653 trainable parameters. A one-layer LSTM with 64 hidden units was used in both the encoder and the decoder, the multi-head attention mechanism was set to 16 heads, and a pre-trained ResNet18 was employed for the CNN.

Table 3.

Comparison of the proposed model with the traditional benchmarks regarding the MSE.

| Model | MSE |
|---|---|
| ElasticNet Regression | 0.0056 |
| Decision Tree Regressor | 0.0048 |
| Random Forest Regressor | 0.0023 |
| K-Nearest Neighbours Regressor | 0.0051 |
| XGBoost | 0.0013 |
| Bagging Regressor | 0.0025 |
| Extra Trees Regressor | 0.0020 |
| MLP Regressor | 0.0015 |
| Gaussian Process Regressor | 0.0350 |
| Proposed Model | 0.0006 |
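The 50-in, 5-out configuration and the MSE metric can be illustrated with a short sketch. The window stride of one step and the helper names are assumptions made for this example; the placeholder series is not the paper's data.

```python
def make_windows(series, n_in=50, n_out=5):
    """Split a 1-D series into (input, target) pairs with a stride of one step."""
    pairs = []
    for start in range(len(series) - n_in - n_out + 1):
        x = series[start:start + n_in]
        y = series[start + n_in:start + n_in + n_out]
        pairs.append((x, y))
    return pairs

def mean_squared_error(y_true, y_pred):
    """Average squared difference between two equal-length sequences."""
    if len(y_true) != len(y_pred):
        raise ValueError("sequences must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

series = [float(i) for i in range(60)]  # placeholder data
pairs = make_windows(series)            # 6 pairs for a series of length 60
```

Each pair holds 50 consecutive samples as the model input and the following 5 samples as the forecasting target, and the MSE is then averaged over the predicted steps.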

Then, Table 4 displays the performance of three different models regarding quantile regression (QR) accuracy for the 10% and 90% percentiles. The first model listed is the default Seq2Seq model, which serves as a baseline for comparison. The second model, Seq2Seq + MHA, includes a multi-head attention mechanism to improve the accuracy of the predictions. Finally, the third model is the proposed model, which utilizes the hybrid LSTM-CNN architecture with attention and gated residual networks (GRN) to enhance the accuracy of the predictions.

Table 4.

Comparison of the proposed model with the traditional benchmarks regarding the QR.

| Model | QR 10% | QR 90% |
|---|---|---|
| Default Seq2Seq | 0.0056 | 0.0068 |
| Seq2Seq + MHA | 0.0056 | 0.0064 |
| Proposed Model w/ResNet18 | 0.0028 | 0.0030 |

The results show that the proposed model outperforms the default Seq2Seq model for both quantiles, achieving the lowest QR values in Table 4: 0.0028 and 0.0030 for the 10% and 90% quantiles, respectively. The Seq2Seq + MHA model matches the default Seq2Seq model at the 10% quantile (0.0056) and improves on it at the 90% quantile (0.0064 versus 0.0068). This suggests that the proposed model is more accurate in predicting extreme events in the time series data.
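Quantile regression outputs are commonly scored with the pinball (quantile) loss, sketched below. Whether the QR columns of Table 4 report exactly this quantity is an assumption, and the function name is illustrative.

```python
def pinball_loss(y_true, y_pred, q):
    """Mean pinball (quantile) loss for a quantile level q in (0, 1)."""
    if not 0.0 < q < 1.0:
        raise ValueError("q must be strictly between 0 and 1")
    total = 0.0
    for t, p in zip(y_true, y_pred):
        error = t - p
        # Under-prediction is weighted by q, over-prediction by (1 - q).
        total += max(q * error, (q - 1.0) * error)
    return total / len(y_true)

# For q = 0.9, predicting too low costs nine times more than predicting too high.
too_low = pinball_loss([1.0], [0.0], 0.9)
too_high = pinball_loss([0.0], [1.0], 0.9)
```

This asymmetry is what pushes the 90% output above most observations and the 10% output below them.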

The findings of this study suggest that the hybrid LSTM-CNN architecture with attention and GRN is an effective approach for time series forecasting, particularly when predicting extreme events. The results also highlight the importance of utilizing attention mechanisms and GRN to enhance the accuracy of the predictions. Figure 7 illustrates the effectiveness of the hybrid LSTM-CNN architecture with attention and GRN for time series forecasting. The figure displays the way in which the network combines the inputs from the LSTM encoder and the CNN input to generate improved predictions, particularly for extreme events.

Figure 7.

Illustration of how the network dynamically adjusts the weights of the LSTM encoder and CNN input to improve the results.

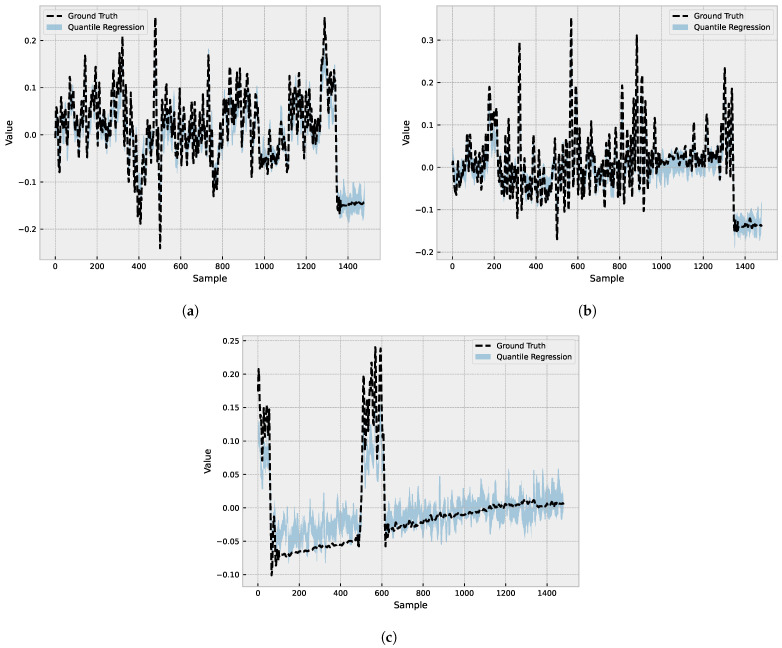

Figure 8 displays three example forecasts, one per axis. Each panel plots the actual values (dashed line) together with the predicted 10% and 90% quantiles (QR), drawn as two separate lines. The shaded area between the two quantile lines is the nominal 80% prediction interval, i.e., the range expected to contain about 80% of the actual values. Comparing the shaded area with the actual values shows how accurate the model is and how well it captures the range of possible outcomes. A narrow shaded area indicates that the model is more confident in its predictions, while a wider shaded area signifies greater uncertainty.

Figure 8.

The figures show the predicted 10% and 90% quantiles (QR) for the X (a), Y (b), and Z (c) axes. The shaded area between the two quantiles represents the nominal 80% prediction interval.

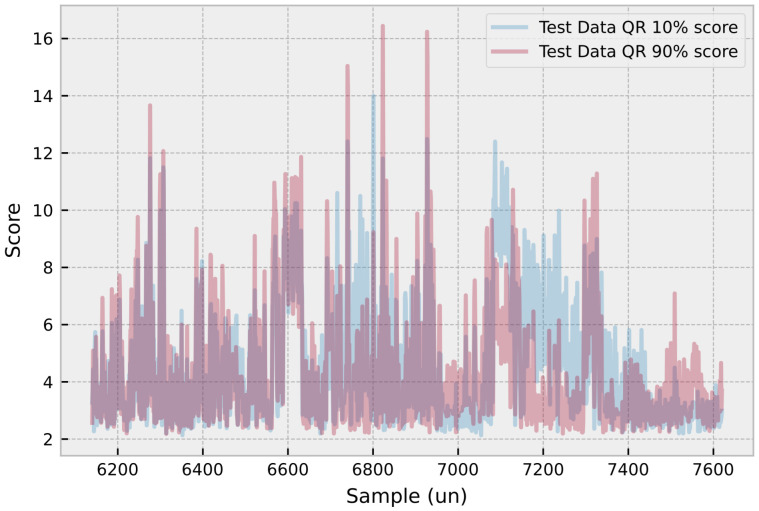
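The visual check of the shaded band can be complemented by two simple numbers: the fraction of actual values that fall between the 10% and 90% quantile forecasts, and the average width of the band. The sketch below uses made-up values, and the helper names are hypothetical.

```python
def interval_coverage(y_true, lower, upper):
    """Fraction of observations that fall inside [lower, upper] at each step."""
    inside = sum(1 for t, lo, hi in zip(y_true, lower, upper) if lo <= t <= hi)
    return inside / len(y_true)

def mean_interval_width(lower, upper):
    """Average distance between the upper and lower quantile forecasts."""
    return sum(hi - lo for lo, hi in zip(lower, upper)) / len(lower)

actual = [1.0, 2.0, 3.0, 4.0, 5.0]
q10 = [0.5, 1.5, 3.2, 3.5, 4.0]
q90 = [1.5, 2.5, 4.0, 4.5, 6.0]
coverage = interval_coverage(actual, q10, q90)  # 4 of 5 points lie inside
width = mean_interval_width(q10, q90)
```

A coverage close to 0.8 together with a narrow band would indicate forecasts that are both well calibrated and sharp.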

6.2. Anomaly Detection

Figure 9 demonstrates the application of the ECOD anomaly detection algorithm in the context of the neural-based predictive maintenance tool for electrical machines. The figure presents a plot that combines the 10% and 90% quantile predictions with the anomaly detection results derived from the ECOD algorithm. The plot showcases how the algorithm identifies potential faults within the given quantile range, allowing for a more comprehensive assessment of the machine’s health. By combining the quantile predictions with ECOD anomaly detection, machine operators can gain a deeper understanding of the machine’s health and the probability of faults occurring. This information enables them to make more informed decisions regarding maintenance planning and take proactive measures to address potential issues.

Figure 9.

ECOD algorithm leverages quantile predictions for probability-based maintenance planning.
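ECOD scores a sample by how far it lies in the tails of the empirical cumulative distribution of each feature. The sketch below is a deliberately simplified, single-feature version with no skewness correction and no aggregation across dimensions, so it should be read as an illustration of the idea rather than as the full algorithm or the authors' pipeline. The residual values are invented.

```python
import math

def ecdf_tail_scores(values):
    """Score each value by -log of its smaller empirical tail probability."""
    n = len(values)
    scores = []
    for v in values:
        left = sum(1 for u in values if u <= v) / n   # empirical P(X <= v)
        right = sum(1 for u in values if u >= v) / n  # empirical P(X >= v)
        scores.append(max(-math.log(left), -math.log(right)))
    return scores

# Made-up forecast residuals with one large spike at the end.
residuals = [0.1, -0.2, 0.0, 0.15, -0.1, 0.05, -0.05, 0.2, -0.15, 3.0]
scores = ecdf_tail_scores(residuals)
```

Higher scores mark more extreme samples. In this one-dimensional version the smallest and largest values always share the top score, which is one reason a full implementation, such as the ECOD detector in the PyOD library, is preferable in practice.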

7. Conclusions

In conclusion, this research addresses the critical issue of predictive maintenance for electrical machines. This study provides a neural-based predictive maintenance tool by developing a custom hybrid CNN-LSTM attention model utilizing quantile regression. This tool can effectively predict electrical machine failures and manage uncertainties present in the data.

Using vibration sensor data measured in three axes (axial, radial, and radial X) and applying advanced neural network techniques provides an accurate and efficient predictive maintenance tool that can greatly benefit companies. The developed tool allows companies to optimize their maintenance schedules and improve the overall performance of their electrical machines, ultimately reducing maintenance costs, increasing efficiency, and minimizing unplanned downtime.

Once the proposed model is properly trained, it can be applied to inference data to identify which equipment is most likely to fail based on its vibration characteristics. This analysis can be used in predictive maintenance, providing more information about the health of the machine under evaluation.

While the data employed in this analysis are derived from a three-phase motor, the model has the potential to be extended to other equipment with similar operation. Future work should apply the method in the field and determine how efficiently it identifies equipment that needs maintenance, thus making the operators’ tasks easier.

Author Contributions

Writing—original draft and software A.B.; formal analysis and supervision, L.O.S.; supervision, E.C.; methodology, S.F.S.; writing—review and editing, V.C.M.; review and supervision, L.d.S.C. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset used in the experiments of this paper is confidential.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

The authors V.C.M. and L.d.S.C. thank the National Council for Scientific and Technological Development—CNPq (Grants number: 307958/2019-1-PQ, 307966/2019-4-PQ, and 408164/2021-2-Universal), and Fundação Araucária PRONEX Grant 042/2018 for its financial support of this work. The author L.O.S. thanks the National Council for Scientific and Technological Development—CNPq (Grant number: 308361/2022-9).

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1. Kizito R., Scruggs P., Li X., Devinney M., Jansen J., Kress R. Long short-term memory networks for facility infrastructure failure and remaining useful life prediction. IEEE Access. 2021;9:67585–67594. doi: 10.1109/ACCESS.2021.3077192.

- 2. Stefenon S.F., Seman L.O., Schutel Furtado Neto C., Nied A., Seganfredo D.M., Garcia da Luz F., Sabino P.H., Torreblanca González J., Leithardt V.R.Q. Electric field evaluation using the finite element method and proxy models for the design of stator slots in a permanent magnet synchronous motor. Electronics. 2020;9:1975. doi: 10.3390/electronics9111975.

- 3. De Jonge B., Scarf P.A. A review on maintenance optimization. Eur. J. Oper. Res. 2020;285:805–824. doi: 10.1016/j.ejor.2019.09.047.

- 4. Nguyen K.T., Medjaher K. A new dynamic predictive maintenance framework using deep learning for failure prognostics. Reliab. Eng. Syst. Saf. 2019;188:251–262. doi: 10.1016/j.ress.2019.03.018.

- 5. Yoo Y., Jo H., Ban S.W. Lite and efficient deep learning model for bearing fault diagnosis using the CWRU dataset. Sensors. 2023;23:3157. doi: 10.3390/s23063157.

- 6. Hassan M.U., Steinnes O.M.H., Gustafsson E.G., Løken S., Hameed I.A. Predictive maintenance of Norwegian road network using deep learning models. Sensors. 2023;23:2935. doi: 10.3390/s23062935.

- 7. Inyang U.I., Petrunin I., Jennions I. Diagnosis of multiple faults in rotating machinery using ensemble learning. Sensors. 2023;23:1005. doi: 10.3390/s23021005.

- 8. Zhao M., Shi P., Xu X., Xu X., Liu W., Yang H. Improving the accuracy of an R-CNN-based crack identification system using different preprocessing algorithms. Sensors. 2022;22:7089. doi: 10.3390/s22187089.

- 9. Ma X., Wu J., Xue S., Yang J., Zhou C., Sheng Q.Z., Xiong H., Akoglu L. A comprehensive survey on graph anomaly detection with deep learning. IEEE Trans. Knowl. Data Eng. 2021 doi: 10.1109/TKDE.2021.3118815.

- 10. Sopelsa Neto N.F., Stefenon S.F., Meyer L.H., Ovejero R.G., Leithardt V.R.Q. Fault prediction based on leakage current in contaminated insulators using enhanced time series forecasting models. Sensors. 2022;22:6121. doi: 10.3390/s22166121.

- 11. He Y., Yan Y., Xu Q. Wind and solar power probability density prediction via fuzzy information granulation and support vector quantile regression. Int. J. Electr. Power Energy Syst. 2019;113:515–527. doi: 10.1016/j.ijepes.2019.05.075.

- 12. Wang H., Yang T., Han Q., Luo Z. Approach to the quantitative diagnosis of rolling bearings based on optimized VMD and Lempel–Ziv complexity under varying conditions. Sensors. 2023;23:4044. doi: 10.3390/s23084044.

- 13. Chen L., Liu X., Zeng C., He X., Chen F., Zhu B. Temperature prediction of seasonal frozen subgrades based on CEEMDAN-LSTM hybrid model. Sensors. 2022;22:5742. doi: 10.3390/s22155742.

- 14. Lee H., Lee J. Convolutional model with a time series feature based on RSSI analysis with the Markov transition field for enhancement of location recognition. Sensors. 2023;23:3453. doi: 10.3390/s23073453.

- 15. Hasan F., Huang H. MALS-Net: A multi-head attention-based LSTM sequence-to-sequence network for socio-temporal interaction modelling and trajectory prediction. Sensors. 2023;23:530. doi: 10.3390/s23010530.

- 16. Itajiba J.A., Varnier C.A.C., Cabral S.H.L., Stefenon S.F., Leithardt V.R.Q., Ovejero R.G., Nied A., Yow K.C. Experimental comparison of preferential vs. common delta connections for the star-delta starting of induction motors. Energies. 2021;14:1318. doi: 10.3390/en14051318.

- 17. Ali M.Z., Shabbir M.N.S.K., Liang X., Zhang Y., Hu T. Machine learning-based fault diagnosis for single-and multi-faults in induction motors using measured stator currents and vibration signals. IEEE Trans. Ind. Appl. 2019;55:2378–2391. doi: 10.1109/TIA.2019.2895797.

- 18. Ramu S.K., Irudayaraj G.C.R., Subramani S., Subramaniam U. Broken rotor bar fault detection using Hilbert transform and neural networks applied to direct torque control of induction motor drive. IET Power Electron. 2020;13:3328–3338. doi: 10.1049/iet-pel.2019.1543.

- 19. Tran V.T., Yang B.S., Oh M.S., Tan A.C.C. Fault diagnosis of induction motor based on decision trees and adaptive neuro-fuzzy inference. Expert Syst. Appl. 2009;36:1840–1849. doi: 10.1016/j.eswa.2007.12.010.

- 20. Ellefsen A.L., Bjørlykhaug E., Æsøy V., Ushakov S., Zhang H. Remaining useful life predictions for turbofan engine degradation using semi-supervised deep architecture. Reliab. Eng. Syst. Saf. 2019;183:240–251. doi: 10.1016/j.ress.2018.11.027.

- 21. Wu J., Hu K., Cheng Y., Zhu H., Shao X., Wang Y. Data-driven remaining useful life prediction via multiple sensor signals and deep long short-term memory neural network. ISA Trans. 2020;97:241–250. doi: 10.1016/j.isatra.2019.07.004.

- 22. Yoo Y.J. Fault detection of induction motor using fast Fourier transform with feature selection via principal component analysis. Int. J. Precis. Eng. Manuf. 2019;20:1543–1552. doi: 10.1007/s12541-019-00176-z.

- 23. Branco N.W., Cavalca M.S.M., Stefenon S.F., Leithardt V.R.Q. Wavelet LSTM for fault forecasting in electrical power grids. Sensors. 2022;22:8323. doi: 10.3390/s22218323.

- 24. Long Z., Zhang X., Zhang L., Qin G., Huang S., Song D., Shao H., Wu G. Motor fault diagnosis using attention mechanism and improved adaboost driven by multi-sensor information. Measurement. 2021;170:108718. doi: 10.1016/j.measurement.2020.108718.

- 25. Yang Z., Kong C., Wang Y., Rong X., Wei L. Fault diagnosis of mine asynchronous motor based on MEEMD energy entropy and ANN. Comput. Electr. Eng. 2021;92:107070. doi: 10.1016/j.compeleceng.2021.107070.

- 26. Medeiros A., Sartori A., Stefenon S.F., Meyer L.H., Nied A. Comparison of artificial intelligence techniques to failure prediction in contaminated insulators based on leakage current. J. Intell. Fuzzy Syst. 2022;42:3285–3298. doi: 10.3233/JIFS-211126.

- 27. Stefenon S.F., Seman L.O., Mariani V.C., Coelho L.S. Aggregating prophet and seasonal trend decomposition for time series forecasting of Italian electricity spot prices. Energies. 2023;16:1371. doi: 10.3390/en16031371.

- 28. Deng C., Deng Z., Lu S., He M., Miao J., Peng Y. Fault diagnosis method for imbalanced data based on multi-signal fusion and improved deep convolution generative adversarial network. Sensors. 2023;23:2542. doi: 10.3390/s23052542.

- 29. Ronkin M., Bykhovsky D. Passive fingerprinting of same-model electrical devices by current consumption. Sensors. 2023;23:533. doi: 10.3390/s23010533.

- 30. Stefenon S.F., Freire R.Z., Meyer L.H., Corso M.P., Sartori A., Nied A., Klaar A.C.R., Yow K.C. Fault detection in insulators based on ultrasonic signal processing using a hybrid deep learning technique. IET Sci. Meas. Technol. 2020;14:953–961. doi: 10.1049/iet-smt.2020.0083.

- 31. Rhif M., Ben Abbes A., Farah I.R., Martínez B., Sang Y. Wavelet transform application for/in non-stationary time-series analysis: A review. Appl. Sci. 2019;9:1345. doi: 10.3390/app9071345.

- 32. Stefenon S.F., Kasburg C., Nied A., Klaar A.C.R., Ferreira F.C.S., Branco N.W. Hybrid deep learning for power generation forecasting in active solar trackers. IET Gener. Transm. Distrib. 2020;14:5667–5674. doi: 10.1049/iet-gtd.2020.0814.

- 33. Faysal A., Ngui W.K., Lim M.H., Leong M.S. Noise eliminated ensemble empirical mode decomposition scalogram analysis for rotating machinery fault diagnosis. Sensors. 2021;21:8114. doi: 10.3390/s21238114.

- 34. Chui K.T., Gupta B.B., Liu R.W., Vasant P. Handling data heterogeneity in electricity load disaggregation via optimized complete ensemble empirical mode decomposition and wavelet packet transform. Sensors. 2021;21:3133. doi: 10.3390/s21093133.

- 35. Gao J., Wang X., Wang X., Yang A., Yuan H., Wei X. A high-impedance fault detection method for distribution systems based on empirical wavelet transform and differential faulty energy. IEEE Trans. Smart Grid. 2022;13:900–912. doi: 10.1109/TSG.2021.3129315.

- 36. Zhao B., Li Q., Lv Q., Si X. A spectrum adaptive segmentation empirical wavelet transform for noisy and nonstationary signal processing. IEEE Access. 2021;9:106375–106386. doi: 10.1109/ACCESS.2021.3099500.

- 37. Xu Y., Zhang K., Ma C., Sheng Z., Shen H. An adaptive spectrum segmentation method to optimize empirical wavelet transform for rolling bearings fault diagnosis. IEEE Access. 2019;7:30437–30456. doi: 10.1109/ACCESS.2019.2902645.

- 38. Xin Y., Li S., Zhang Z., An Z., Wang J. Adaptive reinforced empirical morlet wavelet transform and its application in fault diagnosis of rotating machinery. IEEE Access. 2019;7:65150–65162. doi: 10.1109/ACCESS.2019.2917042.

- 39. Xu Y., Deng Y., Zhao J., Tian W., Ma C. A novel rolling bearing fault diagnosis method based on empirical wavelet transform and spectral trend. IEEE Trans. Instrum. Meas. 2020;69:2891–2904. doi: 10.1109/TIM.2019.2928534.

- 40. Deng W., Zhang S., Zhao H., Yang X. A novel fault diagnosis method based on integrating empirical wavelet transform and fuzzy entropy for motor bearing. IEEE Access. 2018;6:35042–35056. doi: 10.1109/ACCESS.2018.2834540.

- 41. Huang X., Wen G., Liang L., Zhang Z., Tan Y. Frequency phase space empirical wavelet transform for rolling bearings fault diagnosis. IEEE Access. 2019;7:86306–86318. doi: 10.1109/ACCESS.2019.2922248.

- 42. Wang X., Tang G., Wang T., Zhang X., Peng B., Dou L., He Y. Lkurtogram guided adaptive empirical wavelet transform and purified instantaneous energy operation for fault diagnosis of wind turbine bearing. IEEE Trans. Instrum. Meas. 2021;70:1–19. doi: 10.1109/TIM.2020.3043946.

- 43. Zhang X., Kuenzel S., Colombo N., Watkins C. Hybrid short-term load forecasting method based on empirical wavelet transform and bidirectional long short-term memory neural networks. J. Mod. Power Syst. Clean Energy. 2022;10:1216–1228. doi: 10.35833/MPCE.2021.000276.

- 44. Stefenon S.F., Freire R.Z., Coelho L.S., Meyer L.H., Grebogi R.B., Buratto W.G., Nied A. Electrical insulator fault forecasting based on a wavelet neuro-fuzzy system. Energies. 2020;13:484. doi: 10.3390/en13020484.

- 45. Kurnyta A., Baran M., Kurnyta-Mazurek P., Kowalczyk K., Dziendzikowski M., Dragan K. The experimental verification of direct-write silver conductive grid and ARIMA time series analysis for crack propagation. Sensors. 2021;21:6916. doi: 10.3390/s21206916.

- 46. Fernandes F., Stefenon S.F., Seman L.O., Nied A., Ferreira F.C.S., Subtil M.C.M., Klaar A.C.R., Leithardt V.R.Q. Long short-term memory stacking model to predict the number of cases and deaths caused by COVID-19. J. Intell. Fuzzy Syst. 2022;6:6221–6234. doi: 10.3233/JIFS-212788.

- 47. Masini R.P., Medeiros M.C., Mendes E.F. Machine learning advances for time series forecasting. J. Econ. Surv. 2023;37:76–111. doi: 10.1111/joes.12429.

- 48. Lim B., Arık S.Ö., Loeff N., Pfister T. Temporal fusion transformers for interpretable multi-horizon time series forecasting. Int. J. Forecast. 2021;37:1748–1764. doi: 10.1016/j.ijforecast.2021.03.012.

- 49. Li D., Tan Y., Zhang Y., Miao S., He S. Probabilistic forecasting method for mid-term hourly load time series based on an improved temporal fusion transformer model. Int. J. Electr. Power Energy Syst. 2023;146:108743. doi: 10.1016/j.ijepes.2022.108743.

- 50. Stefenon S.F., Kasburg C., Freire R.Z., Silva Ferreira F.C., Bertol D.W., Nied A. Photovoltaic power forecasting using wavelet neuro-fuzzy for active solar trackers. J. Intell. Fuzzy Syst. 2021;40:1083–1096. doi: 10.3233/JIFS-201279.

- 51. Fan D., Sun H., Yao J., Zhang K., Yan X., Sun Z. Well production forecasting based on ARIMA-LSTM model considering manual operations. Energy. 2021;220:119708. doi: 10.1016/j.energy.2020.119708.

- 52. Feng G., Zhang L., Ai F., Zhang Y., Hou Y. An improved temporal fusion transformers model for predicting supply air temperature in high-speed railway carriages. Entropy. 2022;24:1111. doi: 10.3390/e24081111.

- 53. Toma R.N., Gao Y., Piltan F., Im K., Shon D., Yoon T.H., Yoo D.S., Kim J.M. Classification framework of the bearing faults of an induction motor using wavelet scattering transform-based features. Sensors. 2022;22:8958. doi: 10.3390/s22228958.

- 54. Van M., Hoang D.T., Kang H.J. Bearing fault diagnosis using a particle swarm optimization-least squares wavelet support vector machine classifier. Sensors. 2020;20:3422. doi: 10.3390/s20123422.

- 55. Tian A., Zhang Y., Ma C., Chen H., Sheng W., Zhou S. Noise-robust machinery fault diagnosis based on self-attention mechanism in wavelet domain. Measurement. 2023;207:112327. doi: 10.1016/j.measurement.2022.112327.

- 56. Wang L., Liu Z., Cao H., Zhang X. Subband averaging kurtogram with dual-tree complex wavelet packet transform for rotating machinery fault diagnosis. Mech. Syst. Signal Process. 2020;142:106755. doi: 10.1016/j.ymssp.2020.106755.

- 57. Zhang X., Wang J., Liu Z., Wang J. Weak feature enhancement in machinery fault diagnosis using empirical wavelet transform and an improved adaptive bistable stochastic resonance. ISA Trans. 2019;84:283–295. doi: 10.1016/j.isatra.2018.09.022.

- 58. Shi Z., Yang X., Li Y., Yu G. Wavelet-based synchroextracting transform: An effective TFA tool for machinery fault diagnosis. Control Eng. Pract. 2021;114:104884. doi: 10.1016/j.conengprac.2021.104884.

- 59. Ding J. A double impulsiveness measurement indices-bilaterally driven empirical wavelet transform and its application to wheelset-bearing-system compound fault detection. Measurement. 2021;175:109135. doi: 10.1016/j.measurement.2021.109135.

- 60. Gadanayak D.A., Mallick R.K. Interharmonics based high impedance fault detection in distribution systems using maximum overlap wavelet packet transform and a modified empirical mode decomposition. Int. J. Electr. Power Energy Syst. 2019;112:282–293. doi: 10.1016/j.ijepes.2019.04.050.

- 61. Liu Q., Yang J., Zhang K. An improved empirical wavelet transform and sensitive components selecting method for bearing fault. Measurement. 2022;187:110348. doi: 10.1016/j.measurement.2021.110348.

- 62. Yu H., Li H., Li Y. Vibration signal fusion using improved empirical wavelet transform and variance contribution rate for weak fault detection of hydraulic pumps. ISA Trans. 2020;107:385–401. doi: 10.1016/j.isatra.2020.07.025.

- 63. Klaar A.C.R., Stefenon S.F., Seman L.O., Mariani V.C., Coelho L.S. Optimized EWT-Seq2Seq-LSTM with attention mechanism to insulators fault prediction. Sensors. 2023;23:3202. doi: 10.3390/s23063202.

- 64. Liu N., Li Z., Sun F., Wang Q., Gao J. The improved empirical wavelet transform and applications to seismic reflection data. IEEE Geosci. Remote Sens. Lett. 2019;16:1939–1943. doi: 10.1109/LGRS.2019.2911092.

- 65. Zheng J., Huang S., Pan H., Jiang K. An improved empirical wavelet transform and refined composite multiscale dispersion entropy-based fault diagnosis method for rolling bearing. IEEE Access. 2020;8:168732–168742. doi: 10.1109/ACCESS.2019.2940627.

- 66. Thirumala K., Pal S., Jain T., Umarikar A.C. A classification method for multiple power quality disturbances using EWT based adaptive filtering and multiclass SVM. Neurocomputing. 2019;334:265–274. doi: 10.1016/j.neucom.2019.01.038.

- 67. Kedadouche M., Thomas M., Tahan A. A comparative study between empirical wavelet transforms and empirical mode decomposition methods: Application to bearing defect diagnosis. Mech. Syst. Signal Process. 2016;81:88–107. doi: 10.1016/j.ymssp.2016.02.049.

- 68. Kordestani H., Zhang C. Direct use of the Savitzky–Golay filter to develop an output-only trend line-based damage detection method. Sensors. 2020;20:1983. doi: 10.3390/s20071983.

- 69. Chen Y., Cao R., Chen J., Liu L., Matsushita B. A practical approach to reconstruct high-quality landsat NDVI time-series data by gap filling and the Savitzky–Golay filter. ISPRS J. Photogramm. Remote Sens. 2021;180:174–190. doi: 10.1016/j.isprsjprs.2021.08.015.

- 70. Stefenon S.F., Seman L.O., Aquino L.S., Coelho L.S. Wavelet-Seq2Seq-LSTM with attention for time series forecasting of level of dams in hydroelectric power plants. Energy. 2023;274:127350. doi: 10.1016/j.energy.2023.127350.

- 71. Ni S., Jia P., Xu Y., Zeng L., Li X., Xu M. Prediction of CO concentration in different conditions based on Gaussian-TCN. Sens. Actuators Chem. 2023;376:133010. doi: 10.1016/j.snb.2022.133010.
