Abstract

Objective:

The manual extraction of case details from patient records for cancer surveillance efforts is a resource-intensive task. Natural Language Processing (NLP) techniques have been proposed for automating the identification of key details in clinical notes. Our goal was to develop NLP application programming interfaces (APIs) for integration into cancer registry data abstraction tools in a computer-assisted abstraction setting.

Methods:

We used cancer registry manual abstraction processes to guide the design of DeepPhe-CR, a web-based NLP service API. The coding of key variables was done through NLP methods validated using established workflows. A container-based implementation including the NLP wasdeveloped. Existing registry data abstraction software was modified to include results from DeepPhe-CR. An initial usability study with data registrars provided early validation of the feasibility of the DeepPhe-CR tools.

Results:

API calls support submission of single documents and summarization of cases across multiple documents. The container-based implementation uses a REST router to handle requests and support a graph database for storing results. NLP modules extract topography, histology, behavior, laterality, and grade at 0.79–1.00 F1 across common and rare cancer types (breast, prostate, lung, colorectal, ovary and pediatric brain) on data from two cancer registries. Usability study participants were able to use the tool effectively and expressed interest in adopting the tool.

Discussion:

Our DeepPhe-CR system provides a flexible architecture for building cancer-specific NLP tools directly into registrar workflows in a computer-assisted abstraction setting. Improving user interactions in client tools, may be needed to realize the potential of these approaches. DeepPhe-CR: https://deepphe.github.io/.

Keywords: Cancer Informatics, Cancer Registry, Natural Language Processing, Application Programming Interfaces, Data Abstraction

INTRODUCTION

Cancer abstracts based on individual patient records provide the foundation for cancer surveillance efforts at the local, state, and national levels. These reports are generated manually by cancer registrars, who extract descriptors of tumor location, morphology, behavior, and other cancer characteristics from the documentation in the Electronic Health Records (EHRs), e.g. clinical notes, pathology notes and other relevant documentation. Given the complexity and volume of clinical text found in modern EHRs, natural language processing (NLP) techniques present an appealing strategy for optimizing this resource-intensive process. By partially or fully automating the identification of key information, NLP tools have been shown to help registrars identify relevant details,1–5 thus potentially speeding the ease and efficiency of data abstraction. To that end, we have adapted our DeepPhe (Deep Phenotyping for Oncology Research) system for creating longitudinal patient histories from clinical notes,6 to build DeepPhe-CR (Deep Phenotyping for Cancer Registries),7 a software-service platform for embedding cancer-optimized NLP into cancer registrar data abstraction tools and workflows in a computer-assisted setting.

Effective cancer surveillance presents a challenge in coordinated data collection. Cancer abstracts often begin at a hospital where a patient is diagnosed or treated for cancer. This initial hospital abstract is collected by skilled registrars, often holding the Certified Tumor Registrar (CTR) certification, according to constantly updated guidelines and data standards published by a variety of organizations such as the National Cancer Institute’s Surveillance Epidemiology and End-Results (SEER) program, the Center for Disease Control’s National Program of Cancer Registries (NPCR), and the American College of Surgeons’ Commission on Cancer (CoC). Contributions from all these standards agencies are coordinated by the North American Association of Central Cancer Registries (NAACCR) into an annual data standards release, describing the procedures for extracting key details such as tumor site, morphology, stage, first-course of treatment, and survival8. Once collected, hospital abstracts are submitted to the state central cancer registry. At the state cancer registry, hospital abstracts and other clinical documents are consolidated into a single patient-level document (although abstracting may be further complicated for patients with multiple primary cancers) using established workflows supported by registry software tools such as SEER*DMS9, and are then submitted to national programs such as SEER and NPCR. With an increasingly fluid data ecosystem, cancer registrars are expected to synthesize information from a variety of clinical notes, pathology reports, and other sources of narrative text for submission to many different local and national agencies, according to increasingly complex data collection standards.

As identifying and coding relevant content in clinical notes can be a highly laborious process, partially or fully automated approaches based on NLP techniques have been implemented as a means of increasing efficiency and accuracy (as reviewed by Savova, et al. 10 and Wang et al.11). A variety of NLP methods have been applied to a number of challenges in extracting cancer information from clinical text, including treatments12, recurrences13, and other attributes.14 Recent efforts have explored the application of these techniques to SEER registry tasks, including the application of multi-task convolutional neural networks (CNNs) to a large set of electronic documents from the Louisiana Tumor Registry,1 the use of CNNs to extract information from pediatric cancer pathology notes,2 mapping of concepts in pathology notes to ICD-O-3 codes,4 and the use of transfer learning techniques to apply models trained in one registry to notes from other registries.3

Since 2014, we have been developing the DeepPhe system to extract and visualize longitudinal patient histories from clinical text to combine with the structured data from electronic medical record (EMRs). Based on the Apache Clinical Text and Knowledge Extraction System (cTAKES) NLP platform,15 DeepPhe combines focused NLP for identifying and coding key cancer variables with capabilities for summarization to provide views at multiple granularities, from individual mentions to high-level summaries.6 Extracted results can be displayed and interpreted at both the cohort and individual patient and cancer levels using our web-based visual analytics tool.16

A broad range of prior efforts have used NLP techniques to extract phenotypes from the EHR clinical narrative.10 Perhaps most similar to DeepPhe, recent efforts by Alawad, et al.1 and Yoon et al.5 have successfully applied multi-task deep convolutional learning to extract attributes including site, laterality, behavior, histology, and grade. However, these tools work in batch modes, processing large corpora of textual documents and assembling outputs for a subsequent analysis. These methods also have relied on a “bronze” standard for ground truth where the predictions are modeled using the values found in abstracted registry reports associated with the collection of pathology documents for a tumor, rather than gold standard codes derived from each pathology document itself. This approach is not without limitations: performance is good for more common sites but suffers for rarer sites.

As pointed out above, DeepPhe-CR (DeepPhe for Cancer Registries) builds on DeepPhe but was designed to support registrar data abstraction processes, which rely upon manual review of patient’s EMR documents to identify key cancer details, which are then entered into appropriate forms in web-based interfaces. Tools that close the gap between these workflow steps add NLP outputs to registry workflows, potentially bringing improvements in task completion times, accuracy, and user satisfaction. Specifically, NLP-enhanced abstraction tools might extract relevant concepts, pre-populating appropriate fields in registry interfaces, thus providing registrars with faster access to relevant values and guiding their review of the notes. Although this approach – known as computer-assisted clinical coding, has been explored in a number of fields,17 including recent applications to cancer registry abstraction,1–4 reports to date have focused on information extraction and classification tasks for full automation, with little attention paid to the details of how these techniques might be integrated into registry tools and workflows in a computer-assisted setting.

To further explore the possible benefits of integrating NLP into cancer registry workflows, our team of informaticians, clinicians, epidemiologists, and registrars collaborated to understand registrar needs, built the modified version of DeepPhe -- DeepPhe-CR -- providing REST18 API calls designed to support integration with registry software in a computer-assisted setting. Here, we describe the goals and design of the system, and lessons learned through the interactions with registrars at multiple SEER registries.

METHODS

Basic Requirements

Initial design discussions with cancer registrars identified several criteria that must be met for a successful integration of DeepPhe-CR with registry software. As the widely used SEER*DMS software and other prominent registry tools are web-based, integration through client-side HTTP calls was determined to be the most straightforward approach. Specifically, we decided to expose DeepPhe-CR functionality via the REST software architectural style API18, capable of submitting documents and retrieving results, including the type and location of relevant text spans (indicated by start and end character indices).

We also identified the importance of providing this REST API as a single point of access. The principal DeepPhe software stack (which we modified for DeepPhe-CR) involves multiple components, including a processing pipeline and a database for result storage. To simplify integration while providing maximal flexibility for future evolution, the design of DeepPhe-CR aims to hide these components behind a single-entry point. To simplify adoption, the DeepPhe-CR software is provided as a set of Docker containers designed for easy installation and configuration.

Finally, because our goal is computer-assisted abstraction we identified several steps necessary to support registrars in their review and coding of clinical documents. The DeepPhe-CR output include spans and their represented classes for registry-pertinent extracted variables. Registrars review documents as presented in text windows in a graphical user interface. A “pre-annotation” setup for using NLP results to facilitate abstraction is needed to support this effort. This configuration activates the NLP modules to identify text spans associated with extracted items and color-codes each span of the relevant data item type in the text window.

Discussions with registrars also led to the identification of primary site (topography, major and minor), histology, behavior, laterality, grade, and stage as the key variables to be extracted from the notes (Table 1). In addition to the required elements, DeepPhe-CR extracts certain biomarkers, which are items of growing interest to the registry community. To balance registrars’ needs for highly-accurate extraction against the challenges of building highly effective clinical NLP tools, we established an initial goal of extraction of each of these attributes at F1 scores of 0.75 or better to demonstrate initial feasibility for computer-assisted abstraction. This goal of 0.75 F1 is guidance provided by the Cancer Registries based on their previous work on efficiency within a computer-assisted setting. F1 is the harmonic mean of precision/positive predictive value and recall/sensitivity and is the classic metric for reporting overall NLP system performance.

Table 1:

Cancer data elements to be extracted

| NAACCR Data item | NAACCR Item Number |

|---|---|

| Primary Site (topography – major and minor) | 400 |

| Histology | 522 |

| Behavior Code | 523 |

| Laterality | 410 |

| Grade | 440 |

| AJCC Clinical TNM Stage | 1001, 1002, 1003, 1004 |

| AJCC Pathological TNM Stage | 1011, 1012, 1013, 1014 |

AJCC: American Joint Committee on Cancer; NAACCR: North American Association of Central Cancer Registries; TNM: tumor, node, metastasis.

Inquiries, API Development, and Prototype

A series of discussions and software demonstrations served to introduce the DeepPhe-CR software development team to registrar workflow practices. Insights from these discussions were used to develop the draft specification for the REST API. Based on this draft, we developed an initial version of the DeepPhe-CR software stack. The specification document and the DeepPhe-CR tools were iteratively refined until the necessary functionality was complete to allow integration into registry software. Discussions with two independent registry software developer teams validated this approach.

Information Extraction

A serially executed NLP workflow was used to develop the approaches for extracting the required data items. Two domain experts and an informatician (GS) developed detailed annotation guidelines and piloted them on a set of 30 patients. Disagreements were tracked and discussed. As a result, the annotation guidelines were adjusted to address the areas of disagreement. Inter-annotator agreement was computated at 0.77–1 kappa on an additional set of 30 patients. Gold standard annotations for 1560 randomly selected patients with several common cancer types – breast, lung, colorectal, ovarian, and prostate as well as a rare type of cancer (pediatric brain cancer) were created based on the finalized annotation guidelines and split into training, development, and test sets. The data were provided by two SEER cancer registries (Kentucky and Louisiana). Pediatric brain cancer was included as it represents a rare type cancer thus data sparcity. The original DeepPhe ontology was modified to the ICD-O to create the DeepPhe-CR ontology. Thus, the DeepPhe-CR consists of the existing DeepPhe approaches6 with the DeepPhe-CR ontology as the backbone. The train split was used to identify missing concepts from the DeepPhe-CR ontology and the development split – to validate the ontology extensions. DeepPhe-CR was evaluated on the held-out test split. Table 2 provides the distribution across types of cancers and train/development/test splits.

Table 2:

Data distribution (number of patients) across common types of cancers (breast, lung, prostate, colorectal, ovarian) and a rare type of cancer (pediatric brain cancer)

| Breast | Lung | Prostate | Colorectal | Ovarian | Pediatric Brain | Total | |

|---|---|---|---|---|---|---|---|

| Train | 231 | 198 | 178 | 90 | 90 | 120 | 907 |

| Development | 72 | 55 | 48 | 30 | 29 | 41 | 234 |

| Test | 134 | 120 | 106 | 30 | 29 | 39 | 419 |

| Total | 437 | 373 | 332 | 150 | 148 | 200 | 1560 |

Integration Example, User Study, and Generalization

Upon completion of the initial API, implementations, and methods development, the tools were integrated into the Kentucky Cancer Registry’s Cancer Patient Data Management System (CPDMS), a robust and stable software platform developed and implemented across the state.19 This initial implementation was designed to demonstrate the feasibility of including DeepPhe-CR in a registry-scale software system in a computer-assisted abstraction setting. In a series of recorded sessions, three members of the project team who were skilled with cancer data abstraction were provided a brief introduction to the augmented CPDMS tool. They then used both the original CPDMS tool and the enhanced version to abstract the information for cancer cases, each supported by documents of varying length and relevant context. Accuracy, task completion time, and qualitative observations were used to descriptively assess the utility of the revised workflow.

Discussions with registry staff from two additional states (Massachusetts and Louisiana) were used to verify generalizability of the workflow to new contexts. Similarly, new gold standard datasets were created to account for data from the Louisiana Tumor Registry, which was used to further evaluate the system.

RESULTS

Inquiries, API Development, and Prototype

Our discussions of functional requirements and our observations of registrar practices confirmed our early design decisions, including the provision of REST calls through a single point of access, and the need for including spans and associated classes within the responses. Our inquiries into the registry workflows revealed that registry processes are driven by individual documents, not patients. Each document is reviewed as it comes in, at which time it is either associated with an existing patient/tumor or a new patient/tumor record is created. Thus, it is desirable for the DeepPhe-CR API to include calls for submitting individual documents and receiving appropriate results. Finally, security was identified as a priority. Although registry software will generally be hosted in a secure environment, some form of authorization should be associated with each REST call.

To address these needs, as well as the requirements for a single-point of access and ease of installation, we developed a Docker-based architecture involving two containers: a reverse proxy container and a container for the core document processing. The reverse proxy container provides the single point of access through a web server, handing calls off to the document processing container. The use of this proxy server adds minimal overhead while providing the flexibility needed for further evolution, allowing the addition of new calls, or even the reallocation of the document processing services into multiple containers, without requiring any changes to client systems. Security is provided through an HTTP Bearer-Token that must be provided to authenticate each request.

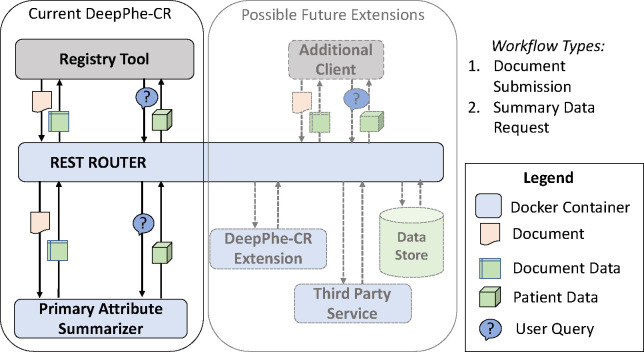

The core document processing system is a modified version of the original DeepPhe system, enhanced to support the single-document approach needed by registry workflows. Although not immediately required by the registries, support for patient-level document submission (as offered by the original DeepPhe) was included as well. Together, these features are implemented through several key components found in the document processing container: 1) The core NLP functionality; 2) a Neo4j graph database server augmented with a DeepPhe-CR extension for storing and retrieving results from individual documents, as necessary for patient summarization; 3) a summarizer module; and 4) a query processor capable of handling requests for summarization and other stored data. An overview of the DeepPhe-CR architecture is given in Figure 1.

Figure 1:

The DeepPhe-CR architecture

The DeepPhe-CR API supports four calls (Table 3). The simplest approach, designed to meet the most important need identified by the registries, takes a single document and returns the NLP-extracted data. Alternatively, one or more documents can be submitted and processed, with results stored for summarization and retrieval via a subsequent API call. This submission can take one of two forms – immediate return of results, and queueing for future processing. In the case of a single document, the summary will contain only the data found in the document, and this workflow is essentially equivalent to single document submission and summary retrieval. If multiple documents for the same patient are submitted, a requested patient summary will address items contained in all documents. Sample calls and returned results are given in Supplemental Appendix A..

Table 3:

API calls for submitting information to the DeepPhe-CR services and retrieving results

| API Call | Description | Return value |

|---|---|---|

| summarizeOneDoc | Summarize a single document, no caching | JSON containing single-document neoplasm summary |

| summarizePatientDoc | Summarize a single document, cache the document for patient summary to be retrieved on subsequent call | JSON containing single-document neoplasm summary results |

| queuePatientDoc | Cache the document for patient summary to be retrieved on subsequent call. Used to submit multiple documents prior for summarization via summarizePatients call. | JSON containing a Boolean value indicating that the the document has been submitted. |

| summarizePatient | Retrieve summarized patient information | JSON containing all results extracted for a patient, including a cross-document summary |

DeepPhe-CR’s Docker-based containerized implementation is straightforward to install, requiring only a few commands. DeepPhe-CR source code (all open source), installation instructions, documentation API calls and tools for managing the Docker containers, including execution of integration tests, can be found on the DeepPhe-CR release GitHub site, reachable through: https://deepphe.github.io.

Information Extraction

The information extracted by the DeepPhe-CR is listed in Table 1. As an extension of the original DeepPhe system, DeepPhe-CR specifically addresses the extraction of values for these required variables from clinical free text of varied types (e.g. clinical notes, radiology reports, pathology notes, etc.). As was described above, the DeepPhe-CR approaches are the same as DeepPhe’s6 – an end-to-end hybrid pipeline combining symbolic and machine learners driven by knowledge represented in its DeepPhe-CR backbone ontology. The machine learners are for the tasks of sentence splitting and part-of-speech tagging which we take from Apache cTAKES; therefore we did not retrain them. DeepPhe-CR results on the held-out test split are in Table 4. The results demonstrate that DeepPhe-CR achieves high F1 scores on both the common (colorectal, prostate, breast, ovary and lung) and rare (pediatric brain) types of cancers. Note that because DeepPhe-CR assigns a value for each required category of topography, histology, behavior, laterality and grade, the F1 equates to accuracy thus precision and recall values are the same (assuming no missing values in the gold annotations).

Table 4:

DeepPhe-CR results for cancer core attributes on the held-out testsplit.

| Attribute | Colorectal, Prostate, Breast, Ovary, Lung Cancers | Pediatric Brain Cancer | ||||

|---|---|---|---|---|---|---|

| Attribute | P | R | F1 | P | R | F1 |

| Topography: ICD-O code (MAJOR SITE) | 0.91 | 0.91 | 0.91 | 1.00 | 1.00 | 1.00 |

| Topography: ICD-O code (SUBSITE) | 0.85 | 0.85 | 0.85 | 0.79 | 0.79 | 0.79 |

| Histology: ICD-O code | 0.83 | 0.83 | 0.83 | 0.87 | 0.87 | 0.87 |

| Behavior: ICD-O code | 0.88 | 0.88 | 0.88 | 0.97 | 0.97 | 0.97 |

| Laterality: ICD-O code | 0.96 | 0.96 | 0.96 | 0.97 | 0.97 | 0.97 |

| Grade: ICD-O code | 0.81 | 0.81 | 0.81 | 0.92 | 0.92 | 0.92 |

| AJCC Pathological T value | 0.98 | 0.92 | 0.94 | n/a | n/a | n/a |

| AJCC Pathological N value | 0.98 | 0.98 | 0.98 | n/a | n/a | n/a |

| AJCC Pathological M value | 0.99 | 1.00 | 0.99 | n/a | n/a | n/a |

P=precision/positive predictive value; R=recall/sensitivity; F1=harmonic mean of precision and recall

The DeepPhe-CR ontology includes representation of biomarkers and their relations to other classes, such as cancer types. This allows the system to extract and summarize biomarkers of interest and associate them with other extracted information. Biomarker classes are mostly assembled from NCIt,20 though some protein classes come from Human Phenotype Ontology (HPO).21 Custom relations were introduced between appropriate cancer branches and biomarkers, e.g. breast cancer and ER, PR, HER2. The system can identify relations of almost 150 types. DeepPhe-CR achieved 0.90 F1 for biomarker extraction with precision/positive predictive value 0.91 and recall/sensitivity 0.89 on the test split from Table 2.

Integration Example, User Study, and Generalization

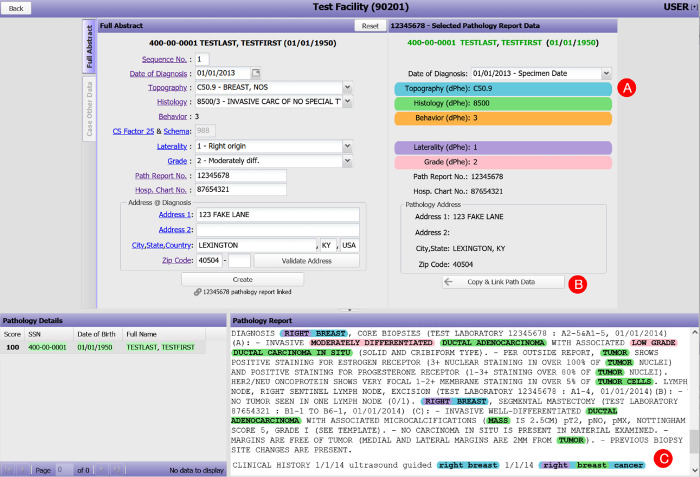

The integration of the DeepPhe-CR features into the CPDMS tool involved the addition of two new features to the basic CPDMS data capture screen. A new set of controls on the right-hand side of the screen displays suggested items as extracted by DeepPhe-CR. At the bottom of the screen, a text

box displays the document from which information is extracted, with spans highlighted in colors matching those used to label the suggested items. Selected items can be copied to the data annotation inputs with a single click (Figure 2).

Figure 2:

The data abstraction screen from the Cancer Patient Data Management System, augmented to support suggested data items as extracted from clinical text by DeepPhe-CR: (A) document-level topography, histology, behavior, laterality, and grade values are shown color-coded and labelled with appropriate ICD-O/NAACCR codes; (B) Suggested items and demographics can be copied to the in-progress abstract with a single click; (C) The clinical text is highlighted with color codes indicating text spans associated with the five summary values displayed in the suggestion area above (A). Thus, “Right breast cancer” is associated with topography C50.9, “breast” with histology 8500, and “right” with laterality 1.

In an initial usability study, two participating registrars experienced with cancer data abstraction used the enhanced CPDMS/DeepPhe-CR tool to annotate cancer documents. Although participants differed in how quickly they were able to use the tools, both were able to use the tool appropriately and expressed enthusiasm for the enhancements.

The relationship between task completion time with and without DeepPhe-CR annotations was mixed. For one of the two participants, task completion time was significantly faster with the DeepPhe-CR annotations (with DeepPhe-CR average time 31.7 sec, standard deviation 14.0 sec; without DeepPhe-CR average time 59.7 seconds, standard deviation 14.9 sec, Wilcoxon’s W, p < 0.05). No significant difference was found for the other participant. This may be due to the need to review the document to avoid omitting any relevant information: even when they felt confident about the results extracted by DeepPhe-CR, participants carefully reviewed documents in search of any additional information that may have been missed by the NLP tools.

DISCUSSION

Cancer surveillance is built on the foundation of cancer registry data abstraction work, involving manually reading and extracting information from clinical text. This careful interpretation of clinical free-text is a resource-intensive process, requiring significant person-power from trained experts who identify and code key items of interest. NLP tools capable of automating or semi-automating the identification of these key details have the potential to improve registrar efficiency and accuracy. Improving the efficiency of registry workflows also facilitates the expansion of the registry dataset to also include additional information, such as genomic reports, without additional staff resources.

Our DeepPhe-CR system provides a flexible architecture for building cancer-specific NLP tools directly into registrar workflows in a computer-assisted abstraction setting. Based on a familiar containerized implementation and REST-like18 architectures, these tools can easily be installed and accessed by web-based registry tools, thus minimizing changes to familiar workflows and encouraging adoption. An initial deployment within the Kentucky Cancer Registry’s CPDMS provides a demonstration of the feasibility of this approach.

Our aspirational goal is to develop methods to enable the near-complete automation of many cancer registry data abstraction tasks. Although the performance of the DeepPhe-CR NLP components meets the set goal of 0.75 F1 for a computer-assisted abstraction, the goal for full automation is in the 95–98% F1 range, as informally set forward by SEER and others. For a subset of the documents, that high level F1 is already achieved. Methods for identifying these documents with high confidence need to be developed thus introducing complete automation for a portion of the incoming data. Our goal is to facilitate cancer registrar efforts, providing greater user satisfaction and confidence in resulting abstraction in a human-computer interaction mode. Thus, as of now, DeepPhe-CR tools provide useful input that helps registrars complete their work within the computer-assisted abstraction setting. We also note that DeepPhe-CR is a modular architecture with inherent pluggable utility to registry software and can be enhanced with more accurate NLP methods.

The use of DeepPhe-CR annotations as a tool for supporting manual abstracting and coding is consistent with our observations during our user study of the integration of DeepPhe-CR into the Kentucky CPDMS. Although participants were enthusiastic about the NLP assistance and showed clear signs of using the extracted attributes, we did not see any consistent indication that the NLP tools helped to reduce the time required to complete abstraction tasks. Some of this effect was clearly due to the need to verify system feedback -- users were establishing their trust in the system -- as participants regularly scrolled through documents to find highlighted text corresponding to DeepPhe-CR suggestions. System suggestions that were either omitted (i.e., when DeepPhe-CR was unable to identify an attribute of a tumor) or incorrect caused problems, as users had to read the note to find appropriate text. We believe that continuous and prolonged use of DeepPhe-CR will aid in user gains in familiarity and trust of the system and of the relatively high performance of the methods for selected attributes.

Enhancements to the registry software user interface and workflow to provide additional support for the integration of NLP output predictions might provide additional gains. The integration of confidence scores displayed to the registrars for each DeepPhe-CR extracted variable was the most requested feature to add. Such scores might help focus registrar attention on those suggested items that have the greatest uncertainty and are currently under development. User interface enhancements, such as showing the context in which extracted items were found, might help minimize the effort required to verify NLP suggestions. The design of the DeepPhe-CR API also has the potential to provide additional gains, if supported through appropriate revisions to registry workflows. Specifically, DeepPhe-CR’s ability to summarize multiple pathology reports might reduce the effort needed to manually link details across reports. However, revisions to data abstraction workflows and tools will be necessary to realize these improvements.

More advanced functionality might engage registrars in providing feedback that might be used to improve the NLP tools. Such tools might allow users to indicate when an extracted attribute was correct and to suggest alternate values for incorrect results, providing feedback that might be incorporated into dynamically evolving methods for information extraction.

Further work might be needed to determine appropriate approaches for evaluating the impact of the NLP-augmented abstraction process. Although reductions in the time required for document abstraction might appear to be the most obvious potential benefit, other metrics such as subjective satisfaction and accuracy should be considered. Even if subsequent studies continue to suggest that the DeepPhe-CR annotations do not reduce task completion time, improvements in these alternative measures might be sufficient evidence to justify adoption of the NLP-aided approach to cancer data abstraction.

Limitations of this work include the relatively small sample size in terms of the number of participants in the usability study. Although the document corpus is adequate for methods development, it remains possible that results on unseen datasets will not reach the F1 scores seen in the testing set. The validation of the NLP approaches with clinical data from additional registries along with additional types of cancers and of the tools with users from those sites will be needed to demonstrate broader generalizable utility.

CONCLUSION

The use of NLP to assist in the extraction of required reporting of cancer attributes and streamlining cancer data abstraction processes is an appealing possibility. Our DeepPhe-CR system provides a containerized set of abstraction tools supported by a REST API, providing infrastructure suitable for integration into registry software. Accurate methods and a use case demonstrate the feasibility of this approach, while also demonstrating the need for improved methods. The DeepPhe-CR tools are available at https://deepphe.github.io.

Supplementary Material

ACKNOWLEDGMENTS

David Harris and Lisa Witt provided vital annotation help and assistance in user-testing prototype tools. Brent Mumphrey, Susan Gershman, and Hung Tran provided useful feedback to the development of the tools described in this paper.

FUNDING

HH, SF, ZY, EBD, JCJ, IH, DR, RK, JLW, and GS were supported by NCI grant UH3CA243120. HH, JLW, and GS were also supported by NCI Grant U24CA248010. EDB, JCJ, IH, DR, and RK were supported by P30 CA177558, and HHSN261201800013I/HHSN26100001 (SEER KY). XW was supported by NCI-SEER (HHSN261201800007I/HHSN26100002) and CDC-NPCR (NU58DP006332) to the Louisiana Tumor Registry.

REFERENCES

- 1.Alawad M, Gao S, Qiu JX, Yoon HJ, Blair Christian J, Penberthy L, et al. Automatic extraction of cancer registry reportable information from free-text pathology reports using multitask convolutional neural networks. J Am Med Inform Assoc JAMIA. 2019. Nov 9;27(1):89–98. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Yoon HJ, Peluso A, Durbin EB, Wu XC, Stroup A, Doherty J, et al. Automatic information extraction from childhood cancer pathology reports. JAMIA Open. 2022. Jul 1;5(2):ooac049. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Alawad M, Gao S, Qiu J, Schaefferkoetter N, Hinkle JD, Yoon HJ, et al. Deep Transfer Learning Across Cancer Registries for Information Extraction from Pathology Reports. In: 2019 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI). 2019. p. 1–4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Rios A, Durbin EB, Hands I, Kavuluru R. Assigning ICD-O-3 codes to pathology reports using neural multi-task training with hierarchical regularization. In: Proceedings of the 12th ACM Conference on Bioinformatics, Computational Biology, and Health Informatics [Internet]. New York, NY, USA: Association for Computing Machinery; 2021. [cited 2022 Nov 15]. p. 1–10. (BCB ‘21). Available from: 10.1145/3459930.3469541 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Yoon HJ, Klasky HB, Gounley JP, Alawad M, Gao S, Durbin EB, et al. Accelerated training of bootstrap aggregation-based deep information extraction systems from cancer pathology reports. J Biomed Inform. 2020. Oct 1;110:103564. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Savova GK, Tseytlin E, Finan S, Castine M, Miller T, Medvedeva O, et al. DeepPhe: A Natural Language Processing System for Extracting Cancer Phenotypes from Clinical Records. Cancer Res. 2017. Oct 31;77(21):e115–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.DeepPhe,. A Natural Language Processing System for Extracting Cancer Phenotypes from Clinical Records [Internet]. A Natural Language Processing System for Extracting Cancer Phenotypes from Clinical Records. [cited 2022 Nov 14]. Available from: https://deepphe.github.io

- 8.Adamo M, Groves C, Dickie L, Ruhl J. SEER Program Coding and Staging Manual 2022. [Internet]. National Cancer Institute; 2021. Available from: https://seer.cancer.gov/manuals/2022/SPCSM_2022_MainDoc.pdf [Google Scholar]

- 9.National Cancer Institute. SEER Data Management System [Internet]. SEER. [cited 2022 Nov 8]. Available from: https://seer.cancer.gov/seerdms/index.html [Google Scholar]

- 10.Savova GK, Danciu I, Alamudun F, Miller T, Lin C, Bitterman DS, et al. Use of Natural Language Processing to Extract Clinical Cancer Phenotypes from Electronic Medical Records. Cancer Res. 2019. Nov 1;79(21):5463–70. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Wang L, Fu S, Wen A, Ruan X, He H, Liu S, et al. Assessment of Electronic Health Record for Cancer Research and Patient Care Through a Scoping Review of Cancer Natural Language Processing. JCO Clin Cancer Inform. 2022. Jul;6:e2200006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Zeng J, Banerjee I, Henry AS, Wood DJ, Shachter RD, Gensheimer MF, et al. Natural Language Processing to Identify Cancer Treatments With Electronic Medical Records. JCO Clin Cancer Inform. 2021. Dec 1;(5):379–93. [DOI] [PubMed] [Google Scholar]

- 13.Karimi YH, Blayney DW, Kurian AW, Shen J, Yamashita R, Rubin D, et al. Development and Use of Natural Language Processing for Identification of Distant Cancer Recurrence and Sites of Distant Recurrence Using Unstructured Electronic Health Record Data. JCO Clin Cancer Inform. 2021. Apr;5:469–78. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Bitterman D, Miller T, Harris D, Lin C, Finan S, Warner J, et al. Extracting Relations between Radiotherapy Treatment Details. In: Proceedings of the 3rd Clinical Natural Language Processing Workshop [Internet]. Online: Association for Computational Linguistics; 2020. [cited 2022 Nov 14]. p. 194–200. Available from: https://aclanthology.org/2020.clinicalnlp-1.21 [Google Scholar]

- 15.Savova GK, Masanz JJ, Ogren PV, Zheng J, Sohn S, Kipper-Schuler KC, et al. Mayo clinical Text Analysis and Knowledge Extraction System (cTAKES): architecture, component evaluation and applications. J Am Med Inform Assoc JAMIA. 2010;17(5):507–13. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Yuan Z, Finan S, Warner J, Savova G, Hochheiser H. Interactive Exploration of Longitudinal Cancer Patient Histories Extracted From Clinical Text. JCO Clin Cancer Inform. 2020. May;4:412–20. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Campbell S, Giadresco K. Computer-assisted clinical coding: A narrative review of the literature on its benefits, limitations, implementation and impact on clinical coding professionals. Health Inf Manag J. 2020. Jan 1;49(1):5–18. [DOI] [PubMed] [Google Scholar]

- 18.Fielding RT, Taylor RN, Erenkrantz JR, Gorlick MM, Whitehead J, Khare R, et al. Reflections on the REST architectural style and “principled design of the modern web architecture” (impact paper award). In: Proceedings of the 2017 11th Joint Meeting on Foundations of Software Engineering [Internet]. New York, NY, USA: Association for Computing Machinery; 2017. [cited 2022 Jul 20]. p. 4–14. (ESEC/FSE 2017). Available from: 10.1145/3106237.3121282 [DOI] [Google Scholar]

- 19.Kentucky Cancer Registry. CPDMS.net Hospital Cancer Registry Management System [Internet]. [cited 2022 Nov 8]. Available from: https://www.kcr.uky.edu/software/cpdmsnet.php

- 20.Sioutos N, Coronado S de, Haber MW, Hartel FW, Shaiu WL, Wright LW. NCI Thesaurus: A semantic model integrating cancer-related clinical and molecular information. J Biomed Inform. 2007. Feb 1;40(1):30–43. [DOI] [PubMed] [Google Scholar]

- 21.Köhler S, Gargano M, Matentzoglu N, Carmody LC, Lewis-Smith D, Vasilevsky NA, et al. The Human Phenotype Ontology in 2021. Nucleic Acids Res. 2021. Jan 8;49(D1):D1207–17. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.