Abstract

The current paper studies the problem of minimizing a loss f(x) subject to constraints of the form Dx ∈ S, where S is a closed set, convex or not, and D is a matrix that fuses parameters. Fusion constraints can capture smoothness, sparsity, or more general constraint patterns. To tackle this generic class of problems, we combine the Beltrami-Courant penalty method of optimization with the proximal distance principle. The latter is driven by minimization of penalized objectives involving large tuning constants ρ and the squared Euclidean distance of Dx from S. The next iterate xn+1 of the corresponding proximal distance algorithm is constructed from the current iterate xn by minimizing the majorizing surrogate function . For fixed ρ and a subanalytic loss f(x) and a subanalytic constraint set S, we prove convergence to a stationary point. Under stronger assumptions, we provide convergence rates and demonstrate linear local convergence. We also construct a steepest descent (SD) variant to avoid costly linear system solves. To benchmark our algorithms, we compare their results to those delivered by the alternating direction method of multipliers (ADMM). Our extensive numerical tests include problems on metric projection, convex regression, convex clustering, total variation image denoising, and projection of a matrix to a good condition number. These experiments demonstrate the superior speed and acceptable accuracy of our steepest variant on high-dimensional problems. Julia code to replicate all of our experiments can be found at https://github.com/alanderos91/ProximalDistanceAlgorithms.jl

Keywords: Majorization minimization, steepest descent, ADMM, convergence

1. Introduction

The generic problem of minimizing a continuous function f(x) over a closed set S of can be attacked by a combination of the penalty method and distance majorization. The classical penalty method seeks the solution of a penalized version hρ(x) = f(x) + ρq(x) of f(x), where the penalty q(x) is nonnegative and 0 precisely when x ∈ S. If one follows the solution vector xρ as ρ tends to ∞, then in the limit one recovers the constrained solution (Beltrami, 1970; Courant, 1943). The function

is one of the most fruitful penalties in this setting. Our previous research for solving this penalized minimization problem has focused on an MM (majorization-minimization) algorithm based on distance majorization (Chi et al., 2014; Keys et al., 2019). In distance majorization one constructs the surrogate function

using the Euclidean projection 𝒫(xn) of the current iterate xn onto S. The minimum of the surrogate occurs at the proximal point

| (1) |

According to the MM principle, this choice of xn+1 decreases gρ(x | xn) and hence the objective hρ(x) as well. As we note in our previous JMLR paper (Keys et al., 2019), the update (1) reduces to the classical proximal gradient method when S is convex (Parikh, 2014).

We have named this iterative scheme the proximal distance algorithm (Keys et al., 2019; Lange, 2016). It enjoys several virtues. First, it allows one to exploit the extensive body of results on proximal maps and projections. Second, it does not demand that the constraint set S be convex. If S is merely closed, then the map 𝒫(x) may be multivalued, and one must choose a representative element from the projection 𝒫(xn). Third, the algorithm does not require the objective function f(x) to be differentiable. Fourth, the algorithm dispenses with the chore of choosing a step length. Fifth, if sparsity is desirable, then the sparsity level can be directly specified rather than implicitly determined by the tuning parameter of the lasso or other penalty.

Traditional penalty methods have been criticized for their numerical instability. This hazard is mitigated in the proximal distance algorithm by its reliance on proximal maps, which are often highly accurate. The major defect of the proximal distance algorithm is slow convergence. This can be ameliorated by Nesterov acceleration (Nesterov, 2013). There is also the question of how fast one should send ρ to ∞. Although optimal schedules are rarely known, simple numerical experiments yield a good choice. Finally, soft constraints can be achieved by stopping the steady increase of ρ at a finite value.

1.1. Proposed Framework

Distance majorization can be generalized in various ways. For instance, it can be expanded to multiple constraint sets. In practice, at most two constraint sets usually suffice. Another generalization is to replace the constraint x ∈ S by the constraint Dx ∈ S, where D is a compatible matrix. Again, the original case D = I is allowed. By analogy with the fused lasso of Tibshirani et al. (2005), we will call the matrix D a fusion matrix. With these ideas in mind, we examine the general problem of minimizing a differentiable function f(x) subject to r fused constraints Dix ∈ Si. We approach this problem by extending the proximal distance method. For a fixed penalty constant ρ, the objective function and its MM surrogate now become

where 𝒫i(y) denotes the projection of y onto Si. Any or all of the fusion matrices Di can be the identity I. Our motivating premise posits that projection onto each set Si is more straightforward than projection onto its preimage . When the contrary is true, one should clearly favor projection onto the preimage.

Fortunately, we can simplify the problem by defining S to be the Cartesian product and D to be the stacked matrix

Our objective and surrogate then revert to the less complicated forms

| (2) |

| (3) |

respectively, where 𝒫(x) is the Cartesian product of the projections 𝒫i(x). Note that all closed sets Si with simple projections, including sparsity sets, are fair game.

1.2. Our Contributions

In the framework described above, we summarize the contributions of the current paper.

Section 2 describes solution algorithms for minimizing the penalized loss hρ(x). Our first algorithm is based on Newton’s method applied to the surrogate gρ(x | xn). For some important problems, Newton’s method reduces to least squares. Our second method is a steepest descent algorithm on gρ(x | xn) tailored to high dimensional problems.

For a sufficiently large ρ, we show that when f(x) and S are convex and f(x) possesses a unique minimum point in the preimage of S under D, y ∈ D−1(S), the penalized loss hρ(x) attains its minimum value. This is the content of Proposition 3.1. Similarly, Proposition 3.2 shows that the surrogate gρ(x | xn) also attains its minimum.

- If in addition f(x) is differentiable, then Proposition 3.3 demonstrates that the MM iterates xn for minimizing hρ(x) satisfy

where zρ minimizes hρ(x). If f(x) is also L-smooth and μ-strongly convex, then Proposition 3.4 shows that zρ is unique and the iterates xn converge to zρ at a linear rate. More generally, Proposition 4.1 proves that the iterates xn of a generic MM algorithm converge to a stationary point of a coercive subanalytic function h(x) with a good surrogate. Our objectives and their surrogates fall into this category. Proposition 4.2 specializes the results of Proposition 4.1 to proximal distance algorithms. Propositions 4.3 and 4.4 further specialize to proximal distance algorithms with sparsity constraints and demonstrate a linear rate of convergence. Sparsity sets appear in model selection.

Finally, we discuss a competing alternating direction method of multipliers (ADMM) algorithm and note its constituent updates. Our extensive numerical experiments compare the two proximal distance algorithms to ADMM. We find that proximal distance algorithms are competitive with and often superior to ADMM in terms of accuracy and running time.

1.3. Notation

The symbols x and y and their decorated variants are typically reserved for optimization variables. Fusion operators D are problem specific but understood as real matrices. Generally, D is neither injective nor surjective; the inverse image of S is denoted D−1(S). The function f(x) represents the loss in the constrained minimization problem

whereas is the the penalized loss. Our point to set distance functions are based on the Euclidean norm via

Finally, the notation 𝒫S(y) indicates the unique Euclidean projection of y onto the closed convex set S. If S is merely closed, then 𝒫S(y) may be set-valued. Depending on the context, 𝒫S(y) also denotes a particular representative of this set.

2. Different Solution Algorithms

Unless f(x) is a convex quadratic, exact minimization of the surrogate gρ(x | xn) is likely infeasible. As we have already mentioned, to reduce the objective hρ(x) in (2), it suffices to reduce the surrogate (3). For the latter task, we recommend Newton’s method on small and intermediate-sized problems and steepest descent on large problems. The exact nature of these generic methods are problem dependent. The following section provides a high-level overview of each strategy and we defer details on our later numerical experiments to the appendices.

2.1. Newton’s Method and Least Squares

Unfortunately, the proximal operator is no longer relevant in calculating the MM update xn+1. When f(x) is smooth, Newton’s method for the surrogate gρ(x | xn) defined in Equation (3) employs the update

where Hn = d2f(xn) is the Hessian. To enforce the descent property, it is often prudent to substitute a positive definite approximation Hn for d2f(xn). In statistical applications, the expected information matrix is a natural substitute. It is also crucial to retain as much curvature information on f(x) as possible. Newton’s method has two drawbacks. First, it is necessary to compute and store d2f(xn). This is mitigated in statistical applications by the substitution just mentioned. Second, there is the necessity of solving a large linear system. Fortunately, the matrix Hn + ρDtD is often well-conditioned relative to Hn, for example, when D has full column rank and DtD is positive definite. The method of conjugate gradients can be called on to solve the linear system in this ideal circumstance.

To reduce the condition number of the matrix Hn + ρDtD even further, one can sometimes rephrase the Newton step as iteratively reweighted least squares. For instance, in a generalized linear model, the gradient ∇f(x) and the expected information H can be written as

where r is a vector of standardized residuals, Z is a design matrix, and W is a diagonal matrix of case weights (Lange, 2010; Nelder and Wedderburn, 1972). The Newton step is now equivalent to minimizing the least squares criterion

In this context a version of the conjugate gradient algorithm adapted to least squares is attractive. The algorithms LSQR (Paige and Saunders, 1982) and LSMR (Fong and Saunders, 2011) perform well when the design is sparse or ill conditioning is an issue. Given the numerical stability of the iteratively reweighted least squares updates, we favor them over the explicit Newton steps in implementing proximal distance algorithms.

2.2. Proximal Distance by Steepest Descent

In high-dimensional optimization problems, gradient descent is typically employed to avoid matrix inversion. Determination of an appropriate step length is now a primary concern. In the presence of fusion constraints Dx ∈ S and a convex quadratic loss , the gradient of the proximal distance objective at xn amounts to

For the steepest descent update xn+1 = xn − tnvn, one can show that the optimal step length is

This update obeys the descent property and avoids matrix inversion. One can also substitute a local convex quadratic approximation around xn for f(x). If the approximation majorizes f(x), then the descent property is preserved. In the failure of majorization, the safeguard of step halving is trivial to implement.

In addition to Nesterov acceleration, gradient descent can be accelerated by the subspace MM technique (Chouzenoux et al., 2010). Let Gn be the matrix with k columns determined by the k most current gradients of the objective hρ(x), including ∇hρ(xn). Generalizing our previous assumption, suppose f(x) has a quadratic surrogate with Hessian Hn at xn. Overall we get the quadratic surrogate

of gρ(x | xn). We now seek the best linear perturbation xn + Gnβ of xn by minimizing qρ(xn + Gnβ | xn) with respect to the coefficient vector β. To achieve this end, we solve the stationary equation

and find , where the gradient is

The indicated matrix inverse is just k × k.

2.3. ADMM

ADMM (alternating direction method of multipliers) is a natural competitor to the proximal distance algorithms just described (Hong et al., 2016). ADMM is designed to minimize functions of the form f(x) + g(Dx) subject to x ∈ C, where C is closed and convex. Splitting variables leads to the revised objective f(x) + g(y) subject to x ∈ C and y = Dx. ADMM invokes the augmented Lagrangian

with Lagrange multiplier λ and step length μ > 0. At iteration n + 1 of ADMM one calculates successively

| (4) |

| (5) |

| (6) |

Update (4) succumbs to Newton’s method when f(x) is smooth and , and update (5) reduces to a proximal map of g(y). Update (6) of the Lagrange multiplier λ amounts to steepest ascent on the dual function. A standard extension to the scheme in (4) through (6) is to vary the step length μ by considering the magnitude of residuals (Boyd et al., 2011). For example, letting rn = Dx − y and sn = μDt(yn−1 − yn) denote primal and dual residuals at iteration n, we make use of the heuristic

which (a) keeps the primal and dual residuals within an order of magnitude of each other, (b) makes ADMM less sensitive to the choice of step length, and (c) improves convergence.

Our problem conforms to the ADMM paradigm when S is equal to the Cartesian product and . Fortunately, the y update (5) reduces to a simple formula (Bauschke and Combettes, 2017). To derive this formula, note that the proximal map y = proxαg(z) satisfies the stationary condition

for any z, including z = Dxn+1 + λn, and any α, including α = ρ/μ. Since the projection map 𝒫(y) has the constant value 𝒫(z) on the line segment [z, 𝒫(z)], the value

satisfies the stationary condition. Because the explicit update (5) for y decreases the Lagrangian even when S is nonconvex, we will employ it generally.

The x update (4) is given by the proximal map when and D = I. Otherwise, the update of x is more problematic. Assuming f(x) is smooth and , Newton’s method gives the approximate update

Our earlier suggestion of replacing d2f(xn) by a positive definite approximation also applies here. Let us emphasize that ADMM eliminates the need for distance majorization. Although distance majorization is convenient, it is not necessarily a tight majorization. Thus, one can hope to see gains in rates of convergence. Balanced against this positive is the fact that ADMM is often slow to converge to high accuracy.

2.4. Proximal Distance Iteration

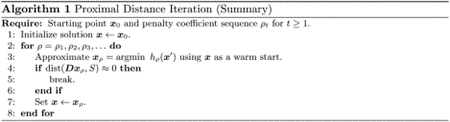

We conclude this section by describing proximal distance algorithms in pseudocode. As our theoretical results will soon illustrate, the choice of penalty parameter ρ is tied to the convergence rate of any proximal distance algorithm. Unfortunately, a large value for ρ is necessary for the iterates to converge to the constraint set S. We ameliorate this issue by slowly sending ρ → ∞ according to annealing schedules from the family of geometric progressions ρ(t) = rt−1 with t ≥ 1. Here we parameterize the family by an initial value ρ = 1 and a multiplier r > 1. Thus, our methods approximate solutions to min f(x) subject to Dx ∈ S by solving a sequence of increasingly penalized subproblems, min xhρ(t)(x). Algorithm 1 gives a high-level sketch of proximal distance iteration.

In practice we can only solve a finite number of subproblems so we prescribe the following convergence criteria

| (7) |

| (8) |

| (9) |

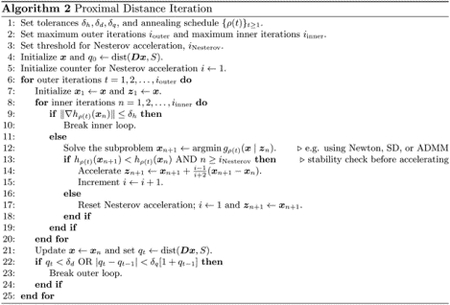

Condition (7) is a guarantee that a solution estimate xn is close to a stationary point after n inner iterations for the fixed value of ρ = ρ(t). In conditions (8) and (9), the vector xt denotes the δh-optimal solution estimate once condition (7) is satisfied for a particular subproblem along the annealing path. Condition (8) is a guarantee that solutions along the annealing path adhere to the fusion constraints at level δd. In general, condition (8) can only be satisfied for large values of ρ(t). Finally, condition (9) is used to terminate the annealing process if the relative progress made in decreasing the distance penalty becomes too small as measured by δq. Algorithm 2 describes the flow of proximal distance iteration in practice. Nesterov acceleration in inner iterations is a key ingredient. Warm starts in solving subsequent subproblems are implicit in our formulation.

3. Convergence Analysis: Convex Case

In this section we summarize convergence results for proximal distance algorithms on convex problems. Proofs of all propositions appear in Section 7. We begin our discussion by recalling the definition of the next iterate

| (10) |

and the descent property

enjoyed by all MM algorithms. As noted earlier, we can assume a single fusion matrix D and a single closed convex constraint set S. With these ideas firmly in mind, we state a sufficient condition for the existence of a minimum point of the penalized loss hρ(x). Further constraints on x beyond those imposed in the distance penalties are rolled into the essential domain of the convex loss f(x). This is particularly beneficial for a quadratic loss with an affine constraint (Keys et al., 2019; Lange, 2016).

Proposition 3.1 Suppose the convex function f(x) on possesses a unique minimum point y on the closed convex set T = D−1(S). Then for all sufficiently large ρ, the objective is coercive and therefore attains its minimum value.

Next we show that the surrogate function gρ(x | xn+1) defined in equation (3) attains its minimum value for large enough ρ. This ensures that the algorithm map (10) is well-defined.

Proposition 3.2 Under the conditions of Proposition 3.1, for sufficiently large ρ, every surrogate is coercive and therefore attains its minimum value. If

for all x and some positive semidefinite matrix A and subgradient vn at xn, and if the inequality utAu > 0 holds whenever ∥Du∥ = 0 and u ≠ 0, then for ρ sufficiently large, gρ(x | xn) is strongly convex and hence coercive.

As an illustration of Proposition 3.2, suppose that D is the forward difference operator with unit spacing. By virtue of our convexity assumption, it suffices to prove that the surrogate gρ(x | xn+1) is coercive along all rays emanating from the origin. The only vectors with Dv = 0 are multiples of 1. Thus, we only need the map t ↦ f(t1) to tend to ∞ as |t| does. This is much weaker condition than the strong convexity of f(x). In other words f(x) must compensate for the penalty where the penalty is not coercive. Uniqueness of xn+1 holds whenever gρ(x | xn) is strictly convex regardless of whether the conditions imposed by the proposition are true.

In our ideal convex setting we have a first convergence result for fixed ρ.

Proposition 3.3 Supposes (a) that S is closed and convex, (b) that the loss f(x) is convex and differentiable, and (c) that the constrained problem possesses a unique minimum point. For ρ sufficiently large, let zρ denote a minimal point of the objective hρ(x) defined by equation (2). Then the MM iterates (10) satisfy

Furthermore, the iterate values hρ(xn) systematically decrease.

In even more restricted circumstances, one can prove linear convergence of function values in the framework of Karimi et al. (2016). Specifically, our result hinges on deriving a Polyak-Łojasiewicz inequality for (sub)gradients of hρ(x), from which linear convergence follows almost immediately.

Proposition 3.4 Suppose that S is a closed and convex set and that the loss f(x) is L-smooth and μ-strongly convex. Then the objective possesses a unique minimum point zρ, and the proximal distance iterates xn satisfy

Convergence of ADMM is well studied in the optimization literature (Beck, 2017). Appendix B summarizes the main findings as they apply to the ADMM algorithms of Section 2.3.

4. Convergence Analysis: General Case

We now depart the comfortable confines of convexity. Our analysis relies on the Fréchet subdifferential (Kruger, 2003) and the theory of semialgebraic sets and functions. Readers unfamiliar with these topics are encouraged to read Appendix A for a brief review of the relevant theory.

The presentation of our results relies on the prior chain of reasoning established by Keys et al. (2019). Specifically, our arguments invoke Zangwill’s global convergence theorem for algorithm maps (Luenberger et al., 1984, Chapter 7, Section 7). The key ingredients of the theory are (i) a solution set (for instance a set of stationary points), (ii) an algorithm map that is closed outside the solution set, (iii) a compact set containing the iterates xn generated by the map, and (iv) a Lyapunov function that decreases along the iterates. Keys et al. (2019) set the stage in their Propositions 5 through 8 by showing that proximal distance algorithms with D = I satisfy the restrictions (i)-(iv) imposed by Zangwill’s global convergence theorem. Note that our algorithm maps inherit their multivalent nature from multivalent Euclidean projections onto nonconvex sets. In any event, the main hurdles to overcome in proving convergence with fixed ρ > 0 are

establishing coercivity of hρ(x),

demonstrating strong convexity of gρ(x | xn), and

showing hρ(x) satisfies a Polyak-Łojasiewicz inequality.

In the present case with D ≠ I, the coercivity assumption (a) is tied to requirement (iii) in Zangwill’s theorem as stated above. The strong convexity assumption (b) is fortunately imposed on the surrogate rather than the objective. It is also crucial that the Euclidean distance dist(x, S) to a semialgebraic set S is a semialgebraic function. In view of the closure properties of such functions, the function is also semialgebraic. Semialgebraic theory is quite general, and many common set constraints fall within its purview. For example, the nonnegative orthant and the unit sphere 𝒮p−1 are semialgebraic.

The next proposition considers the convergence of the iterates of a generic MM algorithm to a stationary point. Readers seeking a proof may consult Section A.10 in the appendix of Keys et al. (2019).

Proposition 4.1 In an MM algorithm suppose the objective h(x) is coercive, continuous, and subanalytic and all surrogates g(x | xn) are continuous, μ-strongly convex, and satisfy the Lipschitz condition

on the compact set {x : h(x) ≤ h(x0)}. Then the MM iterates xn+1 = argminx g(x | xn) converge to a stationary point.

This result builds on theoretical contributions extending Kurdyka’s, Łojasiewicz’s, and Polyak’s inequalities to nonsmooth analysis, the generic setting of semialgebraic sets and functions, and proximal algorithms (Bolte et al., 2007; Attouch et al., 2010; Kang et al., 2015; Cui et al., 2018; Le Thi et al., 2018). Note that the stationary point may represent a local minimum or even a saddle point rather than a global minimum.

Before stating a precise result for our proposed proximal distance algorithms, let us clarify the nature of the Fréchet subdifferential in the current setting. This entity is determined by the identity

for which Danskin’s theorem yields the directional derivative

Here S(x) is the solution set where the minimum is attained. The Fréchet differential

holds owing to Corollary 1.12.2 and Proposition 1.17 of Kruger (2003) since dist(Dx, S)2 is locally Lipschitz. The latter fact follows from the identity a2 − b2 = (a + b)(a − b) with a = dist(Dy, S)2 and b = dist(Dx, S)2, given that dist(w, S) is Lipschitz and bounded on bounded sets.

In any event, a stationary point x satisfies 0 = ∇f(x) + ρDt(Dx − z) for all z ∈ S(x). As we expect, the stationary condition is necessary for x to furnish a global minimum. Indeed, if it fails, take z ∈ S(x) with surrogate satisfying ∇gρ(x | x) ≠ 0. Then the negative gradient −∇gρ(x | x) is a descent direction for gρ(x | x), which majorizes hρ(x). Hence, −∇gρ(x | x) is also a descent direction for hρ(x). This conclusion is inconsistent with x being a local minimum of the objective.

Having clarified Proposition 4.1 in our context, we state a convergence result on hρ(x).

Proposition 4.2 Suppose in our proximal distance setting that ρ is sufficiently large, the closed constraint sets Si and the loss f(x) are semialgebraic, and f(x) is differentiable with a locally Lipschitz gradient. Under the coercive assumption made in Proposition 3.2, the proximal distance iterates xn converge to a stationary point of the objective hρ(x).

The coercivity assumption requires that hρ(x) be coercive for sufficiently large ρ. This is not as restrictive as it sounds. If S is compact and f(x) is convex or bounded below, then the primary hindrances are the directions v where Dv = 0. Unless D is the trivial matrix 0, this null space has Lebesgue measure 0.

We conclude this section by communicating two results involving the set Sk ⊂ Rp whose members have at most k nonzero components. The sparsity constraint defining Sk is usually expressed as ∥x∥0 ≤ k for all feasible vectors x. Projection of x onto Sk preserves the top k components of x in absolute value but sends all other components to 0. Sparsity sets can be extended to matrix-valued variables X with the sparsity constraint applying to the entries, rows, or columns. Projection in the second case ranks rows by their norms and replaces the lowest ranked rows by the 0 vector. The next proposition establishes that Sk is semialgebraic in a general setting.

Proposition 4.3 The order statistics of a finite set of semialgebraic functions are semialgebraic. Hence, sparsity sets are semialgebraic.

For sparsity constrained problems, one can establish a linear rate of convergence under the right hypotheses. The next proposition proves convergence for a wide class of fused models.

Proposition 4.4 Suppose in our proximal distance setting that ρ is sufficiently large, the constraint set is a sparsity set Sk, and the loss f(x) is semialgebraic, strongly convex, and has a Lipschitz gradient. Then the proximal distance iterates xn converge to a stationary point x∞. Convergence occurs at a linear rate provided Dx∞ has k unambiguous largest components in magnitude. When the rows of D are unique, the complementary set of points x where Dx has k ambiguous largest components in magnitude has Lebesgue measure 0.

5. Numerical Examples

This section considers five concrete examples of constrained optimization amenable to distance majorization with fusion constraints, with D denoting the fusion matrix in each problem. In each case, the loss function is both strongly convex and differentiable. The specific examples that we consider are the metric projection problem, convex regression, convex clustering, image denoising with a total variation penalty, and projection of a matrix to one with a better condition number. Each example is notable for the large number of fusion constraints and projections to convex constraint sets, except in convex clustering. In convex clustering we encounter a sparsity constraint set. Quadratic loss models feature prominently in our examples. Interested readers may consult our previous work for nonconvex examples with D = I (Keys et al., 2019; Xu et al., 2017).

5.1. Mathematical Descriptions

Here we provide the mathematical details for each example.

5.1.1. Metric Projection

Solutions to the metric projection problem restore the triangle inequality to noisy distance data represented as m nodes of a graph (Brickell et al., 2008; Sra et al., 2005). Specifically, data are encoded in an m × m dissimilarity matrix Y = (yij) with nonnegative weights in the matrix W = (wij). Metric projection requires finding the symmetric semi-metric X = (xij) minimizing

subject to all nonnegativity constraints xij ≥ 0 and all triangle inequality constraints xij − xik − xkj ≤ 0. The diagonal entries of Y, W, and X are zero by definition. The fusion matrix D has rows, and the projected value of DX must fall in the set S of symmetric matrices satisfying all pertinent constraints.

One can simplify the required projection by stacking the nonredundant entries along each successive column of X to create a vector x with entries. This captures the lower triangle of X. The sparse matrix D is correspondingly redefined to be . These maneuvers simplify constraints to Dx ≥ 0, and projection involves sending each entry u of Dx to max{0, u}. Putting everything together, the objective to minimize is

where D consists of blocks T and Ip2 and p1 and p2 count the number of triangle inequality and nonnegativity constraints, respectively. The linear system (I + ρDtD)x = b appears in both the MM and ADMM updates for xn. Application of the Woodbury and Sherman-Morrison formulas yield an exact solution to the linear system and allow one to forgo iterative methods. The interested reader may consult Appendix C for further details.

5.1.2. Convex Regression

Convex regression is a nonparametric method for estimating a regression function under shape constraints. Given m responses yi and corresponding predictors , the goal is to find the convex function ψ(x) minimizing the sum of squares . Asymptotic and finite sample properties of this convex estimator have been described in detail by Seijo and Sen (2011). In practice, a convex regression program can be restated as a finite dimensional problem of finding the value θi and subgradient of ψ(x) at each sample point (yi, xi). Convexity imposes the supporting hyperplane constraint for each pair i ≠ j. Thus, the problem becomes one of minimizing subject to these m(m − 1) inequality constraints. In the proximal distance framework, we must minimize

where D = [A B] encodes the required fusion matrix. The reader may consult Appendix D for a description of each algorithm map.

5.1.3. Convex Clustering

Convex clustering of m samples based on d features can be formulated in terms of the regularized objective

based on columns xi and ui of and , respectively. Here each is a sample feature vector and the corresponding ui represents its centroid assignment. The predetermined weights wij have a graphical interpretation under which similar samples have positive edge weights wij and distant samples have 0 edge weights. The edge weights are chosen by the user to guide the clustering process. In general, minimization of Fγ(U) separates over the connected components of the graph. To allow all sample points to coalesce into a single cluster, we assume that the underlying graph is connected. The regularization parameter γ > 0 tunes the number of clusters in a nonlinear fashion and potentially captures hierarchical information. Previous work establishes that the solution path U(γ) varies continuously with respect to γ (Chi and Lange, 2015). Unfortunately, there is no explicit way to determine the number of clusters entailed by a particular value of γ prior to fitting U(γ).

Alternatively, we can attack the problem using sparsity and distance majorization. Consider the penalized objective

The fusion matrix D has columns wij(ei − ej) and serves to map the centroid matrix U to a matrix V encoding the weighted differences wij(ui − uj). The members of the sparsity set Sk are matrices with at most k non-zero columns. Projection of UD onto the closed set Sk forces some centroid assignments to coalesce, and is straightforward to implement by sorting the Euclidean lengths of the columns of UD and sending to 0 all but the k most dominant columns. Ties are broken arbitrarily.

Our sparsity-based method trades the continuous penalty parameter γ > 0 in the previous formulation for an integer sparsity index . For example with k = 0, all differences ui − uj are coerced to 0, and all sample points cluster together. The other extreme assigns each point to its own cluster. The size of the matrices D and UD can be reduced by discarding column pairs corresponding to 0 weights. Appendix E describes the projection onto sparsity sets and provides further details.

5.1.4. Total Variation Image Denoising

To approximate an image U from a noisy input W matrix, Rudin et al. (1992) regularize a loss function f(U) by a total variation (TV) penalty. After discretizing the problem, the least squares loss leads to the objective

where U, are rectangular monochromatic images and γ controls the strength of regularization. The anisotropic norm

is often preferred because it induces sparsity in the differences. Here Dp is a forward difference operator on p data points. Stacking the columns of U into a vector u = vec(U) allows one to identify a fusion matrix D and write TV1(U) compactly as TV1(u) = ∥Du∥1. We append a row with a single 1 in the last component to make D full rank. In this context we reformulate the denoising problem as minimizing f(u) subject to the set constraint ∥Du∥1 ≤ γ. This revised formulation directly quantifies the quality of a solution in terms of its total variation and brings into play fast pivot-based algorithms for projecting onto multiples of the ℓ1 unit ball (Condat, 2016). Appendix F provides descriptions of each algorithm.

5.1.5. Projection of a Matrix to a Good Condition Number

Consider an m × p matrix M with m ≥ p and full singular value decomposition M = UΣVt. The condition number of M is the ratio σmax/σmin of the largest to the smallest singular value of M. We denote the diagonal of Σ as σ. Owing to the von Neumann-Fan inequality, the closest matrix N to M in the Frobenius norm has the singular value decomposition N = UXV t, where the diagonal x of X satisfies inequalities pertinent to a decent condition number (Borwein and Lewis, 2010). Suppose c ≥ 1 is the maximum condition number. Then every pair (xi, xj) satisfies xi − cxj ≤ 0. Note that xi − cxi > 0 if and only if xi < 0. Thus, nonnegativity of the entries of x is enforced. The proximal distance approach to the condition number projection problem invokes the objective and majorization

at iteration n, where qnij = min{xni − cxnj,0}. We can write the majorization more concisely as

where vecQn stacks the columns of Qn = (qnij) and the p2 × p fusion matrix D satisfies (Dx)k = xi − cxj for each component k. The minimum of the surrogate occurs at the point . This linear system can be solved exactly. Appendix G provides additional details.

5.2. Numerical Results

Our numerical experiments compare various strategies for implementing Algorithm 2. We consider two variants of proximal distance algorithms. The first directly minimizes the majorizing surrogate (MM), while the second performs steepest descent (SD) on it. In addition to the aforementioned methods, we tried the subspace MM algorithm described in Section 2.2. Unfortunately, this method was outperformed in both time and accuracy comparisons by Nesterov accelerated MM; the MM subspace results are therefore omitted. We also compare our proximal distance approach to ADMM as described in Section 2.3. In many cases updates require solving a large linear system; we found that the method of conjugate gradients sacrificed little accuracy and largely outperformed LSQR and therefore omit comparisons. The clustering and denoising examples are exceptional in that the associated matrices DtD are sufficiently ill-conditioned to cause failures in conjugate gradients. Table 1 summarizes choices in control parameters across each example.

Table 1:

Summary of control parameters used in each example.

| δ h | δ d | δ q | ρ(t) = rt | |

|---|---|---|---|---|

| Metric Projection | 10−3 | 10−2 | 10−6 | min{108, 1.2t−1} |

| Convex Regression | 10−3 | 10−2 | 10−6 | min{108, 1.2t−1} |

| Convex Clustering | 10−2 | 10−5 | 10−6 | min{108, 1.2t−1} |

| Image Denoising | 10−1 | 10−1 | 10−6 | min{108, 1.5t−1} |

| Condition Numbers | 10−3 | 10−2 | 10−6 | min{108, 1.2t−1} |

We now explain example by example the implementation details behind our efforts to benchmark the three strategies (MM, SD, and ADMM) in implementing Algorithm 2. In each case we initialize the algorithm with the solution of the corresponding unconstrained problem. Performance is assessed in terms of speed in seconds or milliseconds, number of iterations until convergence, the converged value of the loss f(x), and the converged distance to the constraint set dist(Dx, S), as described in Algorithm 2. Additional metrics are highlighted where applicable. The term inner iterations refers to the number of iterations to solve a penalized subproblem argmin hρ(x) for a given ρ whereas outer iterations count the total number of subproblems solved. Lastly, we remind readers that the approximate solution to argmin hρ(t)(x) is used as a warm start in solving argmin hρ(t+1)(x).

5.2.1. Metric Projection.

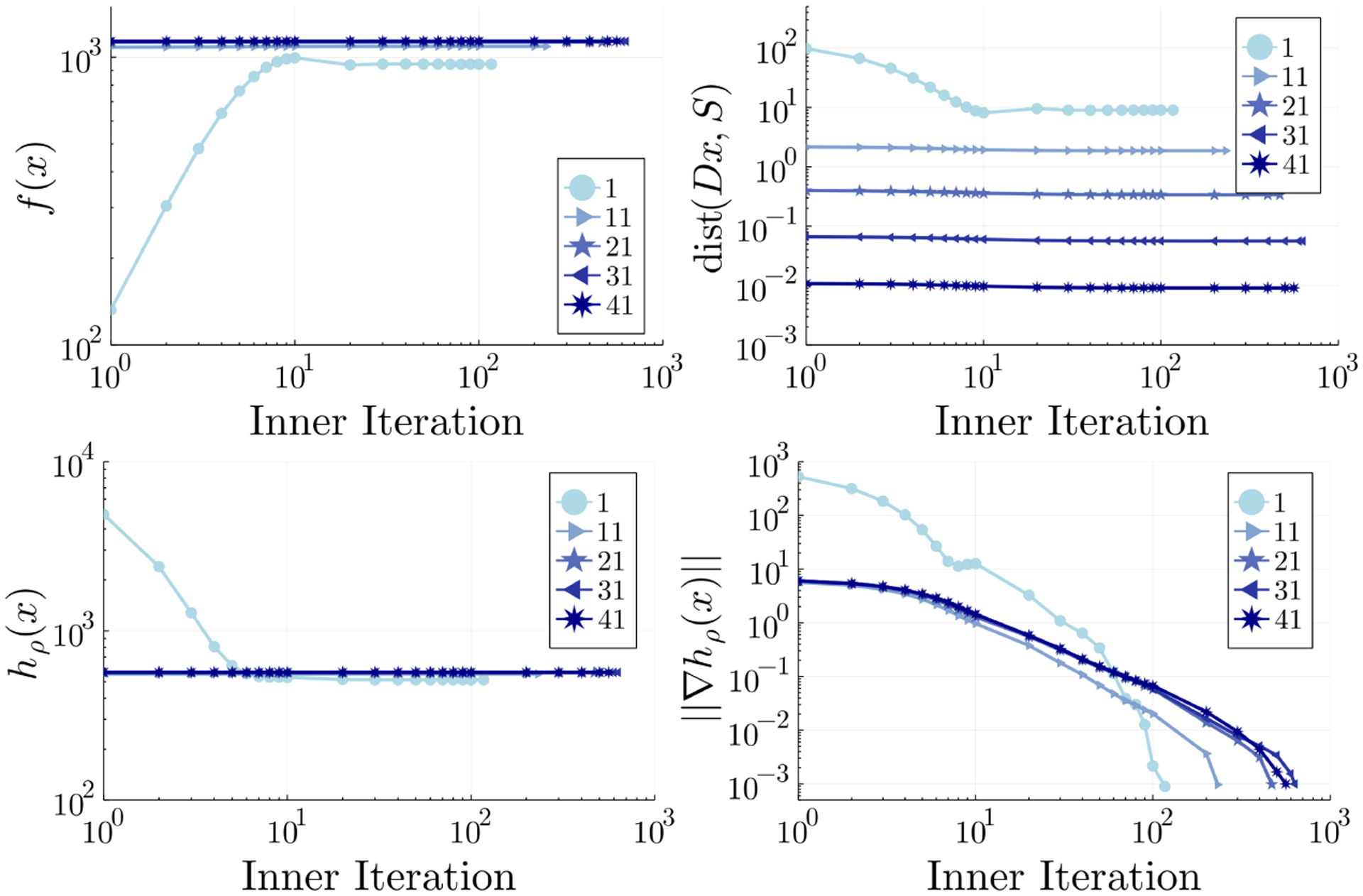

In our comparisons, we use input matrices whose iid entries yij are drawn uniformly from the interval [0, 10] and set weights wij = 1. Each algorithm is allotted a maximum of 200 outer and 105 inner iterations, respectively, to achieve a gradient norm of δh = 10−3 and distance to feasibility of δd = 10−2. The relative change parameter is set to δq = 0 and the annealing schedule is set to ρ(t) = min{108, 1.2t−1} for the proximal distance methods. Table 2 summarizes the performance of the three algorithms. Best values appear in boldface. All three algorithms converge to a similar solution as indicated by the final loss ∥y − x∥2 and distance values. It is clear that SD matches or outperforms MM and ADMM approaches on this example. Notably, the linear system appearing in the MM update admits an exact solution (see Appendix C.5), yet SD has a faster time to solution with fewer iterations taken. The selected convergence metrics in Figure 1 vividly illustrate stability of solutions xρ = argmin hρ(x) along an annealing path from ρ = 1 to ρ = 1.240 ≈ 1470. Specifically, solving each penalized subproblem along the sequence results in marginal increase in the loss term with appreciable decrease in the distance penalty. Except for the first outer iteration, there is minimal decrease of the loss, distance penalty, or penalized objective within a given outer iteration even as the gradient norm vanishes. The observed tradeoff between minimizing a loss model and minimizing a nonnegative penalty is well-known in penalized optimization literature (Beltrami, 1970; Lange, 2016, see Proposition 7.6.1 on p. 183).

Table 2:

Metric projection. Times are averaged over 3 replicates with standard deviations in parentheses. Reported iteration counts reflect the total inner iterations taken with outer iterations in parentheses.

| Time (s) | Loss ×10−3 | Distance ×103 | Iterations | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| m | MM | SD | ADMM | MM | SD | ADMM | MM | SD | ADMM | MM | SD | ADMM |

| 16 | 0.0429 (0:000709) |

0.0338 (0:000137) | 0.107 (0.000858) |

0.237 | 0.237 | 0.237 | 9.24 | 9.24 | 9.24 | 4980 (37) |

3920 (37) |

7030 (37) |

| 32 |

1.24 (0.00309) |

1:28 (0.00617) |

2.6 (0.0149) |

1.14 | 1.14 | 1.14 | 9.13 | 9.13 | 9.13 | 16000 (41) |

15400 (41) |

17300 (41) |

| 64 | 19.5 (0.00835) |

16.7 (0.0148) |

43.9 (0.0616) |

4.69 | 4.69 | 4.69 | 8.7 | 8.7 | 8.7 | 30100 (44) |

24200 (44) |

33700 (44) |

| 128 | 171 (0.613) |

150 (0.28) |

725 (0.772) |

18.4 | 18.4 | 18.4 | 9.44 | 9.44 | 9.44 | 29900 (44) |

23700 (44) |

51900 (44) |

| 256 | 1670 (0.702) |

1570 (5.63) |

9110 (75.4) |

75.3 | 75.3 | 75.3 | 8.68 | 8.68 | 8.68 | 32500 (46) |

26700 (46) |

76100 (46) |

Figure 1:

Loss, distance, penalized objective, and gradient norm for SD on metric projection problem with 32 nodes, labeled by outer iteration.

5.2.2. Convex Regression.

In our numerical examples the observed functional values yi are independent Gaussian deviates with means ψ(xi) and common variance σ2 = 0.1. The predictors are iid deviates sampled from the uniform distribution on [−1, 1]d. We choose the simple convex function ψ(xi) = ∥xi∥2 for our benchmarks for ease in interpretation; the interested reader may consult the work of Mazumder et al. (2019) for a detailed account of the applicability of the technique in general. Each algorithm is allotted a maximum of 200 outer and 104 inner iterations, respectively, to converge with δh = 10−3, δd = 10−2, and δq = 10−6. The annealing schedule is set to ρ(t) = min{108, 1.2t−1}.

Table 3 demonstrates that although the SD approach is appreciably faster than both MM and ADMM, the latter appear to converge on solutions with marginal improvements in minimizing the loss ∥y − θ∥2, distance, and mean squared error (MSE) measured using ground truth functional values ψ(xi) and estimates θi. Interestingly, increasing both the number of features and samples does not necessarily increase the amount of required computational time in using a proximal distance approach; for example, see results with d = 2 and d = 20 features. This may be explained by sensitivity to the annealing schedule.

Table 3:

Convex regression. Times are reported as averages over 3 replicates.

| Time (s) | Loss ×103 | Distance ×104 | MSE ×102 | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| d | m | MM | SD | ADMM | MM | SD | ADMM | MM | SD | ADMM | MM | SD | ADMM |

| 1 | 50 | 0.015 (0.00477) |

0.0109 (0.00162) |

0.0171 (0.0033) |

454 | 454 | 454 | 87.3 | 87.4 | 87.5 | 70.1 | 70.1 | 70.1 |

| 100 | 0.0382 (0.002) |

0.0311 (0.000301) |

0.0578 (0.00179) |

510 | 510 | 510 | 94.3 | 94.4 | 94.5 | 75.3 | 75.3 | 75.3 | |

| 200 | 0.138 (0.00662) |

0.0991 (0.00138) |

0.205 (0.00213) |

471 | 471 | 471 | 92.2 | 92.2 | 92.2 | 71 | 71 | 71 | |

| 400 | 0.565 (0.012) |

0.464 (0.000493) |

0.862 (0.0184) |

501 | 501 | 501 | 96.9 | 97 | 97 | 79.1 | 79.1 | 79.1 | |

| 2 | 50 | 1.71 (0.00334) |

0.693 (0.00341) |

16 (0.00511) |

122 | 126 | 118 | 85.9 | 85.3 | 85.5 | 72.2 | 73.1 | 70.8 |

| 100 | 11.8 (0.0189) |

3.33 (0.00704) |

66.6 (0.0844) |

162 | 163 | 162 | 99.4 | 98 | 98.4 | 95.4 | 95.8 | 95.1 | |

| 200 | 51.4 (0.0701) |

14.1 (0.00805) |

230 (0.273) |

233 | 234 | 233 | 98.1 | 94.2 | 96.8 | 123 | 123 | 122 | |

| 400 | 200 (1.06) |

50.3 (0.299) |

917 (1.48) |

239 | 239 | 238 | 94.3 | 90.8 | 91.9 | 140 | 140 | 140 | |

| 10 | 50 | 0.19 (0.00281) |

0.00722 (9.99 × 10−5) |

0.196 (0.00743) |

0.000891 | 0.00109 | 0.000838 | 0.821 | 2.23 | 0.488 | 8.59 | 8.63 | 8.61 |

| 100 | 0.854 (0.0002) |

0.0644 (0.000503) |

0.873 (0.00191) |

0.000937 | 0.00097 | 0.000943 | 0.154 | 0 | 0.11 | 10.3 | 10.2 | 10.3 | |

| 200 | 3.77 (0.00486) |

0.398 (0.00888) |

3.89 (0.0132) |

0.000883 | 0.00099 | 0.00101 | 0.281 | 0 | 0.292 | 9.64 | 9.63 | 9.65 | |

| 400 | 26.8 (0.405) |

3.17 (0.0183) |

27.6 (0.0488) |

0.000992 | 0.000997 | 0.000999 | 0.185 | 0.288 | 0.176 | 9.41 | 9.42 | 9.39 | |

| 20 | 50 | 0.39 (0.00132) |

0.00791 (2.6 × 10−5) |

0.399 (0.00193) |

0.000991 | 0.00542 | 0.00091 | 0.027 | 7.06 | 0.0696 | 9.77 | 9.34 | 9.57 |

| 100 | 1.46 (0.000164) |

0.0684 (0.000174) |

1.58 (0.0329) |

0.000995 | 0.000965 | 0.000996 | 0.0308 | 0 | 0 | 9.13 | 9.22 | 9 | |

| 200 | 7.7 (0.166) |

0.414 (0.00109) |

7.78 (0.0105) |

0.000984 | 0.00113 | 0.000961 | 0.251 | 1.74 | 0.438 | 9.6 | 9.61 | 9.6 | |

| 400 | 30.2 (0.0275) |

3.03 (0.00605) |

30 (0.0157) |

0.000997 | 0.00105 | 0.00142 | 0.0921 | 1.68 | 0.646 | 10 | 10.2 | 10.2 | |

5.2.3. Convex Clustering.

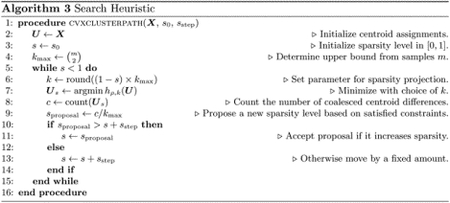

To evaluate the performance of the different methods on convex clustering, we consider a mixture of simulated data and discriminant analysis data from the UCI Machine Learning Repository (Dua and Graff, 2019). The simulated data in gaussian300 consists of 3 Gaussian clusters generated from bivariate normal distributions with means μ = (0.0, 0.0)t, (2.0, 2.0)t, and (1.8, 0.5)t, standard deviation σ = 0.1, and class sizes n1 = 150, n2 = 50, n3 = 100. This easy dataset is included to validate Algorithm 3 described later as a reasonable solution path heuristic. The data in iris and zoo are representative of clustering with purely continuous or purely discrete data, respectively. In these two datasets, samples with same class label form a cluster. Finally, the simulated data spiral500 is a classic example that thwarts k-means clustering. Each algorithm is allotted a maximum of 104 inner iterations to solve a ρ-penalized subproblem at level δh = 10−2. The annealing schedule is set to ρ(t) = min{108, 1.2t−1} over 100 outer iterations with δd = 10−5 and δq = 10−6.

Because the number of clusters is usually unknown, we implement the search heuristic outlined in Algorithm 3. The idea behind the heuristic is to gradually coerce clustering without exploring the full range of the hyperparameter k. As one decreases the number of admissible nonzero centroid differences k from kmax to 0, sparsity (1 − k/kmax) in the columns of UD increases to reflect coalescing centroid assignments. Thus, Algorithm 1 generates a list of candidate clusters that can be evaluated by various measures of similarity (Vinh et al., 2010). For example, the adjusted Rand index (ARI) provides a reasonable measure of the distance to the ground truth in our examples as it accounts for both the number of identified clusters and cluster assignments. We also report the related normed Mutual Information (NMI). The ARI takes values on [−1, 1] whereas NMI appears on a [0, 1] scale.

ADMM, as implemented here, is not remotely competitive on these examples given its extremely long compute times and failure to converge in some instances. These times are only exacerbated by the search heuristic and therefore omit ADMM from this example. The findings reported in Table 4 indicate the same accuracy for MM (using LSQR) and SD as measured by loss and distance to feasibility. Here we see that the combination of the proximal distance algorithms and the overall search heuristic (Algorithm 3) yields perfect clusters in the gaussian300 example on the basis of ARI and NMI. To its disadvantage, the search heuristic is greedy and generally requires tuning. Both MM and SD achieve similar clusters as indicated by ARI and NMI. Notably, SD generates candidate clusters faster than MM.

Table 4:

Convex clustering. Times reflect the total time spent generating candidate clusterings using Algorithm 3. Additional metrics correspond to the optimal clustering on the basis of maximal ARI. Time and clustering indices are averaged over 3 replicates with standard deviations reported in parentheses.

| Time (s) | Loss | Distance ×105 | ARI | NMI | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| dataset | features | samples | classes | MM | SD | MM | SD | MM | SD | MM | SD | MM | SD |

| zoo | 16 | 101 | 7 | 95.6 (0.268) |

77.1 (2.02) |

1600 | 1600 | 8.62 | 8.62 | 0.841 (0) |

0.848 (0.0118) |

0.853 (0) |

0.856 (0.00256) |

| iris | 4 | 150 | 3 | 76.4 (0.129) |

62.8 (2) |

596 | 596 | 8.8 | 8.8 |

0.575 (0) |

0.575 (0) |

0.734 (0) |

0.734 (0) |

| gaussian300 | 2 | 300 | 3 | 190 (0.173) |

155 (0.177) |

598 | 598 | 8.98 | 8.98 |

1 (0) |

1 (0) |

1 (0) |

1 (0) |

| spiral500 | 2 | 500 | 2 | 715 (18.5) |

561 (0.501) |

998 | 998 | 8.98 | 8.98 |

0.133 (0) |

0.133 (0) |

0.366 (0) |

0.366 (0) |

5.2.4. Total Variation Image Denoising.

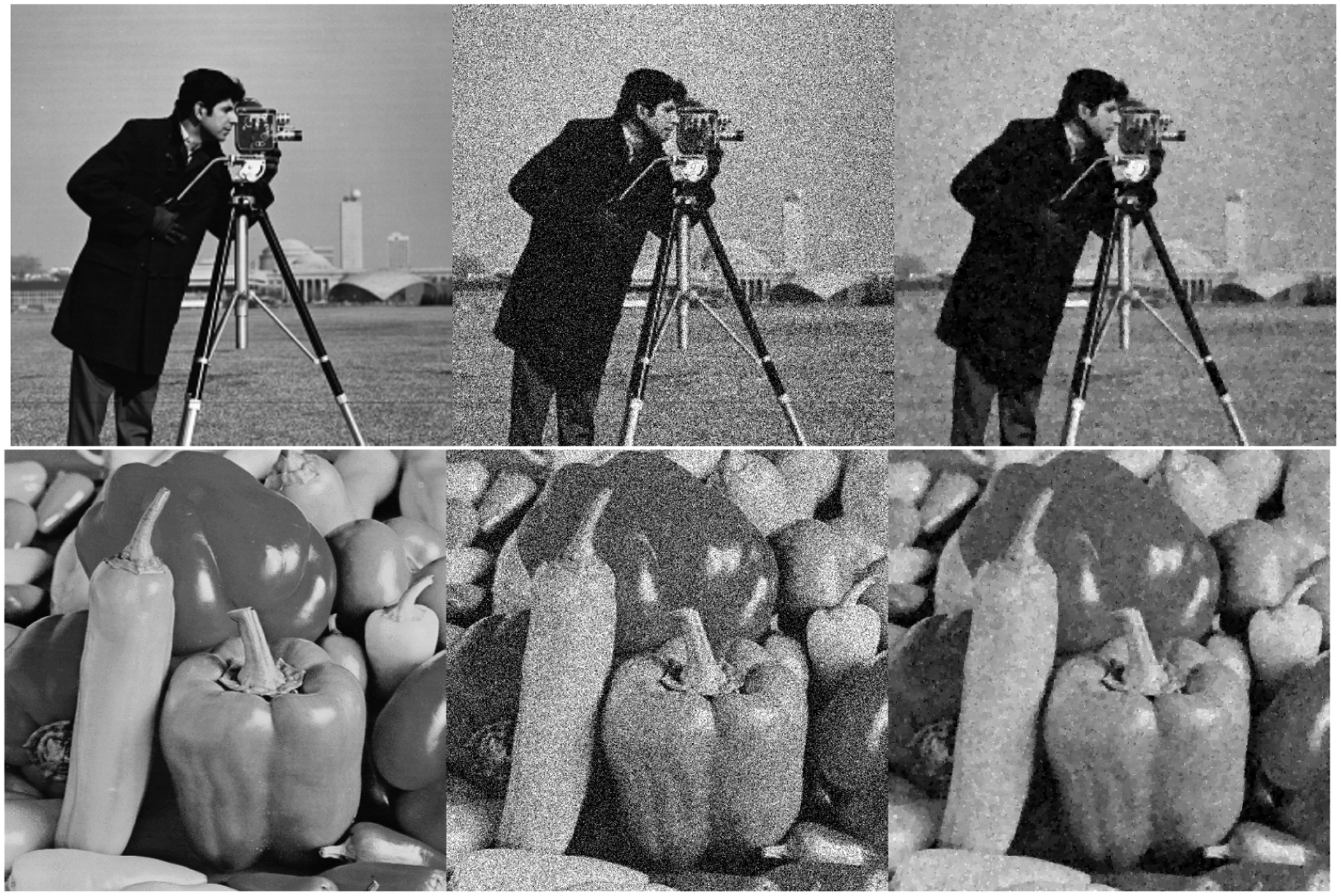

To evaluate our denoising algorithm, we consider two standard test images, cameraman and peppers_gray. White noise with σ = 0.2 is applied to an image and then reconstructed using our proximal distance algorithms. Only MM and SD are tested with a maximum of 100 outer and 104 inner iterations, respectively, and convergence thresholds δh = 10−1, δd = 10−1, and δq = 10−6. A moderate schedule ρ(t) = {108, 1.5t−1} performs well even with such lax convergence criteria. Table 5 reports convergence metrics and image quality indices, MSE and Peak Signal-to-Noise Ratio (PSNR). Timings reflect the total time spent generating solutions, starting from a 0% reduction in the total variation of the input image U up to 90% reduction in increments of 10%. Explicitly, we take γ0 = TV1(U) and vary the control parameter γ = (1 − s)γ0 with s ∈ [0, 1] to control the strength of denoising. Figure 5.2.4 depicts the original and reconstructed images along the solution path.

Table 5:

Image denoising. Times reflect the total time spent generating candidate images, averaged over 3 replicates, ultimately achieving 90% reduction in total variation

| Time (s) | Loss | Distance ×103 | MSE ×105 | PSNR | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| image | width | height | MM | SD | MM | SD | MM | SD | MM | SD | MM | SD |

| cameraman | 512 | 512 | 557 (5.01) |

181 (0.906) |

8090 | 8090 | 93.1 | 93.1 | 284 | 284 | 25.5 | 25.5 |

| peppers_gray | 512 | 512 | 553 (6.28) |

183 (2.11) |

8020 | 8020 | 92.6 | 92.5 | 290 | 290 | 25.4 | 25.4 |

Figure 2:

Sample images along the solution path of the search heuristic. Images are arranged from left to right as follows: reference, noisy input, and 90% reduction.

5.2.5. Projection of a Matrix to a Good Condition Number.

We generate base matrices as random correlation matrices using MatrixDepot.jl (Zhang and Higham, 2016), which relies on Davies’ and Higham’s refinement (Davies and Higham, 2000) of the Bendel-Mickey algorithm (Bendel and Mickey, 1978). Simulations generate matrices with condition numbers c(M) in the set {119, 1920, 690}. Our subsequent analyses target condition number decreases by a factor a such a ∈ {2, 4, 16, 32}. Each algorithm is allotted a maximum of 200 outer and 104 inner iterations, respectively with choices δh = 10−3, δd = 10−2, δq = 10−6, and ρ(t) = 1.2t−1. Table 6 summarizes the performance of the three algorithms. The quality of approximate solutions is similar across MM, SD, and ADMM in terms of loss, distance, and final condition number metrics. Interestingly, the MM approach requires less time to deliver solutions of comparable quality to SD solutions as the size p of the input matrix increases.

Table 6:

Condition number experiments. Here c(M) is the condition number of the input matrix, a is the decrease factor, and c(X) is the condition number of the solution.

| Time (ms) | Loss ×103 | Distance ×105 | c(X) | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| p | c(M) | a | MM | SD | ADMM | MM | SD | ADMM | MM | SD | ADMM | MM | SD | ADMM |

| 10 | 119 | 2 | 0.576 (0.104) |

0.391 (0.00629) |

0.582 (0.172) |

0.549 | 0.548 | 0.549 | 41.1 | 41.1 | 40.6 | 59.4 | 59.4 | 59.4 |

| 4 | 0.413 (0.00321) |

0.359 (0.00129) |

0.543 (0.101) |

4.92 | 4.92 | 4.92 | 236 | 234 | 236 | 29.7 | 29.7 | 29.7 | ||

| 16 | 0. 439 (0.00319) |

0.424 (0.00427) |

0.535 (0.00395) |

130 | 130 | 130 | 926 | 929 | 927 | 7.44 | 7.44 | 7.44 | ||

| 32 | 0.662 (0.00336) |

0.496 (0.00357) |

0.824 (0.00459) |

821 | 821 | 821 | 984 | 983 | 983 | 3.72 | 3.72 | 3.72 | ||

| 100 | 1920 | 2 |

37.7 (2.89) |

51.1 (3.77) |

149 (0.972) |

0.00119 | 0.00119 | 0.00119 | 0.208 | 0.208 | 0.204 | 960 | 960 | 960 |

| 4 |

17.1 (0.164) |

26.5 (2.8) |

66.4 (0.485) |

0.0107 | 0.0107 | 0.0107 | 0.845 | 0.845 | 0.877 | 480 | 480 | 480 | ||

| 16 |

10.1 (0.301) |

11. (0.0472) |

35.3 (0.437) |

0.436 | 0.436 | 0.436 | 17.8 | 17.9 | 17.9 | 120 | 120 | 120 | ||

| 32 |

26 (2.23) |

33.4 (0.49) |

74 (0.893) |

3.26 | 3.26 | 3.26 | 95.2 | 95.2 | 95.2 | 60 | 60 | 60 | ||

| 1000 | 59400 | 2 |

60.3 (0.83) |

75.3 (1.35) |

157 (3.98) |

1.15 × 10−6 | 1.15 × 10−6 | 1.15 × 10−6 | 0 | 0 | 0 | 29700 | 29700 | 29600 |

| 4 |

57.4 (3.37) |

71.8 (0.882) |

131 (8.44) |

1.04 × 10−5 | 1.04 × 10−5 | 1.04 × 10−5 | 0 | 0 | 0 | 14800 | 14800 | 14800 | ||

| 16 |

55.6 (3.3) |

71.9 (2.05) |

152 (38.8) |

0.000258 | 0.000258 | 0.000261 | 0 | 0 | 0 | 3710 | 3710 | 3690 | ||

| 32 |

23200 (855) |

30000 (337) |

87400 (1340) |

0.0011 | 0.0011 | 0.00111 | 0.11 | 0.11 | 0 | 1860 | 1860 | 1850 | ||

6. Discussion

We now recapitulate the main findings of our numerical experiments. Tables 2 through 6 show a consistent pattern of superior speed by the steepest descent (SD) version of the proximal distance algorithm. This is hardly surprising since unlike ADMM and MM, SD avoids solving a linear system at each iteration. SD’s speed advantage tends to persist even when the linear system can be solved exactly. The condition number example summarized in Table 6 is an exception to this rule. Here the MM updates leverage a very simple matrix inverse. MM is usually faster than ADMM. We attribute MM’s performance edge to the extra matrix-vector multiplications involving the fusion matrix D required by ADMM. In fairness, ADMM closes the speed gap and matches MM on convex regression.

The choice of annealing schedule can strongly impact the quality of solutions. Intuitively, driving the gradient norm ∥∇hρ(x)∥ to nearly 0 for a given ρ keeps the proximal distance methods on the correct annealing path and yields better approximations. Provided the penalized objective is sufficiently smooth, one expects the solution xρ ≈ argmin hρ(x) to be close to the solution xρ′ ≈ argmin hρ′(x) when the ratio ρ′/ρ > 1 is not too large. Thus, choosing a conservative δh for the convergence criterion ∥∇hρ(x)∥ ≤ δh may guard against a poorly specified annealing schedule. Quantifying sensitivity of intermediate solutions xρ with respect to ρ is key in avoiding an increase in inner iterations per subproblem; for example, as observed in Figure 1. Given the success of our practical annealing recommendation to overcome the unfortunate coefficients in Propositions 3.3 and 3.4, this topic merits further consideration in future work.

In practice, it is sometimes unnecessary to impose strict convergence criteria on the proximal distance iterates. It is apparent that the convergence criteria on convex clustering and image denoising are quite lax compared to choices in other examples, specifically in terms of δh. Figure 1 suggests that most of the work in metric projection involves driving the distance penalty downhill rather than in fitting the loss. Surprisingly, Table 4 shows that our strict distance criterion δd = 10−5 in clustering is achieved. This implies dist(Dx, S)2 ≤ 10−10 on the selected solutions with ρ ≤ 108, yet we only required ∥∇hρ(x)∥ ≤ 10−2 on each subproblem. Indeed, not every problem may benefit from precise solution estimates. The image processing example underscores this point as we are able to recover denoised images with the choices δh = δd = 10−1. Problems where patterns or structure in solutions are of primary interest may stand to benefit from relaxed convergence criteria.

Our proximal distance method, as described in Algorithm 2, enjoys several advantages. First, fusion constraints fit naturally in the proximal distance framework. Second, proximal distances enjoy the descent property. Third, there is a nearly optimal step size for gradient descent when second-order information is available on the loss. Fourth, proximal distance algorithms are competitive if not superior to ADMM on many problems. Fifth, proximal distance algorithms like iterative hard thresholding rely on set projection and are therefore helpful in dealing with hard sparsity constraints. The main disadvantages of the proximal distance methods are (a) the overall slow convergence due to the loss of curvature information on the distance penalty and (b) the need for a reasonable annealing schedule. In practice, a little experimentation can yield a reasonable schedule for an entire class of problems. Many competing methods are only capable of dealing with soft constraints imposed by the lasso and other convex penalties. To their detriment, soft constraints often entail severe parameter shrinkage and lead to an excess of false positives in model selection.

Throughout this manuscript we have stressed the flexibility of the proximal distance framework in dealing with a wide range of constraints as a major strength. From our point of view, proximal distance iteration adequately approximates feasible, locally optimal solutions to constrained optimization problems for well behaved constraint sets, for instance convex sets or semialgebraic sets. Combinatorially complex constraints or erratic loss functions can cause difficulties for the proximal distance methods. The quadratic distance penalty dist(Dx, S)2 is usually not an issue, and projection onto the constraint should be fast. Poor loss functions may either lack second derivatives or may possess a prohibitively expensive and potentially ill-conditioned Hessian d2f(x). In this setting techniques such as coordinate descent and regularized and quasi-Newton methods are viable alternatives for minimizing the surrogate gρ(x | xn) generated by distance majorization. In any event, it is crucial to design a surrogate that renders each subproblem along the annealing path easy to solve. This may entail applying additional majorizations in f(x). Balanced against this possibility is the sacrifice of curvature information with each additional majorization.

We readily acknowledge that other algorithms may perform better than MM and proximal distance algorithms on specific problems. The triangle fixing algorithm for metric projection is a case in point (Brickell et al., 2008), as are the numerous denoising algorithms based on the ℓ1 norm. This objection obscures the generic utility of the proximal distance principle. ADMM can certainly be beat on many specific problems, but nobody seriously suggests that it be rejected across the board. Optimization, particularly constrained optimization, is a fragmented subject, with no clear winner across problem domains. Generic methods serve as workhorses, benchmarks, and backstops.

As an aside, let us briefly note that ADMM can be motivated by the MM principle, which is the same idea driving proximal distance algorithms. The optimal pair (x, y) and λ furnishes a stationary point of the Lagrangian. Because the Lagrangian is linear in λ, its maximum for fixed (x, y) is ∞. To correct this defect, one can add a viscosity minorization to the Lagrangian. This produces the modified Lagrangian

The penalty term has no impact on the x and y updates. However, the MM update for λ is determined by the stationary condition

so that

The choice α = 1 gives the standard ADMM update. Thus, the ADMM algorithm alternates decreasing and increasing the Lagrangian in a search for the saddlepoint represented by the optimal trio (x, y, λ).

In closing we would like to draw the reader’s attention to some generalizations of the MM principle and connections to other well-studied algorithm classes. For instance, a linear fusion constraint Dx ∈ S can in principle by replaced by a nonlinear fusion constraint M(x) ∈ S. The objective and majorizer are then

The objective has gradient g = ∇f(x) + ρdM(x)t{M(x)− 𝒫S[M(x)]}. The second differential of the majorizer is approximately d2f(x) + ρdM(x)tdM(x) for M(x) close to 𝒫S[M(x)]. Thus, gradient descent can be implemented with step size

assuming the denominator is positive.

Algebraic penalties such as ∥g(x)∥2 reduce to distance penalties with constraint set {0}. The corresponding projection operator sends any vector y to 0 so that the algebraic penalty ∥g(x)∥2 = dist[g(x), {0}]2. This observation is pertinent to constrained least squares with g(x) = d − Cx (Golub and Van Loan, 1996). The proximal distance surrogate can be expressed as

and minimized by standard least squares algorithms. No annealing is necessary. Inequality constraints g(x) ≤ 0 behave somewhat differently. The proximal distance majorization is not the same as the Beltrami quadratic penalty (Beltrami, 1970). However, the standard majorization (Lange, 2016)

brings them back into alignment.

7. Proofs

In this section we provide proofs for the convergence results discussed in Section 3 and Section 4 for the convex and nonconvex cases, respectively.

7.1. Proposition 3.1

Proof Without loss of generality we can translate the coordinates so that y = 0. Let B be the unit sphere {x: ∥x∥ = 1}. Our first aim is to show that hρ(x) > f(0) throughout B. Consider the set B ∩ T, which is possibly empty. On this set the infimum b of f(x) is attained, so b > f(0) by assumption. The set B\T will be divided into two regions, a narrow zone adjacent to T and the remainder. Now let us show that there exists a δ > 0 such that hρ(x) ≥ f(x) ≥ f(0) + δ for all x ∈ B with dist(Dx, S) ≤ δ. If this is not so, then there exists a sequence xn ∈ B with and . By compactness, some subsequence of xn converges to z ∈ B ∩ T with f(z) ≤ f(0), contradicting the uniqueness of y. Finally, let a = minx∈B f(x). To deal with the remaining region take ρ large enough so that . For such ρ, hρ(x) > f(0) everywhere on B. It follows that on the unit ball {x : ∥x∥ ≤ 1}, hρ(x) is minimized at an interior point. Because hρ(x) is convex, a local minimum is necessarily a global minimum.

To show that the objective hρ(x) is coercive, it suffices to show that it is coercive along every ray {tv: t ≥ 0, ∥v∥ = 1} (Lange, 2016). The convex function r(t) = hρ(tv) satisfies . Because r(0) < r(1), the point 1 is on the upward slope of r(t), and the one-sided derivative . Coerciveness follows from this observation. ■

7.2. Proposition 3.2

Proof The first assertion follows from the bound gρ(x | xn) ≥ hρ(x). To prove the second assertion, we note that it suffices to prove the existence of some constant ρ > 0 such that the matrix A + ρDtD is positive definite (Debreu, 1952). If no choice of ρ renders A + ρDtD positive definite, then there is a sequence of unit vectors um and a sequence of scalars ρm tending to ∞ such that

| (11) |

By passing to a subsequence if needed, we may assume that the sequence um converges to a unit vector u. On the one hand, because DtD is positive semidefinite, inequality (11) compels the conclusions , which must carry over to the limit. On the other hand, dividing inequality (11) by ρm and taking limits imply utDtDu ≤ 0 and therefore ∥Du∥ = 0. Because the limit vector u violates the condition utAu > 0, the required ρ > 0 exists. ■

7.3. Proposition 3.3

Proof Systematic decrease of the iterate values hρ(xn) is a consequence of the MM principle. The existence of zρ follows from Proposition 3.1. To prove the stated bound, first observe that the function is convex, being the sum of the convex function f(x) and a linear function. Because ∇gρ(xn+1 | xn)t(x − xn+1) ≥ 0 for any x in S, the supporting hyperplane inequality implies that

or equivalently

| (12) |

Now note that the difference

has gradient

Because 𝒫(x) is non-expansive, the gradient ∇d(x | y) is Lipschitz with constant 1. The tangency conditions d(y | y) = 0 and ∇d(y | y) = 0 therefore yield

| (13) |

for all x. At a minimum zρ of hρ(x), combining inequalities (12) and (13) gives

Adding the result

over n and invoking the descent property hρ(xn+1) ≤ hρ(xn), telescoping produces the desired error bound

This is precisely the asserted bound. ■

7.4. Proposition 3.4

Proof The existence and uniqueness of zρ are obvious. The remainder of the proof hinges on the facts that hρ(x) is μ-strongly convex and the surrogate gρ(x | w) is L + ρ∥D∥2-smooth for all w. The latter assertion follows from

These facts together with ∇gρ(zρ | zρ) = ∇hρ(zρ) = 0 imply

| (14) |

The strong convexity condition

entails

It follows that . This last inequality and inequality (14) produce the Polyak-Łojasiewicz bound

Taking c = L + ρ∥D∥2 and

the Polyak-Łojasiewicz bound gives

Rearranging this inequality yields

which can be iterated to give the stated bound. ■

7.5. Proposition 4.2

Proof To validate the subanalytic premise of Proposition 4.1, first note that semialgebraic functions and sets are automatically subanalytic. The penalized loss

is semialgebraic by the sum rule. Under the assumption stated in Proposition 3.2, gρ(x | xn) is strongly convex and coercive for ρ sufficiently large. Continuity of gρ(x | xn) is a consequence of the continuity of f(x). The Lipschitz condition follows from the fact that the sum of two Lipschitz functions is Lipschitz. Under these conditions and regardless of which projected point PS(x) is chosen, the MM iterates are guaranteed to converge to a stationary point. ■

7.6. Proposition 4.3

Proof The first claim is true owing to the inclusion-exclusion formula

and the previously stated closure properties. For n = 3 and k = 2 the inclusion-exclusion formula reads f(2) = max{f1, f2} + max{f1, f3} + max{f2, f3}−2max{f1, f2, f3}. To prove the second claim, note that a sparsity set in with at most k nontrivial coordinates can be expressed as the zero set {x: y(p−k) = 0}, where yi = |xi|. Thus, the sparsity set is semialgebraic. ■

7.7. Proposition 4.4

Proof Proposition 4.2 proves that the proximal distance iterates xn converge to x∞. Suppose that Dx∞ has k unambiguous largest components in magnitude. Then Dxn shares this property for large n. It follows that all occur in the same k-dimensional subspace S for large n. Thus, we can replace the sparsity set Sk by the subspace S in minimization from some n onward. Convergence at a linear rate now follows from Proposition 3.4.

To prove that the set A of points x such that Dx has k ambiguous largest components in magnitude has measure 0, observe that it is contained in the set T where two or more coordinates tie. Suppose x satisfies the tie condition for two rows and of D. If the rows of D are unique, then the equality (di − dj)tx = 0 defines a hyperplane in x space and consequently has measure 0. Because there are a finite number of row pairs, T as a union has measure 0. ■

Acknowledgements

H.Z. and K.L. were supported by NIH grants R01-HG006139 and R35-GM141798. H.Z. was also supported by NSF grant DMS-2054253.

Appendix A. Relevant Theory for Nonconvex Analysis

Let us first review the notion of a Fréchet subdifferential (Kruger, 2003). If h(x) is a function mapping into , then its Fréchet subdifferential at x ∈ dom f is defined as

The set ∂Fh(x) is closed, convex, and possibly empty. If h(x) is convex, then ∂Fh(x) reduces to its convex subdifferential. If h(x) is differentiable, then ∂Fh(x) reduces to its ordinary differential. At a local minimum x, Fermat’s rule 0 ∈ ∂Fh(x) holds. For a locally Lipschitz and directionally differentiable function, the Fréchet subdifferential becomes

Here duh(x) is the directional derivative of h(x) at x in the direction u. This result makes it clear that at a critical point, all directional derivatives are flat or point uphill.

We will also need some notions from algebraic geometry (Bochnak et al., 2013). For simplicity we focus on the class of semialgebraic functions and the corresponding class of semialgebraic subsets of . The latter is the smallest class that:

contains all sets of the form {x: q(x) > 0} for a polynomial q(x) in p variables, and

is closed under the formation of finite unions, finite intersections, set complements, and Cartesian products.

A function is said to be semialgebraic if its graph is a semialgebraic set of . The class of real-valued semialgebraic functions contains all polynomials p(x) and all 0/1 indicators of algebraic sets. It is closed under the formation of sums and products and therefore constitutes a commutative ring with identity. The class is also closed under the formation of absolute values, reciprocals when a(x) ≠ 0, nth roots when a(x) ≥ 0, and maxima max{a(x), b(x)} and minima min{a(x), b(x)}. Finally, the composition of two semialgebraic functions is semialgebraic.

Appendix B. Convergence Properties of ADMM

To avail ourselves of the known results, we define three functions

the second and third being the Lagrangian and dual function. This notation leads to following result; see Beck (2017) for an accessible proof.

Proposition B.1 Suppose that S is closed and convex and that the loss f(x) is proper, closed, and convex with domain whose relative interior is nonempty. Also assume the dual function q(λ) achieves its maximum value. If the objective achieves its minimum value for all a ≠ 0, then the ADMM running averages

satisfy

Note that Proposition 3.2 furnishes a sufficient condition under which the functions achieve their minima. Linear convergence holds under stronger assumptions.

Proposition B.2 Suppose that S is closed and convex, that the loss f(x) is L-smooth and μ-strongly convex, and that the map determined by D is onto. Then the ADMM iterates converge at a linear rate.

Giselsson and Boyd (2016) proved Proposition B.2 by operator methods. A range of convergence rates is specified there.

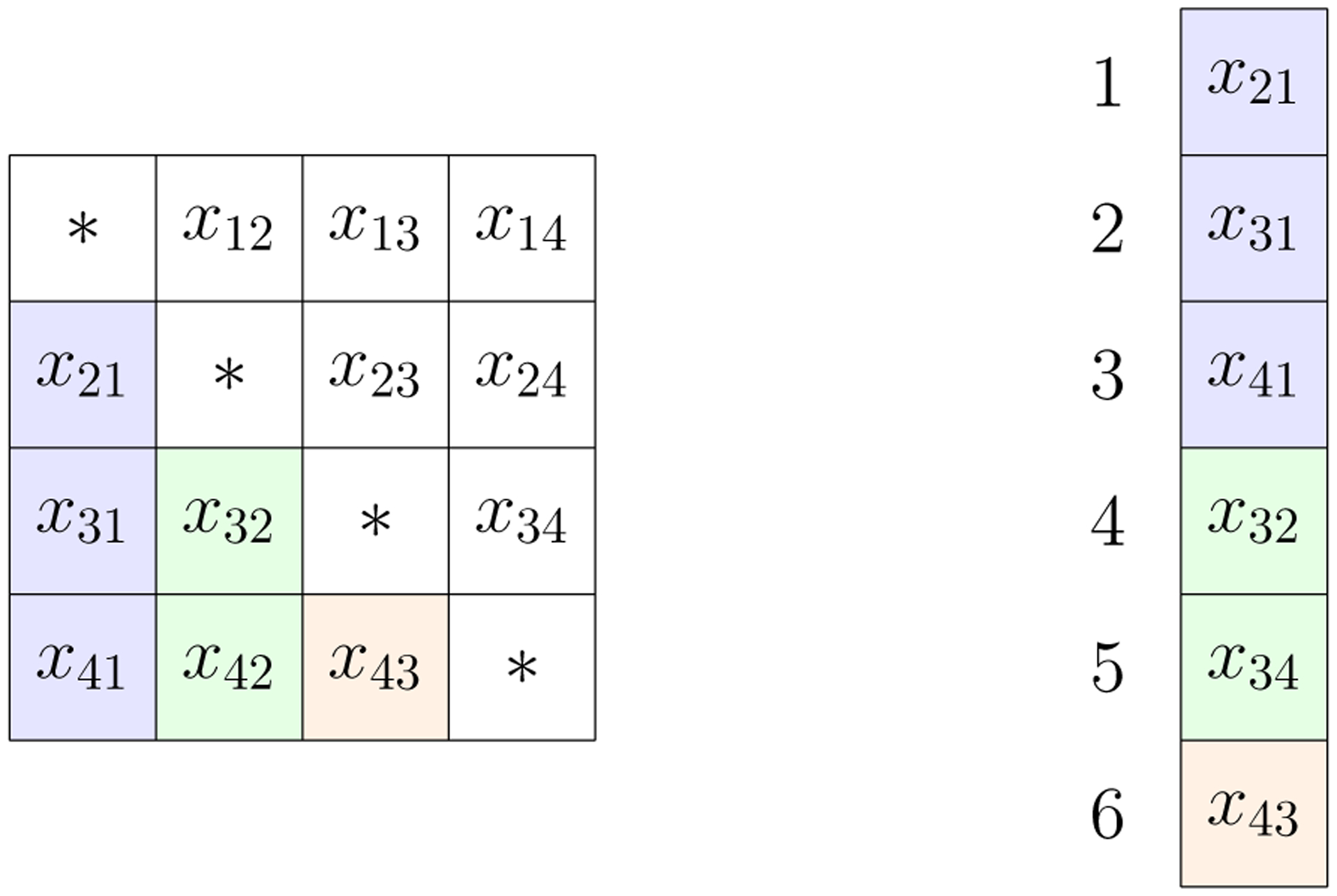

Appendix C. Additional Details for Metric Projection Example

Given a n × n dissimilarity matrix C = (cij) with non-negative weights wij, our goal is to find a semi-metric X = (xij). We start by denoting trivec an operation that maps a symmetric matrix X to a vector x, x = trivec(X) (Figure 3). Then we write the metric projection objective as

where c = trivec(C). Here T encodes triangle inequalities and the mi count the number of constraints of each type. The usual distance majorization furnishes a surrogate

The notation 𝒫(·, S) denotes projection onto a set S. The fusion matrix D = [T ; I] stacks the two operators; the joint projection operates in a block-wise fashion.

Figure 3:

Example of a symmetric matrix X and its minimal representation x = trivec(X).

C.1. MM

We rewrite the surrogate explicitly as a least squares problem minimizing :

where c ≡ y from the main text. Updating the RHS bn in the linear system reduces to evaluating the projection and copy operations. It is worth noting that triangle fixing algorithms that solve the metric nearness problem operate in the same fashion, except they work one triangle a time. That is, each iteration solves least squares problems compared to 1 in this formulation. A conjugate gradient type of algorithm solves the normal equations directly using AtA, whereas LSQR type methods use only A and At.

C.2. Steepest Descent

The updates xn+1 = xn − tn∇hρ(xn) admit an exact solution for the line search parameter tn. Recall the generic formula from the main text:

Identifying vn with ∇hρ(xn) we have

C.3. ADMM

Taking y as the dual variable and λ as scaled multipliers, the updates for each ADMM block are

Finally, the Multipliers follow the standard update.

C.4. Properties of the Triangle Inequality Matrix

These results have been documented before and are useful in designing fast subroutines for Dx and DtDx. Recall that m counts the number of nodes in the problem and is the number of parameters. In this notation and DtD = TtT + Ip.

Proposition C.1 The matrix T has rows and columns.

Proof Interpret X as the adjacency matrix for a complete directed graph on m nodes without self-edges. When X is symmetric the number of free parameters is therefore . An oriented 3-cycle is formed by fixing 3 nodes so there are such cycles. Now fix the orientation of the 3-cycles and note that each triangle encodes 3 metric constraints. The number of constraints is therefore . ■

Proposition C.2 Each column of T has 3(m − 2) nonzero entries.

Proof In view of the previous result, the entries Tij encode whether edge j participates in constraint i. We proceed by induction on the number of nodes m. The base case m = 3 involves one triangle and is trivial. Note that a triangle encodes 3 inequalities.

Now consider a complete graph on m nodes and suppose the claim holds. Without loss of generality, consider the collection of 3-cycles oriented clockwise and fix an edge j. Adding a node to the graph yields 2m new edges, two for each of the existing m nodes. This action also creates one new triangle for each existing edge. Thus, edge j appears in 3(m − 2)+3 = 3[(m + 1)−2] triangle inequality constraints based on the induction hypothesis. ■

Proposition C.3 Each column of T has m − 2 +1s and 2(m − 2) −1s.

Proof Interpret the inequality xij ≤ xik + xkj with i > k > j as the ordered triple xij, xik, xkj. The statement is equivalent to counting

where N denotes the number of constraints. In view of the previous proposition, it is enough to prove a(N) = m − 2. Note that a(3) = 1, meaning that xij appears in position 1 exactly once within a given triangle. Given that an edge (i, j) appears in 3(m−2) constraints, divide this quantity by the number of constraints per triangle to arrive at the stated result. ■

Proposition C.4 The matrix T has full column rank.

Proof It is enough to show that A = TtT is full rank. The first two propositions imply

To compute the off-diagonal entries, fix a triangle and note that two edges i and j appear in all three of its constraints of the form xi ≤ xj + xk. There are three possibilities for a given constraint c:

It follows that

By Proposition C.2, an edge i appears in 3(m − 2) constraints. Imposing the condition that edge j also appears reduces this number by m − 2, the number of remaining nodes that can contribute edges in our accounting. The calculation

establishes that A is strictly diagonally dominant and hence full rank. ■

Proposition C.5 The matrix TtT has at most 3 distinct eigenvalues of the form m − 2, 2m − 2, and 3m − 4 with multiplicities 1, m − 1, and , respectively.

Proof Let be the incidence matrix of a complete graph with m vertices. That is M has entry me,v = 1 if vertex v occurs in edge e and 0 otherwise. Each row of M has two entries equal to 1; each column of M has m−1 entries equal to 1. It is easy to see

The Gram matrices MtM and MMt share the same positive eigenvalues. Since has eigenvalue 2m−2 with multiplicity 1 and eigenvalue m−2 with multiplicity m − 1, MMt has eigenvalue 2m − 2 with multiplicity 1, eigenvalue m − 2 with multiplicity m − 1, and eigenvalue 0 with multiplicity m(m − 3)/2. Therefore the eigenvalues of TtT are m − 2, 2m − 2, and 3m − 4 with multiplicities 1, m − 1, and m(m − 3)/2 respectively. ■

In general, it is easy to check that the matrix m × m matrix aI + b11t has the eigenvector 1 with eigenvalue a + mb and m − 1 orthogonal eigenvectors

with eigenvalue a. Note that each ui is perpendicular to 1. None of these eigenvectors is normalized to have length 1. Although the eigenvectors ui are certainly convenient, they are not unique.

To recover the eigenvectors of TtT, and hence those DtD, we can leverage the eigenvectors of MtM, which we know. The following generic observations are pertinent. If a matrix A has full SVD USV t, then its transpose has full SVD At = VSUt. As mentioned AAt and AtA share the same nontrivial eigenvalues. These can be recovered as the nontrivial diagonal entries of S2. Suppose we know the eigenvectors U of AAt = US2Ut. Since AtU = VS, then presumably we can recover some of the eigenvectors V as AtUS+, where S+ is the diagonal pseudo-inverse of S.

C.5. Fast Subroutines for Solving Linear Systems

Using the Woodbury formula, the inverse of TtT can be expressed as

Solving linear system TtT invokes two matrix vector multiplications involving the incidence matrix M. Mv corresponds to taking pairwise sums of the components of a vector v of length m. Mtw corresponds to taking a combination of column and row sums of a lower triangular matrix with the lower triangular part populated by the components of a vector w with length . Both operations cost O(m2) flops. This result can be extended to the full fusion matrix DtD that incorporates non-negativity constraints and, more importantly, to the linear system I + ρDtD:

Appendix D. Additional Details for Convex Regression Example

We start by formulating the proximal distance version of the problem:

where v = [θ; vec(Ξ)] stacks each optimization variable into a vector of length n(1 + d). This maneuver introduces matrices

where [Aθ]k = θj − θi and [B vec(Ξ)]k = 〈xi − xj, ξj〉 according to the ordering i > j.

D.1. MM

We rewrite the surrogate explicitly a least squares problem minimizing :

where b ≡ y to avoid clashing with notation in ADMM below. In this case it seems better to store D explicitly in order to avoid computing xi − xj each time one applies D, Dt, or DtD.

D.2. Steepest Descent

The updates vn+1 = vn − tn∇hρ(vn) admit an exact solution for the line search parameter tn. Taking qn = ∇hρ(vn) as the gradient we have

Note that Aqn = ∇θhρ(vn), the gradient with respect to function values θ.

D.3. ADMM

Take y as the dual variable and λ as scaled multipliers. Then the ADMM updates are

and with the update for the multipliers being standard.

Appendix E. Additional Details for Convex Clustering Example

We write u = vec(U) and x = vec(X), so the surrogate becomes

E.1. MM

Rewrite the surrogate explicitly a least squares problem minimizing :

E.2. Steepest Descent

The updates un+1 = un − tn∇hρ(un) admit an exact solution for the line search parameter tn. Taking qn = ∇hρ(un) as the gradient we have

Note that blocks in are equal to 0 whenever the projection of block [Dun]ℓ is non-zero.

E.3. ADMM

Take y as the dual variable and λ as scaled multipliers. Minimizing the u block involves solving a single linear system:

Multipliers follow the standard update.

E.4. Blockwise Sparse Projection