Abstract

Objectives Patient- and provider-facing screening tools for social determinants of health have been explored in a variety of contexts; however, effective screening and resource referral remain challenging, and less is known about how patients perceive chatbots as potential social needs screening tools. We investigated patient perceptions of a chatbot for social needs screening using three implementation outcome measures: acceptability, feasibility, and appropriateness.

Methods We implemented a chatbot for social needs screening in the emergency department (ED) of one large public hospital and used concurrent triangulation to assess perceptions of using the chatbot for screening. A total of 350 ED visitors completed the social needs screening and rated the chatbot on the implementation outcome measures, and 22 participants completed follow-up phone interviews.

Results The screened participants ranged in age from 18 to 90 years and were diverse in race/ethnicity, education, and insurance status. Participants (n = 350) rated the chatbot as an acceptable, feasible, and appropriate way of screening. In interviews (n = 22), participants explained that the chatbot was a responsive, private, easy-to-use, efficient, and comfortable channel for reporting social needs in the ED, but wanted more information on how their data would be used and more support in accessing resources.

Conclusion In this study, we deployed a chatbot for social needs screening in a real-world context and found that patients perceived it as an acceptable, feasible, and appropriate screening modality. These findings suggest that chatbots are promising for social needs screening and can engage a large, diverse patient population in the ED. This matters because chatbots could support a screening process that ultimately connects patients to care for social needs, improving health and well-being for members of vulnerable patient populations.

Keywords: patients, chatbot interaction, implementation, digital, social determinants of health

Background and Significance

There is growing interest in screening for social needs to understand and address the link between health inequities and social determinants of health (SDoH), 1 2 3 4 5 the conditions in which people are born, grow, live, work, and age. Social needs are the needs of an individual that arise from their SDoH, such as housing instability, food insecurity, or unemployment. 6 Hospital emergency departments (EDs) may be an appropriate setting for social needs screening because EDs serve vulnerable populations with a high prevalence of social needs. 7 8 However, SDoH screening in the ED is not routine, and even when needs are identified, referral to community services and follow-up may be beyond the current capacities of many EDs.

Patients benefit from assistance in completing screening and contacting community resources. 9 Yet implementing face-to-face social needs screening and referral in the ED is challenging due to anticipated patient discomfort and clinician burden. 10 11 Self-administered screening could overcome these challenges. Potential approaches include patient-facing surveys distributed via paper, 12 13 14 automated phone calls and text messaging, 15 tablets, 16 17 18 and personal health records. 19 While patient- and provider-facing SDoH screening tools exist, such as direct entry by patients or providers into electronic health records (EHRs), 20 they have seen limited uptake. There are well-known disparities in patient adoption of online portals and use of personal health records. 21 22 Reasons for not using patient portals include privacy and information security concerns, which underscores the importance of building patient trust in communication systems. 23 Thus, patient input is important both for addressing patients' health and social needs 24 25 26 27 28 and for designing more accessible and trustworthy approaches that better engage patients.

Chatbots, computer programs that simulate conversations with users, are increasingly adopted in health care 29 30 and have the potential to address the screening barriers described above. Chatbots may increase patient uptake because they can be more understandable and engaging than traditional online surveys. 31 32 33 Further, patients may be more willing to disclose personal information 34 and social needs information 18 to a computer. Individuals may be more inclined to use conversational agents to discuss sensitive health topics, such as addiction, 35 depression, 36 and posttraumatic stress disorder, 37 because technology can offer more confidential ways to seek information and support. 38 39 Despite growing interest in chatbot technology for health, few studies on conversational user interfaces in health care have been published. 29 40 41 42 The literature on conversational agents in health care largely addresses treatment and monitoring of health conditions, such as mental health, 43 44 45 46 Alzheimer's disease, 47 heart failure, 48 asthma, 49 and human immunodeficiency virus; 50 health service support, such as patient history taking 51 52 and triage and diagnosis support; 53 54 and patient education on topics such as sexual health, 55 smoking, 56 alcohol use, 57 and breast cancer. 58

While studies of conversational agents in health care have shown moderate evidence of usability and effectiveness, 42 further exploration of the role of conversational agents in real-world settings is needed. 40 User feedback on conversational agents in health care remains mixed, with some users expressing a desire for interactivity and agent empathy, whereas others report a dislike of these qualities. 32 33 42 Few conversational agents have been evaluated with users in clinical settings. 40 59 Prior research on conversational agents in real-world settings has identified the need to provide actionable and accurate information. 59 Additionally, prior work on conversational agents for social needs screening in clinical settings has indicated the importance of designing for and with vulnerable populations, such as people with low health literacy, to improve chatbot understandability. 32 More work is needed to evaluate chatbots in clinical settings, in particular chatbots for social needs screening. Little is known about how patients share social needs-related data during real-world clinic visits. Furthermore, it has not been established whether patients find chatbots feasible and acceptable for social needs screening, nor whether the ED is an appropriate site for social needs screening chatbots. Answering these questions is important because it could lead to a screening process that is more likely to result in patients receiving care for social needs. We build on prior work to evaluate a chatbot implementation situated within the ED workflow, and investigate patient perceptions of the screening and resource provision in the ED context.

Objectives

We investigated three implementation outcome measures 60 to evaluate the success of a chatbot implementation for social needs screening at a large hospital ED. Our aim was to address the following research questions: (1) How do patients rate the acceptability, feasibility, and appropriateness of a chatbot implementation in the ED for social needs screening? (2) What are patient perceptions of using a chatbot for social needs screening?

Methods

Study Design

In this study, we deployed a chatbot for social needs screening in a real-world context to understand patients' perspectives on the acceptability, feasibility, and appropriateness of using the tool in the ED. We used concurrent triangulation as a mixed-methods approach to confirm and corroborate findings within our study. 61 First, we collected ratings of implementation measures via surveys with participants who completed screening using the chatbot. Second, we conducted follow-up interviews with a subset of participants to further understand patient perspectives.

Setting and Recruitment

The study took place from November 9, 2020, to February 28, 2021, in the ED at Harborview Medical Center, a large, public, tertiary care teaching hospital in the Pacific Northwest region of the United States. A research assistant approached patients after they completed ED registration and triage. Patients were eligible if they were at least 18 years old, spoke English or Spanish, and did not have an acute medical or psychiatric condition. We used the Emergency Severity Index (ESI) 62 to identify patients who would be able to participate: patients with an ESI of 3 to 5 (i.e., not requiring immediate medical attention based on the triage algorithm) were eligible. At the start of the chatbot screening, participants read a short introduction to the study and gave consent by clicking “Okay, let's start” to proceed.
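The inclusion criteria above amount to a simple screening rule. The Python sketch below encodes them for illustration only: the `Patient` record, its field names, and the language codes are hypothetical and do not describe the study's actual tooling, and the clinical judgment about acute conditions is reduced to a single assumed boolean.

```python
from dataclasses import dataclass

# Hypothetical record; field names are illustrative, not from the study.
@dataclass
class Patient:
    age: int
    language: str          # assumed code, e.g. "en" (English) or "es" (Spanish)
    esi_level: int         # Emergency Severity Index, 1 (most urgent) to 5
    acute_condition: bool  # assumed flag for an acute medical or psychiatric condition

SUPPORTED_LANGUAGES = {"en", "es"}  # screening offered in English and Spanish

def is_eligible(p: Patient) -> bool:
    """Apply the stated inclusion criteria: adult, English or Spanish speaker,
    no acute condition, and ESI level 3 to 5 (no immediate attention needed)."""
    return (
        p.age >= 18
        and p.language in SUPPORTED_LANGUAGES
        and not p.acute_condition
        and 3 <= p.esi_level <= 5
    )

print(is_eligible(Patient(age=42, language="es", esi_level=4, acute_condition=False)))  # True
print(is_eligible(Patient(age=42, language="en", esi_level=2, acute_condition=False)))  # False
```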

Collection of Social Needs and Implementation Measures

The chatbot for social needs screening provides relevant community resources to ED patients (Figs. 1 and 2). Participants interacted with the chatbot on an iPad and could optionally use disposable headphones. The screening was available in English and Spanish. Participants used the chatbot to answer 16 questions about their social needs, adapted from the Accountable Health Communities Health-Related Social Needs (AHC HRSN) Screening Tool, 63 the Benefits Eligibility Screening Tool (BEST), 64 and the Los Angeles County Health Agency (LACHA) screening guide (Johnson 2019 65; see Supplementary Appendix: Screening Questionnaire, available in the online version).

Fig. 1.

Screenshots of user interaction with HarborBot for social needs screening.

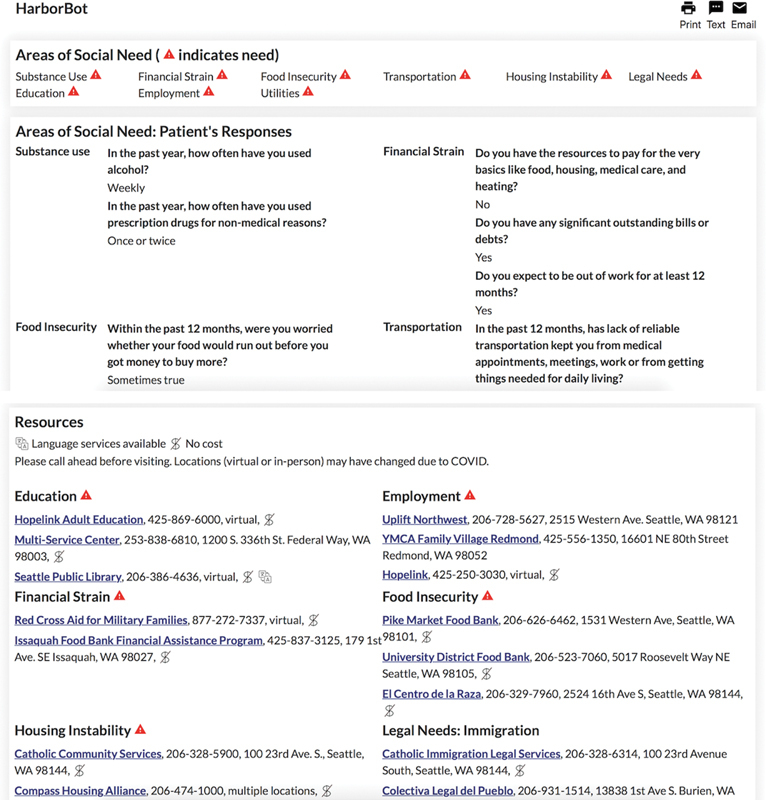

Fig. 2.

Screenshots of chatbot screening output with user responses and list of tailored community resources.

At the end of the screening, the chatbot asked participants to rate three implementation outcome measures 60 to assess the acceptability, feasibility, and appropriateness of the chatbot on a Likert scale from 1 “completely disagree” to 5 “completely agree.” Using these measures, “acceptability” assesses the perception that a given innovation is agreeable or satisfactory, “appropriateness” assesses the perceived compatibility of the innovation for a given issue and practice setting, and “feasibility” assesses the extent to which the innovation can be successfully used or carried out. 60

Participants were also asked six demographic questions about their age, gender, race/ethnicity, education, relationship status, and insurance status. Finally, participants were asked whether they would be willing to take part in a follow-up interview; those with a working phone number were eligible. Upon completing the screening, each participant was handed a printed copy of their responses and a list of matching community resources (Fig. 2) and was encouraged to share their responses with their ED care team. Participants could also choose to send their responses and resource list to themselves by email or text message.

Chatbot Design

HarborBot is a web application accessible on mobile phones and desktop computers (see Supplementary Appendix: Chatbot Design, available in the online version). The chatbot interacts with users in a scripted dialogue through text chat and voice (output only). The front-end web application is hosted on Google Cloud, is developed using HTML, CSS, and JavaScript, and uses Python to communicate with multiple API services. Fig. 1 shows the graphical user interface for HarborBot. We used BotUI (https://botui.org/), a JavaScript framework, to build the chatbot user interface, and a REDCap database 66 to store user responses. After screening is completed, social needs are highlighted in red and the relevant resources are moved to the top of the page; all resources remain listed so that participants have access to them regardless of whether they chose to disclose their social needs. Fig. 2 shows the graphical user interface for HarborBot's response summary and resource page. We compiled a list of local community resource organizations to share with users based on the resources distributed by social workers at the Harborview Medical Center ED. These resources were drawn from the Emerald City Resource Guide 67 and Washington 211, 68 online databases that help connect people to community resources in Seattle and Washington state. We followed the scoring instructions of the BEST, LACHA, and AHC HRSN Screening Tool to determine which responses constitute a social need in each domain; this determination sets whether the corresponding resource is highlighted on the page.
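The resource-page behavior described above (flagged needs first, but every resource still listed) can be sketched as a stable sort. This is a minimal Python illustration under stated assumptions, not HarborBot's code: the `Resource` type, the domain labels, and the resource names are hypothetical, the per-domain scoring rules from the BEST, LACHA, and AHC HRSN tools are abstracted into a precomputed set of flagged domains, and a leading `*` stands in for on-page highlighting.

```python
from dataclasses import dataclass

@dataclass
class Resource:
    name: str
    domain: str  # hypothetical social needs domain label, e.g. "food"

def order_resources(resources, flagged_domains):
    """Return (resource, highlighted) pairs with every resource kept.

    Resources whose domain was flagged as a social need sort first. Python's
    sort is stable, so each group keeps its original list order.
    """
    ordered = sorted(resources, key=lambda r: r.domain not in flagged_domains)
    return [(r, r.domain in flagged_domains) for r in ordered]

# Hypothetical example: the participant's answers flagged only food insecurity.
resources = [
    Resource("Housing help line", "housing"),
    Resource("Neighborhood food bank", "food"),
    Resource("Utility assistance program", "utilities"),
]
for resource, highlighted in order_resources(resources, {"food"}):
    print(("* " if highlighted else "  ") + resource.name)
```

Keeping unflagged resources in the output mirrors the stated design goal: participants still see every resource even if they chose not to disclose a need.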

Follow-up Interview

We interviewed participants about their experience using the chatbot. Participants were contacted by email or text message, accompanied by a phone call, 2 to 4 days after their ED visit. The follow-up interview was either conducted at the time of contact or scheduled for a later date. Interviews were conducted by phone and audio-recorded, except for one participant who did not consent to recording. The semi-structured interviews asked participants whether they perceived the chatbot as an acceptable, feasible, and appropriate way of screening (see Supplementary Appendix: Qualitative Interview, available in the online version). We also asked participants how they used the resource list, how they currently search for and access community resources, and how a chatbot could facilitate this process. We administered the Health Literacy Single-Item Literacy Screener (SILS), a single question that identifies adults with limited reading ability, to interview participants. 69 Participants were offered a USD 30 gift card after the interview.

Data Analysis

We used descriptive statistics to analyze participant demographics (Table 1) and implementation ratings (Table 2). Analyses were performed using Microsoft Excel (version 16.43) and RStudio (version 2022.12.0+353). We followed an inductive-deductive thematic approach 70 to analyze the interview data. Three team members performed inductive coding on an initial set of three interviews, and four team members then clustered the codes to develop a codebook. We incorporated concepts from the Consolidated Framework for Implementation Research (CFIR) 71 to draw on established implementation theory. Once all four team members agreed on the codes, we applied the codebook to the remaining interviews.

Table 1. Study participant demographics.

| Characteristic | Screened participants (n = 350), n (%) | Interview participants (n = 22), n (%) |
|---|---|---|
| Age (y) | | |
| 18–25 | 29 (8.3) | 2 (9.1) |
| 26–35 | 83 (23.7) | 6 (27.3) |
| 36–45 | 57 (16.3) | 4 (18.2) |
| 46–55 | 36 (10.3) | 3 (13.6) |
| 56–65 | 23 (6.6) | 2 (9.1) |
| ≥ 66 | 20 (5.7) | 1 (4.5) |
| Prefer not to answer | 102 (29.1) | 4 (18.2) |
| Gender | | |
| Male | 187 (53.4) | 11 (50.0) |
| Female | 135 (38.6) | 11 (50.0) |
| Additional gender category | 16 (4.6) | 0 (0.0) |
| Prefer not to answer | 12 (3.4) | 0 (0.0) |
| Racial/ethnic background | | |
| White | 134 (38.3) | 11 (50.0) |
| Black, African American or African | 78 (22.3) | 4 (18.2) |
| Latin American, Central American, Mexican or Mexican American, Hispanic or Chicano | 53 (15.1) | 2 (9.1) |
| More than one race | 38 (10.9) | 2 (9.1) |
| Asian: Indian, Chinese, Filipino, Japanese, Korean, Vietnamese, Other | 15 (4.3) | 2 (9.1) |
| Other | 14 (4.0) | 0 (0.0) |
| Prefer not to answer | 18 (5.1) | 1 (4.5) |
| Education | | |
| Some college | 83 (23.7) | 5 (22.7) |
| High school graduate | 72 (20.6) | 4 (18.2) |
| Bachelor's degree | 38 (10.9) | 6 (27.3) |
| Less than high school | 36 (10.3) | 2 (9.1) |
| Some high school | 31 (8.9) | 2 (9.1) |
| Graduate school | 31 (8.9) | 1 (4.5) |
| Associate degree | 28 (8.0) | 2 (9.1) |
| Prefer not to answer | 31 (8.9) | 0 (0.0) |
| Relationship status | | |
| Single/never married | 158 (45.1) | 11 (50.0) |
| Married | 62 (17.7) | 1 (4.5) |
| Divorced | 47 (13.4) | 7 (31.8) |
| Committed relationship/partnered | 30 (8.6) | 3 (13.6) |
| Separated | 15 (4.3) | 0 (0.0) |
| Widowed | 8 (2.3) | 0 (0.0) |
| Prefer not to answer | 30 (8.6) | 0 (0.0) |
| Health insurance | | |
| Medicaid | 90 (25.7) | 5 (22.7) |
| No health insurance | 56 (16.0) | 2 (9.1) |
| Employer provided | 53 (15.1) | 3 (13.6) |
| Medicare | 53 (15.1) | 5 (22.7) |
| Don't know | 28 (8.0) | 4 (18.2) |
| Other | 24 (6.9) | 2 (9.1) |
| Charity care | 8 (2.3) | 0 (0.0) |
| Private health insurance | 8 (2.3) | 0 (0.0) |
| COBRA | 1 (0.3) | 1 (4.5) |
| Prefer not to answer | 29 (8.3) | 0 (0.0) |
| Health Literacy Single-Item Literacy Screener (SILS) | | |
| 1: never | – | 10 (45.5) |
| 2: rarely | – | 9 (40.9) |
| 3: sometimes | – | 3 (13.6) |
| 4: often | – | 0 (0.0) |
| 5: always | – | 0 (0.0) |

Table 2. Ratings of implementation measures.

| Construct | Implementation outcome measure (item) | Respondents, n (% of 350) | Mean rating on 1–5 Likert scale (SD) | Median rating on 1–5 Likert scale (IQR) |
|---|---|---|---|---|
| Acceptability | I like the use of this chatbot to answer these questions | 297 (84.9) | 3.93 (0.98) | 4 (1) |
| Feasibility | Using this chatbot to answer these questions seems easy to use | 301 (86.0) | 4.20 (0.86) | 4 (1) |
| Appropriateness | Using this chatbot to answer these questions seems suitable | 302 (86.3) | 4.10 (0.86) | 4 (1) |

Abbreviations: IQR, interquartile range; SD, standard deviation.
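The summary statistics in Table 2 can be recomputed from the response counts in the “All participants” rows of Tables 3 to 5. The Python sketch below is an illustrative check, not the authors' analysis script (which used Excel and RStudio). It assumes R's default quantile definition (type 7), which Python's `statistics.quantiles` provides as `method="inclusive"`. Under these assumptions, the published standard deviations match the population formula (`pstdev`); the sample formula (`stdev`) gives 0.99 rather than 0.98 for acceptability.

```python
import statistics

# Response counts for ratings 1..5 (completely disagree ... completely agree),
# copied from the "All participants" rows of Tables 3-5.
COUNTS = {
    "Acceptability": [13, 8, 53, 136, 87],
    "Feasibility": [10, 4, 16, 158, 113],
    "Appropriateness": [6, 9, 35, 150, 102],
}

def expand(counts):
    """Turn per-level counts into the list of individual ratings they represent."""
    return [level for level, c in enumerate(counts, start=1) for _ in range(c)]

def summarize(counts):
    ratings = expand(counts)
    # Quartiles under the assumed R type 7 definition.
    q1, _, q3 = statistics.quantiles(ratings, n=4, method="inclusive")
    return {
        "n": len(ratings),
        "mean": round(statistics.mean(ratings), 2),
        "sd_population": round(statistics.pstdev(ratings), 2),
        "sd_sample": round(statistics.stdev(ratings), 2),
        "median": statistics.median(ratings),
        "iqr": q3 - q1,
    }

for measure, counts in COUNTS.items():
    print(measure, summarize(counts))
```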

Transcript coding was divided among the four team members; in each iteration, team members coded one or two different transcripts. Questions or concerns about particular excerpts were discussed in research meetings. Each team member reviewed the transcripts, and disagreements were discussed until consensus was reached. We then recoded the initial interviews with the finalized codebook. We continued discussions across all interviews to identify themes and patterns that explain the ratings and provide additional insights.

Results

Participant Characteristics

A total of 832 patients were approached, and 410 (49%) agreed to participate in the study. Of those who agreed, 353 completed the screening; 3 of these were younger than 18 years and were excluded, leaving 350 participants who consented and completed the screening. The participants who completed screening (“screened participants”) ranged in age from 18 to 90 years (mean 40.7, standard deviation [SD] = 14.7), were diverse in race/ethnicity, education, and insurance status, and nearly half were single or never married (Table 1). Of these participants, 329 completed the screening in English and 21 in Spanish. The screening took 10.92 minutes on average (SD = 7.50).

Of the 350 participants, 22 completed follow-up interviews. We conducted follow-up phone interviews and qualitative analysis concurrently until reaching thematic saturation. 72 Interview participants (P1–P22) ranged in age from 18 to 68 years (mean 40.6, SD = 14.4). Their demographics largely resembled those of the screened sample, although White participants were overrepresented and participants who had completed some college or less were underrepresented. Three interview participants (13.6%) reported that they “sometimes” need help reading written health material. Interviews lasted 42 minutes on average.

RQ1: Patient Ratings of the Chatbot Implementation: Acceptability, Feasibility, and Appropriateness

Participants rated the chatbot as an acceptable, feasible, and appropriate means of social needs screening, with mean ratings of 3.93 (SD = 0.98), 4.20 (SD = 0.86), and 4.10 (SD = 0.86), respectively (Table 2). Fig. 3 shows the distribution of Likert ratings for acceptability, feasibility, and appropriateness. Most participants agreed that they liked using the chatbot and that it was easy to use and appropriate, although acceptability ratings were more dispersed than ratings for the other two measures (Fig. 3). Figs. 4 to 6 and Tables 3 to 5 show the rating distributions by age, ethnicity, and education. Perceptions of acceptability differed somewhat across age groups, ethnicities, and education levels. Among participants aged 18 to 25, 88.9% agreed or completely agreed that the chatbot is acceptable, compared with 65.0% of participants aged 66 or older. Similarly, 79.7% of Black, African American, or African participants agreed or completely agreed that the chatbot is acceptable, compared with 53.8% of Asian participants and 61.5% of Other participants (who identified as Native American, Pacific Islander, or Middle Eastern). Participants whose highest education was less than high school, a high school diploma, or some college agreed or completely agreed that the chatbot is acceptable more often (78.1, 77.3, and 79.5%, respectively) than participants who attended graduate school or completed some high school (66.7% each).
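The subgroup percentages above are top-two-box proportions: the share of respondents choosing “agree” or “completely agree.” The Python sketch below recomputes several of them from the acceptability counts in Table 3, as an illustration rather than the authors' code; the shortened group labels are our own. Computing from raw counts rather than summing rounded column percentages can shift the last digit. Printing each denominator alongside the percentage also shows how small some subgroups are (for example, 13 Asian participants), which matters when comparing them.

```python
# Acceptability counts for ratings 1..5 (completely disagree ... completely agree),
# copied from Table 3. Group labels are shortened for readability.
ACCEPTABILITY = {
    "Age 18-25": [0, 0, 3, 16, 8],
    "Age >= 66": [1, 0, 6, 7, 6],
    "Black, African American or African": [5, 1, 7, 29, 22],
    "Asian": [1, 2, 3, 2, 5],
    "Other race/ethnicity": [1, 1, 3, 6, 2],
    "Less than high school": [3, 0, 4, 11, 14],
    "Graduate school": [0, 2, 7, 11, 7],
}

def top_two_box(counts):
    """Percentage of respondents rating 4 ("agree") or 5 ("completely agree")."""
    return 100 * (counts[3] + counts[4]) / sum(counts)

for group, counts in ACCEPTABILITY.items():
    print(f"{group}: {top_two_box(counts):.1f}% of n = {sum(counts)}")
```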

Fig. 3.

Diverging stacked bar chart of Likert scale ratings for acceptability, feasibility, and appropriateness, accompanied by mean and standard deviation for each measure. The percentage of positive responses (agree and completely agree) is stacked on the right and the percentage of negative responses (disagree and completely disagree) is stacked on the left, with neutral (neither agree nor disagree) in the center.

Fig. 4.

Diverging stacked bar charts of Likert scale ratings for acceptability, feasibility, and appropriateness with response distributions by age. The mean and standard deviation for each group are shown on the right.

Fig. 6.

Diverging stacked bar charts of Likert scale ratings for acceptability, feasibility, and appropriateness with response distributions by education. The mean and standard deviation for each group are shown on the right.

Table 3. Response distributions of acceptability ratings by age, ethnicity, and education.

| Acceptability | Completely disagree, n (%) | Disagree, n (%) | Neither agree nor disagree, n (%) | Agree, n (%) | Completely agree, n (%) | Mean rating on 1–5 Likert scale (SD) |
|---|---|---|---|---|---|---|
| All participants | 13 (4.4) | 8 (2.7) | 53 (17.9) | 136 (45.8) | 87 (29.3) | 3.93 (0.98) |
| Age (y) | | | | | | |
| 18–25 | 0 (0.0) | 0 (0.0) | 3 (11.1) | 16 (59.3) | 8 (29.6) | 4.19 (0.61) |
| 26–35 | 4 (5.2) | 2 (2.6) | 16 (20.8) | 35 (45.5) | 20 (26.0) | 3.84 (1.01) |
| 36–45 | 1 (1.9) | 4 (7.7) | 8 (15.4) | 24 (46.2) | 15 (28.9) | 3.92 (0.96) |
| 46–55 | 3 (9.7) | 0 (0.0) | 4 (12.9) | 11 (35.5) | 13 (41.9) | 4.0 (1.19) |
| 56–65 | 1 (5.0) | 2 (10.0) | 2 (10.0) | 11 (55.0) | 4 (20.0) | 3.75 (1.04) |
| ≥ 66 | 1 (5.0) | 0 (0.0) | 6 (30.0) | 7 (35.0) | 6 (30.0) | 3.85 (1.01) |
| Prefer not to answer | 3 (4.3) | 0 (0.0) | 14 (20.0) | 32 (45.7) | 21 (30.0) | 3.97 (0.94) |
| Racial/ethnic background | | | | | | |
| White | 3 (2.5) | 1 (0.8) | 24 (20.2) | 57 (47.9) | 34 (28.6) | 3.99 (0.86) |
| Black, African American or African | 5 (7.8) | 1 (1.6) | 7 (10.9) | 29 (45.3) | 22 (34.4) | 3.97 (1.10) |
| Latin American, Central American, Mexican or Mexican American, Hispanic or Chicano | 3 (5.9) | 2 (3.9) | 7 (13.7) | 25 (49.0) | 14 (27.5) | 3.88 (1.04) |
| More than one race | 0 (0.0) | 1 (3.3) | 6 (20.0) | 14 (46.7) | 9 (30.0) | 4.03 (0.80) |
| Asian: Indian, Chinese, Filipino, Japanese, Korean, Vietnamese, Other | 1 (7.7) | 2 (15.4) | 3 (23.1) | 2 (15.4) | 5 (38.5) | 3.62 (1.33) |
| Other | 1 (7.7) | 1 (7.7) | 3 (23.1) | 6 (46.2) | 2 (15.4) | 3.54 (1.08) |
| Prefer not to answer | 0 (0.0) | 0 (0.0) | 3 (42.9) | 3 (42.9) | 1 (14.3) | 3.71 (0.70) |
| Education | | | | | | |
| Some college | 2 (2.7) | 0 (0.0) | 13 (17.8) | 38 (52.1) | 20 (27.4) | 4.01 (0.84) |
| High school graduate | 3 (4.6) | 2 (3.0) | 10 (15.2) | 32 (48.5) | 19 (28.8) | 3.94 (0.98) |
| Bachelor's degree | 1 (2.6) | 0 (0.0) | 11 (29.0) | 17 (44.7) | 9 (23.7) | 3.87 (0.86) |
| Less than high school | 3 (9.4) | 0 (0.0) | 4 (12.5) | 11 (34.4) | 14 (43.8) | 4.03 (1.19) |
| Some high school | 4 (14.8) | 3 (11.1) | 2 (7.4) | 10 (37.0) | 8 (29.6) | 3.56 (1.40) |
| Graduate school | 0 (0.0) | 2 (7.4) | 7 (25.9) | 11 (40.7) | 7 (25.9) | 3.85 (0.89) |
| Associate degree | 0 (0.0) | 1 (3.6) | 6 (21.4) | 12 (42.9) | 9 (32.1) | 4.04 (0.82) |
| Prefer not to answer | 0 (0.0) | 0 (0.0) | 0 (0.0) | 5 (83.3) | 1 (16.7) | 4.17 (0.37) |

Abbreviation: SD, standard deviation.

Table 5. Response distributions of appropriateness ratings by age, ethnicity, and education.

| Appropriateness | Completely disagree, n (%) | Disagree, n (%) | Neither agree nor disagree, n (%) | Agree, n (%) | Completely agree, n (%) | Mean rating on 1–5 Likert scale (SD) |
|---|---|---|---|---|---|---|
| All participants | 6 (2.0) | 9 (3.0) | 35 (11.6) | 150 (49.7) | 102 (33.8) | 4.10 (0.86) |
| Age (y) | | | | | | |
| 18–25 | 0 (0.0) | 0 (0.0) | 4 (14.8) | 15 (55.6) | 8 (29.6) | 4.15 (0.65) |
| 26–35 | 1 (1.3) | 1 (1.3) | 8 (10.3) | 45 (57.7) | 23 (29.5) | 4.13 (0.74) |
| 36–45 | 2 (3.6) | 2 (3.6) | 4 (7.3) | 26 (47.3) | 21 (38.2) | 4.13 (0.95) |
| 46–55 | 0 (0.0) | 2 (6.5) | 2 (6.5) | 14 (45.2) | 13 (41.9) | 4.23 (0.83) |
| 56–65 | 0 (0.0) | 2 (10.5) | 0 (0.0) | 12 (63.2) | 5 (26.3) | 4.05 (0.83) |
| ≥ 66 | 0 (0.0) | 1 (5.0) | 5 (25.0) | 5 (25.0) | 9 (45.0) | 4.10 (0.94) |
| Prefer not to answer | 3 (4.2) | 1 (1.4) | 12 (16.7) | 33 (45.8) | 23 (31.9) | 4.0 (0.96) |
| Racial/ethnic background | | | | | | |
| White | 1 (0.9) | 2 (1.7) | 15 (12.7) | 56 (47.5) | 44 (37.3) | 4.19 (0.78) |
| Black, African American or African | 3 (4.6) | 4 (6.2) | 4 (6.2) | 31 (47.7) | 23 (35.4) | 4.03 (1.04) |
| Latin American, Central American, Mexican or Mexican American, Hispanic or Chicano | 1 (1.9) | 2 (3.9) | 5 (9.6) | 29 (55.8) | 15 (28.9) | 4.06 (0.84) |
| More than one race | 0 (0.0) | 1 (3.1) | 3 (9.4) | 15 (46.9) | 13 (40.6) | 4.25 (0.75) |
| Asian: Indian, Chinese, Filipino, Japanese, Korean, Vietnamese, Other | 0 (0.0) | 0 (0.0) | 3 (23.1) | 5 (38.5) | 5 (38.5) | 4.15 (0.77) |
| Other | 1 (7.1) | 0 (0.0) | 4 (28.6) | 8 (57.1) | 1 (7.1) | 3.57 (0.90) |
| Prefer not to answer | 0 (0.0) | 0 (0.0) | 1 (12.5) | 6 (75.0) | 1 (12.5) | 4.0 (0.50) |
| Education | | | | | | |
| Some college | 2 (2.7) | 2 (2.7) | 5 (6.7) | 38 (50.7) | 28 (37.3) | 4.17 (0.87) |
| High school graduate | 2 (3.0) | 1 (1.5) | 5 (7.6) | 39 (59.1) | 19 (28.8) | 4.09 (0.83) |
| Bachelor's degree | 0 (0.0) | 1 (2.6) | 8 (21.1) | 19 (50.0) | 10 (26.3) | 4.0 (0.76) |
| Less than high school | 0 (0.0) | 1 (3.0) | 5 (15.2) | 10 (30.3) | 17 (51.5) | 4.30 (0.83) |
| Some high school | 1 (3.5) | 2 (6.9) | 1 (3.5) | 17 (58.6) | 8 (27.6) | 4.0 (0.95) |
| Graduate school | 1 (3.6) | 0 (0.0) | 6 (21.4) | 11 (39.3) | 10 (35.7) | 4.04 (0.94) |
| Associate degree | 0 (0.0) | 1 (3.7) | 4 (14.8) | 12 (44.4) | 10 (37.0) | 4.15 (0.80) |
| Prefer not to answer | 0 (0.0) | 1 (16.7) | 1 (16.7) | 4 (66.7) | 0 (0.0) | 3.50 (0.76) |

Abbreviation: SD, standard deviation.

Fig. 5.

Diverging stacked bar charts of Likert scale ratings for acceptability, feasibility, and appropriateness with response distributions by ethnicity. The mean and standard deviation for each group are shown on the right.

Table 4. Response distributions of feasibility ratings by age, ethnicity, and education.

| Feasibility | Completely disagree, n (%) | Disagree, n (%) | Neither agree nor disagree, n (%) | Agree, n (%) | Completely agree, n (%) | Mean rating on 1–5 Likert scale (SD) |
|---|---|---|---|---|---|---|
| All participants | 10 (3.3) | 4 (1.3) | 16 (5.3) | 158 (52.5) | 113 (37.5) | 4.20 (0.86) |
| Age (y) | | | | | | |
| 18–25 | 0 (0.0) | 0 (0.0) | 1 (3.7) | 14 (51.9) | 12 (44.4) | 4.41 (0.56) |
| 26–35 | 1 (1.3) | 0 (0.0) | 3 (3.8) | 45 (57.0) | 30 (38.0) | 4.30 (0.66) |
| 36–45 | 2 (3.8) | 1 (1.9) | 2 (3.8) | 26 (49.1) | 22 (41.5) | 4.23 (0.90) |
| 46–55 | 0 (0.0) | 0 (0.0) | 2 (6.3) | 15 (46.9) | 15 (46.9) | 4.41 (0.61) |
| 56–65 | 1 (5.6) | 1 (5.6) | 1 (5.6) | 10 (55.6) | 5 (27.8) | 3.94 (1.03) |
| ≥ 66 | 1 (5.0) | 1 (5.0) | 1 (5.0) | 10 (50.0) | 7 (35.0) | 4.05 (1.02) |
| Prefer not to answer | 5 (6.9) | 1 (1.4) | 6 (8.3) | 38 (52.8) | 22 (30.6) | 3.99 (1.03) |
| Racial/ethnic background | | | | | | |
| White | 2 (1.7) | 2 (1.7) | 8 (6.7) | 64 (53.3) | 44 (36.7) | 4.22 (0.78) |
| Black, African American or African | 5 (7.7) | 0 (0.0) | 3 (4.6) | 29 (44.6) | 28 (43.1) | 4.15 (1.07) |
| Latin American, Central American, Mexican or Mexican American, Hispanic or Chicano | 2 (4.0) | 1 (2.0) | 1 (2.0) | 28 (56.0) | 18 (36.0) | 4.18 (0.89) |
| More than one race | 0 (0.0) | 1 (3.2) | 2 (6.5) | 15 (48.4) | 13 (41.9) | 4.29 (0.73) |
| Asian: Indian, Chinese, Filipino, Japanese, Korean, Vietnamese, Other | 0 (0.0) | 0 (0.0) | 0 (0.0) | 8 (61.5) | 5 (38.5) | 4.38 (0.49) |
| Other | 1 (7.7) | 0 (0.0) | 2 (15.4) | 7 (53.9) | 3 (23.1) | 3.85 (1.03) |
| Prefer not to answer | 0 (0.0) | 0 (0.0) | 0 (0.0) | 7 (77.8) | 2 (22.2) | 4.22 (0.42) |
| Education | | | | | | |
| Some college | 1 (1.3) | 0 (0.0) | 3 (4.0) | 42 (56.0) | 29 (38.7) | 4.31 (0.67) |
| High school graduate | 4 (6.0) | 0 (0.0) | 4 (6.0) | 34 (50.8) | 25 (37.3) | 4.13 (0.98) |
| Bachelor's degree | 0 (0.0) | 2 (5.3) | 2 (5.3) | 19 (50.0) | 15 (39.5) | 4.24 (0.78) |
| Less than high school | 0 (0.0) | 0 (0.0) | 3 (9.4) | 13 (40.6) | 16 (50.0) | 4.41 (0.65) |
| Some high school | 1 (3.6) | 2 (7.1) | 0 (0.0) | 16 (57.1) | 9 (32.1) | 4.07 (0.96) |
| Graduate school | 2 (7.1) | 0 (0.0) | 2 (7.1) | 15 (53.6) | 9 (32.1) | 4.04 (1.02) |
| Associate degree | 0 (0.0) | 0 (0.0) | 2 (7.4) | 15 (55.6) | 10 (37.0) | 4.30 (0.60) |
| Prefer not to answer | 2 (33.3) | 0 (0.0) | 0 (0.0) | 4 (66.7) | 0 (0.0) | 3.0 (1.41) |

Abbreviation: SD, standard deviation.

RQ2: Patients' Perceptions of Using the Chatbot for Social Needs Screening

Analysis of the interviews identified six qualitative themes describing how participants perceived the chatbot as acceptable, feasible, and appropriate, as well as potential barriers to its use.

Acceptability

Participants were satisfied that the chatbot provided a responsive interaction that acknowledged their answers and replied with personalized resources. Additionally, they liked how the chatbot afforded privacy during information disclosure, but raised questions about the security of their data. Participants saw the chatbot screening as an important first step in fostering a sense of care at the ED, while noting the importance of following up with patients to ensure they access resources.

Chatbot Provides Responsive, Engaging Interaction

Overall, participants found that the chatbot was responsive and engaged them during screening. Participants liked that the chatbot maintained the responsiveness of a human interaction and guided them through each question (Table 6). Participants also liked that the chatbot provided personalized recommendations for community resources, avoiding information overload from irrelevant recommendations. They appreciated that the conversation was brief and not repetitive, unlike past surveys that asked many similar questions about the same type of social need. P13, who was looking for food assistance, found that the resources were tailored to their social needs (Table 6).

Table 6. Example quotes from the qualitative analysis.

| Theme | Theme description | Example quotes |
|---|---|---|
| Acceptability | Chatbot provides responsive, engaging interaction | “If you put yes or no, depending on what you put, you have another answer on the chatbot. If [the screening] was just a couple of questions on paper, then you wouldn't receive that reply” (P17). “It seems more personal because it literally narrows down and takes out what you said yes to, what you said no to. Then it only gives you information on what you need help with, instead of giving you a load of information on certain things that [are not relevant to you]...say, if you're not an alcoholic, it's not giving you a number to AA … If you need a food bank, it's giving you a number to food banks, it's giving you a number to donation places” (P13). |
| Acceptability | Chatbot helped preserve privacy during information disclosure, but prompted questions about data sharing and security | “I'd rather answer my questions and everything with the chatbot. That way [other patients are] not hearing what's going on with me as far as money. In fact, a lot of the things that I don't like is I have to repeat, like give them my address, my phone number, in front of these strangers who you don't know...I don't want to give out that kind of information out loud...since we were online and the chatbot actually submitted the information that I sent out, that was actually probably one of the things that I felt safest with at that time” (P6). “There's a couple times where I was in the ED by myself. I've been medicated with morphine or something, and I have been out there and waiting for a cab, and this person would sit next to me to try to grab whatever was in my bag” (P6). “I have to try to be careful what app I'm using or whatever…because there are predators out there that will steal your identity” (P10). |
| Feasibility | Chatbot is easy to use and understand | “The instructions were pretty self-explanatory. You could understand the instructions, like when it was dragging you to the next page and what to do and all that. So that was pretty cool that they broke everything down for you as you went along… I didn't have to ask [the research assistant] anything the whole time I did it” (P5). |
| Feasibility | Chatbot screening is quick to complete | “I think you have some free time…[compared to] a survey that comes through email, I know I get them all the time and almost never filled them out. So the way in which the survey is administered [via chatbot], I think it's a good way to get more responses” (P16). |
| Appropriateness | Chatbot screening is appropriate in the ED context | “I think in the setting of the hospital that it was easier to use the chatbot than it would be to find a quiet place to sit down where you could have a discussion with a person” (P1). “I don't feel comfortable talking about my financial situation with my doctor...They can be helpful if they provide you the information there, or they redirect you…Usually there's no conversation to bring it up…Well, they're just telling you what to do to make it better, or they're going to prescribe you something. So sometimes that conversation doesn't go along with the housing” (P17). “I don't want to tell them that I'm homeless because I feel like I'm being treated differently as opposed if I just tell them, oh, okay, well I live over here… The whole issue, I think just came down to, they found out I was homeless. I was sleeping outside. The doctor expressed that they didn't want to do the surgery because I didn't have a sterile place to heal. I said, well, that's what you guys are here for. You have respite beds that you provide for people that need a place to heal. And so the answer that I got was, well, we can't reserve respite beds” (P5). |
| Appropriateness | Screening is the first step in fostering a sense of care | “I just feel like the more access and the more ways of making people get the resources the better. I don't feel like there should just be one way of getting resources out to people…considering a lot of the day services will give you booklets with resources. But the problem with that was the resources wouldn't be updated, so a lot of it was outdated. A lot of places you would call were closed down. They wasn't operating no more. So the booklet was useless at the end of the day” (P5). “It's good to have an actual human being there...telling me that they want to get you help or they can get you assistance, and then they stay there and you answer the questions that they're asking you. It feels like a more believable situation...it would be nice to maybe have someone contact you the day after you get out or a couple of days after you get out and go over what you filled out instead of just an automated voice” (P19). “It was easy to answer because it had preloaded answers…but, [you] can't elaborate too much with a chatbot…It asked, 'Are you or somebody in your household experiencing hardship?' Then, I said yes. Then, it asks how, and I said income, but I wasn't able to type in more than income…I wanted to say it was his income, not mine. That's the issue right now because we're sitting here waiting for unemployment” (P6). |

Chatbot Helped Preserve Privacy during Information Disclosure, but Prompted Questions about Data Sharing and Security

Participants who did not want to be overheard in the ED valued the chatbot. They liked that they could input their responses instead of speaking them out loud ( Table 6 ). There was a sense that the ED was not a secure place to discuss personal information, and the chatbot spared them from answering questions in an open space. P6 was worried not only about being overheard but also about other ED visitors who might take and read their responses if they were on paper ( Table 6 ). Privacy during information disclosure was very important to participants, both to avoid direct judgment and to prevent their information from being stolen. Participants wanted their information to be stored securely in the EHR after the chatbot interaction and assumed that it would not be shared with unauthorized individuals. However, some participants were cautious about what information to share with the chatbot because they worried it could be stolen. P10 was hesitant to share personal information via the chatbot and explained that they try to be careful no matter what application they use ( Table 6 ). Together, these examples show that participants found the chatbot's privacy-preserving aspects acceptable, such as not having to speak responses aloud and the assurance that responses would not be shared inappropriately. However, data security was a concern that reduced acceptability.

Feasibility

Participants found that the chatbot was a feasible method of social needs screening in the ED. They found the chatbot easy to use and understand, and quick to complete.

Chatbot is Easy to Use and Understand

Participants found the chatbot easy to use, which facilitated successful completion of the screening. Consistent with their high feasibility ratings, participants said they could easily understand and answer the questions ( Table 6 ). P13 agreed that the chatbot was easy to use and compared the experience to playing a computer game. P5 liked using the tablet and selecting multiple-choice options rather than typing, because their hand was broken. P10 described themselves as less familiar with technology but still found the chatbot easy to use: “I don't dislike it, but I'm just used to doing regular straight paper, not a tablet. I'm not there yet…I'm not knowledgeable like some other people” (P10).

Chatbot Screening is Quick to Complete

When asked how easy the chatbot was to use, participants described it as feasible because it could be completed quickly. The screening did not take much time: “It was faster...more convenient maybe than talking to the representative directly” (P3). The chatbot was also direct and easy to understand in a way that people may not be: “You just answer Yes or No, it's not that difficult” (P2). P16 thought it was an efficient and effective way to collect responses, since they had free time in the ED waiting room and would not be motivated to complete a survey sent via email ( Table 6 ). Overall, participants reported that they did not mind answering questions to pass the time and that the chatbot took only a short time to complete.

Appropriateness

Participants perceived the chatbot as an appropriate technology for the setting. Participants were comfortable sharing their social needs with the chatbot to avoid attention from other ED visitors and social judgment present in face-to-face screening.

Chatbot Screening is Appropriate in the ED Context

P1 found that the ED was busy and that the chatbot was compatible with this context ( Table 6 ). Most participants did not feel comfortable calling attention to themselves in the ED, and using the chatbot on the tablet seemed like a casual, normal activity that everyone was taking part in. Participants cited fear of social judgment as a reason they preferred the chatbot: “You might open up to a chatbot and not a person…[there is] a lot of shame involved in some issues” (P4). P4 was searching for stable housing options and had spent the last 15 years learning about homelessness. Interacting with a chatbot can minimize the social judgment that might occur when talking with a health care worker “because you don't have to deal with its [the chatbot's] attitude” (P6).

Participants also differed in how comfortable they were sharing information with health care providers. They may be uncomfortable or embarrassed to talk with a health care provider about social needs, especially a provider they do not know. While P17 described how health care providers can be helpful by providing information about social needs and redirecting patients to resources, they were not comfortable bringing up their own social needs with their provider ( Table 6 ). P21 even hesitated to disclose information via the chatbot, such as their ability to pay for utilities, because they felt it might change the care they received from ED providers. Others described receiving lower-quality care at the ED because of their social needs in the past and did not want that to recur ( Table 6 ).

Screening is the First Step in Fostering a Sense of Care

The chatbot was perceived as a valuable first step in learning about social resources. P11 had been homeless on and off for over 20 years and explained that screening for social needs was important because “a lot of people don't know where to look…[and] don't have access to the internet, so I think the way it [chatbot] was brought to me [on a tablet] in the hospital was an awesome thing.” Even participants who knew where to look saw the chatbot as another way of accessing information, particularly since the resources they already knew about might not be meeting their needs ( Table 6 ). All interview participants said they would use the chatbot in the future, and most were open to tools that helped them discover resources.

However, effective follow-up on patients' social needs is necessary for patients to feel cared for in the ED context. Participants said that screening should feel personal and serve a purpose beyond collecting information. P19 felt the chatbot did not provide personal benefits: “It was just a way of filling out the survey...It didn't benefit anything really.” P19 wanted a person in the loop to ensure that they would receive help ( Table 6 ). Patients may also want to elaborate on specific answers to make sure they get the right help ( Table 6 ). For example, one participant tried to hand the printed output to their provider but kept being redirected to the next staff person until they were finally able to share their printed screening results with a social worker.

Participants rarely used the printed responses and resource list to start a conversation with their provider. Some participants were recurring patients who felt that ED providers are very busy and did not want to bother them by raising their social needs. Although few participants expressed concerns about sharing social needs through a chatbot in the ED, the concerns and preferences described above might discourage some patients from sharing their social needs and engaging early with providers. To make a chatbot more appropriate for social needs screening in the ED, patients need secure and reliable pathways for following up on resources.

Discussion

Our findings indicate that patients perceived the chatbot implementation at the ED as a feasible, acceptable, and appropriate form of outreach that could increase uptake of screening. The ED has an explicit mission statement to care for vulnerable populations, and participants recognized the ED as a place where many individuals with social needs seek assistance and could take part in screening. Patients who may be more in need of resources may also be more receptive to chatbot screening; for example, patients who had not completed high school may find the chatbot more acceptable than patients who had completed graduate school. When we triangulated the data, the qualitative responses supported the survey ratings. This is significant, as it suggests that chatbots could facilitate a screening process that ultimately connects patients to care for social needs, supporting the mission of EDs as part of the social safety net and improving health and well-being for members of the most vulnerable patient populations. Providers could use social needs information to better personalize treatment plans and direct patients to resources available in the hospital and community.

However, not all participants were positive about chatbots, and strategies to improve uptake in this group will be important future work. Those who did not want to use the chatbot described themselves as less familiar with new technology and applications. A trained professional in the ED can help support the screening process, particularly for older patients, who may find chatbot screening less acceptable than younger patients do. Some participants felt uncomfortable sharing social needs with ED providers after completing the screening. This reluctance reflected their perception that medical needs take priority over social needs in the ED, and their concern about potential negative effects on their emergency care. Although prior work indicated that some patients want help with social needs from providers, 15 most interview participants did not discuss their screening results with ED providers. For patients who have data security concerns or do not want to discuss social needs with their providers, future chatbot designs should explain how their data will be accessed for clinical purposes and, if desired, allow them to opt out of data sharing. Patients who want to elaborate on their answers should be given flexibility within the chatbot interaction to express themselves and emphasize the resource they most need help with.

Some participants wanted reliable and actionable support in accessing resources; thus, one future direction is to link chatbots with existing health care systems to facilitate referrals. It is important to establish pathways that alert providers to acute social needs, connect patients with community-based organizations for resource referral, and help providers follow up with patients afterwards. The design of chatbots for social needs screening may benefit from standardization, since conversational user interfaces in health care can have unintended consequences, such as miscommunication due to information overload. 73 As a next step, we plan to develop recommendations for system-wide implementation of the screening and referral process we developed. In addition, departmental and health system stakeholders plan to integrate social needs screening with existing technologies, such as EHRs. Ongoing research aims to prepopulate social needs data by extracting social needs-related information from clinical notes, to address the challenges of collecting this data from patients. 74

The chatbot intervention could be further improved, and uptake increased, by building trust through screening in additional contexts outside the ED. In future work, the intervention could be evaluated at primary care clinics where some individuals may feel more comfortable disclosing needs, such as community health clinics that serve low-income patients. We believe that the ED waiting area is an ideal location for social needs screening because patients spend idle time there, many patients with social needs are present, and the waiting room population consists of patients with lower ESI who may be more receptive to participating. However, primary care may also be conducive to universal social needs screening, by supporting patient comfort and promoting more regular screening. Prior research has found little provider and patient discomfort with SDoH screening in primary care settings 75 76 and that open discussions of social needs improved patients' relationships with their health care team. 77 In EDs, by contrast, providers have reported discomfort asking SDoH screening questions they believed to be stigmatizing, and patients questioned the purpose of the screening questions. 78 Although self-administered social needs screening in primary care is generally associated with high acceptability among patients, 18 79 health care stakeholders have expressed concern that primary care clinics serving insured members, who may be of higher socioeconomic status, see few patients with social needs. 80 Before implementing social needs screening interventions, primary care clinics should evaluate their patient population to determine how they can reach patients facing social needs.

Health interventions that have been shown to improve health outcomes are typically longitudinal, tailored interventions that connect patients with community health workers for case management. 81 While more institutional support is needed to follow up with patients, chatbots may serve as a comfortable first touchpoint for disclosing social needs during the patient's journey through the ED. In many interviews, participants mentioned their reasons for visiting the ED, including nonmedical issues such as medical bill assistance and medication refills. Additionally, some participants left the ED waiting room before being admitted because of long wait times. Thus, screening in the ED waiting room before admission may have a wider reach and be completed by more individuals than are actually admitted.

This study has several limitations. First, our findings are largely based on participants' screening responses and interviews with a convenience sample. While we aimed to recruit participants representative of the ED patient population, self-selection bias may be present among those who opted to participate. For instance, participants who had particularly negative experiences in the ED may be less likely to participate or to adopt chatbots. Second, the presence of the research team during recruitment and the novelty of the chatbot could have influenced participants' use of and feedback on the chatbot. Finally, our study was conducted in a large public hospital in one geographic region of the United States, which may limit the generalizability of our findings. Despite these limitations, our study has a number of strengths, including its reach and mixed-methods approach, which provide important groundwork to guide future studies.

Conclusion

We evaluated patients' perceptions of the feasibility, acceptability, and appropriateness of using a chatbot for social needs screening in the ED by collecting ratings and conducting follow-up interviews among a diverse sample. Our findings demonstrate that chatbots are an acceptable, feasible, and appropriate form of screening for patients and can successfully engage a large, diverse patient population in the ED setting. Participants observed that the chatbot screening was responsive, easy to use, efficient, and comfortable, and that it enhanced privacy during information disclosure. In future work, health system stakeholders plan to integrate social needs screening with existing technologies, such as EHRs, to augment patient data collection with information from clinical notes and to reduce provider burden and information overload.

Clinical Relevance Statement

The chatbot screening has the potential to reduce ED provider and social worker burden through EHR integration that summarizes patients' acute social needs and automatically refers them to the relevant department. Providers may not discuss social needs with patients because there is no established pathway to address them. Chatbot screening can therefore help identify and address social needs that might otherwise go unaddressed during patient visits. Without knowledge of patients' social needs, such as their inability to afford prescribed medication, the effectiveness of health care can be diminished. Given that patients may be concerned about disclosing social needs, health systems should facilitate social needs screening in ways that protect patient privacy and improve treatment.

Multiple-Choice Questions

1. When implementing a chatbot for social needs screening, which of the following are important intervention qualities for users?

a. High noise level

b. Ease of use

c. Entertainment

d. Complex language

Correct Answer: The correct answer is option b because participants found the chatbot easy to use, which facilitated successful completion of the screening.

2. When implementing an intervention at the ED, which of the following help to facilitate the intervention?

a. Number of patients

b. Patient hobbies

c. Time of day

d. Health care workers

Correct Answer: The correct answer is option d because health care workers, such as research assistants, physicians, nurses, and social workers, play a role in facilitating the screening intervention in the ED waiting room and in responding to patients who bring up their screening responses and results.

Acknowledgements

We would like to thank all of the participants for their time and feedback. We thank the emergency department staff and study coordinators Thomas Paulsen, Kyle Steinbock, and Layla Anderson for their role in facilitating the study recruitment. We would also like to acknowledge the Prosocial Computing Lab and Digital SDoH Workgroup for their feedback throughout the project, Rafal Kocielnik for the alpha development of HarborBot, and Scott James George, Harpreet Singh, and the team at Harbor-UCLA Medical Center for their support.

Funding Statement

Funding This project was supported by the National Center for Advancing Translational Sciences of the National Institutes of Health under award number: UL1 TR002319. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Conflict of Interest None declared.

Protection of Human and Animal Subjects

The study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects. Study procedures were approved by the University of Washington Institutional Review Board (IRB) and received a waiver of written consent. In the chatbot screening, participants read a short introduction to the study and were asked if they consent to participating by clicking “Okay, let's start” in order to proceed.

Supplementary Material

References

- 1.Marmot M.Social determinants of health inequalities Lancet 2005365(9464):1099–1104. [DOI] [PubMed] [Google Scholar]

- 2.Gottlieb L M, Wing H, Adler N E. A systematic review of interventions on patients' social and economic needs. Am J Prev Med. 2017;53(05):719–729. doi: 10.1016/j.amepre.2017.05.011. [DOI] [PubMed] [Google Scholar]

- 3.Adler N E, Glymour M M, Fielding J. Addressing social determinants of health and health inequalities. JAMA. 2016;316(16):1641–1642. doi: 10.1001/jama.2016.14058. [DOI] [PubMed] [Google Scholar]

- 4.; Commission on Social Determinants of Health Marmot M, Friel S, Bell R, Houweling T A, Taylor S.Closing the gap in a generation: health equity through action on the social determinants of health Lancet 2008372(9650):1661–1669. [DOI] [PubMed] [Google Scholar]

- 5.Sulo S, Feldstein J, Partridge J, Schwander B, Sriram K, Summerfelt W T. Budget impact of a comprehensive nutrition-focused quality improvement program for malnourished hospitalized patients. Am Health Drug Benefits. 2017;10(05):262–270. [PMC free article] [PubMed] [Google Scholar]

- 6.Castrucci B, Auerbach J.Meeting individual social needs falls short of addressing social determinants of healthHealth Affairs Blog 2019 Accessed March 2, 2023 at:https://www.healthaffairs.org/do/10.1377/forefront.20190115.234942/

- 7.Malecha P W, Williams J H, Kunzler N M, Goldfrank L R, Alter H J, Doran K M. Material needs of emergency department patients: a systematic review. Acad Emerg Med. 2018;25(03):330–359. doi: 10.1111/acem.13370. [DOI] [PubMed] [Google Scholar]

- 8.Gordon J A. The hospital emergency department as a social welfare institution. Ann Emerg Med. 1999;33(03):321–325. doi: 10.1016/s0196-0644(99)70369-0. [DOI] [PubMed] [Google Scholar]

- 9.Hsu C, Cruz S, Placzek H. Patient perspectives on addressing social needs in primary care using a screening and resource referral intervention. J Gen Intern Med. 2020;35(02):481–489. doi: 10.1007/s11606-019-05397-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Tong S T, Liaw W R, Kashiri P L. Clinician experiences with screening for social needs in primary care. J Am Board Fam Med. 2018;31(03):351–363. doi: 10.3122/jabfm.2018.03.170419. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Persaud S. Addressing social determinants of health through advocacy. Nurs Adm Q. 2018;42(02):123–128. doi: 10.1097/NAQ.0000000000000277. [DOI] [PubMed] [Google Scholar]

- 12.Bleacher H, Lyon C, Mims L, Cebuhar K, Begum A. The feasibility of screening for social determinants of health: seven lessons learned. Fam Pract Manag. 2019;26(05):13–19. [PubMed] [Google Scholar]

- 13.Zulman D M, Maciejewski M L, Grubber J M. Patient-reported social and behavioral determinants of health and estimated risk of hospitalization in high-risk veterans affairs patients. JAMA Netw Open. 2020;3(10):e2021457. doi: 10.1001/jamanetworkopen.2020.21457. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Power-Hays A, Li S, Mensah A, Sobota A. Universal screening for social determinants of health in pediatric sickle cell disease: a quality-improvement initiative. Pediatr Blood Cancer. 2020;67(01):e28006. doi: 10.1002/pbc.28006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Chang C, Ceci C, Uberoi M, Waselewski M, Chang T. Youth perspectives on their medical team's role in screening for and addressing social determinants of health. J Adolesc Health. 2022;70(06):928–933. doi: 10.1016/j.jadohealth.2021.12.016. [DOI] [PubMed] [Google Scholar]

- 16.Berger-Jenkins E, Monk C, DʼOnfro K. Screening for both child behavior and social determinants of health in pediatric primary care. J Dev Behav Pediatr. 2019;40(06):415–424. doi: 10.1097/DBP.0000000000000676. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Katz-Wise S L, Reisner S L, White Hughto J M, Budge S L. Self-reported changes in attractions and social determinants of mental health in transgender adults. Arch Sex Behav. 2017;46(05):1425–1439. doi: 10.1007/s10508-016-0812-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Gottlieb L, Hessler D, Long D, Amaya A, Adler N. A randomized trial on screening for social determinants of health: the iScreen study. Pediatrics. 2014;134(06):e1611–e1618. doi: 10.1542/peds.2014-1439. [DOI] [PubMed] [Google Scholar]

- 19.Tai-Seale M, Downing N L, Jones V G. Technology-enabled consumer engagement: promising practices at four health care delivery organizations. Health Aff (Millwood) 2019;38(03):383–390. doi: 10.1377/hlthaff.2018.05027. [DOI] [PubMed] [Google Scholar]

- 20.Gold R, Bunce A, Cowburn S. Adoption of social determinants of health EHR tools by community health centers. Ann Fam Med. 2018;16(05):399–407. doi: 10.1370/afm.2275. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Ancker J S, Barrón Y, Rockoff M L. Use of an electronic patient portal among disadvantaged populations. J Gen Intern Med. 2011;26(10):1117–1123. doi: 10.1007/s11606-011-1749-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Singh P, Jonnalagadda P, Morgan E, Fareed N. Outpatient portal use in prenatal care: differential use by race, risk, and area social determinants of health. J Am Med Inform Assoc. 2022;29(02):364–371. doi: 10.1093/jamia/ocab242. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Anthony D L, Campos-Castillo C, Lim P S. Who isn't using patient portals and why? Evidence and implications from a national sample of US adults. Health Aff (Millwood) 2018;37(12):1948–1954. doi: 10.1377/hlthaff.2018.05117. [DOI] [PubMed] [Google Scholar]

- 24.Wu A W, Weston C M, Ibe C A. The Baltimore community-based organizations neighborhood network: enhancing capacity together (CONNECT) cluster RCT. Am J Prev Med. 2019;57(02):e31–e41. doi: 10.1016/j.amepre.2019.03.013. [DOI] [PubMed] [Google Scholar]

- 25.Capp R, Misky G J, Lindrooth R C. Coordination program reduced acute care use and increased primary care visits among frequent emergency care users. Health Aff (Millwood) 2017;36(10):1705–1711. doi: 10.1377/hlthaff.2017.0612. [DOI] [PubMed] [Google Scholar]

- 26.Kaufman S, Ali N, DeFiglio V, Craig K, Brenner J.Early efforts to target and enroll high-risk diabetic patients into urban community-based programs Health Promot Pract 201415(2, Suppl):62S–70S. [DOI] [PubMed] [Google Scholar]

- 27.Lin M P, Blanchfield B B, Kakoza R M. ED-based care coordination reduces costs for frequent ED users. Am J Manag Care. 2017;23(12):762–766. [PubMed] [Google Scholar]

- 28.Wilcox D, McCauley P S, Delaney C, Molony S L. Evaluation of a hospital: community partnership to reduce 30-day readmissions. Prof Case Manag. 2018;23(06):327–341. doi: 10.1097/NCM.0000000000000311. [DOI] [PubMed] [Google Scholar]

- 29.Laranjo L, Dunn A G, Tong H L. Conversational agents in healthcare: a systematic review. J Am Med Inform Assoc. 2018;25(09):1248–1258. doi: 10.1093/jamia/ocy072. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Montenegro J L, da Costa C A, da Rosa Righi R. Survey of conversational agents in health. Expert Syst Appl. 2019;129:56–67. [Google Scholar]

- 31.Xiao Z, Zhou M X, Liao Q V. Tell me about yourself: using an AI-powered chatbot to conduct conversational surveys with open-ended questions. ACM Trans Comput Hum Interact (TOCHI) 2020;27(03):1–37. [Google Scholar]

- 32.Kocielnik R, Agapie E, Argyle A. HarborBot: a chatbot for social needs screening. AMIA Annu Symp Proc. 2019;2019:552–561. [PMC free article] [PubMed] [Google Scholar]

- 33.Kocielnik R, Langevin R, George J S

- 34.Lucas G M, Gratch J, King A, Morency L P. It's only a computer: virtual humans increase willingness to disclose. Comput Human Behav. 2014;37:94–100. [Google Scholar]

- 35.Auriacombe M, Moriceau S, Serre F. Development and validation of a virtual agent to screen tobacco and alcohol use disorders. Drug Alcohol Depend. 2018;193:1–6. doi: 10.1016/j.drugalcdep.2018.08.025. [DOI] [PubMed] [Google Scholar]

- 36.Philip P, Micoulaud-Franchi J A, Sagaspe P. Virtual human as a new diagnostic tool, a proof of concept study in the field of major depressive disorders. Sci Rep. 2017;7(01):42656. doi: 10.1038/srep42656. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Lucas G M, Rizzo A, Gratch J. Reporting mental health symptoms: breaking down barriers to care with virtual human interviewers. Front Robot AI. 2017;4:51. [Google Scholar]

- 38.Stowell E, Lyson M C, Saksono H

- 39.Cornelius J B, St Lawrence J S, Howard J C. Adolescents' perceptions of a mobile cell phone text messaging-enhanced intervention and development of a mobile cell phone-based HIV prevention intervention. J Spec Pediatr Nurs. 2012;17(01):61–69. doi: 10.1111/j.1744-6155.2011.00308.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Kocaballi A B, Sezgin E, Clark L. Design and evaluation challenges of conversational agents in health care and well-being: selective review study. J Med Internet Res. 2022;24(11):e38525. doi: 10.2196/38525. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41. Tudor Car L, Dhinagaran D A, Kyaw B M. Conversational agents in health care: scoping review and conceptual analysis. J Med Internet Res. 2020;22(08):e17158. doi: 10.2196/17158.

- 42. Milne-Ives M, de Cock C, Lim E. The effectiveness of artificial intelligence conversational agents in health care: systematic review. J Med Internet Res. 2020;22(10):e20346. doi: 10.2196/20346.

- 43. Kamita T, Ito T, Matsumoto A, Munakata T, Inoue T. A chatbot system for mental healthcare based on SAT counseling method. Mob Inf Syst. 2019;2019:1–11.

- 44. Ly K H, Ly A M, Andersson G. A fully automated conversational agent for promoting mental well-being: a pilot RCT using mixed methods. Internet Interv. 2017;10:39–46. doi: 10.1016/j.invent.2017.10.002.

- 45. Fitzpatrick K K, Darcy A, Vierhile M. Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): a randomized controlled trial. JMIR Ment Health. 2017;4(02):e19. doi: 10.2196/mental.7785.

- 46. Inkster B, Sarda S, Subramanian V. An empathy-driven, conversational artificial intelligence agent (Wysa) for digital mental well-being: real-world data evaluation mixed-methods study. JMIR Mhealth Uhealth. 2018;6(11):e12106. doi: 10.2196/12106.

- 47. Griol D, Callejas Z. Mobile conversational agents for context-aware care applications. Cognit Comput. 2016;8(02):336–356.

- 48. Galescu L, Allen J, Ferguson G, Quinn J, Swift M. Speech recognition in a dialog system for patient health monitoring. Paper presented at: 2009 IEEE International Conference on Bioinformatics and Biomedicine Workshop, Washington, DC, United States; November 1–4, 2009:302–307. IEEE.

- 49. Rhee H, Allen J, Mammen J, Swift M. Mobile phone-based asthma self-management aid for adolescents (mASMAA): a feasibility study. Patient Prefer Adherence. 2014;8:63–72. doi: 10.2147/PPA.S53504.

- 50. Vita S, Marocco R, Pozzetto I. The 'doctor apollo' chatbot: a digital health tool to improve engagement of people living with HIV. J Int AIDS Soc. 2018;21(Suppl 8):e25187.

- 51. Denecke K, Hochreutener S L, Pöpel A, May R. Self-anamnesis with a conversational user interface: concept and usability study. Methods Inf Med. 2018;57(5-06):243–252.

- 52. Ni L, Lu C, Liu N, Liu J. Mandy: towards a smart primary care chatbot application. In: International Symposium on Knowledge and Systems Sciences, Bangkok, Thailand; Singapore: Springer; November 17–19, 2017:38–52.

- 53. Razzaki S, Baker A, Perov Y

- 54. Ghosh S, Bhatia S, Bhatia A. Quro: facilitating user symptom check using a personalised chatbot-oriented dialogue system. Stud Health Technol Inform. 2018;252:51–56.

- 55. Wilson N, MacDonald E J, Mansoor O D, Morgan J. In bed with Siri and Google Assistant: a comparison of sexual health advice. BMJ. 2017;359:j5635. doi: 10.1136/bmj.j5635.

- 56. Wang H, Zhang Q, Ip M, Lau J T. Social media–based conversational agents for health management and interventions. Computer. 2018;51(08):26–33.

- 57. Elmasri D, Maeder A. A conversational agent for an online mental health intervention. In: International Conference on Brain Informatics, Cham: Springer; October 13, 2016:243–251.

- 58. Chaix B, Bibault J E, Pienkowski A. When chatbots meet patients: one-year prospective study of conversations between patients with breast cancer and a chatbot. JMIR Cancer. 2019;5(01):e12856. doi: 10.2196/12856.

- 59. Fan X, Chao D, Zhang Z, Wang D, Li X, Tian F. Utilization of self-diagnosis health chatbots in real-world settings: case study. J Med Internet Res. 2021;23(01):e19928. doi: 10.2196/19928.

- 60. Weiner B J, Lewis C C, Stanick C. Psychometric assessment of three newly developed implementation outcome measures. Implement Sci. 2017;12(01):108. doi: 10.1186/s13012-017-0635-3.

- 61. Creswell J W, Creswell J D. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. Thousand Oaks, CA: Sage Publications; 2017.

- 62. Tanabe P, Gimbel R, Yarnold P R, Kyriacou D N, Adams J G. Reliability and validity of scores on The Emergency Severity Index version 3. Acad Emerg Med. 2004;11(01):59–65. doi: 10.1197/j.aem.2003.06.013.

- 63. Centers for Medicare and Medicaid Services. The accountable health communities health-related social needs screening tool. AHC Screening Tool. 2019. Accessed March 15, 2022 at: https://innovation.cms.gov/files/worksheets/ahcm-screeningtool.pdf

- 64. Benefits Eligibility Screening Tool (BEST). (n.d.). Accessed December 9, 2021 at: https://www.disabilitybenefitscenter.org/glossary/benefits-eligibility-screening-tool

- 65. Johnson S, Liu P, Campa D

- 66. Harris P A. Research Electronic Data Capture (REDCap): planning, collecting and managing data for clinical and translational research. BMC Bioinformatics. 2012;13(Suppl 12):A15.

- 67. Emerald City Resource Guide [Internet]. Real Change; 2021. Accessed December 2022 at: https://www.realchangenews.org/emerald-city-resource-guide

- 68. Washington 2-1-1 [Internet]. Washington 211; 2022. Accessed December 12, 2022 at: https://wa211.org/

- 69. Morris N S, MacLean C D, Chew L D, Littenberg B. The Single Item Literacy Screener: evaluation of a brief instrument to identify limited reading ability. BMC Fam Pract. 2006;7(01):21. doi: 10.1186/1471-2296-7-21.

- 70. Hsieh H F, Shannon S E. Three approaches to qualitative content analysis. Qual Health Res. 2005;15(09):1277–1288. doi: 10.1177/1049732305276687.

- 71. Damschroder L J, Aron D C, Keith R E, Kirsh S R, Alexander J A, Lowery J C. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4(01):50. doi: 10.1186/1748-5908-4-50.

- 72. Hennink M M, Kaiser B N, Marconi V C. Code saturation versus meaning saturation: how many interviews are enough? Qual Health Res. 2017;27(04):591–608. doi: 10.1177/1049732316665344.

- 73. Ash J S, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc. 2004;11(02):104–112. doi: 10.1197/jamia.M1471.

- 74. Lybarger K, Ostendorf M, Yetisgen M. Annotating social determinants of health using active learning, and characterizing determinants using neural event extraction. J Biomed Inform. 2021;113:103631. doi: 10.1016/j.jbi.2020.103631.

- 75. LaForge K, Gold R, Cottrell E. How 6 organizations developed tools and processes for social determinants of health screening in primary care: an overview. J Ambul Care Manage. 2018;41(01):2–14. doi: 10.1097/JAC.0000000000000221.

- 76. Buitron de la Vega P, Losi S, Sprague Martinez L. Implementing an EHR-based screening and referral system to address social determinants of health in primary care. Med Care. 2019;57(Suppl 6, Suppl 2):S133–S139.

- 77. Drake C, Batchelder H, Lian T. Implementation of social needs screening in primary care: a qualitative study using the health equity implementation framework. BMC Health Serv Res. 2021;21(01):975. doi: 10.1186/s12913-021-06991-3.

- 78. Wallace A S, Luther B, Guo J W, Wang C Y, Sisler S, Wong B. Implementing a social determinants screening and referral infrastructure during routine emergency department visits, Utah, 2017–2018. Prev Chronic Dis. 2020;17:E45. doi: 10.5888/pcd17.190339.

- 79. Hassan A, Blood E A, Pikcilingis A. Youths' health-related social problems: concerns often overlooked during the medical visit. J Adolesc Health. 2013;53(02):265–271. doi: 10.1016/j.jadohealth.2013.02.024.

- 80. Sundar K R. Universal screening for social needs in a primary care clinic: a quality improvement approach using the your current life situation survey. Perm J. 2018;22:18-089. doi: 10.7812/TPP/18-089.

- 81. Butler E D, Morgan A U, Kangovi S. Screening for unmet social needs: Patient engagement or alienation? NEJM Catal. 2020;1(04):10.
