Abstract

Does our mood change as time passes? This question is central to behavioural and affective science, yet it remains largely unexamined. To investigate, we intermixed subjective momentary mood ratings into repetitive psychology paradigms. We demonstrate that task and rest periods lowered participants’ mood, an effect we call “Mood Drift Over Time.” This finding was replicated in 19 cohorts totaling 28,482 adult and adolescent participants. The drift was relatively large (−13.8% after 7.3 minutes of rest, Cohen’s d = 0.574) and was consistent across cohorts. Behaviour was also impacted: participants were less likely to gamble in a task that followed a rest period. Importantly, the drift slope was inversely related to reward sensitivity. We show that accounting for time using a linear term significantly improves the fit of a computational model of mood. Our work provides conceptual and methodological reasons for researchers to account for time’s effects when studying mood and behaviour.

Introduction

An important but implicit notion amongst behavioural and affective scientists is that each participant has a baseline mood or affective state that will remain constant during an experiment or only vary with emotionally salient events.1 Mood is modelled as a discounted sum of rewards and punishments,2,3 but many models hold that the time scale over which these events unfold is irrelevant and the passage of time itself has no effect on mood.

This assumption of a constant affective background has profound methodological implications for psychological experiments. First, consider a “resting state” functional brain scan in which a participant is asked to stare at a fixation cross. Based on the constant affective background assumption, comparisons of resting-state neuroimaging data between (for example) depressed and non-depressed participants are thought to reveal differences in their task-general traits, rather than their response to experimentally imposed rest periods. Second, consider an event-related design, such as a gambling or face recognition task, during which participants experience stimuli (wins or losses) that elicit emotional reactions. When analysing these data, responses to task stimuli are thought to occur on top of (and are often contrasted to) the affective baseline, which is presumed to be time-invariant.

Whilst convenient, this assumption of a constant affective background contradicts evidence from multiple fields that time impacts mood and behaviour. Affective chronometry research has demonstrated that affect changes systematically with time after an affective stimulus,4–7 and that individuals vary in the rates at which positive or negative affect decays after an event.8,9 Such individual differences may be linked to mental health. For instance, psychopathologists theorise that anhedonia, a symptom of both depression and schizophrenia, arises from a failure to sustain reward responses for a normative period of time.10 And studies of ADHD suggest that the impulsive behaviour associated with hyperactivity results from delay aversion: the idea that a delay is itself unpleasant, so that impulsivity is simply a rational choice to avoid it.11–13

Economists speak of the opportunity cost of time: time a person spends performing one activity incurs the cost of the alternatives they might have chosen instead (such as paid work or leisure).14–16 This idea is fundamental to the explore/exploit question that has recently preoccupied neuroscientists.17–19 Affect is central to this question: it is currently thought that negative affective states (such as boredom) building over time provide the subjective motivation to switch to a different activity.20,21

When participants are engaged in a psychological task or rest period, they are committed to exploiting that task environment and are unable to explore other activities. This sense of constraint, or reduced agency, is considered central to feelings of boredom and its associated negative affect.22 We might therefore conceive of a psychological task’s behavioural constraint as a sort of negative affective stimulus that could gradually draw mood downward.

If this is true and the constant affective background assumption is violated, this could be problematic given evidence that spontaneous affective changes vary systematically between the individuals and groups being compared in affective science. For example, spontaneous negative thoughts are known to occur and vary substantially between humans, as highlighted by extensive work in mind-wandering.23–26 Similarly, it is well known from occupational psychology that periods of low or relatively constant stimulation (as occurs in rest or repetitive experimental tasks) can induce varying levels of boredom.27,28 These insights raise the possibility that mood states will follow a similar pattern of inter-individual variability, creating potential confounds for resting-state and event-related experiments. But the size, stability, and clinical correlates of this variability remain unexplored.

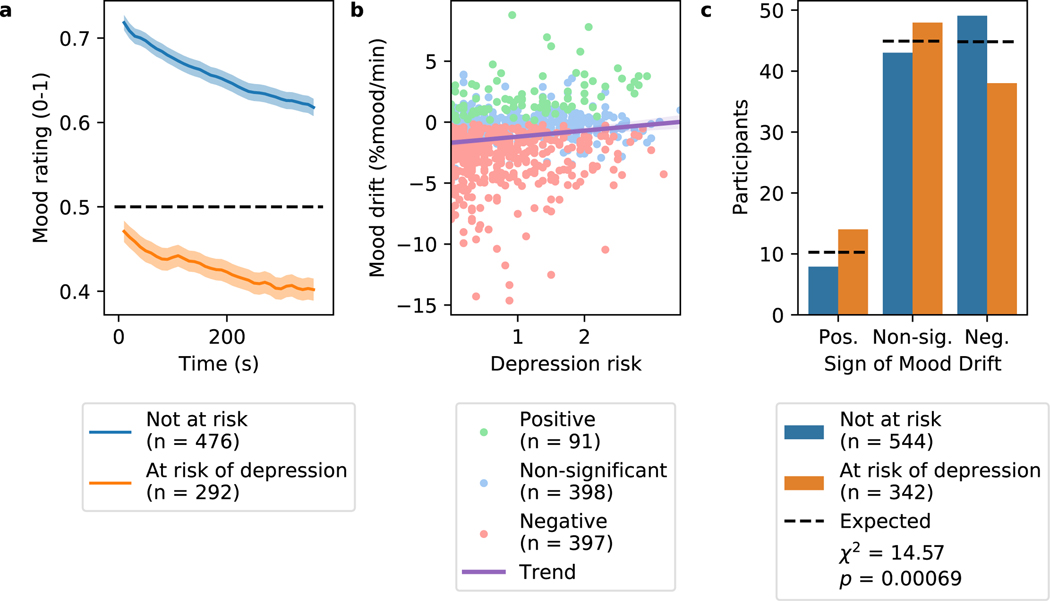

In order to answer these fundamental questions, we examine how the passage of time affects mood in a variety of experiments across studies, participants, and settings. We find that participants’ mood worsened considerably during rest periods and simple tasks, an effect we call “Mood Drift Over Time” (“mood drift” for short). This downward mood drift was replicated in 19 large and varied cohorts, totaling 116 healthy and depressed adolescents recruited in person, 1,913 adults recruited online from across the United States, and 26,896 participants performing a gambling task in a mobile app. It was not observed when participants freely chose their own activities. We show that mood drift is related to, but not a trivial extension of, the existing constructs of boredom and thought content (including the task-unrelated thought often considered central to mind-wandering). We show that mood drift slopes are inversely related to reward sensitivity (more reward-sensitive participants showed steeper mood declines) and that this relationship is moderated by overall life happiness. These findings may have profound implications for experimental design and interpretation in affective science.

Results

Characterising the Effect

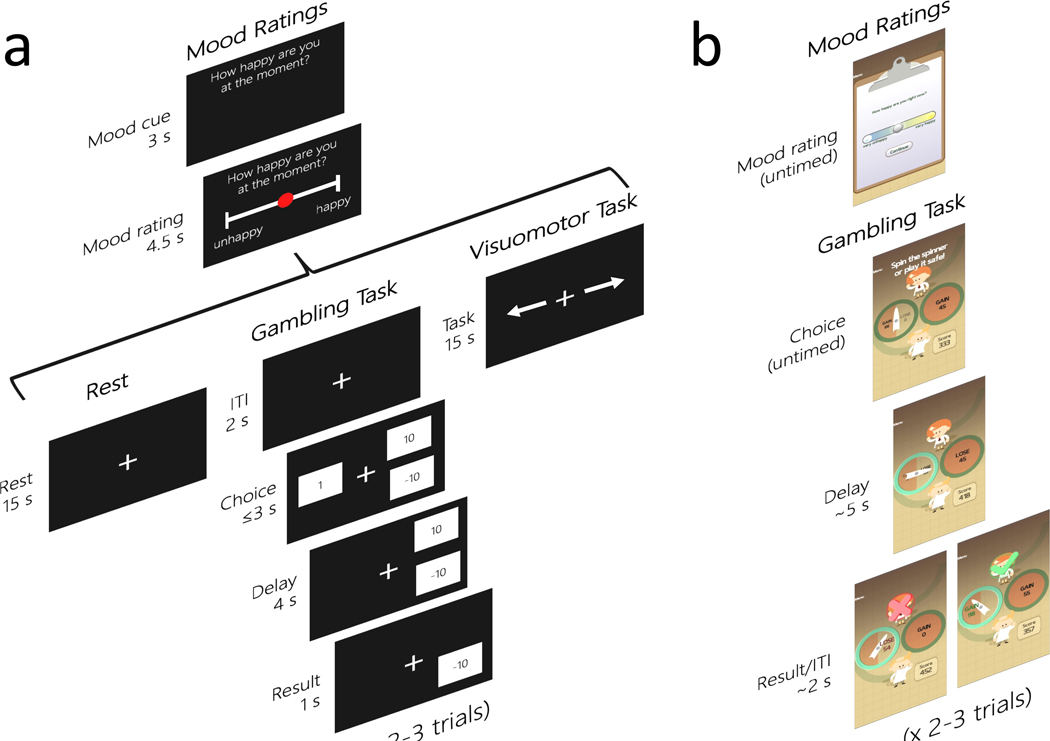

The results to follow characterise the average person’s gradual decline in mood during rest and simple tasks, a phenomenon we call “Mood Drift Over Time” (“mood drift” for short). This effect was initially observed in a task where participants were periodically asked to rate their mood (Figure 1A). Between these mood ratings, the initial cohort was first asked to stare at a central fixation cross. They were told that the rest period would last up to 7 minutes and that they would be asked to rate their mood “every once in a while”. The mood ratings observed during this rest period inspired a number of slightly modified tasks to better characterise the effect and eliminate methodological confounds. Each modification was presented to a new cohort of naive participants so that memory and expectations would not affect their mood ratings. Each cohort also played a gambling game at some point in the task, in which they chose between an uncertain gamble and a certain outcome. This task is a standard one commonly used to examine mood.3,29–31 It was included to observe the effects of rest on rational behaviour, to maintain links with previous studies of mood and reward,2,3,32 and to enable related analyses on a large cohort of participants (n=26,896) playing a similar game on their smartphones33 (Figure 1B). A list of the cohorts we examined is in Extended Data Table 1.

Figure 1:

One cycle (mood rating + task) of the task administered to (A) online participants and (B) mobile app participants. After completing their first mood rating, participants completed one cycle of the rest, gambling, or visuomotor task, then completed another mood rating, and so on. For the rest and visuomotor tasks, the cycle duration was set by elapsed time. For the gambling task, it was determined by the time taken to complete 2 or 3 (randomised) trials of the gambling task.

To quantify time’s effect on mood, we created a linear mixed effects (LME) model with terms for initial mood and mood slope (i.e., change in mood per unit time) as random effects that were fitted to each subject’s data. The factors of interest described in the following sections were included in the model as fixed effects (see Methods). One factor of particular interest is a depression risk score for each participant, a continuous value defined as their score on the Mood and Feelings Questionnaire (MFQ, for adolescents) or the Center for Epidemiologic Studies Depression Scale (CES-D, for adults) divided by a clinical cutoff, i.e., MFQ/12 or CES-D/16. The model was fitted to the cohort of all participants who experienced an opening period of rest, visuomotor task, or random gambling. The slope parameter learned for each participant was used to quantify that participant’s mood drift. The distribution of slopes was assumed to be Gaussian,34 but LME models are robust to violations of this assumption.35 All statistical tests used were two-sided unless otherwise specified.
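The LME specification described above (random intercept and random time slope per participant, with factors of interest as fixed effects) can be sketched in Python with statsmodels' `mixedlm`. This is a minimal illustration under stated assumptions, not the authors' code: the data are simulated, the column names (`subject`, `time`, `depression_risk`, `mood`) are hypothetical, and depression risk (questionnaire total divided by its clinical cutoff) is used as the single example fixed-effect factor. In statsmodels, `re_formula="~time"` adds a random slope on `time` alongside the random intercept.

```python
import numpy as np
import pandas as pd
import statsmodels.formula.api as smf

# Hypothetical simulated data: 40 participants, 8 mood ratings each over 7 minutes.
rng = np.random.default_rng(0)
n_subjects, n_ratings = 40, 8
rows = []
for subject in range(n_subjects):
    initial = 60 + rng.normal(0, 10)       # participant's initial mood (% of scale)
    slope = -1.9 + rng.normal(0, 1.5)      # participant's true drift (%mood/min), illustrative
    # Depression risk score = questionnaire total / clinical cutoff (e.g. CES-D / 16);
    # the raw totals here are simulated.
    depression_risk = rng.integers(0, 40) / 16
    for minutes in np.linspace(0, 7, n_ratings):
        mood = initial + slope * minutes + rng.normal(0, 4)
        rows.append((subject, minutes, depression_risk, mood))
df = pd.DataFrame(rows, columns=["subject", "time", "depression_risk", "mood"])

# Fixed effects: time, depression risk, and their interaction.
# Random effects: per-participant intercept (initial mood) and slope on time.
model = smf.mixedlm("mood ~ time * depression_risk", df,
                    groups=df["subject"], re_formula="~time")
result = model.fit()

# Each participant's drift = fixed time slope + interaction * their risk score
# + their random slope deviation.
risk = df.groupby("subject")["depression_risk"].first()
b_time = result.fe_params["time"]
b_inter = result.fe_params["time:depression_risk"]
subject_slopes = pd.Series({g: b_time + b_inter * risk[g] + re["time"]
                            for g, re in result.random_effects.items()})
print(subject_slopes.describe())
```

The random-slope structure is what lets each participant carry their own drift estimate; a model with only a random intercept would force every participant to share one slope.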

Because the smartphone game cohort was large enough to fit hyperparameters in a held-out set of participants, this cohort’s mood ratings were also fitted to a computational model that estimates each participant’s initial mood and their sensitivity to rewards, reward prediction, and time (see Methods). The model’s time sensitivity parameter for each participant was used to quantify their mood drift.
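A model of this kind can be written, in the spirit of the discounted-sum models of mood cited in the Introduction, as initial mood plus exponentially discounted sums of past task events plus a linear time term. The sketch below shows only the forward (prediction) step under that assumption. The input names `expected` (reward prediction) and `actual` (received reward), the shared discount factor `gamma`, and the exact functional form are illustrative assumptions; the authors' precise specification and fitting procedure are given in Methods.

```python
import numpy as np

def discounted_sum(x, gamma):
    """Return s_t = sum_{j<=t} gamma**(t-j) * x_j for every t, computed recursively."""
    out = np.zeros(len(x))
    acc = 0.0
    for i, value in enumerate(x):
        acc = gamma * acc + value   # older events decay by a factor gamma per step
        out[i] = acc
    return out

def predict_mood(m0, beta_e, beta_a, beta_t, gamma, expected, actual, minutes):
    """Hypothetical mood model: initial mood + discounted reward-prediction term
    + discounted reward term + linear time term (beta_t, in %mood/min)."""
    return (m0
            + beta_e * discounted_sum(np.asarray(expected, dtype=float), gamma)
            + beta_a * discounted_sum(np.asarray(actual, dtype=float), gamma)
            + beta_t * np.asarray(minutes, dtype=float))

# With no task events, the prediction reduces to a straight line in time.
flat = predict_mood(60.0, 0.5, 0.5, -0.128, 0.8, [0, 0, 0], [0, 0, 0], [0, 10, 20])
print(flat)
```

In this form the time term is additive and independent of the reward history, which is why its coefficient can be read as mood drift net of reward effects.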

Mood Drift Over Time Is Sizeable During Rest

Our first objective was to estimate the size of the effect. In our initial cohort (called 15sRestBetween in Extended Data Table 1) of 40 adults recruited on Amazon Mechanical Turk (MTurk), we asked whether mood would change consistently during a rest period that preceded a gambling game. We observed a gradual decline in mood over time (Figure 2A, blue line). After 9.7 minutes of rest, the change in mood was considerable (Mean ± standard error (SE) = 22.4% ± 4.15% of the mood scale). We replicated this in 5 other adult MTurk cohorts that received shorter opening rest periods (Figure 2A, other lines).

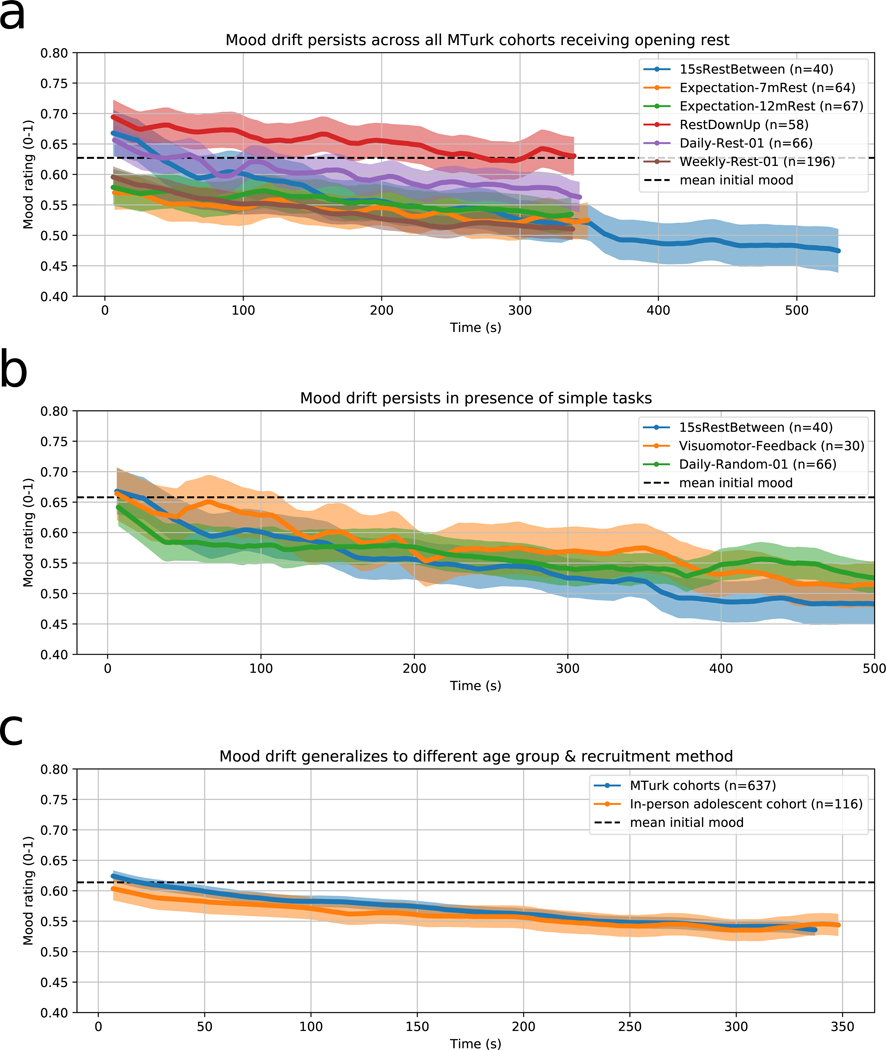

Figure 2:

The timecourse of mood drift is consistently present across many cohorts and task modulations. These plots each show the mean timecourse of mood across participants in various online cohorts for the first block of the task. Each participant’s mood between ratings was linearly interpolated before averaging across participants. The shading around each line represents the standard error of the mean. Each name in the legend corresponds to a cohort completing a slightly different task (Extended Data Table 1). Mean initial mood refers to the mean of cohort means, not the mean of subject means. (a) Mean timecourse of mood ratings during an opening rest period in all Amazon Mechanical Turk (MTurk) cohorts that received it. Mood drift was discovered in one cohort (blue line) and replicated in five independent naive cohorts. (b) Mood drift was observed not only in rest periods (blue), but also in a simple task requiring action and giving feedback (orange), and in a random gambling task with 0-mean reward prediction errors and winnings (green). (c) Mood drift was observed both in adults recruited on MTurk (combining across all MTurk participants that received opening rest or visuomotor task periods) (blue) and in adolescents recruited in person (orange).

Mood Drift Over Time Is Robust to Methodological Choices

To examine possible methodological confounds, we created slightly modified versions of the task to see whether the observed decline in mood ratings might be due to the following:

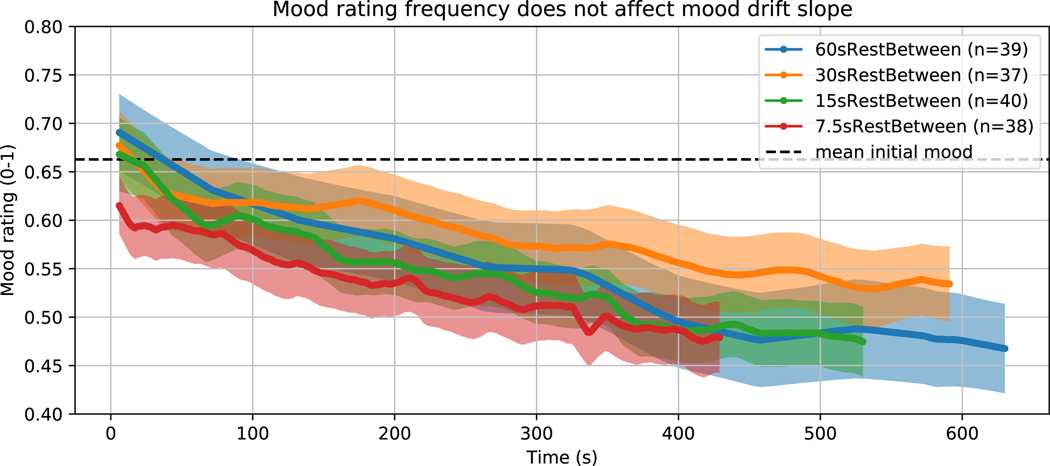

The aversive nature of rating one’s mood: we did not find evidence that more frequent ratings changed mood drift (inter-rating-interval × time interaction = −0.0103 %mood, 95%CI = (−0.0267, 0.0061), t(810) = −1.23, p = 0.219, 2-sided, Extended Data Figure 1).

The method of rating mood and its susceptibility to fatigue: we did not find evidence that making every mood rating require an equally easy single keypress changed mood drift (−2.22 vs. −2.45 %mood/min, 95%CI = (−0.772, 1.23), t(70) = 0.427, p = 0.671, 2-sided).

The expected duration of the rest period: groups expecting different rest durations did not have different mood drift (−1.47 vs. −1.53 %mood/min, 95%CI = (−0.613, 0.743), t(104) = 0.185, p = 0.854, 2-sided).

Multitasking or task switching: participants moved their mood rating slider on 97.7% of trials, suggesting that they were attending to the task rather than switching to other activities.

The results of these control analyses suggested that mood drift cannot be explained by these methodological factors (Supplementary Note C).

Mood Drift Over Time Occurs During Tasks

To see whether this decline was specific to rest or more generally linked to time on task, we administered two variants of the task. The first variant (cohort Visuomotor-Feedback, n = 30) was designed to mimic rest very closely while requiring the participant to respond regularly and giving feedback on their performance. Specifically, a fixation cross moved back and forth periodically across the screen, the participant was asked to press a button whenever it crossed the centerline, and each response would make the cross turn green if the response was accurate or red if it was too early or late (see Methods). In the second variant (cohort Daily-Random-01, n = 66), the subject played a random gambling game in which gambling outcomes and reward prediction errors (RPEs) were both random with mean zero. Both of these tasks produced mood timecourses similar to that of rest, and we did not find evidence of a difference between the LME slope parameters of either group and those of the original cohort (−2.19 vs. −2.45 %mood/min, 95%CI = (−0.876, 1.40), t(68) = 0.437, p = 0.663 for the visuomotor task; −1.91 vs. −2.45 %mood/min, 95%CI = (−0.453, 1.52), t(104) = 1.07, p = 0.287 for random gambling; both 2-sided) (Figure 2B).

Mood Drift Over Time Is Generalizable

We next investigated the generalizability of this result across age groups and recruitment methods. To do this, we collected similar rest + gambling data via an online task from adolescent participants recruited in person at the National Institute of Mental Health in Bethesda, MD, and asked to complete the task online via their home computers (see Methods). This group (Adolescent-01, n = 116) showed a pattern of declining mood similar to that observed in the MTurk cohort (Figure 2C) (−1.69 vs. −1.93 %mood/min, 95%CI = (−0.122, 0.599), t(884) = 1.09, p = 0.275, 2-sided).

To characterise the effect more precisely, we fitted a large LME model to the complete cohort of online participants (both adults and adolescents) completing rest or simple tasks in the first block (Extended Data Table 2). The mood drift parameter (rate of mood decline with time) for these 886 participants was Mean ± SE = −1.89 ± 0.185 %mood/min, which was significantly less than 0 (t(864) = −10.3, p < 0.001). After 7.3 minutes (the mean duration of the first block of trials), the mean decrease in mood estimated by this LME model was 13.8% of the mood scale. This corresponds to a Cohen’s d = 0.574, with a 95% CI = (0.464, 0.684).36
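Because the LME model treats drift as linear in time, the 13.8% figure is the group slope multiplied by the mean block duration. The check below reproduces only that arithmetic from the reported numbers; it does not reproduce the Cohen's d, whose computation follows the cited method.

```python
slope = -1.89         # group mood drift from the LME fit (%mood/min)
block_minutes = 7.3   # mean duration of the first block of trials (min)

change = slope * block_minutes
print(f"{change:.1f} % of the mood scale")
```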

Mood Drift Is Diminished in a Mobile App Gambling Game

We next tested whether mood drift could be observed in a large dataset (n = 26,896) of mood ratings during a similar gambling task played on a mobile app. All analyses were applied to an exploratory cohort of 5,000 of these participants, then re-applied to the confirmatory cohort of all remaining participants after preregistration (https://osf.io/paqf6). We applied the LME modeling procedure to this confirmatory cohort and again found a slope parameter that was significantly below zero at the group level (Mean ± SE = −0.881 ± 0.0613 %mood/min, t(22804) = −14.4, p < 0.001).

It is notable that even in this relatively engaging game (in which tens of thousands of participants completed the task despite not being paid for participating or penalised for failing to finish), mood tended to decrease with time spent on task.

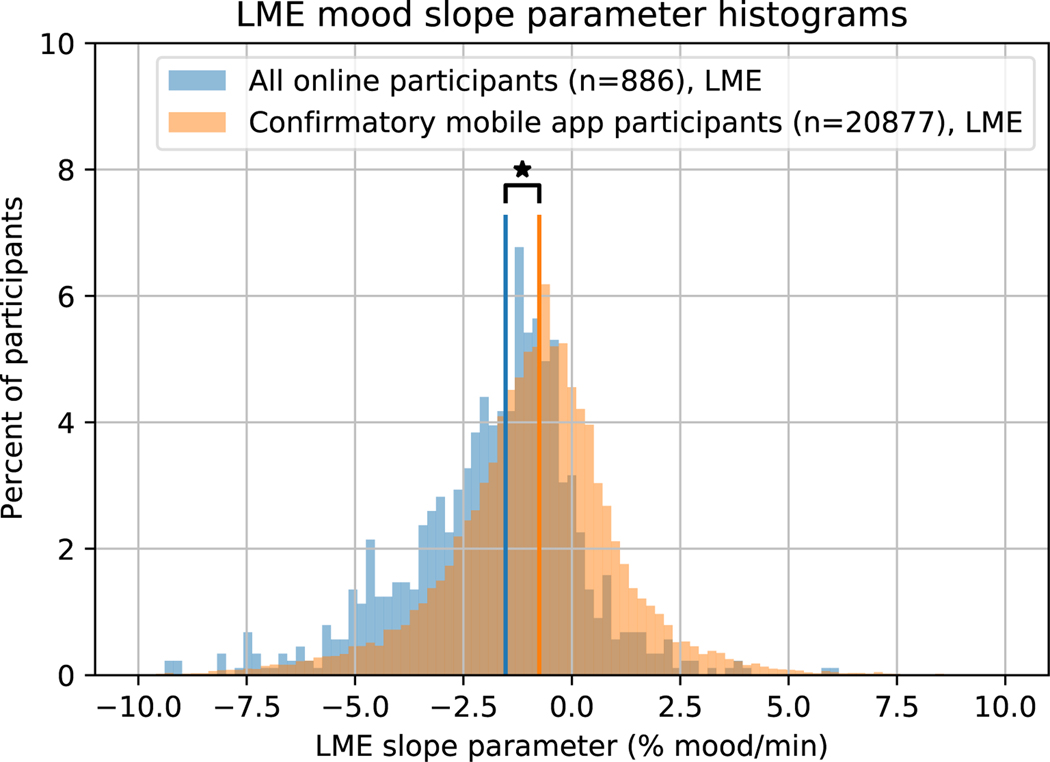

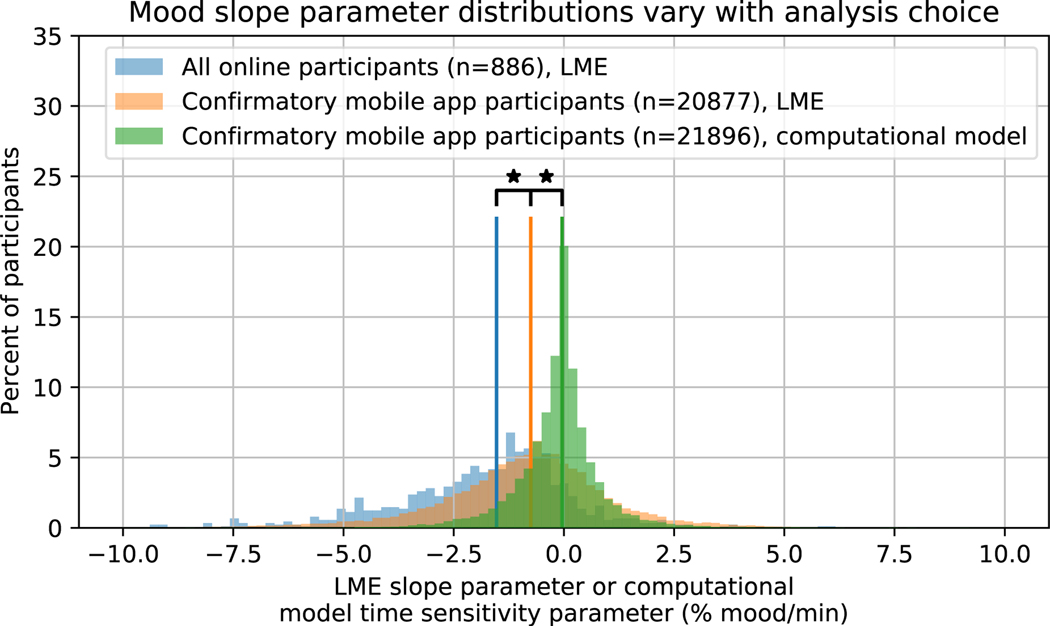

We note, however, that mood drift was significantly smaller in this cohort (median = −0.752, inter-quartile range (IQR) = 2.10 %mood/min) than in the combined cohort of online participants (median = −1.53, IQR = 2.34 %mood/min, 2-sided Wilcoxon rank-sum test, W(21761) = −14.5, p < 0.001, Extended Data Figure 2). 87.5% of online participants had negative slopes in the LME analysis, whereas only 70.2% of mobile app participants did. A histogram of the LME slope parameters for online and mobile app participants is plotted in Figure 3. This suggests that, as one might expect, mood drift is sensitive to task context.
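A comparison of this kind, a two-sided rank-sum test on per-participant slopes plus the share of negative slopes in each group, can be sketched with `scipy.stats.ranksums`. The slopes below are simulated stand-ins, not the study's data, and using this particular SciPy function (which returns a normal-approximation statistic) is our choice for illustration.

```python
import numpy as np
from scipy.stats import ranksums

rng = np.random.default_rng(1)
# Hypothetical per-participant LME slopes (%mood/min); not the study's data.
online = rng.normal(-1.9, 2.0, size=886)
mobile = rng.normal(-0.9, 1.8, size=5000)

stat, p_value = ranksums(online, mobile)      # two-sided by default
frac_negative_online = np.mean(online < 0)    # share of participants whose mood declined
frac_negative_mobile = np.mean(mobile < 0)
print(f"medians: online {np.median(online):.2f}, mobile {np.median(mobile):.2f}")
print(f"rank-sum statistic {stat:.1f}, p = {p_value:.2g}")
print(f"negative slopes: {frac_negative_online:.1%} vs {frac_negative_mobile:.1%}")
```

A rank-based test is a natural choice here because slope distributions need not be symmetric, and it compares the groups without assuming equal variances of a Gaussian.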

Figure 3:

Individual subject LME slope parameters for online participants (blue) and mobile app participants (orange). The online participants had slopes below zero on average (Mean ± SE = −1.89 ± 0.185 %mood/min, t(864) = −10.3, p < 0.001), as did the mobile app participants (Mean ± SE = −0.881 ± 0.0613 %mood/min, t(22804) = −14.4, p < 0.001). Mood drift was significantly less negative in the mobile app participants (median = −0.752, IQR = 2.10 %mood/min) than in the online participants (median = −1.53, IQR = 2.34 %mood/min, 2-sided Wilcoxon rank-sum test, W(21761) = −14.5, p < 0.001). Vertical lines represent group medians. Stars indicate p < 0.05.

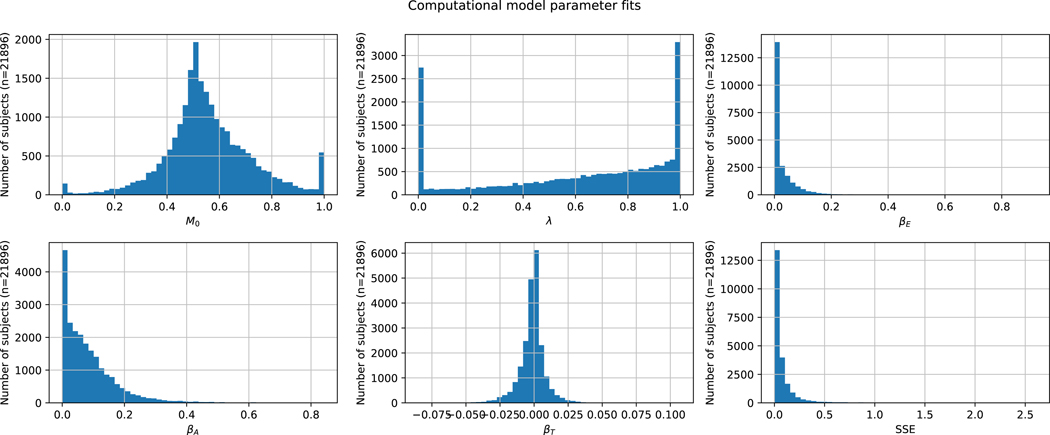

Next, to disentangle mood drift from the effects of reward and reward prediction error in this dataset, we fitted the computational model described in the Methods section to the mobile app data. Including the mood slope parameter in the model decreased the mean squared error on testing data (the last two mood ratings of the task) from 0.336% to 0.325% of the mood scale for the median subject across regularizations, a significant improvement (IQR = 0.00197%, 2-sided Wilcoxon signed-rank test, W(499) = 0, p < 0.001). This suggests that time on task affected a participant’s mood beyond the impacts of reward and expectation, and did so in a way that was stable within individuals because improved fits were observed in held-out data. Fits and parameter distributions can be seen in Extended Data Figures 3 and 4. The distribution of participants’ time sensitivity parameters βT (which can be interpreted as mood drift independent of reward effects) was centered significantly below zero (Mean ± SE = −0.128 ± 0.00668 %mood/min, 2-sided Wilcoxon signed-rank test, W(21895) = 1.00 × 10⁸, p < 0.001).
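The held-out comparison can be illustrated with a simplified stand-in: fit each participant's ratings with and without a time regressor, score both fits on the last two ratings, and compare the paired errors with a Wilcoxon signed-rank test. This sketch uses plain least squares on simulated data with a hypothetical reward regressor; it is not the authors' regularized fitting procedure.

```python
import numpy as np
from scipy.stats import wilcoxon

def heldout_mse(design, mood, n_test=2):
    """Least-squares fit on all but the last n_test ratings; MSE on the held-out ones."""
    coef, *_ = np.linalg.lstsq(design[:-n_test], mood[:-n_test], rcond=None)
    residuals = mood[-n_test:] - design[-n_test:] @ coef
    return float(np.mean(residuals ** 2))

rng = np.random.default_rng(2)
n_ratings = 12
minutes = np.linspace(0, 10, n_ratings)
mse_without, mse_with = [], []
for _ in range(200):  # simulated participants, each with a true drift of -1 %mood/min
    reward_term = rng.normal(0, 1, n_ratings)  # stand-in for a discounted reward history
    mood = 60 + 3 * reward_term - 1.0 * minutes + rng.normal(0, 3, n_ratings)
    base = np.column_stack([np.ones(n_ratings), reward_term])
    mse_without.append(heldout_mse(base, mood))
    mse_with.append(heldout_mse(np.column_stack([base, minutes]), mood))

stat, p_value = wilcoxon(mse_without, mse_with)  # paired, two-sided by default
print(f"median held-out MSE: {np.median(mse_without):.1f} without time, "
      f"{np.median(mse_with):.1f} with time (p = {p_value:.2g})")
```

Scoring on the final ratings matters: a model without a time term can absorb drift into its intercept within the training ratings, but it cannot extrapolate the continued decline into held-out data.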

Mood Drift Over Time Is Absent in Freely Chosen Activities

After the surprising finding that mood drift appeared during an engaging mobile app game, we wondered whether this phenomenon would be observed in daily life, outside the context of a psychological task. We therefore designed and preregistered (https://osf.io/gt7a8) a task in which the initial rest period was replaced with 7 minutes of free time, during which the participant could pursue activities of their choice. Participants completing this task (cohort Activities, n=450) were asked to rate their mood just before and just after the break period. They were then asked to report what they did. The most frequent activities reported were thinking, reading the news, and standing up (Supplementary Table 3).

This group was the first sample investigated in this study that did not exhibit mood drift. The mood ratings just after the free period were not statistically different from the mood ratings before the free period (66.6% vs. 65.7%, 95%CI = (−2.15,97), t(449) = −1.33, one-tailed p(H0: decrease) = 0.0918, p(H0: increase) = 0.908). This change in mood was significantly greater than that of a cohort who received the standard rest period with interspersed mood ratings (cohort BoredomAfterOnly, n = 150) (0.909% vs. −8.11%, 95%CI = (5.95, 12.1), t(598) = 6.28, p < 0.001, 2-sided). This shows that, perhaps unsurprisingly, mood drift is not universal to all activities. However, the nominal increase in mood during this period (0.130 %mood/min) was much smaller than the decrease in mood observed during a typical rest period (−1.89 %mood/min): each minute in which participants could choose their activity raised their collective mood by less than 10% of the amount that a minute of rest lowered it.
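The "less than 10%" comparison follows directly from the reported numbers, assuming the per-minute rate is the mean change divided by the 7-minute length of the free period:

```python
change_pct = 0.909    # mean mood change over the free-time period (% of scale)
free_minutes = 7.0    # length of the free-time period (min)
rest_rate = 1.89      # magnitude of mean drift during rest (%mood/min)

free_rate = change_pct / free_minutes   # nominal mood gain per minute of free time
ratio = free_rate / rest_rate           # gain per free minute relative to loss per rest minute
print(f"{free_rate:.3f} %mood/min, {ratio:.1%} of the rest-period decline")
```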

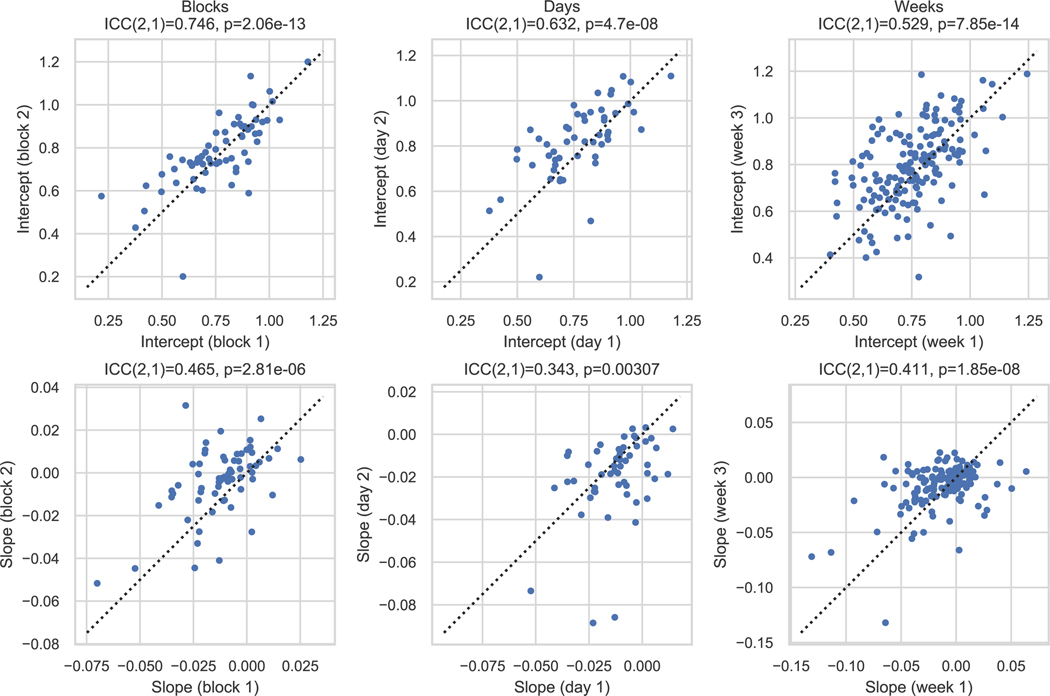

Inter-Individual Differences

Having characterised the effect at the group level, we next turned our attention to the individual. The motivation for this line of analysis is that if an individual’s mood slope is different from that of others in a way that remains stable over days or weeks, it may be linked to traits of clinical and theoretical interest. While the group average mood drift is negative during rest and simple tasks, there is considerable variation across participants (2.5th to 97.5th percentile of subject-level mood drift for online participants: −7.23 to 1.79 %mood/min) (Figure 3). Using an intraclass correlation coefficient (ICC) on cohorts that completed the task more than once, we found that these individual differences had moderate, statistically significant stability across blocks (ICC(2,1) = 0.465, p < 0.001), days (ICC(2,1) = 0.343, p = 0.0031), and weeks (ICC(2,1) = 0.411, p < 0.001, one-sided since ICC values are expected to be positive) (Extended Data Figure 5, Supplementary Note D). We therefore investigated the relationship between this variability and other traits of clinical and theoretical interest.
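For reference, ICC(2,1) (two-way random effects, absolute agreement, single measurement, in Shrout and Fleiss's notation) can be computed from a participants × sessions table of mood-drift slopes using the two-way ANOVA mean squares. The sketch below is a minimal NumPy implementation checked against the classic Shrout and Fleiss (1979) worked example; it does not compute the p-values reported above, and it is not presented as the authors' code.

```python
import numpy as np

def icc_2_1(scores):
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.
    `scores` is an (n_subjects, k_sessions) array, e.g. slopes per block/day/week."""
    x = np.asarray(scores, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_rows = k * np.sum((x.mean(axis=1) - grand) ** 2)   # between participants
    ss_cols = n * np.sum((x.mean(axis=0) - grand) ** 2)   # between sessions
    ss_err = np.sum((x - grand) ** 2) - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n)

# Shrout & Fleiss (1979) example: 6 targets rated by 4 judges (ICC(2,1) is about 0.29).
example = [[9, 2, 5, 8], [6, 1, 3, 2], [8, 4, 6, 8],
           [7, 1, 2, 6], [10, 5, 6, 9], [6, 2, 4, 7]]
print(round(icc_2_1(example), 2))
```

The absolute-agreement form penalises systematic shifts between sessions (the column mean square), so a cohort whose drift grew uniformly steeper on a second day would show lower ICC(2,1) even if participants kept their rank order.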

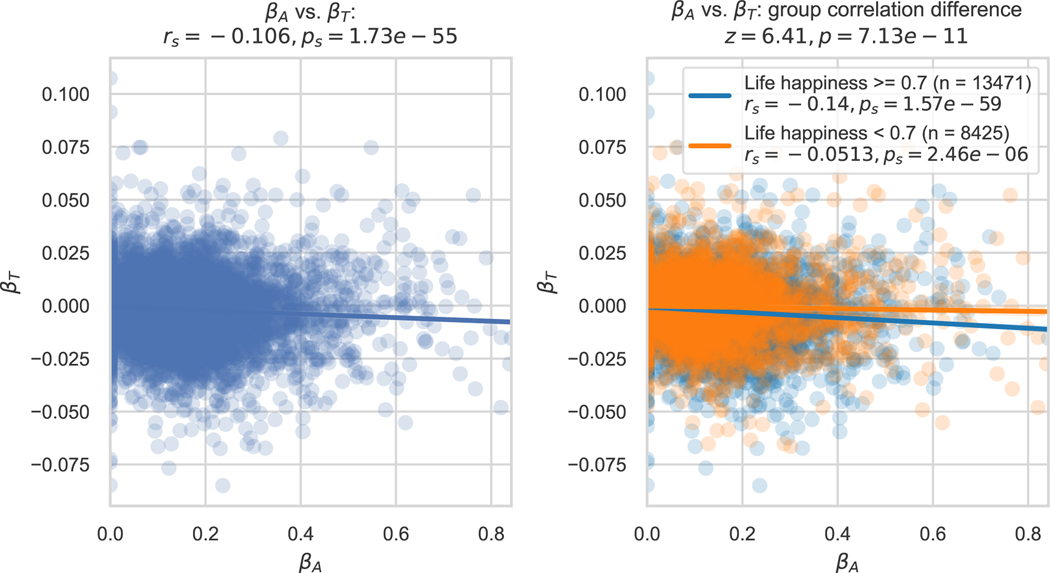

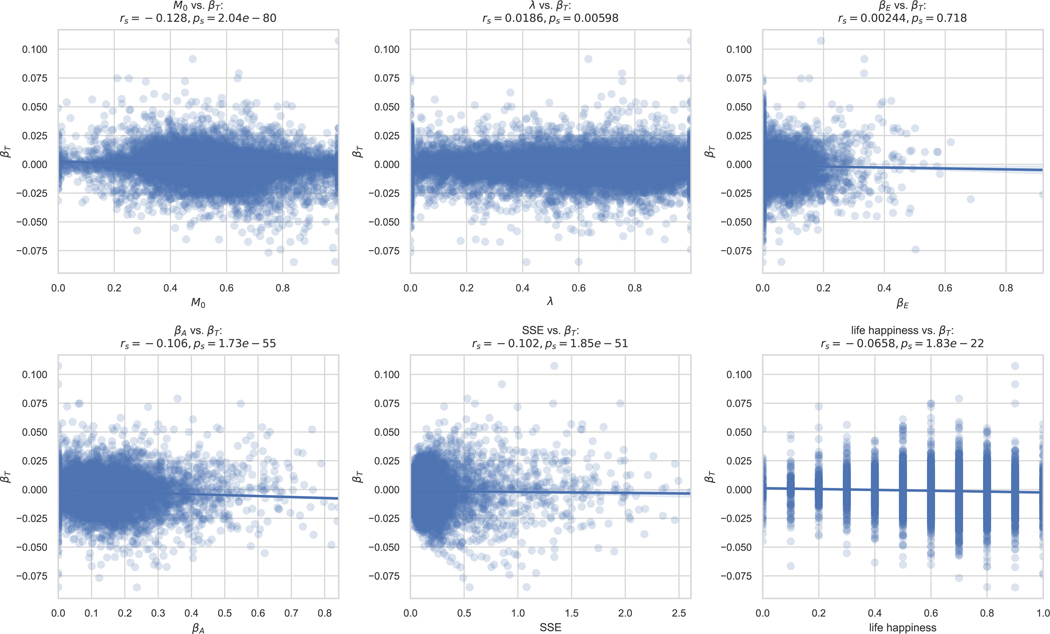

Mood Drift Is Associated with Sensitivity to Rewards

Mood is central to depression, which is thought to relate etiologically to reward responsiveness.37,38 The idea that mood drift might be related to this responsiveness prompted us to investigate the relationship between participants’ mood drift, reward sensitivity, and life happiness in our computational model fits. The time sensitivity/mood drift parameter βT was anticorrelated with the reward sensitivity parameter βA (rs = −0.106, p < 0.001, 2-sided) (Figure 4, left). This anticorrelation was weaker in participants with life happiness below the median (i.e., those at greater risk of depression) than it was in those at/above it (rs = −0.0513 vs. −0.14, Z = 6.41, p < 0.001, 2-sided) (Figure 4, right). This suggests that people more sensitive to the passage of time are also more sensitive to rewards, and that this relationship is less pronounced in those with greater depression risk.
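A standard way to compare correlations from two independent groups, such as the below-median and at/above-median happiness groups, is Fisher's r-to-z transform followed by a z test. Whether this matches the authors' exact procedure is our assumption. For Spearman correlations, a commonly cited adjustment (Fieller, Hartley and Pearson) inflates the variance of the transformed coefficient to about 1.06/(n − 3); it is exposed here as an option. The group sizes in the usage line are hypothetical.

```python
import math

def compare_independent_correlations(r1, n1, r2, n2, spearman=False):
    """Two-sided z test for the difference between correlations measured in
    two independent groups, using Fisher's r-to-z transform."""
    z1, z2 = math.atanh(r1), math.atanh(r2)
    # Var(Fisher z) is about 1/(n - 3); about 1.06/(n - 3) for Spearman's rho.
    factor = 1.06 if spearman else 1.0
    se = math.sqrt(factor / (n1 - 3) + factor / (n2 - 3))
    z = (z1 - z2) / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p value
    return z, p

# Hypothetical group sizes, chosen only to illustrate the call.
z, p = compare_independent_correlations(-0.05, 10_000, -0.14, 10_000, spearman=True)
print(f"Z = {z:.2f}, p = {p:.2g}")
```

With groups in the tens of thousands, even a modest difference between two weak correlations yields a large Z, which is why a small absolute gap like the one above can be highly significant.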

Figure 4:

Individual differences in sensitivity to the passage of time relate to other individual differences in the mobile app cohort. The computational model’s time sensitivity parameter βT for each participant in the mobile app cohort is plotted against that participant’s reward sensitivity parameter βA (left). rs and ps denote Spearman correlation coefficient and corresponding P value. When grouped by life happiness, participants with happiness at or above the median had a stronger βT − βA anticorrelation than participants with happiness below the median (right). Each dot is a participant (n=21,896). Each line is a linear best fit, and patches show the 95% confidence interval of this fit. The group difference in Spearman correlations was statistically confirmed using a z statistic. P values shown are 2-sided with no correction for multiple comparisons.

The direct relationship between depression risk and mood drift was significant, but its effect on model fit was very small. In our online participant LME model, higher depression risk score was significantly associated with less negative mood drift (depression-risk × time interaction, Mean ± SE = 0.515 ± 0.109 %mood/min, t(869) = 4.75, p < 0.001, Extended Data Figure 6). Whilst the model fit improved, the within-individual variance explained by the addition of this interaction term was very small (f² = 0.00289).39,40 Nevertheless, the interaction term’s significance was replicated in two more independent cohorts (including the mobile app cohort, where time sensitivity and life happiness were weakly anticorrelated, Extended Data Figure 7, bottom right) and was robust to methodological artefacts such as floor effects (Supplementary Notes E-G).
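For readers unfamiliar with the local effect size used here, Cohen's f² for adding a term B to a model A is (R²_AB − R²_A)/(1 − R²_AB). In mixed models it is often computed from within-individual (residual) variances; because R² = 1 − residual variance/null variance for both models, this reduces to the second form below. That the reported values were obtained exactly this way is our assumption.

```python
def cohens_f2(r2_without, r2_with):
    """Cohen's f^2 for adding a term: (R2_AB - R2_A) / (1 - R2_AB)."""
    return (r2_with - r2_without) / (1 - r2_with)

def cohens_f2_from_residual_variance(var_without, var_with):
    """Same quantity from residual (within-individual) variances of the two models:
    (var_A - var_AB) / var_AB."""
    return (var_without - var_with) / var_with

print(cohens_f2(0.5, 0.6), cohens_f2_from_residual_variance(1.0, 0.8))
```

By Cohen's conventional benchmarks (0.02 small, 0.15 medium, 0.35 large), an f² of 0.00289 falls well below a "small" effect, consistent with the text's description.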

Taken together, these results demonstrate relationships between mood drift and other important individual differences: depression risk, life happiness, and reward sensitivity.

Impact on Behaviour

Participants Are Less Likely to Gamble After Rest Periods

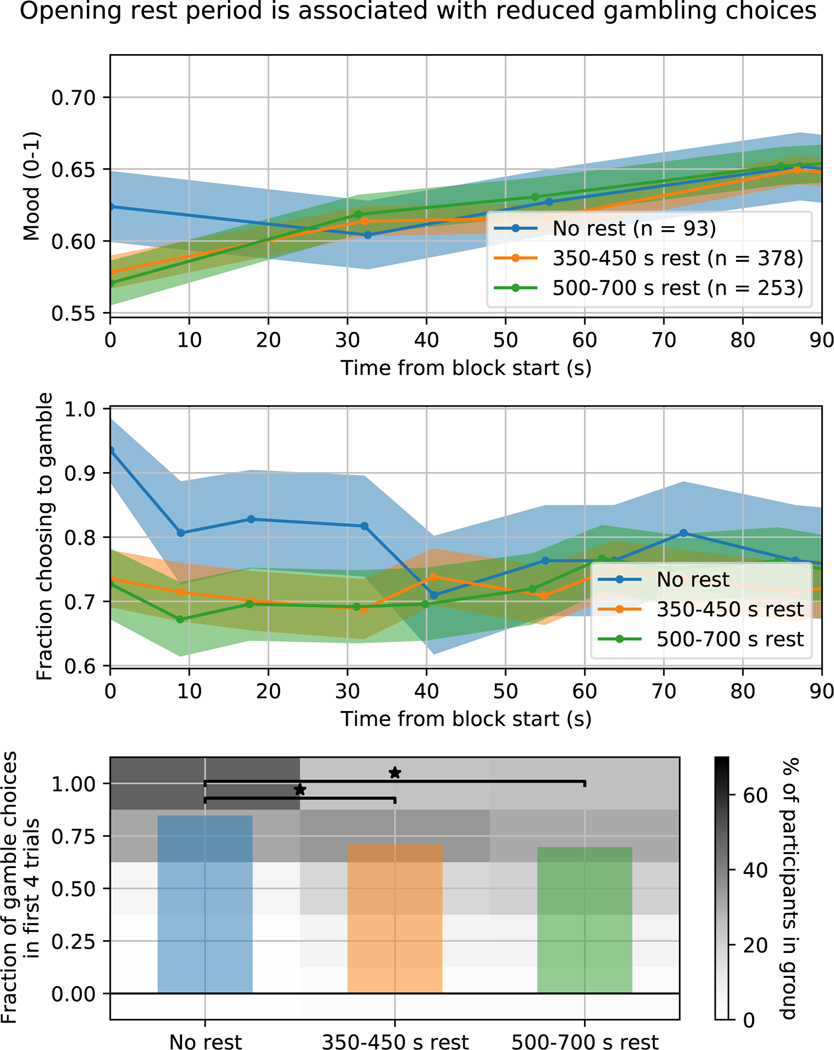

To investigate whether mood drift’s effects extend to behaviours beyond subjective mood reports, we examined the impact of rest and mood drift on behaviour in the gambling tasks. Past research has shown that a participant’s choice between a certain outcome and a more exciting but uncertain gamble is affected by mood as induced by unexpected gifts,41,42 music,43 and feedback.31 We asked whether mood drift would influence this behaviour in a similar way.

We observed that participants in the gambling task (specifically positive closed-loop gambling, in which participants tended to receive positive RPEs) who had a preceding rest or visuomotor task block had significantly lower mood at gambling onset than those who did not (median 0.55 vs. 0.66, IQR 0.28 vs. 0.31, 2-sided Wilcoxon rank-sum test, W(722) = 2.08, p = 0.0377) (Figure 5, top). This effect was no longer significant at the next mood rating, which took place around trial 4 of gambling. We therefore examined gambling behaviour in these first 4 trials. Those who had experienced either a short (350–450 s) or long (500–700 s) opening rest period were significantly less likely to gamble than those who had not (median = 3, IQR = 2 for both short- and long-rest groups, 2-sided Wilcoxon rank-sum test, no-rest vs. short-rest: W(469) = 4.85, p < 0.001; no-rest vs. long-rest: W(344) = 4.79, p < 0.001; both < 0.05/3, controlling for multiple comparisons) (Figure 5, bottom). However, we did not find evidence of a difference between the long- and short-rest groups (2-sided W(629) = 0.52, p = 0.603). Trial-wise differences in gambling behaviour between rest and no-rest groups are most pronounced in the first four trials, much like the differences observed in mood (Figure 5, middle). However, no significant correlation was observed between an individual’s mood drift parameter during the preceding rest block and the number of times they chose to gamble in the first 4 trials (rs = 0.0317, p = 0.427, 2-sided).

Figure 5:

Rest periods decreased the likelihood of choosing to gamble in the first 4 trials after rest ended. Top: mean ± standard error mood ratings across participants in their first block of (positive closed-loop) gambling preceded by different rest period durations. Middle: fraction of participants in each group that chose to gamble on each trial of this first gambling block (mean ± 95 percent confidence intervals derived from a binomial distribution). Bottom: bars show mean across participants of the fraction of the first 4 trials of this first gambling block that participants chose to gamble. Histogram shows the distribution of choices (i.e., to gamble on 0, 1, 2, 3, or 4 trials) within each group. Stars indicate that a pair of groups was significantly different (2-sided Wilcoxon rank-sum test, no-rest vs. short-rest: W(469) = 4.85, p < 0.001; no-rest vs. long-rest: W(344) = 4.79, p < 0.001; both < 0.05/3, controlling for multiple comparisons). Sample sizes: no-rest group, n = 93 participants; 350–450 s rest group, n = 378 participants; 500–700 s rest group, n = 253 participants.
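The per-trial gambling fractions with binomial 95% confidence intervals, and the per-participant count of gambles over the first 4 trials, can be sketched as follows. The choices are simulated, and the caption does not say which binomial interval was used; the exact (Clopper-Pearson) interval returned by SciPy's `binomtest(...).proportion_ci` is our assumption.

```python
import numpy as np
from scipy.stats import binomtest

rng = np.random.default_rng(3)
# Hypothetical data: True = chose to gamble; 93 participants x first 4 trials.
choices = rng.random((93, 4)) < 0.7
n = choices.shape[0]

for trial in range(choices.shape[1]):
    k = int(choices[:, trial].sum())
    ci = binomtest(k, n).proportion_ci(confidence_level=0.95)  # exact (Clopper-Pearson)
    print(f"trial {trial + 1}: {k / n:.2f} gambled, 95% CI [{ci.low:.2f}, {ci.high:.2f}]")

# Number of gambles per participant over the first 4 trials (0-4): the quantity
# compared between rest groups with the rank-sum test.
gambles_first4 = choices[:, :4].sum(axis=1)
```

With three pairwise group comparisons, the Bonferroni threshold used in the caption is 0.05/3 ≈ 0.0167 per test.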

Relationship to Boredom and Thought Content

We next examined whether the existing constructs of boredom or mind-wandering (MW) could trivially explain mood drift. In a preregistered (https://osf.io/gt7a8) data collection and analysis, we examined the relationship between mood drift and these more established constructs at the state level, state change level, and trait level (Supplementary Notes L-M). Participants were randomised to a boredom, MW, or Activities cohort (described previously) at the time of participation.

Mood Drift Over Time is Weakly Related to State Boredom

We assessed whether mood drift could be explained by boredom. Participants completed a rest block with interspersed mood ratings, plus a state boredom questionnaire (the Multidimensional State Boredom Scale’s short form, MSBS-SF)44 afterwards (cohort BoredomAfterOnly, n = 150), or before and afterwards (cohort BoredomBeforeAndAfter, n = 150), and a trait-boredom questionnaire (the short boredom proneness scale, SBPS).45

In our LME model of mood, we added a factor for final state boredom (i.e., at the end of the rest block). We then compared this baseline model to one that further added the interaction between final boredom and time. The difference represents the ability of boredom to account for mood drift. Whilst the model fit improved, the additional within-individual variance explained by this interaction term was very small (f² = 0.00578). The change in state boredom across the rest block produced similar results (f² = 0.0111).

Including time’s interaction with trait boredom in the model did not explain significant additional variance in mood (likelihood ratio test: χ²(1, N = 16) = 0.0253, p = 0.874).
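A likelihood ratio test compares nested models through the statistic 2 × (log-likelihood of the fuller model − log-likelihood of the reduced model), referred to a χ² distribution with degrees of freedom equal to the number of added parameters. The sketch below is a generic helper, not the authors' code; plugging in the reported statistic of 0.0253 with 1 degree of freedom recovers the reported p value. The log-likelihood values in the usage line are placeholders chosen to give that statistic.

```python
from scipy.stats import chi2

def likelihood_ratio_test(llf_reduced, llf_full, df_diff=1):
    """LR test for nested models: 2 * (llf_full - llf_reduced) ~ chi2(df_diff)."""
    stat = 2.0 * (llf_full - llf_reduced)
    return stat, chi2.sf(stat, df_diff)

# Placeholder log-likelihoods whose difference gives the reported statistic.
stat, p = likelihood_ratio_test(-1000.0, -1000.0 + 0.0253 / 2)
print(f"chi2(1) = {stat:.4f}, p = {p:.3f}")
```

When comparing fixed effects in a mixed model this way, both models should be fitted by maximum likelihood rather than REML (in statsmodels, `fit(reml=False)`), since REML likelihoods are not comparable across different fixed-effect structures.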

Mood Drift Over Time is Weakly Related to Thought Content

We also assessed whether mood drift could be explained by the content of ongoing thought, including the task-unrelated thought, stimulus-independent thought, and spontaneity often considered in definitions of MW.46 We note that such content-based definitions of MW are controversial and do not capture the dynamics-based definition espoused by some researchers.47,48 New participants completed a rest block with interspersed mood ratings, plus a Multidimensional Experience Sampling (MDES) questionnaire49 afterwards (cohort MwAfterOnly, n = 150), or before and afterwards (cohort MwBeforeAndAfter, n = 150), and a trait-MW questionnaire (the mind-wandering questionnaire (MWQ)50). MDES results produce 13 principal components that attempt to capture the content of ongoing thought. We investigated how well this complete collection of components explains within-individual mood variance.

In our LME model of mood, we added 13 factors for “final” MDES components (i.e., at the end of the rest block). We then compared this baseline model to one that further added the 13 interactions between these final-MDES components and time. The difference represents the ability of MDES components to account for mood drift. Whilst the model fit improved, the within-individual variance explained by the addition of these new interaction terms was small (f² = 0.0227). The change in MDES components across the rest block produced similar results (f² = 0.0380). Including time’s interaction with trait MW in the model did not explain significant additional variance in mood (χ²(1, N = 16) = 0.305, p = 0.581).
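Principal component scores of the kind entered into the LME model can be obtained by standardising the questionnaire items and taking a singular value decomposition. This is a generic PCA sketch on simulated responses; the item count (13, so that 13 components result), the 1 to 9 response range, and the standardisation step are illustrative assumptions, not details taken from the MDES protocol.

```python
import numpy as np

rng = np.random.default_rng(4)
# Hypothetical MDES-style responses: 300 participants x 13 items rated 1-9.
items = rng.integers(1, 10, size=(300, 13)).astype(float)

# Standardise each item, then run PCA via singular value decomposition.
z = (items - items.mean(axis=0)) / items.std(axis=0)
u, s, vt = np.linalg.svd(z, full_matrices=False)
component_scores = z @ vt.T          # one column per principal component
explained = s ** 2 / np.sum(s ** 2)  # fraction of variance captured by each component
print(np.round(explained, 3))
```

Each column of `component_scores` could then enter the mood model as a fixed effect, with its product with time as the corresponding interaction term.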

Discussion

In this study, we describe the discovery of a highly replicable and relatively large effect which we call Mood Drift Over Time: the average participant’s mood gradually declined with time as they completed simple tasks or rest periods. Mood’s sensitivity to the passage of time is a long-intuited phenomenon that is widely acknowledged in literature51–53 and philosophy.54–56 Our results provide robust empirical evidence for this phenomenon and reveal its temporal structure, its variability across individuals, and its level of stability. These results call into question the long-held constant affective background assumption in behavioural and affective science.

The mechanism that enables mood to be sensitive to the passage of time is not yet known. One possibility is that humans store expectations about the rate of rewards and punishments in the environment and that prolonged periods of monotony violate such expectations. Such a view aligns with recent theoretical work on integrating opportunity cost across time to guide behaviour.21 Lower mood could function as an estimate of that opportunity cost, making mood drift an adaptive signal that informs decisions to exploit (stay on task) or explore (switch task).20

Supporting this reward/cost-based interpretation of our findings is our observation that depressed participants showed less negative mood drift. This may at first seem paradoxical, since phenomena such as boredom have traditionally been linked to melancholia and depression (e.g., by Schopenhauer57 and Kierkegaard58). Yet it has been argued cogently59 that such a view conflates negative affect as a trait (e.g., proneness to boredom) with negative affect as a state (a momentary experience). Since valuation of reward is thought to be reduced in depression,37,38 it is possible that misalignment with one’s goals and violation of reward expectations, and the resulting downward mood drift, will be less pronounced in depression. This interpretation is supported by our finding that mood drift is less pronounced in those with lower reward sensitivity, and that the relationship between reward sensitivity and mood drift was moderated by depression risk (Figure 4). It is tempting to speculate that reduced mood drift could contribute to reduced motivation for action or environmental change in those with depression.

We found that mood declined during rest and tasks (including a mobile app more engaging than most experiments) but not freely chosen activities. This suggests that researchers are subjecting their participants to an unnatural stressor in their experiments without accounting for it in their analyses or interpretations. Changes in mood on the scale of tens of minutes prevent these longer blocks of time from being truly interchangeable. This means that variations in experimental procedures that might seem inconsequential could still introduce confounds.

For example, consider a large collaborative study based on multisite imaging data collection, such as ENIGMA.60 In this dataset, centres vary in the duration of the resting-state fMRI scan and whether it takes place at the start or end of the scan session.61 This could lead to high variability between sites simply because participants at sites with longer or later scans spent more of the scan in a bad mood. At best, the neural correlates of that decreased mood will be uncorrelated with the effect of interest, increasing noise and reducing statistical power. At worst, they could be mistaken for neural correlates of a certain genotype that is more common in the country where the longer scans took place. (We do not imply that mood drift lowers reliability in resting-state MRI;62–64 we simply point out its role as a potential confound when drawing inferences about mood and brain states during/after rest.)

In this paper, we introduce the new term Mood Drift Over Time for the following reasons. First, the phenomenon is highly replicable; second, it is of considerable effect size; third, it is relevant to both everyday situations and to scientific experiments; fourth, mood drift does not seem to be captured by existing terms such as boredom or mind-wandering. We employ the term mood drift in the spirit of describing a mental phenomenon,65–67 as a first step before explaining or categorising it. It is possible that mechanisms for mood drift are reward sensitivity and opportunity cost, yet the subjective experience and its influence on the outcome of experimental studies seem to require the separate term that we have introduced.

The distinction between mood drift and boredom requires special consideration due to their apparent similarities. State boredom assessed using the MSBS-SF44 accounted for modest variance beyond other factors. Of course, the MSBS is only one (relatively well established) way of measuring boredom; moreover, there is debate about the very conceptualisation of boredom and its heterogeneity.22,59,68 Therefore, we cannot conclude purely from these results that boredom is not driving mood drift. Future work might instead ask participants to directly report their boredom,69 enabling more frequent assessment of boredom as an emotion.70

Importantly, we show that accounting for time using a linear term significantly improves the fit of a computational model of mood. A linear term may be unrealistic as we expect that on a bounded mood scale, the effect will eventually saturate. However, we propose that until alternative models have been established, the linear term may be a good-enough way to account for the substantial effects of mood drift on the time scale of most experiments.
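The following Python sketch illustrates what adding a linear time term to a discounted-sum mood model looks like. It is a simplified, hypothetical form and not the fitted model from this study: only reward prediction errors enter the discounted sum, and the parameter names (w0, w_rpe, gamma, beta_time) and example values are assumptions chosen for illustration.

```python
from typing import List, Sequence


def predicted_mood(
    rpes: Sequence[float],
    times_min: Sequence[float],
    w0: float,
    w_rpe: float,
    gamma: float,
    beta_time: float,
) -> List[float]:
    """Predict mood after each trial as a baseline, plus an exponentially
    discounted sum of past reward prediction errors, plus a linear drift in time:

        mood[t] = w0 + w_rpe * sum_{j<=t} gamma**(t - j) * rpe[j] + beta_time * time[t]
    """
    if len(rpes) != len(times_min):
        raise ValueError("rpes and times_min must have the same length")
    moods = []
    discounted_sum = 0.0
    for rpe, t_min in zip(rpes, times_min):
        # Recursive form of the discounted sum: older events shrink by gamma each trial.
        discounted_sum = gamma * discounted_sum + rpe
        moods.append(w0 + w_rpe * discounted_sum + beta_time * t_min)
    return moods


# With no prediction errors at all, the prediction is a straight line: drift alone.
flat = predicted_mood([0.0] * 4, [0.0, 1.0, 2.0, 3.0],
                      w0=0.6, w_rpe=0.1, gamma=0.7, beta_time=-0.01)
```

Because beta_time multiplies elapsed time directly, the predicted drift never levels off; this is exactly the saturation caveat raised above for a bounded mood scale.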

Our study has several strengths, including adherence to good data analysis practices such as preregistration and replication, the addition of a longitudinal design to test reliability, and the use of rigorous computational modelling (including train-test splits and regularisation). Our study demonstrated the effect in adolescents as well as adults and showed how the effect differs in people with varying reward sensitivity and depression risk. We used control experiments to eliminate potential confounds and test alternative explanations (Supplementary Notes C-G).

Yet our study should also be seen in light of some shortcomings.

First, this study uses self-reported momentary mood ratings as in previous studies with similar methodology.2,3 Such ratings can be criticised as being subjective and difficult to interpret. However, mood is a well-established construct of central importance to affective science. Its definition as a long-duration affective state that is not immediately responsive to stimuli71,72 makes it central to the study of mood disorders defined by long-term affect.73 Mood is distinct from emotion, in part, by being less temporally responsive.74–76 Mood’s links to long-term context make it the more useful construct for describing gradual changes in affect.

Despite its subjectivity, self-report remains the gold standard for the measurement of mood and emotion.76–78 It is widely used in clinical,79 epidemiological,80 and psychological research (including ecological momentary assessment81). Other physiological “markers” of affect are typically benchmarked against these self-reports. And evidence suggests that these candidates lack the reliability of self-reports: different emotions cannot be distinguished by their autonomic nervous system signatures,82 facial expressions,83,84 or neural activity.85 In our experiments, initial mood ratings showed strong association with trait mood ratings, underscoring their psychometric validity (Extended Data Figure 8).

Our study cannot conclusively determine mood drift’s behavioural consequences. On average, rest induces downward mood drift (Figure 2) and decreases gambling behaviour (Figure 5). However, we did not observe a significant correlation between an individual’s mood drift and their gambling behaviour. Our results therefore cannot discern whether the change in behaviour is directly linked to mood drift or to some other consequence of rest.

Our study’s limited set of tasks, all of which induced mood drift, makes it difficult to discern the phenomenon’s key contributing factors. We chose to focus on a category that is extremely common in neuroscience: long, neutral, low-stimulation tasks. Most researchers would see these qualities as unobjectionable or even desirable. We hope that the results of this study will lead researchers to reexamine this idea in their own research.

Methods

Participants

Online Adult Participants

Online adult participants were recruited using Amazon Mechanical Turk (Amazon.com, Inc., Seattle, WA), a service that allows a person needing work done (a “requester”) to pay other people (“workers”) to do computerised tasks (“jobs”) from home.86 Requesters can use “qualifications” to require certain demographic or performance criteria in their participants. We required that our participants be adults living in the United States, that they have completed over 5,000 jobs for other requesters, and that over 97% of their jobs have been satisfactory to the requester. We also required that participants had not performed any of our tasks (which were relatively similar to the ones in this study) before.

Every online participant received the same written instructions and provided informed consent on a web page where they were required to click “I Agree” to participate. Because we did not obtain information by direct intervention or interaction with the participants and did not obtain any personally identifiable private information, our MTurk studies were classified as not human subjects research and were determined to be exempt from IRB review by the NIH Office of Human Subjects Research Protections (OHSRP). The consent process and task/survey specifics were approved by the OHSRP. For data to be included in the final analyses, participants were required to complete both a task and a survey (described below). Participants submitted a 6-to-10-digit code revealed at the end of each one to prove that they had completed it. Both the task and survey had to be completed in a 90-minute period starting when they accepted the job on Amazon Mechanical Turk.

The consent form included a description of the tasks they were about to perform, but participants were blinded to the specific cohort to which they had been assigned. Most cohorts were collected in series, but in some cases participants were randomised to a cohort at the time of participation (we have specified these in the Methods or Results). In the initial cohorts, no statistical methods were used to pre-determine sample sizes, but our cohort sample sizes are similar to those reported in,2 and our combined cohorts are much larger.

914 participants completed the task online. Some data files did not save properly due to technical difficulties or the participant closing the task window before being asked to do so. 44 participants whose task or survey data did not save were excluded. Of the 870 remaining Mechanical Turk participants, 390 were female (44.8%). Participants had a mean age of 37.6 years (range: 19–74).

A subset of the online adult participants were invited to return the following day to repeat the same task and survey a second time. Of the 66 individuals who completed both the task and the survey on the first day, 53 (80.3%) completed the task and survey on the second day. Gambling trials were randomised independently so that the subject was not seeing the exact same trials both times. Participants could complete the second task and survey any time in the following three days, but the task and survey had to be done together in the same 90-minute period.

Similarly, a different cohort was invited to return a week after their first run to repeat the same task and survey. These participants could complete the second task and survey any time in the following six days, but the task and survey had to be done together in the same 90-minute period. This cohort was then invited to complete the same task and survey a third time, two weeks after their first run. 196 individuals completed the task and survey the first week. 163 (83.2%) of these completed the task and survey the second week and 158 (80.6%) completed the task and survey the third week. 149 (76.0%) individuals completed the task and survey in all three weeks.

Online Adolescent Participants

Adolescent participants recruited in person at the National Institute of Mental Health were also invited to participate by completing a similar task on their computer at home. These participants completed a different set of questionnaires, developed for adolescents, about their mental health. Every participant received the same scripted instructions and provided informed consent to a protocol approved by the NIH Institutional Review Board.

There were 230 adolescents enrolled in the NIMH depression characterization study who were invited to complete tasks for this study. 129 agreed, a participation rate of 56.1%. 10 adolescents who had not completed all three questionnaires were excluded from the results, as were 3 participants who declined to allow their data to be shared openly. Of the remaining 116 adolescent participants, 77 were female (66.4%). They had a mean age of 16.3 years (range: 12–19). 56 participants (48.3%) had been diagnosed with major depressive disorder (MDD) by a clinician at the NIH, and 4 (3.4%) were determined to have sub-clinical MDD. Participants had a mean depression score of MFQ = 6.5 (± 5.5 SD) and a mean anxiety score of SCARED = 2.2 (± 3.0 SD).

To assess the stability of findings in this population, the in-person adolescent participants were invited to return each week to complete the same task again, up to three times. 82 (70.7%) individuals completed the task a week later and 4 (3.4%) completed the task a third time the following week. The analyses presented in this paper use only the first run from this cohort.

Boredom, Mind-Wandering, and Activities Participants

In response to reviewer comments, a preregistered follow-up analysis included five new cohorts of MTurk participants who received similar tasks that also included mood ratings, rest periods, and the gambling game. This group was recruited to investigate the impacts of boredom and mind-wandering on mood changes, so they completed surveys about these traits in addition to the demographics, CES-D, and SHAPS questions. Participants were randomised to one of these 5 “follow-up cohorts,” summarised in Extended Data Table 1:

BoredomBeforeAndAfter (n=150), who received a boredom state questionnaire both before and after a 7-minute rest period with 15 s of rest between mood ratings.

BoredomAfterOnly (n=150), who received a boredom state questionnaire only after a 7-minute rest period with 15 s of rest between mood ratings.

MwBeforeAndAfter (n=150), who received a multidimensional experience sampling (MDES) questionnaire both before and after a 7-minute rest period with 15 s of rest between mood ratings.

MwAfterOnly (n=150), who received an MDES questionnaire only after a 7-minute rest period with 15 s of rest between mood ratings.

Activities (n=450), who received instructions to leave the task for 7 minutes and perform activities of their choice, completing mood ratings just before and after this period.

After the rest periods described above, each group completed a block of negative closed-loop gambling trials and a block of positive closed-loop gambling trials (as described in the “Gambling Blocks” section). Details of the cohorts’ tasks are found in the following sections. A full description of the preregistered tasks and analyses can be found at https://osf.io/gt7a8, registered on November 18, 2021. 1143 participants completed these tasks online. 93 participants were excluded because their task or survey data was incomplete or did not save, because they completed the task more than once despite instructions to the contrary, or because they failed to answer one or more “catch” questions correctly on the survey. Of the 1050 remaining participants, 463 were female (44.1%). Participants had a mean age of 39.3 years (range: 20–80).

The above sample sizes were selected using power calculations described in detail in the preregistration. For the scale validation experiments, a sample size of 150 in each group with an alpha of 0.01 gives 99.02% power to detect a medium effect (d = 0.5) and 83.04% power to detect an intermediate effect (d = 0.3) assuming the effect truly is null at a population level. Power for linear multiple regression tests was calculated in G*Power.87 In the boredom and MW cohorts, samples of 150 participants were selected to provide 80% power to detect a 7.99% increase in variance explained with the inclusion of a single parameter (alpha = 0.01, total predictors) and 95% power to detect a 12.18% increase in variance explained. In analyses using a pair of cohorts, 300 participants give 80% power to detect a 3.93% increase in variance explained and 95% power to detect a 6.01% increase in variance explained. An Activities cohort of 450 participants was chosen to provide 80% power to detect a difference between the Activities and MTurk cohorts of Cohen’s d = 0.2, and it also provides 80% power to detect a decrease in mood in the Activities cohort of Cohen’s d = 0.15.

Mobile App Participants

Gambling behaviour and mood rating data were collected from a mobile app called “The Great Brain Experiment”, described in.3 The Research Ethics Committee of University College London approved the study. When participants opened the app for the first time, they gave informed consent by reading a screen of information about the research and clicking “I Agree.” They then rated their life satisfaction as an integer between 0 (not at all) and 10 (completely). Any time they used the app after this, participants could then choose between several games, including one called “What makes me happy?” that was used in this research. We used a subset of 26,896 people, primarily from the US and UK, in our analyses. The median life satisfaction of the included participants, which will be used as a proxy for depression risk in this cohort, was 7/10. Age for this cohort was provided in bands. These are the bands and number of individuals in each band in the subset of data used in our analysis: 18–24 (6,500), 25–29 (4,522), 30–39 (7,190), 40–49 (4,829), 50–59 (2,403), 60–69 (1,158), and 70+ (294). 13,168 were female (49.0%).

Mobile app participants were randomly split into an exploratory cohort of 5,000 participants and a confirmatory cohort of all remaining participants. All analyses and hyperparameters involving mobile app participants were optimised using only the exploratory cohort, then tested on the confirmatory cohort. These confirmatory analyses were preregistered on the Open Science Framework (https://osf.io/paqf6, registered on January 29, 2021).
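A random exploratory/confirmatory split of this kind can be made reproducible by fixing the seed of a local random generator. The Python sketch below uses hypothetical integer participant IDs and an arbitrary seed; the seed and procedure actually used for the split are not stated here.

```python
import random


def split_exploratory_confirmatory(participant_ids, n_exploratory=5000, seed=0):
    """Randomly assign n_exploratory participants to an exploratory cohort and
    all remaining participants to a confirmatory cohort (original order kept)."""
    ids = list(participant_ids)
    if n_exploratory > len(ids):
        raise ValueError("n_exploratory exceeds the number of participants")
    rng = random.Random(seed)  # local generator, so the split is reproducible
    chosen = set(rng.sample(ids, n_exploratory))
    exploratory = [pid for pid in ids if pid in chosen]
    confirmatory = [pid for pid in ids if pid not in chosen]
    return exploratory, confirmatory


# Hypothetical IDs standing in for the 26,896 app users.
explore, confirm = split_exploratory_confirmatory(range(26896))
print(len(explore), len(confirm))  # 5000 21896
```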

In the linear mixed effects model described below, we made an effort to exclude participants who were outliers in the time they took to complete the task. Such outliers would have a large effect on the LME model’s mood slope term, where non-zero slopes would lead to large errors in these outlier participants. Outlier completion times also suggest that the participant was not fully paying attention to the task, either by responding without thinking or leaving the app for an extended period. Mobile app participants with an average task completion time that was less than Q1 − 1.5 * IQR or greater than Q3 + 1.5 * IQR (where Q1 is the 25th percentile, Q3 is the 75th percentile, and IQR = Q3-Q1) were excluded from this linear mixed effects analysis. 4.65% of participants were excluded based on these criteria, leaving n = 20,877 mobile app participants.
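The exclusion rule above (Tukey's fences) can be written in a few lines of Python. This sketch assumes percentiles computed by linear interpolation (statistics.quantiles with method="inclusive", which matches NumPy's default percentile method); the completion times in the example are hypothetical.

```python
import statistics


def iqr_keep_mask(values):
    """Return True for each value inside [Q1 - 1.5*IQR, Q3 + 1.5*IQR], False otherwise."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [lower <= v <= upper for v in values]


# Hypothetical average completion times (seconds); the last participant is an outlier.
times = [290, 300, 305, 310, 315, 320, 330, 900]
keep = iqr_keep_mask(times)
kept_times = [t for t, k in zip(times, keep) if k]
```

Here Q1 = 303.75 and Q3 = 322.5, so the fences are 275.625 and 350.625 and only the 900 s participant is dropped.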

Task and Survey

The online tasks were created using PsychoPy3 (v2020.1.2) and were uploaded to the task hosting site Pavlovia for distribution to participants. Pavlovia used the JavaScript package PsychoJS to display tasks in the web browser. Each task used the latest version of Pavlovia and PsychoJS available at the time of data collection. A list of all cohorts collected can be seen in Extended Data Table 1.

Mood Ratings

The task given to online participants is outlined in Figure 1A. Periodically during all tasks, participants were asked to rate their mood. Participants first saw the question “How happy are you at the moment?” for 3 seconds. Then a slider appeared below the question, with a scale whose ends were labeled “unhappy” and “happy.” A red circle indicated the current slider position, and it started in the middle for each rating. Participants could press and hold the left and right arrow keys to move the slider, then spacebar to lock in their response. If the spacebar was not pressed in 4.5 seconds, the current slider position was used as their mood rating.

As part of the instructions at the start of each run, the participant was asked to rate their overall “life happiness” in a similar (but slightly slower) rating. In this case, participants first saw the question “Taken all together, how happy are you with your life these days?” for 4 seconds. The slider then appeared, and the participant had 6.5 seconds to respond.

In one alternative version of the task, participants were asked to rate their mood with a single keypress instead of a slider. They could press a key 1–9 to indicate their current mood, where 1 indicated “very unhappy” and 9 indicated “very happy.” This alternative version was used to investigate the possibility that mood effects could be an artefact of the rating method, where participants’ ratings converged to the middle because this rating required the least effort.

Rest Blocks

In some blocks, participants were asked to simply rest in between mood ratings. These rest periods consisted of a central fixation cross presented on the screen. The duration of the rest period was 15 seconds for most versions of the experiment. For some versions, this duration was made longer or shorter to disentangle the impacts of rating frequency and elapsed time on mood, investigating the possibility that the mood ratings themselves were aversive.

Thought Probes and Activities Questions

Follow-up versions of the task included thought probes about state boredom or the emotional valence of ongoing thought (including mind-wandering). These groups received rest blocks as described above, but with additional questions just before and/or after them.

Two cohorts were collected to quantify the relationship between mood drift and boredom. Each received a rest period with mood ratings 20 seconds apart, followed by the Multidimensional State Boredom Scale’s short form (MSBS-SF), an 8-item scale of state boredom.44 Participants rated statements like “I feel bored” on a 7-point Likert scale from 1 (“Strongly Disagree”) to 7 (“Strongly Agree”). Their level of boredom was quantified as the sum of their ratings on the 8 questions. The first (cohort BoredomBeforeAndAfter, n = 150) completed the MSBS-SF both before and after the rest period. The second (cohort BoredomAfterOnly, n = 150) completed the MSBS-SF only after the rest period.
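For concreteness, the scoring rule above can be expressed as the following Python sketch. It assumes, as described, a plain sum with no reverse-keyed items, so totals range from 8 to 56. The change-score helper for the BoredomBeforeAndAfter cohort is a hypothetical illustration, not a documented analysis step.

```python
MSBS_SF_ITEMS = 8


def msbs_sf_total(ratings):
    """Sum the eight MSBS-SF item ratings (each 1 = Strongly Disagree ... 7 = Strongly Agree)."""
    if len(ratings) != MSBS_SF_ITEMS:
        raise ValueError(f"expected {MSBS_SF_ITEMS} ratings, got {len(ratings)}")
    if any(not 1 <= r <= 7 for r in ratings):
        raise ValueError("each rating must lie between 1 and 7")
    return sum(ratings)


def boredom_change(before, after):
    """Hypothetical change score: post-rest total minus pre-rest total."""
    return msbs_sf_total(after) - msbs_sf_total(before)


print(boredom_change([2] * 8, [5, 6, 4, 5, 6, 5, 4, 5]))  # 24
```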

Two other cohorts were collected to quantify the relationship between mood drift and the emotional valence of ongoing thought (including mind-wandering). Each participant in the two mind-wandering cohorts received a rest period with mood ratings 20 seconds apart, followed by a 13-item Multidimensional Experience Sampling (MDES) as described by Turnbull et al.49 Participants were asked to respond to a set of questions by clicking on a continuous slider. Most questions, like “my thoughts were focused on the task I was performing”, were rated from “not at all” (scored as −0.5) to “completely” (scored as 0.5). The first (cohort MwBeforeAndAfter, n = 150) completed the MDES both before and after the rest period. The second (cohort MwAfterOnly, n = 150) completed the MDES only after the rest period.

As described by Ho et al.,88 we used principal components analysis (PCA) to quantify the affective valence of thought at each administration of MDES. We first compiled the MDES responses of all participants in the MwAfterOnly group into a matrix with 13 (the number of items in each administration) columns and 450 (the number of administrations) rows. We then used scikit-learn’s PCA function to find 13 orthogonal dimensions explaining the MDES variance. The use of PCA orthogonalises the MDES responses, which is desirable for their use as explanatory variables in an LME.35

For a preregistered analysis, we focused on the emotional content of ongoing thought (this approach was later abandoned in favour of examining the collective predictive power of all 13 MDES components; Supplementary Notes L-M). By examining the component matrix, we identified the component that loaded most strongly onto the “emotion” item of the MDES (in which participants reported their thoughts as being negative or positive). The “emotion dimension” of each MDES administration (in both MW cohorts) was then quantified as the amplitude of this component, calculated by applying this prelearned PCA transformation to the data and extracting the corresponding column. The sign of PCA components is not meaningful, so we arbitrarily chose that increased emotion dimension would represent more negative thoughts.
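The PCA steps described in the two preceding paragraphs can be sketched in Python as follows. The study used scikit-learn's PCA; to keep this sketch dependency-light it uses NumPy's SVD on column-centred data, which yields the same principal axes up to sign. The response matrix is random placeholder data, the column index of the “emotion” item is hypothetical, and the sketch assumes the emotion item is scored so that higher values mean more positive thought.

```python
import numpy as np


def fit_pca(X):
    """Fit PCA by singular value decomposition of the column-centred data.

    Returns the column means and a (n_components x n_items) matrix whose rows
    are orthonormal component loadings, ordered by variance explained.
    """
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt


def transform(X, mean, components):
    """Project (possibly new) responses onto previously learned components."""
    return (X - mean) @ components.T


rng = np.random.default_rng(0)
# Placeholder for MDES responses: 450 administrations x 13 items on the -0.5..0.5 range.
X = rng.uniform(-0.5, 0.5, size=(450, 13))
EMOTION_ITEM = 3  # hypothetical column index of the "emotion" item

mean, components = fit_pca(X)
# Component with the largest absolute loading on the emotion item.
emotion_pc = int(np.argmax(np.abs(components[:, EMOTION_ITEM])))
# PCA signs are arbitrary: flip so that higher scores mean more negative thought.
sign = -np.sign(components[emotion_pc, EMOTION_ITEM])
emotion_scores = sign * transform(X, mean, components)[:, emotion_pc]
```

After the sign flip, the emotion scores are negatively related to the raw emotion item by construction, which mirrors the convention chosen in the text.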

Another follow-up task investigated the impact on mood of a break period where participants were released to do whatever they wanted. Just before this break period, an alarm sound was played on repeat, and participants were asked to increase the volume on their computer until they could hear the alarm clearly. Participants were informed that they would have 7 minutes to put the task aside and do something else but should be ready to come back when the alarm sounded at the end. After these instructions and before the break, they rated their mood. During the break, the task window displayed a message saying “this is the break. An alarm will sound when the break is over.” After the alarm sounded and participants returned, they rated their mood again. They were then asked 27 questions about how much of the break they spent doing various activities. They were asked to rate each by clicking on a 5-point Likert scale with options labeled “not at all” (scored as 0%), “a little” (scored as 25%), “about half the time” (scored as 50%), “a lot” (scored as 75%), or “the whole time” (scored as 100%). These scores were used to roughly describe the most common activities performed by the participants during the break.
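The Likert scoring above can be turned into a ranking of activities with a short Python sketch. The label-to-share mapping follows the text (expressed as fractions rather than percentages); the activity names and responses in the example are hypothetical stand-ins for the 27 questions, and averaging across participants is an assumed way of summarising them.

```python
LIKERT_TO_FRACTION = {
    "not at all": 0.00,
    "a little": 0.25,
    "about half the time": 0.50,
    "a lot": 0.75,
    "the whole time": 1.00,
}


def mean_time_share(responses):
    """Average scored share of the break per activity.

    responses: list of dicts, one per participant, mapping activity -> Likert label.
    Returns a dict of activity -> mean fraction, ordered from most to least common.
    """
    totals, counts = {}, {}
    for participant in responses:
        for activity, label in participant.items():
            totals[activity] = totals.get(activity, 0.0) + LIKERT_TO_FRACTION[label]
            counts[activity] = counts.get(activity, 0) + 1
    means = {a: totals[a] / counts[a] for a in totals}
    return dict(sorted(means.items(), key=lambda kv: kv[1], reverse=True))


# Hypothetical responses for two activities.
example = [
    {"browsing the web": "a lot", "household chores": "not at all"},
    {"browsing the web": "about half the time", "household chores": "a little"},
]
print(mean_time_share(example))  # {'browsing the web': 0.625, 'household chores': 0.125}
```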

Participants were randomised to one of the follow-up cohorts described in this section at the time of participation.

Task Blocks

In some blocks, participants completed a simple visuomotor task. In this task, the fixation cross moved back and forth across the screen in a sine wave pattern (peak-peak amplitude: 1x screen height, period: 4 seconds). Participants were asked to press the spacebar at the exact moment when the cross was in the center of the screen (as denoted by a small dot). In some blocks, they received feedback on their performance: each time they responded, the white cross turned green for 400 ms if the spacebar was pressed within the middle 40% of the sine wave’s position amplitude (i.e., less than 0.262 seconds before or after the actual center crossing).
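The 0.262 s figure follows from the stated geometry, which the Python sketch below checks. It assumes the cross position is x(t) = A sin(2πt/T) with T = 4 s, measured from a centre crossing. The middle 40% of the peak-to-peak range 2A is then |x| ≤ 0.4A, so the window half-width is arcsin(0.4)·T/(2π).

```python
import math

PERIOD_S = 4.0          # sine period from the task description
WINDOW_FRACTION = 0.40  # middle 40% of the peak-to-peak excursion


def feedback_window_s(period_s=PERIOD_S, window_fraction=WINDOW_FRACTION):
    """Half-width (s) of the window around a centre crossing in which a press
    counts as on target, for position x(t) = A * sin(2*pi*t / period).

    The middle `window_fraction` of the peak-to-peak range 2A is
    |x| <= window_fraction * A, so the window ends where
    sin(2*pi*t / period) = window_fraction.
    """
    return math.asin(window_fraction) * period_s / (2 * math.pi)


def is_hit(press_time_s, crossing_time_s, period_s=PERIOD_S):
    """True if the press fell strictly within the feedback window around a crossing."""
    return abs(press_time_s - crossing_time_s) < feedback_window_s(period_s)


print(round(feedback_window_s(), 3))  # 0.262
```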

Gambling Blocks

In each trial of the gambling task, participants saw a central fixation cross for 2 seconds. Three boxes with numbers in them then appeared. Two boxes on the right side of the screen indicated the possible point values they could receive if they chose to gamble (the “win” and “loss” values). On the left side, a single number indicated the points they would receive if they chose not to gamble (the “certain” value). Participants had 3 seconds to press the right or left arrow key to indicate whether they wanted to gamble or not. If no choice was made, gambling was chosen by default. After making their choice, the option(s) not chosen would disappear. If they chose to gamble, both possible gambling outcomes appeared for 4 seconds, then the actual outcome appeared for 1 second. If they chose not to gamble, the certain outcome appeared for 5 seconds. The locations (top/bottom) of the higher and lower gambling options were randomised.

The gambling outcome values were calculated according to several rules depending on the version of the experiment. In each version, the “base” value was a random value between −4 and 4 points. The other value was this base value plus a positive or negative reward prediction error (RPE). If they chose to gamble, participants would always receive the base value + RPE option. To encourage gambling, the “certain” value was set to (win + 2 * loss)/3, or 1/3 of the way from the loss value to the win value. (Note that this rule was the same for every subject and was therefore unlikely to drive individual differences in gambling behaviour.)

In the “random” version, the RPE was a random value with uniform distribution between −5.0 and 5.0. RPE magnitudes of less than 0.03 were increased to 0.03. If 3 trials in a row happened to have the same outcome (win or loss), the next trial was forced to have the other outcome.
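The trial-generation rules for the random version can be sketched in Python as follows. This is an illustrative reconstruction, not the task code: it assumes base values and RPEs are drawn from continuous uniform distributions, treats a trial as a win whenever the RPE is positive (regardless of the participant's choice), and implements the three-in-a-row rule by flipping the sign of the next RPE.

```python
import random

MIN_RPE = 0.03


def certain_value(win, loss):
    """Certain option placed one third of the way from the loss to the win value."""
    return (win + 2 * loss) / 3


def random_version_trials(n_trials, seed=None):
    """Generate gamble values following the "random" version rules.

    Each trial draws a base value in [-4, 4] and an RPE in [-5, 5]; the gamble's
    actual outcome is base + RPE, so the trial is a win when RPE > 0. RPEs smaller
    than 0.03 in magnitude are pushed out to 0.03, and after three wins (or three
    losses) in a row the next RPE's sign is flipped to force the other outcome.
    """
    rng = random.Random(seed)
    trials, recent = [], []
    for _ in range(n_trials):
        base = rng.uniform(-4.0, 4.0)
        rpe = rng.uniform(-5.0, 5.0)
        if abs(rpe) < MIN_RPE:
            rpe = MIN_RPE if rpe >= 0 else -MIN_RPE
        if len(recent) >= 3 and len(set(recent[-3:])) == 1:
            # Three identical outcomes in a row: force the opposite outcome.
            rpe = -abs(rpe) if recent[-1] else abs(rpe)
        outcome = base + rpe
        win, loss = max(base, outcome), min(base, outcome)
        trials.append({"win": win, "loss": loss,
                       "certain": certain_value(win, loss), "outcome": outcome})
        recent.append(rpe > 0)
    return trials
```

Since the certain value sits one third of the way from the loss to the win, it is always below the midpoint of the two gamble values, so the gamble has the higher expected value whenever wins and losses are equally likely.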

In the “closed-loop” version, RPEs were calculated based on the difference between a participant’s mood and a “target mood” of 0 or 1. Some blocks of trials were “positive” blocks in which the participant had a 70% chance of winning on each trial (“positive congruent trials”) and a 30% chance of losing (“positive incongruent trials”). Other blocks were “negative” blocks in which the participant had a 70% chance of losing on each trial (“negative congruent trials”) and a 30% chance of winning (“negative incongruent trials”). If there had been 3 incongruent trials in a row, the next trial was forced to be congruent. The RPE was calculated as in a Proportional-Integral (PI) controller: a weighted sum of the current difference and the integral across all such differences reported so far in the block. The weightings were different for congruent and incongruent trials. Specifically, the RPE was set to:

Here, t is the trial index relative to the start of the block, M(t) is the mood reported after trial t, and the target mood is that of the current block. RPEs with a magnitude of less than 0.03 were assigned a magnitude of 0.03.
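Because the block-specific weightings are not listed in this section, the Python sketch below shows only the generic proportional-integral structure described above. The gains k_p and k_i are hypothetical, the error is assumed to be (target − mood) so that moods below target produce positive RPEs, and the sketch does not attempt to reproduce how the study set the weights or signs for incongruent trials.

```python
MIN_RPE = 0.03


def pi_rpe(moods, target, k_p, k_i):
    """Proportional-integral RPE for the next trial of a closed-loop block.

    moods:    mood ratings reported so far in the current block, oldest first
    target:   the block's target mood (e.g. 1 for a positive block, 0 for a negative one)
    k_p, k_i: proportional and integral gains (hypothetical values; the study used
              different weightings for congruent and incongruent trials)
    """
    if not moods:
        raise ValueError("need at least one mood rating in the block")
    errors = [target - m for m in moods]           # assumed sign convention
    rpe = k_p * errors[-1] + k_i * sum(errors)      # current error + running integral
    # Enforce the minimum magnitude, keeping the sign (zero is treated as positive).
    if abs(rpe) < MIN_RPE:
        rpe = MIN_RPE if rpe >= 0 else -MIN_RPE
    return rpe
```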

During gambling blocks, mood ratings occurred after every 2 or 3 trials (on average, 1 rating every 2.4 trials). Every subject received mood ratings after the same set of trials.

At the end of the task, participants were presented with their overall point total. These point totals were translated into a cash bonus of $1–6 depending on their performance. Bonus cutoffs were determined based on simulations such that any bonus from $1 to $6 was possible to achieve, but a typical subject gambling at every opportunity could be expected to receive approximately $3. Upon payment, participants received $8 for their participation (this was later increased to $10) plus this bonus.

Survey

After performing the task, online adult participants were asked to complete a series of questionnaires. In the demographics portion, they were asked for their age, gender and location (city and state). They were also asked to indicate their overall status using the MacArthur Scale of Subjective Social Status.89 Shown a ten-rung ladder, participants clicked on the rung that represented their overall status relative to others in the United States. This scale is a widely used indicator of subjective social status, and in certain cases, it has been shown to indicate health status better than objective measures of socioeconomic status.90

After the demographics portion, online adult participants completed questionnaires including the Center for Epidemiologic Studies Depression Scale (CES-D), a 20-item scale of depressive symptoms.91 They also completed the Snaith-Hamilton Pleasure Scale (SHAPS), a 14-item scale of hedonic capacity.92

In-person adolescent participants completed a different set of questionnaires, selected to be age-appropriate and maintain consistency with other ongoing research projects. These questionnaires included the Short Child Self-Report Mood and Feelings Questionnaire (MFQ), a 13-item scale of how the participant has been feeling and acting recently.79,93 They also included the Screen for Child Anxiety Related Emotional Disorders (SCARED), a 41-item scale of childhood anxiety.94 These questionnaires were completed before the subject began completing the online tasks described above.

Participants recruited for follow-up investigations of boredom, mind-wandering, and free time activities also completed the short boredom proneness scale (SBPS), an 8-item scale of an individual’s proneness to boredom in everyday life.45 They also completed the 5-item mind-wandering questionnaire (MWQ), which quantifies a person’s proneness to mind-wandering in everyday life.50 The SBPS and MWQ were used to quantify trait-level boredom and mind-wandering, respectively.

Mobile App

The task given to mobile app participants is outlined in Figure 1B. Mobile app participants completed 30 trials of a gambling game. In each trial, participants chose between a certain option and a gamble, represented as a spinner in a circle with two possible outcomes. If the participant chose to gamble, the spinner rotated for approximately 5 seconds before coming to rest on one of the two outcomes. Participants were equally likely to win or lose if they chose to gamble. The points were added to or subtracted from the participant’s total during an approximately 2-second inter-trial interval before the game advanced to the next trial. After every 2–3 trials (12 times per play), the participant rated their mood. They were presented with the question, “How happy are you right now?”. A slider was presented with a range from “very unhappy” to “very happy.” The participant could select a value by moving their finger on the slider and tapping “Continue”. No limit was placed on their reaction times.

Each participant received 11 gain trials (with gambles between one positive outcome and zero), 11 loss trials (one negative outcome and zero), and 8 mixed trials (one positive and one negative outcome). The possible gambling outcomes were randomly drawn from a list of 60 gain trials, 60 loss trials, and 30 mixed trials. Participants played one of two versions of the app, which differed only in the precise win, loss, and certain amounts in these lists. The amounts in the first version are described in detail in the supplementary material of ref. 3. In the second version, gain trials had 3 certain amounts (35, 45, 55) and 15 gamble amounts (59, 66, 72, 79, 85, 92, 98, 105, 111, 118, 124, 131, 137, 144, 150). As in the first version, the set of loss trials was identical to the set of gain trials except that the values were negative. Mixed trials had 3 prospective gains (40, 44, 75) and 10 prospective losses (−10, −19, −28, −37, −46, −54, −63, −72, −81, −90). Both versions are described further in ref. 33. The median participant played the game for approximately 5 minutes.
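A hypothetical sketch of how one play's 30 trials could be assembled from the three trial lists. It assumes sampling without replacement within each trial type and a random interleaving of types, neither of which is specified above. The toy lists simply cross the version-2 certain and gamble amounts for illustration (they are not the published trial lists), mirror gain trials into loss trials as described, and omit the certain option of mixed trials because its value is not given here.

```python
import itertools
import random


def draw_trials(gain_list, loss_list, mixed_list, counts=(11, 11, 8), seed=None):
    """Draw one play's trials (hypothetical sketch).

    Assumes sampling without replacement within each trial type and a random
    interleaving of the three types.
    """
    rng = random.Random(seed)
    n_gain, n_loss, n_mixed = counts
    trials = (
        [("gain", t) for t in rng.sample(gain_list, n_gain)]
        + [("loss", t) for t in rng.sample(loss_list, n_loss)]
        + [("mixed", t) for t in rng.sample(mixed_list, n_mixed)]
    )
    rng.shuffle(trials)
    return trials


# Toy lists built from the version-2 amounts; the crossing is illustrative only.
GAIN_CERTAIN = [35, 45, 55]
GAIN_GAMBLE = [59, 66, 72, 79, 85, 92, 98, 105, 111, 118, 124, 131, 137, 144, 150]
gain = [(c, g, 0) for c, g in itertools.product(GAIN_CERTAIN, GAIN_GAMBLE)]  # (certain, win, 0)
loss = [(-c, -g, 0) for c, g, _ in gain]  # loss trials mirror gain trials
mixed = list(itertools.product([40, 44, 75],
                               [-10, -19, -28, -37, -46, -54, -63, -72, -81, -90]))  # (win, loss)

trials = draw_trials(gain, loss, mixed, seed=1)
```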

After playing the game, participants saw their score plotted against those of other players and were told whether their score was a “new record” for them. They could then choose to play again and try to improve their score. We reasoned that introducing the notion of a “new record” would substantially change participants’ motivations and behaviour on subsequent runs, so we limited our analysis to the first run from each participant.

Linear Mixed Effects Model

Analyses and statistics were performed using custom scripts written in Python 3. Participants’ momentary subjective mood ratings were fitted with a linear mixed effects (LME) model with rating time as a covariate using the Pymer4 software package (http://eshinjolly.com/pymer4/).95 Rating times were converted to minutes to satisfy the algorithm’s convergence criteria while maintaining interpretability. This method resulted in each participant’s data being modelled by a slope and intercept parameter such that:

$$\hat{M}(t) = M_0 + \beta_T\,T(t) \tag{1}$$

where M0 is the estimated mood at block onset (intercept), βT is the estimated change in mood per minute (slope), and T(t) is the time in minutes from the start of the block. The LME modeling algorithm also produced a group-level slope and intercept term as well as confidence intervals and statistics testing against the null hypothesis that the true slope or intercept was zero.
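For intuition about what Equation 1 estimates, the sketch below fits a separate ordinary least-squares line to one participant's ratings after converting rating times from seconds to minutes. This is a deliberately simplified stand-in, not the LME itself: the LME fitted with Pymer4 also estimates group-level fixed effects and shrinks each participant's slope and intercept toward them. `fit_line` is a hypothetical helper.

```python
def fit_line(times_s, moods):
    """Per-participant OLS intercept and slope (mood units per minute)."""
    times_min = [t / 60.0 for t in times_s]  # seconds -> minutes, as in the LME
    n = len(times_min)
    mean_t = sum(times_min) / n
    mean_m = sum(moods) / n
    sxx = sum((t - mean_t) ** 2 for t in times_min)
    sxy = sum((t - mean_t) * (m - mean_m) for t, m in zip(times_min, moods))
    slope = sxy / sxx                    # analogous to beta_T
    intercept = mean_m - slope * mean_t  # analogous to M0 (mood at block onset)
    return intercept, slope


intercept, slope = fit_line([0, 60, 120, 180], [0.80, 0.78, 0.76, 0.74])
```

Here mood falls by 0.02 scale units per minute from a starting value of 0.8, so the slope is negative, as in mood drift.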

Data from the first block of the first run of all online adult and in-person adolescent cohorts whose first block was rest or random gambling were fitted together in a single model with the following factors:

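$$\text{Mood} \sim 1 + \text{Time} \times (\text{isMale} + \text{meanIRIOver20} + \text{totalWinnings} + \text{meanRPE} + \text{fracRiskScore}) + (1 + \text{Time} \mid \text{Subject}) \tag{2}$$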

isMale is 1 if the participant reported their gender as “male” and 0 otherwise. meanIRIOver20 is the mean inter-rating interval across the block(s) of interest (in seconds) minus 20 (a round number near the mean). totalWinnings is the total points won by the participant in the block(s). meanRPE is the mean reward prediction error across the block(s). totalWinnings and meanRPE are zero for participants who experienced rest instead of gambling. fracRiskScore is the participant’s clinical depression risk score divided by a clinical cutoff, i.e., their MFQ score divided by 12 or their CES-D score divided by 16.
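The participant-level covariates defined above can be computed as in this minimal sketch. It assumes the inter-rating interval is the gap between consecutive rating times within a block and that gender is stored as the exact string "male"; the helper names are hypothetical.

```python
from statistics import mean

# Divisors stated in the text for the depression risk scores.
RISK_CUTOFFS = {"MFQ": 12, "CES-D": 16}


def is_male(reported_gender):
    """1 if the participant reported "male", 0 otherwise."""
    return 1 if reported_gender == "male" else 0


def mean_iri_over_20(rating_times_s):
    """Mean gap (s) between consecutive ratings, minus 20 s."""
    gaps = [later - earlier for earlier, later in zip(rating_times_s, rating_times_s[1:])]
    return mean(gaps) - 20


def frac_risk_score(score, questionnaire):
    """Depression risk score as a fraction of the questionnaire's cutoff."""
    return score / RISK_CUTOFFS[questionnaire]
```

A fracRiskScore of 1 therefore marks a participant exactly at the cutoff, whichever questionnaire they completed, which puts adolescent (MFQ) and adult (CES-D) scores on a common scale.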

While the bounded mood scale prevents the error term of our mood models from being truly Gaussian, LMEs are typically robust to such non-Gaussian distributions.35

For reliability analyses, the first block of each run was modelled separately for each cohort/run with the same model shown above. An intraclass correlation coefficient quantifying absolute agreement (ICC(2,1)) between the runs of each cohort was calculated using R’s “psych” package, accessed through the Python wrapper package rpy2.
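ICC(2,1) (two-way random effects, absolute agreement, single measurement) can be computed directly from a two-way ANOVA decomposition; it is the quantity the psych package labels ICC2. The pure-Python sketch below assumes a complete participants × runs matrix of LME coefficients with no missing values, and `icc_2_1` is a hypothetical name.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single measure.

    ratings: list of rows, one per participant, one column per run.
    """
    n = len(ratings)      # participants (targets)
    k = len(ratings[0])   # runs (raters)
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    # Two-way ANOVA sums of squares.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_err = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )
```

As a check, the classic six-target, four-judge example of Shrout and Fleiss gives ICC(2,1) ≈ 0.29. Because absolute agreement penalises systematic shifts between runs, this form is stricter than a consistency ICC.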

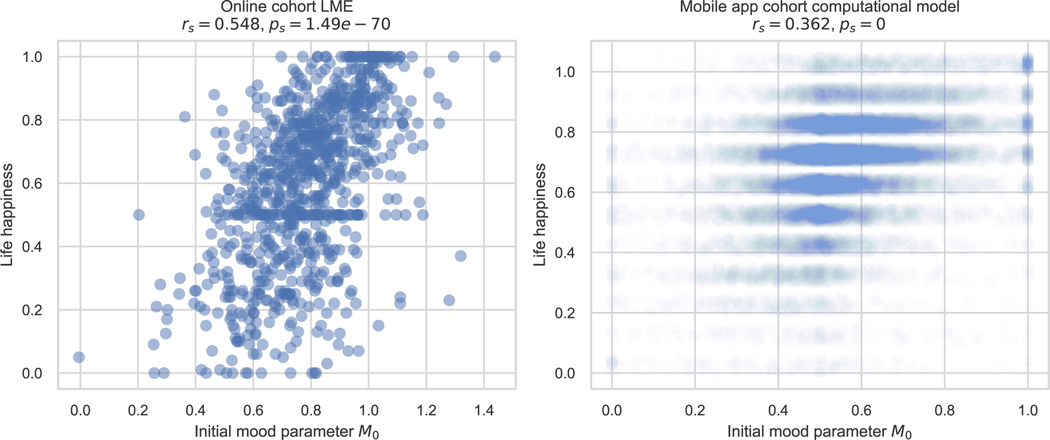

To measure the psychometric validity of the subjective momentary mood ratings, we correlated the initial mood (or “Intercept”) parameter of this model with the life happiness ratings. The correlation was highly significant (rs = 0.548, p < 0.001, 2-sided, Extended Data Figure 8, left).
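The validity check above is a Spearman rank correlation. A minimal pure-Python version (a Pearson correlation of average ranks, so ties are handled) is sketched below. It returns only the coefficient; the p-value would come from a statistics library. The function names are hypothetical.

```python
def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average rank for the tie block i..j
        for idx in order[i:j + 1]:
            ranks[idx] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman(x, y):
    """Spearman's rs between, e.g., initial-mood intercepts and life happiness."""
    return pearson(average_ranks(x), average_ranks(y))
```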

For comparisons with the online data, the same model was also employed in the initial analysis of the mobile app data.

LME Model Comparisons

To compare the ability of additional terms like depression risk and state boredom to explain variance in our model of mood, we employed an ANOVA that compared two models: a reduced model with the factor but without its interaction with time, and an expanded model with both the factor and its interaction with time. All factors in Equation 2 were included in both models (except in the case of depression risk, where the reduced model contained fracRiskScore but not its interaction with Time). We then used R’s anova function to compare the expanded and reduced models. The degrees of freedom were quantified as the difference in the number of parameters in the two models.
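Comparing two nested mixed models with R's anova function yields a likelihood-ratio test: twice the difference in log-likelihoods is referred to a chi-square distribution whose degrees of freedom equal the difference in parameter counts (for lme4 models, anova refits REML fits by maximum likelihood before comparing them). The sketch below reproduces only that final step in pure Python, assuming both log-likelihoods are already available. `chi2_sf` uses closed-form chi-square tail formulas valid for integer degrees of freedom, and the function names are hypothetical.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df >= 1."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i < df/2} (x/2)**i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: erfc term plus a finite correction series.
    total = math.erfc(math.sqrt(half))
    if df > 1:
        term = math.sqrt(2.0 * x / math.pi) * math.exp(-half)
        series = term
        for i in range(2, (df - 1) // 2 + 1):
            term *= x / (2 * i - 1)
            series += term
        total += series
    return total


def likelihood_ratio_test(loglik_reduced, loglik_expanded,
                          n_params_reduced, n_params_expanded):
    """Chi-square statistic, degrees of freedom, and p-value for nested models."""
    stat = 2.0 * (loglik_expanded - loglik_reduced)
    df = n_params_expanded - n_params_reduced
    return stat, df, chi2_sf(stat, df)
```

Adding a single factor-by-Time interaction adds one fixed-effect parameter, so these comparisons typically have one degree of freedom.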

To examine the impact of including a factor (or factors) on the mood variance explained, we used the within-individual and between-individual variance explained ($R^2_{\text{within}}$ and $R^2_{\text{between}}$) as defined in refs. 96 and 97. This calculation required a null model including only an intercept and random effects, which we defined as:

$$\text{Mood} \sim 1 + (1 + \text{Time} \mid \text{Subject}) \tag{3}$$

The within-individual variance of each model was defined as:

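$$R^2_{\text{within}} = 1 - \frac{\sigma^2_{\varepsilon} + \sigma^2_{u}}{\sigma^2_{\varepsilon,0} + \sigma^2_{u,0}} \tag{4}$$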

where $\sigma^2_{\varepsilon}$ is the variance of the residuals of the model, $\sigma^2_{u}$ is the variance of the random effects, $\sigma^2_{\varepsilon,0}$ is the variance of the residuals of the null model, and $\sigma^2_{u,0}$ is the variance of the random effects in the null model. The variance of the random effects in a model was calculated using R’s MuMIn library,98 taking into account the correlation between model factors.

The between-individual variance of each model was defined as:

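$$R^2_{\text{between}} = 1 - \frac{\sigma^2_{\varepsilon}/k + \sigma^2_{u}}{\sigma^2_{\varepsilon,0}/k + \sigma^2_{u,0}} \tag{5}$$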

where k was defined as the harmonic mean of the number of mood ratings being modelled for each participant.
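Equations 4 and 5 can be evaluated as in the following sketch. It assumes the four variance components have already been extracted (in the study, the random-effect variances came from MuMIn); here they are plain numeric inputs, and `within_between_r2` is a hypothetical name.

```python
from statistics import harmonic_mean


def within_between_r2(resid_var, ranef_var, resid_var_null, ranef_var_null,
                      n_ratings_per_participant):
    """Within- and between-individual variance explained (Equations 4 and 5)."""
    # k: harmonic mean of the number of mood ratings per participant.
    k = harmonic_mean(n_ratings_per_participant)
    within = 1 - (resid_var + ranef_var) / (resid_var_null + ranef_var_null)
    between = 1 - (resid_var / k + ranef_var) / (resid_var_null / k + ranef_var_null)
    return within, between
```

Dividing the residual variance by k down-weights trial-level noise, which is why the between-individual measure suits factors that are constant within a participant.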

Because the depression risk, boredom, and mind-wandering factors were constant within each participant, we focused primarily on the between-individual variance explained, $R^2_{\text{between}}$.

To compare the variance explained by the expanded and reduced models as a measure of effect size, we used Cohen’s f² statistic,39,40 defined as:

$$f^2 = \frac{R^2_{\text{exp}} - R^2_{\text{red}}}{1 - R^2_{\text{exp}}} \tag{6}$$

where $R^2_{\text{exp}}$ is the variance explained by the expanded model and $R^2_{\text{red}}$ is the variance explained by the reduced model. Separate f² values can be calculated using the within-individual or between-individual variances. Using Cohen’s guidelines,39 f² ≥ 0.02 is considered a small effect, f² ≥ 0.15 a medium effect, and f² ≥ 0.35 a large effect.
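Equation 6 and Cohen's thresholds amount to a few lines of code. A minimal sketch with hypothetical function names, labelling values under 0.02 as "below small" because Cohen's guidelines name no category there:

```python
def cohens_f2(r2_expanded, r2_reduced):
    """Equation 6: Cohen's f^2 for the gain in variance explained."""
    return (r2_expanded - r2_reduced) / (1 - r2_expanded)


def cohen_label(f2):
    """Cohen's conventional thresholds for f^2."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "below small"


f2 = cohens_f2(0.30, 0.20)  # 0.10 / 0.70, roughly 0.143
```

For example, raising R² from 0.20 to 0.30 gives f² of about 0.14, which counts as a small effect under these guidelines.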

Computational Model

To examine the effect of time on mood during random gambling in the mobile app data, we next used a computational model to disentangle time’s effects from those of reward and expectation. The model is based on one described in detail in ref. 2 that has been validated on behavioural data from a similar gambling task. The authors of ref. 2 found that changes in momentary subjective mood were accurately predicted by a weighted combination of current and past rewards and RPEs in the task. Quantifying RPEs relies on subjective expectations that are formulated according to a “primacy model,” in which expected reward is more heavily influenced by early rewards than by recent ones.

The model described in ref. 2 was modified to include a coefficient βT that linearly relates time and mood. Our modified model is defined as follows:

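$$\hat{M}(t) = M_0 + \beta_A \sum_{j=1}^{t} \lambda^{t-j} A(j) + \beta_E \sum_{j=1}^{t} \lambda^{t-j} E(j) + \beta_T\,T(t) \tag{7}$$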

In the above equation, $t$ is the trial index, and $\hat{M}(t)$ is the estimated mood rating from trial $t$. M0 (the estimated mood at time 0), λ (an exponential discounting factor), and the βs are learned parameters of the model. A(t) is the actual outcome (in hundreds of points) of trial t, T(t) is the time of trial t in minutes, and E(t) is the primacy model of the subject’s reward expectation in trial t, defined as:

$$E(t) = \frac{1}{t-1} \sum_{j=1}^{t-1} A(j) \tag{8}$$
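The sketch below evaluates the time-augmented mood model for one participant in pure Python. It is not the authors' PyTorch implementation. It assumes that E(t) is the mean of all earlier outcomes with E(1) = 0, that predictions are capped to a 0 to 1 mood scale, and that outcomes are already in hundreds of points. The discounted sums are updated recursively, since S(t) = λ·S(t−1) + x(t) equals the sum of λ^(t−j)·x(j) over j ≤ t.

```python
def primacy_expectations(outcomes):
    """E(t): mean of all outcomes before trial t; E(1) is assumed to be 0."""
    expectations, running_sum = [], 0.0
    for n_previous, outcome in enumerate(outcomes):
        expectations.append(running_sum / n_previous if n_previous else 0.0)
        running_sum += outcome
    return expectations


def predict_mood(outcomes, times_min, m0, lam, beta_a, beta_e, beta_t):
    """Predicted mood after each trial under the time-augmented model (sketch)."""
    expectations = primacy_expectations(outcomes)
    predictions = []
    disc_a = disc_e = 0.0
    for a, e, t_min in zip(outcomes, expectations, times_min):
        # Recursive form of sum_{j<=t} lam**(t - j) * x(j).
        disc_a = lam * disc_a + a
        disc_e = lam * disc_e + e
        mood = m0 + beta_a * disc_a + beta_e * disc_e + beta_t * t_min
        # Cap predictions to an assumed 0-1 mood scale.
        predictions.append(min(max(mood, 0.0), 1.0))
    return predictions
```

Setting `beta_t` to zero gives the control model without the time term.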

If we remove the influence of time (i.e., set βT = 0), the full mood model in ref. 2 is equivalent to this one as long as its reward prediction error coefficient is non-negative and less than its expectation coefficient (i.e., $0 \le \beta'_R < \beta'_E$), where $\beta'_R$ and $\beta'_E$ denote the RPE and expectation coefficients defined in ref. 2. The values in our model can be derived from the values in theirs by setting $\beta_A = \beta'_R$ and $\beta_E = \beta'_E - \beta'_R$.
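The parameter mapping follows from substituting RPE = A(j) − E(j) into the earlier model. The worked derivation below assumes, based on the description above, that the model in ref. 2 weights discounted expectations by $\beta'_E$ and discounted RPEs by $\beta'_R$, with the same discount factor λ and the same expectation E(j):

$$
\begin{aligned}
\hat{M}(t) &= M_0 + \beta'_E \sum_{j=1}^{t} \lambda^{t-j} E(j) + \beta'_R \sum_{j=1}^{t} \lambda^{t-j}\bigl[A(j) - E(j)\bigr] \\
&= M_0 + \beta'_R \sum_{j=1}^{t} \lambda^{t-j} A(j) + \left(\beta'_E - \beta'_R\right) \sum_{j=1}^{t} \lambda^{t-j} E(j)
\end{aligned}
$$

Matching terms with the time-augmented model at $\beta_T = 0$ gives $\beta_A = \beta'_R$ and $\beta_E = \beta'_E - \beta'_R$. Keeping both of our coefficients non-negative, as in our fits, then requires $0 \le \beta'_R \le \beta'_E$.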

We used the PyTorch package99 on a GPU to fit 500 models simultaneously for each subject. βT was initialised to random values with distribution . βE and βA were initialised to random values with distribution Lognormal (0, 1) and capped to the interval [0,10] on every iteration. M0 and λ were initialised to random values with normal distributions , then sigmoid-transformed (to facilitate optimization and conform to the interval [0, 1]) using the standard logistic function:

$$\sigma(x) = \frac{1}{1 + e^{-x}} \tag{9}$$

At the end of 100,000 iterations, the model with the lowest sum of squared errors (SSE) was selected, where SSE = $\sum_t [M(t) - \hat{M}(t)]^2$ is summed over the trials with a mood rating and $M(t)$ is the participant’s actual rating. The time coefficient βT learned by the model could then be used as a measure of the influence of time on that participant’s mood, disentangled from the effects of rewards and RPEs.

End-to-end optimization was carried out using ADAM100 with a learning rate of α = 0.005. L2 penalty terms were placed on the β terms and added to the sum of squared errors. This meant that the objective function being minimised was:

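$$\mathcal{L} = \sum_t \left[M(t) - \hat{M}(t)\right]^2 + \lambda_{EA}\left(\beta_E^2 + \beta_A^2\right) + \lambda_T\,\beta_T^2 \tag{10}$$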
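The penalised objective and the best-of-restarts selection can be written compactly. This plain-Python sketch mirrors the formulas only; the study minimised the objective with Adam in PyTorch over 500 parallel initialisations. It assumes `observed` and `predicted` are aligned lists of rated trials, and the function names are hypothetical.

```python
def penalised_loss(observed, predicted, beta_a, beta_e, beta_t, lam_ea, lam_t):
    """SSE plus L2 penalties on the beta coefficients."""
    sse = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    return sse + lam_ea * (beta_e ** 2 + beta_a ** 2) + lam_t * beta_t ** 2


def select_best_restart(restarts):
    """Pick the restart with the lowest SSE.

    restarts: list of (params, sse) tuples from independent initialisations.
    """
    return min(restarts, key=lambda item: item[1])
```

Note that the penalised loss drives optimisation, while the final choice among restarts uses the unpenalised SSE, as described above.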

The regularization hyperparameters λEA and λT were determined in a tuning step, in which the model was trained on the first 10 mood ratings and tested on the last two in each of 5,000 exploratory participants. One model was trained with each combination of λEA and λT, each ranging from 10⁻⁴ to 10³ in 20 steps (evenly spaced on a log scale). The testing loss (median across participants) across penalty terms was fitted with a third-degree polynomial using scikit-learn’s kernel ridge regression with regularization strength α = 10.0. The best-fitting regularization hyperparameters were defined as those that minimised this smoothed testing loss.
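The tuning grid and the final selection step can be sketched as follows. The smoothing step itself is not reproduced here. In scikit-learn it would presumably use something like `KernelRidge(alpha=10.0, kernel="poly", degree=3)`, but that exact configuration is an assumption. `log_grid` and `best_penalties` are hypothetical helpers.

```python
def log_grid(lo_exp=-4.0, hi_exp=3.0, n=20):
    """n values evenly spaced on a log10 scale from 10**lo_exp to 10**hi_exp."""
    step = (hi_exp - lo_exp) / (n - 1)
    return [10 ** (lo_exp + i * step) for i in range(n)]


def best_penalties(smoothed_loss):
    """Return the (lam_ea, lam_t) pair that minimises a smoothed test loss.

    smoothed_loss: dict mapping (lam_ea, lam_t) -> smoothed median test loss.
    """
    return min(smoothed_loss, key=smoothed_loss.get)


grid = log_grid()
pairs = [(lam_ea, lam_t) for lam_ea in grid for lam_t in grid]  # 20 x 20 combinations
```

Smoothing before taking the minimum keeps the choice of penalties from being driven by noise at individual grid points.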

As in the LME, the bounded mood scale prevents the error term of our mood models from being truly Gaussian. Our computational model attempted to mitigate the effect of non-Gaussianity by capping mood predictions to the allowable range, initialising parameters to non-normal distributions, and restricting parameters to feasible ranges on every iteration.

As in the online cohort’s LME model, the initial mood parameter M0 showed psychometric validity. It was significantly correlated with life happiness (rs = 0.362, p < 0.001, Extended Data Figure 8, right).

Control Model

To quantify the effect of including the time-related term, we fitted a control model without βT. This control model is defined as follows:

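$$\hat{M}(t) = M_0 + \beta_A \sum_{j=1}^{t} \lambda^{t-j} A(j) + \beta_E \sum_{j=1}^{t} \lambda^{t-j} E(j) \tag{11}$$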

As in the primary model, the regularization hyperparameter λEA in this control model was tuned using the method described above.

Data Availability

All data used in the manuscript have been made publicly available. Online Participants’ data can be found on the Open Science Framework at https://osf.io/km69z/. Mobile App Participants’ data can be found on Dryad at https://doi.org/10.5061/dryad.prr4xgxkk.101

Code Availability

The code for the task and survey is available on GitLab at https://gitlab.pavlovia.org/mooddrift. Our data analysis software, as well as the means to create a Python environment that automatically installs it on a user’s machine, has been made available online at https://github.com/djangraw/MoodDrift.

Extended Data

Extended Data Fig. 1.

Mood rating frequency does not affect mood drift slope

Mean ± STE mood rating at each time in the 4 cohorts with 60 s, 30 s, 15 s, and 7.5 s of rest between mood ratings (cohorts 60sRestBetween, 30sRestBetween, 15sRestBetween, and 7.5sRestBetween, respectively). The magnitude of mood drift did not vary with the frequency of mood ratings.

Extended Data Fig. 2.

Mood slope parameter distributions vary with analysis choice

Histogram of the LME mood slope parameters for the online cohort (blue) and the confirmatory mobile app cohort (orange), along with the computational model time sensitivity parameter for the confirmatory mobile app cohort (green). Mobile app participants with outlier task completion times were excluded from the LME analysis (see Methods). Note that the use of LME modeling to analyze the mobile app data significantly lowered the distribution of slopes compared to when the computational model was used (median = −0.752 vs. −0.0408, IQR = 2.10 vs. 0.764 %mood/min, 2-sided Wilcoxon rank-sum test, W42771 = −54.2, p < 0.001), but the LME slopes from the mobile app were still significantly greater than those of the online cohort (mobile app vs. online: median = −0.752 vs. −1.53, IQR = 2.10 vs. 2.34 %mood/min, 2-sided Wilcoxon rank-sum test, W21761 = 14.5, p < 0.001). Vertical lines represent group medians. Stars indicate p < 0.05. P values were not corrected for multiple comparisons.

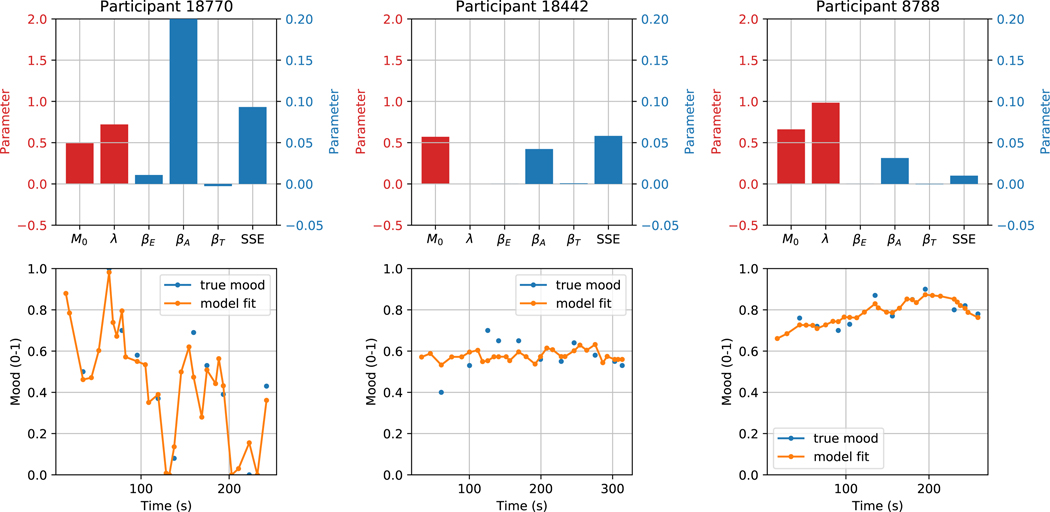

Extended Data Fig. 3.

Sample fits of the computational model

Sample fits of the computational model for three random subjects in the confirmatory mobile app cohort. SSE = sum of squared errors, a measure of goodness of fit to the training data. In the top plots, the red bars are in units of the left-hand y axis, and the blue bars are in units of the right-hand y axis.

Extended Data Fig. 4.

Histogram of computational model parameters

Histogram of computational model parameters across the 21,896 confirmatory mobile app subjects.

Extended Data Fig. 5.

Mood drift stability over blocks, days, and weeks

Stability of LME coefficients estimating the initial mood (top) and slope of mood over time (bottom) for each participant across rest periods one block apart (left), 1 day apart (middle), and 2 weeks apart (right). ICC denotes the intra-class correlation coefficient for each comparison. P values shown are one-sided (since ICC values are expected to be positive) with no correction for multiple comparisons.

Extended Data Fig. 6.

Relationship between mood drift and depression risk