Abstract

Introduction

It is known that only a limited proportion of developed clinical prediction models (CPMs) are implemented and/or used in clinical practice. This may result in a large amount of research waste, even when considering that some CPMs may demonstrate poor performance. Cross-sectional estimates of the numbers of CPMs that have been developed, validated, evaluated for impact or utilized in practice, have been made in specific medical fields, but studies across multiple fields and studies following up the fate of CPMs are lacking.

Methods and analysis

We have conducted a systematic search for prediction model studies published between January 1995 and December 2020 using the PubMed and Embase databases, applying a validated search strategy. Taking random samples for every calendar year, we screened abstracts and articles until the target of 100 CPM development studies was reached. Next, we will perform a forward citation search of the resulting CPM development article cohort to identify articles on external validation, impact assessment or implementation of those CPMs. We will also invite the authors of the development studies to complete an online survey to track implementation and clinical utilization of the CPMs.

We will conduct a descriptive synthesis of the included studies, using data from the forward citation search and online survey to quantify the proportion of developed models that are validated, assessed for their impact, implemented and/or used in patient care. We will conduct time-to-event analysis using Kaplan-Meier plots.

Ethics and dissemination

No patient data are involved in the research. Most information will be extracted from published articles. We request written informed consent from the survey respondents. Results will be disseminated through publication in a peer-reviewed journal and presented at international conferences.

OSF registration

Keywords: EPIDEMIOLOGY, Primary Prevention, Prognosis

STRENGTHS AND LIMITATIONS OF THIS STUDY.

The longitudinal follow-up of developed clinical prediction models (CPMs), and the combination of systematic literature search with an online survey to retrieve follow-up information are strengths of this protocol.

Follow-up of the fate of the developed CPMs is partly dependent on participant response rates to the online survey.

Restricting the language of included studies to English will exclude studies published in other languages.

Introduction

With the increasing emphasis on personalised healthcare in clinical practice, prediction models have become ever more popular.1 2 Clinical prediction models (CPMs) can inform individuals and clinicians about the risk of having (diagnosis) or developing (prognosis) a particular disorder or outcome. They can improve healthcare quality by supporting clinicians and individuals in timely decision making, or by improving the cost-effectiveness of treatment strategies.3 4

The annual number of articles on prediction model development has exponentially increased over the last decades.3 5 6 While some CPMs have been implemented and are regularly used in clinical practice, the clinical uptake of CPMs is known to be limited relative to the number that are developed.4 7 Prior to implementing a CPM in practice, research must show that its predictions are generalisable to individuals independent of model development, through external validation.4 8 Following validation, the impact of the CPM on clinical management and outcomes should ideally be evaluated.8 Currently, based on reviews, a minority of developed models are externally validated: 52% for cardiovascular outcomes,9 36% for chronic kidney disease,10 12% for lung cancer,11 8.7% for prognostic obstetrics CPMs12 and 4.6% for mental disorders.13 Although it has rarely been quantified systematically, even fewer CPMs undergo impact assessment. Only 0.2% of the above-mentioned prognostic models in obstetrics had undergone impact assessment, and no impact studies of the CPMs for mental disorders were reported.12 13 In addition, the proportion of CPMs being implemented or used in clinical practice has not been studied systematically, and the available evidence is limited to one study on the clinical utilization of artificial intelligence in the COVID-19 response.14 Studies of CPMs that are not translated into clinical practice, such as well-performing models that do not undergo external validation or validated models that are never assessed for clinical utility, can be considered research waste.

As the aforementioned percentages exemplify, quantitative estimations of the numbers of developed and validated CPMs have been published in selected medical fields. These, however, likely reflect the estimation in fields where prediction modelling is more common. In addition, each review is a snapshot of the situation at a particular time in a particular field, and interpretation is hampered by differences in methodology across studies. Moreover, systematic literature reviews are not suitable for quantifying utilized CPMs in clinical practice as evidence of utilization may not be published in the medical literature. Finally, not all utilized CPMs may have followed the recommended research steps from development to validation, impact assessment and finally implementation and utilization. Phases may have been skipped or never reported.

The aim of this study is to evaluate the proportion of CPMs that undergo external validation, impact assessment or implementation and make it into clinical practice after development.

Methods and analysis

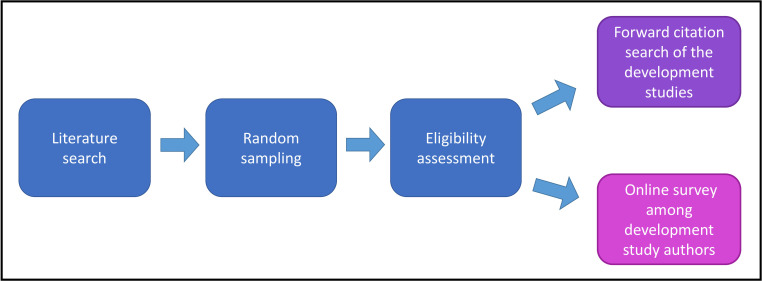

We have conducted a systematic search of prediction modelling publications to identify published CPM articles for health outcomes. We will follow up these articles by performing a forward citation search to identify any subsequent validation, impact assessment or implementation of the CPMs. We will additionally conduct an online survey among study authors of the CPM cohort to track their implementation and clinical utilization (figure 1).

Figure 1.

Schematic view of the study methods.

The protocol for this study has been prepared in accordance with the Strengthening the Reporting of Observational studies in Epidemiology guidance.15 In addition, the Preferred Reporting Items for Systematic Review and Meta-Analysis Protocols statement16 has been incorporated in the description of the systematic search and the forward citation search. This protocol has been registered on the Open Science Framework (OSF) Registries platform (https://osf.io/nj8s9).

Patient and public involvement

This study was performed without participant involvement in design or conduct.

Systematic search for prediction model articles

Information sources and search strategy

We performed a systematic literature search in PubMed and Embase for prediction model studies published between January 1995 and December 2020. To do this, we used the validated search strategy for retrieving CPM studies published by Ingui and Rogers.17 The strategy has demonstrated a sensitivity of 98.2% (91.5–99.9) and a specificity of 86.1% (85.4–86.7) for identifying prediction model articles (box 1).17 We adjusted this strategy for the PubMed and Embase searches, restricting our searches to human studies only. The final adjusted search terms are provided in online supplemental file 1.

Box 1. Original search strategy from the Ingui study.

‘(Validat$ OR Predict$.ti. OR Rule$) OR (Predict$ AND (Outcome$ OR Risk$ OR Model$)) OR ((History OR Variable$ OR Criteria OR Scor$ OR Characteristic$ OR Finding$ OR Factor$) AND (Predict$ OR Model$ OR Decision$ OR Identif$ OR Prognos$)) OR (Decision$ AND (Model$ OR Clinical$ OR Logistic Models/)) OR (Prognostic AND (History OR Variable$ OR Criteria OR Scor$ OR Characteristic$ OR Finding$ OR Factor$ OR Model$))’

bmjopen-2023-073174supp001.pdf (301KB, pdf)

Study records and data management

Publication records, including titles and abstracts, were imported from the searched online databases into EndNote Citation Manager separately for each publication year. Based on the estimated positive predictive value (PPV) of the search string (PPV (95% CI) 3.5% (2.7–4.6)),17 we expected to need 110 randomly selected studies from the search hits per year, 2860 in total, to identify at least 100 articles, with at least one eligible development article in each year. Details about the random selection of abstracts are provided in online supplemental file 1. The unselected abstracts of each publication year were held in reserve. As the target sample size of 100 articles, with a minimum of one development article per year, was not reached after the screening process, we increased the sample size in increments of 30 articles per year until we had included at least 100 papers. With the target sample size of 100 development articles, we will be able to estimate the proportions of developed CPMs that are later validated, assessed for impact, implemented and used with reasonable precision. With this sample size, we will reach a Wilson score CI half-width (i.e., margin of error) of 2.6% assuming a proportion of 1%, and a half-width of 8% assuming a proportion of 20%.
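The sample size arithmetic above can be checked with a short calculation. The following is a minimal sketch, assuming a 95% confidence level (z = 1.96) and the standard Wilson score formula; the function and variable names are illustrative and not part of the protocol.

```python
import math


def wilson_half_width(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of the Wilson score interval for proportion p in a sample of n.

    z = 1.96 (95% confidence) is an assumption; the protocol does not state z.
    """
    denom = 1 + z**2 / n
    return z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom


# Screening workload: 110 random abstracts for each publication year 1995-2020.
years = 2020 - 1995 + 1
abstracts_per_year = 110
total_abstracts = years * abstracts_per_year

# Expected number of eligible articles at the reported PPV of 3.5%.
expected_eligible = total_abstracts * 0.035

print(f"Abstracts to screen: {total_abstracts}")
print(f"Expected eligible articles: {expected_eligible:.1f}")
for p in (0.01, 0.20):
    hw = wilson_half_width(p, n=100)
    print(f"Proportion {p:.0%}: Wilson half-width {hw:.1%}")
```

Under these assumptions the half-widths come out close to the 2.6% and 8% quoted in the text, the latter after rounding.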

Selection process

The titles and abstracts of all studies identified through the electronic search were screened in duplicate by two reviewers. Any conflicts were discussed between the two reviewers. If conflicts were unresolved, a final decision was made by discussions during team consensus meetings. The selection process will be presented in a Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) flowchart.16

Eligibility criteria

Articles were eligible for inclusion in this study if they described development, validation or impact assessment of a multivariable model for use in healthcare settings.12 Included articles were labelled as: development studies, non-regression development studies, validation studies and impact studies. An article was categorised as a development study if it aimed to develop a regression-based multivariable prediction model for a health outcome. Studies developing a prediction model which did not use logistic, proportional hazards or linear regression were designated as ‘non-regression’ development studies. An article that aimed to externally validate a health prediction model (regression-based) in a different population or in a different time period was designated as a ‘validation study’. Finally, ‘impact studies’ included studies assessing the impact of a health prediction model on health outcomes, efficiency or costs, including (quasi-)experimental studies and health-economic assessments. Studies on model updates (e.g., adding/dropping predictor(s) to/from a previously developed model) were categorised as development studies. An article could be of more than one type (e.g., development and validation study).

Articles were excluded from the review if they did not present any developed multivariable prediction models, external validation or impact assessment in their main results (e.g., aetiological studies, risk factor assessments, systematic reviews); if they were based on a single predictor (e.g., one biomarker), diagnostic tools or questionnaires (e.g., for diagnosis of depression); if they presented prediction models that were neither diagnostic nor prognostic; if they did not yield predictions for individuals; if they did not concern humans; or if they were methodological studies. Articles not written in English, conference abstracts and articles with no available full text were also excluded. Studies were not considered validation studies if they used only a random train-test split. Finally, studies were not considered impact studies if they were based on decision curve analysis only. Further details about the eligibility criteria are summarised in table 1.

Table 1.

Eligibility criteria in the systematic search

| Type of inclusion | Description |
| --- | --- |
| Development study | Study presenting development of a regression-based multivariable model, with more than one predictor, for a clinical health outcome |
| Non-regression development study | Study describing development of a multivariable prediction model not based on traditional logistic, proportional hazards or linear regression (e.g., a random forest, neural network or a score chart not based on multivariable regression) |
| Validation study | Study reporting the predictive performance of a multivariable prediction model in a different population or in a later period of time |
| Impact study | Study aiming to assess the impact of a health prediction model concerning health outcomes, efficiency or costs (e.g., a (quasi-) experiment or a health-economic analysis) |

Full-text articles were retrieved for all selected studies for full-text screening and data extraction.

Data collection process

We will perform data extraction for all included development articles. Two reviewers will independently use a standardised data extraction form to retrieve information from each article. Discrepancies or disagreements will be discussed between reviewers; any remaining conflicts will be adjudicated by the lead author. Some studies may have investigated more than one model. In such instances, data will be extracted for each model in the study. Data and records will be stored on a secure platform.

Data items

Data variables to be extracted will be selected from the CHecklist for critical Appraisal and data extraction for systematic Reviews of prediction Modelling Studies5 and the Prediction model Risk Of Bias Assessment Tool.6 Extracted data from development articles will include the medical domain; publishing journal; impact factor at the year of publication; country; publication date (year, month); study design; outcomes to be predicted; study setting; study population; data sources; patient characteristics; total study sample size; number of individuals with the outcome; number of models developed; type of model (diagnostic/prognostic); number and type of predictors; accompanying internal or external validation; validation type; predictive performance measures (discrimination and calibration); and model presentation (nomogram, sum score, online calculator, etc.). The data extraction form will be piloted on five included articles and will contain instructions for the reviewers on how to assess the models presented in the articles. For example, the reviewer is instructed to separately extract information for each developed or validated model within a single paper.

Forward citation search of the CPM development studies

Following the systematic search, we will search for publications citing the included CPM development articles, with a focus on regression-based models. We will conduct a forward citation search of the included studies in Scopus and Web of Science, two established tools for this purpose, using the digital object identifier search function in the two online databases. Details of the forward search procedure are described in online supplemental file 1.

Eligibility criteria

Articles found in the forward citation search will be deemed eligible if they are validation, model update or impact studies evaluating the developed model. We will also include guidelines and consensus statements that include recommendations with respect to model implementation; meta-analyses or (systematic) reviews directly assessing or comparing the model to other models; and articles reporting decision aids based on the models (e.g., development or piloting of decision aids or computerised decision-support systems). Protocol articles for external validation or impact assessment will also be included. Protocols will be additionally assessed for any other published articles that might have been missed by the search. Studies updating/adjusting the included developed CPM will be separately labelled.

Exclusion criteria are: meta-analyses or (systematic) reviews not specifically recommending or reporting the predictive performance of the included model, and studies using different sets of predictors (except for model updating studies). Other exclusions include methodological research, validation of single predictors, letters to editors and articles with no English full text available.

Selection process for forward citation search

First, titles and abstracts of all studies identified through the search will be screened by the researchers. After training reviewers in an initial title and abstract screening phase with duplicate screening by independent reviewers, we will proceed with single screening. Title and abstract screening will be sensitive: in case of doubt regarding eligibility, articles will proceed to full text assessment. Next, eligible articles will go through full text assessment and data extraction. The selection process will be presented in a PRISMA flowchart.

Data items

Data items to extract from all studies included via the forward citation search will include country; publication date (year, month); number of shared authors with the CPM development article; publishing journal and impact factor in the year of publication; setting; data sources; study design; total study sample size; study population; population characteristics; primary study outcome; and secondary study outcomes (if applicable). Additional data will be extracted depending on the article type:

Validation and model update studies: number of individuals with the outcome; validation type; predictive performance measures (discrimination and calibration); and model presentation (nomogram; sum score; online calculator; etc).

Empirical impact studies and decision model-based impact studies: target population; modelling method (individual level or cohort-level) (for decision model-based impact studies); prediction model-based innovation that is evaluated (risk threshold(s), risk-based management options); comparison condition; and whether there are recommendations to apply the prediction model-based innovation or not.

Development or piloting of a prediction model-based innovation: description of prediction model-based innovation; target population; intended user (healthcare professional, patient); and moment of application.

Meta-analyses and systematic reviews: total number of individuals with the outcome; summary predictive performance measures of the model of interest (discrimination and calibration); number of included models (in case of model comparisons); and whether there are recommendations to use or not use the model/prediction model-based innovation.

Guidelines and consensus statements: name of the guideline/consensus statement; the issuing organisation; country of issue and whether there are recommendations to apply the prediction model-based innovation or not.

Data synthesis

Data on validation studies, impact studies, systematic reviews, decision aids and guideline/consensus papers will be described using the median (upper quartile and lower quartile) or N (%), as appropriate. For each included citing article, time since publication of the CPM development articles will be calculated. Kaplan-Meier plots will be drawn for each article type published after model development. Plots will also be stratified based on the CPMs’ publication date.
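The Kaplan-Meier analysis described above can be illustrated with a small product-limit calculation. This is a minimal sketch on hypothetical data, assuming that each CPM contributes the time from publication of the development article to the first citing article of a given type (event) or to the end of follow-up (censored); the function name and the data are illustrative only.

```python
from collections import Counter


def kaplan_meier(times, events):
    """Product-limit estimate: list of (time, survival) at each distinct event time.

    times  -- follow-up time per CPM (e.g., years since development publication)
    events -- 1 if the citing article type was observed at that time, 0 if censored
    """
    event_counts = Counter(t for t, e in zip(times, events) if e)
    exit_counts = Counter(times)  # events plus censorings leaving the risk set
    at_risk = len(times)
    survival = 1.0
    curve = []
    for t in sorted(exit_counts):
        d = event_counts.get(t, 0)
        if d:
            # CPMs censored at time t are still counted as at risk at t.
            survival *= 1 - d / at_risk
            curve.append((t, survival))
        at_risk -= exit_counts[t]
    return curve


# Hypothetical follow-up of six CPMs (years until first external validation).
times = [1, 2, 2, 4, 5, 5]
events = [1, 1, 0, 1, 0, 0]

curve = kaplan_meier(times, events)
for t, s in curve:
    print(f"year {t}: not yet validated {s:.3f}, cumulative validated {1 - s:.3f}")
```

Here the survival function is the estimated proportion of CPMs not yet followed by the article type of interest; one minus this value gives the cumulative proportion, which is usually what such plots display.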

Online survey among model developers

In this track of the study, we will survey the authors of the included CPM development articles to study the implementation process and clinical utilization. The survey questions can be found in online supplemental file 1. The survey will be distributed using the Qualtrics platform (available at https://www.qualtrics.com/). We will first contact the corresponding author. In case of non-response, or if updated contact information for the author cannot be found, we will subsequently contact the last author, the second-to-last author, the first author and the second author (in this order). If any of these authors is the corresponding author, we will move to the next author. Details of data collection are presented in online supplemental file 1. Data collection for a CPM will be complete when one complete response is received. Non-response from all contacted authors will be recorded as missing follow-up information. Informed consent from participants will be obtained using an online consent form, and authors can withdraw at any time.
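The contact sequence described above can be written out as a small routine. This is a minimal sketch, assuming authors are identified by their 0-based position in the author list; the function name is hypothetical and the handling of very short author lists is an assumption, as the protocol does not describe it.

```python
def contact_order(n_authors, corresponding):
    """Return author positions (0-based) in the order they would be contacted.

    The corresponding author comes first, followed by the last, second-to-last,
    first and second author, skipping any position already in the list.
    """
    fallbacks = [n_authors - 1, n_authors - 2, 0, 1]
    order = [corresponding]
    for pos in fallbacks:
        if 0 <= pos < n_authors and pos not in order:
            order.append(pos)
    return order


# Six authors, first author is the corresponding author.
print(contact_order(6, corresponding=0))
# Six authors, last author is the corresponding author.
print(contact_order(6, corresponding=5))
```

Contact would stop at the first author in this sequence who returns a complete response.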

In addition, if authors cannot be reached or do not respond, we will perform a targeted Google search for each CPM using the model name and/or developer (or developing group/consortium, if applicable) to obtain additional information on implementation or utilization of the CPMs after the date of publication. For each CPM, we will scan the first 30 results of each targeted search to obtain publicly available documentation of implementation or utilization. Information on the date of each finding and the description of utilization or implementation will be recorded.

Variables and outcomes measured in the follow-up survey

Implementation of prediction models

To evaluate implementation we will ask authors about any recommendations in medical guidelines, incorporation of CPMs in training programmes, construction of any charts/nomograms/scoring rules and software/automated systems/web-based calculators that could facilitate and encourage model utilization after development.8 We have previously consulted with an independent panel of experts in prediction model research about the products of implementation that would be relevant to consider.

Utilization of prediction models

In our follow-up survey, a utilized model will be defined as a multivariable risk prediction model that has been systematically used for medical decision making or risk counselling of patients for whom the model is intended (table 2). ‘Used for medical decision making’ means that the patient-specific outcome of the model has been considered in creating the differential diagnosis, making a decision on the nature of further diagnostic work-up, medical treatment or preventive measures in individual patients. ‘Used for risk counselling of patients’ means that the patient-specific outcome of the model has been used to inform the patient about their risk.

Table 2.

Definition of outcomes measured by the follow-up survey

| Definition | Description |
| --- | --- |
| Clinical utilization | |
| Multivariable risk prediction model systematically applied for medical decision making or risk counselling of individuals for whom the model is intended | Utilization in the same centre(s) involved in model development (yes/no), in the same country as model development (yes/no), number of centres |
| Multivariable risk prediction model systematically applied for decision making or risk counselling of individuals for research purposes | Application for validation study, impact study, inclusion criteria in randomised controlled trial or observational studies |
| Implementation | |
| Recommendation in medical guidelines | In the same country (yes/no) |
| Incorporation in training programmes | For healthcare professionals, research purposes or educational curriculum |
| Construction of nomograms/charts/scoring rules | |
| Software and integration into IT systems | |

Utilization can be assistive, where the risk prediction is presented without a decision recommendation; or directive, where the model’s decision or management recommendations should be followed.8 A model is systematically utilized if it has been consistently or frequently used for individuals in settings the developed model is intended for. Data to define clinical utilization of developed CPMs in this study will be collected using the online survey. To ascertain this outcome, questions differentiate between utilization in clinical and research settings. CPMs will be defined as ‘utilized in clinical settings’ if they have been clinically used outside research settings. In case of clinical utilization, further information about the scale of utilization (number of centres, local or international) will be collected. In case of utilization in research settings, questions about the type and study setting will be asked (e.g., for validation or impact studies, or for study inclusion in observational or experimental studies). Authors will be asked to provide possible documents/links for further verification of results.

We sought feedback on the definitions and piloted the questionnaire with an independent panel of 14 researchers with experience in implementing prediction models.

Data synthesis

Data collected by the online survey will be summarised using descriptive statistics (median (upper quartile, lower quartile) or N (%), as appropriate). We will report the number of CPMs that have been implemented and/or clinically utilized. Additionally, we will stratify our results by decade of CPM publication. Moreover, we will present results separately for models that have and have not been validated externally and that have and have not undergone impact analyses. If the number of CPMs with each outcome permits, we will conduct logistic regressions with implementation and utilization as outcomes, to obtain adjusted ORs for publication date (not categorised), external validation and impact analysis.
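The stratified descriptive summary described above could be tabulated as follows. This is a minimal sketch on hypothetical records, assuming decades are defined by calendar decade (1990s, 2000s and so on), which the protocol does not specify; the function name and data are illustrative only.

```python
from collections import defaultdict


def proportion_by_decade(records):
    """Summarise (publication_year, utilized) pairs per calendar decade.

    Returns {decade: (n_utilized, n_total, proportion)} in ascending decade order.
    """
    counts = defaultdict(lambda: [0, 0])
    for year, utilized in records:
        decade = year // 10 * 10
        counts[decade][0] += int(utilized)
        counts[decade][1] += 1
    return {d: (k, n, k / n) for d, (k, n) in sorted(counts.items())}


# Hypothetical survey results: publication year and whether the CPM is utilized.
records = [
    (1996, False), (1999, True),
    (2004, True), (2008, False),
    (2015, True), (2020, False),
]

summary = proportion_by_decade(records)
for decade, (k, n, prop) in summary.items():
    print(f"{decade}s: {k}/{n} utilized ({prop:.0%})")
```

The same tabulation could be repeated for implementation, and separately for models with and without external validation or impact analysis.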

Ethics and dissemination

No patient data are involved in the research. Most information will be extracted from published articles. We request written informed consent from the survey respondents. Results will be disseminated through publication in a peer-reviewed journal and presented at international conferences.

Patient and public involvement

Not applicable.

Supplementary Material

Footnotes

Twitter: @_BArshi_, @laure_wynants, @LauraCowley28

Contributors: For this manuscript, BA has contributed to conceptualisation, writing, methodology, reviewing and editing. LW and LS have contributed to conceptualisation, methodology, administration, supervision, reviewing and editing. LEC, ER and KR have contributed to conceptualisation, reviewing and editing.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: None declared.

Patient and public involvement: Patients and/or the public were not involved in the design, or conduct, or reporting, or dissemination plans of this research.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Ethics statements

Patient consent for publication

Not applicable.

References

- 1. Liao W-L, Tsai F-J. Personalized medicine: a paradigm shift in healthcare. BioMedicine 2013;3:66–72. doi:10.1016/j.biomed.2012.12.005

- 2. Collins GS, Reitsma JB, Altman DG, et al. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. British Journal of Surgery 2015;102:148–58. doi:10.1002/bjs.9736

- 3. Geersing G-J, Bouwmeester W, Zuithoff P, et al. Search filters for finding prognostic and diagnostic prediction studies in MEDLINE to enhance systematic reviews. PLoS One 2012;7:e32844. doi:10.1371/journal.pone.0032844

- 4. Cowley LE, Farewell DM, Maguire S, et al. Methodological standards for the development and evaluation of clinical prediction rules: a review of the literature. Diagn Progn Res 2019;3:16. doi:10.1186/s41512-019-0060-y

- 5. Moons KGM, de Groot JAH, Bouwmeester W, et al. Critical appraisal and data extraction for systematic reviews of prediction modelling studies: the CHARMS checklist. PLoS Med 2014;11:e1001744. doi:10.1371/journal.pmed.1001744

- 6. Moons KGM, Wolff RF, Riley RD, et al. PROBAST: a tool to assess risk of bias and applicability of prediction model studies: explanation and elaboration. Ann Intern Med 2019;170:W1. doi:10.7326/M18-1377

- 7. de Jong Y, Ramspek CL, Zoccali C, et al. Appraising prediction research: a guide and meta-review on bias and applicability assessment using the prediction model risk of bias assessment tool (PROBAST). Nephrology (Carlton) 2021;26:939–47. doi:10.1111/nep.13913

- 8. Moons KGM, Kengne AP, Grobbee DE, et al. Risk prediction models: II. external validation, model updating, and impact assessment. Heart 2012;98:691–8. doi:10.1136/heartjnl-2011-301247

- 9. Wessler BS, Nelson J, Park JG, et al. External validations of cardiovascular clinical prediction models: a large-scale review of the literature. Circ: Cardiovascular Quality and Outcomes 2021;14. doi:10.1161/CIRCOUTCOMES.121.007858

- 10. Collins GS, Omar O, Shanyinde M, et al. A systematic review finds prediction models for chronic kidney disease were poorly reported and often developed using inappropriate methods. J Clin Epidemiol 2013;66:268–77. doi:10.1016/j.jclinepi.2012.06.020

- 11. Gray EP, Teare MD, Stevens J, et al. Risk prediction models for lung cancer: a systematic review. Clin Lung Cancer 2016;17:95–106. doi:10.1016/j.cllc.2015.11.007

- 12. Kleinrouweler CE, Cheong-See FM, Collins GS, et al. Prognostic models in obstetrics: available, but far from applicable. Am J Obstet Gynecol 2016;214:79–90. doi:10.1016/j.ajog.2015.06.013

- 13. Salazar de Pablo G, Studerus E, Vaquerizo-Serrano J, et al. Implementing precision psychiatry: a systematic review of individualized prediction models for clinical practice. Schizophr Bull 2021;47:284–97. doi:10.1093/schbul/sbaa120

- 14. Mann S, Berdahl CT, Baker L, et al. Artificial intelligence applications used in the clinical response to COVID-19: a scoping review. PLOS Digit Health 2022;1:e0000132. doi:10.1371/journal.pdig.0000132

- 15. Knottnerus A, Tugwell P. STROBE—a checklist to strengthen the reporting of observational studies in epidemiology. Journal of Clinical Epidemiology 2008;61:323. doi:10.1016/j.jclinepi.2007.11.006

- 16. Page MJ, McKenzie JE, Bossuyt PM, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. Syst Rev 2021;10:89. doi:10.1186/s13643-021-01626-4

- 17. Ingui BJ, Rogers MAM. Searching for clinical prediction rules in MEDLINE. J Am Med Inform Assoc 2001;8:391–7. doi:10.1136/jamia.2001.0080391
