Summary

When stimulated, neural populations in the visual cortex exhibit fast rhythmic activity with frequencies in the gamma band (30–80 Hz). The gamma rhythm manifests as a broad resonance peak in the power-spectrum of recorded local field potentials, which exhibits various stimulus dependencies. In particular, in macaque primary visual cortex (V1), the gamma peak frequency increases with increasing stimulus contrast. Moreover, this contrast dependence is local: when contrast varies smoothly over visual space, the gamma peak frequency in each cortical column is controlled by the local contrast in that column’s receptive field. No parsimonious mechanistic explanation for these contrast dependencies of V1 gamma oscillations has been proposed. The stabilized supralinear network (SSN) is a mechanistic model of cortical circuits that has accounted for a range of visual cortical response nonlinearities and contextual modulations, as well as their contrast dependence. Here, we begin by showing that a reduced SSN model without retinotopy robustly captures the contrast dependence of gamma peak frequency, and provides a mechanistic explanation for this effect based on the observed non-saturating and supralinear input-output function of V1 neurons. Given this result, the local contrast dependence could be trivially captured by a retinotopic SSN that lacks horizontal synaptic connections between its cortical columns. However, long-range horizontal connections in V1 are in fact strong, and underlie contextual modulation effects such as surround suppression. We thus explored whether a retinotopically organized SSN model of V1 with strong excitatory horizontal connections can exhibit both surround suppression and the local contrast dependence of gamma peak frequency.
We found that retinotopic SSNs can account for both effects, but only when the horizontal excitatory projections are composed of two components with different patterns of spatial fall-off with distance: a short-range component that only targets the source column, combined with a long-range component that targets columns neighboring the source column. We thus make a specific qualitative prediction for the spatial structure of horizontal connections in macaque V1, consistent with the columnar structure of cortex.

When presented with a stimulus, populations of neurons within visual cortices exhibit elevated rhythmic activity with frequencies in the so-called gamma band (30–80 Hz) (Jia et al., 2013; Ray and Maunsell, 2010). These gamma oscillations can be observed in local field potential (LFP) or electroencephalogram (EEG) recordings and, when present, manifest as peaks in the LFP/EEG power-spectra. It has been proposed that gamma oscillations perform key functions in neural processing such as feature binding (Singer, 1999), dynamic communication or routing between cortical areas (Fries, 2005, 2015; Ni et al., 2016; Palmigiano et al., 2017), or as a timing or “clock” mechanism that can enable coding by spike timing (Buzsáki and Chrobak, 1995; Draguhn and Buzsáki, 2004; Fries et al., 2007; Hopfield, 2004; Jefferys et al., 1996). These proposals remain controversial (Burns et al., 2010; Ray and Maunsell, 2010).

While the computational role of gamma rhythms is not well understood, much is known about their phenomenology. For example, defining characteristics of gamma oscillations, such as the width and height of the spectral gamma peak, as well as its location on the frequency axis (peak frequency), exhibit systematic dependencies on various stimulus parameters (Gieselmann and Thiele, 2008; Henrie and Shapley, 2005; Jia et al., 2013; Ray and Maunsell, 2010). In particular, in the primary visual cortex (V1) of macaque monkeys, the power-spectrum gamma peak moves to higher frequencies as the contrast of a large and uniform grating stimulus is increased (Jia et al., 2013; Ray and Maunsell, 2010). This establishes a monotonic relationship between gamma peak frequency and the grating contrast. We will refer to this contrast-frequency relationship, obtained using a grating stimulus with uniform contrast, as the “contrast dependence” of gamma peak frequency.

Moreover, when animals are presented with a stimulus with non-uniform contrast that varies over the visual field (and hence over nearby cortical columns in V1), it is the local stimulus contrast that determines the peak frequency of gamma oscillations at a cortical location (Ray and Maunsell, 2010). Specifically, Ray and Maunsell 2010 used a Gabor stimulus (which has smoothly decaying contrast with increasing distance from the stimulus center), and found that the gamma peak frequency at each V1 recording site matched the prediction of the frequency-contrast relationship obtained from the uniform grating experiment, evaluated at the local Gabor contrast in that site’s receptive field. We refer to this second effect as the “local contrast dependence” of gamma peak frequency.

It is well-known that networks of excitatory and inhibitory neurons with biologically realistic neural and synaptic time-constants can exhibit oscillations with frequency in the gamma band (e.g., Brunel and Wang 2003; Tsodyks et al. 1997; see Buzsáki and Wang 2012 for a review). However, no mechanistic circuit model of visual cortex has been proposed which can robustly and comprehensively account for the contrast dependence of gamma oscillations. Jia et al. 2013 did propose a rate model that accounts for the increase of gamma peak frequency with increasing global contrast. Their treatment only modeled the interactions between a single excitatory and a single inhibitory population, which is sufficient for spatially uniform stimuli, but cannot explain the local contrast dependence of the gamma peak frequency. Moreover, even in the case of a uniform-contrast stimulus, this model could only produce very weak contrast-dependence of peak frequency, and further required a contrast-dependent scaling of the intrinsic time-constant of excitatory neurons. Here, we develop a parsimonious and self-contained mechanistic model (with fixed neural and network parameters) which accounts for the global as well as local contrast dependence of the gamma peak.

It is not clear how the local contrast dependence of gamma oscillations can be reconciled with key features of cortical circuits. This locality would trivially emerge if cortical columns were non- or weakly interacting; in that case each column’s oscillation properties would be determined by its feedforward input (controlled by the local contrast). However, nearby cortical columns do interact strongly via the prominent horizontal connections connecting them (Gilbert and Wiesel, 1989). These interactions manifest, e.g., in contextual modulations of V1 responses, such as in surround suppression (Cavanaugh et al., 2002), which are thought to be partly mediated by horizontal connections (Schwabe et al., 2010).

Surround suppression is the phenomenon wherein stimuli outside the classical receptive field (RF) of V1 neurons, which by themselves cannot drive the cell to respond, nevertheless modulate the cell’s response, typically by suppressing it. Surround suppression results in a non-monotonic “size tuning curve”, which is obtained by measuring a cell’s response to circular gratings of varying sizes centered on that cell’s RF: the response first increases with increasing stimulus size, but then decreases as the grating increasingly covers regions surrounding the RF. Here we test whether a model of V1, featuring biologically plausible horizontal connections, can capture both surround suppression and the local contrast dependence of gamma oscillations.

A parsimonious, biologically plausible model of cortical circuitry which has successfully accounted for a range of cortical contextual modulations and their contrast dependence is the stabilized supralinear network (SSN) (Ahmadian et al., 2013; Rubin et al., 2015). In particular, the SSN robustly captures the contrast dependencies of surround suppression, e.g., that size tuning curves peak at smaller stimulus sizes with increasing stimulus contrast (Rubin et al., 2015). As the SSN is a recurrently connected firing rate model with excitatory and inhibitory neurons, we expect it to be able to exhibit oscillations similar to gamma rhythms. However, to capture fast dynamical phenomena, and in particular the gamma band resonance frequency, it is key to properly account for fast synaptic filtering as provided by the fast ionotropic receptors, AMPA and GABAA (Barbieri et al., 2014; Brunel and Wang, 2003; Ledoux and Brunel, 2011). At the same time, it is useful to include the slower NMDA conductances, to help stabilize the network dynamics given strong overall recurrent excitation. We thus started by extending the SSN model to properly account for input currents through different synaptic receptor types, with different filtering timescales.

Gamma oscillations do not behave like sustained oscillations, as sustained oscillations display a sharp peak in the power spectrum, typically followed by trailing peaks at subsequent harmonics. Such oscillations would also be auto-coherent, i.e. have a consistent phase over multiple oscillation cycles. By contrast, gamma oscillations are not auto-coherent and their timing and duration vary stochastically (Burns et al., 2011, 2010), resulting in a single broad peak in the power-spectrum, with no visible higher harmonics (Jia et al., 2013; Ray and Maunsell, 2010), consistent with transient (damped) and noise-driven oscillations (Burns et al., 2011, 2010; Xing et al., 2012). We therefore studied the SSN in a regime where in the absence of time-dependent external inputs its firing rates reach a steady state, but when perturbed it can exhibit damped oscillations with a characteristic frequency (technically, this means the network is close to, but below, a Hopf bifurcation, i.e., a transition to a regime of sustained oscillations). When perturbed by structureless noise that is sufficiently fast (the biological network’s irregular spiking itself can provide such a noise source), these noise-driven damped oscillations manifest as a resonance peak in the power-spectrum of network activity (Kang et al., 2010; Xing et al., 2012).

We start the Results section by developing an extension of the SSN that models the dynamics of input currents through different synaptic receptor types, with different timescales. We then study a reduced noise-driven SSN composed of two units representing excitatory (E) and inhibitory (I) sub-populations. We show that, for a wide range of biological parameters, this reduced SSN model generates gamma oscillations with peak frequency that robustly increases with increasing external drive to the network. We show that this robust contrast dependence is a consequence of a key feature of the SSN: the supralinear input-output (I/O) function of its neurons (which is known to fit well the non-saturating and expansive relationship between the firing rate and membrane voltage of V1 neurons (Anderson et al., 2000; Priebe and Ferster, 2008)). We next investigate the gamma peak’s local contrast dependence using an expanded, retinotopically organized SSN model of V1, with E and I units in different cortical columns. We show that this network is capable of reproducing the local contrast dependence of gamma peak frequency while exhibiting realistic surround suppression. However, as we show, this is only possible when the spatial fall-off of excitatory connection strengths has two distinct components: a sharp fall-off across a cortical column’s width, and a slower fall-off that can extend over several columns. This “local plus long-range” spatial structure of horizontal connections, which we will refer to more briefly as “columnar structure”, balances the trade-off between capturing local contrast dependence (requiring short-range or weak horizontal connections) and surround suppression (requiring the opposite). We show that achieving this balance does not require fine-tuning of parameters and is robust to considerable parameter variations.
We end by providing a mathematical explanation of the mechanism underlying local contrast dependence reconciled with strong surround suppression in this model, based on the structure of its normal oscillatory modes. Finally, in the Discussion, we conclude by discussing the implications of our findings for the structure of cortical horizontal connections and the shape of neural input/output nonlinearities.

Results

Noise-driven SSN with multiple synaptic currents

As motivated in the introduction, and with the aim of modeling gamma oscillations, we started by extending the SSN model to properly account for synaptic currents through different receptor types with different kinetics. In its original form, the SSN’s activity dynamics are governed by standard firing rate equations (Dayan and Abbott, 2001), in which each neuron is described by a single dynamical variable: either its output firing rate (Ahmadian et al., 2013; Rubin et al., 2015) or its total input current (Hennequin et al., 2018). In the extended model, by contrast, each neuron will have more than one dynamical variable, corresponding to its input currents through different synaptic receptor types. Concretely, we will include the three main ionotropic synaptic receptors in the model: AMPA and NMDA receptors which mediate excitatory inputs, and GABAA (henceforth abbreviated to GABA) receptors which mediate the inhibitory input. For a network of N neurons, we will arrange these input currents to different neurons into three N-dimensional vectors, $\mathbf{h}^\alpha_t$, where α ∈ {AMPA, NMDA, GABA} denotes the receptor type. To model the kinetics of different receptors, we will ignore the very fast rise-times of all receptor types (as the corresponding timescales are much faster than the characteristic timescales of gamma oscillations), and only account for the receptor decay-times, which we denote by $\tau_\alpha$. With this assumption, the dynamics of $\mathbf{h}^\alpha_t$ are governed by (see Methods for a derivation)

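$$\tau_\alpha \frac{d\mathbf{h}^\alpha_t}{dt} = -\mathbf{h}^\alpha_t + W^\alpha \mathbf{r}_t + \mathbf{I}^\alpha_t \qquad (1)$$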

where $\mathbf{r}_t$ is the vector of firing rates, $W^\alpha \mathbf{r}_t$ and $\mathbf{I}^\alpha_t$ denote the recurrent and external inputs to the network mediated by receptor α, respectively, and $W^\alpha$ are N × N matrices denoting the contributions of different receptor types to the recurrent connectivity weights; the total recurrent connectivity weight matrix is thus given by $W \equiv \sum_\alpha W^\alpha$. As in the cortex, the external input to the network is excitatory, and for simplicity we further assume that it enters only through AMPA receptors (i.e., $\mathbf{I}^\alpha_t$ is nonzero only for α = AMPA, and we will thus drop this superscript and denote this input by $\mathbf{I}_t$); including an NMDA component would not affect our results, as NMDA is slow relative to gamma-band timescales. To close the system for the dynamical variables $\mathbf{h}^\alpha_t$, we have to relate the output rate of a neuron to its total input current. The fast synaptic filtering provided by AMPA and GABA allows for a static (or instantaneous) approximation to the input-output (I/O) transfer function of neurons (Brunel et al., 2001; Fourcaud and Brunel, 2002) (see Methods for further justification of this approximation):

$$\mathbf{r}_t = F\Big(\sum_\alpha \mathbf{h}^\alpha_t\Big) \qquad (2)$$

where the I/O function F(⋅) acts element-wise on its vector argument. As in the original SSN, we take this I/O transfer function to be a supralinear rectified power-law, which is the essential ingredient of the SSN (see Fig. 1A inset): $F(h) = k[h]_+^n$, where k is a positive constant, n > 1 (corresponding to supralinearity), and $[x]_+ \equiv \max(0, x)$ denotes rectification. While the I/O function of biological neurons saturates at high firing rates (e.g., due to refractoriness), cortical firing rates stay relatively low throughout their natural dynamic range. In fact, in V1 neurons the relationship between the firing rate and the mean membrane potential (an approximate surrogate for the neuron’s net input) shows no saturation throughout the entire range of firing rates driven by visual stimuli, and is well approximated by a supralinear rectified power-law (Anderson et al., 2000; Priebe and Ferster, 2008).
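A minimal Python sketch of this I/O function and of its slope F′(h), the neural gain that becomes important below. The values k = 0.04 and n = 2 are illustrative assumptions rather than fitted parameters; h is taken in mV and rates in Hz.

```python
# Illustrative, assumed parameters: k in Hz/mV^n, and n > 1 for supralinearity.
K = 0.04
N_EXP = 2.0


def io_function(h: float, k: float = K, n: float = N_EXP) -> float:
    """Rectified power-law I/O function F(h) = k * [h]_+ ** n (rate in Hz)."""
    return k * max(h, 0.0) ** n


def io_gain(h: float, k: float = K, n: float = N_EXP) -> float:
    """Gain F'(h) = n * k * [h]_+ ** (n - 1); zero below the rectification point."""
    if h <= 0.0:
        return 0.0
    return n * k * h ** (n - 1)


if __name__ == "__main__":
    for h in (0.0, 5.0, 10.0, 20.0):
        print(f"h = {h:5.1f} mV  rate = {io_function(h):6.2f} Hz  gain = {io_gain(h):5.2f} Hz/mV")
```

Because n > 1, the gain keeps growing with the input (with these numbers it doubles from 0.4 to 0.8 Hz/mV as h goes from 5 to 10 mV), whereas a saturating I/O function would eventually have a decreasing gain.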

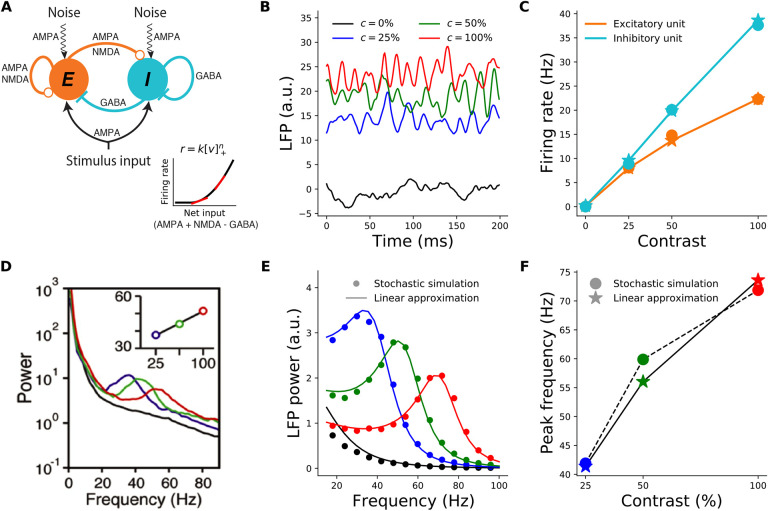

Figure 1.

Contrast dependence of the gamma peak frequency in the 2-population model. A: Schematic of the 2-population Stabilized Supralinear Network (SSN). Excitatory (E) connections end in a circle; inhibitory (I) connections end in a line. Each unit represents a sub-population of V1 neurons of the corresponding E/I type. Both receive inputs from the stimulus, as well as noise input. Inset: The rectified power-law Input/Output transfer function of SSN units (black). Red lines indicate the slope of the I/O function at particular locations. B: Local field potential (LFP) traces, modeled as total net input to the E unit, from the stochastic model simulations under four different stimulus contrasts (c): 0% (black) equivalent to no stimulus or spontaneous activity, 25% (blue), 50% (green), 100% (red). The same color scheme for stimulus contrast is used throughout the paper. (Note that we take stimulus strength (input firing rate) to be proportional to contrast, although in reality it is monotonic but sublinear in contrast, Kaplan et al., 1987). C: Mean firing rates of the excitatory (orange) and inhibitory (cyan) units as a function of contrast, from the stochastic simulations (dots) and the noise-free approximation of the fixed point, Eq. (3) (stars). Note that the dots and stars closely overlap. D: Reproduction of figure 1I from (Ray and Maunsell, 2010) showing the average of experimentally measured LFP power-spectra in Macaque V1. The inset shows the dependence of gamma peak frequency on the contrast of the grating stimulus covering the recording site’s receptive field. E: LFP power-spectra for c = 0%, 25%, 50%, 100% (black, blue, green, and red curves, respectively) calculated from the noise-driven stochastic SSN simulations (dots), or using the linearized approximation (solid lines). 
F: Gamma peak frequency as a function of contrast, obtained from power-spectra calculated using stochastic simulations (dots and dashed line) or the linearized approximation (stars and solid line).

Two-population model

We start by studying a reduced two-population model of V1 consisting of two units (or representative mean-field neurons): one excitatory and one inhibitory unit, respectively representing the excitatory and inhibitory neural sub-populations in the retinotopically relevant region of V1. This reduced model is appropriate for studying conditions in which the spatial profile of the activity is irrelevant, e.g., for a full-field grating stimulus where we can assume the relevant V1 network is uniformly activated by the stimulus. Both units receive external inputs, and make reciprocal synaptic connections with each other as well as themselves (Fig. 1 A).

As pointed out in the introduction, empirical evidence is most consistent with visual cortical gamma oscillations resulting from noise-driven fluctuations, and not from sustained coherent oscillations (Burns et al., 2011, 2010; Kang et al., 2010; Xing et al., 2012). To model such noise-driven oscillations using the SSN, as in (Hennequin et al., 2018), we assumed the external input consists of two terms, $\mathbf{I}_t = \mathbf{I}_{DC} + \boldsymbol{\eta}_t$, where $\mathbf{I}_{DC}$ represents the feedforward stimulus drive to the network (by a steady time-independent stimulus) and scales with the contrast of the visual stimulus, and $\boldsymbol{\eta}_t$ represents the stochastic noise input to the network. This input noise could be attributed to several sources, including sources that are (biologically) external or internal to V1. External noise can originate upstream in the lateral geniculate nucleus (LGN) of thalamus, or in feedback from higher areas. Internally generated noise results from the network’s own irregular spiking (not explicitly modeled) which survives mean-field averaging as a finite-size effect (given the finite size of the implicitly-modeled spiking neuron sub-populations underlying the SSN’s units). For parsimony, we assumed noise statistics are independent of stimuli, and thus of contrast.¹ More specifically, we assumed that noise inputs to different neurons are independent, and each component is temporally correlated pink noise with a correlation time on the order of a few milliseconds (our main results are robust to changes in this parameter, as well as to the introduction of input noise correlation across neurons).
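To make the noise input concrete, here is a minimal sketch of independent, temporally correlated noise sources with a correlation time of a few milliseconds. As an assumed stand-in for the noise model specified in the Methods, it uses an Ornstein-Uhlenbeck process (low-pass filtered white noise), discretized exactly so that its stationary standard deviation is `sigma` and its autocorrelation decays as exp(−lag/τ). The function name `ou_noise` and all numerical values are hypothetical.

```python
import math
import random


def ou_noise(n_steps: int, dt: float, tau: float, sigma: float, seed: int = 0) -> list[float]:
    """Exactly discretized Ornstein-Uhlenbeck noise (hypothetical stand-in).

    Stationary standard deviation is `sigma`; the autocorrelation decays as exp(-lag / tau).
    """
    rng = random.Random(seed)
    decay = math.exp(-dt / tau)
    kick = sigma * math.sqrt(1.0 - decay * decay)
    x = rng.gauss(0.0, sigma)  # draw the initial value from the stationary distribution
    samples = []
    for _ in range(n_steps):
        samples.append(x)
        x = decay * x + kick * rng.gauss(0.0, 1.0)
    return samples


if __name__ == "__main__":
    dt, tau_noise = 1e-4, 5e-3  # 0.1 ms steps, 5 ms correlation time (assumed)
    eta_e = ou_noise(10_000, dt, tau_noise, sigma=1.0, seed=1)  # noise to the E unit
    eta_i = ou_noise(10_000, dt, tau_noise, sigma=1.0, seed=2)  # independent noise to the I unit
    print(eta_e[:3], eta_i[:3])
```

Using separate seeds gives independent noise for the two units, matching the independence assumption in the text; correlated noise could be obtained by mixing a shared and a private source.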

For the first results shown in Fig. 1, we directly simulated the stochastic Eq. (1). Fig. 1C (dots) shows the average firing rates found in these simulations, and their contrast-dependence. The LFP signal is thought to result primarily from inputs to pyramidal cells, as they have relatively large dipole moments (Einevoll et al., 2013); we therefore took the net input to the E sub-population to represent the LFP signal. Fig. 1B shows examples of raw simulated LFP traces for different stimulus contrasts. For high enough contrast (including all nonzero contrasts shown), the LFP signal exhibits oscillatory behavior. These oscillations can be studied via their power-spectra (Fig. 1E, dots; see Methods). As Fig. 1F shows, the peak frequencies of the simulated LFP power-spectra shift to higher frequencies with increasing contrast. The two-population SSN model thus captures the empirically observed contrast dependence of gamma peak frequency (compare Fig. 1F with Fig. 1-I of Ray and Maunsell 2010 reproduced here as Fig. 1D).

To understand this behavior better, we employed a linearization scheme for calculating the LFP power-spectra. The linearization method allows for faster numerical computation of the LFP power-spectra, without the need to simulate the stochastic system Eq. (1). More importantly, the linearized framework allows for analytical approximations and insights, which, as we show below, elucidate the mechanism underlying the contrast dependence of the gamma peak. We thus explain this approximation in some detail here (see Methods for further details). In any stimulus condition (corresponding to a given $\mathbf{I}_{DC}$), we first find the network’s steady state in the absence of noise, by numerically solving the noise-free version of Eq. (1) (without linearization). The corresponding fixed-point equations can be simplified if we sum them over α and define $\mathbf{h}^* \equiv \sum_\alpha \mathbf{h}^{\alpha*}$, where $\mathbf{h}^{\alpha*}$ denotes the fixed point of $\mathbf{h}^\alpha_t$, using $W = \sum_\alpha W^\alpha$. We then arrive at the same fixed-point equation for $\mathbf{h}^*$ as in the original SSN (Ahmadian et al., 2013):

$$\mathbf{h}^* = W F(\mathbf{h}^*) + \mathbf{I}_{DC} \qquad (3)$$
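The statement that the noise-free Eq. (1), summed over receptor types, settles on the fixed point of Eq. (3) can be checked numerically. The sketch below integrates noise-free Eq. (1) for two units with forward Euler and compares the summed input currents with a relaxation solution of Eq. (3). Everything numeric is an illustrative assumption: the weights, a 20 mV drive, τ_AMPA = 4 ms, τ_NMDA = 100 ms, τ_GABA = 5 ms, and the split of W in which excitatory (E-column) weights are shared between AMPA and NMDA with an assumed NMDA fraction of 0.2 while inhibitory weights are carried by GABA.

```python
# Illustrative, assumed parameters (not values reported in the paper).
K, N_EXP = 0.04, 2.0                        # F(h) = K * [h]_+ ** N_EXP
W = [[1.0, -0.8],                            # W[a][b]: total weight from unit b to unit a
     [1.2, -0.6]]                            # unit order (E, I); inhibitory weights negative
RHO_NMDA = 0.2                               # assumed NMDA fraction of excitatory weights
TAU = {"AMPA": 0.004, "NMDA": 0.100, "GABA": 0.005}  # decay times (s)


def F(h):
    """Rectified power-law I/O function applied element-wise."""
    return [K * max(x, 0.0) ** N_EXP for x in h]


def receptor_weights():
    """Split W into W^AMPA + W^NMDA (E column) and W^GABA (I column), summing to W."""
    return {
        "AMPA": [[(1.0 - RHO_NMDA) * W[a][0], 0.0] for a in range(2)],
        "NMDA": [[RHO_NMDA * W[a][0], 0.0] for a in range(2)],
        "GABA": [[0.0, W[a][1]] for a in range(2)],
    }


def simulate_noise_free(i_dc, t_max=1.5, dt=1e-4):
    """Forward-Euler integration of noise-free Eq. (1); I_DC enters through AMPA only."""
    w_alpha = receptor_weights()
    h = {alpha: [0.0, 0.0] for alpha in TAU}
    for _ in range(int(round(t_max / dt))):
        # Eq. (2): rates depend on the total input current summed over receptors.
        r = F([h["AMPA"][a] + h["NMDA"][a] + h["GABA"][a] for a in range(2)])
        h = {
            alpha: [
                h[alpha][a]
                + dt / tau * (-h[alpha][a]
                              + sum(w_alpha[alpha][a][b] * r[b] for b in range(2))
                              + (i_dc[a] if alpha == "AMPA" else 0.0))
                for a in range(2)
            ]
            for alpha, tau in TAU.items()
        }
    return [h["AMPA"][a] + h["NMDA"][a] + h["GABA"][a] for a in range(2)]


def fixed_point(i_dc, eps=0.05, tol=1e-10, max_iter=100_000):
    """Solve Eq. (3), h* = W F(h*) + I_DC, by damped (relaxation) iteration from h = 0."""
    h = [0.0, 0.0]
    for _ in range(max_iter):
        r = F(h)
        resid = [-h[a] + sum(W[a][b] * r[b] for b in range(2)) + i_dc[a] for a in range(2)]
        if max(abs(x) for x in resid) < tol:
            return h
        h = [h[a] + eps * resid[a] for a in range(2)]
    raise RuntimeError("fixed-point iteration did not converge")


if __name__ == "__main__":
    i_dc = [20.0, 20.0]  # mV, assumed stimulus drive
    print("simulated total input:", simulate_noise_free(i_dc))
    print("fixed point of Eq. (3):", fixed_point(i_dc))
```

Relaxation only finds fixed points that are stable under the auxiliary dynamics h ← h + ε(−h + WF(h) + I); a Newton solver would be a more general alternative when that is not the case.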

After numerically finding $\mathbf{h}^*$, we then expand Eq. (1) to first order in the noise-driven deviations around the fixed point, $\delta\mathbf{h}^\alpha_t \equiv \mathbf{h}^\alpha_t - \mathbf{h}^{\alpha*}$, to obtain

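$$\tau_\alpha \frac{d\,\delta\mathbf{h}^\alpha_t}{dt} = -\delta\mathbf{h}^\alpha_t + W^\alpha \Phi \sum_\beta \delta\mathbf{h}^\beta_t + \mathbb{1}_{\alpha = \mathrm{AMPA}}\,\boldsymbol{\eta}_t \qquad (4)$$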

where we defined

$$\Phi \equiv \mathrm{diag}\big(F'(\mathbf{h}^*)\big) \qquad (5)$$

where $F'(\mathbf{h}^*)$ denotes the vector of gains (slopes) of the I/O functions of different neurons at the operating point $\mathbf{h}^*$ (see the red tangent lines in Fig. 1A inset), and diag constructs a diagonal matrix from the vector. As we explain in the next subsection, the neural gains and their dependence on the operating point rates (themselves dependent on the stimulus, $\mathbf{I}_{DC}$, via Eq. (3)) play a crucial role in the contrast dependence of gamma peak frequency.

Technically, the linear approximation is valid for small noise strengths, but we found that for the noise levels that elicited fluctuations with realistic sizes, the approximation was very good. As shown in Fig. 1E–F, the LFP power-spectra and their peak frequencies obtained using the linear approximation agree very well with those estimated from the direct stochastic simulations of Eq. (1). The firing rates of E and I units at the fixed-point solution Eq. (3) also provide a very good approximation to their mean steady-state rates, at different contrasts, as obtained from direct stochastic simulations of Eq. (1) (Fig. 1C). Below, we will thus calculate all power-spectra using the computationally faster noise-free determination of the fixed point, Eq. (3), and the linear approximation, Eq. (4), instead of stochastic simulations of Eq. (1).
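A minimal sketch of the linearized calculation follows, under stated assumptions: white noise of variance σ² entering through the AMPA currents (the text uses temporally correlated noise), an assumed split of the weights into receptor types, and the LFP taken as the summed input currents to the E unit. It builds the coupling matrix A of the linear system Eq. (4) and evaluates $S(\omega) = \sigma^2 \sum_a |[c^\top (i\omega - A)^{-1} B]_a|^2$, where c selects the E unit's currents and B routes the noise into the AMPA currents, by solving one small complex linear system per frequency instead of simulating. The helper names are hypothetical.

```python
import math

RECEPTORS = ("AMPA", "NMDA", "GABA")


def split_weights(W, rho_nmda):
    """Assumed split of signed 2x2 weights: E column into AMPA/NMDA, I column into GABA."""
    return {
        "AMPA": [[(1.0 - rho_nmda) * W[a][0], 0.0] for a in range(2)],
        "NMDA": [[rho_nmda * W[a][0], 0.0] for a in range(2)],
        "GABA": [[0.0, W[a][1]] for a in range(2)],
    }


def jacobian(w_alpha, gains, tau):
    """Coupling matrix A of Eq. (4), with states ordered as (receptor, unit) pairs."""
    idx = [(alpha, a) for alpha in RECEPTORS for a in range(2)]
    A = [[0.0] * len(idx) for _ in idx]
    for i, (alpha, a) in enumerate(idx):
        g = 1.0 / tau[alpha]
        for j, (_, b) in enumerate(idx):
            # every receptor current of unit b moves its rate with gain Phi_b
            A[i][j] = g * w_alpha[alpha][a][b] * gains[b]
        A[i][i] -= g
    return A, idx


def solve(M, rhs):
    """Gaussian elimination with partial pivoting for a small complex system."""
    n = len(rhs)
    aug = [list(row) + [rhs[i]] for i, row in enumerate(M)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0j] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def lfp_spectrum(freqs_hz, w_alpha, gains, tau, sigma=1.0):
    """LFP power (summed input currents to E) for white AMPA noise of variance sigma**2."""
    A, idx = jacobian(w_alpha, gains, tau)
    n = len(idx)
    readout = [1.0 if a == 0 else 0.0 for (_, a) in idx]
    g_ampa = 1.0 / tau["AMPA"]
    power = []
    for f in freqs_hz:
        omega = 2.0 * math.pi * f
        # Solve (i*omega*Id - A)^T y = readout, so that y^T = readout^T (i*omega*Id - A)^(-1).
        mt = [[(1j * omega if i == j else 0.0) - A[j][i] for j in range(n)] for i in range(n)]
        y = solve(mt, readout)
        power.append(sigma ** 2 * sum(abs(g_ampa * y[i]) ** 2
                                      for i, (alpha, _) in enumerate(idx) if alpha == "AMPA"))
    return power


if __name__ == "__main__":
    tau = {"AMPA": 0.004, "NMDA": 0.100, "GABA": 0.005}  # seconds (assumed)
    w_alpha = split_weights([[1.0, -0.8], [1.2, -0.6]], rho_nmda=0.2)
    freqs = list(range(5, 101, 5))
    spec = lfp_spectrum(freqs, w_alpha, gains=[1.24, 1.68], tau=tau)  # illustrative gains
    for f, p in zip(freqs, spec):
        print(f"{f:3d} Hz  {p:.3e}")
```

For uncoupled units (all weights zero) the expression reduces to the AMPA low-pass filter, σ²/(1 + ω²τ_AMPA²), which is a convenient correctness check.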

Robustness of the two-population model

To demonstrate that the SSN robustly produces the contrast dependence of gamma peak frequency, we simulated 1000 different instances of the 2-population network with parameters randomly drawn from wide but biologically plausible ranges. The sampled parameters were the weights of the connections between the two units (E → I, I → E) and their self-connections (E → E, I →I), the relative strength of input to the excitatory and inhibitory units, and the NMDA fraction of excitatory synaptic weights.

The parameters were sampled independently except for the enforcement of two inequality constraints which previous work has shown to be necessary for ensuring the network’s dynamical stability without strong inhibition domination leading to very weak excitatory activity (see Methods for details). The parameter set was also rejected if the resulting SSN did not reach a stable fixed point for all studied stimulus conditions (this corresponds to our modelling choice to have the SSN in a damped oscillation regime).
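The sampling procedure can be sketched as rejection sampling. Everything numeric below is an assumption for illustration: the uniform ranges, the input scale, a single inequality |W_EI| W_IE > W_EE |W_II| used as a hypothetical stand-in for the two constraints given in the Methods, and a stability test based on the trace and determinant of the reduced AMPA/GABA Jacobian (NMDA ignored). The NMDA fraction, which the text also samples, does not enter this reduced check and is omitted.

```python
import random

K, N_EXP = 0.04, 2.0                 # illustrative I/O parameters (assumed)
TAU_AMPA, TAU_GABA = 0.004, 0.005    # seconds (assumed)
I_MAX = 40.0                         # mV drive to E at 100% contrast (assumed)
CONTRASTS = (0.25, 0.5, 1.0)


def fixed_point(W, i_dc, eps=0.05, tol=1e-9, max_iter=5_000):
    """Relaxation solver for h* = W F(h*) + I_DC (Eq. 3); returns None on failure."""
    h = [0.0, 0.0]
    for _ in range(max_iter):
        r = [K * max(x, 0.0) ** N_EXP for x in h]
        resid = [-h[a] + W[a][0] * r[0] + W[a][1] * r[1] + i_dc[a] for a in range(2)]
        if max(abs(x) for x in resid) < tol:
            return h
        h = [h[a] + eps * resid[a] for a in range(2)]
        if max(abs(x) for x in h) > 1e4:  # runaway activity: treat as failure
            return None
    return None


def is_stable(W, h_star):
    """Trace/determinant test on the reduced E-I Jacobian built from effective weights."""
    gains = [N_EXP * K * max(x, 0.0) ** (N_EXP - 1) for x in h_star]
    w = [[gains[a] * W[a][b] for b in range(2)] for a in range(2)]
    g_e, g_i = 1.0 / TAU_AMPA, 1.0 / TAU_GABA
    j = [[g_e * (w[0][0] - 1.0), g_e * w[0][1]], [g_i * w[1][0], g_i * (w[1][1] - 1.0)]]
    trace = j[0][0] + j[1][1]
    det = j[0][0] * j[1][1] - j[0][1] * j[1][0]
    return trace < 0 and det > 0


def sample_networks(n_samples, seed=0):
    """Draw random 2-population networks; keep those stable at every contrast."""
    rng = random.Random(seed)
    accepted = []
    for _ in range(n_samples):
        W = [[rng.uniform(0.5, 1.5), -rng.uniform(0.5, 1.5)],
             [rng.uniform(0.5, 1.5), -rng.uniform(0.2, 1.0)]]
        input_ratio = rng.uniform(0.5, 1.5)  # drive to I relative to drive to E
        # Hypothetical stand-in for the Methods' inequality constraints.
        if -W[0][1] * W[1][0] <= -W[0][0] * W[1][1]:
            continue
        ok = True
        for c in CONTRASTS:
            h = fixed_point(W, [c * I_MAX, input_ratio * c * I_MAX])
            if h is None or not is_stable(W, h):
                ok = False
                break
        if ok:
            accepted.append((W, input_ratio))
    return accepted


if __name__ == "__main__":
    kept = sample_networks(100, seed=0)
    print(f"accepted {len(kept)} of 100 sampled networks")
```

Rejecting a draw when any contrast lacks a stable fixed point mirrors the modelling choice of keeping the SSN in the damped-oscillation regime.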

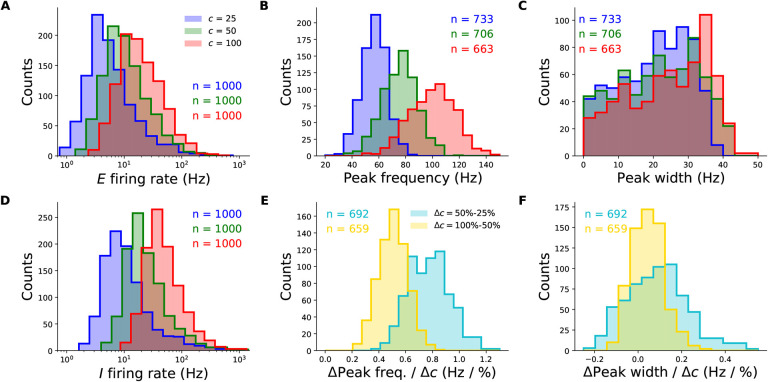

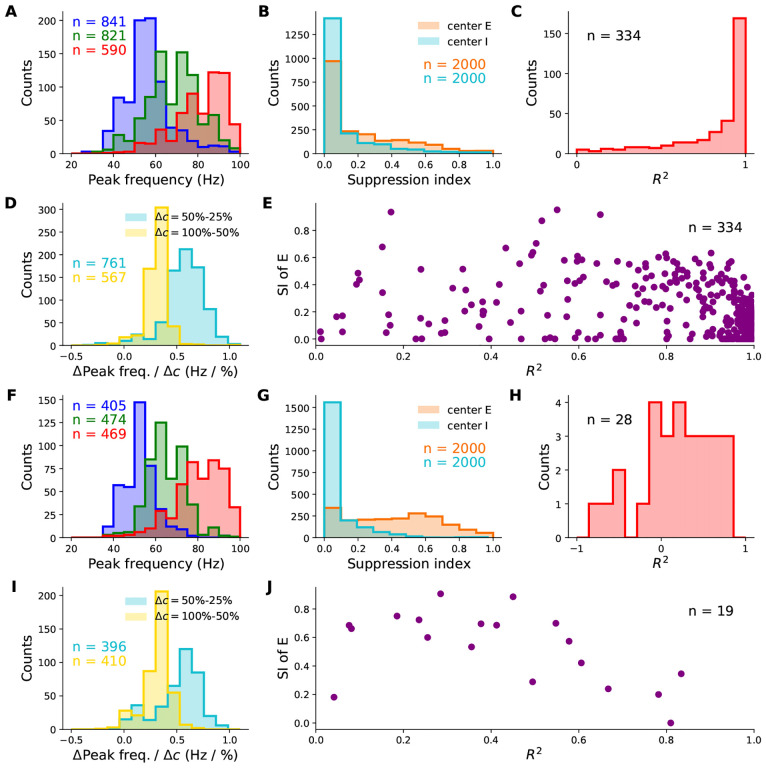

The majority of randomly sampled models produced steady-state excitatory and inhibitory firing rates that were within biologically plausible ranges, across all contrasts (Fig. 2A and D). Furthermore, many two-population networks produced peak frequencies that were in the gamma band (30–80 Hz) for all contrast conditions, though some produced peaks at higher frequencies for the highest contrasts (Fig. 2B). The distributions also shift towards higher frequencies with increasing contrast, suggesting that the two-population SSN is indeed able to robustly reproduce the contrast dependence of gamma peak frequency. To demonstrate this more directly, we show the distributions of the changes in peak frequency normalized by the change in contrast in Fig. 2E. No sampled network produced a negative change in peak frequency with increasing stimulus contrast. As a further corroboration of our model, we also studied how the width of the gamma peak changed with increasing contrast. While Jia et al. (2013) and Ray and Maunsell (2010) did not quantify changes in their gamma peak width with increasing contrast, their results suggest that no significant systematic change in width was observed (Fig. 1D). Similarly, in our two-population network, changes in the half-width of the gamma peak are relatively small, and the direction of change can be positive or negative with similar probability (Fig. 2F).

Figure 2.

Robustness of the contrast-dependence of gamma peak frequency to network parameter variations. One thousand 2-population SSNs were simulated with randomly sampled parameters (but conditioned on producing stable noise-free steady-states), across wide biologically plausible ranges. All histograms show counts of sampled networks; the total numbers (n’s) vary across different histograms, as different subsets of networks produced the corresponding feature or value in the corresponding condition (e.g., a gamma peak at 50% contrast). A: Distributions of the excitatory unit’s firing rate in response to 25%, 50%, and 100% contrast stimuli (blue, green, red), plotted on a logarithmic scale. All networks are shown for all contrasts. B: Distributions of the gamma peak frequencies at different stimulus contrasts. The n’s (upper right) give the number of networks with a power spectrum peak above 20 Hz. C: Distributions of the gamma peak widths at different stimulus contrasts. D: Same as panel A, but for the inhibitory unit. E: Distributions of the change in gamma peak-frequency normalized by the change in stimulus contrast, either between 25% and 50% (cyan) or between 50% and 100% (yellow). F: Same as panel E, but for gamma peak-width.

Mechanism underlying the contrast dependence of gamma peak frequency

As we will now show, the SSN sheds light on the mechanism underlying the contrast dependence of the gamma peak, and specifically pins it to the increasing neuronal gain with increasing neuronal activity, due to the expansive, supralinear nature of the neuronal I/O transfer function. This also explains the robustness of the effect to changes in connectivity and external input parameters as demonstrated in the previous subsection.

In the linearized approximation, the LFP power-spectrum (see Eqs. (24)–(26) in Methods) can be expressed in terms of the so-called Jacobian matrix, i.e., the matrix of couplings of the dynamical variables, $\delta\mathbf{h}^\alpha_t$, in the linear system Eq. (4); thus, for a network of N neurons, the Jacobian is a 3N × 3N matrix, or 6 × 6 in the two-population model (see Eqn. 33 for the explicit form). The existence of damped oscillations and the value of their frequency (which is the resonance frequency manifesting as a peak in the power-spectrum) are in turn determined by the existence of complex eigenvalues of the Jacobian matrix and the values of their imaginary parts. Previously, the eigenvalues of the Jacobian for a standard E-I firing rate network, without different synaptic current types, were analyzed by Tsodyks et al. 1997, and conditions for emergence of (damped or sustained) oscillations were found. In Appendix 1, we show that, given the slowness of NMDA receptors relative to gamma timescales, the effect of NMDA receptors on the relevant complex eigenvalues of the Jacobian can be safely ignored, and as a result, only two (out of 6) eigenvalues of the resulting Jacobian can become complex (and thus able to create a gamma peak). Moreover, this pair (which we denote by λ±) corresponds to the two eigenvalues of a standard E-I rate model (Tsodyks et al., 1997) whose E and I neural time-constants are given, respectively, by the AMPA and GABA decay times:

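$$\lambda_\pm = \frac{1}{2}\Big[\gamma_E(\tilde W_{EE} - 1) + \gamma_I(\tilde W_{II} - 1)\Big] \pm \frac{1}{2}\sqrt{\Big[\gamma_E(\tilde W_{EE} - 1) - \gamma_I(\tilde W_{II} - 1)\Big]^2 + 4\gamma_E\gamma_I\,\tilde W_{EI}\tilde W_{IE}} \qquad (6)$$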

where $\gamma_E \equiv 1/\tau_{AMPA}$ and $\gamma_I \equiv 1/\tau_{GABA}$. Here we defined

$$\tilde W_{ab} \equiv \Phi_a W_{ab}, \qquad a, b \in \{E, I\} \qquad (7)$$

where $W_{ab}$ is the total synaptic weight from unit b to unit a (signed, i.e., negative for inhibitory connections), and $\Phi_a$ (as in Eq. (5)) is the gain of unit a, i.e., the slope of its I/O function at the operating point set by the stimulus. We refer to $\tilde W_{ab}$ as effective synaptic connection weights. Unlike raw synaptic weights, these effective weights are modulated by the neural gains, and thereby by the activity levels at the steady-state operating point, which is in turn controlled by the stimulus.
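Eqs. (6)–(7) can be evaluated directly. The sketch below forms the gain-modulated effective weights W̃_ab = gain_a · W_ab from signed weights (negative for inhibitory connections) and returns the pair λ±. It assumes τ_AMPA = 4 ms and τ_GABA = 5 ms; the weights and gains in the example are illustrative, not the paper's values.

```python
import cmath


def effective_weights(W, gains):
    """Eq. (7): W~_ab = gain_a * W_ab, with signed W (inhibitory entries negative)."""
    return [[gains[a] * W[a][b] for b in range(2)] for a in range(2)]


def eigenvalues(w_eff, tau_ampa=0.004, tau_gaba=0.005):
    """Eq. (6): the pair lambda_+/- of the reduced E-I system (NMDA neglected)."""
    g_e, g_i = 1.0 / tau_ampa, 1.0 / tau_gaba
    a = g_e * (w_eff[0][0] - 1.0)  # E-E diagonal entry of the reduced Jacobian
    d = g_i * (w_eff[1][1] - 1.0)  # I-I diagonal entry of the reduced Jacobian
    root = cmath.sqrt((a - d) ** 2 + 4.0 * g_e * g_i * w_eff[0][1] * w_eff[1][0])
    return (a + d + root) / 2.0, (a + d - root) / 2.0


if __name__ == "__main__":
    w = effective_weights([[1.0, -0.8], [1.2, -0.6]], gains=[0.76, 0.9])
    lam_plus, lam_minus = eigenvalues(w)
    print(lam_plus, lam_minus)
```

A simple consistency check is that both returned values satisfy the characteristic equation λ² − Tλ + D = 0 of the 2 × 2 Jacobian with entries γ_E(W̃_EE − 1), γ_E W̃_EI, γ_I W̃_IE and γ_I(W̃_II − 1), where T is its trace and D its determinant.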

As mentioned, (damped or sustained) oscillations emerge when the above eigenvalues are complex (in which case λ+ and λ- are complex conjugates). This happens when the expression under the radical in Eqn. 6 is negative, i.e.

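$$-4\gamma_E\gamma_I\,\tilde W_{EI}\tilde W_{IE} > \Big[\gamma_E(\tilde W_{EE} - 1) - \gamma_I(\tilde W_{II} - 1)\Big]^2 \qquad (8)$$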

Qualitatively, the left-hand side of the above inequality is a measure of the strength of the effective negative feedback between the E and I sub-populations, while the right-hand side is a measure of the positive feedback in the network (arising from the network’s recurrent excitation and disinhibition). Oscillations thus emerge when the negative feedback loop between E and I is sufficiently strong, in the precise sense of Eqn. 8.

During spontaneous activity (when the external input is zero or very weak), the rates of both E and I populations are very small. This means that the spontaneous activity operating point sits near the rectification point of the neuronal I/O transfer functions, where the neural gains are very low (Fig. 1A left). Thus in the spontaneous activity state, the dimensionless effective connections are relatively small. In the limit $\tilde W_{ab} \to 0$, the left-hand side of Eqn. 8 goes to zero, while its right-hand side goes to $(\gamma_I - \gamma_E)^2$, which is generically positive; hence the inequality is not satisfied. This shows that the spontaneous activity state generically does not exhibit oscillations, in agreement with the lack of empirically observed gamma oscillations during spontaneous activity.
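The small-gain argument can be checked with a short sketch of inequality (8). All effective weights are multiplied by a common factor s, mimicking a uniform drop in neural gain toward the rectification point; the time constants (τ_AMPA = 4 ms, τ_GABA = 5 ms) and the effective weights are illustrative assumptions.

```python
def oscillates(w_eff, tau_ampa=0.004, tau_gaba=0.005):
    """Inequality (8): True if effective E-I negative feedback beats positive feedback."""
    g_e, g_i = 1.0 / tau_ampa, 1.0 / tau_gaba
    negative_feedback = -4.0 * g_e * g_i * w_eff[0][1] * w_eff[1][0]
    positive_feedback = (g_e * (w_eff[0][0] - 1.0) - g_i * (w_eff[1][1] - 1.0)) ** 2
    return negative_feedback > positive_feedback


def scaled(w_eff, s):
    """Scale all effective weights by a common gain factor s (illustrative)."""
    return [[s * x for x in row] for row in w_eff]


if __name__ == "__main__":
    w = [[0.76, -0.608], [1.08, -0.54]]  # illustrative effective weights (E, I)
    for s in (1.0, 0.01, 0.0):
        print(f"s = {s:4.2f}  oscillates: {oscillates(scaled(w, s))}")
```

With these numbers the condition holds at s = 1 but fails at s = 0.01 and at s = 0, where the left-hand side vanishes and the right-hand side equals (γ_I − γ_E)² = 2500 s⁻².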

On the other hand, when condition Eqn. 8 does hold, the frequency of the oscillations is given by the imaginary part of the eigenvalues, i.e.

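$$f = \frac{\mathrm{Im}\,\lambda_+}{2\pi} = \frac{1}{2\pi}\sqrt{-\gamma_E\gamma_I\,\tilde W_{EI}\tilde W_{IE} - \frac{1}{4}\Big[\gamma_E(\tilde W_{EE} - 1) - \gamma_I(\tilde W_{II} - 1)\Big]^2} \qquad (9)$$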

(the division by 2π is because the eigenvalue imaginary parts give the angular frequency). As we will discuss further below, the resonance frequency (or approximately the gamma peak frequency [Fig. 3B]) thus depends on the effective connection weights and is therefore modulated by the neural gains. (Note, however, that the scale or order of magnitude of this frequency is set by γE and γI, i.e., by the decay times of AMPA and GABA, as the effective connection weights are dimensionless and cannot determine the dimensionful scale of the gamma frequency; ignoring the “positive feedback” contribution in Eq. (9), and taking the effective weights to be of order one, we find $f \sim \sqrt{\gamma_E\gamma_I}/(2\pi)$, which for τAMPA ~ τGABA ~ 4–6 ms is on the order of 30–40 Hz.)
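As a back-of-the-envelope check of this scale, assume that the product of the effective E→I and I→E weights is of order one and set the two decay times equal. The estimate then depends only on the synaptic decay time:

```latex
f \approx \frac{\sqrt{\gamma_E \gamma_I}}{2\pi}
  = \frac{1}{2\pi\sqrt{\tau_{AMPA}\,\tau_{GABA}}},
\qquad
\tau_{AMPA} = \tau_{GABA} = 4\ \text{ms} \;\Rightarrow\;
f \approx \frac{1}{2\pi \times 0.004\ \text{s}} \approx 39.8\ \text{Hz},
\qquad
\tau = 5\ \text{ms} \;\Rightarrow\; f \approx 31.8\ \text{Hz},
\qquad
\tau = 6\ \text{ms} \;\Rightarrow\; f \approx 26.5\ \text{Hz}.
```

The range of roughly 27 to 40 Hz is broadly consistent with the stated 30–40 Hz scale; departures of the effective weights from order one shift the value within the gamma band.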

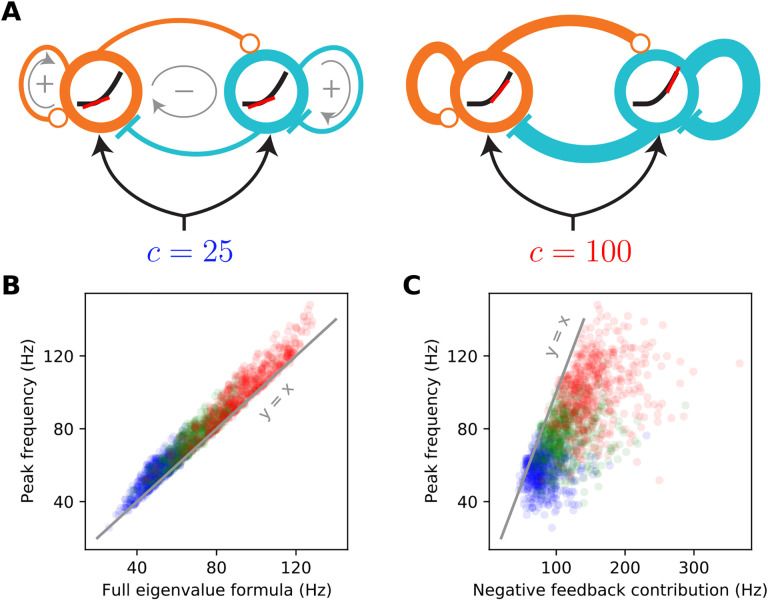

Figure 3.

The supralinear nature of the neural transfer function can explain the contrast dependence of gamma frequency. A: Schematic diagrams of the 2-population SSN (see Fig. 1A) receiving a low (left) or high (right) contrast stimulus. The thickness of the connection lines represents the strength of the corresponding effective connection weight, which is the product of the anatomical weight and the input/output gain of the presynaptic neuron. The gain is the slope (red line) of the neural supralinear transfer function (black curve), shown inside the circles representing the E (orange) and I (cyan) units. A resonance frequency exists when the effective “negative feedback” (gray arrow enclosing a minus sign) dominates the effective “positive feedback” (gray arrows enclosing plus signs), in the sense of the inequality Eq. (8). As the stimulus drive (c) increases (right panel), the neurons’ firing rates at the network’s operating point increase. Because the transfer function is supralinear, this translates into higher neural gains and stronger effective connections. When a resonance frequency already exists at the lower contrast, this strengthening of effective recurrent connections leads to an increase in the gamma peak frequency, approximately given by the imaginary part of the linearized SSN’s complex eigenvalue, Eq. (6). B: The eigenvalue formula (9) provides an excellent approximation to the gamma peak frequency across the sampled networks and contrasts simulated in Fig. 2; correlation coefficient = 0.98 (p < 10⁻⁶) for all data points combined across 25% (blue), 50% (green), and 100% (red) contrasts. C: The negative feedback loop contribution to the resonance frequency (Eq. (9) with the second term under the square root neglected) overestimates gamma peak frequency but is positively and significantly correlated with it; correlation coefficient = 0.67 (p < 10⁻⁶).

Equation (9) provides insight into the contrast dependence of the gamma peak frequency (see Fig. 3). As contrast increases, the fixed-point firing rates increase (Fig. 1C). Because the SSN I/O transfer function is non-saturating and supralinear, the gains of the E and I cells (i.e., the slopes of their I/O transfer functions) are guaranteed to increase as the rates increase (Fig. 3A, right vs. left). The increase in the gains in turn strengthens the effective connection weights, Eq. (7), and therefore the network’s negative E-I feedback loop. When Eq. (8) is satisfied, a rough approximation (Fig. 3B vs. C) to the resonance frequency is obtained by ignoring the positive feedback contribution (the second term under the square root in Eq. (9)). With only the negative feedback contribution retained, it is clear that an increase in neural gains leads to an increase in the resonance frequency (the precise conditions for this to occur are given in Appendix 2 below). Thus, as contrast increases, we expect the gamma peak in the LFP power-spectrum to move to higher frequencies, due to the increasing neural gains and effective connectivity dictated by the supralinear neural I/O transfer function.
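The key step in this argument is that gains rise with rate for a non-saturating supralinear I/O function. This can be checked numerically. The sketch below assumes the power-law form r = k[V]₊ⁿ, with n = 2 and k = 0.0194 taken from Table 1 (units as listed there). Written as a function of the rate, the slope is g = n k^(1/n) r^((n−1)/n), which grows monotonically with r for any n > 1. The function names are illustrative.

```python
def power_law_rate(v, k=0.0194, n=2.0):
    """Supralinear, non-saturating I/O function r = k * [v]_+^n."""
    return k * max(v, 0.0) ** n


def gain_at_rate(r, k=0.0194, n=2.0):
    """Slope dr/dv of the power law, expressed as a function of the rate r."""
    if r <= 0.0:
        return 0.0   # below threshold the slope is zero
    return n * k ** (1.0 / n) * r ** ((n - 1.0) / n)


rates = [1.0, 5.0, 20.0, 50.0]   # illustrative operating-point rates (higher contrast -> higher rate)
gains = [gain_at_rate(r) for r in rates]
print([round(g, 3) for g in gains])
```

Because each effective weight is an anatomical weight times a presynaptic gain, increasing gains scale up all effective weights together. This is the strengthening of the E-I loop described above.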

Retinotopic SSN

We next investigated whether the SSN can account for the locality of the contrast dependence of gamma peak frequency when V1 receives a stimulus with a spatially varying contrast profile. To this end, we expanded our network from two units representing global E and I populations to many retinotopically organized units. We thus model the cortex as a two-dimensional grid with an E and an I sub-population (corresponding to SSN units) at each grid location, corresponding to a cortical column (Fig. 4A). In the retinotopic SSN, the stimulus input can vary across the network: each column can receive a different input, proportional to the contrast within its receptive field. We presented this network with uniform-contrast grating stimuli of various sizes and contrasts (as in Fig. 4A), as well as a Gabor stimulus (Fig. 4E) similar to the one used by Ray and Maunsell (2010), whose contrast decays smoothly with distance from the stimulus center according to a Gaussian profile (see Eq. (54)). Gamma peak frequency shows only a weak dependence on stimulus orientation (Jia et al., 2013), possibly due to averaging of the LFP over an area larger than the size of orientation minicolumns. To keep our model parsimonious and computationally tractable, we therefore chose the size of our cortical columns to be roughly half the hypercolumn size in macaque. We also neglected the orientation map structure and the dependence of external inputs and horizontal connections on preferred orientation.

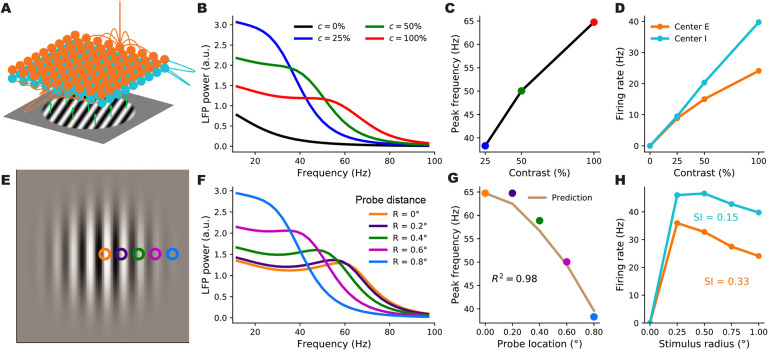

Figure 4.

A retinotopically structured SSN model of V1, with a boost to local, intra-columnar excitatory connectivity, exhibits a local contrast dependence of gamma peak frequency, as well as robust surround suppression of firing rates. A: Schematic of the model’s retinotopic grid, horizontal connectivity, and stimulus inputs. Each cortical column has an excitatory and an inhibitory sub-population (orange and cyan balls), which receive feedforward inputs (green arrows) from the visual stimulus (here a grating) according to the column’s retinotopic location. Orange lines show horizontal connections projecting from two E units; note the boost to local connectivity, represented by the larger central connection. Inhibitory connections (cyan lines) only targeted the same column, to a very good approximation. B: LFP power-spectra in the center column evoked by flat gratings of contrasts 25% (blue), 50% (green), and 100% (red). C: Gamma peak frequency as a function of flat grating contrast. Note that peaks were defined as local maxima of the relative power-spectrum, i.e., the point-wise ratio of the absolute power-spectrum (as shown in B) to the power-spectrum at zero contrast; see Methods. D: Firing rate responses of the E (orange) and I (cyan) center sub-populations as a function of grating contrast. E: The Gabor stimulus with non-uniform contrast (falling off from the center according to a Gaussian). The colored circles show the five different cortical locations (retinotopically mapped to the visual field) probed by the LFP “electrodes”. The orange probe was at the center, and the distance between adjacent probe locations was 0.2° of visual angle (corresponding to 0.4 mm in V1, the width of the model columns). F: LFP power-spectra evoked by the Gabor stimulus at different probe locations (the legend shows the probe distances from the Gabor center). G: Gamma peak frequency of the power-spectra at increasing distance from the Gabor center.
The golden curve is the prediction for the peak frequency at each displaced probe location, based on the Gabor contrast at that location and the gamma peak frequency obtained at the center location for a flat grating of the same contrast. The predictor’s fit to the actual Gabor peak frequencies is very tight (R² = 0.98), exhibiting local contrast dependence of gamma. H: Size tuning curves of the center E (orange) and I (cyan) sub-populations, at full contrast. E and I firing rates vary non-monotonically with grating size and exhibit surround suppression (suppression indices were 0.33 and 0.15, respectively).

We wished to study the trade-off in this model between capturing surround suppression of firing rates and capturing the local dependence of gamma peak frequency, and asked whether there are parameter choices for which the model captures both effects. In particular, we studied how this trade-off is affected by the spatial profile of the horizontal recurrent connections between and within cortical columns. At one extreme, consider a network in which long-range connections between different columns are very weak. Cortical columns are then weakly interacting and can be approximated by the independent two-population networks studied above (Figs. 1–3). In this case, the frequency of the gamma resonance in each column depends only on the gains and activity levels in that column. These are in turn set by the feedforward input to that column, which is controlled by the local stimulus contrast. Therefore, a network with such a connectivity structure would trivially reproduce the local contrast dependence of gamma peak frequency. However, due to the lack of strong inter-columnar interactions, such a network would fail to produce significant surround suppression of firing rates. At the other extreme, inter-columnar connections are strong and, importantly, fall off smoothly (e.g., exponentially) with growing distance between pre- and post-synaptic columns. This is the case in most cortical network models, including the SSN model of Rubin et al. (2015), which captures surround suppression and its various contrast dependencies. However, as we show below, in such networks, when horizontal connections are strong enough to produce surround suppression, the gamma peak frequency is typically shared across all activated columns, regardless of the spatial contrast profile of the stimulus. This connectivity structure therefore cannot capture the local contrast dependence of gamma peak frequency.
Indeed, as shown below, within this class of networks (i.e., those with a smooth spatial connectivity profile), we did not find connectivity parameters (controlling the range and strength of horizontal connections) for which the network could produce significant surround suppression and yet capture the local contrast dependence of gamma (see Figs. 5 and 6).

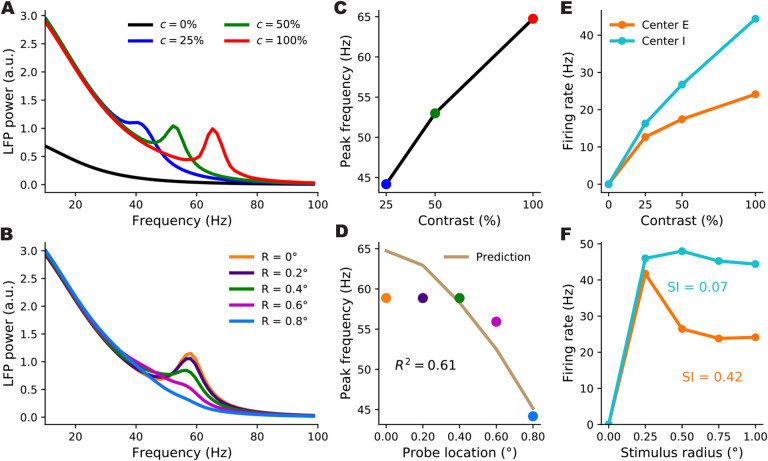

Figure 5.

Behaviour of retinotopic V1 models with and without boosted intra-columnar recurrent excitatory connectivity (columnar vs. non-columnar models, respectively) across their parameter space. We simulated 2000 different networks of each type, with parameters (11 in total) randomly sampled across wide, biologically plausible ranges. All histograms show counts of sampled networks. The total number of samples varies across histograms, because only subsets of networks exhibited the corresponding feature with a value in the shown range. Panels A–E and F–J show results for the columnar and non-columnar models, respectively. A & F: Distributions of gamma peak frequency, recorded at the stimulus center, for different contrasts of the uniform grating (histograms for 25%, 50%, and 100% contrasts in blue, green, and red, respectively). D & I: Distributions of the change in gamma peak frequency normalized by the change in grating contrast, from 25% to 50% (cyan) or from 50% to 100% (yellow). B & G: Distributions of the suppression index for the center E (orange) and I (cyan) sub-populations. C & H: Distributions of the coefficient of determination, R², as a measure of the locality of the gamma peak’s contrast dependence. R² quantifies the goodness-of-fit of the gamma peak frequency predicted from the local Gabor contrast (see Fig. 4G for such a fit in an example network). E & J: The joint distribution of R² and the suppression index of the center E sub-population. Only a very small minority of sampled non-columnar networks produced R² > −1 and R² > 0, as required to appear in H and J, respectively; hence the small n’s, corresponding to 1.4% and 1% of samples, respectively.

Figure 6.

Gamma peak contrast dependence and surround suppression in an example non-columnar retinotopic SSN, without a boost in intra-columnar E connections. Panel descriptions are the same as for the right three columns in Fig. 4. Among 2000 sampled non-columnar networks, this network was the best sample we found in terms of capturing the local contrast dependence of gamma peak frequency. Selection required a nonzero suppression index and realistic LFP power-spectra with a single gamma peak that is not unbiologically sharp. However, as seen in panel D, this network only yielded an R² of 0.61 as an index of the locality of the gamma peak’s contrast dependence, and gamma peak frequency stayed roughly constant over most of the area covered by the Gabor stimulus (panel B).

We then asked whether connectivity structures that combine strong, spatially smooth long-range connections with an additional boost to local, intra-columnar connectivity could produce both of these effects. In such a structure, the connection strength between two units first drops sharply when the distance between the units exceeds the width of a column, and then falls off smoothly over a longer distance, possibly spanning several columns. This modification can be thought of as adding a local, intra-columnar-only component to a connectivity profile with smooth fall-off. Specifically, we let the excitatory horizontal connections in our model have this form. Denoting the strength of the connection from the unit of type b at location y to the unit of type a at location x by Wx,a∣y,b (with a, b ∈ {E, I}), we thus chose:

| (10) |

where δx,y (the local component) is the Kronecker delta: 1 when x = y and 0 otherwise. The parameters λE,E and λI,E lie between 0 and 1 and interpolate between the two extremes of connectivity structure: for λa,E = 0 the horizontal connectivity profile has only one spatial scale and falls off smoothly with distance, while for λa,E = 1 connectivity is purely local and intra-columnar. The orange lines in Fig. 4A show examples of this connectivity profile. Below, we refer to horizontal excitatory connectivity structures with nonzero (and significant) λa,E as columnar, and to those with λa,E = 0 as non-columnar; for short, we also refer to SSN models with these connectivity types as “columnar” and “non-columnar” models or networks, respectively. As in previous work (Rubin et al., 2015), we chose a smooth Gaussian profile for inhibitory connections (see Eq. (51)), with a relatively short range (see the cyan lines in Fig. 4A); inhibitory projections thus essentially only targeted the source column.
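Eq. (10) itself did not survive extraction here. The following is therefore only a sketch of one connectivity profile consistent with the description above: a Kronecker-delta component weighted by λa,E plus a smooth component weighted by 1 − λa,E. Several choices are assumptions made for brevity: the Gaussian shape of the smooth component, its normalization (each source column sends total weight J), and the 1-D line of columns. The model itself uses a 2-D grid. Parameter values come from the Fig. 4 column of Table 1, and the function name is hypothetical.

```python
import math


def columnar_e_weights(n_cols, J, lam, sigma_mm, dx_mm=0.4):
    """W[x][y]: hypothetical excitatory weight from column y to column x on a 1-D line.

    lam * delta(x, y) is the intra-columnar boost; (1 - lam) * K(x - y) is a smooth
    Gaussian component normalised so that each source column sends total weight J.
    """
    W = [[0.0] * n_cols for _ in range(n_cols)]
    for y in range(n_cols):
        kernel = [math.exp(-((x - y) * dx_mm) ** 2 / (2.0 * sigma_mm ** 2))
                  for x in range(n_cols)]
        norm = sum(kernel)
        for x in range(n_cols):
            local = 1.0 if x == y else 0.0   # Kronecker delta
            W[x][y] = J * (lam * local + (1.0 - lam) * kernel[x] / norm)
    return W


# J_EE, lambda_EE, sigma_EE from Table 1 (Fig. 4), and a non-columnar counterpart (lambda = 0).
W_col = columnar_e_weights(16, J=124.0, lam=0.72, sigma_mm=0.296)
W_non = columnar_e_weights(16, J=124.0, lam=0.0, sigma_mm=0.296)
print(round(W_col[8][8], 1), round(W_non[8][8], 1))   # self-column weight, with vs. without boost
```

With this normalization, λa,E redistributes a fixed total weight from neighboring columns to the source column. It boosts the diagonal and weakens nearby off-diagonal entries without changing each column's total output.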

In Fig. 4B–H, we show the behavior of firing rates and the gamma peak in an example columnar network (with λE,E = 0.72 and λI,E = 0.70) in response to different stimuli. We first presented this network with flat gratings of varying sizes and contrasts. We then measured the LFP power-spectrum and the firing rate responses of the E and I sub-populations at the “center” column, i.e., at the retinotopic location on which the grating was centered. Firing rates of the center E and I sub-populations both increased with contrast (Fig. 4D). For large enough gratings, we verified that the gamma peak frequency also increased with contrast (Fig. 4B–C), matching the results of the reduced 2-population model and our previously built intuition. To study surround suppression, we formed the so-called size-tuning curves of the center E and I populations, based on their responses to full-contrast flat gratings of different sizes (Fig. 4H). Both E and I responses showed surround suppression: the response first grows but then drops with increasing grating size. The center E sub-population had a suppression index of 0.33 (SI; see Methods for the definition; SI = 0 indicates no suppression and SI = 1 complete suppression), consistent with biologically reported values (Gieselmann and Thiele, 2008).
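The suppression index is defined in the Methods, which are not part of this section. As an illustration, the sketch below uses a common definition, SI = (peak response − response to the largest grating) / peak response. This definition satisfies the stated bounds (0 for no suppression, 1 for complete suppression), but treat it as an assumption rather than the paper's exact formula. The size-tuning values are made up for illustration.

```python
def suppression_index(size_tuning):
    """Assumed definition: SI = (peak - response to largest size) / peak.

    size_tuning: non-negative responses ordered by increasing grating size.
    Returns 0 for no suppression and 1 for complete suppression.
    """
    peak = max(size_tuning)
    if peak <= 0.0:
        return 0.0   # no response at any size: treat as no suppression
    return (peak - size_tuning[-1]) / peak


# Hypothetical size-tuning curve (rates in Hz for increasing grating radii).
curve = [2.0, 10.0, 16.0, 12.0, 12.0]
print(round(suppression_index(curve), 2))   # 0.25
```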

To study the locality of contrast dependence, we modeled the experiment of Ray and Maunsell (2010) and presented this network with a Gabor stimulus, whose contrast varies spatially with a Gaussian profile. We then computed the LFP spectrum at five locations (“columns”) at increasing distance from the center of the Gabor stimulus (the colored markers in Fig. 4E), with the farthest one lying 0.8° of visual angle from the Gabor center (compare with the Gabor’s σ, which was 0.5° as in Ray and Maunsell, 2010). The LFP power-spectra at all locations are shown in Fig. 4F. As seen, the gamma peak moves to lower frequencies with increasing distance from the Gabor center, accompanied by a decrease in the local contrast (i.e., the contrast of the Gabor stimulus at the receptive field location of the recording site). To quantify the locality of this contrast dependence, we again followed Ray and Maunsell (2010). We compared the actual peak frequency at location x with a prediction that depended solely on the local contrast, c(x), of the Gabor stimulus at x. The prediction was the gamma peak frequency recorded at the center location when the network is presented with a large flat grating of (uniform) contrast equal to c(x). We found that the prediction was in very close agreement with the actual peak frequencies at all distances (Fig. 4G). As a measure of the locality of gamma contrast dependence, we used the corresponding coefficient of determination, R², which quantifies the agreement between the predicted and actual peak frequencies. (By definition, R² = 1 − SSE/(N·Var), where SSE denotes the sum of squared differences between the predicted and the actual gamma peak frequencies, N is the number of probe locations, and Var denotes the variance of the actual peak frequencies. R² is thus bounded above by 1, which is attained when the prediction perfectly matches the actual data.) In the example shown in Fig. 4G, we found R² = 0.98.
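The locality analysis can be sketched in a few functions:

- the Gabor contrast at each probe, using a Gaussian envelope with σ = 0.5° and, as an assumption, 100% peak contrast;
- a local predictor that looks up the flat-grating peak frequency at that contrast;
- R² computed as 1 − SSE/SST, which equals 1 − SSE/(N·Var).

The callable `flat_grating_peak_hz` is hypothetical. It stands in for running the network on a large uniform grating of the given contrast.

```python
import math


def gabor_contrast(dist_deg, peak_contrast=100.0, sigma_deg=0.5):
    """Local contrast (%) of a Gaussian-envelope Gabor at a given distance from its center."""
    return peak_contrast * math.exp(-dist_deg ** 2 / (2.0 * sigma_deg ** 2))


def local_prediction(probe_dists_deg, flat_grating_peak_hz):
    """Predicted peak frequency at each probe from the flat-grating frequency at c(x).

    flat_grating_peak_hz: hypothetical callable mapping contrast (%) to the
    center-column gamma peak frequency for a large uniform grating.
    """
    return [flat_grating_peak_hz(gabor_contrast(d)) for d in probe_dists_deg]


def r_squared(predicted, actual):
    """Coefficient of determination R^2 = 1 - SSE / SST (can be negative)."""
    mean = sum(actual) / len(actual)
    sse = sum((p - a) ** 2 for p, a in zip(predicted, actual))
    sst = sum((a - mean) ** 2 for a in actual)
    return 1.0 - sse / sst


probes = [0.0, 0.2, 0.4, 0.6, 0.8]   # probe distances in degrees, as in Fig. 4E
print([round(gabor_contrast(d), 1) for d in probes])
# [100.0, 92.3, 72.6, 48.7, 27.8]
```

Because R² compares errors to the spread of the actual frequencies, a predictor no better than the mean gives R² = 0, and worse predictors give negative values. This is why R² thresholds such as R² > 0 are meaningful in the sampling analysis.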

To investigate whether the above behavior required fine tuning of network parameters, we simulated 2000 networks with randomly picked parameters. There were 11 parameters in total, characterizing the strengths and ranges of horizontal connections (including λa,E), the strength of feedforward connections, and the ratio of NMDA to AMPA in recurrent excitatory synapses. These parameters were picked randomly and independently across wide ranges of values consistent with biological estimates, except for the enforcement of three inequality constraints, similar to the sampling of the two-population model in Fig. 2; see Methods and Table 1 for details. Again, as in the sampling of the two-population model, sampled networks that failed to reach a stable steady state in any stimulus condition were discarded.
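The sampling procedure can be sketched as uniform, independent draws over the Fig. 5 ranges of Table 1, which list exactly 11 parameters, with rejection of any draw that violates a constraint. The three inequality constraints are specified in the Methods and are not reproduced here. The single constraint below is a hypothetical placeholder, and the subsequent stability check on simulated networks is omitted.

```python
import random

# Fig. 5 sampling ranges from Table 1 (11 parameters of the columnar model).
RANGES = {
    "rho_N": (0.3, 0.5), "J_EE": (100.0, 300.0), "J_IE": (100.0, 300.0),
    "J_EI": (50.0, 150.0), "J_II": (50.0, 150.0), "g_E": (10.0, 30.0),
    "g_I": (5.0, 15.0), "lambda_EE": (0.25, 0.75), "lambda_IE": (0.25, 0.75),
    "sigma_EE": (0.15, 0.60), "sigma_IE": (0.15, 0.60),
}


def sample_parameters(n_samples, constraints, seed=0, max_draws=1_000_000):
    """Draw parameter sets uniformly and independently, rejecting any set that
    violates a constraint; raise an error if too many draws are rejected."""
    rng = random.Random(seed)
    accepted = []
    for _ in range(max_draws):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()}
        if all(ok(params) for ok in constraints):
            accepted.append(params)
            if len(accepted) == n_samples:
                return accepted
    raise RuntimeError("too many rejected draws; check the constraints")


# Hypothetical placeholder constraint (NOT the paper's three inequalities).
placeholder_constraints = [lambda p: p["J_EI"] * p["J_IE"] > p["J_EE"] * p["J_II"]]
samples = sample_parameters(2000, placeholder_constraints)
print(len(samples), len(RANGES))   # 2000 11
```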

Table 1.

Parameters of models used in different figures.

| Parameter | Fig. 1 | Figs. 2–3 | Fig. 4 | Fig. 5 | Fig. S1 | Unit | Description |
|---|---|---|---|---|---|---|---|
| n | 2 | 2 | 2 | 2 | 2 | - | power-law I/O exponent |
| k | 0.0194 | 0.0194 | 0.0194 | 0.0194 | 0.0194 | mV⁻² · ms | power-law I/O pre-factor |
| τcorr | 5 | 5 | 5 | 5 | 5 | ms | noise correlation time |
| τAMPA | 5 | 5 | 5 | 5 | 5 | ms | AMPA decay time |
| τGABA | 7 | 7 | 7 | 7 | 7 | ms | GABAA decay time |
| τNMDA | 100 | 100 | 100 | 100 | 100 | ms | NMDA decay time |
| ρN | 0.39 | 0 – 0.5 | 0.39 | 0.3 – 0.5 | 0.45 | - | NMDA share of excitation |
| JEE | 124 | 100 – 300 | 124 | 100 – 300 | 165 | mV | total E → E connection weight |
| JIE | 116 | 100 – 300 | 116 | 100 – 300 | 123 | mV | total E → I connection weight |
| JEI | 103 | 50 – 150 | 103 | 50 – 150 | 114 | mV | total I → E connection weight |
| JII | 59.3 | 50 – 150 | 59.3 | 50 – 150 | 57.1 | mV | total I → I connection weight |
| gE | 21.9 | 10 – 30 | 21.9 | 10 – 30 | 21.7 | mV/s | E feedforward current per 1% contrast |
| gI | 10.3 | 5 – 15 | 10.3 | 5 – 15 | 10.6 | mV/s | I feedforward current per 1% contrast |
| λEE | - | - | 0.72 | 0.25 – 0.75† | 0 | - | locality of E → E connections |
| λIE | - | - | 0.70 | 0.25 – 0.75† | 0 | - | locality of E → I connections |
| σEE | - | - | 0.296 | 0.15 – 0.60 | 0.265 | mm | range of E → E connections |
| σIE | - | - | 0.554 | 0.15 – 0.60 | 0.294 | mm | range of E → I connections |
| σEI | - | - | 0.09 | 0.09 | 0.09 | mm | range of I → E connections |
| σII | - | - | 0.09 | 0.09 | 0.09 | mm | range of I → I connections |
| Ncol | 1 | 1 | 172 | 172 | 172 | - | number of cortical columns |
| L | - | - | 6.4 | 6.4 | 6.4 | mm | retinotopic network width |
| Δx | - | - | 0.4 | 0.4 | 0.4 | mm | cortical column width |
| M | - | - | 2 | 2 | 2 | mm/degree | cortical magnification factor |
| wRF | - | - | 0.04 | 0.04 | 0.04 | degrees | grating input’s margin width |
| σGabor | - | - | 0.5 | 0.5 | 0.5 | degrees | Gabor stimulus sigma |

In Figs. 2, 3 and 5, parameters were sampled independently and uniformly from the ranges given in the table, except for the enforcement of three inequality constraints (i.e., sampled parameter sets violating any of these inequalities were rejected). See the main text (Methods) for details.

† These were the ranges for the sampled λEE and λIE of the columnar model; these parameters were zero in the non-columnar model.

The vast majority of sampled networks produced biologically plausible center excitatory and inhibitory firing rates that increased with increasing contrast (E and I firing rates did not exceed 100 Hz in 90% and 81% of networks, respectively). The majority of networks produced surround suppression in excitatory (70% of samples) and inhibitory (53% of samples) populations, and many yielded strong suppression in both populations (Fig. 5B). In addition, in response to grating stimuli with a uniform contrast profile, many networks produced gamma-band peaks in the LFP power spectrum that moved upward in frequency with increasing contrast (Fig. 5A and D; the number of networks yielding a gamma peak at each nonzero contrast is given in panel A). Finally, for each network, we again quantified the locality of the contrast dependence of peak frequency using the R² coefficient. This coefficient measures the match between the peak frequencies obtained at different recording locations on the Gabor stimulus and their predictions. Each prediction combines the local Gabor contrast with the peak frequency obtained using a flat grating of that contrast. A sizable fraction of networks yielded a high R², signifying local contrast dependence of gamma peak frequency (Fig. 5C). Moreover, many of these networks also exhibited strong surround suppression (Fig. 5E). Sixty-six networks (4.2% of samples) yielded R² > 0.8 and SIE > 0.25.

In sum, the columnar model, which emphasizes intra-columnar excitatory connectivity (Eq. (10)), can robustly exhibit strong surround suppression in conjunction with gamma peak frequencies controlled by the local contrast, as observed empirically, without requiring fine tuning of parameters.

Retinotopic SSNs with non-columnar excitatory connectivity do not account for local contrast dependence of gamma frequency.

To further show the importance of a boost in intra-columnar excitatory connectivity for obtaining local contrast dependence alongside strong surround suppression, we next sampled retinotopic SSN models without this structure in their horizontal connections (the “non-columnar” model). In these models, horizontal excitatory connections fall off smoothly with the distance between the source and target columns (corresponding to λa,E = 0 in the notation of Eq. (10)). We found that many sampled non-columnar models exhibited strong surround suppression (on average stronger than in the sampled columnar models). However, none of them exhibited gamma peak frequencies with sufficiently local contrast dependence.

The sampled non-columnar networks robustly exhibited surround suppression, which was strong in a large fraction of these networks. Especially in excitatory units, it was on average stronger than in the sampled columnar models (Fig. 5G vs. B). Many networks produced LFP power-spectrum peaks in the gamma band with frequency increasing with contrast (Fig. 5F and I), but networks with these properties were about half as common as in the columnar model (Fig. 5A and D). Moreover, for the Gabor stimulus, the contrast dependence of gamma peak frequency in the vast majority of non-columnar networks was far from local. Instead, the same peak frequency was shared across most of the retinotopic region stimulated by the Gabor (see the power spectra of an example sampled non-columnar network in Fig. 6A–B). Only 1% of sampled non-columnar networks exhibited a positive R² (our measure of local contrast dependence), compared to 15% of columnar networks (Fig. 5H and J). Only 4 non-columnar networks (out of 2000 sampled) exhibited R² > 0.6 in conjunction with any degree of surround suppression (positive suppression index) of E firing rates (Fig. 5J). However, the three of these networks with the highest R² values exhibited non-biological gamma-band power spectra, featuring either multiple gamma peaks or unrealistically sharp ones. The fourth network produced realistic gamma peaks and achieved R² = 0.61; the LFP power spectra, gamma frequency contrast dependence, and size tuning curves for this example network are shown in Fig. 6.

We conclude that the non-columnar model class cannot robustly exhibit both surround suppression of firing rates and local contrast dependence of gamma peak frequency.

Mechanism underlying the local contrast dependence of gamma peak frequency in the columnar SSN

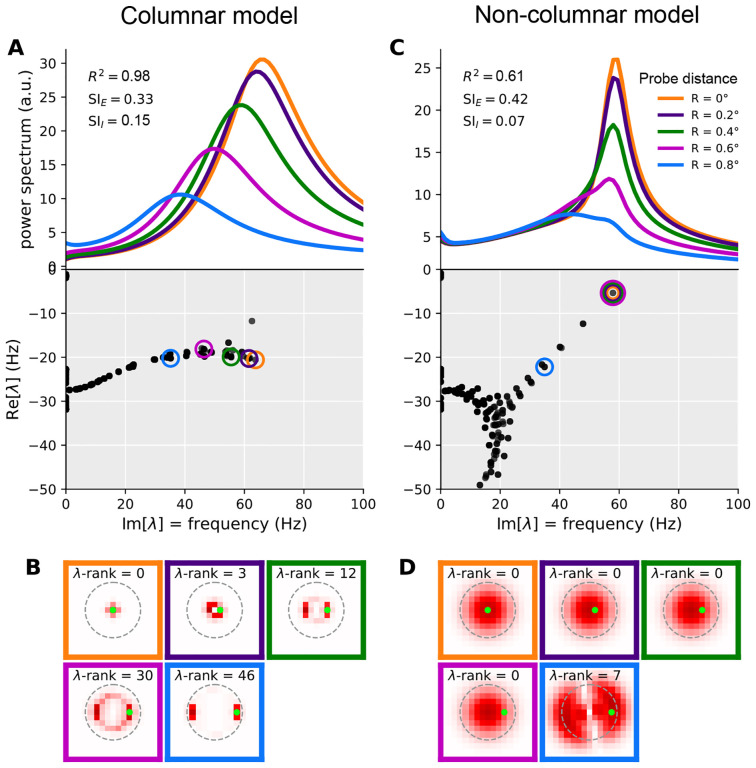

We can understand the mechanism underlying the local contrast dependence of gamma frequency in the columnar model, and its failure in the non-columnar model, by looking at the spatial profile of the normal oscillatory modes of these networks. Normal modes are the eigenvectors of the Jacobian matrix (the effective connectivity matrix of the linearized network, introduced before Eq. (6)), and normal oscillatory modes are the Jacobian eigenvectors with complex eigenvalues. (As described above, the oscillation frequency is given by the imaginary part of the eigenvalue, so we are particularly interested in eigenvectors whose eigenvalues have imaginary parts in the gamma band.) The Jacobian depends on the operating point of the linearization, which is in turn set by the stimulus. The relevant stimulus condition here is the Gabor stimulus or, more generally, a stimulus with non-uniform contrast.

As discussed above, gamma peak frequency would be trivially determined by local contrast in a network with only local connectivity (corresponding to λa,E = 1 in our model) and disconnected cortical columns. The disconnected columns act like the 2-population model of the first part. Each column can oscillate independently of the others at a frequency set by its own operating point, which is in turn set by the stimulus input to that column and thus by the local contrast. In such a network, all Jacobian eigenvectors are completely localized at single columns. Since each mode is localized, its eigenvalue, and hence its natural frequency, is entirely determined by the stimulus contrast over that column.

By contrast, in the model with long-range connections and λa,E = 0 (the non-columnar model), the eigenvectors can spatially cover a large region of retinotopic space. They then lead to coherent, synchronous oscillations at the same frequency (set by that mode’s eigenvalue) across many columns. To see this, consider a stimulus with uniform contrast. Such a stimulus does not break the network’s translational symmetry, since recurrent connections depend only on the relative distance between pre- and post-synaptic columns, not on their absolute location. Due to this symmetry, all eigenmodes are completely delocalized and have sinusoidal, plane-wave spatial profiles extending over the entire retinotopic space. A non-uniform stimulus does break the translational symmetry and leads to relative localization of eigenvectors. However, the scale of this localization is set by the scale over which the stimulus contrast varies appreciably. If this variation is smooth, the eigenvectors can still cover a large region. For the Gabor stimulus, this length scale is set by the σ of its Gaussian profile, so eigenvectors tend to cover much of the area covered by the stimulus. This can be seen in Fig. 7D, which shows oscillatory eigenvectors of an example non-columnar network for the modes that contribute most to the gamma peaks recorded at different locations. Suppose such an eigenvector has a complex eigenvalue sufficiently close to the imaginary axis that its oscillations are not strongly damped. It then gives rise to coherent gamma oscillations across the area covered by the stimulus, at the single frequency set by the mode’s eigenvalue. The corresponding peak thus appears at the same frequency in the LFP power-spectra recorded across this area, despite smooth variations in local contrast (Fig. 7C top). This mechanism thus breaks the local contrast dependence of peak frequency.
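The symmetry argument can be checked on an idealized example. The sketch below assumes a ring of columns with periodic boundaries and a Gaussian kernel that depends only on the distance between columns. The model's finite 2-D grid is only approximately translation invariant. Using only the Python standard library, the sketch verifies that plane waves are eigenvectors of such a translation-invariant matrix. It also checks that they have the same magnitude at every column, i.e., that they are fully delocalized. The function names are illustrative.

```python
import cmath
import math


def ring_weights(n, sigma):
    """Translation-invariant weights on a ring: depend only on column distance."""
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            d = min(abs(i - j), n - abs(i - j))   # periodic distance between columns
            W[i][j] = math.exp(-d * d / (2.0 * sigma * sigma))
    return W


def plane_wave(n, k):
    """Sinusoidal (Fourier) mode with wavenumber index k."""
    return [cmath.exp(2j * math.pi * k * m / n) for m in range(n)]


def matvec(W, v):
    """Plain matrix-vector product."""
    return [sum(W[i][j] * v[j] for j in range(len(v))) for i in range(len(W))]


n, sigma = 24, 2.0
W = ring_weights(n, sigma)
for k in (0, 1, 5):
    v = plane_wave(n, k)
    Wv = matvec(W, v)
    eig = Wv[0] / v[0]   # candidate eigenvalue read off at column 0
    residual = max(abs(Wv[i] - eig * v[i]) for i in range(n))
    print(k, round(eig.real, 4), residual < 1e-9)
```

A non-uniform input would make the diagonal of the effective matrix vary with location, which breaks this exact plane-wave structure. The extent of the resulting localization then depends on how quickly the input varies, as argued above.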

Figure 7.

Mechanism of local contrast dependence in the retinotopic SSN with columnar structure, and its failure in the non-columnar model. The left and right columns correspond, respectively, to the columnar example network of Fig. 4 (retinotopic SSN with boosted local, intra-columnar excitatory connectivity) and the non-columnar example network of Fig. 6 (retinotopic SSN without this boost). A: Top: relative LFP power spectra recorded at different probe locations in the Gabor stimulus condition (see Fig. 4E; the same colors are used here to denote the different LFP probes). The relative power spectrum is the point-wise ratio of the evoked power spectrum (evoked by the Gabor stimulus) to the spontaneous power spectrum in the absence of a visual stimulus (the absolute power spectrum for the same conditions was shown in Fig. 4F). Bottom: the eigenvalue spectrum in the complex plane, with the real and imaginary axes exchanged so that the imaginary axis aligns with the frequency axis of the top plot (eigenvalues are also scaled by 1/(2π) to correspond to non-angular frequency). The eigenvalues were weighted separately for each probe according to Eq. (11), and the eigenvalue with the highest weight was circled with the probe’s color (see Fig. 4E). This eigenvalue contributes the strongest peak to the power spectrum at that probe’s location. B: Each sub-panel corresponds to one of the probe locations (as indicated by the frame color). It plots the absolute value of the highest-weight eigenvector over cortical space, more precisely the function |ℛa(x)| defined in Eq. (37). This is the eigenvector corresponding to the circled eigenvalue in panel A, bottom. The λ-rank in each sub-panel is the order (counting from 0) of the eigenvalue according to decreasing imaginary part, i.e., the eigen-mode’s natural frequency. The green dot in each sub-panel shows the location of the LFP probe. C–D: Same as A and B, but for the retinotopic SSN model with no columnar structure.

Via its parameters λa,E, our columnar model interpolates between disconnected networks and the kind of network just discussed. With a sufficient boost of intra-columnar connectivity (i.e., with sufficiently large λa,E), the eigenvectors of this model become approximately localized, not to single columns, but to a small number of columns receiving similar stimulus contrasts. Fig. 7B illustrates this for an example columnar network, showing different Jacobian eigenvectors (all for the Gabor stimulus condition) that are approximately localized at different locations. Even the eigenvectors that are more spread out tend to extend over rings encircling the Gabor’s center, and thus cover an area receiving the same contrast. The eigenvalue and natural frequency of such modes are thus largely controlled by that contrast value. Modes that do not extend to a given location do not contribute to the power-spectrum recorded there, while modes localized nearby only “see” the local contrast.

This observation further explains the typical shape of the eigenvalue spectrum in columnar networks. As seen in Fig. 7A (bottom), the eigenvalue spectrum consists of a near-continuum of eigenvalues extending along the imaginary axis. Eigenvalues with higher (lower) imaginary parts, i.e., higher (lower) natural frequencies of the corresponding modes, have eigenvectors localized at regions of higher (lower) contrast. In this way, eigenvalues with different imaginary parts roughly correspond to different locations with different local contrasts, with the imaginary part increasing with local contrast. By contrast, oscillatory eigenvalues in non-columnar networks tend to be isolated, especially those near the imaginary axis within the gamma band (Fig. 7C bottom shows this in an example non-columnar network). The corresponding modes cannot be associated with a given location or contrast. Indeed, since the eigenvectors of these modes extend over many columns receiving varying contrasts, their eigenvalues and oscillation frequencies are not determined by the stimulus contrast at any single column. Instead, they depend on the entire spatial profile of contrast, in a complex manner.

The above qualitative discussion can be made quantitative using the linearized approximation. In Methods (see Eqs. (39) and (41)), we derive an expression for the power-spectrum at a location as a sum of contributions from different eigenmodes. As shown in Eq. (41), mode a, with eigenvalue λa, contributes a peak to the power spectrum. The peak is located at the frequency given by the imaginary part of λa (the mode's natural frequency). The half-width of the peak is γa ≡ −Re(λa), i.e., minus the real part of the eigenvalue. Finally, the peak amplitude is proportional to

$$ \frac{|\mathcal{R}_a(x)|^2}{\gamma_a^2} \tag{11} $$

where ℛa(x) is the component of the mode's right eigenvector at column x's E population (after summing the components corresponding to different synaptic receptors; see Eq. (37)). Thus this peak leaves an imprint on the LFP power-spectrum only at locations where the eigenvector has appreciable components. The amplitude is also inversely related to the square of the peak's half-width, γa, which by definition is the distance between the eigenvalue and the imaginary axis. Eigenvalues closer to this axis therefore produce stronger and sharper peaks. These peaks appear in the LFP spectrum probed at location x only if the corresponding right eigenvector has strong (excitatory) components there. In Fig. 7, separately for each of the five LFP probe locations on the Gabor stimulus, we picked the mode with the highest amplitude as defined in Eq. (11). The corresponding eigenvalue is circled in the eigenvalue spectrum plots (bottom plots in Fig. 7A and C for the columnar and non-columnar example models, respectively). In Fig. 7B and D we then plot the corresponding eigenvectors. More precisely, we plot |ℛa(x)|, which, according to Eq. (11), controls the strength of a mode's contribution at different locations x. In the non-columnar model, the eigenvectors spread over the entire region covered by the Gabor stimulus. Thus the same mode makes the strongest contribution to the LFP spectra (shown in Fig. 7A and C, top plots) at all probe locations except the one farthest from the Gabor center. Since this best mode is shared across the first four probe locations, its fixed eigenvalue (Fig. 7C, bottom plot) determines the location of the power-spectrum peak (Fig. 7C, top plot) at all but the farthest probe location.
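The following sketches the "best mode per probe" selection. It assumes that the amplitude factor is |ℛa(x)|²/γa² and that each mode contributes one Lorentzian peak. It ignores cross-terms between modes and any mode-dependent input-noise factor. The eigenvalues and eigenvector components are hypothetical numbers, and Im λa is used directly as the frequency variable.

```python
import numpy as np

def mode_peak_amplitudes(eigvals, R):
    """|R[a, x]|**2 / gamma_a**2 for every mode a and probe location x.

    eigvals: (M,) complex Jacobian eigenvalues lambda_a (stable: negative real parts).
    R:       (M, X) right-eigenvector components R_a(x) at each probe's E population.
    """
    gamma = -np.real(eigvals)                       # half-widths gamma_a = -Re(lambda_a)
    return np.abs(R) ** 2 / gamma[:, None] ** 2

def lorentzian_spectrum(eigvals, R, freqs):
    """Sum over modes of |R_a(x)|^2 / ((w - Im lambda_a)^2 + gamma_a^2); cross-terms ignored."""
    gamma = -np.real(eigvals)[:, None, None]
    centre = np.imag(eigvals)[:, None, None]
    w = np.asarray(freqs, dtype=float)[None, None, :]
    weight = (np.abs(R) ** 2)[:, :, None]
    return np.sum(weight / ((w - centre) ** 2 + gamma ** 2), axis=0)   # shape (X, F)

# Two hypothetical modes, each mostly localized at one of two probe locations.
eigvals = np.array([-1.0 + 40.0j, -2.0 + 60.0j])
R = np.array([[1.0, 0.1],
              [0.1, 1.0]])
best_mode = np.argmax(mode_peak_amplitudes(eigvals, R), axis=0)
freqs = np.linspace(0.0, 100.0, 1001)
peak_freq = freqs[np.argmax(lorentzian_spectrum(eigvals, R, freqs), axis=1)]
print(best_mode, peak_freq)   # best mode per probe, and where each probe's spectrum peaks
```

In this toy, the best mode differs between the two probes, so each probe's spectral peak sits at its own mode's natural frequency. This mirrors the behaviour described for the columnar model.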

By contrast, in the columnar model, eigenvectors cover a considerably smaller area within which contrast varies little. As a result, each mode affects the LFP power spectrum only locally. When the probe moves, the best mode changes quickly, as if the best eigenvector “moves” with the probe (Fig. 7B). Correspondingly, as the probe moves farther from the Gabor center, the best eigenvalues move to lower frequencies along the imaginary axis (Fig. 7A, top), following the local contrast “seen” by their eigenvectors.

Inter-columnar projections in the columnar model are nevertheless strong enough to produce strong surround suppression, as shown above. Note also that large gratings, which give rise to surround suppression, have uniform contrast over a broad area. In that case even the eigenvectors of the columnar model tend to cover a broad area. (Mathematically, when stimulated with a uniform stimulus, the columnar model also has approximate translational invariance, so its eigenvectors tend towards delocalized approximate plane waves.)
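The parenthetical claim about plane waves can be checked on a minimal example. The example assumes periodic boundaries (a hypothetical ring of identical columns) rather than the model's finite grid. A translation-invariant matrix of this kind is circulant, and every Fourier mode is then an exact eigenvector with the same magnitude at every column.

```python
import numpy as np

def ring_jacobian(n, local_eig, coupling):
    """Toy translation-invariant matrix: n identical columns on a ring.

    Under a uniform stimulus every column has the same local eigenvalue `local_eig`;
    each column is coupled to its two neighbours with strength `coupling`.
    """
    A = local_eig * np.eye(n, dtype=complex)
    for x in range(n):
        A[x, (x + 1) % n] += coupling
        A[x, (x - 1) % n] += coupling
    return A

def plane_wave_residual(n=16, local_eig=-1.0 + 5.0j, coupling=0.3):
    """Largest |A v_k - lambda_k v_k| over the Fourier modes v_k(x) = exp(2*pi*i*k*x/n)/sqrt(n)."""
    A = ring_jacobian(n, local_eig, coupling)
    x = np.arange(n)
    worst = 0.0
    for k in range(n):
        v = np.exp(2j * np.pi * k * x / n) / np.sqrt(n)   # |v(x)| = 1/sqrt(n) everywhere
        lam = local_eig + 2.0 * coupling * np.cos(2.0 * np.pi * k / n)
        worst = max(worst, float(np.max(np.abs(A @ v - lam * v))))
    return worst

print(plane_wave_residual())   # at round-off level: every Fourier mode is an exact eigenvector
```

On a finite grid without periodic boundaries, translation invariance is only approximate, which is why the text speaks of approximate plane waves.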

In summary, the columnar model can balance the requirements for locality of gamma contrast dependence and strong surround suppression, because of the intermediate spatial spread of its eigenvectors, which tend to cover relatively small areas with roughly uniform contrast.

Discussion

In this work we have shown that the expanded SSN robustly displays the contrast dependence of gamma peak frequency, in both a two-population and a retinotopic network. To capture gamma oscillations with the SSN, we expanded the model beyond an E-I rate network to one with distinct synaptic receptor types (AMPA, GABA and NMDA). The retinotopic model balances the trade-off in horizontal connection strength. As a result, both the local contrast dependence of the gamma peak frequency and the surround suppression of firing rates are observed robustly. Crucially, the SSN account sheds light on the mechanism underlying the contrast dependence of gamma peak frequency. It points to the key role of the empirically observed non-saturating and expansive neural transfer function (Finn et al., 2007; Priebe and Ferster, 2005) in giving rise to this effect.

Computing the power-spectra from the linearization of Eq. (1) allowed us to make analytic simplifications, which gave insight into how the SSN captures the contrast dependence of gamma. As contrast increases, firing rates increase. Because the SSN's neural transfer function is supralinear, higher rates lead to increasing neural gains. This in turn strengthens the effective connectivity, leading to faster oscillations. Moreover, linearization made computing power-spectra fast, which allowed extensive exploration of the model's parameter space.
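For the power law, the chain contrast → rate → gain → frequency can be made concrete. If r = k h^n, the gain f′(h) = n k h^(n−1) can be rewritten as n k^(1/n) r^((n−1)/n), which keeps growing with r when n > 1. The sketch below feeds these gains into a toy two-population Jacobian. It assumes a single shared time constant and equal E and I gains, and the weights are hypothetical. It illustrates the mechanism rather than reproducing the model's numbers.

```python
import numpy as np

def power_law_gain(r, k=0.04, n=2.0):
    """Slope f'(h) of f(h) = k*[h]_+**n, written as a function of the output rate r = f(h).

    With h = (r/k)**(1/n): f'(h) = n*k*h**(n-1) = n * k**(1/n) * r**((n-1)/n),
    which keeps growing with r whenever n > 1 (non-saturating, supralinear).
    """
    return n * k ** (1.0 / n) * np.asarray(r, dtype=float) ** ((n - 1.0) / n)

def toy_oscillation(g, W, tau=0.005):
    """Frequency (Hz) and largest real part of the eigenvalues of J = (-1 + g*W)/tau.

    Simplifying assumptions (not the full model): one time constant for both
    populations and the same gain g for E and I.
    """
    lam = np.linalg.eigvals((-np.eye(2) + g * W) / tau)
    return np.max(np.abs(lam.imag)) / (2.0 * np.pi), np.max(lam.real)

# Hypothetical E-I weights in the sign convention W = [[W_EE, -W_EI], [W_IE, -W_II]].
W = np.array([[1.0, -1.5],
              [2.0, -1.0]])
rates = [4.0, 9.0, 16.0]            # stand-ins for low, medium and high contrast
results = [toy_oscillation(power_law_gain(r), W) for r in rates]
freqs_hz = [f for f, _ in results]
decay = [d for _, d in results]
print([round(float(f), 1) for f in freqs_hz])   # about 36, 54 and 72 Hz
```

Here the eigenvalues of W are ±i√2, so the oscillation frequency scales linearly with the gain. A saturating I/O function would instead cap the gain, and with it the frequency, at high rates.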

In this work, for simplicity, we assumed an instantaneous I/O function between the net synaptic input (∑β hβ) and the output rate. This is based on the approximations discussed by Fourcaud and Brunel (2002), which are valid when the fast synaptic filtering time constants (τAMPA and τGABA) are much smaller than the neuronal membrane time constant. However, our framework can easily be generalized beyond this approximation. One would use the full neuronal linear response filter obtained from the Fokker-Planck treatment of Fourcaud and Brunel (2002). The main change due to such a modification would be to render the neural gains frequency dependent. We expect this dependence to be weak. In our regime, synaptic filtering is fast compared with the neuronal membrane time constant, so the transfer function should be approximately instantaneous (Fourcaud and Brunel, 2002). Therefore we do not expect that including the full neuronal linear response filter would change our qualitative results.

As we have shown, retinotopic SSNs account for the co-existence of the local contrast dependence of gamma peak frequency and strong surround suppression. They do so by combining long-range horizontal connections, whose strength decreases exponentially with distance, with an additional strengthening of very local excitatory connections. One possibility is that the additional local strength is needed to compensate for the model's coarse retinotopic grid. Such a grid might distort the functional effects of a connectivity profile with a smooth, single-scale fall-off and no local boost. Alternatively, it may reflect a measurable increase in connection strength above an exponential function of distance at short distances, below ~200–400 μm. Indeed, it has previously been noted that anatomical findings on the spatial profile of horizontal connections in the macaque cortex point to such a mixture of short-range (local) and long-range connections. In these findings, the local component does not extend beyond 400 μm, the size of our model's columns (Voges et al., 2010).
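The two-component connectivity described above can be written down explicitly. The sketch below builds a hypothetical 1D profile on a grid with 400 μm column spacing. The profile is an exponential fall-off with distance plus an extra weight that targets only the source column. The length constant and both strengths are illustrative assumptions, not the values used in the model.

```python
import numpy as np

def horizontal_ee_profile(n_columns, spacing_um=400.0, j_long=1.0,
                          sigma_um=1000.0, j_local=2.0):
    """Hypothetical E->E strength between columns on a 1D grid (all values illustrative).

    Long-range part: j_long * exp(-distance / sigma_um), decaying with distance.
    Local boost: j_local added only where source and target are the same column.
    """
    pos = np.arange(n_columns) * spacing_um
    dist = np.abs(pos[:, None] - pos[None, :])
    return j_long * np.exp(-dist / sigma_um) + j_local * np.eye(n_columns)

W_boosted = horizontal_ee_profile(5)
W_exp_only = horizontal_ee_profile(5, j_local=0.0)
# Self-to-neighbour ratio: exp(0.4) ~ 1.49 for the pure exponential, ~4.48 with the boost.
print(W_exp_only[0, 0] / W_exp_only[0, 1], W_boosted[0, 0] / W_boosted[0, 1])
```

The boost raises within-column strength well above what a single exponential extrapolated to zero distance would give. The longer-range weights to neighbouring columns are left unchanged.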

It is notable that, in modeling surround suppression in V1, Obeid and Miller (2021) also found that they needed to increase the central weight strengths, relative to an exponential fall-off of strength on a grid, for their SSN model to account for two other observations. These observations were the decrease in inhibition received by a cell when it is surround suppressed (Adesnik, 2017; Ozeki et al., 2009), and the fact that the strongest surround suppression occurs when surround orientation matches center orientation, even if the center orientation is not the cell’s preferred orientation (Shushruth et al., 2012; Trott and Born, 2015). Other phenomena they addressed could be explained by their SSN model with or without this extra local strength. We believe that other visual cortical phenomena previously addressed with the SSN model (Adesnik, 2017; Hennequin et al., 2018; Liu et al., 2018; Rubin et al., 2015) would not be affected by such boosting, as the mechanisms inferred behind them appear independent of these connectivity details.

Recently, some evidence of enhanced connectivity at very short distances (~20 μm) has been found in mouse V1 (Oldenburg et al., 2022). Optogenetic stimulation of ten cells evoked excitation of nearby cells only at such short distances from one of the stimulated cells, and suppression at longer distances. Reproducing this in a model required an extra component of connectivity that decreased with distance on a very short length scale, in addition to one with a longer length scale. As they point out, such extra local strength might account for the observation that preferred orientations in mouse visual cortex are correlated on a similarly short length scale (Kondo et al., 2016). It will be interesting to see whether monkeys, where the local contrast dependence of gamma was measured, show evidence of such a short-length-scale component of connectivity. Conversely, it will be interesting to see whether mice show a similar local contrast dependence of gamma.

Methods

Stabilized supralinear network (SSN) with different synaptic receptor types

In its original form, the Stabilized Supralinear Network (SSN) is a firing rate network of excitatory and inhibitory neurons that have a supralinear rectified power-law input/output (I/O) transfer function:

$$ f(h) = k\,[h]_+^{\,n} \tag{12} $$

where k > 0, n > 1, and [h]+ ≡ max(0, h) denotes rectification of h. The dynamics can be formulated either in terms of the inputs to the units (Hennequin et al., 2018) or in terms of their output firing rates (Ahmadian et al., 2013; Rubin et al., 2015). Here we adopt the former formulation. The state of a network of N neurons is then the N-dimensional vector of inputs v(t), which evolves according to the dynamical system

$$ T\,\frac{d\mathbf{v}}{dt} = -\mathbf{v} + W f(\mathbf{v}) + \mathbf{I} \tag{13} $$

Here, I is the external input vector, T = diag(τ1, …, τN) is the diagonal matrix of synaptic time constants, and f acts element-wise. Finally, W is the N × N matrix of recurrent connection weights between the units in the network. This connectivity matrix obeys Dale's law: all outgoing weights of a given neuron have the same sign, so the sign of the entries of W does not change within a column. If we order neurons such that excitatory neurons appear first and inhibitory neurons second, this matrix takes the form

$$ W = \begin{pmatrix} W_{EE} & -W_{EI} \\ W_{IE} & -W_{II} \end{pmatrix} \tag{14} $$

where the blocks WXY (X, Y ∈ {E, I}) have non-negative elements.
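The following is a minimal simulation of Eqs. (12)–(14) for one E and one I unit, integrated with forward Euler from v = 0. The parameter values are illustrative choices, not the parameters of this paper: k = 0.3, n = 2, the 2 × 2 weight matrix, time constants of 20 and 10 ms, and the input. At the fixed point v*, the code also forms the Jacobian T⁻¹(−Id + W diag(f′(v*))), whose eigenvalues govern the linearized dynamics.

```python
import numpy as np

K, N_EXP = 0.3, 2.0   # illustrative power-law constants k and n of Eq. (12)

def ssn_rate(h, k=K, n=N_EXP):
    """Rectified power-law I/O function f(h) = k * [h]_+ ** n (Eq. (12))."""
    return k * np.maximum(h, 0.0) ** n

def ssn_gain(h, k=K, n=N_EXP):
    """Derivative f'(h) = n * k * [h]_+ ** (n - 1)."""
    return n * k * np.maximum(h, 0.0) ** (n - 1.0)

def simulate_ssn(W, I, tau, dt=1e-4, t_max=3.0):
    """Forward-Euler integration of T dv/dt = -v + W f(v) + I (Eq. (13)), starting at v = 0."""
    v = np.zeros(len(I))
    for _ in range(int(round(t_max / dt))):
        v = v + (dt / tau) * (-v + W @ ssn_rate(v) + I)
    return v

# One E unit (first) and one I unit (second); the inhibitory column carries the
# minus sign, as in Eq. (14). All values are illustrative.
W = np.array([[1.25, -0.65],
              [1.20, -0.50]])
tau = np.array([0.020, 0.010])   # seconds
I = np.array([2.0, 2.0])

v_star = simulate_ssn(W, I, tau)
residual = v_star - (W @ ssn_rate(v_star) + I)            # ~0 at a fixed point
J = (-np.eye(2) + W * ssn_gain(v_star)[None, :]) / tau[:, None]
print(v_star, np.linalg.eigvals(J))
```

Multiplying W column-wise by the gains implements W diag(f′(v*)), and dividing row-wise by tau implements the left multiplication by T⁻¹.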

The above model does not take into account the distinct dynamics of currents through different synaptic receptor channels: AMPA, GABA_A (henceforth GABA), and NMDA. Only the fast receptors, AMPA and GABA, have timescales relevant to gamma-band oscillations. These receptors have very fast rise times, corresponding to frequencies much higher than the gamma band, so we ignored the rise times of all receptors. With this assumption, consider an action potential arriving at a presynaptic terminal at time t = 0. The resulting postsynaptic current through receptor channel α (α ∈ {A = AMPA, G = GABA, N = NMDA}) with decay time τα is given by $h_\alpha(t) = \frac{w_\alpha}{\tau_\alpha}\, e^{-t/\tau_\alpha}\, \theta(t)$. Here θ(t) is the Heaviside step function and wα is the contribution of receptor α to the synaptic weight. This is the impulse response, i.e., the solution of the differential equation $\tau_\alpha \frac{dh_\alpha}{dt} = -h_\alpha + w_\alpha\, \delta(t)$, where δ(t) is the Dirac delta representing the spike at time t = 0. In the mean-field firing-rate treatment, the train of delta functions is averaged and replaced by a smooth rate function r(t). Extending this to postsynaptic currents from all synapses into all neurons, we obtain the equation

$$ \tau_\alpha \frac{d\mathbf{h}_\alpha}{dt} = -\mathbf{h}_\alpha + W_\alpha\, \mathbf{r}(t) \tag{15} $$

where r(t) and hα are N-dimensional vectors of the neurons' firing rates and input currents of type α, respectively. The Wα are N × N matrices containing the contribution of receptor type α to the recurrent synaptic weights. If we add an external input to the right-hand side (before filtering by the synaptic receptors), we obtain Eq. (1). AMPA and NMDA contribute only to excitatory synapses, and GABA only to inhibitory ones. In general, therefore, the Wα have the following block structure

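$$ W_A = \begin{pmatrix} W^{A}_{EE} & 0 \\ W^{A}_{IE} & 0 \end{pmatrix}, \quad W_N = \begin{pmatrix} W^{N}_{EE} & 0 \\ W^{N}_{IE} & 0 \end{pmatrix}, \quad W_G = \begin{pmatrix} 0 & -W^{G}_{EI} \\ 0 & -W^{G}_{II} \end{pmatrix} \tag{16} $$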

For simplicity, we further assumed that the relative contributions of NMDA and AMPA are the same in all excitatory synapses. In this case all Wα can be written in terms of the four blocks of the full connectivity matrix W ≡ ∑αWα, introduced in Eq. (14):

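$$ W_N = \rho_N \begin{pmatrix} W_{EE} & 0 \\ W_{IE} & 0 \end{pmatrix}, \quad W_A = (1-\rho_N) \begin{pmatrix} W_{EE} & 0 \\ W_{IE} & 0 \end{pmatrix}, \quad W_G = \begin{pmatrix} 0 & -W_{EI} \\ 0 & -W_{II} \end{pmatrix} \tag{17} $$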

where the scalar ρN is the fractional contribution of NMDA to excitatory synaptic weights.
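Eq. (17) amounts to splitting the excitatory columns of W between AMPA and NMDA in the ratio (1 − ρN) : ρN and assigning the inhibitory columns to GABA. The sketch below does this for one E and one I unit with illustrative decay times. It also integrates Eq. (15) for a fixed rate vector. This checks that the receptor currents relax to hα = Wα r, so their sum recovers W r.

```python
import numpy as np

def receptor_weights(W, n_exc, rho_nmda):
    """Split the full weight matrix W into AMPA, NMDA and GABA parts as in Eq. (17).

    Columns [0, n_exc) are excitatory presynaptic neurons (AMPA + NMDA);
    the remaining columns are inhibitory (GABA only).
    """
    W = np.asarray(W, dtype=float)
    W_exc = np.zeros_like(W)
    W_exc[:, :n_exc] = W[:, :n_exc]
    return {"AMPA": (1.0 - rho_nmda) * W_exc,
            "NMDA": rho_nmda * W_exc,
            "GABA": W - W_exc}

def relax_receptor_currents(W_by_receptor, taus, r, dt=1e-4, t_max=1.0):
    """Euler-integrate tau_a dh_a/dt = -h_a + W_a r for a constant rate vector r (Eq. (15))."""
    h = {a: np.zeros(len(r)) for a in W_by_receptor}
    for _ in range(int(round(t_max / dt))):
        for a, W_a in W_by_receptor.items():
            h[a] = h[a] + dt / taus[a] * (-h[a] + W_a @ r)
    return h

W = np.array([[1.25, -0.65],
              [1.20, -0.50]])
Ws = receptor_weights(W, n_exc=1, rho_nmda=0.3)
taus = {"AMPA": 0.005, "NMDA": 0.1, "GABA": 0.007}   # illustrative values in seconds
r = np.array([3.0, 5.0])
h = relax_receptor_currents(Ws, taus, r)
total = sum(h.values())   # approaches W @ r at steady state
print(total, W @ r)
```

Because all receptor currents share the same steady state up to the split of W, the receptor types differ only in how fast they track changes in r. This is why the time constants matter for gamma-band dynamics.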

As noted in Results, to close the system of equations for the dynamical variables hα, we have to relate the output rate of a neuron to its total input current, ∑α hα. In general, the relationship between the total input and the firing rate of a neuron is nonlinear and dynamical. The same holds for the mean firing rate of a population of statistically equivalent neurons. That is, the rate at a given instant depends on the preceding history of input, not just on the instantaneous input. However, as shown by Brunel et al. (2001) and Fourcaud and Brunel (2002), the firing rate of spiking neurons receiving low-pass filtered noise with fast autocorrelation timescales is approximately a function of the instantaneous input. Such fast filtered noise is exactly what the irregular spiking of a spiking network generates after filtering by the fast AMPA and GABA receptors (as in Eq. (15)). (Our rate model does not explicitly model irregular spiking. It can, however, be thought of as a mean-field approximation to a spiking network, in which each SSN unit, or “neuron”, represents a sub-population of spiking neurons. The rate of that unit represents the average firing rate of the underlying spiking population.) We thus use this static approximation to the I/O transfer function and assume that the firing rates of our model units are given by Eq. (2), $\mathbf{r}(t) = F\big(\sum_\alpha \mathbf{h}_\alpha(t)\big)$, where F(·) is the rectified power-law function of Eq. (12).
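Closing the system with r = F(∑α hα) and linearizing around a fixed point gives a Jacobian with one N × N block per pair of receptor types. The sketch below assembles it for a hypothetical two-unit network, with gains F′(∑α hα) that are assumed rather than computed from a fixed point. As a consistency check, suppose all receptors share one time constant τ. The spectrum must then reduce to (−1 + eig(W diag(gains)))/τ together with −1/τ of multiplicity 2N, because only the sum ∑α hα feeds back through F.

```python
import numpy as np

def multi_receptor_jacobian(W_by_receptor, taus, gains):
    """Jacobian of tau_a dh_a/dt = -h_a + W_a F(sum_b h_b) + I_a at a fixed point.

    Block (a, b) is (-delta_ab * Id + W_a @ diag(gains)) / tau_a, where
    gains = F'(sum_b h_b) at the fixed point. State order: receptors in dict order.
    """
    names = list(W_by_receptor)
    n = len(gains)
    WG = {a: W_by_receptor[a] @ np.diag(gains) for a in names}
    J = np.zeros((len(names) * n, len(names) * n))
    for i, a in enumerate(names):
        for j in range(len(names)):
            block = WG[a] - (np.eye(n) if i == j else 0.0)
            J[i * n:(i + 1) * n, j * n:(j + 1) * n] = block / taus[a]
    return J

# Hypothetical two-population example: E (column 0) uses AMPA/NMDA, I (column 1) uses GABA.
W = np.array([[1.25, -0.65],
              [1.20, -0.50]])
rho_nmda = 0.3
W_exc = np.where(np.arange(2)[None, :] < 1, W, 0.0)
W_by_receptor = {"AMPA": (1 - rho_nmda) * W_exc, "NMDA": rho_nmda * W_exc, "GABA": W - W_exc}
gains = np.array([1.0, 1.5])   # F'(sum_b h_b) at an assumed fixed point

J = multi_receptor_jacobian(W_by_receptor, {"AMPA": 0.005, "NMDA": 0.1, "GABA": 0.007}, gains)
print(np.linalg.eigvals(J))

# Sanity check with one shared time constant.
tau = 0.01
J_equal = multi_receptor_jacobian(W_by_receptor, {a: tau for a in W_by_receptor}, gains)
expected = np.concatenate([(-1.0 + np.linalg.eigvals(W @ np.diag(gains))) / tau,
                           np.full(4, -1.0 / tau)])
computed = np.linalg.eigvals(J_equal)
```

With unequal time constants, the slow NMDA block and the fast AMPA and GABA blocks no longer collapse into a single effective network. This is where the receptor-specific timescales shape the oscillatory eigenvalues.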